1. Introduction

Cardiac arrhythmia is a common manifestation of cardiovascular disease (CVD) that poses a significant health concern and contributes to high mortality rates worldwide. It involves irregular heart rhythms that can result in serious complications such as an increased risk of stroke or sudden cardiac death [1].

The predominant approach for identifying arrhythmias is electrocardiography (ECG), which monitors the electrical activity of the heart over time [2]. ECG signals are typically acquired from multiple leads, each providing a different view of the heart’s electrical function [3]. Lead II in particular is frequently used in arrhythmia diagnosis. More than 300 million ECGs are recorded worldwide each year, and this number continues to grow, which underlines the importance of this diagnostic method [4].

The widespread adoption of ECG stems from its non-invasive and cost-effective nature, enabling the identification of various cardiovascular irregularities such as atrial fibrillation (AF), premature atrial contractions (PAC), and myocardial infarctions (MI) [5,6]. Nonetheless, the analysis of ECG signals presents a challenge, especially with more intricate arrhythmias [2].

Diagnosing cardiac arrhythmia via ECG signal interpretation has conventionally relied on physicians and their clinical experience. This conventional method involves a subjective correlation between a patient’s ECG pattern and an established taxonomy of medical conditions, which in turn depends on a subjective interpretation of the medical literature [7,8]. While the traditional approach to ECG signal interpretation has shown some effectiveness, it often suffers from errors and misclassification [2]. As a result, there is an increasing need to automate medical processes in order to enhance the quality of patient care and reduce overall healthcare costs. This has led to the development of alternative methods for analyzing ECG signals using machine learning and deep learning techniques [9,10].

Deep learning models, including recurrent neural networks (RNNs) and convolutional neural networks (CNNs), have attracted significant attention due to their strong ability to learn complex data patterns [11,12]. CNNs and RNNs have therefore been used in various areas of biomedical signal processing (e.g., classification of arrhythmias based on ECG signals) [2]. The main advantage of deep learning is its ability to extract meaningful features directly from raw data, which allows the capture of subtle signal characteristics that may be difficult to identify manually [13]. While traditional approaches often struggle with the high variability of ECG signals [2], deep learning models can handle such challenges and manage large-scale ECG analysis [1].

Several studies have shown the strong potential of deep learning (DL) models in automated arrhythmia classification using ECG signals. CNNs, in particular, effectively learn spatial features from ECG recordings. Kachuee et al. [14] developed a deep CNN model that classified five arrhythmia types according to the AAMI EC57 standard, achieving classification accuracies of 93.4% for arrhythmias and 95.9% for myocardial infarction (MI). Romdhane et al. [15] proposed a CNN model optimized with a focal loss function to address class imbalance, enhancing the detection of minority heartbeat classes. Their method, evaluated on the INCART and MIT-BIH datasets, yielded 98.41% accuracy, 98.38% F1-score, 98.37% precision, and 98.41% sensitivity. Ahmed et al. [16] introduced a one-dimensional CNN architecture for arrhythmia detection, reporting performance metrics of 99% accuracy, 94% sensitivity, and 99% precision.

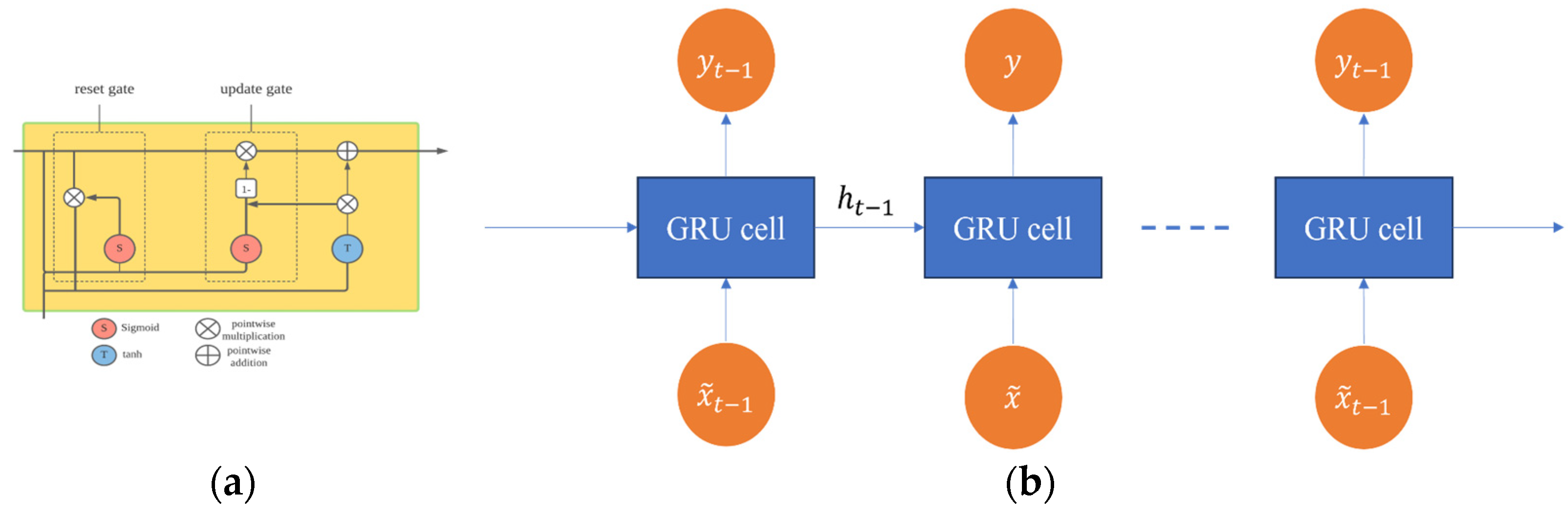

Recurrent neural networks (RNNs), particularly long short-term memory (LSTM) networks, are proficient in modeling temporal dependencies within ECG signals. Singh et al. [17] employed LSTMs on the MIT-BIH Arrhythmia Database to distinguish between regular and irregular beats, demonstrating superior performance compared to other RNN models. Several studies have also explored hybrid architectures that combine CNNs and RNNs. Xu et al. [18] proposed a model integrating convolutional layers, Squeeze-and-Excitation residual blocks, and bidirectional LSTMs. This architecture achieved 95.90% sensitivity, 96.34% specificity, and a classification time of 6.23 s across five ECG classes. Similarly, Maurya et al. [19] developed a cascaded model combining LSTM and RNN layers for classifying 12-lead ECG signals, achieving 89.9% accuracy, 93.46% sensitivity, and 84.36% specificity. More recently, Sarankumar et al. [20] introduced a BiGRU-based autoencoder model that achieved 97.76% accuracy and 98.31% specificity on the CPSC 2018 dataset, illustrating the effectiveness of bidirectional modeling and latent feature compression.

Despite the promising accuracy achieved by deep learning models in arrhythmia classification, several critical limitations remain unaddressed, particularly regarding interpretability, data imbalance, and the absence of attention mechanisms [21,22].

First, interpretability remains a fundamental challenge. While the CNN- and LSTM-based models discussed in the literature (e.g., Kachuee et al. [14]; Ahmed et al. [16]) demonstrate strong classification performance, they largely operate as “black boxes.” In clinical settings, such opacity impedes the trust and acceptance of these models among healthcare professionals, who must often justify diagnostic decisions. Especially in jurisdictions where explainability is legally mandated [23], the inability to understand or audit model reasoning presents a significant barrier to clinical adoption [24]. Most of the reviewed studies provide limited insight into the internal decision-making pathways of their models, neglecting the crucial requirement for transparent and accountable AI in medicine.

Second, the issue of data imbalance persists across the reviewed works. Arrhythmia datasets such as MIT-BIH and INCART often exhibit skewed class distributions, where common arrhythmias are overrepresented and rarer ones are underrepresented. This imbalance can bias models toward majority classes, diminishing their diagnostic reliability for rare but clinically significant arrhythmias [25]. Although Romdhane et al. [15] attempt to address this through the use of focal loss, such strategies are underutilized or insufficiently validated in other studies. Inadequate handling of data imbalance may inflate overall accuracy metrics while masking poor performance on minority classes, which is unacceptable in high-stakes clinical diagnostics [26].

Finally, the lack of attention mechanisms in many of the proposed models limits their capacity to capture salient temporal or spatial features in ECG signals. Attention mechanisms, particularly those embedded within transformer or hybrid CNN-RNN architectures, have shown promise in selectively weighting important signal segments, enhancing both performance and interpretability. Yet the majority of studies rely solely on convolutional or sequential encoders without incorporating attention-based enhancements. This omission represents a missed opportunity to improve model focus, reduce noise sensitivity, and provide visualizable attention maps that could further support explainability and clinician validation.

Explaining the decision-making mechanisms of deep learning models is therefore imperative to safeguard the accuracy, impartiality, and ethical soundness of their predictions, especially considering their potentially profound impact on individuals’ lives [27].

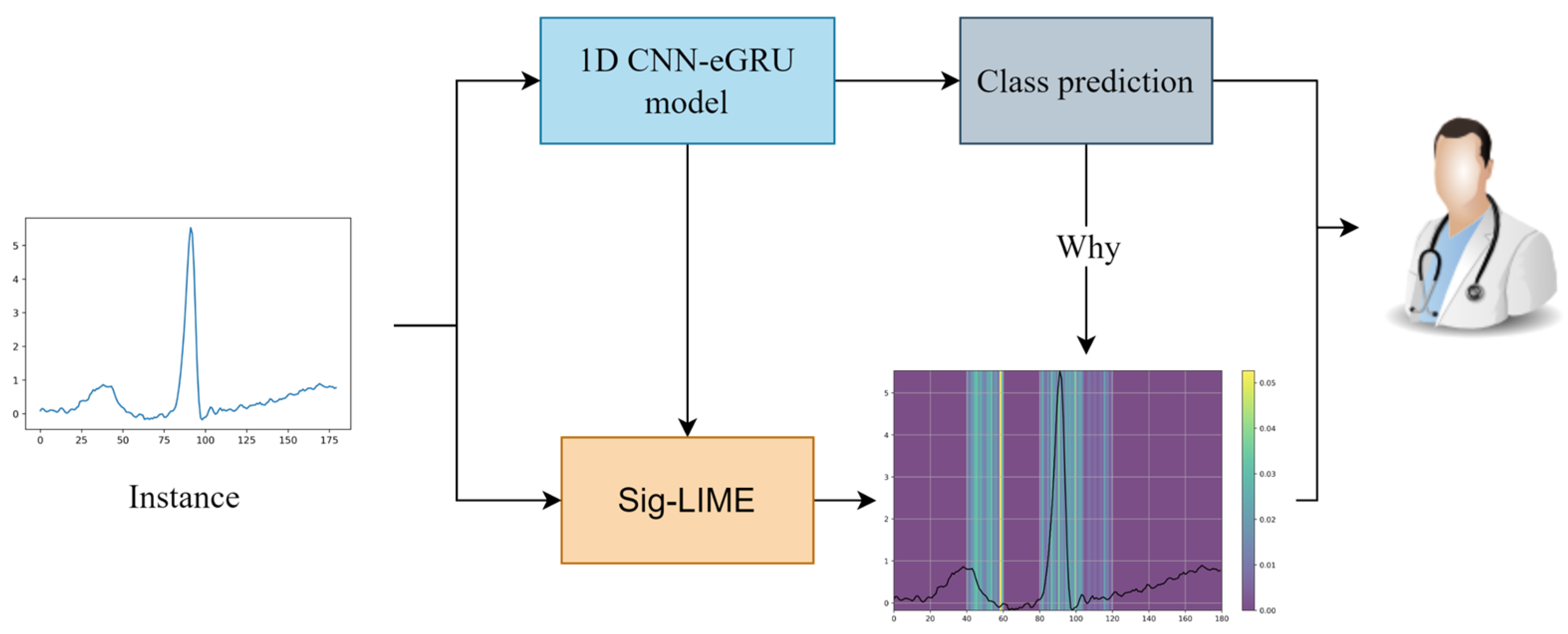

This paper aims to develop a reliable and interpretable deep learning model capable of accurately classifying a wide range of cardiac arrhythmias using single-lead electrocardiogram (ECG) signals, specifically Lead II. The proposed approach addresses key challenges in automated ECG analysis, including class imbalance, the need for robust feature extraction, and the lack of model transparency in clinical applications. The primary contributions of this study are outlined as follows:

Proposing a hybrid data balancing technique that combines resampling methods with class-weighted learning to address class imbalance in the MIT-BIH arrhythmia dataset.

Developing a hybrid 1D CNN-eGRU deep learning model designed for effective spatial–temporal feature extraction from ECG lead II signals.

Employing Sig-LIME as a signal-specific interpretability method to enhance the transparency and trustworthiness of the model’s predictions.

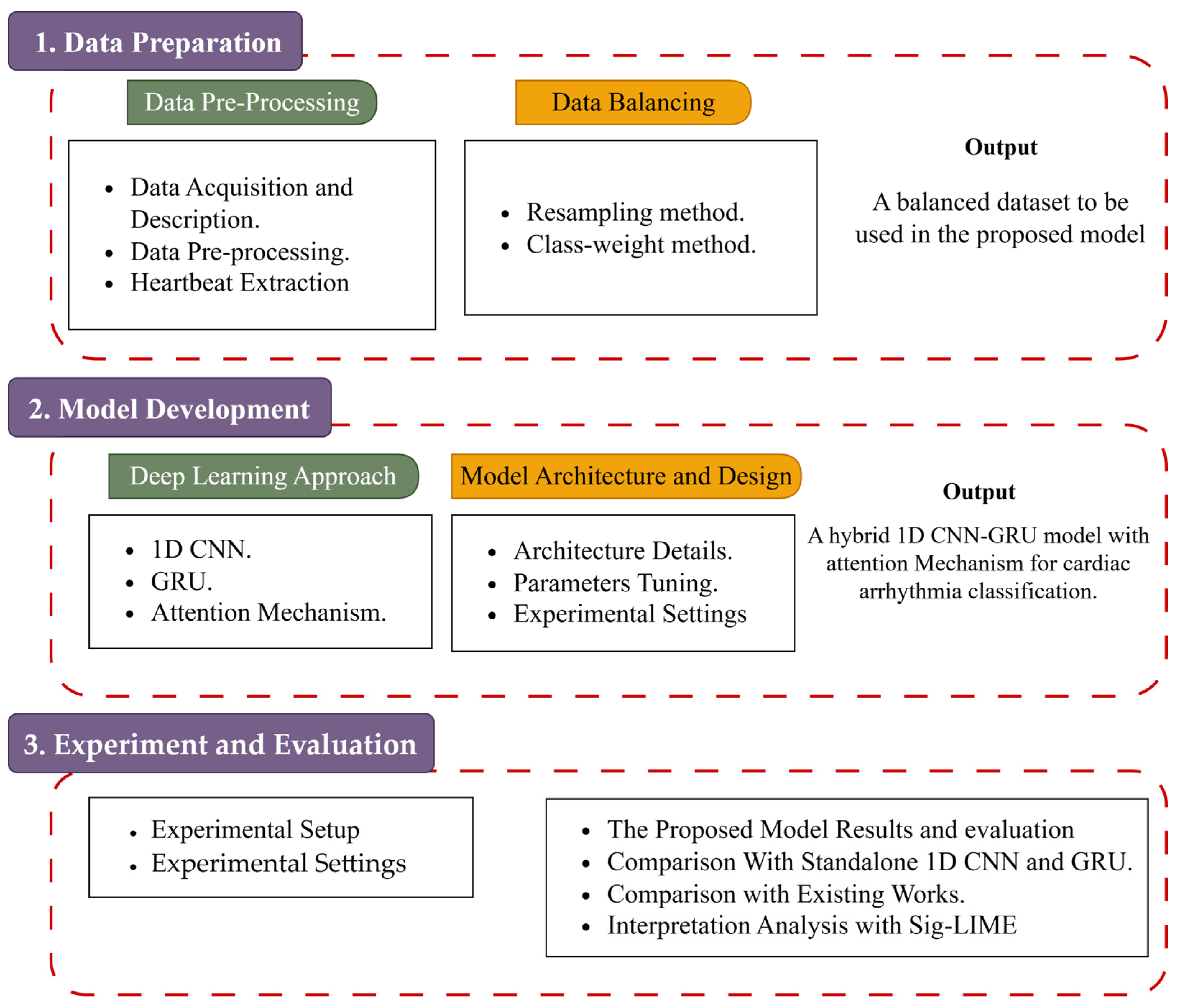

The remainder of this paper is organized as follows: Section 2 presents the materials and methods used in this study, including the architecture of the 1D CNN-eGRU model. Section 3 presents and discusses the experimental results. Finally, Section 4 concludes the paper by summarizing the key findings and suggesting directions for future research.

3. Results and Discussion

This section presents and interprets the empirical results obtained with the methods described above for ECG-based cardiac arrhythmia classification, with a focus on how well the developed models classify each arrhythmia class.

3.1. Experimental Setup

This section describes the experimental setup used in our study. All experiments were performed on a computing system equipped with an Intel® Xeon® CPU E3-1226 v3 @ 3.30 GHz × 4 processor (Intel Corporation, Santa Clara, CA, USA), an NVIDIA GeForce GTX 1070 GPU (NVIDIA, Santa Clara, CA, USA), a 1 TB hard disk, and 23.4 GB of RAM. The software environment was based on Python 3.12.6 managed with the Anaconda distribution, with Jupyter Lab and Spyder used for interactive development and experimentation. The following libraries were used in the experiments: NumPy for numerical computations, Pandas for data manipulation and processing, SciPy for scientific computing tasks, Scikit-learn 1.7.1 for implementing machine learning models and evaluation metrics, WFDB package 4.3.0 for accessing and processing physiological signal data, and TensorFlow 2.15.0 for developing and training the deep learning models.

This setup was sufficient to run all experiments reported in this section, and the hardware and library versions are listed above to support reproducibility of the proposed explainable deep learning classification approach.

3.2. Experimental Setting

To improve the performance of the proposed hybrid 1D CNN-eGRU for cardiac arrhythmia classification, we tuned its key hyperparameters using a trial-and-error approach. The tuned hyperparameters were the number of filters, kernel size, dropout rate, number of GRU units, number of dense nodes, decay rate, learning rate, number of training epochs, and batch size. The goal was to identify the combination that maximizes the performance measures. We conducted a series of experiments, adjusting one or more hyperparameters while keeping the others constant and recording the model’s performance metrics in each case. Each experiment ran for 100 epochs.

After each experiment, we analyzed the results with a focus on accuracy, F1-score, recall, and precision. We identified the hyperparameters with the most significant impact on performance and selected their best-performing values. The best parameters of the proposed hybrid 1D CNN-eGRU are outlined in Table 1.

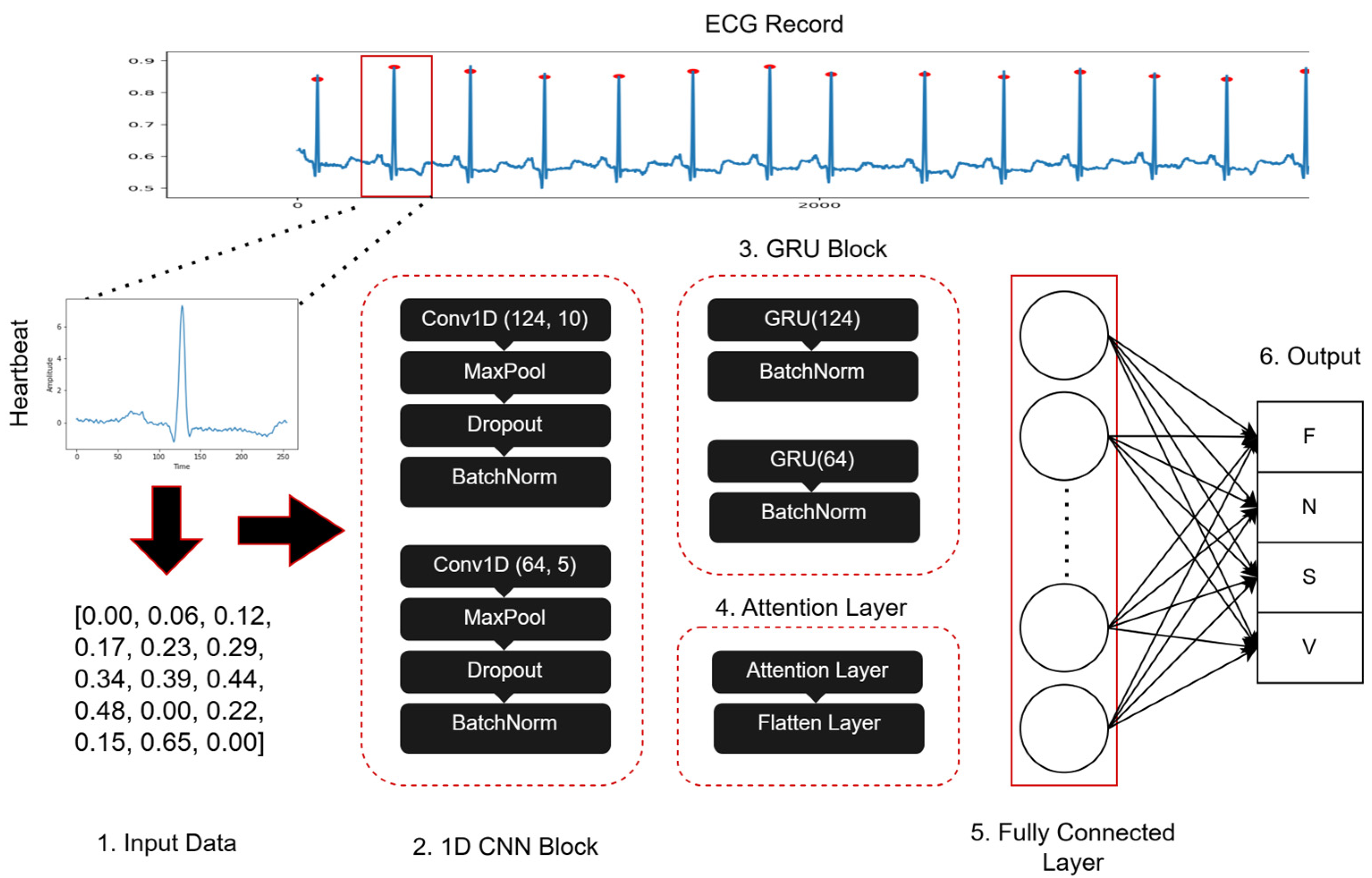

The model architecture incorporated two 1D CNN layers with 124 and 64 filters, respectively. These filters are responsible for extracting relevant features and capturing intricate relationships within the ECG data to aid in accurate arrhythmia classification. The receptive field of the convolutional filters is determined by the kernel size, which is set to 10 and 5 for the first and second CNN layers, respectively. The kernel size specifies the number of adjacent data points considered during the convolution operation, which enables the model to capture local patterns and variations in the ECG signals. A dropout layer with a rate of 0.2 was added to the model to reduce overfitting. During training, this layer randomly sets a fraction of its inputs to zero, which prevents the network from relying too heavily on specific features and promotes generalization. Additionally, a kernel regularizer with a value of 0.001 was applied to the convolutional and dense layers to reduce overfitting by penalizing large weight values, thereby improving generalization.
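To make the relationship between kernel size and receptive field concrete, the following NumPy sketch stacks two single-channel convolutions with kernel sizes 10 and 5. It is only an illustration: stride 1, no padding, no pooling between the layers, the averaging kernels, and the 200-sample input length are assumptions made for this example and are not taken from the model description.

```python
import numpy as np

def conv1d_valid(x, kernel):
    """Single-channel 1D cross-correlation with stride 1 and no padding."""
    k = len(kernel)
    return np.array([np.dot(x[i:i + k], kernel) for i in range(len(x) - k + 1)])

def stacked_receptive_field(kernel_sizes):
    """Receptive field of stride-1 convolutions stacked without pooling."""
    return 1 + sum(k - 1 for k in kernel_sizes)

rng = np.random.default_rng(0)
segment = rng.standard_normal(200)               # hypothetical 200-sample ECG segment
out1 = conv1d_valid(segment, np.ones(10) / 10)   # first layer, kernel size 10
out2 = conv1d_valid(out1, np.ones(5) / 5)        # second layer, kernel size 5

print(len(out1), len(out2))                      # 191 187
print(stacked_receptive_field([10, 5]))          # 14
```

Under these assumptions, each output of the second layer depends on 14 consecutive input samples, so the two layers together respond to slightly longer local patterns than either kernel alone.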

The model architecture also included two GRU layers with 124 and 64 units. The GRU layers extract sequential features and long-term dependencies from the ECG data, increasing the ability of the model to capture the temporal dynamics that are crucial for accurate arrhythmia classification. An attention mechanism was integrated into the model to further enhance its performance. This mechanism dynamically focuses on the most significant portions of the input sequence by computing a weighted sum of the input features, where each feature’s importance is indicated by its weight. This process increases the model’s interpretability and performance by emphasizing critical features for arrhythmia classification.
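The weighted-sum idea behind the attention mechanism can be sketched in a few lines of NumPy. The exact attention formulation used in the model is not specified here, so the dot-product scoring, the `score_vector` parameter, and the tensor shapes below are assumptions chosen for illustration.

```python
import numpy as np

def attention_pool(hidden_states, score_vector):
    """Collapse a (time, features) sequence into one context vector.

    score_vector is a hypothetical learned parameter of shape (features,).
    """
    scores = hidden_states @ score_vector            # one score per time step
    scores = scores - scores.max()                   # numerical stability
    weights = np.exp(scores) / np.exp(scores).sum()  # softmax over time steps
    context = weights @ hidden_states                # weighted sum of time steps
    return context, weights

rng = np.random.default_rng(0)
h = rng.standard_normal((50, 64))   # e.g. 50 time steps from a 64-unit GRU layer
v = rng.standard_normal(64)
context, weights = attention_pool(h, v)
print(context.shape, round(float(weights.sum()), 6))
```

The `weights` vector sums to one and can be plotted over the time axis, which is what makes attention useful as a coarse indicator of where the model is focusing.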

The fully connected part of the network consisted of two dense layers. The first dense layer comprised 512 nodes and introduced non-linearity through the Rectified Linear Unit (ReLU) activation function. This layer enabled the network to learn complex combinations of the features extracted by the previous layers, enhancing its discriminative capabilities. The second dense layer had four nodes, corresponding to the four arrhythmia classes, and produced class probabilities using the softmax activation function.

The Adam optimizer was used to train the model at a learning rate of 0.001. The learning rate is an important parameter that controls the rate at which the model’s weights are updated during training. It defines the size of the adjustments made to the model’s parameters at each iteration, significantly impacting the speed and convergence of the training process. Higher learning rates can lead to overshooting, where the adjustments are too large, potentially hindering the convergence of the model. A decay rate of 0.9 and 5000 decay steps were applied to reduce the learning rate over time, ensuring more precise fine-tuning of the model’s parameters.
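The effect of the decay settings can be checked with a short calculation. The sketch below assumes a continuous exponential schedule of the form lr = initial_lr × decay_rate^(step / decay_steps), which is the default behaviour of common exponential-decay schedules; whether the experiments used this form or a staircase variant is not stated, so treat the formula as an assumption.

```python
def decayed_learning_rate(step, initial_lr=0.001, decay_rate=0.9, decay_steps=5000):
    """Continuous exponential decay of the learning rate (assumed schedule form)."""
    return initial_lr * decay_rate ** (step / decay_steps)

for step in (0, 5000, 10000, 50000):
    print(step, f"{decayed_learning_rate(step):.6f}")
```

With these values the learning rate falls by 10% every 5000 optimizer steps, so it decreases slowly and remains close to 0.001 during the early part of training.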

The batch size determines the number of samples processed in each iteration and was set to 512 in our experiments. This value was chosen to balance computational efficiency against available memory resources. The model was trained for 100 epochs, that is, 100 complete passes through the entire training dataset. This duration was chosen to give the model sufficient opportunity to iteratively refine its internal parameters and learn the complex patterns necessary for accurate arrhythmia classification from the ECG data. Together, these parameters governed the training dynamics and were selected to support convergence and generalization.
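The relationship between batch size, epochs, and the number of weight updates is simple arithmetic, sketched below. The training-set size of 80,000 samples is a hypothetical figure used only to make the example concrete; it is not the size of the dataset used in the experiments.

```python
import math

def training_steps(n_samples, batch_size=512, epochs=100):
    """Return (steps per epoch, total optimizer steps) for a full training run."""
    steps_per_epoch = math.ceil(n_samples / batch_size)
    return steps_per_epoch, steps_per_epoch * epochs

print(training_steps(80_000))  # (157, 15700)
```

A total step count of this order is also what determines how far a step-based learning-rate decay progresses over the run.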

3.3. Evaluation Metrics

In order to test and assess the performance of the suggested hybrid 1D CNN-eGRU, several performance metrics are utilized in our experiments:

Sensitivity (Recall): Sensitivity, also known as recall, quantifies the capacity of the model to recognize all relevant examples of a specific arrhythmia class. It is the fraction of the actual positive examples in the dataset that the model predicts as positive.

Specificity: Specificity denotes the ratio of correctly identified true negatives by the model among all actual negatives. It is also known as selectivity or the true negative rate, which quantifies the capacity of the model to precisely detect negative instances. Specifically, it assesses the accuracy in identifying examples that do not belong to the target class.

ROC Curve and AUC: The Receiver Operating Characteristic (ROC) curve was used to graphically evaluate the classification performance across various discrimination thresholds. In the ROC, the True Positive Rate is plotted against the False Positive Rate. In addition, the Area Under the ROC Curve (AUC) quantifies the overall performance by calculating the area beneath this curve. A higher AUC value indicates a better ability of the model to distinguish between the different arrhythmia classes.

These measures together offer a comprehensive assessment of the deep learning model in classifying the cardiac arrhythmias.
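For a multi-class problem, the per-class metrics are usually derived from the confusion matrix in a one-vs-rest manner. The sketch below shows one way to do this in NumPy; the four-class confusion matrix is made-up toy data, not a result from this study, and the function name is illustrative.

```python
import numpy as np

def per_class_metrics(cm):
    """cm[i, j] = number of samples with true class i predicted as class j."""
    cm = np.asarray(cm, dtype=float)
    tp = np.diag(cm)                      # correctly classified samples per class
    fn = cm.sum(axis=1) - tp              # samples of the class that were missed
    fp = cm.sum(axis=0) - tp              # other samples assigned to the class
    tn = cm.sum() - tp - fn - fp          # everything else
    sensitivity = tp / (tp + fn)
    specificity = tn / (tn + fp)
    precision = tp / (tp + fp)
    f1 = 2 * precision * sensitivity / (precision + sensitivity)
    return {
        "sensitivity": sensitivity,
        "specificity": specificity,
        "precision": precision,
        "f1": f1,
        "accuracy": tp.sum() / cm.sum(),
    }

# Toy confusion matrix (rows: true class, columns: predicted class).
toy_cm = [[8, 2, 0, 0],
          [1, 95, 3, 1],
          [0, 4, 16, 0],
          [0, 1, 0, 19]]
metrics = per_class_metrics(toy_cm)
for name, value in metrics.items():
    print(name, np.round(value, 3))
```

Averaging each per-class array with equal weight gives the macro-averaged value, which treats minority classes as being as important as the majority class.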

3.4. Performance of the Proposed Hybrid Model

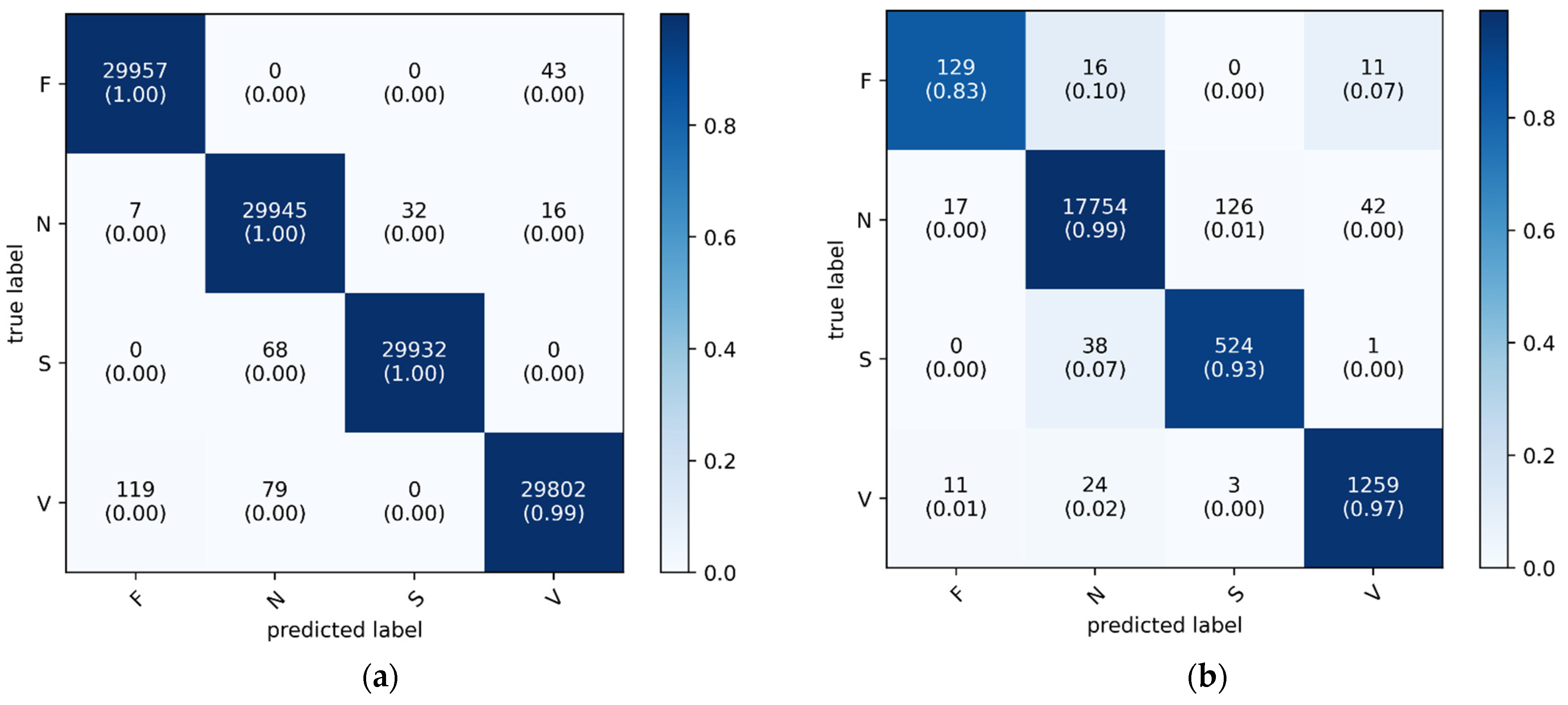

The hybrid 1D CNN-eGRU model performed strongly in the classification of four types of cardiac arrhythmia. On the training set, the model reached an overall accuracy of 100%, which shows that it can fit the various arrhythmia classes. The validation and testing sets both yielded an accuracy of 99%, which supports the generalization capability and robustness of the proposed hybrid CNN-eGRU model. As depicted in Figure 5, the accuracy curve illustrates the performance of the proposed model throughout the training process.

The loss values of the 1D CNN-eGRU were low, at 0.02 on the training dataset and 0.07 on the testing dataset. The slightly higher testing loss reflects a modest increase in error when the model is applied to new data, which is expected given the inherent differences between the training and testing datasets.

The ROC curves, presented in Figure 6 for the training and testing sets, provide insights into the classification performance of the 1D CNN-eGRU for individual arrhythmia types. The AUC values in the training set were 1.00 for all classes, indicating perfect discrimination between different classes. In the testing set, the AUC values were slightly lower but still high, with 0.99 for class F and class S and 1.00 for the remaining classes (N, V).
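Per-class AUC values of this kind are typically obtained by treating each class in turn as the positive class (one-vs-rest). The sketch below computes AUC through its rank interpretation, the probability that a randomly chosen positive sample receives a higher score than a randomly chosen negative one. The labels and scores are toy values, and the function names are illustrative rather than taken from the study’s code.

```python
import numpy as np

def binary_auc(labels, scores):
    """AUC as the probability that a random positive outranks a random negative."""
    labels = np.asarray(labels, dtype=bool)
    scores = np.asarray(scores, dtype=float)
    pos, neg = scores[labels], scores[~labels]
    greater = (pos[:, None] > neg[None, :]).sum()
    ties = (pos[:, None] == neg[None, :]).sum()
    return (greater + 0.5 * ties) / (len(pos) * len(neg))

def one_vs_rest_auc(y_true, probs):
    """Per-class AUC from integer labels and an (n_samples, n_classes) score matrix."""
    y_true = np.asarray(y_true)
    return [binary_auc(y_true == c, probs[:, c]) for c in range(probs.shape[1])]

toy_auc = binary_auc([0, 0, 1, 1], [0.1, 0.4, 0.35, 0.8])
print(toy_auc)  # 0.75

toy_labels = np.array([0, 0, 1, 1, 2, 2])
toy_probs = np.array([[0.7, 0.2, 0.1],
                      [0.6, 0.3, 0.1],
                      [0.2, 0.7, 0.1],
                      [0.4, 0.5, 0.1],
                      [0.1, 0.2, 0.7],
                      [0.2, 0.2, 0.6]])
print(one_vs_rest_auc(toy_labels, toy_probs))
```

The pairwise comparison is quadratic in the number of samples, which is fine for a sketch but would normally be replaced by a sorting-based routine for large test sets.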

As shown in Figure 7, the confusion matrix displays the classification performance of the proposed 1D CNN-eGRU model on both the training (a) and testing (b) datasets. The y-axis represents the actual ground-truth labels for the four arrhythmia classes: fusion beats (F), normal beats (N), supraventricular beats (S), and ventricular beats (V). The x-axis corresponds to the predicted labels generated by the model. Each cell indicates the number of samples classified into each predicted class, with the diagonal cells representing correct classifications. These diagonal entries also include class-wise accuracy percentages in parentheses. A color bar is provided to illustrate the normalized accuracy values, using a blue gradient where darker shades indicate higher classification accuracy and lighter shades indicate lower agreement. This visualization highlights the model’s performance, with darker cells along the diagonal confirming accurate predictions and lighter off-diagonal cells revealing instances of misclassification.

Table 2 compares the performance metrics of the proposed model across the four classes (F, N, S, and V) on the testing dataset. The metrics are precision, sensitivity (recall), F1-score, specificity, AUC, accuracy, and loss. Each measure gives useful insight into how accurately the model classifies instances of each class. Note that all values in all tables are rounded to two decimal places.

Precision quantifies the proportion of true positive predictions among all positive predictions, with high precision indicating a low rate of false positives. In the testing dataset, precision values varied across classes, with class F and class S recording lower values (0.82 and 0.80, respectively) compared to other classes. This indicates that the model encounters some difficulty in distinguishing these classes in unseen data. Despite this, precision remained perfect for class N (1.00) and high for class V (0.96), resulting in an average precision of 0.90.

Sensitivity (Recall) measures the proportion of actual positive instances correctly identified by the model. The sensitivity values in the testing dataset remained high, with class N, S, and V achieving values above 0.90, while class F showed a lower sensitivity of 0.83. The overall average sensitivity of 0.93 indicates that the model is generally effective in identifying arrhythmia cases, though challenges exist for specific classes with lower sample representation.

F1-score, which balances precision and recall, showed similar patterns. The testing dataset recorded an F1-score of 0.82 for class F and 0.86 for class S, whereas the other classes performed significantly better. The average F1-score of 0.91 demonstrates that the model maintains an effective balance between precision and recall, although class imbalance slightly impacts its ability to generalize across all classes.
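The averages and F1-scores quoted above can be sanity-checked from the per-class values given in the text. The sketch below assumes that the reported averages are unweighted (macro) means over the four classes, which is consistent with the numbers but is not stated explicitly.

```python
def f1_score(precision, recall):
    """Harmonic mean of precision and recall."""
    return 2 * precision * recall / (precision + recall)

# Per-class testing precision values quoted in the text.
precision = {"F": 0.82, "N": 1.00, "S": 0.80, "V": 0.96}
macro_precision = sum(precision.values()) / len(precision)

print(round(macro_precision, 3))            # 0.895, reported as 0.90
print(round(f1_score(0.82, 0.83), 2))       # class F: 0.82
```

The class F check uses the precision (0.82) and sensitivity (0.83) reported for that class and reproduces the reported F1-score of 0.82.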

Specificity evaluates the capability of the model to correctly identify negative cases. The model achieved near-perfect specificity across all classes, with values ranging from 0.97 (class N) to 1.00 (class F and V). The average specificity of 0.99 confirms that the model is highly reliable in ruling out negative instances, so few beats are incorrectly assigned to a class they do not belong to.

The AUC values provide insight into the ability of the model to differentiate between different arrhythmia classes. The model demonstrated excellent discriminative power since it achieved near-perfect AUC values (0.99–1.00) for all classes. The average AUC of 1.00 reflects the high robustness and effectiveness of the model in classification of the different arrhythmia categories.

The overall accuracy of 0.99 confirms that the model generalizes well to unseen data, correctly classifying the majority of testing samples. Additionally, the low loss value of 0.07 further indicates the stability and reliability of the trained model.

Despite its strong performance, the model exhibits slight limitations in precision and sensitivity, particularly for class F (156 samples) and class S (563 samples), which are underrepresented compared to other classes. Although a hybrid data balancing approach, including resampling and class weighting, was employed to mitigate class imbalance, the disparity in sample sizes remains a challenge.
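As a generic illustration of how resampling and class weighting can be combined, the sketch below first oversamples a minority class by random duplication and then computes inverse-frequency class weights on the resampled labels. This is not the exact balancing procedure used in this study: the random-duplication strategy, the "balanced" weighting heuristic, the function names, and the toy data are all assumptions made for the example.

```python
import numpy as np

def balanced_class_weights(labels):
    """weight_c = n_samples / (n_classes * count_c), a common 'balanced' heuristic."""
    classes, counts = np.unique(labels, return_counts=True)
    weights = len(labels) / (len(classes) * counts)
    return dict(zip(classes.tolist(), weights.tolist()))

def oversample_to(X, y, target_class, n_target, seed=0):
    """Randomly duplicate samples of one class until it has n_target samples."""
    rng = np.random.default_rng(seed)
    idx = np.flatnonzero(y == target_class)
    extra = rng.choice(idx, size=max(0, n_target - len(idx)), replace=True)
    keep = np.concatenate([np.arange(len(y)), extra])
    return X[keep], y[keep]

# Toy data: 90 majority-class samples and 10 minority-class samples.
y = np.array([0] * 90 + [1] * 10)
X = np.arange(100, dtype=float).reshape(-1, 1)

print(balanced_class_weights(y))            # weights before resampling
X_res, y_res = oversample_to(X, y, target_class=1, n_target=30)
weights_res = balanced_class_weights(y_res)
print(len(y_res), weights_res)              # weights after partial oversampling
```

Partial oversampling followed by weighting limits the number of duplicated minority samples while the remaining imbalance is compensated in the loss; the resulting dictionary is in the form that many training APIs accept as per-class loss weights.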

3.5. Comparison with Standalone 1D CNN

To evaluate the effectiveness of the proposed 1D CNN-eGRU model, its performance was compared against a standalone 1D CNN model. The evaluation metrics, presented in Table 3, serve as a baseline to assess the advantages of integrating GRU layers with CNN.

In terms of precision, as can be observed from Table 2 and Table 3, the proposed 1D CNN-eGRU model achieved an average precision of 0.90, which is higher than the 0.87 obtained by the standalone 1D CNN model. This improvement is particularly noticeable in class F (0.82 vs. 0.76) and class S (0.80 vs. 0.78), indicating that the incorporation of GRU layers enhances the model’s ability to distinguish between arrhythmia types, particularly for minority classes.

As can be seen in Table 2 and Table 3, the sensitivity of both models remained comparable, with an average of 0.93 in both cases. For class F, the standalone CNN model in fact reached a slightly higher sensitivity than the hybrid model (0.85 vs. 0.83). The benefit of the GRU layers, which model the temporal dependencies of ECG signals that CNN layers alone may not fully capture, therefore appears in precision rather than in sensitivity.

The F1-score of the proposed hybrid model, which balances precision and recall, was 0.91 compared to 0.90 achieved by the standalone CNN model. The improvement is particularly evident in class F (0.82 vs. 0.80) and class S (0.86 vs. 0.85). These results confirm that the hybrid model maintains a better trade-off between precision and sensitivity, leading to more reliable classification performance.

In terms of specificity and AUC, both models exhibited strong performance. The specificity values remained high in both models (0.99 on average) to ensure minimal false positives. Similarly, AUC values were nearly identical between the two models. The proposed hybrid model achieved an average of 1.00 and the standalone CNN model reached 0.99. These results indicate that both architectures are highly capable of distinguishing between arrhythmia classes.

The most significant improvement was observed in accuracy and loss. The 1D CNN-eGRU model achieved a higher accuracy (0.99) compared to 0.98 in the standalone 1D CNN model. Additionally, the loss was significantly lower in the hybrid model (0.07) compared to 0.16 in the CNN-only model, indicating that the incorporation of GRU layers contributed to improved stability and convergence.

3.6. Comparison with Standalone GRU

A second comparative analysis was conducted between the proposed 1D CNN-eGRU model and a standalone GRU model. The performance metrics of the GRU-only architecture, presented in Table 4, highlight the limitations of using GRU layers alone without CNN-based feature extraction.

As can be observed from Table 2 and Table 4, the precision of the proposed hybrid model (0.90) was significantly higher than that of the GRU model (0.84). This difference was particularly evident in class F (0.82 vs. 0.70) and class S (0.80 vs. 0.71), demonstrating that the CNN component plays a crucial role in improving classification accuracy, particularly for minority classes.

In terms of the sensitivity measure, the proposed hybrid 1D CNN-eGRU model showed slight improvement (0.93) compared to the GRU model (0.92). This highlights the ability of the model to correctly identify true positives for different arrhythmia classes.

The proposed hybrid 1D CNN-eGRU model demonstrated superior performance across several key metrics compared to the standalone GRU model. It achieved higher overall accuracy (0.99 vs. 0.98) and a higher average F1-score (0.91 vs. 0.88). The F1-score improvement was driven mainly by higher precision for classes F and S, which indicates that the CNN component extracted features that benefited the GRU’s sequential analysis. The hybrid model also produced a lower loss (0.07 vs. 0.16), which suggests more efficient learning and potentially better generalization. Both models achieved high specificity and AUC values, reflecting strong capabilities in minimizing false positives and discriminating between classes, although the hybrid model showed marginally higher average specificity (0.99 vs. 0.98). The near-identical AUC scores confirm the discriminative power of both architectures, yet the collective improvements in accuracy, F1-score, precision, and loss underscore the advantages of integrating CNN feature extraction with GRU sequence modeling for this arrhythmia classification task.

3.7. Interpretability Analysis with Sig-LIME

Sig-LIME (signal-based enhancement of LIME) [46] is an extension of Local Interpretable Model-agnostic Explanations (LIME) [47] tailored for signal data, addressing limitations of the traditional LIME when applied to temporally dependent data. Sig-LIME leverages signal-specific features to generate more accurate and reliable explanations for machine learning models, particularly in fields requiring precise temporal data interpretation such as ECG analysis and other time-series applications. The technique also tackles the issues of instability and local fidelity in LIME by integrating new data generation and weighting techniques, ensuring more consistent and faithful explanations.
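For orientation, the general LIME recipe that Sig-LIME builds on can be sketched for a 1D signal as follows: split the signal into segments, randomly switch segments off, query the model on each perturbed copy, and fit a proximity-weighted linear surrogate whose coefficients serve as segment importances. This is a sketch of plain LIME-style perturbation, not of Sig-LIME’s own data generation and weighting schemes. The equal-width segmentation, zero-filling of removed segments, the kernel width, and the toy `toy_model` function are all assumptions made for this example.

```python
import numpy as np

def lime_style_segment_importance(signal, predict_fn, n_segments=10,
                                  n_samples=500, seed=0):
    """Fit a weighted linear surrogate over on/off masks of signal segments."""
    rng = np.random.default_rng(seed)
    bounds = np.linspace(0, len(signal), n_segments + 1).astype(int)
    masks = rng.integers(0, 2, size=(n_samples, n_segments))
    masks[0] = 1                                    # keep the unperturbed signal
    outputs = np.empty(n_samples)
    for i, mask in enumerate(masks):
        perturbed = signal.copy()
        for s in np.flatnonzero(mask == 0):
            perturbed[bounds[s]:bounds[s + 1]] = 0.0   # switch the segment off
        outputs[i] = predict_fn(perturbed)
    # Proximity kernel: masks closer to the original signal get more weight.
    distance = 1.0 - masks.mean(axis=1)
    sample_w = np.exp(-(distance ** 2) / 0.25)
    # Weighted least squares with an intercept column.
    root_w = np.sqrt(sample_w)[:, None]
    A = np.hstack([masks, np.ones((n_samples, 1))]) * root_w
    b = outputs * root_w[:, 0]
    coef, *_ = np.linalg.lstsq(A, b, rcond=None)
    return coef[:-1]                                 # one importance per segment

def toy_model(x):
    """Hypothetical stand-in for a classifier score: energy in samples 40-59."""
    return float(np.abs(x[40:60]).sum())

toy_signal = np.ones(100)
importance = lime_style_segment_importance(toy_signal, toy_model)
print(np.round(importance, 3))
print(sorted(np.argsort(importance)[-2:].tolist()))  # segments covering samples 40-59
```

In this toy case the surrogate assigns essentially all importance to the two segments that the stand-in model actually uses, which is the behaviour an explanation method is expected to show on a model with known structure.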

Figure 8 illustrates the application of the Sig-LIME explanation technique to a 1D CNN-eGRU model used for classifying cardiac arrhythmia. The plot on the bottom right shows the Sig-LIME explanation for the input signal. The highlighted regions indicate the portions of the signal that contributed most to the model’s classification decision. These regions are where the model focused its attention to classify the signal as class N.

Figure 9 shows the Sig-LIME explanations as heatmaps, in which the highlighted features lie primarily around the QRS complex. Across the examples, Sig-LIME consistently emphasizes the regions of the P-wave, QRS complex, and T-wave as the parts of the signal used to differentiate between classes and classify the various arrhythmias. Notably, the QRS complex is crucial in diagnosing a wide range of cardiac pathologies, including arrhythmias [48], which supports the explanations generated by Sig-LIME. The explanations demonstrate the proposed model’s capability to utilize these areas to effectively distinguish between different classes.

3.8. Comparison with Existing Works

This section presents a comparative analysis of the proposed 1D CNN-eGRU classifier against several existing deep learning-based models reported in recent literature. The evaluation is based on three primary performance metrics: accuracy, sensitivity, and specificity. Table 5 summarizes the results, and the percentage differences are discussed to highlight the improvements achieved by the proposed model.

The proposed 1D CNN-eGRU classifier outperforms the compared models on most key metrics. When compared to the standard 1D CNN model [49], our approach yields a 2.06% increase in accuracy (0.97 vs. 0.99), a slight 2.08% reduction in sensitivity (0.96 vs. 0.94), and a 1.02% improvement in specificity (0.98 vs. 0.99).
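The percentage differences in this section appear to be relative changes with respect to the compared model, that is, (proposed − baseline) / baseline × 100. The short check below, with an illustrative function name, reproduces the three figures quoted for the standard 1D CNN model under that assumption.

```python
def relative_change_pct(baseline, proposed):
    """Relative change of the proposed value with respect to the baseline, in percent."""
    return (proposed - baseline) / baseline * 100

print(f"{relative_change_pct(0.97, 0.99):+.2f}%")  # accuracy: +2.06%
print(f"{relative_change_pct(0.96, 0.94):+.2f}%")  # sensitivity: -2.08%
print(f"{relative_change_pct(0.98, 0.99):+.2f}%")  # specificity: +1.02%
```

Because these are relative rather than absolute differences, a change of 0.02 in a metric translates into a slightly different percentage depending on the baseline value.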

Against the CNN-BiLSTM classifier developed by Hassan et al. [50], the proposed model achieves a 1.02% higher accuracy, a 3.30% increase in sensitivity, and a substantial 8.79% improvement in specificity. Similarly, when compared to the CNN-LSTM approach reported by Essa et al. [51], our model shows a 3.12% improvement in accuracy, a notable 36.23% gain in sensitivity, and a 4.21% increase in specificity.

Compared to the CNN-BiLSTM model by Xu et al. [18], the 1D CNN-eGRU architecture delivers a 3.12% higher accuracy (0.96 vs. 0.99), a 2.08% reduction in sensitivity, and a 3.12% enhancement in specificity.

In comparison with the DenseNet-GRU model [52], our proposed method achieves a 7.61% improvement in accuracy, a 14.63% increase in sensitivity (0.82 vs. 0.94), and a 3.12% rise in specificity. When evaluated against another 1D CNN model reported in [53], the proposed model outperforms it with a 4.21% higher accuracy, a 22.08% increase in sensitivity, and a 2.06% improvement in specificity.

Additional comparisons further validate the robustness of the proposed model. Our 1D CNN-eGRU classifier shows significant improvement over the RNN-LSTM model proposed by Singh et al. [17], with a 12.50% increase in accuracy, a 2.17% improvement in sensitivity, and a 19.28% gain in specificity. Compared to RISNet by Kachuee et al. [14], our classifier achieves a 6.45% higher accuracy and a 1.08% increase in sensitivity; however, specificity was not reported for RISNet.

When evaluated against the 1D CNN model in [54], our approach shows a 5.32% improvement in accuracy and a 7.61% increase in specificity, though it demonstrates a 3.09% lower sensitivity. Finally, when compared with the BBNN architecture developed by Shadmand et al. [55], our model attains a 1.02% improvement in accuracy and a remarkable 28.77% increase in sensitivity, with both models achieving an identical specificity of 0.99.

In addition to improved classification performance, our approach offers enhanced model interpretability through the integration of the Sig-LIME technique. By applying Sig-LIME, we are able to generate localized explanations for model predictions, highlighting the ECG signal regions that most influenced the decision. This capability addresses a critical gap in many existing deep learning approaches, which often operate as black-box models.

3.9. Discussion

The results in Table 2 highlight the effectiveness of the CNN-eGRU hybrid architecture in accurately classifying arrhythmias while also identifying areas for further enhancement, particularly in handling class imbalances. The high specificity, AUC, and overall accuracy reinforce the model’s strong predictive capabilities, making it a reliable tool for automated ECG-based arrhythmia classification.

Compared to the baseline 1D CNN model, the results in Table 2 and Table 3 demonstrate that the proposed hybrid CNN-eGRU model outperforms the standalone 1D CNN model in precision, F1-score, accuracy, and loss reduction, particularly benefiting underrepresented classes (F and S). While sensitivity, specificity, and AUC values remained comparable, the lower loss and higher precision in the hybrid model suggest that integrating GRU layers enhances the overall classification performance.

Compared to the standalone GRU model, the results in Table 2 and Table 4 demonstrate that the proposed hybrid model significantly outperforms the standalone GRU model in precision, F1-score, and accuracy, confirming the importance of CNN layers in feature extraction. The lower loss and improved classification performance in underrepresented classes (F and S) emphasize the advantage of combining CNN with GRU for ECG classification. The standalone GRU model struggled with precision, suggesting that sequential dependencies alone are insufficient without effective feature extraction from CNN layers.

To produce more accurate, meaningful, and trustworthy local explanations for cardiac arrhythmia classification, the Sig-LIME explanation technique is applied to the proposed 1D CNN-eGRU model. As illustrated in Figure 9, the Sig-LIME explanations demonstrate that the proposed 1D CNN-eGRU model can utilize the P-wave, QRS complex, and T-wave to effectively distinguish between different arrhythmia types.

When the proposed hybrid 1D CNN-eGRU model is compared to the existing works, the results in Table 5 demonstrate that it outperforms most of the previous works in terms of accuracy, sensitivity, and specificity. This indicates that integrating GRU layers and an attention mechanism contributes to the performance of the proposed hybrid CNN-eGRU in arrhythmia classification. Furthermore, whereas most of the existing works operate as black-box models, the proposed 1D CNN-eGRU model uses Sig-LIME to enhance the interpretability, transparency, and trustworthiness of the model’s predictions.