An Energy System Modeling Approach for Power Transformer Oil Temperature Prediction Based on CEEMD and Robust Deep Ensemble RVFL

Abstract

1. Introduction

- (1) For the first time, CEEMD is combined with ORedRVFL for oil temperature prediction, addressing three bottlenecks of traditional methods: sensitivity to noise, overfitting to outliers, and blind (trial-and-error) hyperparameter tuning.

- (2) A Huber-norm regularization layer is introduced into edRVFL for the first time to suppress the influence of outliers and improve the model’s generalization ability.

- (3) Tent chaotic initialization replaces random initialization in the DE algorithm to avoid premature convergence; the resulting Tent chaotic DE (TDE) optimizes the hyperparameters of the ORedRVFL model, thereby improving its prediction accuracy.

- (4) A recursive correction mechanism for the residual component is established to eliminate cumulative prediction bias, allowing the model to maintain high precision on complex data and improving its reliability in practical applications.

2. Methods

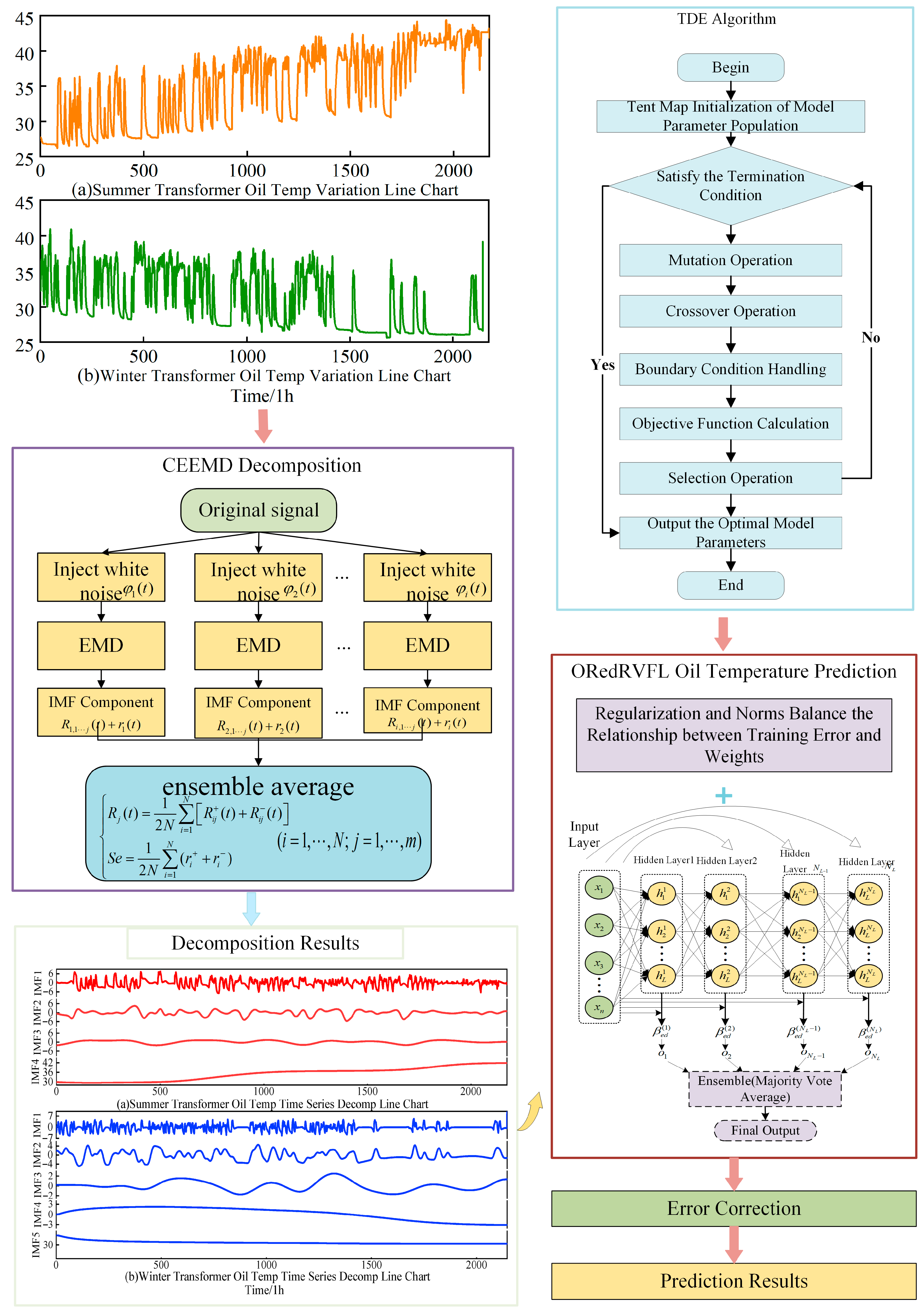

2.1. Complementary Ensemble Empirical Mode Decomposition

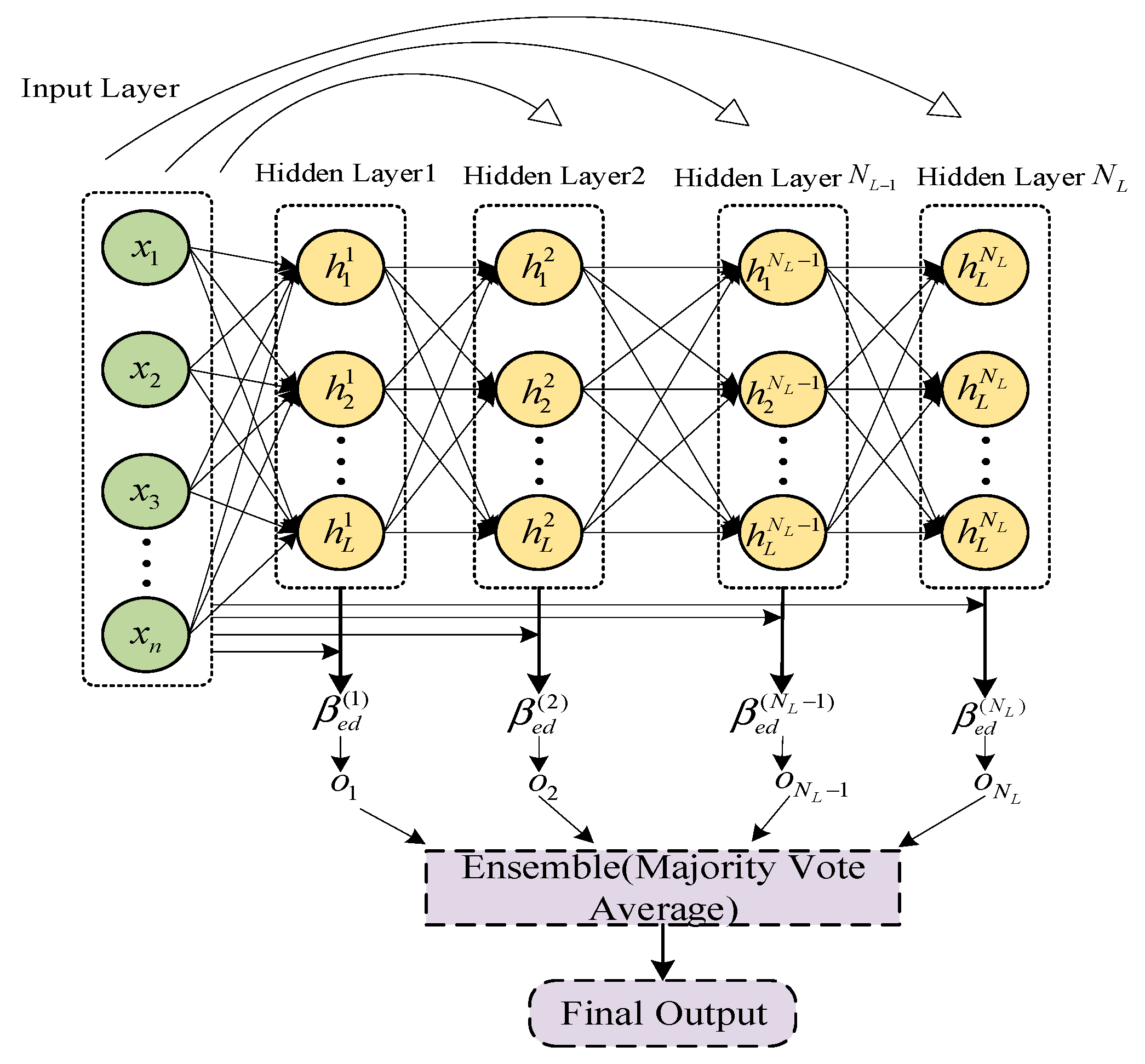
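
CEEMD adds white noise to the signal in positive/negative pairs, decomposes each noisy copy with EMD, and averages the resulting IMFs, so that the added noise cancels when the components are summed. The Python sketch below illustrates that idea under simplifying assumptions that are ours, not the paper's: a basic EMD with a fixed number of sifting passes and endpoints pinned to the envelopes, a fixed maximum number of IMFs (zero-padded when fewer are found), and a noise amplitude of 0.2 times the signal standard deviation with 50 noise pairs. The helper names `emd` and `ceemd` are hypothetical.

```python
import numpy as np
from scipy.interpolate import CubicSpline


def _extrema(h):
    """Indices of interior local maxima and minima of a 1-D array."""
    d = np.diff(h)
    maxima = np.where((d[:-1] > 0) & (d[1:] <= 0))[0] + 1
    minima = np.where((d[:-1] < 0) & (d[1:] >= 0))[0] + 1
    return maxima, minima


def _envelope(t, h, idx):
    """Cubic-spline envelope through the extrema; endpoints pinned (a simplification)."""
    knots = np.concatenate(([0], idx, [len(h) - 1]))
    return CubicSpline(t[knots], h[knots])(t)


def emd(x, max_imfs=6, n_sifts=10):
    """Basic EMD: a fixed number of sifting passes per IMF, zero-padded to max_imfs."""
    t = np.arange(len(x), dtype=float)
    imfs = np.zeros((max_imfs, len(x)))
    residual = np.asarray(x, dtype=float).copy()
    for k in range(max_imfs):
        maxima, minima = _extrema(residual)
        if len(maxima) < 2 or len(minima) < 2:
            break  # too few extrema left: the residual is treated as the trend
        h = residual.copy()
        for _ in range(n_sifts):
            maxima, minima = _extrema(h)
            if len(maxima) < 2 or len(minima) < 2:
                break
            # Subtract the mean of the upper and lower envelopes.
            h = h - 0.5 * (_envelope(t, h, maxima) + _envelope(t, h, minima))
        imfs[k] = h
        residual = residual - h
    return imfs, residual


def ceemd(x, n_pairs=50, noise_std=0.2, max_imfs=6, seed=0):
    """Average EMD results over paired +/- white-noise realizations."""
    x = np.asarray(x, dtype=float)
    rng = np.random.default_rng(seed)
    scale = noise_std * x.std()
    components = np.zeros((max_imfs + 1, len(x)))  # last row holds the residual trend
    for _ in range(n_pairs):
        w = scale * rng.standard_normal(len(x))
        for sign in (1.0, -1.0):  # the complementary pair cancels the noise on summation
            imfs, res = emd(x + sign * w, max_imfs)
            components[:-1] += imfs
            components[-1] += res
    return components / (2 * n_pairs)
```

Each row of the returned array is one sub-series (an IMF or the residual trend). Because every noise realization is used once with each sign, the rows sum back to the original series up to floating-point rounding.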

2.2. Robust Deep Ensemble RVFL
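
A robust edRVFL of the kind named in this section can be sketched as follows. This is an illustration under our own assumptions, not the authors' implementation: tanh hidden units with weights and biases drawn from U(-1, 1); each layer receives the original inputs concatenated with the previous layer's hidden output; each layer has its own output layer over direct links, hidden features, and a bias; layer predictions are averaged; and the Huber-norm regularization is interpreted as fitting the output weights with a Huber loss plus an L2 penalty, solved by iteratively reweighted least squares (IRLS). `huber_ridge` and `RobustEdRVFL` are hypothetical names.

```python
import numpy as np


def huber_ridge(D, y, lam=1e-2, delta=1.0, n_iter=30):
    """Minimize sum_i huber_delta(y_i - D_i @ beta) + (lam / 2) * ||beta||^2 by IRLS."""
    eye = lam * np.eye(D.shape[1])
    beta = np.linalg.solve(D.T @ D + eye, D.T @ y)  # ridge solution as warm start
    for _ in range(n_iter):
        r = np.abs(y - D @ beta)
        # Huber weights: 1 in the quadratic zone, delta/|r| in the linear zone.
        w = np.where(r <= delta, 1.0, delta / np.maximum(r, 1e-12))
        Dw = D * w[:, None]
        beta = np.linalg.solve(D.T @ Dw + eye, Dw.T @ y)
    return beta


class RobustEdRVFL:
    """Deep ensemble RVFL with Huber-fitted output layers (illustrative sketch)."""

    def __init__(self, n_layers=3, n_hidden=64, lam=1e-2, delta=1.0, seed=0):
        self.n_layers, self.n_hidden = n_layers, n_hidden
        self.lam, self.delta, self.seed = lam, delta, seed

    def _design(self, X, H):
        # Direct input links + random hidden features + bias column.
        return np.hstack([X, H, np.ones((X.shape[0], 1))])

    def fit(self, X, y):
        rng = np.random.default_rng(self.seed)
        self.layers, H = [], None
        for _ in range(self.n_layers):
            inp = X if H is None else np.hstack([X, H])
            W = rng.uniform(-1.0, 1.0, (inp.shape[1], self.n_hidden))
            b = rng.uniform(-1.0, 1.0, self.n_hidden)
            H = np.tanh(inp @ W + b)  # random hidden layer, never trained
            beta = huber_ridge(self._design(X, H), y, self.lam, self.delta)
            self.layers.append((W, b, beta))
        return self

    def predict(self, X):
        preds, H = [], None
        for W, b, beta in self.layers:
            inp = X if H is None else np.hstack([X, H])
            H = np.tanh(inp @ W + b)
            preds.append(self._design(X, H) @ beta)
        return np.mean(preds, axis=0)  # ensemble average over layers
```

Setting `delta=np.inf` makes every IRLS weight equal to 1 and recovers an ordinary ridge-fitted edRVFL, which is a convenient baseline for checking how much the Huber loss limits the pull of outliers.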

2.3. Tent Chaotic Differential Evolution Algorithm (TDE)
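
Contribution (3) replaces the random initialization of DE with Tent chaotic initialization. The sketch below assumes the parametric tent map z_{k+1} = z_k / a if z_k < a, else (1 - z_k) / (1 - a), with a = 0.7 (a common choice; the paper's parameter is not given here), followed by classic DE/rand/1/bin with F = 0.5, CR = 0.9, and greedy one-to-one selection. In the paper TDE tunes ORedRVFL hyperparameters; here `objective` is any callable, for example a validation RMSE. The function names are hypothetical.

```python
import numpy as np


def tent_population(n_pop, lb, ub, a=0.7, rng=None):
    """Initial population from iterating a tent map in [0, 1], scaled to [lb, ub]."""
    rng = np.random.default_rng() if rng is None else rng
    z = np.empty((n_pop, lb.size))
    z[0] = rng.uniform(0.01, 0.99, lb.size)  # random seed point for the chaotic sequence
    for i in range(1, n_pop):
        prev = z[i - 1]
        z[i] = np.where(prev < a, prev / a, (1.0 - prev) / (1.0 - a))
    return lb + z * (ub - lb)


def tde(objective, lb, ub, n_pop=30, n_gen=200, F=0.5, CR=0.9, seed=0):
    """DE/rand/1/bin with tent-map initialization; returns (best_x, best_f)."""
    rng = np.random.default_rng(seed)
    lb, ub = np.asarray(lb, dtype=float), np.asarray(ub, dtype=float)
    dim = lb.size
    pop = tent_population(n_pop, lb, ub, rng=rng)
    fit = np.array([objective(p) for p in pop])
    for _ in range(n_gen):
        for i in range(n_pop):
            others = [j for j in range(n_pop) if j != i]
            r1, r2, r3 = rng.choice(others, 3, replace=False)
            mutant = np.clip(pop[r1] + F * (pop[r2] - pop[r3]), lb, ub)
            cross = rng.random(dim) < CR
            cross[rng.integers(dim)] = True  # guarantee at least one mutant gene
            trial = np.where(cross, mutant, pop[i])
            f_trial = objective(trial)
            if f_trial <= fit[i]:  # greedy one-to-one selection
                pop[i], fit[i] = trial, f_trial
    best = int(np.argmin(fit))
    return pop[best], fit[best]
```

The chaotic sequence only changes where the search starts; mutation, crossover, and selection are those of standard DE.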

2.4. Error Correction Model
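
Contribution (4) describes a recursive correction of the residual component. One simple way to realize such a correction, assumed here purely for illustration, is to fit an autoregressive AR(p) model with intercept to the most recent window of base-model residuals and add its one-step-ahead forecast to the next base prediction. The order (3), window length (50), and the names `fit_ar`, `predict_next`, and `rolling_correction` are hypothetical choices, not taken from the paper.

```python
import numpy as np


def fit_ar(e, p):
    """Least-squares AR(p) with intercept: e_t ~ c + sum_k phi_k * e_{t-k}."""
    n = len(e)
    lags = [e[p - k - 1 : n - k - 1] for k in range(p)]  # column k holds lag k+1
    X = np.column_stack(lags + [np.ones(n - p)])
    coef, *_ = np.linalg.lstsq(X, e[p:], rcond=None)
    return coef


def predict_next(e, coef):
    """One-step-ahead residual forecast from the last p observed residuals."""
    p = len(coef) - 1
    return float(np.concatenate([e[::-1][:p], [1.0]]) @ coef)


def rolling_correction(y_true, y_pred, p=3, window=50):
    """Correct each forecast with a residual forecast built only from past residuals."""
    y_true, y_pred = np.asarray(y_true, float), np.asarray(y_pred, float)
    resid = y_true - y_pred
    corrected = y_pred.copy()
    for t in range(window, len(y_pred)):
        past = resid[t - window : t]  # residuals already observed before time t
        corrected[t] = y_pred[t] + predict_next(past, fit_ar(past, p))
    return corrected
```

Only residuals observed before time t enter the correction at t, so the sketch does not leak future information; a persistent bias in the base model is removed once the warm-up window has been filled.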

2.5. Construction of the Transformer Top Oil Temperature Prediction Model

3. Data Preprocessing

3.1. Dataset Introduction

3.2. Data Decomposition and Partitioning

3.3. Random Noise Data

4. Experimental Results and Analysis

4.1. The Performance Comparison of Algorithms

4.2. Evaluation Metrics

4.3. Experimental Design and Result Analysis

5. Diebold-Mariano Test

6. Conclusions

- (1) The CEEMD algorithm decomposes the oil temperature sequence into multiple sub-sequences of different frequencies, making the data markedly more regular and predictable. Experiments show that introducing the decomposition (ORedRVFL versus CEEMD-ORedRVFL) reduced the RMSE of the summer and winter oil temperature predictions by 5.05% and 4.13%, respectively.

- (2) Adding Huber-norm regularization to edRVFL yields the ORedRVFL model, whose accuracy degrades significantly less than that of edRVFL when random noise is added to the data. This confirms its robustness and anti-interference capability.

- (3) The error correction mechanism further improved prediction accuracy, so that the predictions track the actual changes in transformer oil temperature more closely.

- (4) The proposed model achieves the lowest RMSE of all compared models in both seasons, giving more accurate transformer oil temperature prediction than the control group models.
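
The percentages in item (1) can be checked against the first results table: they equal the relative RMSE reduction from ORedRVFL to CEEMD-ORedRVFL, about 5.05% in summer and 4.13% in winter. The helper name `relative_reduction` is ours.

```python
# RMSE values copied from the first results table (ORedRVFL vs. CEEMD-ORedRVFL).
rmse_table = {
    "summer": {"ORedRVFL": 0.75688, "CEEMD-ORedRVFL": 0.71868},
    "winter": {"ORedRVFL": 0.69605, "CEEMD-ORedRVFL": 0.66732},
}


def relative_reduction(before, after):
    """Percentage drop in RMSE from `before` to `after`."""
    return 100.0 * (before - after) / before


for season, r in rmse_table.items():
    print(season, round(relative_reduction(r["ORedRVFL"], r["CEEMD-ORedRVFL"]), 2))
# prints "summer 5.05", then "winter 4.13"
```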

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest


| Statistic | Summer (°C) | Winter (°C) |
|---|---|---|
| Maximum Value | 44.381 | 40.922 |
| Minimum Value | 26.166 | 25.631 |
| Average Value | 34.9936 | 30.8591 |

| Benchmark Function | Algorithm | Average Value | Standard Deviation | Optimal Solution |
|---|---|---|---|---|
| | ASO | 7.89 × 10⁻⁵ | 0.000249401 | 2.94 × 10⁻¹⁵ |
| | PSO | 0.85220533 | 0.330388366 | 0.362945255 |
| | HHO | 11566.65089 | 3885.164694 | 5835.221608 |
| | DE | 3.89 × 10⁻¹⁵ | 3.36 × 10⁻¹⁵ | 5.90 × 10⁻¹⁶ |
| | TDE | 6.16 × 10⁻⁶² | 1.53 × 10⁻⁶¹ | 2.51 × 10⁻⁷⁵ |
| | ASO | 42.21042681 | 6.548604671 | 35.82220426 |
| | PSO | 56.32590351 | 14.01800727 | 41.41953517 |
| | HHO | 297.6034322 | 19.8717627 | 268.7495013 |
| | DE | 5.357551215 | 5.168711573 | 1.59 × 10⁻¹² |
| | TDE | 0 | 0 | 0 |
| | ASO | 1.794378737 | 1.023738232 | 0.998003838 |
| | PSO | 1.494226808 | 0.843210368 | 0.998003838 |
| | HHO | 1.295816676 | 0.669811166 | 0.998003838 |
| | DE | 5.598097203 | 4.530763325 | 0.998003839 |
| | TDE | 1.097406545 | 0.314338957 | 0.998003838 |

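| Assessment Indicator | Formula |
|---|---|
| Root Mean Square Error (RMSE) | $\mathrm{RMSE}=\sqrt{\frac{1}{n}\sum_{i=1}^{n}(y_i-\hat{y}_i)^2}$ |
| Mean Absolute Error (MAE) | $\mathrm{MAE}=\frac{1}{n}\sum_{i=1}^{n}\lvert y_i-\hat{y}_i\rvert$ |
| Mean Absolute Percentage Error (MAPE) | $\mathrm{MAPE}=\frac{1}{n}\sum_{i=1}^{n}\left\lvert\frac{y_i-\hat{y}_i}{y_i}\right\rvert$ |
| Correlation Coefficient (R) | $R=\frac{\sum_{i=1}^{n}(y_i-\bar{y})(\hat{y}_i-\bar{\hat{y}})}{\sqrt{\sum_{i=1}^{n}(y_i-\bar{y})^2}\sqrt{\sum_{i=1}^{n}(\hat{y}_i-\bar{\hat{y}})^2}}$ |

where $y_i$ and $\hat{y}_i$ are the measured and predicted oil temperatures of sample $i$, $n$ is the number of test samples, and $\bar{y}$ and $\bar{\hat{y}}$ are their respective means.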
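
The four indicators can be computed as below. The MAPE entries in the results tables (around 0.01) appear to be fractions rather than percentages, so `mape` returns a fraction; that reading and the function names are assumptions.

```python
import numpy as np


def rmse(y, y_hat):
    y, y_hat = np.asarray(y, float), np.asarray(y_hat, float)
    return float(np.sqrt(np.mean((y - y_hat) ** 2)))


def mae(y, y_hat):
    y, y_hat = np.asarray(y, float), np.asarray(y_hat, float)
    return float(np.mean(np.abs(y - y_hat)))


def mape(y, y_hat):
    """Mean absolute percentage error as a fraction (multiply by 100 for %)."""
    y, y_hat = np.asarray(y, float), np.asarray(y_hat, float)
    return float(np.mean(np.abs((y - y_hat) / y)))  # assumes no zero targets


def pearson_r(y, y_hat):
    """Pearson correlation between measured and predicted values."""
    y, y_hat = np.asarray(y, float), np.asarray(y_hat, float)
    yc, pc = y - y.mean(), y_hat - y_hat.mean()
    return float(np.sum(yc * pc) / np.sqrt(np.sum(yc ** 2) * np.sum(pc ** 2)))
```

For example, `rmse([1, 2, 3, 4], [1, 2, 3, 5])` returns 0.5, and `mape` on the same inputs returns 0.0625.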

| Season | Model | RMSE | MAE | R | MAPE |
|---|---|---|---|---|---|
| Summer | ELM | 0.98499 | 0.78857 | 0.96842 | 0.01959 |
| Summer | BP | 0.90876 | 0.70660 | 0.97154 | 0.01764 |
| Summer | LSTM | 0.80409 | 0.58828 | 0.97539 | 0.01472 |
| Summer | edRVFL | 0.76446 | 0.46345 | 0.97497 | 0.01180 |
| Summer | ORedRVFL | 0.75688 | 0.47819 | 0.97529 | 0.01216 |
| Summer | CEEMD-ORedRVFL | 0.71868 | 0.45684 | 0.97730 | 0.01167 |
| Summer | CEEMD-ORedRVFL-EC | 0.71304 | 0.45326 | 0.97762 | 0.01159 |
| Winter | ELM | 0.87662 | 0.43601 | 0.93748 | 0.01489 |
| Winter | BP | 0.76791 | 0.47134 | 0.95918 | 0.01688 |
| Winter | LSTM | 0.74059 | 0.30142 | 0.95684 | 0.01028 |
| Winter | edRVFL | 0.70246 | 0.19226 | 0.95971 | 0.00625 |
| Winter | ORedRVFL | 0.69605 | 0.21843 | 0.96084 | 0.00724 |
| Winter | CEEMD-ORedRVFL | 0.66732 | 0.23765 | 0.96607 | 0.00796 |
| Winter | CEEMD-ORedRVFL-EC | 0.65980 | 0.21371 | 0.96619 | 0.00708 |

| Season | Model | RMSE | MAE | R | MAPE |
|---|---|---|---|---|---|
| Summer | ELM | 1.23618 | 0.97025 | 0.95088 | 0.02391 |
| Summer | BP | 1.19375 | 0.98887 | 0.96861 | 0.02436 |
| Summer | LSTM | 0.94845 | 0.75448 | 0.96998 | 0.01883 |
| Summer | edRVFL | 0.87346 | 0.56784 | 0.96918 | 0.01434 |
| Summer | ORedRVFL | 0.77231 | 0.50869 | 0.97470 | 0.01289 |
| Summer | CEEMD-ORedRVFL | 0.77126 | 0.50902 | 0.97489 | 0.01289 |
| Summer | CEEMD-ORedRVFL-EC | 0.77079 | 0.49497 | 0.97467 | 0.01255 |
| Winter | ELM | 1.10196 | 0.66029 | 0.90071 | 0.02298 |
| Winter | BP | 0.93037 | 0.66356 | 0.94384 | 0.02381 |
| Winter | LSTM | 0.92479 | 0.54066 | 0.93744 | 0.01898 |
| Winter | edRVFL | 0.85924 | 0.47096 | 0.94070 | 0.01629 |
| Winter | ORedRVFL | 0.71107 | 0.24781 | 0.95968 | 0.00832 |
| Winter | CEEMD-ORedRVFL | 0.70846 | 0.26364 | 0.95973 | 0.00894 |
| Winter | CEEMD-ORedRVFL-EC | 0.70462 | 0.23483 | 0.96130 | 0.00784 |

| Season | Model | DM Statistic |
|---|---|---|
| Summer | CEEMD-ORedRVFL | 0.5599 |
| Summer | ORedRVFL | 2.9853 |
| Summer | edRVFL | 4.8496 |
| Summer | LSTM | 7.6922 |
| Summer | BP | 13.736 |
| Summer | ELM | 11.8441 |
| Winter | CEEMD-ORedRVFL | 1.3716 |
| Winter | ORedRVFL | 2.2922 |
| Winter | edRVFL | 2.5265 |
| Winter | LSTM | 2.7642 |
| Winter | BP | 6.254 |
| Winter | ELM | 6.2713 |
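
The Diebold-Mariano statistic tests whether two forecasts have equal expected loss. A minimal sketch for squared-error loss follows; the loss function, the horizon h = 1, and the omission of the Harvey-Leybourne-Newbold small-sample correction are assumptions, since the section does not state them. With the comparison model as `e_a` and the proposed model as `e_b`, a positive statistic means the comparison model has the larger loss. If the tabulated values follow this asymptotically normal statistic, then at the two-sided 5% level (|DM| > 1.96) the difference from CEEMD-ORedRVFL (0.5599 in summer, 1.3716 in winter) would not be significant, whereas those from ORedRVFL, edRVFL, LSTM, BP, and ELM would be. The function name `dm_test` is hypothetical.

```python
import math

import numpy as np


def dm_test(e_a, e_b, h=1):
    """Diebold-Mariano statistic and two-sided p-value under squared-error loss.

    d_t = e_a,t**2 - e_b,t**2; a positive statistic means model A has the larger loss.
    """
    d = np.asarray(e_a, float) ** 2 - np.asarray(e_b, float) ** 2
    n = d.size
    d_bar = d.mean()
    dc = d - d_bar
    # Long-run variance: autocovariances up to lag h-1 (rectangular window).
    gamma = [np.sum(dc[k:] * dc[: n - k]) / n for k in range(h)]
    long_run_var = gamma[0] + 2.0 * sum(gamma[1:])
    dm = d_bar / math.sqrt(long_run_var / n)
    # Standard normal CDF via erf; p-value for H0: equal predictive accuracy.
    p_value = 2.0 * (1.0 - 0.5 * (1.0 + math.erf(abs(dm) / math.sqrt(2.0))))
    return dm, p_value
```

For errors `e_a = [2, 1, 2, 1]` and `e_b = [1, 1, 1, 1]`, the loss differential is [3, 0, 3, 0], giving a statistic of exactly 2.0 and a p-value of about 0.0455.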

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Xu, Y.; Li, H.; Meng, X.; Chen, J.; Zhang, X.; Peng, T. An Energy System Modeling Approach for Power Transformer Oil Temperature Prediction Based on CEEMD and Robust Deep Ensemble RVFL. Processes 2025, 13, 2487. https://doi.org/10.3390/pr13082487

Xu Y, Li H, Meng X, Chen J, Zhang X, Peng T. An Energy System Modeling Approach for Power Transformer Oil Temperature Prediction Based on CEEMD and Robust Deep Ensemble RVFL. Processes. 2025; 13(8):2487. https://doi.org/10.3390/pr13082487

Chicago/Turabian Style: Xu, Yan, Haohao Li, Xianyu Meng, Jialei Chen, Xinyu Zhang, and Tian Peng. 2025. "An Energy System Modeling Approach for Power Transformer Oil Temperature Prediction Based on CEEMD and Robust Deep Ensemble RVFL" Processes 13, no. 8: 2487. https://doi.org/10.3390/pr13082487

APA Style: Xu, Y., Li, H., Meng, X., Chen, J., Zhang, X., & Peng, T. (2025). An Energy System Modeling Approach for Power Transformer Oil Temperature Prediction Based on CEEMD and Robust Deep Ensemble RVFL. Processes, 13(8), 2487. https://doi.org/10.3390/pr13082487