Research on Automatic Detection Method of Coil in Unmanned Reservoir Area Based on LiDAR

Abstract

1. Introduction

2. Related Work

2.1. Segmentation

- (1) Connectivity: For any two points p, q ∈ C, where C denotes a cluster, p and q are density-connected with respect to the parameters ε and MinPts. Here ε is the neighborhood radius, and MinPts is the minimum number of points that the ε-neighborhood of p must contain for p to be a core object;

- (2) Maximality: For any two points p, q, if p ∈ C and q is density-reachable from p with respect to ε and MinPts, then q ∈ C.
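The two conditions above are the cluster definition used by density-based clustering such as DBSCAN. The following is a minimal pure-Python sketch of that idea, not the authors' implementation: the function names `region_query` and `dbscan`, the brute-force neighbor search, and the toy points are all assumptions made for illustration. A cluster is grown from a core point by repeatedly adding every density-reachable point (maximality), so all members end up density-connected (connectivity).

```python
from math import dist  # Euclidean distance, Python 3.8+

NOISE = -1  # label for points that belong to no cluster


def region_query(points, i, eps):
    """Return indices of all points within eps of points[i], including i."""
    return [j for j, q in enumerate(points) if dist(points[i], q) <= eps]


def dbscan(points, eps, min_pts):
    """Label each point with a cluster id (0, 1, ...) or NOISE.

    Hypothetical helper for illustration; brute-force O(n^2) search.
    """
    labels = [None] * len(points)  # None means "not yet visited"
    cluster_id = -1
    for i in range(len(points)):
        if labels[i] is not None:
            continue
        neighbors = region_query(points, i, eps)
        if len(neighbors) < min_pts:
            labels[i] = NOISE  # may be relabeled later as a border point
            continue
        # points[i] is a core object: start a new cluster and expand it.
        cluster_id += 1
        labels[i] = cluster_id
        stack = list(neighbors)
        while stack:
            j = stack.pop()
            if labels[j] == NOISE:
                labels[j] = cluster_id  # border point reachable from a core
            if labels[j] is not None:
                continue
            labels[j] = cluster_id
            j_neighbors = region_query(points, j, eps)
            if len(j_neighbors) >= min_pts:
                # j is also a core object, so its neighbors are reachable too.
                stack.extend(j_neighbors)
    return labels


if __name__ == "__main__":
    # Toy data: two tight groups and one isolated point.
    pts = [(0, 0), (0, 0.1), (0.1, 0), (0.1, 0.1),
           (5, 5), (5, 5.1), (5.1, 5), (5.1, 5.1),
           (10, 0)]
    print(dbscan(pts, eps=0.3, min_pts=3))
```

In this sketch, MinPts counts the point itself as part of its own ε-neighborhood, which is one common convention; other implementations may count differently.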

2.2. Feature Extraction

3. Methodology

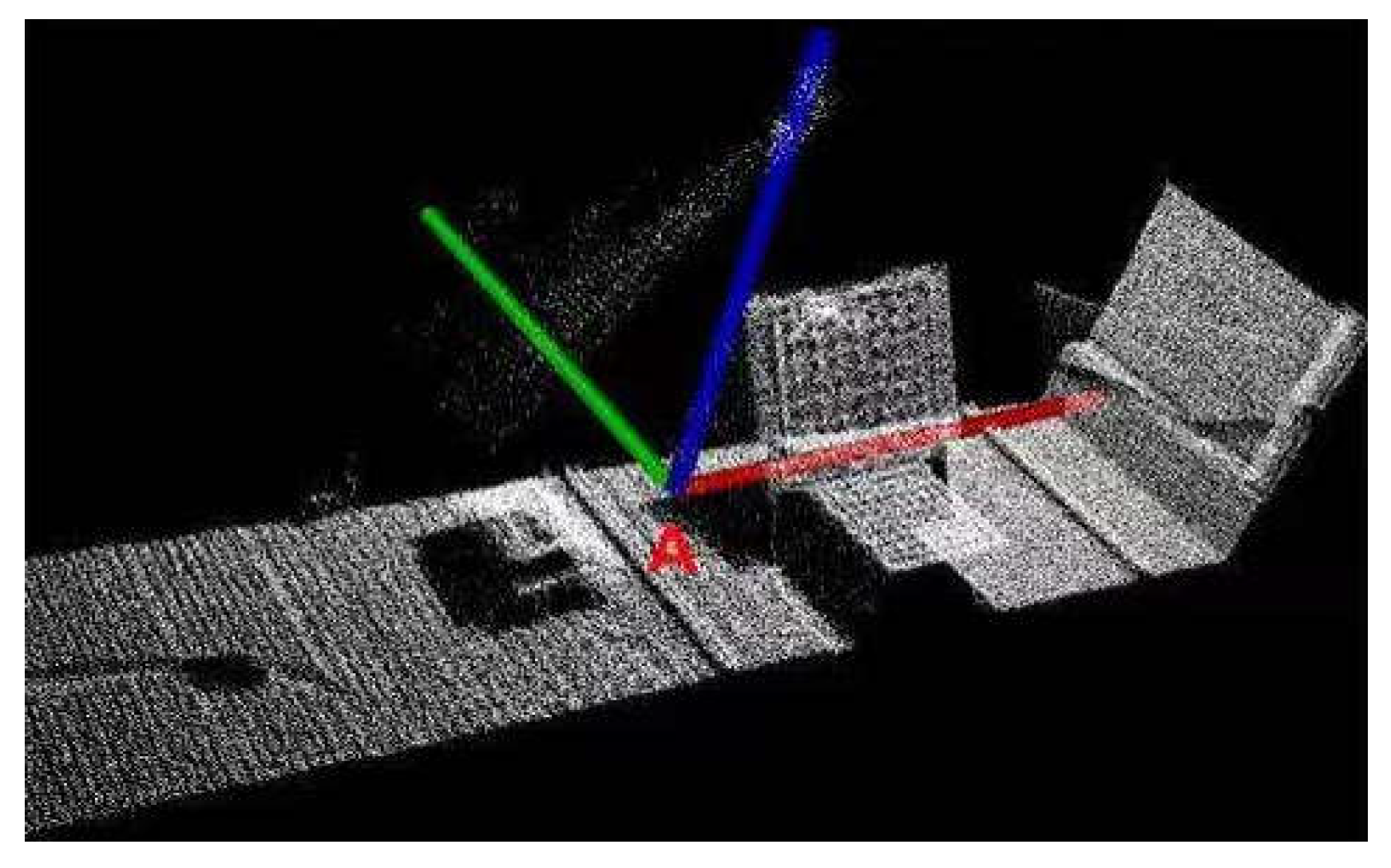

3.1. Three-Dimensional Point Cloud Construction

3.2. Preprocessing of Point Cloud Data

3.3. External Parameter Calibration of LiDAR

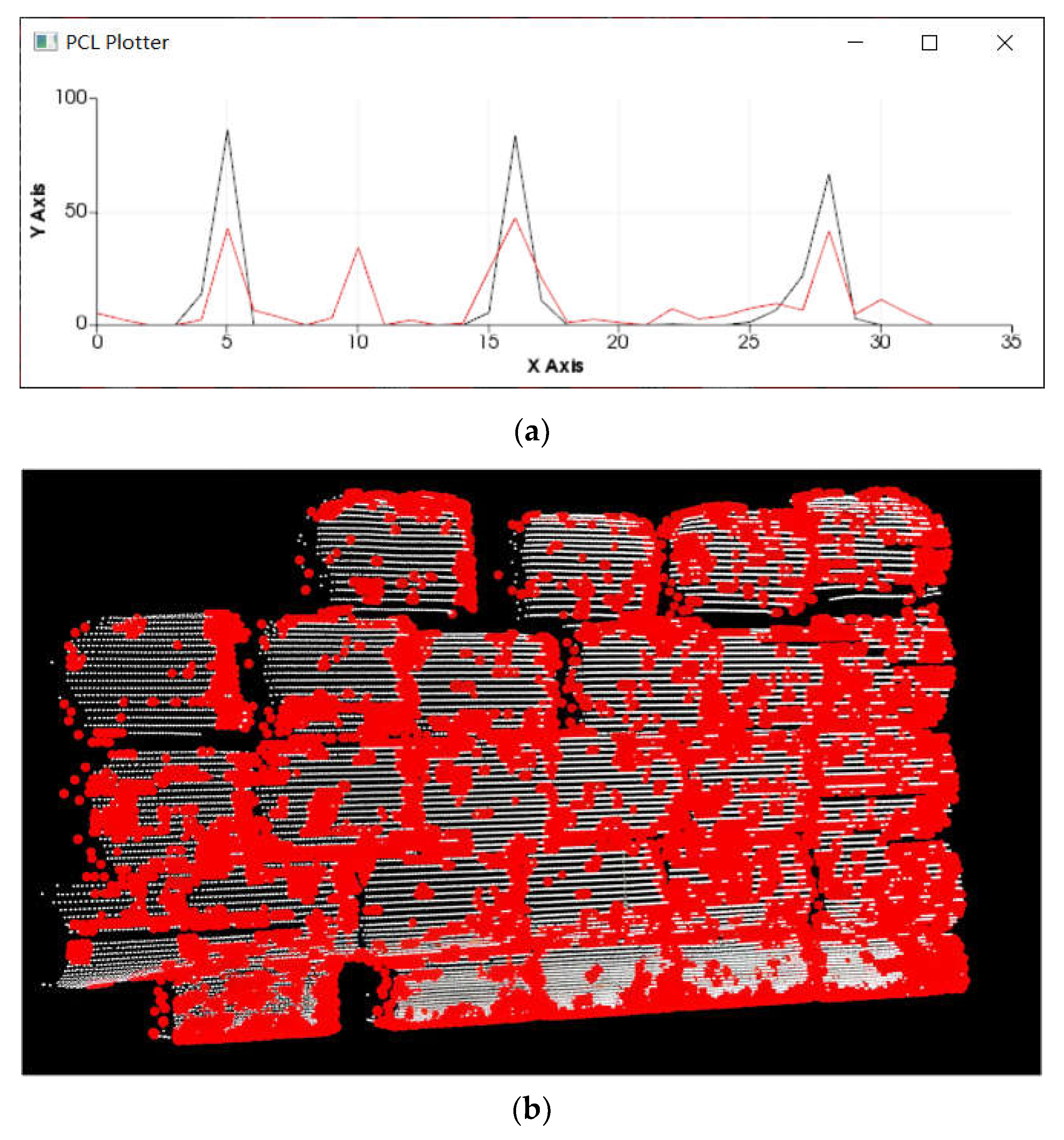

3.4. Ground Filtering

3.5. Segment

3.5.1. VFH Feature Extraction

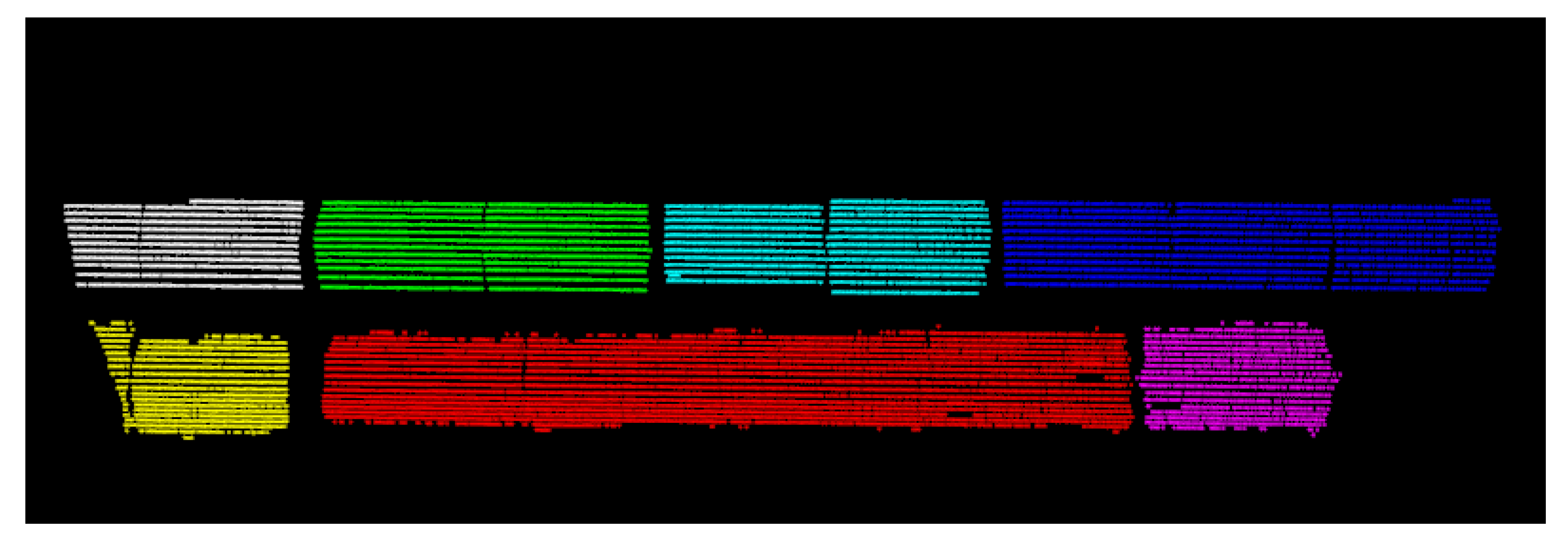

3.5.2. Segmentation of Point Cloud with Multiple Coils

3.5.3. Model Fitting

4. Results

4.1. Test System

4.2. Data Analysis

4.3. Classified Evaluation

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

| Cloud | Human1 | Human2 | One Cylinder | Cylinders |
|---|---|---|---|---|
| Number of points | 513 | 795 | 2,386 | 67,984 |

| | Predicted as adhesion | Predicted as central | Sum |
|---|---|---|---|
| Actually adhesion | 95 | 7 | 102 |
| Actually central | 5 | 9 | 14 |
| Sum | 100 | 16 | 116 |
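As a worked check on the confusion matrix above, the usual classification metrics can be computed directly from its four cells. This is a sketch under assumptions: treating "adhesion" as the positive class is a choice made here for illustration, the function name `binary_metrics` is hypothetical, and the metrics shown are not necessarily the ones the authors report.

```python
def binary_metrics(tp, fn, fp, tn):
    """Compute accuracy, precision, recall, and F1 from confusion-matrix cells.

    Hypothetical helper; the positive class is assumed to be "adhesion".
    """
    total = tp + fn + fp + tn
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)
    return {
        "accuracy": (tp + tn) / total,
        "precision": precision,
        "recall": recall,
        "f1": 2 * precision * recall / (precision + recall),
    }


if __name__ == "__main__":
    # Cells taken from the table: rows are actual classes, columns predicted.
    metrics = binary_metrics(tp=95, fn=7, fp=5, tn=9)
    for name, value in metrics.items():
        print(f"{name}: {value:.4f}")
```

With these cells, accuracy is 104/116 ≈ 0.897, precision is 95/100 = 0.95, and recall is 95/102 ≈ 0.931. The central class has far fewer samples (14 of 116), so overall accuracy is dominated by the adhesion class.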

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Liu, Y.; Liang, M.; Li, X.; Zhang, X.; Yuan, J.; Xu, D. Research on Automatic Detection Method of Coil in Unmanned Reservoir Area Based on LiDAR. Processes 2025, 13, 2432. https://doi.org/10.3390/pr13082432