1. Introduction

Artificial intelligence (AI)-generated content (AIGC) refers to content created using artificial intelligence technologies, including text, images, videos, and more. Technically, AIGC uses generative AI (GAI) to produce content that satisfies instructions given in human prompts, which teach and guide the model to complete the task [1]. In recent years, AIGC has been fundamentally transforming multiple sectors, including materials discovery, healthcare, education, scientific research, and industrial manufacturing. In materials discovery, AIGC accelerates the identification of new materials by simulating molecular interactions and predicting properties, accelerating innovation cycles [2]. In healthcare, it improves diagnostics and personalized treatment plans by analyzing vast datasets for patterns, and it aids drug discovery through hypothesis generation [3]. In education, AIGC personalizes learning experiences by creating adaptive content that improves engagement and outcomes while facilitating interactive tools [4,5]. In industrial manufacturing, it optimizes design and production processes through rapid prototyping and predictive analytics that enhance operational efficiency [6]. For instance, Hu et al. [7] proposed a generative AI-driven, Industry 4.0-compliant approach to facility layout design that enables end-to-end facility layout generation. Furthermore, AIGC extends to the creative industries and environmental science, aiding tasks such as personalized marketing and climate modeling [8,9]. In brief, AIGC fosters innovation, boosts efficiency, and lowers barriers to entry across these diverse fields, positioning itself as a pivotal force for future advancements.

The design of intelligent robots involves several key processes, including requirement analysis, conceptual and mechanical design, control system development, sensor integration, AI implementation, prototyping, testing, and deployment. Developing intelligent robots therefore presents significant complexity and challenges, especially for beginners and young engineers. These include the need for interdisciplinary knowledge spanning engineering and AI, ensuring robustness and reliability under unpredictable conditions, and managing real-time data processing demands. Cost constraints and seamless integration with existing systems must also be addressed. Lastly, developing adaptable robots capable of autonomous learning remains a complex hurdle that often requires advanced machine learning techniques. In short, the traditional design paradigm entails long cycles, high costs, and steep expertise thresholds, and it hinders rapid iteration and system integration. AIGC has the potential to offer a new paradigm by assisting in conceptual modeling, structural sketching, and functional module design to accelerate prototyping. However, its application to complex systems such as intelligent robots remains nascent.

In robotics, apple-harvesting robots exemplify a high-impact application because of their stringent demands on target recognition accuracy, mechanical flexibility, and motion-control coordination. Orchards present challenges such as random fruit distribution and severe occlusion, which require domain expertise and complex modeling [10]. While prior work has focused on manipulator design, vision algorithms, or path planning, the overall development process remains expert-driven and manually modeled, lacking systematic AIGC integration. Taking the design of an apple-harvesting robot as an example, this research asks whether AI can help in intelligent robot design and, if so, how. The main contributions are as follows.

First, an AIGC-assisted methodology for intelligent robot design is proposed. Its basic workflow leverages generative AI tools and models to streamline the stages of robot design, from conceptualization to deployment. We place particular emphasis on Chain of Thought (CoT) reasoning and on methods for intervening against AI hallucinations and related risks.

Second, training the sensing system of an intelligent robot requires a large number of learning samples. This research augments a real apple image dataset with apple images generated by a large vision-language model and examines whether this augmentation enhances the robot's perception ability.

Third, as a proof of concept, an apple-harvesting robot prototype developed with AIGC-aided design is demonstrated. Picking experiments in a simulated scene show that it achieves a harvesting success rate of 92.2% and good terrain traversability, with a maximum climbing angle of 32°.

2. AIGC-Aided Robot Design Methodology

2.1. Basic Framework of the Proposed Method

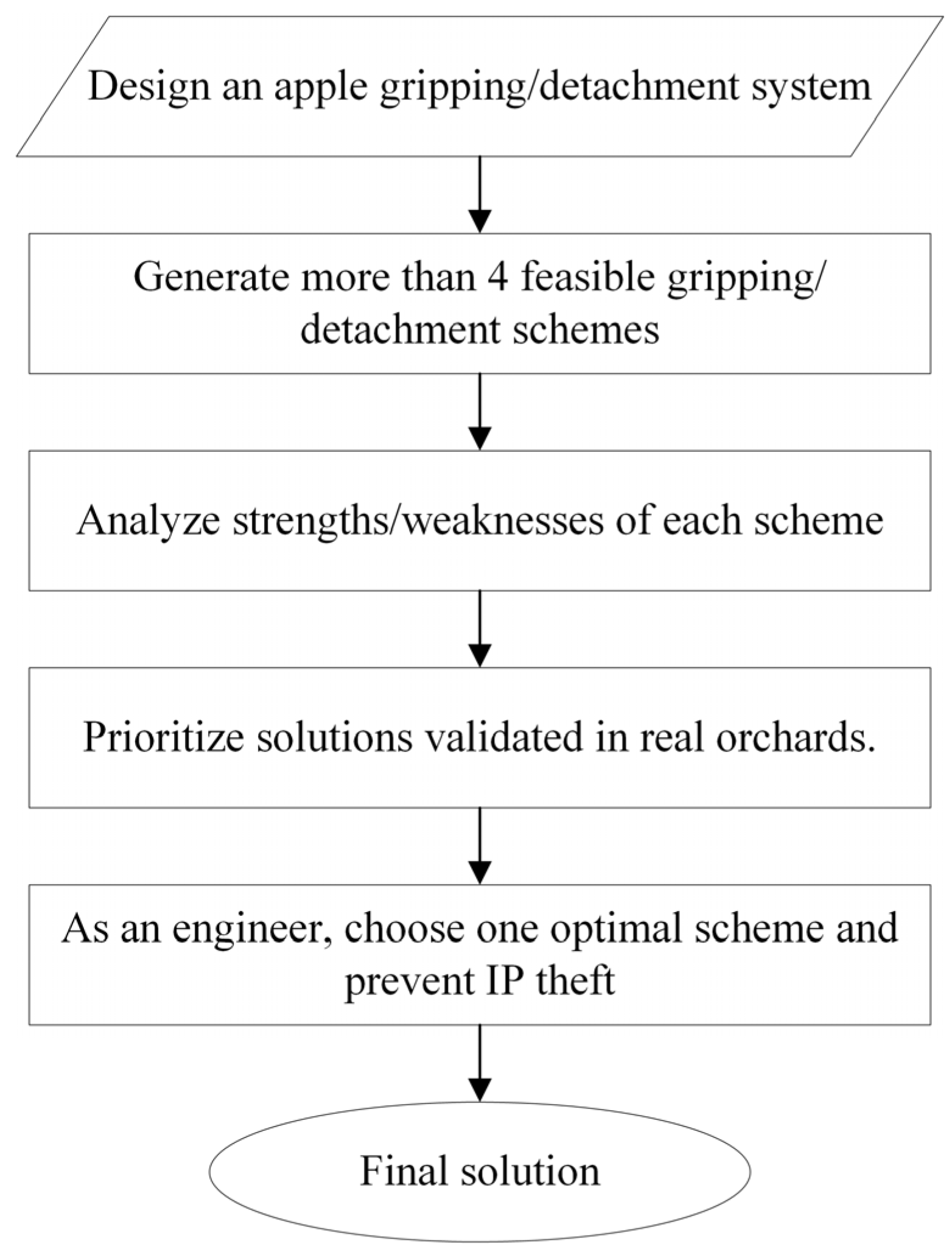

As illustrated in Figure 1, the proposed AIGC-assisted robot design methodology leverages generative AI tools and models to streamline the stages of robot design, from conceptualization to deployment. It integrates AIGC into the process by automating tasks such as idea generation, design creation, specification development, and performance testing. It improves efficiency by rapidly producing multiple design alternatives, optimizing configurations, and simulating real-world interactions. Additionally, AIGC aids in integrating intelligent subsystems such as vision and sensing, making the design process more adaptive. By incorporating AI-driven insights, the methodology improves the overall quality and robustness of robotic solutions, enabling quicker iterations and more effective deployment.

2.2. AIGC-Assisted Technical Specification Generation

In the technical specification generation phase, the Large Language Model (LLM) applies natural language processing (NLP) to parse user requirements and extract critical design parameters, translating needs into actionable metrics. For example, when a user inputs the prompt “Design an apple-picking robot suitable for densely planted orchards, capable of operating in a 1.5 m row spacing and a maximum picking height of 1.8 m,” the model identifies and quantifies these requirements. It produces detailed indicators such as a fruit size range of 50–90 mm, operational environment constraints (a row spacing of 1.5 m and a picking reach of 1.8 m), and a manipulator with at least four degrees of freedom to accommodate complex picking actions. Compared with traditional manual analysis, this approach offers greater efficiency and consistency. It rapidly processes extensive textual data, reduces reliance on a priori knowledge, and delivers comprehensive technical specifications, thereby improving the reliability of the design process.
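Numbers that the user states explicitly must survive the translation from prompt to specification. A cheap deterministic cross-check can therefore flag LLM outputs that contradict them. The sketch below is a minimal illustration under stated assumptions:

- The two regular-expression patterns are hypothetical and were written only for the example prompt above.
- The dictionary format for the LLM's specification is also hypothetical.
- It does not reproduce the authors' pipeline.

```python
import re
from typing import Dict, List

# Hypothetical patterns covering the two quantities stated in the example prompt.
PATTERNS = {
    "row_spacing_m": re.compile(r"(\d+(?:\.\d+)?)\s*m\s+row spacing"),
    "max_picking_height_m": re.compile(r"picking height of\s*(\d+(?:\.\d+)?)\s*m"),
}

PROMPT = (
    "Design an apple-picking robot suitable for densely planted orchards, "
    "capable of operating in a 1.5 m row spacing and a maximum picking height of 1.8 m"
)


def extract_explicit_params(prompt: str) -> Dict[str, float]:
    """Pull the numeric parameters that the user stated explicitly."""
    params: Dict[str, float] = {}
    for name, pattern in PATTERNS.items():
        match = pattern.search(prompt)
        if match:
            params[name] = float(match.group(1))
    return params


def mismatched_fields(prompt: str, llm_spec: Dict[str, float], tol: float = 1e-6) -> List[str]:
    """Names of stated parameters that the LLM spec omits or changes."""
    stated = extract_explicit_params(prompt)
    return [
        name
        for name, value in stated.items()
        if name not in llm_spec or abs(llm_spec[name] - value) > tol
    ]
```

A spec that silently lowers the picking height to 1.2 m would be returned as `["max_picking_height_m"]` and routed to a designer. Fields the prompt never mentions (e.g., degrees of freedom) are left to other checks.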

As illustrated in Table 1, the models' capabilities are further exemplified by their handling of the vague prompt “adapt to rugged terrain,” which different models interpret and convert into quantifiable parameters according to their respective strengths [11]. ChatGPT (https://openai.com/ (accessed on 17 May 2025)), drawing on its creative divergence, proposes wide tracks paired with high-torque motors to optimize adaptability. DeepSeek (https://www.deepseek.com/ (accessed on 17 May 2025)), grounded in engineering logic, stipulates a track ground contact length of ≥0.8 m to ensure stability. Doubao (https://www.doubao.com/ (accessed on 17 May 2025)), leveraging its repository of local agricultural knowledge, recommends track materials suited to hilly orchards, with good wear resistance, anti-slip properties, and adaptability to diverse soil conditions, thereby enhancing the robot's practical utility in real-world orchards.

The multimodal LLMs used in this phase (ChatGPT, DeepSeek, and Doubao) were selected for their complementary strengths in addressing diverse design requirements:

- ChatGPT for its creative divergence in generating alternative solutions;
- DeepSeek for its engineering logic in quantifying technical parameters (e.g., the track contact length);
- Doubao for its domain-specific knowledge of agricultural contexts (e.g., adaptation to hilly orchard terrain).

Cross-LLM synthesis turns AI into a collaborative cognitive ensemble, yields broader solution diversity than single-model approaches, and helps suppress hallucinations through consensus verification. Nevertheless, LLMs are limited in their capacity to interpret complex or implicit requirements, particularly non-standard terminology or region-specific demands inherent to agricultural contexts. In such cases, human intervention may be required to correct biases or supply missing details so that the model outputs align with practical needs.
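Consensus verification can be sketched for a single numeric parameter. This is a minimal illustration that assumes each model's answer has already been parsed into a number. The source names, the use of the median as the consensus statistic, and the 15% tolerance are all hypothetical choices, not values from this study. Outlying sources are returned so that a human can review them, in line with the human intervention described above.

```python
from statistics import median
from typing import Dict, List, Tuple


def consensus_value(proposals: Dict[str, float], rel_tol: float = 0.15) -> Tuple[float, List[str]]:
    """Return the median proposal and the sources deviating from it by more than rel_tol."""
    if not proposals:
        raise ValueError("need at least one proposal")
    center = median(proposals.values())
    outliers = [
        source
        for source, value in proposals.items()
        if abs(value - center) > rel_tol * abs(center)
    ]
    return center, outliers
```

For example, three hypothetical track-contact-length proposals of 0.80, 0.85, and 1.50 m give a consensus of 0.85 m and flag the 1.50 m source. The median is used because a single hallucinated value cannot drag it arbitrarily far, unlike the mean.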

2.3. AIGC-Aided Conceptual Design

In the concept generation phase, the model uses the technical specifications to rapidly produce preliminary design sketches, helping designers grasp the design direction intuitively. For the apple-harvesting robot, the model can generate conceptual diagrams covering the tracked chassis, manipulator layout, and sensor configuration. These are illustrated in Figure 2, which shows the robot's overall structure and operating posture. Compared with traditional computer-aided design (CAD) drafting, the model's strengths lie in faster generation and more diverse output. Through image generation, it delivers detailed renderings that stimulate innovative thinking among designers.

Nevertheless, the generated sketches fall short in precision and engineering feasibility, and critical design decisions remain with human designers. For example, as illustrated in Figure 2, the generated tracked chassis may not adequately account for terrain load distribution, so designers must refine and optimize it to ensure that the concept is practical and feasible. The models are guided through refined prompts that prioritize key constraints (e.g., ‘compact structure for dense orchards’ and ‘lightweight materials for energy efficiency’), and all generated concepts are validated for engineering feasibility.

2.4. AIGC-Aided Design Generation and Configuration

In the mechanical structure design phase, the model uses the technical specifications and conceptual diagrams to generate preliminary mechanical structure proposals, covering material selection, joint load calculations, and dynamics simulations. As detailed in Table 1, the model can recommend carbon fiber to achieve a lightweight manipulator and compute joint load torques (e.g., ≤3 N·m) to ensure flexibility in harvesting operations. Compared with traditional design methodologies, the model's primary advantage is its capacity to rapidly produce a diverse array of design solutions and to optimize parameters through data-driven approaches, significantly reducing designers' workload. However, its limitations in handling complex engineering constraints are notable. In multi-objective optimization scenarios, such as balancing strength, weight, and cost, the generated designs may lack maturity. They then require further refinement through physical simulation or experimental validation to meet practical engineering requirements.

In particular, the Chain of Thought (CoT) methodology provides a rigorous framework for approximating feasible solutions to complex multi-objective engineering problems through structured decomposition [12]. CoT recursively breaks intricate challenges into sequential, interpretable subtasks. In doing so, it explicitly models the intermediate steps between initial inputs and final outputs, transforming opaque neural network inferences into transparent, stepwise rationales that mirror human deductive reasoning [13]. This approach enforces local verifiability at each reasoning stage via Bayesian cognitive frameworks, substantially mitigating extrinsic hallucinations. Furthermore, CoT produces explicit justifications for design decisions, significantly enhancing the interpretability of large-model reasoning. Crucially, it formalizes multi-objective optimization as sequential constraint satisfaction, in which competing requirements are resolved through prioritized iterative refinement rather than monolithic black-box optimization.

Figure 3 illustrates the CoT process used to generate the apple gripping/detachment design scheme.
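Treating multi-objective design as prioritized sequential constraint satisfaction can be made concrete with a small filter. The gripper candidates, thresholds, and cost objective in this sketch are hypothetical and are not taken from this study.

- Constraints are applied in priority order.
- A constraint that would eliminate every remaining candidate is not enforced; instead, it is reported for human review.
- The final choice minimizes the objective among the surviving candidates.

```python
from typing import Callable, Dict, List, Tuple

Design = Dict[str, float]
Constraint = Tuple[str, Callable[[Design], bool]]


def sequential_refine(
    candidates: List[Design],
    constraints: List[Constraint],
    objective: Callable[[Design], float],
) -> Tuple[Design, List[str]]:
    """Filter candidates constraint by constraint, highest priority first.

    A constraint that no remaining candidate satisfies is skipped and its
    name is returned so a human can decide how to resolve the conflict.
    """
    if not candidates:
        raise ValueError("no candidate designs")
    remaining = list(candidates)
    unresolved: List[str] = []
    for name, is_satisfied in constraints:
        kept = [c for c in remaining if is_satisfied(c)]
        if kept:
            remaining = kept
        else:
            unresolved.append(name)
    # Among the survivors, pick the design with the lowest objective value.
    return min(remaining, key=objective), unresolved


# Hypothetical gripper/detachment candidates and thresholds, for illustration only.
GRIPPERS: List[Design] = [
    {"id": 1, "mass_kg": 0.30, "contact_pressure_kpa": 90.0, "cost": 40.0},
    {"id": 2, "mass_kg": 0.18, "contact_pressure_kpa": 150.0, "cost": 25.0},
    {"id": 3, "mass_kg": 0.22, "contact_pressure_kpa": 70.0, "cost": 60.0},
    {"id": 4, "mass_kg": 0.25, "contact_pressure_kpa": 80.0, "cost": 45.0},
]
CONSTRAINTS: List[Constraint] = [
    ("no fruit bruising", lambda d: d["contact_pressure_kpa"] <= 100.0),
    ("light end-effector", lambda d: d["mass_kg"] <= 0.25),
    ("budget", lambda d: d["cost"] <= 30.0),
]
```

With `objective=lambda d: d["cost"]`, the bruising and mass constraints leave candidates 3 and 4. No survivor meets the budget, so "budget" is reported as unresolved and candidate 4 is selected as the cheaper of the two.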

Existing studies and practices have applied AIGC to early conceptual sketch generation, 3D modeling assistance, and interactive design proposals, enhancing efficiency and creativity. For example, the Text2CAD framework automates 3D CAD generation from textual descriptions via isometric image generation and orthographic technical drawing transformation [14]. The DreamFusion project by Google Research uses text prompts to generate renderable 3D models, providing a novel approach to industrial product modeling [15]. Recent frameworks such as GAD (Generative AI-Assisted Design) further integrate LLMs (e.g., GPT-4o) with CAD tools, enabling multimodal input (text, images, voice) and iterative model refinement via closed feedback loops [16]. Additionally, CAD vendors such as Autodesk and Dassault Systèmes are progressively embedding AIGC into their design platforms for parametric modeling and intelligent recommendations [17]. In China, institutions such as Alibaba DAMO Academy, Baidu Wenxin, and Tencent YouTu have released AIGC-based tools for industrial vision generation and auxiliary modeling, with preliminary applications to complex parts and structural assembly [18]. In short, directly generating engineering-grade 3D structural models with generative AI remains far from mature and is still at the research and development stage.

2.5. AIGC-Aided Intelligent Perception

Deep learning-based target recognition algorithms for robots often require large-scale training samples. These samples are generally real photographs and variants derived from them by digital image processing, so building training sets is highly labor-intensive. We therefore generate apple images with a large vision-language model (LVLM) to expand the training dataset.

Specifically, text-to-image generation can produce apple images under varying lighting conditions to improve the robustness of fruit detection algorithms. Compared with conventional data collection, the model can rapidly generate large volumes of synthetic data, substantially reducing the cost and time of field data acquisition. Nevertheless, synthetic data are limited in realism, particularly when simulating complex lighting and occlusion. In these conditions, discrepancies between synthetic and real-world images may impair the algorithm's generalization. Consequently, integration with real-world data for fine-tuning and validation remains essential to ensure the reliability of the visual system. In Section 3, we investigate text-to-image synthesis for dataset augmentation in apple recognition, targeting improved detection accuracy under challenging environmental conditions.
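One practical way to cover lighting, occlusion, and ripeness conditions systematically is to enumerate text prompts combinatorially before passing them to a text-to-image model. The vocabularies and template below are hypothetical and are not the prompts used in this study. The image-generation call itself is omitted.

```python
from itertools import product
from typing import List

# Hypothetical condition vocabularies; not the prompts used in this study.
LIGHTING = ["overcast diffuse light", "direct midday sun", "strong backlighting", "dense fog"]
OCCLUSION = ["fully visible", "partly hidden by leaves", "partly behind branches"]
RIPENESS = ["red ripe", "green unripe"]
TEMPLATE = "photo of {ripeness} apples on a tree in a dense orchard, {occlusion}, {lighting}"


def build_prompts() -> List[str]:
    """Return one prompt per combination of lighting, occlusion, and ripeness."""
    return [
        TEMPLATE.format(ripeness=ripe, occlusion=occl, lighting=light)
        for light, occl, ripe in product(LIGHTING, OCCLUSION, RIPENESS)
    ]
```

Enumerating the full grid (4 × 3 × 2 = 24 prompts here) makes coverage of rare conditions explicit rather than leaving it to chance sampling.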

2.6. AI Hallucination Intervention

In NLP, hallucination denotes content generated by a language model that is inconsistent with the provided input or the expected output context, lacks coherence, or contradicts factual information [19]. Hallucinations fall into two categories:

- intrinsic hallucinations, in which the generated output conflicts with the source material;
- extrinsic hallucinations, in which the generated output cannot be verified from the source.

In LLM-based code generation, conflicts with the project context correspond to the context-conflicting hallucinations of the general domain, producing code that may misuse project contexts such as environments, dependencies, and resources [20]. In LVLMs, a hallucination is a discrepancy between the factual content of an image (such as object presence, attributes, and spatial relationships) and the corresponding generated text [21].

We observed two such cases in this work. In one AIGC-generated technical specification for an apple-harvesting robot, the claimed throughput of 100–150 apples harvested and collected per hour is a clear factuality hallucination, as this figure shows no advantage over manual harvesting. Similarly, in AIGC-generated synthetic training data for apple detection, leaves or branches occlude less than 15% of fruit surfaces. This deviates significantly from empirical orchard observations, where natural foliage occlusion averages 25–40%.

As mentioned earlier, cross-LLM synthesis and CoT can reduce AI hallucinations and enhance the interpretability of large-model reasoning. To further mitigate AI hallucinations in robotic design, we propose a three-pronged strategy.

First, rule-based sanity checks enforce compliance with established standards by systematically validating LLM-generated technical schemes against them. These standards include the Technical Specification for Apple Picking (NY/T 1086-2006) issued by the Ministry of Agriculture of China [22] and ISO 18497-1:2024, Agricultural machinery and tractors: Safety of partially automated, semi-autonomous and autonomous machinery [23]. In addition, ISO 12100:2010 [24] guides hazard identification and risk estimation and evaluation during the relevant phases of the machine life cycle.

Second, expert review addresses the temporal limitations of LLM knowledge bases, which may lead models to fabricate facts or reference obsolete solutions. Domain specialists evaluate technical feasibility in light of contemporary robotics integration and emerging technologies.

Third, virtual verification through finite element simulation, digital twins, and virtual prototyping rigorously tests AIGC-generated designs before physical realization, for example, by deliberately corrupting sensor inputs in simulation (e.g., 30% sensor dropout). This minimizes safety-critical hallucinations while maintaining full audit trails for certification bodies.
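The first and third prongs lend themselves to simple automation. The sketch below assumes a hypothetical design record with a throughput field and a mean synthetic-occlusion fraction. It does two things:

- It encodes two rules drawn from the hallucination examples in Section 2.6. The 25–40% occlusion band is the empirical range quoted there. The minimum throughput is a caller-supplied placeholder, not a value taken from NY/T 1086-2006.
- It injects random sensor dropout for simulation testing.

```python
import random
from typing import Callable, Dict, List, Optional, Sequence, Tuple

Record = Dict[str, float]
Rule = Tuple[str, Callable[[Record], bool]]


def make_rules(min_apples_per_hour: float) -> List[Rule]:
    """Build sanity rules; the throughput floor must come from a standard or field data."""
    return [
        ("throughput", lambda r: r["apples_per_hour"] >= min_apples_per_hour),
        # Empirical foliage occlusion in orchards averages 25-40% (Section 2.6).
        ("synthetic occlusion realism", lambda r: 0.25 <= r["mean_occlusion"] <= 0.40),
    ]


def failed_rules(record: Record, rules: List[Rule]) -> List[str]:
    """Names of the rules that the record violates."""
    return [name for name, holds in rules if not holds(record)]


def inject_dropout(
    readings: Sequence[float], p: float = 0.3, seed: Optional[int] = None
) -> List[Optional[float]]:
    """Replace each reading with None with probability p (simulated sensor dropout)."""
    rng = random.Random(seed)
    return [None if rng.random() < p else value for value in readings]
```

The two helpers are used as follows:

- `failed_rules(record, make_rules(min_apples_per_hour=...))` lists the violated rules for expert review.
- `inject_dropout(readings, p=0.3, seed=1)` yields a stream in which roughly 30% of samples are missing, reproducibly for a fixed seed.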

3. AIGC-Enhanced Visual Perception

3.1. Construction of Mixed Dataset

Generative adversarial networks (GANs) offer distinct advantages in data generation. In this study, however, we applied a latent diffusion model [25] to generate synthetic agricultural scenes tailored to orchard environments. Diffusion models were chosen for their text-to-image capability with hierarchical refinement and multimodal control. This makes them well suited to generating realistic orchard scenes with complex variations, which is critical for improving model robustness in unstructured agricultural environments.

The implementation focused on replicating real orchard light distributions and fruit morphological variations via textual conditioning. The diffusion model's denoising-based training paradigm yields high-fidelity images that align closely with the agricultural scene descriptions. These images supplement the training dataset with extreme scenarios absent from the real data (e.g., dense fog and extreme backlighting), increasing diversity beyond what traditional data augmentation can achieve. Unlike conventional techniques limited to linear interpolation, our diffusion-based approach produces physically consistent samples. These include effects such as light refraction under partial occlusion and seasonal variations in fruit ripeness, thereby significantly enriching the training data for orchard scene recognition.

To assess the impact of the generated data, six datasets were constructed: mixed datasets with generated-to-real ratios of 1:3 (D1), 2:3 (D2), and 1:1 (D3), and three pure real-data control groups (D4, D5, and D6). The control groups isolate and quantify the effect of synthetic data on model performance, allowing a rigorous assessment of the diffusion-augmented datasets.
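The mixed datasets can be assembled as shown below. This sketch assumes that the real images are kept in full and that generated images are drawn at random to reach the stated generated-to-real ratio. How the study sized its control groups is not specified here, so they are not modeled. File names are hypothetical.

```python
import random
from typing import Dict, List, Sequence, Tuple

# Generated-to-real ratios of the mixed datasets described in the text.
MIX_RATIOS: Dict[str, Tuple[int, int]] = {"D1": (1, 3), "D2": (2, 3), "D3": (1, 1)}


def generated_needed(n_real: int, ratio: Tuple[int, int]) -> int:
    """Number of generated images that matches n_real real images at gen:real."""
    gen, real = ratio
    return n_real * gen // real


def build_mixed_split(
    real_images: Sequence[str],
    generated_pool: Sequence[str],
    ratio: Tuple[int, int],
    seed: int = 0,
) -> List[str]:
    """All real images plus a random draw of generated ones, shuffled together."""
    n_gen = generated_needed(len(real_images), ratio)
    if n_gen > len(generated_pool):
        raise ValueError(f"need {n_gen} generated images, only {len(generated_pool)} available")
    rng = random.Random(seed)
    mixed = list(real_images) + rng.sample(list(generated_pool), n_gen)
    rng.shuffle(mixed)
    return mixed
```

For 300 real images, D1, D2, and D3 would add 100, 200, and 300 generated images, respectively. Fixing the seed keeps each split reproducible across training runs.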

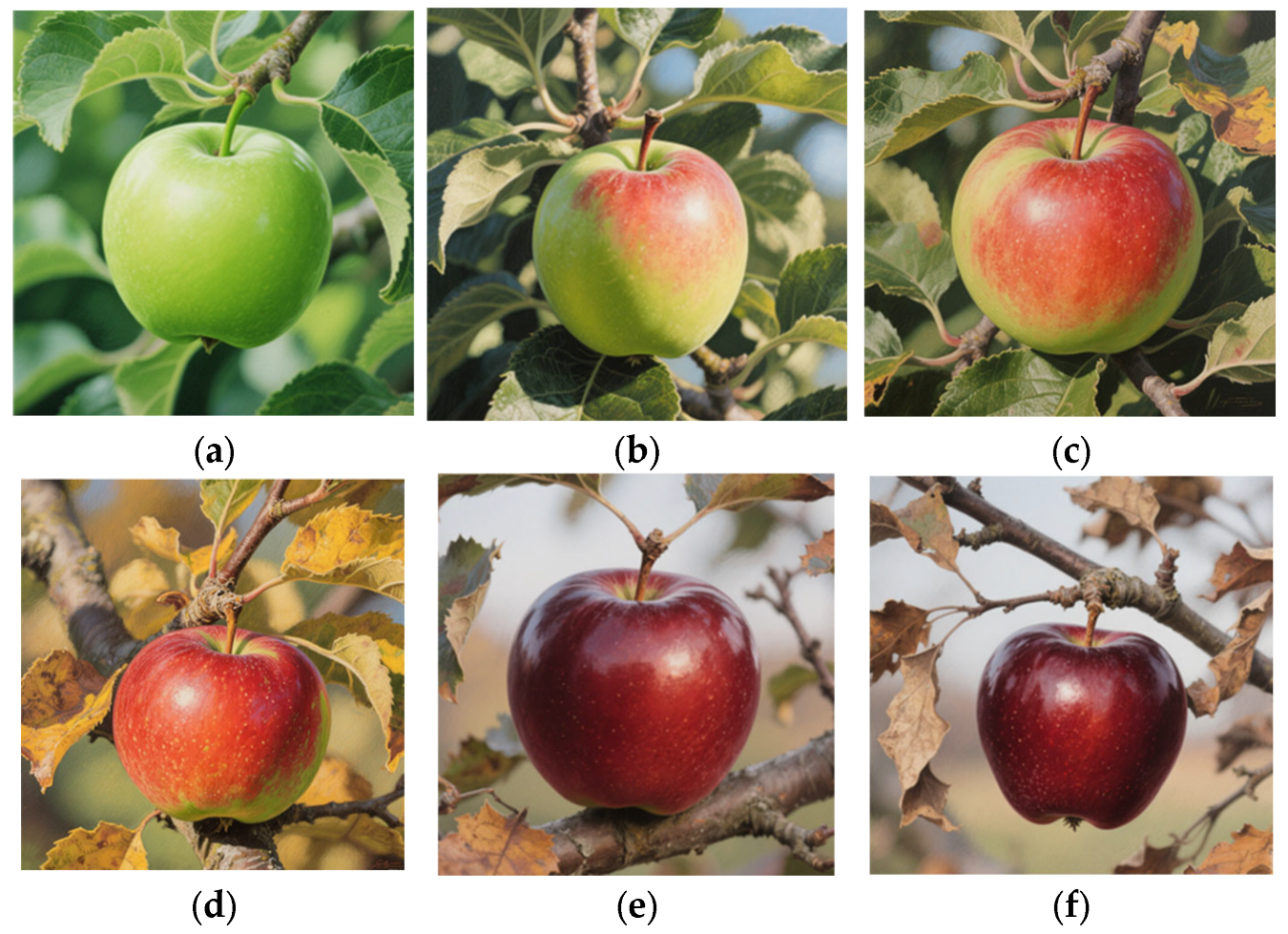

By varying the mixing ratio, this experiment quantifies model generalization under different data combinations to identify the optimal cost–performance balance. AIGC's controllable generation is used to supplement rare scenarios, including fruit morphology under extreme weather and at different maturity stages (see Figure 4 and Figure 5), which substantially increases dataset diversity.

3.2. Experimental Design Based on YOLOv8 Model

The YOLOv8 (https://yolov8.com/ (accessed on 1 June 2025)) model was selected for the apple recognition experiments because of its advanced feature extraction and balanced accuracy–speed performance. Capable of real-time, high-resolution image processing, YOLOv8 excels at rapid agricultural target detection [26] and small-target recognition, which is critical for apples [27]. This study evaluated the effects of different generated-to-real data ratios on model performance; the experimental configurations are outlined in Table 2.

To ensure validity, variables were controlled: all datasets maintained consistent label types and apple distributions (1–10 apples per image). The training environment and parameters were identical across runs: 200 epochs, a batch size of 32, an image size of 640, and a learning rate of 0.01. Data augmentation parameters included hsv_h (0.015), hsv_s (0.7), and fliplr (0.4). The hardware comprised an NVIDIA GeForce MX350 (CUDA 12.7), and the software included Python 3.9, Ultralytics YOLOv8n, and PyTorch 2.5.1. Validation used the same set of 200 real apple images for all datasets.
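Expressed as Ultralytics training arguments, the configuration above might look like the sketch below. It rests on three assumptions:

- The reported learning rate corresponds to Ultralytics' initial learning rate `lr0`.
- Each dataset (D1–D6) is described by its own dataset YAML file; the file name in the usage note is hypothetical.
- The `ultralytics` import is deferred so the configuration can be inspected without the package installed.

```python
from typing import Any, Dict, Tuple

# Hyperparameters reported in the text, expressed as Ultralytics train() arguments.
TRAIN_ARGS: Dict[str, Any] = {
    "epochs": 200,
    "batch": 32,
    "imgsz": 640,
    "lr0": 0.01,      # assumed to be the "learning rate 0.01" in the text
    "hsv_h": 0.015,
    "hsv_s": 0.7,
    "fliplr": 0.4,
}


def train_on(dataset_yaml: str, weights: str = "yolov8n.pt") -> Tuple[float, float]:
    """Train YOLOv8n on one dataset, then return (mAP@0.5, mAP@0.5:0.95).

    Requires `pip install ultralytics`; dataset_yaml is a hypothetical path
    whose validation split should point at the shared 200 real images.
    """
    from ultralytics import YOLO  # deferred import

    model = YOLO(weights)
    model.train(data=dataset_yaml, **TRAIN_ARGS)
    metrics = model.val(data=dataset_yaml)
    return metrics.box.map50, metrics.box.map
```

Calling `train_on("apples_d1.yaml")` once per dataset with the same `TRAIN_ARGS` keeps every run identical except for the training data, which is what the controlled comparison requires.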

3.3. Performance Verification

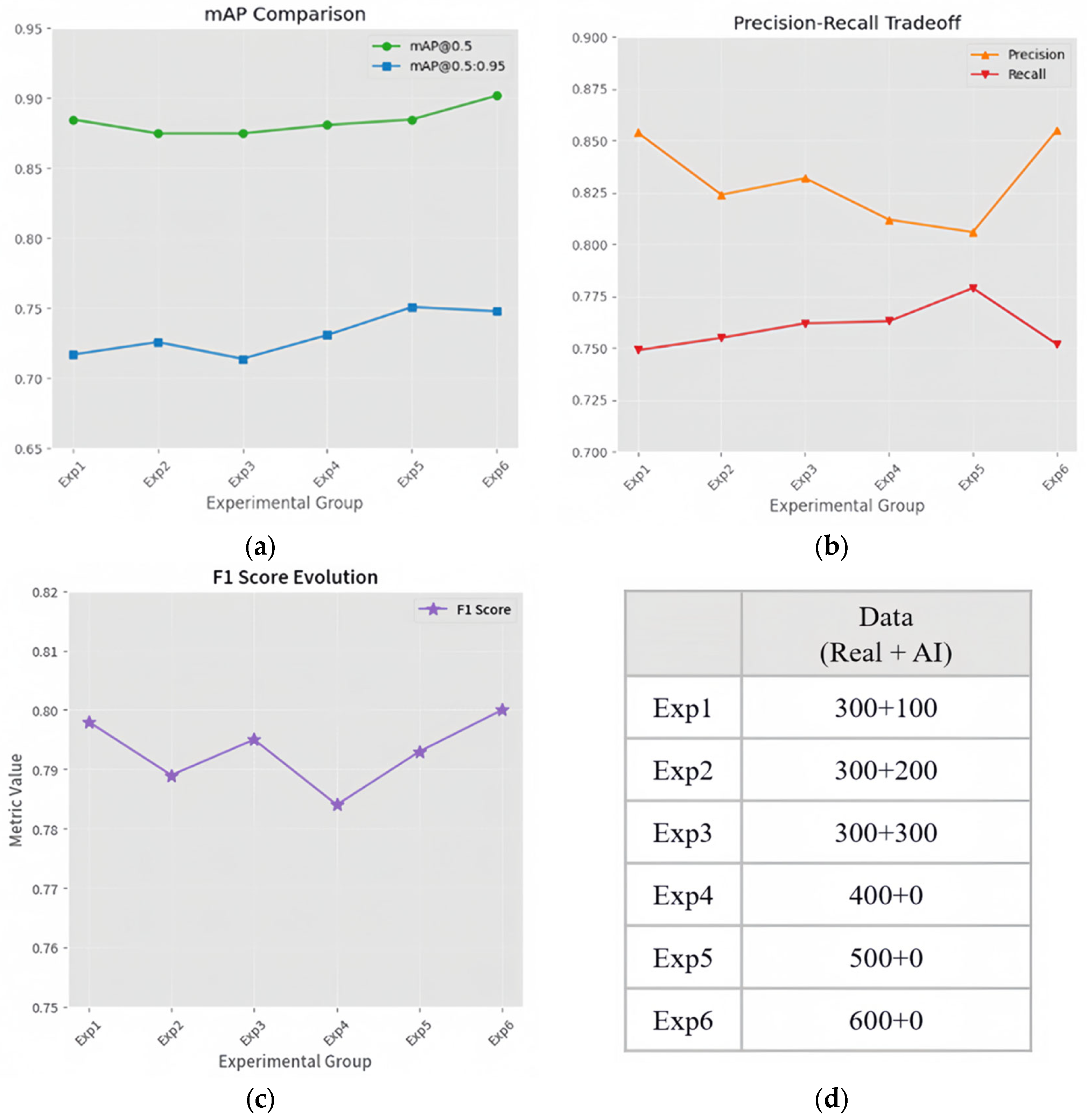

Analysis of the mixed-dataset training results reveals the value of the generated data. Table 3 and Figure 6 present the experimental results.

Table 3 shows that D1 and D4 achieve comparable mAP@0.5 and mAP@0.5:0.95, suggesting that, at suitable ratios, generated images can effectively augment real data. However, higher proportions of generated data (D2 and D3) cause mAP@0.5 to fluctuate, indicating that disparities in feature distribution affect learning stability.

Further analysis indicates that higher generated-data ratios do not consistently improve performance (e.g., the decline in D2's mAP@0.5), so an optimal ratio must balance cost and performance. Variations in precision, recall, and F1 score suggest that the generated data may not fully capture the diversity of real scenarios, limiting generalization. Mixed datasets perform comparably to pure real datasets on key metrics, which reduces reliance on costly real data. However, the limited realism of simulated complex lighting and occlusion calls for additional data augmentation (e.g., random occlusion and lighting perturbations) to improve robustness.
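For reference, precision, recall, and F1 score follow from the counts of true positives (TP), false positives (FP), and false negatives (FN). These counts are typically obtained by matching predicted boxes to ground truth at an IoU threshold such as 0.5; this matching rule is an assumption, as it is not stated above. A minimal helper:

```python
from typing import Tuple


def precision_recall_f1(tp: int, fp: int, fn: int) -> Tuple[float, float, float]:
    """Detection metrics from match counts; empty denominators yield 0.0."""
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    # F1 is the harmonic mean of precision and recall.
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return precision, recall, f1
```

Because F1 is a harmonic mean, a dataset whose generated images raise precision but miss real-world variations (lower recall) will show a visible F1 drop.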

4. Prototype of the Apple-Harvesting Robot

4.1. Design of the Mobile Platform

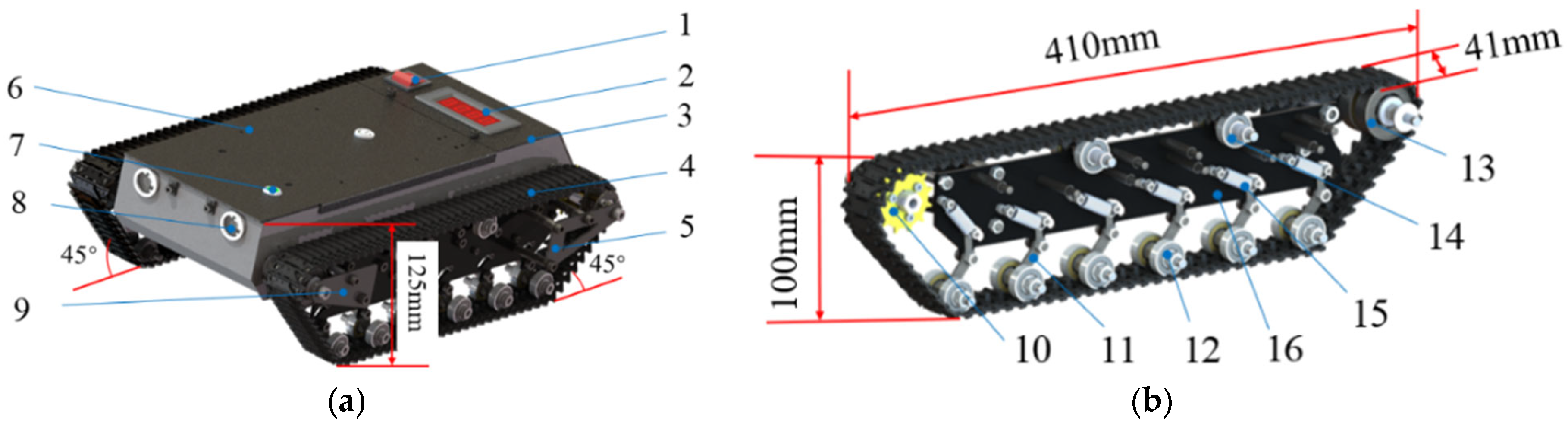

The mobile platform must balance load-bearing capacity with adaptability to complex terrain. A comparative analysis of wheeled, tracked, and articulated platforms highlights their distinct characteristics:

- Wheeled platforms offer high maneuverability but limited obstacle-crossing capability.
- Articulated platforms are hindered by complex control mechanisms and lower reliability.
- Tracked platforms, with their superior obstacle-crossing performance, are well suited to the muddy and undulating terrain common in orchards.

A tracked mobile platform was therefore selected. As illustrated in Figure 7, the tracked mobile platform measures 410 mm in length, 290 mm in width, and 125 mm in height. Each track measures 410 mm in length, 41 mm in width, and 100 mm in height. The platform uses differential steering, achieved through speed differences between the left and right tracks, which lets it adapt to diverse unstructured surfaces.
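Under the common no-slip idealization of skid steering, the platform's forward speed and yaw rate follow from the two track speeds. Tracks do slip in practice, so this is only an approximation. The sketch assumes a track gauge of 0.249 m, estimated as the 290 mm platform width minus one 41 mm track width; this value is an assumption, not a measured dimension.

```python
from typing import Tuple

TRACK_GAUGE_M = 0.249  # assumed: 290 mm platform width minus one 41 mm track width


def body_velocity(v_left: float, v_right: float, gauge: float = TRACK_GAUGE_M) -> Tuple[float, float]:
    """Track speeds (m/s) -> forward speed (m/s) and yaw rate (rad/s), ignoring slip."""
    v = (v_left + v_right) / 2.0
    omega = (v_right - v_left) / gauge
    return v, omega


def track_speeds(v: float, omega: float, gauge: float = TRACK_GAUGE_M) -> Tuple[float, float]:
    """Inverse kinematics: desired forward speed and yaw rate -> (left, right) track speeds."""
    half = omega * gauge / 2.0
    return v - half, v + half
```

Equal track speeds give straight-line motion, and equal but opposite speeds spin the platform in place. In practice, an empirically identified effective gauge, larger than the geometric one, is often substituted to absorb slip.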

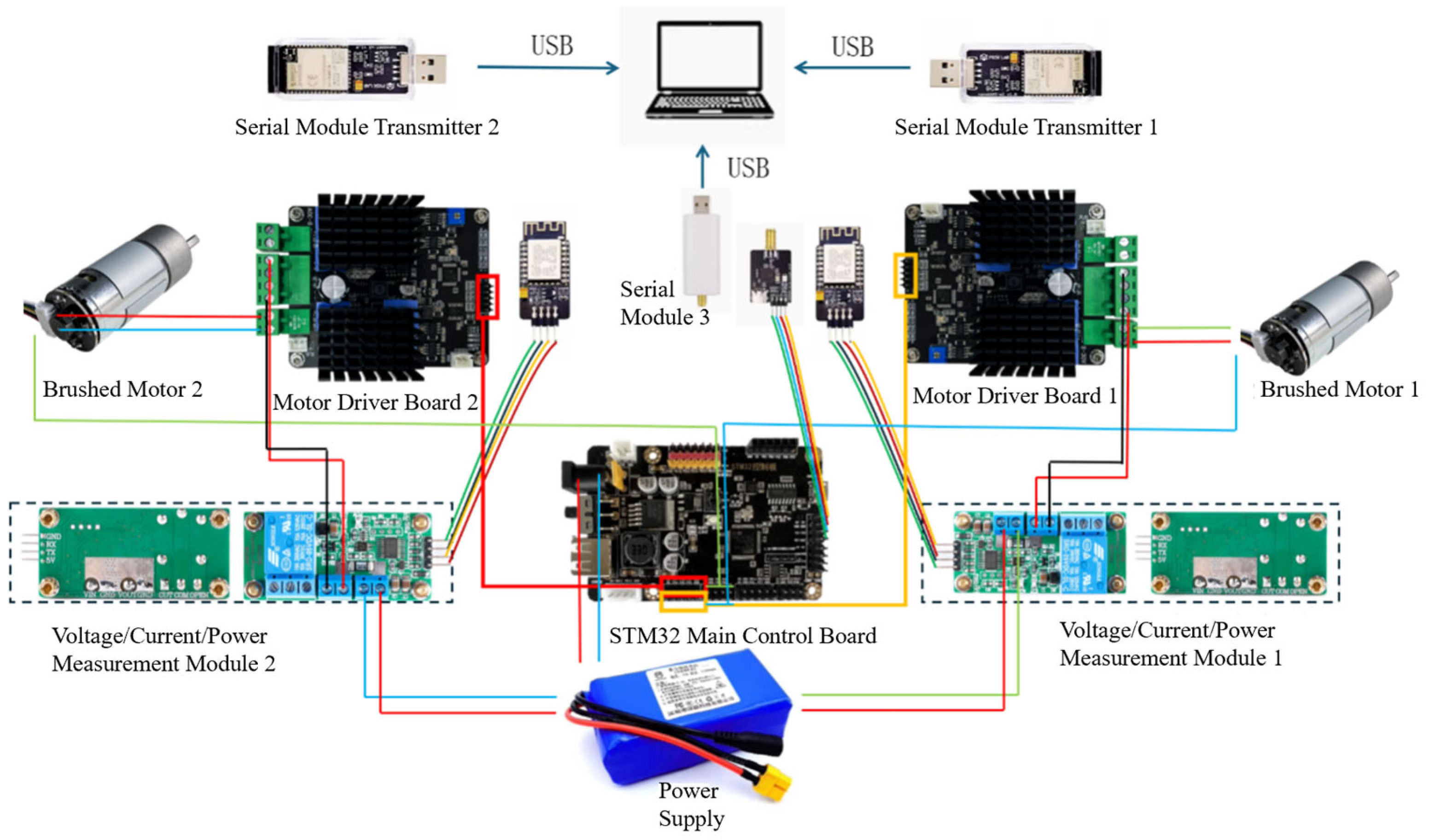

The electronic control system of the apple-picking robot consists of a power supply, an STM32 main control board, motor driver boards, voltage/current/power measurement modules, brushed direct current (DC) motors, and serial communication modules; its configuration is shown in Figure 8.

The power supply delivers 12.6 V to two brushed DC motors through two voltage/current/power measurement modules and two motor driver boards. The motor driver boards drive the motors according to the input motor control signals. The measurement modules work as follows:

- For current detection, each module converts the motor current into a proportional voltage via a sampling resistor and feeds it to an ADC (analog-to-digital converter) chip.
- For voltage detection, the voltage is scaled by a resistor divider and fed directly to the ADC chip.

These modules measure the robot's power under different operating conditions. Each brushed DC motor has a built-in encoder at its end that feeds back the motor speed. From this speed feedback, the motor driving torque under different operating conditions can be calculated.

Data and commands are exchanged between the boards and a host computer:

- Serial Communication Module 3 transmits the data from the measurement modules to the host computer.
- The host computer sends commands to the STM32 main control board, which detects the encoder signals and outputs the motor control signals. Upon receiving a command, the STM32 board drives the brushed DC motors via PWM (pulse width modulation) and adjusts their speed accordingly.
- Serial Communication Modules 1 and 2 obtain the output power of the two motors by receiving data from Voltage/Current/Power Measurement Modules 1 and 2, respectively.

Motor speed is regulated by a differential proportional–integral–derivative (PID) algorithm whose stability is confirmed by an analytical proof based on classical control theory [28]. Simulations using the Åström–Hägglund parameter tuning method verified control precision and the dynamic compatibility between the control algorithm and the mechanical structure. They demonstrated stable operation on a 10° slope and a theoretical maximum climbing angle of 35°.
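The measurement chain described above reduces to a few conversions:

- ADC counts to volts;
- shunt voltage to current;
- divider output to supply voltage;
- encoder counts to speed;
- power and speed to torque (τ = ηP/ω).

The sketch below uses hypothetical component values for the 12-bit ADC, 3.3 V reference, shunt resistance, amplifier gain, divider ratio, encoder resolution, and efficiency η. Substitute the actual hardware parameters.

```python
import math

ADC_BITS = 12         # assumed ADC resolution
V_REF = 3.3           # assumed ADC reference voltage (V)
SHUNT_OHM = 0.01      # hypothetical sampling (shunt) resistor
SENSE_GAIN = 50.0     # hypothetical amplifier gain between shunt and ADC
DIVIDER_RATIO = 5.0   # hypothetical (R1 + R2) / R2 of the voltage divider
ENCODER_CPR = 1320    # hypothetical encoder counts per output-shaft revolution


def adc_to_volts(raw: int, bits: int = ADC_BITS, vref: float = V_REF) -> float:
    """Convert a raw ADC reading to the voltage at the ADC pin."""
    return raw * vref / ((1 << bits) - 1)


def motor_current(raw: int) -> float:
    """Motor current (A) from the amplified shunt voltage: I = V / (gain * R)."""
    return adc_to_volts(raw) / (SENSE_GAIN * SHUNT_OHM)


def supply_voltage(raw: int) -> float:
    """Supply voltage (V) reconstructed from the divider output."""
    return adc_to_volts(raw) * DIVIDER_RATIO


def shaft_speed_rpm(delta_counts: int, dt_s: float, cpr: int = ENCODER_CPR) -> float:
    """Shaft speed from encoder counts accumulated over dt_s seconds."""
    return delta_counts / cpr / dt_s * 60.0


def shaft_torque(power_w: float, rpm: float, efficiency: float = 1.0) -> float:
    """Torque (N*m) = efficiency * electrical power / angular speed; NaN at standstill."""
    omega = rpm * 2.0 * math.pi / 60.0
    return efficiency * power_w / omega if omega else float("nan")
```

Multiplying `motor_current` by `supply_voltage` gives the electrical power P that `shaft_torque` expects. Note that near standstill (e.g., during a stall), the power/speed ratio becomes unreliable, so current is the better stall indicator.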

4.2. Design of the Picking Manipulator

As depicted in Figure 9, the picking control system integrates a four-degree-of-freedom (4-DoF) manipulator driven by five ST3215 servos (each with a torque of 3.2 kg·cm), using a “timer + Direct Memory Access (DMA)” control mode.

This approach uses the 16-bit counter of the TIM1 timer to generate precise pulses of 0.5 to 2.5 ms. DMA channels update the duty cycle in real time, so all five servos can be controlled simultaneously with a latency of ≤1 ms [29]. This ensures synchronized motion across the manipulator's four degrees of freedom: waist rotation, boom pitch, forearm pitch, and end-effector opening and closing. This motion is coordinated with a binocular structured-light camera mounted atop the control cabin for target localization.
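The pulse widths quoted above translate into timer register values once a tick rate is fixed. This sketch assumes:

- a 72 MHz timer clock (the STM32F103's maximum system clock);
- a 1 MHz counter tick;
- the 50 Hz PWM frame mentioned in Section 4.3;
- a linear 0–180° mapping onto 0.5–2.5 ms.

The angle span and the flat buffer layout for DMA are assumptions, and assigning the five values to physical timer channels is not modeled.

```python
from typing import List, Sequence, Tuple

TIMER_CLOCK_HZ = 72_000_000  # assumed TIM1 input clock (STM32F103 maximum)


def timer_settings(clock_hz: int = TIMER_CLOCK_HZ, tick_hz: int = 1_000_000,
                   pwm_hz: int = 50) -> Tuple[int, int]:
    """Prescaler (PSC) and auto-reload (ARR) values for the requested PWM frame."""
    psc = clock_hz // tick_hz - 1
    arr = tick_hz // pwm_hz - 1
    if not (0 <= psc <= 0xFFFF and 0 <= arr <= 0xFFFF):
        raise ValueError("prescaler or period does not fit a 16-bit register")
    return psc, arr


def pulse_ticks(angle_deg: float, min_us: float = 500.0, max_us: float = 2500.0,
                span_deg: float = 180.0, tick_hz: int = 1_000_000) -> int:
    """Compare (CCR) value for a servo angle, clamped to the valid span."""
    angle = min(max(angle_deg, 0.0), span_deg)
    pulse_us = min_us + (max_us - min_us) * angle / span_deg
    return round(pulse_us * tick_hz / 1_000_000)


def dma_buffer(angles: Sequence[float]) -> List[int]:
    """One compare value per servo, in the order the DMA transfer would write them."""
    return [pulse_ticks(a) for a in angles]
```

With these assumptions, PSC = 71 and ARR = 19999, and a 90° command becomes a compare value of 1500 ticks (1.5 ms). Clamping the angle before conversion keeps an erroneous command from producing an out-of-range pulse.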

The vision module, running on an onboard computer, uses the YOLOv8 algorithm and achieves a recognition accuracy of 90.2% for apples with diameters ≥65 mm, with a positioning error of ≤5 mm. The main control unit communicates with the mobile platform controller via the Universal Asynchronous Receiver/Transmitter (UART) protocol. It solves the manipulator's forward and inverse kinematics using the Denavit–Hartenberg method and uses Monte Carlo sampling to plan a safe workspace, mitigating a hazardous angle range of 10.8% during waist rotation. The power module has a dual-conversion circuit supplying 12 V to the servos and 5 V to the sensors, with current monitoring and overload protection provided by an INA219 chip.
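A minimal forward-kinematics and Monte Carlo workspace sketch under the standard Denavit–Hartenberg convention is given below. It uses the 235 mm boom and 220 mm forearm reported in Section 5. The shoulder height, frame assignment, and joint limits are hypothetical. The end-effector joint is omitted because it does not move the gripper position, and the hazardous-angle analysis is not reproduced.

```python
import numpy as np

BOOM_MM = 235.0        # boom length from Section 5
FOREARM_MM = 220.0     # forearm length from Section 5
SHOULDER_H_MM = 100.0  # hypothetical shoulder height above the platform

# Hypothetical joint limits (rad): waist, shoulder (boom) pitch, elbow (forearm) pitch.
JOINT_LIMITS = np.array([[-np.pi, np.pi], [0.0, np.pi], [-np.pi / 2, np.pi / 2]])


def dh(theta: float, d: float, a: float, alpha: float) -> np.ndarray:
    """Standard Denavit-Hartenberg homogeneous transform for one link."""
    ct, st = np.cos(theta), np.sin(theta)
    ca, sa = np.cos(alpha), np.sin(alpha)
    return np.array([
        [ct, -st * ca, st * sa, a * ct],
        [st, ct * ca, -ct * sa, a * st],
        [0.0, sa, ca, d],
        [0.0, 0.0, 0.0, 1.0],
    ])


def gripper_position(waist: float, shoulder: float, elbow: float) -> np.ndarray:
    """Forward kinematics: joint angles (rad) -> gripper centre (mm) in the base frame."""
    t = (
        dh(waist, SHOULDER_H_MM, 0.0, np.pi / 2)
        @ dh(shoulder, 0.0, BOOM_MM, 0.0)
        @ dh(elbow, 0.0, FOREARM_MM, 0.0)
    )
    return t[:3, 3]


def sample_workspace(n: int = 5000, seed: int = 0) -> np.ndarray:
    """Monte Carlo workspace: gripper positions for uniformly sampled joint angles."""
    rng = np.random.default_rng(seed)
    q = rng.uniform(JOINT_LIMITS[:, 0], JOINT_LIMITS[:, 1], size=(n, 3))
    return np.array([gripper_position(*row) for row in q])
```

Points from `sample_workspace()` can be tested against obstacle or keep-out volumes to mark unsafe joint regions. Their maximum z-value estimates reachable height under the assumed limits.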

4.3. Hybrid AIGC–Human Workflow for Embedded Control Code

The embedded code for the robotic control system is produced through a hybrid workflow that combines AIGC assistance with human optimization, using LLMs to accelerate low-level code development while ensuring functional reliability. The target hardware is the STM32F103RB/CT6 microcontroller (STMicroelectronics, Geneva, Switzerland), together with the servo control logic of the 4-DoF robotic arm. Initial code frameworks are generated automatically by feeding technical specifications into LLMs such as DeepSeek and Doubao; these include PWM signal timing, encoder pulse parsing rules, and UART communication protocol formats. Specifically, DeepSeek generated modular code incorporating dual-channel H-bridge driver initialization and PID closed-loop speed control based on the drive characteristics of JGB37-550 motors (Shenzhen Xinyongtai Motor Co., Ltd., Shenzhen, Guangdong, China). Doubao optimized the serial data exchange between the voltage/current measurement modules and the main control board, reducing transmission latency to <1 ms to meet the communication requirements of the orchard robot.

LLMs primarily automate repetitive coding tasks, including peripheral register configuration, basic I/O interface definitions, and algorithm template generation (e.g., the discretized implementation of incremental PID). Human intervention focuses on critical functional verification: calibrating PID parameters via the Ziegler–Nichols method to ensure the motion stability of the tracked chassis on 32° slopes; integrating overload protection logic using the INA219 current sensor (Texas Instruments, Dallas, TX, USA) to prevent stall-induced motor damage; and validating 50 Hz PWM frequency compatibility with ST3215 servos (Waveshare Technology Co., Ltd., Shenzhen, Guangdong, China).
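For reference, the incremental (velocity-form) PID computes a control increment Δu(k) = kp[e(k) − e(k−1)] + ki·e(k) + kd[e(k) − 2e(k−1) + e(k−2)] and accumulates it. Here ki = Ki·Ts and kd = Kd/Ts for sample period Ts, and the velocity form makes output clamping straightforward. The sketch pairs this controller with the classic Ziegler–Nichols ultimate-gain rule (Kp = 0.6Ku, Ti = Tu/2, Td = Tu/8). It is illustrative only: the ±100 output limit (e.g., duty cycle in percent) is an assumption, and any gains used with it are hypothetical.

```python
from typing import Tuple


def ziegler_nichols_pid(ku: float, tu: float) -> Tuple[float, float, float]:
    """Classic Z-N ultimate-gain rule; returns continuous (Kp, Ki, Kd)."""
    kp = 0.6 * ku
    ti, td = tu / 2.0, tu / 8.0
    return kp, kp / ti, kp * td


def to_discrete(kp: float, ki: float, kd: float, ts: float) -> Tuple[float, float, float]:
    """Convert continuous gains to per-sample gains for sample period ts (s)."""
    return kp, ki * ts, kd / ts


class IncrementalPID:
    """Velocity-form PID: u(k) = u(k-1) + du(k), clamped to [out_min, out_max]."""

    def __init__(self, kp: float, ki: float, kd: float,
                 out_min: float = -100.0, out_max: float = 100.0) -> None:
        self.kp, self.ki, self.kd = kp, ki, kd  # per-sample (discrete) gains
        self.out_min, self.out_max = out_min, out_max
        self.e1 = 0.0  # e(k-1)
        self.e2 = 0.0  # e(k-2)
        self.u = 0.0   # last output

    def update(self, setpoint: float, measurement: float) -> float:
        e = setpoint - measurement
        du = (self.kp * (e - self.e1)
              + self.ki * e
              + self.kd * (e - 2.0 * self.e1 + self.e2))
        # Clamping the accumulated output also limits integral wind-up.
        self.u = min(max(self.u + du, self.out_min), self.out_max)
        self.e2, self.e1 = self.e1, e
        return self.u
```

In use, `to_discrete(*ziegler_nichols_pid(ku, tu), ts)` provides starting gains from a measured ultimate gain Ku and oscillation period Tu. `update()` is then called once per control period with the encoder-derived speed. The Z-N values are typically detuned by hand afterwards, as the field calibration described above implies.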

The microcontroller firmware is developed in C using STM32CubeIDE for the code compilation, debugging, and Hardware Abstraction Layer (HAL) configuration, supplemented by Keil MDK for low-level register optimization. Host–computer data parsing and control command transmission are implemented in Python 3.9 within the PyCharm (2023.3.7 Professional Edition) environment, utilizing the pyserial library for UART communication with the STM32.

5. Experimental Work

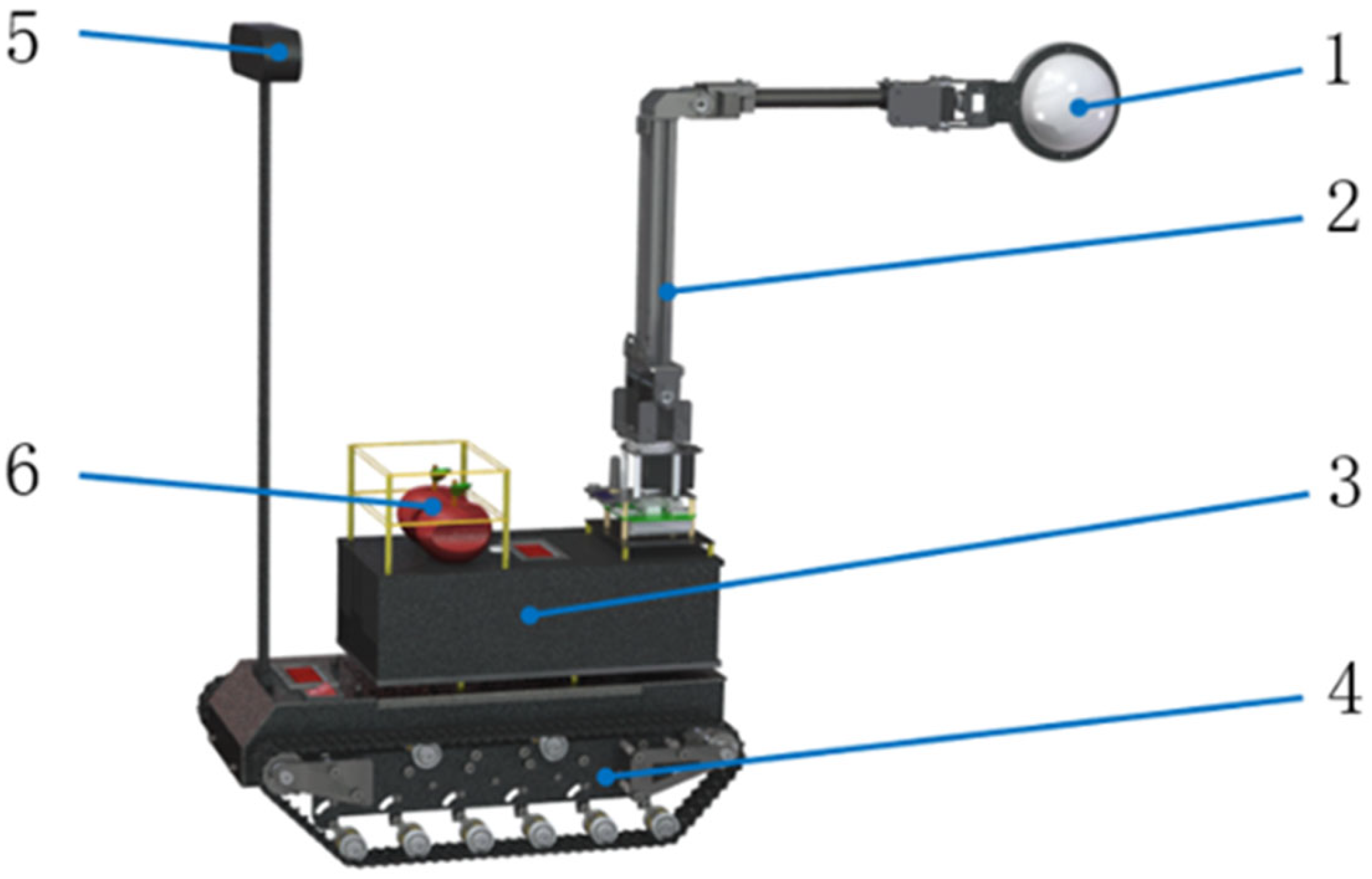

As shown in Figure 10, the prototype measures 400 mm × 255 mm × 635 mm (folded), meeting the ≤500 mm × 300 mm × 700 mm requirement. The manipulator has a 235 mm boom, a 220 mm forearm, a 100 mm gripper diameter, and a maximum picking height of 830.32 mm, exceeding the 800 mm target. The mobile platform weighs 10.65 kg unloaded and reaches a maximum speed of 0.97 m·s⁻¹, in agreement with theoretical calculations.

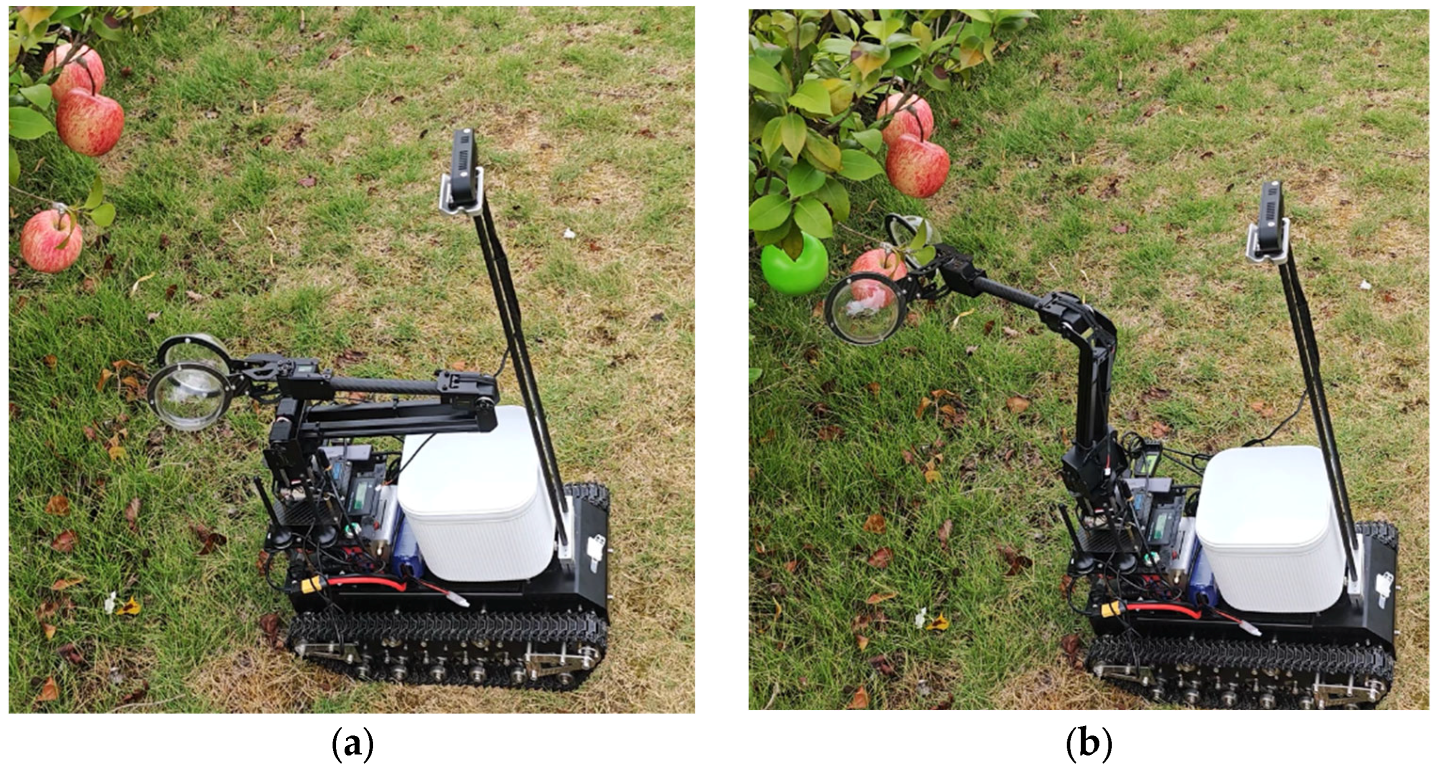

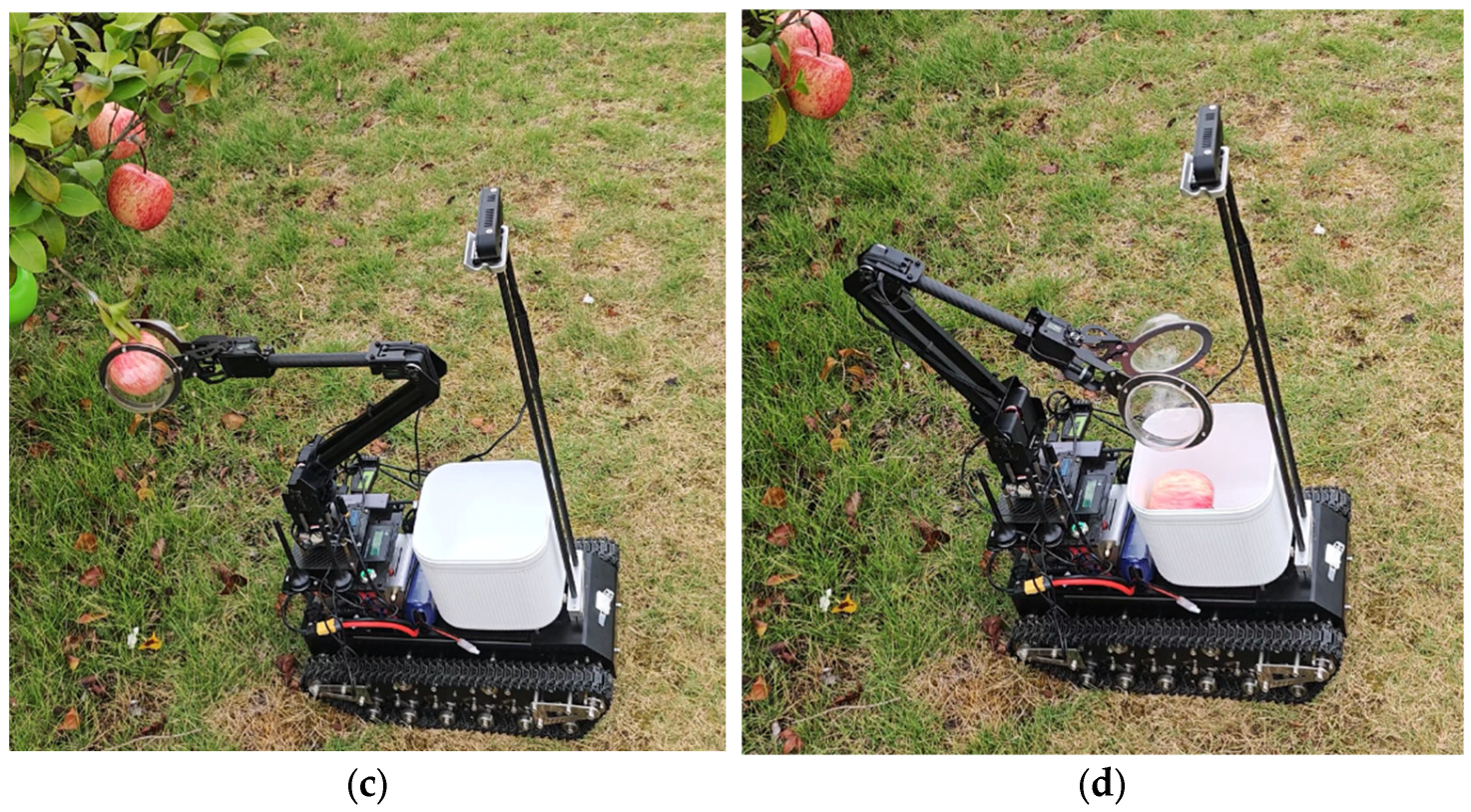

The experiments were conducted on trunk-shaped fruit trees 2.53–3 m in height with canopy widths of less than 1.5 m, assessing picking tasks with apples of varying quantities and colors. The picking workflow, detailed in Figure 11, showed a recognition success rate of 95% for red, ripe apples, with an average picking time of 12.5 s and a capacity of up to three fruits per cycle. However, recognition accuracy for green, unripe apples dropped to 80%, giving an overall success rate of 92.2%. In a typical scenario, picking three red apples consecutively took 18.5 s, while a task involving five red apples and one green apple resulted in one failure due to a missed detection of the green fruit. The hemispherical end-effector caused no fruit damage in any successful case, demonstrating that its structural design effectively mitigates the squeezing risks associated with traditional grasping methods.

While the initial field tests validated the core functionality, post-experiment analysis identified opportunities for refinement. Specifically, the hemispherical end effector showed slight fruit slippage during rapid movement, which was addressed by applying a rubber membrane coating to increase friction. Furthermore, based on an AI-driven analysis of the experimental metrics, including the 92.2% harvesting success rate and the 12.5 s average picking time, the model proposed a lightweight redesign of the clamping end effector. Validated via 3D printing, this redesign reduced the inertia of the manipulator’s end effector, improving motion-control precision and lowering motor load and energy consumption, which enables faster and more accurate operation. These improvements strengthen system durability and environmental adaptability and align with the core requirement of agricultural robots for efficient, damage-free harvesting, laying a technical foundation for practical orchard applications.

Compared with similar studies, this prototype shows advantages in picking efficiency and non-damage rate, with the hemispherical end effector simplifying the mechanical structure while improving compatibility with diverse fruit shapes [30]. Building on the validated optimizations, future improvements could focus on developing multi-robot cooperative navigation algorithms to further enhance adaptability and operational efficiency in complex orchard environments [31].

6. Discussion

AI technology spans the entire robot design workflow, from conceptualization to deployment, offering significant advantages over traditional design processes. In the conceptual design phase, multimodal LLMs (ChatGPT, DeepSeek, and Doubao) jointly generate technical specifications by parsing user requirements. For instance, given the requirement to “adapt to hilly orchards”, these models derive critical parameters such as a crawler ground contact length of 270 mm and a 4-DoF manipulator configuration, providing a precise foundation for subsequent development.

In the data and algorithm optimization stage, generative AI further improves the system’s robustness. Latent diffusion models generate synthetic orchard images covering extreme lighting and weather conditions, which are added to the training data to increase its diversity. Trained on this hybrid dataset, the optimized YOLOv8 model achieves 90.2% recognition accuracy in complex orchard environments, helping to address challenges such as fruit occlusion and varying illumination.
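Mixing synthetic and real images at a fixed ratio can be made reproducible by sampling each experimental group from fixed pools with a seeded generator. The sketch below is an assumption about how such splits could be assembled, not the authors’ pipeline: the group sizes follow Table 2, while the file names, the seed, and the function name `build_mixed_dataset` are hypothetical.

```python
import random
from typing import Sequence

# (generated, real) image counts per experimental group, as listed in Table 2.
GROUPS = {"D1": (100, 300), "D2": (200, 300), "D3": (300, 300),
          "D4": (0, 400), "D5": (0, 500), "D6": (0, 600)}


def build_mixed_dataset(real: Sequence[str], generated: Sequence[str],
                        n_generated: int, n_real: int, seed: int = 0) -> list[str]:
    """Draw a shuffled training list with a fixed generated:real composition.

    Images are sampled without replacement; the seed makes each split repeatable.
    """
    if n_real > len(real) or n_generated > len(generated):
        raise ValueError("requested more images than the pools contain")
    rng = random.Random(seed)
    mixed = rng.sample(list(generated), n_generated) + rng.sample(list(real), n_real)
    rng.shuffle(mixed)
    return mixed


if __name__ == "__main__":
    # Placeholder file names; real paths would point to the annotated images.
    real_pool = [f"real_{i:04d}.jpg" for i in range(600)]
    ldm_pool = [f"ldm_{i:04d}.jpg" for i in range(300)]
    for name, (n_gen, n_real) in GROUPS.items():
        ds = build_mixed_dataset(real_pool, ldm_pool, n_gen, n_real, seed=42)
        n_synthetic = sum(path.startswith("ldm_") for path in ds)
        print(f"{name}: {len(ds)} images, {n_synthetic} synthetic")
```

Using a private `random.Random` instance rather than the module-level generator keeps each split independent of any other randomness in the training script.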

In control logic design, LLMs play a key role in accelerating low-level development. Given technical requirements as input, models such as DeepSeek generate finite state machine code frameworks that govern the manipulator’s picking action sequence, while Doubao optimizes the serial communication logic between the measurement modules and the main control board. This reduces repetitive coding effort while preserving the reliability of core control flows.
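To illustrate the kind of finite state machine skeleton described above, the sketch below encodes a picking sequence as a transition table, with an emergency-stop event that overrides every state. It is written in Python rather than the STM32 C firmware, and the state names and events (e.g. `fruit_found`, `slip`) are hypothetical, not the LLM-generated code from this work.

```python
from enum import Enum, auto


class State(Enum):
    IDLE = auto()
    DETECT = auto()
    APPROACH = auto()
    GRASP = auto()
    DETACH = auto()
    PLACE = auto()
    E_STOP = auto()


# Transition table: (current state, event) -> next state. Event names are hypothetical.
TRANSITIONS = {
    (State.IDLE, "start"): State.DETECT,
    (State.DETECT, "fruit_found"): State.APPROACH,
    (State.DETECT, "no_fruit"): State.IDLE,
    (State.APPROACH, "reached"): State.GRASP,
    (State.APPROACH, "lost_target"): State.DETECT,
    (State.GRASP, "grasped"): State.DETACH,
    (State.GRASP, "slip"): State.DETECT,        # retry detection after a slip
    (State.DETACH, "detached"): State.PLACE,
    (State.PLACE, "placed"): State.DETECT,      # loop to the next fruit
    (State.E_STOP, "reset"): State.IDLE,
}


class PickingFSM:
    def __init__(self) -> None:
        self.state = State.IDLE

    def dispatch(self, event: str) -> State:
        # A safety event overrides every other transition.
        if event == "e_stop":
            self.state = State.E_STOP
            return self.state
        # Unknown (state, event) pairs leave the state unchanged.
        self.state = TRANSITIONS.get((self.state, event), self.state)
        return self.state
```

A table-driven design keeps every allowed transition in one place, which makes generated code easier to review: any transition not listed is simply ignored, and only an explicit `reset` leaves the emergency-stop state.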

The core value of AI in this workflow lies in accelerating repetitive tasks and integrating cross-domain knowledge. It shortens design iteration time compared with traditional methods, easing efficiency bottlenecks and creative constraints. In cross-domain integration, Doubao supplies agronomic parameters that help prevent mechanical overload, while DeepSeek-generated STM32 motor control code frameworks significantly reduce low-level development effort. Additionally, AIGC models map safety standards such as ISO 18497 [23] onto the design, embedding emergency stop circuits and collision detection sensors to systematically improve design safety.
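The emergency-stop, collision, and overload-protection requirements can be expressed as a small latching interlock. The Python sketch below combines an e-stop input, a collision flag, and a debounced overcurrent check of the kind an INA219 current reading could feed. The current limit, the sample count, and the class name `SafetyMonitor` are assumptions, and the logic does not reproduce any specific clause of ISO 18497.

```python
from dataclasses import dataclass


@dataclass
class SafetyMonitor:
    """Latching trip logic: e-stop, collision, or sustained motor overcurrent.

    current_limit_ma and trip_samples are hypothetical values; real limits
    would come from the motor datasheet and stall tests.
    """
    current_limit_ma: float = 2500.0
    trip_samples: int = 5          # consecutive over-limit samples before tripping
    _over_count: int = 0
    tripped: bool = False
    reason: str = ""

    def check(self, current_ma: float, e_stop: bool, collision: bool) -> bool:
        """Return True if motion may continue, False once tripped (latched)."""
        if self.tripped:
            return False
        if e_stop or collision:
            self._trip("e_stop" if e_stop else "collision")
            return False
        # Debounce: brief inrush spikes are tolerated; a stall keeps current high.
        if current_ma > self.current_limit_ma:
            self._over_count += 1
        else:
            self._over_count = 0
        if self._over_count >= self.trip_samples:
            self._trip("overcurrent")
            return False
        return True

    def _trip(self, reason: str) -> None:
        self.tripped = True
        self.reason = reason

    def reset(self) -> None:
        """Manual reset after the cause has been cleared."""
        self._over_count = 0
        self.tripped = False
        self.reason = ""
```

Latching the trip until an explicit `reset` mirrors common emergency-stop practice: motion does not resume on its own when the fault condition disappears.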

Despite these advantages, generative design has notable practical limitations. First, it relies heavily on high-quality, large-scale training data; biases or gaps in that data can lead to logical errors or design flaws, potentially yielding impractical outcomes. Second, current models face precision bottlenecks in cross-domain knowledge integration because they rely on probabilistic matching rather than a deep understanding of physical laws or industry standards, which renders some solutions unworkable in precision manufacturing. Third, safety and interpretability challenges persist: the decision processes of black-box models are difficult to trace, and auto-generated code or modules may contain vulnerabilities that cause them to fail compliance reviews; solutions without clear safety validation logic are difficult to get through regulatory approval. Finally, generative design risks homogenizing innovation: models constrained by their training data distributions may produce “locally optimal” solutions and struggle to achieve disruptive breakthroughs.

AIGC introduces a transformative paradigm for apple-picking robot design, with its value evident in improved design efficiency and cross-modal optimization. Preliminary research suggests that the AIGC-driven design workflow can reduce the considerable complexity and challenges of intelligent robot design, especially for beginners and young engineers [32]. The visual system, an improved YOLOv8 model incorporating GSConv and DCNv3 and trained on a 1:3 (generated:real) mixed dataset, achieves 90.2% recognition accuracy, improving robustness under complex conditions [33]. In control logic design, automatically generating finite state machine (FSM) code frameworks reduces the low-level development workload, and embedding safety modules in the control flow systematically reduces risk [31].

However, challenges remain. Model-generated solutions can exhibit “technical hallucinations” (e.g., 15–40% errors in cutting force parameters), which require physics simulation and prototype validation [34]. The integration of robotics knowledge is still limited, suggesting the need for a domain-specific LLM for robot design. Data quality currently depends on manual prompt optimization, which calls for automated data-cleaning tools. Future work could develop a “language-driven–simulation–implementation” platform that integrates digital twin technology for real-time, data-driven model tuning and explores multimodal iterations to optimize motion trajectories [35].
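A simple guard against such parameter hallucinations is to compare each model-proposed value with a trusted reference from simulation or measurement and route large deviations to manual review. In the sketch below, the 15% default tolerance echoes the lower end of the error range quoted above but is otherwise an arbitrary assumption, and the function name `flag_hallucinations` and the parameter names in the usage note are hypothetical.

```python
from typing import Mapping


def flag_hallucinations(proposed: Mapping[str, float],
                        reference: Mapping[str, float],
                        rel_tol: float = 0.15) -> dict[str, float]:
    """Return parameters whose relative deviation from a trusted reference
    (e.g. a physics simulation or bench measurement) exceeds rel_tol.

    Parameters with no usable reference are reported as NaN so that they are
    routed to manual review instead of being silently accepted.
    """
    flagged: dict[str, float] = {}
    for name, value in proposed.items():
        ref = reference.get(name)
        if ref is None or ref == 0:
            flagged[name] = float("nan")
            continue
        err = abs(value - ref) / abs(ref)
        if err > rel_tol:
            flagged[name] = err
    return flagged
```

For example, with hypothetical values `{"cut_force_N": 14.0}` proposed against a simulated `{"cut_force_N": 10.0}`, the function reports a relative error of 0.4 for that parameter. Treating missing references as failures rather than passes is the conservative choice for safety-relevant parameters.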

The integration of generative AI into agricultural robotics also raises significant intellectual property (IP) and ethical challenges [36]. Foremost among these is unintentional IP infringement, in which AI systems may inadvertently replicate patented mechanisms, such as proprietary fruit detachment systems or gripper designs. Addressing these challenges requires not only comprehensive legal analysis and adaptive reform of copyright frameworks, but also coordinated multidisciplinary efforts spanning the technological, societal, and legal domains to establish effective governance of AI-generated creations [37]. As interim measures, proactively screening AI outputs against patent databases and explicitly disclosing AI-generated content are essential safeguards.

7. Conclusions

This work proposed an AIGC-assisted robot design methodology that shifts the design paradigm from human-centric iteration to human–AI co-creation. This synergy can markedly accelerate innovation while embedding responsibility into autonomous systems, indicating that AIGC is not merely a tool but a foundational enabler for building intelligent machines.

Although the AIGC-driven design workflow can alleviate the significant complexities and challenges of intelligent robot design, it also places new demands on engineers, such as synthesizing outputs across multiple LLMs, designing chains of thought, and identifying and intervening in AI hallucinations. Furthermore, AI-generated creations may lead to unintentional intellectual property infringement. Therefore, the prudent application and compliance supervision of AIGC require technical transparency mechanisms and legal traceability.

Future research will further deepen the integration of AIGC into the robot design process, especially by exploring automated, systematic workflows based on AI agents [38]. For instance, retrieval-augmented generation (RAG) could be introduced because it can shift workflows from statistical guessing to evidence-based reasoning, addressing critical gaps in reliability and innovation and embedding regulatory compliance and physics-aware reasoning directly into generative workflows. In addition, more design processes should be integrated with AIGC, especially 3D model generation based on generative AI, intelligent analysis based on finite element simulation, and the upgrading of scene intelligence or embodied intelligence based on virtual prototypes and digital twins.
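As an illustration of the retrieval step in RAG, the sketch below ranks a small corpus of design passages (for example, excerpts from standards or agronomic data) by bag-of-words cosine similarity and prepends the top matches to the prompt so the model answers from cited evidence. Production RAG systems typically use dense embeddings and a vector index instead; the corpus, the prompt wording, and the function names `retrieve` and `build_prompt` are hypothetical.

```python
import math
import re
from collections import Counter


def _tokens(text: str) -> Counter:
    """Lower-cased alphanumeric token counts."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def _cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    na = math.sqrt(sum(v * v for v in a.values()))
    nb = math.sqrt(sum(v * v for v in b.values()))
    return dot / (na * nb) if na and nb else 0.0


def retrieve(query: str, corpus: dict[str, str], k: int = 2) -> list[str]:
    """Return the ids of the k passages most similar to the query."""
    q = _tokens(query)
    ranked = sorted(corpus, key=lambda doc_id: _cosine(q, _tokens(corpus[doc_id])),
                    reverse=True)
    return ranked[:k]


def build_prompt(query: str, corpus: dict[str, str], k: int = 2) -> str:
    """Prepend retrieved passages so the model can cite its sources."""
    context = "\n".join(f"[{d}] {corpus[d]}" for d in retrieve(query, corpus, k))
    return ("Answer using only the sources below and cite their ids.\n"
            f"{context}\n\nQuestion: {query}")
```

Asking the model to cite passage ids makes each generated design parameter traceable to a source, which is the property the RAG argument above relies on.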

Author Contributions

Conceptualization, Q.J. and F.H.; methodology, Q.J., J.Z. (Jiayu Zhao) and F.H.; software, J.Z. (Jiayu Zhao); validation, Q.J. and J.Z. (Jiayu Zhao); formal analysis, Q.J. and F.H.; investigation, Q.J., J.Z. (Jiayu Zhao), W.B., J.Z. (Ji Zhao) and F.H.; resources, Q.J., F.H. and Y.Z.; data curation, J.Z. (Jiayu Zhao), W.B. and J.Z. (Ji Zhao); writing—original draft preparation, F.H., J.Z. (Jiayu Zhao), W.B., J.Z. (Ji Zhao) and Q.J.; writing—review and editing, F.H., J.Z. (Jiayu Zhao) and Q.J.; visualization, W.B., J.Z. (Ji Zhao) and Q.J.; supervision, F.H.; project administration, Q.J. and F.H.; funding acquisition, Q.J. and F.H. All authors have read and agreed to the published version of the manuscript.

Funding

We acknowledge the Jiangsu Province Engineering Technology Research and Development Center for Higher Vocational Colleges and Universities Big Data Intelligent Application (Su Teaching Letter [2023] No. 11); the General Program of Basic Science (Natural Science) Research in Colleges and Universities of Jiangsu Province (grant No. 22KJD210005); and the Qing Lan Project of Jiangsu Province of China (grant No. 07030050204) for providing the equipment and funding.

Data Availability Statement

All data generated or analyzed during this study are included in this article.

Conflicts of Interest

The authors declare no conflicts of interest.

Nomenclature

| Abbreviation | Definition |
|---|---|
| ADC | Analog-to-Digital Converter |
| AI | Artificial Intelligence |
| AIGC | Artificial Intelligence-Generated Content |
| CAD | Computer-Aided Design |
| CoT | Chain of Thought |
| DC | Direct Current |
| DMA | Direct Memory Access |
| DoF | Degree of Freedom |
| GAD | Generative AI-Assisted Design |
| GAI | Generative Artificial Intelligence |
| GAN | Generative Adversarial Network |
| IP | Intellectual Property |
| LLM | Large Language Model |
| LVLM | Large Vision-Language Model |
| PID | Proportional–Integral–Derivative |
| PWM | Pulse Width Modulation |

References

1. Cao, Y.; Li, S.; Liu, Y.; Yan, Z.; Dai, Y.; Yu, P.S.; Sun, L. A comprehensive survey of AI-generated content (AIGC): A history of generative AI from GAN to ChatGPT. arXiv 2023, arXiv:2303.04226.

2. Kalluri, K. Revolutionizing computational material science with ChatGPT: A framework for AI-driven discoveries. Int. J. Innov. Res. Creat. Technol. 2024, 10, 1–11.

3. Akram, J.; Aamir, M.; Raut, R.; Anaissi, A.; Jhaveri, R.H.; Akram, A. AI-generated content-as-a-service in IoMT-based smart homes: Personalizing patient care with human digital twins. IEEE Trans. Consum. Electron. 2025, 71, 1352–1362.

4. Alasadi, E.A.; Baiz, C.R. Generative AI in education and research: Opportunities, concerns, and solutions. J. Chem. Educ. 2023, 100, 2965–2971.

5. Aluko, H.A.; Aluko, A.; Offiah, G.A.; Ogunjimi, F.; Aluko, A.O.; Alalade, F.M.; Ogeze Ukeje, I.; Nwani, C.H. Exploring the effectiveness of AI-generated learning materials in facilitating active learning strategies and knowledge retention in higher education. Int. J. Organ. Anal. 2025.

6. Shafiee, S. Generative AI in manufacturing: A literature review of recent applications and future prospects. Procedia CIRP 2025, 132, 1–6.

7. Hu, F.; Wang, C.; Wu, X. Generative artificial intelligence-enabled facility layout design paradigm. Appl. Sci. 2025, 15, 5697.

8. Jin, J.; Yang, M.; Hu, H.; Guo, X.; Luo, J.; Liu, Y. Empowering design innovation using AI-generated content. J. Eng. Des. 2025, 36, 1–18.

9. Rane, N.; Choudhary, S.; Rane, J. Contribution of ChatGPT and similar generative artificial intelligence for enhanced climate change mitigation strategies. SSRN Electron. J. 2024.

10. Davidson, J.R.; Silwal, A.; Hohimer, C.J.; Karkee, M.; Mo, C.; Zhang, Q. Proof-of-concept of a robotic apple harvester. In Proceedings of the 2016 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Daejeon, Republic of Korea, 9–14 October 2016; IEEE: Piscataway, NJ, USA, 2016; pp. 634–639.

11. Li, W.; Zhang, W.; Wu, W.; Xu, J. Exploring human-machine collaboration paths in the context of AI-generation content creation: A case study in product styling design. J. Eng. Des. 2024, 36, 298–324.

12. Wei, J.; Wang, X.; Schuurmans, D.; Bosma, M.; Chi, E.; Le, Q.V.; Zhou, D. Chain-of-thought prompting elicits reasoning in large language models. Adv. Neural Inf. Process. Syst. 2022, 35, 24824–24837.

13. Li, Z.; Huang, Z.; Zhu, Y.; Yang, S.; Hu, X. Chain of thought empowers transformers to solve inherently serial problems. arXiv 2024, arXiv:2402.12030.

14. Yavartanoo, M.; Hong, S.; Neshatavar, R.; Lee, K.M. Text2CAD: Text to 3D CAD Generation via Technical Drawings. arXiv 2024, arXiv:2411.06206v1.

15. Poole, B.; Jain, A.; Barron, J.T.; Mildenhall, B. DreamFusion: Text-to-3D using 2D Diffusion. arXiv 2022, arXiv:2209.14988.

16. Daareyni, A.; Martikkala, A.; Mokhtarian, H.; Ituarte, I.F. Generative AI meets CAD: Enhancing engineering design to manufacturing processes with large language models. Int. J. Adv. Manuf. Technol. 2025.

17. Dassault Systèmes. Generative AI in CATIA: Parametric Modeling & Intelligent Recommendations. 2025. Available online: https://www.3ds.com/products/catia/ai-driven-generative-experiences (accessed on 5 March 2025).

18. Baidu. Wenxin Industrial Vision Generation & Auxiliary Modeling: Technical Whitepaper. 2025. Available online: https://wenxin.baidu.com/tech/industrial-aigc (accessed on 5 March 2025).

19. Huang, L.; Yu, W.; Ma, W.; Zhong, W.; Feng, Z.; Wang, H.; Chen, Q.; Peng, W.; Feng, X.; Qin, B.; et al. A survey on hallucination in large language models: Principles, taxonomy, challenges, and open questions. ACM Trans. Inf. Syst. 2025, 43, 1–55.

20. Zhang, Z.; Wang, C.; Wang, Y.; Shi, E.; Ma, Y.; Zhong, W.; Chen, J.; Mao, M.; Zheng, Z. LLM hallucinations in practical code generation: Phenomena, mechanism, and mitigation. Proc. ACM Softw. Eng. 2025, 2, 481–503.

21. Lim, Y.; Choi, H.; Shim, H. Evaluating image hallucination in text-to-image generation with question-answering. In Proceedings of the AAAI Conference on Artificial Intelligence, Palo Alto, CA, USA, 25 February–4 March 2025; AAAI Press: Washington, DC, USA, 2025; Volume 39, pp. 26290–26298.

22. NY/T 1086-2006 (S); Apple Picking Technical Specification. Ministry of Agriculture of the People’s Republic of China: Beijing, China; Agriculture Press: Beijing, China, 2006. Available online: https://www.moa.gov.cn/govpublic/SCYJJXXS/201006/t20100606_1532967.htm (accessed on 26 June 2025).

23. ISO 18497-1:2024 (S); Agricultural Machinery and Tractors—Safety of Partially Automated, Semi-Autonomous and Autonomous Machinery. International Organization for Standardization (ISO): Geneva, Switzerland, 2024. Available online: https://www.iso.org/standard/82684.html (accessed on 10 March 2025).

24. ISO/DIS 12100 (S); Safety of Machinery—General Principles for Design—Risk Assessment and Risk Reduction. International Organization for Standardization (ISO): Geneva, Switzerland, 2025. Available online: https://www.iso.org/standard/88578.html (accessed on 10 March 2025).

25. Rombach, R.; Blattmann, A.; Lorenz, D.; Esser, P.; Ommer, B. High-Resolution Image Synthesis with Latent Diffusion Models. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), New Orleans, LA, USA, 18–24 June 2022; pp. 10674–10685.

26. Ma, B.; Hua, Z.; Wen, Y.; Deng, H.; Zhao, Y.; Pu, L.; Song, H. Using an Improved Lightweight YOLOv8 Model for Real-Time Detection of Multi-Stage Apple Fruit in Complex Orchard Environments. In Artificial Intelligence in Agriculture; Elsevier: Amsterdam, The Netherlands, 2024; Volume 11, pp. 70–82.

27. Bochkovskiy, A.; Wang, C.Y.; Liao, H.Y.M. YOLOv4: Optimal Speed and Accuracy of Object Detection. arXiv 2020, arXiv:2004.10934.

28. Åström, K.J.; Hägglund, T. PID Controllers: Theory, Design, and Tuning. In Instrument Society of America; Wiley-IEEE Press: Hoboken, NJ, USA, 1995.

29. STMicroelectronics. AN5732: STM32 Servo Motor Control Application Note. 2022. Available online: https://www.st.com/resource/en/application_note/an5732-stm32-servo-motor-control-stmicroelectronics.pdf (accessed on 10 May 2024).

30. Fang, J.; Dai, N.; Xin, Z.; Yuan, J.; Liu, X. Design and Interaction Dynamics Analysis of a Novel Hybrid Bending-Twisting-Pulling End-Effector for Robotic Tomato Picking. Comput. Electron. Agric. 2025, 231, 110011.

31. Jia, Y.; Song, Y.; Cheng, J.; Jin, J.; Zhang, W.; Yang, S.X.; Kwong, S. A Deep Reinforcement Learning Approach Using Asymmetric Self-Play for Robust Multirobot Flocking. IEEE Trans. Ind. Inform. 2025, 21, 3266–3275.

32. Owen, A.E.; Roberts, J.C. Towards a Generative AI Design Dialogue. arXiv 2024, arXiv:2409.01283.

33. Lu, M.; Sun, L.; Wang, J.; Chu, H.; Yang, G.; Owoola, E.O.; Zhao, H.; Zhang, J.; Liu, M.; Song, Z.; et al. Self-Powered Flexible Force-Sensing Sensor Based on Triboelectric Nanogenerator: Practical Applications in Non-Destructive Harvesting of Fresh Fruits and Vegetables. Nano Energy 2025, 126, 107134.

34. Hu, F. Mutual Information-Enhanced Digital Twin Promotes Vision-Guided Robotic Grasping. Adv. Eng. Inform. 2022, 52, 101562.

35. Hu, F.; Wang, W.; Zhou, J. Petri Nets-Based Digital Twin Drives Dual-Arm Cooperative Manipulation. Comput. Ind. 2023, 147, 103880.

36. Yang, W. Legal Regulation of Intellectual Property Rights in the Digital Age: A Perspective from AIGC Infringement. Sci. Law J. 2024, 3, 164–173.

37. Xu, X. Research on Legal Regulation of Infringement of AIGC Generated Content. In Proceedings of the 2024 2nd International Conference on Digital Economy and Management Science, Wuhan, China, 26–28 April 2024; Atlantis Press: Dordrecht, The Netherlands, 2024; pp. 487–503.

38. Wang, Y.; Pan, Y.; Su, Z.; Deng, Y.; Zhao, Q.; Du, L.; Luan, T.H.; Kang, J.; Niyato, D. Large model based agents: State-of-the-art, cooperation paradigms, security and privacy, and future trends. IEEE Commun. Surv. Tutor. 2025.

Figure 1.

A schematic of AIGC-aided robot design methodology.

Figure 2.

Generated multiple design concepts of apple-harvesting robots. (a) Tracked-chassis apple-harvesting robot with transparent spherical enclosure end effector; (b) fixed-base apple-harvesting robot with gripper-clamping end effector; (c) tracked-chassis apple-harvesting robot with metal spherical adsorption end effector; (d) tracked-chassis apple-harvesting robot with lightweight gripping end effector.

Figure 3.

Chain of thought to generate the apple gripping and detachment design scheme.

Figure 4.

Apple images with different lighting conditions and weather generated by latent diffusion models. (a) Apples in rainy conditions with visible raindrops; (b) apples in a misty or light drizzle environment; (c) apples under strong sunlight, exhibiting vivid color saturation; (d) apples at dusk, displaying gradient coloration due to ripening and low-light illumination.

Figure 5.

Apple images with different degrees of ripeness generated by latent diffusion models. (a) Unripe green apple; (b) early ripening stage with initial red blush; (c) moderate ripening with expanded red coverage; (d) advanced ripening with dominant red hue; (e) near-ripe apple with intense red coloration; (f) fully ripe deep red apple.

Figure 6.

Model performance evaluation and data configuration of experimental groups. (a) mAP comparison of different experimental groups; (b) precision–recall tradeoff analysis; (c) evolution of F1 score across experimental groups; (d) data configuration table.

Figure 7.

Structure of tracked mobile platform: (a) tracked mobile platform; (b) track structure: 1. power switch; 2. voltage display screen; 3. frame; 4. track; 5. hollow triangular plate; 6. upper cover; 7. power indicator light; 8. headlight; 9. triangular plate; 10. driving wheel; 11. rocker arm; 12. load-bearing wheel; 13. guide wheel; 14. idler wheel; 15. spring; 16. side plate.

Figure 8.

Control configuration of the apple-picking robot.

Figure 9.

Structure of the 4-DoF manipulator, comprising: 1. dual-hemisphere soft gripper; 2. forearm; 3. boom; 4. waist; 5. transitional connecting plate; 6. base.

Figure 10.

Overall structure of the apple-picking robot, comprising: 1. dual-hemisphere soft gripper; 2. manipulator; 3. control cabin; 4. tracked locomotion mechanism; 5. binocular structured light camera; 6. storage cabin.

Figure 11.

Harvesting experiment in the simulated scene. (a) Detecting the apple; (b) end-effector locating for grasping; (c) grasping the apple; (d) placing the apple into the collection box.

Table 1.

Comparison of design recommendations generated by LLMs.

| Prompt | ChatGPT Output | DeepSeek Output | Doubao Output |
|---|---|---|---|
| “Adapt to rugged terrain” | Wide tracks + high-torque motors | Track ground contact length ≥ 0.8 m | Recommended track material for hilly orchards |
| “Lightweight mechanical arm” | Carbon fiber structure | Joint load torque ≤ 3 N·m | Reference to Chongqing hilly agricultural machinery data |

Table 2.

Experimental group configurations.

| Experimental Group | Generated Data | Real Data | Total | Mixing Ratio (Generated:Real) |
|---|---|---|---|---|
| D1 | 100 | 300 | 400 | 1:3 |
| D2 | 200 | 300 | 500 | 2:3 |
| D3 | 300 | 300 | 600 | 1:1 |
| D4 | 0 | 400 | 400 | Pure real |
| D5 | 0 | 500 | 500 | Pure real |
| D6 | 0 | 600 | 600 | Pure real |

Table 3.

Performance metrics.

| Experimental Group | mAP@0.5 | mAP@0.5:0.95 | Precision | Recall | F1 Score | Inference Time (ms) |
|---|---|---|---|---|---|---|
| D1 | 0.905 | 0.717 | 0.854 | 0.749 | 0.798 | 36.7 |
| D2 | 0.885 | 0.726 | 0.824 | 0.755 | 0.789 | 37.6 |
| D3 | 0.895 | 0.714 | 0.832 | 0.762 | 0.795 | 38.2 |
| D4 | 0.901 | 0.731 | 0.812 | 0.763 | 0.784 | 37.3 |
| D5 | 0.905 | 0.751 | 0.806 | 0.779 | 0.793 | 36.1 |
| D6 | 0.922 | 0.748 | 0.855 | 0.752 | 0.800 | 37.7 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).