1. Introduction

With the rapid development of renewable energy worldwide, lithium batteries have become the main solution to the grid-connection stability problem of renewable energy, owing to their high energy density, long cycle life, and relatively low self-discharge rate [1]. Renewable sources such as solar and wind are unstable and intermittent during generation, which makes it difficult to use this energy continuously and stably. Combining energy storage systems with renewable energy can greatly improve its utilization rate and stability [2,3]. However, as the number of cycles increases, lithium batteries degrade, resulting in reduced capacity, a shortened service life, etc. [4], which seriously affects their charging and discharging efficiency. Thus, accurately estimating the performance of lithium batteries is key to realizing the safe and stable consumption of renewable energy [5].

The state of health (SOH) of lithium batteries is an important indicator for evaluating their performance. In existing research, SOH prediction methods are mainly categorized into direct measurement, model-based prediction, and data-driven prediction [6]. Direct measurement and model-based prediction demand substantial domain expertise, and the battery’s response during charging and discharging is complex and involves many parameters, which makes model construction difficult [7,8]. In contrast, the data-driven method relies on historical data: it learns the hidden features of the data through machine learning algorithms such as neural networks and predicts the SOH based on the learned features.

In the data-driven approach, researchers have sought an accurate mapping between the features and the SOH through feature processing, using recurrent neural networks [9], machine learning algorithms [10], Gaussian regression [11], etc. Wen J et al. predicted the SOH of lithium batteries from incremental capacity curves in combination with backpropagation (BP) neural networks, but BP neural networks are unable to learn time-series features, and it is difficult to accurately characterize the SOH trend through a single feature [12]. Xia F et al. analyzed the correlations between different feature curves and the SOH via Pearson correlation analysis (PCA). They then used improved particle swarm optimization (PSO) to optimize the network parameters of a bidirectional gated recurrent unit and trained the model to predict the SOH. However, their tripartite competition mechanism PSO (TCMPSO) reduces the optimization speed, which makes the model less efficient in practical applications [13]. Zhao D et al. proposed a prediction method integrating a neural network and a physical model and constructed a hybrid model combining a temporal convolutional network and a bidirectional long short-term memory network to predict the SOH of lithium batteries [14]. Many researchers have improved SOH estimation by analyzing the correlations between the physical features and the SOH and using neural networks to extract the mapping between the strongly correlated features and the SOH [15,16,17]. However, in practical applications, energy storage batteries are constrained by factors such as the application scenario, and data volumes are often low; in this case, neural networks suffer from problems such as underfitting and cannot accurately learn the mapping between the features and the SOH.

“Capacity regeneration” is another reason that accurate SOH estimation for lithium batteries is difficult. Due to the variety of charging and discharging strategies, the SOH of lithium batteries presents a fluctuating downward trend with strong volatility at certain stages. To address this problem, existing studies have proposed the use of decomposition algorithms [18]. Decomposing the raw SOH data serves one of two purposes: mitigating the volatility or eliminating the volatile information. After mitigation, the “capacity regeneration” information is still retained in the dataset, and the data remain highly realistic; after elimination, the “capacity regeneration” is rejected as anomalous data. Mitigation enables the neural network to learn the historical “capacity regeneration” information, whereas elimination extracts the core trend of the SOH. Fu J et al. used variational mode decomposition (VMD) to mitigate the effects of “capacity regeneration” and then reconstructed the components based on the permutation entropy [19]. Yuan Z et al. eliminated “capacity regeneration” and noise signals by VMD and then used a convolutional neural network and Transformer to estimate the SOH [20]. Although the above research mitigated the effects of “capacity regeneration”, the problem of setting the VMD parameters has not been solved. Other studies have used different algorithms to process the original data; for example, Cheng G et al. used empirical mode decomposition (EMD) to decompose the data [21], but this method led to modal aliasing problems [22].

In addition to the above problems, lithium battery SOH estimation also faces the problem of model homogeneity. Many SOH estimation models have been proposed, but a model achieves good prediction performance on a new dataset only if the trends in the data are similar to those of its training data, which limits the applicability of the model. Therefore, some studies have proposed the use of transfer learning, in which a model trained on the old dataset is fine-tuned on the new dataset. This not only achieves better prediction performance on different datasets but also mitigates the effects of small-sample datasets. Huang K et al. proposed two strategies, reconstruction and fine-tuning, based on transfer learning to adapt to different datasets [23]. Liu Y et al. transferred the feature information from the source domain to the target domain via a fine-tuning strategy based on transfer learning [24]. Fu S et al. improved the reliability of transfer learning by finding the best matching route between the source and target domains based on an analysis of feature scatter [25].

To address these challenges, we propose a novel transfer learning-based framework for SOH prediction. The framework first extracts new physical features to enhance SOH representation in the feature space and introduces a multidimensional correlation analysis method to systematically evaluate the relationship between multivariate features and the battery SOH. We further develop an improved particle swarm optimization (IPSO) algorithm to optimize the VMD parameters and construct a hybrid model capable of effectively fusing multivariate features while learning their nonlinear mapping to the SOH. By strategically integrating transfer learning with time-series feature analysis, the proposed framework significantly enhances the learning performance across diverse datasets, overcoming the limitations of conventional approaches.

The main contributions of this paper are as follows.

- (1)

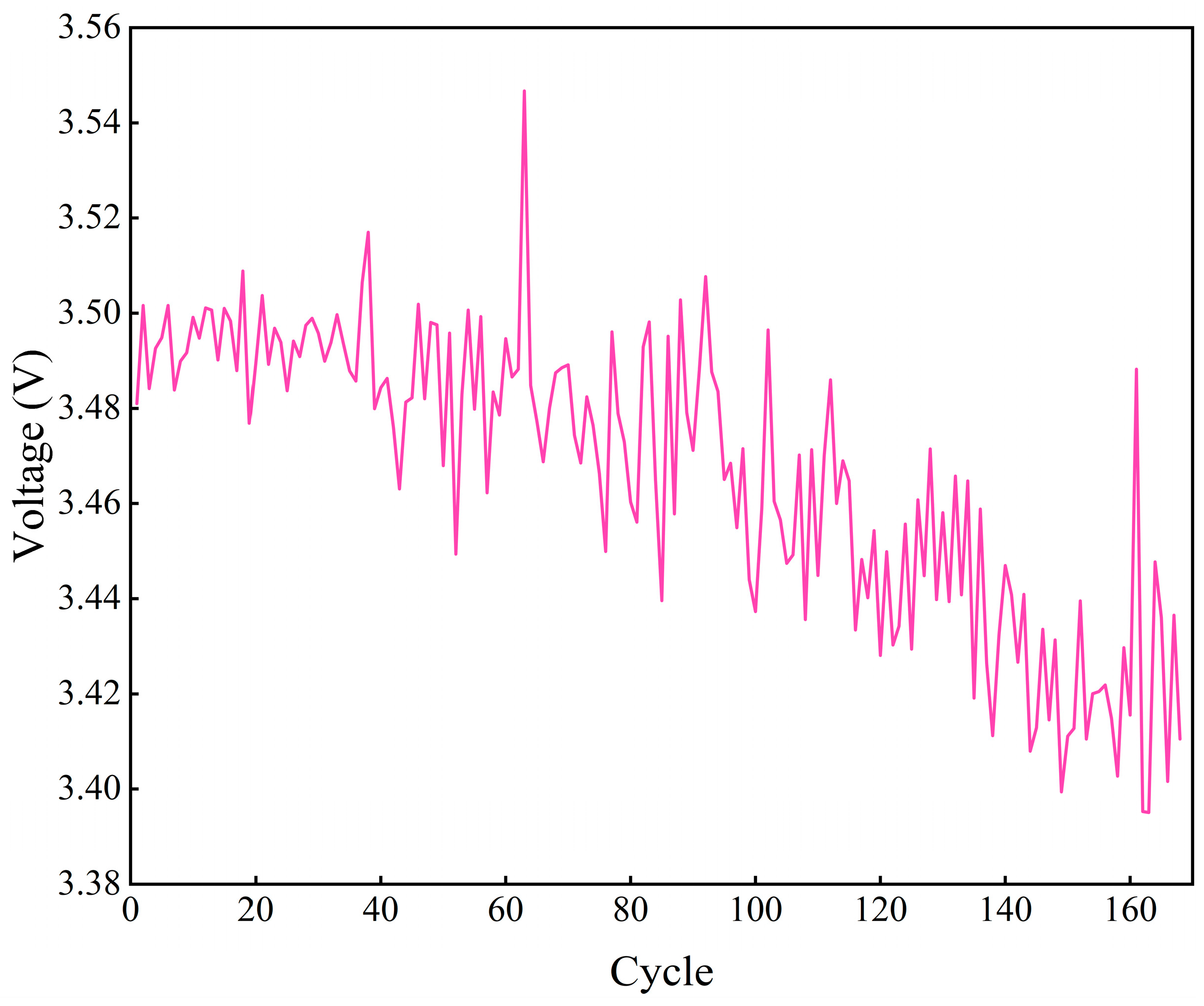

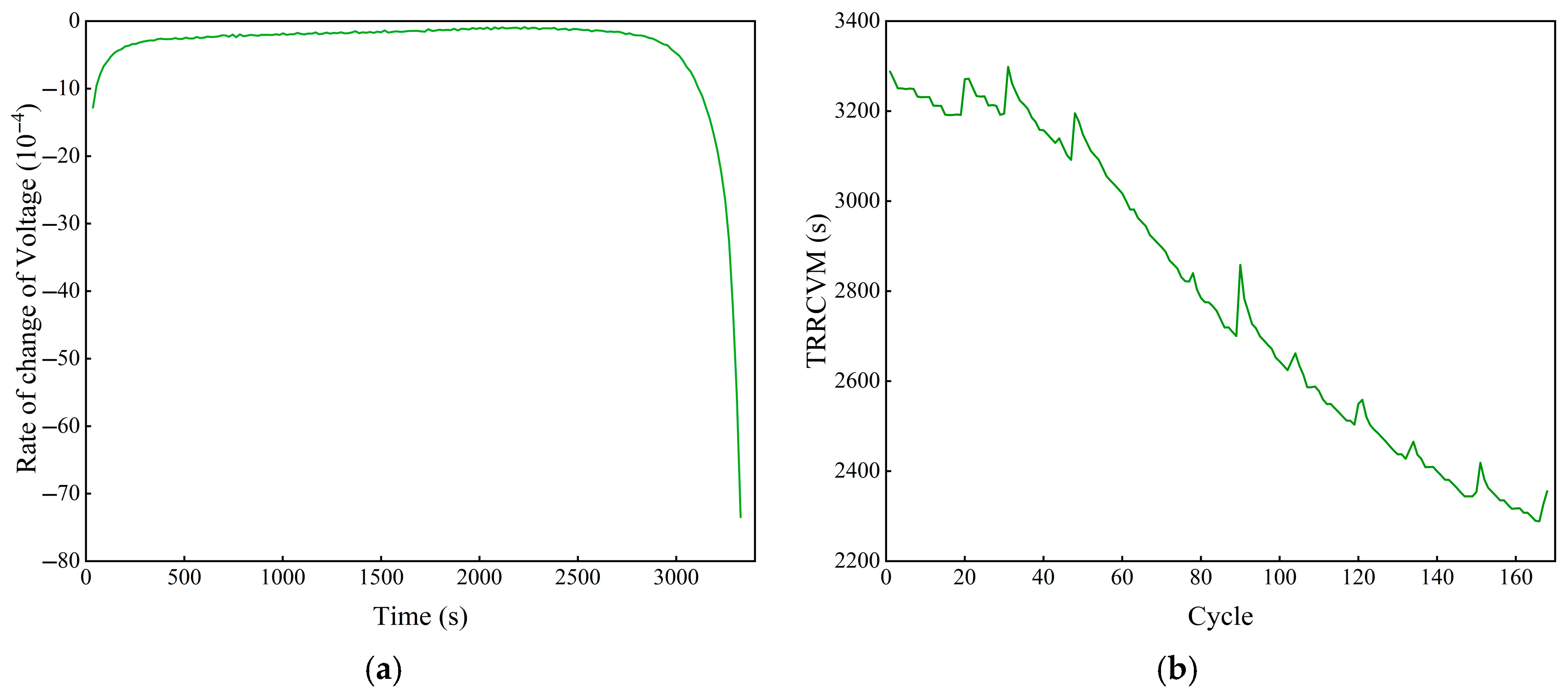

A new strong correlation feature is proposed to extract the time at which the voltage change rate reaches the lowest value based on the relationship between the voltage change rate and the time of the discharge process of lithium batteries. On this basis, a correlation analysis method, T-Pearson, is proposed to evaluate the correlation between the multivariate features and SOH from the two dimensions of the trend and linearity to ensure the comprehensiveness of the correlation analysis and the accuracy of feature selection.

- (2)

A new approach to data processing is employed to mitigate the “capacity regeneration” phenomenon and reduce the fluctuation magnitude at the “capacity regeneration” points. This approach enhances the model’s learning capabilities while optimizing the search process through variable weight coefficients. Specifically, the variable weight coefficients improve the search efficiency during the initial optimization phase, which prevents the traditional PSO algorithm from prematurely converging to local optima.

- (3)

A new model based on linear regression (LR) and a convolutional neural network (CNN) is proposed to accurately extract the trend features of a single type of battery through LR to achieve feature learning, and we then combine it with the strongly correlated features to achieve the learning of cross-type features through the CNN.

- (4)

To enhance the reliability of transfer learning and improve the model prediction accuracy, we adopt a time-domain analysis-based transfer learning fine-tuning strategy. This approach selects source and target domains with similar temporal characteristics by analyzing the time-domain features of the dataset, thereby achieving high-matching-value transfer learning.

The rest of the paper is organized as follows: Section 2 describes the lithium battery dataset and the extraction of physical features; Section 3 details the proposed transfer learning-based SOH prediction framework for lithium batteries; Section 4 presents the experimental validation and analyses; and Section 5 provides the conclusions.

3. Lithium Battery SOH Prediction Based on Transfer Learning

3.1. VIW-PSO-VMD Algorithm

To learn the “capacity regeneration” phenomenon, a signal decomposition framework is proposed. The framework uses EMD as a reference, applies PSO to optimize the VMD parameters, and then applies the optimized VMD to the original SOH to extract the high-frequency components and reduce the data volatility. In addition to extracting the high-frequency components, the framework is also able to extract the time-series characteristics of the lithium battery SOH, which assists the model in making accurate predictions. The framework is mainly composed of three parts: improved PSO based on variable inertia weights (VIW), EMD, and VMD.

3.1.1. VIW-PSO

VIW-PSO is an improved algorithm based on traditional PSO, combined with a greedy algorithm [35]. The algorithm evaluates the fitness of particles through a multivariate evaluation function, while introducing time as an evaluation criterion. The evaluation function is shown in the following equation:

where

is the multivariate evaluation function,

is the

n-th evaluation function, and

p is the value produced by PSO.

In the research process, the permutation entropy, the mean absolute error (MAE) between the original data and the reconstructed data, and the run time are selected as the evaluation functions, which together form

. Then, the weights of different particles are calculated based on the multidimensional evaluation value, as shown in the following equation:

where

is the weight of the

i-th particle.

In the traditional PSO, the inertia weights are set as a decreasing function value from 0.9 to 0.4, which strengthens the search capabilities of the target domain by applying higher inertia weights in the early stage, and stable convergence is achieved by decreasing the inertia weights in the later stage. In order to accelerate the search process in the early stage, VIW is proposed on the basis of PSO, and the calculation formula is as shown below:

where

is the variable inertia weight, and

t is the number of iterations.

Based on the above formula, the range of values of the variable inertia weights is defined as [0, 1], which not only ensures its significance, i.e., the influence of the current moment on the next moment, but also ensures that the values can present a rapid decline at the beginning of the search, a slow decline in the middle of the search, and stable convergence at the end of the search.

The flow of VIW-PSO is as shown below.

Step 1: Initialize the number, position, and velocity of particles.

Step 2: Calculate the multivariate evaluation index of particles.

Step 3: Determine whether the loop termination condition is reached. If yes, the algorithm is ended; otherwise, the algorithm goes to Step 4.

Step 4: Update the velocity and position of the particles. Specifically, the speed update function is

where

is the velocity of the

pi-th particle of the

i-th iteration;

is the single-particle bias coefficient, so that the global optimum is chosen in the range of the single-history optimum. Therefore, the single-particle bias coefficient ensures that the search is directed toward the single-history optimum, and

and

are the

i-th random numbers.

The position update formula is

where

is the position of the

i-th iteration.

Step 5: Calculate the multivariate evaluation index of the updated particle.

Step 6: Update the historical optimal particle, update the global optimal particle, and go to Step 2.
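The loop in Steps 1 to 6 can be sketched as follows. This is a minimal sketch of conventional PSO with the linearly decreasing inertia weight (0.9 to 0.4) mentioned earlier in this section, not the VIW-PSO variant: the VIW formula, the multivariate evaluation function, and the single-particle bias coefficient are not reproduced. The function name `pso_minimize`, the acceleration coefficients `c1` and `c2`, and the default settings are assumptions made for illustration.

```python
import numpy as np

def pso_minimize(objective, bounds, n_particles=20, n_iter=50,
                 w_start=0.9, w_end=0.4, c1=1.5, c2=1.5, seed=0):
    """Conventional PSO (illustrative). Returns (best_position, best_value)."""
    rng = np.random.default_rng(seed)
    lo, hi = np.asarray(bounds, dtype=float).T
    dim = lo.size
    # Step 1: initialise particle positions and velocities.
    pos = rng.uniform(lo, hi, size=(n_particles, dim))
    vel = np.zeros((n_particles, dim))
    # Step 2: evaluate every particle.
    fit = np.array([objective(p) for p in pos])
    pbest, pbest_fit = pos.copy(), fit.copy()
    g = int(np.argmin(pbest_fit))
    gbest, gbest_fit = pbest[g].copy(), float(pbest_fit[g])
    # Step 3: the termination condition here is a fixed iteration budget.
    for t in range(n_iter):
        # Linearly decreasing inertia weight (baseline schedule, not VIW).
        w = w_start - (w_start - w_end) * t / max(n_iter - 1, 1)
        r1 = rng.random((n_particles, dim))
        r2 = rng.random((n_particles, dim))
        # Step 4: update velocities and positions, keeping positions in bounds.
        vel = w * vel + c1 * r1 * (pbest - pos) + c2 * r2 * (gbest - pos)
        pos = np.clip(pos + vel, lo, hi)
        # Step 5: re-evaluate the updated particles.
        fit = np.array([objective(p) for p in pos])
        # Step 6: update the personal and global best records.
        improved = fit < pbest_fit
        pbest[improved] = pos[improved]
        pbest_fit[improved] = fit[improved]
        g = int(np.argmin(pbest_fit))
        if pbest_fit[g] < gbest_fit:
            gbest, gbest_fit = pbest[g].copy(), float(pbest_fit[g])
    return gbest, gbest_fit
```

For VMD tuning, `objective` would map a candidate parameter vector to a fitness score; any such wrapper is hypothetical here and would have to be supplied by the reader.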

3.1.2. EMD Process

EMD is a classical signal decomposition algorithm that first defined the intrinsic modal component, also known as the intrinsic mode function (IMF), and uses it as the benchmark for component decomposition. The basic process of the algorithm is as follows: (1) locate the local maxima and minima of the data; (2) construct the upper and lower envelopes, as well as the mean envelope; (3) subtract the mean envelope and check whether the remaining data satisfy the definition of the intrinsic modal component; if they do, the component is removed from the original data and (1) to (3) are repeated for the residual; if they do not, (1) to (3) are repeated on the remaining data.

EMD allows the noise-laden fluctuation components to be decomposed into a set of smoother, stable components. These components can describe the trends of the original data in the time dimension more clearly; therefore, the components are used as the temporal characteristics of the lithium battery SOH, while the number of components decomposed by EMD is used as the reference value for the K value in the optimization of the VMD parameters of VIW-PSO.
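The sifting procedure described above can be sketched as follows. This is a simplified illustration under stated assumptions: the envelopes are built by linear interpolation with the end points used as knots (EMD implementations typically use cubic splines and more careful boundary handling), and a fixed number of sifting passes replaces the formal check of the intrinsic modal component definition. The names `local_extrema`, `sift_once`, and `emd_sketch` are hypothetical.

```python
import numpy as np

def local_extrema(x):
    """Indices of strict interior local maxima and minima."""
    d = np.diff(x)
    maxima = np.where((d[:-1] > 0) & (d[1:] < 0))[0] + 1
    minima = np.where((d[:-1] < 0) & (d[1:] > 0))[0] + 1
    return maxima, minima

def sift_once(x):
    """One sifting pass: subtract the mean of the upper and lower envelopes."""
    maxima, minima = local_extrema(x)
    if maxima.size < 2 or minima.size < 2:
        return None  # too few extrema: treat x as the residual trend
    t = np.arange(x.size)
    last = x.size - 1
    upper = np.interp(t, np.r_[0, maxima, last], np.r_[x[0], x[maxima], x[-1]])
    lower = np.interp(t, np.r_[0, minima, last], np.r_[x[0], x[minima], x[-1]])
    return x - (upper + lower) / 2.0

def emd_sketch(x, max_imfs=5, n_sift=10):
    """Return (components, residual) with sum(components) + residual == x."""
    residual = np.asarray(x, dtype=float).copy()
    imfs = []
    for _ in range(max_imfs):
        candidate = sift_once(residual)
        if candidate is None:
            break
        for _ in range(n_sift - 1):
            nxt = sift_once(candidate)
            if nxt is None:
                break
            candidate = nxt
        imfs.append(candidate)
        residual = residual - candidate
    return imfs, residual
```

In this sketch, `len(imfs)` plays the role of the component count that the text uses as the reference value for K.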

3.1.3. VMD Process

VMD is a decomposition algorithm based on the variational model and achieves the decomposition of the data by continuously solving the variational model. Unlike the EMD family of decomposition algorithms, VMD avoids the endpoint effect problem, and the number of components can be customized. Since VMD decomposes the original data through the frequency domain, it extracts high-frequency components more readily than the EMD algorithm, and its capacity for data smoothing is also better. During the study, it is found that, after VMD decomposition, the volatility of the components is significantly lower than that after EMD decomposition, and the volatility and standard deviations (SDs) of the components are compared in Table 1, taking B0005 as an example.

While the number of customized components in VMD improves the range of its application, it also limits its decomposition performance. It is prone to under-decomposition when the value of K is low, so that high-frequency noise still exists in the components, and over-decomposition when the value of K is high. Therefore, the EMD decomposition results are used as a benchmark, and the parameters of VMD are optimized by combining it with VIW-PSO.

3.2. T-Pearson Correlation Analysis Algorithm

This paper proposes the T-Pearson correlation analysis algorithm, combining trend correlation coefficients and Pearson correlation coefficients as a means of avoiding the limitations associated with assessing only the linear correlations between the features and lithium battery SOH.

The trend correlation analysis algorithm is a method proposed by the authors of this paper to measure the correlation of the data in a time series, which is mainly reflected in measuring the trends of the data. Different from the commonly used correlation analysis algorithms, trend correlation analysis evaluates the similarity of different sequences of data in a time series by comparing whether there is consistency in the changes between the current moment and the next moment. The specific calculation steps are shown below.

Step 1: Calculate the change in sequence

and sequence

at the current moment and the next moment:

where

is the original length of the data,

is the length of the sequence of changes, and

;

is the change in the data at moment

of sequence

.

Step 2: Return a value of 1 if the changes are the same or 0 otherwise:

where

is the trend correlation index at the

t-th time.

Step 3: Calculate the overall trend correlation:

where

is the trend correlation index of sequence

A and sequence

B.

The similarity of two sets of data in terms of change trends can be measured by comparing the change values of the two sets of data at the current moment with those at the previous moment. However, there is a limitation in selecting features only via the similarity in the change trend, i.e., the similarity of the data in the linear change cannot be measured. Therefore, by combining Pearson correlation analysis and trend correlation analysis, the T-Pearson correlation analysis algorithm is proposed, as shown in the following equation:

where

is the Pearson correlation index between

A and

B.

The combined correlation is expressed as the mean of the trend correlation and the Pearson correlation coefficient between each feature and the SOH.
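A minimal sketch of the three steps and of the combined index is given below. It rests on two assumptions about the description above: “the changes are the same” is read as the two sequences moving in the same direction (equal sign of the one-step difference), and the combined index is taken as the plain mean of the trend correlation and the Pearson coefficient. The function names are hypothetical.

```python
import numpy as np

def trend_correlation(a, b):
    """Fraction of steps at which sequences a and b change in the same direction."""
    da = np.sign(np.diff(a))   # Step 1: one-step changes of sequence A
    db = np.sign(np.diff(b))   # Step 1: one-step changes of sequence B
    same = (da == db)          # Step 2: 1 if the changes agree, 0 otherwise
    return float(np.mean(same))  # Step 3: average over the N - 1 changes

def pearson(a, b):
    """Pearson correlation coefficient between a and b."""
    return float(np.corrcoef(a, b)[0, 1])

def t_pearson(a, b):
    """Assumed combination: mean of trend correlation and Pearson coefficient."""
    return 0.5 * (trend_correlation(a, b) + pearson(a, b))
```

Under these assumptions, a feature with a strongly negative Pearson coefficient but a non-negative trend correlation receives a combined value of small magnitude, which matches the behaviour reported for the maximum discharge temperature.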

3.3. Linear Regression Convolutional Neural Network Model

Existing lithium battery SOH prediction models need to be retrained to learn new parameters when new data arrive, and the prediction performance degrades considerably when a trained model is applied to other datasets. To learn both dataset-specific features and features common to different datasets, so that a model that predicts accurately on its training dataset can also predict accurately on new datasets with the same structure and weights, the linear regression convolutional neural network (LRCNN) model is proposed. The model consists of four parts.

- (1)

Trend feature learning module. This module is based on LR and learns the mapping between historical data and the current data in a specific dataset, thereby extracting the development trend of that dataset as a feature. Lithium battery SOH data differ from other time-series data in that they have weaker volatility and a more pronounced downward trend within a cycle, with particularly evident linear characteristics. After decomposing the raw data using VMD, the core-trend data can be obtained, enabling LR to learn the core trend in the data.

- (2)

Feature concatenation module. This module concatenates trend features and strongly correlated physical features selected by T-Pearson through a concatenation layer.

- (3)

Common feature learning module. The correlation between the lithium-ion battery SOH data and physical features from different data sources exhibits a certain degree of similarity. Therefore, a convolutional neural network is used to learn the mapping between strongly correlated physical features and SOH data. To accommodate the uniqueness of data from different sources, the trend features of the data are concatenated and fused with the strongly correlated physical features to achieve the learning of common features.

- (4)

Prediction module. Finally, the lithium battery SOH data are predicted through a fully connected layer.

3.3.1. Trend Feature Extraction Block

LR is a classic machine learning algorithm, and its ordinary form can be expressed as shown in Equation (13):

$$y = w_1 x_1 + w_2 x_2 + \cdots + w_n x_n + b \tag{13}$$

where $x_1, x_2, \ldots, x_n$ are the input features; $w_1, w_2, \ldots, w_n$ are the linear weights corresponding to different inputs, respectively; and $b$ is the intercept.

Through Equation (13), it can be seen that the LR model is able to achieve better prediction performance in the face of a small-sample dataset, and the trained model has strong interpretability, being able to represent the effects of different features on the data to be predicted. However, although LR has more stable and better prediction performance than neural networks under small-sample datasets, it requires a linear relationship between the input data and output data, and it is difficult for LR to achieve better prediction performance for nonlinear prediction tasks. Therefore, the linear relationship among lithium battery SOH data is analyzed.

Firstly, the historical data are reconstructed in terms of the time window by reconstructing the original data

into

. The size of the time window is

, and the structure of the reconstructed data is as shown in the following equation:

where

is the original dataset,

is the length of the original dataset, and

is the window size.

Then, the Pearson correlation coefficient between the corresponding value

in each window and the current SOH is calculated, as shown in Equations (15) to (17). Taking battery B0005 as an example, the window size is set to 3, i.e., the original data are reconstructed with a time window of size 3. Since EMD decomposition is performed prior to this, the original data at this stage are component data, and the Pearson correlation coefficients between the values at each position of the time window and the data to be predicted are shown in Table 2.

Table 2 shows that there is a strong correlation between the historical data and the data to be predicted, so LR can be used as a training model for trend features to learn the linear mapping relationships between the historical data and the data to be predicted, and the trend features can be extracted for the specific data.
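The time-window reconstruction, the per-position Pearson check, and the LR fit can be sketched as follows. This is a sketch under assumptions: each row holds `window` consecutive historical values and the target is the value immediately after the window, and the weights are obtained by ordinary least squares. The function names and the exact window layout are hypothetical and may differ from the reconstruction used in the paper.

```python
import numpy as np

def make_windows(series, window):
    """Rows are [s[k], ..., s[k+window-1]]; the target is s[k+window]."""
    s = np.asarray(series, dtype=float)
    X = np.lib.stride_tricks.sliding_window_view(s, window)[:-1]
    y = s[window:]
    return X, y

def lag_correlations(X, y):
    """Pearson coefficient between each window position and the target."""
    return np.array([np.corrcoef(X[:, j], y)[0, 1] for j in range(X.shape[1])])

def fit_lr(X, y):
    """Ordinary least squares for y = X @ w + b; returns (w, b)."""
    A = np.c_[X, np.ones(len(X))]
    coef = np.linalg.lstsq(A, y, rcond=None)[0]
    return coef[:-1], float(coef[-1])
```

With `window = 3`, `lag_correlations` returns three coefficients, one per window position, which is the kind of quantity reported in Table 2; the fitted output `X @ w + b` then serves as the trend feature.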

A neural network is an effective tool for SOH prediction, and it can learn the nonlinear relationships between the SOH data and physical features. However, due to the small size of the dataset, the neural network cannot learn the features precisely; as a result, the model’s performance is very unstable. In contrast, LR can achieve accurate prediction with a small dataset. In order to confirm that LR performs better than a neural network when the dataset is small, LR and several neural networks are compared via the MAE, root mean square error (RMSE), and coefficient of determination (R²), and the results are shown in Table 3. The neural networks include BP, long short-term memory (LSTM), and CNN-LSTM.

As can be seen from Table 3, the neural networks perform worse than LR in trend feature prediction, especially regarding the R², where the scores of LSTM and CNN-LSTM are less than 0. The core reason lies in the characteristics of the trend curve: the trend curve provided by VMD is smooth and short, which means that the neural network cannot learn the correct features.

3.3.2. Feature Fusion Model

A model that only learns the trend features is unable to achieve accurate prediction on different datasets, so it also needs to learn the common features. According to the electrochemical mechanism of battery charging and discharging, as well as the discussion in

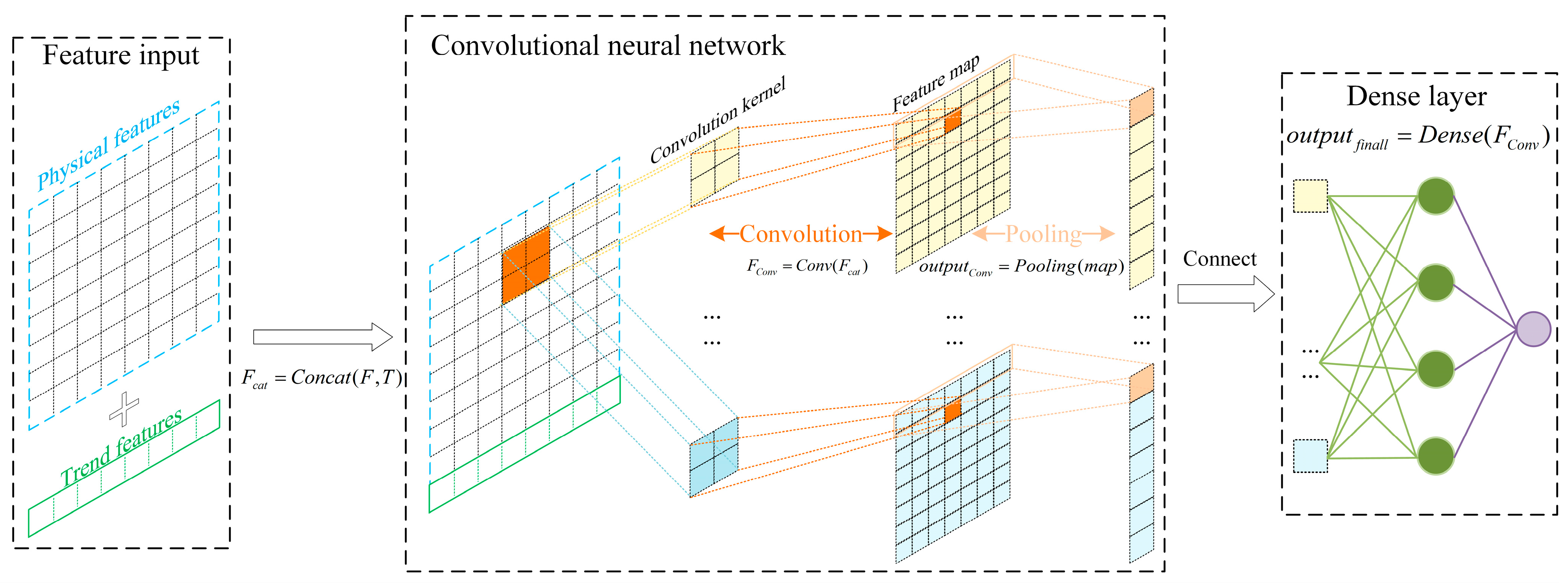

Section 2.2, it can be seen that the SOH of lithium batteries is related to their physical features. Therefore, common features of different categories of batteries can be extracted based on the physical features of lithium batteries. Meanwhile, in order to strengthen the learning ability of the model regarding the features of different datasets, the trend features are combined with the strongly correlated physical features and then fused and extracted by a CNN, as shown in the following equation:

where

is the spliced features,

is the strongly correlated physical features, and

is the trend features obtained based on LR.

A convolution operation is then performed on the spliced features using the convolution layer, as shown in Equation (19):

where

is the value of the output sequence at position

i and channel

d, i.e., the convolutional output value of the

i-th spliced feature at the

d-th convolutional kernel;

is the weight of the

d-th convolutional kernel at the

m-th position, where the input channel is

; and

is the bias term of the output channel

d.
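The splicing and convolution steps can be illustrated with a small NumPy sketch. The layout is an assumption: the trend feature is appended to the physical features as an extra input channel, and the convolution is the unpadded, stride-1 form commonly used in deep learning libraries (technically a cross-correlation). The names `splice_features` and `conv1d` and the array shapes are hypothetical.

```python
import numpy as np

def splice_features(physical, trend):
    """Concatenate physical features (length, n_feat) with a trend feature (length,)."""
    return np.concatenate([physical, np.asarray(trend)[:, None]], axis=1)

def conv1d(x, weight, bias):
    """Unpadded 1D convolution with stride 1.

    x:      (length, in_channels) spliced features
    weight: (out_channels, kernel_size, in_channels)
    bias:   (out_channels,)
    Returns an array of shape (length - kernel_size + 1, out_channels).
    """
    length = x.shape[0]
    out_channels, kernel_size, _ = weight.shape
    out = np.empty((length - kernel_size + 1, out_channels))
    for i in range(length - kernel_size + 1):
        patch = x[i:i + kernel_size]  # (kernel_size, in_channels)
        # Sum over kernel positions m and input channels for every kernel d.
        out[i] = np.tensordot(weight, patch, axes=([1, 2], [0, 1])) + bias
    return out
```

Each output entry `out[i, d]` is the weighted sum over kernel positions and input channels plus the bias of channel `d`, which is the structure the symbol definitions above describe.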

After the convolution operation, the trend features are fused with the strongly correlated physical features, and their common features are learned; these are then input into the subsequent fully connected layer for feedforward training to finally obtain the predicted values of the components. The flow of the LRCNN is shown in Figure 6.

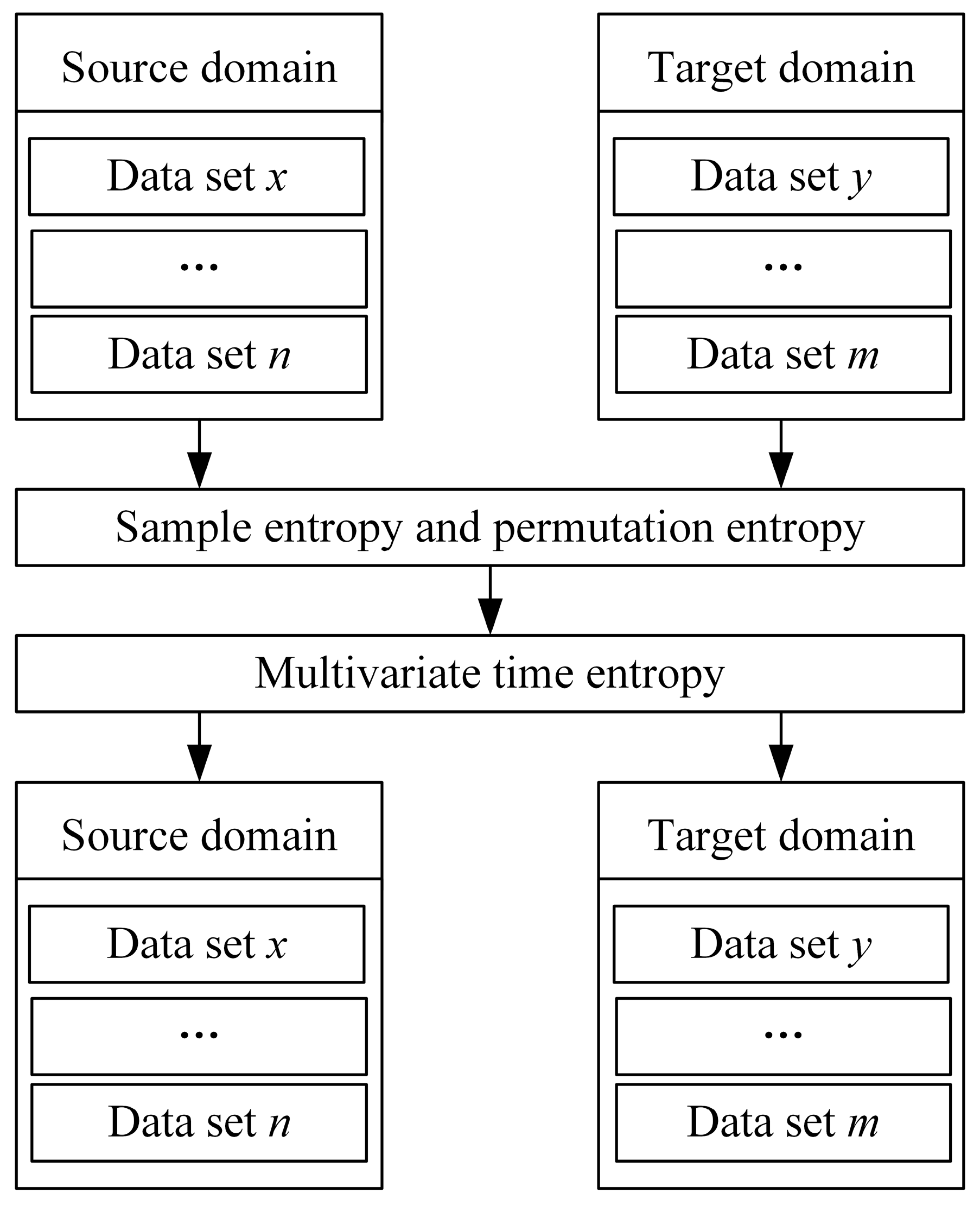

3.4. Transfer Learning Based on Time Feature Analysis

After learning trend features and common features via the LRCNN, the model has an initial capability for cross-dataset feature learning, and, after training on a single dataset, it can achieve relatively accurate prediction on other datasets. However, in order to further improve the prediction performance of the trained model on other datasets and increase the efficiency of the model, transfer learning based on temporal feature analysis is proposed. The sample entropy and permutation entropy of the source-domain and target-domain datasets are calculated, these entropy values are used to measure their temporal features, and transfer learning is performed between source and target domains with similar temporal features. The steps are shown in Figure 7. Meanwhile, in order to improve the accuracy and training efficiency, the following operations are carried out in the process of transfer learning:

- (1)

Freeze all but the last layer and train only the output weights of the last layer;

- (2)

Use a smaller learning rate and number of epochs.
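The two operations above can be illustrated on a toy two-layer network in NumPy. This is a sketch under assumptions, not the LRCNN itself: a single `tanh` hidden layer stands in for all frozen layers, only the output weights and bias are updated by gradient descent on the mean squared error, and the learning rate and epoch count are arbitrary small values. All names are hypothetical.

```python
import numpy as np

def forward(X, W_hidden, w_out, b_out):
    """Toy network: frozen tanh feature layer followed by a linear output layer."""
    return np.tanh(X @ W_hidden) @ w_out + b_out

def fine_tune_last_layer(X, y, W_hidden, w_out, b_out, lr=0.01, epochs=20):
    """Update only the output layer on target-domain data (X, y)."""
    H = np.tanh(X @ W_hidden)   # frozen layers: computed once, never updated
    w, b = np.array(w_out, dtype=float), float(b_out)
    n = len(y)
    for _ in range(epochs):     # small number of epochs
        err = H @ w + b - y
        w -= lr * (2.0 / n) * (H.T @ err)   # gradient of MSE w.r.t. output weights
        b -= lr * (2.0 / n) * err.sum()     # gradient of MSE w.r.t. output bias
    return w, b
```

Because `W_hidden` is never modified, the features learned on the source domain are preserved, and only the final mapping is adapted to the target domain.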

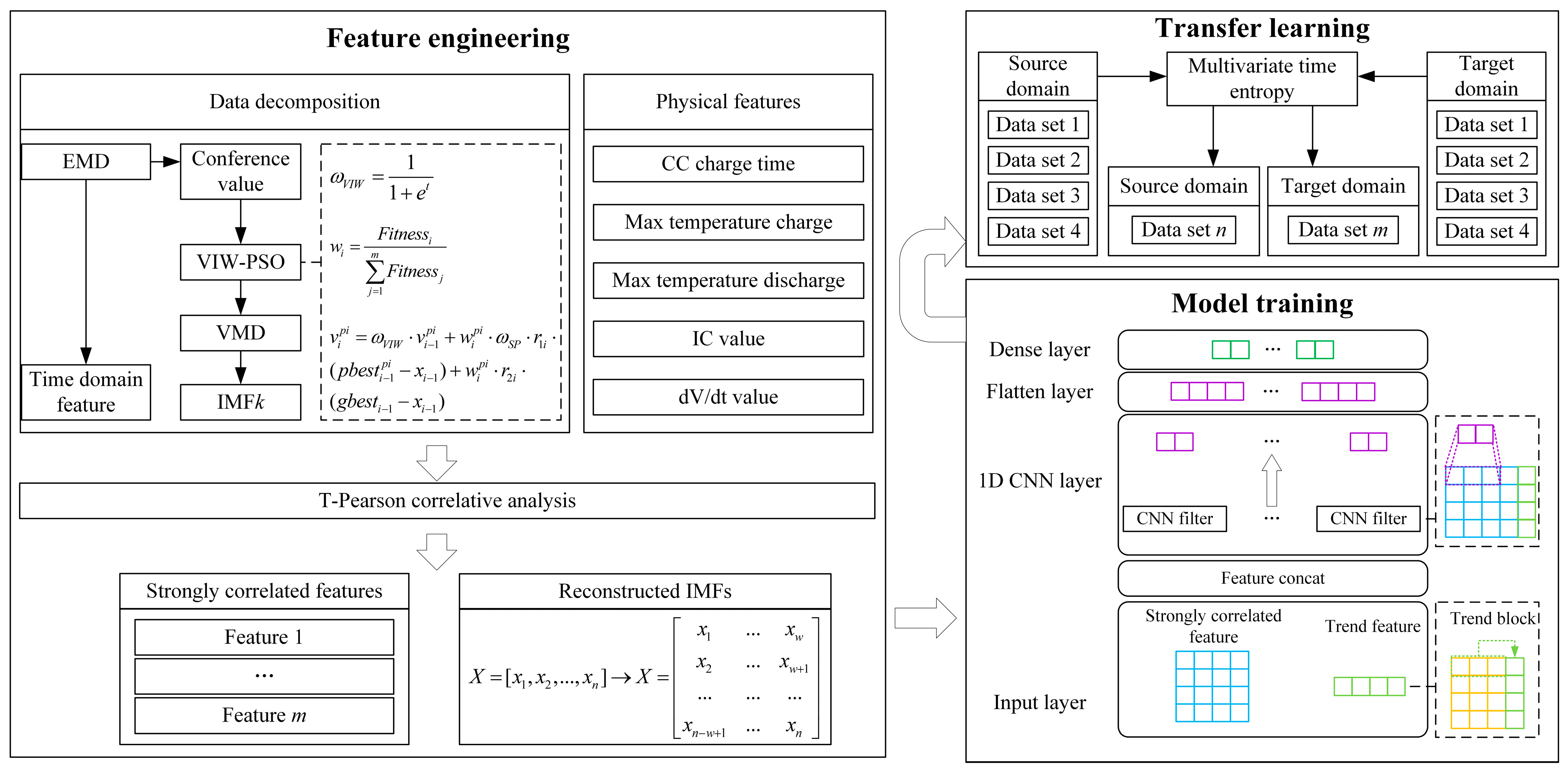

3.5. Proposed Prediction Framework

The proposed framework for lithium battery SOH prediction is shown in Figure 8.

4. Results and Analysis

4.1. Dataset

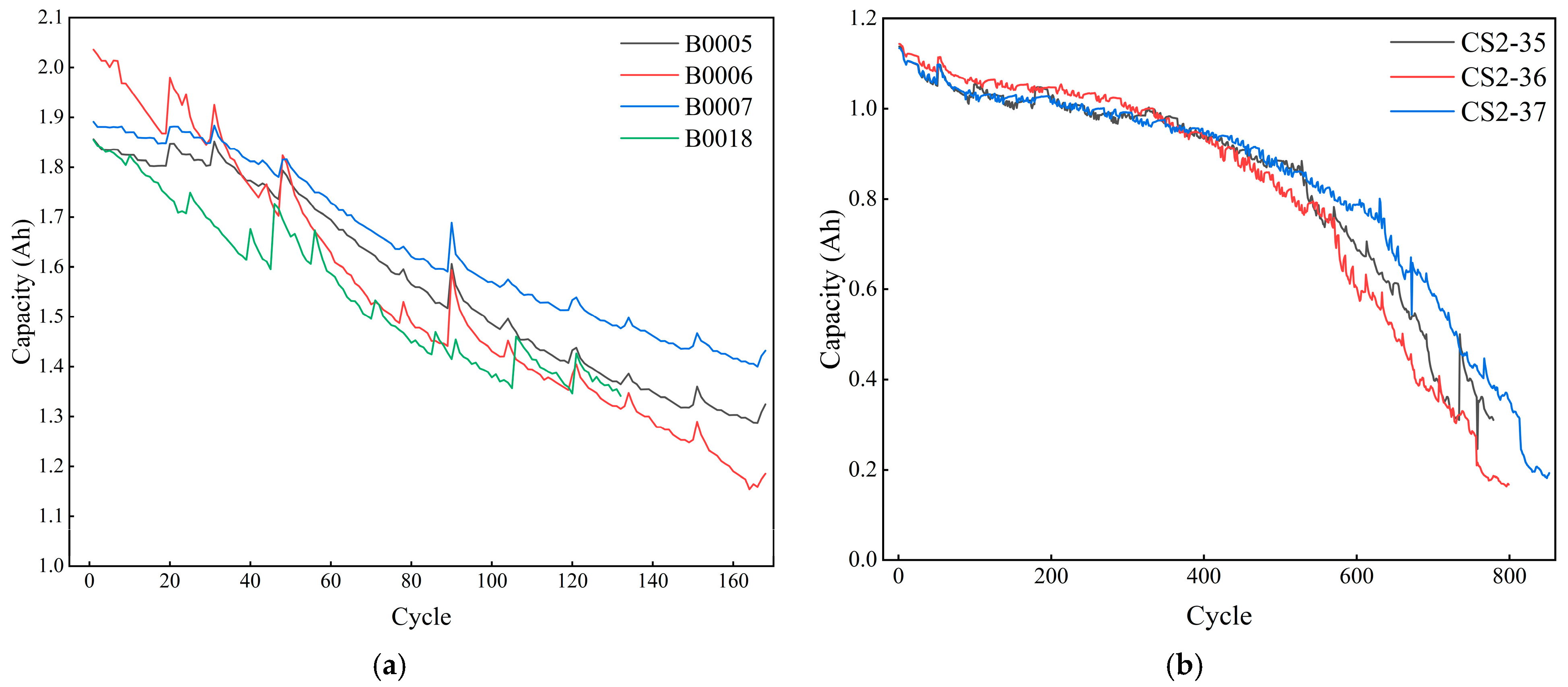

The datasets used in this paper include the lithium battery datasets from the NASA Ames Research Center and the Center for Advanced Life Cycle Engineering (CALCE) team at the University of Maryland. From these, the B0005, B0006, B0007, B0018, CS2-35, and CS2-37 batteries are selected, and their capacity variations are shown in Figure 9. The B0005, B0006, B0007, and B0018 batteries are of the same type, with a capacity of 2 Ah and a constant-current and constant-voltage (CC-CV) charging and discharging strategy. The CS2-35 and CS2-37 batteries are of the same type, with a capacity of 1100 mAh and a CC-CV charging and discharging strategy.

4.2. Case Study Conditions and Dataset Division

In order to validate the performance of the proposed lithium battery SOH estimation method, method validation and model comparison experiments are carried out on a desktop computer equipped with an Intel Core i5-10400 CPU and Intel UHD Graphics 630. The software platform is PyCharm Community Edition 2023.1.

The B0005, B0006, and B0007 batteries have 168 cycles; the B0018 battery has 132 cycles; and the CS2-35 and CS2-37 batteries have 779 and 852 cycles, respectively. In order to prove that the proposed method has better prediction performance compared with the existing methods, random scaling is used to separate the test set and prediction set, and the datasets are divided separately based on the division methods demonstrated in different existing studies.

4.3. Evaluation Accuracy Indicators

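In order to evaluate the prediction performance of the proposed method, the MAE, RMSE, and R² are chosen as the evaluation metrics, with the formulas shown in the following equations:

$$\mathrm{MAE} = \frac{1}{n}\sum_{i=1}^{n}\left|\hat{y}_i - y_i\right|$$

$$\mathrm{RMSE} = \sqrt{\frac{1}{n}\sum_{i=1}^{n}\left(\hat{y}_i - y_i\right)^2}$$

$$R^2 = 1 - \frac{\sum_{i=1}^{n}\left(y_i - \hat{y}_i\right)^2}{\sum_{i=1}^{n}\left(y_i - \bar{y}\right)^2}$$

where $n$ is the number of test data, $\hat{y}_i$ is the $i$-th prediction value, $y_i$ is the $i$-th true value, and $\bar{y}$ is the average value of the true values.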
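The three metrics can be computed with a few lines of NumPy. The sketch below uses the standard definitions; the function names are chosen here for illustration.

```python
import numpy as np

def mae(y_true, y_pred):
    """Mean absolute error."""
    y_true, y_pred = np.asarray(y_true, float), np.asarray(y_pred, float)
    return float(np.mean(np.abs(y_pred - y_true)))

def rmse(y_true, y_pred):
    """Root mean square error."""
    y_true, y_pred = np.asarray(y_true, float), np.asarray(y_pred, float)
    return float(np.sqrt(np.mean((y_pred - y_true) ** 2)))

def r2(y_true, y_pred):
    """Coefficient of determination; negative when worse than predicting the mean."""
    y_true, y_pred = np.asarray(y_true, float), np.asarray(y_pred, float)
    ss_res = np.sum((y_true - y_pred) ** 2)
    ss_tot = np.sum((y_true - np.mean(y_true)) ** 2)
    return float(1.0 - ss_res / ss_tot)
```

An R² below 0, as reported for LSTM and CNN-LSTM on the trend features, means that the residual sum of squares exceeds the total sum of squares, i.e., the model is less accurate than a constant prediction equal to the mean of the true values.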

4.4. Comparison and Analysis of Different Decomposition Algorithms

In order to verify that the proposed VIW-PSO-VMD has better decomposition performance, ablation experiments are conducted to compare it with PSO-VMD. The fitness function is the multivariate evaluation function combining the permutation entropy, the MAE between the original and reconstructed data, and the run time. The stopping condition is a fixed budget: the search stops after 50 iterations. Table 4 shows the comparison results for VIW-PSO and PSO.

From Table 4, it can be seen that VIW enables PSO to search for parameters more efficiently, and its results have better fitness scores than those of ordinary PSO.
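The permutation entropy term used in the evaluation can be sketched as follows. The embedding order, the delay, and the normalization by the logarithm of `order!` are assumptions, since the settings used in the experiments are not stated here; the function name is hypothetical.

```python
import math
from collections import Counter

import numpy as np

def permutation_entropy(x, order=3, delay=1, normalize=True):
    """Shannon entropy of the ordinal patterns found in x."""
    x = np.asarray(x, dtype=float)
    n = x.size - (order - 1) * delay
    if n <= 0:
        raise ValueError("series too short for the chosen order and delay")
    # Count the rank pattern of every embedded vector of length `order`.
    patterns = Counter(
        tuple(np.argsort(x[i:i + order * delay:delay], kind="stable"))
        for i in range(n)
    )
    probs = np.array(list(patterns.values()), dtype=float) / n
    h = float(-np.sum(probs * np.log(probs)))
    return h / math.log(math.factorial(order)) if normalize else h
```

A smooth, monotone component yields a value near 0, while a noise-like component yields a value near 1 after normalization, so lower values indicate a less volatile decomposition result.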

Next, the prediction performance of the models under different decomposition algorithms is compared. The main decomposition algorithms used are EMD, complete ensemble empirical mode decomposition (CEEMD), VMD-EMD, and VMD. The comparison results are shown in Table 5.

From Table 5, it can be seen that EMD and CEEMD are unable to achieve accurate decomposition: features belonging to one component leak into other components, which reduces the prediction accuracy. Meanwhile, the secondary (two-stage) VMD-EMD method achieves better prediction accuracy but at the cost of a longer run time. VMD with the K value set to the reference value from EMD still exhibits significant modal aliasing. After optimization, VMD obtains its best parameter values from VIW-PSO and achieves the best decomposition performance.

4.5. Correlation Analysis and Strongly Correlated Feature Extraction

The SOH of lithium batteries is closely related to their states during charging and discharging. In order to extract the covariates that are the most relevant to the SOH trends of lithium batteries, and to reduce the influence of weakly correlated covariates on the model performance, the correlations are measured using the T-Pearson correlation analysis method. Taking the B0005 battery as an example, its specific correlations are shown in Table 6.

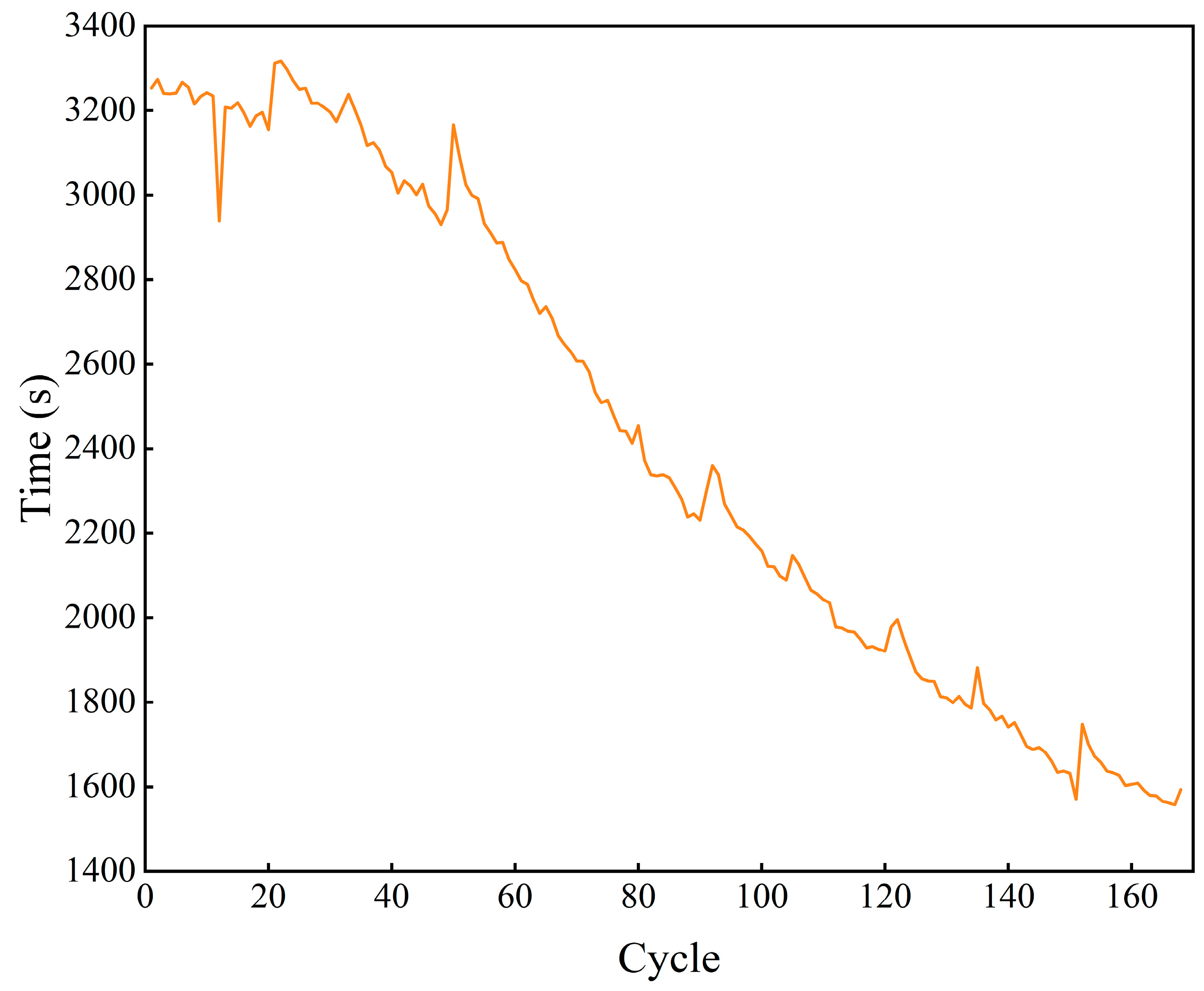

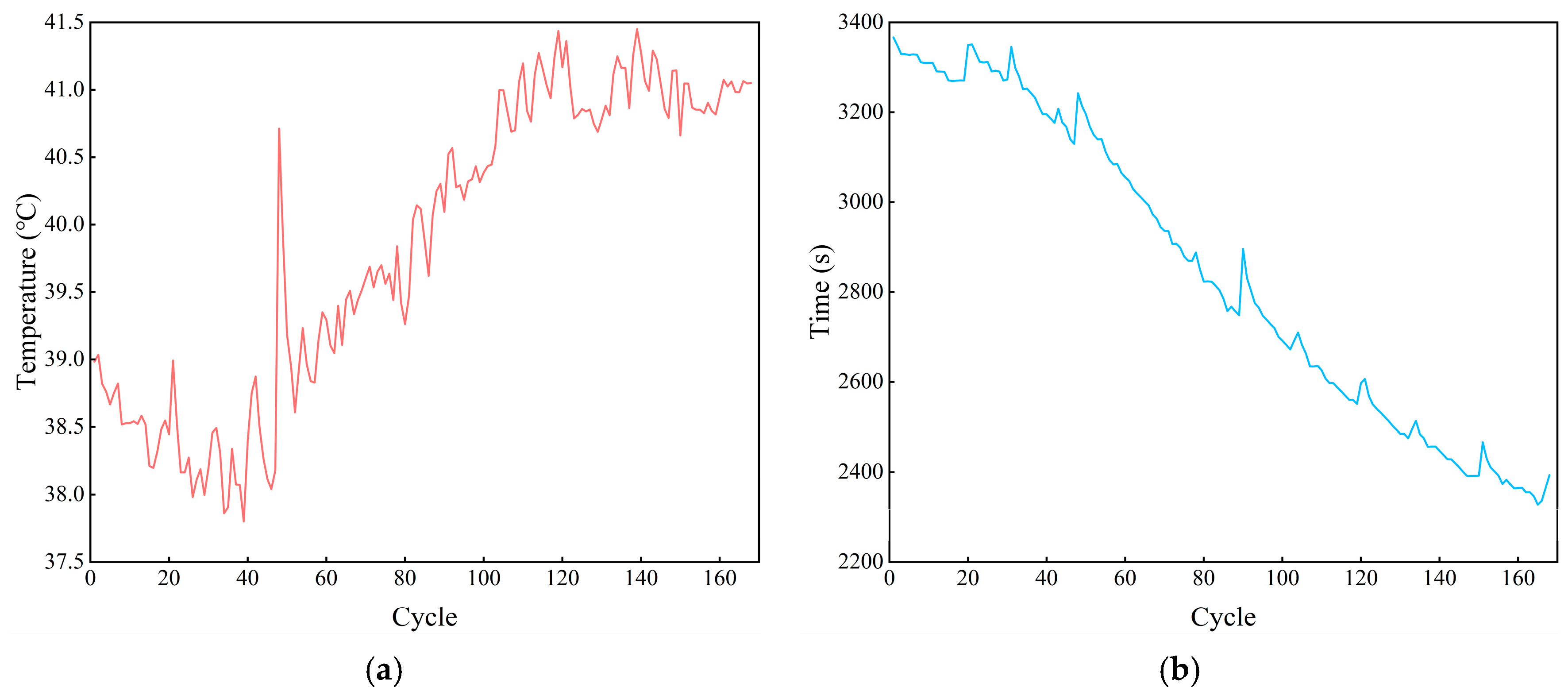

In terms of linear correlations, all of the selected features are highly correlated with the SOH of lithium batteries, with absolute values greater than 0.8. In terms of trend correlations, however, the constant-current discharge time, the time required for the discharge process to reach the maximum temperature, and the time required for the rate of change in the voltage to reach its minimum in the discharge process are the most strongly correlated with the SOH. Meanwhile, for the maximum temperature of the discharge process, the linear correlation with the SOH is negative, while the trend correlation is positive, which leads to a weak T-Pearson correlation.

Therefore, according to the results of the T-Pearson analysis of all features, the constant-current discharge time, the time required to reach the maximum temperature in the discharge process, and the time required for the rate of change in the voltage in the discharge process to reach the minimum value are taken as the strongly correlated physical features.

In order to demonstrate the advantage of T-Pearson in feature selection, the performance of Pearson-selected features and T-Pearson-selected features on the prediction model is compared. The results are shown in Table 7.

As can be seen from Table 7, T-Pearson is able to select more accurate and strongly correlated features to improve the predictive performance of the model.

The lithium battery SOH is a time series, and its temporal features are equally important for SOH prediction, so the time-series features of the SOH are extracted by EMD. The correlations between these time-series features and the SOH are then analyzed using T-Pearson correlation analysis; the results are shown in Table 8.

Table 8 shows that, although the linear correlation between IMF4 and SOH is high, the T-Pearson correlation does not reach the threshold due to its low trend correlation.

4.6. Predictive Performance of Proposed Model on Other Datasets

To achieve comparably high prediction performance on other datasets, the proposed SOH prediction framework improves the generalization of the model in two ways: (1) combining the trend features of a single dataset with the strongly correlated features and learning the common features through a CNN; (2) using transfer learning based on temporal feature analysis, in which cross-domain training is achieved by freezing network layers and fine-tuning the parameters. The results of the multivariate temporal entropy calculations for different types of batteries in the NASA dataset and CALCE dataset are shown in Table 9.

Table 9 shows that, in the NASA dataset, the multivariate temporal entropy of B0005 is the closest to that of B0018, and, in the CALCE dataset, that of CS2-35 is the closest to that of CS2-37. Therefore, with B0005 as the source-domain dataset, B0018 is used as the target domain because it is the closest in terms of time-domain features; cross-domain training is achieved by freezing the network layers and fine-tuning the parameters, which allows the model to reach higher prediction performance. Taking B0005 and B0018 as examples, the specific parameters of transfer learning are shown in Table 10. During the training phase on the source domain, the structure of the model is as shown in Table 10, and the total parameter count is 4183. The number of epochs is 200, and the training time is 22 s.
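The pairing rule used above, choosing the dataset whose multivariate temporal entropy is nearest to that of the source, can be sketched as follows. The function name `closest_by_entropy` is hypothetical, and the entropy values in the usage lines are synthetic placeholders, not the values in Table 9.

```python
def closest_by_entropy(source, entropies):
    """Return the dataset whose entropy is nearest to that of the source."""
    ref = entropies[source]
    candidates = {k: v for k, v in entropies.items() if k != source}
    if not candidates:
        raise ValueError("need at least one candidate besides the source")
    return min(candidates, key=lambda k: abs(candidates[k] - ref))

# Placeholder values for illustration only.
entropies = {"source_cell": 1.00, "cell_a": 1.30, "cell_b": 1.05}
print(closest_by_entropy("source_cell", entropies))
```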

By freezing the convolutional layer, the common features already learned are preserved, and the fully connected layer is fine-tuned on each target dataset with a lower learning rate and fewer epochs to achieve higher prediction accuracy. Taking B0005 and CS2-35 as the source domains, the model’s performance before and after transfer learning is shown in Table 11.

As can be seen from Table 11, after temporal feature selection, the model still predicts well even without transfer when the temporal characteristics of the target and source domains are similar, and its prediction performance improves further after transfer learning. Compared with the other datasets, B0006 shows the smallest performance gap before and after transfer learning, because it is similar to B0005 in terms of multivariate temporal entropy, so the model achieves good performance even without transfer learning. After training, the model can accurately map the input data of the B0006 dataset to the predicted data of the same dataset. Although transfer learning yields only a small improvement in prediction performance on B0006, it improves training efficiency, reaching this performance with a lower learning rate and fewer epochs.
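The freeze-and-fine-tune idea can be illustrated with a deliberately small, dependency-free sketch. It is not the paper's network: it assumes a single fixed 1-D convolution kernel as the frozen feature extractor and a linear head standing in for the fully connected layer. All names, the learning rate, the epoch count, and the data are hypothetical.

```python
def conv1d(x, kernel):
    """Valid 1-D cross-correlation of a sequence with a kernel."""
    k = len(kernel)
    return [sum(x[i + j] * kernel[j] for j in range(k))
            for i in range(len(x) - k + 1)]

def predict(x, kernel, head):
    """Frozen convolution followed by a linear head (weights, bias)."""
    w, b = head
    return sum(wi * fi for wi, fi in zip(w, conv1d(x, kernel))) + b

def mse(samples, kernel, head):
    """Mean squared error of the model over (input, target) pairs."""
    return sum((predict(x, kernel, head) - y) ** 2 for x, y in samples) / len(samples)

def fine_tune(samples, kernel, head, lr, epochs):
    """Gradient steps on the head only; the kernel is frozen and never updated."""
    w, b = list(head[0]), head[1]
    for _ in range(epochs):
        for x, y in samples:
            feats = conv1d(x, kernel)
            err = sum(wi * fi for wi, fi in zip(w, feats)) + b - y
            w = [wi - lr * err * fi for wi, fi in zip(w, feats)]
            b -= lr * err
    return w, b

# Synthetic target-domain samples; not taken from the NASA or CALCE data.
kernel = (0.5, 0.5)                    # stands in for source-trained, frozen weights
source_head = ([1 / 3, 1 / 3, 1 / 3], 0.0)
target = [([1.00, 0.98, 0.96, 0.94], 0.873),
          ([0.90, 0.88, 0.86, 0.84], 0.783),
          ([0.80, 0.78, 0.76, 0.74], 0.693)]
tuned_head = fine_tune(target, kernel, source_head, lr=0.05, epochs=20)
print(mse(target, kernel, source_head), mse(target, kernel, tuned_head))
```

Because only the head is updated, each fine-tuning pass adjusts far fewer parameters than full training, which is consistent with the training-efficiency argument above.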

4.7. Predictive Performance of Proposed Model on Small-Sample Dataset

The LRCNN learns the trend features and the common features via a trend feature learning model and a common feature learning model, respectively, of which the common feature learning model is the most important part. Because the structure of the common feature learning model affects the prediction performance, different structures are compared, and the results are shown in Table 12.

As can be seen from Table 12, the model with two CNN layers performs worst. The features obtained by two CNN layers cannot accurately map to the data to be predicted, because the excessive number of layers leads to overfitting. Thus, a single CNN layer is the best structure.

Small samples are one of the problems faced in lithium battery SOH prediction. The proposed SOH prediction framework estimates trends accurately on small-sample datasets through an internal trend block, which in turn improves the prediction ability of the whole framework on such datasets. Taking B0005 as an example, 20 cycles, 40 cycles, and 60 cycles are used as the training set, and the results are shown in Table 13.

As can be seen from Table 13, the proposed prediction framework still achieves high prediction accuracy on small-sample datasets. Meanwhile, because the SOH data of a single type of battery are strongly linear and the CNN is a nonlinear neural network, the CNN’s performance on linear data affects the overall prediction performance. This is why the table shows a slight decrease in model performance as the size of the training set increases. The LR model can accurately capture trends in the SOH data of a single type of battery, but the trained model is difficult to apply to other types of batteries. Therefore, CNNs are needed to learn the common features and thereby improve the generalization ability of the model.
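The role of the trend block on small samples can be sketched with an ordinary least-squares line fitted to the first few cycles. This assumes that LR denotes linear regression and that the cycle index is the only regressor; the function names and the synthetic SOH values are hypothetical and are not the B0005 data.

```python
def fit_line(ys):
    """Least-squares line through (0, ys[0]), (1, ys[1]), ...; returns (slope, intercept)."""
    n = len(ys)
    if n < 2:
        raise ValueError("need at least two points to fit a line")
    mx = (n - 1) / 2
    my = sum(ys) / n
    sxx = sum((i - mx) ** 2 for i in range(n))
    sxy = sum((i - mx) * (y - my) for i, y in enumerate(ys))
    slope = sxy / sxx
    return slope, my - slope * mx

def extrapolate(ys, horizon):
    """Continue the fitted trend for `horizon` cycles beyond the training window."""
    slope, intercept = fit_line(ys)
    n = len(ys)
    return [slope * (n + h) + intercept for h in range(horizon)]

# Synthetic, perfectly linear SOH values for a 20-cycle training window.
train = [1.0 - 0.002 * i for i in range(20)]
print(fit_line(train))
print(extrapolate(train, 3))
```

A line has only two parameters, so it can be estimated from a 20-cycle window, whereas a model fitted this way to one cell carries no information about other battery types, which is the limitation noted above.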

4.8. Comparison with Other Methods

To objectively evaluate the performance of the proposed method in lithium battery SOH prediction and highlight its advantages, it is compared with several mainstream methods; the comparison results are shown in Table 14 and Table 15. M1–M8 are specified as follows.

M1: The model uses LSTM to predict the SOH of Li-ion batteries and uses an improved sparrow search algorithm (SSA) to optimize the parameters of LSTM, improving the prediction accuracy.

M2: The model convolves the features obtained by the CNN to extract the features with strong representation capabilities; it then learns the temporal features via LSTM and finally predicts them via a deep feedforward network (DNN).

M3: The model uses a multi-population evolution whale optimization algorithm to optimize the parameters of variational mode decomposition (VMD), decomposes the SOH with the optimized VMD, and then predicts the components via a Transformer.

M4: The model uses a Gaussian regression model to predict the SOH.

M5: The model performs prediction using support vector regression (SVR) after decomposing the data via ensemble empirical mode decomposition (EEMD), while the parameters of SVR are optimized using the grey wolf optimizer (GWO).

M6: The model combines CNN-LSTM with an attention mechanism and uses Pearson correlation analysis and Spearman correlation analysis to extract strongly correlated features.

M7: The model decomposes the data via complete ensemble empirical mode decomposition with adaptive noise (CEEMDAN) and then predicts the components via CNN-LSTM.

M8: The model selects the features through grey relation analysis and predicts the SOH data via Gaussian process regression.

From Table 14 and Table 15, it can be seen that the proposed method achieves a clear improvement in prediction accuracy. VIW-PSO-VMD helps the model to extract the high-frequency components in the original data and transform them into smoother components. The strongly correlated features extracted with T-Pearson aid the model’s learning and reduce the impact of weakly correlated features on its prediction performance. The LRCNN extracts and fuses the trend features and common features, which improves the generalization ability of the model. Transfer learning based on time-domain feature analysis helps the model to achieve cross-domain learning and, at the same time, improves its training efficiency. Although some models outperform the proposed framework, transfer learning gives the proposed framework higher training efficiency.