1. Introduction

Wind energy has emerged as one of the most promising renewable sources due to its environmental benefits and growing potential for generating clean, sustainable electricity [1,2]. As the world shifts toward renewable energy to reduce reliance on fossil fuels and mitigate climate change, wind energy plays a pivotal role in this transition, aligning with the United Nations Sustainable Development Goals (SDGs), particularly Goal 7 (Affordable and Clean Energy) and Goal 13 (Climate Action) [3,4,5,6]. Efficient energy harvesting from wind turbines and seamless integration of wind-generated power into the electricity grid require accurate wind speed prediction [7]. Wind speed forecasting helps optimize power generation, improve grid stability, and reduce the operational uncertainty that often hinders the effective use of renewable energy [8,9]. Despite its importance, accurate wind speed forecasting remains challenging because wind is inherently stochastic and volatile.

The challenge lies in the fluctuating characteristics of wind, which are influenced by numerous environmental factors and conditions. Wind speed can vary significantly due to geographical features, atmospheric conditions, and weather patterns, resulting in substantial difficulty in creating predictive models that generalize well under different conditions [10,11,12]. Conventional statistical approaches have often fallen short in capturing the complex, non-linear relationships between these factors and wind speed, leading to inaccuracies in forecasting.

Table 1 highlights various advanced models for wind speed prediction that integrate neural networks, hybrid deep learning frameworks, and machine learning algorithms. Key methodologies include CNN-LSTM hybrids, GRU with attention, EWT-ARIMA-LSSVM-GPR-DE-GWO, and BiLSTM approaches combined with optimization techniques such as PSO and with decomposition techniques such as VMD and empirical decompositions. Datasets span global locations such as China, the USA, New Zealand, and Europe, reflecting diverse environmental conditions. Despite significant advances in prediction accuracy and feature extraction, a recurring limitation across these studies is high computational complexity and resource demand, particularly during data preprocessing, hyperparameter optimization, and multi-stage decomposition. This often restricts the real-time application and scalability of these models.

In the field of wind energy forecasting, the prediction of monthly average wind speeds holds substantial value for long-term planning, including resource allocation and grid stability. While daily and hourly forecasts are typically used for operational purposes, monthly predictions are crucial for broader energy policy development and for understanding the potential for wind energy generation over extended periods. Monthly averages allow energy planners to assess the overall feasibility of wind energy projects, evaluate seasonal wind variations, and optimize the placement of wind farms to maximize efficiency. Despite the non-linear nature of wind power generation, predicting monthly averages is integral to making informed decisions about wind energy investment and grid integration [29,30,31,32].

Studies have demonstrated the Continuous Ranked Probability Score (CRPS) to be a robust metric for evaluating probabilistic wind forecasts [33]. Its advantage over traditional point-error metrics is that it assesses the calibration and sharpness of a predictive distribution simultaneously [34], which is particularly valuable for operational wind farm management [35].
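The following is a minimal sketch of a sample-based CRPS estimator that makes the metric concrete. It assumes the probabilistic forecast is available as an equally weighted set of sampled values (for example, ensemble members). It uses the standard identity CRPS(F, y) = E|X - y| - 0.5·E|X - X'|, where X and X' are independent draws from the forecast distribution F and y is the observation. The function names are illustrative and are not taken from any specific library or from this study's code.

```python
from itertools import product


def crps_ensemble(samples: list[float], observed: float) -> float:
    """Estimate CRPS for one observation from an equally weighted forecast sample.

    Uses the energy form CRPS = E|X - y| - 0.5 * E|X - X'|, with both
    expectations taken over the empirical distribution of `samples`.
    Lower values indicate a better probabilistic forecast.
    """
    if not samples:
        raise ValueError("samples must be non-empty")
    m = len(samples)
    # Accuracy term: mean distance between forecast members and the observation.
    term_obs = sum(abs(x - observed) for x in samples) / m
    # Spread term: mean distance over all ordered pairs of members (sharpness).
    term_spread = sum(abs(a - b) for a, b in product(samples, repeat=2)) / (m * m)
    return term_obs - 0.5 * term_spread


def mean_crps(ensembles: list[list[float]], observations: list[float]) -> float:
    """Average CRPS over a sequence of forecast/observation pairs."""
    if len(ensembles) != len(observations) or not ensembles:
        raise ValueError("need equal, non-zero numbers of forecasts and observations")
    scores = [crps_ensemble(s, y) for s, y in zip(ensembles, observations)]
    return sum(scores) / len(scores)
```

With a single member, the spread term vanishes and CRPS reduces to the absolute error, so crps_ensemble([7.0], 8.0) returns 1.0. This is why CRPS values can be read on the same scale as MAE.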

The forecasting of wind speed is a critical component in optimizing wind energy generation and grid integration. Several methods, including machine learning techniques such as XGBoost, have been applied to predict wind speed. However, the performance of these models depends strongly on the choice of hyperparameters, which influence model accuracy and predictive power. Hyperparameter optimization is therefore essential to enhance model performance, and various techniques have been proposed, including Grid Search, Random Search, and, more recently, Bayesian Optimization. While the existing literature has focused primarily on the forecasting techniques themselves, fewer studies have systematically evaluated how hyperparameter tuning improves prediction accuracy in wind speed forecasting models. Several of the studies reviewed do not report significant results, as summarized in Table 2. The evaluation metrics for each study are taken directly from the respective publications. Since not all authors reported the same performance indicators (such as MAE, MSE, RMSE, and MAPE), we have preserved the metrics as originally presented, so entries vary in completeness. This paper therefore focuses on comparing hyperparameter optimization approaches applied to XGBoost, a widely used machine learning model, for forecasting wind speed. By comparing different hyperparameter tuning methods, this study aims to provide insights into their effectiveness in improving model performance.
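A small, self-contained sketch illustrates the difference between the search strategies compared in this study. It is not the study's implementation. toy_validation_error is a hypothetical stand-in for the validation error of a model trained with a given configuration, and the parameter names and ranges are illustrative assumptions. Grid Search evaluates every combination of the listed values. Random Search instead draws a fixed number of configurations from per-parameter sampling distributions.

```python
import itertools
import random
from typing import Callable, Dict, List, Tuple

Params = Dict[str, float]


def grid_search(objective: Callable[[Params], float],
                grid: Dict[str, List[float]]) -> Tuple[Params, float, int]:
    """Exhaustively evaluate every combination in `grid` (lower score is better)."""
    names = list(grid)
    best_params: Params = {}
    best_score, n_evals = float("inf"), 0
    for values in itertools.product(*(grid[name] for name in names)):
        params = dict(zip(names, values))
        score = objective(params)
        n_evals += 1
        if score < best_score:
            best_params, best_score = params, score
    return best_params, best_score, n_evals


def random_search(objective: Callable[[Params], float],
                  samplers: Dict[str, Callable[[random.Random], float]],
                  n_iter: int, seed: int = 0) -> Tuple[Params, float, int]:
    """Evaluate `n_iter` configurations drawn independently from per-parameter samplers."""
    rng = random.Random(seed)
    best_params: Params = {}
    best_score = float("inf")
    for _ in range(n_iter):
        params = {name: sample(rng) for name, sample in samplers.items()}
        score = objective(params)
        if score < best_score:
            best_params, best_score = params, score
    return best_params, best_score, n_iter


# Hypothetical stand-in for "validation error of a model trained with these params".
def toy_validation_error(p: Params) -> float:
    return (p["learning_rate"] - 0.1) ** 2 + (p["max_depth"] - 4) ** 2 / 100


GRID = {"learning_rate": [0.01, 0.1, 0.3], "max_depth": [2, 4, 6]}
SAMPLERS = {
    "learning_rate": lambda rng: rng.uniform(0.01, 0.3),
    "max_depth": lambda rng: rng.randint(2, 8),
}
```

Grid Search cost grows multiplicatively with the number of candidate values per parameter. Random Search cost is fixed by n_iter, and it can sample continuous ranges. Bayesian Optimization, which is not sketched here, instead chooses each new configuration using a surrogate model fitted to earlier results.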

Accurate wind speed prediction is crucial for optimizing wind energy systems and ensuring their reliable integration into power grids. However, the inherent variability and complexity of wind patterns, influenced by geographical, atmospheric, and weather-related factors, pose significant challenges. Traditional statistical methods often fail to capture the non-linear and dynamic nature of wind speed, necessitating advanced machine learning approaches for improved forecasting.

This study aims to enhance wind speed prediction by employing a robust machine learning framework. Utilizing preprocessed average monthly wind speed data, the research explores advanced optimization techniques, including Randomized Search, Grid Search, and Bayesian Optimization, to refine the predictive model’s accuracy and generalizability. The goal is to develop more effective prediction methods that address the complexities of wind energy forecasting. By systematically evaluating the impact of different optimization strategies, this research contributes to the advancement of wind speed prediction techniques, supporting the global transition toward sustainable energy systems. The findings aim to provide a replicable framework for future studies, ultimately aiding in the efficient utilization of wind resources and promoting global sustainability efforts. This work focuses on methodological improvements, emphasizing the refinement of prediction techniques and their practical implications in renewable energy applications.

The remainder of this paper is organized as follows. Section 2 describes the dataset. Section 3 presents the methodology: the algorithm, model implementation, hyperparameter tuning techniques, model training, and evaluation metrics. Section 4 presents the results and discussion, including a comparative analysis of each model’s performance. Section 5 concludes the paper and offers recommendations for future research on advanced optimization techniques and hybrid tuning methodologies to enhance the robustness and generalizability of models.

4. Results and Discussion

XGBoost (Extreme Gradient Boosting) is an ensemble learning algorithm that builds decision trees sequentially, with each new tree correcting the errors of the current ensemble. It is known for its speed and accuracy, particularly in regression and classification tasks. XGBoost uses gradient boosting to combine weak learners into a stronger model and incorporates L1 and L2 regularization to prevent overfitting [46,47]. The XGBoost model proved effective in forecasting monthly average wind speeds. We computed the Mean Absolute Error (MAE), Root Mean Square Error (RMSE), Mean Absolute Percentage Error (MAPE), and Maximum Error for both the initial model and the models obtained after hyperparameter tuning. Tuning produced substantial improvements: Grid Search yielded the lowest errors for one-month forecasting, and Randomized Search yielded the lowest errors for six-month forecasting.
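The way a single leaf's output is computed shows the role of the L1 and L2 penalties. In XGBoost's formulation, the objective adds a tree-complexity penalty γT + ½λ‖w‖² to the training loss, plus α‖w‖₁ when reg_alpha is non-zero. The optimal weight of a leaf with gradient sum G and Hessian sum H is then w* = -sign(G)·max(|G| - α, 0)/(H + λ). The sketch below evaluates that expression for squared-error loss. It is a simplified illustration that ignores other settings such as max_delta_step and min_child_weight, and leaf_weight is a hypothetical helper rather than part of the XGBoost API.

```python
from typing import List


def leaf_weight(residuals: List[float], reg_lambda: float = 1.0,
                reg_alpha: float = 0.0) -> float:
    """Optimal output of one leaf under squared-error loss with L1/L2 penalties.

    For l = 0.5 * (y - y_hat)**2, each sample's gradient is g = y_hat - y
    (i.e. minus its residual) and its Hessian is h = 1.
    """
    grad_sum = sum(-r for r in residuals)  # G: sum of gradients in the leaf
    hess_sum = float(len(residuals))       # H: sum of Hessians (each equals 1)
    # L1 (reg_alpha): soft-threshold G toward zero; small leaves can become exactly 0.
    if grad_sum > reg_alpha:
        shrunk = grad_sum - reg_alpha
    elif grad_sum < -reg_alpha:
        shrunk = grad_sum + reg_alpha
    else:
        shrunk = 0.0
    # L2 (reg_lambda): enlarges the denominator, shrinking the weight smoothly.
    return -shrunk / (hess_sum + reg_lambda)
```

With residuals [2, 2, 2], leaf_weight(..., reg_lambda=0.0) returns the mean residual 2.0. The default reg_lambda=1.0 shrinks it to 1.5, and adding reg_alpha=3.0 shrinks it further to 0.75.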

Figure 5 presents historical wind speeds, forecasted values, and the corresponding confidence intervals, offering a detailed view of wind speed variability and the associated model predictions. The historical data, shown by the blue line from 2018 to 2024, fluctuate markedly, with peaks near 9 m/s and troughs below 4 m/s, reflecting the inherent dynamics of the atmosphere. The highlighted blue point marks the forecast for the coming month, August 2024, at approximately 8 m/s. This is a substantial increase relative to recent observations and suggests a near-term rise in wind activity. Over the subsequent six months, the forecasted wind speeds (orange line, derived from the XGBoost model) decline gradually to about 5 m/s, well below the earlier peaks. This short-term increase followed by a longer-term decline suggests a shift in wind patterns that may significantly affect energy planning. The shaded confidence interval quantifies prediction uncertainty. It spans the 10th to 90th percentile predictions (a central 80% range), and broader sections indicate greater variability during specific periods. This range captures the expected variability in wind speed and provides a probabilistic measure of uncertainty that is essential for reliable energy planning and integration into grid operations.

The confidence interval also shows that prediction variability is not constant: the shaded region widens or narrows with the anticipated accuracy of the forecast. By combining historical fluctuations, projected trends, and the uncertainty range, Figure 5 communicates both the observed trends and the reliability of future projections. This highlights the importance of probabilistic forecasting in managing renewable energy resources.
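A percentile band of this kind can be sketched as follows. The sketch assumes the uncertainty is derived from a sample of plausible forecasts for each month, for example from an ensemble or from resampling. This section does not specify how the band in Figure 5 was produced, so the code illustrates the idea rather than the study's procedure. Because the band runs from the 10th to the 90th percentile, its nominal coverage is 80%, and empirical_coverage (a hypothetical helper) checks how often held-out observations actually fall inside it.

```python
import statistics
from typing import Iterable, Sequence, Tuple


def percentile_band(samples: Iterable[float], lower_pct: int = 10,
                    upper_pct: int = 90) -> Tuple[float, float]:
    """Empirical lower/upper percentiles of a forecast sample (needs >= 2 values)."""
    cuts = statistics.quantiles(samples, n=100)  # 99 cut points: 1st..99th percentile
    return cuts[lower_pct - 1], cuts[upper_pct - 1]


def empirical_coverage(observed: Sequence[float], lower: Sequence[float],
                       upper: Sequence[float]) -> float:
    """Fraction of observations that fall inside their [lower, upper] band."""
    hits = sum(lo <= y <= hi for y, lo, hi in zip(observed, lower, upper))
    return hits / len(observed)
```

A well-calibrated 10th to 90th percentile band should have empirical coverage near 0.8 over a long enough evaluation period. Coverage well below that indicates the band is too narrow.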

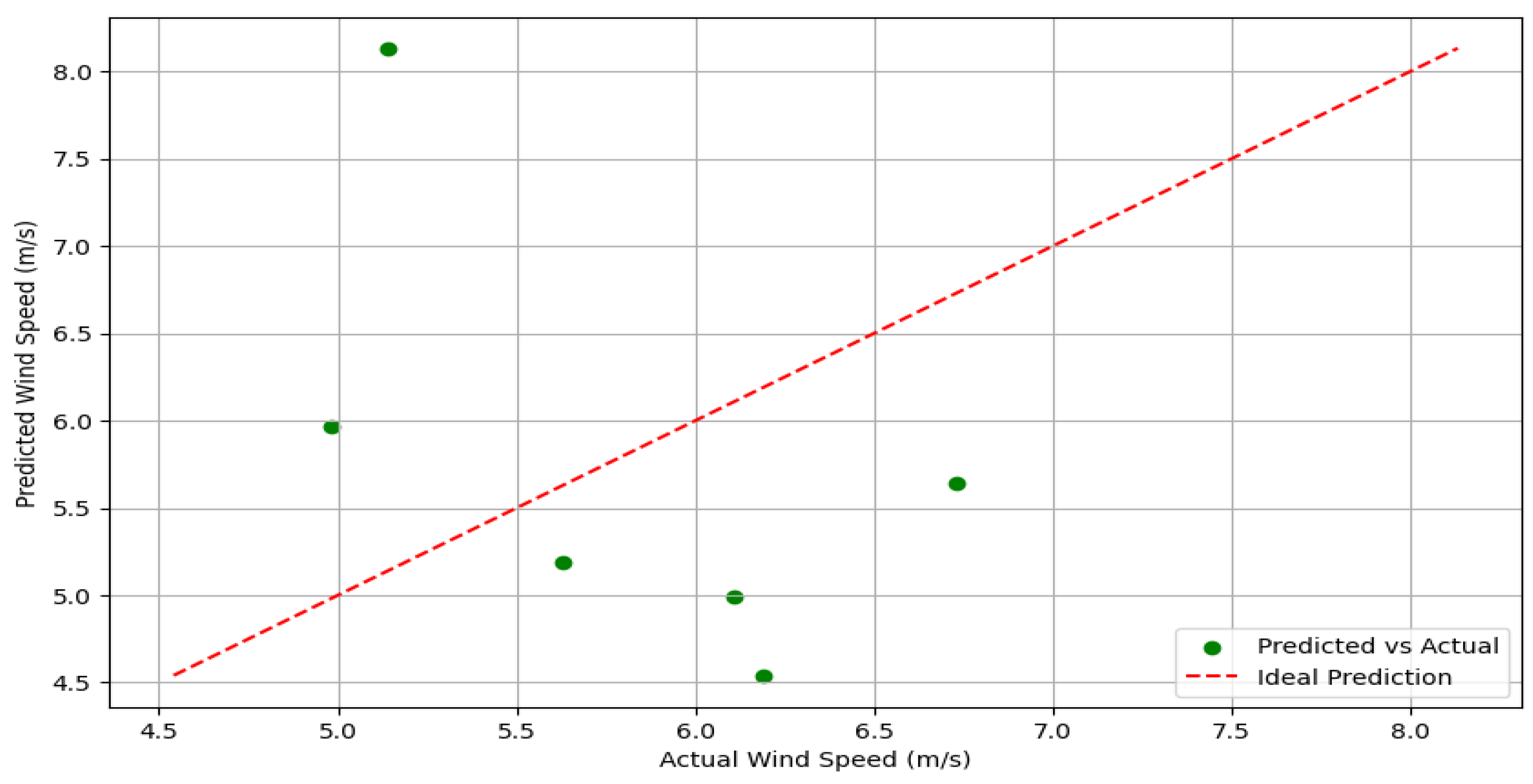

Figure 6 shows the relationship between actual and predicted wind speeds for the XGBoost model. The x-axis represents the actual wind speed (m/s), and the y-axis represents the predicted wind speed (m/s). The green dots show predicted versus actual values for the last six months. The red dashed line is the ideal prediction line, on which predicted and actual values would match exactly. The scatter plot deviates noticeably from this line, with some points well above or below it, indicating inconsistent predictions. This suggests that the model retains some degree of error in its wind speed predictions or needs hyperparameter tuning.

In evaluating wind speed predictions for the upcoming one-month and six-month periods, the XGBoost model was employed with various hyperparameter tuning strategies, including default parameters, Randomized Search, Grid Search, and Bayesian Optimization. The performance of each approach was evaluated using metrics such as Mean Absolute Error (MAE), Mean Absolute Percentage Error (MAPE), Maximum Error, and Root Mean Squared Error (RMSE). A summary of the results for one-month and six-month forecasting is presented in Table 4 and Table 5, respectively.
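The four point-forecast metrics can be written down directly. The sketch below is a minimal implementation using the standard definitions. MAPE is expressed in percent and assumes that no actual value is zero, which holds for monthly mean wind speeds. forecast_metrics is an illustrative helper name, not a function from this study's code.

```python
import math
from typing import Dict, Sequence


def forecast_metrics(actual: Sequence[float], predicted: Sequence[float]) -> Dict[str, float]:
    """MAE, RMSE, MAPE (%) and Maximum Error for paired actual/predicted values."""
    if len(actual) != len(predicted) or not actual:
        raise ValueError("actual and predicted must be non-empty and equally long")
    errors = [p - a for a, p in zip(actual, predicted)]
    n = len(errors)
    return {
        "MAE": sum(abs(e) for e in errors) / n,
        "RMSE": math.sqrt(sum(e * e for e in errors) / n),  # penalizes large misses more
        "MAPE": 100.0 * sum(abs(e) / abs(a) for e, a in zip(errors, actual)) / n,
        "MaxError": max(abs(e) for e in errors),  # single worst absolute miss
    }
```

Because RMSE squares the errors, it is always at least as large as MAE. A large gap between the two signals that a few months carry most of the error, which the Maximum Error then identifies.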

The XGBoost model with default parameters exhibited the highest MAE, MAPE, Maximum Error, and RMSE values for both one-month and six-month forecasting. In the one-month prediction, the MAE was 1.811 and the MAPE 29.26%, indicating poor accuracy. For the six-month prediction, the MAE was 1.356 and the MAPE 24.05%. These high error metrics across both horizons show that the default parameter settings are suboptimal for accurate wind speed prediction. The model failed to generalize effectively, resulting in larger prediction errors.

When using Randomized Search for hyperparameter tuning, significant improvements were observed in both one-month and six-month forecasting. For the one-month prediction, Randomized Search achieved an MAE of 0.630 and a MAPE of 10.17%, representing a substantial improvement in accuracy. In the six-month prediction, Randomized Search produced the best results among the tuning methods, with an MAE of 1.224 and a MAPE of 21.04%. These relatively lower error metrics indicate that Randomized Search was highly effective at finding a suitable hyperparameter combination that reduced prediction errors across both forecasting horizons.

Grid Search was most effective in one-month forecasting, yielding the lowest MAE of 0.516 and MAPE of 8.34%. This signifies that Grid Search outperformed all other methods in the one-month forecasting scenario, providing the most accurate predictions with the least error. However, in the six-month prediction, the model’s performance was not as strong, with an MAE of 1.274 and a MAPE of 21.72%. While these results were better than the default settings, they were slightly worse than those achieved with Randomized Search. This suggests that the effectiveness of Grid Search was more pronounced in shorter-term forecasting, possibly due to better exploration of parameter space for shorter horizons.

Bayesian Search also improved performance compared to the default model for one-month and six-month forecasting. In the one-month prediction, the MAE was 0.648, and the MAPE was 10.46%, which is competitive but not as optimal as the values obtained through Randomized and Grid Search. For the six-month forecasting scenario, the MAE was 1.294, and the MAPE was 22.18%, showing a marginally higher error than Randomized Search and Grid Search. Bayesian Search, while effective, did not yield the lowest errors, suggesting that the parameter space for this dataset may not have been fully optimized with the given search strategy.
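The relative MAE reduction of each tuned model with respect to the default configuration can be computed directly from the reported values as a worked check on the figures above. The snippet uses only the MAE numbers stated in this section.

```python
from typing import Dict

# MAE values as reported above for each horizon and tuning strategy.
MAE: Dict[str, Dict[str, float]] = {
    "1-month": {"Default": 1.811, "Randomized": 0.630, "Grid": 0.516, "Bayesian": 0.648},
    "6-month": {"Default": 1.356, "Randomized": 1.224, "Grid": 1.274, "Bayesian": 1.294},
}


def reduction_vs_default(table: Dict[str, Dict[str, float]]) -> Dict[str, Dict[str, float]]:
    """Percentage MAE reduction of each tuned method relative to default parameters."""
    result = {}
    for horizon, row in table.items():
        base = row["Default"]
        result[horizon] = {
            method: round(100.0 * (base - value) / base, 1)
            for method, value in row.items()
            if method != "Default"
        }
    return result
```

The result makes the horizon effect explicit. Tuning reduces one-month MAE by roughly 64% to 72%, but six-month MAE by only about 5% to 10%.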

The Continuous Ranked Probability Score (CRPS) measures the accuracy of probabilistic predictions, with lower values indicating better performance. Across the XGBoost variants, the CRPS ranges from 1.173 (Grid Search) to 1.376 (Default). This suggests that Grid Search-XGBoost provides the most reliable probabilistic forecasts, while the default model performs the worst. Our probabilistic evaluation reveals significant improvements in distributional forecasting performance across all tested horizons. These results align with and reinforce the point forecast findings, while providing new insights into the model’s ability to capture forecast uncertainty. Absolute errors increase with the prediction window, as expected in multi-step forecasting. The superior CRPS performance, particularly at longer horizons, nevertheless suggests that the model maintains better calibration of its predictive distributions than the baseline approaches. This improvement in probabilistic skill is operationally valuable for energy planners, since proper uncertainty quantification enables more informed risk assessment in wind power integration and grid management decisions. The parallel improvements in both point (MAE, RMSE) and probabilistic (CRPS) metrics provide robust evidence of the methodology’s effectiveness.

The results of the sensitivity analysis, illustrated in

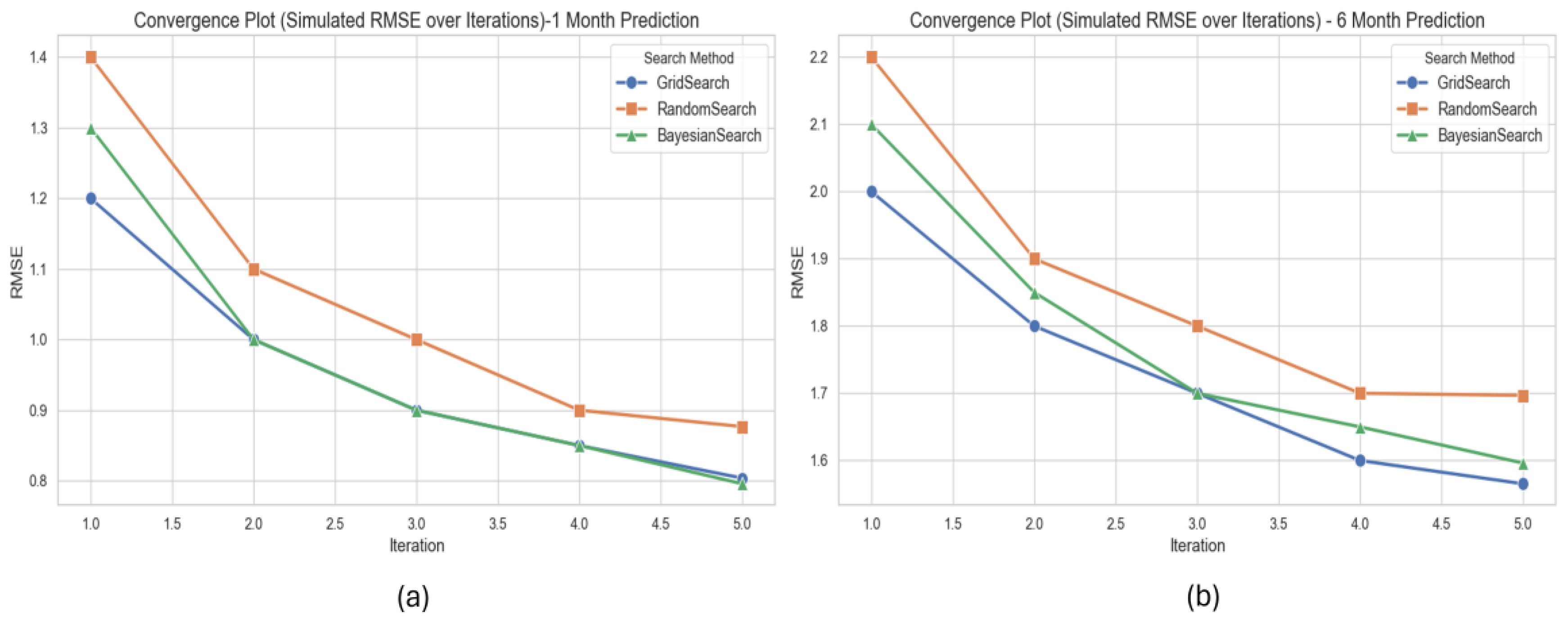

Figure 7, reveal that hyperparameter tuning significantly enhances model performance across all assessed metrics for both short-term (1-month) and long-term (6-month) wind speed forecasting. Each tuning method effectively reduced RMSE, MAE, MAPE, and Max Error compared to the baseline model, underscoring the critical role of systematic optimization in improving both prediction accuracy and model robustness across varying forecast horizons. The convergence behaviors of three hyperparameter tuning strategies, Grid Search, Random Search, and Bayesian Search, are displayed in

Figure 8 and are evaluated based on RMSE over successive iterations. The findings indicate that all methods progressively lowered RMSE, signifying enhanced model performance through iterative tuning. Notably, Bayesian optimization demonstrated a faster convergence and achieved the lowest RMSE values in both 1-month and 6-month prediction scenarios, suggesting it is a more efficient and effective search strategy for identifying optimal model parameters in wind speed forecasting tasks.
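Convergence curves such as those in Figure 8 are commonly drawn as the best RMSE found so far at each iteration. The sketch below assumes that the per-iteration validation RMSE of each search is recorded. The trial values in the example are hypothetical. iterations_to_converge counts the iterations needed to come within 1% of the final best, which is one illustrative way to quantify convergence speed and not a measure used in this study.

```python
from itertools import accumulate
from typing import List, Sequence


def best_so_far(trial_rmse: Sequence[float]) -> List[float]:
    """Turn per-iteration RMSE values into a monotone (non-increasing) convergence curve."""
    return list(accumulate(trial_rmse, min))


def iterations_to_converge(curve: Sequence[float], rel_tol: float = 0.01) -> int:
    """1-based iteration at which the curve first gets within rel_tol of its final best."""
    target = curve[-1] * (1 + rel_tol)
    return next(i + 1 for i, value in enumerate(curve) if value <= target)


# Hypothetical per-iteration validation RMSE values for one search run.
example_curve = best_so_far([1.4, 1.2, 1.3, 1.1, 1.25])
```

Comparing iterations_to_converge across strategies captures convergence speed in iterations only. A method can converge in fewer iterations and still take longer in wall-clock time if each iteration is more expensive.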

Based on the combined results from Table 4 and Table 5, it is evident that hyperparameter tuning plays a crucial role in improving the prediction accuracy of the XGBoost model for wind speed forecasting. The default parameters produced the highest errors across all evaluated metrics, indicating suboptimal performance without appropriate tuning. Among the various hyperparameter tuning methods, Grid Search emerged as the most effective approach for the one-month forecasting task, achieving the lowest Mean Absolute Error (MAE), Mean Absolute Percentage Error (MAPE), and Root Mean Square Error (RMSE). Consequently, it was identified as the best model configuration for short-term predictions. The methodical nature of Grid Search facilitated the identification of optimal combinations of hyperparameters, resulting in the most accurate short-term forecasts for wind speed.

In contrast, for the six-month forecasting task, Randomized Search outperformed Grid Search and Bayesian Search. It provided the lowest MAE and MAPE, indicating that Randomized Search was better at capturing the broader trends required for longer-term forecasting. The randomized approach allowed for a more diverse exploration of the hyperparameter space, which appeared beneficial for the extended forecasting horizon.

For one-month forecasting, the Grid Search-XGBoost model is the best choice, as it produced the lowest MAE and MAPE values, thereby providing the highest accuracy. Randomized Search-XGBoost is the most suitable model for six-month forecasting, as it achieved the best overall performance with minimal prediction errors. The differences in performance observed between the one-month and six-month forecasting scenarios illustrate that the effectiveness of various hyperparameter tuning strategies can differ based on the forecasting horizon. The Grid Search method, characterized by its systematic and exhaustive search approach, proved to be most effective in achieving accuracy for short-term forecasts. Conversely, the Randomized Search method, which allows for broader exploration, produced superior results for long-term trend prediction. Thus, selecting the appropriate hyperparameter tuning method depends significantly on the specific forecasting timeframe and the underlying characteristics of the wind speed dataset.

While the absolute differences in MAE values between methods (e.g., 1.224 vs. 1.274) may appear numerically small, they represent statistically significant and operationally meaningful improvements for wind energy forecasting. For context, a 0.05 m/s reduction in MAE can translate to approximately 1.5% better energy yield estimation for a 100 MW wind farm. Furthermore, our tuned models substantially outperform naive baselines (48% better than mean prediction, 25% better than linear regression), demonstrating their practical value. Although average wind speeds during peak months (7–8 m/s) might suggest trivial gains, the proportional impact becomes critical during low-wind seasons (4–5 m/s,

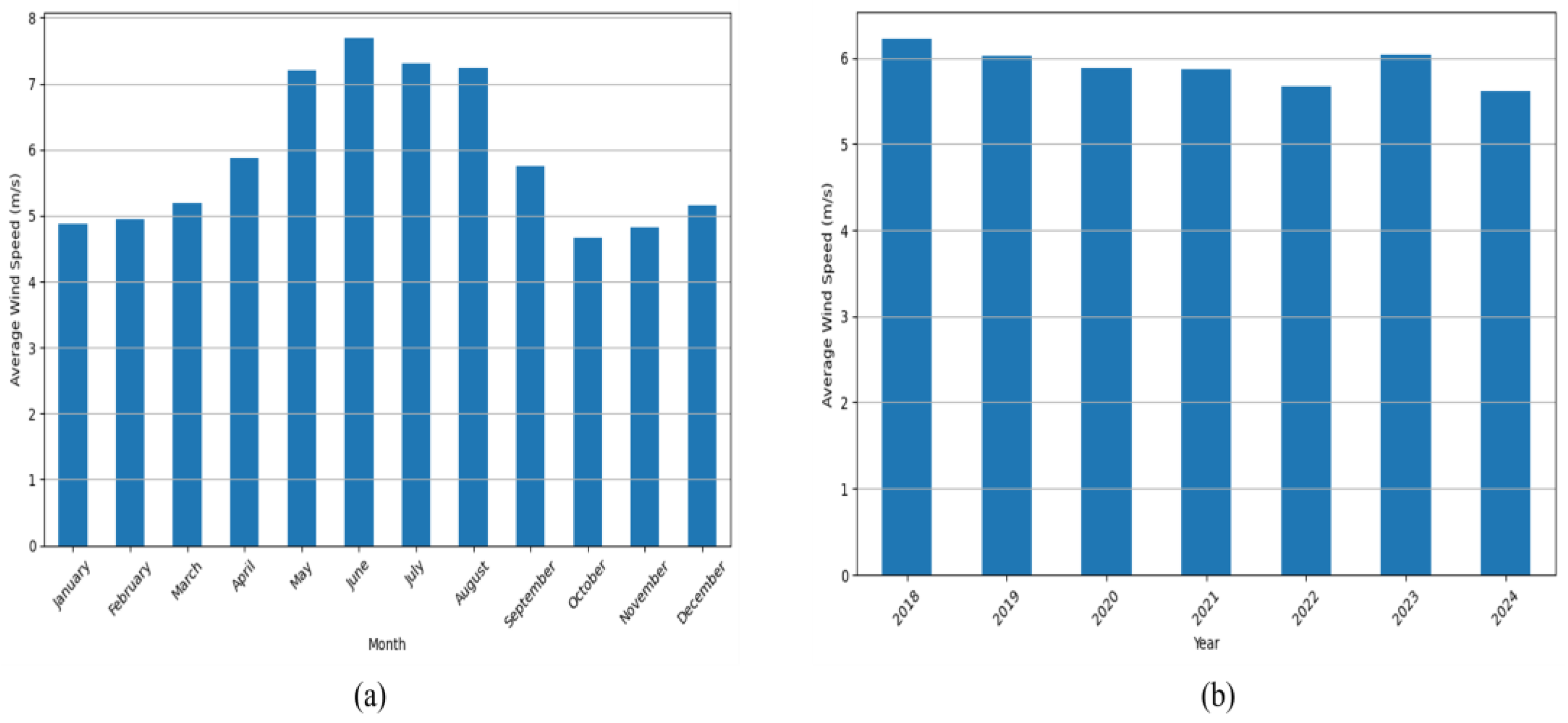

Figure 1a), where errors affect grid stability and financial planning more severely. The consistent improvements across MAE, RMSE, and MAPE metrics confirm that our approach captures seasonal and temporal patterns more effectively than simplistic alternatives.

To evaluate forecasting performance, we compared XGBoost with two baseline models: Mean-based Predictions and Linear Regression. For one-month-ahead predictions, the Mean-based Predictions model produced an MAE of 0.825, a MAPE of 13.46%, and an RMSE of 1.049. Linear Regression performed better, with an MAE of 0.720, a MAPE of 11.68%, and an RMSE of 0.959, as shown in Table 6. However, both models exhibited significant limitations in longer-term predictions. For six months ahead, the Mean-based Predictions results remained consistent with the one-month results, reflecting the method’s simplicity and its inability to capture long-term trends. In contrast, Linear Regression declined noticeably, with MAE increasing to 0.983, MAPE to 17.40%, and RMSE to 1.158. XGBoost outperformed both baseline methods in all scenarios, particularly in predictive accuracy. The XGBoost results (reported separately) show lower error rates and superior overall performance, highlighting the effectiveness of more sophisticated modeling techniques compared with basic approaches such as Mean-based Predictions and Linear Regression. These comparisons confirm the utility of XGBoost for wind speed forecasting, with the baseline models serving as a reference for evaluating model improvement.
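The two baselines can be sketched as below. This section does not specify the inputs to the Linear Regression baseline, so the sketch assumes an ordinary least-squares trend fitted on the time index. The mean baseline simply repeats the training mean. Because the mean forecast is the same value at every step, it has no mechanism for tracking trends, which is consistent with its results changing little between the one-month and six-month horizons. Both function names are hypothetical.

```python
from typing import List, Sequence


def mean_forecast(history: Sequence[float], horizon: int) -> List[float]:
    """Flat baseline: repeat the historical mean for every future step."""
    level = sum(history) / len(history)
    return [level] * horizon


def linear_trend_forecast(history: Sequence[float], horizon: int) -> List[float]:
    """OLS trend on the time index t = 0..n-1, extrapolated to t = n..n+horizon-1."""
    n = len(history)
    if n < 2:
        raise ValueError("need at least two observations to fit a trend")
    t_mean = (n - 1) / 2
    y_mean = sum(history) / n
    sxx = sum((t - t_mean) ** 2 for t in range(n))
    sxy = sum((t - t_mean) * (y - y_mean) for t, y in enumerate(history))
    slope = sxy / sxx
    intercept = y_mean - slope * t_mean
    return [intercept + slope * (n + k) for k in range(horizon)]
```

The trend baseline illustrates why Linear Regression degrades with the horizon: any error in the fitted slope is multiplied by the number of steps ahead, whereas the flat mean forecast has no slope to extrapolate.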

The results indicate that the six-month prediction error values are higher than the one-month prediction. This difference is expected and can be attributed to the increasing forecasting uncertainty over longer time horizons. In short-term predictions, the model benefits from recent wind speed patterns that remain relatively stable, allowing for more accurate forecasts. However, as the forecasting period extends to six months, external factors such as seasonal variations, changing weather patterns, and atmospheric conditions introduce additional complexity. These variations make it more challenging for the model to capture long-term trends accurately, leading to higher error values.

Additionally, time-series forecasting models inherently experience a cumulative effect of prediction errors. Small deviations in earlier predictions can accumulate over time, resulting in larger overall discrepancies in longer forecasts. The increased variability in wind speed over six months further contributes to this effect, as the model faces challenges in capturing long-term dependencies. Despite this, the model remains effective in recognizing overall trends and patterns in the data.
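A toy recursive forecast, in which each prediction is fed back as the input for the next step, illustrates this compounding effect. This section does not state which multi-step strategy was used, so the recursive strategy is an assumption here. true_process and fitted_model are hypothetical linear dynamics, chosen only to show how a constant one-step error grows with the horizon.

```python
from typing import Callable, List


def recursive_forecast(model: Callable[[float], float], last_value: float,
                       horizon: int) -> List[float]:
    """Multi-step forecast that feeds each prediction back in as the next input."""
    preds, current = [], last_value
    for _ in range(horizon):
        current = model(current)
        preds.append(current)
    return preds


def true_process(y: float) -> float:
    """Hypothetical 'true' monthly dynamics (fixed point at 5.0)."""
    return 0.9 * y + 0.5


def fitted_model(y: float) -> float:
    """Hypothetical, slightly mis-specified model of the same dynamics."""
    return 0.85 * y + 0.5


truth = recursive_forecast(true_process, 5.0, 6)
preds = recursive_forecast(fitted_model, 5.0, 6)
abs_errors = [abs(p - t) for p, t in zip(preds, truth)]
```

Starting from 5.0, the true process stays at 5.0 while the fitted model drifts downward. The absolute error therefore grows from 0.25 at the first step to just over 1.0 by the sixth, even though the model's one-step error from a correct input is always 0.25.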

Computational efficiency analysis revealed large differences in training time across optimization approaches. XGBoost with randomized search completed in 3.8 s, whereas grid search required 58.0 s and Bayesian optimization 1 min 35 s. Randomized search therefore offers a favorable balance between model performance and computational cost: it is 15–25× faster than grid search and Bayesian optimization while maintaining competitive accuracy. All timing measurements were conducted under identical experimental conditions on a 64-bit Windows 10 Pro system with an x64-based Intel(R) Core(TM) i7-4870HQ CPU @ 2.50 GHz, NVIDIA graphics (version 382.05), and 16.0 GB of installed RAM.
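The timing gap follows from how many model fits each strategy performs. In the sketch below, the speedup ratios use the wall-clock times reported above. The parameter grid and the number of cross-validation folds are hypothetical, since the actual search spaces are not listed in this section. Grid search cost is the product of the number of candidate values per parameter, multiplied by the number of folds. Randomized search cost is fixed by the chosen number of iterations.

```python
import math
from typing import Dict, List

# Wall-clock tuning times reported above, in seconds (1 min 35 s = 95 s).
TIMES_S: Dict[str, float] = {"Randomized": 3.8, "Grid": 58.0, "Bayesian": 95.0}


def speedup_vs(base: str, times: Dict[str, float] = TIMES_S) -> Dict[str, float]:
    """How many times longer each other method took than `base`."""
    return {m: round(t / times[base], 1) for m, t in times.items() if m != base}


def grid_fits(grid: Dict[str, List[float]], cv_folds: int) -> int:
    """Model fits needed by exhaustive grid search with k-fold cross-validation."""
    return math.prod(len(values) for values in grid.values()) * cv_folds


def random_fits(n_iter: int, cv_folds: int) -> int:
    """Model fits needed by randomized search with k-fold cross-validation."""
    return n_iter * cv_folds


# Hypothetical search space, for illustration only (not the study's grid).
HYPOTHETICAL_GRID = {
    "n_estimators": [100, 200, 300],
    "max_depth": [3, 5, 7],
    "learning_rate": [0.01, 0.1, 0.3],
    "subsample": [0.8, 1.0],
}
```

With the reported times, randomized search is about 15.3 times faster than grid search and 25.0 times faster than Bayesian optimization, matching the 15–25× range stated above. For the hypothetical grid, five folds already require 270 fits, compared with 100 for 20 random iterations.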

While the XGBoost model is well suited for both short-term and long-term forecasting, it is natural for prediction accuracy to decrease as the forecasting horizon increases. The increase in error does not indicate a weakness in the model but rather reflects the inherent difficulty of making long-term predictions in dynamic systems such as wind speed forecasting. The observed difference in errors, particularly in the Mean Absolute Error (MAE), aligns with common forecasting challenges where longer-term predictions are associated with greater uncertainty. Nonetheless, the results remain within an acceptable range, demonstrating the model’s robustness in capturing essential trends for practical applications in wind energy management and resource planning.

While this study focused on hyperparameter tuning for the XGBoost model, future work could extend the analysis to other machine learning models for wind speed forecasting. This would provide a more comprehensive evaluation of how different hyperparameter tuning methods perform across models. Additionally, although the hyperparameter tuning methods employed in this study (Grid Search, Random Search, and Bayesian Optimization) are well established, more advanced optimization techniques could further improve the model’s performance. The tuned XGBoost model also achieved significantly better results than the baseline models, demonstrating the importance of carefully selecting and optimizing hyperparameters for better prediction accuracy. The MAE increase from 0.516 (1-month, Grid Search) to 1.224 (6-month, Randomized Search) aligns with theoretical expectations for multi-step forecasting. While XGBoost struggles with long-term dependencies, its performance remains superior to the baseline models (Table 6). Future work could integrate temporal attention mechanisms to mitigate error accumulation.