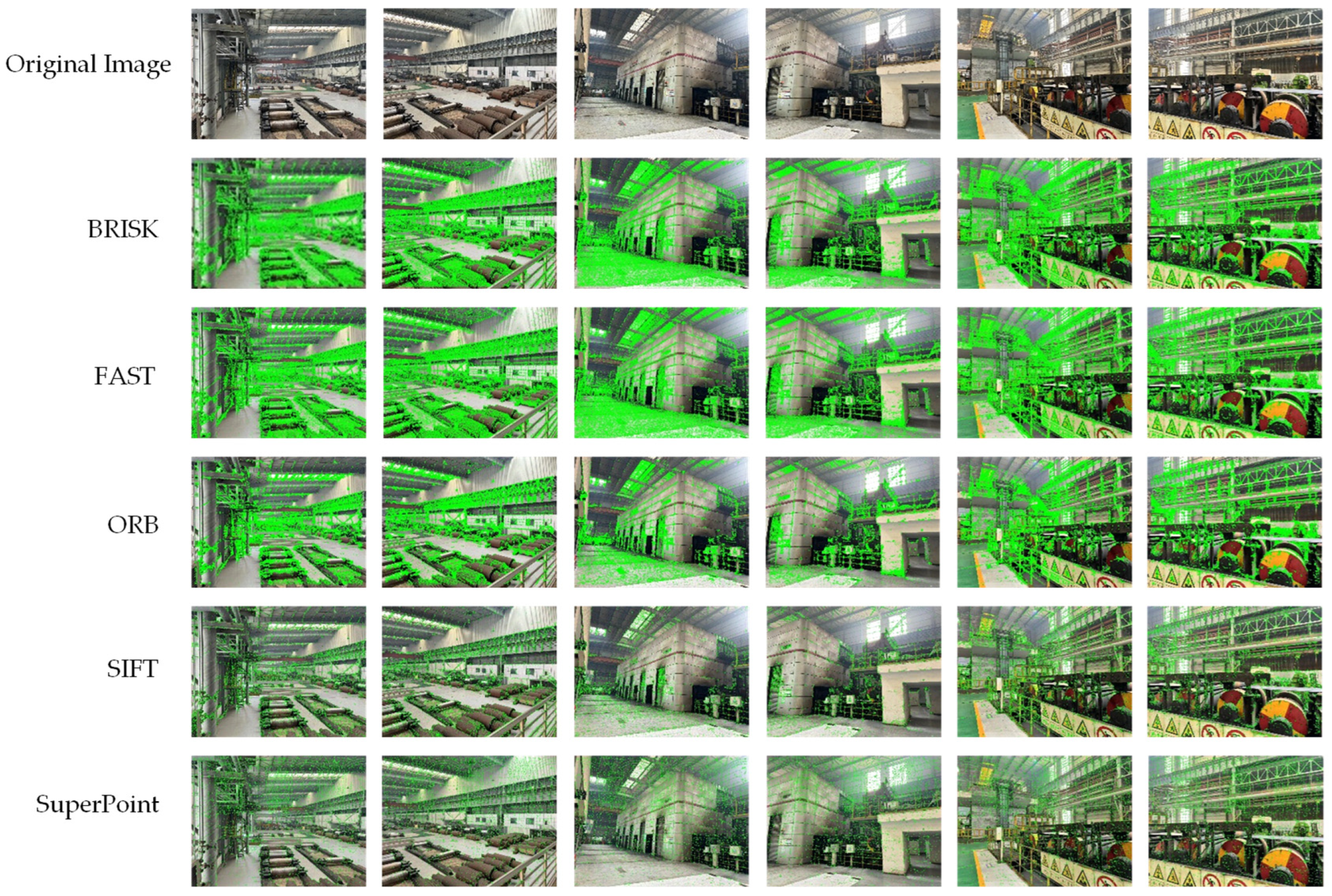

The previous section completed the extraction of video image feature points with the SuperPoint algorithm. The outputs are the position coordinates of the feature points and their feature descriptor vectors. The next step is to match the feature points using these positions and descriptor vectors, which lays the foundation for the final image fusion.

According to Table 2, the number of feature points extracted by the feature extraction algorithm is in the thousands. At this scale, many of the feature points are invalid, and a large number of mismatched points arise during the matching process.

Moreover, this section proposes using Agglomerative Clustering (AGG) as a preprocessing module ahead of LightGlue matching. Clustering the feature points in advance improves matching efficiency and quality. Finally, the RANSAC algorithm is applied after LightGlue to eliminate mismatched points and further improve matching accuracy.

3.1. Basic Principles of the LightGlue Algorithm

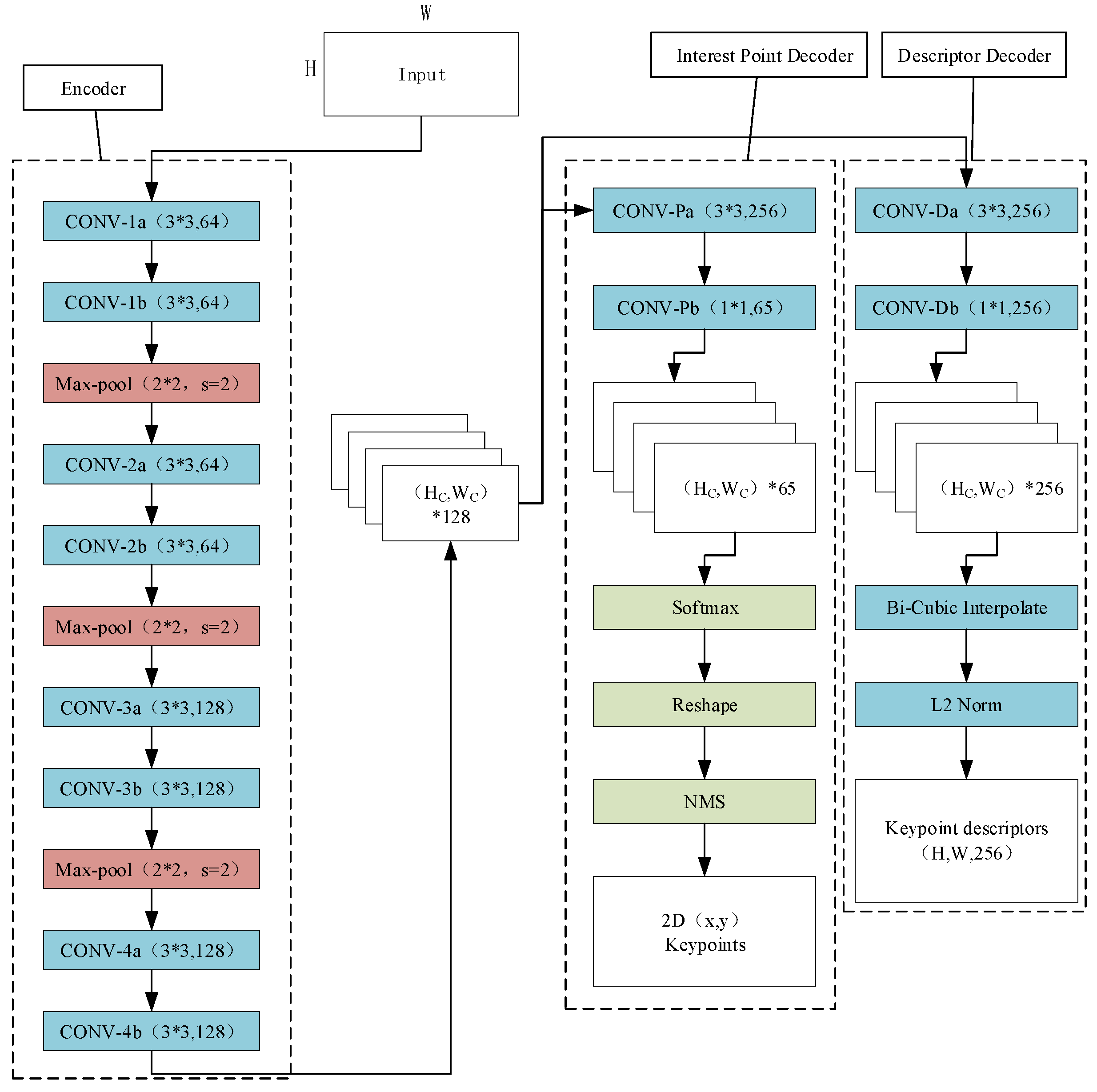

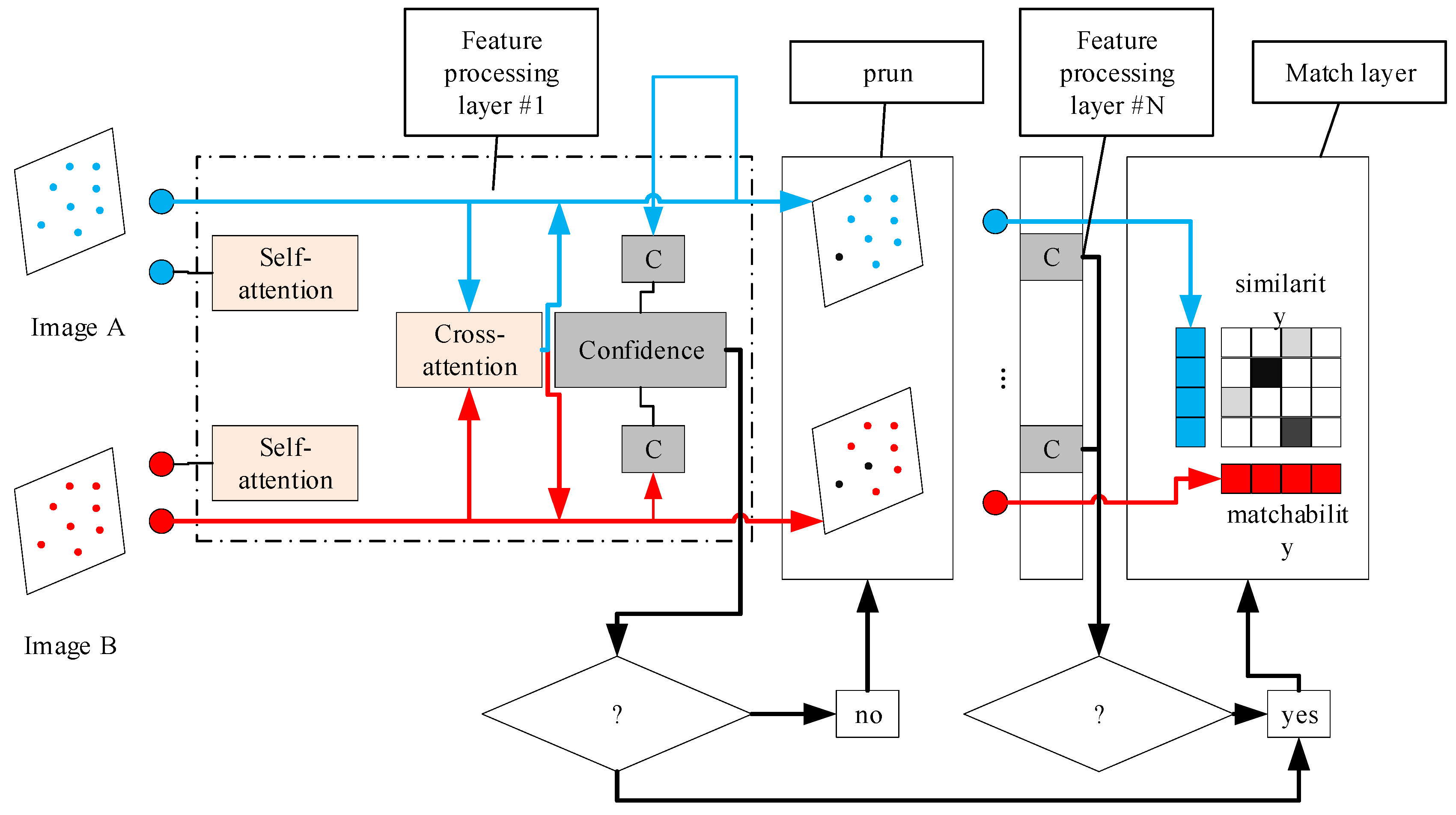

The LightGlue algorithm is derived from the Transformer attention model, and its network structure is shown in Figure 3. It is mainly divided into three parts. The first part is the feature processing layer composed of the self-attention mechanism and the cross-attention mechanism, with a total of N layers. The second part is the confidence judgment layer, which follows the feature processing layer and is mainly responsible for determining whether to exit the iteration of the feature processing layer. The third part is the feature matching layer, which calculates the matching matrix through a lightweight detection head and outputs the matching relationships of the feature points. The following further introduces the network structure and principle of the LightGlue algorithm through the specific process of feature matching.

The LightGlue algorithm takes as input the feature point position coordinates and feature descriptor vectors of a pair of images (that is, the output of SuperPoint mentioned in Figure 2). The feature processing layer uses its self-attention and cross-attention units to update the feature information with context. The feature point descriptor matrices of the images A and B to be matched are:

$$X^A \in \mathbb{R}^{N \times C}, \qquad X^B \in \mathbb{R}^{M \times C}$$

In the formula, N and M represent the number of feature points in image A and image B, respectively, and C represents the feature dimension. For SuperPoint, C = 256.

Taking $X^A$ as an example, the matrix $X^A$ representing the state of the feature points generates a query vector $Q^A$, a key vector $K^A$, and a value vector $V^A$ after linear transformation. Accordingly, a single feature point state $x_i$ in image A is transformed into a query vector $q_i$, a key vector $k_i$, and a value vector $v_i$. Then, for the query vector $q_i$ of each feature point, its similarity with the key vector $k_j$ of the other feature points is calculated, and the weight is obtained through softmax normalization as follows:

$$\alpha_{ij} = \frac{\exp\left(q_i^{\top} k_j / \sqrt{d}\right)}{\sum_{l} \exp\left(q_i^{\top} k_l / \sqrt{d}\right)}$$

In the formula, d is the dimension of the attention head; the weight matrix formed by the $\alpha_{ij}$ represents the dependency relationships between feature points.

Finally, the self-attention output $X'$ is obtained by weighted summation of the value vector $V^A$ using the weights, as follows:

$$x_i' = \sum_{j} \alpha_{ij} v_j$$

where $x_i'$ is the row of $X'$ corresponding to feature point i.

This output integrates the information of the current feature point with that of all other feature points, significantly enhancing the connections between features within the same image.

The principle of the cross-attention mechanism is similar to that of the self-attention unit. The only difference is that the query vector comes from image A, while the key vector and the value vector come from image B. Through the cross-attention mechanism, the feature points of one image can focus on all the feature points of the other image. At the same time, this is also the core mechanism of the LightGlue network.
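The self-attention and cross-attention steps described above can be sketched with a single scaled dot-product attention function. This is a minimal pure-Python illustration under stated assumptions, not LightGlue's implementation: the names `attention`, `project`, `Wq`, `Wk`, and `Wv` are hypothetical, identity projections stand in for learned ones, and positional encoding and multiple attention heads are omitted.

```python
import math

def softmax(scores):
    """Numerically stable softmax over a list of scores."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def project(X, W):
    """Apply a linear map W (C x d) to every row of X (n x C)."""
    return [[sum(x[k] * W[k][j] for k in range(len(W))) for j in range(len(W[0]))]
            for x in X]

def attention(X_query, X_source, Wq, Wk, Wv):
    """Scaled dot-product attention; returns (output, weights).

    Queries come from X_query; keys and values come from X_source.
    """
    Q = project(X_query, Wq)
    K = project(X_source, Wk)
    V = project(X_source, Wv)
    d = len(Q[0])  # dimension of the (single) attention head
    weights = [softmax([sum(a * b for a, b in zip(q, k)) / math.sqrt(d) for k in K])
               for q in Q]
    output = [[sum(w * v[c] for w, v in zip(row, V)) for c in range(len(V[0]))]
              for row in weights]
    return output, weights

# Toy descriptors (assumed values): 2 points in image A, 3 points in image B.
identity = [[1.0, 0.0], [0.0, 1.0]]
X_A = [[1.0, 0.0], [0.0, 1.0]]
X_B = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]

# Self-attention: queries, keys, and values all come from image A.
self_out, self_w = attention(X_A, X_A, identity, identity, identity)
# Cross-attention: queries from image A, keys and values from image B.
cross_out, cross_w = attention(X_A, X_B, identity, identity, identity)
```

The only difference between the two calls is the source of the keys and values, which mirrors the distinction drawn in the text.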

After the feature information has been processed by the self-attention and cross-attention mechanisms, LightGlue performs a confidence judgment. If the confidence judgment layer determines that the number of feature points with high confidence does not reach the preset threshold, the network continues with the next iteration of the feature processing layer, repeating the self-attention and cross-attention steps described above. At the same time, a pruning operation removes the feature points with very low confidence. In this way, the confidence judgment layer improves the computational efficiency of LightGlue while preserving its accuracy.

Once the confidence judgment layer determines, in some iteration, that the number of high-confidence feature points meets the threshold, the network enters the feature matching layer. First, the matching score matrix is calculated from the features obtained in the preceding iterations. This matrix has N rows and M columns, where N is the number of feature points in image A and M is the number of feature points in image B; its entry in row i and column j is the similarity score between the i-th point of image A and the j-th point of image B.
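The confidence judgment and pruning logic can be illustrated with a small control loop. This is a sketch only: the thresholds `conf_threshold`, `exit_ratio`, and `prune_threshold`, the layer budget `max_layers`, and the callback `layer_fn` (which stands in for one pass of the feature processing layer plus the confidence predictor) are all hypothetical and are not taken from the text.

```python
def should_exit(confidences, conf_threshold=0.9, exit_ratio=0.95):
    """True when a large enough share of points is confident (assumed criterion)."""
    if not confidences:
        return True
    confident = sum(1 for c in confidences if c >= conf_threshold)
    return confident / len(confidences) >= exit_ratio

def prune(indices, confidences, prune_threshold=0.1):
    """Keep only the point indices whose confidence is not very low."""
    return [i for i, c in zip(indices, confidences) if c >= prune_threshold]

def run_layers(layer_fn, indices, max_layers=9):
    """Iterate layers with early exit; layer_fn returns one confidence per kept point."""
    for layer in range(1, max_layers + 1):
        confidences = layer_fn(layer, indices)
        if should_exit(confidences):
            return layer, indices
        indices = prune(indices, confidences)
    return max_layers, indices

# Made-up confidences per layer and point index, for illustration only.
table = {
    1: {0: 0.05, 1: 0.70, 2: 0.80},
    2: {1: 0.95, 2: 0.99},
}
exit_layer, kept = run_layers(lambda layer, idx: [table[layer][i] for i in idx], [0, 1, 2])
```

In this toy run, point 0 is pruned after the first layer and the loop exits at the second layer, so later layers are never evaluated.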

Finally, through the matching score matrix and the matchability scores, the LightGlue network will calculate the optimal matches and then output the matching relationships between the two images.
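One way to combine a score matrix with matchability scores is a soft assignment followed by a mutual-best selection. The sketch below assumes that scheme (row and column softmax of the scores, multiplied by the matchability of both points, then thresholded); the function names, the threshold `tau`, and the toy values are hypothetical, and the text does not confirm that this is the exact procedure used here.

```python
import math

def softmax(values):
    """Numerically stable softmax over a list of values."""
    peak = max(values)
    exps = [math.exp(v - peak) for v in values]
    total = sum(exps)
    return [e / total for e in exps]

def soft_assignment(S, sigma_a, sigma_b):
    """P[i][j] = sigma_a[i] * sigma_b[j] * row_softmax(S)[i][j] * col_softmax(S)[i][j]."""
    n, m = len(S), len(S[0])
    row_sm = [softmax(row) for row in S]                               # over j, fixed i
    col_sm = [softmax([S[i][j] for i in range(n)]) for j in range(m)]  # over i, fixed j
    return [[sigma_a[i] * sigma_b[j] * row_sm[i][j] * col_sm[j][i] for j in range(m)]
            for i in range(n)]

def select_matches(P, tau=0.1):
    """Keep (i, j) when P[i][j] is the maximum of its row and column and exceeds tau."""
    n, m = len(P), len(P[0])
    matches = []
    for i in range(n):
        j = max(range(m), key=lambda c: P[i][c])
        best_i = max(range(n), key=lambda r: P[r][j])
        if best_i == i and P[i][j] > tau:
            matches.append((i, j))
    return matches

# Toy example (assumed values): 2 points in image A, 3 points in image B.
S = [[5.0, 0.0, 0.0],
     [0.0, 4.0, 0.0]]
sigma_a = [0.9, 0.9]       # matchability of points in A
sigma_b = [0.9, 0.9, 0.1]  # third point of B is unlikely to have a match
matches = select_matches(soft_assignment(S, sigma_a, sigma_b))
```

Requiring a pair to be the best in both its row and its column prevents one point from being matched to several points in the other image.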

3.2. Agglomerative–LightGlue Feature Matching Model

Agglomerative Clustering is an unsupervised machine learning method [12]. It is a bottom-up hierarchical clustering algorithm that first treats each data point as a separate cluster. Then, using a chosen linkage criterion such as single linkage, complete linkage, or average linkage, it calculates the distances between clusters, finds the two closest clusters, and merges them into a new cluster. This calculate-and-merge step is repeated until a preset stopping condition is met, such as reaching a specified number of clusters or a threshold on the distance between clusters. The result is a hierarchical clustering.
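The bottom-up procedure can be sketched in a few lines of plain Python. This is an illustrative implementation with quadratic pair search and Euclidean point distances, both of which are assumptions; the names `agglomerative` and `cluster_distance` and the sample points are hypothetical.

```python
import math

def cluster_distance(a, b, points, linkage):
    """Distance between two clusters (lists of point indices) under a linkage rule."""
    dists = [math.dist(points[i], points[j]) for i in a for j in b]
    if linkage == "single":
        return min(dists)            # closest pair of members
    if linkage == "complete":
        return max(dists)            # farthest pair of members
    if linkage == "average":
        return sum(dists) / len(dists)
    raise ValueError(f"unknown linkage: {linkage}")

def agglomerative(points, n_clusters=1, linkage="average", max_distance=None):
    """Merge the two closest clusters until a stopping condition is met."""
    clusters = [[i] for i in range(len(points))]  # every point starts alone
    while len(clusters) > n_clusters:
        best = None
        for a in range(len(clusters)):
            for b in range(a + 1, len(clusters)):
                d = cluster_distance(clusters[a], clusters[b], points, linkage)
                if best is None or d < best[0]:
                    best = (d, a, b)
        d, a, b = best
        if max_distance is not None and d > max_distance:
            break                     # distance-threshold stopping condition
        clusters[a] = clusters[a] + clusters[b]
        del clusters[b]
    return clusters

points = [(0, 0), (0, 1), (10, 10), (10, 11), (50, 50)]
by_count = agglomerative(points, n_clusters=3)
by_distance = agglomerative(points, n_clusters=1, max_distance=5.0)
```

Both stopping conditions named in the text are available: `n_clusters` stops at a target cluster count, and `max_distance` stops when the closest remaining pair is too far apart.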

In this section, the idea of Agglomerative hierarchical clustering is considered to optimize the LightGlue algorithm. It mainly involves clustering the output feature points of SuperPoint, and then the LightGlue algorithm performs feature point matching within the same cluster. The specific method is as follows:

Before LightGlue matching, considering the relationship between the two photos, the Agglomerative hierarchical clustering algorithm is used to divide the clusters of the two images simultaneously, reducing the number of regions to be matched. The input part of the Agglomerative Clustering algorithm consists of two parts. The first part is the difference in image positions. The coordinates in the two images are processed for relative position normalization respectively and then input into the clustering algorithm.

The spatial relationship is quantified by the difference in image positions, which captures the relative geometry between camera viewpoints. First, image coordinates are normalized using the steel plant's layout. For images A and B, the absolute position differences are computed and then normalized to obtain the normalized difference $(\Delta x', \Delta y')$, from which the spatial proximity metric $d_{\text{spatial}}$ is defined.

If $d_{\text{spatial}} \le 0.05$, the images are clustered into one category, which ensures that only spatially adjacent images are grouped for matching.
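One way such a metric could be computed is shown below. The sketch assumes that each image has a position in plant-layout coordinates, that the differences are normalized by the layout width and height, and that the two normalized differences are combined with a Euclidean norm. These choices, and the names `spatial_distance`, `layout_width`, and `layout_height`, are assumptions for illustration; only the 0.05 threshold comes from the text.

```python
import math

def spatial_distance(pos_a, pos_b, layout_width, layout_height):
    """Normalized spatial distance between two image positions (assumed form)."""
    dx = abs(pos_a[0] - pos_b[0])   # absolute position difference in x
    dy = abs(pos_a[1] - pos_b[1])   # absolute position difference in y
    dx_n = dx / layout_width        # normalized difference, x
    dy_n = dy / layout_height       # normalized difference, y
    return math.hypot(dx_n, dy_n)   # assumption: Euclidean combination

def spatially_adjacent(pos_a, pos_b, layout_width, layout_height, threshold=0.05):
    """Apply the d_spatial <= 0.05 grouping rule."""
    return spatial_distance(pos_a, pos_b, layout_width, layout_height) <= threshold

# Hypothetical positions in a 1000 x 1000 layout.
d_near = spatial_distance((100, 200), (103, 204), 1000, 1000)
near = spatially_adjacent((100, 200), (103, 204), 1000, 1000)
far = spatially_adjacent((0, 0), (500, 0), 1000, 1000)
```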

The second part is the pixel difference, and the values of the three RGB elements of the images are input.

The pixel-wise relationship is measured by the pixel difference, which evaluates RGB intensity consistency between images. For each image, the mean $(\mu_R, \mu_G, \mu_B)$ and standard deviation $(\sigma_R, \sigma_G, \sigma_B)$ of the pixel values are computed. The photometric distance $d_{\text{photo}}$ is derived from the normalized pixel differences.

Images with $d_{\text{photo}} < 0.3$ are clustered, as their pixel distributions (e.g., mean intensities and contrast) are sufficiently similar to enable reliable feature matching.
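A possible computation of such a photometric distance is sketched below. The exact formula is an assumption: here the per-channel means and standard deviations are divided by 255 and the two six-element statistic vectors are compared with a Euclidean distance. The function names and the toy pixel lists are hypothetical; only the statistics used and the 0.3 threshold come from the text.

```python
import math
from statistics import fmean, pstdev

def channel_stats(pixels):
    """Return [mu_R, mu_G, mu_B, sigma_R, sigma_G, sigma_B], each divided by 255."""
    channels = list(zip(*pixels))                 # three tuples: R, G, B values
    means = [fmean(c) / 255.0 for c in channels]
    stds = [pstdev(c) / 255.0 for c in channels]
    return means + stds

def photometric_distance(pixels_a, pixels_b):
    """Assumed form: Euclidean distance between the normalized statistic vectors."""
    return math.dist(channel_stats(pixels_a), channel_stats(pixels_b))

# Toy images given as flat lists of (R, G, B) tuples.
img_a = [(100, 100, 100), (110, 110, 110)]
img_b = [(102, 102, 102), (112, 112, 112)]   # slightly brighter copy of img_a
dark = [(0, 0, 0)] * 4
bright = [(255, 255, 255)] * 4

d_similar = photometric_distance(img_a, img_b)
d_different = photometric_distance(dark, bright)
same_cluster = d_similar < 0.3
```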

After integrating the above two inputs, the feature points extracted by SuperPoint are clustered, and the LightGlue matching is carried out for the feature points belonging to the same cluster in the two images. In this way, the number of matches that each feature point needs to calculate is reduced, the matching time consumption is decreased, and the matching efficiency is improved.

To reduce computational complexity, the matching process is constrained within clusters through the following steps. For the hierarchical clustering workflow, the combined feature vector $(d_{\text{spatial}}, d_{\text{photo}})$ for each image pair is fed into an Agglomerative Clustering algorithm with complete linkage (which takes the distance between two clusters to be the largest distance between any pair of their members) to form clusters. The algorithm iteratively merges image pairs until the number of clusters K satisfies $K \le \sqrt{N}$ (where N here is the total number of images), empirically reducing the search space by 60–70%. Within each cluster, SuperPoint keypoints undergo spatial consistency filtering: keypoints with reprojection errors > 3 pixels (estimated via prior camera calibration) are discarded. LightGlue matching is then applied only to keypoint pairs within the same cluster, reducing the number of candidate matches from $O(N^2)$ to $O(K \cdot M^2)$, where M is the average number of images per cluster.
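The effect of restricting matching to clusters can be checked with simple counting. The sketch below compares the unconstrained count N² with the within-cluster count, which equals K · M² when all clusters have the same size M. The helper names and the example sizes are hypothetical, and the numbers are illustrative rather than a reproduction of the reported reduction.

```python
import math

def max_clusters(n_images):
    """Largest integer K that satisfies K <= sqrt(N)."""
    return math.isqrt(n_images)

def candidate_counts(cluster_sizes):
    """Return (unconstrained, within_cluster) candidate counts."""
    total = sum(cluster_sizes)
    unconstrained = total ** 2                           # N^2
    within_cluster = sum(s ** 2 for s in cluster_sizes)  # K * M^2 for equal sizes
    return unconstrained, within_cluster

# Example: N = 16 items split evenly into K = 4 clusters of M = 4.
k = max_clusters(16)
unconstrained, within_cluster = candidate_counts([4] * k)
```

Uneven cluster sizes raise the within-cluster count, since the sum of squares is smallest when the sizes are equal.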

RANSAC (Random Sample Consensus) is an iterative algorithm for robustly estimating the parameters of a mathematical model from data containing outliers, and it is widely used in fields such as computer vision and robotics. Its core idea is as follows: randomly select a minimal sample subset (for example, two points to fit a straight line) and fit the model to it; calculate the errors between all data points and the model to separate inliers (valid data conforming to the model) from outliers (abnormal data); and repeat over many iterations, retaining the model with the most inliers as the optimal solution.
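The line-fitting example mentioned above can be written out as a short sketch. It illustrates the RANSAC loop itself and is not the homography estimation used for image matching; the function names, the inlier threshold, the iteration count, and the sample data are assumptions chosen for illustration.

```python
import random

def fit_line(p, q):
    """Line a*x + b*y + c = 0 through two points, with (a, b) of unit length."""
    (x1, y1), (x2, y2) = p, q
    a, b = y2 - y1, x1 - x2
    norm = (a * a + b * b) ** 0.5
    if norm == 0:
        return None                       # degenerate sample: identical points
    c = -(a * x1 + b * y1)
    return a / norm, b / norm, c / norm

def ransac_line(points, threshold=0.5, iterations=200, seed=0):
    """Return (model, inliers) for the line supported by the most points."""
    rng = random.Random(seed)
    best_model, best_inliers = None, []
    for _ in range(iterations):
        model = fit_line(*rng.sample(points, 2))   # minimal sample: two points
        if model is None:
            continue
        a, b, c = model
        # Point-to-line distance decides inlier vs. outlier.
        inliers = [pt for pt in points if abs(a * pt[0] + b * pt[1] + c) <= threshold]
        if len(inliers) > len(best_inliers):
            best_model, best_inliers = model, inliers
    return best_model, best_inliers

line_points = [(x, 2 * x + 1) for x in range(10)]   # points on y = 2x + 1
outliers = [(2, 30), (7, -20), (5, 40)]
model, inliers = ransac_line(line_points + outliers)
```

For image matching, the same loop is applied with a geometric model between the two images in place of the line, and matched point pairs that disagree with the best model are discarded as mismatches.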

After the image feature matching is completed by the above image matching algorithm based on Agglomerative + LightGlue, the RANSAC algorithm is integrated in this section to remove the mismatched points, further improving the accuracy of image stitching.

3.3. Comparison and Analysis of Experimental Results

In this section of the experiment, two evaluation indicators are selected: the matching accuracy rate and the matching time. The specific definitions of the indicators are as follows:

Matching Accuracy Rate (Accuracy): The matching accuracy rate is the proportion of correctly matched point pairs among all matched point pairs. It reflects the accuracy of the feature matching algorithm: the larger the value, the better the algorithm performs [13].

Matching Time (Time): The matching time is the time the feature matching algorithm requires to complete matching for each group of images to be stitched. It reflects the efficiency of the feature matching algorithm: the smaller the value, the better the algorithm performs [14].
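Both indicators are straightforward to compute once the correct matches have been identified. The sketch below assumes that the count of correct matches is already available (how correctness is decided is not specified here) and uses a wall-clock timer; the helper names and the zero-match convention are hypothetical.

```python
import time

def matching_accuracy(n_correct, n_matched):
    """Correctly matched pairs divided by all matched pairs."""
    if n_matched == 0:
        return 0.0                    # assumed convention when nothing is matched
    return n_correct / n_matched

def timed(match_fn, *args):
    """Run a matching function and return (result, elapsed_seconds)."""
    start = time.perf_counter()
    result = match_fn(*args)
    return result, time.perf_counter() - start

accuracy = matching_accuracy(45, 50)
# `sorted` stands in for a real matcher purely to demonstrate the timing wrapper.
result, elapsed = timed(sorted, [3, 1, 2])
```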

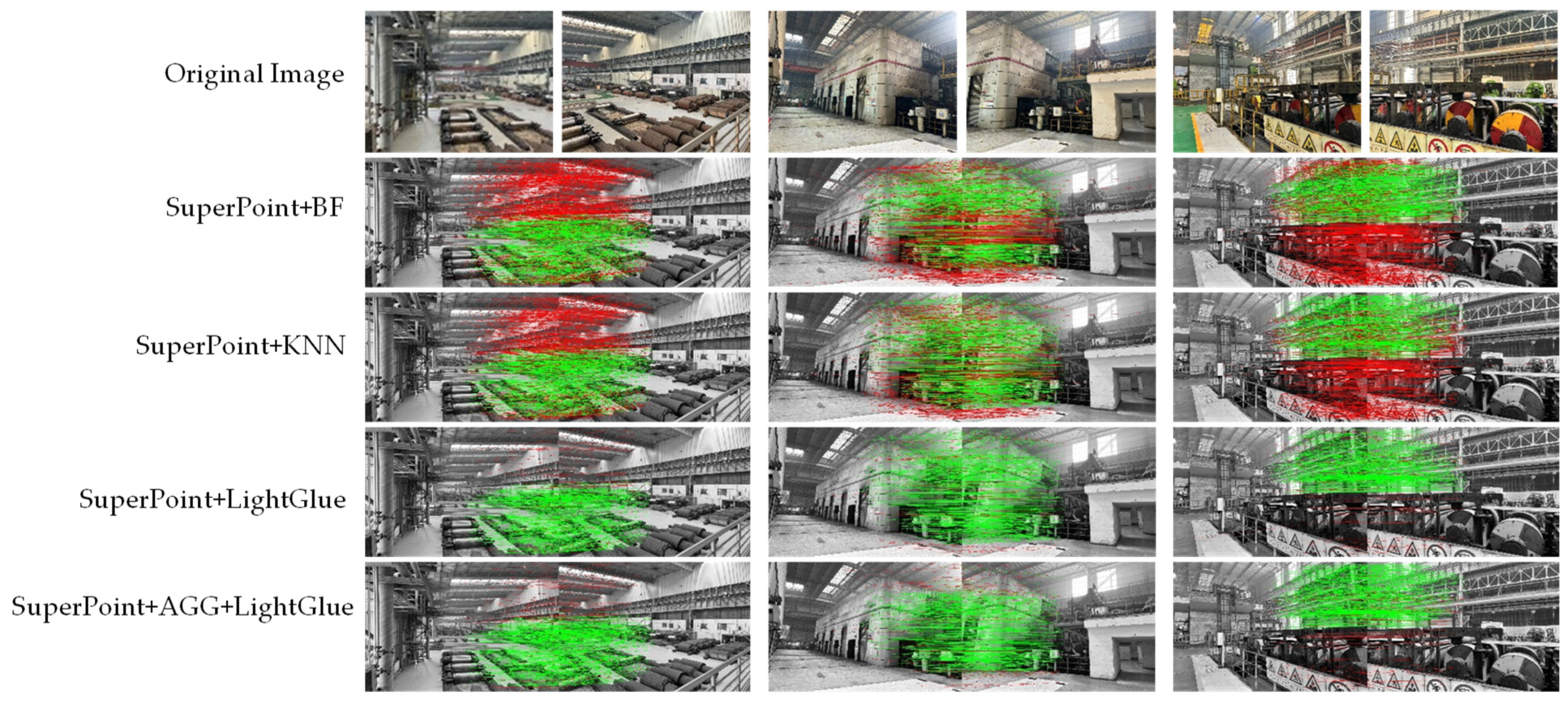

Building on the SuperPoint feature extraction completed earlier, four groups of control experiments are set up to verify the effectiveness of the algorithm in this section: SuperPoint + AGG + LightGlue (the algorithm of this section), SuperPoint + LightGlue, SuperPoint + KNN, and SuperPoint + BF. Comparison with SuperPoint + LightGlue verifies the performance gain from the improvement proposed in this section, while comparison with SuperPoint + KNN and SuperPoint + BF verifies that the LightGlue algorithm is superior to the traditional algorithms. The experimental results are shown in Figure 4.

In Figure 4, BF (Brute Force) refers to the brute-force matching algorithm and KNN refers to the k-nearest neighbors algorithm; both are typical and commonly used image matching algorithms. The green matching lines represent the correctly matched feature points, and the red connection lines represent the incorrectly matched feature points. It can be clearly seen from Figure 4 that the correct matching rate of the LightGlue algorithm is significantly better than that of the other methods.

Combined with the evaluation indicators above, the specific algorithm evaluation results are given in Table 3.

As shown in Table 3, compared with traditional methods, the LightGlue algorithm greatly improves the matching accuracy rate. However, it is somewhat behind traditional methods in terms of matching speed. The likely cause is that many feature point detection layers were set up during training in order to place greater emphasis on accuracy. With the improvement proposed in this section, the matching time is reduced by 26.2% while the matching accuracy rate is maintained. In summary, the algorithm presented in this section performs better than the other algorithms in terms of both matching time and matching accuracy rate. It has relatively high real-time performance and accuracy in stitching, providing excellent preparatory conditions for subsequent video image fusion.