Abnormal Energy Consumption Diagnosis Method of Oilfields Based on Multi-Model Ensemble Learning

Abstract

1. Introduction

2. Dataset Preparation

2.1. Raw Data

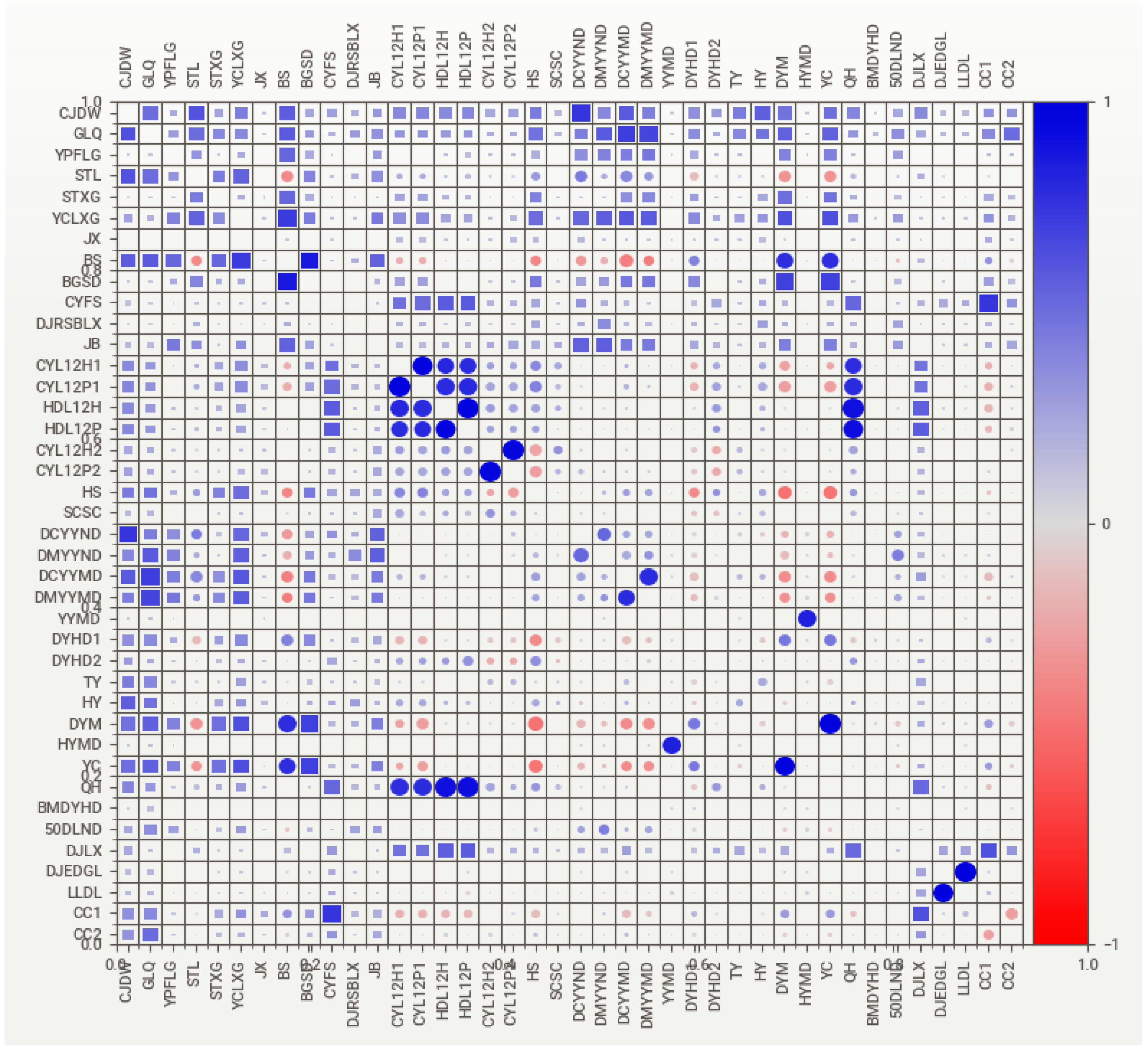

2.2. Correlation Analysis

2.3. Data Preprocessing

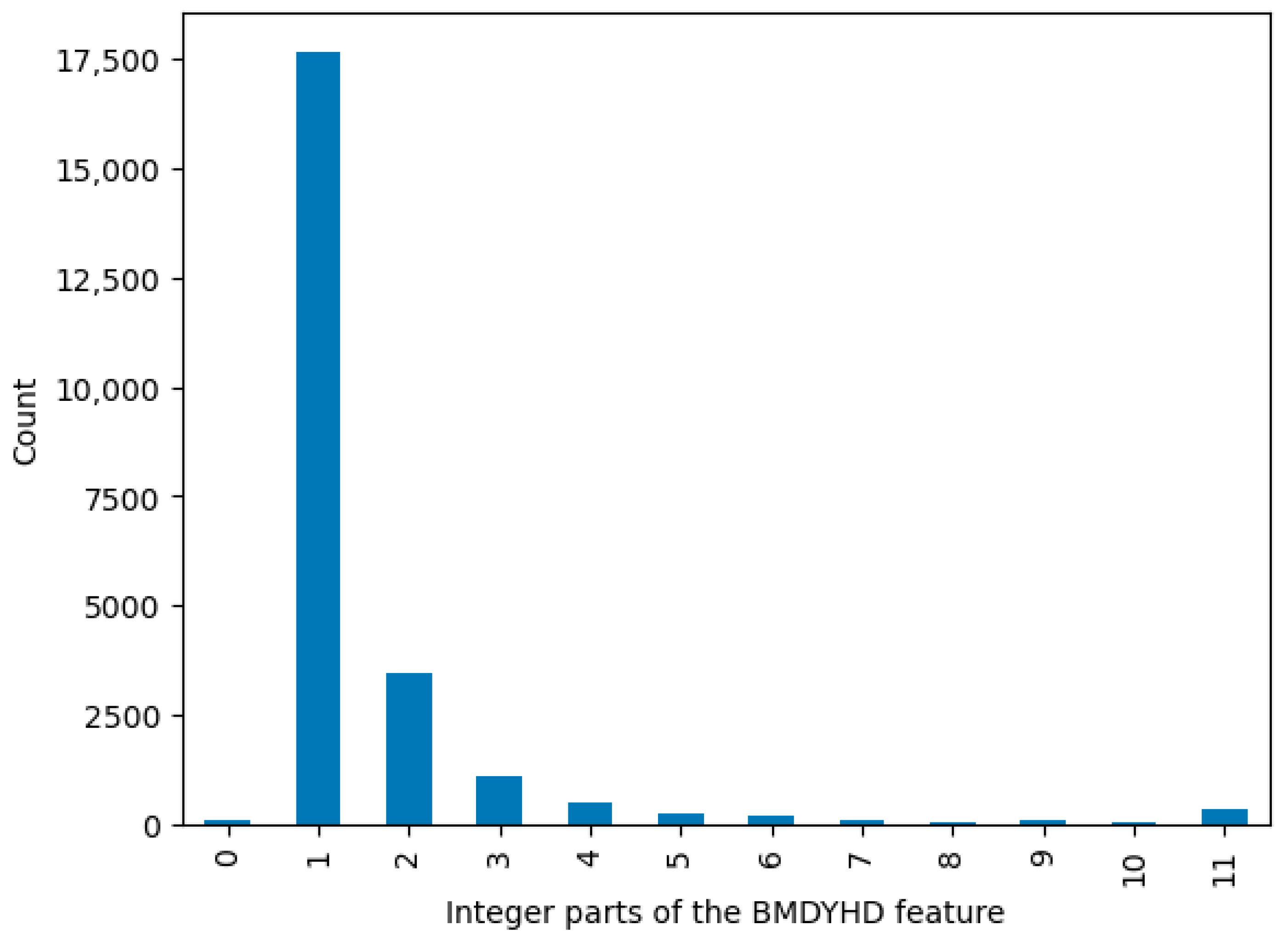

2.4. Target Variable Processing

2.5. Dataset

3. Methods

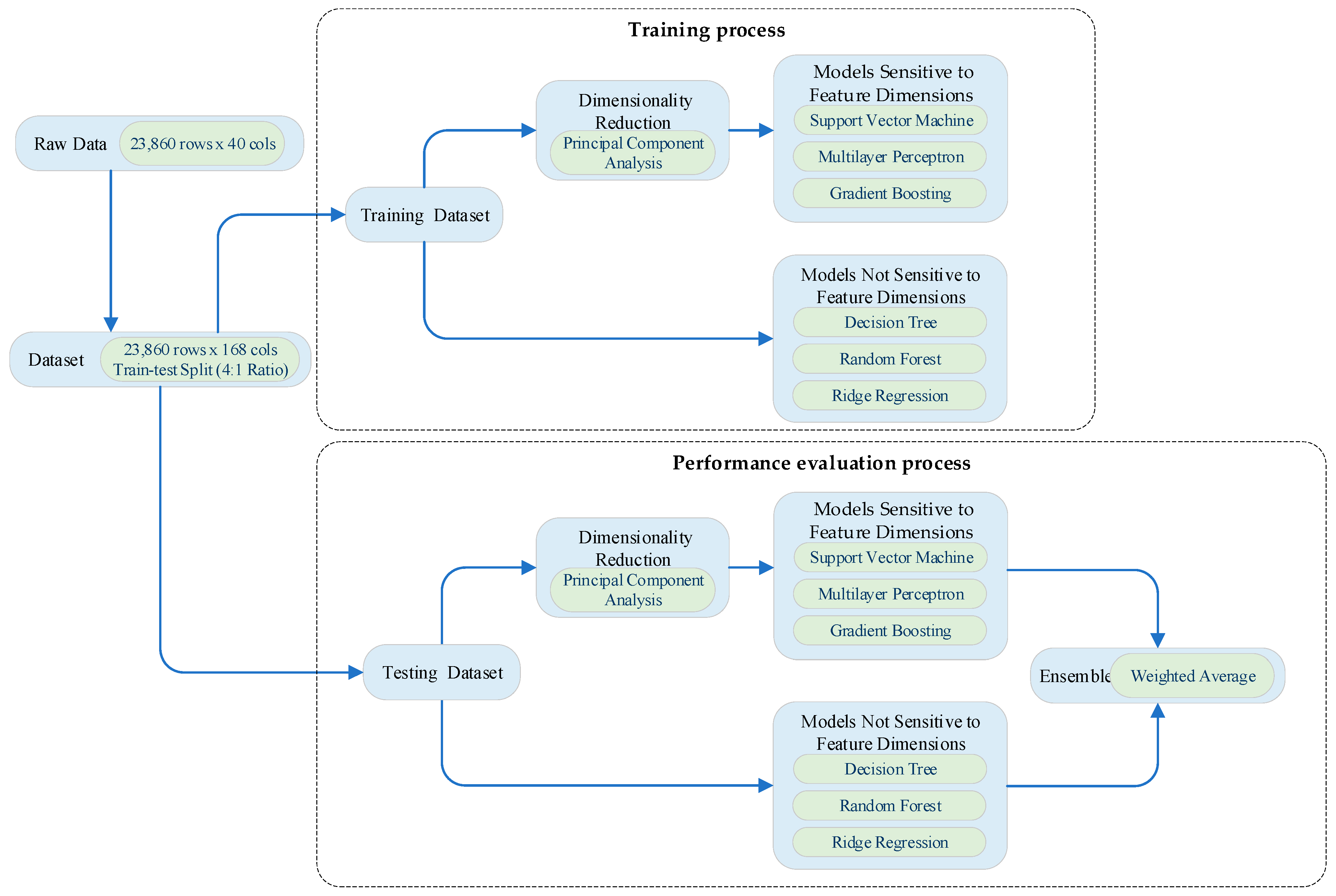

3.1. Overall Logic

3.2. Models Sensitive to Feature Dimensions

3.3. Models Not Sensitive to Feature Dimensions

3.4. Ensemble of Base Model Outputs

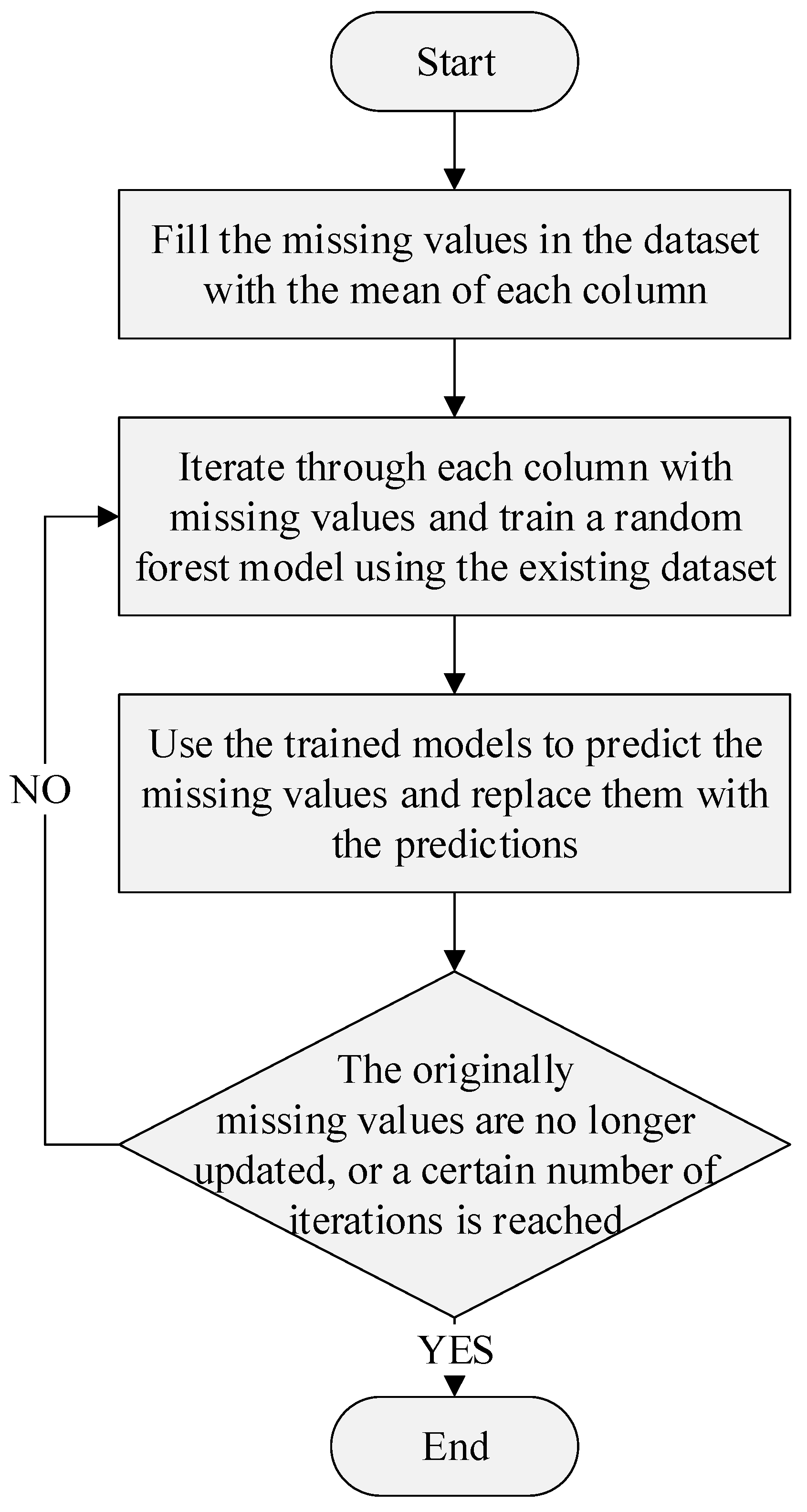

3.5. Lightweight Data Iterative Cleaning Model

1. Missing Value Imputation

| Algorithm 1: Missing Value Imputation |
|---|
| Take the original dataset and identify the set of columns that contain missing values. Initialize a working copy of the dataset in which every missing value is filled with its column-wise mean. For each column with missing values: train a random forest model on the working copy (with that column as the target), then update the column's missing values in the working copy using the model's predictions. Repeat until convergence or until a predefined iteration limit is reached. |
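The loop in Algorithm 1 can be sketched in a few lines of Python. This is a minimal sketch under stated assumptions, not the authors' implementation: to stay dependency-free it swaps the random forest for a small k-nearest-neighbour averaging regressor, and the names `knn_predict`, `drop_column`, `iterative_impute`, `max_iter`, `tol`, and `k` are hypothetical. Rows are lists of floats, with `None` marking a missing value, and every column is assumed to have at least one observed value.

```python
from math import dist


def knn_predict(train_x, train_y, query, k=2):
    """Stand-in regressor (NOT a random forest): mean target of the k nearest rows."""
    ranked = sorted(zip(train_x, train_y), key=lambda pair: dist(pair[0], query))
    nearest = ranked[:k]
    return sum(y for _, y in nearest) / len(nearest)


def drop_column(row, col):
    """Return the row without the entry at index `col` (the feature vector)."""
    return [v for j, v in enumerate(row) if j != col]


def iterative_impute(rows, max_iter=10, tol=1e-6, k=2):
    """Mean-fill missing entries, then refine them column by column."""
    n_rows, n_cols = len(rows), len(rows[0])
    missing = [[v is None for v in row] for row in rows]
    filled = [list(row) for row in rows]

    # Initialization: fill each missing entry with its column mean.
    for c in range(n_cols):
        observed = [row[c] for row in rows if row[c] is not None]
        col_mean = sum(observed) / len(observed)
        for r in range(n_rows):
            if missing[r][c]:
                filled[r][c] = col_mean

    target_cols = [c for c in range(n_cols) if any(m[c] for m in missing)]
    for _ in range(max_iter):
        max_change = 0.0
        for c in target_cols:
            # Train only on rows where column c was originally observed.
            observed_rows = [r for r in range(n_rows) if not missing[r][c]]
            train_x = [drop_column(filled[r], c) for r in observed_rows]
            train_y = [filled[r][c] for r in observed_rows]
            for r in range(n_rows):
                if missing[r][c]:
                    new_value = knn_predict(train_x, train_y, drop_column(filled[r], c), k)
                    max_change = max(max_change, abs(new_value - filled[r][c]))
                    filled[r][c] = new_value
        if max_change < tol:  # converged: no imputed value moved noticeably
            break
    return filled


data = [[1.0, 10.0], [2.0, 20.0], [3.0, None], [10.0, 100.0]]
imputed = iterative_impute(data)
print(imputed[2])  # the None is replaced by a model-based estimate
```

In practice the stand-in regressor would be replaced by a random forest (for example scikit-learn's `RandomForestRegressor`, or `RandomForestClassifier` for categorical columns), with the surrounding loop unchanged.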

2. Anomaly Detection and Correction

4. Experiments and Result Analysis

4.1. Experimental Environment

4.2. Anomaly Diagnosis Evaluation Metrics

4.3. Data Cleaning Evaluation Metrics

- R² = 1: Indicates a perfect fit, where predictions exactly match the observed values.

- R² = 0: Indicates that the model performs no better than a naive baseline that always predicts the mean value.

- R² < 0: Indicates that predictions are worse than always predicting the mean value, often a sign of overfitting, underfitting, or a mismatch between the model and the data-generating process.
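The three cases above can be reproduced with a short Python sketch. It assumes the standard definition R² = 1 − SS_res / SS_tot (residual sum of squares over total sum of squares); the function name `r2_score` and the toy values are illustrative only.

```python
def r2_score(y_true, y_pred):
    """Coefficient of determination: 1 - SS_res / SS_tot."""
    mean_y = sum(y_true) / len(y_true)
    ss_res = sum((t - p) ** 2 for t, p in zip(y_true, y_pred))  # residual sum of squares
    ss_tot = sum((t - mean_y) ** 2 for t in y_true)             # total sum of squares
    return 1.0 - ss_res / ss_tot


y = [1.0, 2.0, 3.0, 4.0]

perfect = r2_score(y, [1.0, 2.0, 3.0, 4.0])        # predictions match exactly
mean_only = r2_score(y, [2.5, 2.5, 2.5, 2.5])      # always predict the mean
reversed_fit = r2_score(y, [4.0, 3.0, 2.0, 1.0])   # worse than the mean baseline

print(perfect, mean_only, reversed_fit)  # 1.0 0.0 -3.0
```

The third case shows why R² has no lower bound: the residual sum of squares can exceed the total sum of squares by any factor.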

4.4. Anomaly Diagnosis Experimental Result Analysis

4.5. Data Cleaning Experimental Result Analysis

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest


| No. | Feature | Abbr. | Type |
|---|---|---|---|
| 1 | Factory-level Unit | CJDW | CATEGORICAL |
| 2 | Management Area | GLQ | CATEGORICAL |
| 3 | Oil Classification | YPFLG | CATEGORICAL |
| 4 | Permeability | STL | NUMERICAL |
| 5 | Permeability Type | STXG | CATEGORICAL |
| 6 | Reservoir Type | YCLXG | CATEGORICAL |
| 7 | Well Type | JX | CATEGORICAL |
| 8 | Pump Depth | BS | NUMERICAL |
| 9 | Pump Hanging Depth | BGSD | CATEGORICAL |
| 10 | Oil Extraction Method | CYFS | CATEGORICAL |
| 11 | Electric Heating Equipment Type | DJRSBLX | CATEGORICAL |
| 12 | Well Category | JB | CATEGORICAL |
| 13 | Production Liquid Volume (12-day Sum) | CYL12H1 | NUMERICAL |
| 14 | Production Liquid Volume (12-day Average) | CYL12P1 | NUMERICAL |
| 15 | Power Consumption (12-day Sum) | HDL12H | NUMERICAL |
| 16 | Power Consumption (12-day Average) | HDL12P | NUMERICAL |
| 17 | Oil Production Volume (12-day Sum) | CYL12H2 | NUMERICAL |
| 18 | Oil Production Volume (12-day Average) | CYL12P2 | NUMERICAL |
| 19 | Water Cut | HS | NUMERICAL |
| 20 | Production Duration (Days) | SCSC | NUMERICAL |
| 21 | Formation Oil Viscosity | DCYYND | NUMERICAL |
| 22 | Surface Oil Viscosity | DMYYND | NUMERICAL |
| 23 | Formation Oil Density | DCYYMD | NUMERICAL |
| 24 | Surface Oil Density | DMYYMD | NUMERICAL |
| 25 | Crude Oil Density | YYMD | NUMERICAL |
| 26 | Electricity Consumption per Ton of Liquid | DYHD1 | NUMERICAL |
| 27 | Electricity Consumption per Ton of Oil | DYHD2 | NUMERICAL |
| 28 | Tubing Pressure | TY | NUMERICAL |
| 29 | Back Pressure | HY | NUMERICAL |
| 30 | Dynamic Liquid Level | DYM | NUMERICAL |
| 31 | Mixture Density | HYMD | NUMERICAL |
| 32 | Lift Height | YC | NUMERICAL |
| 33 | QH | QH | NUMERICAL |
| 34 | Electricity Consumption per 100 Meters of Liquid | BMDYHD | NUMERICAL |
| 35 | Dynamic Viscosity at 50 °C | 50DLND | NUMERICAL |
| 36 | Motor Type | DJLX | CATEGORICAL |
| 37 | Motor Rated Power | DJEDGL | NUMERICAL |
| 38 | Theoretical Electric Quantity | LLDL | NUMERICAL |
| 39 | Stroke | CC1 | NUMERICAL |
| 40 | Cycle | CC2 | NUMERICAL |

| No. | Feature | Abbr. | Type | Encoding |
|---|---|---|---|---|
| 1 | Factory-level Unit | CJDW | CATEGORICAL | One-Hot Encoding |
| 2 | Management Area | GLQ | CATEGORICAL | One-Hot Encoding |
| 3 | Oil Classification | YPFLG | CATEGORICAL | One-Hot Encoding |
| 5 | Permeability Type | STXG | CATEGORICAL | Label Encoding |
| 6 | Reservoir Type | YCLXG | CATEGORICAL | One-Hot Encoding |
| 7 | Well Type | JX | CATEGORICAL | One-Hot Encoding |
| 9 | Pump Hanging Depth | BGSD | CATEGORICAL | Label Encoding |
| 10 | Oil Extraction Method | CYFS | CATEGORICAL | One-Hot Encoding |
| 11 | Electric Heating Equipment Type | DJRSBLX | CATEGORICAL | One-Hot Encoding |
| 12 | Well Category | JB | CATEGORICAL | One-Hot Encoding |
| 36 | Motor Type | DJLX | CATEGORICAL | One-Hot Encoding |
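The two encodings named in the table above differ in what they preserve: label encoding maps each category to one integer and so imposes an order, while one-hot encoding gives each category its own 0/1 column and imposes none. The sketch below illustrates both in plain Python. The category values ("low", "medium", "high", "vertical", "horizontal") and the function names are hypothetical, and the idea that the label-encoded features (STXG, BGSD) have ordered levels is an assumption, not something stated in the table.

```python
def label_encode(values, ordered_levels):
    """Map each category to its position in an explicit, ordered list of levels."""
    index = {level: i for i, level in enumerate(ordered_levels)}
    return [index[v] for v in values]


def one_hot_encode(values):
    """Return the sorted category list and one 0/1 indicator row per value."""
    categories = sorted(set(values))
    rows = [[1 if v == c else 0 for c in categories] for v in values]
    return categories, rows


# Hypothetical ordered feature (for example a permeability grade).
grades = ["low", "high", "medium"]
print(label_encode(grades, ["low", "medium", "high"]))  # [0, 2, 1]

# Hypothetical unordered feature (for example a well type).
well_types = ["vertical", "horizontal", "vertical"]
categories, encoded = one_hot_encode(well_types)
print(categories)  # ['horizontal', 'vertical']
print(encoded)     # [[0, 1], [1, 0], [0, 1]]
```

One-hot encoding adds one column per category, which is one way the feature dimension grows before any dimensionality reduction is applied.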

| Model | PCA | MSE | A | P | R | F1 | TT | PT |
|---|---|---|---|---|---|---|---|---|
| SVM | Y | 1.93 | 0.65 | 0.67 | 0.65 | 0.66 | 8.58 | 2.860 |
| Gradient Boosting | Y | 1.82 | 0.58 | 0.67 | 0.58 | 0.61 | 69.31 | 0.015 |
| MLP | Y | 1.38 | 0.57 | 0.73 | 0.57 | 0.63 | 7.96 | 0.011 |
| KNeighbors | Y | 1.98 | 0.74 | 0.68 | 0.74 | 0.70 | 0.14 | 0.116 |
| Gaussian Process | Y | 3.18 | 0.42 | 0.68 | 0.42 | 0.52 | 75.87 | 18.39 |
| AdaBoost | Y | 3.64 | 0.06 | 0.02 | 0.06 | 0.03 | 7.97 | 0.026 |
| Random Forest | N | 0.11 | 0.93 | 0.93 | 0.93 | 0.93 | 28.14 | 0.033 |
| Decision Tree | N | 0.37 | 0.86 | 0.86 | 0.86 | 0.86 | 0.34 | 0.005 |
| Ridge | N | 1.58 | 0.52 | 0.73 | 0.52 | 0.58 | 0.02 | 0.004 |
| Linear Regression | N | 1.58 | 0.52 | 0.73 | 0.52 | 0.58 | 0.08 | 0.004 |
| Lasso | N | 1.81 | 0.50 | 0.71 | 0.50 | 0.55 | 0.30 | 0.004 |
| ElasticNet | N | 1.76 | 0.50 | 0.72 | 0.50 | 0.56 | 0.34 | 0.004 |
| Ensemble Model | - | 0.04 | 0.96 | 0.96 | 0.96 | 0.96 | - | - |
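In the model comparison table above, the R column equals the A column for every model. That pattern is what support-weighted averaging of per-class recall produces, because weighted recall reduces to overall accuracy. Assuming (the table itself does not say so) that A, P, R, and F1 denote accuracy, precision, recall, and F1 score with support-weighted averaging, the computation can be sketched as follows. The function name `weighted_metrics` and the toy labels are hypothetical.

```python
from collections import Counter


def weighted_metrics(y_true, y_pred):
    """Accuracy plus support-weighted precision, recall, and F1 over all classes."""
    n = len(y_true)
    support = Counter(y_true)  # number of true samples per class
    accuracy = sum(t == p for t, p in zip(y_true, y_pred)) / n
    precision = recall = f1 = 0.0
    for label, count in support.items():
        tp = sum(t == label and p == label for t, p in zip(y_true, y_pred))
        predicted = sum(p == label for p in y_pred)
        prec_l = tp / predicted if predicted else 0.0  # 0 if class never predicted
        rec_l = tp / count
        f1_l = 2 * prec_l * rec_l / (prec_l + rec_l) if prec_l + rec_l else 0.0
        weight = count / n  # class share of the true labels
        precision += weight * prec_l
        recall += weight * rec_l
        f1 += weight * f1_l
    return accuracy, precision, recall, f1


y_true = [0, 0, 1, 1, 2, 2]
y_pred = [0, 1, 1, 1, 2, 0]
acc, prec, rec, f1 = weighted_metrics(y_true, y_pred)
print(round(acc, 4), round(rec, 4))  # the two values coincide
```

Weighted precision and F1 carry no such identity, which is consistent with the P and F1 columns differing from A in most rows.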

| No. | Feature Abbr. | Algorithm | Performance Metric | Evaluation Value |
|---|---|---|---|---|
| 1 | CJDW | Classifier | Accuracy | 0.99 |
| 2 | GLQ | Classifier | Accuracy | 0.94 |
| 3 | YPFLG | Classifier | Accuracy | 0.98 |
| 4 | STXG | Classifier | Accuracy | 1 |
| 5 | YCLXG | Classifier | Accuracy | 0.97 |
| 6 | JX | Classifier | Accuracy | 0.74 |
| 7 | BGSD | Classifier | Accuracy | 1 |
| 8 | CYFS | Classifier | Accuracy | 0.95 |
| 9 | DJRSBLX | Classifier | Accuracy | 0.92 |
| 10 | JB | Classifier | Accuracy | 0.92 |
| 11 | DJLX | Classifier | Accuracy | 0.77 |
| 12 | STL | Regressor | R² Score | 0.95 |
| 13 | BS | Regressor | R² Score | 0.94 |
| 14 | CYL12H1 | Regressor | R² Score | 0.99 |
| 15 | CYL12P1 | Regressor | R² Score | 0.99 |
| 16 | HDL12H | Regressor | R² Score | 1 |
| 17 | HDL12P | Regressor | R² Score | 0.99 |
| 18 | CYL12H2 | Regressor | R² Score | 1 |
| 19 | CYL12P2 | Regressor | R² Score | 0.99 |
| 20 | HS | Regressor | R² Score | 0.98 |
| 21 | SCSC | Regressor | R² Score | 0.94 |
| 22 | DCYYND | Regressor | R² Score | 1 |
| 23 | DMYYND | Regressor | R² Score | 0.98 |
| 24 | DCYYMD | Regressor | R² Score | 0.99 |
| 25 | DMYYMD | Regressor | R² Score | 1 |
| 26 | YYMD | Regressor | R² Score | 0.1 |
| 27 | DYHD1 | Regressor | R² Score | 0.99 |
| 28 | DYHD2 | Regressor | R² Score | 0.94 |
| 29 | TY | Regressor | R² Score | 0.73 |
| 30 | HY | Regressor | R² Score | 0.57 |
| 31 | DYM | Regressor | R² Score | 1 |
| 32 | HYMD | Regressor | R² Score | 0.9 |
| 33 | YC | Regressor | R² Score | 1 |
| 34 | QH | Regressor | R² Score | 0.98 |
| 35 | 50DLND | Regressor | R² Score | 0.12 |
| 36 | DJEDGL | Regressor | R² Score | 0.97 |
| 37 | LLDL | Regressor | R² Score | 0.98 |
| 38 | CC1 | Regressor | R² Score | 0.81 |
| 39 | CC2 | Regressor | R² Score | 0.79 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Li, W.; Wang, X.; Sheng, Q.; Liu, S.; Wan, G.; Li, Y.; Dong, X. Abnormal Energy Consumption Diagnosis Method of Oilfields Based on Multi-Model Ensemble Learning. Processes 2025, 13, 1501. https://doi.org/10.3390/pr13051501