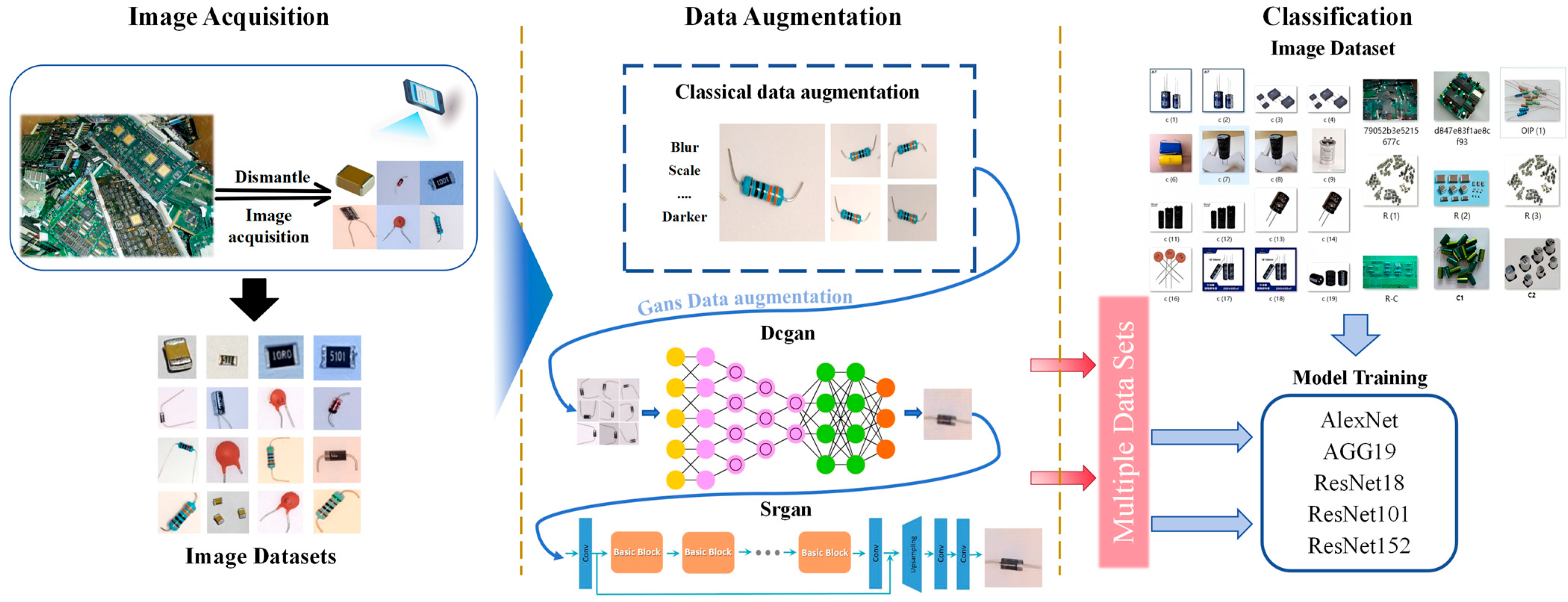

Research on Image Data Augmentation and Accurate Classification of Waste Electronic Components Utilizing Deep Learning Techniques

Abstract

1. Introduction

- Creation of a waste electronic component image dataset comprising five major categories and nineteen subcategories.

- Proposal of a deep learning-based image data augmentation method for electronic components.

- The electronic component images generated by this method are highly similar to real electronic component images.

- The generated electronic component images significantly improve the classification accuracy of deep learning models.

2. Methodology

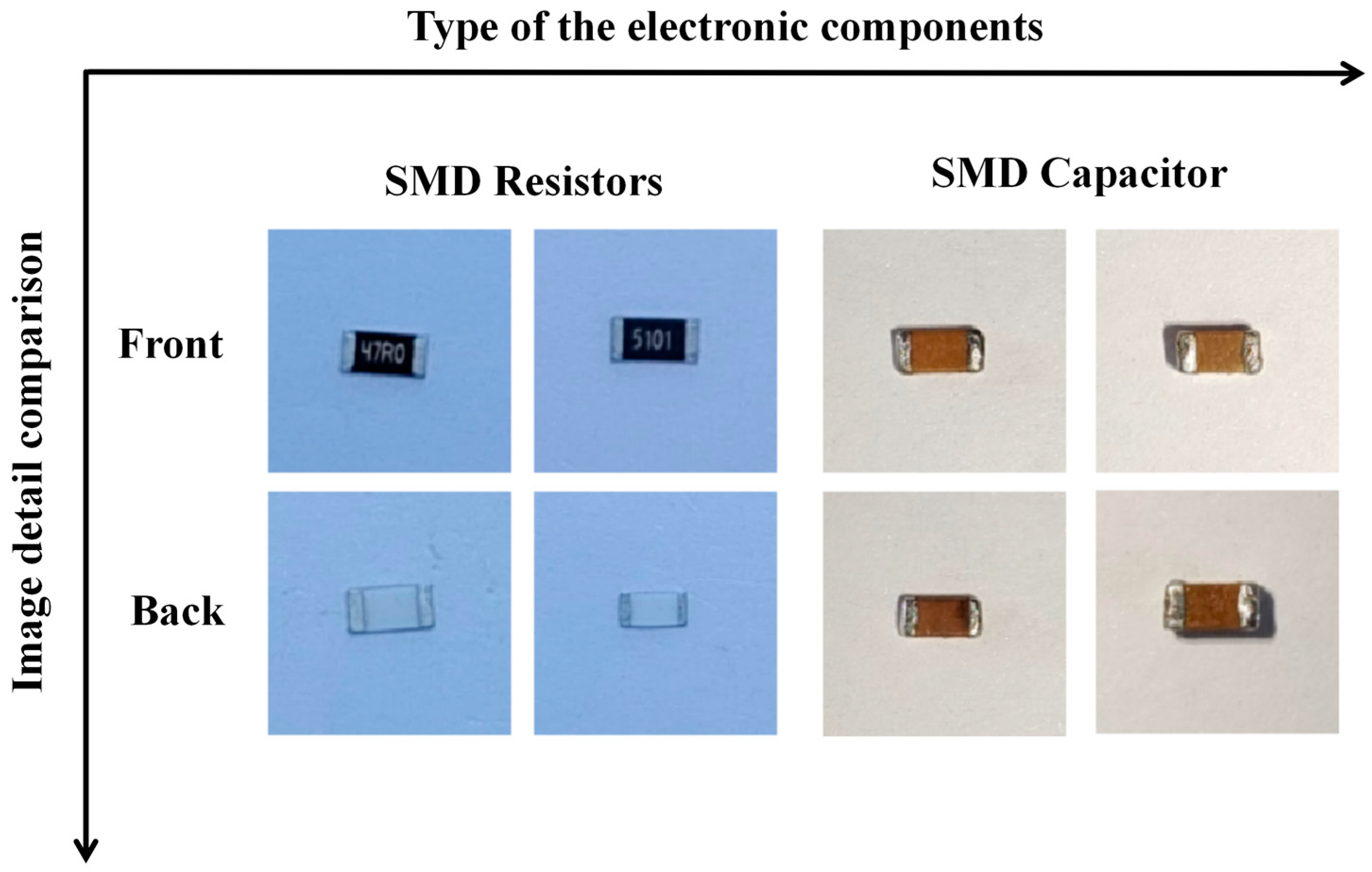

2.1. Image Dataset Construction

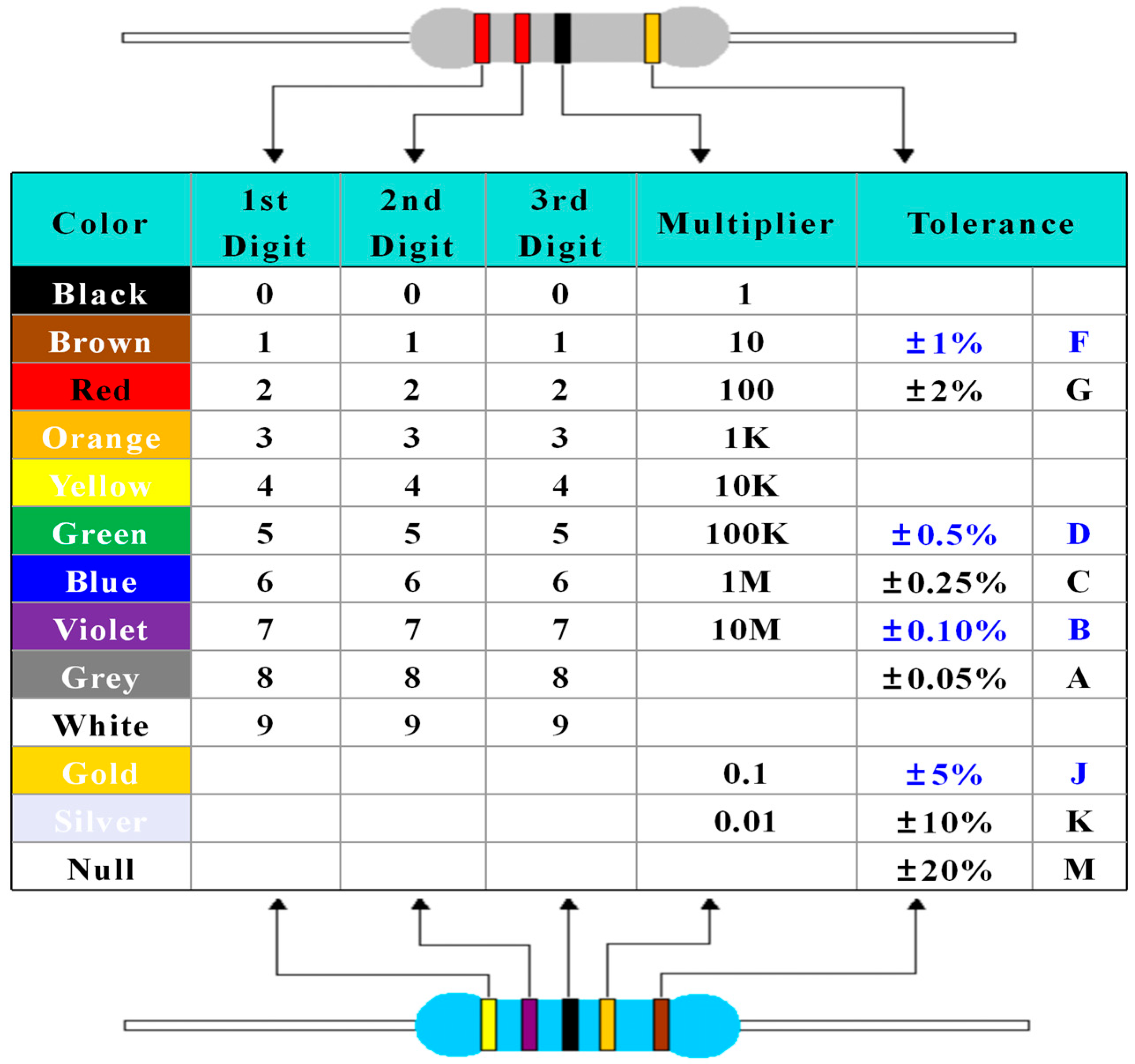

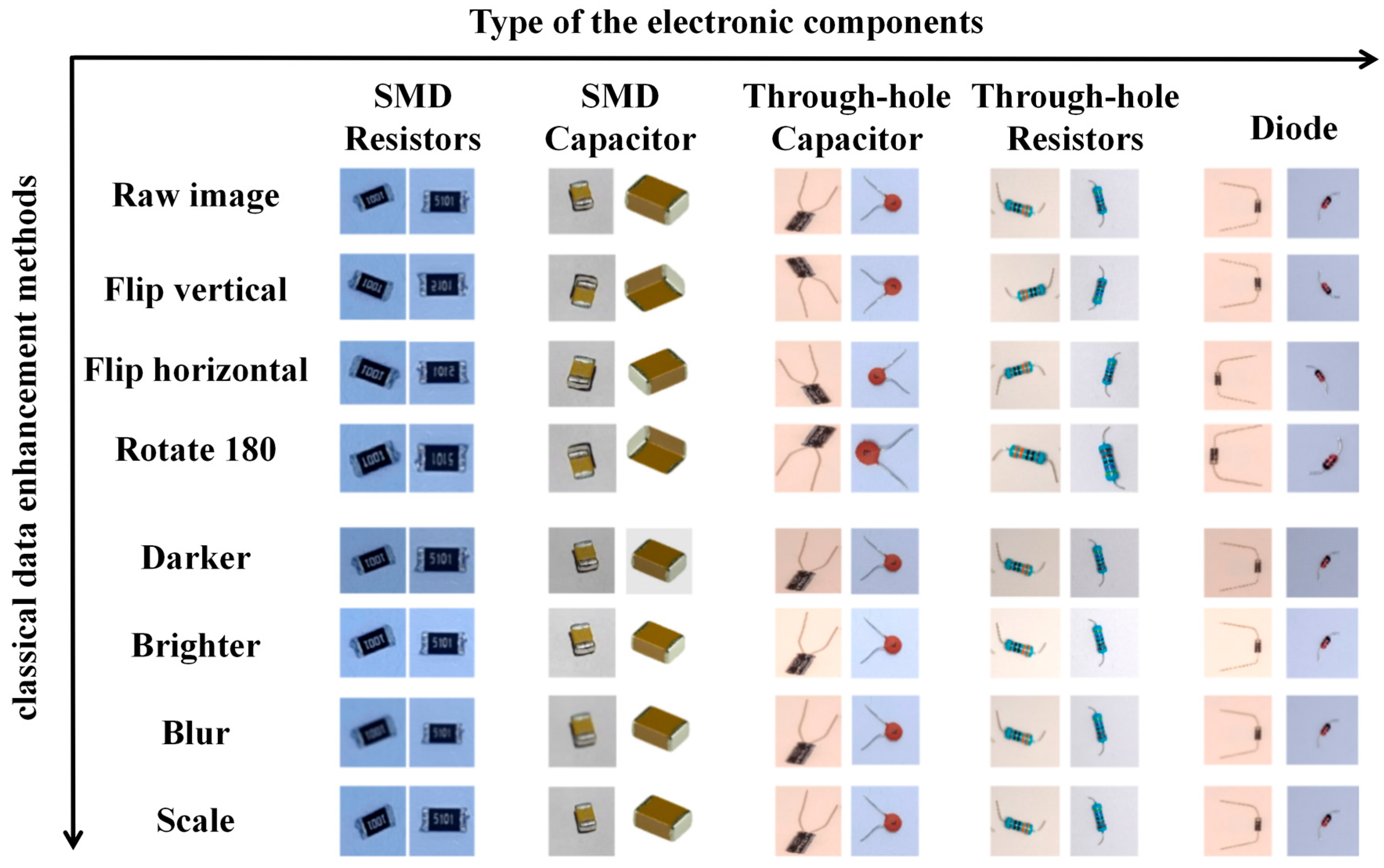

2.2. Classical DA Methods

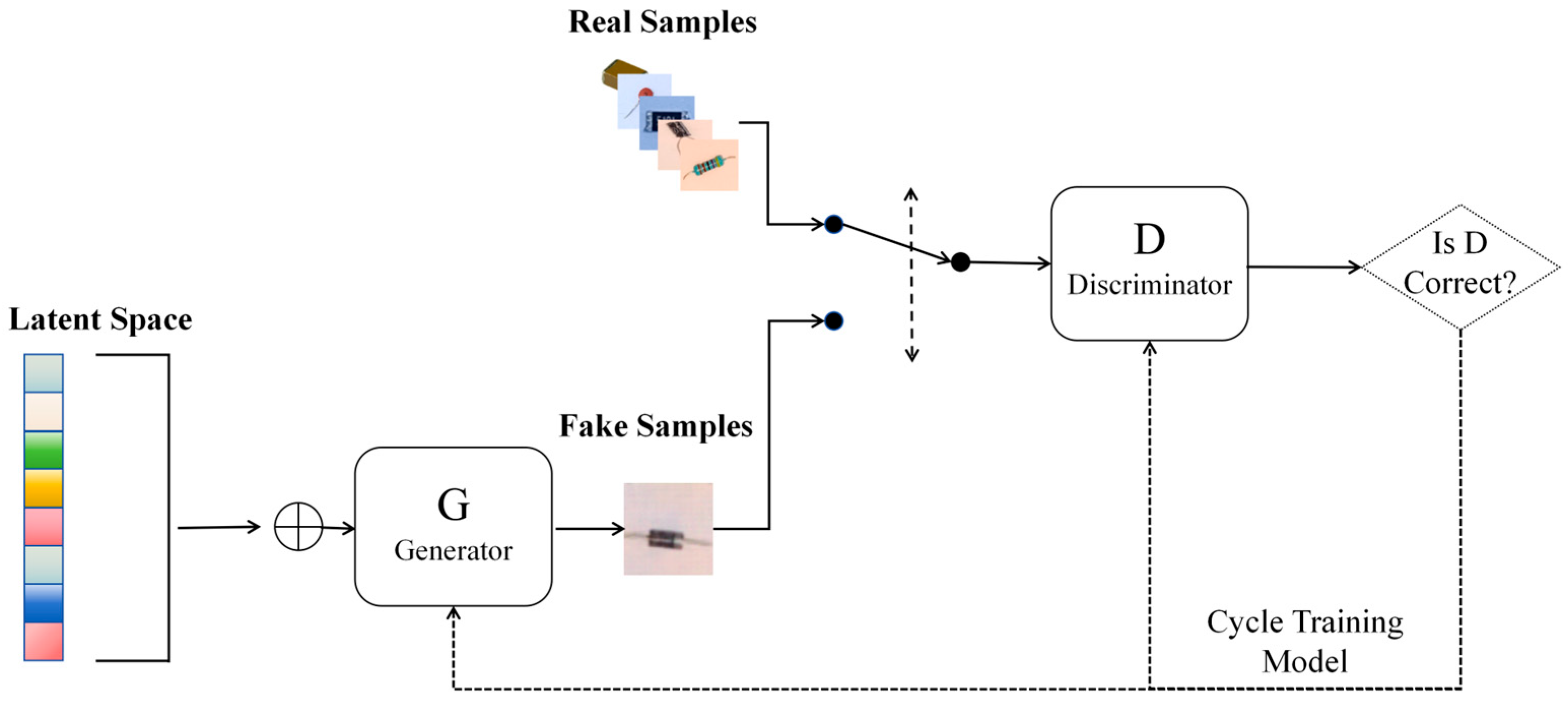

2.3. Generative Adversarial Networks DA Methods

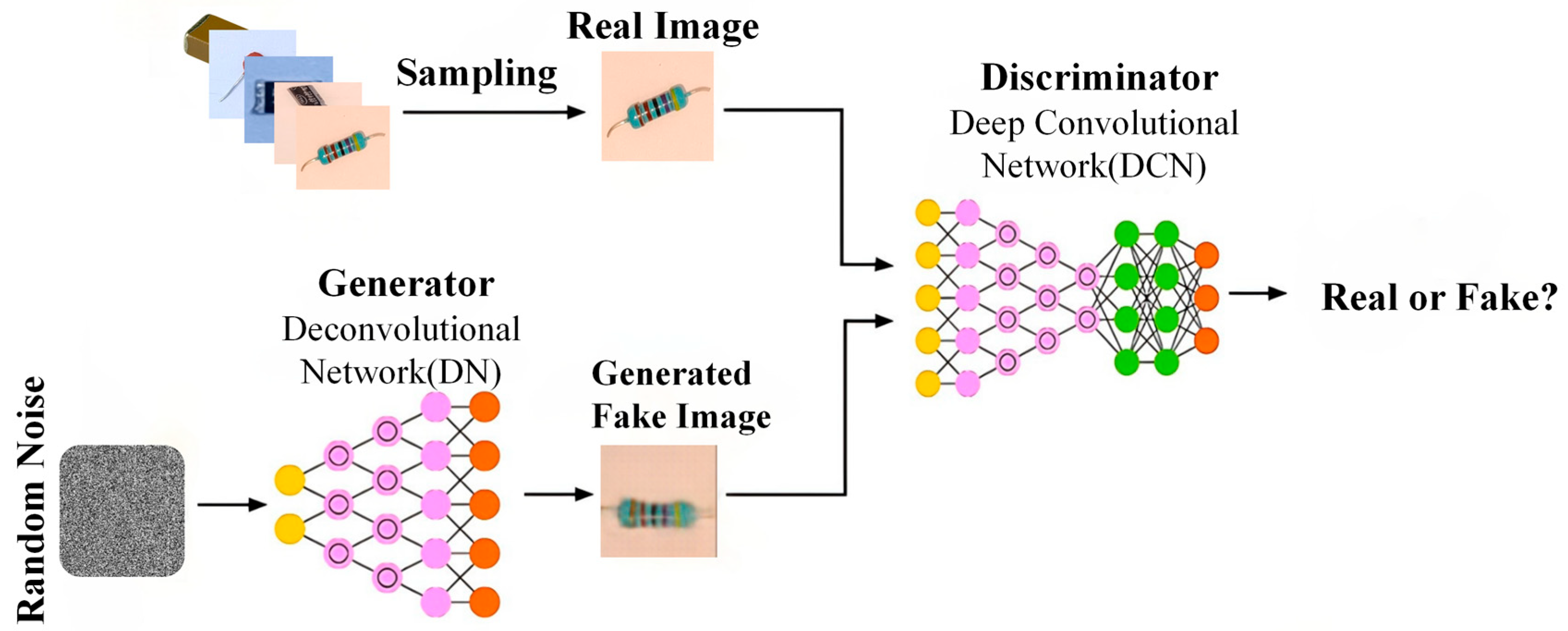

2.3.1. Generative Adversarial Networks

2.3.2. Deep Convolutional Generative Adversarial Networks

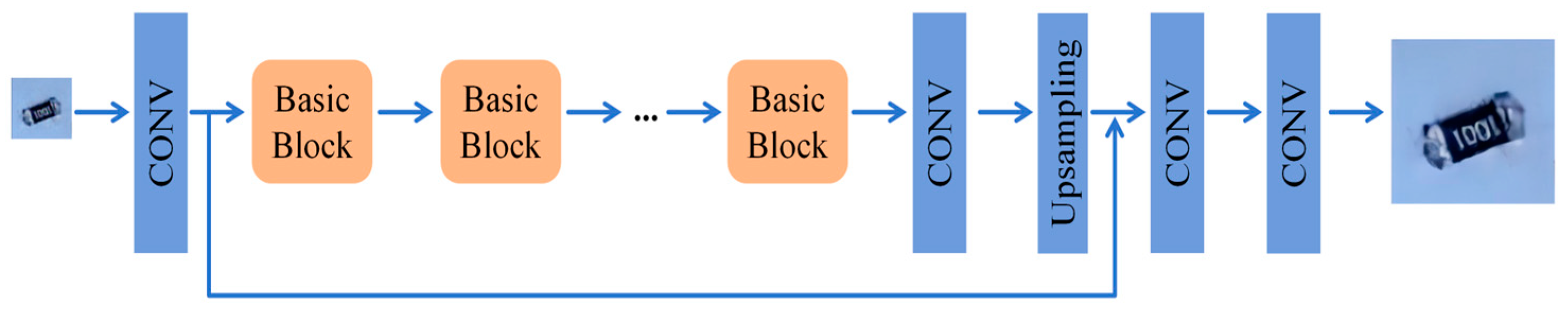

2.3.3. Super Resolution Generative Adversarial Network

3. Experiments

3.1. Image Dataset Preparation

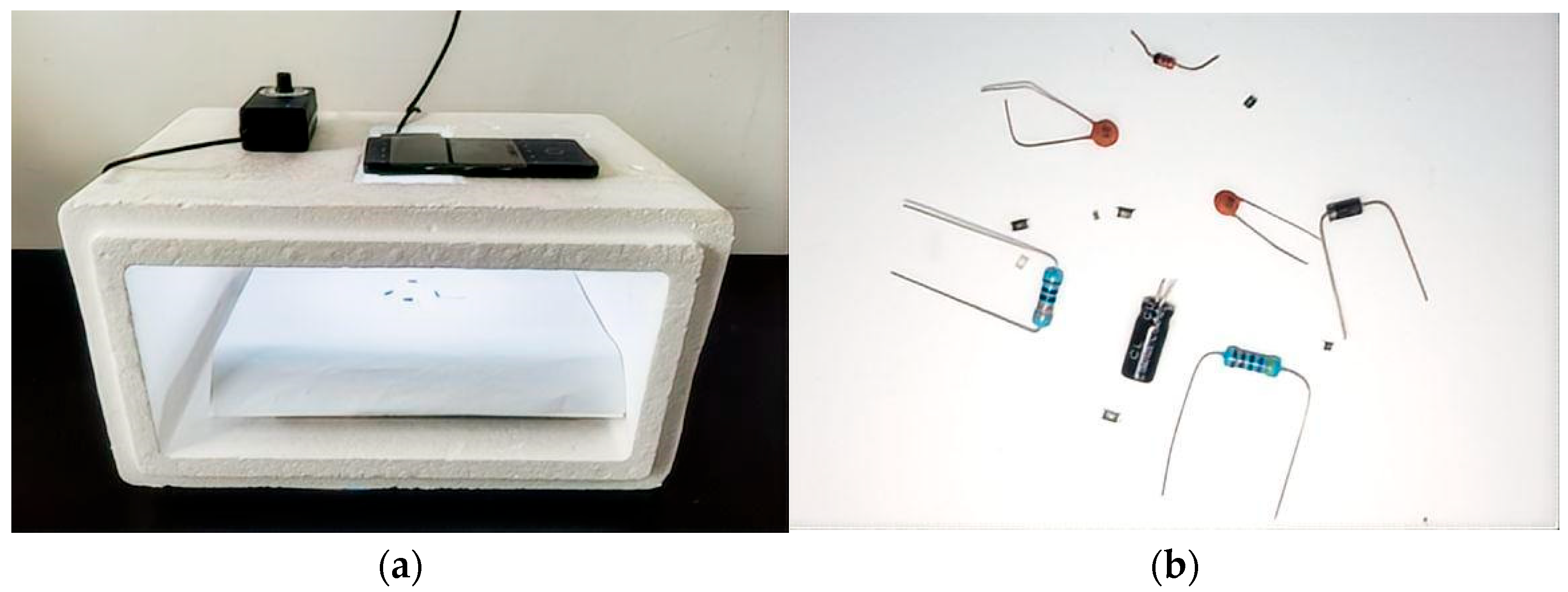

3.1.1. Material Preparation

3.1.2. Original Dataset Acquisition

3.2. Data Augmentation

3.2.1. Classical DA Methods

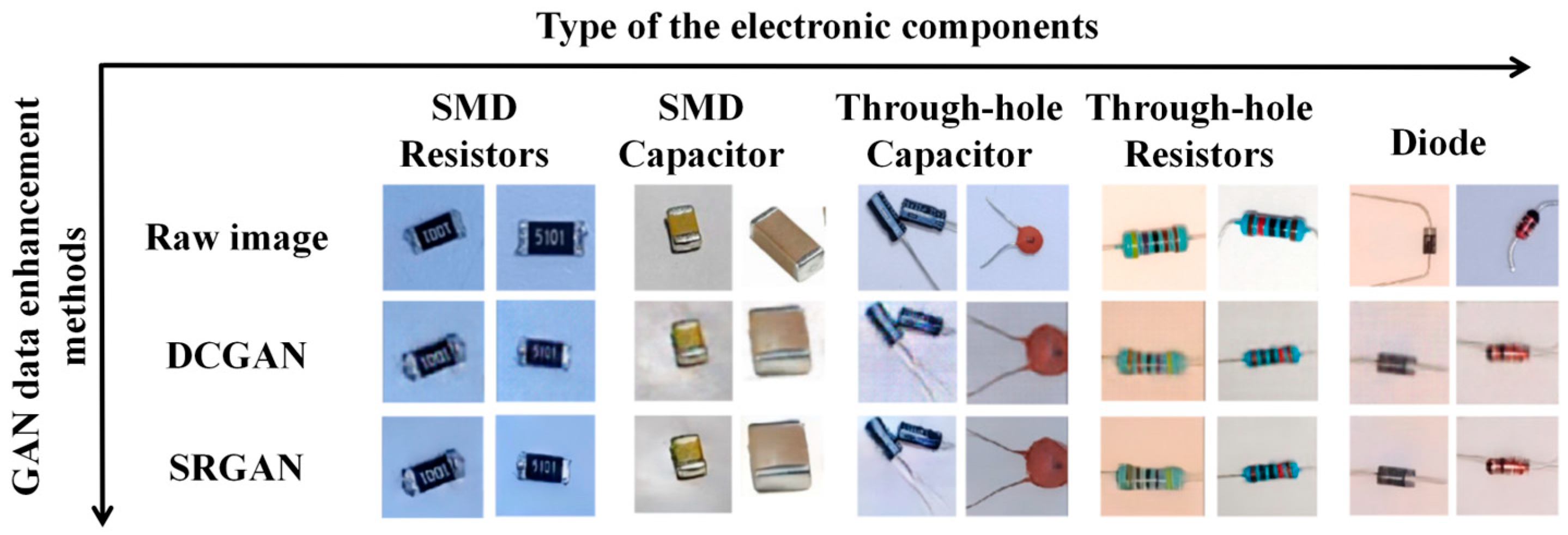

3.2.2. Generative Adversarial Networks DA

3.2.3. Datasets and Configuration

3.2.4. Evaluation Metrics

4. Experimental Results and Analysis

4.1. Model Selection

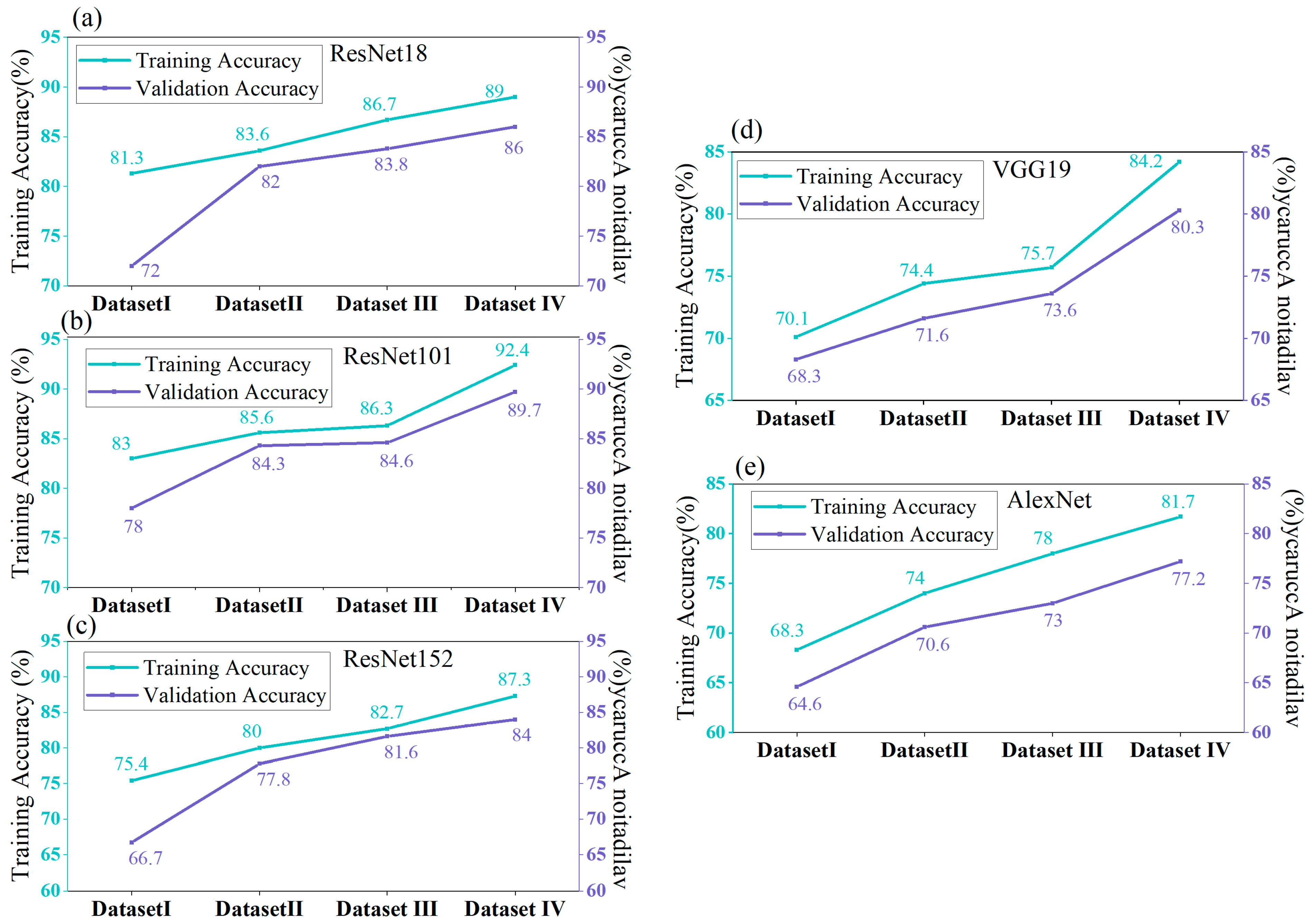

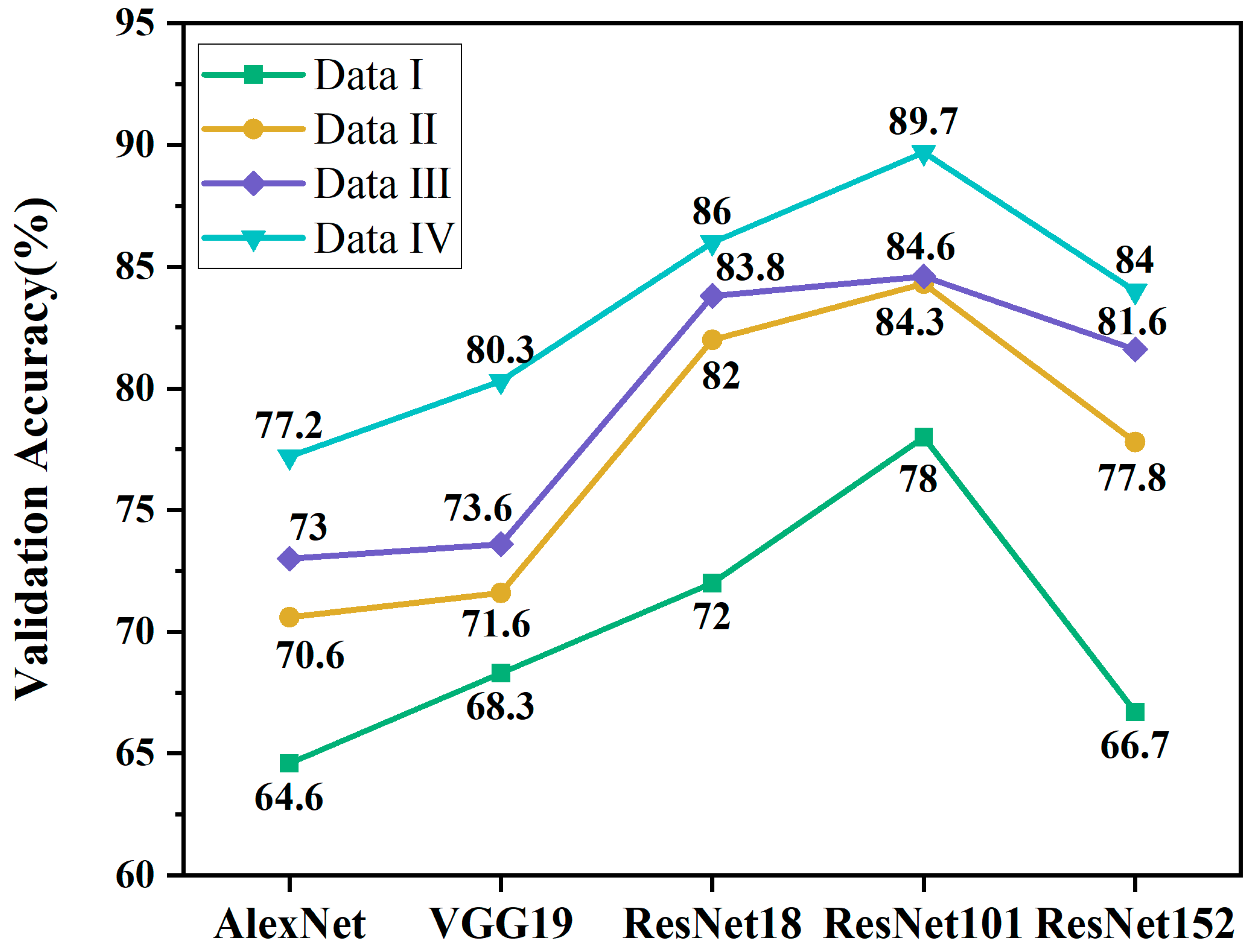

4.2. CNN Model Evaluation

4.3. Data Augmentation Comparison

4.4. Generated Image Quality Analysis

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

| Component | Package Model | Length (mm) | Width (mm) | Height (mm) |
|---|---|---|---|---|
| SMD resistors | 0603 | 1.60 | 0.80 | 0.40 |
| SMD resistors | 0805 | 2.00 | 1.25 | 0.50 |
| SMD resistors | 1206 | 3.20 | 1.60 | 0.55 |

| No. | Category | Type | Quantity | Total |
|---|---|---|---|---|
| 1 | SMD Capacitor | SMD Capacitor | 266 | 266 |
| 2 | Through-hole Capacitor | Electrolytic Capacitor | 148 | 396 |
| | | Porcelain Capacitor | 248 | |
| 3 | Diode | Commutation Diode | 80 | 223 |
| | | Voltage Regulator Diode | 143 | |
| 4 | SMD Resistors | 0603 | 74 | 251 |
| | | 0805 | 90 | |
| | | 1206 | 87 | |
| 5 | Through-hole Resistors | 4.7 kR | 110 | 474 |
| | | 10 kR | 100 | |
| | | 330 R | 151 | |
| | | 510 R | 113 | |
| Total | | | | 1610 |

| Item | Dataset I | Dataset II | Dataset III | Dataset IV |
|---|---|---|---|---|
| Dataset size | 1610 | 12,880 | 3220 | 14,490 |
| Enhancement multiple | - | 8 | 2 | 9 |
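The dataset sizes above are consistent with multiplying the 1610 original images by each enhancement multiple. A minimal Python sketch of that arithmetic follows; the constant and function names are illustrative assumptions, not taken from the paper.

```python
# Original dataset size (Dataset I) and enhancement multiples, copied from the table above.
ORIGINAL_SIZE = 1610
ENHANCEMENT_MULTIPLES = {"Dataset II": 8, "Dataset III": 2, "Dataset IV": 9}


def augmented_sizes(original_size, multiples):
    """Return the expected image count per dataset: original size times its multiple."""
    return {name: original_size * multiple for name, multiple in multiples.items()}


sizes = augmented_sizes(ORIGINAL_SIZE, ENHANCEMENT_MULTIPLES)
for name, size in sizes.items():
    print(f"{name}: {size} images")
```

The computed values match the reported sizes of 12,880, 3220, and 14,490 images.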

| Model | Convolutional Layers | Fully Connected Layers | Parameter Count |
|---|---|---|---|
| AlexNet | 5 | 3 | 61 million |
| VGG19 | 16 | 3 | 139 million |
| ResNet18 | 17 | 1 | 11.67 million |
| ResNet101 | 100 | 1 | 44.7 million |
| ResNet152 | 151 | 1 | 60.1 million |

| Model | Metric | Dataset I | Dataset II | Dataset III | Dataset IV | Average |
|---|---|---|---|---|---|---|
| AlexNet | Accuracy (%) | 64.6 | 70.6 | 73 | 77.2 | 71.3 |
| | AUC-PR | 0.838 | 0.89 | 0.936 | 0.991 | 0.91 |
| | F1-score | 0.562 | 0.61 | 0.635 | 0.67 | 0.619 |
| | mAP | 0.754 | 0.811 | 0.826 | 0.899 | 0.822 |
| VGG19 | Accuracy (%) | 68.3 | 71.6 | 73.6 | 80.3 | 73.5 |
| | AUC-PR | 0.867 | 0.934 | 0.952 | 0.963 | 0.931 |
| | F1-score | 0.597 | 0.623 | 0.645 | 0.702 | 0.641 |
| | mAP | 0.782 | 0.843 | 0.884 | 0.911 | 0.855 |
| ResNet18 | Accuracy (%) | 72 | 82 | 83.8 | 86 | 80.9 |
| | AUC-PR | 0.914 | 0.932 | 0.935 | 0.941 | 0.940 |
| | F1-score | 0.629 | 0.715 | 0.731 | 0.755 | 0.708 |
| | mAP | 0.828 | 0.888 | 0.886 | 0.897 | 0.875 |
| ResNet101 | Accuracy (%) | 78 | 84.3 | 84.6 | 89.7 | 84.1 |
| | AUC-PR | 0.974 | 0.978 | 0.978 | 0.986 | 0.983 |
| | F1-score | 0.681 | 0.738 | 0.734 | 0.782 | 0.734 |
| | mAP | 0.903 | 0.887 | 0.899 | 0.889 | 0.895 |
| ResNet152 | Accuracy (%) | 66.7 | 77.8 | 81.6 | 84 | 77.5 |
| | AUC-PR | 0.846 | 0.932 | 0.953 | 0.959 | 0.93 |
| | F1-score | 0.582 | 0.678 | 0.704 | 0.73 | 0.674 |
| | mAP | 0.765 | 0.898 | 0.88 | 0.895 | 0.859 |
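The Average column for accuracy can be recomputed as the plain mean over Datasets I to IV. The sketch below does this in Python with the accuracy values from the table; the variable and function names are illustrative assumptions, and the use of an unweighted mean is inferred from the numbers rather than stated in the source.

```python
from statistics import mean

# Accuracy (%) per model on Datasets I-IV, copied from the table above.
ACCURACY = {
    "AlexNet":   [64.6, 70.6, 73.0, 77.2],
    "VGG19":     [68.3, 71.6, 73.6, 80.3],
    "ResNet18":  [72.0, 82.0, 83.8, 86.0],
    "ResNet101": [78.0, 84.3, 84.6, 89.7],
    "ResNet152": [66.7, 77.8, 81.6, 84.0],
}


def average_accuracy(table):
    """Unweighted mean accuracy across the four datasets for each model."""
    return {model: mean(values) for model, values in table.items()}


def gain_over_original(table):
    """Accuracy gain in percentage points from Dataset I to Dataset IV."""
    return {model: values[-1] - values[0] for model, values in table.items()}


averages = average_accuracy(ACCURACY)
gains = gain_over_original(ACCURACY)
best_model = max(averages, key=averages.get)

for model in ACCURACY:
    print(f"{model}: mean {averages[model]:.2f}%, gain {gains[model]:+.1f} points")
print(f"Highest mean accuracy: {best_model}")
```

Under this assumption the recomputed means agree with the reported Average column to within 0.1 percentage points, and ResNet101 has the highest mean accuracy.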

| Category | Type | PSNR | SSIM |
|---|---|---|---|
| SMD Capacitor | SMD Capacitor | 20.07 | 0.803 |
| Through-hole Capacitor | Electrolytic Capacitor | 18.47 | 0.758 |
| | Porcelain Capacitor | 18.43 | 0.790 |
| Diode | Commutation Diode | 20.79 | 0.854 |
| | Voltage Regulator Diode | 22.95 | 0.912 |
| SMD Resistors | 0603-9.1 kR | 18.96 | 0.805 |
| | 0603-9.1 R | 21.82 | 0.882 |
| | 0603-510 R | 21.74 | 0.879 |
| | 0805-2.7 kR | 18.77 | 0.799 |
| | 0805-10 R | 21.89 | 0.883 |
| | 0805-470 R | 21.73 | 0.879 |
| | 1206-1 kR | 19.66 | 0.823 |
| | 1206-5.1 kR | 22.40 | 0.897 |
| | 1206-5.1 R | 18.34 | 0.788 |
| | 1206-47 R | 19.82 | 0.827 |
| Through-hole Resistors | 4.7 kR | 18.43 | 0.790 |
| | 10 kR | 18.48 | 0.792 |
| | 330 R | 18.77 | 0.825 |
| | 510 R | 23.01 | 0.913 |
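For readers unfamiliar with the PSNR column, the following is a minimal Python sketch of the standard peak signal-to-noise ratio definition, PSNR = 10·log10(MAX² / MSE), applied to two images given as flat lists of pixel values. It assumes 8-bit pixels (MAX = 255) and uses made-up toy values; the source does not state how its PSNR or SSIM values were computed, and SSIM is not covered here.

```python
import math


def psnr(reference, generated, max_value=255.0):
    """PSNR in dB between two equal-sized images given as flat sequences of pixel values."""
    if len(reference) != len(generated) or len(reference) == 0:
        raise ValueError("images must be non-empty and the same size")
    # Mean squared error over all pixels.
    mse = sum((r - g) ** 2 for r, g in zip(reference, generated)) / len(reference)
    if mse == 0:
        return math.inf  # identical images have unbounded PSNR
    return 10.0 * math.log10(max_value ** 2 / mse)


# Toy 2x2 grayscale images (hypothetical pixel values, not from the paper's dataset).
real_image = [52, 55, 61, 59]
generated_image = [50, 57, 60, 62]

print(f"PSNR: {psnr(real_image, generated_image):.2f} dB")
```

Higher PSNR means a smaller pixel-wise error between the generated and the real image.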

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Chen, B.; Zhang, S.; Liu, S.; Wu, Y.; Guan, J.; Zhang, X.; Guo, Y.; Xu, Q.; Dong, W.; Gu, W. Research on Image Data Augmentation and Accurate Classification of Waste Electronic Components Utilizing Deep Learning Techniques. Processes 2025, 13, 3802. https://doi.org/10.3390/pr13123802
