A Robust 3D Fixed-Area Quality Inspection Framework for Production Lines

Abstract

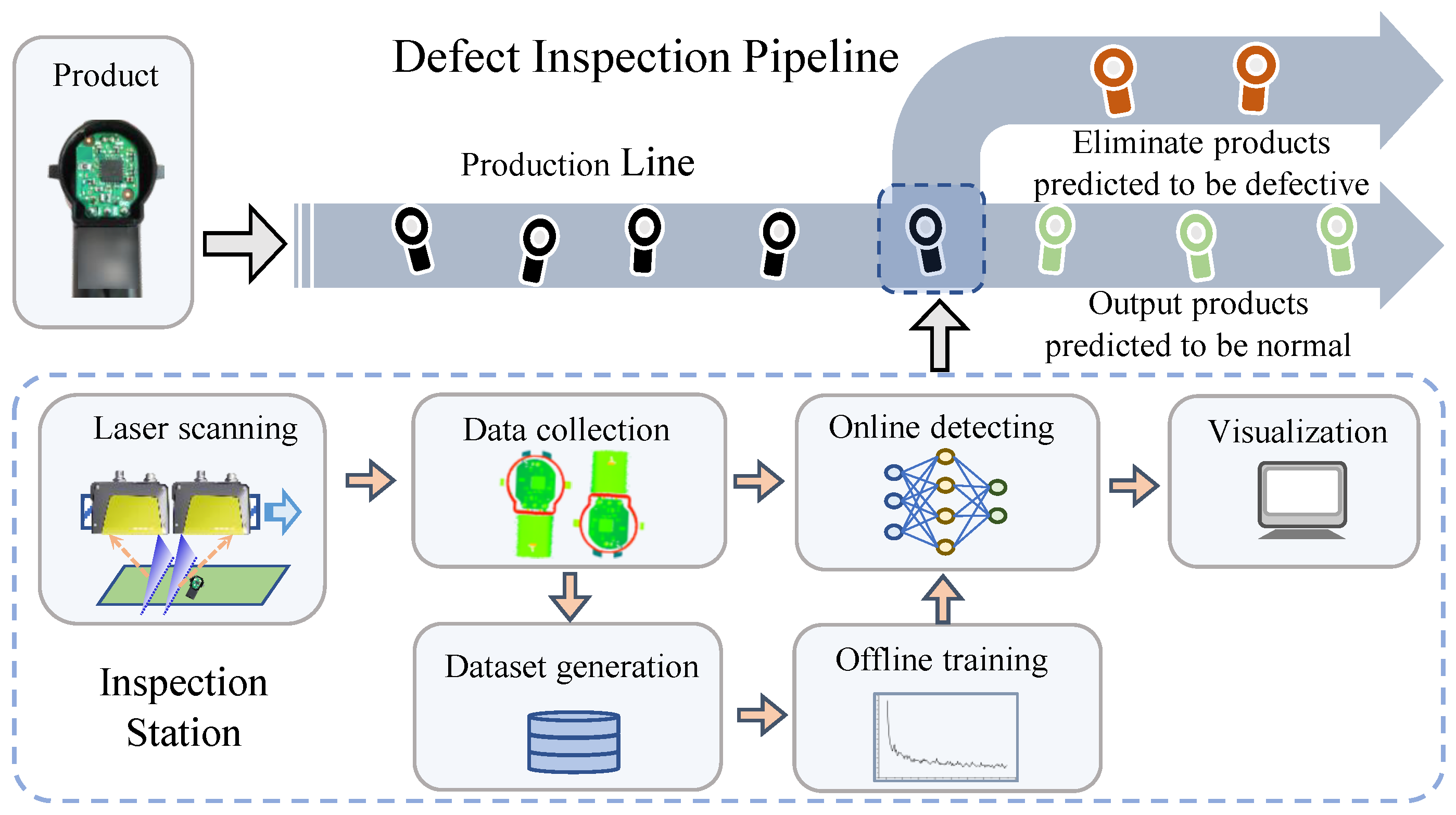

1. Introduction

- (1) Non-negligible computational cost from high-resolution and redundant data: High-resolution data are a prerequisite for high-quality detection, but high-resolution and redundant data inevitably increase the computational effort and introduce invalid calculations.

- (2)

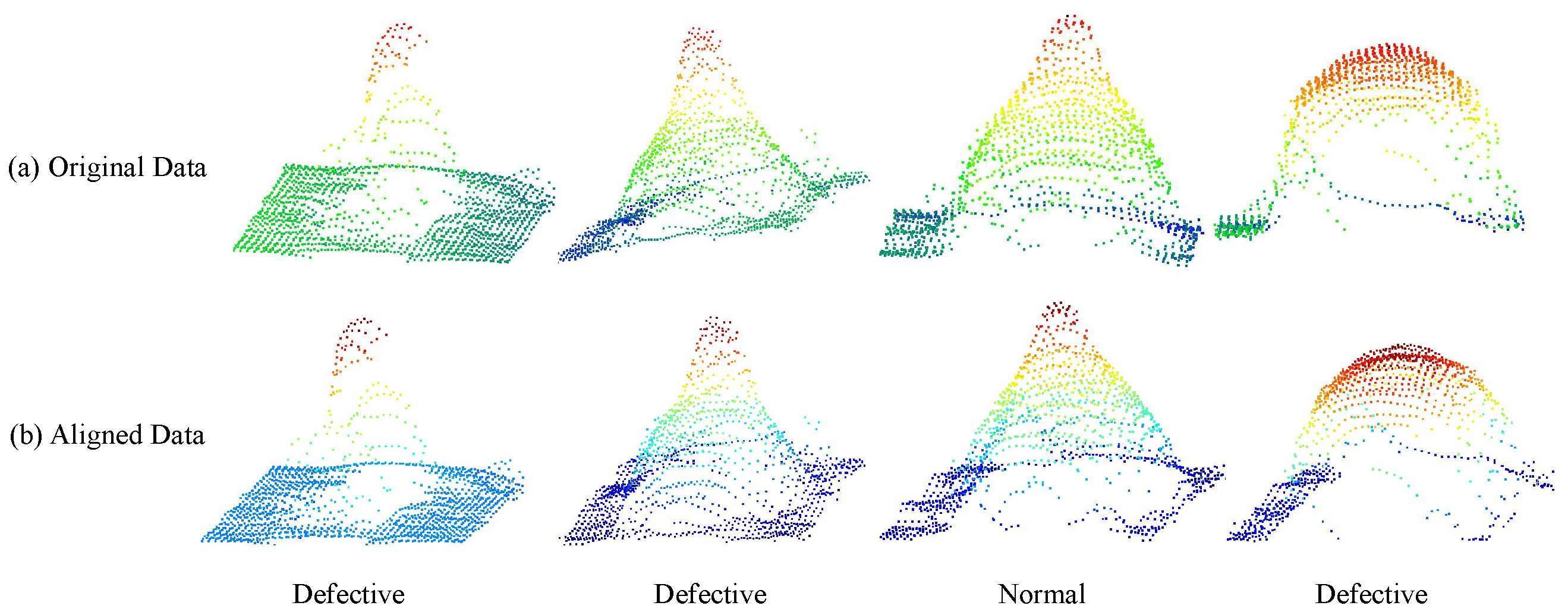

- Poor robustness caused by unaligned poses: Improper assembly and random workpiece placement inevitably lead to pose misalignment. Changes in pose lead to changes in input data, which can easily lead to samples that the model has not seen, making the model unable to remain robust.

- (3) Data imbalance: The data collected from the production line contain far more normal samples than defective ones, and defects are also unevenly distributed across instances, which leads to an extremely imbalanced dataset.

- (4) Hard-to-classify samples: Classifying a defective product as normal is a false detection, which is unacceptable under strict quality standards. However, deep learning-based methods struggle to avoid such misclassifications on hard samples.

- (1) A recursive segmentation network is proposed to reduce the invalid calculation cost associated with high-resolution point clouds, and rotation-invariant local feature abstraction is additionally introduced for pose robustness.

- (2)

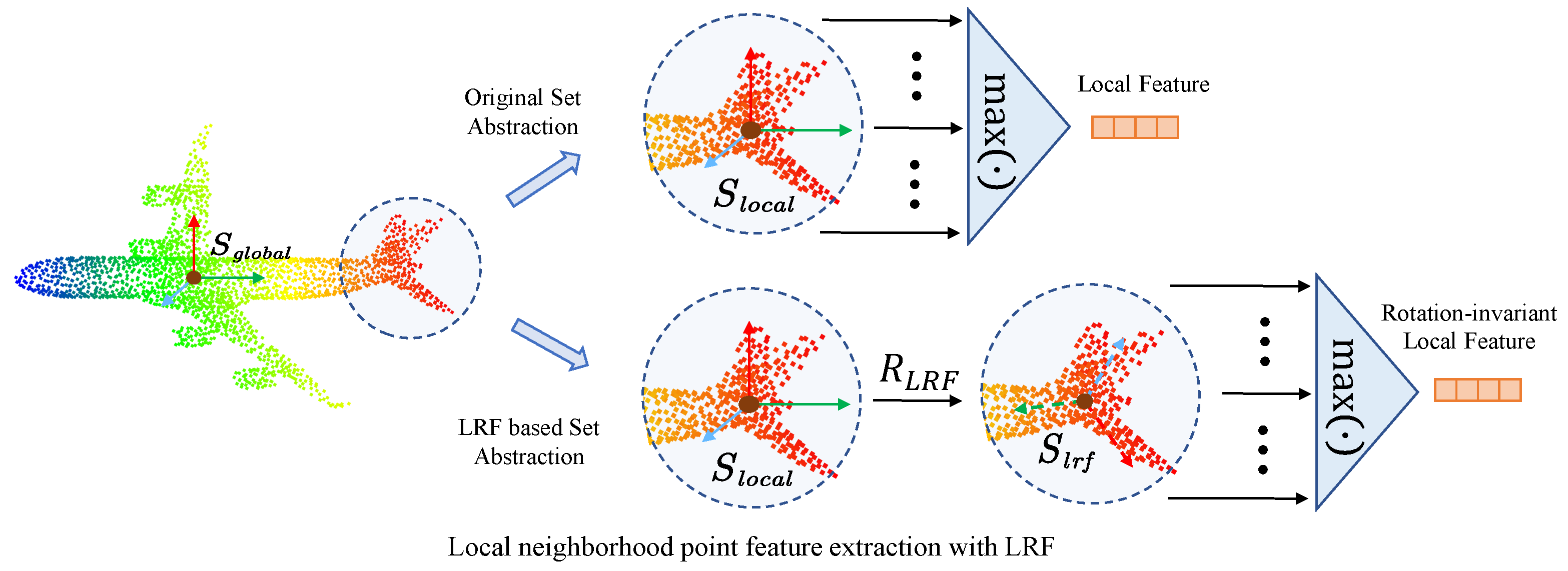

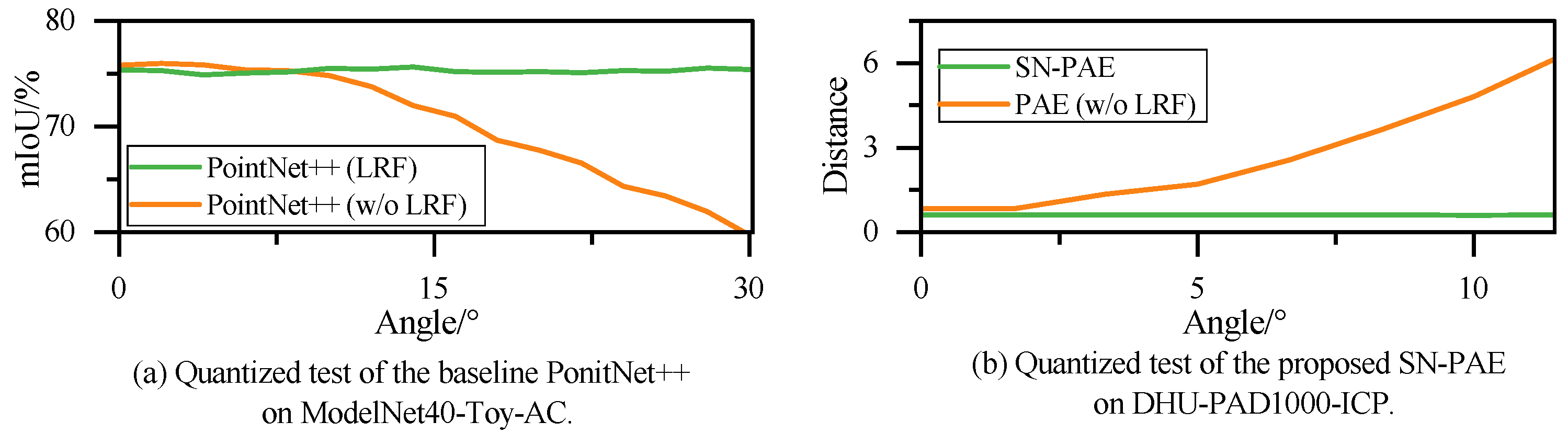

- With the innovative introduction of LRF-based rotation-invariant local feature extraction, LRF-based set abstraction (LRF-SA) is proposed to achieve pose robustness. LRF-SA is applied to all feature extraction layers of the framework, achieving a rotation-invariant detection framework.

- (3)

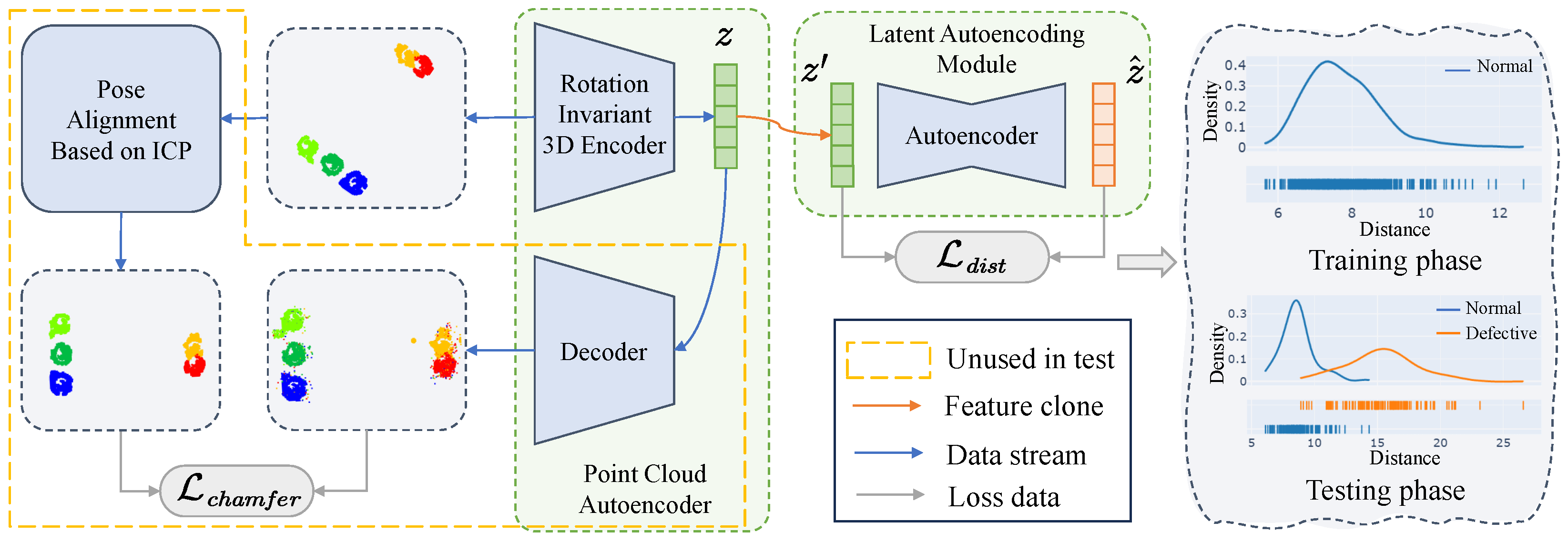

- A semi-nested point cloud autoencoder (SN-PAE) is proposed, which transfers the sample chamfer distance metric to the latent-variable vector metric, thereby circumventing the negative impact of pose perturbations. SN-PAE uses only normal samples for training, avoiding the impact of data imbalance and filtering out difficult samples by setting strict thresholds.

- (4)

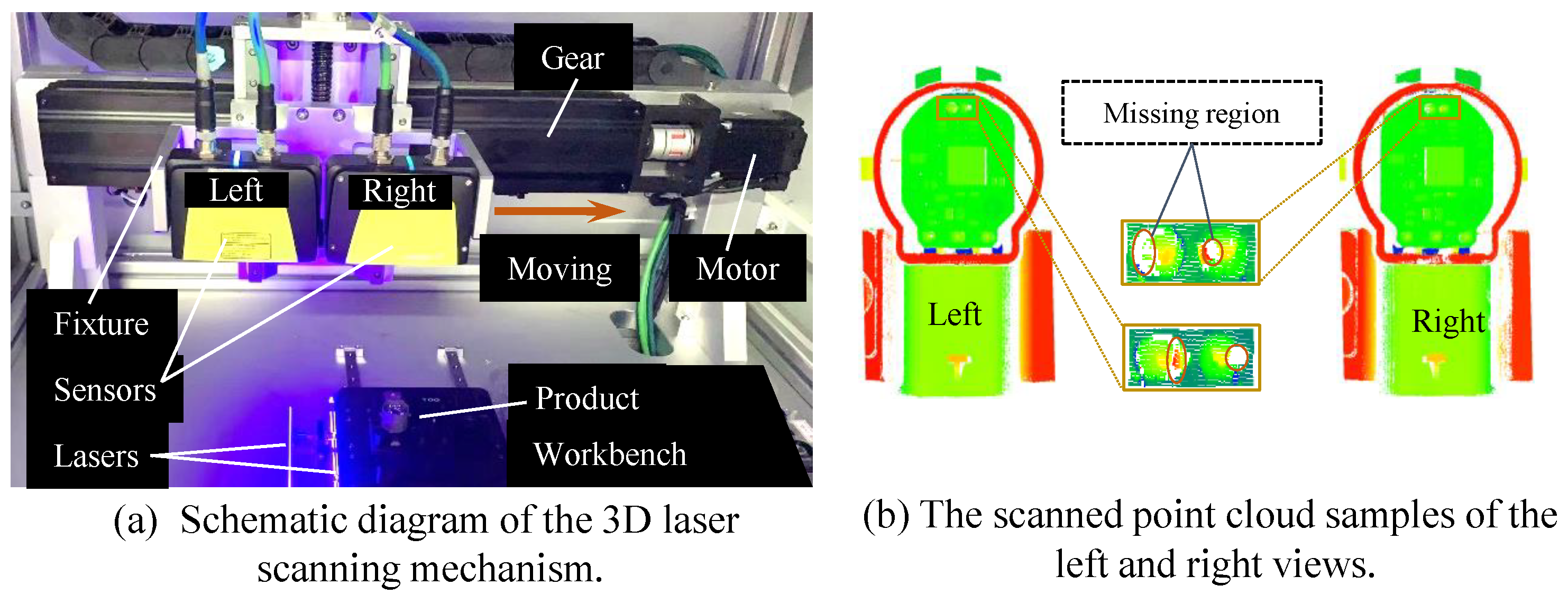

- A solder joint scanning system for solder inspection is deployed, and several point-cloud datasets are constructed separately. Multiple methods are evaluated on these datasets, and the results demonstrate the effectiveness and superiority of the proposed framework.
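The third contribution describes training on normal samples only and rejecting hard samples with a strict threshold. A minimal sketch of that decision rule follows. It assumes that each sample already has a scalar anomaly score (for example, a distance between latent vectors) and that the threshold is taken as a quantile of the scores of normal training samples. The function names `fit_threshold` and `is_defective` and the quantile rule are illustrative assumptions, not the authors' procedure.

```python
import numpy as np

def fit_threshold(normal_scores, quantile=0.95):
    """Choose a threshold from the anomaly scores of normal training samples.

    A lower quantile is stricter: more normal samples are rejected,
    but fewer defective samples can slip below the threshold.
    """
    return float(np.quantile(np.asarray(normal_scores, dtype=float), quantile))

def is_defective(scores, threshold):
    """Flag every sample whose anomaly score exceeds the threshold."""
    return np.asarray(scores, dtype=float) > threshold

# Hypothetical scores: normal samples cluster around 1.0.
rng = np.random.default_rng(0)
normal_scores = rng.normal(loc=1.0, scale=0.1, size=1000)

threshold = fit_threshold(normal_scores, quantile=0.90)
test_scores = np.array([0.9, 1.0, 1.6])
flags = is_defective(test_scores, threshold)
print(threshold, flags)
```

Because no defective samples are needed to fit the threshold, the rule is unaffected by how rare defects are in the collected data.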

2. Related Work

2.1. Industrial Quality Inspection

2.2. One-Class Learning

2.3. Deep Learning Based on 3D Point Clouds

3. Methodology

3.1. Baseline

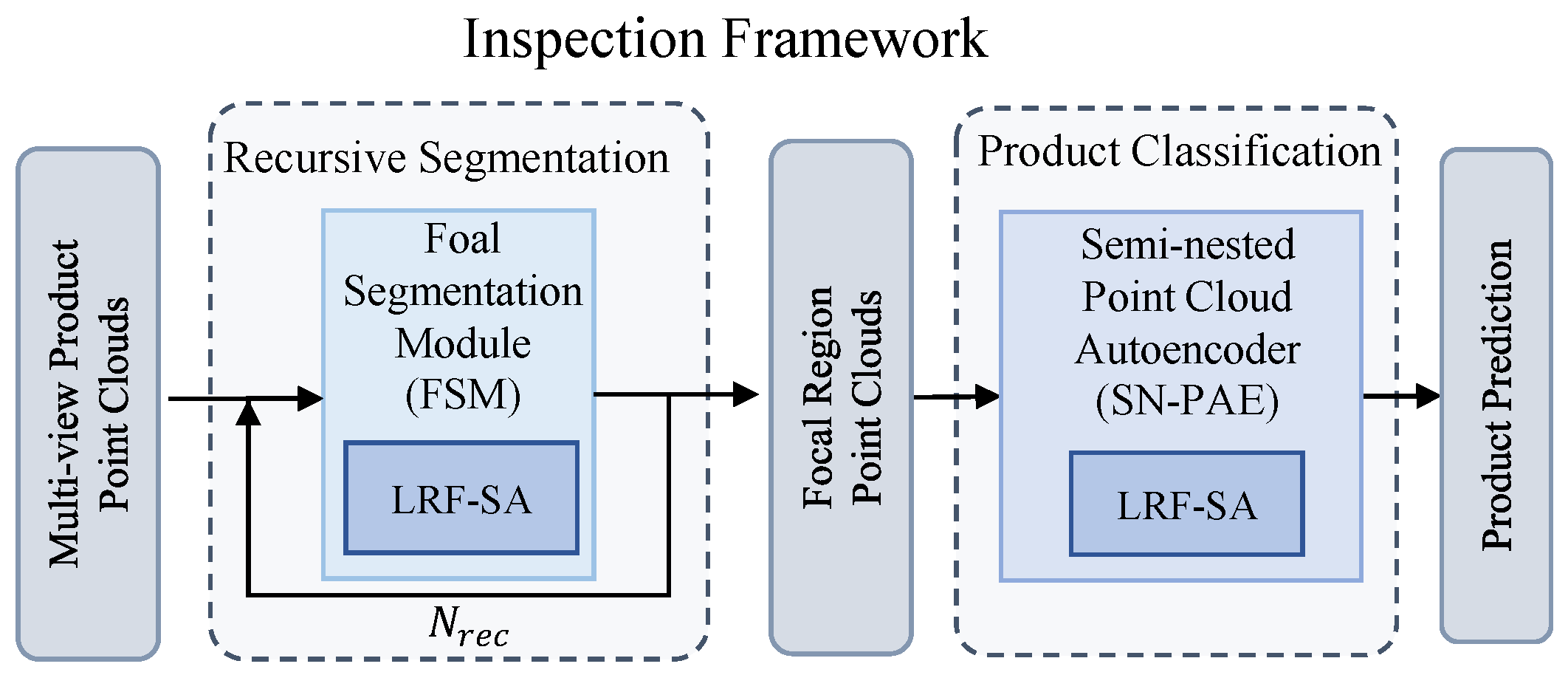

3.2. Inspection Framework

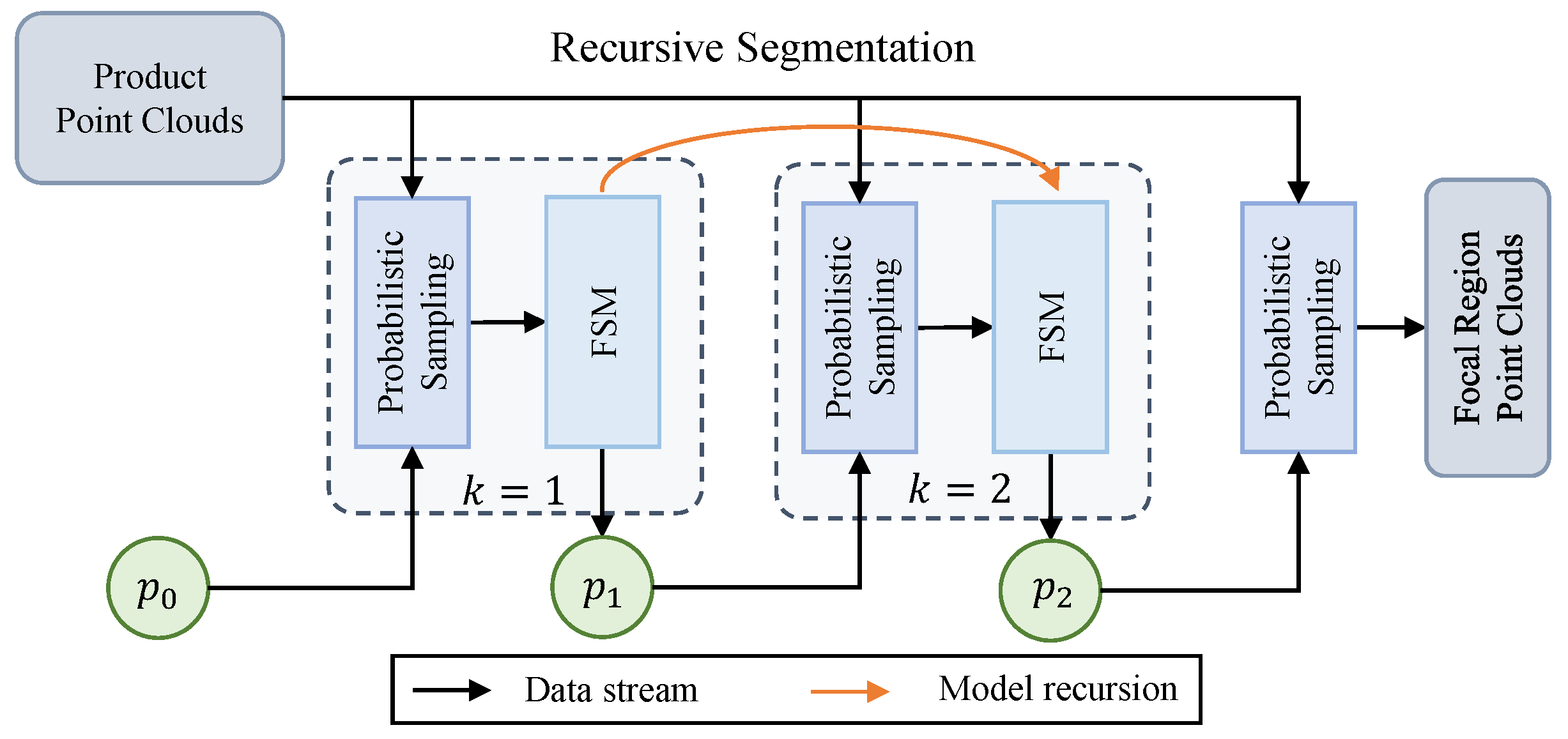

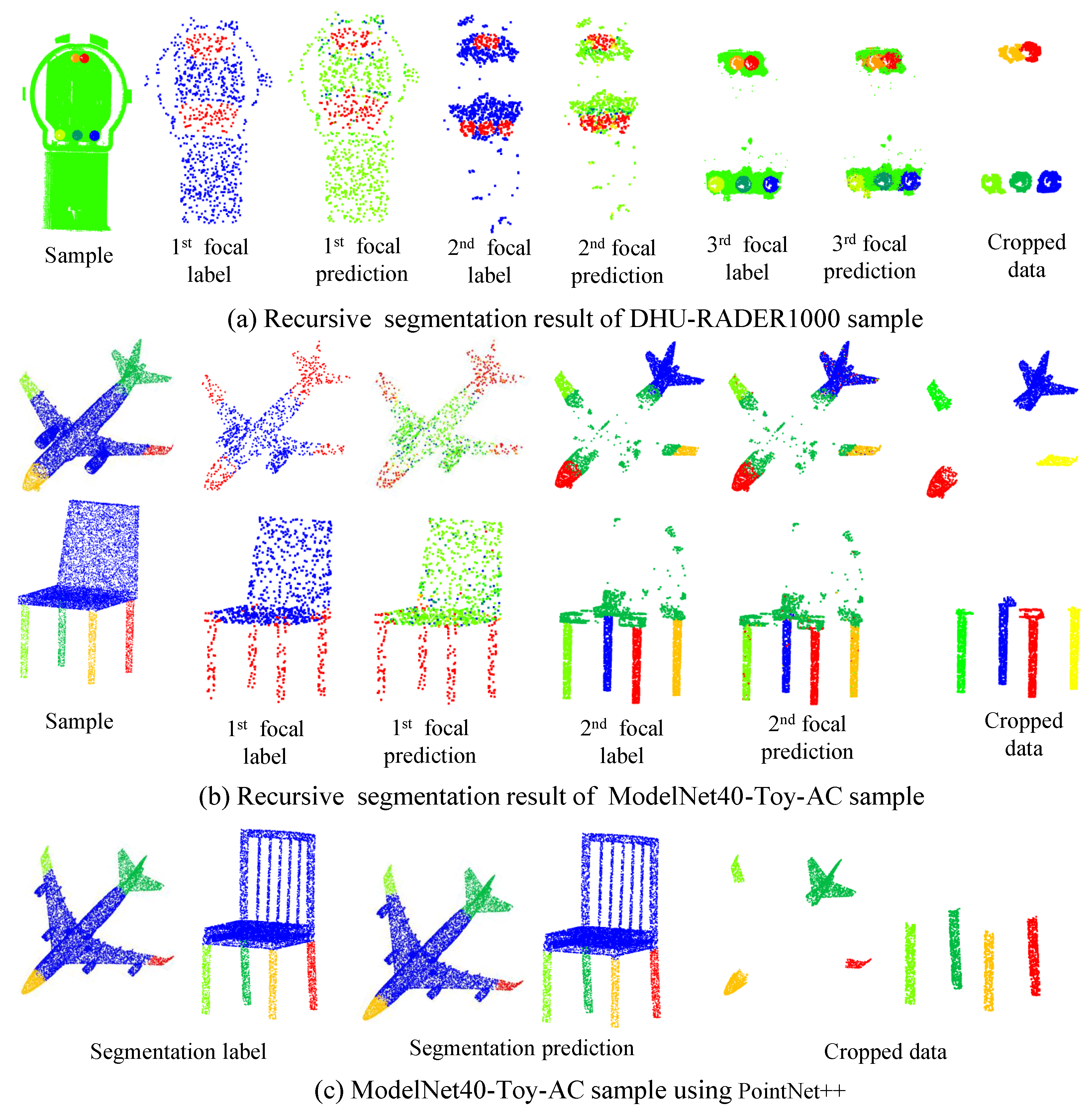

3.3. Recursive Segmentation Method

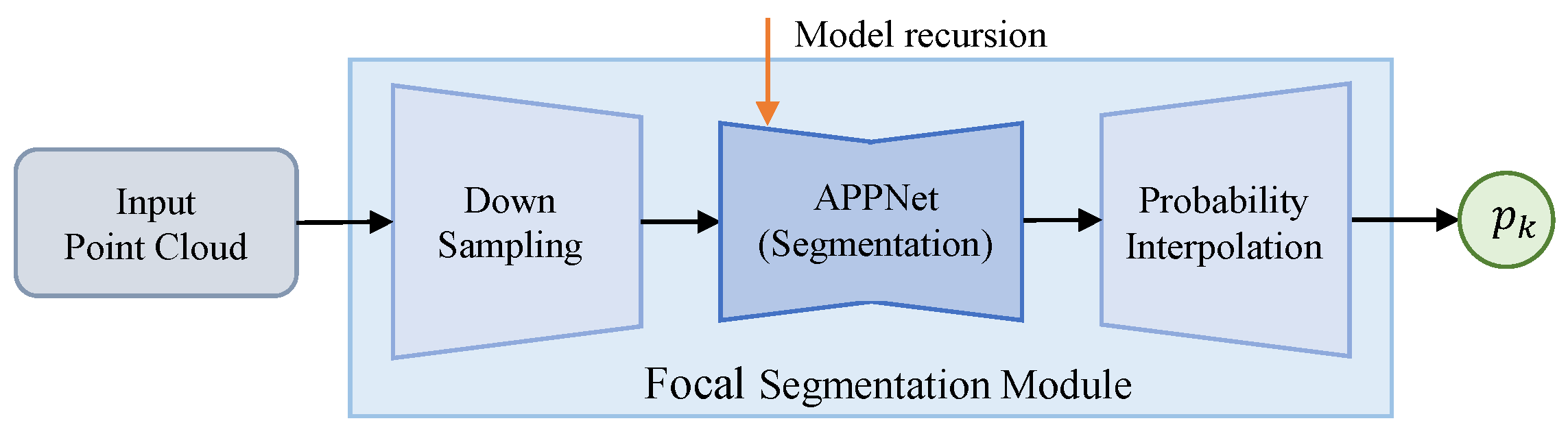
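The introduction motivates recursive segmentation as a way to avoid spending computation on irrelevant parts of a high-resolution point cloud. One possible reading is a coarse-to-fine loop: segment a subsampled cloud, crop the full-resolution cloud to the predicted region, and repeat. The sketch below illustrates only that reading. The function `recursive_segment`, the random subsampling, the bounding-box crop, the `margin` parameter, and the `toy_segmenter` stand-in for a learned network are all assumptions made for illustration.

```python
import numpy as np

def recursive_segment(points, segment_fn, k=1, n_coarse=512, margin=0.05, rng=None):
    """Crop `points` to the predicted foreground region k times, then segment it."""
    rng = np.random.default_rng() if rng is None else rng
    region = points
    for _ in range(k):
        # Coarse pass: run the segmenter on a random subsample only.
        size = min(n_coarse, len(region))
        coarse = region[rng.choice(len(region), size=size, replace=False)]
        foreground = coarse[segment_fn(coarse)]
        if len(foreground) == 0:
            break
        # Keep full-resolution points inside the padded foreground bounding box.
        lo = foreground.min(axis=0) - margin
        hi = foreground.max(axis=0) + margin
        region = region[np.all((region >= lo) & (region <= hi), axis=1)]
    # Fine pass: segment the cropped, full-resolution region.
    return region, segment_fn(region)

# Toy stand-in for a learned network: foreground is a ball around a fixed centre.
centre = np.array([0.5, 0.5, 0.5])
def toy_segmenter(pts):
    return np.linalg.norm(pts - centre, axis=1) < 0.2

rng = np.random.default_rng(0)
cloud = rng.uniform(0.0, 1.0, size=(20000, 3))
region, mask = recursive_segment(cloud, toy_segmenter, k=1, rng=rng)
print(len(cloud), len(region), int(mask.sum()))
```

In this toy setting the fine pass runs on a fraction of the original points, which is the kind of saving the contribution statement refers to.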

3.4. Focal Segmentation Module

Algorithm 1. Focal Segmentation.

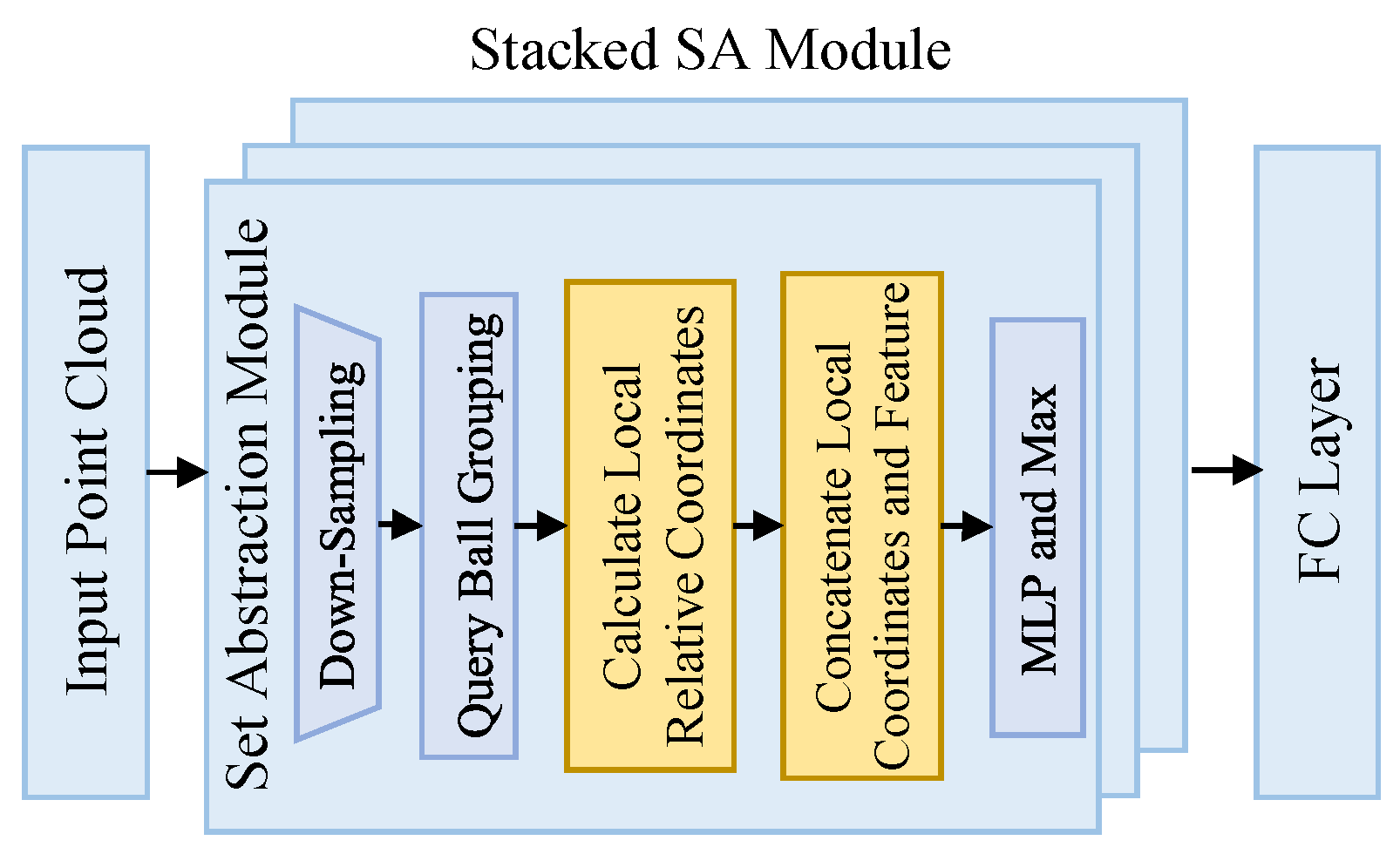

3.5. LRF-Based Set Abstraction
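The contribution list states that LRF-SA obtains pose robustness from rotation-invariant local features built on a local reference frame. To illustrate why an LRF gives rotation invariance, the sketch below builds a generic PCA-based frame for one neighbourhood and checks that the neighbours' coordinates in that frame do not change when the whole cloud is rotated. This is a textbook-style construction under stated assumptions (covariance eigenvectors as axes, a sign rule based on cubed projections), not the LRF-SA layer itself. All function names are hypothetical.

```python
import numpy as np

def local_reference_frame(neighbors, center):
    """Return a 3x3 matrix whose columns are the LRF axes (x, y, z)."""
    offsets = neighbors - center
    cov = offsets.T @ offsets / len(offsets)
    _, eigvecs = np.linalg.eigh(cov)            # eigenvalues in ascending order
    x_axis, z_axis = eigvecs[:, 2], eigvecs[:, 0]
    # Eigenvectors are defined only up to sign. Fix the sign with a rule that
    # depends on projections alone, so the choice rotates with the data.
    if np.sum((offsets @ x_axis) ** 3) < 0:
        x_axis = -x_axis
    if np.sum((offsets @ z_axis) ** 3) < 0:
        z_axis = -z_axis
    y_axis = np.cross(z_axis, x_axis)           # completes a right-handed frame
    return np.stack([x_axis, y_axis, z_axis], axis=1)

def lrf_coordinates(neighbors, center):
    """Express neighbour offsets in the local reference frame."""
    return (neighbors - center) @ local_reference_frame(neighbors, center)

def random_rotation(rng):
    """Draw a proper rotation matrix (determinant +1) via QR decomposition."""
    q, _ = np.linalg.qr(rng.normal(size=(3, 3)))
    if np.linalg.det(q) < 0:
        q[:, 0] = -q[:, 0]
    return q

rng = np.random.default_rng(0)
neighbors = rng.normal(size=(64, 3)) * np.array([1.0, 0.5, 0.1])
center = neighbors[0]
rotation = random_rotation(rng)

local_before = lrf_coordinates(neighbors, center)
local_after = lrf_coordinates(neighbors @ rotation.T, center @ rotation.T)
print(np.allclose(local_before, local_after, atol=1e-8))
```

The raw coordinates differ after the rotation, while the frame-relative coordinates agree, so any feature computed from the latter inherits the invariance.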

3.6. Semi-Nested Point Cloud Autoencoder

Algorithm 2. Semi-Nested Point Cloud Autoencoder (SN-PAE).
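The contribution list says that SN-PAE moves the comparison from a Chamfer distance between point clouds to a distance between latent vectors, in order to avoid the effect of pose perturbations. The sketch below shows the underlying issue with a plain NumPy Chamfer distance: the same shape in a different pose has a non-zero Chamfer distance to itself, whereas a pose-independent descriptor does not change. The `toy_latent` function is only a hand-made stand-in for a learned encoder and is an assumption of this example.

```python
import numpy as np

def chamfer_distance(a, b):
    """Symmetric Chamfer distance: mean squared nearest-neighbour distance, both ways."""
    d2 = np.sum((a[:, None, :] - b[None, :, :]) ** 2, axis=-1)
    return float(d2.min(axis=1).mean() + d2.min(axis=0).mean())

def toy_latent(points):
    """Stand-in for an encoder: statistics of distances to the centroid."""
    r = np.linalg.norm(points - points.mean(axis=0), axis=1)
    return np.array([r.mean(), r.std(), r.max()])

rng = np.random.default_rng(0)
cloud = rng.normal(size=(256, 3)) * np.array([1.0, 0.3, 0.1])

# The same shape rotated by 90 degrees about the z axis.
rot_z = np.array([[0.0, -1.0, 0.0],
                  [1.0,  0.0, 0.0],
                  [0.0,  0.0, 1.0]])
rotated = cloud @ rot_z.T

cd_same = chamfer_distance(cloud, cloud)
cd_rotated = chamfer_distance(cloud, rotated)
latent_gap = float(np.linalg.norm(toy_latent(cloud) - toy_latent(rotated)))
print(cd_same, cd_rotated, latent_gap)
```

A score based on `cd_rotated` would penalise a normal part merely for being placed differently, which is the failure mode a latent-space metric is meant to avoid.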

3.7. Loss

4. Experiments and Analysis

- (1) Several datasets are built.

- (2) The experimental setup and metrics are introduced.

- (3) The experimental results are explained in detail.

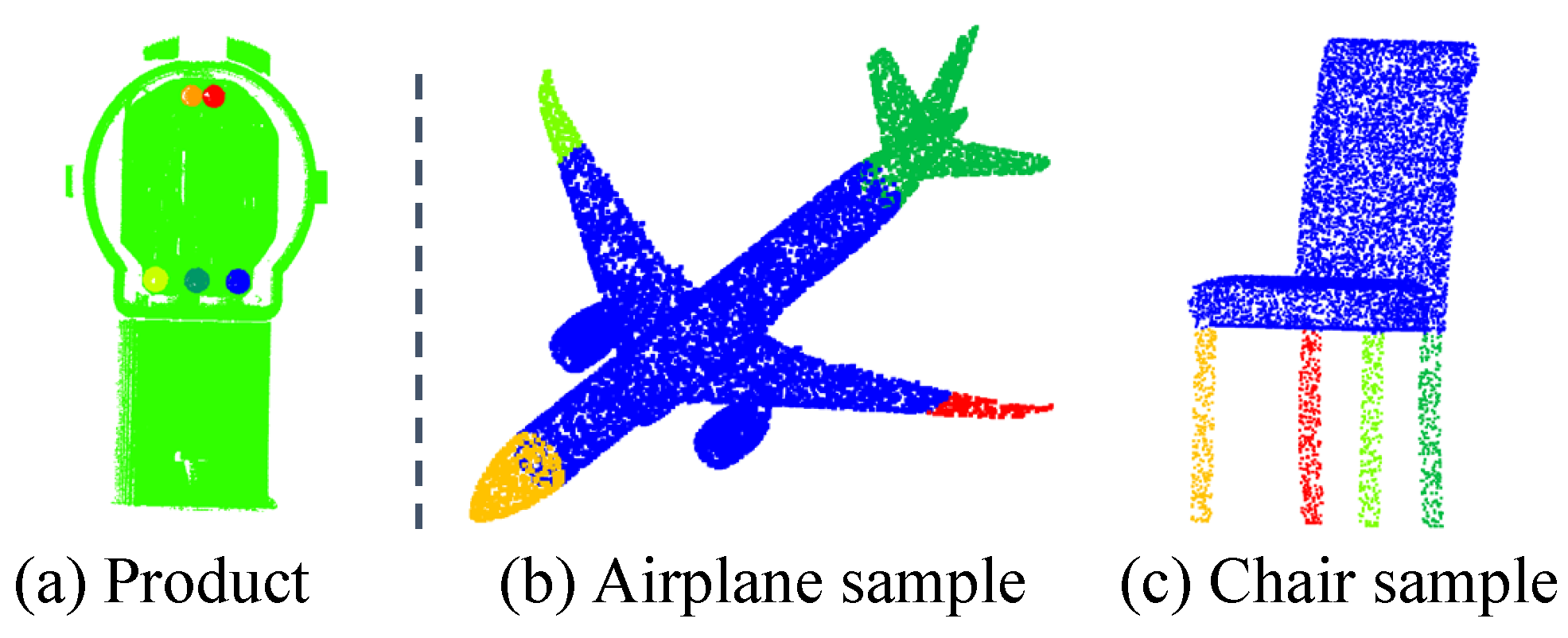

4.1. Datasets

4.2. Experimental Setup

4.3. Experimental Results

4.3.1. Segmentation-Stage Experiments

4.3.2. Classification-Stage Experiments

5. Conclusions and Future Work

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| LRF | Local Reference Frame |
| FSM | Focal Segmentation Module |
| SN-PAE | Semi-Nested Point Cloud Autoencoder |
| LAM | Latent Autoencoding Module |

References

- [1] Ko, J.H.; Yin, C. A Review of Artificial Intelligence Application for Machining Surface Quality Prediction: From Key Factors to Model Development. J. Intell. Manuf. 2025.

- [2] Guo, Y.; Wang, H.; Hu, Q.; Liu, H.; Liu, L.; Bennamoun, M. Deep Learning for 3D Point Clouds: A Survey. IEEE Trans. Pattern Anal. Mach. Intell. 2021, 43, 4338–4364.

- [3] Zhang, J.; Zhou, Z.; Sun, J. Center-Aware Instance Segmentation for Close Objects in Industrial 3-D Point Cloud Scenes. IEEE Trans. Ind. Inform. 2024, 20, 2812–2821.

- [4] Wei, C.; Bao, Y.; Zheng, C.; Ji, Z. AMFNet: Aggregated Multi-Level Feature Interaction Fusion Network for Defect Detection on Steel Surfaces. J. Intell. Manuf. 2025.

- [5] Saberironaghi, A.; Ren, J.; El-Gindy, M. Defect Detection Methods for Industrial Products Using Deep Learning Techniques: A Review. Algorithms 2023, 16, 95.

- [6] Wu, Z.; Song, S.; Khosla, A.; Yu, F.; Zhang, L.; Tang, X.; Xiao, J. 3D ShapeNets: A Deep Representation for Volumetric Shapes. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Boston, MA, USA, 7–12 June 2015; pp. 1912–1920.

- [7] Chen, Y.; Medioni, G. Object Modelling by Registration of Multiple Range Images. Image Vis. Comput. 1992, 10, 145–155.

- [8] Li, H.; Hao, K.; Wei, B.; Tang, X.S.; Hu, Q. A Reliable Solder Joint Inspection Method Based on a Light-Weight Point Cloud Network and Modulated Loss. Neurocomputing 2022, 488, 315–327.

- [9] Hu, Q.; Hao, K.; Wei, B.; Li, H. An Efficient Solder Joint Defects Method for 3D Point Clouds with Double-Flow Region Attention Network. Adv. Eng. Inform. 2022, 52, 101608.

- [10] Zhang, Z.; Hua, B.S.; Yeung, S.K. RIConv++: Effective Rotation Invariant Convolutions for 3D Point Clouds Deep Learning. Int. J. Comput. Vis. 2022, 130, 1228–1243.

- [11] Armeni, I.; Sener, O.; Zamir, A.R.; Jiang, H.; Brilakis, I.; Fischer, M.; Savarese, S. 3D Semantic Parsing of Large-Scale Indoor Spaces. In Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016; pp. 1534–1543.

- [12] Zhang, Z.; Gao, P.; Peng, S.; Duan, C.; Zhang, P. Enhanced Point Feature Network for Point Cloud Salient Object Detection. IEEE Signal Process. Lett. 2023, 30, 1617–1621.

- [13] Vu, T.; Kim, K.; Luu, T.M.; Nguyen, T.; Yoo, C.D. SoftGroup for 3D Instance Segmentation on Point Clouds. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 18–24 June 2022; pp. 2708–2717.

- [14] Wei, B.; Hao, K.; Gao, L.; Tang, X.S. Bioinspired Visual-Integrated Model for Multilabel Classification of Textile Defect Images. IEEE Trans. Cogn. Dev. Syst. 2021, 13, 503–513.

- [15] Wang, D.; Su, S.; Lu, X. RSD-Diff: Boundary-Frequency Feature-Enhanced Rail Surface Defect Inspection with Diffusion-Based Transformer Decoder. In Proceedings of the 2024 10th International Conference on Systems and Informatics (ICSAI), Shanghai, China, 14–16 December 2024; pp. 1–6.

- [16] Qin, T.; Chen, D.; Xiang, J.; Tian, Z. SP-YOLO: A Model for Detecting Solder Paste Printing-Defect in PCB. In Proceedings of the 2025 8th International Conference on Advanced Algorithms and Control Engineering (ICAACE), Shanghai, China, 21–23 March 2025; pp. 1251–1254.

- [17] Reddy, V.V.; Ume, I.C.; Williamson, J.; Sitaraman, S.K. Evaluation of the Quality of BGA Solder Balls in FCBGA Packages Subjected to Thermal Cycling Reliability Test Using Laser Ultrasonic Inspection Technique. IEEE Trans. Compon. Packag. Manuf. Technol. 2021, 11, 589–597.

- [18] Yan, H.; Zhang, H.; Gao, F. Transforming PCB Solder Joint Detection with Deep Learning Empowered X-ray Nondestructive Testing. In Proceedings of the 2024 IEEE Far East NDT New Technology & Application Forum (FENDT), Zhongshan, China, 24–27 June 2024; pp. 26–30.

- [19] Dai, W.; Mujeeb, A.; Erdt, M.; Sourin, A. Soldering Defect Detection in Automatic Optical Inspection. Adv. Eng. Inform. 2020, 43, 101004.

- [20] Oza, P.; Patel, V.M. One-Class Convolutional Neural Network. IEEE Signal Process. Lett. 2019, 26, 277–281.

- [21] Ruff, L.; Vandermeulen, R.; Goernitz, N.; Deecke, L.; Siddiqui, S.A.; Binder, A.; Müller, E.; Kloft, M. Deep One-Class Classification. Proc. Mach. Learn. Res. 2018, 80, 4393–4402.

- [22] Perera, P.; Nallapati, R.; Xiang, B. OCGAN: One-Class Novelty Detection Using GANs with Constrained Latent Representations. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 2898–2906.

- [23] Sabokrou, M.; Khalooei, M.; Fathy, M.; Adeli, E. Adversarially Learned One-Class Classifier for Novelty Detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 3379–3388.

- [24] Vincent, P.; Larochelle, H.; Bengio, Y.; Manzagol, P.A. Extracting and Composing Robust Features with Denoising Autoencoders. In Proceedings of the 25th International Conference on Machine Learning (ICML’08), Helsinki, Finland, 5–9 July 2008; pp. 1096–1103.

- [25] Masana, M.; Ruiz, I.; Serrat, J.; van de Weijer, J.; Lopez, A.M. Metric Learning for Novelty and Anomaly Detection. arXiv 2018, arXiv:1808.05492.

- [26] Perera, P.; Oza, P.; Patel, V.M. One-Class Classification: A Survey. arXiv 2021, arXiv:2101.03064.

- [27] Qi, C.R.; Su, H.; Mo, K.; Guibas, L.J. PointNet: Deep Learning on Point Sets for 3D Classification and Segmentation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 652–660.

- [28] Qi, C.R.; Yi, L.; Su, H.; Guibas, L.J. PointNet++: Deep Hierarchical Feature Learning on Point Sets in a Metric Space. In Proceedings of the Advances in Neural Information Processing Systems, Long Beach, CA, USA, 4–9 December 2017; pp. 5105–5114.

- [29] Wang, Y.; Ye, T.; Cao, L.; Huang, W.; Sun, F.; He, F.; Tao, D. Bridged Transformer for Vision and Point Cloud 3D Object Detection. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 18–24 June 2022; pp. 12114–12123.

- [30] Zhang, Q.; Hou, J. PointVST: Self-Supervised Pre-Training for 3D Point Clouds via View-Specific Point-to-Image Translation. IEEE Trans. Vis. Comput. Graph. 2024, 30, 6900–6912.

- [31] Zhang, C.; Wan, H.; Shen, X.; Wu, Z. PVT: Point-Voxel Transformer for Point Cloud Learning. Int. J. Intell. Syst. 2022, 37, 11985–12008.

- [32] Gojcic, Z.; Zhou, C.; Wegner, J.D.; Wieser, A. The Perfect Match: 3D Point Cloud Matching with Smoothed Densities. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 5545–5554.

- [33] Deng, H.; Birdal, T.; Ilic, S. PPFNet: Global Context Aware Local Features for Robust 3D Point Matching. In Proceedings of the 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 195–205.

- [34] Xu, M.; Ding, R.; Zhao, H.; Qi, X. PAConv: Position Adaptive Convolution with Dynamic Kernel Assembling on Point Clouds. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 20–25 June 2021; pp. 3173–3182.

- [35] Hinton, G.E.; Salakhutdinov, R.R. Reducing the Dimensionality of Data with Neural Networks. Science 2006, 313, 504–507.

- [36] Achlioptas, P.; Diamanti, O.; Mitliagkas, I.; Guibas, L. Learning Representations and Generative Models for 3D Point Clouds. Proc. Mach. Learn. Res. 2018, 80, 40–49.

- [37] Yang, Y.; Feng, C.; Shen, Y.; Tian, D. FoldingNet: Point Cloud Auto-Encoder via Deep Grid Deformation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–22 June 2018; pp. 206–215.

- [38] Chen, S.; Duan, C.; Yang, Y.; Li, D.; Feng, C.; Tian, D. Deep Unsupervised Learning of 3D Point Clouds via Graph Topology Inference and Filtering. IEEE Trans. Image Process. 2020, 29, 3183–3198.

- [39] Pang, J.; Li, D.; Tian, D. TearingNet: Point Cloud Autoencoder to Learn Topology-Friendly Representations. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 19–25 June 2021; pp. 7453–7462.

- [40] Li, H.; Hao, K.; Wei, B.; Tang, X.S. A Semi-Nested Point Cloud Autoencoder for Solder Joint Inspection. In Proceedings of the 2024 7th International Symposium on Autonomous Systems (ISAS), Chongqing, China, 7–9 May 2024; pp. 1–6.

- [41] Hu, Q.; Yang, B.; Xie, L.; Rosa, S.; Guo, Y.; Wang, Z.; Trigoni, N.; Markham, A. Learning Semantic Segmentation of Large-Scale Point Clouds with Random Sampling. IEEE Trans. Pattern Anal. Mach. Intell. 2021, 44, 8338–8354.

| Dataset | Sample | #Train | #Test | #Total |
|---|---|---|---|---|
| DHU-RADER1000-ICP | Normal | 919 | 256 | 1175 |
| DHU-RADER1000-ICP | Defective | 0 | 256 | 256 |
| ModelNet40-Toy-AC | Airplane | 40 | 12 | 52 |
| ModelNet40-Toy-AC | Chair | 20 | 6 | 26 |

| Partition | DHU-RADER1000-ICP #Train | DHU-RADER1000-ICP #Test | DHU-RADER1000-ICP #Total | DHU-PAD1000-ICP #Train | DHU-PAD1000-ICP #Test | DHU-PAD1000-ICP #Total |
|---|---|---|---|---|---|---|
| Normal | 919 | 256 | 1175 | 521 | 131 | 652 |
| Defective | 0 | 256 | 256 | 0 | 116 | 116 |
| #Total | 919 | 512 | 1431 | 521 | 247 | 768 |

| Method | Metric | DHU-RADER1000-ICP Train/% | DHU-RADER1000-ICP Test/% | ModelNet40-Toy-AC Train/% | ModelNet40-Toy-AC Test/% |
|---|---|---|---|---|---|
| PointNet++ (LRF) | IoU | 59.2 | 67.4 | 77.7 | 75.7 |
| RandLANet [41] | IoU | 63.2 | 69.7 | 81.6 | 87.4 |
| RIConv++ [10] | IoU | 61.5 | 68.1 | 79.3 | 87.1 |
| Recursive segmentation | IoU (k = 1) | 73.0 | 82.7 | 75.4 | 85.7 |
| Recursive segmentation | IoU (k = 2) | 67.4 | 68.1 | - | - |
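The segmentation results above are reported as intersection over union (IoU). For reference, a minimal sketch of IoU for one pair of binary point-wise masks is given below. How the reported figures are averaged over classes or samples is not stated in this excerpt, so the helper `binary_iou` and its convention for an empty union are assumptions.

```python
import numpy as np

def binary_iou(pred, target):
    """Intersection over union of two boolean point-wise masks."""
    pred = np.asarray(pred, dtype=bool)
    target = np.asarray(target, dtype=bool)
    intersection = np.logical_and(pred, target).sum()
    union = np.logical_or(pred, target).sum()
    # Convention assumed here: two empty masks agree perfectly.
    return 1.0 if union == 0 else float(intersection / union)

pred = [1, 1, 0, 0, 1]     # predicted foreground labels for five points
target = [1, 0, 0, 1, 1]   # ground-truth labels for the same points
iou = binary_iou(pred, target)
print(f"{100 * iou:.1f}%")  # prints "50.0%"
```

Here two points are foreground in both masks and four points are foreground in at least one, giving 2/4.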

| Method | #Points | Memory Cost/MB | Time Cost/ms | FLOPs | Params |
|---|---|---|---|---|---|
| PointNet++ (LRF) | | 1273 | 16.2 | 18.5 M | 0.564 M |
| PointNet++ (LRF) | | 1366 | 36.5 | 278.2 M | 0.564 M |
| PointNet++ (LRF) | | 2946 | 184.8 | 3949.8 M | 0.564 M |
| PointNet++ (LRF) | | 4788 | 2839.5 | 8112.1 M | 0.564 M |
| RandLANet | | 5598 | 421.3 | 15,456.8 M | 1.3 M |
| RandLANet | | 14,672 | 1249.1 | 46,373.6 M | 1.3 M |
| RIConv++ | | 3017 | 218.3 | 4125.8 M | 0.436 M |
| RIConv++ | | 4923 | 3074.2 | 8239.6 M | 0.436 M |
| Ours () | | 1205 | 10.3 | 21.3 M | 0.546 M |
| Ours () | | 1265 | 18.0 | 129.1 M | 0.614 M |
| Ours () | | 1369 | 70.4 | 265.3 M | 0.698 M |

| Model | / | | SD | / | | SD | / | | SD |
|---|---|---|---|---|---|---|---|---|---|
| DHU-PAD1000-ICP | | | | | | | | | |
| OCCNN [20] | 0 | 6.1 | - | 0 | 5.3 | - | 0 | 8.4 | - |
| FoldingNet [37] | 12.8 | 79.4 | - | 35.4 | 22.9 | - | 27.3 | 30.5 | - |
| TargetNet [39] | 13.7 | 80.9 | - | 29.5 | 32.8 | - | 28.1 | 37.4 | - |
| SN-PAE | 9.7 | 68.6 | - | 8.7 | 64.9 | - | 8.9 | 67.9 | - |
| DHU-RADER1000-ICP | | | | | | | | | |
| FoldingNet [37] | 14.6 | 75.3 | 0.62 | 28.3 | 17.6 | 14.7 | 24.1 | 35.8 | 5.3 |
| TargetNet [39] | 15.2 | 78.4 | 0.74 | 31.4 | 23.7 | 15.2 | 23.7 | 39.2 | 4.8 |
| SN-PAE | 6.97 | 73.8 | 0.49 | 7.12 | 73.8 | 0.51 | 6.93 | 73.9 | 0.43 |

| SN-PAE: LRF | SN-PAE: LAM | / | | SD | / | | SD | / | | SD |
|---|---|---|---|---|---|---|---|---|---|---|
| DHU-PAD1000-ICP | | | | | | | | | | |
| ✗ | ✗ | 13.5 | 81.7 | - | 38.4 | 18.3 | - | 33.6 | 24.4 | - |
| ✓ | ✗ | 12.6 | 78.4 | - | 12.8 | 78.2 | - | 12.6 | 78.4 | - |
| ✓ | ✓ | 9.7 | 68.6 | - | 8.7 | 64.9 | - | 8.9 | 67.9 | - |
| DHU-RADER1000-ICP | | | | | | | | | | |
| ✗ | ✗ | 14.2 | 76.9 | 0.67 | 26.1 | 16.4 | 13.7 | 20.7 | 42.6 | 4.6 |
| ✓ | ✗ | 13.5 | 76.1 | 0.52 | 13.6 | 76.3 | 0.53 | 13.5 | 76.1 | 0.52 |
| ✓ | ✓ | 6.97 | 73.8 | 0.49 | 7.12 | 73.8 | 0.51 | 6.93 | 73.9 | 0.43 |


© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Li, H.; Hao, K.; Zhuang, T.; Zhang, P.; Wei, B.; Tang, X.-s. A Robust 3D Fixed-Area Quality Inspection Framework for Production Lines. Processes 2025, 13, 3300. https://doi.org/10.3390/pr13103300
