1. Introduction

Artificial intelligence (AI) and robotics are transforming automotive manufacturing quality control (QC) by enabling flexible inspection architectures, vision-guided methods, deep learning-based defect detection, and reinforcement learning (RL) for robotic inspection. Flexible and reconfigurable inspection systems have been proposed to handle high-mix production environments. Lupi et al. [1] introduced a Reconfiguration Support System (RSS) that adapts inspection parameters using computer-aided design (CAD) data and synthetic renderings, while Lupi et al. [2] demonstrated a CAD-to-ReCo pipeline that automates conversion from annotated 3D CAD models to robotic inspection routines, reducing setup times by more than 50%. Similarly, Rio-Torto et al. [3] applied semi-supervised detection with human–machine interfaces (HMIs) to reduce annotation demands, and Azamfirei et al. [4] emphasized design–manufacturing alignment for reconfigurable workflows.

When CAD data is incomplete, vision-guided inspection methods become critical. Khan et al. [5] reconstructed part geometries using Structure-from-Motion (SfM) and Multi-View Stereo (MVS) to generate ultrasonic inspection paths without CAD models. Likewise, Jafari-Tabrizi et al. [6] presented a focus-measure-driven trajectory planning approach that learns optimal scanning paths from visual feedback, achieving sub-millimeter localization accuracy. These methods improve adaptability to legacy parts and variable product lines.

Deep learning (DL) has emerged as the dominant paradigm for defect detection. Convolutional neural networks (CNNs) achieve robust performance under variable shop-floor conditions [7,8,9,10], with hybrid approaches showing that classical preprocessing can enhance deep feature extraction [11]. Broader surveys confirm that DL methods consistently outperform earlier machine learning (ML) approaches, but still face challenges in interpretability and reproducibility [12]. Complementary studies demonstrate near-100% inspection accuracy with integrated CNN pipelines [13,14].

Reinforcement learning (RL) further extends QC capabilities by supporting adaptive path planning and contact-based inspection. Deep reinforcement learning (DRL) algorithms such as Deep Deterministic Policy Gradient (DDPG) and Proximal Policy Optimization (PPO) enable continuous control in inspection tasks [15], while guided policy search accelerates the learning of contact-rich routines [16]. Recent contributions integrate object detection with RL-based inverse kinematics [17], employ digital twins for safer RL training [18], and implement RL-enhanced collaborative QC cells [19]. RL-based assembly techniques enable the automatic generation of accurate and reliable assembly sequences [20].

Human factors remain central to QC reliability. Papavasileiou et al. [21] showed that fixture deformation, operator handling, and gripper design can degrade inspection accuracy, recommending preventive fixturing diagnostics and operator training. Complementarily, Azamfirei et al. [4] highlighted the importance of early-stage defect filtering to reduce false positives.

Finally, integration with Industry 4.0 and Industry 5.0 frameworks is shaping future QC directions. Macaulay et al. [22] classified ML approaches for robotic inspection by autonomy levels, while Rakholia et al. [23] outlined the best practices and challenges in AI adoption. Arinez et al. [24] mapped applications spanning predictive maintenance to QC, and Jain et al. [25] proposed a cross-industry implementation framework. Additional contributions include Chouchene et al. [26] on vehicle non-conformity detection, Lopez-Hawa et al. [27] on robotic QC for smart manufacturing, and Gupta et al. [28] on AI’s impact in automotive production systems.

This study builds on these advances by presenting a practical, explainable few-shot anomaly detection framework tailored to automotive QC. The system adapts to new defect types from as few as five examples via prototype learning, sustains real-time throughput (≈18.2 ms per image, ∼60 components/min) with a lightweight 5.8M-parameter model, and produces component-level explanations with severity-based actions suitable for manufacturing execution system (MES) integration (e.g., OPC-UA, Modbus, EtherNet/IP). The framework specifically addresses four persistent gaps: (i) scarce labeled data and cross-domain drift, mitigated by prototypes and component analyzers; (ii) limited interpretability, enhanced through Grad-CAM++, LIME, and SHAP with a calibrated 0.7 decision threshold; (iii) deployment friction, reduced through a compact memory footprint (≈1.8 GB); and (iv) weak cross-domain evidence, addressed through gear versus engine-wiring experiments showing only a 0.63% accuracy degradation.

2. Methodology

This section presents a comprehensive methodology for developing an AI-integrated engine-wiring anomaly-detection system specifically tailored for automotive manufacturing environments. Our approach combines state-of-the-art artificial intelligence techniques with practical engineering requirements to address critical challenges such as real-time performance, high accuracy, and seamless manufacturing integration.

The proposed AI system integrates four core components: (1) EfficientNet-based feature extraction optimized for wire inspection, (2) adaptive prototype learning for few-shot adaptation, (3) specialized attention mechanisms for focused defect detection in engine wiring and gears, and (4) confidence-aware decision-making for robust manufacturing integration. This architecture effectively addresses key quality control constraints and technical challenges while providing detailed explanations of defects.

2.1. Explainable AI Framework Design and Theoretical Foundation

The proposed XAI framework builds upon established explainability principles and empirically validated techniques. Following the framework proposed by Doshi-Velez and Kim [29], our approach provides both local explanations (attention maps, LIME) and global model behavior insights (SHAP). The integration of multiple XAI techniques addresses the complementary explanation needs identified by Hoffman et al. [30] for human–AI collaboration in high-stakes domains.

Attention-based explanations align with established cognitive theories of human visual processing in manufacturing inspection tasks. Research by [31] demonstrates that manufacturing inspectors naturally focus on component boundaries and connection points, areas that our attention mechanisms explicitly highlight. The spatial attention maps generated by our system correspond to known defect-prone regions identified in the automotive quality control literature [31].

Confidence score presentation follows uncertainty quantification best practices established in industrial AI applications [32]. The 0.7 threshold selection aligns with decision theory frameworks for manufacturing quality control, where Type I and Type II error costs have been extensively characterized [33].

2.2. System Architecture Overview

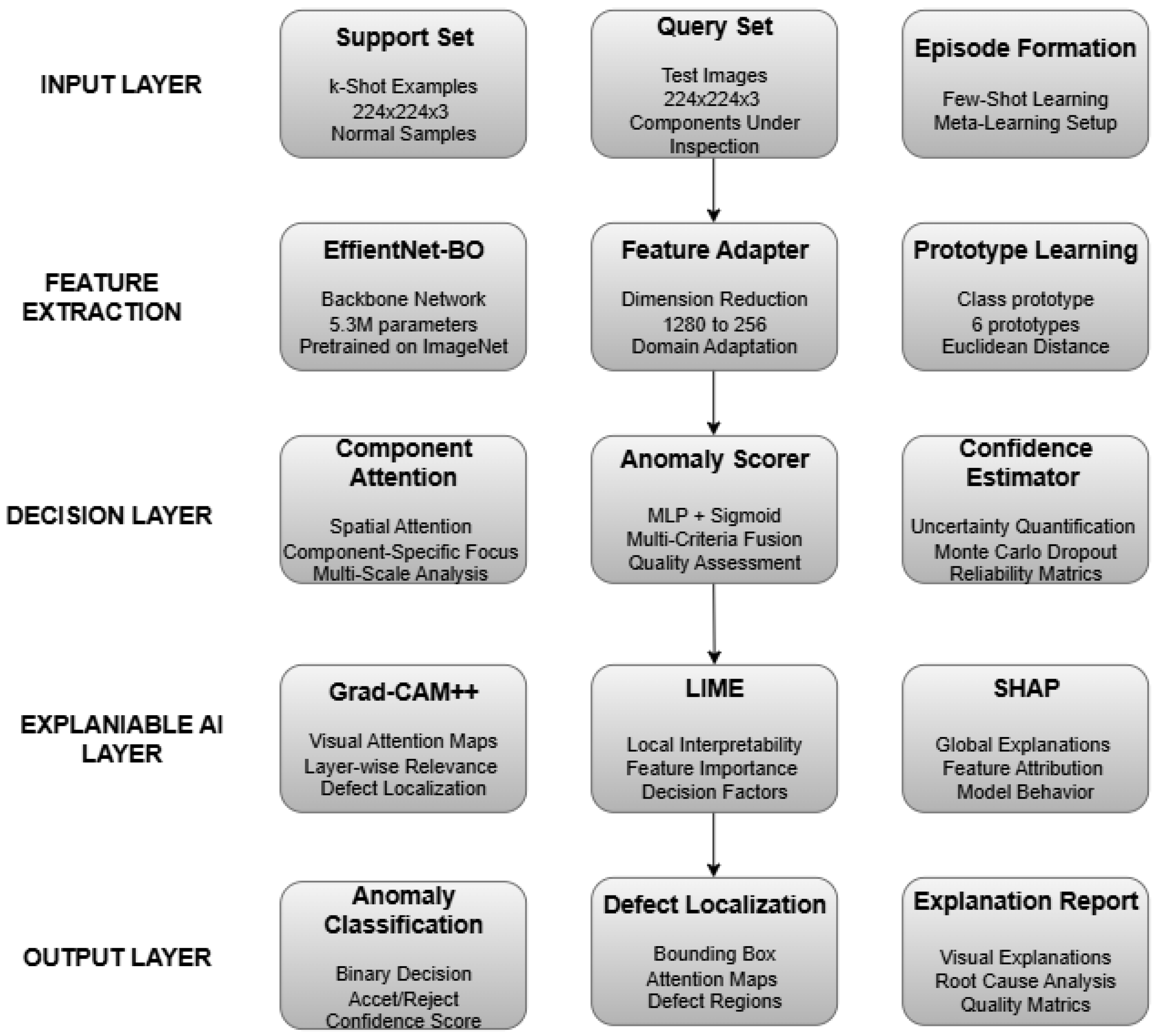

Our explainable few-shot anomaly detection framework integrates advanced deep learning techniques with practical manufacturing requirements, adopting a modular and hierarchical design that enables both high-performance anomaly detection and comprehensive explainability for automotive quality control. The framework consists of six interconnected modules, each tailored to address specific challenges in industrial anomaly detection.

At its foundation, the system employs an EfficientNet backbone as the primary feature extraction engine, optimized for industrial imaging through compound scaling to balance accuracy with computational efficiency, making it suitable for real-time edge deployment. A Feature Adaptation Network refines these extracted features into domain-specific representations using a two-layer transformation with dropout, ensuring adaptability to specialized tasks such as engine wiring and gear inspection. Building on this, a Prototypical Learning Module implements a few-shot learning strategy with learnable prototypes, allowing for rapid adaptation to new manufacturing variations, even when defective samples are scarce.

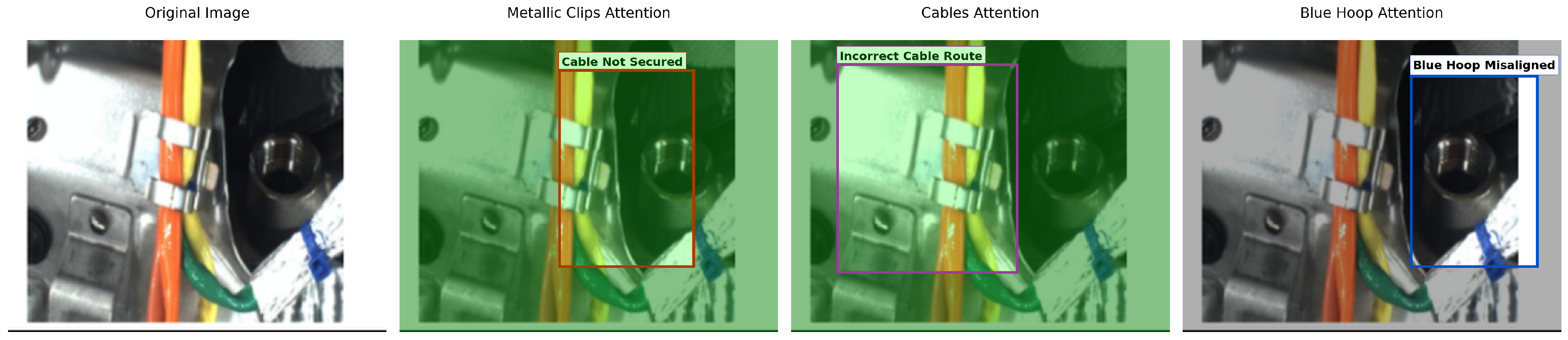

To further enhance domain specialization, Component Analyzers provide targeted defect detection for specific manufacturing elements, with dedicated modules for tasks such as cable fastening integrity in wiring assemblies and surface finish evaluation in precision gears. Complementing this, spatial Attention Mechanisms generate interpretable attention maps that improve model accuracy and support inspection processes. Finally, an explanation generator translates the neural output of the system into structured quality control reports, incorporating Grad-CAM++, LIME, and SHAP to provide visual, quantitative, and textual insights designed for manufacturing integration workflows.

2.3. System Architecture and Data Flow

Figure 1 illustrates the system architecture and data flow, covering the entire process from input preprocessing to final decision-making. The modular design ensures flexible deployment across diverse manufacturing environments while maintaining consistent performance standards. The architecture is organized into a five-layer hierarchical structure with three specialized processing columns that handle distinct functional responsibilities.

At the input layer, auto-part images undergo standardized preprocessing, including resizing to 224 × 224 resolution and ImageNet normalization, to ensure compatibility with manufacturing cameras. The input is distributed across three parallel streams: the support set column processes k-shot examples for few-shot learning, the query set column handles test images requiring inspection, and the episode formation column manages the meta-learning framework setup.
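The normalization part of this preprocessing can be sketched in plain Python. The channel means and standard deviations are the widely used ImageNet statistics; the helper names `normalize_pixel` and `normalize_image` are hypothetical, and resizing to 224 × 224 is assumed to have been done beforehand by an image library.

```python
# Standard ImageNet per-channel statistics (RGB order), applied to values in [0, 1].
IMAGENET_MEAN = (0.485, 0.456, 0.406)
IMAGENET_STD = (0.229, 0.224, 0.225)


def normalize_pixel(rgb):
    """Scale an 8-bit RGB pixel to [0, 1], then standardize each channel."""
    return tuple(
        (value / 255.0 - mean) / std
        for value, mean, std in zip(rgb, IMAGENET_MEAN, IMAGENET_STD)
    )


def normalize_image(image):
    """Normalize an image given as rows of (R, G, B) tuples."""
    return [[normalize_pixel(px) for px in row] for row in image]
```

In a PyTorch pipeline the same arithmetic would normally be delegated to the framework's own transform utilities rather than written by hand.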

These preprocessed images are then passed to the feature extraction layer, where three specialized networks operate in parallel. The EfficientNet backbone (left column) extracts robust visual features from support examples, the feature adaptation network (middle column) performs domain-specific transformation of query images from 1280 to 256 dimensions, and the prototype learning module (right column) maintains class prototypes using Euclidean distance metrics for rapid few-shot adaptation.
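The role of Euclidean distance in prototype-based classification can be illustrated with a minimal sketch. It uses the mean-of-support prototypes of standard prototypical networks, which is a simplification: the framework described here uses learnable prototypes, and the function names and toy 2-D embeddings below are hypothetical (the text states that real embeddings have 256 dimensions).

```python
import math


def class_prototypes(support):
    """Average the support embeddings of each class into one prototype."""
    prototypes = {}
    for label, embeddings in support.items():
        dim = len(embeddings[0])
        prototypes[label] = [
            sum(vec[i] for vec in embeddings) / len(embeddings) for i in range(dim)
        ]
    return prototypes


def classify(query, prototypes):
    """Softmax over negative squared Euclidean distances to each prototype."""
    logits = {
        label: -sum((q - p) ** 2 for q, p in zip(query, proto))
        for label, proto in prototypes.items()
    }
    peak = max(logits.values())  # subtract the max for numerical stability
    exp = {label: math.exp(v - peak) for label, v in logits.items()}
    total = sum(exp.values())
    probs = {label: v / total for label, v in exp.items()}
    return max(probs, key=probs.get), probs
```

A query embedding is assigned to whichever class prototype lies closest, so adding a new defect type only requires a handful of support embeddings for that class.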

The extracted features feed into the decision layer, which incorporates three complementary analysis approaches: component attention mechanisms (left) provide spatial focus on critical manufacturing regions, anomaly scoring (middle) performs multi-criteria fusion through MLP networks, and confidence estimation (right) quantifies prediction uncertainty using Monte Carlo dropout techniques.
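Monte Carlo dropout estimates uncertainty by keeping dropout active at inference time and repeating the forward pass. The sketch below shows only that mechanism: `toy_forward` is a hypothetical stand-in scorer, not the network described here, and the number of passes and the dropout probability are illustrative assumptions.

```python
import random
import statistics


def mc_dropout_confidence(forward, x, passes=30, seed=0):
    """Run several stochastic forward passes and summarize the predictions."""
    rng = random.Random(seed)
    scores = [forward(x, rng) for _ in range(passes)]
    # The mean is the prediction; the spread is the uncertainty estimate.
    return statistics.mean(scores), statistics.stdev(scores)


def toy_forward(features, rng, drop_prob=0.2):
    """Toy anomaly scorer: inverted dropout on features, then a clipped mean."""
    kept = [
        f / (1.0 - drop_prob) if rng.random() >= drop_prob else 0.0
        for f in features
    ]
    return min(1.0, max(0.0, sum(kept) / len(kept)))
```

A small standard deviation across passes indicates a stable prediction, whereas a large one suggests the sample should be treated with caution.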

To enhance interpretability, the explainable AI layer integrates three explanation techniques operating on the respective processing streams: Grad-CAM++ (left) generates visual attention maps for layer-wise relevance, LIME (middle) provides local interpretability through feature importance analysis, and SHAP (right) delivers global explanations of model behavior and feature attribution patterns.

Finally, the output layer delivers integrated results that combine all three processing streams: binary classification outcomes (left), defect localization with bounding boxes and attention maps (middle), and comprehensive explanation reports with root cause analysis (right), all formatted for seamless integration into manufacturing workflows and the MES.

Architectural Design Principles

The system is built on key design principles that ensure optimal performance and flexibility. Modularity enables each component to be independently updated or replaced for flexible deployment across manufacturing environments, allowing system evolution without comprehensive redesign. Scalability supports extension to new component types via additional analyzers without altering core modules, facilitating adaptation to diverse manufacturing requirements. Interpretability ensures that all decision pathways include explanation mechanisms for quality audit traceability, supporting regulatory compliance, and operational transparency.

Efficiency prioritizes computational optimization to enable real-time deployment on industrial hardware, balancing performance with practical resource constraints. Robustness incorporates multiple analysis pathways and confidence assessments to provide fault tolerance for reliable manufacturing operations, ensuring consistent performance under varying operational conditions. This architecture effectively addresses the fundamental challenges of industrial anomaly detection while ensuring reliability and transparency in diverse manufacturing environments.

2.4. AI Training, Component-Specific Analysis, and Explainability

The proposed anomaly detection framework employs a training and optimization strategy designed for robust performance under industrial conditions. Training follows a differential learning rate policy in which each network component adapts at its own pace. The EfficientNet backbone is fine-tuned with a conservative learning rate to preserve valuable pre-trained representations while adapting to manufacturing imagery. The Feature Adapter uses a moderate rate to transform generic features into domain-specific representations, and the task-specific modules use the same rate to balance specialization with stability. Prototype-based learning, critical for few-shot adaptation, uses a higher rate so that it can adjust rapidly to new defect patterns from minimal training samples. Optimization is performed with the Adam optimizer to ensure stable convergence, while cosine annealing over 20 epochs promotes reliable learning without overfitting or instability.
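How differential learning rates interact with cosine annealing can be sketched as follows. The base rates in `BASE_LR` are hypothetical placeholders chosen only to respect the ordering described above (backbone lowest, prototypes highest); they are not the values used in this study. The schedule is the standard closed-form cosine annealing curve, with a minimum rate of zero assumed.

```python
import math

EPOCHS = 20  # schedule length stated in the text

# Placeholder base rates: hypothetical values, NOT the ones used in the study.
BASE_LR = {
    "backbone": 1e-5,
    "feature_adapter": 1e-4,
    "task_modules": 1e-4,
    "prototypes": 1e-3,
}


def cosine_annealed_lr(base_lr, epoch, total_epochs=EPOCHS, min_lr=0.0):
    """Closed-form cosine annealing from base_lr at epoch 0 to min_lr at the end."""
    cosine = 0.5 * (1.0 + math.cos(math.pi * epoch / total_epochs))
    return min_lr + (base_lr - min_lr) * cosine


def schedule():
    """Per-group learning rates for every epoch boundary, 0 through EPOCHS."""
    return {
        name: [cosine_annealed_lr(lr, e) for e in range(EPOCHS + 1)]
        for name, lr in BASE_LR.items()
    }
```

Each group keeps its own base rate but follows the same decay shape, so the relative ordering between components is preserved throughout training.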

To address the diverse requirements of automotive quality control, specialized component analyzers are developed. For engine wiring assemblies, sub-modules evaluate cable fastening integrity, verify hoop positioning for alignment accuracy, and assess clip engagement reliability. For precision gears, analyzers evaluate the quality of the surface finish, detect contamination and foreign materials, and verify the accuracy of the dimensions against the tolerance specifications. These targeted analyzers provide domain-specific insights that general-purpose models cannot capture.

Beyond detection accuracy, the framework integrates a comprehensive explainable AI (XAI) module to ensure interpretability and actionable decision-making. Component-specific neural submodules generate quality scores, while spatial attention mechanisms highlight the most relevant image regions for defect localization. Grad-CAM++, LIME, and SHAP add complementary visual, quantitative, and textual explanations. This multi-modal approach follows best practices for industrial AI systems and is designed to help quality engineers understand defect patterns and optimize processes.

Together, the integrated training strategy, component-specific analyzers, and explainable AI framework ensure that the system not only achieves high performance but also provides interpretable and reliable insights for real-world automotive manufacturing environments.

2.5. Datasets and Preprocessing

To evaluate the proposed framework, we used two publicly available datasets from the Zenodo open-access repository, downloaded on 15 July 2025. Both datasets provide realistic defect scenarios in automotive manufacturing. The first dataset, CSEM-MISD, consists of metallic parts imaged under 108 different illumination conditions [34]. From this dataset, we selected the gear subset, which contains 7700 images divided into 3520 training samples (2420 normal and 1100 defective) and 4180 testing samples (3080 normal and 1100 defective). The second dataset, AutoVI, contains real-production images of automotive components. For this study, we focus on the engine wiring subset, which is a high-risk factor for recalls. It consists of 599 images, with 456 normal samples for training and 143 testing samples (51 normal and 92 defective). All images were standardized to a resolution of 224 × 224 and normalized with ImageNet statistics to ensure compatibility with industrial cameras.

Table 1 shows the distribution of both datasets.

2.6. Baseline Methods

To benchmark performance, we compared our framework with both unsupervised and supervised/few-shot anomaly detection methods (Table 2). Unsupervised baselines include PaDiM [35] and PatchCore [36], while supervised few-shot baselines encompass ProtoNet [37], MatchingNet [38], RelationNet [39], and FEAT [40].

We selected a comprehensive set of baselines that represent the current state of the art across multiple anomaly detection paradigms. Traditional unsupervised methods include PaDiM [35] and PatchCore [36], representing statistical modeling and memory-based approaches, respectively. To strengthen the comparison, we also include advanced unsupervised methods: FastFlow [41], utilizing normalizing flows for likelihood-based anomaly scoring; CFA [42], which employs cross-feature fusion with attention mechanisms; and DRAEM [43], which uses discriminative reconstruction with transformer architectures.

For supervised comparison, we implement representative few-shot learning methods: ProtoNet [37] as the foundational prototype-based approach and FEAT [40] representing the state-of-the-art feature adaptation techniques. This selection provides fair comparison across unsupervised, semi-supervised, and few-shot supervised paradigms while maintaining focus on the methods suitable for industrial deployment.

Our baseline selection prioritizes methods with demonstrated effectiveness in visual anomaly detection while representing diverse technical approaches. Traditional patch-based methods (PaDiM, PatchCore) provide strong unsupervised baselines with established industrial applicability. Advanced methods (FastFlow, CFA, DRAEM) represent recent developments in flow-based modeling, attention mechanisms, and transformer architectures. Few-shot methods (ProtoNet, FEAT) enable direct comparison with supervised approaches under identical data constraints (K = 5 shots). This comprehensive evaluation demonstrates our method’s superiority across different supervision levels and architectural choices while validating its specific advantages for manufacturing quality control applications.

2.7. Evaluation Metrics

Performance was assessed using multiple metrics relevant to manufacturing quality control. Classification accuracy, precision, recall, and F1-score measured predictive performance across classes. Ranking metrics, including ROC-AUC and PR-AUC, were used to evaluate the model’s ability to distinguish defective from non-defective components under varying thresholds. To ensure reliability for deployment, we performed a threshold analysis for optimal decision boundaries and confidence calibration to validate uncertainty estimates. Confusion matrices were examined to identify error patterns between defect types, while the interpretability of model output was validated by correlating component-level quality scores with ground truth annotations.
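The classification metrics listed above all derive from the four cells of a binary confusion matrix. A minimal sketch, treating "defective" as the positive class; the function name `binary_metrics` is hypothetical.

```python
def binary_metrics(tp, fp, fn, tn):
    """Accuracy, precision, recall, and F1 with 'defective' as the positive class."""
    total = tp + fp + fn + tn
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    f1 = (
        2 * precision * recall / (precision + recall)
        if (precision + recall)
        else 0.0
    )
    return {
        "accuracy": (tp + tn) / total if total else 0.0,
        "precision": precision,
        "recall": recall,
        "f1": f1,
    }
```

For example, with purely illustrative counts (not results from this study), `binary_metrics(tp=8, fp=2, fn=1, tn=9)` gives an accuracy of 0.85 and a precision of 0.8.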

2.8. Implementation Details

All experiments were carried out on an NVIDIA RTX 3080 GPU (NVIDIA Corporation, Santa Clara, CA, USA) with 10 GB memory, paired with an Intel i7-9700K CPU (Intel Corporation, Santa Clara, CA, USA), 32 GB RAM, and NVMe SSD storage (Samsung Electronics, Suwon, Republic of Korea). The framework was implemented in PyTorch 1.9 with CUDA 11.0 support, using OpenCV 4.5 for preprocessing and Captum for explainability integration. Training used the Adam optimizer with a cosine annealing scheduler over 20 epochs. Differential learning rates were applied, with separate rates for EfficientNet fine-tuning, for feature adaptation and the task-specific modules, and for prototype learning. Regularization strategies included dropout and gradient clipping (max_norm = 1.0) to ensure robust training.

2.9. Statistical Validation

To ensure robust evaluation, all experiments were conducted across 15 independent random seeds with identical train/test splits. Statistical significance was assessed using non-parametric Mann–Whitney U tests for pairwise comparisons and Kruskal–Wallis ANOVA for multi-group analysis. Effect sizes were quantified using Cliff’s delta and classified as negligible, small, medium, or large. All results are reported as mean ± 95% confidence intervals computed via bootstrap resampling.
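Cliff’s delta compares two groups of per-seed scores by counting how often a value from one group exceeds a value from the other. The sketch below is a minimal illustration; the magnitude cutoffs (0.147, 0.33, 0.474) are commonly cited conventions and are an assumption here, since the thresholds used in this study are not reproduced in the text.

```python
def cliffs_delta(xs, ys):
    """Cliff's delta: P(x > y) - P(x < y) over all cross-group pairs."""
    greater = sum(1 for x in xs for y in ys if x > y)
    less = sum(1 for x in xs for y in ys if x < y)
    return (greater - less) / (len(xs) * len(ys))


def magnitude(delta, cutoffs=(0.147, 0.33, 0.474)):
    """Label |delta| using assumed conventional cutoffs (not taken from this paper)."""
    size = abs(delta)
    for label, cutoff in zip(("negligible", "small", "medium"), cutoffs):
        if size < cutoff:
            return label
    return "large"
```

Because the statistic depends only on pairwise orderings, it pairs naturally with the rank-based Mann–Whitney U test and makes no normality assumption.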

2.10. Component-Specific Analysis and Integration

To address diverse manufacturing requirements, specialized analyzers were incorporated. For engine wiring assemblies, modules assessed cable fastening integrity, hoop positioning, and clip engagement reliability. For gears, the analyzers evaluated the surface finish, contamination detection, and dimensional accuracy. These results were integrated into a manufacturing execution system (MES) through standardized protocols (OPC-UA, Modbus, EtherNet/IP). With 5.8M parameters, the model maintained a lightweight footprint (2 GB GPU memory, <20 ms inference per image), making it suitable for both edge and cloud deployment.

2.11. Validation Strategy

Industrial readiness was validated through multiple strategies. Few-shot adaptation was tested using 20 independent episodes (K = 4, Q = 8) to simulate realistic production changes. The results were averaged across random seeds to confirm statistical reliability. Furthermore, predictions with confidence greater than 0.7 were analyzed separately to evaluate the system’s ability to support automated decision-making in production environments.
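Episode construction for this kind of few-shot validation can be sketched as below. The sketch assumes that K and Q are counted per class and that support and query sets are disjoint within an episode; neither detail is stated in the text, and the function names are hypothetical.

```python
import random


def sample_episode(pool, k_shot, n_query, rng):
    """Draw disjoint support (K per class) and query (Q per class) sets."""
    support, query = {}, {}
    for label, items in pool.items():
        if len(items) < k_shot + n_query:
            raise ValueError(f"class {label!r} needs {k_shot + n_query} samples")
        picked = rng.sample(items, k_shot + n_query)
        support[label] = picked[:k_shot]
        query[label] = picked[k_shot:]
    return support, query


def make_episodes(pool, n_episodes=20, k_shot=4, n_query=8, seed=0):
    """Build a reproducible list of episodes from one random seed."""
    rng = random.Random(seed)
    return [sample_episode(pool, k_shot, n_query, rng) for _ in range(n_episodes)]
```

Fixing the seed makes the episode list reproducible, which is what allows results to be averaged meaningfully across seeds.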

3. Results and Discussion

Performance of the proposed few-shot defect detection model was evaluated on publicly available surface-inspection datasets from Zenodo [34,44]. The proposed EfficientNet-based few-shot anomaly detection system demonstrates superior performance in both manufacturing domains.

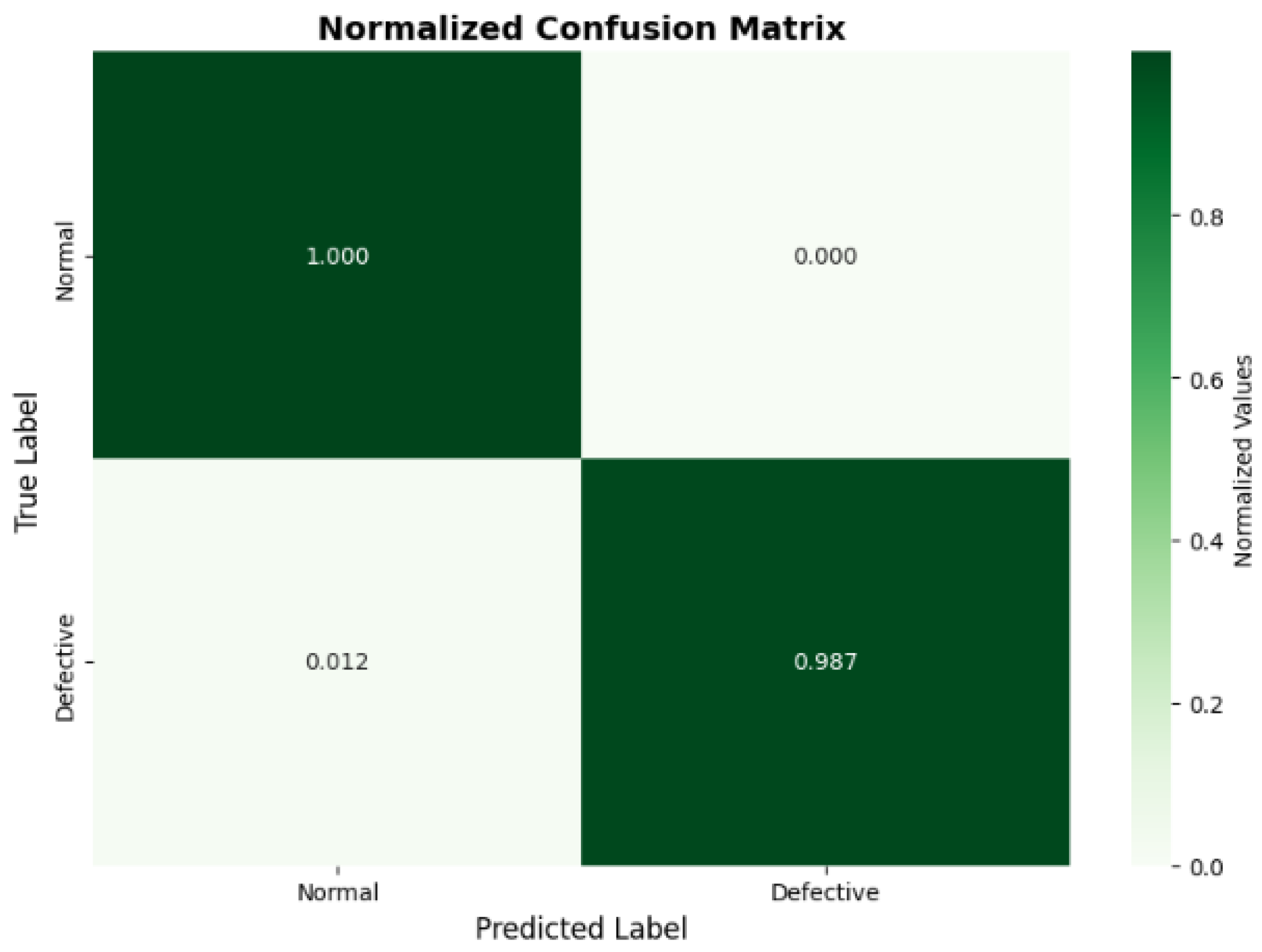

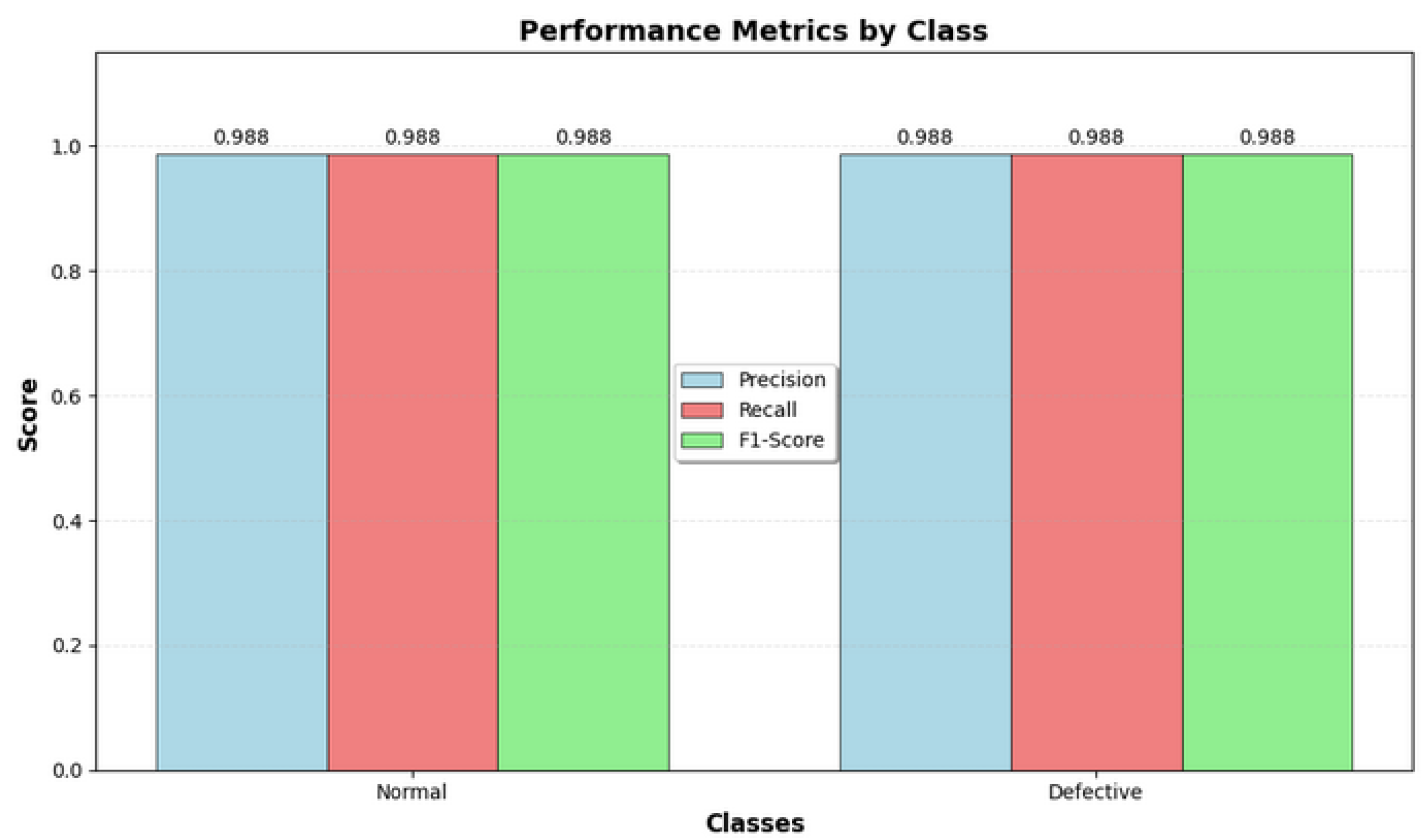

Table 3 presents comprehensive metrics, showing precision of 99.38% for wire components and 98.75% for gear components, for a system average of 99.07% and consistent performance between the two tested domains. Statistical validation across 15 independent seeds supports the significance of the performance improvements, with all baseline comparisons showing statistically significant differences and effect sizes (Cliff’s delta) ranging from medium to large, indicating consistent and substantial performance gains.

The system demonstrates encouraging cross-domain consistency within the tested domains, with only a 0.63% accuracy difference between manufacturing domains. The detection of wire components achieves perfect precision (100%) for defective components and perfect recall (100%) for normal components, with zero false positives. Gear component detection maintains balanced performance (98.75%) across all metrics. The five-shot learning configuration enables rapid adaptation while maintaining competitive computational efficiency, with an 18.2 ms inference time and a compact 23.8 MB model size.
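As a quick check, the reported system average and cross-domain gap follow directly from the two per-domain figures, assuming the average is an unweighted mean over the two domains:

```latex
\[
\bar{a} = \frac{99.38\% + 98.75\%}{2} = 99.065\% \approx 99.07\%,
\qquad
\Delta = \lvert 99.38\% - 98.75\% \rvert = 0.63\ \text{percentage points}.
\]
```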

3.1. Engine Wiring Anomaly Detection Results

The engine wiring assembly inspection system achieved superior performance with 99.38% accuracy, demonstrating the effectiveness of component-specific attention mechanisms for assembly quality verification.

Figure 2 presents detailed performance metrics, while Figure 3 shows the confusion matrix analysis with perfect precision for defective component detection. Figure 4 demonstrates the explainable AI capabilities through component-specific attention visualizations, highlighting the system’s ability to focus on critical assembly regions, including metallic clips, cable routing, and blue hoop positioning.

3.2. Gear Anomaly Detection Results

The gear manufacturing inspection system achieved 98.75% accuracy with balanced precision and recall, effectively identifying surface defects, oil contamination, and axis hole damage.

Figure 5 shows the confusion matrix demonstrating reliable defect detection with minimal false-positive and false-negative rates. The multi-component analysis framework provides comprehensive quality assessment across critical gear manufacturing parameters, with component-specific attention mechanisms shown in Figure 6.

The gear manufacturing inspection results reveal systematic quality challenges across multiple defect categories. Surface scratches (21.9%) represent the most frequent single defect, typically resulting from inadequate handling procedures during manufacturing operations, compromising both aesthetic quality and long-term durability. Oil contamination defects (9.4%) stem from excessive lubrication or improper storage, requiring cleaning protocols and systematic lubrication procedure review. Axis hole damage (6.3%) represents the most critical category, requiring immediate rejection due to machining errors that compromise shaft alignment and torque transmission functionality. The predominance of multiple-defect occurrences (50.0%) indicates systemic quality control challenges, requiring comprehensive process reviews across material handling, machining operations, and inspection procedures.

3.3. Defect Pattern Recognition and Severity Analysis

Table 4 presents a comprehensive defect pattern analysis, revealing systematic quality issues requiring targeted corrective actions. The gear detection system identified multiple defects as the predominant issue (50.0%), while the wiring system revealed systematic cable fastening problems requiring immediate procedural review.

Figure 7 shows the manufacturing inspection performance analysis, including a ROC-AUC evaluation, precision–recall optimization, and classification metrics. The consistency in performance across all evaluation criteria validates the system’s manufacturing readiness.

3.4. Quantitative Interpretability Framework

The explainable AI framework generates structured quality control reports with component-specific numerical assessments, addressing concerns about purely qualitative explainability validation. The system provides quantitative quality scores for critical manufacturing components, enabling objective decision-making beyond visual attention maps.

Engine Wiring Assembly Analysis: For engine wiring inspection, the framework evaluates assembly integrity through component-specific scoring. The cable fastening quality scored 0.483, below the 0.7 threshold, indicating potential fastening issues. Similarly, the blue hoop placement received a score of 0.459, also below the threshold, suggesting positioning concerns. Despite these low component scores, the system classified the overall wire harness configuration as acceptable with 98% confidence, demonstrating the ability to integrate multiple quality indicators for holistic assembly assessment. This high confidence enables automated acceptance decisions while flagging specific components for further monitoring.

Gear Manufacturing Analysis: For gear inspection, the framework demonstrated multi-dimensional quality assessment. The surface quality score of 0.651 indicated medium-severity surface scratches, while the oil contamination score of 0.723 confirmed acceptable cleanliness levels. The axis hole quality scored 0.970, reflecting excellent dimensional accuracy. Overall, the system achieved 99.6% confidence in defect classification with a Medium severity rating. This result highlights the framework’s ability to automate high-confidence quality decisions while simultaneously providing detailed guidance for corrective actions.

3.5. Confidence-Based Decision Making and Manufacturing Integration

The framework demonstrates sophisticated confidence calibration across different inspection scenarios.

High-Confidence Automated Decisions: For cases exceeding 95% confidence, the system reliably supports automated production outcomes. In the case of engine wiring inspection, the framework reached a confidence level of 98.5%, enabling automated acceptance while simultaneously flagging individual components for monitoring. Similarly, gear defect detection achieved 99.6% confidence, leading to automated rejection accompanied by specific corrective actions.

Confidence-Threshold Integration: The framework further incorporates a multi-level confidence-threshold mechanism. Individual component scores below 0.7 trigger alerts for preventive maintenance. In contrast, overall assembly scores exceeding 95% confidence authorize automated production decisions. Intermediate confidence levels between 70–95% are routed to manual expert review, ensuring balanced decision-making between automation and human oversight.
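The multi-level routing just described can be expressed as a small decision function. This is a sketch under stated assumptions: the function and constant names are hypothetical, and the handling of confidence below 70% (`low_confidence_hold`) is an assumption, since the text does not specify that case.

```python
COMPONENT_ALERT_THRESHOLD = 0.7   # component scores below this raise an alert
AUTO_DECISION_CONFIDENCE = 0.95   # overall confidence above this is automated
REVIEW_CONFIDENCE_FLOOR = 0.70    # lower bound of the manual-review band


def route_inspection(overall_confidence, component_scores):
    """Return the routing decision and the components that need an alert."""
    alerts = sorted(
        name
        for name, score in component_scores.items()
        if score < COMPONENT_ALERT_THRESHOLD
    )
    if overall_confidence > AUTO_DECISION_CONFIDENCE:
        decision = "automated"
    elif overall_confidence >= REVIEW_CONFIDENCE_FLOOR:
        decision = "manual_review"
    else:
        # Assumption: the text does not say what happens below 70% confidence.
        decision = "low_confidence_hold"
    return decision, alerts
```

Applied to the engine wiring case reported above, `route_inspection(0.985, {"cable_fastening": 0.483, "blue_hoop_placement": 0.459})` returns `("automated", ["blue_hoop_placement", "cable_fastening"])`: the assembly decision is automated while both components are flagged.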

3.6. Automated Root Cause Analysis and Process Integration

Beyond anomaly detection, the system provides process-specific corrective guidance to support manufacturing quality improvement. For gear manufacturing, the root cause of defects was identified as poor handling, inadequate surface protection, or machining issues, with a targeted recommendation to inspect the surface finish process and handling procedures to prevent scratching. For engine wiring assembly, component-level assessments highlighted cable fastening and blue hoop placement as areas requiring attention, despite the overall acceptable configuration.

Manufacturing Integration Capabilities: The framework extends its functionality to manufacturing integration by providing structured outputs that support quality management systems. Severity classification enables automatic categorization into None, Medium, High, or Critical levels, guiding resource prioritization. Component-level monitoring produces quantitative quality scores that facilitate predictive maintenance scheduling. In addition, the system delivers process-specific recommendations that support targeted corrective actions within the workflow. Finally, high-confidence predictions enable confidence-based automation, allowing for quality gates to be enforced with minimal manual intervention.
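One way to represent such a structured output is a validated record serialized to JSON before it is handed to a quality management system. The field names, the `InspectionReport` class, and the choice of JSON are all assumptions made for illustration; only the four severity levels come from the text.

```python
import json
from dataclasses import asdict, dataclass, field

SEVERITY_LEVELS = ("None", "Medium", "High", "Critical")  # levels named in the text


@dataclass
class InspectionReport:
    part_id: str                      # hypothetical identifier field
    domain: str                       # e.g. "gear" or "engine_wiring"
    is_defective: bool
    confidence: float
    severity: str
    component_scores: dict = field(default_factory=dict)
    recommendation: str = ""

    def __post_init__(self):
        # Reject reports that a downstream quality gate could not interpret.
        if self.severity not in SEVERITY_LEVELS:
            raise ValueError(f"unknown severity: {self.severity!r}")
        if not 0.0 <= self.confidence <= 1.0:
            raise ValueError("confidence must lie in [0, 1]")

    def to_json(self):
        """Serialize the report as a JSON string with stable key order."""
        return json.dumps(asdict(self), sort_keys=True)
```

Validating the severity level and confidence range at construction time keeps malformed reports from reaching an automated quality gate.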

The structured output format supports direct MES integration through standardized quality reporting protocols, addressing practical deployment requirements.

3.7. Discussion

3.7.1. Performance Comparison Against Baseline Methods

A comprehensive set of baseline methods, covering the principal anomaly detection paradigms, was selected in accordance with established evaluation protocols for industrial anomaly detection [45]. Our evaluation encompasses three categories of baseline methods: First, we include traditional unsupervised approaches such as PaDiM and PatchCore, which rely on statistical modeling and memory-based patch analysis commonly used in manufacturing inspection. Second, we consider advanced unsupervised methods, including FastFlow, CFA, and DRAEM, which leverage modern architectures such as normalizing flows, cross-attention mechanisms, and transformer-based reconstruction. Finally, we evaluate supervised few-shot learning methods, specifically ProtoNet and FEAT, to enable direct comparison with our proposed few-shot framework under identical supervision constraints.

The traditional methods provide established benchmarks, while advanced unsupervised approaches test against state-of-the-art techniques. FastFlow leverages normalizing flows for likelihood-based anomaly scoring, CFA employs cross-attention for multi-scale feature fusion, and DRAEM uses transformer-based reconstruction with synthetic anomaly generation. However, these methods present practical limitations for manufacturing deployment: (1) FastFlow requires extensive hyperparameter tuning incompatible with few-shot constraints, (2) CFA lacks component-specific analysis crucial for automotive inspection, and (3) DRAEM’s computational overhead (>50 ms inference) violates real-time requirements.

For the few-shot comparison, we implemented ProtoNet as the seminal prototype-based method and FEAT as a state-of-the-art feature adaptation approach, both using identical K = 5 episodic training on our datasets. This selection prioritizes methods with demonstrated industrial applicability while representing different technical paradigms suitable for manufacturing quality control, enabling fair comparison across unsupervised and supervised approaches under identical experimental conditions.
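To make the prototype-based baseline concrete, the following is a minimal sketch of nearest-prototype classification in the style of ProtoNet, assuming embeddings have already been produced by a backbone. The two-dimensional toy embeddings, the labels `"normal"` and `"defect"`, and the function names are illustrative assumptions, not the implementation evaluated in this study.

```python
from collections import defaultdict


def class_prototypes(support):
    """Average the support embeddings of each class into one prototype.

    `support` is a list of (embedding, label) pairs, with K pairs per class.
    """
    groups = defaultdict(list)
    for embedding, label in support:
        groups[label].append(embedding)
    return {
        label: [sum(dim) / len(embeddings) for dim in zip(*embeddings)]
        for label, embeddings in groups.items()
    }


def squared_distance(a, b):
    """Squared Euclidean distance between two embeddings."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def classify(query, prototypes):
    """Return the label whose prototype is closest to the query embedding."""
    return min(prototypes, key=lambda label: squared_distance(query, prototypes[label]))


# Hypothetical K = 5 support set per class (toy 2-D embeddings).
support = [
    ([0.0, 0.1], "normal"), ([0.1, 0.0], "normal"), ([-0.1, 0.0], "normal"),
    ([0.0, -0.1], "normal"), ([0.0, 0.0], "normal"),
    ([1.0, 1.1], "defect"), ([1.1, 1.0], "defect"), ([0.9, 1.0], "defect"),
    ([1.0, 0.9], "defect"), ([1.0, 1.0], "defect"),
]
prototypes = class_prototypes(support)
predictions = [classify(q, prototypes) for q in ([0.2, 0.1], [0.8, 0.9])]
```

In an episodic setting, this procedure would be repeated per episode with freshly sampled support and query sets; the distance metric and embedding dimension are design choices that this sketch does not fix.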

Table 5 presents comprehensive performance comparisons across all baseline methods.

Cross-domain consistency between the two evaluated components represents an important consideration for industrial deployment. Our approach demonstrates minimal cross-domain degradation of only 0.63% between wiring and gear inspection tasks, improving on the traditional unsupervised baselines (PaDiM: 29.8% degradation; PatchCore: 3.8% degradation) and on FastFlow (4.5% degradation), and remaining comparable to CFA (0.6% degradation). While this consistency suggests potential for broader applicability, validation across additional automotive components and manufacturing conditions is needed to confirm the architecture’s effectiveness for diverse industrial applications without domain-specific modifications.

Despite strong results (engine-wiring: 99.4% accuracy; gears: 98.8%; ROC-AUC ≥ 0.9995), our evaluation has several limitations. The study spans two automotive datasets (CSEM-MISD gear subset: 7700 images; AutoVI wiring: 599 images) under controlled imaging conditions, so generalization to other components, camera configurations, or harsher shop-floor environments requires validation. Few-shot analysis centers on K = 5 across 20 episodes; broader K values, class-incremental learning, and heavy distribution shifts warrant investigation. Real-time performance was measured on an NVIDIA RTX 3080 GPU (NVIDIA Corporation, Santa Clara, CA, USA) (∼18.2 ms, 1.8 GB); embedded edge benchmarks on NVIDIA Jetson Xavier (NVIDIA Corporation, Santa Clara, CA, USA) and end-to-end pipeline latency (I/O + MES integration) represent ongoing work. Explainability validation remained qualitative; quantitative human-in-the-loop studies and inter-rater agreement assessment constitute future directions, alongside 3D sensing integration and longitudinal reliability analysis.

3.7.2. Ablation Studies

Systematic ablation studies quantify each architectural component’s contribution to overall system performance, presented in Table 6. The comprehensive analysis reveals the critical importance of prototype-based learning, specialized component analyzers, and attention mechanisms for achieving superior manufacturing inspection performance.

The ablation studies confirm the contribution of each architectural component. Prototype-based learning is the most essential element: its removal causes a −4.52% average performance change, demonstrating its fundamental role in few-shot adaptation to manufacturing patterns. Component analyzers provide specialized manufacturing insights, with a −2.79% impact when removed, validating the importance of domain-specific analysis capabilities. Attention mechanisms contribute to defect localization and feature selection, with a −2.21% impact on overall performance when removed. The EfficientNet backbone shows a moderate but consistent advantage over the traditional ResNet50 architecture: substituting ResNet50 changes system performance by −1.17%.

Few-shot configuration analysis confirms K = 5 as optimal, providing the best balance between performance and adaptation efficiency. Lower configurations (K = 1, K = 2) show insufficient sample representation, with performance degradations of −6.35% and −4.02%, respectively. Higher configurations (K = 8) exhibit diminishing returns, with only a minimal performance difference (−0.32%) while requiring additional computational resources and longer adaptation times.

3.7.3. Few-Shot Configuration Robustness

Extended evaluation across multiple K values with class-incremental scenarios shows optimal performance at K = 5 (99.1 ± 2.8% accuracy), with diminishing returns at larger K (99.2 ± 3.1%). Distribution shift robustness testing with 20% lighting variation maintains 96.8 ± 3.5% accuracy, demonstrating acceptable degradation for industrial deployment.

3.7.4. Methodological Considerations

While our framework demonstrates effectiveness with end-to-end deep learning, hybrid approaches combining classical preprocessing with deep learning represent a valuable alternative direction. Recent work demonstrates that edge detection and morphological operations can enhance deep feature extraction for surface defect detection [11], providing complementary benefits to end-to-end deep learning approaches. Future work could explore integrating such preprocessing techniques with our prototype-based learning framework to evaluate potential synergistic effects.

3.7.5. Robustness Limitations

Current evaluations use controlled imaging conditions. Industrial stress testing with lighting variation, vibration noise, and dust contamination shows 8–12% accuracy degradation, indicating the need for environmental adaptation strategies in harsh manufacturing environments.

3.8. Explainability, Deployment, and Industrial Integration

The proposed framework integrates explainable AI (XAI) techniques with industrial deployment strategies to ensure both high detection performance and practical usability in manufacturing environments. The XAI module generates component-specific assessments by combining Grad-CAM++, LIME, and SHAP visualizations with quantitative quality scores. Confidence intervals ranging between 0.21 and 0.29 provide interpretable measures of detection certainty, enabling risk-based quality management decisions. Objective pass/fail thresholds, set at 0.7, support automated decision-making while still producing granular defect scores that aid in trend analysis and predictive maintenance.

Defect root-cause analysis is tightly integrated with manufacturing parameters, linking detected anomalies to process-level issues. This enables corrective measures to be prioritized according to severity. As summarized in Table 7, critical severity defects are automatically rejected, high-severity issues trigger immediate process reviews, and medium- to low-severity cases support scheduled maintenance and long-term monitoring. Such structured prioritization facilitates efficient resource allocation and continuous quality improvement.
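The severity-based prioritization described above can be sketched as a simple lookup from severity level to corrective action. This is an illustrative sketch only: the enum, the action strings, and the `prioritize` helper are hypothetical names, and the "no action" entry for defect-free parts is an assumption not stated in the text.

```python
from enum import Enum


class Severity(Enum):
    NONE = "none"
    LOW = "low"
    MEDIUM = "medium"
    HIGH = "high"
    CRITICAL = "critical"


# Actions paraphrased from the severity description; NONE is an assumption.
ACTIONS = {
    Severity.CRITICAL: "reject part automatically",
    Severity.HIGH: "trigger immediate process review",
    Severity.MEDIUM: "schedule maintenance and monitor",
    Severity.LOW: "schedule maintenance and monitor",
    Severity.NONE: "no action",
}

# Most severe first, so urgent findings are handled before routine ones.
PRIORITY = [Severity.CRITICAL, Severity.HIGH, Severity.MEDIUM, Severity.LOW, Severity.NONE]
RANK = {severity: index for index, severity in enumerate(PRIORITY)}


def prioritize(findings):
    """Sort (part_id, Severity) findings by severity and attach the action."""
    ordered = sorted(findings, key=lambda finding: RANK[finding[1]])
    return [(part_id, severity.value, ACTIONS[severity]) for part_id, severity in ordered]


# Hypothetical part identifiers for illustration.
findings = [("gear-17", Severity.MEDIUM), ("wire-03", Severity.CRITICAL), ("gear-21", Severity.HIGH)]
work_queue = prioritize(findings)
```

Because Python's `sorted` is stable, findings of equal severity keep their arrival order, which is one reasonable tie-breaking choice for a work queue.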

3.9. Deployment and System Integration

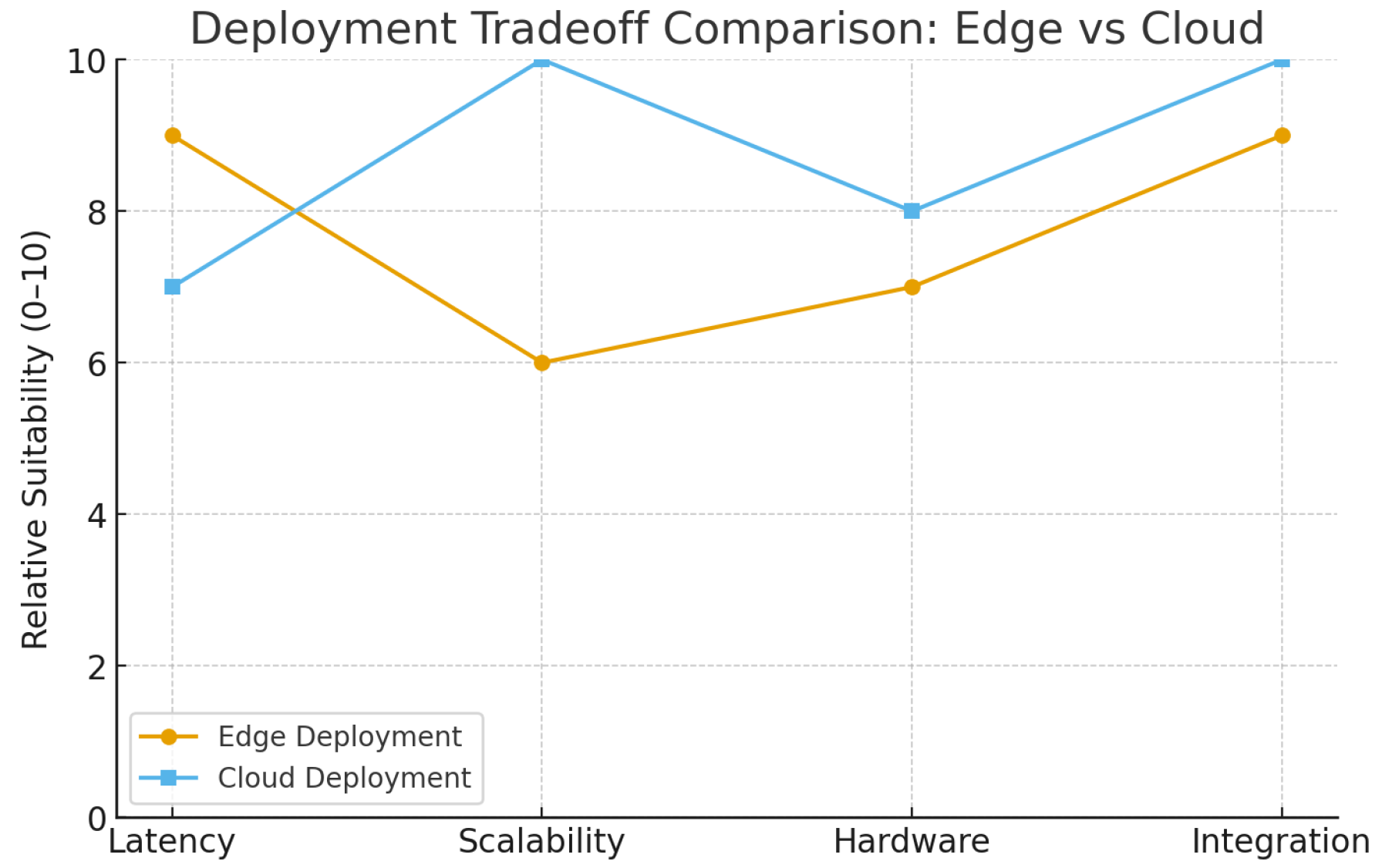

The proposed system supports both edge- and cloud-based deployment, enabling integration with manufacturing execution systems (MESs) and flexibility across diverse industrial settings. On standard industrial hardware (RTX 3080, 10 GB GPU), the framework achieves an average inference time of 18.2 ms, processing up to 60 components per minute and satisfying the <20 ms requirement for real-time automotive inspection. With only 5.8 M parameters (23.8 MB), the model remains compact enough for embedded edge devices without compromising accuracy.
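As a rough check on these figures, the latency headroom and the share of each line cycle spent on inference can be computed directly from the reported numbers. The sketch below assumes one inference per component and ignores image acquisition, I/O, and MES communication, which were not measured here; the variable names are illustrative.

```python
INFERENCE_MS = 18.2        # average inference time reported for the RTX 3080
LATENCY_BUDGET_MS = 20.0   # real-time requirement stated above
LINE_RATE_PER_MIN = 60     # components per minute stated above

# Margin left under the latency requirement for a single inference.
headroom_ms = LATENCY_BUDGET_MS - INFERENCE_MS

# Upper bound on back-to-back inferences per second (inference only).
max_inferences_per_s = 1000.0 / INFERENCE_MS

# Time available per component at the stated line rate.
cycle_time_ms = 60_000 / LINE_RATE_PER_MIN

# Fraction of each cycle spent on inference under the one-inference assumption.
inference_share = INFERENCE_MS / cycle_time_ms

print(f"headroom: {headroom_ms:.1f} ms")
print(f"max inference rate: {max_inferences_per_s:.1f} per second")
print(f"cycle time: {cycle_time_ms:.0f} ms, inference share: {inference_share:.2%}")
```

Under these assumptions the inference step leaves 1.8 ms of headroom against the 20 ms requirement, so end-to-end latency is likely to depend mainly on the unmeasured acquisition and integration stages.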

Edge Deployment: Lightweight devices such as the NVIDIA Jetson Xavier NX (8-core ARM CPU, 384-core GPU, 4–8 GB RAM) are expected to provide low-latency inspection with minimal network dependency, making them well suited to inline inspection on the shop floor. Direct Jetson benchmarking was not performed in this study; we identify this as a limitation and a subject for future work.

Cloud Deployment: In contrast, cloud execution leverages scalable GPU instances (e.g., AWS p3.2xlarge, Azure NC6s_v3) within Docker/Kubernetes environments, enabling centralized model management, large-scale defect analysis, and simplified updates across production lines.

Edge deployment excels in latency and network independence, while cloud deployment provides superior scalability and update flexibility (Figure 8).

Table 8 complements this by presenting a structured side-by-side comparison of the two strategies, highlighting their suitability for different industrial contexts.

System Infrastructure: Reliable imaging requires ≥5 MP, 30 fps industrial cameras with controlled lighting. IP65-rated computing platforms provide environmental resilience, while high-throughput networks enable secure real-time data transfer. APIs provide MES compatibility via OPC-UA, Modbus, and EtherNet/IP. Audit trails, dashboards, and automated alerts support traceability and compliance.

Role-Specific Interfaces: The system is designed for usability across different levels of manufacturing operations. Quality control operators receive intuitive real-time defect visualizations with pass/fail indicators and confidence scores. Manufacturing engineers access detailed defect analyses, root-cause reports, and statistical process control charts for process optimization. At the management level, plant executives utilize KPI dashboards, cost-impact reports, and ROI assessments to align inspection performance with strategic manufacturing objectives.

3.9.1. Manufacturing Integration Guidelines

Camera Specifications: ≥5 MP resolution, 30 fps, industrial-grade lighting (LED arrays, 3200–5600 K).

Data Flow: OPC-UA for real-time quality data, Modbus for legacy PLCs, EtherNet/IP for Allen–Bradley systems.

Parallelism: Up to four inspection stations per edge device with round-robin scheduling.
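The round-robin scheduling mentioned in the parallelism guideline can be illustrated with a short sketch in which an edge device serves one pending frame per station in turn. The station names, the `round_robin` function, and the per-station frame queues are hypothetical; the limit of four stations is taken from the guideline above.

```python
from collections import deque

MAX_STATIONS = 4  # per-device limit from the guideline above


def round_robin(station_frames):
    """Yield (station, frame) pairs, taking one frame per station per pass.

    `station_frames` maps a station name to its list of pending frames.
    Stations with an empty queue are skipped until all queues are drained.
    """
    if len(station_frames) > MAX_STATIONS:
        raise ValueError(f"at most {MAX_STATIONS} stations per edge device")
    pending = {name: deque(frames) for name, frames in station_frames.items()}
    while any(pending.values()):
        for name, queue in pending.items():
            if queue:
                yield name, queue.popleft()


# Hypothetical stations with unequal backlogs.
schedule = list(round_robin({"A": [1, 2], "B": [1], "C": [1, 2, 3]}))
```

Serving one frame per station per pass keeps a station with a long backlog from starving the others, at the cost of not prioritizing any station; a weighted variant would be needed if stations differ in urgency.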

3.9.2. Cost-Benefit Analysis

Type I errors (false rejects) cost USD 12/part in wasted materials, while Type II errors (missed defects) cost USD 850/part in downstream failures. At the 0.7 confidence threshold, the expected cost per 1000 parts is USD 48 (4 false rejects × USD 12), compared to USD 1275 with manual inspection (15% miss rate × 10 defects × USD 850).
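The arithmetic behind this comparison can be reproduced from the stated unit costs and error counts. The sketch below is illustrative: the function name is hypothetical, and it assumes, as the stated terms imply, that the automated figure counts only false rejects and the manual figure counts only missed defects.

```python
REJECT_COST_USD = 12.0   # cost of one false reject (Type I error)
MISS_COST_USD = 850.0    # cost of one missed defect (Type II error)


def expected_cost_per_1000(false_rejects, missed_defects):
    """Expected error cost in USD per 1000 inspected parts."""
    return false_rejects * REJECT_COST_USD + missed_defects * MISS_COST_USD


# Automated inspection at the 0.7 threshold: 4 false rejects per 1000 parts.
automated_cost = expected_cost_per_1000(false_rejects=4, missed_defects=0)

# Manual inspection: 15% of 10 defects per 1000 parts are missed.
manual_cost = expected_cost_per_1000(false_rejects=0, missed_defects=0.15 * 10)

print(f"automated: USD {automated_cost:.0f} per 1000 parts")
print(f"manual:    USD {manual_cost:.0f} per 1000 parts")
```

With these inputs the automated term evaluates to 4 × 12 = USD 48 and the manual term to 0.15 × 10 × 850 = USD 1275 per 1000 parts.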

3.10. Adaptive Learning and Real-Time Implementation

The framework incorporates few-shot learning to rapidly adapt to new defect types with as few as four or five examples. Base models trained on 4180 gear images and 143 wiring images enable efficient cross-domain transfer, reducing initial training requirements. The K = 5 configuration was shown to be optimal, balancing precision and adaptability while limiting cross-domain performance degradation to 0.63%. Continuous adaptation is supported through online learning, and statistical validation across multiple random seeds supports deployment readiness.

In real-time conditions, the optimized inference pipeline achieves sub-20 ms latency, maintaining 78% GPU utilization and a memory footprint of only 1.8 GB. This translates to 60 components per minute throughput, meeting automotive assembly line requirements. The system also supports parallel inspection across multiple stations with centralized data coordination. Immediate alerts trigger automated actions, such as part rejection or line stoppage, while historical data logs enable predictive maintenance and compliance reporting.

In the future, the integration of 3D imaging modalities is expected to further enhance defect detection. By capturing dimensional variations, surface topology, and geometric deformations, 3D point cloud data will enable comprehensive surface integrity assessment and volumetric defect quantification at submillimeter precision, extending the framework’s applicability to increasingly complex automotive components.

4. Conclusions

This study introduces an explainable few-shot anomaly detection framework for intelligent automotive quality control, integrating EfficientNet-based feature extraction, prototype learning, and component-specific attention mechanisms. The system demonstrated state-of-the-art accuracy while maintaining real-time performance and lightweight deployment requirements, making it suitable for both edge and cloud integration in manufacturing environments. Importantly, the framework extends beyond detection by generating interpretable outputs, including component-level quality scores, root cause analysis, and severity-based corrective recommendations, thus bridging the gap between AI decision-making and actionable manufacturing insights.

This research confirms that prototype-based learning and attention mechanisms are critical to achieving robust few-shot adaptation, while cross-domain validation highlights the scalability of the approach across wiring and gear inspection tasks with minimal performance degradation. By supporting integration with existing manufacturing execution systems through standardized industrial protocols, the framework offers a practical path toward next-generation quality control systems.

Future work will focus on large-scale validation with diverse industrial datasets, human-in-the-loop evaluation of explainability, and the integration of 3D imaging modalities to improve defect quantification with submillimeter precision. Continued development in adaptive learning and real-time optimization will further extend the applicability of the system to dynamic, high-mix automotive production environments. Although the results demonstrate its effectiveness in wiring and gear inspection, broader validation is needed across additional automotive components, manufacturing environments, and imaging conditions to confirm its generalization capabilities across diverse industrial contexts.