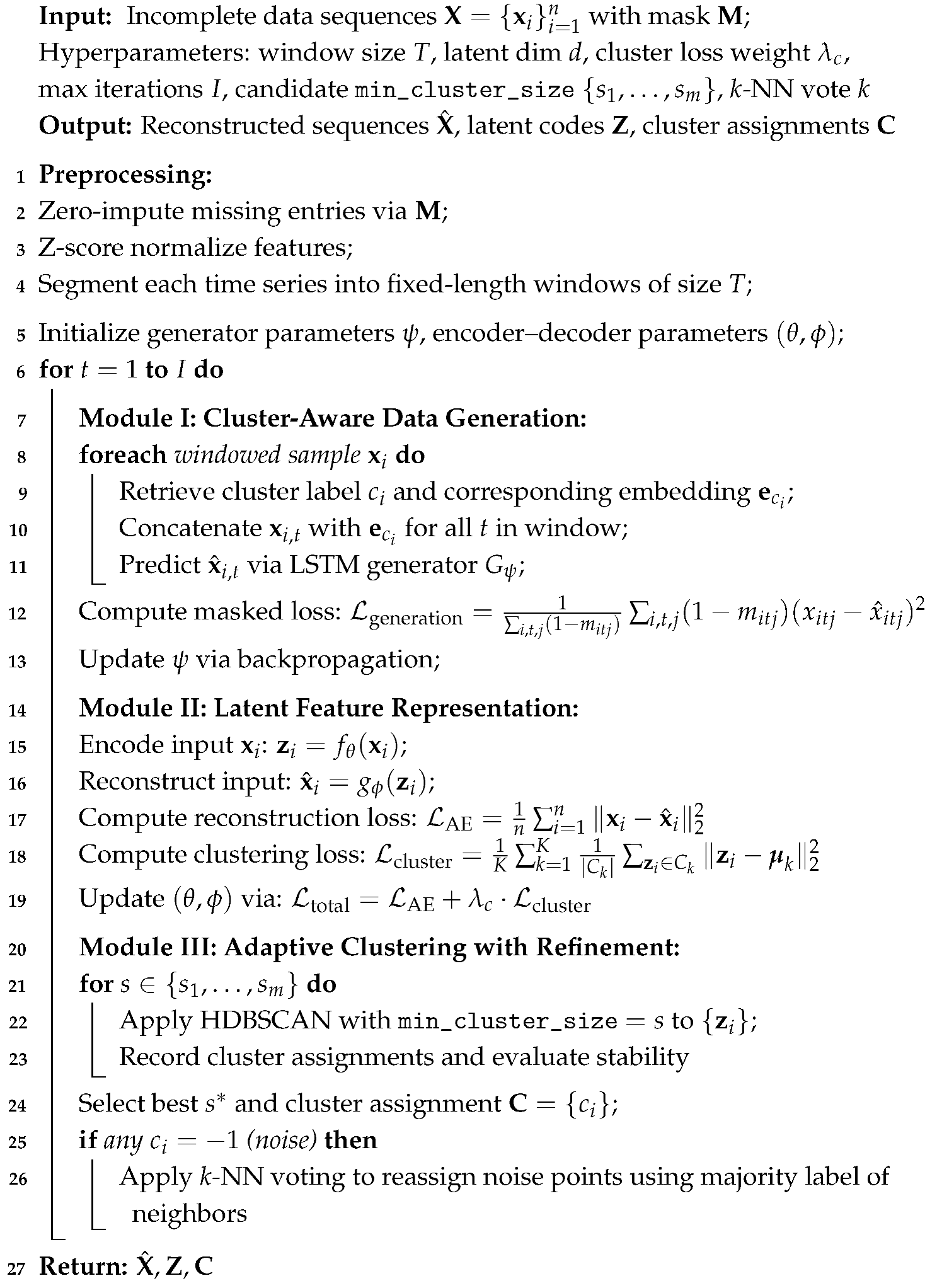

This section presents a comprehensive evaluation of the proposed GRAIL framework using a real-world distributed photovoltaic dataset. We begin by describing the dataset and the preprocessing pipeline. We then introduce the evaluation metrics, present a comparative analysis of GRAIL against several baseline models, and conclude with an in-depth analysis of the framework’s performance.

3.1. Data

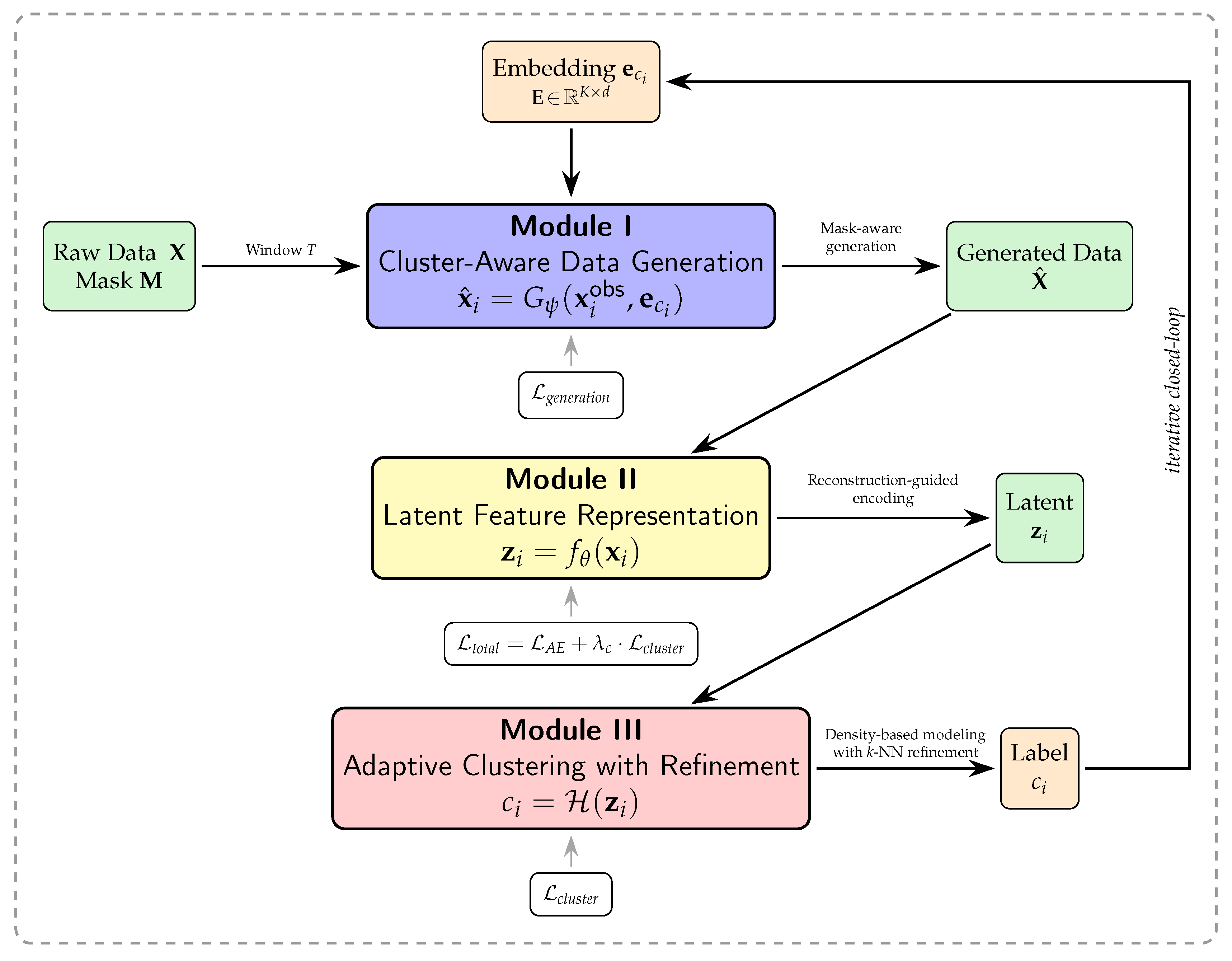

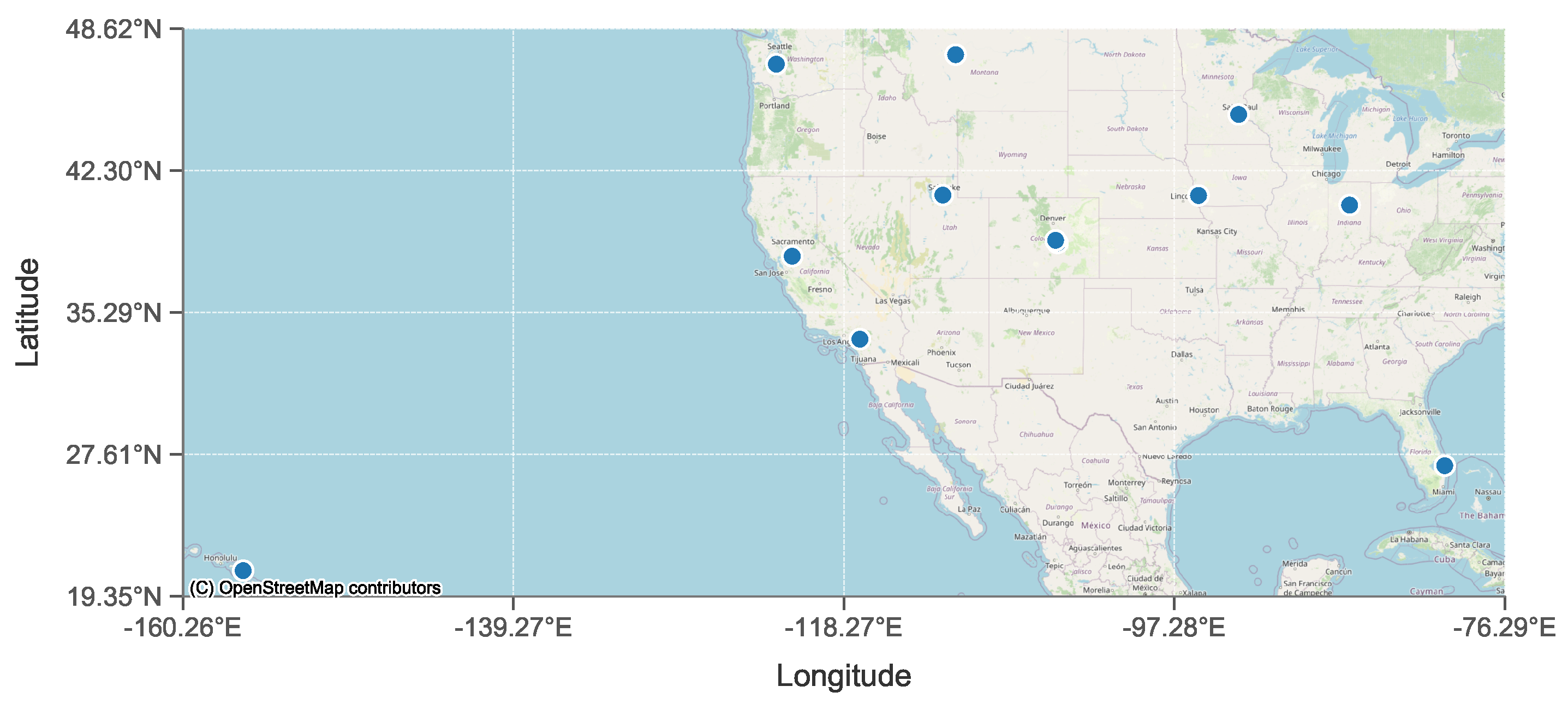

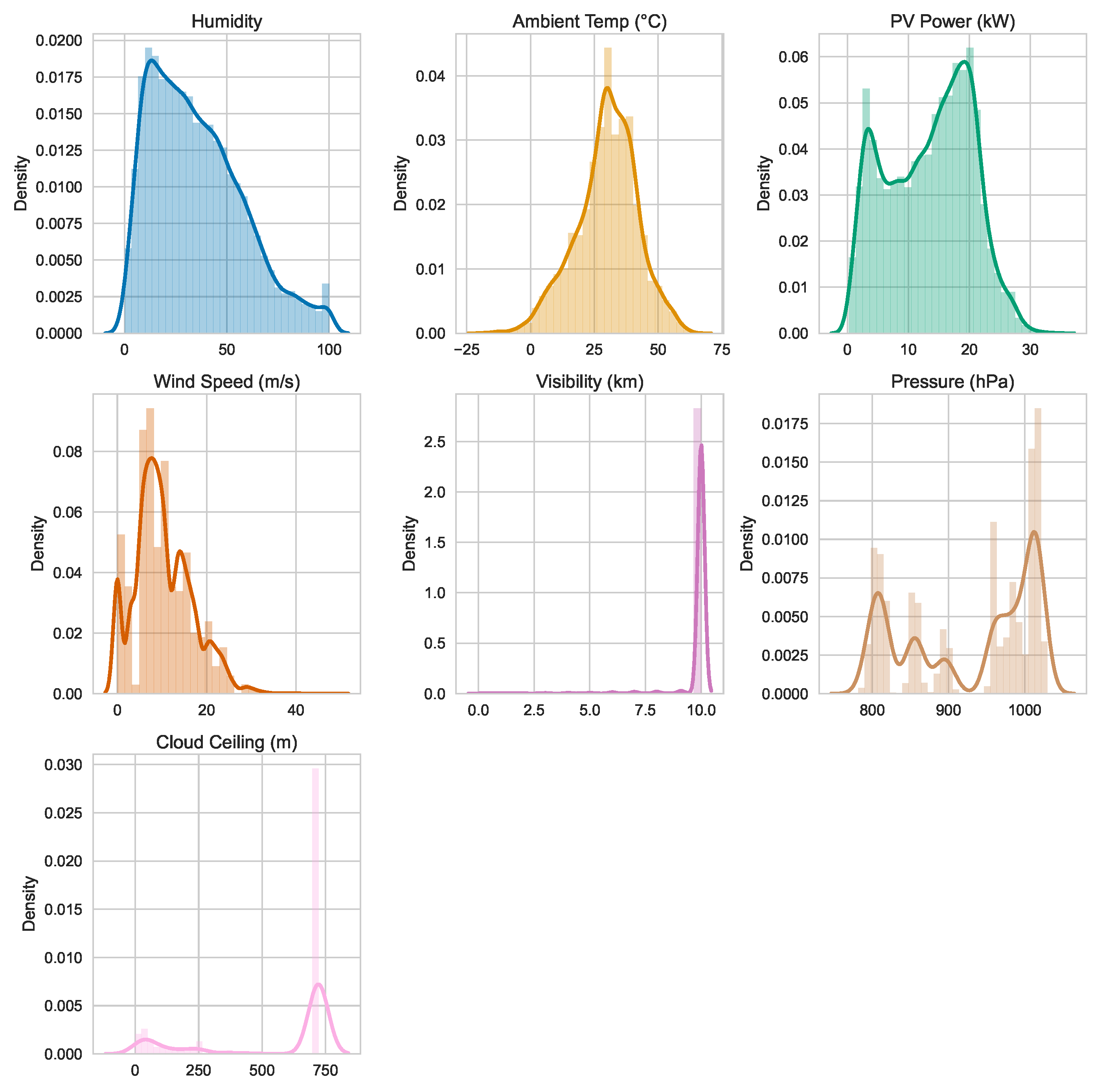

The dataset employed in this study consists of multi-site spatiotemporal records collected from horizontal photovoltaic panels deployed across 12 distinct locations in the Northern Hemisphere, covering a continuous 14-month observation period. As visualized in

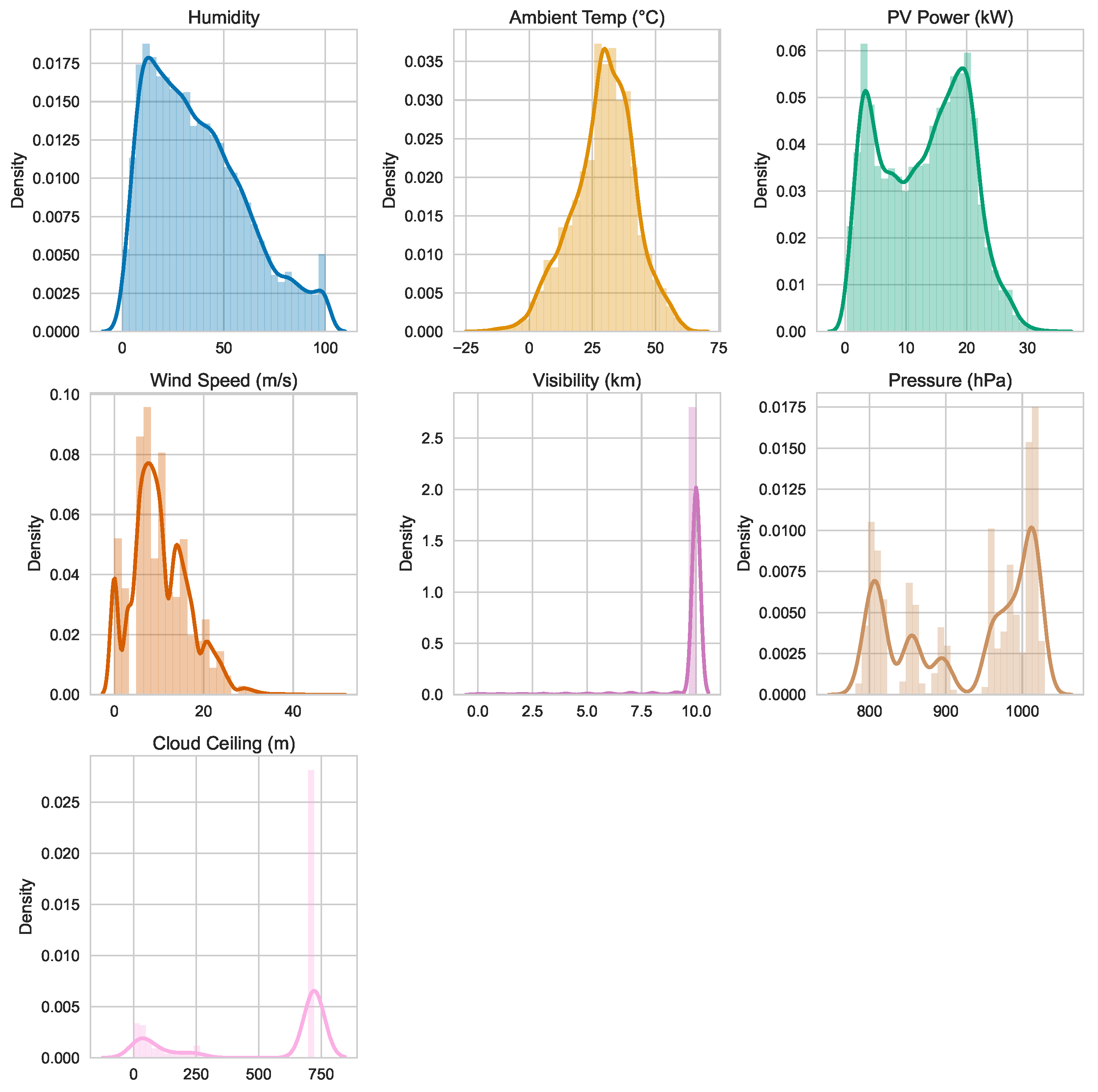

Figure 2, the sites span a broad geographic range from approximately 160° W to 76° W longitude and 19° N to 48° N latitude, encompassing diverse coastal, island, and inland settings. Each observation contains 17 raw features, including meteorological covariates like humidity, ambient temperature, wind speed, visibility, surface pressure, and cloud ceiling, which exhibit wide variability, as shown in

Figure 3,

Figure 4 and

Figure 5. This environmental and geographic diversity, compounded by a substantial missing data rate of 45.4%, presents a realistic and challenging scenario reflective of heterogeneous operating conditions in distributed PV fleets. These characteristics motivate the development of methods that are robust to both data sparsity and cross-site variability. The specific sites analyzed are Camp Murray, Grissom, Hill Weber, JDMT, Kahului, MNANG, Malmstrom, March AFB, Offutt, Peterson, Travis, and USAFA.

Notably, the dataset does not contain direct measurements of solar irradiance. This omission is by design and reflects common operational conditions where irradiance series are frequently unavailable or unreliable due to factors such as sensor outages, soiling, calibration drift, and biases in satellite or reanalysis products [38, 39, 48]. Since solar irradiance is a dominant driver of PV generation, its absence poses a realistic and nontrivial modeling challenge. Our study explicitly targets this irradiance-scarce regime. The proposed framework is designed to infer irradiance-dependent dynamics solely from other observable environmental covariates and latent cross-site structural information. This capability is critical for developing robust models that can be deployed in practical settings where high-quality irradiance data cannot be guaranteed.

To balance temporal resolution with computational efficiency, the original PV data sampled at 15-min intervals, shown in Figure 3, are aggregated into hourly windows using mean pooling, as illustrated in Figure 4. This results in a total of 18,312 aggregated samples. A visual comparison of the marginal distributions before and after this process confirms that the statistical properties of the resampled data remain highly consistent with the original series across all numerical predictors. This consistency indicates that key temporal and meteorological patterns are well preserved after aggregation, supporting the suitability of hourly-resolution data for the subsequent modeling.
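The hourly mean pooling described above can be sketched as follows. This is a minimal illustration rather than the authors' pipeline: the function name `hourly_mean`, the toy readings, and the choice to skip missing (`None`) values inside an hour are all assumptions made for the example.

```python
from collections import defaultdict
from datetime import datetime


def hourly_mean(records):
    """Average (timestamp, value) pairs within each clock hour.

    Assumption: missing readings are given as None and are skipped.
    """
    buckets = defaultdict(list)
    for ts, value in records:
        if value is not None:
            hour = ts.replace(minute=0, second=0, microsecond=0)
            buckets[hour].append(value)
    return {hour: sum(vals) / len(vals) for hour, vals in sorted(buckets.items())}


# Toy 15-min readings (hypothetical values, not taken from the dataset).
readings = [
    (datetime(2017, 12, 1, 10, 0), 4.0),
    (datetime(2017, 12, 1, 10, 15), 5.0),
    (datetime(2017, 12, 1, 10, 30), None),
    (datetime(2017, 12, 1, 10, 45), 6.0),
    (datetime(2017, 12, 1, 11, 0), 8.0),
]
hourly = hourly_mean(readings)
```

An hour with no valid readings simply produces no entry, which is one way the aggregated series can remain sparse.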

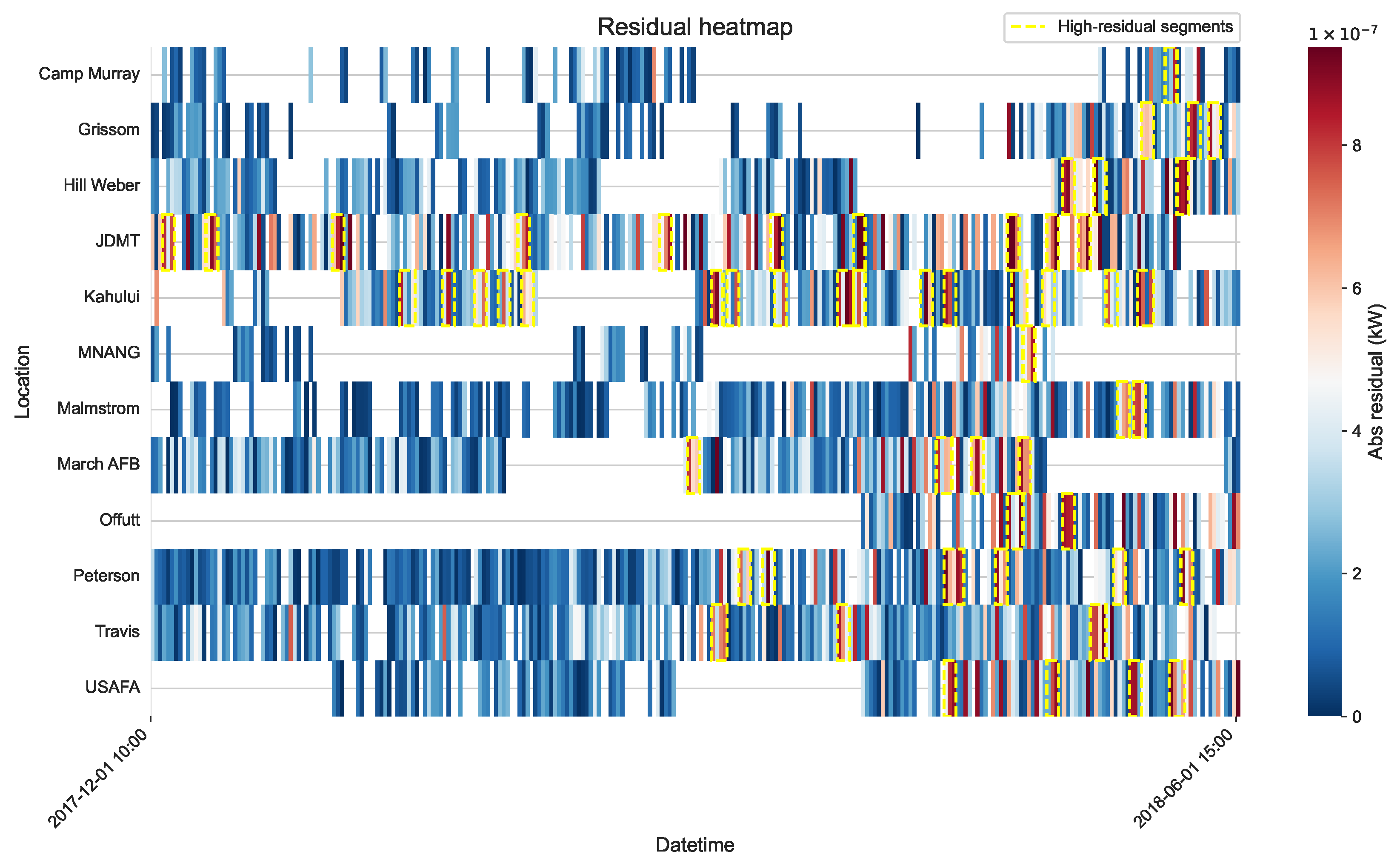

The dataset also exhibits considerable heterogeneity in temporal coverage and data completeness across the different sites, as shown in Table 1. The start and end timestamps of data collection vary significantly among locations, reflecting differences in sensor deployment timelines and operational stability. To mitigate this temporal misalignment and ensure consistent coverage, a common subwindow from 1 December 2017 10:00:00 to 1 June 2018 15:00:00 is selected, yielding a dataset of 7192 hourly observations. The statistical distribution of this final dataset, shown in Figure 5, remains aligned with both the original 15-min and the aggregated hourly data, confirming the validity of the selected subwindow for a cross-site comparative analysis.

Despite temporal alignment, the refined dataset exhibits substantial sparsity. A complete dataset would contain 13,176 hourly records within the selected time frame. However, only 7192 valid observations exist, which corresponds to a missingness rate of 45.4%. This degree of incompleteness underscores the need for modeling techniques that operate effectively under severe data sparsity. The GRAIL framework addresses this need by capturing shared temporal dynamics and latent variability structures across space and time.
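The missingness figure can be checked with a short calculation. The decomposition below is an assumption that happens to reproduce the stated count of 13,176: six hourly slots per day (10:00 through 15:00) on every calendar day of the subwindow, at each of the 12 sites.

```python
from datetime import date

start, end = date(2017, 12, 1), date(2018, 6, 1)
n_days = (end - start).days + 1   # both endpoint days are included
hours_per_day = 6                 # assumed slots: 10:00, 11:00, ..., 15:00
n_sites = 12

expected = n_days * hours_per_day * n_sites
observed = 7192
missing_rate = 1 - observed / expected
```

Under these assumptions, `expected` equals the 13,176 records quoted in the text and `missing_rate` rounds to 45.4%.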

To enhance the temporal representativeness and modeling efficacy of the aggregated hourly dataset, we perform feature engineering and standardization. First, a suite of calendar-based features is derived to enrich the temporal context of each observation, including IsWeekend, Month, Day, Hour, DayOfWeek, DayOfYear, and WeekOfYear. Each engineered predictor captures a distinct aspect of the temporal periodicity relevant to photovoltaic output dynamics.

To encode the cyclical nature inherent in the attributes mentioned above, sine and cosine transformations are applied as follows:

$$ x_{\sin} = \sin\left(\frac{2\pi x}{P}\right), \qquad x_{\cos} = \cos\left(\frac{2\pi x}{P}\right), $$

where $x$ denotes a time-based feature and $P$ is the corresponding period. Specifically, we use $P = 6$ for the Hour feature (reflecting the key 10:00 to 15:00 operational window), $P = 7$ for DayOfWeek, and $P = 365$ for DayOfYear. This transformation maps discrete time indices onto the unit circle, preserving continuity and facilitating smooth transitions for temporal modeling. Such an encoding scheme is advantageous for RNN architectures, which benefit from continuous and differentiable inputs. Conversely, the Year attribute is excluded from the final feature set. Its variance over the 2017–2018 sampling window is negligible, rendering it uninformative for learning meaningful temporal patterns.
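A minimal sketch of the cyclical encoding, using the periods stated in the text. The helper name `cyclical_encode` and the zero-based DayOfWeek indexing are assumptions made for illustration.

```python
import math

# Periods taken from the text; other calendar features would need their own periods.
PERIODS = {"Hour": 6, "DayOfWeek": 7, "DayOfYear": 365}


def cyclical_encode(x, period):
    """Map a periodic index x onto the unit circle as (sin, cos)."""
    angle = 2.0 * math.pi * x / period
    return math.sin(angle), math.cos(angle)


# Assumed indexing: DayOfWeek runs from 0 to 6.
first_day = cyclical_encode(0, PERIODS["DayOfWeek"])
second_day = cyclical_encode(1, PERIODS["DayOfWeek"])
last_day = cyclical_encode(6, PERIODS["DayOfWeek"])

gap_adjacent = math.dist(first_day, second_day)
gap_wrap = math.dist(last_day, first_day)
```

The wrap-around gap between the last and first day equals the gap between any two adjacent days, which is the continuity property that a raw integer index lacks.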

All continuous numerical variables are standardized using z-score normalization:

$$ x' = \frac{x - \mu}{\sigma}, $$

where $\mu$ and $\sigma$ represent the empirical mean and standard deviation of the feature, respectively.

This normalization procedure places all inputs on a comparable scale. As a result, it improves numerical stability, reduces the model’s sensitivity to initial parameter values, and can accelerate convergence during training.
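The standardization step can be sketched as below. The use of the population standard deviation is an assumption, since the text does not say whether the sample or population estimate is used, and the input values are hypothetical.

```python
import statistics


def zscore(values):
    """Standardize values to zero mean and unit (population) standard deviation."""
    mu = statistics.fmean(values)
    sigma = statistics.pstdev(values)  # assumption: population estimate
    if sigma == 0:
        raise ValueError("constant feature cannot be standardized")
    return [(v - mu) / sigma for v in values]


# Hypothetical ambient temperature readings.
scaled = zscore([2.0, 4.0, 6.0])
```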

After data preprocessing, the resulting dataset comprises 7192 hourly samples, each with 25 features. These features can be categorized into three groups:

Spatiogeographic Descriptors including Location, Latitude, Longitude, and Altitude.

Meteorological and PV Measurements including Season, Humidity, AmbientTemperature, PowerOutput, WindSpeed, Visibility, Pressure, and CloudCeiling.

Temporal Encodings including IsWeekend, Month_sin, Month_cos, Day_sin, Day_cos, Hour_sin, Hour_cos, DayOfWeek_sin, DayOfWeek_cos, DayOfYear_sin, DayOfYear_cos, WeekOfYear_sin, and WeekOfYear_cos.

The enriched feature space provides the foundation for the GRAIL framework to learn latent irradiance-sensitive dynamics from auxiliary environmental and calendar signals, even in the absence of direct solar irradiance measurements.

3.2. Experiment

To systematically evaluate the performance of various clustering algorithms on spatiotemporal photovoltaic data, a comprehensive benchmark across eight representative methods is conducted. These comparators are selected to instantiate four orthogonal axes of the design space. The first axis is the algorithmic family, spanning partition-based, density-based, and hierarchical density approaches. The second axis is the data representation, distinguishing between classical methods operating on raw features and deep methods using autoencoder latent embeddings. A third axis is the training paradigm, which contrasts two-stage deep embedding with joint optimisation. The final axis is the use of generative augmentation. This taxonomy covers the classic-to-deep spectrum relevant to unsupervised PV clustering under missing data. The first group consists of classical algorithms K-means, DBSCAN, and HDBSCAN. The second category comprises deep clustering approaches DE-KMeans, DE-DBSCAN, DE-HDBSCAN, DEC-HDBSCAN, and our proposed GRAIL framework.

Table 2 summarizes the mapping of these models to the design axes. Furthermore, to isolate the effect of the generator, we also evaluate a no-generation configuration where the generator is disabled and incomplete data is handled via listwise deletion. The results of this analysis are presented in Section 3.4.2.

The hyperparameters in Algorithm 1 are configured with specific default values and initialization strategies. The input window length $T$ is set to 32. For the LSTM generator, an evaluation of several recurrent depths $\ell$ and hidden sizes $h$ shows no significant accuracy differences. To balance runtime and memory, we adopt a shallow configuration with a small hidden size and light dropout. This choice maintains framework generality, since the model is agnostic to the specific recurrent design [45], and aligns with prior photovoltaic forecasting practice, which often uses shallow LSTMs to mitigate overfitting and meet deployment constraints [38]. Each cluster label is mapped to a learnable 8-dimensional embedding through an embedding table. For a sample window with a given cluster label, the corresponding conditioning vector is concatenated to every time step and then fed to the generator. The embedding table is initialized using Xavier-uniform initialization with zero biases. We apply a mild weight penalty and a small dropout to the embeddings to reduce overfitting in sparsely observed clusters. The default embedding dimension of 8 is chosen because a sensitivity check over alternative values shows negligible differences, and it offers a favorable latency-memory trade-off. The autoencoder's latent dimension is set to 10, while additional ablation studies evaluate values of 8 and 16. The training process runs for $I = 20$ outer iterations. The clustering-consistency weight is initialized at 1.0 and annealed using a piecewise schedule. It is set to 1.0 for iterations 1 to 5, 0.5 for iterations 6 to 10, 0.2 for iterations 11 to 15, and 0.1 for iterations 16 to 20.

For HDBSCAN, we scan min_cluster_size over twenty logarithmically spaced candidates ranging from 0.1% to 50% of the dataset size $N$. We select the solution located in a stability plateau that maximizes cluster persistence. The min_samples hyperparameter is set to 10 by default and is tuned over values of 3, 5, 10, and 20. After clustering, points labeled as noise are reassigned using a $k$-NN majority vote with $k = 5$, while values of 3, 7, and 11 are also evaluated.

Models are trained using the Adam optimizer with weight decay, and the learning rate follows a StepLR scheduler with a step_size of 5 and a gamma of 0.5. The batch size is 16 for the RNN generator and 128 for the autoencoder. Both components are trained for 10 epochs within each outer iteration. All networks are randomly initialized, and initial cluster labels are set to a common starting value. Random seeds are fixed to ensure reproducibility. We conduct further sensitivity studies by varying $T$, $I$, and related settings across candidate ranges while repeating the HDBSCAN searches described previously.

For all models employing an HDBSCAN clustering head, the min_cluster_size is determined by sweeping over 20 logarithmically spaced candidates between 0.1% and 50% of the dataset size N. The min_samples parameter is tuned over the set {3, 5, 10, 20}. Any points initially labeled as noise are subsequently reassigned using a k-NN majority vote, with k selected from {3, 5, 7, 11}. All neural network-based models are trained using the Adam optimizer with weight decay, paired with a StepLR scheduler configured with a step size of 5 and a decay factor of 0.5. The cluster-embedding dimension is set to 8 and the autoencoder latent size to 10. Unless stated otherwise, experiments are run for 20 outer iterations using an input window length of 32. Within each outer iteration, the LSTM generator is trained for 10 epochs with a batch size of 16, while the autoencoder is trained for 10 epochs with a batch size of 128. The clustering-consistency weight is annealed from 1.0 to 0.1 across the iterations. For the classical baselines, the K-means algorithm is implemented with K-means++ initialization, and the number of clusters K is selected via the elbow method. For DBSCAN, the min_samples parameter is fixed at 5, and the neighborhood radius is chosen from a grid guided by an analysis of k-distance plots.
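Two of the settings described above lend themselves to a short sketch: the logarithmically spaced min_cluster_size candidates and the piecewise annealing of the clustering-consistency weight. The function names, the rounding to integers, and the lower clamp at 2 are assumptions made for illustration and are not specified in the text.

```python
def min_cluster_size_grid(n_samples, n_candidates=20, lo=0.001, hi=0.5):
    """Log-spaced candidates between lo and hi fractions of the dataset size."""
    sizes = []
    for i in range(n_candidates):
        frac = lo * (hi / lo) ** (i / (n_candidates - 1))
        # Assumption: round to an integer and keep at least 2 points per cluster.
        sizes.append(max(2, round(frac * n_samples)))
    return sizes


def consistency_weight(iteration):
    """Piecewise schedule over outer iterations 1..20, as stated in the text."""
    if iteration <= 5:
        return 1.0
    if iteration <= 10:
        return 0.5
    if iteration <= 15:
        return 0.2
    return 0.1


grid = min_cluster_size_grid(7192)
schedule = [consistency_weight(i) for i in range(1, 21)]
```

For the 7192-sample dataset, the grid runs from 7 to 3596 points, so the sweep covers both very fine and very coarse partitions.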

K-means is a canonical partitioning algorithm that minimizes intra-cluster variance by iteratively assigning samples to the nearest centroid. Since the number of clusters must be predefined, we determine this hyperparameter by applying the elbow method to a candidate range of cluster counts. To enhance stability and mitigate sensitivity to initial conditions, we employ the K-means++ initialization heuristic, which improves convergence and reduces the likelihood of settling in suboptimal local minima.

DBSCAN is a classical density-based algorithm that excels at discovering clusters of arbitrary shape without requiring a predefined cluster count. It identifies clusters by connecting high-density regions, governed by a neighborhood radius and a minimum point count min_samples. In our implementation, min_samples is set to 5, and the radius is selected via a grid search informed by k-distance plots, which help estimate the intrinsic neighborhood scale of the data. To mitigate fragmentation from points classified as noise, a post-processing step reassigns these outliers based on a majority vote among their nearest non-noise neighbors, thereby improving cluster completeness and spatial coherence.
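The noise reassignment step can be sketched as a brute-force nearest-neighbor vote. This is an illustrative version under stated assumptions: noise is marked with the label -1 (the convention used by common DBSCAN and HDBSCAN implementations), distances are Euclidean, and ties go to the label seen first among the nearest neighbors.

```python
import math
from collections import Counter


def reassign_noise(points, labels, k=5, noise=-1):
    """Give each noise point the majority label of its k nearest non-noise points."""
    anchors = [(p, lab) for p, lab in zip(points, labels) if lab != noise]
    out = list(labels)
    for i, (p, lab) in enumerate(zip(points, labels)):
        if lab != noise or not anchors:
            continue
        nearest = sorted(anchors, key=lambda a: math.dist(p, a[0]))[:k]
        out[i] = Counter(neighbor_label for _, neighbor_label in nearest).most_common(1)[0][0]
    return out


# Toy 2-D embeddings (hypothetical): two groups plus two noise points.
points = [(0, 0), (0, 1), (1, 0), (10, 10), (10, 11), (11, 10), (0.5, 0.5), (10.5, 10.5)]
labels = [0, 0, 0, 1, 1, 1, -1, -1]
cleaned = reassign_noise(points, labels, k=3)
```

Only non-noise points vote, so a cluster of noise points cannot reinforce itself.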

HDBSCAN extends DBSCAN by introducing a hierarchical, density-based framework that adapts to clusters of varying densities without requiring a strict distance threshold. This makes it particularly well-suited for complex, heterogeneous data distributions. Its primary hyperparameters, including the minimum cluster size, are optimized via a grid search over a range proportional to the dataset size. A refinement step is also applied to relabel ambiguous points initially marked as noise, enhancing the structural integrity of the final partitioning.

DE-KMeans follows a two-stage paradigm that decouples representation learning from clustering. First, an autoencoder is trained to map the raw input data into a compact latent space, capturing a denoised and task-relevant structure. The K-means algorithm is then applied to partition the resulting latent embeddings. This design leverages the power of deep feature extraction while retaining the simplicity of centroid-based clustering. The number of clusters is determined using the elbow method, and the latent space remains fixed during clustering to ensure stable and reproducible results.

DE-DBSCAN substitutes the centroid-based K-means in the DE-KMeans pipeline with a density-based alternative. By applying DBSCAN to the learned latent space, this method can identify non-convex and irregularly shaped clusters. The autoencoder is trained to compress the input sequences into a semantically structured embedding space, where DBSCAN operates using a fixed minimum point count and a neighborhood radius selected via k-distance analysis.

DE-HDBSCAN advances the deep density-based approach by coupling HDBSCAN with the latent embeddings from an autoencoder. This pairing enables the discovery of multi-scale density structures within a compact and robust feature space. The minimum cluster size is optimized through a grid search over logarithmically spaced values. To suppress over-fragmentation, a minimum number of core neighbors is specified, and ambiguous points are relabeled using nearest-neighbor voting.

DEC-HDBSCAN represents a more integrated paradigm where feature learning and clustering are jointly optimized. The model first initializes cluster assignments using HDBSCAN on the latent representations. It then iteratively refines both the cluster assignments and the autoencoder’s parameters using a clustering-aware loss, which encourages the encoder to learn more discriminative features. The primary hyperparameter is the minimum cluster size, which is selected by scanning over a predefined range during initialization.

GRAIL, in contrast to approaches that separate representation learning and clustering, performs a unified, end-to-end optimization. Each experiment consists of 20 outer iterations, with each iteration comprising three sequential stages. In the first stage, an LSTM-based recurrent neural network reconstructs missing values within fixed-length input windows of 32 time steps. To make the generation cluster-aware, each sequence is augmented at every time step with a trainable 8-dimensional embedding corresponding to its current cluster identity. Specifically, a discrete cluster label is mapped via an embedding table to a conditioning vector, which is then fed to the generator. A binary mask distinguishes observed from missing entries, and the network is trained for 10 epochs using a masked mean squared error objective to optimize only the imputed values. In the second stage, the imputed sequences are processed by an autoencoder, which projects the inputs into a 10-dimensional latent space. The autoencoder is trained for 10 epochs per iteration using a composite loss that balances reconstruction fidelity with a cluster-consistency regularizer. This regularizer encourages samples from the same cluster to form compact regions in the latent space. Crucially, network parameters are preserved across iterations, allowing for progressive refinement of the latent structure. In the third stage, HDBSCAN is applied to the latent embeddings to partition the data. A grid search over 20 candidate values for the minimum cluster size, logarithmically spaced between 0.1% and 50% of the dataset size, is performed to accommodate clusters of varying density. Finally, to improve assignment coverage, a 5-nearest-neighbor majority vote is used to reassign any points initially labeled as noise.
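Two mechanics of the first stage can be illustrated in isolation: concatenating a cluster embedding to every time step, and computing a masked mean squared error. This is a framework-free sketch rather than the GRAIL implementation. The function names are hypothetical, and the mask is assumed to be 1 for entries that contribute to the loss; which entries those are in GRAIL is not fully specified here.

```python
def condition_window(window, embedding):
    """Append the same cluster embedding to the feature vector of every time step."""
    return [list(step) + list(embedding) for step in window]


def masked_mse(pred, target, mask):
    """Mean squared error over entries where mask == 1 (assumed convention)."""
    num = sum(m * (p - t) ** 2 for p, t, m in zip(pred, target, mask))
    den = sum(mask)
    return num / den if den else 0.0


# Toy window with 3 time steps and 2 features; 8-dimensional embedding as in the text.
window = [[0.1, 0.2], [0.3, 0.4], [0.5, 0.6]]
embedding = [0.0] * 8
conditioned = condition_window(window, embedding)

# The third entry is masked out, so its large error does not affect the loss.
loss = masked_mse([1.0, 2.0, 9.0], [1.0, 4.0, 0.0], [1, 1, 0])
```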

3.3. Evaluation

To systematically evaluate clustering performance, we adopt a multi-indicator framework integrating four complementary metrics: Silhouette Score, Calinski–Harabasz index, Davies–Bouldin index, and normalized clustering entropy. Each metric assesses a different aspect of partitioning quality, from geometric separation to distributional balance.

The Silhouette Score (S) quantifies the quality of a clustering by measuring how similar a sample is to its own cluster compared to other clusters. It is calculated for each sample and then averaged over all samples as follows:

$$ S = \frac{1}{n} \sum_{i=1}^{n} \frac{b_i - a_i}{\max(a_i, b_i)}, $$

where $a_i$ is the average intra-cluster distance for sample $i$, $b_i$ is its average distance to the nearest neighboring cluster, and $n$ is the total number of samples. A score near $1$ indicates dense, well-separated clusters.
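A brute-force sketch of the Silhouette Score under Euclidean distance. The toy points are hypothetical, and treating samples in singleton clusters as contributing zero is an assumption that follows the usual convention.

```python
import math


def silhouette_score(points, labels):
    """Average of (b_i - a_i) / max(a_i, b_i) over all samples."""
    n = len(points)
    total = 0.0
    for i in range(n):
        dists = {}
        for j in range(n):
            if i != j:
                dists.setdefault(labels[j], []).append(math.dist(points[i], points[j]))
        own = dists.pop(labels[i], None)
        if not own or not dists:
            continue  # singleton cluster or single-cluster partition contributes 0
        a = sum(own) / len(own)
        b = min(sum(d) / len(d) for d in dists.values())
        total += (b - a) / max(a, b)
    return total / n


points = [(0, 0), (0, 1), (10, 0), (10, 1)]
good = silhouette_score(points, [0, 0, 1, 1])
bad = silhouette_score(points, [0, 1, 0, 1])
```

Grouping nearby points gives a score close to 1, while pairing distant points gives a negative score.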

The Calinski–Harabasz index (C), also known as the variance ratio criterion, measures the ratio of between-cluster dispersion to within-cluster dispersion. It is defined as:

$$ C = \frac{\operatorname{tr}(B_k) / (k - 1)}{\operatorname{tr}(W_k) / (n - k)}, $$

where $\operatorname{tr}(B_k)$ and $\operatorname{tr}(W_k)$ are the traces of the between-cluster and within-cluster scatter matrices, respectively, $k$ is the number of clusters, and $n$ is the total number of samples. Higher Calinski–Harabasz index values indicate better-defined clusters.
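The variance ratio can be computed directly from cluster centroids, since the trace of each scatter matrix is a sum of squared Euclidean distances. The sketch below uses hypothetical toy points and helper names.

```python
def centroid(pts):
    """Coordinate-wise mean of a list of points."""
    return [sum(coord) / len(pts) for coord in zip(*pts)]


def sqdist(p, q):
    """Squared Euclidean distance."""
    return sum((a - b) ** 2 for a, b in zip(p, q))


def calinski_harabasz(points, labels):
    """Ratio of between-cluster to within-cluster dispersion, each scaled by its degrees of freedom."""
    groups = {}
    for p, lab in zip(points, labels):
        groups.setdefault(lab, []).append(p)
    n, k = len(points), len(groups)
    overall = centroid(points)
    between = sum(len(m) * sqdist(centroid(m), overall) for m in groups.values())
    within = sum(sqdist(p, centroid(m)) for m in groups.values() for p in m)
    return (between / (k - 1)) / (within / (n - k))


points = [(0, 0), (0, 1), (10, 0), (10, 1)]
ch = calinski_harabasz(points, [0, 0, 1, 1])
```

For this toy case the between-cluster trace is 100 and the within-cluster trace is 1, which gives an index of 200.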

The Davies–Bouldin index (D) evaluates cluster separation by computing the average similarity between each cluster and its most similar counterpart. It is defined as:

$$ D = \frac{1}{k} \sum_{i=1}^{k} \max_{j \neq i} \frac{\sigma_i + \sigma_j}{d_{ij}}, $$

where $\sigma_i$ is the average distance of points in cluster $i$ to their centroid, $d_{ij}$ denotes the Euclidean distance between the centroids of clusters $i$ and $j$, and $k$ is the number of clusters. Lower values of $D$ indicate better inter-cluster dissimilarity and more compact clusters.
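A direct sketch of the Davies–Bouldin computation, using the average point-to-centroid distance as the scatter of each cluster. The toy points and function names are hypothetical.

```python
import math


def davies_bouldin(points, labels):
    """Mean over clusters of the worst-case (scatter_i + scatter_j) / centroid distance."""
    groups = {}
    for p, lab in zip(points, labels):
        groups.setdefault(lab, []).append(p)
    cents = {lab: [sum(c) / len(m) for c in zip(*m)] for lab, m in groups.items()}
    scatter = {
        lab: sum(math.dist(p, cents[lab]) for p in m) / len(m)
        for lab, m in groups.items()
    }
    total = 0.0
    for i in groups:
        total += max(
            (scatter[i] + scatter[j]) / math.dist(cents[i], cents[j])
            for j in groups
            if j != i
        )
    return total / len(groups)


points = [(0, 0), (0, 1), (10, 0), (10, 1)]
db = davies_bouldin(points, [0, 0, 1, 1])
```

Each toy cluster has a scatter of 0.5 and the centroids are 10 apart, so the index is (0.5 + 0.5) / 10 = 0.1.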

Normalized entropy (H) measures the uniformity of the sample distribution across clusters. It is computed as:

$$ H = -\frac{1}{\log k} \sum_{i=1}^{k} \frac{n_i}{n} \log \frac{n_i}{n}, $$

where $n_i$ is the number of samples in cluster $i$, $k$ is the number of clusters, and $n$ is the total number of samples. A value near 1 implies that clusters are of a similar size, indicating a balanced partitioning.
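Normalized entropy depends only on cluster sizes, so it can be sketched from the label list alone. Returning 0 for a single-cluster partition is an assumption, since the normalizer is undefined in that case.

```python
import math
from collections import Counter


def normalized_entropy(labels):
    """Shannon entropy of the cluster-size distribution, divided by log(k)."""
    counts = Counter(labels)
    n, k = len(labels), len(counts)
    if k < 2:
        return 0.0  # assumption: a single cluster is treated as fully unbalanced
    h = -sum((c / n) * math.log(c / n) for c in counts.values())
    return h / math.log(k)


balanced = normalized_entropy([0, 0, 1, 1])
skewed = normalized_entropy([0, 0, 0, 1])
```

Equal-sized clusters reach the maximum of 1, while a 3-to-1 split drops to roughly 0.81.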

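To aggregate these metrics into a unified ranking criterion, we employ min–max normalization across each score and define a weighted composite score (M):

$$ M = w_S \tilde{S} + w_C \tilde{C} + w_D \tilde{D} + w_H \tilde{H}, $$

where $\tilde{S}$, $\tilde{C}$, $\tilde{D}$, $\tilde{H}$ are the normalized versions of the corresponding metrics (with the Davies–Bouldin index inverted so that larger values are better), and the weights $w_S$, $w_C$, $w_D$, $w_H$ reflect the relative importance attributed to silhouette coherence and inter-cluster separation over statistical balance and compactness.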
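The aggregation can be sketched as min–max normalization followed by a weighted sum. The weights and the metric values below are hypothetical placeholders chosen only to make the example run; they are not the values used in the study. Inverting the normalized Davies–Bouldin index as one minus its value is likewise an assumption about how the inversion is done.

```python
def min_max(values):
    """Rescale values to [0, 1]; a constant column maps to 0."""
    lo, hi = min(values), max(values)
    return [(v - lo) / (hi - lo) if hi > lo else 0.0 for v in values]


def composite_scores(S, C, D, H, weights):
    """Weighted sum of normalized metrics, with Davies-Bouldin inverted (lower is better)."""
    w_s, w_c, w_d, w_h = weights
    s, c, h = min_max(S), min_max(C), min_max(H)
    d = [1.0 - v for v in min_max(D)]
    return [w_s * a + w_c * b + w_d * e + w_h * f for a, b, e, f in zip(s, c, d, h)]


# Hypothetical metric values for three models and hypothetical weights.
S = [0.1, 0.5, 0.9]
C = [10.0, 100.0, 1000.0]
D = [3.0, 1.5, 0.1]
H = [0.90, 0.95, 0.99]
scores = composite_scores(S, C, D, H, weights=(0.4, 0.3, 0.2, 0.1))
```

Because the normalization is relative to the set of models being compared, composite scores from different comparison tables are not directly comparable.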

3.4. Model Comparison

3.4.1. Models with Generative Augmentation

The quantitative comparison presented in Table 3 reveals significant variation in clustering performance across models. Classical methods such as K-means and DBSCAN demonstrate limited clustering capability, with Silhouette Scores of 0.122 and 0.078, respectively, and relatively low Calinski–Harabasz indices (Table 3), indicating weak inter-cluster separation. The Davies–Bouldin indices of 2.270 and 3.484 suggest high intra-cluster dispersion. Although their normalized entropy values of 0.984 and 0.977 imply balanced cluster sizes, this uniformity may obscure poor semantic structure or reflect fragmentation into similarly sized but incoherent regions, particularly in the case of DBSCAN. HDBSCAN demonstrates improved geometric separability, with a Silhouette Score of 0.216 and a moderately higher Calinski–Harabasz index. However, its entropy further increases to 0.999, suggesting highly uniform but potentially over-fragmented partitions with limited semantic cohesion.

To better leverage the structure-preserving properties of latent representations, deep embedded clustering variants are further evaluated. DE-KMeans and DE-DBSCAN both improve intra-cluster compactness, with Davies–Bouldin indices of 1.714 and 1.337, and reach Silhouette Scores of 0.185 and 0.322, respectively. DE-DBSCAN achieves a more favorable balance between density sensitivity and feature expressiveness, yielding a normalized entropy of 0.949 alongside the Calinski–Harabasz index reported in Table 3. DE-HDBSCAN further improves separation and compactness, achieving a Silhouette Score of 0.421 and a Davies–Bouldin index of 1.336, though its entropy slightly decreases to 0.945, indicating mild cluster size imbalance in exchange for enhanced geometric regularity.

Further, DEC-HDBSCAN achieves stronger joint optimization of clustering and representation. With a Silhouette Score of 0.956 and a Davies–Bouldin index of 0.066, together with the Calinski–Harabasz index reported in Table 3, it demonstrates well-separated and coherent partitions. Its entropy of 0.971 remains moderate, reflecting that clusters are slightly unbalanced in size but structurally well-formed.

Moreover, the proposed GRAIL framework attains the best overall performance, with a Silhouette Score of 0.969 and a Davies–Bouldin index of 0.042, together with the Calinski–Harabasz index reported in Table 3. It consistently outperforms all baselines in both separation and compactness. Importantly, its normalized entropy of 0.994 confirms that clusters are not only geometrically well-formed but also highly uniform in size distribution. This balance highlights the advantage of closed-loop integration of cluster-guided generation, reconstruction-aware representation, and density-based refinement.

To provide a unified evaluation metric, we construct the Composite Score M by applying min–max normalization and linearly aggregating the Silhouette Score, Calinski–Harabasz index, inverse Davies–Bouldin index, and normalized entropy, with weights specified in Section 3.3. As reported in Table 3, GRAIL achieves the highest Composite Score of 0.991, significantly outperforming the second-best method, DEC-HDBSCAN, which attains a Composite Score of 0.69. In contrast, classical algorithms such as K-means, with a score of 0.163, and DBSCAN, with a score of 0.059, exhibit lower composite scores, which underscores the limited expressive capacity and structural rigidity of traditional clustering techniques when applied to complex high-dimensional spatiotemporal PV data.

3.4.2. Models Without Generative Augmentation

To further examine the impact of data generation for clustering, we also evaluate a non-generative configuration. In this setting, the generator is disabled. Instead of imputing missing values, we perform complete-case filtering by discarding any sample window containing one or more missing entries. Given the dataset’s 45.4% missingness rate, this procedure substantially reduces the number of usable samples and may introduce a bias toward sites with more complete observations. The remaining complete windows are fed directly to the autoencoder for deep methods, while baseline models operate on the raw features. All other preprocessing steps and hyperparameters are held constant. This listwise deletion strategy provides a conservative performance baseline.

As shown in Table 4, the performance of classical methods is notably constrained under this setting. K-means achieves a modest Silhouette Score of 0.122, coupled with a relatively high Davies–Bouldin index of 2.275; its Calinski–Harabasz index is reported in Table 4. DBSCAN, by contrast, attains the lowest Composite Score of 0.1, a result attributable to a poor Silhouette Score of 0.047 and an extremely high Davies–Bouldin index of 4.645, which indicate excessive noise labeling and unstable cluster boundaries. HDBSCAN also performs modestly. Its high normalized entropy of 0.990 suggests that it produces highly uniform but potentially over-segmented cluster sizes.

The deep embedded methods show marked improvement over classical techniques, though they also reveal certain trade-offs. While DE-KMeans and DE-DBSCAN surpass their classical counterparts, their performance is mixed. Specifically, DE-DBSCAN achieves a higher Calinski–Harabasz index (Table 4) and a better Davies–Bouldin index of 1.683. However, both methods exhibit near-maximal entropy of 0.999. In the absence of generative guidance, this extreme uniformity in cluster size may not correspond to meaningful structure and could instead indicate over-segmentation. DE-HDBSCAN delivers a stronger and more balanced result, achieving a Silhouette Score of 0.289 and a low Davies–Bouldin index of 1.382, with its Calinski–Harabasz index reported in Table 4. It ranks second overall with a Composite Score of 0.345, highlighting the advantage of density-aware modeling in a latent space, even without generative support.

DEC-HDBSCAN emerges as the top-performing approach under the no-generation setting. It delivers excellent results across key metrics, achieving a Silhouette Score of 0.909 and a low Davies–Bouldin index of 0.132, with its Calinski–Harabasz index reported in Table 4. Furthermore, its high normalized entropy of 0.953 indicates that it produces balanced clusters with strong partition consistency. This robust performance culminates in a Composite Score of 0.900, reinforcing the significant advantages of an end-to-end framework that jointly optimizes representation learning and clustering.

3.4.3. Models with vs. Without Generation

To quantify the contribution of the generative module, we systematically compare the performance of each model category with and without data augmentation, as summarized in Table 5. The results show that generative augmentation provides a consistent and significant performance uplift, particularly for advanced deep learning frameworks.

When operating on the augmented data, classical methods exhibit modest but consistent gains. On average, the Silhouette Score increases by 0.009, a 7% relative improvement that indicates a minor enhancement in inter-cluster separability. The Calinski–Harabasz index rises 1.076-fold, reflecting an increase in between-cluster dispersion. Similarly, cluster compactness, measured by the inverted Davies–Bouldin index (1-D), improves by 0.385, a 13% relative gain, suggesting enhanced intra-cluster cohesion. Finally, the normalized entropy remains stable with a slight increase of 0.003, implying that the generative augmentation preserves the balance of cluster sizes while improving their geometric structure.

In contrast to classical approaches, the deep embedded models benefit far more substantially from generative augmentation. The average Silhouette Score, for instance, increases by 0.073, corresponding to an 18% relative improvement. The Calinski–Harabasz index shows an even more dramatic rise, a 1.625-fold enhancement in between-cluster separability. Furthermore, cluster compactness, as measured by the inverted Davies–Bouldin index (1-D), improves by 0.083, reflecting a 7% gain in intra-cluster density, which suggests that the augmented data helps the autoencoder learn a latent representation that is more geometrically structured.

The benefits of generative augmentation are most pronounced in the top-performing deep clustering heads, where different architectures leverage the augmented data to achieve specific gains. DE-HDBSCAN demonstrates the largest improvement in Silhouette Score, with an absolute increase of 0.132, highlighting its enhanced ability to refine complex cluster boundaries. DE-DBSCAN attains the greatest gain in cluster compactness, as measured by the inverted Davies–Bouldin index (1-D), improving by 0.346, which reflects a substantial consolidation of internal cluster structure. Meanwhile, the most significant increase in the Calinski–Harabasz index is observed in DEC-HDBSCAN, which suggests sharply defined inter-cluster separations. Furthermore, its normalized entropy increases by 0.018, demonstrating that the introduction of generative signals sustains size balance while improving semantic clarity.

A comparison of the joint modeling approaches highlights the contribution of the generative component. When evaluated against DEC-HDBSCAN, the top-performing model in the non-generative setting, GRAIL improves on every metric. Specifically, GRAIL achieves a Silhouette Score improvement of 0.06 and a 15-fold increase in the Calinski–Harabasz index. Its compactness, as measured by the Davies–Bouldin index, improves by 0.09, a 68% enhancement. The normalized entropy also increases by 0.041, indicating that GRAIL not only achieves superior geometric resolution but also promotes a more balanced distribution of samples across clusters. These findings support the efficacy of the feedback-coupled generative learning mechanism within the GRAIL framework.
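The reported gains over the non-generative DEC-HDBSCAN can be reproduced from the metric values quoted in Sections 3.4.1 and 3.4.2. The dictionary layout is just an illustrative convenience; the Calinski–Harabasz comparison is omitted because its absolute values are not quoted in the text.

```python
# Values quoted in the text: DEC-HDBSCAN without generation vs. GRAIL.
dec_no_gen = {"silhouette": 0.909, "davies_bouldin": 0.132, "entropy": 0.953}
grail = {"silhouette": 0.969, "davies_bouldin": 0.042, "entropy": 0.994}

silhouette_gain = grail["silhouette"] - dec_no_gen["silhouette"]
# Davies-Bouldin is lower-is-better, so the gain is the reduction.
compactness_gain = dec_no_gen["davies_bouldin"] - grail["davies_bouldin"]
compactness_rel = compactness_gain / dec_no_gen["davies_bouldin"]
entropy_gain = grail["entropy"] - dec_no_gen["entropy"]
```

The 68% figure is therefore a relative reduction in the Davies–Bouldin index, from 0.132 to 0.042.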

3.5. In-Depth Analysis of GRAIL

To assess the generative accuracy of GRAIL in reconstructing incomplete photovoltaic sequences, Figure 6 presents a sequence-tile residual heatmap. Each row in the heatmap corresponds to a PV site and each column represents an hourly timestep, with the color encoding the absolute difference between observed and reconstructed power values. The residuals are predominantly concentrated in the deep-blue region, indicating that the generation module in GRAIL captures the underlying temporal dynamics of PV output with low error. For example, Camp Murray, Hill Weber, and Malmstrom display uniformly low reconstruction errors across all timestamps, showing that the model reproduces site-specific PV power dynamics consistently. Although JDMT and Kahului exhibit more frequent and longer high-residual segments, as highlighted by the dashed yellow boxes in Figure 6, these deviations are temporally localized and spatially confined. This pattern suggests that the reconstruction errors are more likely induced by meteorological disturbances than by a systematic model bias. Moreover, GRAIL maintains temporal stability across the entire analysis horizon, with no observable drift or cumulative degradation in reconstruction performance. Finally, the absence of persistent residual concentrations at any single site suggests that GRAIL generalizes across heterogeneous locations.
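The quantity plotted in such a heatmap can be sketched as follows. This is an illustrative reconstruction, not the authors' plotting code: it assumes observed and reconstructed power are stored as per-site lists of hourly values, with `None` marking missing observations, and the helper names are hypothetical.

```python
def residual_matrix(observed, reconstructed):
    """Absolute error per site (row) and hourly timestep (column).

    A cell is None where the observation is missing, since no residual
    can be computed there.
    """
    rows = []
    for obs_row, rec_row in zip(observed, reconstructed):
        rows.append(
            [None if o is None else abs(o - r) for o, r in zip(obs_row, rec_row)]
        )
    return rows


def site_mean_residual(row):
    """Mean absolute residual of one site, ignoring missing cells."""
    values = [v for v in row if v is not None]
    return sum(values) / len(values) if values else None


# Hypothetical values in kW for two sites and two timesteps.
observed = [[1.0, None], [2.0, 4.0]]
reconstructed = [[1.5, 3.0], [2.0, 3.0]]
residuals = residual_matrix(observed, reconstructed)
print(residuals)
print([site_mean_residual(r) for r in residuals])
```

Comparing the per-site means gives a quick numerical check on whether high residuals are concentrated at a few sites or spread evenly.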

We also evaluate the clustering outcome of GRAIL through an analysis of the temporal power profiles associated with each cluster. Figure 7 visualizes the average hourly photovoltaic power output between 10:00 and 15:00 for the identified groups. The resulting partition reveals two clearly distinct clusters that correspond to low-generation and high-generation scenarios. Cluster 0 is characterized by a suppressed generation profile, with its output remaining consistently below 4.5 kW. The average output begins at 4.1 kW at 10:00 and declines to 3.3 kW by 15:00. This flat trajectory is indicative of persistent atmospheric attenuation from conditions such as overcast skies or heavy cloud cover. In contrast, Cluster 1 follows a bell-shaped generation curve characteristic of clear-sky conditions. Its power output starts at 9.5 kW, peaks at 13.5 kW around solar noon, and gradually declines to 11.5 kW. The generation amplitude of this cluster is approximately three times that of Cluster 0, which is consistent with favorable irradiance conditions. The distinct separation between the two profiles highlights the model's capacity to preserve salient structural patterns during the generation and clustering processes. Furthermore, the hour-to-hour profiles within each cluster are nearly parallel, a feature that reflects low intra-cluster variability and high internal consistency. This visual observation aligns with the elevated silhouette scores from Section 3.4 and indicates that GRAIL produces cluster assignments that are both geometrically compact and physically meaningful.
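The per-cluster average profile described above amounts to a group-by over cluster label and hour. The sketch below assumes records are available as `(cluster_label, hour, power_kw)` tuples; that layout and the function name are assumptions made for illustration.

```python
from collections import defaultdict


def cluster_hourly_profiles(records, start_hour=10, end_hour=15):
    """Mean power per cluster and hour within [start_hour, end_hour].

    records: iterable of (cluster_label, hour, power_kw) tuples.
    Returns {cluster_label: {hour: mean_power_kw}}.
    """
    sums = defaultdict(lambda: defaultdict(float))
    counts = defaultdict(lambda: defaultdict(int))
    for label, hour, power in records:
        if start_hour <= hour <= end_hour:
            sums[label][hour] += power
            counts[label][hour] += 1
    return {
        label: {h: sums[label][h] / counts[label][h] for h in sorted(sums[label])}
        for label in sums
    }


# Hypothetical records; the 09:00 sample falls outside the window and is dropped.
records = [(0, 10, 4.0), (0, 10, 4.2), (1, 12, 13.5), (1, 9, 5.0)]
print(cluster_hourly_profiles(records))
```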

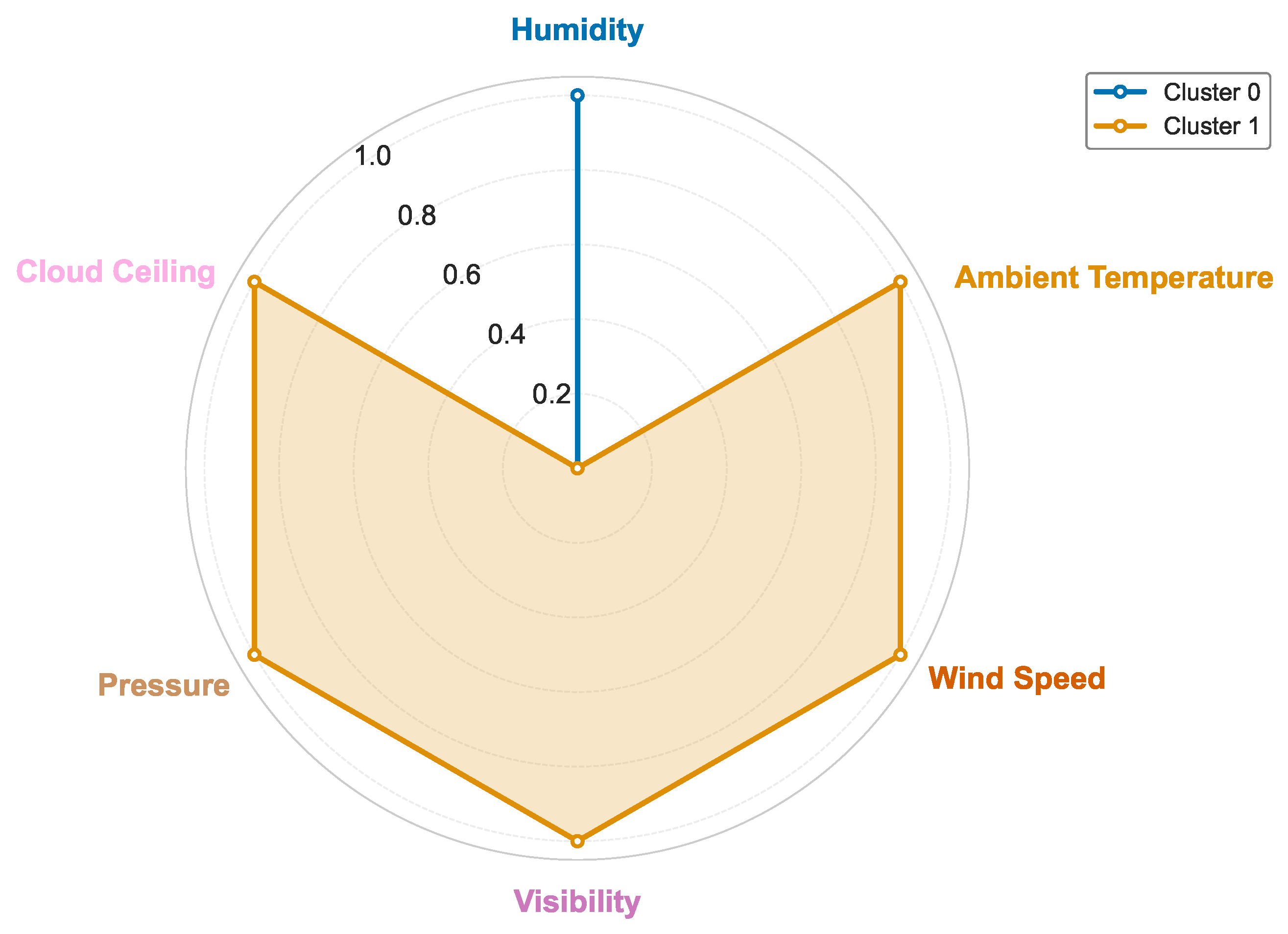

Furthermore, we analyzed the average environmental profiles associated with each cluster. Figure 8 presents a radar plot that contrasts six key meteorological indicators, which are normalized to a common range for comparability. The two clusters display markedly divergent environmental characteristics. Cluster 0, indicated by the blue trace, is predominantly characterized by high relative humidity, a condition representative of overcast or saturated settings that attenuate incoming solar radiation and inhibit photovoltaic performance. In contrast, Cluster 1, indicated by the orange trace, exhibits high normalized scores across ambient temperature, wind speed, visibility, surface pressure, and cloud ceiling. These indicators correspond to clear-sky, dry, high-pressure conditions that are conducive to high solar insolation. The complementary environmental properties of the two clusters are consistent with their power generation profiles, which show a difference of approximately 9 kW. As evidenced by Figure 7, Cluster 1 captures high-yield daytime windows, while Cluster 0 represents weather-degraded, low-output periods. These clear meteorological distinctions support the semantic validity of the clustering produced by the GRAIL framework.
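One plausible way to obtain comparable radar-plot values is to rescale each indicator across all observations and then average by cluster. The sketch below uses min-max scaling to [0, 1]; that choice of range and scaling method is an assumption, as are the function names and the sample values.

```python
def normalize_columns(rows):
    """Min-max scale every indicator to [0, 1] across all observations.

    rows: list of dicts mapping indicator name -> raw value.
    A constant indicator is mapped to 0.0 to avoid division by zero.
    """
    keys = list(rows[0].keys())
    lo = {k: min(r[k] for r in rows) for k in keys}
    hi = {k: max(r[k] for r in rows) for k in keys}
    return [
        {k: 0.0 if hi[k] == lo[k] else (r[k] - lo[k]) / (hi[k] - lo[k]) for k in keys}
        for r in rows
    ]


def cluster_means(rows, labels):
    """Average each (already normalized) indicator within each cluster."""
    means = {}
    for label in sorted(set(labels)):
        members = [r for r, lab in zip(rows, labels) if lab == label]
        means[label] = {
            k: sum(m[k] for m in members) / len(members) for k in members[0]
        }
    return means


# Hypothetical observations with two indicators and two clusters.
rows = [
    {"humidity": 90.0, "visibility": 2.0},
    {"humidity": 70.0, "visibility": 6.0},
    {"humidity": 30.0, "visibility": 10.0},
]
labels = [0, 0, 1]
print(cluster_means(normalize_columns(rows), labels))
```

Scaling before averaging keeps indicators with large raw magnitudes, such as surface pressure, from dominating the plot.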

Figure 9 illustrates the convergence behavior of GRAIL's alternating optimization, with key clustering metrics stabilizing rapidly. The silhouette score shows a steep ascent from 0.13 at iteration 2 to over 0.9 by iteration 4, after which it saturates. This early stabilization indicates that the latent space quickly organizes into compact, well-separated clusters. A comparable trend is observed in the Calinski-Harabasz index, which rises by three orders of magnitude between iterations 5 and 7, suggesting a continued refinement of inter-cluster dispersion even after the initial structure is formed. Conversely, the Davies-Bouldin index exhibits a steady decay, falling below 0.05 by iteration 6, which corroborates the reduction in cluster overlap.

Other metrics provide further insight into the training dynamics. The normalized entropy increases abruptly at iteration 3 and then remains at its theoretical maximum, indicating that the sample distribution across clusters becomes balanced early and remains stable. Concurrently, the autoencoder's reconstruction error remains nearly constant during the initial iterations and only begins to decrease after iteration 5. This delay suggests a shift in the model's learning process: once the global cluster geometry stabilizes, GRAIL reallocates its capacity toward refining the localized reconstruction of data, improving generation quality without compromising structural clarity. These patterns point to the computational efficiency of GRAIL. The framework converges within approximately six outer iterations, which keeps the training cost low relative to deep clustering models that require many more outer iterations. This accelerated optimization improves scalability and makes the model better suited to real-world settings where training budgets may be limited.
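A plateau of the kind described for Figure 9 can be detected with a simple tolerance rule on the metric history. The sketch below is one possible stopping check, not the criterion used in GRAIL; the tolerance, the patience value, and the sample history are hypothetical.

```python
def first_plateau_iteration(history, tol=1e-3, patience=2):
    """Return the 1-based iteration at which a plateau begins, else None.

    history[i] is the metric value at outer iteration i + 1. A plateau is
    declared once `patience` consecutive step-to-step changes are <= tol.
    """
    stable = 0
    for i in range(1, len(history)):
        if abs(history[i] - history[i - 1]) <= tol:
            stable += 1
            if stable >= patience:
                # The stable run started `patience` steps before index i.
                return i - patience + 1
        else:
            stable = 0
    return None


# Hypothetical silhouette trajectory over six outer iterations.
silhouette_history = [0.10, 0.13, 0.60, 0.92, 0.92, 0.92]
print(first_plateau_iteration(silhouette_history))
```

In practice the same check would be applied to several metrics at once, stopping only when all of them have flattened, since the text notes that different metrics settle at different iterations.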

The alternating optimization process converges to a stable clustering partition. As shown in Figure 9, once the convergence curves reach a plateau, subsequent outer iterations consistently produce the same partition. To formalize this, we track the cluster labels and the number of non-noise clusters at each outer iteration t. We observe that after the metric curves flatten, which typically occurs within six outer iterations in our experiments, the number of non-noise clusters becomes constant, and no further cluster merges or splits occur. The stability of the final cluster count is further reinforced by the properties of the clustering algorithm. Because the HDBSCAN configuration is selected from a stability plateau of the min_cluster_size hyperparameter, small perturbations to this value yield the same number of clusters at convergence, indicating robustness. The subsequent k-NN refinement step assigns labels only to points previously marked as noise and does not alter the set of non-noise clusters. Taken together, these observations confirm the within-run stability of both the cluster assignments and the number of clusters produced by the framework.
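The property that the refinement step relabels only noise points can be illustrated with a small k-NN sketch. It assumes a majority vote among the k nearest originally labeled points under Euclidean distance and uses -1 as the noise label; the voting rule, distance, and value of k are assumptions for illustration rather than details taken from the framework.

```python
import math
from collections import Counter


def knn_refine(points, labels, k=3, noise_label=-1):
    """Give each noise point the majority label of its k nearest labeled points.

    Points that already carry a cluster label are returned unchanged, so the
    set of non-noise clusters cannot grow, shrink, merge, or split.
    """
    labeled = [(p, lab) for p, lab in zip(points, labels) if lab != noise_label]
    if not labeled:
        return list(labels)  # nothing to vote with
    refined = []
    for point, label in zip(points, labels):
        if label != noise_label:
            refined.append(label)
            continue
        nearest = sorted(labeled, key=lambda pl: math.dist(point, pl[0]))[:k]
        votes = Counter(lab for _, lab in nearest)
        refined.append(votes.most_common(1)[0][0])
    return refined


# Hypothetical 2-D embeddings: two tight groups plus two noise points.
points = [(0, 0), (0, 1), (1, 0), (10, 10), (10, 11), (11, 10), (0.5, 0.5), (9.5, 10.5)]
labels = [0, 0, 0, 1, 1, 1, -1, -1]
print(knn_refine(points, labels))
```

Because neighbors are drawn only from points that were labeled before refinement, the result does not depend on the order in which noise points are processed.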