Study on Real-Time Detection of Lightweight Tomato Plant Height Under Improved YOLOv5 and Visual Features

Abstract

1. Introduction

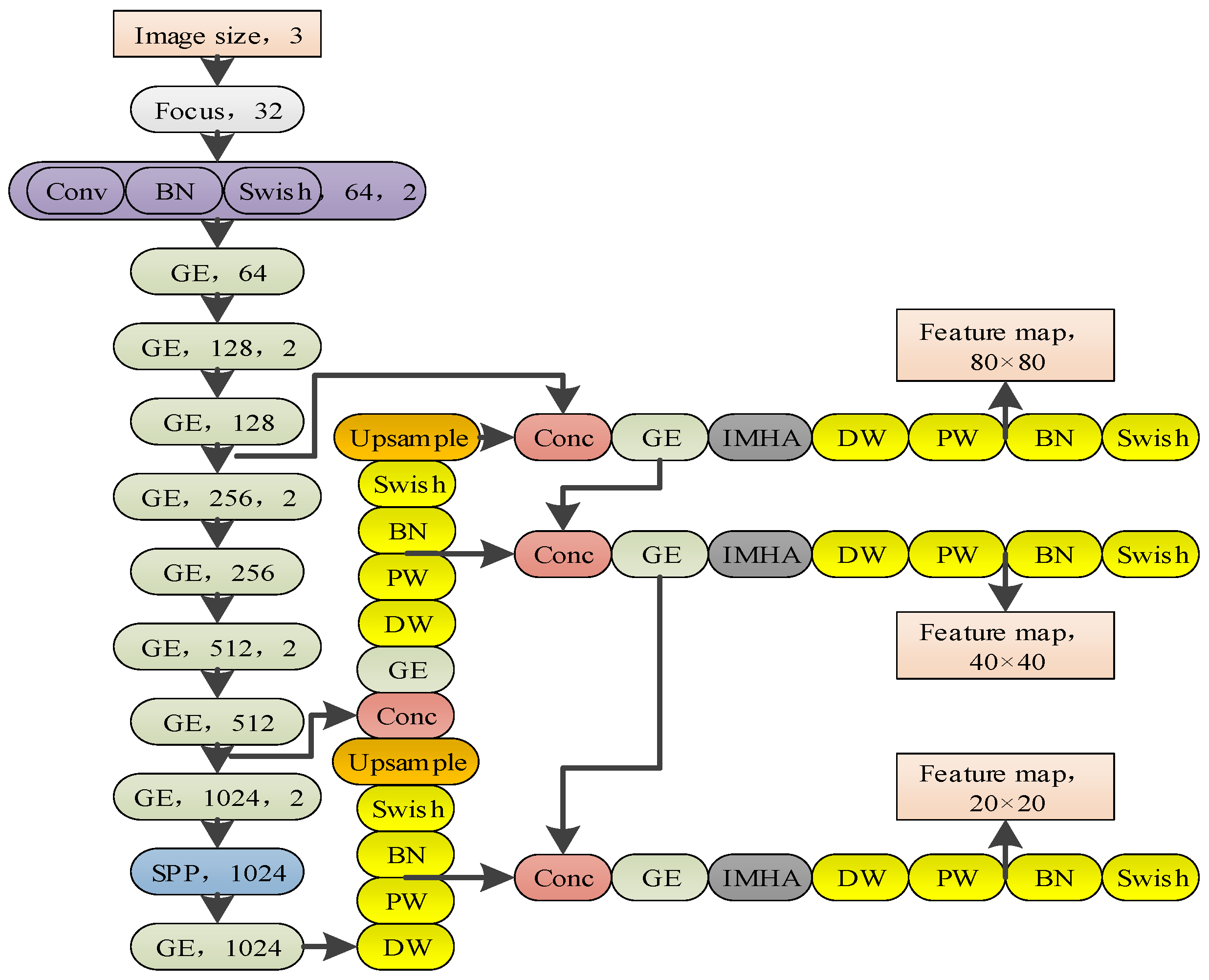

2. Improvements to YOLOv5 for Lightweight Real-Time Detection of Tomato Plant Height

- (1) Backbone: The YOLOv5 backbone is replaced with CSPDarknet53-s [15], and the number of GE modules is reduced to obtain a lightweight structure and simplify computation. A Ghost module [16] and MobileNetV2 [17] are combined to build the ghost bottleneck structure of the GE module, which converts information between low-dimensional and high-dimensional representations to prevent the information loss caused by scale compression. To compensate for the resulting loss of precision and to make the feature-map information more complete and rich, the channels of the GE module are expanded and a Focus structure [18] is introduced to slice the input image.

- (2) Neck and head: First, all CSP2_X structures in the neck and head are replaced with GE modules, and all standard convolutions are replaced with depthwise separable convolutions [19], which avoids parameter redundancy and reduces the amount of computation. Then, interactive multi-head attention [20] is embedded into the neck; it obtains spatial attention and channel attention simultaneously, so that feature information is selected along both dimensions.

- (3)

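The Focus slicing step in item (1) can be illustrated with a short Python sketch. It assumes the slicing pattern of the Focus layer in the public YOLOv5 code (four interleaved pixel phases stacked along the channel axis) and omits the convolution that normally follows; `focus_slice` and the nested-list tensor layout are hypothetical choices for illustration, not the authors' implementation.

```python
from typing import List

# Feature map stored as nested lists indexed [channel][row][col].
Tensor3D = List[List[List[float]]]


def focus_slice(image: Tensor3D) -> Tensor3D:
    """Space-to-depth slicing: (C, H, W) -> (4C, H/2, W/2).

    Every second pixel is taken at four (row, col) phase offsets and the
    four sub-images are stacked along the channel axis, so the spatial size
    halves while every input pixel is kept.
    """
    height, width = len(image[0]), len(image[0][0])
    if height % 2 or width % 2:
        raise ValueError("height and width must be even")
    # Offset order follows the YOLOv5 Focus layer:
    # x[..., ::2, ::2], x[..., 1::2, ::2], x[..., ::2, 1::2], x[..., 1::2, 1::2]
    offsets = [(0, 0), (1, 0), (0, 1), (1, 1)]
    out: Tensor3D = []
    for row_off, col_off in offsets:
        for channel in image:
            out.append([row[col_off::2] for row in channel[row_off::2]])
    return out


if __name__ == "__main__":
    img = [[[0, 1, 2, 3], [4, 5, 6, 7], [8, 9, 10, 11], [12, 13, 14, 15]]]
    for ch in focus_slice(img):
        print(ch)
```

For a single-channel 4 × 4 input holding 0 to 15, the four output channels are `[[0, 2], [8, 10]]`, `[[4, 6], [12, 14]]`, `[[1, 3], [9, 11]]` and `[[5, 7], [13, 15]]`: the resolution halves but no pixel is lost, which is why the slicing helps preserve feature-map information in a lightweight backbone.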
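The lightweighting argument in items (1) and (2) rests on parameter counts. The sketch below compares the weights of a standard convolution, a depthwise separable convolution, and a Ghost module as introduced with GhostNet. The ratio `s = 2`, the cheap-operation kernel `d = 3`, and the channel sizes in the usage example are illustrative assumptions, and the GE module described above may differ in detail; the function names are hypothetical.

```python
def standard_conv_params(c_in: int, c_out: int, k: int) -> int:
    """Weights of a k x k standard convolution (bias ignored)."""
    return k * k * c_in * c_out


def depthwise_separable_params(c_in: int, c_out: int, k: int) -> int:
    """k x k depthwise convolution (one filter per input channel)
    followed by a 1 x 1 pointwise convolution that mixes channels."""
    return k * k * c_in + c_in * c_out


def ghost_module_params(c_in: int, c_out: int, k: int, s: int = 2, d: int = 3) -> int:
    """Ghost module: a primary k x k convolution yields c_out / s intrinsic
    maps; cheap d x d depthwise operations derive the remaining maps."""
    if c_out % s:
        raise ValueError("c_out must be divisible by s")
    intrinsic = c_out // s
    primary = k * k * c_in * intrinsic
    cheap = (s - 1) * intrinsic * d * d
    return primary + cheap


if __name__ == "__main__":
    for name, fn in [("standard", standard_conv_params),
                     ("depthwise separable", depthwise_separable_params),
                     ("ghost", ghost_module_params)]:
        print(name, fn(64, 128, 3))
```

With 64 input channels, 128 output channels and a 3 × 3 kernel, the three functions give 73,728, 8,768 and 37,440 weights respectively, which shows why both substitutions shrink the model.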
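The idea of selecting features along the channel and spatial dimensions at the same time can be sketched without any learned weights. This is a simplified, parameter-free illustration in the spirit of channel/spatial attention modules such as CBAM, not the interactive multi-head attention of [20]: channel weights come from global average pooling, spatial weights from the per-position channel mean, and both pass through a sigmoid before rescaling the feature map. `dual_attention` is a hypothetical name; real attention modules learn these weightings.

```python
import math
from typing import List

# Feature map stored as nested lists indexed [channel][row][col].
Tensor3D = List[List[List[float]]]


def sigmoid(x: float) -> float:
    return 1.0 / (1.0 + math.exp(-x))


def dual_attention(fmap: Tensor3D) -> Tensor3D:
    """Reweight a (C, H, W) map by channel and spatial weights computed
    in parallel from the same input (no learned parameters)."""
    n_c, n_h, n_w = len(fmap), len(fmap[0]), len(fmap[0][0])
    # Channel weights: global average pooling over H x W, then sigmoid.
    channel_w = [sigmoid(sum(sum(row) for row in ch) / (n_h * n_w)) for ch in fmap]
    # Spatial weights: mean over channels at each position, then sigmoid.
    spatial_w = [[sigmoid(sum(fmap[c][i][j] for c in range(n_c)) / n_c)
                  for j in range(n_w)] for i in range(n_h)]
    # Scale each value by both its channel weight and its position weight.
    return [[[fmap[c][i][j] * channel_w[c] * spatial_w[i][j]
              for j in range(n_w)] for i in range(n_h)] for c in range(n_c)]


if __name__ == "__main__":
    fm = [[[1.0, 1.0], [1.0, 1.0]], [[0.0, 0.0], [0.0, 0.0]]]
    print(dual_attention(fm))
```

Because both weights lie between 0 and 1, strongly activated channels and positions are attenuated less than weak ones, which is the sense in which features are "selected" along two dimensions.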
3. Real-Time Detection of Tomato Plant Height and Weight

- (1) Delineation of the visual characteristics of tomato main stems. Color, texture, and shape are selected as the visual characteristics of the main stem, so that the detection is not biased by relying on any single feature.

- (2) The three types of visual features are fed into the improved YOLOv5 model. Through step-by-step computation and inference in the backbone, neck, and head, effective feature maps with receptive fields of different sizes are obtained. The main stems of tomato plants are then separated from the background using the feature pyramid network [27] and the three effective feature maps.

- (3) Using the obtained ratio B between the actual plant height H0 and the detected plant height, the following ratio conversion is performed to obtain the actual tomato plant height Ht and complete the detection:

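The equation for the ratio conversion in step (3) is missing from the text. Assuming it is a simple proportional scaling, with the camera setup unchanged between calibration and measurement, the calibration ratio would be B = H0 / Hd and the actual height Ht = B × Hd,t, where Hd (detected height of the reference plant) and Hd,t (detected height at measurement time) are hypothetical symbols. A minimal Python sketch under those assumptions, with hypothetical function names:

```python
def calibration_ratio(actual_height: float, detected_height: float) -> float:
    """B = H0 / Hd: measured height of a reference plant divided by the
    height detected for the same plant (Hd is a hypothetical symbol)."""
    if detected_height <= 0:
        raise ValueError("detected height must be positive")
    return actual_height / detected_height


def actual_plant_height(ratio_b: float, detected_height_t: float) -> float:
    """Ht = B * Hd,t: scale a new detection to real-world units,
    assuming the camera geometry is unchanged since calibration."""
    return ratio_b * detected_height_t


if __name__ == "__main__":
    b = calibration_ratio(50.0, 200.0)       # 50 cm reference, 200 px detected
    print(b, actual_plant_height(b, 320.0))  # 0.25 80.0
```

For example, a reference plant measured at 50 cm and detected as 200 px gives B = 0.25 cm/px, so a later detection of 320 px converts to 80 cm.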
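The three effective feature maps in step (2) correspond, in standard YOLOv5, to detection scales with strides 8, 16 and 32. Assuming those default strides and a 640 × 640 input (neither is stated here), the sketch below computes each map's grid size. The coarsest grid has the largest receptive field and suits large objects, while the finest grid keeps more spatial detail, which is generally more useful for small or thin targets. `yolo_grid_sizes` is a hypothetical helper.

```python
from typing import List, Sequence, Tuple


def yolo_grid_sizes(img_h: int, img_w: int,
                    strides: Sequence[int] = (8, 16, 32)) -> List[Tuple[int, int]]:
    """Grid size (rows, cols) of each detection-scale feature map.

    A larger stride means a coarser grid whose cells see a larger part
    of the image (larger receptive field).
    """
    sizes = []
    for s in strides:
        if img_h % s or img_w % s:
            raise ValueError(f"input {img_h}x{img_w} not divisible by stride {s}")
        sizes.append((img_h // s, img_w // s))
    return sizes


if __name__ == "__main__":
    print(yolo_grid_sizes(640, 640))  # [(80, 80), (40, 40), (20, 20)]
```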
4. Experiments

4.1. Ablation Experiment of the Improved YOLOv5 Algorithm

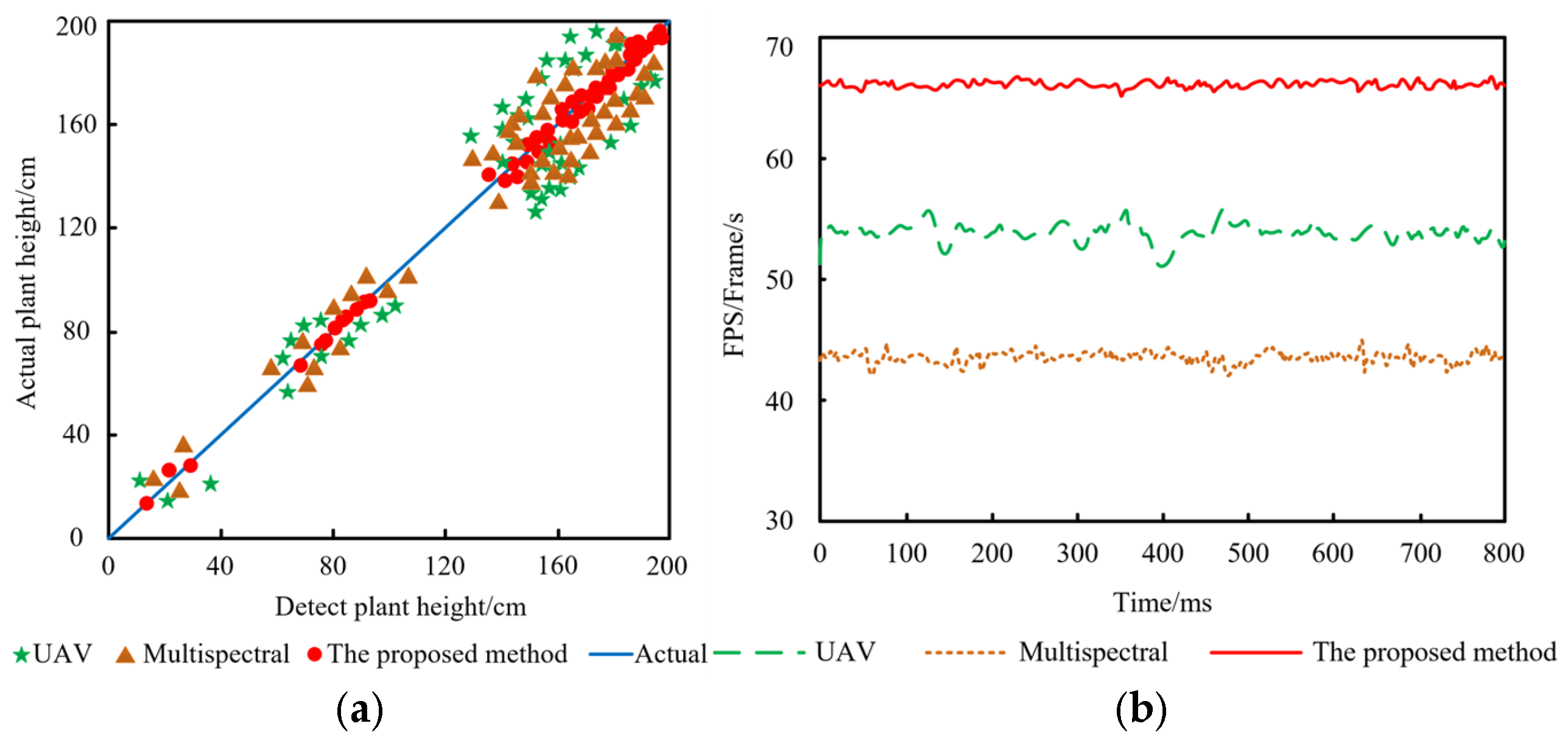

4.2. Deviation and Speed Test of Plant Height Detection

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- [1] Karunathilake, M.; Le, A.T.; Heo, S.; Chung, Y.S.; Mansoor, S. The Path to Smart Farming: Innovations and Opportunities in Precision Agriculture. Agriculture 2023, 13, 1593.

- [2] Chen, C.; Zhang, L.; Ni, K. Simulation of Pepper Plant Height, Fruit Growth and Yield Based on Logistic Model with Film Mulching. J. Yunnan Agric. Univ. 2023, 38, 1049–1058.

- [3] Yin, C.; Wang, S.; Liu, H. Screening of High-yield and Good-taste Japonica Rice Varieties (Lines) in the Yellow River Basin of Henan Province and Their Characteristics. Jiangsu Agric. Sci. 2022, 50, 60–67.

- [4] Cai, G.; Tao, J. Crop Growth Environment Control Based on Bacterial Foraging Optimized Multi-kernel Support Vector Machine. J. Univ. Jinan 2023, 37, 303–308.

- [5] Yang, G.; Wang, J.; Nie, Z.; Yang, H.; Yu, S. A Lightweight YOLOv8 Tomato Detection Algorithm Combining Feature Enhancement and Attention. Agronomy 2023, 13, 1824.

- [6] Lin, S.; Xu, T.; Ge, Y.; Ma, J.; Sun, T.; Zhao, C. 3D Information Detection Method of facility tomato based on improved YOLOv5l. J. Chin. Agric. Mech. 2024, 45, 274–284.

- [7] He, X.; Feng, T.; Liang, H.; Yuan, J. Lettuce Plant Height Detection Driven by Unmanned Aerial Vehicle Image Data. Electron. Meas. Technol. 2023, 46, 169–176.

- [8] Wu, T.; Liu, X.; Nie, R.; Liu, J.; Wu, L.; Li, T. Unmanned Aerial Vehicle Measurement Method for Wheat Plant Height Based on Fine-grained Calibration. Trans. Chin. Soc. Agric. Mach. 2023, 54, 158–167.

- [9] Liang, Y.; Wu, W.; Shi, Z.; Tang, L.; Song, X.; Yan, M.; Guo, Q.; Qin, C.; He, H.; Zhang, X. Estimation of Sugarcane Plant Height Based on Unmanned Aerial Vehicle RGB Remote Sensing. Crops 2023, 1, 226–232.

- [10] Johannes, N.; Kaiser, L.; Longin, C.F.H.; Hitzmann, B. Prediction of Wheat Quality Parameters Combining Raman, Fluorescence, and Near-Infrared Spectroscopy (NIRS). Cereal Chem. 2022, 99, 830–842.

- [11] Wang, Y.; Fang, S.; Zhao, L.; Huang, X. Estimation of Maize Plant Height in North China by Means of Backscattering Coefficient and Depolarization Parameters Using Sentinel-1 Dual-Pol SAR Data. Int. J. Remote Sens. 2022, 43, 1960–1982.

- [12] Busquier, M.; Valcarce-Diñeiro, R.; Lopez-Sanchez, J.M.; Plaza, J.; Sánchez, N.; Arias-Pérez, B. Fusion of Multi-Temporal PAZ and Sentinel-1 Data for Crop Classification. Remote Sens. 2021, 13, 3915.

- [13] Maham, G.; Rahimi, A.; Subramanian, S.; Smith, D.L. The environmental impacts of organic greenhouse tomato production based on the nitrogen-fixing plant (Azolla). J. Clean. Prod. 2020, 245, 118679.

- [14] Raja, R.; Nguyen, T.; Slaughter, C.; Fennimore, S.A. Real-time robotic weed knife control system for tomato and lettuce based on geometric appearance of plant labels. Biosyst. Eng. 2020, 194, 152–164.

- [15] Jin, M.; Li, Y.; Zhang, L.; Ma, Z. An Improved Lightweight Object Detection Algorithm Based on Attention Mechanism. Laser Optoelectron. Prog. 2023, 60, 385–392.

- [16] Yu, Y.; Mo, Y.; Yan, J.; Xiong, C.; Dou, S.; Yang, R. Research on Citrus Disease Recognition Based on Improved ShuffleNet V2. J. Henan Agric. Sci. 2024, 53, 142–151.

- [17] Peng, Y.; Li, S. A Model for Leaf Disease Recognition of Crops Based on Reparameterized MobileNetV2. Trans. Chin. Soc. Agric. Eng. 2023, 39, 132–140.

- [18] Wang, Q.; Li, H.; Xie, L.; Xie, J.; Peng, S. Research on the Improvement of Vehicle Target Detection Algorithm Based on Lidar Point Cloud. Electron. Meas. Technol. 2023, 46, 120–126.

- [19] Gu, T.; Sun, Y.; Lin, H. A Semantic Segmentation Network for Complex Background Characters Based on Lightweight UNet. J. South Cent. Univ. Natl. 2024, 43, 273–279.

- [20] Zhang, T.; Guo, Q.; Li, Z.; Deng, L. MC-CA: Multi-modal Emotion Analysis Based on Modality Time Series Coupling and Interactive Multi-head Attention. J. Chongqing Univ. Posts Telecommun. 2023, 35, 680–687.

- [21] Cheng, Q.; Li, J.; Du, J. Ship Target Detection Algorithm in Optical Remote Sensing Images Based on YOLOv5. Syst. Eng. Electron. 2023, 45, 1270–1276.

- [22] Xu, H.; Yang, D.; Jiang, Q.; Lin, L. Improvement of Lightweight Vehicle Detection Network Based on SSD. Comput. Eng. Appl. 2022, 58, 209–217.

- [23] Liu, C.; Feng, Q.; Sun, Y.; Li, Y.; Ru, M.; Xu, L. YOLACTFusion: An instance segmentation method for RGB-NIR multimodal image fusion based on an attention mechanism. Comput. Electron. Agric. 2023, 213, 108186.

- [24] Li, W.; Wang, Z.; Cui, L. Target Tracking Algorithm Based on Multi-feature Fusion and Improved SIFT. J. Zhengzhou Univ. 2024, 56, 40–46.

- [25] Dai, G.; Tian, Z.; Fan, J.; Wang, C. Plant Leaf Disease Enhancement Recognition Method for Neural Network Structure Search. J. Northwest For. Univ. 2023, 38, 153–161.

- [26] Liu, M.; Chen, J.; Chen, H. Building Point Cloud Extraction Method Based on Vector Angle Difference and Fitting Curve Fusion. Sci. Technol. Eng. 2023, 23, 15360–15369.

- [27] Dang, J.; Tang, X.; Li, S. HA-FPN: Hierarchical Attention Feature Pyramid Network for Object Detection. Sensors 2023, 23, 4508.

- [28] Zuo, X.; Chu, J.; Shen, J.; Sun, J. Multi-Granularity Feature Aggregation with Self-Attention and Spatial Reasoning for Fine-Grained Crop Disease Classification. Agriculture 2022, 12, 1499.

- [29] Shahi, B.; Xu, Y.; Neupane, A.; Guo, W. Recent Advances in Crop Disease Detection Using UAV and Deep Learning Techniques. Remote Sens. 2023, 15, 2450.

- [30] Jimenez-Sierra, D.A.; Benítez-Restrepo, H.D.; Vargas-Cardona, H.D.; Chanussot, J. Graph-Based Data Fusion Applied to: Change Detection and Biomass Estimation in Rice Crops. Remote Sens. 2020, 12, 2683.

- [31] Zhang, X.; Li, X.; Zhang, B.; Zhou, J.; Tian, G.; Xiong, Y.; Gu, B. Automated robust crop-row detection in maize fields based on position clustering algorithm and shortest path method. Comput. Electron. Agric. 2018, 154, 165–175.

- [32] Lu, X.; Zhou, J.; Yang, R.; Yan, Z.; Lin, Y.; Jiao, J.; Liu, F. Automated Rice Phenology Stage Mapping Using UAV Images and Deep Learning. Drones 2023, 7, 83.

- [33] Lee, U.; Islam, M.; Kochi, N.; Tokuda, K.; Nakano, Y.; Naito, H.; Kawasaki, Y.; Ota, T.; Sugiyama, T.; Ahn, D.-H. An Automated, Clip-Type, Small Internet of Things Camera-Based Tomato Flower and Fruit Monitoring and Harvest Prediction System. Sensors 2022, 22, 2456.

- [34] Wang, S.; Hu, Z.; Chen, Y.; Wu, H.; Wang, Y.; Wu, F.; Gu, F. Integration of agricultural machinery and agronomy for mechanised peanut production using the vine for animal feed. Biosyst. Eng. 2022, 219, 135–152.

- [35] Li, J.; Zhou, Y.; Zhang, H.; Pan, D.; Gu, Y.; Luo, B. Maize plant height automatic reading of measurement scale based on improved YOLOv5 lightweight model. PeerJ Comput. Sci. 2024, 10, 2207.

- [36] Zhao, Z.; Wang, J.; Zhao, H. Research on Apple Recognition Algorithm in Complex Orchard Environment Based on Deep Learning. Sensors 2023, 23, 5425.

- [37] Zheng, X.; Rong, J.; Zhang, Z.; Yang, Y.; Li, W.; Yuan, T. Fruit growing direction recognition and nesting grasping strategies for tomato harvesting robots. J. Field Robot. 2023, 41, 300–313.

- [38] Wang, Y.; Liu, Q.; Yang, J.; Ren, G.; Wang, W.; Zhang, W.; Li, F. A Method for Tomato Plant Stem and Leaf Segmentation and Phenotypic Extraction Based on Skeleton Extraction and Supervoxel Clustering. Agronomy 2024, 14, 198.

| Module | Parameter | Value |
|---|---|---|
| Stochastic gradient descent (SGD) optimizer | Learning rate | 0.02 |
| | Momentum coefficient | 0.965 |
| | Weight decay | 0.0003 |
| YOLOv5 model | Batch size | 48 |
| | Number of epochs | 200 |

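The optimizer settings in the training-parameter table can be read as the usual SGD update with momentum and L2 weight decay. The sketch below assumes that the weight attenuation (weight decay) entry is standard L2 weight decay and that the update takes the same form as PyTorch's `torch.optim.SGD` with zero dampening (g ← ∇L + λw, v ← μv + g, w ← w − ηv). The class names are hypothetical, and batch size and epoch count are only stored, since they govern the training loop rather than the update itself.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class SGDConfig:
    # Values taken from the training-parameter table.
    learning_rate: float = 0.02
    momentum: float = 0.965
    weight_decay: float = 0.0003
    batch_size: int = 48   # used by the data loader, not by the update
    epochs: int = 200      # used by the training loop, not by the update


@dataclass
class MomentumSGD:
    cfg: SGDConfig
    velocity: List[float] = field(default_factory=list)

    def step(self, weights: List[float], grads: List[float]) -> List[float]:
        """One SGD update with momentum and L2 weight decay
        (same form as torch.optim.SGD with dampening=0, nesterov=False)."""
        if not self.velocity:
            self.velocity = [0.0] * len(weights)
        new_weights = []
        for i, (w, g) in enumerate(zip(weights, grads)):
            g = g + self.cfg.weight_decay * w  # add L2 weight-decay term
            self.velocity[i] = self.cfg.momentum * self.velocity[i] + g
            new_weights.append(w - self.cfg.learning_rate * self.velocity[i])
        return new_weights


if __name__ == "__main__":
    opt = MomentumSGD(SGDConfig())
    w = [1.0]
    for _ in range(2):
        w = opt.step(w, [0.5])
        print(w)
```

With a momentum coefficient as high as 0.965, the velocity term accumulates most of the recent gradient history, so the effective step is considerably larger than the nominal learning rate once training settles into a consistent direction.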
Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Leng, L.; Wang, L.; Lv, J.; Xie, P.; Zeng, C.; Wu, W.; Fan, C. Study on Real-Time Detection of Lightweight Tomato Plant Height Under Improved YOLOv5 and Visual Features. Processes 2024, 12, 2622. https://doi.org/10.3390/pr12122622

Leng L, Wang L, Lv J, Xie P, Zeng C, Wu W, Fan C. Study on Real-Time Detection of Lightweight Tomato Plant Height Under Improved YOLOv5 and Visual Features. Processes. 2024; 12(12):2622. https://doi.org/10.3390/pr12122622

Chicago/Turabian Style: Leng, Ling, Lin Wang, Jinhong Lv, Pengan Xie, Chao Zeng, Weibin Wu, and Chaoyan Fan. 2024. "Study on Real-Time Detection of Lightweight Tomato Plant Height Under Improved YOLOv5 and Visual Features." Processes 12, no. 12: 2622. https://doi.org/10.3390/pr12122622

APA Style: Leng, L., Wang, L., Lv, J., Xie, P., Zeng, C., Wu, W., & Fan, C. (2024). Study on Real-Time Detection of Lightweight Tomato Plant Height Under Improved YOLOv5 and Visual Features. Processes, 12(12), 2622. https://doi.org/10.3390/pr12122622