1. Introduction

With industrial modernization, rotating machinery has been developing toward larger scale, greater intelligence, higher precision, and higher efficiency. Rotating machinery usually operates continuously at high speed under heavy loads. Rolling bearings, which convert the sliding friction between the shaft and the shaft seat into rolling friction, carry these loads directly; as a result, they have become one of the most failure-prone components in rotating machinery and equipment. According to relevant statistics, 40% of motor failures originate from bearings [1]. Therefore, to ensure the reliable and safe operation of rotating machinery, fault diagnosis of rolling bearings is of great importance.

The signals used in fault diagnosis methods include vibration, acoustic, current, speed, and temperature signals, and bearing fault diagnosis relies on a variety of sensors. One effective approach is based on signals from vibration sensors [2,3]. Many mechanical failures, such as local defects in rotating machinery, manifest in the vibration signal as a series of impulse events [4]. The fault diagnosis process is generally divided into two stages: feature extraction and fault classification. Time–frequency and related signal-processing methods extract the information contained in the signal in the time and frequency domains; commonly used methods for feature extraction include the Short-Time Fourier Transform (STFT) [5], Fast Fourier Transform (FFT) [6], Wavelet Transform (WT) [7], Variational Mode Decomposition (VMD) [8], and Ensemble Empirical Mode Decomposition (EEMD) [9]. Hou et al. [10] used EEMD to decompose the vibration signal into intrinsic mode components and computed the permutation entropy feature vector of each component; these entropy feature vectors were processed with Linear Discriminant Analysis (LDA) and used as the input of a clustering algorithm. This approach yields compact intra-class clusters, but the EEMD decomposition relies heavily on expert experience. He et al. [11] introduced the hybrid impact index (SII) as a new metric for evaluating the fault components produced by VMD and selected the optimal VMD parameters using an artificial bee colony algorithm. Models used for fault classification include the support vector machine (SVM) [12], K-Nearest Neighbor (K-NN) [13], and Artificial Neural Network (ANN) [14]. Deng et al. [15] optimized the parameters of the least squares support vector machine (LS-SVM) with the Particle Swarm Optimization (PSO) algorithm to improve classification accuracy. Lu et al. [16] proposed a case-based reconstruction algorithm that adaptively locates the nearest neighbors of each test sample, enabling bearing fault classification that uses both parameters and cases.

In recent years, deep learning has received increasing attention from researchers, and its applications in object recognition, image segmentation, speech recognition, machine health monitoring, and medical diagnosis have become widespread [17,18,19]. Traditional machine learning models have shallow architectures and have difficulty automatically extracting high-level features from samples; satisfactory classification results can usually be obtained only through feature engineering that relies on expert experience. Therefore, deep learning methods are increasingly used for bearing fault diagnosis of rotating machinery in production practice. Commonly used deep learning methods include convolutional neural networks (CNN) [20], Deep Belief Networks (DBN) [21], and Generative Adversarial Networks (GAN) [22]. Jiang et al. [23] took the multiscale characteristics of gearbox vibration signals into account and improved feature learning through a hierarchical learning structure of convolution and pooling layers. Gong et al. [24] proposed an improved convolutional neural network–support vector machine model in which raw data from multiple sensors were fed directly into a CNN-Softmax model and the extracted feature vectors were then input to an SVM for fault classification; the results were better than those of the SVM and K-NN methods. Deng et al. [25] proposed a Multi-Swarm Intelligence Quantum Differential Evolution (MSIQDE) algorithm to optimize the DBN parameters, avoiding premature convergence and improving global search capability; experiments showed that MSIQDE-DBN achieved higher classification accuracy than the comparison methods. Zhang et al. [26] used a CNN to extract features from the data and combined it with a Long Short-Term Memory (LSTM) neural network to process time series data, using the Sparrow Search Algorithm (SSA) to optimize the LSTM parameters and improve the accuracy and feasibility of fault diagnosis. Chen et al. [27] proposed a fault diagnosis method combining a CNN with an Extreme Learning Machine (ELM): the original vibration signal was first processed with the continuous wavelet transform, high-level features were then extracted with the CNN, and the ELM was used as the classifier to improve classification performance. The method detected different fault types with higher classification accuracy than the comparison methods. Chen et al. [28] proposed a rolling bearing fault diagnosis method based on hierarchical refined composite multiscale fluctuation-based dispersion entropy (HRCMFDE) and PSO-ELM. The method solves the problem of missing high-frequency information during coarse-grained decomposition and improves anti-interference ability and computational efficiency; the extracted feature vectors effectively describe the fault information, and a PSO-ELM classifier is finally used to classify the fault features. Experimental results showed high recognition accuracy and good transfer across loads. Zhou et al. [29] proposed a new GAN generator and discriminator in which fault features are extracted by an Auto-Encoder (AE) instead of depending directly on fault samples, and generator training is guided by the fault features and diagnostic result errors rather than by the original statistical overlap. Experimental results demonstrated the effectiveness of this method. Mao et al. [30] used spectral data obtained by processing the original signal as the input to a GAN, which generated synthetic samples for the fault classes with fewer samples based on the real samples. Using these synthetic samples in the training set improved generalization ability.

Based on the above review, this paper proposes a rotating machinery fault diagnosis method based on an improved deep convolutional neural network (DCNN) and a Deep Extreme Learning Machine (DELM) optimized by the Whale Optimization Algorithm (WOA). The proposed method enhances the ability of the DCNN to extract important features while leveraging the good generalization ability of the WOA-DELM model. It addresses the poor feature extraction and low diagnostic accuracy of traditional convolutional neural networks that arise when background noise masks fault features under varying operating conditions. The main contributions of this paper are as follows:

An improved DCNN (IDCNN) classification network incorporating a Bi-directional Long Short-Term Memory (BiLSTM) network and an Efficient Channel Attention Network (ECA-Net) is constructed. The BiLSTM added to the DCNN extracts deep features from the data based on temporal information, and the ECA-Net assigns weights to different features. As a result, the IDCNN lets the most expressive features play a greater role.

The initial weights of the first input layer of the DELM are optimized by the WOA, which effectively improves the overall stability of the DELM. Using WOA-DELM as the classifier improves both classification accuracy and generalization performance.

The IDCNN-WOA-DELM fault diagnosis method proposed in this paper is experimentally validated using multiple bearing data sets, and its effectiveness and generalization capability for bearing fault diagnosis are verified by comparison with other network models.

2. Theoretical Derivation

2.1. DCNN

The deep convolutional neural network is a class of feedforward neural network with convolutional computation and a deep structure, and it is one of the representative algorithms of deep learning. The convolutional and pooling layers are feature extraction layers and can be arranged alternately. The structure of the classical DCNN is shown in Figure 1.

In the convolutional layer, convolution kernels of a given size are convolved with local regions of the input features. Each kernel produces one feature map after the nonlinear activation function, so multiple kernels output multiple feature maps. For a given pair of input and output feature maps, the same kernel is applied at every position, which gives the network its weight-sharing structure. The convolutional layer is expressed as follows:

$$x_n^{l} = f\left(\sum_{m=1}^{M} x_m^{l-1} * k_{mn}^{l} + b_n^{l}\right)$$

In this equation, $x_n^l$ is the $n$th feature map of layer $l$; $f(\cdot)$ is the activation function; $M$ is the number of input feature maps; $x_m^{l-1}$ is the $m$th feature map of layer $l-1$; $k_{mn}^l$ is the trainable convolution kernel; $*$ denotes the convolution operation; and $b_n^l$ is the bias.
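As an illustration of the convolutional layer, the following pure-Python sketch computes each output map as a sum of sliding-window products over all input maps plus a bias, followed by the activation. It assumes one-dimensional signals, "valid" padding, ReLU as the activation function, and the cross-correlation form that deep learning frameworks usually implement as "convolution"; the function names are hypothetical.

```python
from typing import List

Vector = List[float]


def relu(v: float) -> float:
    return v if v > 0.0 else 0.0


def conv1d_layer(inputs: List[Vector], kernels: List[List[Vector]],
                 biases: Vector, stride: int = 1) -> List[Vector]:
    """Return one output feature map per kernel set.

    inputs[m]     -- m-th input feature map of layer l-1
    kernels[n][m] -- kernel linking input map m to output map n
    biases[n]     -- bias of output map n
    """
    ksize = len(kernels[0][0])
    out_len = (len(inputs[0]) - ksize) // stride + 1  # 'valid' padding (assumption)
    outputs = []
    for n, kernel_set in enumerate(kernels):
        fmap = []
        for pos in range(0, out_len * stride, stride):
            acc = biases[n]
            # Sum the windowed products over every input map m (M maps in total).
            for m, x in enumerate(inputs):
                window = x[pos:pos + ksize]
                acc += sum(w * v for w, v in zip(kernel_set[m], window))
            fmap.append(relu(acc))  # f(.) = ReLU (assumption)
        outputs.append(fmap)
    return outputs


# Weight sharing: the same kernel slides over every position of the input.
print(conv1d_layer([[1.0, 2.0, 3.0, 4.0]],
                   [[[-1.0, 0.0, 1.0]], [[1.0, 1.0, 1.0]]],
                   [0.0, -7.0]))  # [[2.0, 2.0], [0.0, 2.0]]
```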

The pooling layer is a downsampling layer: it changes the size of the input matrix but not its depth. Pooling reduces the number of nodes fed into the fully connected layer and therefore the number of parameters to be optimized during training. The pooling layer has no trainable parameters, so no weight updates are needed. The pooling layer is expressed as follows:

$$x_n^{l} = \mathrm{down}\left(x_n^{l-1}\right)$$

In this formula, down(·) represents the subsampling function.
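A minimal sketch of the subsampling function down(·), assuming max pooling with a window and stride of 2 (the setting given in Section 4.2). It shows that pooling shortens each map without changing the number of maps and has no trainable parameters. The function names are illustrative.

```python
from typing import List


def down(fmap: List[float], size: int = 2, stride: int = 2) -> List[float]:
    """Max-pooling version of down(.): keep the largest value in each window."""
    out_len = (len(fmap) - size) // stride + 1
    return [max(fmap[i * stride:i * stride + size]) for i in range(out_len)]


def pooling_layer(fmaps: List[List[float]], size: int = 2, stride: int = 2) -> List[List[float]]:
    # Each feature map is pooled independently: its length shrinks, but the
    # number of maps (the depth) is unchanged, and there are no weights to learn.
    return [down(f, size, stride) for f in fmaps]


print(pooling_layer([[1.0, 3.0, 2.0, 5.0], [4.0, 0.0, 1.0, 1.0]]))  # [[3.0, 5.0], [4.0, 1.0]]
```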

The fully connected layer is a traditional feedforward neural network in which each neuron is connected to all neurons of the previous layer, so the features produced by the convolution and pooling layers can be further expressed nonlinearly. The inputs of the fully connected layer are one-dimensional feature vectors. The fully connected layer is expressed as follows:

$$y^{k} = f\left(w^{k} x^{k-1} + b^{k}\right)$$

where $y^k$ is the output of the fully connected layer, $w^k$ is the weight coefficient, $k$ is the index of the network layer, $b^k$ is the bias, $x^{k-1}$ is the unfolded one-dimensional vector, and $f(\cdot)$ is the activation function; classification tasks usually use the Softmax function.
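The fully connected layer with a Softmax output can be sketched in pure Python as follows. The row-major weight layout and the helper names are assumptions made for illustration; subtracting the maximum logit in `softmax` is a standard numerical-stability step that does not change the result.

```python
import math
from typing import List


def softmax(z: List[float]) -> List[float]:
    m = max(z)  # subtracting the max avoids overflow without changing the result
    exps = [math.exp(v - m) for v in z]
    total = sum(exps)
    return [e / total for e in exps]


def flatten(fmaps: List[List[float]]) -> List[float]:
    """Unfold the pooled feature maps into the one-dimensional input vector."""
    return [v for fmap in fmaps for v in fmap]


def fully_connected(x: List[float], weights: List[List[float]], bias: List[float]) -> List[float]:
    """Affine map w x + b (weights[j] is the row for output j), then Softmax."""
    logits = [sum(w * v for w, v in zip(row, x)) + b for row, b in zip(weights, bias)]
    return softmax(logits)


x = flatten([[1.0, 0.0], [0.0, 1.0]])
print(fully_connected(x, [[1.0, 0.0, 0.0, 0.0], [0.0, 0.0, 0.0, 1.0], [0.0] * 4], [0.0, 0.0, 0.0]))
```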

2.2. DELM

The ELM, proposed by Huang et al. [31], is a training algorithm for single-hidden-layer feedforward networks that requires no iterative tuning. Its network structure consists of an input layer, a hidden layer, and an output layer. During training, the learning parameters of the hidden nodes are chosen randomly and are not adjusted; only the number of hidden nodes needs to be determined, and the output weights are obtained through a generalized inverse operation, so no backpropagation is required. Compared with traditional neural networks trained by gradient-based backpropagation, the ELM trains significantly faster and generalizes better.

For a set of $N$ arbitrary samples $(x_j, t_j)$ and $L$ hidden nodes, the output of the ELM is expressed as follows:

$$\sum_{i=1}^{L} \beta_i \, g\left(w_i \cdot x_j + b_i\right) = o_j, \quad j = 1, \ldots, N$$

In the formula, $L$ is the number of hidden nodes; $w_i$ is the input weight vector connecting the input layer to the $i$th hidden node, and $\beta_i$ is the output weight vector connecting the $i$th hidden node to the output layer; $b_i$ is the threshold of the $i$th hidden neuron; $g(\cdot)$ is the activation function; and $N$ is the number of samples.

Here $w_i \cdot x_j$ denotes the inner product of $w_i$ and $x_j$. When the network output $o_j$ equals the desired output $t_j$, the above equation can be written in matrix form as follows:

$$H\beta = T$$

where $H$ is the output matrix of the hidden layer, and $T$ is the desired output.

To drive the error between the network output and the desired output toward 0, the cost function $E = \lVert H\beta - T \rVert$ is minimized. According to ELM theory, the learning parameters of the hidden nodes can be generated randomly without considering the input data, so the above equation becomes a linear system, and the output weights can be determined by the least squares method:

$$\hat{\beta} = H^{\dagger} T$$

where $H^{\dagger}$ is the Moore-Penrose generalized inverse matrix of $H$.
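ELM training reduces to two steps: draw random hidden-node parameters, then solve for the output weights with the Moore-Penrose pseudoinverse. The NumPy sketch below assumes a sigmoid activation, uniform initialization in [-1, 1], and one-hot targets; `elm_train` and `elm_predict` are hypothetical names, not a published API.

```python
import numpy as np


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


def elm_train(X, T, n_hidden, rng):
    """Random hidden layer, then output weights beta = pinv(H) @ T."""
    W = rng.uniform(-1.0, 1.0, size=(X.shape[1], n_hidden))  # input weights w_i as columns
    b = rng.uniform(-1.0, 1.0, size=n_hidden)                # hidden thresholds b_i
    H = sigmoid(X @ W + b)                                   # hidden-layer output matrix (N x L)
    beta = np.linalg.pinv(H) @ T                             # Moore-Penrose least-squares solution
    return W, b, beta


def elm_predict(X, W, b, beta):
    return sigmoid(X @ W + b) @ beta


# Usage: fit XOR with more hidden nodes than training samples.
X = np.array([[0.0, 0.0], [0.0, 1.0], [1.0, 0.0], [1.0, 1.0]])
labels = np.array([0, 1, 1, 0])
T = np.eye(2)[labels]
W, b, beta = elm_train(X, T, n_hidden=10, rng=np.random.default_rng(0))
print(elm_predict(X, W, b, beta).argmax(axis=1))
```

With more hidden nodes than training samples, $H$ generally has full row rank, so $H\hat{\beta} = T$ holds exactly on the training data; only the single linear solve is needed, with no iterative weight tuning.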

The basic unit of the DELM is the ELM-AE, which combines the ELM with an Auto-Encoder (AE); the DELM can be seen as stacking multiple ELMs into a single network. The structure of the DELM is shown in Figure 2. This stacked structure extracts the mapping relationships between data more comprehensively and performs better on high-dimensional and nonlinear data. The mathematical model of the DELM involves the following quantities: the number of hidden-layer neurons; the number of derivative neurons corresponding to each hidden neuron; the weight vector between the $j$th hidden-layer neuron and the output layer; the $k$th-order derivative of the activation function of the $j$th hidden-layer neuron; $n$, the number of input-layer neurons; the weight vector between the input layer and the $j$th hidden-layer neuron; the bias of the $j$th hidden-layer node; and the number of training samples.

The input weights of the DELM form an orthogonal random matrix generated in the pre-training stage of the first ELM-AE. In the DELM, the least squares method can only determine the output-layer weights; the hidden-layer weights must be obtained iteratively, layer by layer. Consequently, the input weights of each ELM-AE affect the final performance of the DELM.
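The NumPy sketch below shows one common way to stack ELM-AEs into a DELM (a multilayer ELM formulation, not necessarily the exact model above): each ELM-AE draws an orthogonal random input matrix via a QR decomposition, learns output weights $\beta$ that reconstruct its own input by least squares, and passes $g(X\beta^{T})$ to the next layer; a final least-squares layer maps the top features to the targets. Layer sizes, the sigmoid activation, and all function names are assumptions for illustration.

```python
import numpy as np


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


def orthogonal(rows, cols, rng):
    """Random matrix with orthonormal columns (rows >= cols) or orthonormal rows."""
    q, _ = np.linalg.qr(rng.standard_normal((max(rows, cols), min(rows, cols))))
    return q if rows >= cols else q.T


def elm_ae(X, n_hidden, rng):
    """One ELM-AE: random orthogonal input weights; output weights reconstruct X."""
    A = orthogonal(X.shape[1], n_hidden, rng)
    b = rng.standard_normal(n_hidden)
    b /= np.linalg.norm(b)                      # unit-norm random bias
    H = sigmoid(X @ A + b)
    return np.linalg.pinv(H) @ X                # beta: (n_hidden x n_features)


def delm_train(X, T, layer_sizes, rng):
    betas, features = [], X
    for n_hidden in layer_sizes:
        beta = elm_ae(features, n_hidden, rng)
        betas.append(beta)
        features = sigmoid(features @ beta.T)   # representation passed to the next layer
    beta_out = np.linalg.pinv(features) @ T     # final output weights by least squares
    return betas, beta_out


def delm_predict(X, betas, beta_out):
    features = X
    for beta in betas:
        features = sigmoid(features @ beta.T)
    return features @ beta_out


rng = np.random.default_rng(1)
X = rng.standard_normal((20, 5))
T = np.eye(3)[np.arange(20) % 3]
betas, beta_out = delm_train(X, T, layer_sizes=[8, 12], rng=rng)
print(delm_predict(X, betas, beta_out).shape)  # (20, 3)
```

Because every layer's weights come from a random draw, the quality of the first ELM-AE's input matrix propagates through the whole stack, which is the motivation for optimizing it with the WOA.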

2.3. ECA-Net

ECA-Net, proposed by Wang et al. [32], is a local cross-channel interaction network without dimensionality reduction that reduces model complexity while maintaining performance. The attention mechanism optimizes deep learning models by simulating attention in the human brain. ECA-Net mainly improves on the SE-Net module so that the size of the one-dimensional convolution kernel can be selected adaptively. Only a few parameters are added to the model, yet clear performance gains are achieved. The structure of ECA-Net is shown in Figure 3.

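The ECA module is implemented by a fast one-dimensional convolution of size $k$, where $k$ represents the coverage of local cross-channel interaction. $k$ is related to the channel dimension $C$: the larger $C$ is, the longer the range of interaction. The mapping between $k$ and $C$ is shown below:

$$C = \phi(k) = 2^{(\gamma \cdot k - b)}$$

When the channel dimension $C$ is given, the kernel size $k$ can be determined adaptively:

$$k = \psi(C) = \left| \frac{\log_2 C}{\gamma} + \frac{b}{\gamma} \right|_{odd}$$

where $|t|_{odd}$ denotes the nearest odd number to $t$, and $\gamma$ and $b$ are constants (set to $\gamma = 2$ and $b = 1$ in the original ECA-Net [32]).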
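The adaptive kernel size and the channel reweighting of the ECA module can be sketched in pure Python. The sketch assumes $\gamma = 2$ and $b = 1$, evaluates $|t|_{odd}$ by truncating and then moving up to the next odd integer when needed (the rounding used in common ECA-Net implementations), and operates on a list of one-dimensional channel feature maps; the function names are hypothetical.

```python
import math
from typing import List


def eca_kernel_size(channels: int, gamma: int = 2, b: int = 1) -> int:
    """k = |log2(C)/gamma + b/gamma|_odd with truncate-then-round-up-to-odd rounding."""
    t = int(abs((math.log2(channels) + b) / gamma))
    return t if t % 2 == 1 else t + 1


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def eca_forward(fmaps: List[List[float]], kernel: List[float]) -> List[List[float]]:
    """Channel attention without dimensionality reduction.

    fmaps  -- one list of values per channel (C channels)
    kernel -- shared 1-D convolution weights of odd length k
    """
    k = len(kernel)
    pad = k // 2
    # 1) Global average pooling: one descriptor per channel.
    desc = [sum(f) / len(f) for f in fmaps]
    padded = [0.0] * pad + desc + [0.0] * pad
    # 2) 1-D convolution across neighbouring channels, then sigmoid.
    weights = [sigmoid(sum(kernel[j] * padded[c + j] for j in range(k)))
               for c in range(len(desc))]
    # 3) Rescale every channel by its attention weight.
    return [[w * v for v in f] for w, f in zip(weights, fmaps)]


print(eca_kernel_size(64), eca_kernel_size(256))  # 3 5
```

Because the convolution kernel is shared across channels and has only $k$ weights, the module adds very few parameters, which matches the low-overhead property described above.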

2.4. BiLSTM

LSTM was developed from the Recurrent Neural Network (RNN) by Hochreiter and Schmidhuber [33] in 1997. The structure of LSTM is shown in Figure 4. Owing to its special gating mechanism, LSTM can learn long-term dependencies in a sequence and retain long-term memory. The key to LSTM is the transmission of information from the cell state $c_{t-1}$ to $c_t$, during which the desired features are selectively retained. An LSTM unit includes a forget gate $f_t$, an input gate $i_t$, and an output gate $o_t$, which are computed as follows:

$$f_t = \sigma\left(W_f \cdot [h_{t-1}, x_t] + b_f\right)$$

$$i_t = \sigma\left(W_i \cdot [h_{t-1}, x_t] + b_i\right)$$

$$o_t = \sigma\left(W_o \cdot [h_{t-1}, x_t] + b_o\right)$$

where $\sigma$ is the sigmoid activation function, $W_f$, $W_i$, and $W_o$ are the weights of the corresponding gates, $h_{t-1}$ is the output of the previous LSTM unit, $x_t$ is the input at the current time, and $b_f$, $b_i$, and $b_o$ are the biases of the corresponding gates.

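The cell state $c_t$ and hidden state $h_t$ of the LSTM unit are calculated as follows:

$$\tilde{c}_t = \tanh\left(W_c \cdot [h_{t-1}, x_t] + b_c\right)$$

$$c_t = f_t \odot c_{t-1} + i_t \odot \tilde{c}_t$$

$$h_t = o_t \odot \tanh(c_t)$$

where $W_c$ is the weight coefficient matrix of the current input cell state, $b_c$ is its bias, and $\odot$ denotes element-wise multiplication.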

BiLSTM is composed of a forward LSTM and a backward LSTM. It processes the sequence both in its original order and in reverse order, deepening feature extraction from the original sequence through bidirectional computation. The final output of the BiLSTM network is the sum of the outputs of the forward and backward LSTMs. The structure of BiLSTM is shown in Figure 5.

The forward layer computes from time 1 to time $t$ and saves the forward hidden output $\overrightarrow{h}_t$ at each time step. The backward layer computes from time $t$ back to time 1 and saves the backward hidden output $\overleftarrow{h}_t$ at each time step. Finally, the output at each time step is obtained by combining the forward and backward outputs at that time step:

$$h_t = \overrightarrow{h}_t + \overleftarrow{h}_t$$
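The gate equations and the bidirectional combination can be traced step by step with the pure-Python sketch below. It assumes the concatenated $[h_{t-1}, x_t]$ input form, zero initial states, row-major weight matrices stored in a dictionary with hypothetical keys such as `"Wf"`, and summation of the forward and time-aligned backward outputs as described above. It is meant for reading, not speed; in practice a framework layer such as Keras `Bidirectional(LSTM(...))` would be used, whose default merge mode is concatenation rather than summation.

```python
import math
from typing import Dict, List, Tuple

Vector = List[float]
Params = Dict[str, List]


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def affine(W: List[Vector], v: Vector, b: Vector) -> Vector:
    """W . v + b for a row-major weight matrix W."""
    return [sum(w * x for w, x in zip(row, v)) + bias for row, bias in zip(W, b)]


def lstm_step(x: Vector, h: Vector, c: Vector, p: Params) -> Tuple[Vector, Vector]:
    z = h + x  # concatenation [h_{t-1}, x_t]
    f = [sigmoid(v) for v in affine(p["Wf"], z, p["bf"])]        # forget gate
    i = [sigmoid(v) for v in affine(p["Wi"], z, p["bi"])]        # input gate
    o = [sigmoid(v) for v in affine(p["Wo"], z, p["bo"])]        # output gate
    c_hat = [math.tanh(v) for v in affine(p["Wc"], z, p["bc"])]  # candidate cell state
    c_new = [fv * cv + iv * hv for fv, cv, iv, hv in zip(f, c, i, c_hat)]
    h_new = [ov * math.tanh(cv) for ov, cv in zip(o, c_new)]
    return h_new, c_new


def run_lstm(seq: List[Vector], p: Params, hidden: int) -> List[Vector]:
    h, c = [0.0] * hidden, [0.0] * hidden  # zero initial states (assumption)
    outputs = []
    for x in seq:
        h, c = lstm_step(x, h, c, p)
        outputs.append(h)
    return outputs


def bilstm(seq: List[Vector], p_fwd: Params, p_bwd: Params, hidden: int) -> List[Vector]:
    forward = run_lstm(seq, p_fwd, hidden)               # time 1 -> t
    backward = run_lstm(seq[::-1], p_bwd, hidden)[::-1]  # time t -> 1, re-aligned to 1 -> t
    # Combine the two directions by summation at each time step.
    return [[a + b for a, b in zip(hf, hb)] for hf, hb in zip(forward, backward)]
```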

2.5. WOA

The whale optimization algorithm (WOA) is a nature-inspired metaheuristic optimization algorithm proposed by Mirjalili et al. [34]. Its main idea is to mimic the unique hunting behavior of humpback whales: searching for prey, shrinking the encirclement, and updating positions along a spiral toward the prey.

In the exploration stage, whales search for prey randomly according to each other's positions. Because the prey location is generally unknown, each whale updates its position with reference to a randomly selected whale:

$$D = \left| C \cdot X_{rand} - X \right|$$

$$X(t+1) = X_{rand} - A \cdot D \tag{19}$$

Here $X$ denotes the current position of a whale, $X_{rand}$ is the position of a randomly selected whale, and $D$ is the distance between the current individual and the randomly selected whale. $A$ and $C$ are coefficient vectors, expressed as follows:

$$A = 2a \cdot r_1 - a$$

$$C = 2 \cdot r_2$$

where $r_1$ and $r_2$ are random vectors in $[0, 1]$, and $a$ decreases linearly from 2 to 0 over the iterations:

$$a = 2 - \frac{2t}{t_{max}}$$

where $t$ is the current iteration number and $t_{max}$ is the maximum number of iterations.

There are two predation modes. The first mode is shrinking encirclement, which can be described as follows:

$$D = \left| C \cdot X^{*}(t) - X(t) \right|$$

$$X(t+1) = X^{*}(t) - A \cdot D$$

In this mode, all individuals move toward the position with the best fitness value, thus forming a contracting encirclement.

The second mode uses a spiral equation to update the whale's position based on the prey's location:

$$D' = \left| X^{*}(t) - X(t) \right| \tag{25}$$

$$X(t+1) = D' \cdot e^{bl} \cdot \cos(2\pi l) + X^{*}(t) \tag{26}$$

In Formulas (25) and (26), $t$ is the current iteration number, $X(t)$ is the current position vector of the whale, $X^{*}(t)$ is the location of the prey found by the whales (the best solution so far), $b$ is the constant defining the shape of the logarithmic spiral, $l$ is a random number between −1 and 1, and $D'$ is the distance between the humpback whale and the prey.

In actual predation, the two behaviors occur simultaneously, so a probability $p$ is used to choose between the two strategies, as given in Equation (27):

$$X(t+1) = \begin{cases} X^{*}(t) - A \cdot D, & p < 0.5 \\ D' \cdot e^{bl} \cdot \cos(2\pi l) + X^{*}(t), & p \geq 0.5 \end{cases} \tag{27}$$

where $p$ is a random number in $[0, 1]$. Assigning an equal probability of 50% to each mode simulates the real behavior of humpback whales. The search ends when the maximum number of iterations is reached.
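A compact pure-Python sketch of the WOA loop follows, minimizing the sphere function as a stand-in for a real fitness function. Following many reference implementations, it draws a scalar $A$ and $C$ for each whale rather than full vectors, uses $b = 1$ for the spiral constant, and clips positions to the search bounds; these choices and the function name `woa` are assumptions.

```python
import math
import random
from typing import Callable, List, Tuple


def woa(fitness: Callable[[List[float]], float], dim: int, lb: float, ub: float,
        n_whales: int = 30, max_iter: int = 100, b: float = 1.0,
        seed: int = 0) -> Tuple[List[float], float, List[float]]:
    rng = random.Random(seed)
    whales = [[rng.uniform(lb, ub) for _ in range(dim)] for _ in range(n_whales)]
    best = min(whales, key=fitness)[:]
    best_fit = fitness(best)
    curve = []
    for t in range(max_iter):
        a = 2.0 - 2.0 * t / max_iter                # decreases linearly from 2 to 0
        for w in whales:
            r1, r2 = rng.random(), rng.random()
            A, C = 2.0 * a * r1 - a, 2.0 * r2         # scalar coefficients (assumption)
            p, ell = rng.random(), rng.uniform(-1.0, 1.0)
            if p < 0.5:
                # |A| < 1: shrink around the best whale; otherwise explore
                # around a randomly selected whale.
                ref = best if abs(A) < 1.0 else whales[rng.randrange(n_whales)]
                new = [ref[j] - A * abs(C * ref[j] - w[j]) for j in range(dim)]
            else:
                # Spiral update around the best whale.
                new = [abs(best[j] - w[j]) * math.exp(b * ell) * math.cos(2 * math.pi * ell)
                       + best[j] for j in range(dim)]
            w[:] = [min(max(v, lb), ub) for v in new]  # keep whales inside the bounds
        for w in whales:
            f = fitness(w)
            if f < best_fit:
                best, best_fit = w[:], f
        curve.append(best_fit)                        # best fitness after each iteration
    return best, best_fit, curve


best, best_fit, curve = woa(lambda x: sum(v * v for v in x), dim=2, lb=-10.0, ub=10.0)
print(best_fit)
```

The returned `curve` records the best fitness after each iteration, which is the kind of convergence curve examined for the WOA-DELM classifier in the experiments.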

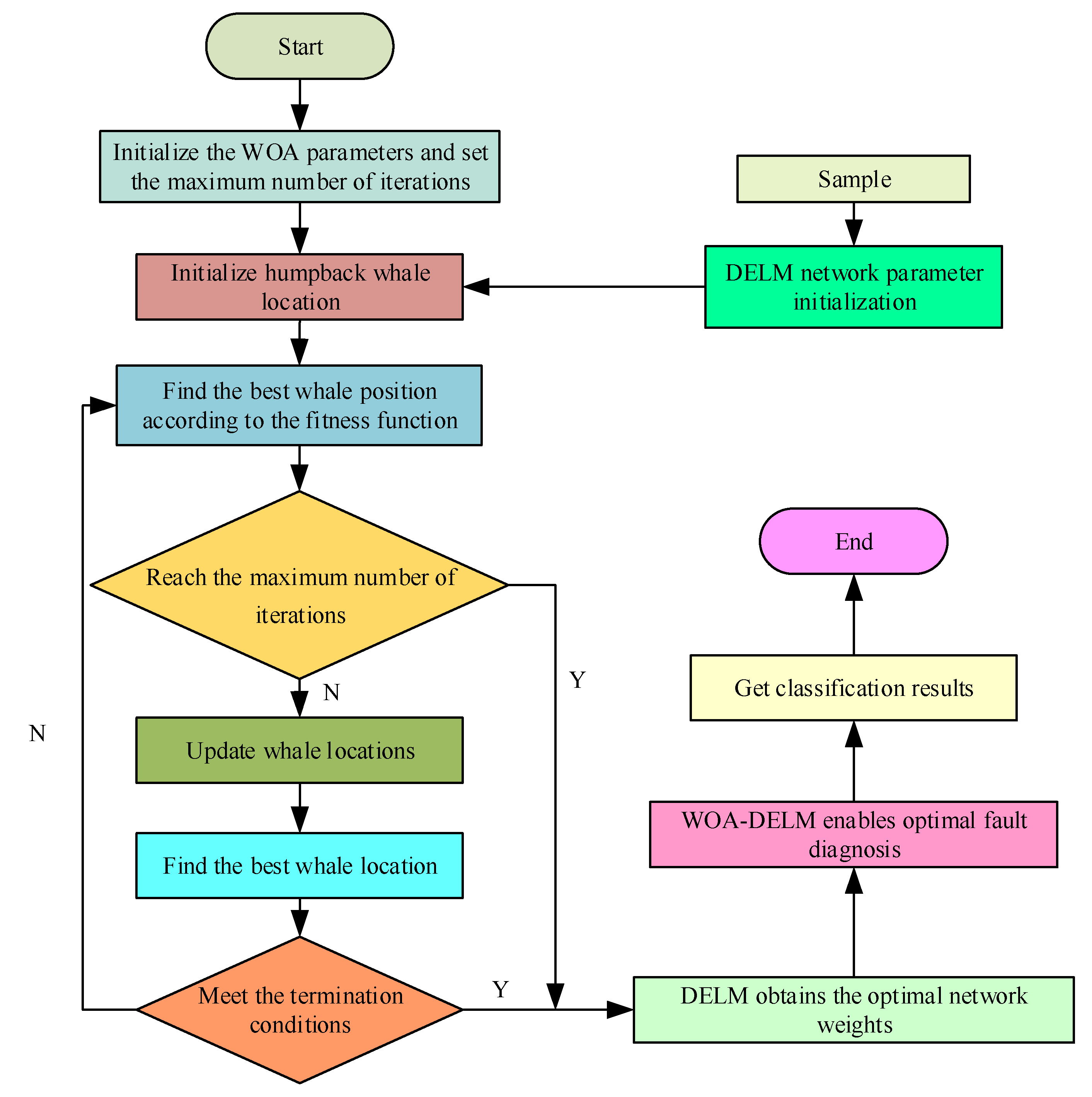

3. The Proposed Bearing Fault Diagnosis Method Based on IDCNN Feature Extraction and WOA-DELM

In rotating machinery, bearing fault diagnosis under variable conditions is often challenging because of high noise, heavy loads, and high speeds, and it requires considerable expertise and experience. To address this issue, this paper proposes a fault diagnosis method that combines the IDCNN with a WOA-optimized DELM. The method aims to improve the feature extraction capability of the convolutional neural network and to leverage the strong classification capability and stable global search capability of the WOA-DELM. The overall approach is presented in the roadmap in Figure 6.

As shown in Figure 6, the specific diagnosis process is as follows:

Step 1: Bearing vibration signals are collected from the experimental platform, and each collected one-dimensional vibration signal is cut into segments of 1 × 2048 points. The training and testing sets are selected from these segments so as to simulate variable working conditions.

Step 2: The IDCNN model is trained on the training set. The deep convolutional neural network combined with BiLSTM and ECA-Net mines deep features of the data so that the most expressive features in the samples play a greater role. The extracted fault features are then fed into the WOA-DELM model for training.

Step 3: The testing set samples are fed into the trained IDCNN, and the outputs of the fully connected layer are passed as a new testing set to the trained WOA-DELM classifier. The diagnostic results and evaluation indexes are then used to assess the effectiveness of the model. A traditional feedforward neural network that adjusts its weights with an iterative gradient descent algorithm suffers from slow training, poor generalization, and a large number of training parameters. Therefore, replacing the commonly used Softmax classifier with the WOA-DELM classifier can improve the accuracy, efficiency, and generalization ability of classification. The fault features extracted by the IDCNN are input into the DELM classifier, and the WOA is used to find the optimal weights and bias parameters of the DELM classifier to improve the diagnostic performance of the model.

WOA–DELM Classifier

The WOA is used to optimize the originally randomly generated weights of the first input layer of the DELM model. The optimization of the DELM using the WOA is shown in Figure 7. The specific optimization steps are as follows:

Step 1: Set the number of hidden nodes of each ELM-AE to 180 and the activation function to Sigmoid, and randomly initialize the input weights and hidden-layer biases.

Step 2: Initialize the WOA parameters. The population size is set to 80, and the number of iterations is set to 20.

Step 3: Initialize each whale using the randomly generated input weights and biases of the DELM as its initial position vector.

Step 4: Define the fitness function, which in this paper is the error rate on the training set, and calculate the fitness of each individual in the initial population to obtain the optimal individual.

Step 5: Randomly generate $p$ and $A$, which jointly determine the position-update formula. When $|A| \geq 1$, Formula (19) is chosen; when $|A| < 1$, Formula (27) is chosen according to the probability $p$.

Step 6: Recalculate the fitness values and update the best solution.

Step 7: Check whether the WOA meets the termination condition. If it does, output the optimal result; otherwise, repeat the above steps until the set number of iterations is reached.

Step 8: Load the optimized parameters into the WOA-DELM model and start fault diagnosis.
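To connect Steps 3 and 4, the NumPy sketch below decodes a flat whale position into input weights and hidden biases and returns the training-set error rate as the fitness value. For brevity, a single ELM layer stands in for the full DELM, and `decode` and `fitness` are hypothetical names; any WOA routine that minimizes a function of a real vector could call `fitness` directly, with a position length of `n_features * n_hidden + n_hidden`.

```python
import numpy as np


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


def decode(position, n_features, n_hidden):
    """Split a flat whale position into input weights W and hidden biases b."""
    position = np.asarray(position, dtype=float)
    W = position[: n_features * n_hidden].reshape(n_features, n_hidden)
    b = position[n_features * n_hidden:]
    return W, b


def fitness(position, X, labels, n_classes, n_hidden):
    """Training error rate of an ELM whose input weights come from the whale."""
    W, b = decode(position, X.shape[1], n_hidden)
    H = sigmoid(X @ W + b)
    T = np.eye(n_classes)[labels]              # one-hot targets
    beta = np.linalg.pinv(H) @ T               # output weights by least squares
    pred = np.argmax(H @ beta, axis=1)
    return float(np.mean(pred != labels))


rng = np.random.default_rng(0)
X = rng.standard_normal((10, 4))
labels = np.arange(10) % 3
position = rng.uniform(-1.0, 1.0, size=4 * 20 + 20)
print(fitness(position, X, labels, n_classes=3, n_hidden=20))
```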

4. Experiments and Analysis

This paper validates the proposed method on a bearing experimental dataset: the publicly available dataset from Case Western Reserve University.

The hardware environment consisted of the Windows 11 operating system, an Intel i5-12400F CPU @ 2.5 GHz, and an Nvidia GeForce RTX 3060 GPU. The deep learning framework was built and run in TensorFlow with Python 3.7, and the WOA-DELM was implemented in MATLAB R2018b.

4.1. Data Description and Processing

The CWRU (Case Western Reserve University) dataset is commonly used for bearing fault diagnosis; its experimental platform consists of a motor, a torque sensor, and a dynamometer. The CWRU bearing experimental platform is shown in Figure 8. The dataset covers four health states: inner ring damage, outer ring damage, ball failure, and normal operation, with fault diameters of 0.007 inches, 0.014 inches, and 0.028 inches. Outer ring damage is located at three positions: 3 o’clock, 6 o’clock, and 12 o’clock. The drive end (DE) has two sampling frequencies, 12 kHz and 48 kHz, while the fan end (FE) has only a 12 kHz sampling frequency. Each fault type covers different bearing operating states, comprising four loads of 0, 1, 2, and 3 hp, corresponding to four speeds of 1797, 1772, 1750, and 1730 rpm.

The data used in Experiments 1 and 2 were sampled at 12 kHz at the DE, at speeds of 1797 rpm, 1772 rpm, 1750 rpm, and 1730 rpm, corresponding to loads of 0 hp, 1 hp, 2 hp, and 3 hp, respectively. Four health states are included: inner ring, ball, and outer ring faults with a fault size of 0.014 inches, and the normal state. The data are divided into data sets A, B, C, and D according to speed and load, and each data set includes the 4 health states. The original vibration signal of each health state contains 121,048 points. To avoid overfitting caused by the small amount of data, the data are augmented by overlapping sampling: each sample contains 2048 points, and each health state yields 200 samples, for a total of 800 samples per data set. The samples of each data set are listed in Table 1.
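The stride used for overlapping sampling is not stated. Assuming the 200 windows are spread evenly over each 121,048-point record, the stride follows from $\lfloor (121048 - 2048) / (200 - 1) \rfloor = 597$ points, so consecutive samples share 1,451 points. A pure-Python sketch under that assumption, with a hypothetical function name:

```python
from typing import List, Tuple


def overlap_segments(signal: List[float], seg_len: int = 2048,
                     n_segments: int = 200) -> Tuple[List[List[float]], int]:
    """Cut a 1-D vibration record into n_segments overlapping windows of seg_len points."""
    if len(signal) < seg_len:
        raise ValueError("signal is shorter than one segment")
    # Largest integer stride that still fits all windows inside the record (assumption).
    stride = (len(signal) - seg_len) // (n_segments - 1) if n_segments > 1 else 0
    segments = [signal[k * stride:k * stride + seg_len] for k in range(n_segments)]
    return segments, stride


record = [float(i) for i in range(121_048)]  # stand-in for one health-state record
segments, stride = overlap_segments(record)
print(len(segments), len(segments[0]), stride)  # 200 2048 597
```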

The data used in Experiment 3 were also sampled at 12 kHz at the DE. Experiment 3 was carried out under four conditions: 1797 rpm/0 hp, 1772 rpm/1 hp, 1750 rpm/2 hp, and 1730 rpm/3 hp. Three fault types were selected (inner race fault, outer race fault, and ball fault), each with fault sizes of 0.007 inches, 0.014 inches, and 0.021 inches. The data set used in Experiment 3 therefore contains nine fault conditions and one normal condition. After overlapping sampling, each fault type includes 300 samples. The data set used in Experiment 3 is shown in Table 2.

This study comprises three experiments, denoted Experiments 1, 2, and 3. Experiment 1 uses data from two working conditions as the training set and data from a third working condition as the testing set; the training and testing sets contain 1600 and 686 samples, respectively. In Experiment 2, the training set and the testing set come from two different working conditions and contain 800 and 343 samples, respectively. Because a load of 0 hp rarely occurs during actual bearing operation, Experiment 2 does not consider the 0 hp condition.
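The number of experiment groups follows directly from these protocols: choosing two of the four data sets for training and one of the remaining two for testing gives $\binom{4}{2} \times 2 = 12$ groups, and training on one of the three loaded data sets and testing on another gives $3 \times 2 = 6$ groups. The sketch below enumerates them, assuming that data sets A to D correspond to 0 to 3 hp in order (a hypothetical labeling).

```python
from itertools import combinations
from typing import List, Tuple

# Hypothetical labels: A-D taken as the 0, 1, 2, and 3 hp data sets of Table 1.
DATA_SETS = ["A", "B", "C", "D"]


def experiment1_groups(sets: List[str]) -> List[Tuple[Tuple[str, ...], str]]:
    """Train on two working conditions, test on one of the remaining ones."""
    return [(train, test) for train in combinations(sets, 2)
            for test in sets if test not in train]


def experiment2_groups(sets: List[str]) -> List[Tuple[str, str]]:
    """Train on one working condition, test on a different one."""
    return [(train, test) for train in sets for test in sets if train != test]


print(len(experiment1_groups(DATA_SETS)))      # 12
print(len(experiment2_groups(DATA_SETS[1:])))  # 6 (0 hp data set excluded)
```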

4.2. Model Parameter Setting

The structure of the IDCNN feature extractor is shown in Table 3. The model contains 4 convolutional layers and 4 max pooling layers. The four convolutional layers have 16, 32, 64, and 32 filters, kernel sizes of 64, 3, 3, and 3, and strides of 16, 1, 1, and 1, respectively. Each convolutional layer is followed by a max pooling layer whose kernel size and stride are both 2. A BiLSTM is placed after the fourth max pooling layer to extract bidirectional deep features from the time series in both the forward and reverse directions. The ECA-Net is placed after the BiLSTM layer; this layer multiplies the incoming features by the feature weights produced by the ECA attention mechanism to achieve feature weighting. The ECA module uses the Sigmoid activation function, and all other layers use ReLU. The WOA-DELM classifier is configured as follows: the sum of the error rates on the training and testing sets is used as the fitness function during the optimization iterations, and the parameters fixed at the end of the optimization are used to calculate the final accuracy. The ELM-AE hidden layer has 180 nodes, the population size is 80, the number of iterations is 20, and the activation function is Sigmoid.
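The settings in Table 3 can be checked by tracing the feature-map length through the four convolution and pooling stages for a 2048-point input. Padding is not specified, so the sketch below supports both "same" and "valid" convolution padding as an assumption; pooling is taken as non-overlapping max pooling with size and stride 2 and no padding. The names are illustrative.

```python
import math
from typing import List, Tuple

# (number of filters, kernel size, stride) of the four convolutional layers (Table 3).
CONV_LAYERS = [(16, 64, 16), (32, 3, 1), (64, 3, 1), (32, 3, 1)]


def conv_out(length: int, kernel: int, stride: int, padding: str) -> int:
    if padding == "same":
        return math.ceil(length / stride)
    if padding == "valid":
        return (length - kernel) // stride + 1
    raise ValueError(f"unknown padding: {padding}")


def trace_shapes(length: int = 2048, padding: str = "same") -> List[Tuple[str, int, int]]:
    """(layer name, sequence length, channels) after each conv and max-pooling layer."""
    shapes = []
    for idx, (filters, kernel, stride) in enumerate(CONV_LAYERS, start=1):
        length = conv_out(length, kernel, stride, padding)
        shapes.append((f"conv{idx}", length, filters))
        length = (length - 2) // 2 + 1  # max pooling, size 2, stride 2, no padding
        shapes.append((f"pool{idx}", length, filters))
    return shapes


for name, steps, channels in trace_shapes():
    print(name, steps, channels)
```

Under "same" padding, the BiLSTM would receive a sequence of 8 steps with 32 features each; under "valid" padding, the sequence would have 6 steps.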

4.3. Analysis of Experiment 1

To evaluate classification performance, accuracy and the F1 score are used as criteria. Both are calculated from the results of multiple experiments, which reduces the probability of drawing wrong conclusions from data containing random errors.

The evaluation metrics are based on the following concepts: TP (True Positives) are positive cases classified as positive; FP (False Positives) are negative cases classified as positive; FN (False Negatives) are positive cases classified as negative; and TN (True Negatives) are negative cases classified as negative.

Accuracy is the ratio of the number of correctly classified samples to the total number of samples. The formula is as follows:

$$\mathrm{Accuracy} = \frac{TP + TN}{TP + TN + FP + FN}$$

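The F1 score is the harmonic mean of precision and recall. The formula is as follows:

$$\mathrm{Precision} = \frac{TP}{TP + FP}, \quad \mathrm{Recall} = \frac{TP}{TP + FN}$$

$$F1 = \frac{2 \times \mathrm{Precision} \times \mathrm{Recall}}{\mathrm{Precision} + \mathrm{Recall}}$$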
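For multi-class problems such as these, one common reading of the F1 score is the macro average: compute precision, recall, and F1 for each class in a one-vs-rest manner and average them with equal weight. The averaging scheme is not stated, so the pure-Python sketch below assumes macro averaging; the function names are illustrative.

```python
from typing import List, Sequence


def accuracy(y_true: Sequence[int], y_pred: Sequence[int]) -> float:
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)


def macro_f1(y_true: Sequence[int], y_pred: Sequence[int]) -> float:
    """One-vs-rest F1 per class, averaged with equal weight over classes (assumption)."""
    classes = sorted(set(y_true) | set(y_pred))
    scores: List[float] = []
    for c in classes:
        tp = sum(t == c and p == c for t, p in zip(y_true, y_pred))
        fp = sum(t != c and p == c for t, p in zip(y_true, y_pred))
        fn = sum(t == c and p != c for t, p in zip(y_true, y_pred))
        precision = tp / (tp + fp) if tp + fp else 0.0
        recall = tp / (tp + fn) if tp + fn else 0.0
        f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
        scores.append(f1)
    return sum(scores) / len(scores)


y_true = [0, 0, 1, 1, 2, 2]
y_pred = [0, 1, 1, 1, 2, 0]
print(round(accuracy(y_true, y_pred), 4), round(macro_f1(y_true, y_pred), 4))  # 0.6667 0.6556
```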

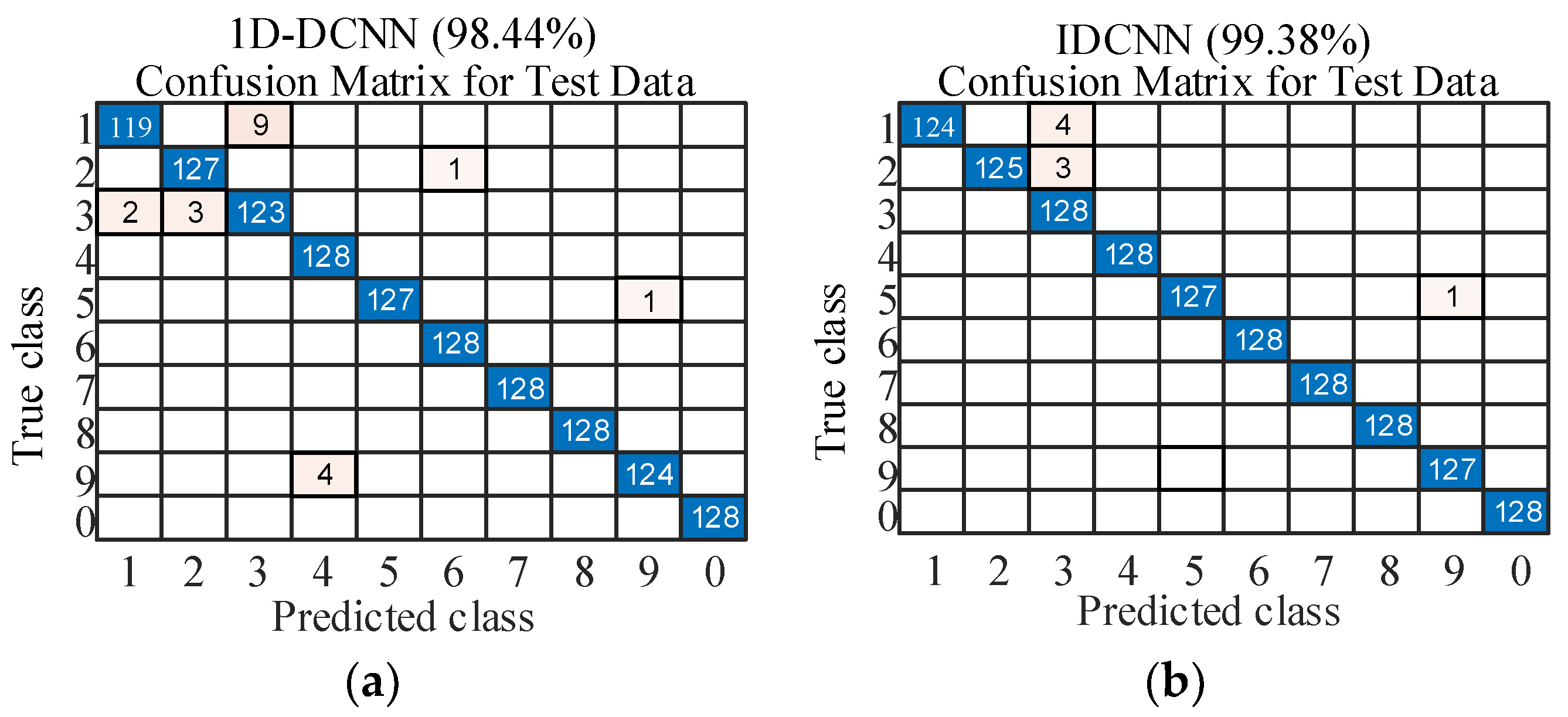

In Experiment 1, each data set in Table 1 is used. Both IDCNN and 1D-DCNN use Softmax as the classifier, and their network structures are the same as the network structure used in this paper. Each experiment is repeated ten times to reduce random errors.

In Experiment 1, the data from two conditions were used as the training set, and the data from one of the remaining conditions as the testing set. The average accuracy over ten runs is shown in Figure 9. Across the 12 groups of experiments, the lowest average accuracy was 96.65% and the highest was 99.85%, showing that the proposed method is accurate and stable.

Four of the twelve groups were selected and numbered Tasks 1–4, and the proposed method was compared with the other two methods on these tasks to further verify its effectiveness. The samples used in Tasks 1–4 are shown in Table 4.

One run was randomly selected from each of the four tasks, and its accuracy and F1 score are shown in Figure 10. In all four tasks, every indicator exceeded 97%, and both the accuracy and F1 score of Task 4 reached 100%. Task 1 gave the lowest result, but its accuracy and F1 score still reached 97.96%. The average accuracy and average F1 score over the ten runs are shown in Table 5. In all four tasks, the average accuracy and average F1 score of the proposed method are significantly higher than those of the other two methods, which demonstrates the effectiveness of the proposed method in feature extraction and fault classification. The training and testing times of the three methods are shown in Table 6. The total time of the proposed method is longer than that of the other two methods but remains within an acceptable range, showing that the proposed method improves diagnostic performance at the cost of only a small increase in training and testing time.

The convergence curves are shown in Figure 11. For the tasks listed in Table 5, the WOA-DELM classifier converges to its optimal parameter values within 20 iterations. Compared with the 1D-DCNN and IDCNN methods, the proposed method therefore shows an advantage in classification efficiency for bearing faults in rotating machinery, which reflects the strong parameter-search ability of the WOA-DELM classifier.
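For readers unfamiliar with the optimizer, the sketch below is a simplified, generic Whale Optimization Algorithm: encircling, random-search, and spiral (bubble-net) updates with a coefficient `a` that decreases linearly from 2 towards 0. It is not the authors' WOA-DELM implementation. The objective is a hypothetical stand-in for a DELM validation error, the bounds are arbitrary, and the best whale is updated immediately after each move rather than once per iteration. The returned `history` is the kind of best-so-far curve plotted in Figure 11.

```python
import math
import random


def woa_minimize(objective, bounds, n_whales=20, max_iter=20, seed=0):
    """Simplified Whale Optimization Algorithm for minimization.

    Returns (best_position, best_value, history), where history[t] is the
    best objective value found after iteration t (a convergence curve).
    """
    rng = random.Random(seed)

    def clip(x):
        # Keep every coordinate inside its (low, high) search bound.
        return [min(max(v, lo), hi) for v, (lo, hi) in zip(x, bounds)]

    whales = [[rng.uniform(lo, hi) for lo, hi in bounds] for _ in range(n_whales)]
    scores = [objective(w) for w in whales]
    best_idx = min(range(n_whales), key=scores.__getitem__)
    best_pos, best_val = list(whales[best_idx]), scores[best_idx]
    history = []

    for t in range(max_iter):
        a = 2.0 - 2.0 * t / max_iter  # decreases linearly from 2 towards 0
        for i in range(n_whales):
            A = 2.0 * a * rng.random() - a
            C = 2.0 * rng.random()
            p = rng.random()
            l = rng.uniform(-1.0, 1.0)
            x = whales[i]
            if p < 0.5:
                # |A| < 1: shrink towards the best whale (exploitation);
                # |A| >= 1: move relative to a random whale (exploration).
                ref = best_pos if abs(A) < 1 else whales[rng.randrange(n_whales)]
                new = [r - A * abs(C * r - xj) for r, xj in zip(ref, x)]
            else:
                # Logarithmic-spiral (bubble-net) move around the best whale, b = 1.
                new = [abs(bj - xj) * math.exp(l) * math.cos(2 * math.pi * l) + bj
                       for bj, xj in zip(best_pos, x)]
            whales[i] = clip(new)
            val = objective(whales[i])
            if val < best_val:
                best_pos, best_val = list(whales[i]), val
        history.append(best_val)

    return best_pos, best_val, history
```

For example, `woa_minimize(lambda x: sum(v * v for v in x), [(-5.0, 5.0), (-5.0, 5.0)])` minimizes a two-dimensional sphere function. In a WOA-DELM setting the objective would instead train the network with the candidate parameters and return an error measure.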

To demonstrate the feature extraction ability of the improved convolutional neural network, the testing set samples fed into the model and the corresponding outputs of the fully connected layer are visualized for the four tasks using the t-SNE method.

Figure 12 shows the distribution of the input testing set samples for the four tasks and the distribution of the same samples at the output of the fully connected layer. Although some samples remain confused in all four tasks, the results of the proposed method in Task 2 show good diagnostic and generalization capability.

4.4. Analysis of Experiment 2

In Experiment 2, each data set in Table 1 is used. For each group, 800 samples were selected from one of the four data sets as the training set, and 343 samples were randomly selected from one of the remaining data sets as the testing set. The other experimental conditions, parameter settings, and evaluation criteria were the same as in Experiment 1, and again 10 experiments were conducted to reduce random error.

In Experiment 2, the data of one working condition are used as the training set, and one of the remaining data sets is used as the testing set. The average accuracy over 10 experiments is shown in Figure 13. Across the 6 groups of experiments, the lowest average accuracy was 97.73% and the highest was 99.42%. Even with a reduced training set, the method diagnoses the same fault types with high and stable average accuracy.

Three of the six groups, numbered Tasks 5–7, were selected, and the proposed method was again compared with the other two methods. The sample composition of Tasks 5–7 is shown in Table 7.

One experiment was randomly selected from each of the three tasks; its accuracy and F1 Score are shown in Figure 14. In all three tasks, the accuracy and F1 Score of the proposed method are not lower than 97.37% and are better than those of the other two methods. In Task 5, the accuracy and F1 Score reach 98.83%, which demonstrates the fault diagnosis capability of the proposed method under variable working conditions. The average accuracy and average F1 Score over the ten experiments are shown in Table 8; these averages across the different tasks further confirm the generalization and stability of the proposed method. The training and testing times of the three methods are shown in Table 9. The average time spent on a task decreases as the numbers of training and testing samples decrease. Although the proposed method takes longer than the other two methods, its time cost remains acceptable.

Figure 15 shows the confusion matrices of the proposed method for one experiment in each of the three tasks, covering the classification results for all four fault states. The accuracy reached 98.83% in Task 5. In Task 6, three samples of fault type 3 were misdiagnosed as fault type 1, and eight samples of fault type 3 were misdiagnosed as fault type 2; the accuracy for label 1 is 91.2% and that for label 3 is 98.8%. Signal frequency analysis shows that when an outer or inner ring fault occurs, the low-frequency component almost disappears, whereas for a rolling element fault the vibration signal is dominated by low-frequency components. Because both inner and outer ring fault signals lack low-frequency content, their spectra are similar, which explains the confusion between IF and OF samples in Task 6. In Task 7, the accuracy reaches 100% for every type except label 1, for which it is 92.9%.
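The per-class accuracies quoted above can be read off a confusion matrix as sketched below. The text does not define per-class accuracy; this sketch assumes it is the diagonal entry divided by its row total (the fraction of samples of a true class that are diagnosed correctly). The matrix in the tests is purely illustrative and is not taken from Figure 15.

```python
def per_class_accuracy(cm):
    """cm[i][j] = number of samples of true class i diagnosed as class j.

    Returns, for each true class, the fraction of its samples diagnosed
    correctly (diagonal entry divided by the row total).
    """
    return [row[i] / sum(row) if sum(row) else 0.0 for i, row in enumerate(cm)]


def overall_accuracy(cm):
    """Fraction of all samples that lie on the diagonal of the matrix."""
    total = sum(sum(row) for row in cm)
    return sum(cm[i][i] for i in range(len(cm))) / total
```

If the figure instead normalizes by column (predicted class), the same numbers would be per-class precision rather than per-class recall.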

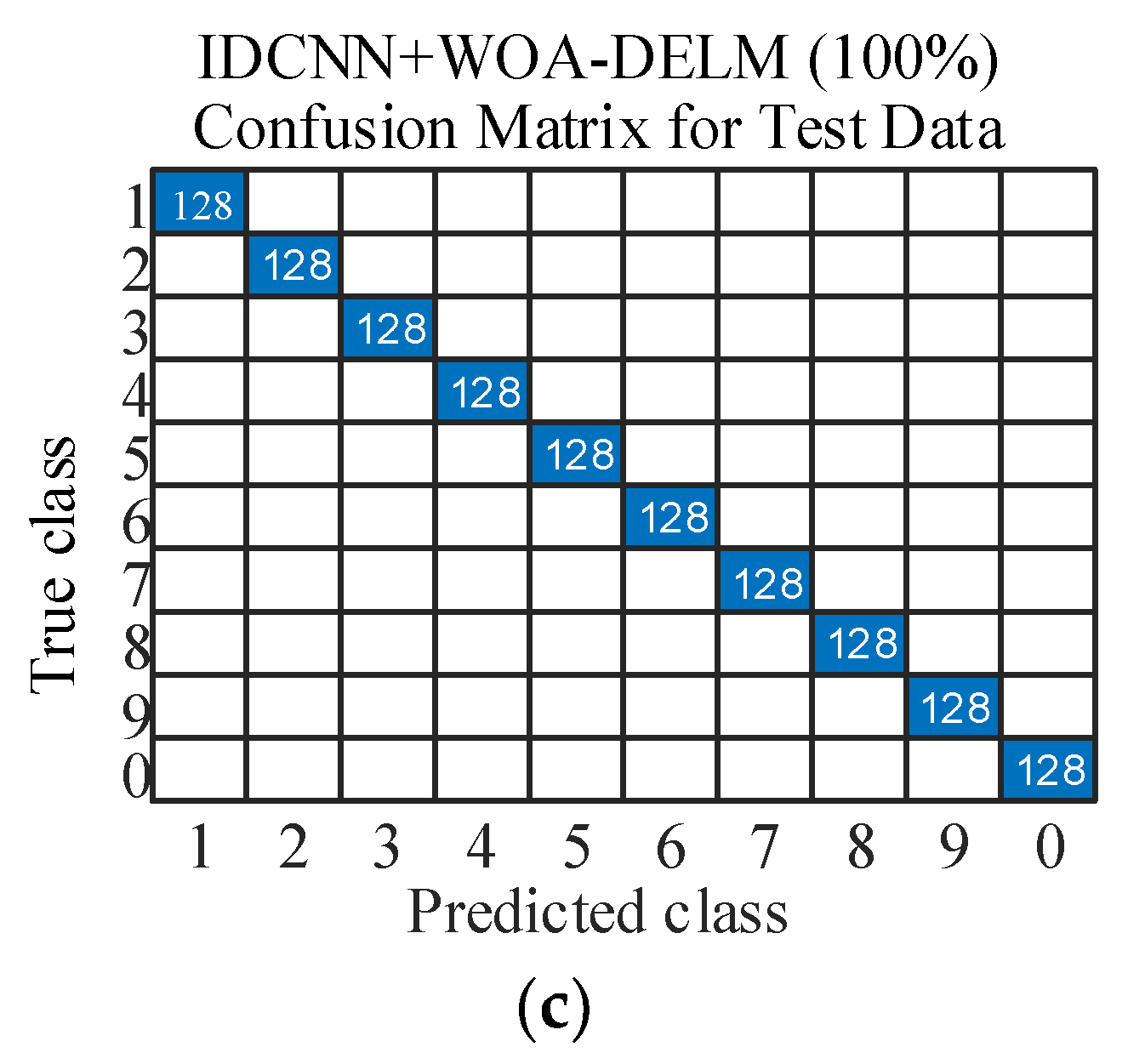

4.5. Analysis of Experiment 3

In Experiment 3, the data in Table 1 were used, with a total of 3000 training samples and 1280 testing samples. The other experimental conditions, parameter settings, and evaluation criteria are the same as in Experiment 1, and again we conducted 10 experiments to reduce random error. The fault-state identification result under the 1772 rpm/1 hp working condition is shown in Figure 16; the average recognition accuracy under this condition reached 100%.

To verify the effectiveness of the proposed method, 1D-DCNN, IDCNN, and the proposed method were each tested under the 1730 rpm/3 hp working condition.

Figure 17 shows the confusion matrices for one of the ten experiments. The accuracy of the proposed method reaches 100% under a constant working condition. In addition, the proposed method is more accurate than the IDCNN method, indicating that the WOA-DELM classifier contributes to the improved fault-type classification accuracy.

To verify the generalization of the proposed method, experiments were carried out under four working conditions, and the average accuracy and F1 Score over ten experiments were obtained. The results are shown in Table 10. The average accuracy and F1 Score of the proposed method reach 100% under three of the working conditions, which shows that the method identifies faults with high accuracy.

Table 11 shows the accuracy of the three methods in each of the ten experiments, together with their average accuracy, under different working conditions. The results show that the proposed method performs well under all four working conditions.

In Experiment 2, eight commonly used fault diagnosis methods were also compared with the proposed method. The training and testing sets were randomly selected from the corresponding data sets, and the accuracy was averaged over 10 experiments to reduce random error. The results are shown in Table 12. Under variable working conditions, the accuracy and F1 Score of the six methods are lower than those of the proposed method.