Multimodal Age and Gender Estimation for Adaptive Human-Robot Interaction: A Systematic Literature Review

Abstract

1. Introduction

1.1. Physical Biometrics

1.2. Chemical Biometrics

1.3. Behavioral Biometrics

2. Related Work

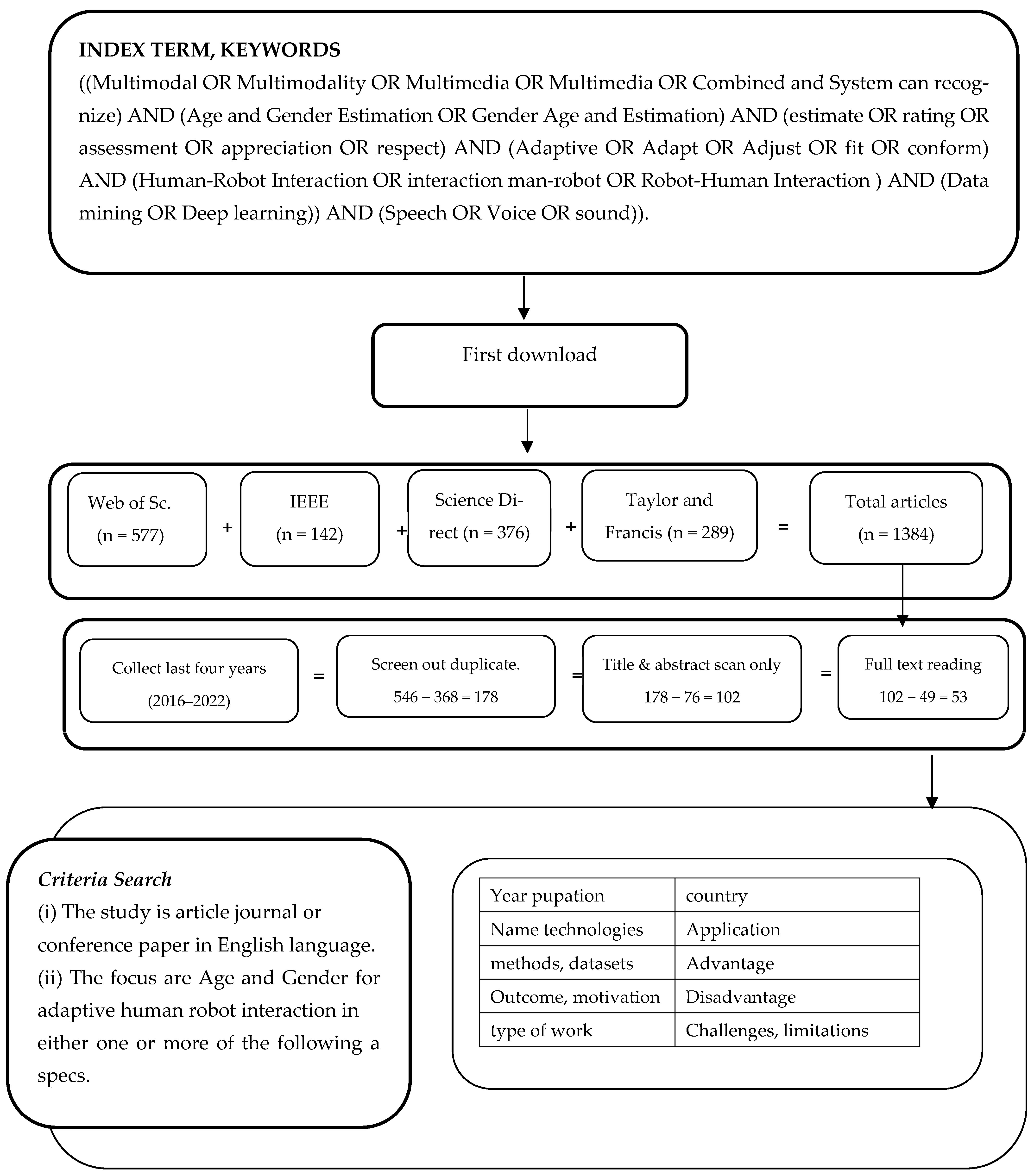

3. Method

- RQ1: What methods and data-analysis techniques are used for age estimation and gender recognition?

- RQ2: Do the studies rely on multimodal data? What kinds of data are used to estimate age and recognize gender, and do the studies rely on multimedia?

- RQ3: What challenges are reported, how can they be classified by type, and what methods have been proposed to overcome them?

- RQ4: What are the essential characteristics of the datasets used in the reviewed studies, and do these characteristics appear to affect the outcomes?

- RQ5: What difficulties do the studies identify in developing multimodal age and gender estimation for adaptive human-robot interaction?

3.1. Search Criteria (Eligibility Requirements)

3.2. Data Collection Process and Taxonomy

4. Results

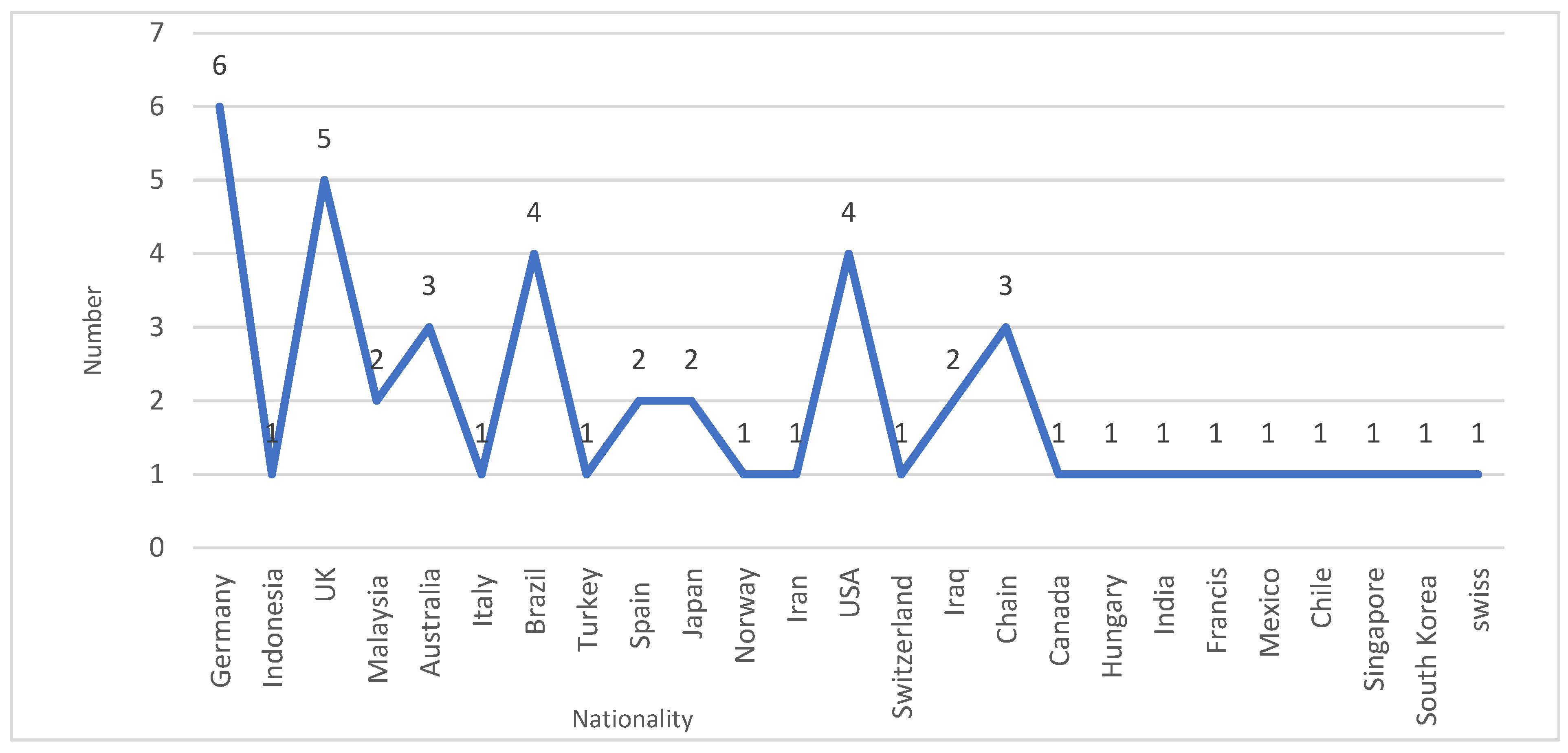

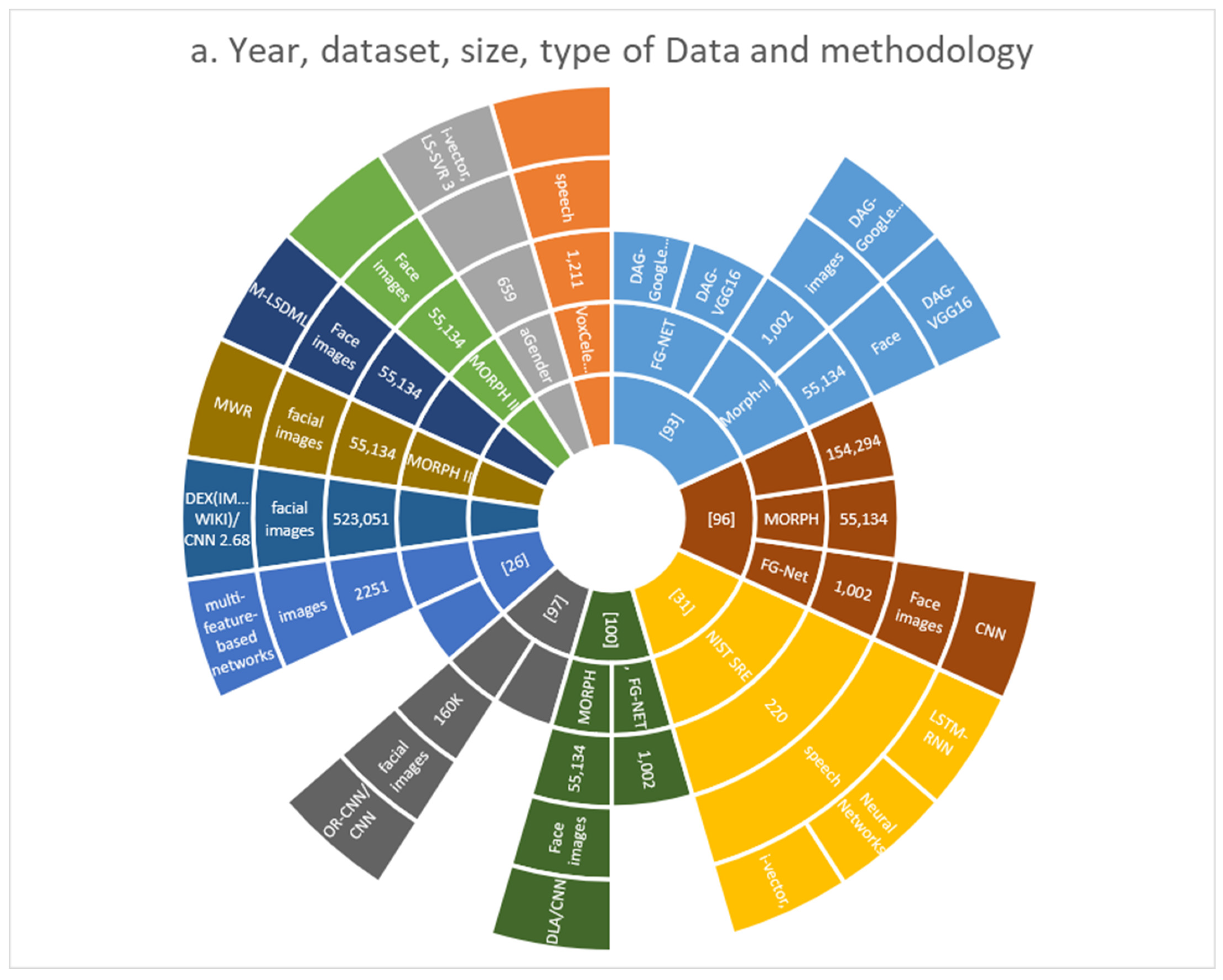

4.1. Distribution Outcomes

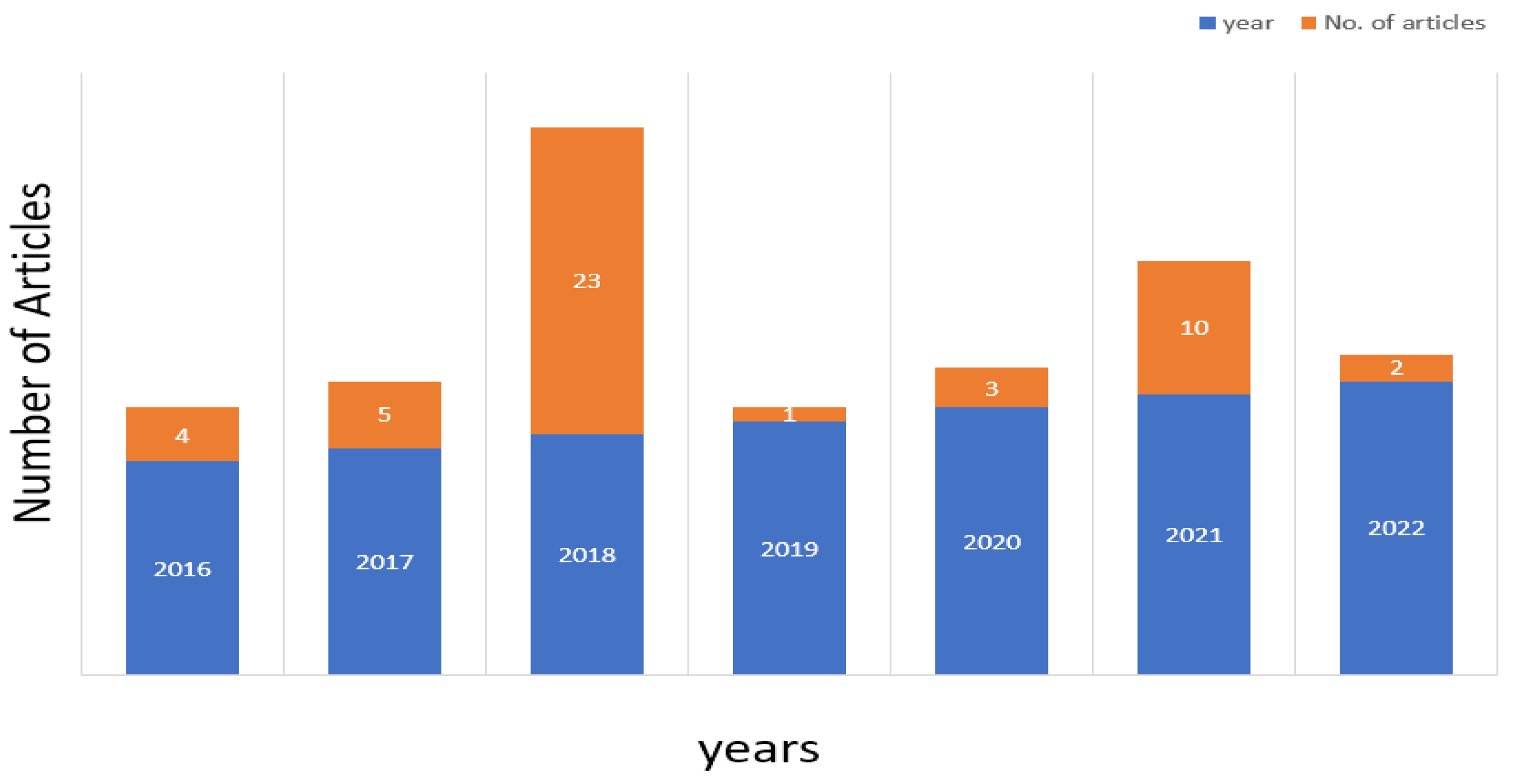

4.2. Distribution by Publication Year

4.3. Classification

4.4. Artificial Intelligence (AI)

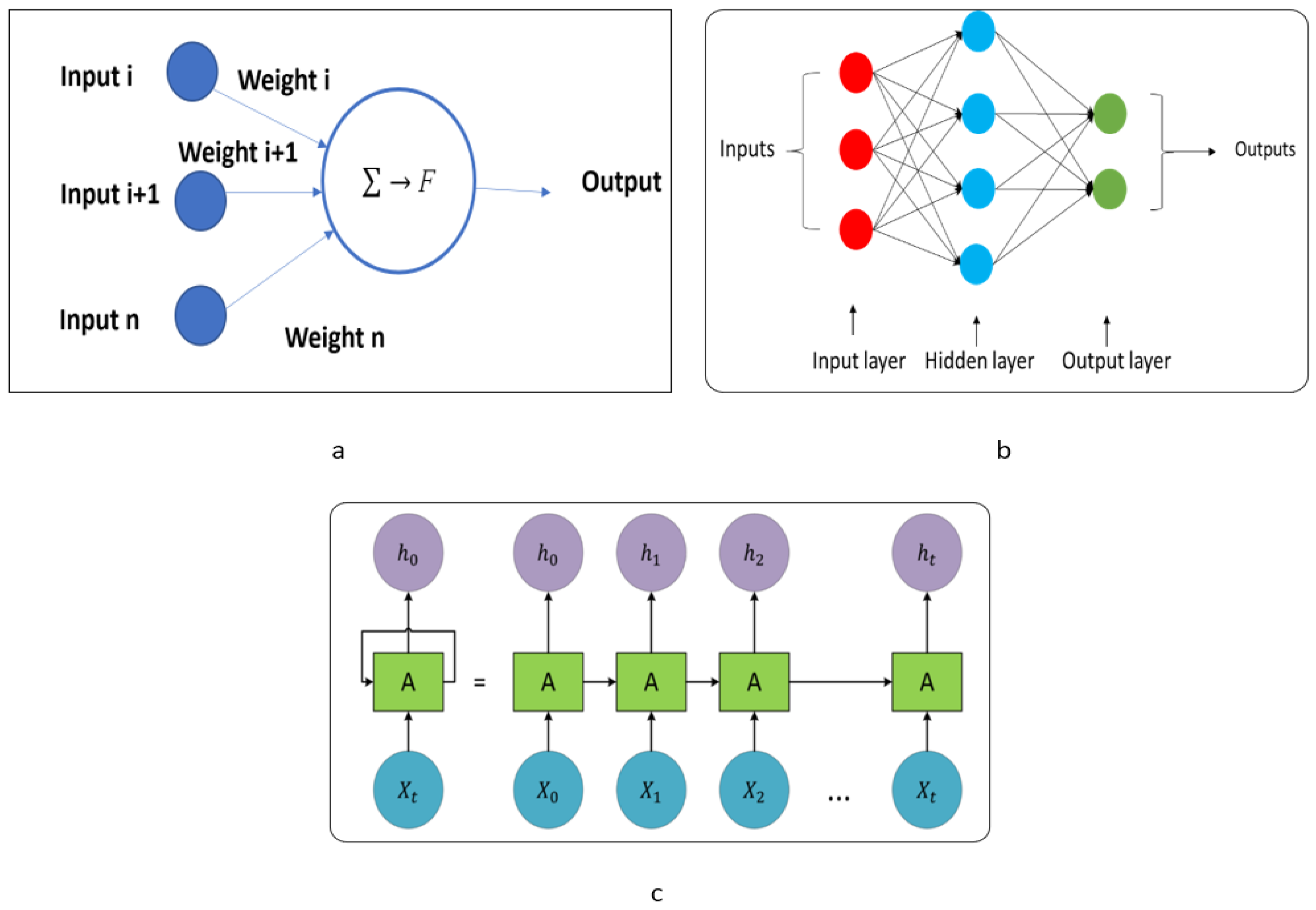

- A. Machine learning (ML)

- B. Deep learning (DL)

- C. Recurrent neural network (RNN)

- D. Long short-term memory (LSTM)
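The headings above list RNN and LSTM models without detail. As a minimal sketch of what separates the two, the NumPy code below runs one forward pass of a standard LSTM cell over a short sequence of feature frames (for example MFCC-like vectors). A plain RNN would update its state with a single h = tanh(W x_t + U h + b); the LSTM adds input, forget and output gates plus a separate cell state, which is what lets it retain information across longer utterances. The function name `lstm_forward`, the gate ordering in the stacked weight matrices, the sizes (5 frames, 13 features, 8 hidden units), the random untrained weights and the linear age head are all illustrative assumptions, not the configuration of any reviewed study.

```python
import numpy as np


def sigmoid(x):
    """Logistic function used by the LSTM gates."""
    return 1.0 / (1.0 + np.exp(-x))


def lstm_forward(x_seq, W, U, b, hidden_size):
    """Run a standard LSTM cell over x_seq (shape: T x input_size).

    W: (4*hidden, input), U: (4*hidden, hidden), b: (4*hidden,)
    Assumed gate order in the stacked matrices: input, forget, candidate, output.
    Returns all hidden states (T x hidden) and the final cell state.
    """
    h = np.zeros(hidden_size)
    c = np.zeros(hidden_size)
    states = []
    for x_t in x_seq:
        z = W @ x_t + U @ h + b                            # all gate pre-activations at once
        i = sigmoid(z[:hidden_size])                       # input gate
        f = sigmoid(z[hidden_size:2 * hidden_size])        # forget gate
        g = np.tanh(z[2 * hidden_size:3 * hidden_size])    # candidate cell content
        o = sigmoid(z[3 * hidden_size:])                   # output gate
        c = f * c + i * g        # keep part of the old memory, add new content
        h = o * np.tanh(c)       # exposed hidden state
        states.append(h)
    return np.stack(states), c


# Hypothetical sizes: 5 frames of 13 MFCC-like features, 8 hidden units
rng = np.random.default_rng(0)
T, input_size, hidden_size = 5, 13, 8
x_seq = rng.normal(size=(T, input_size))
W = rng.normal(scale=0.1, size=(4 * hidden_size, input_size))
U = rng.normal(scale=0.1, size=(4 * hidden_size, hidden_size))
b = np.zeros(4 * hidden_size)

hs, c_T = lstm_forward(x_seq, W, U, b, hidden_size)

# Hypothetical (untrained) linear head mapping the last hidden state to an age score
w_out = rng.normal(scale=0.1, size=hidden_size)
age_score = float(w_out @ hs[-1])
print(hs.shape)  # (5, 8)
```

In a trained system the weights would be learned by backpropagation through time and the head fitted against labelled ages; the sketch only shows the data flow through the gates.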

5. Evaluation Metrics

- A. Mean Absolute Error (MAE)

- B. Root Mean Square Error (RMSE)
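MAE and RMSE are the usual error measures for age regression, both expressed in years: MAE = (1/n) Σ |y_i - ŷ_i| and RMSE = sqrt((1/n) Σ (y_i - ŷ_i)²), where y_i is the true age and ŷ_i the predicted age. Because RMSE squares residuals before averaging, it penalizes occasional large errors more heavily than MAE, so RMSE ≥ MAE for any set of predictions. The short Python sketch below computes both; the ages are made-up illustrative values, not results from any reviewed study.

```python
import math


def mae(y_true, y_pred):
    """Mean absolute error: average of |true - predicted|."""
    if len(y_true) != len(y_pred) or not y_true:
        raise ValueError("inputs must be non-empty and of equal length")
    return sum(abs(t - p) for t, p in zip(y_true, y_pred)) / len(y_true)


def rmse(y_true, y_pred):
    """Root mean square error: square root of the mean squared residual."""
    if len(y_true) != len(y_pred) or not y_true:
        raise ValueError("inputs must be non-empty and of equal length")
    mse = sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true)
    return math.sqrt(mse)


# Illustrative (made-up) ages in years
true_ages = [25, 40, 63, 18]
pred_ages = [28, 38, 55, 18]
print(mae(true_ages, pred_ages))   # 3.25
print(rmse(true_ages, pred_ages))  # about 4.39
```

Here the single 8-year miss pulls RMSE noticeably above MAE, which is why reporting both gives a fuller picture of an estimator's error distribution.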

6. Challenges and Limitations

7. Dataset

- A. Group A Dataset

- B. Group B Dataset

8. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Abbreviations

| Definition | Abbreviation |
|---|---|
| Perceptual Evaluation of Speech Quality | PESQ |
| Segmental SNR | SSNR |
| Source-to-Distortion Ratio | SDR |
| Short-Time Objective Intelligibility | STOI |
| Perceptual Risk Optimization for Speech Enhancement | PROSE |
| Mean-Square Error | MSE |
| Weighted Euclidean Distortion | WE |
| Itakura–Saito Distortion | IS |
| Hyperbolic Cosine Distortion Measure | COSH |
| Itakura–Saito Distortion Between DCT Power Spectra | IS-II |
| Stabilized Wavelet Transform | SWT |
| Just Noticeable Difference | JND |
| Point of Subjective Equality | PSE |
| Long Short-Term Memory | LSTM |
| Stabilized Wavelet-Mellin Transform | SWMT |
| Deep Neural Network | DNN |
| Asian Face Age Dataset | AFAD |
| Random Auditory Stimulation and Deep-Learning-Based Age Estimation through Individual Perception | RaS-DeeP |
| Deep Expectation Convolutional Neural Network | DEX-CNN |
| Systematic Literature Review | SLR |
| Preferred Reporting Items for Systematic Reviews and Meta-Analyses | PRISMA |
| Artificial Intelligence | AI |
| Machine Learning | ML |
| Deep Learning | DL |
| Recurrent Neural Network | RNN |
| Artificial Neural Network | ANN |
| Audio Forensic Dataset | AFDS |
| Mel-Frequency Cepstral Coefficients | MFCC |
| Mean Absolute Error | MAE |
| Short-Time Fourier Transform | STFT |
| Gaussian Mixture Models | GMMs |
| Hidden Markov Model | HMM |
| Support Vector Machines | SVMs |
| Principal Component Analysis | PCA |
| Alzheimer’s Disease Neuroimaging Initiative | ADNI |
| Dallas Lifespan Brain Study | DLBS |
| 1000 Functional Connectomes Project | 1000FCP |
| Information eXtraction from Images | IXI |
| Neuroimaging Tools & Resources Collaboratory | NITRC |
| Open Access Series of Imaging Studies | OASIS |
| Parkinson’s Progression Markers Initiative | PPMI |
| Label-Sensitive Deep Metric Learning | LSDML |
| Moving Window Regression | MWR |
| Southwest University Adult Lifespan Dataset | SALD |
| Root Mean Square Error | RMSE |

References

- Badr, A.A.; Abdul-Hassan, A.K. Estimating Age in Short Utterances Based on Multi-Class Classification Approach. Comput. Mater. Contin. 2021, 68, 1713–1729. [Google Scholar] [CrossRef]

- Badr, A.A.; Abdul-Hassan, A.K. Age Estimation in Short Speech Utterances Based on Bidirectional Gated-Recurrent Neural Networks. Eng. Technol. J. 2021, 39, 129–140. [Google Scholar] [CrossRef]

- Minematsu, N.; Sekiguchi, M.; Hirose, K. Automatic estimation of one’s age with his/her speech based upon acoustic modeling techniques of speakers. In Proceedings of the 2002 IEEE International Conference on Acoustics, Speech, and Signal Processing, Orlando, FL, USA, 13–17 May 2002; Volume 1, pp. I-137–I-140. [Google Scholar]

- Badr, A.A.; Karim, A. Speaker gender identification in matched and mismatched conditions based on stacking ensemble method. J. Eng. Sci. Technol. 2022, 17, 1119–1134. [Google Scholar]

- Younis, H.; Mohamed, A.; Jamaludin, R.; Wahab, N. Survey of Robotics in Education, Taxonomy, Applications, and Platforms during COVID-19. Comput. Mater. Contin. 2021, 67, 687–707. [Google Scholar] [CrossRef]

- Younis, H.; Jamaludin, R.; Wahab, M.; Mohamed, A. The review of NAO robotics in Educational 2014–2020 in COVID-19 Virus (Pandemic Era): Technologies, type of application, advantage, disadvantage and motivation. IOP Conf. Ser. Mater. Sci. Eng. 2020, 928, 032014. [Google Scholar] [CrossRef]

- Younis, H.A.; Mohamed, A.; Ab Wahab, M.N.; Jamaludin, R.; Salisu, S. A new speech recognition model in a human-robot interaction scenario using NAO robot: Proposal and preliminary model. In Proceedings of the 2021 International Conference on Communication & Information Technology (ICICT), Basrah, Iraq, 5–6 June 2021; pp. 215–220. [Google Scholar] [CrossRef]

- Ma, Y.; Zhao, S.; Wang, W.; Li, Y.; King, I. Multimodality in meta-learning: A comprehensive survey. Knowl.-Based Syst. 2022, 250, 108976. [Google Scholar] [CrossRef]

- Lim, F.V.; Toh, W.; Nguyen, T.T.H. Multimodality in the English language classroom: A systematic review of literature. Linguist. Educ. 2022, 69, 101048. [Google Scholar] [CrossRef]

- Li, H.; Schrode, K.M.; Bee, M.A. Vocal sacs do not function in multimodal mate attraction under nocturnal illumination in Cope’s grey treefrog. Anim. Behav. 2022, 189, 127–146. [Google Scholar] [CrossRef]

- Shrestha, A.; Mahmood, A. Review of Deep Learning Algorithms and Architectures. IEEE Access 2019, 7, 53040–53065. [Google Scholar] [CrossRef]

- Song, Z.; Yang, X.; Xu, Z.; King, I. Graph-Based Semi-Supervised Learning: A Comprehensive Review. IEEE Trans. Neural Netw. Learn. Syst. 2022, 1–21. [Google Scholar] [CrossRef]

- Young, T.; Hazarika, D.; Poria, S.; Cambria, E. Recent Trends in Deep Learning Based Natural Language Processing. IEEE Comput. Intell. Mag. 2018, 13, 55–75. [Google Scholar] [CrossRef]

- Guo, Y.; Liu, Y.; Oerlemans, A.; Lao, S.; Wu, S.; Lew, M.S. Deep learning for visual understanding: A review. Neurocomputing 2016, 187, 27–48. [Google Scholar] [CrossRef]

- Asif, M.K.; Nambiar, P.; Ibrahim, N.; Al-Amery, S.M.; Khan, I.M. Three-dimensional image analysis of developing mandibular third molars apices for age estimation: A study using CBCT data enhanced with Mimics & 3-Matics software. Leg. Med. 2019, 39, 9–14. [Google Scholar] [CrossRef]

- Kim, Y.H.; Nam, S.H.; Hong, S.B.; Park, K.R. GRA-GAN: Generative adversarial network for image style transfer of Gender, Race, and age. Expert Syst. Appl. 2022, 198, 116792. [Google Scholar] [CrossRef]

- Guo, G.; Mu, G. A framework for joint estimation of age, gender and ethnicity on a large database. Image Vis. Comput. 2014, 32, 761–770. [Google Scholar] [CrossRef]

- Zhang, L.; Losin, E.A.R.; Ashar, Y.K.; Koban, L.; Wager, T.D. Gender Biases in Estimation of Others’ Pain. J. Pain 2021, 22, 1048–1059. [Google Scholar] [CrossRef]

- de Sousa, A.L.A.; da Silva, B.A.K.; Lopes, S.L.P.D.C.; Mendes, J.D.P.; Pinto, P.H.V.; Pinto, A.S.B. Estimation of gender and age through the angulation formed by the pterygoid processes of the sphenoid bone. Forensic Imaging 2022, 28, 200489. [Google Scholar] [CrossRef]

- Lee, S.H.; Hosseini, S.; Kwon, H.J.; Moon, J.; Koo, H.I.; Cho, N.I. Age and gender estimation using deep residual learning network. In Proceedings of the 2018 International Workshop on Advanced Image Technology (IWAIT), Chiang Mai, Thailand, 7–9 January 2018; pp. 1–3. [Google Scholar] [CrossRef]

- Puc, A.; Struc, V.; Grm, K. Analysis of Race and Gender Bias in Deep Age Estimation Models. In Proceedings of the 2020 28th European Signal Processing Conference (EUSIPCO), Amsterdam, The Netherlands, 18–21 January 2021; pp. 830–834. [Google Scholar] [CrossRef]

- Lee, S.S.; Kim, H.G.; Kim, K.; Ro, Y.M. Adversarial Spatial Frequency Domain Critic Learning for Age and Gender Classification. In Proceedings of the 2018 25th IEEE International Conference on Image Processing (ICIP), Athens, Greece, 7–10 October 2018; pp. 2032–2036. [Google Scholar] [CrossRef]

- Zhao, T.C.; Kuhl, P.K. Development of infants’ neural speech processing and its relation to later language skills: A MEG study. Neuroimage 2022, 256, 119242. [Google Scholar] [CrossRef]

- Tremblay, P.; Brisson, V.; Deschamps, I. Brain aging and speech perception: Effects of background noise and talker variability. Neuroimage 2020, 227, 117675. [Google Scholar] [CrossRef]

- Liu, X.; Beheshti, I.; Zheng, W.; Li, Y.; Li, S.; Zhao, Z.; Yao, Z.; Hu, B. Brain age estimation using multi-feature-based networks. Comput. Biol. Med. 2022, 143, 105285. [Google Scholar] [CrossRef]

- Zeng, J.; Peng, J.; Zhao, Y. Comparison of speech intelligibility of elderly aged 60–69 years and young adults in the noisy and reverberant environment. Appl. Acoust. 2019, 159, 107096. [Google Scholar] [CrossRef]

- Arya, R.; Singh, J.; Kumar, A. A survey of multidisciplinary domains contributing to affective computing. Comput. Sci. Rev. 2021, 40, 100399. [Google Scholar] [CrossRef]

- Maithri, M.; Raghavendra, U.; Gudigar, A.; Samanth, J.; Barua, P.D.; Murugappan, M.; Chakole, Y.; Acharya, U.R. Automated emotion recognition: Current trends and future perspectives. Comput. Methods Programs Biomed. 2022, 215, 106646. [Google Scholar] [CrossRef]

- Egger, M.; Ley, M.; Hanke, S. Emotion Recognition from Physiological Signal Analysis: A Review. Electron. Notes Theor. Comput. Sci. 2019, 343, 35–55. [Google Scholar] [CrossRef]

- Zazo, R.; Nidadavolu, P.S.; Chen, N.; Gonzalez-Rodriguez, J.; Dehak, N. Age Estimation in Short Speech Utterances Based on LSTM Recurrent Neural Networks. IEEE Access 2018, 6, 22524–22530. [Google Scholar] [CrossRef]

- Bakhshi, A.; Harimi, A.; Chalup, S. CyTex: Transforming speech to textured images for speech emotion recognition. Speech Commun. 2022, 139, 62–75. [Google Scholar] [CrossRef]

- Gustavsson, P.; Syberfeldt, A.; Brewster, R.; Wang, L. Human-robot Collaboration Demonstrator Combining Speech Recognition and Haptic Control. Procedia CIRP 2017, 63, 396–401. [Google Scholar] [CrossRef]

- Dimeas, F.; Aspragathos, N. Online Stability in Human-Robot Cooperation with Admittance Control. IEEE Trans. Haptics 2016, 9, 267–278. [Google Scholar] [CrossRef]

- Song, C.S.; Kim, Y.-K. The role of the human-robot interaction in consumers’ acceptance of humanoid retail service robots. J. Bus. Res. 2022, 146, 489–503. [Google Scholar] [CrossRef]

- Cui, Y.; Song, X.; Hu, Q.; Li, Y.; Sharma, P.; Khapre, S. Human-robot interaction in higher education for predicting student engagement. Comput. Electr. Eng. 2022, 99, 107827. [Google Scholar] [CrossRef]

- Zhang, Q.; Fang, L.; Zhang, Q.; Xiong, C. Simultaneous estimation of joint angle and interaction force towards sEMG-driven human-robot interaction during constrained tasks. Neurocomputing 2022, 484, 38–45. [Google Scholar] [CrossRef]

- Kim, H.; So, K.K.F.; Wirtz, J. Service robots: Applying social exchange theory to better understand human–robot interactions. Tour. Manag. 2022, 92, 104537. [Google Scholar] [CrossRef]

- Coronado, E.; Kiyokawa, T.; Ricardez, G.A.G.; Ramirez-Alpizar, I.G.; Venture, G.; Yamanobe, N. Evaluating quality in human-robot interaction: A systematic search and classification of performance and human-centered factors, measures and metrics towards an industry 5.0. J. Manuf. Syst. 2022, 63, 392–410. [Google Scholar] [CrossRef]

- Paliga, M.; Pollak, A. Development and validation of the fluency in human-robot interaction scale. A two-wave study on three perspectives of fluency. Int. J. Hum.-Comput. Stud. 2021, 155, 102698. [Google Scholar] [CrossRef]

- Lee, K.H.; Baek, S.G.; Lee, H.J.; Lee, S.H.; Koo, J.C. Real-time adaptive impedance compensator using simultaneous perturbation stochastic approximation for enhanced physical human–robot interaction transparency. Robot. Auton. Syst. 2022, 147, 103916. [Google Scholar] [CrossRef]

- Secil, S.; Ozkan, M. Minimum distance calculation using skeletal tracking for safe human-robot interaction. Robot. Comput. Manuf. 2022, 73, 102253. [Google Scholar] [CrossRef]

- Chen, J.; Ro, P.I. Human Intention-Oriented Variable Admittance Control with Power Envelope Regulation in Physical Human-Robot Interaction. Mechatronics 2022, 84, 102802. [Google Scholar] [CrossRef]

- Liu, H.; Fang, T.; Zhou, T.; Wang, Y.; Wang, L. Deep Learning-based Multimodal Control Interface for Human-Robot Collaboration. Procedia CIRP 2018, 72, 3–8. [Google Scholar] [CrossRef]

- Grasse, L.; Boutros, S.J.; Tata, M.S. Speech Interaction to Control a Hands-Free Delivery Robot for High-Risk Health Care Scenarios. Front. Robot. AI 2021, 8, 612750. [Google Scholar] [CrossRef]

- Dargan, S.; Kumar, M. A comprehensive survey on the biometric recognition systems based on physiological and behavioral modalities. Expert Syst. Appl. 2020, 143, 113114. [Google Scholar] [CrossRef]

- Cummins, N.; Baird, A.; Schuller, B.W. Speech analysis for health: Current state-of-the-art and the increasing impact of deep learning. Methods 2018, 151, 41–54. [Google Scholar] [CrossRef] [PubMed]

- Imani, M.; Montazer, G.A. A survey of emotion recognition methods with emphasis on E-Learning environments. J. Netw. Comput. Appl. 2019, 147, 102423. [Google Scholar] [CrossRef]

- Tapus, A.; Bandera, A.; Vazquez-Martin, R.; Calderita, L.V. Perceiving the person and their interactions with the others for social robotics–A review. Pattern Recognit. Lett. 2019, 118, 3–13. [Google Scholar] [CrossRef]

- Badr, A.; Abdul-Hassan, A. A Review on Voice-based Interface for Human-Robot Interaction. Iraqi J. Electr. Electron. Eng. 2020, 16, 1–12. [Google Scholar] [CrossRef]

- Akçay, M.B.; Oğuz, K. Speech emotion recognition: Emotional models, databases, features, preprocessing methods, supporting modalities, and classifiers. Speech Commun. 2020, 116, 56–76. [Google Scholar] [CrossRef]

- Berg, J.; Lu, S. Review of Interfaces for Industrial Human-Robot Interaction. Curr. Robot. Rep. 2020, 1, 27–34. [Google Scholar] [CrossRef]

- Shoumy, N.J.; Ang, L.-M.; Seng, K.P.; Rahaman, D.; Zia, T. Multimodal big data affective analytics: A comprehensive survey using text, audio, visual and physiological signals. J. Netw. Comput. Appl. 2019, 149, 102447. [Google Scholar] [CrossRef]

- Grossi, G.; Lanzarotti, R.; Napoletano, P.; Noceti, N.; Odone, F. Positive technology for elderly well-being: A review. Pattern Recognit. Lett. 2020, 137, 61–70. [Google Scholar] [CrossRef]

- Abdu, S.A.; Yousef, A.H.; Salem, A. Multimodal Video Sentiment Analysis Using Deep Learning Approaches, a Survey. Inf. Fusion 2021, 76, 204–226. [Google Scholar] [CrossRef]

- Fahad, S.; Ranjan, A.; Yadav, J.; Deepak, A. A survey of speech emotion recognition in natural environment. Digit. Signal Process. 2021, 110, 102951. [Google Scholar] [CrossRef]

- Bjørk, M.B.; Kvaal, S.I. CT and MR imaging used in age estimation: A systematic review. J. Forensic Odonto-Stomatol. 2018, 36, 14–25. [Google Scholar]

- Kofod-Petersen, A. How to do a structured literature review in computer science. Researchgate 2014, 1, 1–7. [Google Scholar]

- Veras, L.G.D.O.; Medeiros, F.L.L.; Guimaraes, L.N.F. Systematic Literature Review of Sampling Process in Rapidly-Exploring Random Trees. IEEE Access 2019, 7, 50933–50953. [Google Scholar] [CrossRef]

- Keele, S. Guidelines for Performing Systematic Literature Reviews in Software Engineering. Tech. Report, Ver. 2.3 EBSE Tech. Report. EBSE. 2007. Available online: https://www.elsevier.com/__data/promis_misc/525444systematicreviewsguide.pdf (accessed on 12 February 2023).

- Götz, S. Supporting systematic literature reviews in computer science: The systematic literature review toolkit. In Proceedings of the 21st ACM/IEEE International Conference on Model Driven Engineering Languages and Systems: Companion Proceedings (MODELS ’18), Copenhagen, Denmark, 14–19 October 2018; Association for Computing Machinery: New York, NY, USA; pp. 22–26. [CrossRef]

- Page, M.J.; McKenzie, J.E.; Bossuyt, P.M.; Boutron, I.; Hoffmann, T.C.; Mulrow, C.D.; Shamseer, L.; Tetzlaff, J.M.; Akl, E.A.; Brennan, S.E.; et al. The PRISMA 2020 Statement: An Updated Guideline for Reporting Systematic Reviews. BMJ 2021, 372, 105906. [Google Scholar] [CrossRef]

- Makridakis, S. The forthcoming Artificial Intelligence (AI) revolution: Its impact on society and firms. Futures 2017, 90, 46–60. [Google Scholar] [CrossRef]

- Lele, A.; Lele, A. Artificial intelligence (AI). Disruptive technologies for the militaries and security. In Disruptive Technologies for the Militaries and Security; Springer: Berlin/Heidelberg, Germany, 2019; Volume 132, pp. 139–154. [Google Scholar]

- Makridakis, S.; Spiliotis, E.; Assimakopoulos, V. The M4 Competition: Results, findings, conclusion and way forward. Int. J. Forecast. 2018, 34, 802–808. [Google Scholar] [CrossRef]

- Makridakis, S.; Spiliotis, E.; Assimakopoulos, V. The Accuracy of Machine Learning (ML) Forecasting Methods versus Statistical Ones: Extending the Results of the M3-Competition. In Working Paper, University of Nicosia; Institute for the Future: Palo Alto, CA, USA, 2017. [Google Scholar]

- Hayder, I.M.; Al Ali, G.A.N.; Younis, H.A. Predicting reaction based on customer’s transaction using machine learning approaches. Int. J. Electr. Comput. Eng. 2023, 13, 1086–1096. [Google Scholar]

- Wang, J.; Wang, J. Forecasting stochastic neural network based on financial empirical mode decomposition. Neural Netw. 2017, 90, 8–20. [Google Scholar] [CrossRef]

- Kock, A.B.; Teräsvirta, T. Forecasting Macroeconomic Variables Using Neural Network Models and Three Automated Model Selection Techniques. Econ. Rev. 2015, 35, 1753–1779. [Google Scholar] [CrossRef]

- McMahan, H.B.; Moore, E.; Ramage, D.; Hampson, S.; Agüera y Arcas, B. Federated Learning of Deep Networks using Model Averaging. arXiv 2016, arXiv:1602.05629. [Google Scholar]

- Goodfellow, I.; Bengio, Y.; Courville, A. Deep Learning; MIT Press: Cambridge, MA, USA, 2016. [Google Scholar]

- Le, X.H.; Ho, H.V.; Lee, G.; Jung, S. Application of Long Short-Term Memory (LSTM) Neural Network for Flood Forecasting. Water 2019, 11, 1387. [Google Scholar] [CrossRef]

- Hayder, I.M.; Al-Amiedy, T.A.; Ghaban, W.; Saeed, F.; Nasser, M.; Al-Ali, G.A.; Younis, H.A. An Intelligent Early Flood Forecasting and Prediction Leveraging Machine and Deep Learning Algorithms with Advanced Alert System. Processes 2023, 11, 481. [Google Scholar] [CrossRef]

- Zhao, J.; Huang, F.; Lv, J.; Duan, Y.; Qin, Z.; Li, G.; Tian, G. Do RNN and LSTM have long memory? In Proceedings of the 37th International Conference on Machine Learning, ICML, Vienna, Austria, 12–18 July 2020; pp. 11302–11312. [Google Scholar]

- Lim, S. Estimation of gender and age using CNN-based face recognition algorithm. Int. J. Adv. Smart Converg. 2020, 9, 203–211. [Google Scholar] [CrossRef]

- Lin, Y.; Hsieh, P.-J. Neural decoding of speech with semantic-based classification. Cortex 2022, 154, 231–240. [Google Scholar] [CrossRef] [PubMed]

- Jiao, D.; Watson, V.; Wong, S.G.-J.; Gnevsheva, K.; Nixon, J.S. Age estimation in foreign-accented speech by non-native speakers of English. Speech Commun. 2018, 106, 118–126. [Google Scholar] [CrossRef]

- Narendra, N.; Airaksinen, M.; Story, B.; Alku, P. Estimation of the glottal source from coded telephone speech using deep neural networks. Speech Commun. 2019, 106, 95–104. [Google Scholar] [CrossRef]

- Sadasivan, J.; Seelamantula, C.S.; Muraka, N.R. Speech Enhancement Using a Risk Estimation Approach. Speech Commun. 2020, 116, 12–29. [Google Scholar] [CrossRef]

- Matsui, T.; Irino, T.; Uemura, R.; Yamamoto, K.; Kawahara, H.; Patterson, R.D. Modelling speaker-size discrimination with voiced and unvoiced speech sounds based on the effect of spectral lift. Speech Commun. 2022, 136, 23–41. [Google Scholar] [CrossRef]

- Lileikyte, R.; Irvin, D.; Hansen, J.H. Assessing child communication engagement and statistical speech patterns for American English via speech recognition in naturalistic active learning spaces. Speech Commun. 2022, 140, 98–108. [Google Scholar] [CrossRef]

- Tang, Y. Glimpse-based estimation of speech intelligibility from speech-in-noise using artificial neural networks. Comput. Speech Lang. 2021, 69, 101220. [Google Scholar] [CrossRef]

- Cooke, M. A glimpsing model of speech perception in noise. J. Acoust. Soc. Am. 2006, 119, 1562–1573. [Google Scholar] [CrossRef] [PubMed]

- Cooke, M.; Mayo, C.; Valentini-Botinhao, C.; Stylianou, Y.; Sauert, B.; Tang, Y. Evaluating the intelligibility benefit of speech modifications in known noise conditions. Speech Commun. 2013, 55, 572–585. [Google Scholar] [CrossRef]

- Shahnawazuddin, S.; Adiga, N.; Kathania, H.K.; Pradhan, G.; Sinha, R. Studying the role of pitch-adaptive spectral estimation and speaking-rate normalization in automatic speech recognition. Digit. Signal Process. 2018, 79, 142–151. [Google Scholar] [CrossRef]

- Kalluri, S.B.; Vijayasenan, D.; Ganapathy, S. Automatic speaker profiling from short duration speech data. Speech Commun. 2020, 121, 16–28. [Google Scholar] [CrossRef]

- Avikal, S.; Sharma, K.; Barthwal, A.; Kumar, K.N.; Badhotiya, G.K. Estimation of age from speech using excitation source features. Mater. Today Proc. 2021, 46, 11046–11049. [Google Scholar] [CrossRef]

- Srivastava, R.; Pandey, D. Speech recognition using HMM and Soft Computing. Mater. Today Proc. 2022, 51, 1878–1883. [Google Scholar] [CrossRef]

- Narendra, N.P.; Alku, P. Automatic intelligibility assessment of dysarthric speech using glottal parameters. Speech Commun. 2020, 123, 1–9. [Google Scholar] [CrossRef]

- Ilyas, M.; Nait-Ali, A. Auditory perception vs. face based systems for human age estimation in unsupervised environments: From countermeasure to multimodality. Pattern Recognit. Lett. 2021, 142, 39–45. [Google Scholar] [CrossRef]

- Abirami, B.; Subashini, T.; Mahavaishnavi, V. Automatic age-group estimation from gait energy images. Mater. Today Proc. 2020, 33, 4646–4649. [Google Scholar] [CrossRef]

- Sethi, D.; Bharti, S.; Prakash, C. A comprehensive survey on gait analysis: History, parameters, approaches, pose estimation, and future work. Artif. Intell. Med. 2022, 129, 102314. [Google Scholar] [CrossRef]

- Lee, S.; Lee, J.; Moon, H.; Park, C.; Seo, J.; Eo, S.; Koo, S.; Lim, H. A Survey on Evaluation Metrics for Machine Translation. Mathematics 2023, 11, 1006. [Google Scholar] [CrossRef]

- Aafaq, N.; Mian, A.; Liu, W.; Gilani, S.Z.; Shah, M. Video description: A survey of methods, datasets, and evaluation metrics. ACM Comput. Surv. (CSUR). 2019, 52, 1–37. [Google Scholar] [CrossRef]

- Rao, K.S.; Manjunath, K.E. Speech Recognition Using Articulatory and Excitation Source Features; Springer International Publishing: Cham, Switzerland, 2017. [Google Scholar] [CrossRef]

- Grzybowska, J.; Kacprzak, S. Speaker Age Classification and Regression Using i-Vectors. In Proceedings of the INTERSPEECH 2016 Conference, San Francisco, CA, USA, 8–12 September 2016; pp. 1402–1406. [Google Scholar] [CrossRef]

- Taheri, S.; Toygar, Ö. On the use of DAG-CNN architecture for age estimation with multi-stage features fusion. Neurocomputing 2019, 329, 300–310. [Google Scholar] [CrossRef]

- Hiba, S.; Keller, Y. Hierarchical Attention-based Age Estimation and Bias Estimation. arXiv 2021, arXiv:2103.09882. [Google Scholar]

- Liu, H.; Lu, J.; Feng, J.; Zhou, J. Label-Sensitive Deep Metric Learning for Facial Age Estimation. IEEE Trans. Inf. Forensics Secur. 2018, 13, 292–305. [Google Scholar] [CrossRef]

- Hu, Z.; Wen, Y.; Wang, J.; Wang, M.; Hong, R.; Yan, S. Facial Age Estimation With Age Difference. IEEE Trans. Image Process. 2016, 26, 3087–3097. [Google Scholar] [CrossRef]

- Niu, Z.; Zhou, M.; Wang, L.; Gao, X.; Hua, G. Ordinal Regression with Multiple Output CNN for Age Estimation. In Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016; pp. 4920–4928. [Google Scholar] [CrossRef]

- Shin, N.-H.; Lee, S.-H.; Kim, C.-S. Moving Window Regression: A Novel Approach to Ordinal Regression. arXiv 2022, arXiv:2203.13122. [Google Scholar]

- Rothe, R.; Timofte, R.; Van Gool, L. Deep Expectation of Real and Apparent Age from a Single Image Without Facial Landmarks. Int. J. Comput. Vis. 2016, 126, 144–157. [Google Scholar] [CrossRef]

- Wang, X.; Guo, R.; Kambhamettu, C. Deeply-Learned Feature for Age Estimation. In Proceedings of the 2015 IEEE Winter Conference on Applications of Computer Vision, Waikoloa, HI, USA, 5–9 January 2015; pp. 534–541. [Google Scholar] [CrossRef]

- Duan, M.; Li, K.; Yang, C.; Li, K. A hybrid deep learning CNN–ELM for age and gender classification. Neurocomputing 2018, 275, 448–461. [Google Scholar] [CrossRef]

- Ng, C.-C.; Cheng, Y.-T.; Hsu, G.-S.; Yap, M.H. Multi-layer age regression for face age estimation. In Proceedings of the 2017 Fifteenth IAPR International Conference on Machine Vision Applications (MVA), Nagoya, Japan, 8–12 May 2017. [Google Scholar] [CrossRef]

- Antipov, G.; Baccouche, M.; Berrani, S.-A.; Dugelay, J.-L. Apparent Age Estimation from Face Images Combining General and Children-Specialized Deep Learning Models. In Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition Workshops (CVPRW), Las Vegas, NV, USA, 26 June–1 July 2016; pp. 801–809. [Google Scholar] [CrossRef]

- Kalluri, S.B.; Vijayasenan, D.; Ganapathy, S. A Deep Neural Network Based End to End Model for Joint Height and Age Estimation from Short Duration Speech. In Proceedings of the ICASSP 2019–2019 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Brighton, UK, 12–17 May 2019; pp. 6580–6584. [Google Scholar] [CrossRef]

- Singh, J.B.R.; Raj, B. Short-term analysis for estimating physical parameters of speakers. In Proceedings of the 2016 4th International Conference on Biometrics and Forensics (IWBF), Limassol, Cyprus, 3–4 March 2016; pp. 1–6. [Google Scholar]

- Garofolo, J.S.; Lamel, L.F.; Fisher, W.M.; Fiscus, J.G.; Pallett, D.S.; Dahlgren, N.L. TIMIT Acoustic-Phonetic Continuous Speech Corpus. Available online: https://doi.org/10.35111/17gk-bn40 (accessed on 11 January 2023). [CrossRef]

- Liu, Y.; Fung, P.; Yang, Y.; Cieri, C.; Huang, S.; Graff, D. HKUST/MTS: A Very Large Scale Mandarin Telephone Speech Corpus. In Lecture Notes in Computer Science; Springer: Berlin/Heidelberg, Germany, 2006; pp. 724–735. [Google Scholar] [CrossRef]

- Serda, M. Synteza i aktywność biologiczna nowych analogów tiosemikarbazonowych chelatorów żelaza [Synthesis and Biological Activity of New Thiosemicarbazone Analogues of Iron Chelators]. Uniw. Śląski 2013, 3, 343–354. [Google Scholar]

- Fung, D.G.P.; Huang, S. HKUST Mandarin Telephone Speech, Part 1-Linguistic Data Consortium. Available online: https://catalog.ldc.upenn.edu/LDC2005S15 (accessed on 20 June 2022).

- Group, N.M.I. 2008 NIST Speaker Recognition Evaluation Test Set-Linguistic Data Consortium. Available online: https://catalog.ldc.upenn.edu/LDC2011S08 (accessed on 20 June 2022).

- An, P.; Shenzhen, T. Towards speaker age estimation with label distribution learning. In Proceedings of the ICASSP 2022–2022 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Singapore, 23–27 May 2022; pp. 4618–4622. [Google Scholar]

- Ghahremani, P.; Nidadavolu, P.S.; Chen, N.; Villalba, J.; Povey, D.; Khudanpur, S.; Dehak, N. End-to-end Deep Neural Network Age Estimation. INTERSPEECH 2018, 2018, 277–281. [Google Scholar] [CrossRef]

- Kelly, F.; Drygajlo, A.; Harte, N. Speaker verification with long-term ageing data. In Proceedings of the 2012 5th IAPR International Conference on Biometrics (ICB), New Delhi, India, 29 March–1 April 2012; pp. 478–483. [Google Scholar] [CrossRef]

- Pantraki, E.; Kotropoulos, C. Multi-way regression for age prediction exploiting speech and face image information. In Proceedings of the 2017 25th European Signal Processing Conference (EUSIPCO), Kos, Greece, 28 August–2 September 2017; pp. 2196–2200. [Google Scholar] [CrossRef]

- Kelly, F.; Drygajlo, A.; Harte, N. Speaker verification in score-ageing-quality classification space. Comput. Speech Lang. 2013, 27, 1068–1084. [Google Scholar] [CrossRef]

- Itou, K.; Yamamoto, M.; Takeda, K.; Takezawa, T.; Matsuoka, T.; Kobayashi, T.; Shikano, K.; Itahashi, S. JNAS: Japanese speech corpus for large vocabulary continuous speech recognition research. Acoust. Sci. Technol. 1999, 20, 199–206. [Google Scholar] [CrossRef]

- Kobayashi, T. ASJ Continuous Speech Corpus. Jpn. Newsp. Artic. Sentences 1997, 48, 888–893. [Google Scholar]

- VoxCeleb. Available online: https://www.robots.ox.ac.uk/~vgg/data/voxceleb/ (accessed on 19 June 2022).

- Chung, J.S.; Nagrani, A.; Zisserman, A. VoxCeleb2: Deep Speaker Recognition. In Proceedings of the INTERSPEECH 2018, Hyderabad, India, 2–6 September 2018; pp. 1086–1090. [Google Scholar] [CrossRef]

- Nagrani, A.; Chung, J.S.; Zisserman, A.V. VoxCeleb: A large-scale speaker identification dataset. In Proceedings of the Interspeech, Stockholm, Sweden, 20–24 August 2017; pp. 2616–2620. [Google Scholar]

- Zhao, M.; Ma, Y.; Liu, M.; Xu, M. The SpeakIn System for VoxCeleb Speaker Recognition Challenge 2021. arXiv 2021, arXiv:2109.01989. [Google Scholar]

- Tawara, N.; Ogawa, A.; Kitagishi, Y.; Kamiyama, H. Age-VOX-Celeb: Multi-modal corpus for facial and speech estimation. In Proceedings of the ICASSP 2021–2021 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Toronto, ON, Canada, 6–11 June 2021; pp. 6963–6967. [Google Scholar]

- Bahari, M.H.; Van Hamme, H. Speaker age estimation using Hidden Markov Model weight supervectors. In Proceedings of the 2012 11th International Conference on Information Science, Signal Processing and their Applications (ISSPA), Montreal, QC, Canada, 2–5 July 2012; pp. 517–521. [Google Scholar] [CrossRef]

- van Leeuwen, D.A.; Kessens, J.; Sanders, E.; Heuvel, H.V.D. Results of the n-best 2008 dutch speech recognition evaluation. INTERSPEECH 2009, 2009, 2571–2574. [Google Scholar] [CrossRef]

- Spiegl, W.; Stemmer, G.; Lasarcyk, E.; Kolhatkar, V.; Cassidy, A.; Potard, B.; Shum, S.; Song, Y.C.; Xu, P.; Beyerlein, P.; et al. Analyzing features for automatic age estimation on cross-sectional data. In Proceedings of the Tenth Annual Conference of the International Speech Communication Association, Brighton, United Kingdom, 6–10 September 2009. [Google Scholar] [CrossRef]

- Harnsberger, J.D.; Brown, W.S.; Shrivastav, R.; Rothman, H. Noise and Tremor in the Perception of Vocal Aging in Males. J. Voice 2010, 24, 523–530. [Google Scholar] [CrossRef]

- Burkhardt, F.; Eckert, M.; Johannsen, W.; Stegmann, J. A database of age and gender annotated telephone speech. In Proceedings of the Seventh International Conference on Language Resources and Evaluation (LREC’10), Valletta, Malta, 17–23 May 2010; pp. 1562–1565. [Google Scholar]

- Keren, G.; Schuller, B. Convolutional RNN: An enhanced model for extracting features from sequential data. In Proceedings of the 2016 International Joint Conference on Neural Networks (IJCNN), Vancouver, BC, Canada, 24–29 July 2016; pp. 3412–3419. [Google Scholar] [CrossRef]

- Cao, Y.T.; Daumé, H., III. Toward Gender-Inclusive Coreference Resolution. In Proceedings of the 58th Annual Meeting of the Association for Computational Linguistics, Online, 5–10 July 2020; pp. 4568–4595.

- Cao, Y.T.; Daumé, H. Toward Gender-Inclusive Coreference Resolution: An Analysis of Gender and Bias Throughout the Machine Learning Lifecycle. Comput. Linguist. 2021, 47, 615–661.

- Bahari, M.H.; McLaren, M.; Van Hamme, H.; van Leeuwen, D.A. Speaker age estimation using i-vectors. Eng. Appl. Artif. Intell. 2014, 34, 99–108.

- Sadjadi, S.O. NIST SRE CTS Superset: A large-scale dataset for telephony speaker recognition. arXiv 2021, arXiv:2108.07118.

| Survey Paper | Year | Features | Research Design | Feature Selection | Deep Learning | Dataset | Speech Dependent | Text Dependent | Multimodal | Language | Protocol | Future Direction |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| [47] | 2018 | √ | √ | √ | √ | √ | √ | √ | √ | √ | √ | √ |
| [48] | 2019 | √ | √ | √ | √ |  |  |  |  |  |  |  |
| [49] | 2019 | √ | √ | √ | √ | √ |  |  |  |  |  |  |
| [6] | 2020 | √ | √ | √ | √ | √ |  |  |  |  |  |  |
| [50] | 2020 | √ | √ | √ | √ | √ | √ | √ |  |  |  |  |
| [51] | 2020 | √ | √ | √ | √ | √ | √ | √ |  |  |  |  |
| [52] | 2020 | √ | √ | √ |  |  |  |  |  |  |  |  |
| [53] | 2020 | √ | √ | √ | √ | √ | √ | √ | √ | √ |  |  |
| [54] | 2020 | √ | √ | √ | √ | √ |  |  |  |  |  |  |
| [5] | 2021 | √ | √ | √ | √ | √ |  |  |  |  |  |  |
| [55] | 2021 | √ | √ | √ | √ | √ |  |  |  |  |  |  |
| [56] | 2021 | √ | √ | √ | √ | √ | √ | √ | √ | √ |  |  |
| [57] | 2022 | √ | √ | √ | √ | √ | √ | √ |  |  |  |  |
| This Survey | 2022 | √ | √ | √ | √ | √ | √ | √ | √ | √ | √ |  |

| Original Words | Alternative Words |
|---|---|
| Multimodal | Multimodality, Multimedia, Multi-media, Efficiently manage, Combined gdp, System can recognize |
| Age and Gender Estimation | Age and Gender estimate, Age and Gender rating, Age and Gender assessment, Age and Gender appreciation, Age and Gender estimation, Age and Gender respect, Age appreciation and gender appreciation |
| Adaptive | Adapt, Adjust, Fit, Conform |
| Human-Robot Interaction, Robot-Human Interaction | Man-robot interaction, Interaction between man and robot, Interaction between human and robot |
| Speech | Voice, Sound |
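Search terms such as those above are commonly combined into a Boolean query by OR-ing the synonyms of each concept and AND-ing the concepts. The snippet below is a minimal sketch of that construction under this assumption only; the exact query strings submitted to each database are not given in the table, and the `CONCEPTS` subset and the helpers `or_group` and `build_query` are hypothetical.

```python
# Hypothetical sketch: synonyms within a concept are OR-ed, concepts are AND-ed.
# CONCEPTS holds a subset of the terms from the search-term table above.
CONCEPTS = {
    "Multimodal": ["Multimodal", "Multimodality", "Multimedia", "Multi-media"],
    "Age and Gender Estimation": [
        "Age and Gender Estimation",
        "Age and Gender estimate",
        "Age and Gender assessment",
    ],
    "Adaptive": ["Adaptive", "Adapt", "Adjust"],
    "Human-Robot Interaction": ["Human-Robot Interaction", "Robot-Human Interaction"],
    "Speech": ["Speech", "Voice", "Sound"],
}


def or_group(terms):
    """Quote each synonym so multi-word terms match as phrases, then OR them."""
    return "(" + " OR ".join(f'"{term}"' for term in terms) + ")"


def build_query(concepts):
    """AND together one OR-group per concept."""
    return " AND ".join(or_group(terms) for terms in concepts.values())


if __name__ == "__main__":
    print(build_query(CONCEPTS))
```

Quoting each term keeps multi-word synonyms such as "Age and Gender assessment" from being split into independent keywords by the search engine.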

| Ref. | Year | Model | Parameters | Type | Input | Output | Average Accuracy | Challenges |
|---|---|---|---|---|---|---|---|---|
| [75] | 2020 | CNN | Six hidden layers | Face recognition algorithm | GoogLeNet; 5328 images | Estimation | 85% (age), 98% (gender) | Finer-grained age classes are harder to classify |
| [76] | 2022 | Neural activity | Five categories; 300-dimensional semantic features | Speech | 119 words spoken in the MRI scanner; raw fMRI data | Estimation | 52% | Similarity of semantic representations in language perception and language production |
| [77] | 2018 | Hypotheses 1a and 1b | Effect of fundamental frequency (F0) and speech rate on age estimates | Speech | 50 participants; Arabic, Korean, and Mandarin | Estimation | 71% | Non-native speakers |
| [78] | 2019 | Deep neural networks | DNN-GIF, QCP, AMR, CP, IAIF, and CCD | Telephone speech | Windowing, interpolation, and overlap-add | Estimation | Not reported | Glottal flow estimation |
| [79] | 2020 | PROSE | PESQ, SNR, SSNR, SDR, STOI, MSE, WE, IS, COSH, IS-II | Speech | Clean speech; NOIZEUS and NOISEX-92 databases | Estimation | Not reported | Risk estimation; F16, white, street, and train noise |
| [80] | 2022 | Stabilized wavelet transform (SWT), auditory model, cross-correlation, SWM transform | Spectral; just noticeable difference (JND); point of subjective equality (PSE) | Speech | Japanese words (FW03); TANDEM-STRAIGHT | Estimation | Not reported | Weighting function |
| [81] | 2022 | DNN, LSTM | Hesitations, paranormal, filler words, word fragments, word repetitions | Speech | Text augmentation using adult data, web data, and RNN-generated text; 33 children; LENA recordings | Estimation, speech recognition | Not reported | Degree of spontaneity; incomplete sentences; ill-formed speech; vocal effort |
| [82] | 2021 | ANN, niHEGP | Artificial neural networks | Speech | 3 h; 2023 newspaper-style sentences; 300 sentences [83,84] | Estimation | 81% | Noise |
| [85] | 2018 | MF VTLN, SRN, MFCC | Speaking-rate normalization, zero-frequency filtering | Speech | TANDEM-STRAIGHT | Speech recognition | Not reported | Spectrogram; whether speaking-rate normalization (SRN) is highly effective |
| [86] | 2020 | GMM, MFCC, short-time Fourier transform (STFT) | Feature extraction, Mel filter bank features, UBM, RMSE, MAE | Speech, video | TIMIT dataset (630 speakers); Audio Forensic Dataset (AFDS) | Estimation | Not reported | Extraction of information; speaker characteristics |
| [87] | 2018 | GMMs, MFCC | LPCCs | Speech, video | 9 age groups | Classification | Not reported | Textually neutral Hindi words used to construct text prompts |
| [88] | 2020 | HMM, soft computing | (i) Rule inference engine; (ii) fuzzifier; (iii) defuzzifier | Speech | Speech signal | Speech recognition, classification | Not reported | Understanding the fuzzy HMM strategy and the speech signal processing domain |
| [89] | 2020 | Support vector machines (SVMs) | Glottal parameters: time-domain and frequency-domain parameters, PCA | Speech | Dysarthric speech database: 765 isolated word utterances (B1, B2, and B3), 255 words, 155 common words | Classification | 72.01% (test), 73.53% (validation) | Comparison of classification and intelligibility estimation tasks |
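The [79] row lists segmental SNR (SSNR) among its speech-quality measures. The sketch below implements the common frame-based definition: the SNR of each frame is computed in dB, clamped, and averaged over frames. The non-overlapping frames of `frame_len` samples and the [-10, 35] dB clamping range are conventional choices assumed here, not settings taken from [79], and `segmental_snr` is a hypothetical helper.

```python
import math


def segmental_snr(clean, processed, frame_len=256, floor_db=-10.0, ceil_db=35.0, eps=1e-10):
    """Mean of per-frame SNRs in dB, each clamped to [floor_db, ceil_db].

    Frames are non-overlapping blocks of frame_len samples; trailing
    samples that do not fill a whole frame are ignored.
    """
    if len(clean) != len(processed):
        raise ValueError("signals must have the same length")
    n_frames = len(clean) // frame_len
    if n_frames == 0:
        raise ValueError("signal is shorter than one frame")
    total = 0.0
    for m in range(n_frames):
        start = m * frame_len
        s = clean[start:start + frame_len]
        s_hat = processed[start:start + frame_len]
        signal_energy = sum(x * x for x in s)
        error_energy = sum((a - b) ** 2 for a, b in zip(s, s_hat))
        # eps avoids division by zero and log of zero for silent or perfect frames
        snr_db = 10.0 * math.log10((signal_energy + eps) / (error_energy + eps))
        total += min(max(snr_db, floor_db), ceil_db)
    return total / n_frames


if __name__ == "__main__":
    clean = [1.0] * 512
    print(segmental_snr(clean, [0.9] * 512))  # about 20 dB
```

Clamping keeps near-silent frames (very low SNR) and near-perfect frames (very high SNR) from dominating the average.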

| Ref. | Year | Dataset | Size | Type of Data | Methodology | MAE, Female (Years) | MAE, Male (Years) | MAE, Mixed (Years) |
|---|---|---|---|---|---|---|---|---|
| [26] | 2022 | ADNI, ADNI, DLBS, 1000FCP, IXI, NITRC, OASIS, PPMI, SALD | 2251 | Images | Multi-feature-based networks |  |  | 3.73 |
| [2] | 2021 | VoxCeleb1 | 1211 | Speech | Statistical functionals, LDA, G-RNN | 9.25 | 10.33 | 10.96 |
| [96] | 2016 | aGender | 659 | Telephone speech | i-vector, LS-SVR 3 | 9.77 | 10.63 |  |
| [31] | 2018 | NIST SRE | 220 | Speech | i-vector, neural networks, LSTM, RNN | 9.85; 9.56; 7.44; 6.97 | 10.82; 10.69; 8.29; 7.79 |  |
| [97] | 2019 | MORPH II; FG-NET | 55,134; 1002 | Face images | DAG-VGG16; DAG-GoogLeNet |  |  | 2.81; 2.83 |
| [98] | 2021 | MORPH II | 55,134 | Face images | Hierarchical attention-based age estimation |  |  | 2.53 |
| [99] | 2018 | MORPH (Album 2) | 55,134 | Face images | M-LSDML |  |  | 3.31 |
| [100] | 2017 | FG-NET; MORPH; Year-labeled | 1002; 55,134; 154,294 | Face images | CNN |  |  | 2.78 |
| [101] | 2016 | Asian Face Age Dataset (AFAD) | 160K | Facial images | OR-CNN/CNN |  |  | 3.27 |
| [102] | 2022 | MORPH II | 55,134 | Facial images | MWR |  |  | 2.00 |
| [103] | 2016 | IMDB-WIKI | 523,051 | Facial images | DEX (IMDB-WIKI)/CNN |  |  | 2.68 |
| [104] | 2015 | MORPH; FG-NET | 55,134; 1002 | Face images | DLA CNN |  |  | 4.77; 4.26 |
| [105] | 2018 | MORPH II | 55,134 | Facial images | CNN + ELM |  |  | 3.44 |
| [106] | 2017 | FG-NET; MORPH; FERET; PAL | 1002; 2000; 2366; 576 | Facial images | CNN |  |  | 5.39; 3.98; 3.00; 3.43 |
| [107] | 2016 | IMDB-WIKI | 500K | Face images | CNN |  |  | 2.99 |
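The MAE columns report the mean absolute difference, in years, between true and predicted ages, broken down by female speakers, male speakers, and both together. The sketch below computes all three from a single set of predictions on toy data; the `(gender, true_age, predicted_age)` record layout and the function names are assumptions for illustration, and individual studies may instead report the mixed value from a separate gender-independent model.

```python
from statistics import mean


def mae(y_true, y_pred):
    """Mean absolute error, in the same unit as the ages (years)."""
    if len(y_true) != len(y_pred) or not y_true:
        raise ValueError("need two non-empty sequences of equal length")
    return mean(abs(t - p) for t, p in zip(y_true, y_pred))


def mae_by_gender(records):
    """records: iterable of (gender, true_age, predicted_age) with gender 'F' or 'M'.

    Returns MAE for the female subset, the male subset, and all records
    pooled ("Mixed"); empty subsets are omitted from the result.
    """
    groups = {"Female": [], "Male": [], "Mixed": []}
    for gender, true_age, pred_age in records:
        if gender not in ("F", "M"):
            raise ValueError("gender must be 'F' or 'M'")
        key = "Female" if gender == "F" else "Male"
        groups[key].append((true_age, pred_age))
        groups["Mixed"].append((true_age, pred_age))
    return {
        name: mae([t for t, _ in pairs], [p for _, p in pairs])
        for name, pairs in groups.items()
        if pairs
    }


if __name__ == "__main__":
    toy = [("F", 30, 33), ("F", 40, 38), ("M", 25, 35), ("M", 60, 60)]
    print(mae_by_gender(toy))  # {'Female': 2.5, 'Male': 5, 'Mixed': 3.75}
```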

| Group | Number of Calls | Number of Hours | Females | Males |
|---|---|---|---|---|
| Training | 873 | 144.7 | 797 | 948 |
| Development | 24 | 3.9 | 24 | 24 |
| Total | 867 | 148.6 | 821 | 972 |

| Class No. | Age (Years) | Gender | Age Group |
|---|---|---|---|
| 1 | 7–14 | Male + Female | Children |
| 2 | 15–24 | Female | Young |
| 3 | 15–24 | Male | Young |
| 4 | 25–54 | Female | Adults |
| 5 | 25–54 | Male | Adults |
| 6 | 55–80 | Female | Seniors |
| 7 | 55–80 | Male | Seniors |
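The seven classes above can be expressed as a lookup from age and gender. A minimal sketch follows, assuming whole-year ages and the gender codes 'F' and 'M'; the function name is hypothetical, and classes 4 and 5 (25–54 years) are labelled "Adults" here.

```python
def age_gender_class(age, gender):
    """Map a whole-year age and gender ('F' or 'M') to (class_no, age_group)."""
    if gender not in ("F", "M"):
        raise ValueError("gender must be 'F' or 'M'")
    if 7 <= age <= 14:
        return 1, "Children"  # class 1 pools both genders
    if 15 <= age <= 24:
        return (2 if gender == "F" else 3), "Young"
    if 25 <= age <= 54:
        return (4 if gender == "F" else 5), "Adults"
    if 55 <= age <= 80:
        return (6 if gender == "F" else 7), "Seniors"
    raise ValueError("age outside the 7-80 range covered by the classes")


if __name__ == "__main__":
    print(age_gender_class(30, "F"))  # (4, 'Adults')
```

Because class 1 ignores gender, a classifier trained on these labels solves a joint age-and-gender task for adults but an age-only task for children.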

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Younis, H.A.; Ruhaiyem, N.I.R.; Badr, A.A.; Abdul-Hassan, A.K.; Alfadli, I.M.; Binjumah, W.M.; Altuwaijri, E.A.; Nasser, M. Multimodal Age and Gender Estimation for Adaptive Human-Robot Interaction: A Systematic Literature Review. Processes 2023, 11, 1488. https://doi.org/10.3390/pr11051488
