Real-Time Steel Surface Defect Detection with Improved Multi-Scale YOLO-v5

Abstract

1. Introduction

- We propose an improved multi-scale YOLO-v5 network for steel surface defect detection that achieves high detection accuracy and robust performance.

- We design a multi-scale (MS) block and a spatial attention mechanism for processing steel surface images. Together they extract defect information more effectively and improve the accuracy of the network.

- Experimental results show that the improved network achieves higher prediction accuracy than vanilla YOLO-v5 (mAP50 of 0.720, versus 0.669 for YOLO-v5s and 0.699 for YOLO-v5m) while still meeting the real-time speed requirement (5.2 ms, about 192 FPS).

2. Related Work

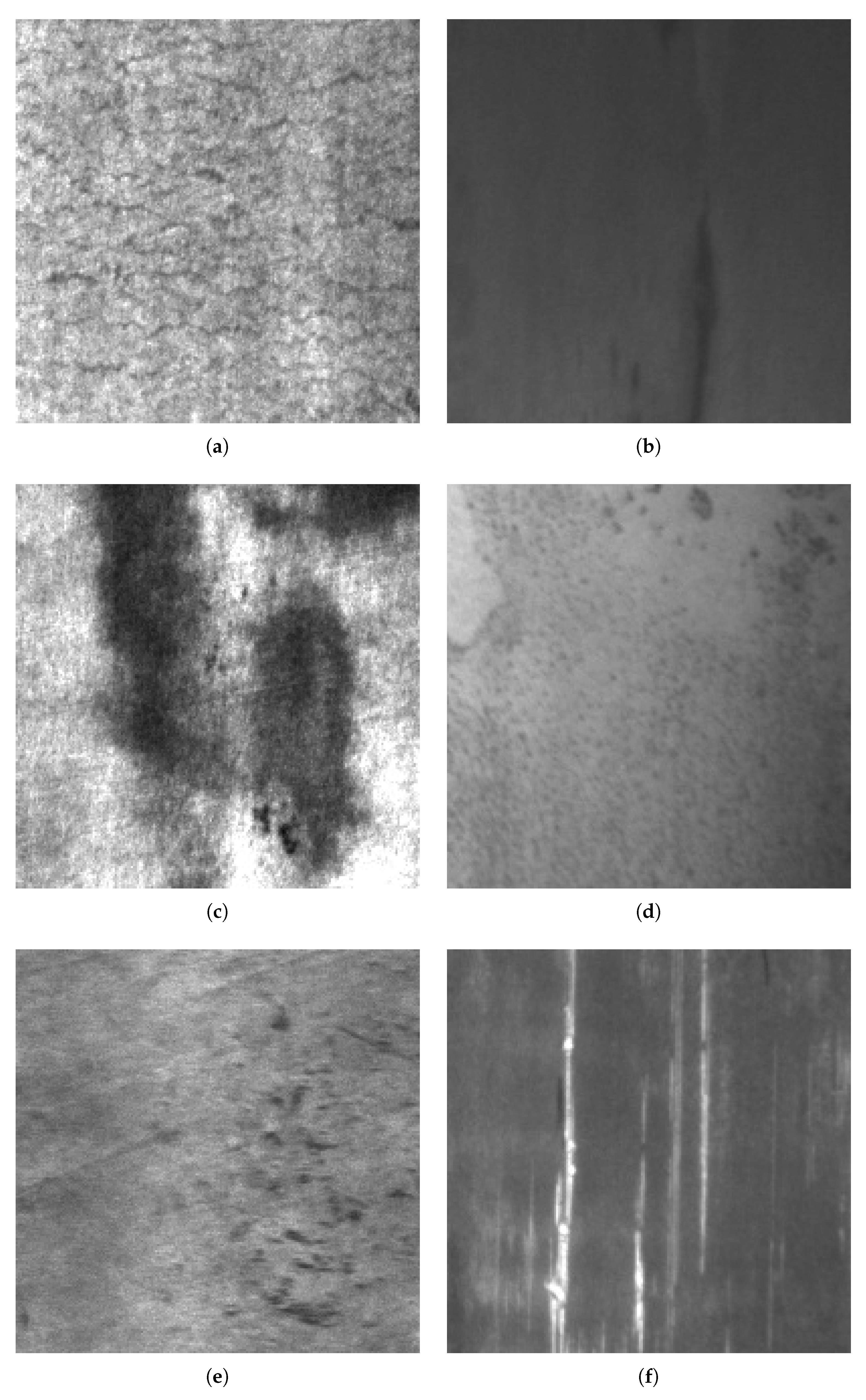

2.1. Steel Surface Defect Detection

2.2. Deep Learning for Classification and Object Detection

2.3. Deep Learning for Defect Detection

2.4. Attention Mechanism

3. Method

3.1. Network Design

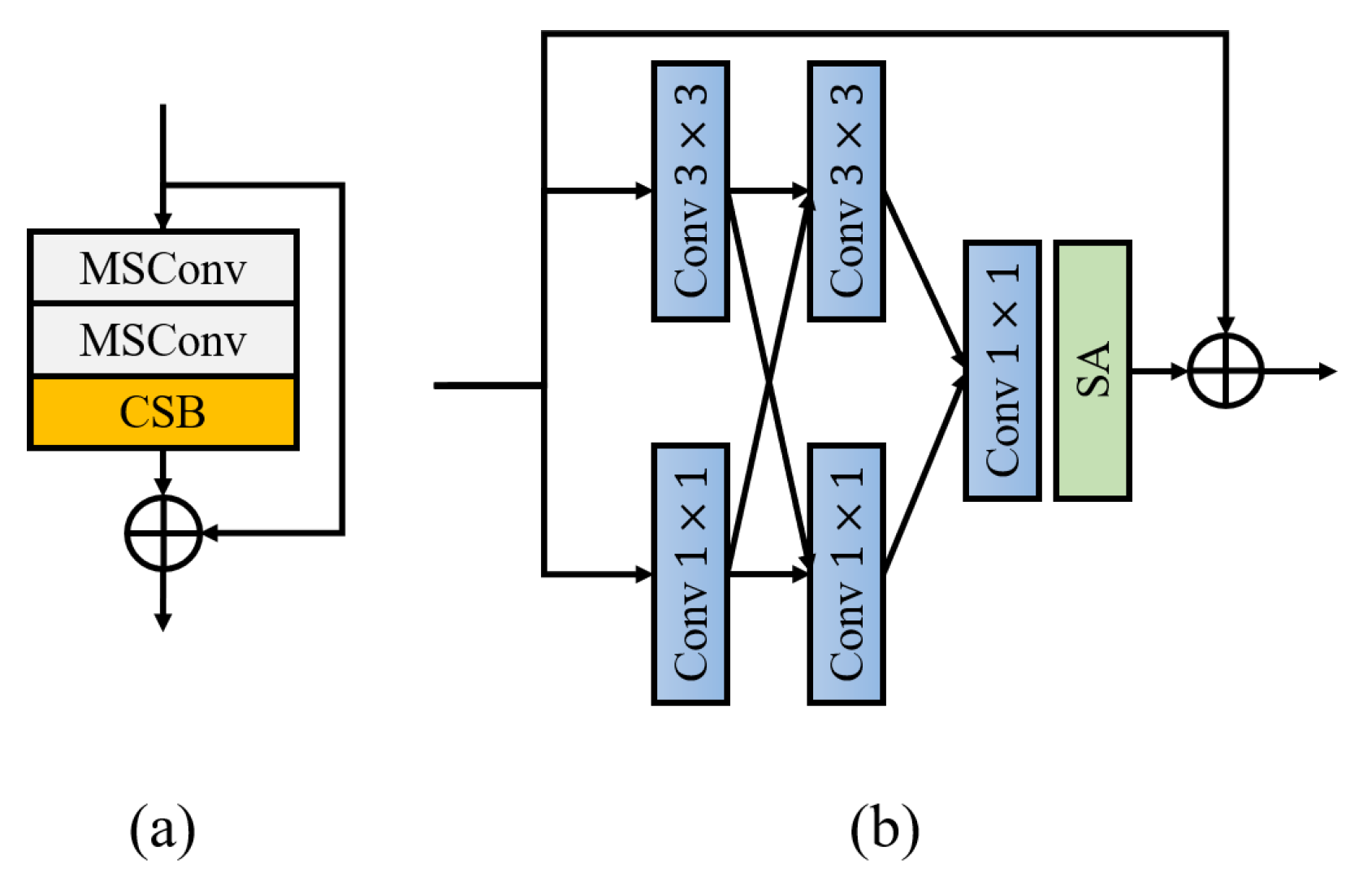

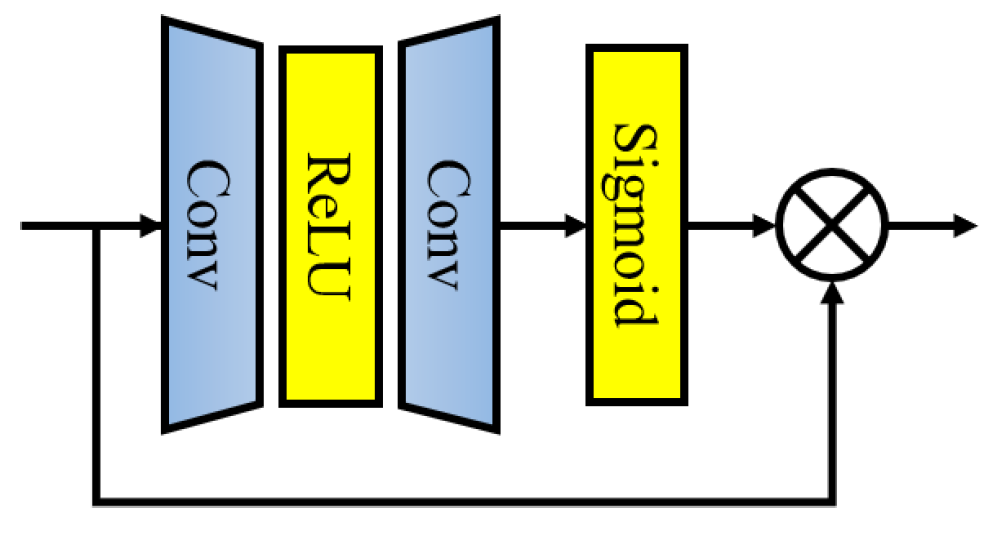

3.2. Design of the Multi-Scale Block and Spatial Attention
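
A common way to build a spatial attention gate is the CBAM formulation: pool the feature map across channels with both a mean and a max, run the two pooled maps through a small convolution, squash the result with a sigmoid, and multiply every channel by that map. The sketch below assumes this formulation, which may differ from the exact block used in this network. It works on nested Python lists so it runs without a deep-learning framework; the function name `spatial_attention` and the kernel values are hypothetical.

```python
import math


def spatial_attention(x, weight, bias=0.0):
    """CBAM-style spatial attention on a feature map x[c][h][w] (nested lists).

    weight[p][i][j] is a hypothetical k x k kernel over the two pooled maps
    (p = 0: channel mean, p = 1: channel max); k is assumed to be odd.
    Returns (reweighted feature map, attention map).
    """
    C, H, W = len(x), len(x[0]), len(x[0][0])
    k = len(weight[0])
    pad = k // 2
    # 1) Pool across channels at every spatial position.
    avg = [[sum(x[c][h][w] for c in range(C)) / C for w in range(W)] for h in range(H)]
    mx = [[max(x[c][h][w] for c in range(C)) for w in range(W)] for h in range(H)]
    pooled = (avg, mx)
    # 2) k x k convolution with zero padding, then a sigmoid -> map in (0, 1).
    att = [[0.0] * W for _ in range(H)]
    for h in range(H):
        for w in range(W):
            s = bias
            for p in range(2):
                for i in range(k):
                    for j in range(k):
                        hh, ww = h + i - pad, w + j - pad
                        if 0 <= hh < H and 0 <= ww < W:
                            s += weight[p][i][j] * pooled[p][hh][ww]
            att[h][w] = 1.0 / (1.0 + math.exp(-s))
    # 3) Reweight every channel with the same spatial map.
    out = [[[x[c][h][w] * att[h][w] for w in range(W)] for h in range(H)] for c in range(C)]
    return out, att


# Usage: a 2-channel 2x2 map and a hypothetical 3x3 kernel that only looks
# at the channel-max response at the centre position.
feat = [[[0.1, 0.9], [0.2, 0.4]], [[0.3, 0.7], [0.8, 0.0]]]
kernel = [[[0.0] * 3 for _ in range(3)] for _ in range(2)]
kernel[1][1][1] = 4.0
out, att = spatial_attention(feat, kernel, bias=-2.0)
print(att)
```

Because a single map multiplies all channels, this kind of gate changes *where* the network responds (for example, boosting pixels along a scratch), not *which* channels it uses; CBAM itself uses a 7 × 7 kernel.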

3.3. Implementation Details

4. Experiment

4.1. Settings

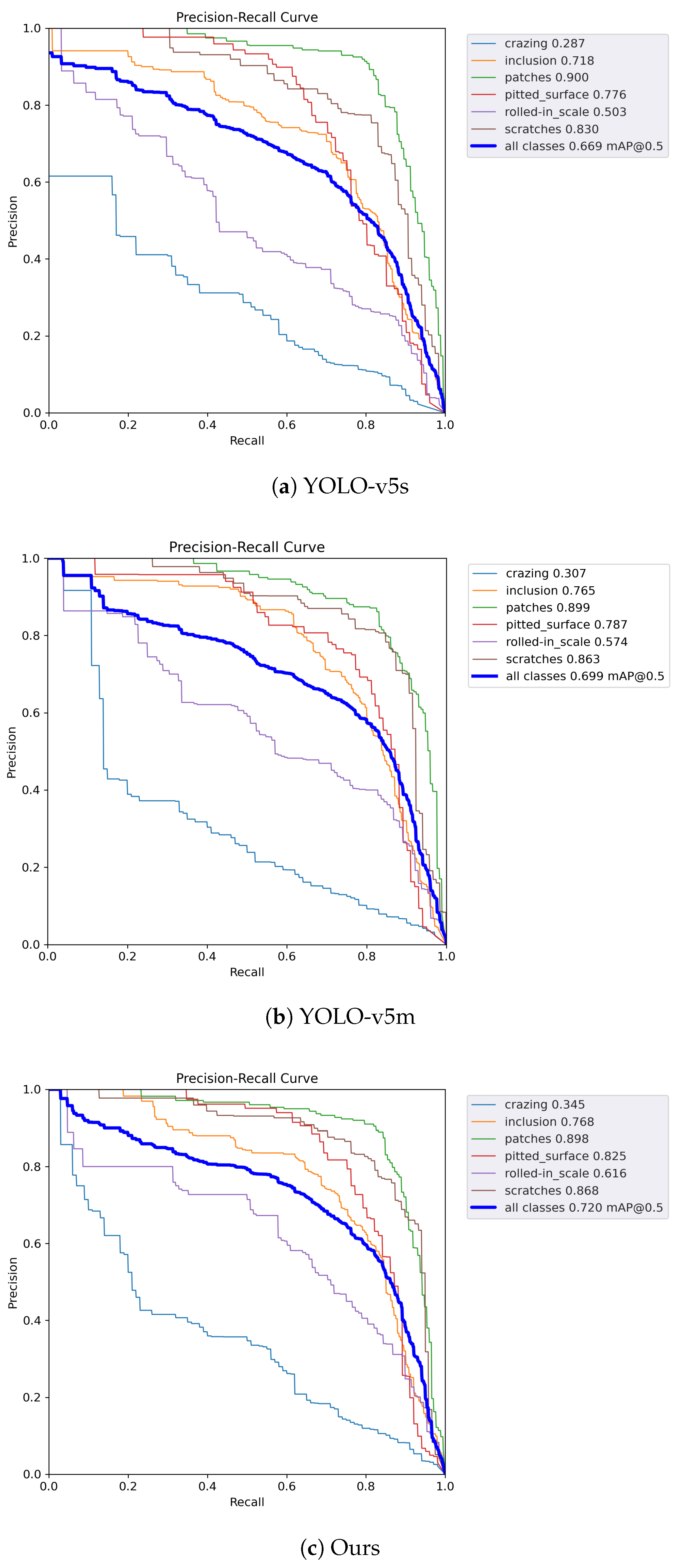

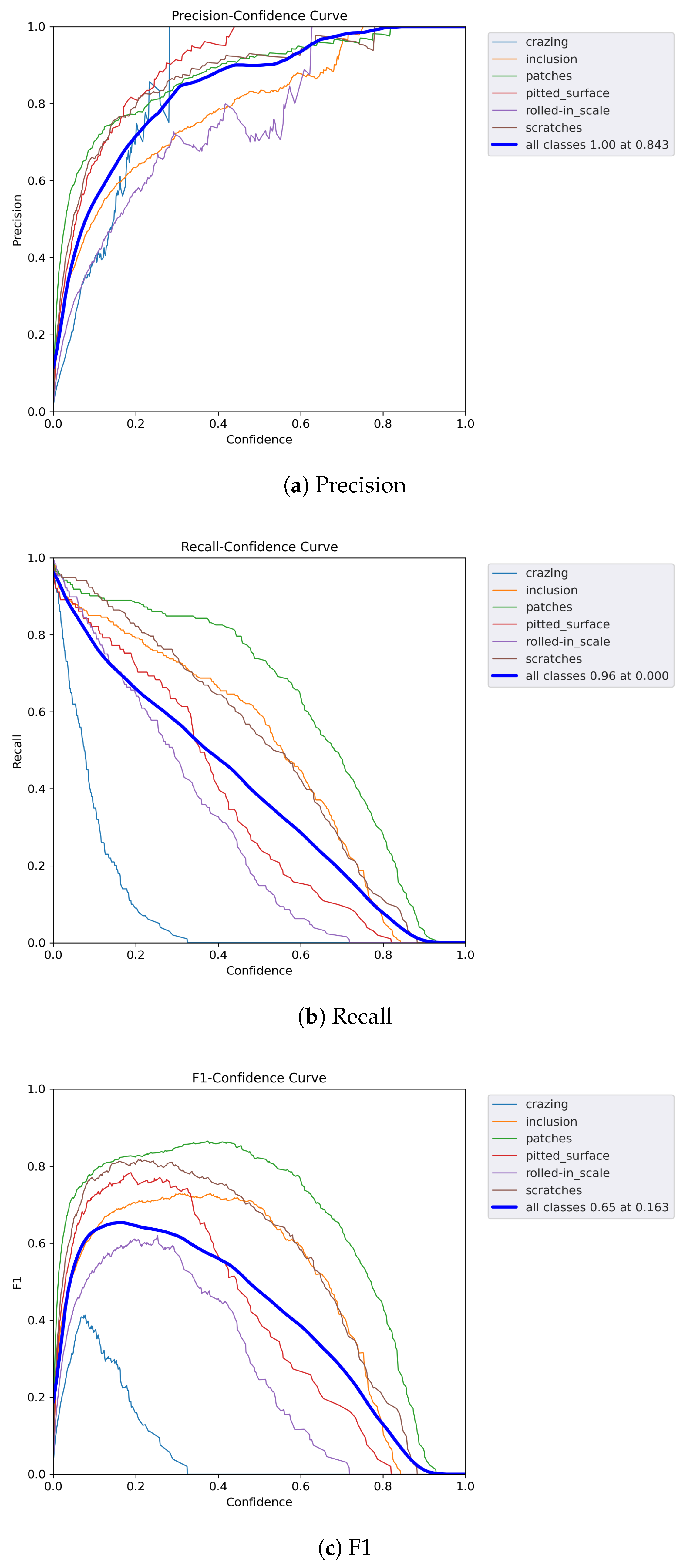

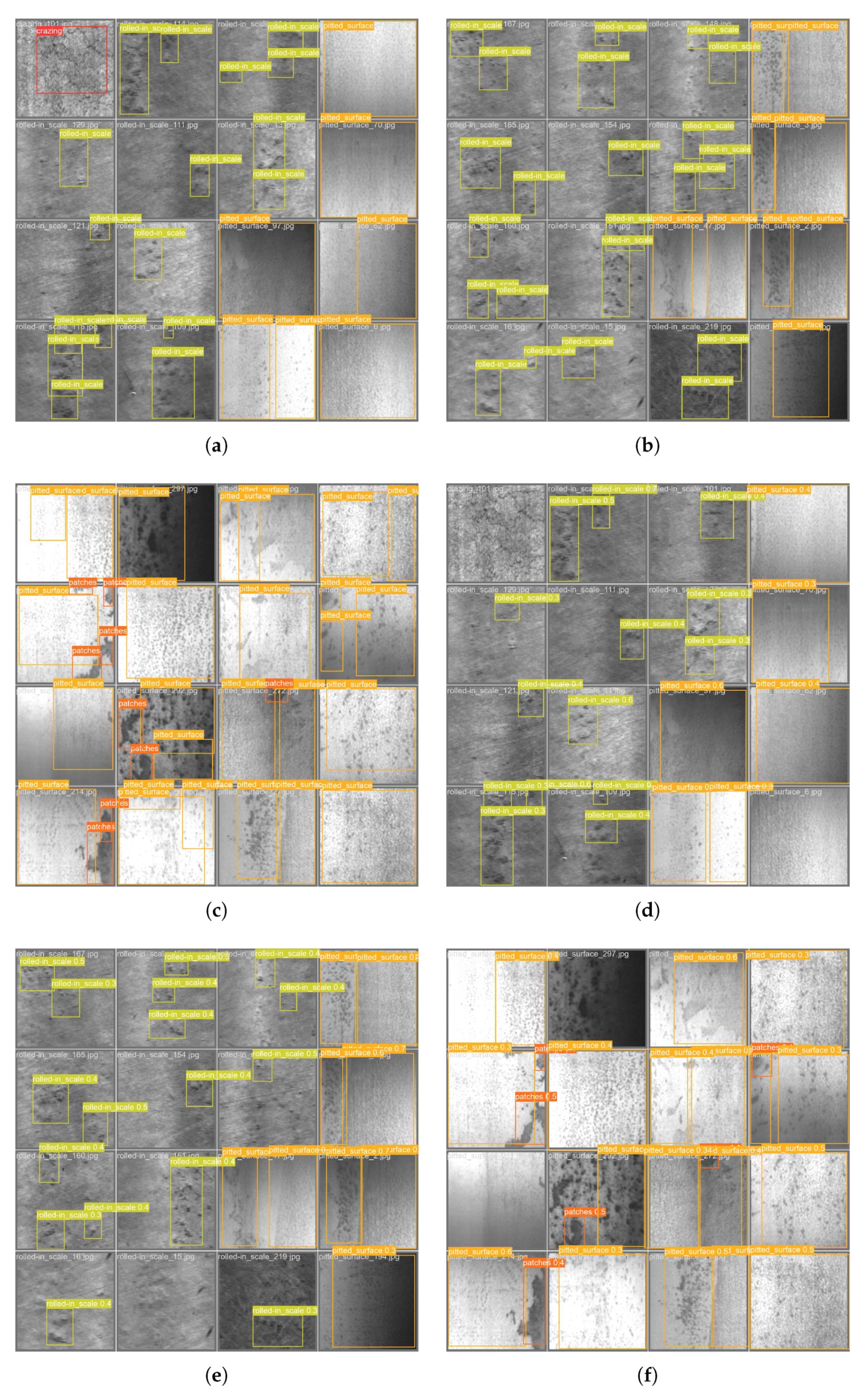

4.2. Results

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| Component | Index | Operation | Number | Channel | Scale |
|---|---|---|---|---|---|
| Backbone | 0 | CBS | 1 | 64 | 2 |
| | 1 | CBS | 1 | 128 | 2 |
| | 2 | MS | 3 | 128 | 1 |
| | 3 | CBS | 1 | 256 | 2 |
| | 4 | MS | 6 | 256 | 1 |
| | 5 | CBS | 1 | 512 | 2 |
| | 6 | MS | 9 | 512 | 1 |
| | 7 | CBS | 1 | 1024 | 2 |
| | 8 | MS | 3 | 1024 | 1 |
| | 9 | SPPF | 1 | 1024 | 1 |
| Head | 10 | CBS | 1 | 512 | 1 |
| | 11 | Bicubic | 1 | - | 2 |
| | 12 | MS | 3 | 512 | 1 |
| | 13 | CBS | 1 | 256 | 1 |
| | 14 | Bicubic | 1 | - | 2 |
| | 15 | MS | 3 | 256 | 1 |
| | 16 | CBS | 1 | 256 | 2 |
| | 17 | MS | 3 | 512 | 1 |
| | 18 | CBS | 1 | 512 | 2 |
| | 19 | MS | 3 | 1024 | 1 |
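
The architecture table lists layers in order but not the spatial resolution at each stage. The sketch below traces it under two assumptions: that "Scale" is a stride-2 downsampling factor for CBS layers and a ×2 upsampling factor for Bicubic layers, and that the input is 640 × 640 (YOLO-v5's default image size, not a value given in the table). Concatenation connections are not listed in the table and do not change the stride, so they are ignored. `LAYERS` and `trace_strides` are hypothetical names.

```python
# Layers transcribed from the architecture table: (index, operation, channels, scale).
# Assumption: scale 2 means a stride-2 downsampling for CBS and a x2 upsampling
# for Bicubic; scale 1 keeps the resolution. None marks the "-" channel entries.
LAYERS = [
    (0, "CBS", 64, 2), (1, "CBS", 128, 2), (2, "MS", 128, 1),
    (3, "CBS", 256, 2), (4, "MS", 256, 1), (5, "CBS", 512, 2),
    (6, "MS", 512, 1), (7, "CBS", 1024, 2), (8, "MS", 1024, 1),
    (9, "SPPF", 1024, 1), (10, "CBS", 512, 1), (11, "Bicubic", None, 2),
    (12, "MS", 512, 1), (13, "CBS", 256, 1), (14, "Bicubic", None, 2),
    (15, "MS", 256, 1), (16, "CBS", 256, 2), (17, "MS", 512, 1),
    (18, "CBS", 512, 2), (19, "MS", 1024, 1),
]


def trace_strides(layers, input_size=640):
    """Return {index: (stride, feature_map_side, channels)} for a square input."""
    stride, channels, out = 1, 3, {}
    for idx, op, ch, scale in layers:
        if op == "Bicubic":
            stride //= scale  # upsampling divides the cumulative stride
        else:
            stride *= scale  # scale 2 = stride-2 layer, scale 1 = unchanged
        if ch is not None:
            channels = ch  # Bicubic keeps the incoming channel count
        out[idx] = (stride, input_size // stride, channels)
    return out


info = trace_strides(LAYERS)
for idx in (15, 17, 19):  # assumed detection outputs: last MS block per scale
    stride, side, ch = info[idx]
    print(f"layer {idx}: stride {stride}, {side}x{side} map, {ch} channels")
```

Running it places layers 15, 17 and 19 at strides 8, 16 and 32 (80 × 80, 40 × 40 and 20 × 20 maps with 256, 512 and 1024 channels), the same three-scale layout YOLO-v5 uses for small, medium and large objects; reading these three layers as the detection outputs is an assumption.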

| Method | Parameters (M) | GFLOPs | Time Cost (ms) | FPS |
|---|---|---|---|---|
| YOLO-v5s | 7.02 | 15.8 | 1.8 | 555.56 |
| YOLO-v5m | 20.8 | 47.9 | 4.1 | 243.90 |
| Ours | 22.2 | 54.1 | 5.2 | 192.30 |
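
The FPS column follows from the per-frame latency, FPS = 1000 / time cost (ms). The short check below recomputes it from the table and also compares each model with a 30 FPS threshold; that threshold is an assumption (a common video frame rate), not a requirement stated in the paper.

```python
# Rows transcribed from the table: (method, params in M, GFLOPs, time cost in ms, reported FPS).
ROWS = [
    ("YOLO-v5s", 7.02, 15.8, 1.8, 555.56),
    ("YOLO-v5m", 20.8, 47.9, 4.1, 243.90),
    ("Ours", 22.2, 54.1, 5.2, 192.30),
]
REALTIME_FPS = 30.0  # hypothetical real-time threshold (assumption, not from the paper)


def fps_from_latency(ms):
    """Frames per second implied by a per-frame latency given in milliseconds."""
    return 1000.0 / ms


for name, params, gflops, ms, reported in ROWS:
    fps = fps_from_latency(ms)
    print(f"{name}: {fps:.2f} FPS (reported {reported:.2f}), real-time: {fps >= REALTIME_FPS}")

# Relative cost of the proposed network compared with YOLO-v5m.
_, p_m, g_m, t_m, _ = ROWS[1]
_, p_o, g_o, t_o, _ = ROWS[2]
print(f"params x{p_o / p_m:.3f}, GFLOPs x{g_o / g_m:.3f}, latency x{t_o / t_m:.3f}")
```

The recomputed values agree with the reported FPS to within 0.01, and the last line shows that, relative to YOLO-v5m, the proposed network uses about 7% more parameters, 13% more GFLOPs and 27% more time per frame.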

| Method | Indicator | Crazing | Inclusion | Patches | Pitted Surface | Rolled-in Scale | Scratches | All |
|---|---|---|---|---|---|---|---|---|
| YOLO-v5s | P | 0.433 | 0.606 | 0.802 | 0.712 | 0.469 | 0.753 | 0.633 |
| | R | 0.010 | 0.758 | 0.849 | 0.723 | 0.680 | 0.814 | 0.597 |
| | mAP50 | 0.287 | 0.718 | 0.900 | 0.776 | 0.574 | 0.830 | 0.669 |
| | mAP50-95 | 0.089 | 0.330 | 0.554 | 0.406 | 0.257 | 0.415 | 0.334 |
| YOLO-v5m | P | 0.454 | 0.536 | 0.735 | 0.754 | 0.489 | 0.714 | 0.610 |
| | R | 0.140 | 0.833 | 0.884 | 0.759 | 0.430 | 0.873 | 0.695 |
| | mAP50 | 0.307 | 0.765 | 0.899 | 0.787 | 0.503 | 0.863 | 0.699 |
| | mAP50-95 | 0.099 | 0.384 | 0.571 | 0.451 | 0.212 | 0.444 | 0.368 |
| YOLO-v7tiny | P | 1.000 | 0.538 | 0.751 | 0.628 | 0.384 | 0.624 | 0.654 |
| | R | 0.000 | 0.738 | 0.824 | 0.574 | 0.342 | 0.746 | 0.537 |
| | mAP50 | 0.168 | 0.659 | 0.835 | 0.626 | 0.332 | 0.713 | 0.555 |
| | mAP50-95 | 0.036 | 0.282 | 0.451 | 0.270 | 0.101 | 0.300 | 0.240 |
| Ours | P | 0.573 | 0.595 | 0.759 | 0.743 | 0.505 | 0.766 | 0.657 |
| | R | 0.180 | 0.819 | 0.890 | 0.772 | 0.703 | 0.864 | 0.705 |
| | mAP50 | 0.345 | 0.768 | 0.898 | 0.825 | 0.616 | 0.868 | 0.720 |
| | mAP50-95 | 0.114 | 0.373 | 0.576 | 0.451 | 0.277 | 0.440 | 0.372 |
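
The indicators in the results table are the standard detection metrics: precision P = TP / (TP + FP), recall R = TP / (TP + FN), mAP50 is average precision (AP) with a detection counted as correct when its intersection over union (IoU) with a ground-truth box is at least 0.5 (averaged over classes for mAP), and mAP50-95 averages AP over the IoU thresholds 0.50, 0.55, …, 0.95. The sketch below computes IoU, per-class AP and AP50-95, assuming COCO-style greedy matching and all-point interpolation of the precision-recall curve. YOLO-v5's own evaluation code uses an interpolated variant, so its numbers may differ slightly. All function names are hypothetical.

```python
def iou(a, b):
    """Intersection over union of two boxes given as (x1, y1, x2, y2)."""
    iw = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    ih = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = iw * ih
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def average_precision(detections, ground_truths, iou_thr):
    """AP for one class.

    detections: list of (image_id, score, box); ground_truths: {image_id: [box, ...]}.
    In descending score order, each detection is matched to the unmatched ground
    truth with the highest IoU (COCO-style); it is a TP only if that IoU >= iou_thr.
    """
    n_gt = sum(len(boxes) for boxes in ground_truths.values())
    if n_gt == 0:
        return 0.0
    used = {img: [False] * len(boxes) for img, boxes in ground_truths.items()}
    tp = fp = 0
    precisions, recalls = [], []
    for img, _score, box in sorted(detections, key=lambda d: d[1], reverse=True):
        best_iou, best_j = 0.0, -1
        for j, gt in enumerate(ground_truths.get(img, [])):
            overlap = iou(box, gt)
            if not used[img][j] and overlap > best_iou:
                best_iou, best_j = overlap, j
        if best_j >= 0 and best_iou >= iou_thr:
            used[img][best_j] = True
            tp += 1
        else:
            fp += 1
        precisions.append(tp / (tp + fp))
        recalls.append(tp / n_gt)
    # All-point interpolation: make precision non-increasing from right to left
    # (the precision envelope), then integrate it over recall.
    mrec = [0.0] + recalls + [1.0]
    mpre = [0.0] + precisions + [0.0]
    for i in range(len(mpre) - 2, -1, -1):
        mpre[i] = max(mpre[i], mpre[i + 1])
    return sum((mrec[i + 1] - mrec[i]) * mpre[i + 1] for i in range(len(mrec) - 1))


def ap_50_95(detections, ground_truths):
    """Mean AP over the ten IoU thresholds 0.50, 0.55, ..., 0.95."""
    thresholds = [round(0.5 + 0.05 * k, 2) for k in range(10)]
    return sum(average_precision(detections, ground_truths, t) for t in thresholds) / len(thresholds)


# Toy example: two images with one ground-truth box each.
gts = {"a": [(0, 0, 10, 10)], "b": [(0, 0, 10, 10)]}
dets = [("a", 0.9, (0, 0, 10, 10)), ("b", 0.8, (5, 0, 15, 10)), ("b", 0.7, (1, 0, 10, 9))]
print(f"AP50 = {average_precision(dets, gts, 0.5):.3f}")  # AP50 = 0.833
print(f"AP50-95 = {ap_50_95(dets, gts):.3f}")  # AP50-95 = 0.733
```

In the toy example the last detection overlaps its ground truth with IoU 0.81, so it counts as correct up to the 0.80 threshold and as a false positive from 0.85 upward. This is why AP50-95 is lower than AP50, and why every model in the table scores much lower on mAP50-95 than on mAP50: the stricter thresholds reward precise box localization.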

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Wang, L.; Liu, X.; Ma, J.; Su, W.; Li, H. Real-Time Steel Surface Defect Detection with Improved Multi-Scale YOLO-v5. Processes 2023, 11, 1357. https://doi.org/10.3390/pr11051357