1. Introduction

Demands on process safety continue to rise with the ever-increasing scale and complexity of the modern process industry. To meet these demands, process monitoring techniques were developed as a powerful tool to ensure the long-term stable operation of industrial systems through fault detection and diagnosis (FDD). FDD aims to detect abnormal process behaviors early and deliver the fault information to operators so that the impact of faults can be minimized [1]. Over the past decades, process monitoring has been well developed and is commonly divided into model-based, knowledge-based, and data-driven methods [2,3,4]. Because the real-time operation of plant-wide processes is much more complex than operation under ideal conditions, many random factors cannot be accounted for by model-based and knowledge-based methods, which challenges their application in industrial FDD systems. Given the widespread application of sensors and data transmission techniques, data-driven process monitoring methods have attracted increasing attention from both academia and industry over the past two decades [1,4,5,6,7].

Multivariate statistical analysis is one of the most commonly used techniques in data-driven process monitoring, where it is known as multivariate statistical process monitoring (MSPM). MSPM methods project the original data into a low-dimensional feature space and a residual space. Statistics are then employed as the dissimilarity measure in each subspace to determine a control limit for normal variations. The most classical methods include principal component analysis (PCA), partial least squares [8], canonical variate analysis [9], and independent component analysis [10], which are suited to monitoring multivariate linear processes. To handle nonlinear processes, numerous variants of these MSPM methods, such as kernel PCA [11], have been developed by mapping the original data into a higher-dimensional, linearly separable space. Further kernel-based MSPM methods for handling nonlinear characteristics are covered in Apsemidis’s review [12]. In addition, industrial processes exhibit obvious process dynamics, which are ignored by traditional methods. Ku proposed dynamic PCA to extract the autocorrelation of variables with an augmented matrix [13], but the time lag is selected in an ad hoc manner. As an alternative, the dynamic latent variable method was proposed to handle process dynamics, in which auto-regressive PCA and a vector autoregressive model are combined to extract autocorrelation as well as static cross-correlation [14]. Dong and Qin extended it with a dynamic inner PCA to capture the most dynamic variations in the data [15]. Further applications of dynamic latent variable models in process monitoring are covered in Zheng’s review paper [16]. Although MSPM methods and their variants have made great progress, multivariate statistical models may not be sufficient to extract complex data characteristics from processes with high nonlinearity and strong process dynamics.

More recently, deep learning methods, one of the most popular research topics, have been gradually applied to process monitoring. Deep learning methods employ multi-layer artificial neural networks (ANN) to extract features from data. The introduction of nonlinear activation functions enables an ANN to approximate complex nonlinear relationships. In this scope, the autoencoder, a special ANN that is trained to reproduce its input at its output, has been proven to be a more effective dimensionality reduction and reconstruction method than PCA [17]. Later, the autoencoder was applied to anomaly detection [18] and unsupervised fault detection [19]. To improve the performance of process monitoring, numerous extensions to the autoencoder have been developed. The stacked autoencoder has been widely applied to process monitoring because of the good performance of deep neural networks in feature extraction [20,21]. Yu and Zhao applied a denoising autoencoder for robust process monitoring [22]. The variational autoencoder was proposed by Kingma and Welling as a regularized autoencoder in which the distribution of latent variables is restricted to a normal distribution to prevent overfitting [23]. Based on the variational autoencoder, Cheng et al. constructed a recursive neural network instead of an ANN to extract process dynamics [24]. Zhang and Qiu proposed a dynamic-inner autoencoder, in which a vector autoregressive model is integrated into a convolutional autoencoder to capture process dynamics [25].

The aforementioned methods have been widely applied for process monitoring purposes, but most of them require a fully labeled training dataset [26], which is a major obstacle to their application in monitoring large-scale industrial processes [27]. A plant-wide process generally contains several operation units and complex automatic control systems, resulting in numerous process variables and complex correlations among them [28,29]. Moreover, there are multiple operating conditions that follow the adjustment of production loads [30,31], and certain variables also show nonstationary characteristics due to factors such as equipment aging [32]. Given these complex data characteristics, it is difficult to define the normal operating conditions and label fault-free samples from massive historical data to establish a long-term effective process monitoring model. Unlike simulation benchmarks such as the Tennessee Eastman process, for which the training data are already provided, the historical data of industrial processes contain many outliers that have to be labeled and excluded before training the process monitoring model. Labeling data manually is expensive due to the high labor and time costs [33]. Therefore, automatic data labeling with limited labeled samples from normal operating conditions has become an important research direction. This issue can be regarded as a positive-unlabeled learning problem [34]. Positive-unlabeled learning has already been applied to fault detection and classification tasks with only a few normal samples labeled [26,35,36,37]. The most important task in positive-unlabeled learning is to determine the distribution range of normal samples so that outliers in historical data can be labeled [38]. Euclidean distance is a commonly used similarity measure for multivariate sequences based on the distance between normal samples and fault samples [39,40]. Hu et al. applied KL-divergence to label fault samples from a large amount of historical data according to the distribution information of multivariate data [41]. However, the potential information contained in the data space structure of the unlabeled samples is not considered in data labeling by the above methods [38]. Semi-supervised deep learning was therefore introduced to deal with the process monitoring issue [42,43]. Qian et al. proposed a positive-unlabeled learning method based on a hybrid network, which contains a classifier, a feature extraction module, and a clustering layer. An optimization strategy is designed for these three modules to achieve promising fault detection performance using only a few labeled normal samples [26]. Zheng and Zhao proposed a three-step high-fidelity positive-unlabeled approach based on deep learning [35], in which a self-training stacked autoencoder is utilized for data labeling.

Although these methods have been applied to fault detection with limited data labels, most of them were applied to benchmark simulation processes, and several issues still limit their application in plant-wide industrial processes [44]. Unlike simulation processes, where most variables display relatively stable variation, the range of variable variations in industrial processes is much wider. There are frequent random disturbances during practical operation, and only a few key variables, which have a significant impact on product quality, are controlled within a small interval. Therefore, when multivariate sequence similarity measures are applied for data labeling, the distance between normal samples can be larger than that between normal samples and faults, which leads to a large control limit for normal variations. Fault samples could then be labeled as normal samples together with the normal disturbances in historical data, so that real faults are buried in these normal disturbances and are hard for the process monitoring method to detect in the online application. Moreover, semi-supervised deep learning models have a large number of trainable parameters. For a process with very few labeled normal samples, the number of initial training samples is not enough to build an effective deep learning model. As a result, the labels produced by the semi-supervised model are not reliable, which leads to poor generalization ability of the final process monitoring model.

To address these issues, an automatic selection strategy for modeling data is proposed and applied in the development of an industrial process monitoring framework. The main contributions of this work include: (1) An information entropy-based automatic data selection strategy is proposed to label normal samples and fault samples in historical data. It requires only a very small portion of the normal samples to be labeled; all other samples in the historical data, whether normal or faulty, are unlabeled. The proposed strategy labels samples through the dissimilarity measure between the distribution of key variables in labeled normal samples and that in unlabeled samples using information entropy within a sliding window. In this way, only abnormal behaviors that affect the key variables will be labeled as fault samples, while random disturbances that occur in other variables will be labeled as normal samples as long as the pre-defined process operation has not been impacted. Moreover, an accurate estimation of the distribution of variables in each sliding window can be obtained by information entropy with a proper window width. Therefore, unlike deep learning methods, the proposed strategy does not require a large number of training samples to achieve effective data labeling performance. (2) Based on the labeled samples from the proposed data selection strategy, a multi-layer autoencoder and contribution plots are established for fault detection and diagnosis, in which a model update strategy is proposed to handle the multimode issue. Generally, the multimode issue is addressed under the assumption that all possible modes of the process are available in historical data, an assumption that is hard to satisfy in industrial processes. Considering that mode switching mostly results from the adjustment of production loads, which can be easily identified by the contribution plots, a model update strategy is utilized in the proposed method to monitor multimode processes by adjusting the model parameters according to the fault diagnosis results. The proposed method can be applied to monitor multimode processes with only one mode given in historical data. (3) The proposed method is verified through an industrial application on a methanol-to-olefin facility, which contains both reaction and regeneration units with more than 150 process variables. Given only 1440 labeled normal samples, the proposed automatic data selection strategy achieves correct data labeling for a large unlabeled historical dataset. The process monitoring model is then established and successfully tested through about three months (120,000 samples) of real-time application. Details on common procedures of industrial process monitoring systems, including data preprocessing, offline modeling, and real-time monitoring, are also provided to demonstrate the generalization and replicability of the proposed method.

The remainder of this paper is organized as follows: the preliminaries of the proposed method are introduced in Section 2. The proposed automatic selection strategy for modeling data and the procedures of the proposed industrial process monitoring framework are introduced in Section 3. An industrial application of the proposed method on a methanol-to-olefin unit of a real chemical plant is presented in Section 4. The conclusions are drawn in Section 5.

3. Automatic Selection Strategy for Modeling Data and Process Monitoring Method

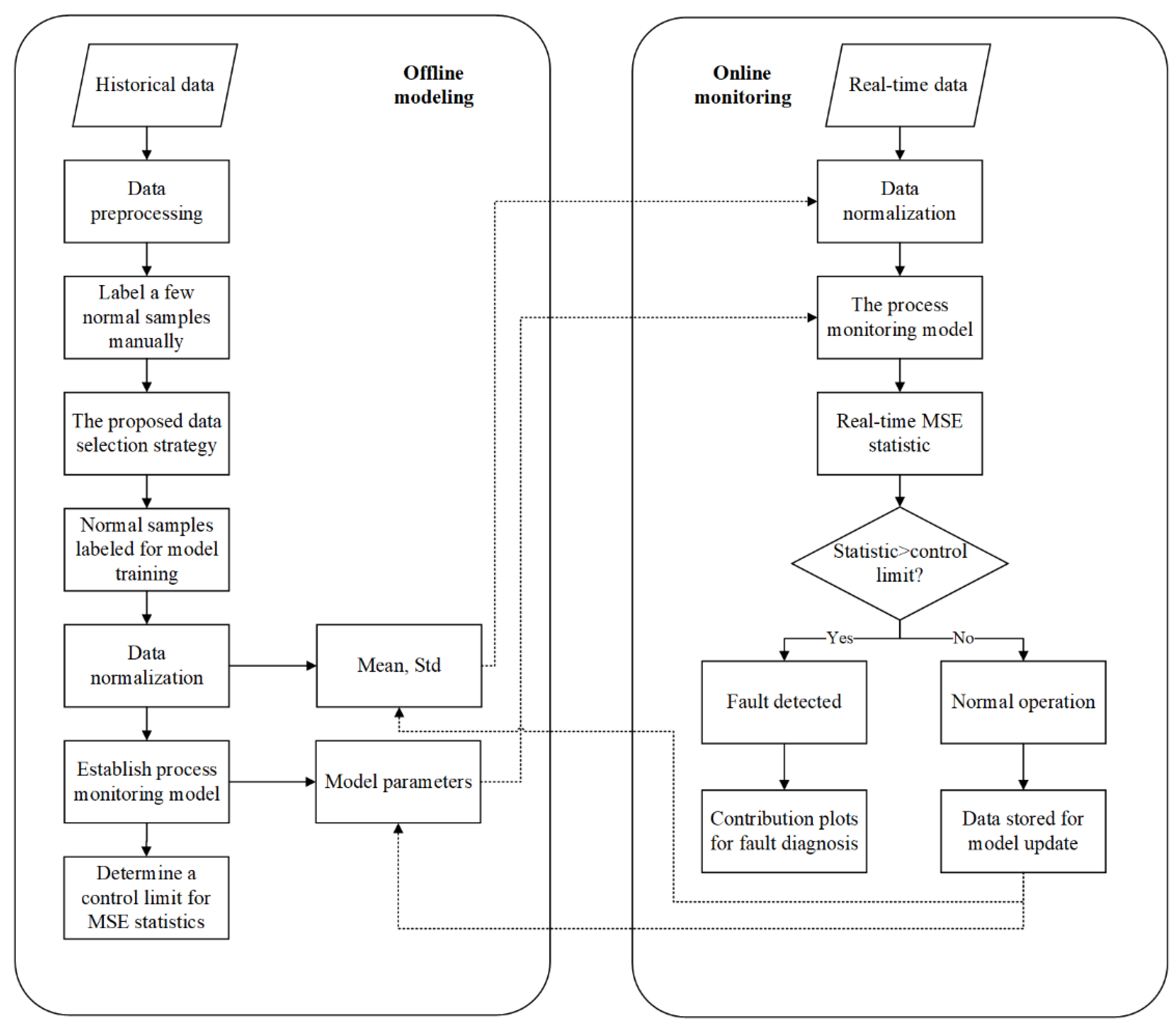

The proposed process monitoring framework can be divided into offline modeling and online monitoring, as shown in the flowchart in Figure 2. Details of each part are introduced in this section.

3.1. Information Entropy-Based Data Labeling Strategy with Few Labeled Normal Samples

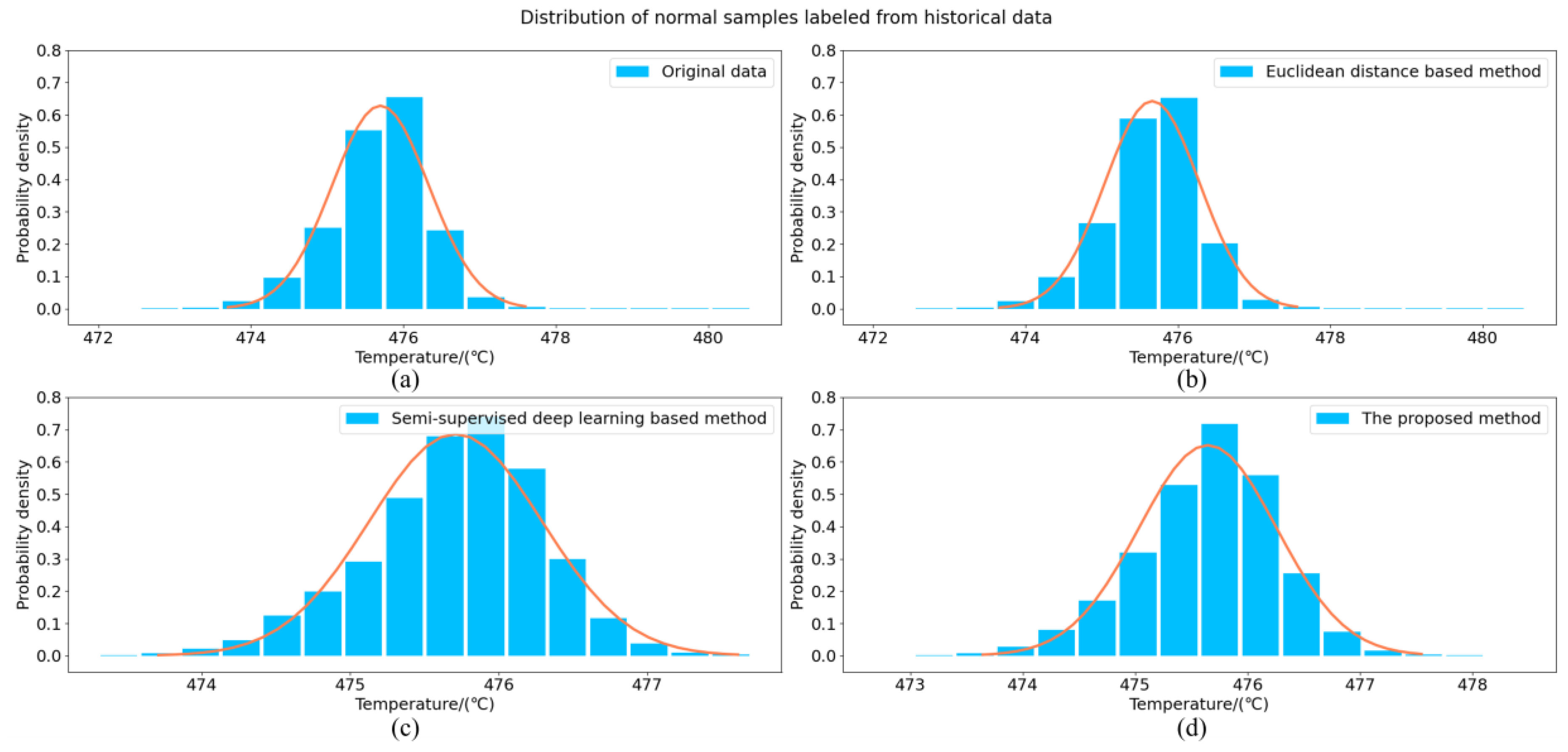

As mentioned before, sufficient normal training data are an important prerequisite for establishing a process monitoring model. In practice, all the historical data collected from industrial processes are unlabeled. There is inevitably a small number of fault samples in the historical data, which have to be labeled and excluded from the training data. Labeling data manually is almost impossible because of the high labor and time costs. Generally, a few normal samples are first labeled manually, and then a data selection strategy is employed to automatically label normal samples and fault samples from the rest of the historical data. To address this issue, an information entropy-based data selection strategy is proposed and compared to distance-based methods and semi-supervised deep learning methods.

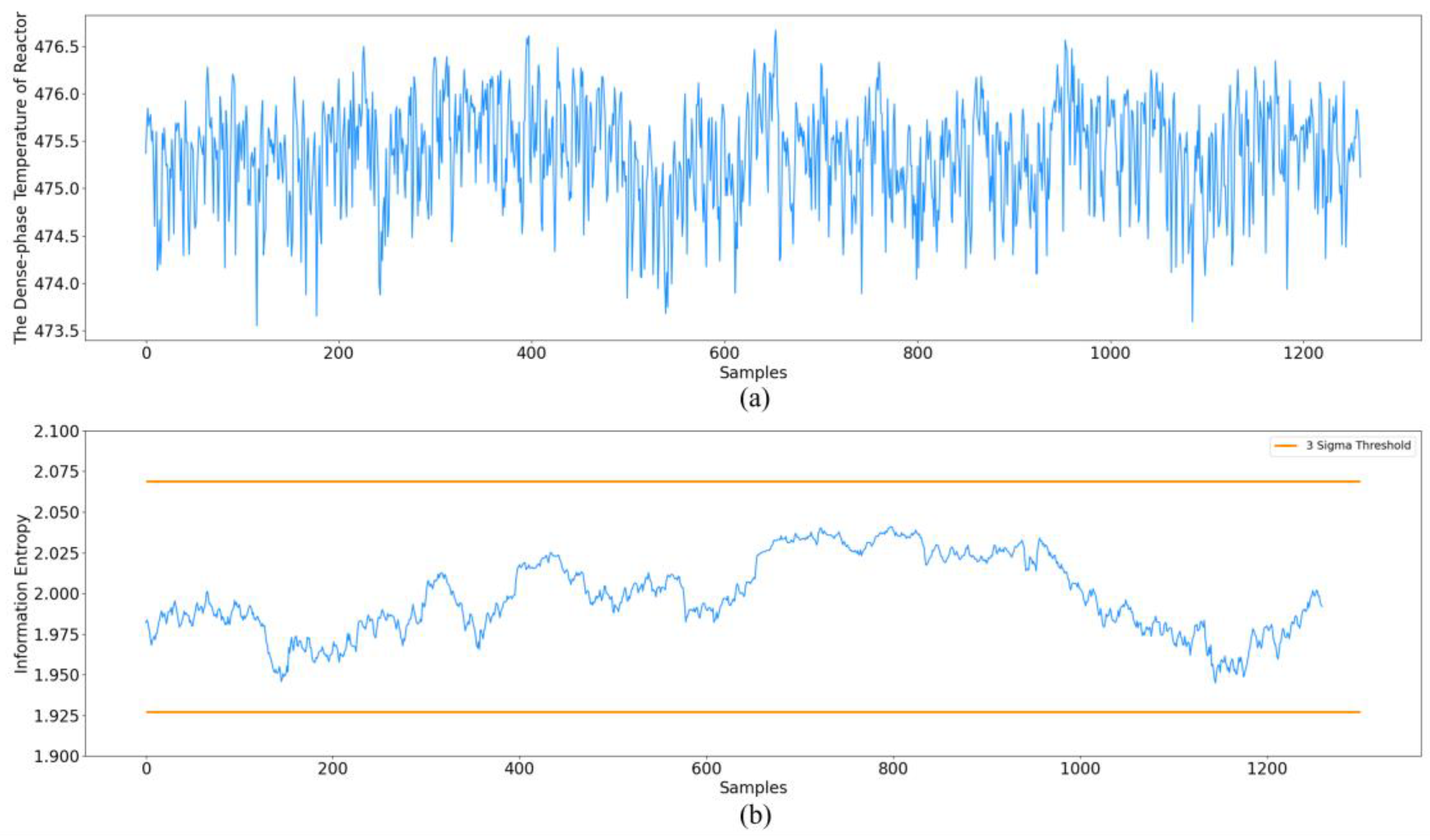

The proposed method aims to automatically label samples according to the dissimilarity measure between the distribution of normal samples and fault samples of key variables using information entropy. The key variables refer to variables that have a great impact on product quality and safety. These variables are usually controlled within a small variation interval, which is hardly influenced by the random disturbances of the process. Therefore, normal process disturbances and real faults can be effectively distinguished by the proposed method.

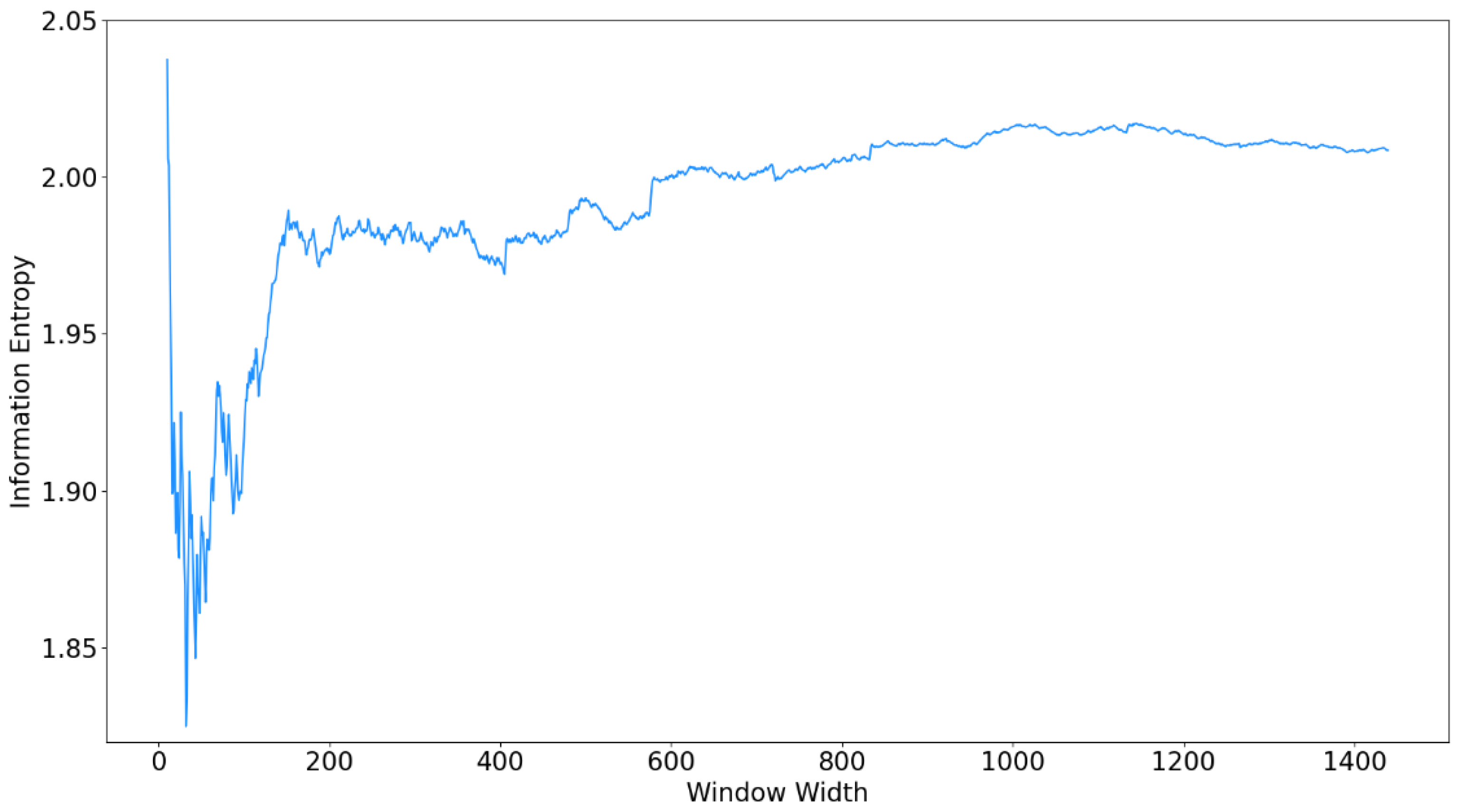

Given a key variable for which only the first few samples of the historical record are manually labeled as normal, and the number of labeled samples is much smaller than the total number of samples, the information entropy of the labeled normal samples is first calculated using a sliding window. Details on the selection of the window width are presented in Section 4.2. Once the information entropy of every window of labeled normal samples has been calculated, a lower control limit is determined from the average value and standard deviation of the information entropy under normal operating conditions, which bounds the normal variations in the distribution of the variable. Only the lower control limit is employed because information entropy reaches its maximum when the data are evenly distributed, so it remains at a high value for normal samples. When a fault occurs, the distribution of data in the window changes, causing a decrease in its information entropy. The proposed strategy is then ready to label the rest of the historical data. The information entropy of the unlabeled samples is calculated and compared with the control limit. Samples whose information entropy is higher than the control limit are labeled as normal samples; otherwise, they are labeled as fault samples. After all historical data are labeled, the fault-free samples are included in the training data to establish the process monitoring model.
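The labeling procedure described above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the implementation used in this work: the entropy is estimated from a histogram whose bin edges are fixed from the labeled normal samples, the lower control limit is taken as the mean minus `k` standard deviations with `k = 3`, and labels are assigned per window rather than per sample. The names `window_entropy`, `sliding_entropy`, `label_by_entropy`, `n_bins`, and `k` are hypothetical.

```python
import math
import statistics


def window_entropy(values, edges):
    """Shannon entropy (nats) of a histogram of `values` over fixed bin `edges`."""
    # Two extra outer bins catch values below edges[0] or at/above edges[-1].
    counts = [0] * (len(edges) + 1)
    for v in values:
        counts[sum(1 for e in edges if v >= e)] += 1
    n = len(values)
    return -sum((c / n) * math.log(c / n) for c in counts if c > 0)


def sliding_entropy(series, width, edges):
    """Entropy of every length-`width` window, moving one sample at a time."""
    return [window_entropy(series[i:i + width], edges)
            for i in range(len(series) - width + 1)]


def label_by_entropy(series, n_labeled, width, n_bins=10, k=3.0):
    """Return (lower control limit, per-window labels); True means normal."""
    normal = series[:n_labeled]
    lo, hi = min(normal), max(normal)
    step = (hi - lo) / n_bins
    edges = [lo + j * step for j in range(n_bins + 1)]

    # Control limit from the labeled normal windows (assumed form: mean - k*std).
    h_normal = sliding_entropy(normal, width, edges)
    limit = statistics.mean(h_normal) - k * statistics.pstdev(h_normal)

    # Windows whose entropy is higher than the limit are labeled normal.
    labels = [h > limit for h in sliding_entropy(series, width, edges)]
    return limit, labels
```

With fixed bin edges, a fault that pushes the key variable out of its normal interval concentrates the window in one outer bin, so the entropy drops toward zero and falls below the limit.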

The main advantages of the proposed data selection strategy are reflected in two aspects. Firstly, the proposed data selection strategy employs information entropy as the dissimilarity measure between the distribution of key variables in labeled normal samples and unlabeled samples to perform data labeling. The key variables are highly related to product quality or process safety, so they are strictly controlled within a small interval and hardly influenced by random disturbances of the process. Therefore, only real faults that affect the normal process operation are labeled as fault samples by the proposed method, and the process monitoring model established with training data labeled by the proposed method will show a low false alarm rate and high sensitivity to faults. In contrast, distance-based methods are significantly affected by the random disturbances of the process, so the Euclidean distance between normal samples can be large, even larger than the distance between normal samples and fault samples. The control limit that represents the normal variations is then large, so that certain fault samples are labeled as normal samples together with normal process disturbances. Furthermore, the real faults are buried in these normal process disturbances and cannot be detected by the process monitoring model, making it hard to provide a reliable monitoring result for online applications. Secondly, the proposed data selection strategy does not require a large number of initial labeled samples. Information entropy is a statistical measure that, given a proper window width, provides an accurate characterization of the distribution of the data. Therefore, a reliable control limit can be determined with only a few labeled normal samples. This is difficult to achieve with semi-supervised deep learning methods. If the initial labeled samples are too limited, an effective deep learning model cannot be established, since the large number of parameters in the model must be trained with sufficient data; otherwise, the model will overfit, which degrades the data labeling results and leads to poor generalization ability of the process monitoring model.

3.2. Process Monitoring Modeling

For an industrial process with a given number of variables and a set of selected modeling data, a two-layer autoencoder model, which is shown in Figure 1, is trained for feature extraction and fault detection. The encoder maps the input data to latent variables, and the decoder maps the latent variables to a reconstruction of the input; the trainable parameters are the weights and biases of the encoder and decoder. The model aims to minimize the reconstruction error between the input data and their reconstruction. The mean squared error (MSE) is applied in this work, which is calculated from each sample in the modeling data and the reconstruction of that sample. Once the autoencoder model has been constructed, the modeling data are utilized to train the model, with two percent of the data randomly split off as the validation dataset. When the reconstruction error of the training dataset no longer decreases significantly and the error of the validation set reaches a minimum, the model training is complete and the model is ready for real-time monitoring.
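For orientation, a generic autoencoder and its reconstruction error can be written as below. This is a sketch in assumed notation rather than the exact equations of this work: $\mathbf{x} \in \mathbb{R}^{m}$ is one normalized sample with $m$ variables, $\mathbf{h}$ the latent variables, $\hat{\mathbf{x}}$ the reconstruction, $\mathbf{W}_e, \mathbf{b}_e$ and $\mathbf{W}_d, \mathbf{b}_d$ the encoder and decoder weights and biases, and $f$, $g$ activation functions. A single encoder layer and a single decoder layer are shown for brevity.

```latex
\begin{aligned}
\mathbf{h} &= f\!\left(\mathbf{W}_e \mathbf{x} + \mathbf{b}_e\right), \\
\hat{\mathbf{x}} &= g\!\left(\mathbf{W}_d \mathbf{h} + \mathbf{b}_d\right), \\
\mathrm{MSE}(\mathbf{x}) &= \frac{1}{m} \sum_{j=1}^{m} \left(x_j - \hat{x}_j\right)^{2}.
\end{aligned}
```

Under this notation, training would minimize the average of $\mathrm{MSE}(\mathbf{x}_i)$ over all modeling samples $\mathbf{x}_i$, and the same per-sample quantity can serve as the monitoring statistic.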

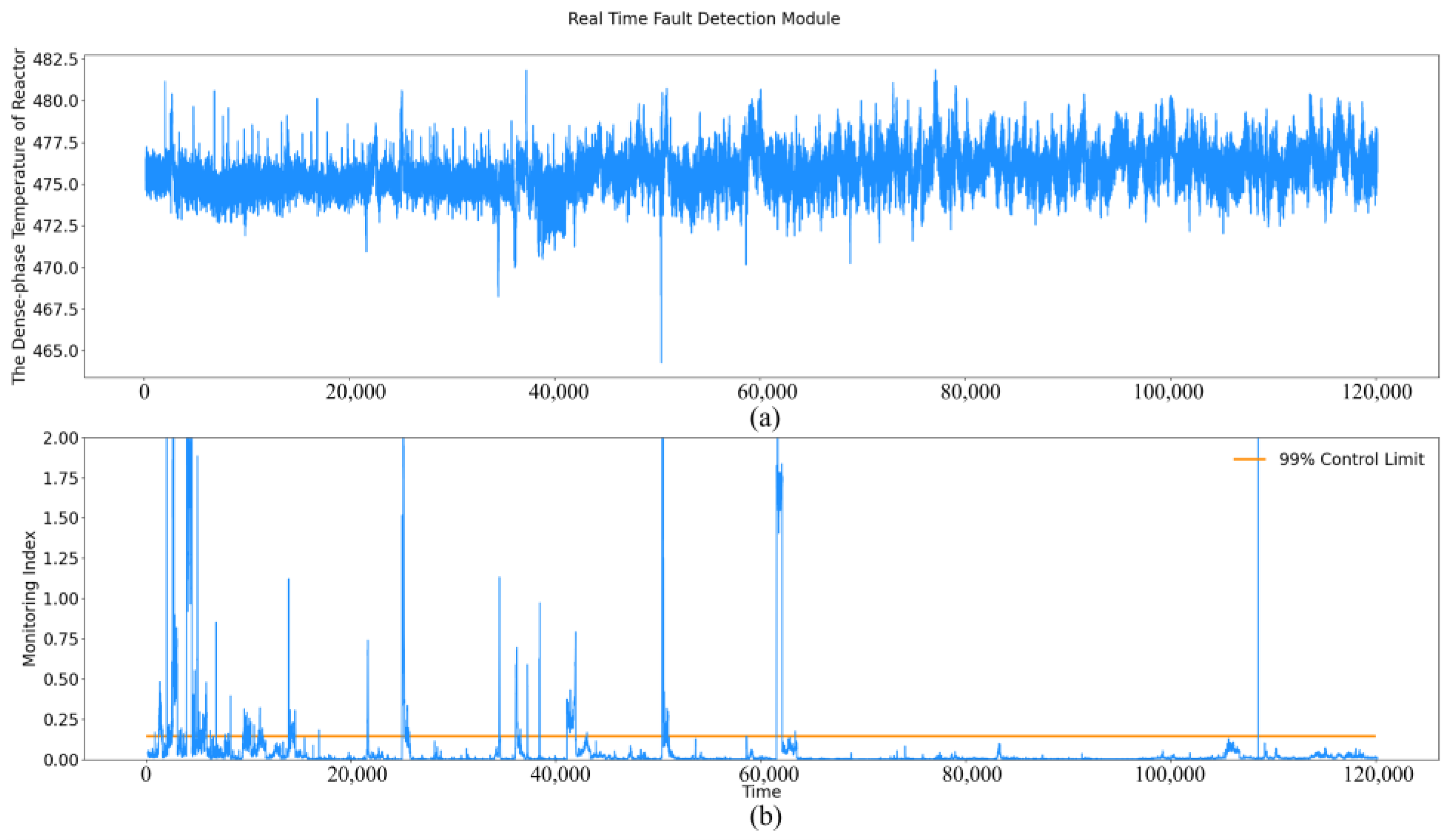

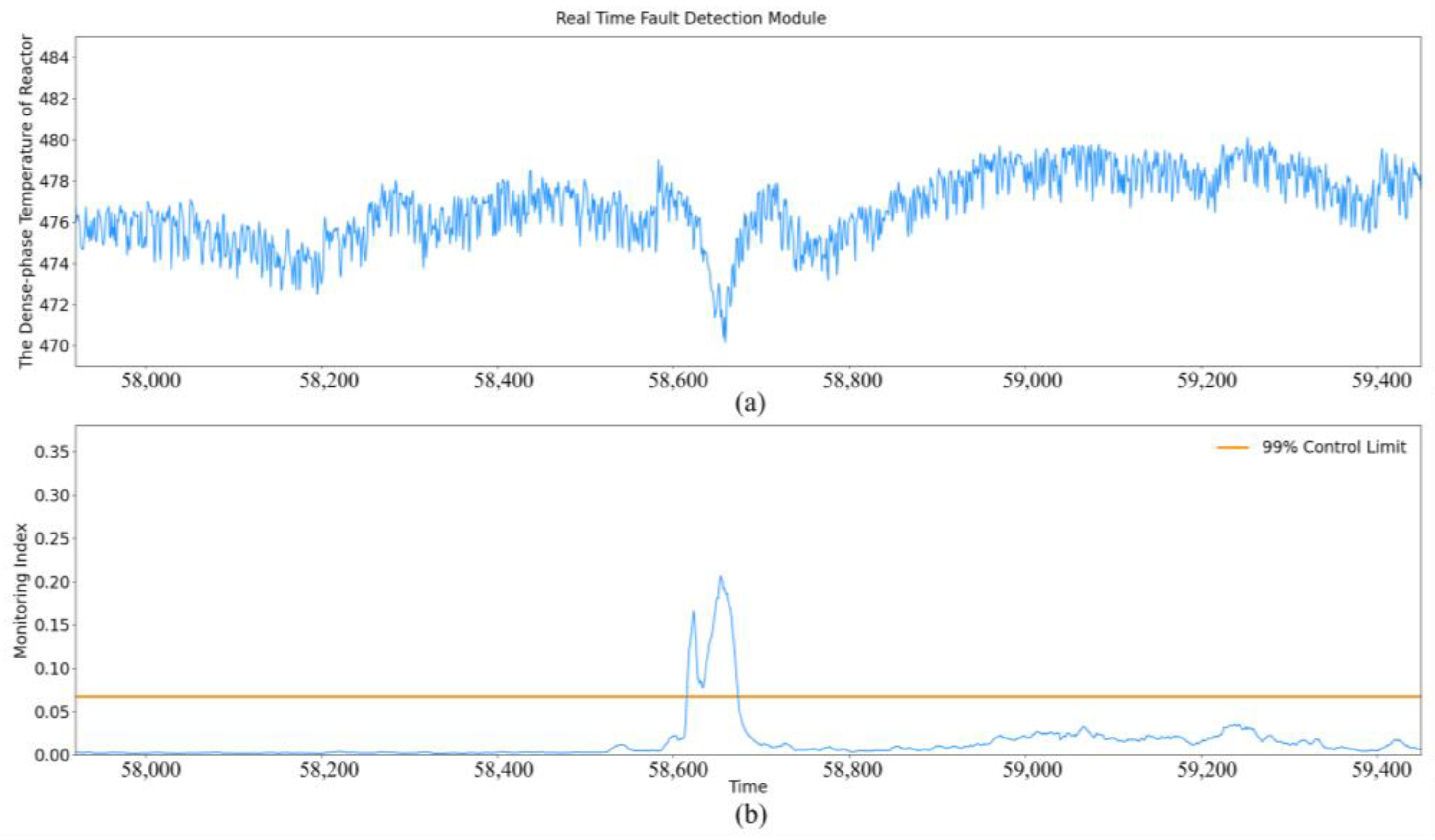

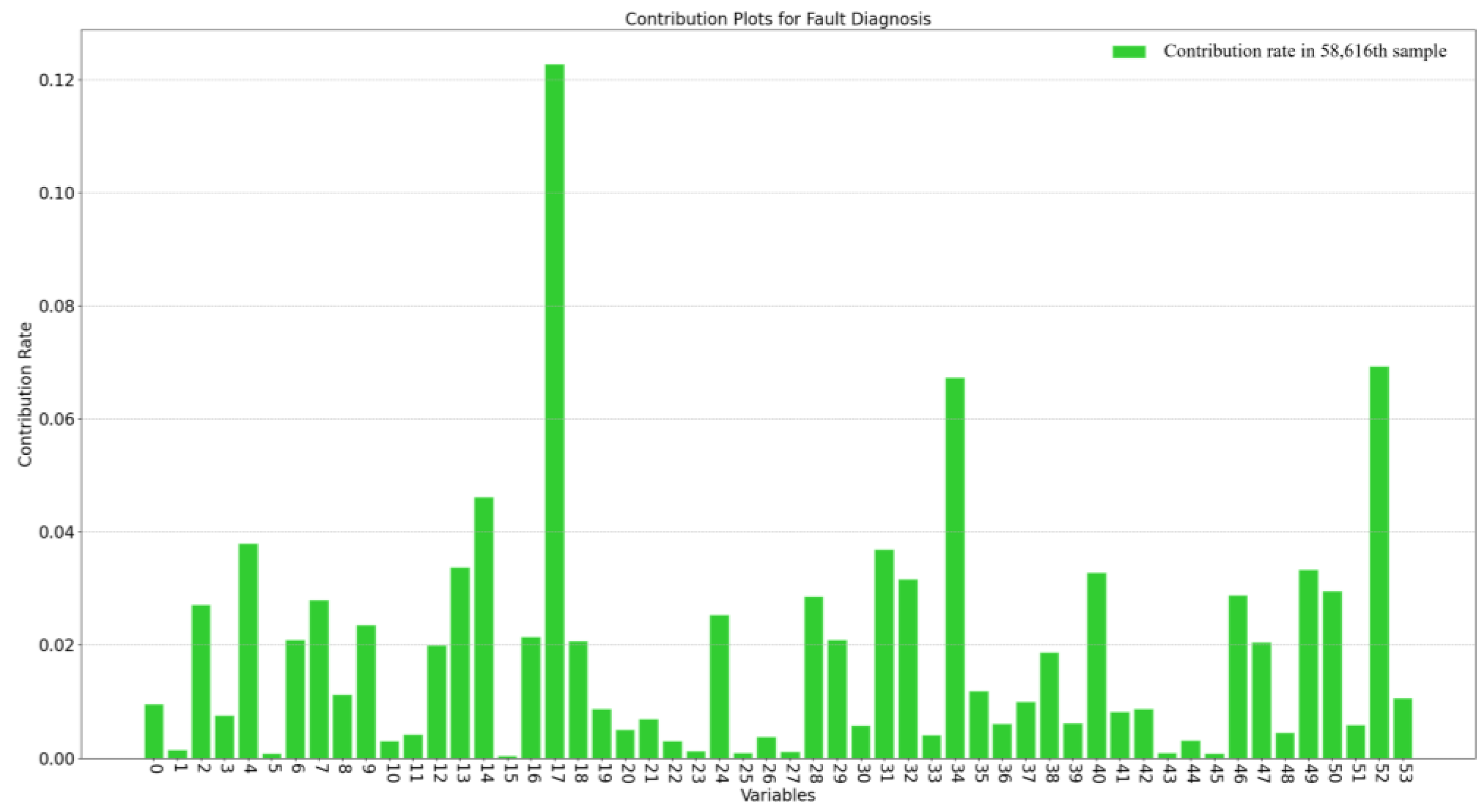

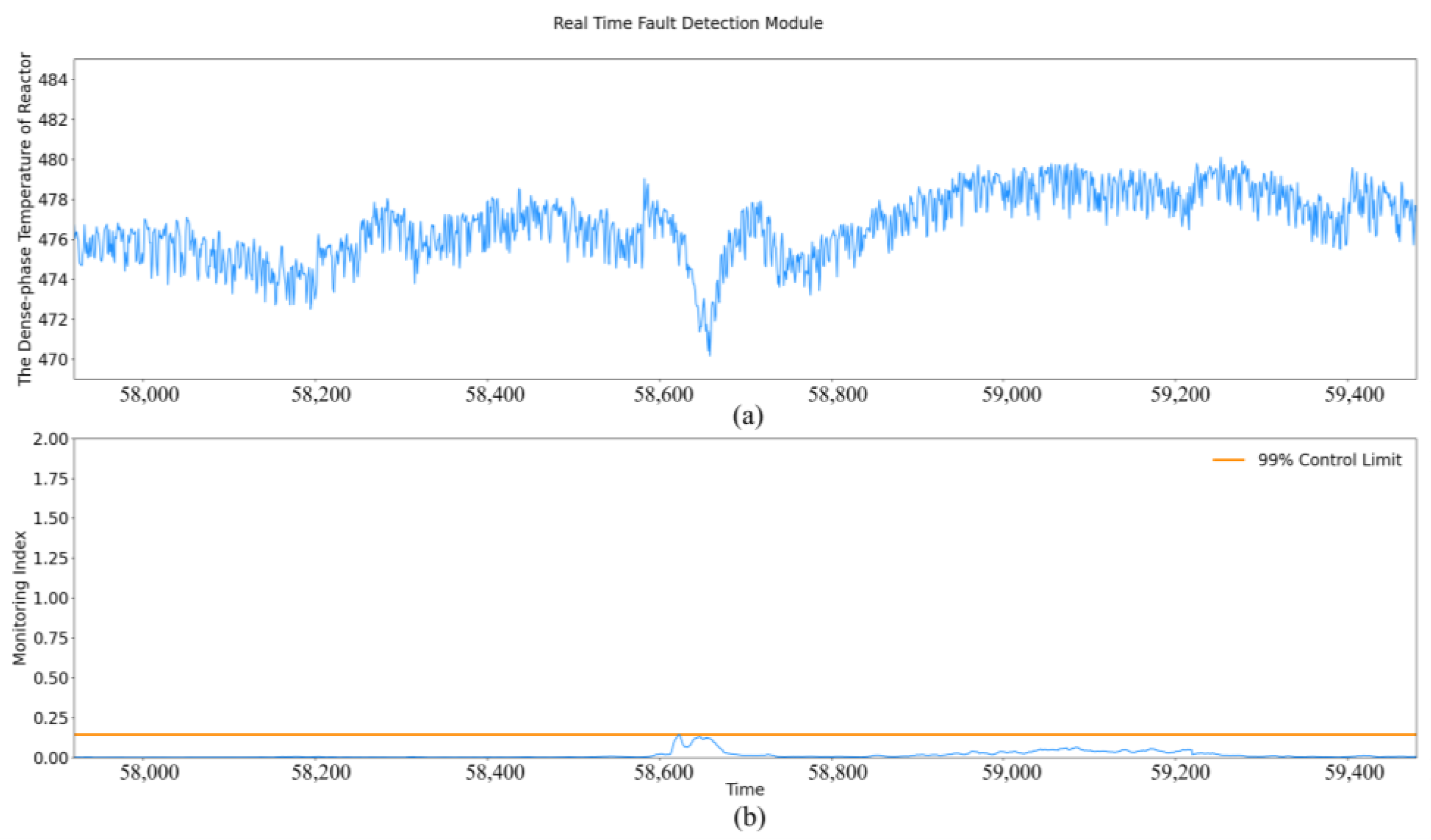

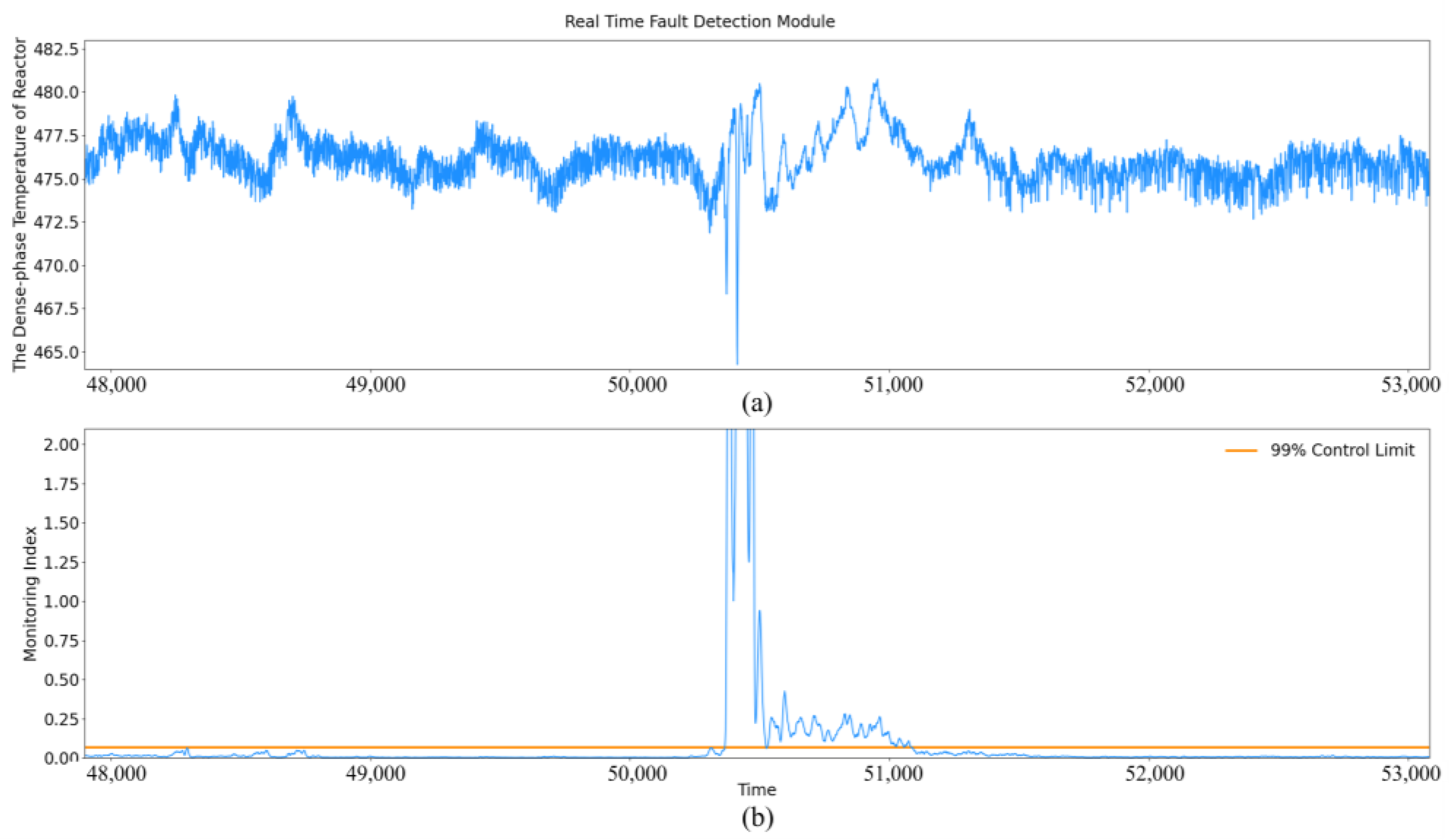

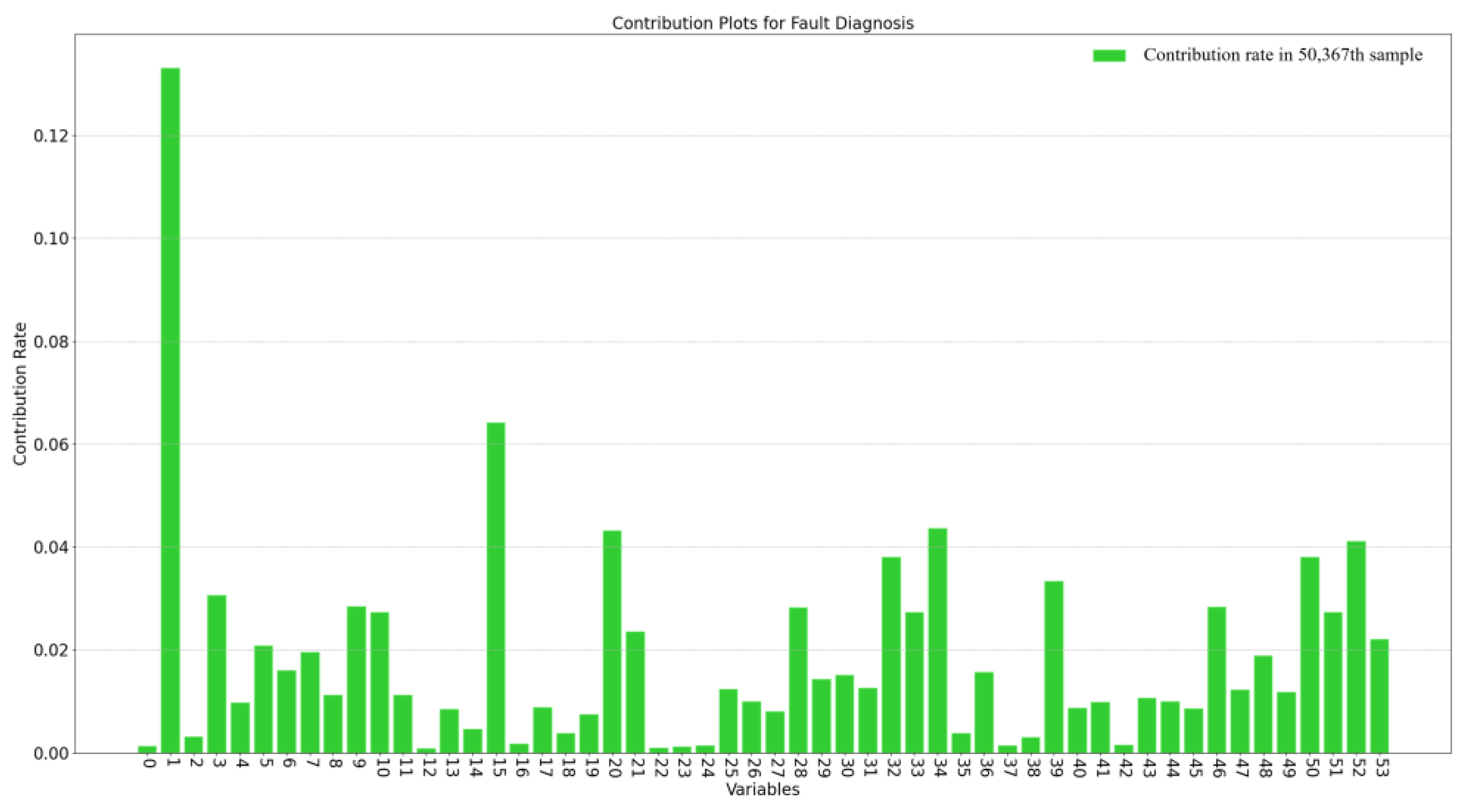

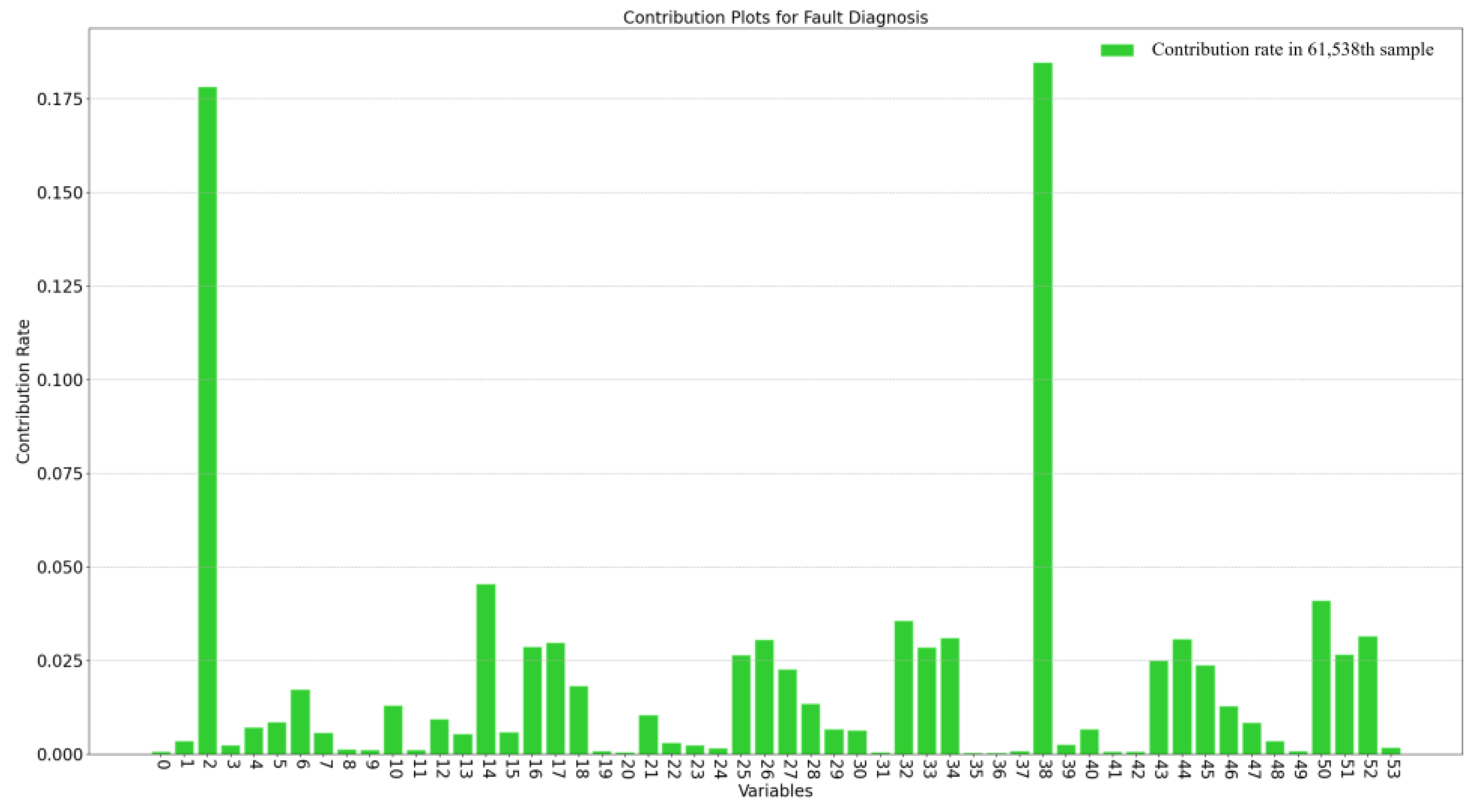

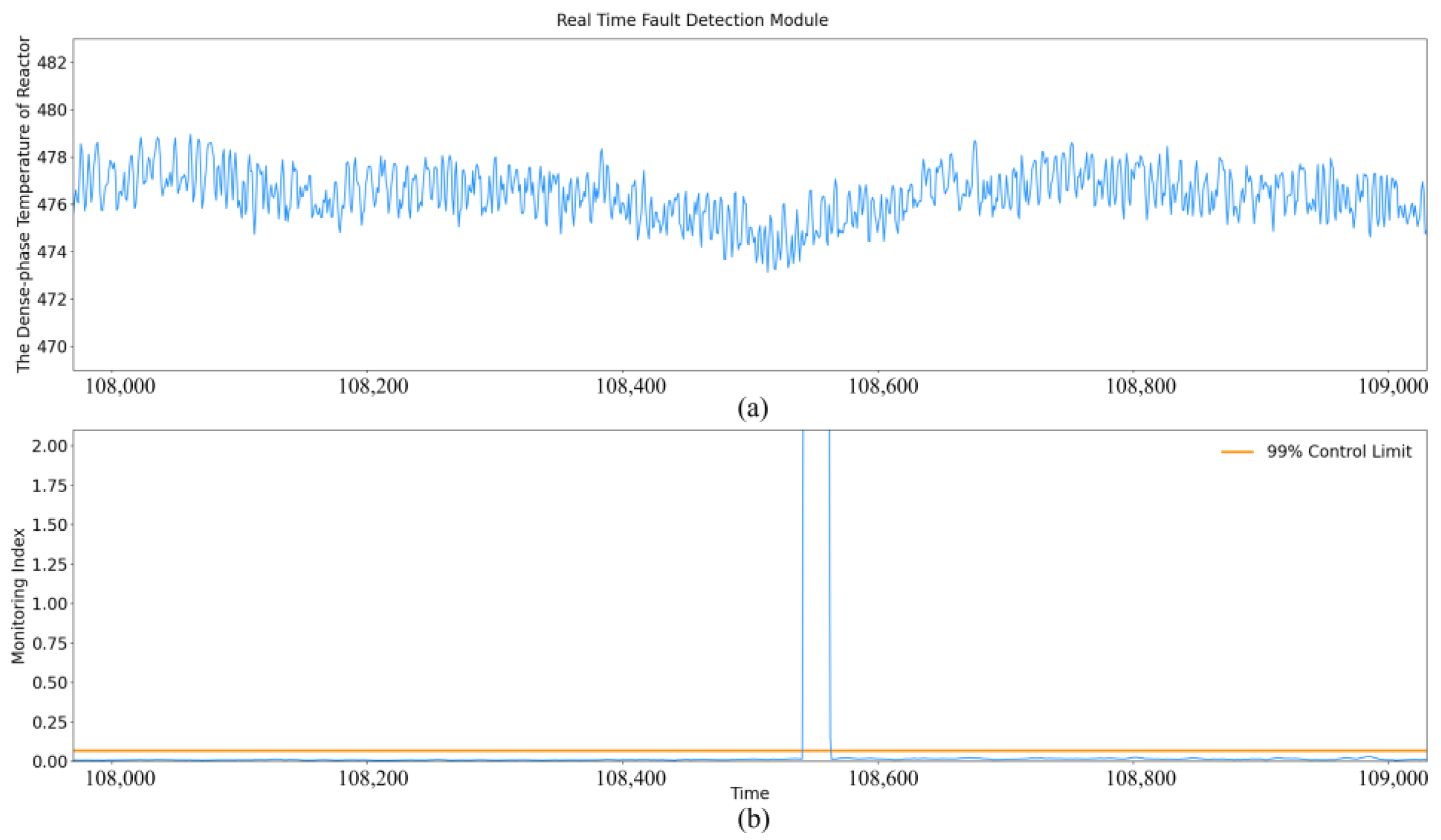

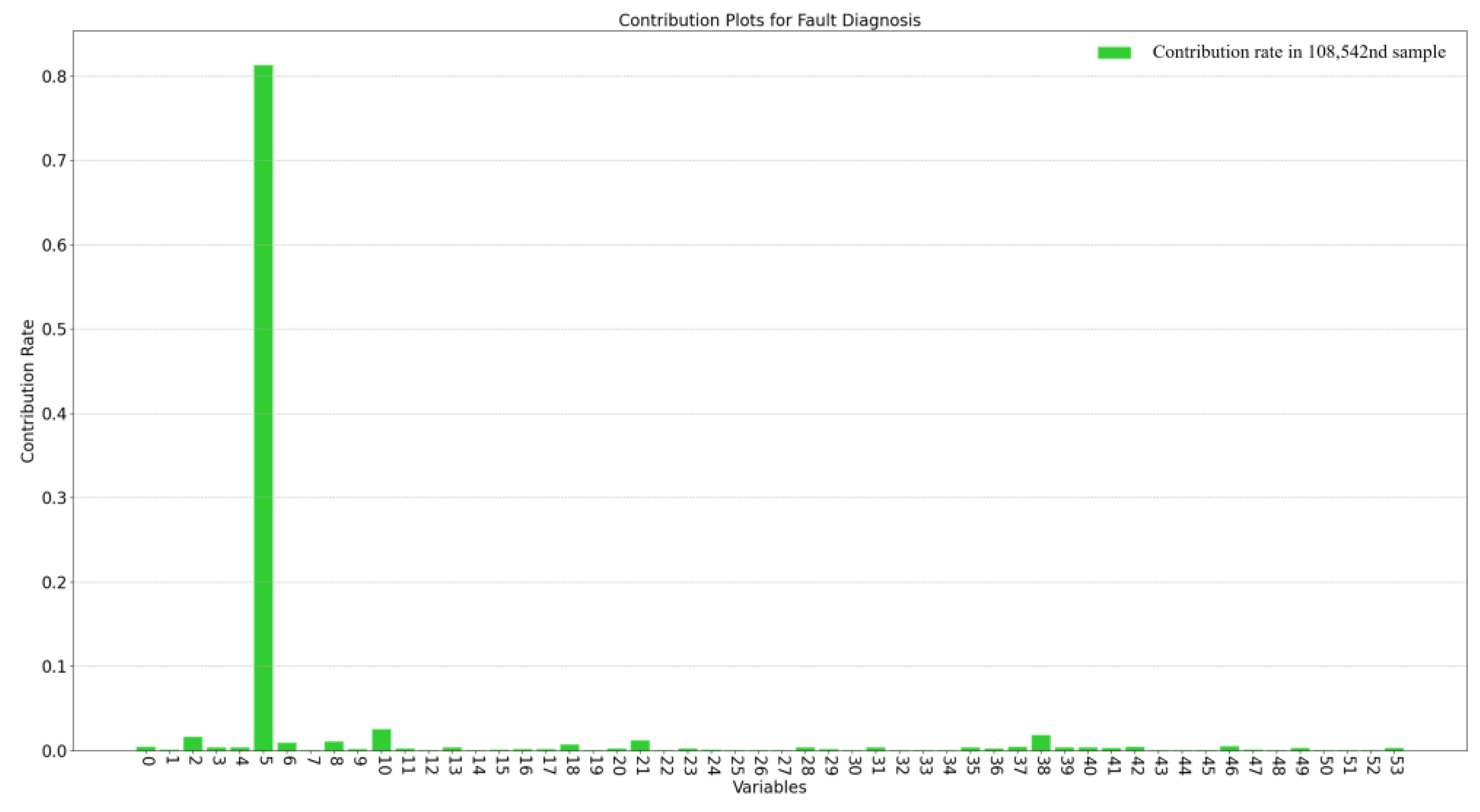

3.3. Fault Detection and Diagnosis

To realize real-time fault detection, a monitoring statistic should be constructed to quantify the process operating status. The MSE statistic in Equation (8) is used in this work. The MSE of data under normal operating conditions should stay within a threshold, whereas the variable correlation changes significantly when a fault occurs, resulting in a large reconstruction error. Given a series of MSE values under normal conditions, the threshold at the 99% confidence level can be determined through kernel density estimation, in which the probability density function of the MSE is estimated by placing a kernel function, generally the Gaussian kernel function, on the MSE of each sample. For real-time monitoring, the MSE statistic is calculated and compared with the threshold. Statistics within the threshold indicate that the system is operating under normal conditions, and the data are stored in the database for the model update. If the statistic exceeds the threshold, a fault is detected and the root cause needs to be isolated immediately. In this work, contribution plots are applied by calculating the contribution rate of each variable to the reconstruction error. The variable with the largest contribution rate is preliminarily diagnosed as the root cause.
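The threshold determination can be illustrated with a short standard-library sketch. It assumes a Gaussian kernel, a bandwidth chosen by the normal-reference rule of thumb (1.06 times the sample standard deviation times n^(-1/5)), and a bisection search for the point where the estimated cumulative distribution reaches the confidence level; none of these choices is stated in the text, and the names `kde_cdf` and `kde_threshold` are hypothetical.

```python
import math
import statistics


def kde_cdf(x, samples, bandwidth):
    """CDF at x of a Gaussian-kernel density estimate built on `samples`."""
    scale = bandwidth * math.sqrt(2.0)
    return sum(0.5 * (1.0 + math.erf((x - s) / scale))
               for s in samples) / len(samples)


def kde_threshold(samples, confidence=0.99, bandwidth=None):
    """Smallest value whose estimated CDF reaches `confidence`."""
    if bandwidth is None:
        # Normal-reference rule of thumb; an assumption, not from the paper.
        bandwidth = 1.06 * statistics.stdev(samples) * len(samples) ** (-0.2)

    # The CDF is monotone, so bisection between two safe bounds converges.
    lo = min(samples) - 10.0 * bandwidth
    hi = max(samples) + 10.0 * bandwidth
    for _ in range(100):
        mid = 0.5 * (lo + hi)
        if kde_cdf(mid, samples, bandwidth) < confidence:
            lo = mid
        else:
            hi = mid
    return 0.5 * (lo + hi)
```

In use, `samples` would hold the MSE values of the normal modeling data, and a real-time MSE above `kde_threshold(samples)` would raise an alarm.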

3.4. Model Update Strategy

For plant-wide industrial processes, the catalyst activity and equipment structure will change to a certain extent with the increase in operation time, and the operating condition can also be adjusted according to the product price and government regulation. To make the model more applicable to the current operating condition, a model update strategy is also applied in the proposed process monitoring model.

In real-time process monitoring, data that are identified as normal operation by the proposed method are continually saved in the database and supplemented to the modeling data after a period of time for a model update. The model parameters will not change significantly as long as the process is operated under normal conditions. Therefore, the time cost for the model update is negligible and will not affect the application of online monitoring. Moreover, the application of the model update strategy can deal with the multimode issue simultaneously. The multimode issue mostly results from the adjustment of production loads in industrial processes. This kind of mode switching will lead to a step change in the feed flow, which can be easily identified by contribution plots. When the mode switching is identified by the process monitoring model, the model update strategy will start to work, by which the normalization center of the model will be adjusted to the new mode. In most multimode process monitoring methods, all possible modes must be available in the training data. For real-time monitoring, new samples are first clustered into one of the historical modes and then monitored with the corresponding model, which requires an expensive computation cost. More importantly, it is impossible to satisfy the assumption that all modes are included in the training data for an industrial process. By contrast, the proposed process monitoring method employs a model update strategy to make a connection between fault detection and fault diagnosis, by which the multimode issue can be addressed by adjusting the normalization center of the model. The proposed method can be applied to monitor multimode processes even with only one operating mode available in the training data.
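The idea of adjusting the normalization center after a mode switch can be sketched as follows. This is an illustrative reading of the update strategy, assuming that only the per-variable mean is re-estimated from recently stored normal samples while the scale is kept; the class `Normalizer` and its method `recenter` are hypothetical names.

```python
import statistics


class Normalizer:
    """Per-variable z-score normalization with an adjustable center."""

    def __init__(self, data):
        # data: list of samples, each a list with one value per variable
        columns = list(zip(*data))
        self.mean = [statistics.mean(c) for c in columns]
        # Guard against constant variables (zero standard deviation).
        self.std = [statistics.pstdev(c) or 1.0 for c in columns]

    def transform(self, sample):
        return [(v - m) / s for v, m, s in zip(sample, self.mean, self.std)]

    def recenter(self, recent_normal):
        """Move the center to the mean of recent normal data; keep the scale."""
        columns = list(zip(*recent_normal))
        self.mean = [statistics.mean(c) for c in columns]
```

After a load change has been confirmed as a mode switch, calling `recenter` with the samples stored since the switch maps the new operating point back to zero, so the trained autoencoder sees inputs in the range it was trained on.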

In summary, the procedure of the proposed industrial process monitoring framework can be described as follows.

Offline modeling:

- (1) Data are preprocessed to address data deficiency and outliers.

- (2) Fault-free modeling data are automatically selected using the proposed strategy.

- (3) Modeling data are normalized with their average value and standard deviation.

- (4) Modeling data are divided into a training dataset and a validation dataset to train the proposed process monitoring model.

- (5) MSE statistics under normal operating conditions are calculated and the threshold is determined.

Online monitoring:

- (1) Real-time data are normalized with the average value and standard deviation of the modeling data.

- (2) Normalized data are put into the process monitoring model for data reconstruction.

- (3) Real-time MSE statistics are calculated using reconstruction errors.

- (4) Real-time MSE statistics are compared with the threshold. Normal data are stored in preparation for model updates, while the fault is diagnosed by contribution plots.
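The four online steps can be put together in a single function, sketched below under stated assumptions. The trained model is represented by a caller-supplied `reconstruct` function, and the contribution rate of a variable is taken as its share of the total squared reconstruction error; both are illustrative choices, and `monitor_sample` is a hypothetical name.

```python
def monitor_sample(raw, mean, std, reconstruct, threshold):
    """Run one online monitoring step on a single raw sample (list of floats)."""
    # Step (1): normalize with the statistics of the modeling data.
    z = [(v - m) / s for v, m, s in zip(raw, mean, std)]
    # Step (2): reconstruct with the trained model (passed in as a callable).
    z_hat = reconstruct(z)
    # Step (3): MSE statistic from the per-variable reconstruction errors.
    sq_err = [(a - b) ** 2 for a, b in zip(z, z_hat)]
    mse = sum(sq_err) / len(sq_err)
    # Step (4): compare with the threshold; diagnose only when it is exceeded.
    if mse <= threshold:
        return {"fault": False, "mse": mse, "suspect": None, "rates": None}
    total = sum(sq_err)
    rates = [e / total for e in sq_err]
    suspect = max(range(len(rates)), key=rates.__getitem__)
    return {"fault": True, "mse": mse, "suspect": suspect, "rates": rates}
```

Samples returned with `"fault": False` are the ones that would be stored for later model updates, while `"suspect"` gives the index of the variable with the largest contribution rate.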

5. Conclusions

In this work, we propose a new automatic selection strategy for modeling data of industrial process monitoring based on information entropy. Compared to expert knowledge-based, distance-based, and semi-supervised deep learning-based data selection strategies, the proposed strategy requires lower labor costs, and is more applicable to industrial processes with limited labeled normal samples. Based on this strategy, a data-driven process monitoring framework is developed and a model update strategy is employed to make a connection between fault detection and fault diagnosis for addressing the multimode issue.

The proposed process monitoring framework is applied to a large-scale industrial methanol to olefin unit of a practical chemical plant in China. The results show that the normal samples and fault samples can be correctly labeled by the proposed data selection strategy with only 1440 manually labeled normal samples. A long-term effective process monitoring model is then established based on all normal samples labeled from historical data. The process monitoring performance of the model has been tested in an approximately three-month online application. The results indicate that faults can be detected earlier by the proposed method than by operators through observation, and the root cause of the faults can be preliminarily diagnosed as well. The real-time process monitoring results can be delivered to operators in practical operation, by which they could take action to minimize the impact of faults. Details of the proposed data selection strategy and modeling process have also been provided to demonstrate the replicability, by which we hope to provide a certain reference for other researchers or companies, thereby facilitating a wider industrial application of process monitoring systems.

Although data-driven process monitoring has made great progress, there are several considerations for its practical application. The training data must be large enough to represent the various normal behaviors of the process; otherwise, frequent false alarms will be triggered. For example, chemical processes change slowly over time through equipment aging and catalyst deactivation. Generally, these changes can be regarded as normal situations in process operation, and they have to be considered when establishing the process monitoring model to avoid false alarms. In addition, fault samples should not be included in the training data; otherwise, the faults will be difficult for the process monitoring model to detect. Therefore, an effective data labeling strategy is required to address the above issues. Another important consideration is the selection of the process monitoring model and its parameters. The model is established to extract features from the training data and to determine a control limit for normal variations using a statistic, so it should be selected according to the data characteristics of the target process. For example, the industrial process investigated in this work is highly nonlinear with complex variable relationships, which are hard to capture with multivariate statistical methods. At the same time, sufficient historical data are available to establish a deep learning model with good generalization ability. Therefore, a multi-layer autoencoder is employed in this work. Moreover, several model parameters have to be determined no matter which model is employed, such as the number of principal components in PCA and the structure of deep learning models. The identification of optimal model parameters is an important research issue that is addressed in many existing studies.

Through the above considerations, the proposed process monitoring framework has achieved a promising performance through a three-month test in an industrial process, but there are still several limitations. The fault is localized at the variable with the highest contribution rate in this work, while it is difficult to determine the root cause if the fault has been propagated among process variables. Under this circumstance, there will be more variables with a high contribution rate and the variable with the highest contribution rate may not be the root cause of the fault. Another limitation is that the model update strategy may not be sufficient for more complex scenarios. The proposed method addresses the multimode issue through a model update strategy, as the variable correlation will not obviously change in different operating modes. However, the previous model may no longer be applicable after the replacement of the catalyst or shut-down maintenance because the variable correlation has changed. The model has to be re-trained with new data rather than just updating the model parameters. Therefore, future work will lie in the improvement of the process monitoring framework according to the considerations and limitations mentioned above.