Wear Prediction of Tool Based on Modal Decomposition and MCNN-BiLSTM

Abstract

1. Introduction

1.1. Literature Review

1.2. Research Gaps and Contributions

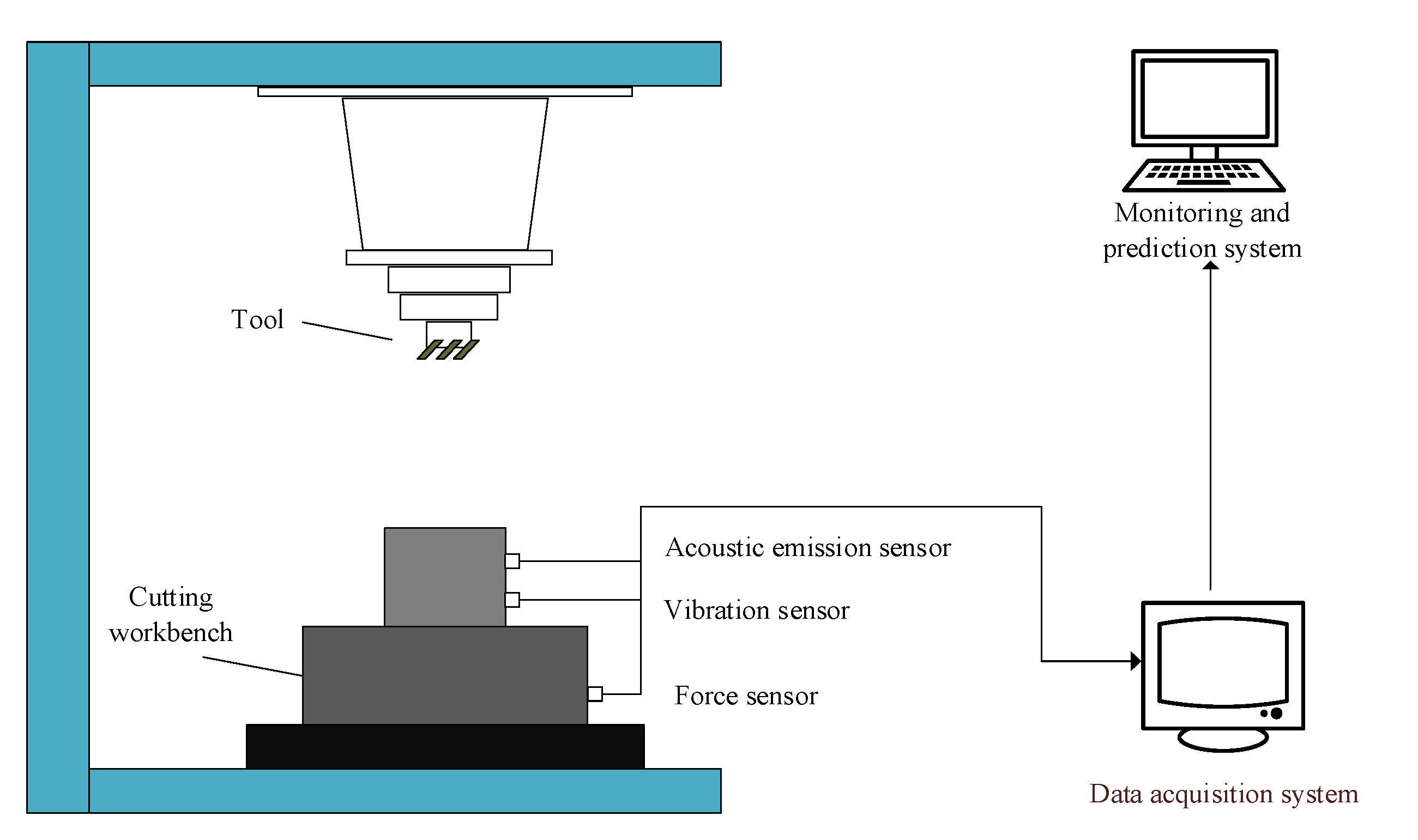

2. Problem Description

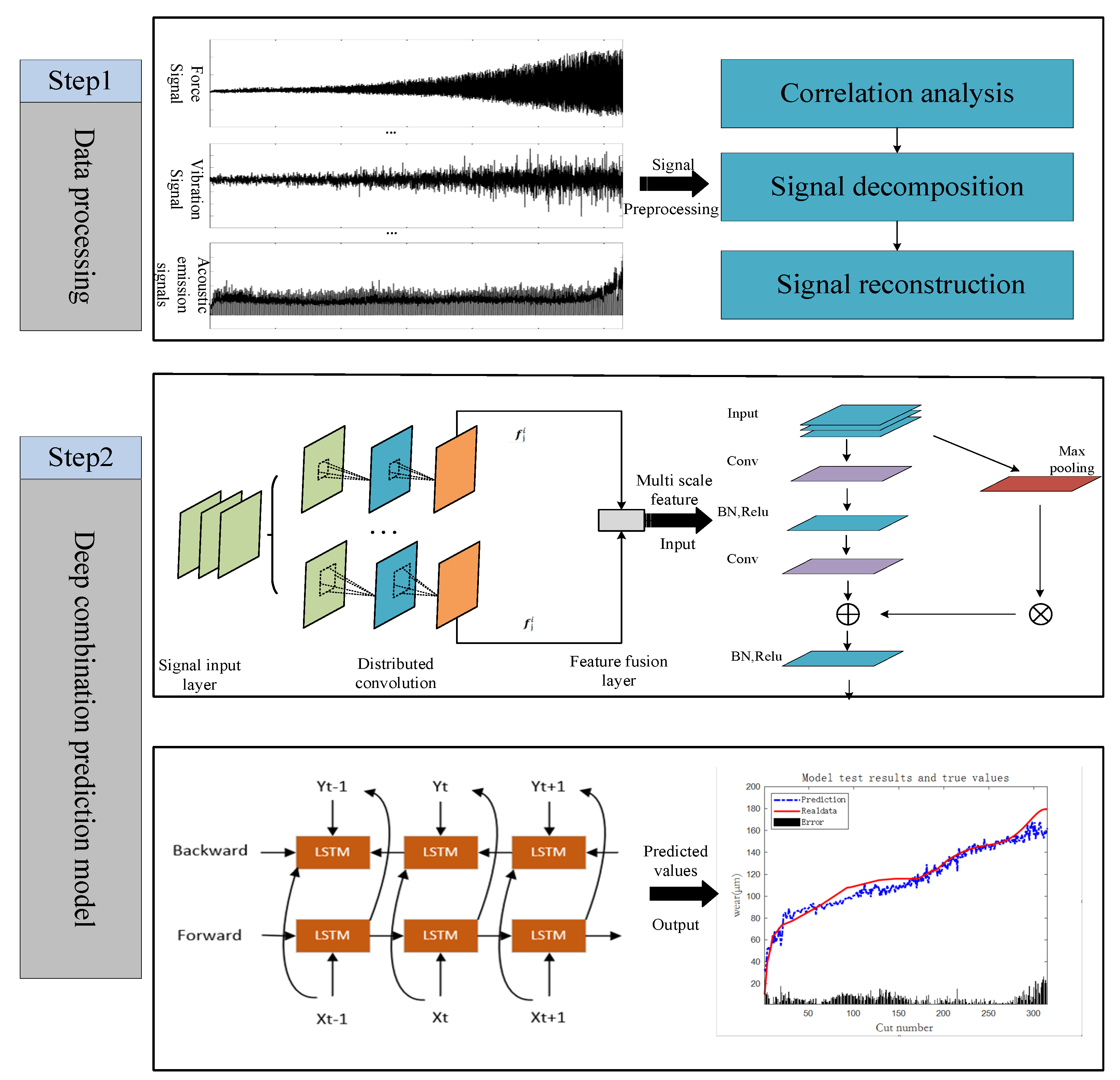

3. Predicting Tool Wear Based on MCNN-BiLSTM

3.1. Tool Wear Prediction Framework

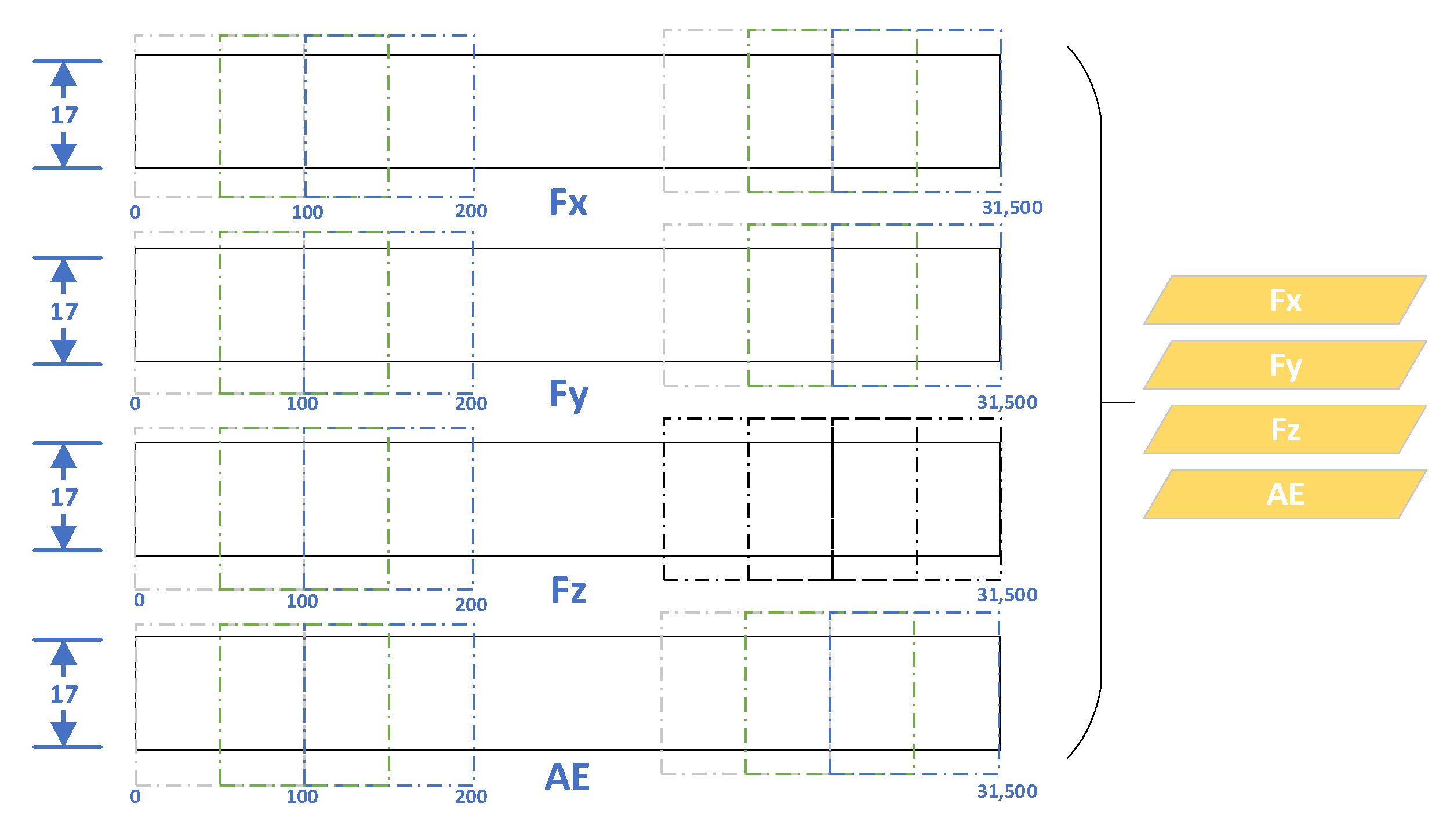

3.2. Data Processing

3.2.1. Multivariate Correlation Analysis

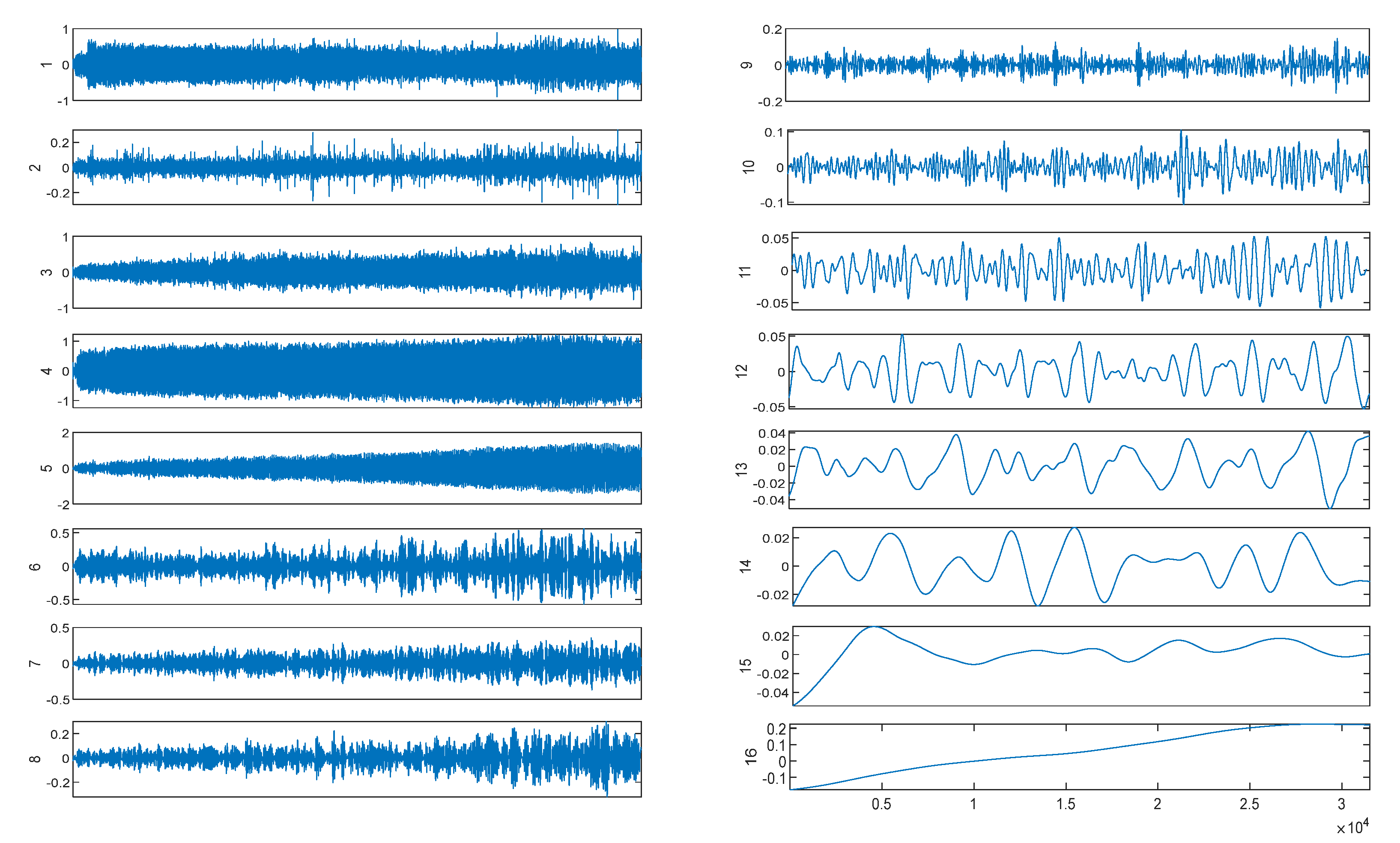

3.2.2. Empirical Mode Decomposition

- (1) Apply N random perturbations (added white Gaussian noise) to the original sensor signal to obtain N disturbed signals, then decompose each disturbed signal with EMD to obtain its first subsequence.

- (2) Average the N first subsequences to obtain the first subsequence of the CEEMDAN decomposition, and subtract it from the original signal to obtain the first residual.

- (3) Add pairs of positive and negative white Gaussian noise to the first residual to obtain new signals. Use each new signal as the carrier for EMD decomposition and extract its first subsequence; averaging these gives the second subsequence of the CEEMDAN decomposition, and removing it gives the second residual.

- (4) Repeat the above steps until the residual is a monotonic function that cannot be decomposed further. The original signal is then reconstructed as the sum of all subsequences plus the final residual.
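Steps (1) to (4) can be sketched in Python with NumPy. This is a simplified illustration, not the authors' implementation, and the function names are hypothetical. Several choices are assumptions: envelopes use linear interpolation instead of cubic splines, sifting runs a fixed number of passes, paired positive/negative noise is used at every stage, the noise amplitude is `eps` times the standard deviation of the current residual, and "monotonic" is approximated as "fewer than three extrema". Following the usual CEEMDAN formulation, stages after the first perturb residual r_k with the k-th EMD mode of each noise realisation rather than with raw noise.

```python
import numpy as np


def count_extrema(x):
    """Number of local maxima plus minima (sign changes of the first difference)."""
    d = np.diff(x)
    return int(np.sum(d[:-1] * d[1:] < 0))


def first_imf(x, n_sift=10):
    """First subsequence (IMF) of x via a fixed number of sifting passes.

    Assumed simplification: linear-interpolated envelopes, no formal stopping criterion.
    """
    h = np.array(x, dtype=float)
    t = np.arange(len(h))
    for _ in range(n_sift):
        d = np.diff(h)
        maxima = np.where((d[:-1] > 0) & (d[1:] <= 0))[0] + 1
        minima = np.where((d[:-1] < 0) & (d[1:] >= 0))[0] + 1
        if len(maxima) < 2 or len(minima) < 2:
            break
        # Extend each envelope to both signal ends using the nearest extremum value.
        upper = np.interp(t, np.r_[0, maxima, len(h) - 1],
                          np.r_[h[maxima[0]], h[maxima], h[maxima[-1]]])
        lower = np.interp(t, np.r_[0, minima, len(h) - 1],
                          np.r_[h[minima[0]], h[minima], h[minima[-1]]])
        h = h - (upper + lower) / 2.0  # remove the local mean
    return h


def emd_modes(x, max_modes):
    """Plain EMD: repeatedly peel off the first IMF (used here for the noise realisations)."""
    modes, r = [], np.array(x, dtype=float)
    while len(modes) < max_modes and count_extrema(r) >= 3:
        imf = first_imf(r)
        modes.append(imf)
        r = r - imf
    return modes


def ceemdan(x, n_pairs=25, eps=0.2, max_imfs=8, seed=0):
    """Simplified CEEMDAN; returns (imfs, residual) with imfs of shape (K, len(x))."""
    rng = np.random.default_rng(seed)
    x = np.asarray(x, dtype=float)
    w = rng.standard_normal((n_pairs, len(x)))
    noise = np.vstack([w, -w])  # paired positive/negative white Gaussian noise
    noise_modes = [emd_modes(n, max_imfs) for n in noise]

    imfs, r = [], x.copy()
    for k in range(max_imfs):
        if count_extrema(r) < 3:  # residual (nearly) monotonic: stop, step (4)
            break
        beta = eps * r.std()  # assumed noise amplitude
        trials = []
        for i, n in enumerate(noise):
            # k == 0: raw noise (step 1); k >= 1: k-th EMD mode of the noise added to r_k (step 3)
            if k == 0:
                pert = n
            elif k - 1 < len(noise_modes[i]):
                pert = noise_modes[i][k - 1]
            else:
                pert = np.zeros_like(x)
            trials.append(first_imf(r + beta * pert))
        imf = np.mean(trials, axis=0)  # ensemble average, step (2)
        imfs.append(imf)
        r = r - imf  # residual after removing this subsequence
    return np.array(imfs), r
```

Because each stage subtracts the averaged subsequence from the running residual, `imfs.sum(axis=0) + residual` reproduces the input up to floating-point error, which is the reconstruction property stated in step (4). For real work, a maintained library such as PyEMD (pip package `EMD-signal`) is the more practical choice.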

3.3. Deep Combination Prediction Model

4. Validation and Analysis

4.1. Raw Data

4.1.1. Dataset Selection

4.1.2. Data Filtering

4.1.3. Mode Decomposition

4.2. Evaluation Indicators

4.3. Experimental Environment

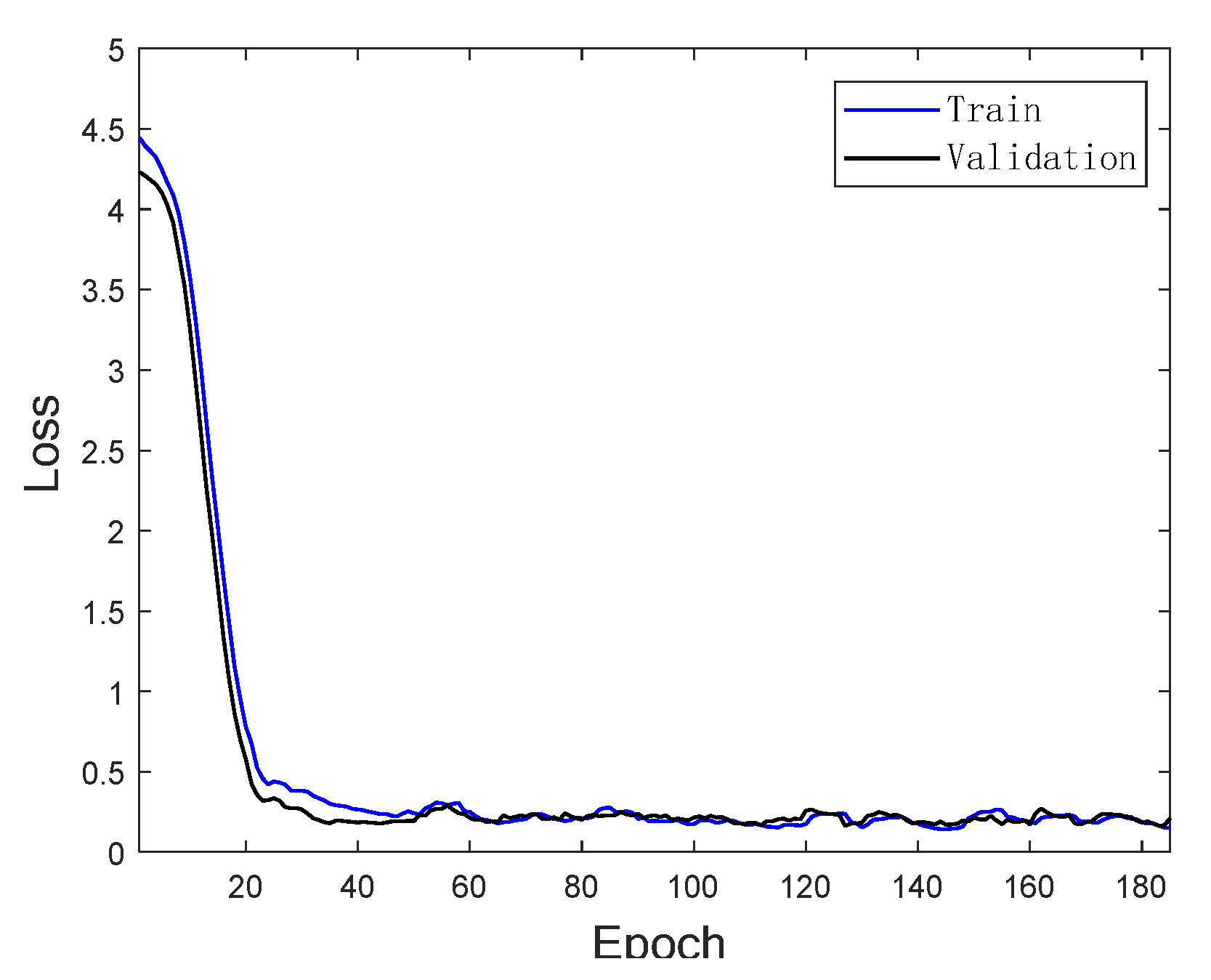

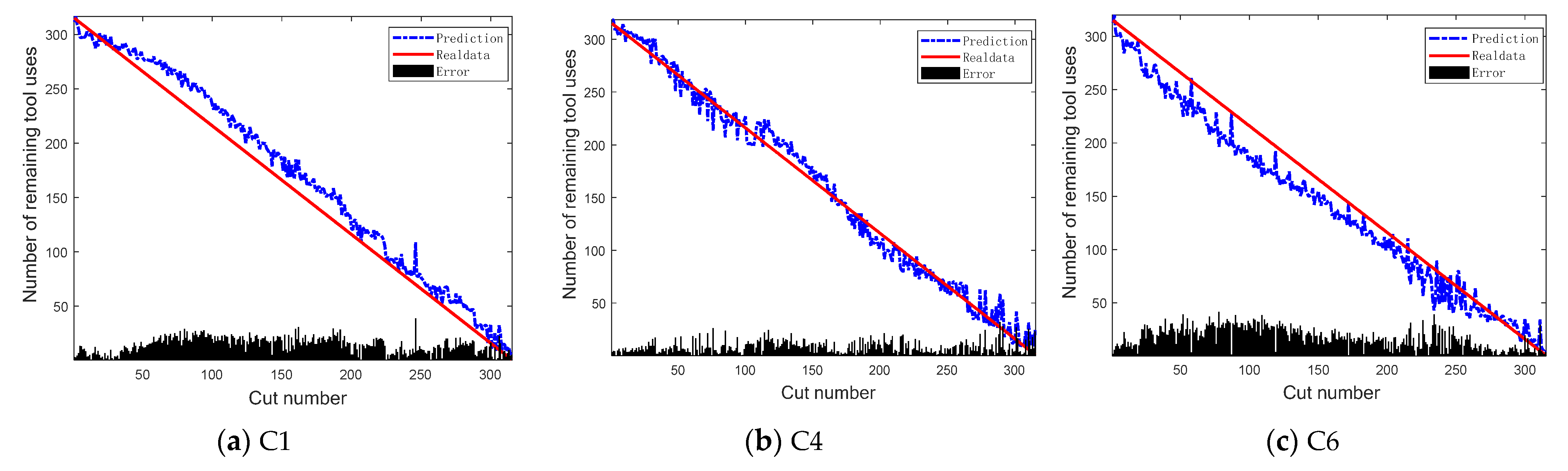

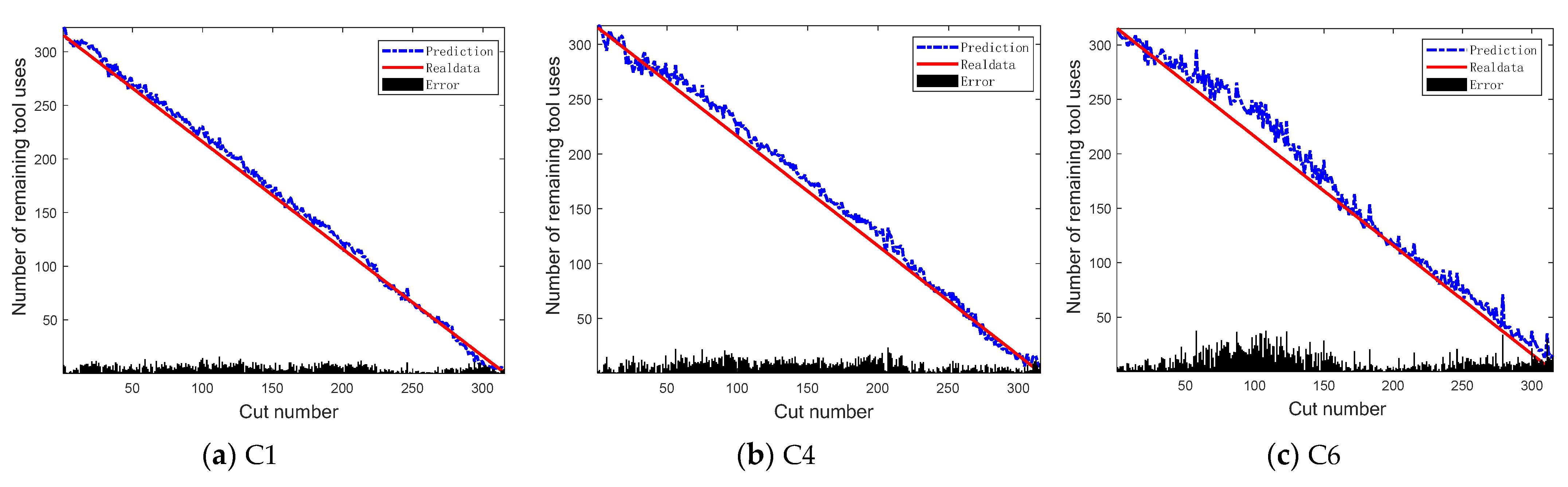

4.4. Result Analysis

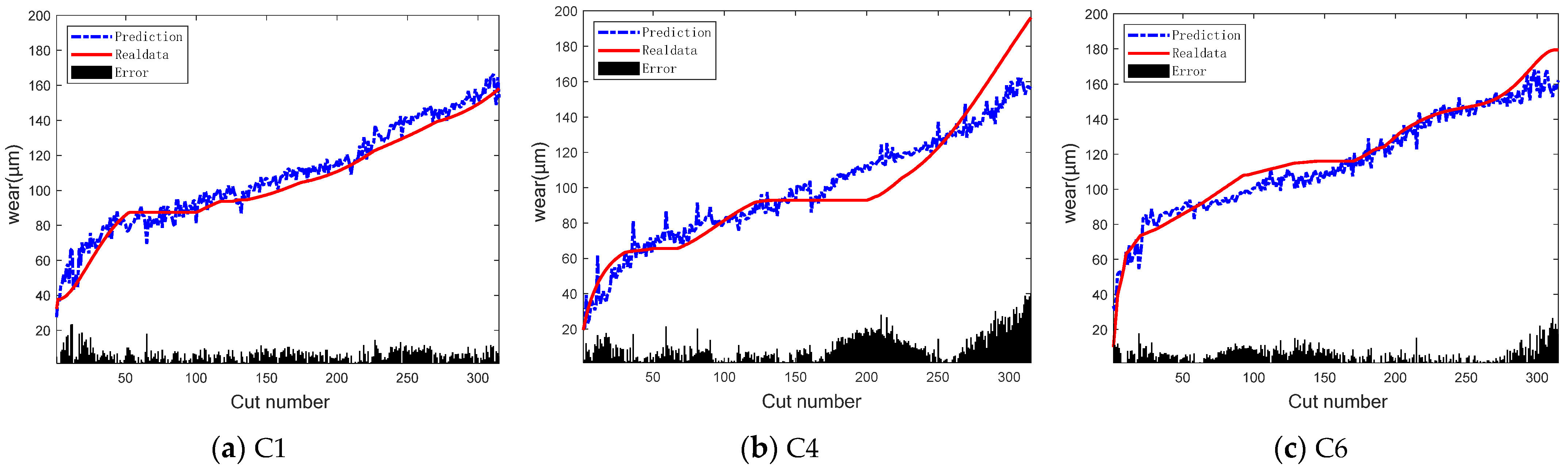

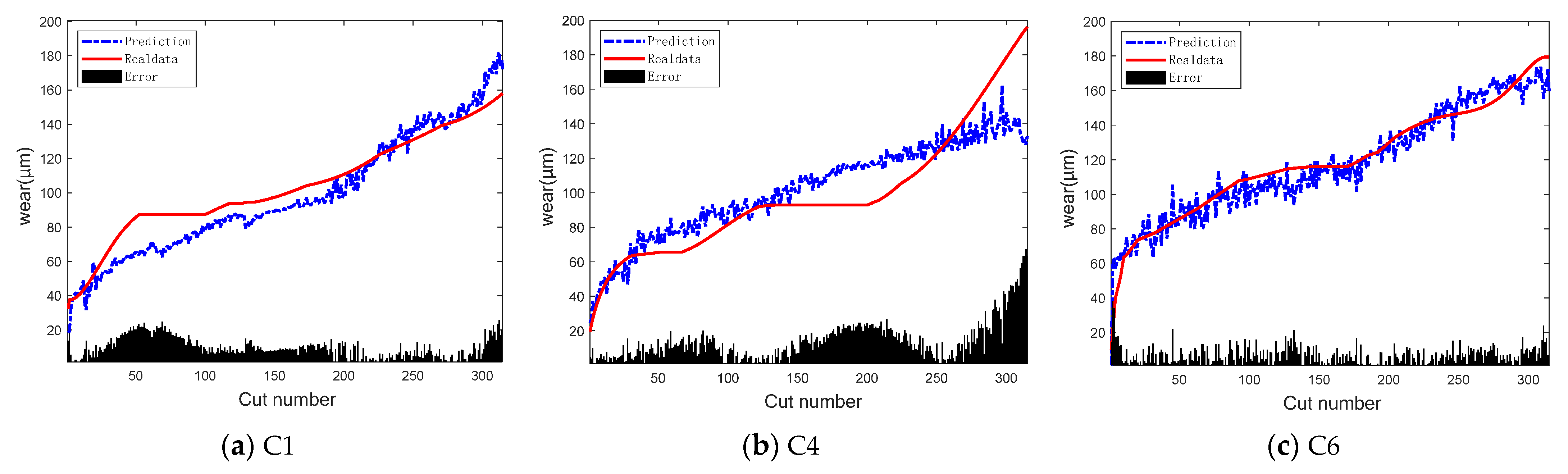

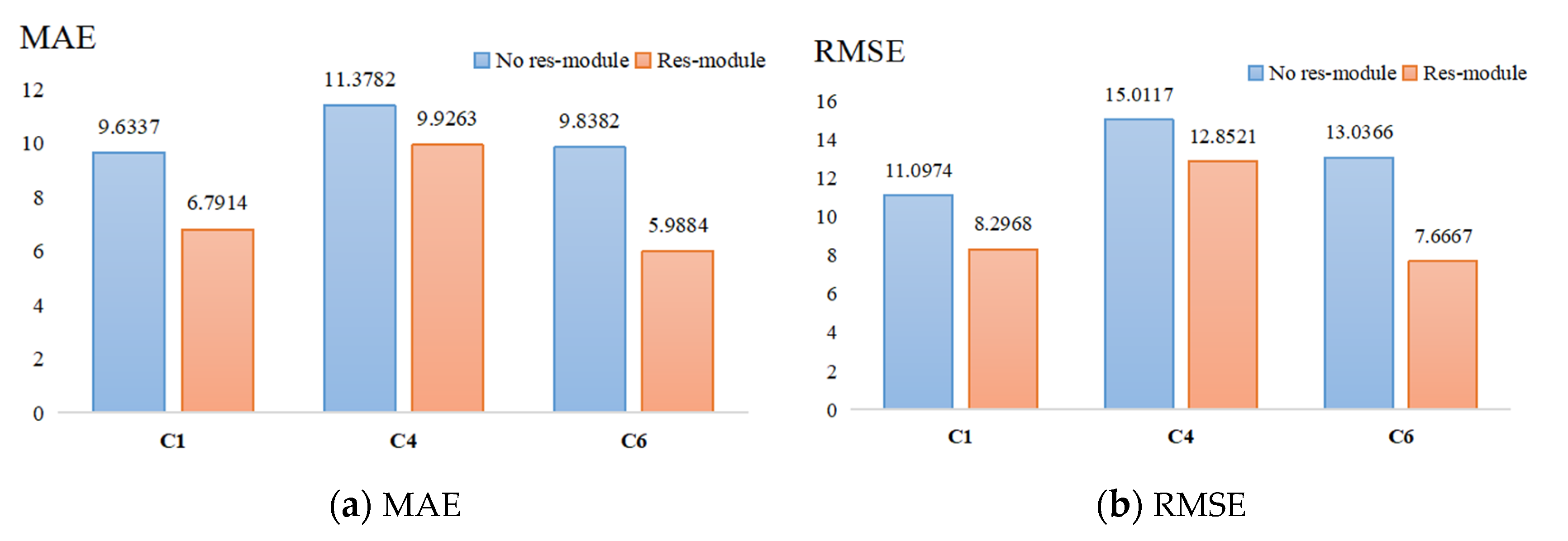

4.4.1. Module Verification

4.4.2. Comparison with Other Models

4.4.3. Exploring the Expandability of Models

5. Conclusions

- (1) After filtering and CEEMDAN decomposition, the nonlinear and non-stationary sensor signals recorded during machining can be handled more effectively, and their local characteristics are enhanced.

- (2) The developed model predicts tool wear more accurately because it mines the spatiotemporal features of the cutting signals more effectively.

- (3) The prediction accuracy of the proposed method exceeds that of the SVR, GRU, B-LSTM, B-BiLSTM, 1DCNN-BiLSTM, and 2DCNN models.

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

- Yang, Y.; Guo, Y.; Huang, Z.; Chen, N.; Li, L.; Jiang, Y.; He, N. Research on the milling tool wear and life prediction by establishing an integrated predictive model. Measurement 2019, 145, 178–189.

- Vetrichelvan, G.; Sundaram, S.; Kumaran, S.S.; Velmurugan, P. An investigation of tool wear using acoustic emission and genetic algorithm. J. Vib. Control. 2014, 21, 3061–3066.

- Zhou, J.; Li, P.; Zhou, Y.; Wang, B.; Zang, J.; Meng, L. Toward New-Generation Intelligent Manufacturing. Engineering 2018, 4, 11–20.

- Liao, L.; Kottig, F. Review of Hybrid Prognostics Approaches for Remaining Useful Life Prediction of Engineered Systems, and an Application to Battery Life Prediction. IEEE Trans. Reliab. 2014, 63, 191–207.

- van Noortwijk, J. A survey of the application of gamma processes in maintenance. Reliab. Eng. Syst. Saf. 2009, 94, 2–21.

- Zhang, Z.; Si, X.; Hu, C.; Lei, Y. Degradation Data Analysis and Remaining Useful Life Estimation: A Review on Wiener-Process-Based Methods. Eur. J. Oper. Res. 2018, 271, 775–796.

- Toubhans, B.; Fromentin, G.; Viprey, F.; Karaouni, H.; Dorlin, T. Machinability of inconel 718 during turning: Cutting force model considering tool wear, influence on surface integrity. J. Mater. Process. Technol. 2020, 285, 116809.

- Huang, Z.; Zhu, J.; Lei, J.; Li, X.; Tian, F. Tool wear predicting based on multi-domain feature fusion by deep convolutional neural network in milling operations. J. Intell. Manuf. 2020, 31, 953–966.

- Feng, K.; Borghesani, P.; Smith, W.A.; Randall, R.B.; Chin, Z.Y.; Ren, J.; Peng, Z. Vibration-based updating of wear prediction for spur gears. Wear 2019, 426–427, 1410–1415.

- Shanbhag, V.V.; Rolfe, B.F.; Arunachalam, N.; Pereira, M.P. Investigating galling wear behaviour in sheet metal stamping using acoustic emissions. Wear 2018, 414–415, 31–42.

- Habrat, W.; Krupa, K.; Markopoulos, A.P.; Karkalos, N.E. Thermo-mechanical aspects of cutting forces and tool wear in the laser-assisted turning of Ti-6Al-4V titanium alloy using AlTiN coated cutting tools. Int. J. Adv. Manuf. Technol. 2020, 115, 759–775.

- Capasso, S.; Paiva, J.; Junior, E.L.; Settineri, L.; Yamamoto, K.; Amorim, F.; Torres, R.; Covelli, D.; Fox-Rabinovich, G.; Veldhuis, S. A novel method of assessing and predicting coated cutting tool wear during Inconel DA 718 turning. Wear 2019, 432–433, 202949.

- Wang, C.; Bao, Z.; Zhang, P.; Ming, W.; Chen, M. Tool wear evaluation under minimum quantity lubrication by clustering energy of acoustic emission burst signals. Measurement 2019, 138, 256–265.

- Shanbhag, V.V.; Rolfe, B.F.; Griffin, J.M.; Arunachalam, N.; Pereira, M.P. Understanding Galling Wear Initiation and Progression Using Force and Acoustic Emissions Sensors. Wear 2019, 436–437, 202991.

- Özbek, O.; Saruhan, H. The effect of vibration and cutting zone temperature on surface roughness and tool wear in eco-friendly MQL turning of AISI D2. J. Mater. Res. Technol. 2020, 9, 2762–2772.

- Shao, Y.; Nezu, K. Prognosis of remaining bearing life using neural networks. Proc. Inst. Mech. Eng. Part I: J. Syst. Control. Eng. 2000, 214, 217–230.

- Santhosh, T.; Gopika, V.; Ghosh, A.; Fernandes, B. An approach for reliability prediction of instrumentation & control cables by artificial neural networks and Weibull theory for probabilistic safety assessment of NPPs. Reliab. Eng. Syst. Saf. 2018, 170, 31–44.

- Liu, H.; Fan, M.; Zeng, Q.; Shen, X. RBF Network Based on Artificial Immune Algorithm and Application of Predicting the Residual Life of Injecting Water Pipeline. In Proceedings of the 2010 Sixth International Conference on Natural Computation, Yantai, China, 10–12 August 2010.

- Chen, X.; Xiao, H.; Guo, Y.; Kang, Q. A multivariate grey RBF hybrid model for residual useful life prediction of industrial equipment based on state data. Int. J. Wirel. Mob. Comput. 2016, 10, 90.

- Liu, F.; Liu, Y.; Chen, F.; He, B. Residual life prediction for ball bearings based on joint approximate diagonalization of eigen matrices and extreme learning machine. Proc. Inst. Mech. Eng. Part C J. Mech. Eng. Sci. 2017, 231, 1699–1711.

- Wang, X.; Han, M. Online sequential extreme learning machine with kernels for nonstationary time series prediction. Neurocomputing 2014, 145, 90–97.

- Nieto, P.G.; García-Gonzalo, E.; Lasheras, F.S.; Juez, F.d.C. Hybrid PSO–SVM-based method for forecasting of the remaining useful life for aircraft engines and evaluation of its reliability. Reliab. Eng. Syst. Saf. 2015, 138, 219–231.

- Song, Y.; Liu, D.; Hou, Y.; Yu, J.; Peng, Y. Satellite lithium-ion battery remaining useful life estimation with an iterative updated RVM fused with the KF algorithm. Chin. J. Aeronaut. 2018, 31, 31–40.

- Li, X.; Ding, Q.; Sun, J.-Q. Remaining useful life estimation in prognostics using deep convolution neural networks. Reliab. Eng. Syst. Saf. 2018, 172, 1–11.

- Ren, L.; Sun, Y.; Wang, H.; Zhang, L. Prediction of Bearing Remaining Useful Life With Deep Convolution Neural Network. IEEE Access 2018, 6, 13041–13049.

- Heimes, F.O. Recurrent Neural Networks for Remaining Useful Life Estimation. In Proceedings of the 2008 International Conference on Prognostics and Health Management, Denver, CO, USA, 6–9 October 2008.

- Malhi, A.; Yan, R.; Gao, R.X. Prognosis of Defect Propagation Based on Recurrent Neural Networks. IEEE Trans. Instrum. Meas. 2011, 60, 703–711.

- Yang, X.; Zhang, C.; Zhao, S.; Zhou, T.; Zhang, D.; Shi, Z.; Liu, S.; Jiang, R.; Yin, M.; Wang, G.; et al. CLAP: Gas Saturation Prediction in Shale Gas Reservoir Using a Cascaded Convolutional Neural Network–Long Short-Term Memory Model with Attention Mechanism. Processes 2023, 11, 2645.

- Liu, X.; Jia, W.; Li, Z.; Wang, C.; Guan, F.; Chen, K.; Jia, L. Prediction of Lost Circulation in Southwest Chinese Oil Fields Applying Improved WOA-BiLSTM. Processes 2023, 11, 2763.

- de Abreu, R.S.; Silva, I.; Nunes, Y.T.; Moioli, R.C.; Guedes, L.A. Advancing Fault Prediction: A Comparative Study between LSTM and Spiking Neural Networks. Processes 2023, 11, 2772.

- Alhamayani, A. CNN-LSTM to Predict and Investigate the Performance of a Thermal/Photovoltaic System Cooled by Nanofluid (Al2O3) in a Hot-Climate Location. Processes 2023, 11, 2731.

- Liu, M.; Yao, X.; Zhang, J.; Chen, W.; Jing, X.; Wang, K. Multi-Sensor Data Fusion for Remaining Useful Life Prediction of Machining Tools by IABC-BPNN in Dry Milling Operations. Sensors 2020, 20, 4657.

- Li, N.; Chen, Y.; Kong, D.; Tan, S. Force-Based Tool Condition Monitoring for Turning Process Using v-Support Vector Regression. Int. J. Adv. Manuf. Technol. 2017, 91, 351–361.

- Ren, L.; Cheng, X.; Wang, X.; Cui, J.; Zhang, L. Multi-scale Dense Gate Recurrent Unit Networks for bearing remaining useful life prediction. Future Gener. Comput. Syst. 2018, 94, 601–609.

- Li, G.; Du, X.; Zhao, L.; Yu, J. Design of milling-tool wear monitoring system based on eemd-svm. Autom. Instrum. 2019, 6, 30–32.

- Wang, J.; Li, Y.; Zhao, R.; Gao, R.X. Physics guided neural network for machining tool wear prediction. J. Manuf. Syst. 2020, 57, 298–310.

- Xu, X.; Wang, J.; Zhong, B.; Ming, W.; Chen, M. Deep learning-based tool wear prediction and its application for machining process using multi-scale feature fusion and channel attention mechanism. Measurement 2021, 177, 109254.

- Liang, Y.; Hu, S.; Guo, W.; Tang, H. Abrasive tool wear prediction based on an improved hybrid difference grey wolf algorithm for optimizing SVM. Measurement 2021, 187, 110247.

- He, Z.; Shi, T.; Xuan, J.; Li, T. Research on tool wear prediction based on temperature signals and deep learning. Wear 2021, 478–479, 203902.

- Duan, J.; Hu, C.; Zhan, X.; Zhou, H.; Liao, G.; Shi, T. MS-SSPCANet: A powerful deep learning framework for tool wear prediction. Robot. Comput. Manuf. 2022, 78, 102391.

- Li, Y.; Wang, J.; Huang, Z.; Gao, R.X. Physics-informed meta learning for machining tool wear prediction. J. Manuf. Syst. 2021, 62, 17–27.

- Li, N.; Ma, L.; Yu, G.; Xue, B.; Zhang, M.; Jin, Y. Survey on Evolutionary Deep Learning: Principles, Algorithms, Applications and Open Issues. ACM Comput. Surv. 2023, 56, 1–34.

- Ma, L.; Li, N.; Guo, Y.; Wang, X.; Yang, S.; Huang, M.; Zhang, H. Learning to Optimize: Reference Vector Reinforcement Learning Adaption to Constrained Many-Objective Optimization of Industrial Copper Burdening System. IEEE Trans. Cybern. 2021, 52, 12698–12711.

- Yu, J.; Zhang, X.; Xu, L.; Dong, J.; Zhangzhong, L. A hybrid CNN-GRU model for predicting soil moisture in maize root zone. Agric. Water Manag. 2020, 245, 106649.

- Li, Y.; Chen, X.; Yu, J. A Hybrid Energy Feature Extraction Approach for Ship-Radiated Noise Based on CEEMDAN Combined with Energy Difference and Energy Entropy. Processes 2019, 7, 69.

- Gao, B.; Huang, X.; Shi, J.; Tai, Y.; Zhang, J. Hourly forecasting of solar irradiance based on CEEMDAN and multi-strategy CNN-LSTM neural networks. Renew. Energy 2020, 162, 1665–1683.

- Luo, X.; Huang, Y.; Zhang, F.; Wu, Q. Study of the Load Forecasting of a Wet Mill Based on the CEEMDAN-Refined Composite Multiscale Dispersion Entropy and LSTM Nerve Net. Int. J. Autom. Technol. 2022, 16, 340–348.

- Dong, L.; Zhang, H.; Yang, K.; Zhou, D.; Shi, J.; Ma, J. Crowd Counting by Using Top-k Relations: A Mixed Ground-Truth CNN Framework. IEEE Trans. Consum. Electron. 2022, 68, 307–316.

| Ref. | Method | Advantage | Deficiency |
|---|---|---|---|
| Li [33] | SVR | High prediction accuracy obtained by analyzing the characteristics of specific tools | Analysis is time-consuming, and the model lacks universality |
| Ren [34] | GRU | Multi-sensor feature fusion | The network is simple and performs poorly on big data |
| Li [35] | SVR | EMD decomposition of signals to amplify signal features | The network is simple and performs poorly on big data |
| Wang [36] | Physical model | Integrates data-driven models to improve universality | The network is simple and performs poorly on big data |
| Huang [8] | DCNN | Multi-sensor feature fusion | Features are extracted manually, ignoring hidden features of the data itself |
| Xu [37] | CNN | Builds a more powerful neural network | Noise in the raw signal reduces prediction speed and accuracy |
| Liang [38] | SVM | Integrates data-driven models to mine more features | The model lacks universality |
| He [39] | BPNN | Designs a new SSAE model to learn more valuable and deeper features from the raw signal | Uses only one monitoring signal and does not consider prediction stability |
| Duan [40] | SVR | Integrates MS-SSPCANet for autonomous feature extraction | Relies on principal component analysis, which struggles to mine hidden features in the data |
| Li [41] | Physical model | Integrates empirical-equation parameters to improve modeling interpretability | Analysis is time-consuming, and the resulting model is not universally applicable |

| Signal | Select or Not | Pearson | Spearman |
|---|---|---|---|
| Fx | yes | 0.9716 | 0.9937 |
| Fy | yes | 0.9293 | 0.9541 |
| Fz | yes | 0.9750 | 0.9182 |
| Vx | no | 0.0697 | 0.0583 |
| Vy | no | 0.0604 | 0.0845 |
| Vz | no | 0.0603 | 0.1054 |
| AE | yes | 0.5707 | 0.4892 |
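The signal screening in the table above can be sketched as follows. This rests on assumptions: the coefficients are taken to be computed between each sensor signal (or a feature derived from it) and the measured wear, and because no selection threshold is given, the 0.3 cut-off in the hypothetical `screen_signals` helper is an illustrative choice; it merely separates the selected signals (coefficients of at least 0.48) from the rejected vibration channels (at most 0.11). Spearman's coefficient is computed as Pearson's coefficient on average ranks, and the data in the usage lines are synthetic.

```python
import numpy as np


def average_ranks(a):
    """1-based ranks; tied values share the mean of their positions (as Spearman requires)."""
    a = np.asarray(a, dtype=float)
    order = np.argsort(a, kind="mergesort")
    sorted_a = a[order]
    ranks = np.empty(len(a))
    i = 0
    while i < len(a):
        j = i
        while j + 1 < len(a) and sorted_a[j + 1] == sorted_a[i]:
            j += 1
        ranks[order[i:j + 1]] = (i + j) / 2.0 + 1.0
        i = j + 1
    return ranks


def pearson(x, y):
    return float(np.corrcoef(x, y)[0, 1])


def spearman(x, y):
    # Spearman's rho is Pearson's r computed on the ranks.
    return pearson(average_ranks(x), average_ranks(y))


def screen_signals(signals, wear, threshold=0.3):
    """Return {name: (pearson, spearman, selected)}; the threshold is hypothetical."""
    result = {}
    for name, values in signals.items():
        p, s = pearson(values, wear), spearman(values, wear)
        result[name] = (p, s, max(abs(p), abs(s)) >= threshold)
    return result


# Synthetic example, not the paper's data.
wear = np.linspace(0.0, 150.0, 50)
signals = {"force_like": 0.5 * wear ** 1.2, "vibration_like": np.tile([1.0, -1.0], 25)}
for name, (p, s, keep) in screen_signals(signals, wear).items():
    print(f"{name}: Pearson={p:.4f}, Spearman={s:.4f}, selected={keep}")
```

A monotone signal gets a Spearman coefficient of exactly 1 even when the relation is nonlinear, which is why reporting both coefficients is informative.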

| Variable | Description | Value |
|---|---|---|
| Epoch | Number of training epochs | 500 |
| Batch size | Number of samples per batch | 24 |
| Learning rate | Initial learning rate | 0.001 |
| Step size | Interval between learning-rate decays | 1000 |
| Gamma | Multiplicative factor applied to the learning rate at each decay | 1 |
| Dropout rate | Dropout rate | 0.3 |
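Step size and Gamma look like the parameters of a step-decay schedule such as PyTorch's `torch.optim.lr_scheduler.StepLR`, where the rate is lr0 · gamma^⌊epoch / step_size⌋. The schedule actually used is not named, so the rule below (and one scheduler step per epoch) is an assumption; `step_lr` is a hypothetical helper. The worked check shows that, under this rule, the tabulated values keep the learning rate at 0.001 for all 500 epochs; with Gamma = 1 this holds regardless of how often the scheduler steps.

```python
def step_lr(base_lr, epoch, step_size, gamma):
    """Learning rate at `epoch` under an assumed step-decay rule:
    lr = base_lr * gamma ** (epoch // step_size)."""
    return base_lr * gamma ** (epoch // step_size)


# Values from the hyperparameter table above.
rates = [step_lr(0.001, epoch, step_size=1000, gamma=1.0) for epoch in range(500)]
print(min(rates), max(rates))  # prints 0.001 0.001
```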

| Method | C1 RMSE | C1 MAE | C1 R² | C4 RMSE | C4 MAE | C4 R² | C6 RMSE | C6 MAE | C6 R² |
|---|---|---|---|---|---|---|---|---|---|
| Developed model | 11.2946 | 9.5785 | 0.95364 | 18.3126 | 14.0382 | 0.77384 | 8.6167 | 6.7801 | 0.92771 |
| Developed model * | 8.2968 | 6.7914 | 0.96468 | 12.8521 | 9.9263 | 0.88154 | 7.6667 | 5.9884 | 0.95794 |
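The tables report RMSE, MAE and R². Assuming the standard definitions (the paper's own formulas are not reproduced in this section), they can be computed as below; the toy wear values are made up for illustration and are not measurements from the paper.

```python
import math


def rmse(y_true, y_pred):
    """Root-mean-square error."""
    return math.sqrt(sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true))


def mae(y_true, y_pred):
    """Mean absolute error."""
    return sum(abs(t - p) for t, p in zip(y_true, y_pred)) / len(y_true)


def r2(y_true, y_pred):
    """Coefficient of determination: 1 - SS_res / SS_tot."""
    mean = sum(y_true) / len(y_true)
    ss_res = sum((t - p) ** 2 for t, p in zip(y_true, y_pred))
    ss_tot = sum((t - mean) ** 2 for t in y_true)
    return 1.0 - ss_res / ss_tot


# Hypothetical measured vs predicted wear values.
y_true = [50.0, 80.0, 110.0, 140.0]
y_pred = [55.0, 78.0, 104.0, 143.0]
print(round(rmse(y_true, y_pred), 4), mae(y_true, y_pred), round(r2(y_true, y_pred), 4))
# → 4.3012 4.0 0.9836
```

RMSE weights large errors more heavily than MAE, so a gap between the two (as on C4) hints at a few large deviations, while R² measures error relative to the spread of the wear values.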

| Method | RMSE | MAE |
|---|---|---|
| SVR | 31.5 | 24.9 |
| GRU | 36.3615 | 31.422 |
| B-LSTM | 33.859 | 28.9897 |
| B-BiLSTM | 26.4284 | 21.1652 |
| 1DCNN-BiLSTM | 12.396 | 10.9944 |
| 2DCNN | 15.3604 | 11.4455 |
| Developed model | 7.6667 | 5.9884 |
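The size of the improvement in the comparison table can be quantified as a relative error reduction, (baseline − developed) / baseline. The developed-model row matches the C6 values of the "Developed model *" row in the earlier results table, so the comparison appears to be on C6. The snippet only re-uses the tabulated numbers; `relative_reduction` is a hypothetical helper.

```python
# RMSE and MAE from the comparison table (units as reported).
results = {
    "SVR": (31.5, 24.9),
    "GRU": (36.3615, 31.422),
    "B-LSTM": (33.859, 28.9897),
    "B-BiLSTM": (26.4284, 21.1652),
    "1DCNN-BiLSTM": (12.396, 10.9944),
    "2DCNN": (15.3604, 11.4455),
}
developed = (7.6667, 5.9884)


def relative_reduction(baseline, ours):
    """Fractional error reduction of `ours` relative to `baseline`."""
    return (baseline - ours) / baseline


reductions = {
    name: tuple(relative_reduction(b, d) for b, d in zip(errs, developed))
    for name, errs in results.items()
}
for name, (d_rmse, d_mae) in reductions.items():
    print(f"{name}: RMSE -{d_rmse:.1%}, MAE -{d_mae:.1%}")
```

Against the strongest baseline, 1DCNN-BiLSTM, this gives reductions of about 38.2% in RMSE and 45.5% in MAE.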

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

He, Z.; Liu, Y.; Pang, X.; Zhang, Q. Wear Prediction of Tool Based on Modal Decomposition and MCNN-BiLSTM. Processes 2023, 11, 2988. https://doi.org/10.3390/pr11102988

Chicago/Turabian Style: He, Zengpeng, Yefeng Liu, Xinfu Pang, and Qichun Zhang. 2023. "Wear Prediction of Tool Based on Modal Decomposition and MCNN-BiLSTM" Processes 11, no. 10: 2988. https://doi.org/10.3390/pr11102988