Abstract

Developing a forecasting model for oilfield well production plays a significant role in managing mature oilfields, as it can help to identify production loss earlier. Mature fields commonly need more frequent production measurements to detect declining production. This study proposes a machine learning system based on a hybrid empirical mode decomposition backpropagation higher-order neural network (EMD-BP-HONN) for oilfields with less frequent measurements. Individual wells exhibit both stationary and non-stationary production data, which creates a unique challenge. Historical well production measurements are used as time-series features and decomposed using empirical mode decomposition, which yields simpler patterns for the model to learn. Various algorithms were deployed as benchmarks against the proposed method for forecasting well production. With proper feature engineering, the proposed method shows potential to improve on the forecasts obtained by traditional methods.

1. Introduction

One important activity in the oil industry is measuring well production. Measurements of oil production show how a well performs compared to the simulation result, and they play a significant role in the declining production phase. By detecting declining production earlier, petroleum engineers are able to respond with appropriate action [1,2]. However, the ideal situation of continuous, periodic measurement is not viable due to economic and technical challenges [3,4]. Non-continuous, occasional well production measurement is very common in oilfield operation [5,6].

Commonly, the well production rate is measured using a test separator for some minutes to hours, and certain calculations are then applied to represent the whole-day production of the well. With more advanced technology, the rate is measured by multiphase flow meters (MPFMs) equipped with several sensors, such as an ultrasonic meter for gas rate measurement and a capacitance meter that can measure very high water cut, which is barely possible to acquire using the conventional method [7,8]. In many oil fields, a production test is not acquired every day for each well because each test station can serve only a limited number of wells. Therefore, tasks such as well performance monitoring rely on lagged tests, which leads to late action when something occurs in the well. Hence, petroleum engineers have difficulty detecting declining well production early. For a longer production span, some traditional approaches are commonly used to forecast production, including decline curve analysis (DCA), exploration interpolation and the black oil model [9]. Since those forecasting models need to be tuned with proper parameters and the right slope must be picked, their main disadvantage is the subjective judgment of the expert who conducts the analysis [3]. For shorter-term prediction, many data-driven approaches have been proposed, such as using thermogravimetric data to predict oil flow rate [4], inferring flow rate from real-time parameters using diverse neural networks [10] and many data mining methodologies. Another study involves additional hardware, such as an extended venturi, and uses a support vector machine (SVM) for the model [11]. Another experiment used a lab-scale vertical pipe to obtain differential pressure signals as the inputs to principal component analysis and neural network models [12].

In the well production forecasting area, artificial intelligence and data mining methods have been introduced over the last two decades. The research literature is broadly divided into two approaches: non-time series (cross-sectional data) and time series, either univariate or multivariate. Some efforts were intended to improve the prediction by exploiting optimization algorithms such as the imperialist competitive algorithm [13] and the aquila optimizer [14]. To capture highly non-linear correlation, the higher-order neural network (HONN) was introduced to forecast cumulative oil production [15]. A more recent experiment used univariate and multivariate time series approaches with a nonlinear autoregressive neural network with exogenous input (NARX) to forecast oil production in a naturally fractured reservoir [16]. Another approach, a multi-layer multi-valued neural network (MLMVN), was developed for predicting oil production [17]. This model is based on complex numbers for the inputs and weight parameters of the neural network nodes. The advantage of MLMVN is its derivative-free learning, which requires fewer resources and runs faster [18]. Another approach uses an ensemble neural network with adaptive simulated annealing to optimize the combining strategy [10]. Dongyan et al. [19] proposed an ensemble of univariate algorithms, namely autoregressive integrated moving average (ARIMA) and long short-term memory (LSTM). The most recent approach uses deep long short-term memory (DLSTM) as an extension of the traditional recurrent neural network [20]; however, that research focuses only on non-stationary time series well production data. An interesting approach decomposed the production data before feeding it into the model [21].

Even though univariate forecasting is very popular in other topics, such as crude oil price forecasting [22] and electrical load forecasting [23], only a few studies have focused on univariate time series prediction of oil flow rate. In a previous study, the multivariate model showed better results than the univariate one in certain cases [16]; however, the multivariate approach has its own limitations, such as requiring more variables to be collected. The literature consistently indicates that oil production is non-linear and needs a special approach to capture such complex behavior [24]. One source of this complexity is the noise in oil flow rate measurements. According to previous literature, noise reduction is a contributing factor to achieving an excellent univariate forecasting method [15]. Another gap in previous univariate time series forecasting for oil production is its focus on non-stationary data [20], which may not cover all the characteristics of well production.

In this study, we propose a novel hybrid model for time series well production forecasting that combines a backpropagation higher-order neural network (BP-HONN), with first-, second- and third-order synaptic operations, and a decomposition method. The decomposition method simplifies the trend of the input data (signal) so that the neural network can learn it more accurately. According to a recent study, decomposition increases the linearity of the time series data, which can improve accuracy [9]. For the decomposition, empirical mode decomposition (EMD) is proposed, as it has been proven on non-linear datasets in other research areas [24,25]. To evaluate the robustness of the model, both stationary and non-stationary time series data are used. The field datasets were taken from previous literature [15] and from production data of the Sumatra Basin field, Indonesia, for a total of 30 wells. In addition, another novel hybrid model is introduced as a benchmark: the same datasets are evaluated with EMD-BP-MLMVN to compare its performance with that of the proposed model.

The contributions of this research are:

- The introduction of a novel hybrid method incorporating EMD and BP-HONN as the main proposed framework for forecasting short term oil production.

- The introduction of a secondary novel hybrid framework utilizing EMD and BP-MLMVN for the same objective.

- The provision of a 25-well dataset from an actual oilfield, consisting of stationary and non-stationary series, which is a realistic representation of business challenges. This dataset will be available for future work by other researchers.

- Experimental evidence that the proposed EMD-BP-HONN method performs significantly better than the other benchmark models.

The remainder of this paper explains the oilfield/reservoir description where the dataset is retrieved, the algorithms that are used, the selected performance evaluation and eventually, the framework proposed. The final result will be discussed in the result section alongside the statistical test to evaluate the significant difference among models.

2. Materials and Methods

2.1. The Reservoir under Study

The experiment data are drawn from two sources. The first is previous literature [15], which provides real production data from five wells in the Cambay Basin (CB), India. The top depth of the reservoir is between 1380 and 1413 m, with an initial reservoir pressure of around 144 kg/cm². The oil in place was estimated at around 2.47 MMt. This oilfield started production in February 2000 with CB1 (the first well). By September 2009, the cumulative oil produced from the CB1 well was 0.156 MMt. Other wells were drilled in subsequent years. Each well has 63 data points, taken monthly from 2004 to 2009.

The descriptive statistics of those datasets are listed in Table 1. In addition to the common statistical measures, the augmented Dickey–Fuller (ADF) test was used to test the stationarity of the data, as per [26,27]. The null hypothesis of the ADF test is that the series has a unit root, i.e., that it is non-stationary; it is rejected only when the ADF statistic is below (more negative than) the critical values. The ADF statistics of all CB wells are above the critical values; hence, the production data of all CB wells are categorized as non-stationary.

Table 1.

Descriptive statistics of the Cambay Basin dataset.

The second source of experiment data is an actual oilfield in the Central Sumatra Basin (CS), Indonesia. The field was discovered in 1952 and has been producing since 1971. The field area is around 19,905 acres, and it is currently produced by 220 oil-producing wells in either single or commingled production. The reservoir consists of multiple productive sands at depths between 4200 and 4400 ft, with thicknesses of around 0.13–158 ft. Regarding rock properties, the sand has a permeability between 10 and 1200 mD and a porosity of around 10–34%. The oil is considered a light oil type with a gravity value of 0.8°. In this experiment, 25 wells with stationary and non-stationary characteristics were selected to evaluate the robustness of the model prediction. Descriptive statistics of those datasets are listed in Table 2. The well datasets with higher ADF statistics belong to the non-stationary category. Another statistical characteristic being measured is the Hurst exponent, which indicates the volatility, roughness and smoothness of time-series data [16]. The complete dataset is provided in the Supplementary Material.

Table 2.

Descriptive statistics of the Central Sumatra Basin dataset.

2.2. Higher-Order Neural Networks (HONN)

Most artificial neural networks (ANN) use linear neurons, where the linear connection exists between the input vector and synaptic-weight vector Naturally, the correlation between input and neuron weight is considered non-linear. To overcome this, one neural network variance was introduced [18]. The major difference between HONN and conventional ANN is how the weighted sum of the input vector is calculated. The operation of synaptic weight and input is defined as:

where is the neuron input of ith element of X, is the weight of neuron input of ith, is the bias neuron input, is the weight of bias and is the output of the synaptic operation. Additionally, the somatic operation to calculate the outputs is described as

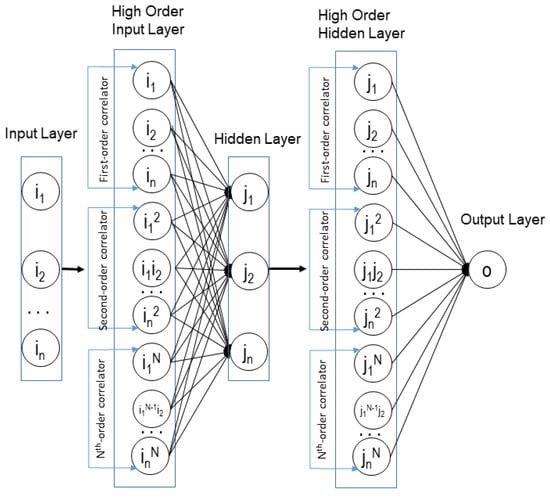

where y is the output of the neural network and is the activation function. Architecture-wise, as illustrated in Figure 1, HONN have interconnected layers, and the input vector will be calculated in each correlator (order) and then applied to the weighted sum of those.

Figure 1.

Higher-order neural network architecture.
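As an illustration of the synaptic and somatic operations described above, the following minimal sketch evaluates a single higher-order neuron for the linear and quadratic cases. The function names, the dictionary layout of the weights, the bias input of 1 and the numeric values are assumptions made for this example only; they are not taken from the implementation used in this study.

```python
import math
from itertools import combinations_with_replacement


def synaptic_operation(x, weights, order, x0=1.0):
    """Return v: the bias term plus all input products up to `order`.

    weights[()] is the bias weight w_0, weights[(i,)] is w_i and
    weights[(i, j)] is w_ij with i <= j (1-based indices), and so on.
    Missing keys are treated as zero weights.
    """
    v = weights.get((), 0.0) * x0
    for k in range(1, order + 1):
        for idx in combinations_with_replacement(range(1, len(x) + 1), k):
            v += weights.get(idx, 0.0) * math.prod(x[i - 1] for i in idx)
    return v


def honn_neuron(x, weights, order):
    """Somatic operation y = phi(v), with tanh assumed as phi."""
    return math.tanh(synaptic_operation(x, weights, order))


# Hypothetical inputs and weights for a two-input neuron.
x = [0.5, -0.2]
w = {(): 0.1, (1,): 0.4, (2,): -0.3, (1, 1): 0.2, (1, 2): 0.5, (2, 2): -0.1}
v_linear = synaptic_operation(x, w, order=1)     # first-order terms only
v_quadratic = synaptic_operation(x, w, order=2)  # adds x_i * x_j products
y_quadratic = honn_neuron(x, w, order=2)
```

With order 1 the neuron reduces to a conventional linear neuron; raising the order only adds product terms, so the same weight dictionary can be reused across the three synaptic operations.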

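Suppose we have a neural network structure as shown in Figure 1.

First, we carry out a feed-forward operation with the initial weights and the input, and then calculate the squared error between the desired output and the neural output (y).

We then backpropagate the error from the output layer to the hidden layer. By the chain rule, the derivative of the error with respect to an output-layer weight is the product of three terms.

The first term is the derivative of the error with respect to the output of the output-layer node, which is obtained from the error formula.

The second term is the derivative of the output of the output-layer node with respect to its input, which is the derivative of its activation function.

The third term is the derivative of the input of the output-layer node with respect to the weight. The node input is calculated by multiplying the weights by the higher-order terms of the hidden-layer node outputs, so this derivative is the higher-order term that the weight multiplies.

Then, we continue backpropagating the error from the hidden layer to the input layer.

The derivative of the error with respect to the output of a hidden-layer node is the product of the first two terms above, which were already calculated in the previous step, and the derivative of the output-layer node input with respect to the hidden-layer node output. To calculate the latter, recall the higher-order synaptic operation that was used in the feed-forward pass.

The derivative of the output of a hidden-layer node with respect to its input is the derivative of its activation function.

The hidden-layer node input is calculated by multiplying the weights by the higher-order terms of the input-layer nodes, so its derivative with respect to a weight is the higher-order input term that the weight multiplies.

The error (squared error) is minimized by updating the weight matrix as

$$W_{new} = W_{old} + \Delta W$$

where the change in the weight matrix is denoted by $\Delta W$, which is proportional to the gradient of the error function $E$ as

$$\Delta W = -\eta \frac{\partial E}{\partial W}$$

where η > 0 is the learning rate, which affects the performance of the algorithm during the updating process.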
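The backpropagation steps above can be written out compactly with the chain rule. The following is a sketch under assumed notation, not the notation of the original derivation: $d$ is the desired output, $y$ the network output, $a_o$ the input of the output node, $h_j$ and $a_j$ the output and input of hidden node $j$, $\phi$ the activation function, and $w^{(o)}$, $w^{(h)}$ weights feeding the output and hidden nodes. The last two lines take the quadratic synaptic operation with $h_0 = 1$ as an example.

```latex
\begin{align}
E &= \tfrac{1}{2}\,(d - y)^2, \qquad y = \phi(a_o) \\
\frac{\partial E}{\partial w^{(o)}}
  &= \frac{\partial E}{\partial y}\,
     \frac{\partial y}{\partial a_o}\,
     \frac{\partial a_o}{\partial w^{(o)}},
  \qquad
  \frac{\partial E}{\partial y} = -(d - y),
  \qquad
  \frac{\partial y}{\partial a_o} = \phi'(a_o) \\
\frac{\partial E}{\partial w^{(h)}_{j}}
  &= \frac{\partial E}{\partial y}\,
     \frac{\partial y}{\partial a_o}\,
     \frac{\partial a_o}{\partial h_j}\,
     \frac{\partial h_j}{\partial a_j}\,
     \frac{\partial a_j}{\partial w^{(h)}_{j}},
  \qquad
  \frac{\partial h_j}{\partial a_j} = \phi'(a_j) \\
a_o &= \sum_{i=0}^{m}\sum_{k=i}^{m} w^{(o)}_{ik}\, h_i h_k
  \quad\Rightarrow\quad
  \frac{\partial a_o}{\partial w^{(o)}_{ik}} = h_i h_k \\
\frac{\partial a_o}{\partial h_j}
  &= \sum_{i=0}^{j} w^{(o)}_{ij}\, h_i + \sum_{k=j}^{m} w^{(o)}_{jk}\, h_k
  \qquad (j \ge 1)
\end{align}
```

For the tanh activation, $\phi'(a) = 1 - \tanh^2(a)$. Each weight then moves against its own gradient, $\Delta w = -\eta\,\partial E/\partial w$, which is the element-wise form of the weight-matrix update given above.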

2.3. Multi-Layer Neural Network with Multi-Valued Neurons (MLMVN)

MLMVN is a multi-layer neural network consisting of Multi-Valued Neurons (MVN) as basic neurons with complex-valued weights, which becomes the key difference between MLMVN and the classic neural network. The difference makes the learning process in MLMVN simpler and means that it has a better capability of generalization.

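The inputs and outputs of all neurons in the network are complex numbers located on the unit circle, and the weights are complex-valued. The input/output mapping of a continuous MVN is described by a function of n variables

$$f(x_1, \ldots, x_n) = P(w_0 x_0 + w_1 x_1 + \cdots + w_n x_n), \qquad f: O^n \to O$$

where $O$ is the set of points located on the unit circle, $x_i$ is the ith element of the input vector X, $w_i$ is the weight of the ith input, $x_0$ is the bias input, $w_0$ is the weight of the bias and $P$ is the activation function of the neuron.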

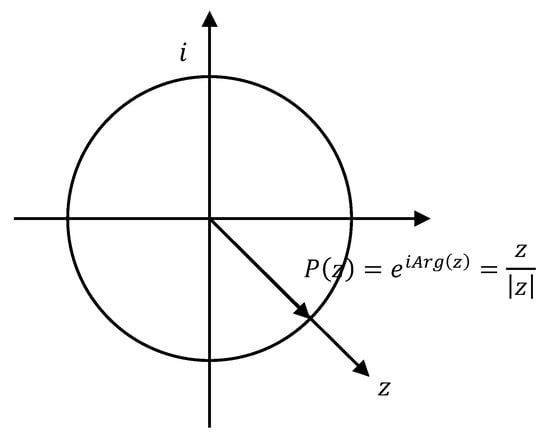

The continuous MVN activation function (as in Figure 2) is

$$P(z) = e^{i \operatorname{Arg} z} = \frac{z}{|z|}$$

where $z$ is the weighted sum and $\operatorname{Arg} z$ is the main value of the argument of the complex number z. Thus, a continuous MVN output is a projection of its complex-valued weighted sum onto the unit circle.

Figure 2.

Geometrical interpretation of the continuous MVN activation function.

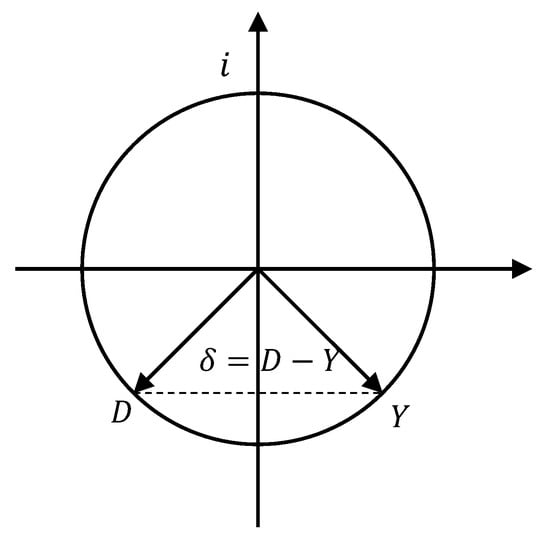

The MVN training is based on the error-correction learning rule (Figure 3) as follows:

$$W_{r+1} = W_r + \frac{C_r}{(n+1)\,|z_r|}\,(D - Y)\,\bar{X}$$

where $\bar{X}$ is the array of complex-conjugated neuron inputs, $n$ is the number of neuron inputs, $D$ is the expected target of the neuron, $Y$ is the calculated output of the neuron, $r$ is the number of the epoch step, $W_r$ is the current weighting vector, $W_{r+1}$ is the new weighting vector, $C_r$ is the learning rate and $|z_r|$ is the current absolute value of the weighted sum.

Figure 3.

MVN training based on error-correction rule.
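To make the activation function and the error-correction rule concrete, the sketch below applies one update step to a single continuous MVN. It assumes a bias input fixed at 1, and the function names, weights, inputs and target are hypothetical values chosen for illustration rather than anything from the experiments.

```python
import cmath


def mvn_activation(z):
    """Continuous MVN activation: project z onto the unit circle."""
    return z / abs(z)


def weighted_sum(weights, inputs):
    """z = w_0 + w_1 x_1 + ... + w_n x_n (bias input assumed to be 1)."""
    return weights[0] + sum(w * x for w, x in zip(weights[1:], inputs))


def mvn_update(weights, inputs, target, rate=1.0):
    """One error-correction step; returns the new weight list."""
    n = len(inputs)
    z = weighted_sum(weights, inputs)
    error = target - mvn_activation(z)            # D - Y
    step = rate / ((n + 1) * abs(z)) * error
    conj_inputs = [1.0] + [x.conjugate() for x in inputs]
    return [w + step * xc for w, xc in zip(weights, conj_inputs)]


# Hypothetical neuron with two inputs on the unit circle.
inputs = [cmath.exp(0.3j), cmath.exp(2.0j)]
weights = [0.2 + 0.1j, 0.5 - 0.4j, -0.3 + 0.7j]
target = cmath.exp(1.0j)

z_old = weighted_sum(weights, inputs)
new_weights = mvn_update(weights, inputs, target)
z_new = weighted_sum(new_weights, inputs)
```

Because every input lies on the unit circle, the n + 1 products of an input with its own conjugate each equal 1, so the update shifts the weighted sum by exactly the learning rate times (D − Y)/|z|. No derivative of the activation function is involved, which is what makes the rule derivative-free.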

The MLMVN learning algorithm is derivative-free. It is based on the same error-correction learning rule as the one of a single MVN. Let MLMVN contain layers of neurons and be the network inputs. To obtain the local errors for all neurons, the global error of

must be shared with these neurons. Therefore, the errors of the th (output) layer neurons are

where specifies the th neuron of the th layer; . The errors of the hidden layer’s neurons are

where specifies the th neuron of the th layer; , is the number of all neurons in the layer , and ( is the number of network inputs).

After the error backpropagation is completed, the weights for all connecting layers shall then be updated using the error-correction learning rule adapted to MLMVN. It means that while it is used for the hidden neurons, the factor should not be applied to the output neurons thus it becomes in this output layer.

2.4. Empirical Mode Decomposition (EMD)

Empirical mode decomposition (EMD), the core step of the Hilbert–Huang transform (HHT), is a method to decompose a signal into several simpler signals, called intrinsic mode functions (IMFs) [28]. It is an empirical approach to obtaining instantaneous frequency data from a dataset, and it is particularly suited to non-stationary and non-linear data [29,30]. Each IMF has to satisfy two conditions: the number of extrema and the number of zero-crossings must be equal or differ by at most one, and the mean of the envelopes defined by the local maxima and the local minima must be zero. Because the decomposition is data-driven, the number of IMFs cannot be pre-determined; every dataset yields its own number of IMFs and a residue (the final monotonic function). The original signal is recovered by aggregating all of the components and the residue:

$$x(t) = \sum_{i=1}^{n} c_i(t) + r(t)$$

where $x(t)$ is the time-series signal, $c_i(t)$ is the ith IMF, $n$ is the number of IMFs and $r(t)$ is the residue.
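The sifting idea behind EMD can be sketched in a few lines. The version below is deliberately simplified and is not the implementation used in this study: it uses piecewise-linear envelopes instead of the cubic splines of standard EMD, a fixed number of sifting iterations instead of a convergence criterion, and crude end-point handling. All function names and the synthetic signal are assumptions for illustration. What it does preserve is the additive property stated above: the extracted components and the residue sum back to the original signal.

```python
import math


def local_extrema(x):
    """Indices of interior local maxima and minima."""
    maxima = [i for i in range(1, len(x) - 1) if x[i - 1] < x[i] >= x[i + 1]]
    minima = [i for i in range(1, len(x) - 1) if x[i - 1] > x[i] <= x[i + 1]]
    return maxima, minima


def envelope(x, idx):
    """Piecewise-linear envelope through the extrema and both end points."""
    knots = [0] + idx + [len(x) - 1]
    env, k = [], 0
    for i in range(len(x)):
        while i > knots[k + 1]:
            k += 1
        a, b = knots[k], knots[k + 1]
        env.append(x[a] + (x[b] - x[a]) * (i - a) / (b - a))
    return env


def emd(signal, max_imfs=6, sift_iters=10):
    """Return (imfs, residue) using simplified sifting."""
    residue, imfs = list(signal), []
    for _ in range(max_imfs):
        maxima, minima = local_extrema(residue)
        if len(maxima) + len(minima) < 2:      # nearly monotonic: stop
            break
        h = list(residue)
        for _ in range(sift_iters):
            maxima, minima = local_extrema(h)
            if not maxima or not minima:
                break
            upper, lower = envelope(h, maxima), envelope(h, minima)
            # Subtract the mean of the two envelopes from the candidate.
            h = [v - (u + l) / 2 for v, u, l in zip(h, upper, lower)]
        imfs.append(h)
        residue = [r - v for r, v in zip(residue, h)]
    return imfs, residue


# Synthetic signal: two oscillations plus a slow trend (hypothetical data).
signal = [math.sin(0.3 * t) + 0.5 * math.sin(1.7 * t) + 0.01 * t
          for t in range(120)]
imfs, residue = emd(signal)
rebuilt = [sum(c[t] for c in imfs) + residue[t] for t in range(len(signal))]
```

For real work a tested library implementation with spline envelopes and proper stopping criteria would be preferable; the sketch is only meant to show why each extracted component is simpler than the series it came from.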

2.5. Performance Evaluation

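In this research, several performance metrics are used, namely the coefficient of determination (R²), root mean square error (RMSE) and mean absolute percentage error (MAPE), as follows:

$$R^2 = 1 - \frac{\sum_{i=1}^{n} (y_i - \hat{y}_i)^2}{\sum_{i=1}^{n} (y_i - \bar{y})^2}$$

$$RMSE = \sqrt{\frac{1}{n} \sum_{i=1}^{n} (y_i - \hat{y}_i)^2}$$

$$MAPE = \frac{100\%}{n} \sum_{i=1}^{n} \left| \frac{y_i - \hat{y}_i}{y_i} \right|$$

where $y_i$ is the actual target for the ith component, $\hat{y}_i$ is the predicted value, $\bar{y}$ is the mean of the actual values and n is the number of data points.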
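The three metrics are straightforward to compute directly from their definitions. The following sketch uses plain Python and made-up numbers; the function names and sample values are illustrative assumptions and do not correspond to any well in the datasets.

```python
import math


def r2_score(actual, predicted):
    """Coefficient of determination: 1 - SS_res / SS_tot."""
    mean = sum(actual) / len(actual)
    ss_res = sum((a - p) ** 2 for a, p in zip(actual, predicted))
    ss_tot = sum((a - mean) ** 2 for a in actual)
    return 1.0 - ss_res / ss_tot


def rmse(actual, predicted):
    """Root mean square error, in the units of the data."""
    n = len(actual)
    return math.sqrt(sum((a - p) ** 2 for a, p in zip(actual, predicted)) / n)


def mape(actual, predicted):
    """Mean absolute percentage error, in percent (actual values must be non-zero)."""
    n = len(actual)
    return 100.0 * sum(abs((a - p) / a) for a, p in zip(actual, predicted)) / n


# Hypothetical production values and forecasts.
actual = [100.0, 200.0, 300.0]
predicted = [110.0, 190.0, 300.0]
scores = (r2_score(actual, predicted), rmse(actual, predicted),
          mape(actual, predicted))
```

MAPE is scale-free, which makes it convenient for comparing wells with very different production levels, but it is undefined whenever an actual value is zero.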

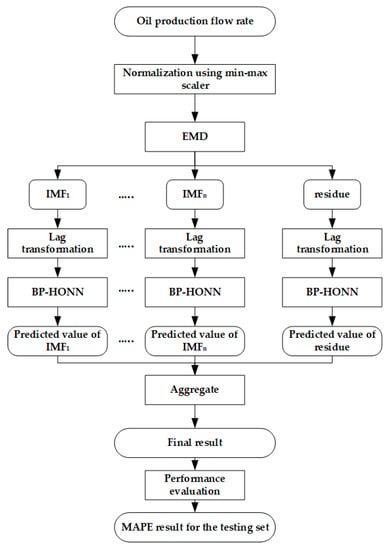

2.6. Framework of the Proposed Model

In this research, a hybrid model combining EMD and HONN with a backpropagation learning method is proposed to forecast the oil flow rate from the production data of 30 wells. Another hybrid model, combining EMD and MLMVN, is also introduced as a benchmark. The proposed method includes several steps, as shown in Figure 4. The first step is the pre-processing of all datasets, which starts by normalizing all values to the same range. For the CB dataset, the scaler was applied as in the original paper, which calculated the ratio to the maximum production (9500 m³) [15]. For the CS dataset, a min-max scaler is applied, with a range of −1 to 1 for HONN and 0 to 1 for MLMVN. Next, the normalized series is processed with the EMD algorithm, which constructs multiple IMFs (and a residue) for each dataset. Because the proposed learning algorithms cannot learn from time-series data directly, lag features are used to transform each series into a supervised-learning dataset. In this research, the two to five preceding values were selected as inputs (e.g., Xt−5, Xt−4, Xt−3, Xt−2, Xt−1 for a lag of five) and the subsequent value as the target (y). The transformed features are then fed into the HONN with the backpropagation learning method.

For HONN and EMD-HONN, the hyperparameters were chosen as follows: the network architecture has one hidden layer with tanh-tanh activation functions; the initial learning rate is 0.001 and adapts to the error of each epoch, being multiplied by 0.7 if the error decreases and by 1.05 if the error increases; the number of backpropagation learning iterations (epochs) is 600; and the momentum is 0.9. The experiment was repeated with 2 to 10 hidden neurons and with linear (LSO), quadratic (QSO) and cubic (CSO) polynomial synaptic operations. The best-performing model was selected to compete with the other benchmark models.

Figure 4.

Proposed model of EMD-BP-HONN.
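Two of the pre-processing steps in the framework, min-max scaling and the lag-feature transformation, can be sketched as follows. The function names and the toy series are assumptions for illustration; how the decomposed components are fed to the network is not covered here.

```python
def min_max_scale(series, low=-1.0, high=1.0):
    """Linearly rescale a series to the range [low, high]."""
    lo, hi = min(series), max(series)
    return [low + (high - low) * (v - lo) / (hi - lo) for v in series]


def make_lag_features(series, lag):
    """Turn a series into (X, y): `lag` previous values -> next value."""
    X = [series[t - lag:t] for t in range(lag, len(series))]
    y = [series[t] for t in range(lag, len(series))]
    return X, y


# Hypothetical monthly production values.
series = [10.0, 20.0, 30.0, 40.0, 50.0, 60.0]
scaled = min_max_scale(series)             # range -1 to 1, as used for HONN
scaled_01 = min_max_scale(series, 0.0, 1.0)  # range 0 to 1, as used for MLMVN
X, y = make_lag_features(series, lag=2)
```

A series of length T with a lag of k yields T − k training rows, so with short monthly histories a larger lag leaves noticeably fewer samples to learn from.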

For the second hybrid model, EMD-BP-MLMVN, a similar framework is used, with BP-HONN replaced by BP-MLMVN. The BP-MLMVN architecture has one hidden layer with randomized initial weights and is trained for 500 epochs. The experiment was repeated with 2 to 10 hidden neurons to select the best-performing configuration.

Additionally, three other forecasting methods were used for benchmarking. The most basic is the persistence (naïve) method, which takes the previous value (Xt−1) as the forecast for the next step (Xt). As more advanced baselines, two time series forecasting algorithms are used: autoregressive integrated moving average (ARIMA) and long short-term memory (LSTM). ARIMA is a very popular statistical model for forecasting time series data. It consists of three components: autoregression (p), differencing (d) and moving average (q). For this experiment, the values of p, d and q are selected from 1 to 4. LSTM, a variant of the recurrent neural network (RNN), can capture the non-linear trends of well production [19]. The hyperparameters for LSTM are the number of layers and the activation function. The best of 20, 50 and 200 layers, combined with tanh, relu and sigmoid activation functions, is selected as the benchmark for the proposed model.
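The persistence baseline is simple enough to state in code. The sketch below, with assumed function names and made-up values, forecasts each step with the preceding observation and scores the result with MAPE.

```python
def persistence_forecast(series):
    """Forecast for step t is the observation at step t - 1."""
    return series[:-1]


def mape(actual, predicted):
    """Mean absolute percentage error in percent."""
    n = len(actual)
    return 100.0 * sum(abs((a - p) / a) for a, p in zip(actual, predicted)) / n


# Hypothetical production series.
series = [100.0, 110.0, 99.0, 99.0]
forecast = persistence_forecast(series)   # aligned with series[1:]
score = mape(series[1:], forecast)
```

Because it needs no training, this baseline gives a floor that any learned model should beat; on slowly varying series it can be surprisingly hard to improve upon.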

3. Results and Discussion

3.1. Result of CB and CS Datasets

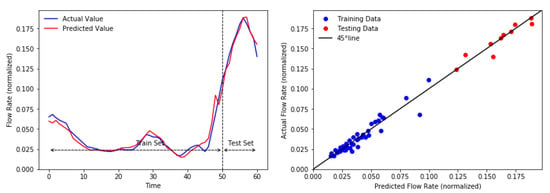

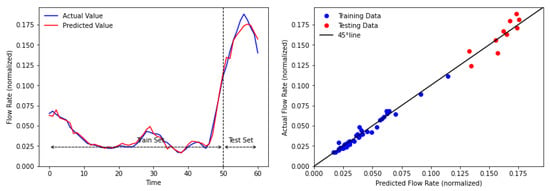

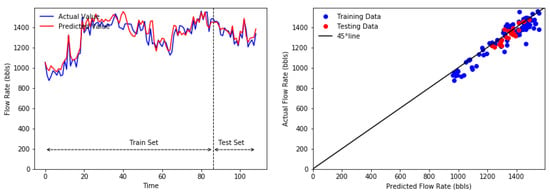

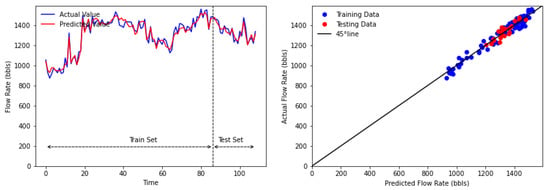

The results of the proposed methods and the benchmark methods for both oilfields are presented in this section. As mentioned in the previous section, the proposed models were run repeatedly with different parameters and configurations, and the best run was selected to compete with the benchmark models. Examples of the best configuration for several wells can be seen in Table 3, which shows that the lag feature and the number of hidden neurons giving the best MAPE differ from well to well. In summary, as seen in Table 4, Table 5, Table 6 and Table 7, the proposed EMD-BP-HONN and EMD-BP-MLMVN outperformed all benchmark models, with the smallest MAPE in 23 out of 30 wells. Interestingly, the ARIMA models produce decent results compared to our proposed models. The other metrics, RMSE and R², give consistent results, as seen in Table 5 and Table 6 for the CB dataset, in which the proposed methods have better forecasting performance than the benchmark models. As detailed examples, the prediction results of the proposed models for CB2 are presented in Figure 5 and Figure 6, and those for CS18 are presented in Figure 7 and Figure 8.

Table 3.

Best result of HONN-based methods on several wells.

Table 4.

Result of CB oilfield in MAPE metric. The lowest score is in bold.

Table 5.

Result of CB oilfield in RMSE metric. The lowest score is in bold.

Table 6.

Result of CB oilfield in R² metric. The highest score is in bold.

Table 7.

Result of CS oilfield in MAPE metric. The lowest score is in bold.

Figure 5.

Prediction result of EMD-BP-MLMVN for CB2.

Figure 6.

Prediction result of EMD-BP-HONN for CB2.

Figure 7.

Prediction result of EMD-BP-MLMVN for CS18.

Figure 8.

Prediction result of EMD-BP-HONN for CS18.

Another interesting finding is that the hybrid model indeed improves the performance of the base model. As seen in Table 4 and Table 7, implementing EMD in the pre-processing stage improved BP-HONN and BP-MLMVN, on average, by 23% and 34%, respectively.

For comparison with other work, a previous study proposed a DLSTM model that outperforms the vanilla HONN model on the Cambay Basin dataset [20]. However, instead of individual well production, the dataset used in that study is the cumulative production of the five wells, as in Table 1. The reported MAPE scores are 2.851 and 3.459 for DLSTM and vanilla HONN, respectively. To compare the performance of EMD-BP-HONN and EMD-BP-MLMVN, the same dataset was used, and the result can be seen in Table 8. EMD-BP-HONN continues to show better performance than the methods reported in other papers.

Table 8.

Comparison with another study for the cumulative production of the Cambay Basin oilfield.

3.2. Statistical Tests

In this section, a statistical test is applied to evaluate whether there is a significant difference between the proposed methods and the benchmark models. The Friedman test, a non-parametric test based on the ranks of the methods within each dataset, is used with the following hypotheses. The null hypothesis (H0) states that the mean for each population is equal; thus, there is no significant difference among the methods. The alternative hypothesis states that at least one population mean is different from the rest. If the p-value of the test is less than 0.05, the null hypothesis can be rejected.

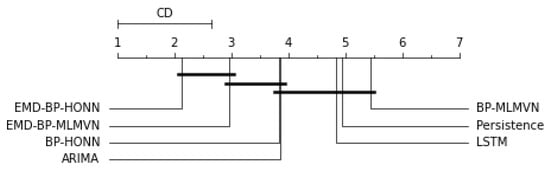

Using the Friedman chi-square test (from the SciPy Python library) with the MAPE metric for all datasets, the results are as follows: statistic = 52.828 and p-value = 1.270 × 10⁻⁹. Given this result, the null hypothesis can be rejected. Then, to determine which methods are significantly different, the Nemenyi post hoc test was applied, and the result is shown in Figure 9. The result shows that EMD-BP-HONN is significantly different from the other benchmark methods, with the exception of EMD-BP-MLMVN. EMD-BP-MLMVN differs significantly from persistence and LSTM; however, it is not significantly different from ARIMA and BP-HONN.

Figure 9.

Critical difference graph for Nemenyi test.
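To show what the Friedman statistic measures, the sketch below computes it by hand from a small table of MAPE scores. The scores are invented for illustration, the function name is hypothetical, and the code assumes there are no ties within a row (no tie correction is applied). The library routine referred to in the text, `scipy.stats.friedmanchisquare`, additionally returns the p-value.

```python
def friedman_statistic(scores):
    """Friedman chi-square for scores[i][j]: error of method j on dataset i.

    Methods are ranked within each dataset (rank 1 = lowest error).
    Assumes no tied scores within a row.
    """
    n, k = len(scores), len(scores[0])
    rank_sums = [0.0] * k
    for row in scores:
        order = sorted(range(k), key=lambda j: row[j])
        for rank, j in enumerate(order, start=1):
            rank_sums[j] += rank
    total = sum(r * r for r in rank_sums)
    return 12.0 / (n * k * (k + 1)) * total - 3.0 * n * (k + 1)


# Hypothetical MAPE values: 4 wells (rows) x 3 methods (columns).
mape_table = [
    [3.0, 2.0, 1.0],
    [3.5, 2.5, 1.5],
    [4.0, 1.0, 2.0],
    [5.0, 3.0, 2.0],
]
statistic = friedman_statistic(mape_table)
```

The statistic grows when the methods keep the same ordering from well to well, which is why a method that is consistently best across wells yields a small p-value even if its margin on each well is modest.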

4. Conclusions

In this study, we introduced two hybrid models, EMD-BP-HONN and EMD-BP-MLMVN, for oil flow rate forecasting. The EMD decomposition method was used in the pre-processing stage to simplify the time-series data and thereby increase the performance of the forecasting algorithm. The proposed methods were applied to 30 datasets collected from two oilfields: the Cambay Basin, India, and the Central Sumatra Basin, Indonesia. For comparison, several benchmark time-series forecasting methods were tested as well. The proposed methods outperformed the benchmark models in most datasets, and the difference was statistically significant. In addition, applying the decomposition method before the base models improved the hybrid models significantly in all datasets.

In future work, the proposed hybrid models, EMD-BP-HONN and EMD-BP-MLMVN, could be improved with a more advanced version of the decomposition method. Parameter selection could also be explored using an optimization algorithm that searches for the global optimum of the parameters.

Supplementary Materials

The following supporting information can be downloaded at: https://www.mdpi.com/article/10.3390/pr10061137/s1, Spreadsheet S1: CB and CS dataset.

Author Contributions

Conceptualization, N.A.S. and J.N.P.; methodology, N.A.S. and J.N.P.; validation, T.B.A. and N.A.S.; formal analysis, J.N.P.; investigation, J.N.P.; data curation, J.N.P.; writing—original draft preparation, J.N.P.; writing—review and editing, J.N.P., N.A.S. and T.B.A.; visualization, J.N.P.; supervision, T.B.A. and N.A.S.; project administration, J.N.P. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Not applicable.

Acknowledgments

The first author would like to thank several individuals who supported this experiment and paper preparation: Suharyanto, S.T., Ramdhan Ari Wibawa, P.E., Chairul Ichsan, P.E.

Conflicts of Interest

The authors declare no conflict of interest.

References

1. Meribout, M.; Al-Rawahi, N.; Al-Naamany, A.; Al-Bemani, A.; Al-Busaidi, K.; Meribout, A. Integration of impedance measurements with acoustic measurements for accurate two phase flow metering in case of high water-cut. Flow Meas. Instrum. 2010, 21, 8–19.

2. Höök, M.; Davidsson, S.; Johansson, S.; Tang, X. Decline and depletion rates of oil production: A comprehensive investigation. Philos. Trans. R. Soc. London. Ser. A Math. Phys. Eng. Sci. 2014, 372, 20120448.

3. Huang, Z.; Xie, D.; Zhang, H.; Li, H. Gas–oil two-phase flow measurement using an electrical capacitance tomography system and a Venturi meter. Flow Meas. Instrum. 2005, 16, 177–182.

4. Pourabdollah, K.; Mokhtari, B. Flow rate measurement of individual oil well using multivariate thermal analysis. Measurement 2011, 44, 2028–2034.

5. Ganat, T.A.; Hrairi, M.; Hawlader, M.; Farj, O. Development of a novel method to estimate fluid flow rate in oil wells using electrical submersible pump. J. Pet. Sci. Eng. 2015, 135, 466–475.

6. Henry, M.; Tombs, M.; Zhou, F. Field experience of well testing using multiphase Coriolis metering. Flow Meas. Instrum. 2016, 52, 121–136.

7. Thorn, R.; Johansen, G.A.; Hjertaker, B.T. Three-phase flow measurement in the petroleum industry. Meas. Sci. Technol. 2013, 24, 012003.

8. Geoffrey, F.H.; Alimonti, C. Multiphase flow metering. In Developments in Petroleum Science; Elsevier: Amsterdam, The Netherlands, 2009; p. 329.

9. Wang, M.; Fan, Z.; Xing, G.; Zhao, W.; Song, H.; Su, P. Rate Decline Analysis for Modeling Volume Fractured Well Production in Naturally Fractured Reservoirs. Energies 2018, 11, 43.

10. Al-Qutami, T.A.; Ibrahim, R.; Ismail, I.; Ishak, M.A. Virtual multiphase flow metering using diverse neural network ensemble and adaptive simulated annealing. Expert Syst. Appl. 2018, 93, 72–85.

11. Xu, L.; Zhou, W.; Li, X.; Tang, S. Wet Gas Metering Using a Revised Venturi Meter and Soft-Computing Approximation Techniques. IEEE Trans. Instrum. Meas. 2011, 60, 947–956.

12. Shaban, H.; Tavoularis, S. Measurement of gas and liquid flow rates in two-phase pipe flows by the application of machine learning techniques to differential pressure signals. Int. J. Multiph. Flow 2014, 67, 106–117.

13. Ahmadi, M.A.; Ebadi, M.; Shokrollahi, A.; Majidi, S.M.J. Evolving artificial neural network and imperialist competitive algorithm for prediction oil flow rate of the reservoir. Appl. Soft Comput. 2013, 13, 1085–1098.

14. AlRassas, A.M.; Al-Qaness, M.A.A.; Ewees, A.A.; Ren, S.; Elaziz, M.A.; Damaševičius, R.; Krilavičius, T. Optimized ANFIS Model Using Aquila Optimizer for Oil Production Forecasting. Processes 2021, 9, 1194.

15. Chakra, N.C.; Song, K.-Y.; Gupta, M.M.; Saraf, D.N. An innovative neural forecast of cumulative oil production from a petroleum reservoir employing higher-order neural networks (HONNs). J. Pet. Sci. Eng. 2013, 106, 18–33.

16. Sheremetov, L.; Cosultchi, A.; Martínez-Muñoz, J.; Gonzalez-Sánchez, A.; Jiménez-Aquino, M. Data-driven forecasting of naturally fractured reservoirs based on nonlinear autoregressive neural networks with exogenous input. J. Pet. Sci. Eng. 2014, 123, 106–119.

17. Aizenberg, I.; Sheremetov, L.; Villa-Vargas, L.; Martinez-Muñoz, J. Multilayer Neural Network with Multi-Valued Neurons in time series forecasting of oil production. Neurocomputing 2016, 175, 980–989.

18. Aizenberg, I.; Moraga, C. Multilayer Feedforward Neural Network Based on Multi-valued Neurons (MLMVN) and a Backpropagation Learning Algorithm. Soft Comput. 2007, 11, 169–183.

19. Fan, D.; Sun, H.; Yao, J.; Zhang, K.; Yan, X.; Sun, Z. Well production forecasting based on ARIMA-LSTM model considering manual operations. Energy 2021, 220, 119708.

20. Sagheer, A.; Kotb, M. Time series forecasting of petroleum production using deep LSTM recurrent networks. Neurocomputing 2019, 323, 203–213.

21. Liu, W.; Gu, J. Forecasting oil production using ensemble empirical model decomposition based Long Short-Term Memory neural network. J. Pet. Sci. Eng. 2020, 189, 107013.

22. Wu, J.; Miu, F.; Li, T. Daily Crude Oil Price Forecasting Based on Improved CEEMDAN, SCA, and RVFL: A Case Study in WTI Oil Market. Energies 2020, 13, 1852.

23. Fan, G.-F.; Peng, L.-L.; Hong, W.-C.; Sun, F. Electric load forecasting by the SVR model with differential empirical mode decomposition and auto regression. Neurocomputing 2016, 173, 958–970.

24. Jin, F.; Li, Y.; Sun, S.; Li, H. Forecasting air passenger demand with a new hybrid ensemble approach. J. Air Transp. Manag. 2020, 83, 101744.

25. Boudraa, A.O.; Cexus, J.-C.; Saidi, Z. EMD-based signal noise reduction. Int. J. Inf. Commun. Eng. 2004, 1, 96–99.

26. Yi, S.; Guo, K.; Chen, Z. Forecasting China’s Service Outsourcing Development with an EMD-VAR-SVR Ensemble Method. Procedia Comput. Sci. 2016, 91, 392–401.

27. Büyükşahin, Ü.Ç.; Ertekin, Ş. Improving forecasting accuracy of time series data using a new ARIMA-ANN hybrid method and empirical mode decomposition. Neurocomputing 2019, 361, 151–163.

28. Qiu, X.; Ren, Y.; Suganthan, P.; Amaratunga, G.A. Empirical Mode Decomposition based ensemble deep learning for load demand time series forecasting. Appl. Soft Comput. 2017, 54, 246–255.

29. Behnam, H.; Sadeghi, S.; Tavakkoli, J. Ultrasound elastography using empirical mode decomposition analysis. J. Med. Signals Sens. 2014, 4, 18–26.

30. Zhu, A.; Zhao, Q.; Wang, X.; Zhou, L. Ultra-Short-Term Wind Power Combined Prediction Based on Complementary Ensemble Empirical Mode Decomposition, Whale Optimisation Algorithm, and Elman Network. Energies 2022, 15, 3055.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).