Abstract

Feature extraction plays a key role in fault detection. Most existing methods focus on comprehensive and accurate feature extraction from normal operating data to achieve better detection performance, whereas discriminative features derived from historical fault data are usually ignored. To address this issue, a global-local marginal discriminant preserving projection (GLMDPP) method is proposed for feature extraction. Because it accounts for both global and local features, global-local preserving projection (GLPP) is used to extract the inherent features of the data. Then, multiple marginal Fisher analysis (MMFA) is introduced to extract discriminative features that better separate normal data from fault data. Within the Fisher framework, GLPP and MMFA are integrated to extract the inherent and discriminative features of the data simultaneously. Furthermore, a GLMDPP-based fault detection method is constructed and applied to the Tennessee Eastman (TE) process. Results on the TE process, compared with PCA- and GLPP-based methods, validate the effectiveness of the proposed method for fault detection.

1. Introduction

The large scale and high complexity of modern chemical processes pose greater challenges to process safety and stability. Fault detection and diagnosis (FDD) methods, which can reduce the occurrence of faults and related losses, have received increasing attention []. As the first step in FDD and the basis for subsequent analysis, fault detection provides operators with real-time monitoring of the process status and more time to respond to faults. With the widespread application of advanced measurement instruments and distributed control systems (DCS), large amounts of data are collected and stored, which provides a good basis for the development of data-driven fault detection methods [].

In past decades, multivariate statistical process monitoring (MSPM) methods have been the most intensively and widely studied data-driven fault detection methods []. MSPM methods obtain a projection matrix that extracts data features according to a statistical measure of data variability, such as the variance in PCA-based methods and higher-order statistics in ICA-based methods. Further, extended methods that address the dynamic, nonlinear, and other characteristics of process data have been proposed to extract more accurate features for better detection performance [,,]. In fact, how to accurately extract the important features from massive data is the focus of most studies. However, MSPM methods focus only on the global features of the data, and the absence of local features represented by neighborhood information may compromise detection performance [].

Fortunately, the emergence of manifold learning provides a novel perspective for preserving the local features of data. Several manifold learning methods have been proposed for dimension reduction in pattern recognition, such as Laplacian eigenmaps (LE), locally linear embedding (LLE), and local tangent space alignment (LTSA) [,,]. To address the out-of-sample problem, their linear counterparts with explicit projections were proposed and have been introduced into the field of fault detection, such as locality preserving projections (LPP), neighborhood preserving embedding (NPE), and linear local tangent space alignment (LLTSA) [,,]. These manifold learning-based methods extract local features represented by neighborhood information while ignoring the global features expressed by variance information and higher-order statistics.

To overcome the limitations of MSPM and manifold learning methods in feature extraction, several methods that extract the global and local features of data simultaneously have been proposed and applied to fault detection. Zhang et al. first proposed global-local structure analysis (GLSA) by directly integrating the objective functions of PCA and LPP []. Similarly, Yu proposed local and global principal component analysis (LGPCA), whose objective function is constructed from the ratio of LPP to PCA []. However, the PCA model in these methods assumes Gaussian-distributed data. To address this issue, Luo proposed a unified framework, namely global-local preserving projection (GLPP), which extracts global and local features entirely from the distance relationships between neighbors and non-neighbors []. With different forms of local feature extraction, NPE and LTSA were later extended to extract the global and local features of data for fault detection [,,]. Owing to its fewer restrictions on the data distribution and its low computational complexity, various GLPP-based improvements have been proposed, addressing dynamics, nonlinearity, non-parametric modeling, sparsity, and ensemble learning [,,,,,,,].

Most data-based fault detection methods, including MSPM-based and manifold learning-based methods, rely only on more comprehensive and accurate feature extraction from normal operating data to achieve better detection performance, so that any anomaly exceeding the defined normal range in the feature space obtained from normal data can be detected. However, data collected under normal operating conditions cannot be guaranteed to contain all the features of normal operation. Achieving such coverage is a great challenge, and it is not always necessary either, because the purpose of fault detection is actually to distinguish normal from abnormal operating conditions through data analysis. Without discriminative features based on fault data, the features extracted by the above methods may not be optimal for distinguishing normal operating conditions from real faults. Therefore, it is necessary to improve the performance of detection methods by considering fault data. Huang et al. proposed a slow feature analysis-based detection method in which online fault information is used to reorder and select features online to obtain fault-related features []. However, the features selected by this method are still derived from slow features based on normal data. To address this issue, discriminative feature extraction based on fault data should be introduced into the feature extraction of fault detection methods. Discriminant feature extraction methods, represented by linear discriminant analysis (LDA) and its variants, were first applied in pattern recognition [,,]. Because LDA-based methods are restricted by assumptions on the data distribution, marginal Fisher analysis (MFA) was proposed within the graph embedding framework by maximizing the separability between pairwise marginal data points []. On this basis, multiple marginal Fisher analysis (MMFA) was proposed to solve the class-isolation issue by considering multiple marginal data pairs []. However, there has been little discussion of how to combine discriminative feature extraction with inherent feature extraction to improve fault detection performance.

In this paper, a novel feature extraction algorithm named global-local marginal discriminant preserving projection (GLMDPP) is proposed and applied to fault detection. Owing to its ability to extract global and local features simultaneously, GLPP is used to extract the inherent features of both normal data and historical fault data. Inspired by GLPP, the discriminative feature extraction based on marginal sample pairs in MMFA is also extended to non-marginal sample pairs. Then, the objective functions of GLPP-based inherent feature extraction and MMFA-based discriminative feature extraction are integrated to obtain optimal features that separate normal conditions from historical faults while fully retaining the inherent features of the data. In addition, the geodesic distance, instead of the Euclidean distance, is used between non-neighbor or non-marginal sample pairs to represent the intrinsic geometric structure more accurately. Finally, a statistic representing changes in the feature space is calculated to establish a GLMDPP-based fault detection method.

The rest of the paper is organized as follows. The basic methods related to the proposed method are briefly reviewed in Section 2. Section 3 presents the proposed GLMDPP method. The GLMDPP-based fault detection procedure is developed in Section 4. The experimental results on the Tennessee Eastman process are discussed in Section 5. Section 6 provides the conclusions.

2. Preliminaries

2.1. Global-Local Preserving Projection

GLPP is a manifold learning-based feature extraction method that can preserve the global and local features of data simultaneously []. In brief, GLPP extends the adjacency relationships used in LPP to non-adjacency relationships in order to extract comprehensive features of the data. The GLPP procedure is as follows:

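Given a normalized data set $X = [x_1, x_2, \dots, x_n] \in \mathbb{R}^{m \times n}$ containing $n$ samples of $m$ variables, GLPP aims to obtain a projection matrix $A \in \mathbb{R}^{m \times d}$ that maps $X$ to $Y = A^{T}X \in \mathbb{R}^{d \times n}$. For each sample $x_i$, the $k$ nearest neighbors based on Euclidean distance are selected to construct the local neighborhood $N(x_i)$. An adjacency weight matrix $W$ is then constructed, and each element $W_{ij}$, representing the adjacency relationship between a neighboring sample pair, is calculated by a heat kernel function as shown in Equation (1).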

where $\sigma_1$ is an empirical constant and $\lVert x_i - x_j \rVert$ represents the Euclidean distance between $x_i$ and $x_j$. The LPP-based sub-objective function for local feature extraction is presented as follows:

where $D$ is a diagonal matrix with elements $D_{ii} = \sum_j W_{ij}$, and $L = D - W$ is the Laplacian matrix. Similar to the local feature extraction part, a non-adjacency weight matrix $R$ is constructed as follows:

where $\sigma_2$ and $\lVert x_i - x_j \rVert$ have the same meanings as in Equation (1). On the basis of the non-adjacency relationship, the sub-objective function for global feature extraction is presented as follows:

where $S$ is a diagonal matrix with elements $S_{ii} = \sum_j R_{ij}$, and $M = S - R$ is the corresponding Laplacian matrix.
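The local and global graphs of GLPP can be sketched with NumPy as follows. This is a minimal illustration rather than the authors' code, under stated assumptions: samples are stored as rows (so $X L X^{T}$ becomes `X.T @ L @ X`), the k-nearest-neighbor relation is symmetrised, both weight matrices use the heat kernel $\exp(-\lVert x_i - x_j \rVert^2/\sigma)$, and the sub-objectives carry a factor of 1/2. The names `W`, `R`, `L` and `M` are chosen to match the adjacency, non-adjacency and Laplacian matrices described above. The last lines check that the pairwise form of the local sub-objective equals its matrix form.

```python
import numpy as np


def glpp_graphs(X, k, sigma1, sigma2):
    """Adjacency (W) and non-adjacency (R) weights with their Laplacians; rows are samples."""
    n = X.shape[0]
    dist = np.linalg.norm(X[:, None, :] - X[None, :, :], axis=2)
    d = dist.copy()
    np.fill_diagonal(d, np.inf)                     # a sample is never its own neighbour
    nn = np.argsort(d, axis=1)[:, :k]               # k nearest neighbours of each sample
    adjacent = np.zeros((n, n), dtype=bool)
    adjacent[np.repeat(np.arange(n), k), nn.ravel()] = True
    adjacent = adjacent | adjacent.T                # j in N(i) or i in N(j)
    non_adjacent = ~adjacent
    np.fill_diagonal(non_adjacent, False)           # exclude self-pairs
    W = np.where(adjacent, np.exp(-dist**2 / sigma1), 0.0)
    R = np.where(non_adjacent, np.exp(-dist**2 / sigma2), 0.0)
    L = np.diag(W.sum(axis=1)) - W                  # L = D - W
    M = np.diag(R.sum(axis=1)) - R                  # M = S - R
    return W, R, L, M


rng = np.random.default_rng(0)
X = rng.standard_normal((50, 4))                    # toy data: 50 samples, 4 variables
W, R, L, M = glpp_graphs(X, k=10, sigma1=1.0, sigma2=10.0)
a = rng.standard_normal(4)                          # an arbitrary projection vector
y = X @ a
j_local_matrix = a @ X.T @ L @ X @ a
j_local_pairwise = 0.5 * np.sum(W * (y[:, None] - y[None, :]) ** 2)
print(np.isclose(j_local_matrix, j_local_pairwise))  # True
```

In the proposed method the non-adjacency weights use geodesic rather than Euclidean distances; this sketch keeps Euclidean distances for brevity.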

In order to preserve both the global and local features of the data, a weighting coefficient $\eta$ is introduced to integrate the two sub-objective functions as follows:

where $K = \eta W - (1 - \eta) R$. $H$ is a diagonal matrix with elements $H_{ii} = \sum_j K_{ij}$, and $N = H - K$ is a Laplacian matrix, so that $N = \eta L - (1 - \eta) M$. The weighting coefficient $\eta$ is determined by the trade-off between the local and global features as follows:

where ρ denotes the spectral radius of the matrix. To avoid the singularity problem, the constraint is presented for the objective function of GLPP as follows:

The optimization problem defined by Equations (5) and (7) can be transformed into the following generalized eigenvalue problem:

The projection matrix $A$, which preserves both the global and local features of the data, consists of the eigenvectors corresponding to the $d$ smallest eigenvalues.
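The remaining GLPP steps, balancing the two terms and solving the generalized eigenvalue problem, can be sketched as below. Two points are assumptions because Equations (6) and (7) are not reproduced in this text: the balancing rule is taken to equalise the spectral radii of the two weighted terms, $\eta\,\rho(X L X^{T}) = (1-\eta)\,\rho(X M X^{T})$, and the constraint matrix `B` is a placeholder assumed to be symmetric positive definite. The solver reduces $A v = \lambda B v$ to a standard symmetric problem through a Cholesky factor of `B`; its `largest` flag gives the $d$-largest selection used for MMFA and GLMDPP. Samples are rows, and all data are toy values.

```python
import numpy as np


def spectral_radius(A):
    """Largest absolute eigenvalue of a square matrix."""
    return np.max(np.abs(np.linalg.eigvals(A)))


def balance_weight(X, L, M):
    """Assumed rule: eta * rho(X^T L X) == (1 - eta) * rho(X^T M X); rows of X are samples."""
    rho_local = spectral_radius(X.T @ L @ X)
    rho_global = spectral_radius(X.T @ M @ X)
    return rho_global / (rho_local + rho_global)


def generalized_eig(lhs, rhs, d, largest=False):
    """Solve lhs v = lambda rhs v (lhs symmetric, rhs symmetric positive definite)."""
    C = np.linalg.cholesky(rhs)               # rhs = C C^T
    C_inv = np.linalg.inv(C)
    reduced = C_inv @ lhs @ C_inv.T
    reduced = 0.5 * (reduced + reduced.T)     # remove round-off asymmetry
    vals, Z = np.linalg.eigh(reduced)         # eigenvalues in ascending order
    V = C_inv.T @ Z                           # back-transform, so V^T rhs V = I
    idx = np.arange(len(vals))[::-1] if largest else np.arange(len(vals))
    return vals[idx[:d]], V[:, idx[:d]]


def laplacian(W):
    return np.diag(W.sum(axis=1)) - W


rng = np.random.default_rng(1)
X = rng.standard_normal((30, 5))
W = np.triu(rng.random((30, 30)), 1)
W = W + W.T                                   # toy symmetric adjacency weights
R = np.triu(rng.random((30, 30)), 1)
R = R + R.T                                   # toy symmetric non-adjacency weights
L, M = laplacian(W), laplacian(R)
eta = balance_weight(X, L, M)
N = eta * L - (1 - eta) * M                   # combined GLPP matrix
B = X.T @ X + 1e-6 * np.eye(5)                # placeholder constraint matrix
vals, A = generalized_eig(X.T @ N @ X, B, d=2)  # d smallest -> projection matrix
print(A.shape)                                # (5, 2)
```

The Cholesky route is only one way to solve the problem; a library routine for the symmetric-definite generalized eigenproblem would serve equally well.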

2.2. Multiple Marginal Fisher Analysis

MMFA is a discriminative feature extraction method that maximizes the interclass separability among multiple marginal point pairs while minimizing the within-class scatter []. Compared with LDA and MFA, it removes the Gaussian-distribution assumption on the data and resolves the class-isolation issue, yielding better discriminative features and wider applicability.

Given a data set $X = [x_1, x_2, \dots, x_n]$ in which each sample $x_i$ has a class label $l_i$, the discriminative features can be obtained with a projection matrix $A$ as follows:

For the within-class relationship, the k-nearest neighbor method is used to determine the nearest neighbors within the same class. The similarity between pairs of nearest-neighbor points with the same class label is defined as follows:

On the basis of above similarity, within-class compactness is characterized as follows:

where $D^{w}$ is a diagonal matrix with elements $D^{w}_{ii} = \sum_j W^{w}_{ij}$, and $L^{w} = D^{w} - W^{w}$ is the Laplacian matrix. Then, for every two classes, the separability of the $k_2$ nearest sample pairs between them is determined by distance as follows:

Furthermore, the interclass separability is defined by the above-mentioned nearest-neighbor sample pairs as follows:

On the basis of the within-class compactness in Equation (11) and the interclass separability in Equation (13), the following objective function is proposed according to the Fisher criterion.

The above-mentioned objective function can be transformed into a solution of the generalized eigenvalue problem as follows:

The optimal projection matrix consists of the eigenvectors corresponding to the d largest eigenvalues.
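The MMFA construction can be sketched as follows. It is a simplified illustration, not the reference implementation of MMFA: weights are binary (0/1, as in the graph construction of the original MFA) instead of the similarities of the equations above, the within-class graph links each sample to its `k1` nearest neighbors of the same class, every pair of classes contributes its `k2` closest cross-class pairs, and a small ridge keeps the within-class matrix invertible. Samples are rows, and `scipy.linalg.eigh(a, b)` solves the symmetric generalized eigenvalue problem, returning eigenvalues in ascending order. Function names are hypothetical.

```python
import numpy as np
from itertools import combinations
from scipy.linalg import eigh


def pairwise_dist(X):
    return np.linalg.norm(X[:, None, :] - X[None, :, :], axis=2)


def laplacian(W):
    return np.diag(W.sum(axis=1)) - W


def within_class_graph(dist, labels, k1):
    """Link each sample to its k1 nearest neighbours of the same class."""
    Ww = np.zeros_like(dist)
    for c in np.unique(labels):
        idx = np.flatnonzero(labels == c)
        d = dist[np.ix_(idx, idx)]                  # fancy indexing returns a copy
        np.fill_diagonal(d, np.inf)
        nn = np.argsort(d, axis=1)[:, :min(k1, len(idx) - 1)]
        for row, i in enumerate(idx):
            Ww[i, idx[nn[row]]] = 1.0
    return np.maximum(Ww, Ww.T)


def marginal_graph(dist, labels, k2):
    """Link the k2 closest cross-class sample pairs for every pair of classes."""
    Wb = np.zeros_like(dist)
    for p, q in combinations(np.unique(labels), 2):
        ip, iq = np.flatnonzero(labels == p), np.flatnonzero(labels == q)
        block = dist[np.ix_(ip, iq)]
        r, s = np.unravel_index(np.argsort(block, axis=None)[:k2], block.shape)
        Wb[ip[r], iq[s]] = 1.0
        Wb[iq[s], ip[r]] = 1.0
    return Wb


def mmfa_projection(X, labels, k1, k2, d, ridge=1e-6):
    dist = pairwise_dist(X)
    Lw = laplacian(within_class_graph(dist, labels, k1))
    Lb = laplacian(marginal_graph(dist, labels, k2))
    Sw = X.T @ Lw @ X + ridge * np.eye(X.shape[1])  # within-class compactness
    Sb = X.T @ Lb @ X                               # interclass (marginal) separability
    vals, vecs = eigh(Sb, Sw)                       # ascending eigenvalues
    return vecs[:, ::-1][:, :d], vals[::-1][:d]     # keep the d largest


rng = np.random.default_rng(2)
X = np.vstack([rng.normal(m, 1.0, size=(20, 3)) for m in (0.0, 3.0, 6.0)])
labels = np.repeat([0, 1, 2], 20)
A, top = mmfa_projection(X, labels, k1=5, k2=10, d=2)
print(A.shape)    # (3, 2)
```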

3. GLMDPP Method

3.1. Inherent Feature Extraction

Owing to its ability to extract data features comprehensively without restrictions on the data distribution, GLPP is used to extract the inherent features of the data in the proposed algorithm. Given a dataset $X = [X_0, X_1, \dots, X_c]$, $X_0$ denotes the normal condition data, and the remaining $X_1, \dots, X_c$ denote $c$ classes of historical fault data. The k-nearest neighbor method based on Euclidean distance is used to determine the nearest-neighbor relationships within each class of the historical data. Compared with the Euclidean distance, the geodesic distance gives a more accurate estimate of the distance between non-neighboring samples on the data manifold and is therefore introduced []. The geodesic distance is estimated with the Dijkstra algorithm as the shortest-path distance over the adjacency graph []. The adjacency weight matrix of GLPP is given by Equation (1), and the Euclidean distance in the non-adjacency weight matrix of Equation (3) is replaced with the geodesic distance. Additionally, $\sigma_1$ and $\sigma_2$ are set to the average Euclidean distance over adjacent pairs and the average geodesic distance over non-adjacent pairs, respectively. The sub-objective functions of local and global feature extraction are then given by Equations (2) and (4), respectively. Because historical fault data are considered, the adjacency weight matrix and the diagonal matrix of the local feature extraction sub-objective function can be written as:

where $W_i$ ($i = 0, 1, \dots, c$) denotes the adjacency weight matrix within the $i$-th class, and the diagonal elements of $D_i$ represent the importance of each sample within its class. The non-adjacency weight matrix and its corresponding diagonal matrix have the same form. Consistent with Equation (8), the objective function for extracting both the global and local features of the data is established as follows:

3.2. Discriminative Feature Extraction

To improve on a feature subspace defined by normal conditions alone, the fault conditions in the historical data are also taken into account to obtain the optimal discriminative feature subspace. Unlike MMFA, which considers marginal sample pairs for every two classes of data, only the $k_2$ nearest-neighbor sample pairs between the normal data and each class of fault data are treated as interclass marginal sample pairs. For subsequent integration, the weights of the marginal sample pairs are changed to a heat kernel form consistent with GLPP, as in Equation (19). Inspired by GLPP, non-marginal sample pairs are also introduced to extract the discriminative features comprehensively. Since marginal sample pairs play a more important role when classes partially overlap in the original space, the weights for non-marginal sample pairs are constructed as follows:

where $d_E(x_i, x_j)$ and $d_G(x_i, x_j)$ represent the Euclidean distance and geodesic distance between $x_i$ and $x_j$, respectively. Additionally, $\sigma_3$ and $\sigma_4$ are set to the average Euclidean distance over marginal sample pairs and the average geodesic distance over non-marginal sample pairs, respectively. Furthermore, the sub-objective function for discriminative feature extraction is presented as follows:

where $D^{b}$ is a diagonal matrix with elements $D^{b}_{ii} = \sum_j W^{b}_{ij}$, and $L^{b} = D^{b} - W^{b}$ is the Laplacian matrix.
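The geodesic distances of Section 3.1 and the marginal/non-marginal weights of this section can be sketched together. This is a hypothetical NumPy/`heapq` illustration under stated assumptions: geodesic distances are shortest paths (Dijkstra) on a symmetrised k-nearest-neighbor graph over all samples with Euclidean edge lengths; labels `0` mark normal samples and `1..c` fault classes; only normal-versus-fault pairs are weighted; the `k2` closest normal-fault pairs per fault class are marginal and weighted by $\exp(-d_E^2/\sigma_3)$, and all other reachable normal-fault pairs by $\exp(-d_G^2/\sigma_4)$. The exact weight form of Equation (19) may differ.

```python
import heapq
import numpy as np


def geodesic_distances(X, k):
    """Euclidean and shortest-path (Dijkstra) distances on a symmetrised k-NN graph."""
    n = X.shape[0]
    dist = np.linalg.norm(X[:, None, :] - X[None, :, :], axis=2)
    d = dist.copy()
    np.fill_diagonal(d, np.inf)
    nn = np.argsort(d, axis=1)[:, :k]
    adj = np.zeros((n, n), dtype=bool)
    adj[np.repeat(np.arange(n), k), nn.ravel()] = True
    adj = adj | adj.T
    neighbours = [np.flatnonzero(adj[i]) for i in range(n)]
    geo = np.full((n, n), np.inf)
    for src in range(n):                              # Dijkstra from every sample
        geo[src, src] = 0.0
        heap = [(0.0, src)]
        while heap:
            du, u = heapq.heappop(heap)
            if du > geo[src, u]:
                continue                              # stale heap entry
            for v in neighbours[u]:
                if du + dist[u, v] < geo[src, v]:
                    geo[src, v] = du + dist[u, v]
                    heapq.heappush(heap, (geo[src, v], v))
    return dist, geo


def discriminative_weights(dist_e, dist_g, labels, k2):
    """Marginal (Euclidean) and non-marginal (geodesic) weights between normal and fault data."""
    n = len(labels)
    marginal = np.zeros((n, n), dtype=bool)
    between = np.zeros((n, n), dtype=bool)
    normal = np.flatnonzero(labels == 0)
    for c in np.unique(labels[labels != 0]):
        fault = np.flatnonzero(labels == c)
        between[np.ix_(normal, fault)] = True
        block = dist_e[np.ix_(normal, fault)]
        r, s = np.unravel_index(np.argsort(block, axis=None)[:k2], block.shape)
        marginal[normal[r], fault[s]] = True
    marginal = marginal | marginal.T
    non_marginal = (between | between.T) & ~marginal & np.isfinite(dist_g)
    sigma3 = dist_e[marginal].mean()                  # mean Euclidean distance, marginal pairs
    sigma4 = dist_g[non_marginal].mean()              # mean geodesic distance, non-marginal pairs
    Wd = np.zeros((n, n))
    Wd[marginal] = np.exp(-dist_e[marginal] ** 2 / sigma3)
    Wd[non_marginal] = np.exp(-dist_g[non_marginal] ** 2 / sigma4)
    Ld = np.diag(Wd.sum(axis=1)) - Wd                 # Laplacian of the discriminative graph
    return Wd, Ld, marginal, non_marginal


rng = np.random.default_rng(3)
X = np.vstack([rng.normal(m, 1.0, size=(30, 3)) for m in (0.0, 2.0, 4.0)])
labels = np.repeat([0, 1, 2], 30)                     # 0 = normal, 1 and 2 = fault classes
dist_e, dist_g = geodesic_distances(X, k=8)
Wd, Ld, marginal, non_marginal = discriminative_weights(dist_e, dist_g, labels, k2=15)
print(np.count_nonzero(np.triu(marginal)))            # 30
```

Pairs that the k-NN graph cannot connect have an infinite geodesic distance; the sketch simply leaves them unweighted, which is one of several possible choices.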

3.3. Formulation of GLMDPP

To preserve both the inherent and discriminative features of the data, the two sub-objective functions are integrated according to the Fisher criterion as follows:

Then, the above optimization problem is transformed to a generalized eigenvalue problem by the Lagrange multiplier method as follows:

The optimal discriminative feature projection matrix $A$ consists of the eigenvectors corresponding to the $d$ largest eigenvalues.

4. GLMDPP-Based Fault Detection

Given the dataset $X$, Z-score standardization is used to normalize it with the mean and standard deviation of the normal dataset $X_0$. The discriminative feature projection matrix $A$ is obtained by the GLMDPP method, and the discriminative feature $y_{new}$ of a new sample $x_{new}$ can be calculated as follows:

Since the discriminative feature space learned from historical normal and fault data is already the feature space most sensitive to faults, only the $T^2$ statistic is introduced to monitor variations in the feature space as follows:

where $\Lambda$ is the covariance matrix of the discriminative features projected from the normal data $X_0$. The control limit of the $T^2$ statistic is given as follows:

where $\alpha$ represents the significance level and $F_{\alpha}(d, n-d)$ denotes the F distribution with $d$ and $n-d$ degrees of freedom. If the $T^2$ statistic of a new sample exceeds its control limit, the process is considered to be in a faulty state; otherwise, it is considered to be in a normal state.
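Standardization, projection and the monitoring statistic can be sketched with NumPy and SciPy. The sketch rests on assumptions because the displayed equations are not reproduced in this text: features are $y = A^{T}x$ (samples are rows here, so `x @ A`), the statistic is Hotelling's $T^2 = y^{T}\Lambda^{-1}y$, and the limit takes the commonly used form $\frac{d(n-1)(n+1)}{n(n-d)}F_{\alpha}(d, n-d)$. `A` is a random placeholder for the GLMDPP projection matrix, the scaler class is hypothetical, and the 33 variables mirror the TE monitoring set described in Section 5.

```python
import numpy as np
from scipy.stats import f


class NormalDataScaler:
    """Z-score scaling with statistics of normal operating data only (hypothetical helper)."""

    def fit(self, X_normal):
        self.mean_ = X_normal.mean(axis=0)
        std = X_normal.std(axis=0, ddof=1)
        self.std_ = np.where(std > 0, std, 1.0)          # guard against constant variables
        return self

    def transform(self, X):
        return (X - self.mean_) / self.std_


def t2_statistic(Y, cov_inv):
    """Row-wise y^T Lambda^{-1} y."""
    return np.einsum("ij,jk,ik->i", Y, cov_inv, Y)


def t2_limit(n, d, alpha=0.01):
    """Assumed control limit for new samples; n training samples, d features."""
    return d * (n - 1) * (n + 1) / (n * (n - d)) * f.ppf(1.0 - alpha, d, n - d)


rng = np.random.default_rng(4)
X_normal = rng.normal(5.0, 2.0, size=(500, 33))          # toy normal training data
scaler = NormalDataScaler().fit(X_normal)
A = rng.standard_normal((33, 4))                          # placeholder projection matrix, d = 4
Y_normal = scaler.transform(X_normal) @ A
cov_inv = np.linalg.inv(np.cov(Y_normal, rowvar=False))   # Lambda^{-1}
limit = t2_limit(n=500, d=4, alpha=0.01)                  # 99% confidence level

# One sample at the normal mean and one shifted strongly along the first projection direction.
x_new = np.vstack([scaler.mean_, scaler.mean_ + 20.0 * scaler.std_ * A[:, 0]])
alarms = t2_statistic(scaler.transform(x_new) @ A, cov_inv) > limit
print(alarms)     # first sample in control, second flagged as faulty
```

Fault samples are always scaled with the normal-data mean and standard deviation, never with their own statistics, so that deviations from normal operation remain visible after standardization.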

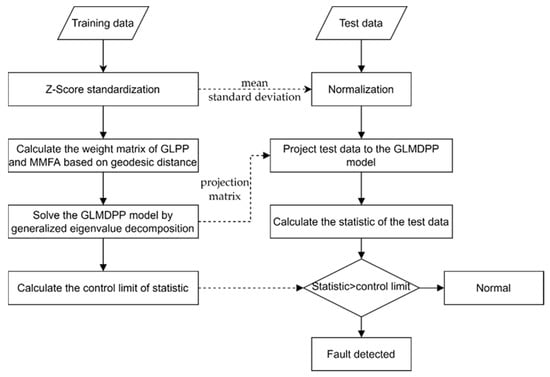

The complete procedure of the GLMDPP-based fault detection method is shown in Figure 1. It consists of two parts: offline modeling and online detection.

Figure 1.

Fault detection procedure based on GLMDPP algorithm.

Offline modelling:

- (1) Historical data, including fault data and normal data, are used as training data, and Z-score standardization is employed to normalize the training data with the mean and standard deviation of the normal data.

- (2) The Euclidean distance-based adjacency weight matrix and marginal sample pair weight matrix are constructed. Based on the adjacency relationship, the geodesic distance is introduced to construct the non-adjacency weight matrix and the non-marginal sample pair weight matrix.

- (3) On the basis of GLPP and MMFA, the objective function of GLMDPP, which extracts inherent and discriminative features simultaneously, is constructed according to the Fisher criterion.

- (4) The objective function of GLMDPP is solved by transforming it into a generalized eigenvalue problem, and the projection matrix is obtained.

- (5) The control limit of the $T^2$ statistic is calculated as shown in Equation (25).

Online monitoring:

- (1) Online test data are collected and normalized with the mean and standard deviation of the normal training data.

- (2) The features of the test data are calculated with the projection matrix obtained from offline modeling.

- (3) The $T^2$ statistic is calculated and compared with its control limit.

- (4) If the $T^2$ statistic of the online test data exceeds its control limit, a fault is detected; otherwise, return to step (1).

5. Case Study

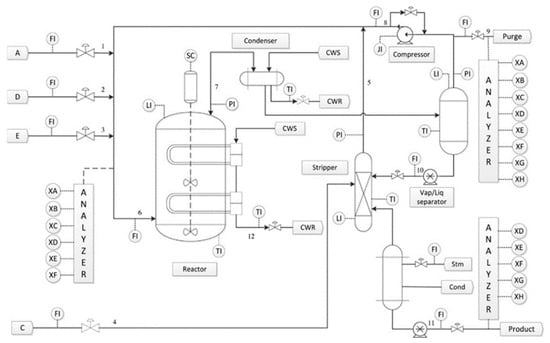

To demonstrate the fault detection performance of the proposed method, the TE process is used as a benchmark []. The flow diagram of the TE process is shown in Figure 2. It contains five major unit operations: a reactor, a product condenser, a vapor–liquid separator, a recycle compressor, and a product stripper. The TE process involves 52 variables, including 41 measured variables and 11 manipulated variables. Because 19 quality measurements are sampled less frequently, the remaining 22 measured variables and 11 manipulated variables, sampled every 3 min, are usually used as monitoring variables. In the TE process, 21 types of faults can be generated to test detection performance; the description of each fault is given in Table 1. The variable information of the TE process is listed in Table 2.

Figure 2.

Flow diagram of the TE process.

Table 1.

Programmed faults in the TE process.

Table 2.

Variable information of the TE process for process monitoring.

The data used in this research are available from Prof. Braatz’s homepage at MIT and are divided into training data and test data []. Normal data with 500 samples and 21 types of fault data with 480 samples each are used as training data. Corresponding to these faults, 21 test datasets with 960 samples each are used as test data, and the fault is introduced at the 161st sample of each dataset.

To illustrate the detection performance of the proposed method, GLPP-based and PCA-based fault detection methods are used for comparison. As in PCA, a cumulative percent variance of 90% is used to determine the number $d$ of projection vectors for all three methods. The same number of nearest neighbors, $k_1 = 10$, is chosen for both GLPP and GLMDPP. The number of marginal sample pairs, $k_2 = 50$, is determined empirically for GLMDPP. The confidence level for the control limits of the monitoring statistics is set to 99% for all three methods. To compare the detection performance of the methods quantitatively, the fault detection rate (FDR) and false alarm rate (FAR) are adopted and are calculated as follows:

The FDRs and FARs of the three monitoring methods on the TE process are shown in Table 3. Previous studies have shown that faults 3, 9, and 15 are difficult to detect because of their small magnitudes []. Therefore, the detection performance on the remaining 18 faults is compared and their average values are calculated. The bold numbers in Table 3 indicate the best FDR for the corresponding statistic under each fault. The proposed method gives the highest detection rate for 17 of the 18 faults, with significant improvements for faults 10, 16, and 21 in particular. Compared with PCA, which considers only the global features of the data, the proposed method has higher detection rates on almost all faults. Compared with GLPP, the proposed method achieves the highest detection rate on these 17 faults, the exception being fault 2, where the difference in detection rate is small. The average FDR over the 18 faults in Table 3 further demonstrates the performance of the proposed method. The FAR is also an important indicator for evaluating detection performance: the proposed method has a low average FAR, only slightly higher than that of GLPP. In addition, the fault detection times of the different methods are presented in Table 4, with the earliest valid detection time for each fault highlighted in bold. Compared with PCA, both GLPP and the proposed method perform well in terms of detection time. Compared with GLPP, the proposed method has the same detection time for most faults and detects faults 8 and 12 notably earlier. The differences in detection performance between the proposed method and the comparative methods are due to the introduction of known fault data into the modeling process. Specifically, by combining inherent feature extraction with discriminative feature extraction, historical data, including normal condition data and known fault data, are merged into the training data, so that better detection performance is achieved on faults with characteristics similar to known cases. In general, the proposed method has the best detection performance of the three methods owing to its comprehensive extraction of inherent and discriminative features.
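The evaluation quantities can be sketched as below, assuming the usual definitions because the formulas themselves are not reproduced in this text: FDR is the percentage of samples after fault onset whose statistic exceeds the control limit, and FAR is the percentage of samples before onset that do. With the TE test sets described in this section (960 samples, fault introduced at the 161st sample), the first 160 samples are treated as normal. The CPV helper selects the smallest $d$ whose cumulative share of a non-negative spectrum reaches 90%; how that spectrum is defined for GLPP and GLMDPP is not spelled out in the text, so its input is an assumption.

```python
import numpy as np


def fdr_far(statistic, limit, fault_start=160):
    """FDR and FAR in percent; fault_start is the zero-based index of the first faulty sample."""
    alarms = np.asarray(statistic) > limit
    far = 100.0 * alarms[:fault_start].sum() / fault_start
    fdr = 100.0 * alarms[fault_start:].sum() / (len(alarms) - fault_start)
    return float(fdr), float(far)


def cpv_dimension(eigenvalues, threshold=0.90):
    """Smallest d whose cumulative share of the (non-negative) spectrum reaches threshold."""
    vals = np.sort(np.asarray(eigenvalues, dtype=float))[::-1]
    ratio = np.cumsum(vals) / vals.sum()
    return int(np.searchsorted(ratio, threshold) + 1)


stat = np.zeros(960)
stat[:8] = 2.0          # 8 false alarms among the 160 normal samples
stat[160:560] = 2.0     # 400 of the 800 faulty samples exceed the limit
print(fdr_far(stat, limit=1.0))              # (50.0, 5.0)
print(cpv_dimension([6.0, 2.5, 1.0, 0.5]))   # 3
```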

Table 3.

FDRs (%) and FARs (%) of different methods on the TE process.

Table 4.

Fault detection times of different methods on the TE process.

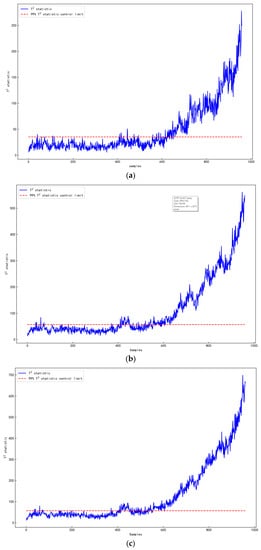

To further illustrate the effectiveness of the proposed method, the detection results of the three methods for fault 21 are presented in Figure 3. The PCA method detects the fault at the 627th sample and has the lowest FDR, whereas GLPP and GLMDPP both detect this fault at the 417th sample. Compared with GLPP, GLMDPP not only has a higher FDR but also a lower FAR after detecting the fault.
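A detection-time helper can be sketched as below. The text does not define what makes a detection "valid", so the `consecutive` parameter (a hypothetical rule requiring several successive alarms) is an assumption; with `consecutive=1` the function simply returns the first sample at or after fault onset whose statistic exceeds the limit, as a 1-based sample index comparable to the 417th-sample detections reported for fault 21. The toy statistic below is constructed, not taken from the TE results.

```python
import numpy as np


def detection_time(statistic, limit, fault_start=160, consecutive=1):
    """1-based index of the first sample of the first run of `consecutive` alarms
    at or after fault onset (zero-based index fault_start); None if never detected."""
    alarms = np.asarray(statistic) > limit
    run = 0
    for i in range(fault_start, len(alarms)):
        run = run + 1 if alarms[i] else 0
        if run >= consecutive:
            return i - consecutive + 2
    return None


stat = np.zeros(960)
stat[300] = 5.0           # an isolated alarm at the 301st sample
stat[416:] = 5.0          # sustained alarms from the 417th sample on
print(detection_time(stat, 1.0))                  # 301
print(detection_time(stat, 1.0, consecutive=3))   # 417
```

Requiring a short run of alarms trades a slightly later detection for robustness against isolated spikes; whether Table 4 uses such a rule is not stated.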

Figure 3.

Detection charts of the three methods for fault 21: (a) PCA; (b) GLPP; (c) GLMDPP.

6. Conclusions

To address the absence of discriminative information in most fault detection methods based on normal condition data, a global-local marginal discriminant preserving projection (GLMDPP)-based fault detection method is proposed. Historical data, including normal and fault data, are used to construct the GLMDPP model. Specifically, GLPP and MMFA-based marginal relationships are integrated through the Fisher framework to extract both the inherent and discriminative features of the data, which is expected to yield better performance on faults with characteristics similar to known cases. The proposed monitoring method is tested on the TE process and compared with PCA-based and GLPP-based monitoring methods. The results confirm that considering historical fault data can improve fault detection performance, which offers a new direction for improving fault detection methods that rely only on normal data. In future work, the imbalance between historical fault data and normal data and the multimodal characteristics of real processes will be studied.

Author Contributions

Conceptualization, Y.L.; Methodology, Y.L.; Software, Y.L. and F.M.; Validation, Y.L., C.J. and W.S.; Formal Analysis, Y.L.; Investigation, Y.L., F.M. and C.J.; Resources, W.S. and J.W.; Data Curation, W.S. and Y.L.; Writing—Original Draft Preparation, Y.L.; Writing—Review and Editing, W.S., J.W., F.M. and Y.L.; Visualization, Y.L.; Supervision, W.S. and J.W.; Project Administration, W.S. and J.W. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Data Availability Statement

The data applied in this research can be obtained at https://github.com/camaramm/tennessee-eastman-profBraatz (accessed on 22 November 2021).

Conflicts of Interest

The authors declare no conflict of interest.

References

- Qin, S.J.; Chiang, L.H. Advances and opportunities in machine learning for process data analytics. Comput. Chem. Eng. 2019, 126, 465–473.

- Ge, Z. Review on data-driven modeling and monitoring for plant-wide industrial processes. Chemom. Intell. Lab. Syst. 2017, 171, 16–25.

- Joe Qin, S. Statistical process monitoring: Basics and beyond. J. Chemom. J. Chemom. Soc. 2003, 17, 480–502.

- Ku, W.; Storer, R.H.; Georgakis, C. Disturbance detection and isolation by dynamic principal component analysis. Chemom. Intell. Lab. Syst. 1995, 30, 179–196.

- Choi, S.W.; Lee, C.; Lee, J.-M.; Park, J.H.; Lee, I.-B. Fault detection and identification of nonlinear processes based on kernel PCA. Chemom. Intell. Lab. Syst. 2005, 75, 55–67.

- Lee, J.M.; Qin, S.J.; Lee, I.B. Fault detection and diagnosis based on modified independent component analysis. AIChE J. 2006, 52, 3501–3514.

- Hu, K.; Yuan, J. Multivariate statistical process control based on multiway locality preserving projections. J. Process Control 2008, 18, 797–807.

- Roweis, S.T.; Saul, L.K. Nonlinear dimensionality reduction by locally linear embedding. Science 2000, 290, 2323–2326.

- Belkin, M.; Niyogi, P. Laplacian eigenmaps and spectral techniques for embedding and clustering. In Proceedings of the 14th International Conference on Neural Information Processing Systems: Natural and Synthetic, Vancouver, BC, Canada, 8–14 December 2001.

- Zhang, Z.; Zha, H. Principal Manifolds and Nonlinear Dimensionality Reduction via Tangent Space Alignment. SIAM J. Sci. Comput. 2004, 26, 313–338.

- He, X.; Niyogi, P. Locality preserving projections. Adv. Neural Inf. Processing Syst. 2003, 16, 153–160.

- He, X.; Cai, D.; Yan, S.; Zhang, H.-J. Neighborhood preserving embedding. In Proceedings of the Tenth IEEE International Conference on Computer Vision (ICCV’05), Beijing, China, 17–21 October 2005; Volume 1, pp. 1208–1213.

- Zhang, T.; Yang, J.; Zhao, D.; Ge, X. Linear local tangent space alignment and application to face recognition. Neurocomputing 2007, 70, 1547–1553.

- Zhang, M.; Ge, Z.; Song, Z.; Fu, R. Global–Local Structure Analysis Model and Its Application for Fault Detection and Identification. Ind. Eng. Chem. Res. 2011, 50, 6837–6848.

- Yu, J. Local and global principal component analysis for process monitoring. J. Process Control 2012, 22, 1358–1373.

- Luo, L. Process Monitoring with Global–Local Preserving Projections. Ind. Eng. Chem. Res. 2014, 53, 7696–7705.

- Ma, Y.; Song, B.; Shi, H.; Yang, Y. Fault detection via local and nonlocal embedding. Chem. Eng. Res. Des. 2015, 94, 538–548.

- Dong, J.; Zhang, C.; Peng, K. A novel industrial process monitoring method based on improved local tangent space alignment algorithm. Neurocomputing 2020, 405, 114–125.

- Fu, Y. Local coordinates and global structure preservation for fault detection and diagnosis. Meas. Sci. Technol. 2021, 32, 115111.

- Luo, L.; Bao, S.; Mao, J.; Tang, D. Nonlinear process monitoring using data-dependent kernel global–local preserving projections. Ind. Eng. Chem. Res. 2015, 54, 11126–11138.

- Bao, S.; Luo, L.; Mao, J.; Tang, D. Improved fault detection and diagnosis using sparse global-local preserving projections. J. Process Control 2016, 47, 121–135.

- Luo, L.; Bao, S.; Mao, J.; Tang, D. Nonlocal and local structure preserving projection and its application to fault detection. Chemom. Intell. Lab. Syst. 2016, 157, 177–188.

- Zhan, C.; Li, S.; Yang, Y. Enhanced Fault Detection Based on Ensemble Global–Local Preserving Projections with Quantitative Global–Local Structure Analysis. Ind. Eng. Chem. Res. 2017, 56, 10743–10755.

- Tang, Q.; Liu, Y.; Chai, Y.; Huang, C.; Liu, B. Dynamic process monitoring based on canonical global and local preserving projection analysis. J. Process Control 2021, 106, 221–232.

- Huang, C.; Chai, Y.; Liu, B.; Tang, Q.; Qi, F. Industrial process fault detection based on KGLPP model with Cam weighted distance. J. Process Control 2021, 106, 110–121.

- Cui, P.; Wang, X.; Yang, Y. Nonparametric manifold learning approach for improved process monitoring. Can. J. Chem. Eng. 2022, 100, 67–89.

- Yang, F.; Cui, Y.; Wu, F.; Zhang, R. Fault Monitoring of Chemical Process Based on Sliding Window Wavelet DenoisingGLPP. Processes 2021, 9, 86.

- Huang, J.; Ersoy, O.K.; Yan, X. Slow feature analysis based on online feature reordering and feature selection for dynamic chemical process monitoring. Chemom. Intell. Lab. Syst. 2017, 169, 1–11.

- Fisher, R.A. The use of multiple measurements in taxonomic problems. Ann. Eugen. 1936, 7, 179–188.

- Belhumeur, P.N.; Hespanha, J.P.; Kriegman, D.J. Eigenfaces vs. fisherfaces: Recognition using class specific linear projection. IEEE Trans. Pattern Anal. Mach. Intell. 1997, 19, 711–720.

- Pylkkönen, J. LDA based feature estimation methods for LVCSR. In Proceedings of the Ninth International Conference on Spoken Language Processing, Pittsburgh, Pennsylvania, 17–21 September 2006.

- Yan, S.; Xu, D.; Zhang, B.; Zhang, H.-J.; Yang, Q.; Lin, S. Graph embedding and extensions: A general framework for dimensionality reduction. IEEE Trans. Pattern Anal. Mach. Intell. 2006, 29, 40–51.

- Huang, Z.; Zhu, H.; Zhou, J.T.; Peng, X. Multiple Marginal Fisher Analysis. IEEE Trans. Ind. Electron. 2019, 66, 9798–9807.

- Fu, Y.; Luo, C. Joint Structure Preserving Embedding Model and Its Application for Process Monitoring. Ind. Eng. Chem. Res. 2019, 58, 20667–20679.

- Dijkstra, E.W. A note on two problems in connexion with graphs. Numer. Math. 1959, 1, 269–271.

- Downs, J.J.; Vogel, E.F. A plant-wide industrial process control problem. Comput. Chem. Eng. 1993, 17, 245–255.

- Chiang, L.; Russell, E.; Braatz, R. Fault Detection and Diagnosis in Industrial Systems; Springer Science & Business Media: Berlin/Heidelberg, Germany, 2001.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).