Abstract

With the rapid development of information technology, there is an increasing demand for the digital preservation of traditional festival culture and the extraction of relevant knowledge. However, existing research on Named Entity Recognition (NER) for Chinese traditional festival culture lacks support from high-quality corpora and dedicated model methods. To address this gap, this study proposes a Named Entity Recognition model, CLFF-NER, which integrates multi-source heterogeneous information. The model operates as follows: first, Multilingual BERT is employed to obtain the contextual semantic representations of Chinese and English sentences. Subsequently, a Multiconvolutional Kernel Network (MKN) is used to extract the local structural features of entities. Then, a Transformer module is introduced to achieve cross-lingual, cross-attention fusion of Chinese and English semantics. Furthermore, a Graph Neural Network (GNN) is utilized to selectively supplement useful English information, thereby alleviating the interference caused by redundant information. Finally, a gating mechanism and Conditional Random Field (CRF) are combined to jointly optimize the recognition results. Experiments were conducted on the public Chinese Festival Culture Dataset (CTFCDataSet), and the model achieved 89.45%, 90.01%, and 89.73% in precision, recall, and F1 score, respectively—significantly outperforming a range of mainstream baseline models. Meanwhile, the model also demonstrated competitive performance on two other public datasets, Resume and Weibo, which verifies its strong cross-domain generalization ability.

1. Introduction

Chinese traditional festivals are a crucial component of the long-standing historical and cultural heritage of the Chinese nation, boasting diverse forms and rich connotations. The formation of traditional festivals is not only a reflection of a nation’s historical development process but also a process of long-term accumulation and condensation of a country’s historical culture. The preservation of Chinese traditional festivals offers the following benefits: (1) it enables the intergenerational inheritance of these precious cultural heritages, allowing future generations to understand and recognize the historical and cultural roots of the Chinese nation; (2) certain festivals can drive the development of related industries such as tourism, catering, shopping, and entertainment, fostering a “festival economy”; (3) inheriting traditional festivals facilitates a better display of the profoundness and extensiveness of Chinese culture to the world, promotes exchanges and dialogs between different cultures, and enhances the international influence of Chinese culture. The application of digital technologies to protect Chinese traditional festival culture is a forward-looking approach that keeps pace with the times. Currently, the task of Chinese NER rarely involves traditional festivals. The purpose of this study is to attract more researchers to engage in this field and fill the gaps in relevant research.

NER is an information extraction technology that holds significant advantages in extracting valuable information from text data. It serves as a fundamental task for downstream applications such as Natural Language Processing (NLP) and information extraction systems [1,2,3]. Text encoding is also a key technology, and a high-quality encoder plays a crucial role in improving model performance. Recently, the BERT pre-trained language model [4,5] has been widely applied in NER tasks. By conducting unsupervised pre-training on large-scale text corpora, BERT generates word embeddings by considering both left and right contextual information, capturing comprehensive semantic dependencies and contextual nuances of the text. For instance, in the sentence “Apple Inc. released the new iPhone”, the representation of “Apple” is influenced by both “Inc.” and “iPhone”. Therefore, the model proposed in this study adopts the BERT pre-trained language model for sentence encoding. In recent years, various BERT-based deep learning and machine learning models have been proposed, such as BERT-BiLSTM [6], BERT-CRF [7], BERT-CNN [8], and BERT-BiLSTM-CRF [9]. However, these models often underperform on Chinese traditional festival entity recognition, as they struggle with long-tail, regional, or transliterated festival names, and generally lack mechanisms to incorporate external knowledge or cross-lingual information. Deep learning methods like Convolutional Neural Networks (CNNs) and Bidirectional Long Short-Term Memory (BiLSTM) networks are widely used in information extraction tasks. In the field of text processing, CNNs are mainly used to extract local information from sentences—sufficient local information usually contributes to improved model performance—while LSTM networks excel at capturing long-distance dependencies and are thus employed to extract contextual information from sentences.

This study focuses on Chinese traditional festivals and trains the model using the public CTFCDataSet. The data of this dataset is sourced from the China National Cultural Resource Repository (www.minwang.com.cn/mzwhzyk/674771/index.html (accessed on November to December 2023)). Inspired by studies [10,11,12] that introduce multimodal data to enhance entity recognition performance, this paper explores the use of English data to investigate whether cross-lingual information can improve the recognition of domain-specific entities in Chinese. Specifically, since Chinese traditional festivals often involve long-tail, regional, transliterated, or compound names, relying solely on Chinese text may lead to missed or inaccurate recognition. Therefore, in this study, Chinese sentences are translated into English. English is used as auxiliary information to be fused with Chinese features, aiming to improve the model’s performance in recognizing culturally rich festival entities. This study proposes the CLFF-NER model, based on the Multilingual BERT pre-trained language model, which can encode over 100 languages, including Chinese and English. Since the dataset used in this study only contains Chinese sentences, corresponding English sentences were translated from the Chinese ones. Therefore, Multilingual BERT was adopted as the encoder to simultaneously encode Chinese sentences and their translated English counterparts in this paper. The translation service was provided via Baidu Cloud (https://cloud.baidu.com (accessed on 7 November 2024)). The contributions of this study are as follows:

This study proposes the CLFF-NER model, an NER framework that integrates multi-source heterogeneous information. The model leverages English sentences as auxiliary information to enhance the performance of Chinese NER models.

The model uses a Transformer module to fully fuse Chinese and English features, while a GNN module selectively integrates English features into Chinese features. To the best of our knowledge, this study is the first to utilize English features as auxiliary information to improve the performance of Chinese NER models.

Experiments on the public Resume and Weibo datasets were conducted to verify the model’s generalization ability. The model achieved competitive results on both datasets, fully demonstrating its strong cross-domain generalization performance.

2. Related Work

In recent years, the versatility of NER has expanded to multiple domains, including medicine and finance [13,14,15,16,17,18,19,20,21]. In the medical domain, there are several existing datasets in this field, such as MIMIC-III, i2b2, NLM-2018, and BioCreative, each containing entities of varying quantities and types. This study focuses on Chinese traditional festivals; therefore, it employs the public CTFCDataSet. The data source of this dataset was specified in the previous section. The dataset used in this study includes six categories of entities, namely PER (Person), LOC (Location), DATE (Date), NATION (Ethnic Group), FESTIVAL (Festival), and ORG (Organization). For example, in the sentence “The Naqu Qiangtang Qiaqing Horse Racing Festival is a shining pearl in the traditional festival culture of Tibet and enjoys a high reputation both inside and outside the region,” “Naqu Qiangtang Qiaqing” is labeled as the LOC entity type, and “Horse Racing Festival” is labeled as the FESTIVAL entity type.

NER is one of the key technologies in information extraction, involving the detection and classification of words and phrases in text [22]. The understanding of a sentence’s meaning lies in grasping the key words within it, and these words are usually labeled as named entities of specific categories. Therefore, NER holds significant research significance and application value in NLP [23,24,25].

Probability-based models, including Hidden Markov Models (HMM) and CRFs, have been widely applied in various domains—until they were surpassed by neural networks in recent years. For instance, some papers introduced the LSTM-CRF model, which combines deep learning models and probability models [26,27,28]. The aim of this integration is to leverage the representation learning capability of neural networks and the structural loss of probability models.

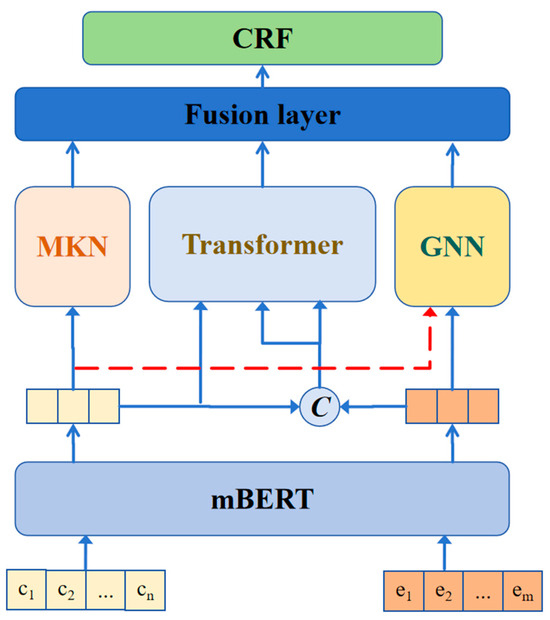

Existing NER models, including BERT-CRF, BERT-Softmax, BERT-BiLSTM-CRF, and BiLSTM-CRF, have achieved strong performance in general domains. However, they exhibit limitations in Chinese traditional festival recognition: low-frequency or regional festivals are often missed; transliterated or compound festival names are hard to identify; and none of these models incorporate external knowledge or cross-lingual information. These limitations motivate the development of CLFF-NER, which leverages multilingual features and heterogeneous information to improve the recognition of culturally rich festival terms, and its structure is illustrated in Figure 1.

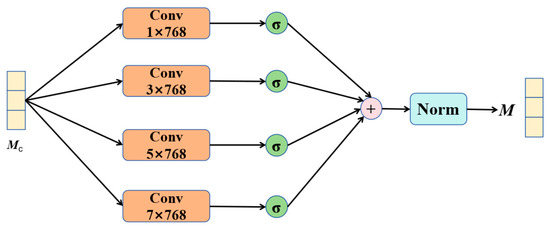

The CLFF-NER model uses CNNs to extract entity information of different lengths, and its structure is illustrated in Figure 2. This module utilizes four convolution kernels of different sizes to extract local information at four distinct scales. Researchers have proposed NER models tailored to different domains, in line with the unique characteristics of each field.

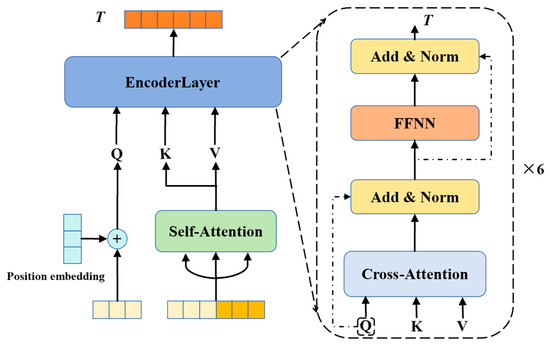

In recent years, due to its excellent performance and high efficiency, the Transformer has been widely applied in various downstream tasks, such as image segmentation and text translation. As a deep learning-based method, the Transformer architecture comprises encoder and decoder modules [29,30]. However, only the encoder module is utilized in the proposed model, and its primary function is to fully fuse Chinese and English features. Through multi-layer feature interaction computations, the model can achieve sufficient fusion of these bilingual features, and the structure of this Transformer module is illustrated in Figure 3.

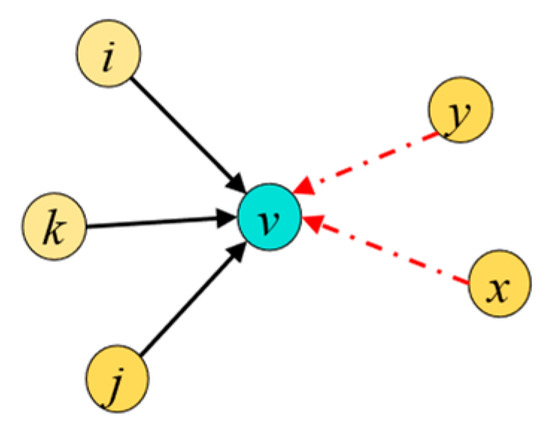

GNNs are a class of deep neural networks that leverage graph properties to extract features for each node. In this study, graph properties are employed to selectively integrate English features into Chinese features, and the structure of the GNN module is presented in Figure 4.

The proposed model operates as follows: First, Multilingual BERT is used to encode Chinese texts and their corresponding English translations. Second, the MKN module extracts multi-scale local information. Third, the Transformer and GNN modules handle the fusion of Chinese and English features—specifically, the Transformer performs non-discriminative fusion, while the GNN module selectively integrates English features into Chinese features using attention feature weights. Subsequently, the features from these three modules are fused; after decoding via CRF, the final prediction results are obtained. Detailed descriptions of these three modules are provided in Section 3.

3. Method

The structure of the model proposed in this study is illustrated in Figure 1. Specifically, this model consists of three parts, namely the encoding layer, the feature extraction layer, and the decoding layer. The encoding layer employs the pre-trained BERT model as its encoder. The feature extraction layer comprises three modules: the MKN module, the Transformer module, and the GNN module. Finally, the decoding layer performs decoding to generate the final recognition results.

Figure 1.

CLFF-NER Structure Diagram.

The MKN module is designed to extract multi-scale local features, making full use of local information to enrich sentence representations; the Transformer module fuses English and Chinese features; and the GNN module selectively fuses English features into Chinese features.

Given a Chinese sentence Sc = {c1, c2, …, cn} and its corresponding English sentence Se = {e1, e2, …, em}, ci refers to the i-th character in the Chinese sentence, n represents the number of characters in the Chinese sentence, ej refers to the j-th word in the English sentence, and m represents the number of words in the English sentence. After BERT encoding, the corresponding embedding vectors Mc and Me are obtained, and the calculation formula is as follows:

$$M_c = \mathrm{BERT}(S_c), \qquad M_e = \mathrm{BERT}(S_e)$$

Here, Mc ∈ B × n × 768 and Me ∈ B × m × 768, where B denotes the batch size. Detailed implementation details of each module will be provided in subsequent sections.

3.1. MKN Module

The MKN module is constructed using multiple CNNs with different convolution kernel sizes. The structure of this module is illustrated in Figure 2.

Figure 2.

Schematic diagram of the MKN structure.

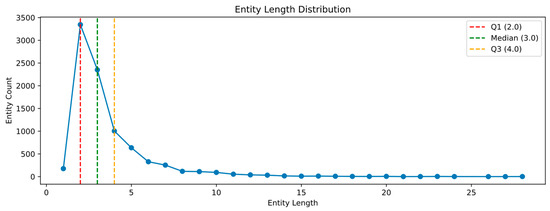

A statistical analysis of the entity length distribution in the dataset shows that over 90% of entities have a length between 1 and 7. Local information in sentences therefore plays a crucial role, and this module is designed to extract more local information to enrich sentence representations. Specifically, the module uses CNNs with four convolutional kernels of size i × 768, where i ∈ {1, 3, 5, 7}, to extract local features. Its calculation formula is as follows:

In the formula, Mc ∈ B × n × 768, ReLU denotes the activation function max(0, x), and Norm represents normalization.
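The multi-scale convolution described above can be sketched in NumPy. This is a minimal sketch rather than the authors' implementation: the zero "same" padding, the summation of the four branches with a residual connection, the use of layer normalization as the Norm step, and the small feature size in the usage note are all assumptions, and `conv1d_same`, `layer_norm`, `mkn`, and `init_params` are hypothetical helper names.

```python
import numpy as np

KERNEL_SIZES = (1, 3, 5, 7)  # kernel heights i given in the text


def conv1d_same(x, w, b):
    """Zero-padded 1D convolution over the sequence axis.

    x: (n, d_in) token features; w: (k, d_in, d_out) kernel; b: (d_out,) bias.
    Returns (n, d_out), so the sequence length is preserved (assumed padding).
    """
    k = w.shape[0]
    pad = k // 2
    xp = np.pad(x, ((pad, pad), (0, 0)))
    out = np.empty((x.shape[0], w.shape[2]))
    for t in range(x.shape[0]):
        window = xp[t:t + k]  # the k neighbouring tokens centred on position t
        out[t] = np.tensordot(window, w, axes=([0, 1], [0, 1])) + b
    return out


def layer_norm(x, eps=1e-6):
    """Normalize each token vector to zero mean and unit scale."""
    mean = x.mean(axis=-1, keepdims=True)
    std = x.std(axis=-1, keepdims=True)
    return (x - mean) / (std + eps)


def mkn(x, params):
    """Sum ReLU(conv_i(x)) over all kernel sizes, add x, then normalize (assumed)."""
    branches = [np.maximum(0.0, conv1d_same(x, w, b)) for w, b in params]
    return layer_norm(x + sum(branches))


def init_params(d, rng):
    """Random kernels for illustration only; a real model would learn them."""
    return [(rng.normal(scale=0.1, size=(k, d, d)), np.zeros(d)) for k in KERNEL_SIZES]
```

For a single sentence with 10 characters and a toy feature size of 16, `mkn(x, init_params(16, rng))` returns an array with the same shape as `x`, so the output can be added to the other feature streams without reshaping.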

3.2. Transformer Module

This module is improved based on the Transformer, where the self-attention mechanism of the Transformer is modified to a cross-attention mechanism. The structure of this module is illustrated in Figure 3. Specifically, the concatenated English and Chinese features serve as the key and value in the attention mechanism, while the Chinese features act as the query. Before feeding the features into the Transformer module, K and V undergo preliminary fusion via self-attention, and the fused results are then input into the Transformer module. The calculation formulas are as follows:

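$$\mathrm{Attention}(Q, K, V) = \mathrm{softmax}\!\left(\frac{QK^{T}}{\sqrt{d_k}}\right)V$$

In the formula, ‘;’ denotes the concatenation operation, the concatenated features [Mc; Me] ∈ B × (n + m) × 768 provide the key K and the value V, the query Q = Mc ∈ B × n × 768, T denotes matrix transposition, and dk denotes the dimension of the key vectors.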
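The cross-attention step can be illustrated with a short NumPy sketch. It assumes a single attention head, omits the learned projection matrices and the preliminary self-attention over K and V, and processes one sentence pair at a time; `softmax` and `cross_attention` are hypothetical helper names, not the authors' code.

```python
import numpy as np


def softmax(scores):
    """Row-wise softmax with the usual max-shift for numerical stability."""
    shifted = scores - scores.max(axis=-1, keepdims=True)
    weights = np.exp(shifted)
    return weights / weights.sum(axis=-1, keepdims=True)


def cross_attention(m_c, m_e):
    """Chinese features query the concatenation [M_c; M_e].

    m_c: (n, d) Chinese features, used as the query Q.
    m_e: (m, d) English features.
    Returns the fused features (n, d) and the attention weights (n, n + m).
    """
    kv = np.concatenate([m_c, m_e], axis=0)  # key and value: (n + m, d)
    d_k = kv.shape[-1]                       # dimension of the key vectors
    weights = softmax(m_c @ kv.T / np.sqrt(d_k))
    return weights @ kv, weights
```

Because the query has n rows and the key and value have n + m rows, the output keeps the Chinese sequence length while every Chinese position can draw on both languages.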

Figure 3.

Schematic diagram of the Transformer module structure.

The encoder of this module consists of 6 layers. In the first layer, the query is the Chinese feature Mc; in each subsequent layer, the query is the output of the previous layer. K and V always denote the key and value, respectively.

Positional encoding is also incorporated into the model, whose purpose is to inject positional information into the input sequence, thereby enabling the model to perceive the position of each element in the sequence. The calculation formula for positional encoding is as follows:

$$PE_{(pos,\,2i)} = \sin\!\left(\frac{pos}{10000^{2i/\mathrm{embed\_size}}}\right) \tag{6}$$

$$PE_{(pos,\,2i+1)} = \cos\!\left(\frac{pos}{10000^{2i/\mathrm{embed\_size}}}\right) \tag{7}$$

In the formula, pos denotes the position of an element in the sequence, and i indexes pairs of embedding dimensions. Specifically, even dimensions (2i) are calculated using Equation (6), and odd dimensions (2i + 1) are calculated using Equation (7). embed_size denotes the embedding dimension. The calculation formula for this module is as follows:

In the formula, H ∈ B × n × 768, ReLU denotes the activation function max(0, x), and Norm represents normalization. Wi and bi are trainable parameters. Since Q and K, V have different sequence lengths (n and n + m, respectively), Scaled Dot-Product Attention is adopted so that each query position can attend to all key and value positions.
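The positional encoding incorporated into this module can be sketched in plain Python. The sketch assumes the standard sinusoidal scheme of the original Transformer, in which sine fills the even embedding dimensions and cosine the odd ones; `positional_encoding` is a hypothetical helper name.

```python
import math


def positional_encoding(seq_len, embed_size):
    """Return a seq_len x embed_size table of sinusoidal position encodings."""
    table = [[0.0] * embed_size for _ in range(seq_len)]
    for pos in range(seq_len):
        for dim in range(embed_size):
            pair = dim // 2  # dimensions 2i and 2i + 1 share one frequency
            angle = pos / (10000 ** (2 * pair / embed_size))
            # Even dimensions use sine, odd dimensions use cosine.
            table[pos][dim] = math.sin(angle) if dim % 2 == 0 else math.cos(angle)
    return table
```

Position 0 is encoded as alternating 0 and 1 values, and each later position rotates every sine and cosine pair by a frequency that decreases with the dimension index, which is what lets attention layers distinguish token order.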

The function of this module is to fully fuse Chinese and English features using the attention mechanism; however, this process may introduce noisy information. Based on this consideration, a selective feature fusion module is proposed. This module, leveraging the attention mechanism, obtains weight scores of Chinese features relative to both Chinese and English features. Although selectively integrating English features into Chinese features reduces the introduction of noisy data, it also loses some effective information. Thus, this study employs both complete fusion and selective fusion of English features.

3.3. GNN Module

This study employs a GNN to selectively integrate English features into Chinese features. Compared with the Transformer module, this module reduces the introduction of noisy data, which can better improve the model’s performance. Its structure is illustrated in Figure 4. Specifically, in Figure 4, v, i, j, and k belong to Chinese nodes, while x and y are English nodes. The attention mechanism is used to obtain weight scores of Chinese features relative to both Chinese and English features, which are then used to construct the graph and the weights of the graph’s edges. The weight calculation method is as follows:

Figure 4.

GNN Structure Diagram.

In the formula, the weight is the attention score of the i-th character with respect to the j-th character. Based on the above calculation results, graph nodes are aggregated as follows:

In the formula, the embedding vector of the k-th character in the Chinese sentence has dimension 1 × 768, and Nc denotes the number of nodes connected by an edge to node i.

The result calculated by Equation (16) is the outcome of Chinese feature aggregation, which has not yet aggregated English features into Chinese features. To address this issue, English embeddings are aggregated into the Chinese node representations in a directed manner, and the formula is as follows:

In the formula, the embedding vector of the k-th word in the English sentence has dimension 1 × 768, and Ne denotes the number of nodes connected by an edge to node j. To avoid division by zero, a very small value is added to Ne. Finally, the output of the GNN module is denoted as G ∈ n × 768.
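The selective aggregation can be illustrated with a NumPy sketch. The text does not state how edges are derived from the attention scores, so the rule used here (keep a link only when its attention weight exceeds the uniform level 1 / (n + m)) is purely an assumption, as are the unprojected dot-product scores and the simple sum of the Chinese and English parts; `selective_aggregate` is a hypothetical name.

```python
import numpy as np


def selective_aggregate(m_c, m_e, threshold=None, eps=1e-8):
    """Aggregate Chinese and selected English node features into Chinese nodes.

    m_c: (n, d) Chinese node features; m_e: (m, d) English node features.
    Returns G with shape (n, d).
    """
    n, d = m_c.shape
    nodes = np.concatenate([m_c, m_e], axis=0)          # (n + m, d)
    scores = m_c @ nodes.T / np.sqrt(d)
    scores = scores - scores.max(axis=-1, keepdims=True)
    attn = np.exp(scores)
    attn = attn / attn.sum(axis=-1, keepdims=True)      # (n, n + m) edge weights

    if threshold is None:
        threshold = 1.0 / nodes.shape[0]                # uniform-attention level (assumed)
    edges = attn > threshold                            # keep only the stronger links
    weights = attn * edges

    n_c = edges[:, :n].sum(axis=-1, keepdims=True)      # Chinese neighbours per node
    n_e = edges[:, n:].sum(axis=-1, keepdims=True)      # English neighbours per node
    chinese_part = weights[:, :n] @ m_c / (n_c + eps)
    english_part = weights[:, n:] @ m_e / (n_e + eps)   # eps avoids division by zero
    return chinese_part + english_part
```

A Chinese node with no retained English neighbour receives a zero English contribution instead of a division error, which is the role of the small constant added to Ne.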

3.4. Decode Module

Appropriate feature fusion methods are a key technique for improving model performance. This work adopts residual addition to fuse different features, and the fused features are fed into a Conditional Random Field (CRF) for decoding. The calculation formula is as follows:

The formula uses a trainable matrix, and FC denotes a fully connected layer.

For a given observation sequence X = {x1, x2, x3, …, xm} and its corresponding output label sequence Y = {y1, y2, y3, …, ym}, the results calculated by Equations (18) and (19) are input into the fully connected layer with the aim of mapping features to the label space. Finally, after CRF decoding, the probability scores for each label category are obtained. The calculation is as follows:

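$$\mathrm{score}(X, Y) = \sum_{i=1}^{m} P_{i,\,y_i} + \sum_{i=2}^{m} A_{y_{i-1},\,y_i}$$

In the formula, P denotes the output of the fully connected layer, and A denotes the transition matrix. The normalized calculation of score(X, Y) is as follows:

$$p(Y \mid X) = \frac{\exp\left(\mathrm{score}(X, Y)\right)}{Z(X)}, \qquad Z(X) = \sum_{Y'} \exp\left(\mathrm{score}(X, Y')\right)$$

In the formula, Z(X) denotes the normalization factor, computed over all candidate label sequences Y′.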
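The scoring and normalization steps can be sketched in plain Python, assuming a standard linear-chain CRF without separate start or end transitions. The emission and transition values are hypothetical toy numbers, and the function names are illustrative only.

```python
import math
from itertools import product


def _logsumexp(values):
    peak = max(values)
    return peak + math.log(sum(math.exp(v - peak) for v in values))


def sequence_score(emissions, transitions, labels):
    """score(X, Y): emission scores plus transition scores along the label path."""
    score = sum(emissions[i][y] for i, y in enumerate(labels))
    score += sum(transitions[a][b] for a, b in zip(labels, labels[1:]))
    return score


def log_partition(emissions, transitions):
    """log Z(X) via the forward algorithm instead of enumerating every path."""
    num_labels = len(transitions)
    alpha = list(emissions[0])
    for step in emissions[1:]:
        alpha = [
            step[cur] + _logsumexp([alpha[prev] + transitions[prev][cur]
                                    for prev in range(num_labels)])
            for cur in range(num_labels)
        ]
    return _logsumexp(alpha)


def sequence_probability(emissions, transitions, labels):
    """p(Y | X) = exp(score(X, Y)) / Z(X)."""
    return math.exp(sequence_score(emissions, transitions, labels)
                    - log_partition(emissions, transitions))


# Toy check with hypothetical scores: probabilities over all label paths sum to 1.
emissions = [[1.0, 0.5], [0.2, 1.5], [0.3, 0.1]]  # 3 tokens, 2 labels
transitions = [[0.5, -0.2], [0.1, 0.3]]
total = sum(sequence_probability(emissions, transitions, list(path))
            for path in product(range(2), repeat=3))
assert abs(total - 1.0) < 1e-9
```

The forward recursion is what makes the normalization factor tractable: its cost grows linearly with sentence length, whereas the number of candidate label sequences grows exponentially.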

To address the class imbalance problem, the model uses a weighted cross-entropy loss function and assigns a weight to each label category. Additionally, it employs a maximum likelihood loss function to achieve better prediction performance. The calculation formulas for Equations (23) and (24) are as follows:

In the formula, N denotes the number of samples, c ∈ C denotes a label category, yic denotes the true label, which is compared with the corresponding predicted label, wc denotes the weight of category c, Nc denotes the number of labels for category c, and No denotes the total number of labels. The final model loss is calculated as follows:

In the final loss, the weighting term is a trainable matrix.

Finally, during the prediction phase, CRF decoding yields the label sequence with the highest output probability.
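Finding the highest-probability label sequence is usually done with the Viterbi algorithm. The sketch below assumes that decoder, since the text does not name one, and uses hypothetical toy scores; `viterbi_decode` is an illustrative name.

```python
def viterbi_decode(emissions, transitions):
    """Return the label path with the highest score, together with that score."""
    num_labels = len(transitions)
    best = list(emissions[0])  # best score of any path ending in each label
    backpointers = []
    for step in emissions[1:]:
        new_best, pointers = [], []
        for cur in range(num_labels):
            candidates = [best[prev] + transitions[prev][cur]
                          for prev in range(num_labels)]
            prev_best = max(range(num_labels), key=candidates.__getitem__)
            new_best.append(candidates[prev_best] + step[cur])
            pointers.append(prev_best)
        best = new_best
        backpointers.append(pointers)

    # Trace back from the best final label to recover the full path.
    last = max(range(num_labels), key=best.__getitem__)
    path = [last]
    for pointers in reversed(backpointers):
        path.append(pointers[path[-1]])
    path.reverse()
    return path, best[last]


# Hypothetical scores for 3 tokens and 2 labels.
emissions = [[1.0, 0.5], [0.2, 1.5], [0.9, 0.1]]
transitions = [[0.5, -0.2], [0.1, 0.4]]
path, score = viterbi_decode(emissions, transitions)
print(path)  # [1, 1, 0]
```

The first token is assigned label 1 even though label 0 has the higher emission score there, because the transition scores favour staying in label 1 before the final switch. This is the kind of label dependency that independent softmax decoding cannot capture.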

4. Experiments

In this section, a series of experiments are conducted on the CTFCDataSet dataset to verify the performance of the proposed CLFF-NER method.

4.1. Dataset

The CTFCDataSet dataset consists of 4254 labeled data entries, containing six entity categories and 10,174 entities. Table 1 presents the statistical information of various entity types in this dataset, and Table 2 shows the statistics of the number of sentences in the train set and the test set.

Table 1.

Quantity Statistics of Various Entity Types in the CTFCDataSet Dataset.

Table 2.

Number of sentences in the train set and the test set in CTFCDataSet.

Data entities are divided into six categories: PER, DATE, ORG, LOC, FESTIVAL, and NATION. In the dataset, data is stored in the form of key–value pairs, with each pair consisting of a text part and a label part. Specifically, the text part contains text about traditional Chinese festival culture, while the label part includes the start and end positions of each entity, along with its category and the entity itself. The labeling scheme used is the ‘BIOS’ format, commonly used in NER tasks, where each character is labeled as B-X, I-X, S-X, or O. B-X indicates that the entity belongs to category X and is the beginning of the entity, I-X indicates that the character is part of an entity of category X and is inside the entity, and S-X denotes a single-character entity that belongs to category X, serving as both the start and end of the entity. The O label indicates that the character does not belong to any entity category.
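The conversion from span annotations to BIOS labels can be sketched as follows. The tuple layout and the inclusive end index are assumptions about the dataset format, the example sentence is hypothetical, and `spans_to_bios` is an illustrative name.

```python
def spans_to_bios(text, entities):
    """Convert character-level entity spans to BIOS labels.

    entities: iterable of (start, end, category) with an inclusive end index
    (the inclusive convention is an assumption about the dataset format).
    """
    labels = ["O"] * len(text)
    for start, end, category in entities:
        if start == end:
            labels[start] = f"S-{category}"      # single-character entity
        else:
            labels[start] = f"B-{category}"      # first character of the entity
            for i in range(start + 1, end + 1):
                labels[i] = f"I-{category}"      # remaining characters
    return labels


# Hypothetical example: a two-character festival and a one-character location.
print(spans_to_bios("春节在京", [(0, 1, "FESTIVAL"), (3, 3, "LOC")]))
# ['B-FESTIVAL', 'I-FESTIVAL', 'O', 'S-LOC']
```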

The dataset is divided into a training set and a testing set in a 9:1 ratio, and the validation set is separated from the training set at a 9:1 ratio using the train_test_split method in the code.
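The two-stage split can be sketched with the standard library alone. This is a stand-in for the train_test_split call mentioned above, not a reproduction of it: the seed, the rounding rule, and the `split_off` helper are assumptions, so the resulting subset sizes may differ slightly from those in Table 2.

```python
import math
import random


def split_off(items, ratio, seed=42):
    """Shuffle a copy of items and hold out a fraction of them (rounded up)."""
    shuffled = list(items)
    random.Random(seed).shuffle(shuffled)
    n_held_out = math.ceil(len(shuffled) * ratio)
    return shuffled[n_held_out:], shuffled[:n_held_out]


entries = list(range(4254))                 # stand-ins for the 4254 labeled entries
train_val, test = split_off(entries, 0.1)   # 9:1 training/testing split
train, val = split_off(train_val, 0.1)      # 9:1 training/validation split
```

Splitting the validation set off the training portion, rather than off the full dataset, keeps the test set untouched during model selection.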

4.2. Evaluation Metrics

This paper uses three metrics: precision (P), recall (R), and F1 score (F1) to evaluate the performance of the CLFF-NER method. Higher metric values indicate a better performance of the corresponding method.

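Here, TP, TN, FP, and FN represent true positives, true negatives, false positives, and false negatives, respectively. The evaluation metrics P, R, and F1 can be calculated as follows:

$$P = \frac{TP}{TP + FP}, \qquad R = \frac{TP}{TP + FN}, \qquad F1 = \frac{2 \times P \times R}{P + R}$$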
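The three metrics can be computed from BIOS label sequences as sketched below. The sketch assumes entity-level exact matching (a prediction counts as a true positive only if its boundaries and category both match), which is common for NER but is not stated explicitly in the text; the helper names and the toy labels are hypothetical.

```python
def extract_entities(labels):
    """Collect (start, end, category) spans from a BIOS label sequence."""
    spans, start, category = set(), None, None
    for i, label in enumerate(list(labels) + ["O"]):  # sentinel closes a trailing span
        continues = label.startswith("I-") and category == label[2:]
        if start is not None and not continues:
            spans.add((start, i - 1, category))
            start, category = None, None
        if label.startswith("B-"):
            start, category = i, label[2:]
        elif label.startswith("S-"):
            spans.add((i, i, label[2:]))
    return spans


def precision_recall_f1(gold_spans, pred_spans):
    """Entity-level P, R and F1 from two sets of spans."""
    tp = len(gold_spans & pred_spans)
    fp = len(pred_spans - gold_spans)
    fn = len(gold_spans - pred_spans)
    p = tp / (tp + fp) if tp + fp else 0.0
    r = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * p * r / (p + r) if p + r else 0.0
    return p, r, f1


gold = extract_entities(["B-FESTIVAL", "I-FESTIVAL", "O", "S-LOC"])
pred = extract_entities(["B-FESTIVAL", "I-FESTIVAL", "O", "O"])
p, r, f1 = precision_recall_f1(gold, pred)  # p = 1.0, r = 0.5
```

As a consistency check on the reported results, the harmonic mean of P = 89.45% and R = 90.01% is 89.73%, which matches the reported F1 score.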

4.3. Baselines

In the experiment, four typical NER models were selected as baselines to verify the performance of the proposed CLFF-NER model on the CTFCDataSet dataset.

BERT-CRF [7]: A model widely used for NER tasks. Combines BERT’s powerful contextual representations with the sequence labeling capability of CRF.

BERT-Softmax [4]: Simple structure with independent decoding, without relying on a CRF layer. Faster training and inference, easier to implement; suitable for scenarios with large datasets or less strict label sequence constraints.

BERT-BiLSTM-CRF [9]: Adds a BiLSTM layer on top of BERT embeddings to further capture sequential contextual features, followed by a CRF layer for sequence decoding. Integrates deep semantic representations (BERT), long-distance dependency modeling (BiLSTM), and label dependency modeling (CRF), generally achieving higher accuracy and robustness than the previous two models.

BiLSTM-CRF [31]: A pure deep learning approach using BiLSTM for contextual feature extraction and CRF for sequence decoding. Does not rely on pre-trained language models, has a clear structure, and can be trained stably on small to medium-sized datasets. It remains a strong baseline in traditional NER tasks.

4.4. Experimental Environment

In the experiments, all models were run using Keras (version 2.12.0), transformers (version 2.2.2), and Python 3.8. All experiments were conducted on the same machine with the following specifications: operating system Ubuntu 20.04.3 LTS, 31 GB RAM, Intel(R) Xeon(R) CPU E5-2686 v4, and one 12 GB NVIDIA GeForce GTX TITAN X GPU. The experimental hyperparameter settings are shown in Table 3.

Table 3.

Hyperparameter Settings.

4.5. Result

Based on the setup of the experimental environment, a series of experiments were conducted on the CTFCDataSet dataset, and the experimental results were analyzed. The experimental results are shown in Table 4.

Table 4.

Comparison of Experimental Results with Baseline Models.

Compared with the baseline methods, the CLFF-NER method proposed in this study achieves the best F1 score on the Chinese Named Entity Recognition (NER) task using the CTFCDataSet dataset. Additionally, BERT-based methods generally perform better than BiLSTM-CRF, which does not use a pre-trained language model. Once training has stabilized, all models attain stable precision, recall, and F1 scores.

The generalization ability of a model is a crucial factor in evaluating its quality. To verify the generalization ability of the model proposed in this study, tests were conducted on two additional public datasets, Weibo and Resume, as shown in Table 5 and Table 6.

Table 5.

Comparison on the Weibo dataset.

Table 6.

Comparison on the Resume dataset.

To better understand the model’s recognition performance for each entity type, the P, R, and F1 score were calculated for each entity category. The results are presented in Table 7.

Table 7.

Recognition results for each type of entity.

To verify the importance of each module, ablation experiments were conducted, as shown in Table 8.

Table 8.

Ablation Experiment.

The MKN module proposed in this paper is designed to capture more local information, and the statistics of the entity length distribution are shown in Figure 5.

Figure 5.

The CTFCDataSet’s Distribution of Entity Lengths.

5. Conclusions

5.1. Result Analysis

To verify whether the model performs well, experiments were conducted on three public datasets: CTFCDataSet, Resume, and Weibo. The experimental results indicate that the proposed model has good performance and generalization. A detailed analysis is as follows:

Table 4 shows that the proposed model achieves the best F1 score on the CTFCDataSet among the five models compared. Compared with BERT-CRF, the model’s P, R, and F1 values increase by 3.60%, 1.57%, and 2.60%, respectively; compared with BERT-Softmax, P, R, and F1 increase by 3.47%, 1.37%, and 2.44%, respectively; compared with BERT-BiLSTM-CRF, P and F1 increase by 0.89% and 0.32%, while R decreases by 0.27%; compared with BiLSTM-CRF, P, R, and F1 increase by 6.54%, 13.01%, and 9.89%, respectively. The proposed model therefore achieves a higher F1 score than all four baseline models on the CTFCDataSet, although BERT-BiLSTM-CRF retains slightly higher recall.

Table 6 shows that on the Resume dataset, the proposed model is highly competitive, achieving the highest recall (96.93%) among the compared models. Compared with the ISEA-NER model, the P value of our model decreases by 0.21%, the R value increases by 0.45%, and the F1 score increases by 0.11%; compared with KGNER, the F1 score increases by 0.04%; compared with HREB-CRF, the P, R, and F1 scores increase by 0.44%, 0.32%, and 0.37%, respectively; compared with GPAT-NER, the P and F1 values decrease by 0.41% and 0.04%, while the R value increases by 0.30%. Relative to these four models, our model achieves performance that is either superior or comparable.

Table 5 shows that on the Weibo dataset, the proposed model is also highly competitive, achieving the highest recall (72.84%) among the compared models. Compared with the ISEA-NER model, the model improves precision (P), recall (R), and F1 score by 1.47%, 2.34%, and 1.91%, respectively; compared with KGNER, the F1 score increases by 0.86%; compared with IFCG-NER, P, R, and F1 increase by 2.45%, 1.67%, and 2.06%, respectively; compared with MT-Learning, P and F1 decrease by 2.22% and 1.04%, while R rises by 0.14%. Relative to these four models, the model again achieves performance that is either superior or comparable.

Table 7 provides the P, R, and F1 score for each entity category in the CTFCDataSet. The data reveals that all metrics for each entity category exceed 80%. The PER category achieves the highest P value, while the NATION category leads in R and F1 score. The ORG category exhibits the lowest values for P, R, and F1 score, which may be due to factors such as entity frequency or annotation accuracy. The NATION category achieves the highest F1 score at 95.45%, with P and R values of 93.75% and 97.22%, respectively. The PER category follows with an F1 score of 94.05%, and P and R values of 96.34% and 91.86%, respectively. Based on the data obtained from the experiments, the model demonstrates strong performance across all entity categories in the dataset.

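Table 8 shows that removing any module leads to a decrease in the overall performance of the model, although the size of the decrease differs from module to module. The contribution of each module in Table 8 is calculated using Formula (31), which relates the full-model F1 score to the F1 score obtained after removing the module:

$$\mathrm{Contribution} = \frac{F1_{\mathrm{full}} - F1_{\mathrm{ablated}}}{F1_{\mathrm{ablated}}} \times 100\% \tag{31}$$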

When measuring the importance of modules by relative improvement rate, the English module shows the most significant improvement, reaching 2.43%, followed by the GNN module at 2.06%, the MKN module at 1.92%, and the Transformer module at 1.00%. This indicates that English features and graph neural networks play a relatively crucial role in overall performance. Comparing the results after removing the GNN and Transformer modules shows that the model loses less performance when the Transformer is removed than when the GNN is removed. Given the feature fusion mechanisms of the two modules, this may be because the Transformer module fuses all English features and thereby introduces noise, whereas the GNN integrates English features selectively, which is more conducive to model performance. When the English module is removed, the model experiences the largest performance drop, 2.13% in F1 score, indicating that the English data contains rich, beneficial information and that incorporating it as an auxiliary input effectively improves the model. Furthermore, removing the MKN module results in a performance decline of 1.69%. As shown in Figure 5, approximately 90% of entities in the dataset have lengths between 1 and 7, suggesting that the rich local information captured by the MKN module contributes to the improvement of model performance.
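The relationship between the absolute drops and the relative improvement rates quoted above can be checked with a few lines of Python. The sketch assumes that the contribution is the F1 drop divided by the F1 score of the ablated model; this reading is not spelled out in the text, but it reproduces the reported 2.43% and 1.92%. `relative_improvement` is a hypothetical helper name.

```python
def relative_improvement(f1_full, f1_ablated):
    """Relative F1 gain (in %) of the full model over an ablated variant."""
    return (f1_full - f1_ablated) / f1_ablated * 100


F1_FULL = 89.73                         # reported F1 score of the full model
DROPS = {"English": 2.13, "MKN": 1.69}  # reported F1 drops after removing a module

for module, drop in DROPS.items():
    print(module, round(relative_improvement(F1_FULL, F1_FULL - drop), 2))
# English 2.43
# MKN 1.92
```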

Figure 5 shows the distribution intervals of entity lengths, where Q1 represents the lower quartile, Median represents the median, and Q3 represents the upper quartile. Q1 = 2 indicates that 25% of entity lengths fall between 1 and 2; Median = 3 indicates that 50% of entity lengths are less than or equal to 3; Q3 = 4 indicates that 75% of entity lengths are less than or equal to 4. The statistical results in Figure 5 suggest that local information is crucial for improving model performance, which is why the MKN module is designed to capture more local information.

To better understand the performance of the model, a line chart was used to depict the changes in loss during the training process. The trend of loss changes is shown in Figure 6.

Figure 6.

Training loss trend.

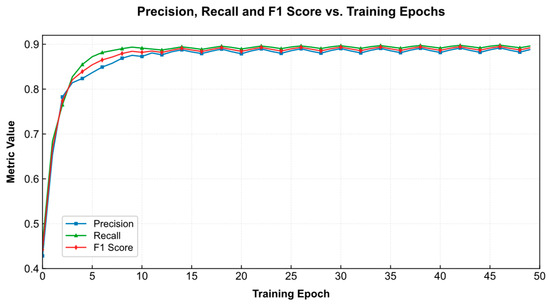

The trend of training loss in Figure 6 shows that the loss decreases rapidly at the beginning and is close to zero by the 25th epoch, demonstrating that the model converges quickly. To assess the stability of the model, the trends of precision, recall, and F1 score are plotted, as shown in Figure 7.

Figure 7.

Trends of precision, recall, and F1 score (CLFF-NER).

From the curve trend in Figure 7, it can be seen that the P, R, and F1 values of the model in this paper begin to stabilize after the 35th epoch, indicating that the model has good stability.

5.2. Error Analysis

To further evaluate the limitations of the proposed model, this section analyzes a representative misclassification involving a location entity. The case is shown in Table 9.

Table 9.

Case sentence.

In the gold annotation, “云南民族村” (English: “Yunnan Ethnic Village”) is labeled O, that is, it is not an entity. However, the model incorrectly predicts “云南民族村” as LOC, while correctly identifying the long administrative entity. This reflects a typical false positive caused by geographic surface patterns. The phrase begins with the province name “云南” (“Yunnan”) and ends with the location-like suffix “村” (“Village”), causing the model to over-generalize and treat it as a geographic region. Cross-lingual embeddings and CRF transition probabilities further strengthen these biases. The analysis and summary of label prediction errors for this example are shown in Table 10.

Table 10.

The analysis and summary of label prediction errors.

This case demonstrates that the model performs well on standardized administrative locations but remains susceptible to semantic ambiguity between true geographic entities and cultural tourism venues, a common challenge in festival-related texts.
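A false positive of this kind can be located by comparing gold and predicted entity spans. The sketch below assumes BIO tags and uses a short hypothetical sentence, not the sentence from Table 9; the helper names `bio_spans` and `prf` are illustrative. It also shows how a single spurious LOC span lowers entity-level precision while leaving recall unchanged.

```python
def bio_spans(tags):
    """Return the set of (start, end_exclusive, label) spans in a BIO sequence."""
    spans, start, label = set(), None, None
    for i, tag in enumerate(list(tags) + ["O"]):  # sentinel flushes the last span
        inside = tag.startswith("I-") and label == tag[2:]
        if not inside:
            if label is not None:
                spans.add((start, i, label))
            # Open a new span on B-; anything else leaves no span open.
            start, label = (i, tag[2:]) if tag.startswith("B-") else (None, None)
    return spans

def prf(gold, pred):
    """Entity-level precision, recall, and F1 from two span sets."""
    tp = len(gold & pred)
    p = tp / len(pred) if pred else 0.0
    r = tp / len(gold) if gold else 0.0
    f = 2 * p * r / (p + r) if p + r else 0.0
    return p, r, f

# Hypothetical example: an administrative location followed by a tourism venue.
chars = list("昆明市的云南民族村")
gold_tags = ["B-LOC", "I-LOC", "I-LOC", "O", "O", "O", "O", "O", "O"]
pred_tags = ["B-LOC", "I-LOC", "I-LOC", "O",
             "B-LOC", "I-LOC", "I-LOC", "I-LOC", "I-LOC"]

gold, pred = bio_spans(gold_tags), bio_spans(pred_tags)
false_positives = pred - gold
for start, end, label in sorted(false_positives):
    print("false positive:", "".join(chars[start:end]), label)

precision, recall, f1 = prf(gold, pred)
print(f"P={precision:.2f} R={recall:.2f} F1={f1:.2f}")
```

In this toy case the administrative location is matched exactly, and the venue name is the only span reported as a false positive.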

5.3. Summary and Future Work

The CLFF-NER model proposed in this paper integrates multi-source heterogeneous features, including multilingual contextual information, local structural information, and selective English features. Local features are extracted through the MKN module, cross-lingual feature fusion is achieved via the Transformer module, and the GNN module reduces noise interference. On the CTFCDataSet, the CLFF-NER model achieved a precision of 89.45%, a recall of 90.01%, and an F1 score of 89.73%, significantly outperforming several classic NER baseline models. Ablation experiments verified the contribution of each module to performance improvement, with the English information module contributing the most, a relative F1 improvement of 2.43%. CLFF-NER was also tested for generalization on two public NER datasets, Resume and Weibo, and achieved competitive scores. On the Resume dataset, the F1 score reached 96.44%, ahead of or on par with multiple advanced models. On the Weibo dataset, the F1 score reached 72.76%, also demonstrating robustness and domain adaptability. These results indicate that the model has good cross-domain transferability and cross-lingual fusion capability, and that introducing English data into the model can improve its performance.

The CLFF-NER model has shown good performance and application potential in NER tasks within the field of traditional festival culture. Future work can focus on technical deepening, scenario expansion, and function upgrades to further unlock its value in cultural preservation and service areas. (1) Deeper digital protection of culture: the current model achieves structured annotation of entities such as people, locations, and times in texts about traditional festivals. Future work will integrate multimodal data (such as images, audio, and video materials) to construct a “text–multimodal” collaborative entity recognition framework, enabling accurate capture of non-text entities such as festival customs, clothing, and rituals. (2) Intelligent cultural dissemination and tourism recommendation: a personalized recommendation model will be built on user characteristics to match festival cultural knowledge, tourism scenarios, and user needs. (3) Personalized and interactive education and popular science: a layered content generation system for traditional cultural education will be constructed, with knowledge depth adapted to different educational stages such as elementary, secondary, and adult education, together with interactive intelligent learning support systems that design situational learning tasks based on entity recognition results to enhance learning interest and participation.

Author Contributions

Conceptualization, S.Y.; methodology, S.Y. and Y.H.; software, S.Y.; validation, S.Y. and K.H.; formal analysis, K.H. and W.L.; investigation, S.Y., K.H., and Y.H.; resources, S.Y.; data curation, S.Y. and W.L.; writing—original draft preparation, S.Y. and W.L.; writing—review and editing, W.L. and S.Y.; visualization, S.Y. and Y.H.; supervision, K.H. and W.L.; project administration, S.Y. and K.H.; funding acquisition, K.H. All authors have read and agreed to the published version of the manuscript.

Funding

This research was supported by the National Social Science Foundation of China [grant number 20BMZ092] and the SICNU Foundation for Experimental Technology [grant number SYJS2023013].

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The dataset is openly available on GitHub at https://github.com/ysh985/CTFCDataSet (accessed on 14 June 2024).

Conflicts of Interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.


Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).