Leveraging the Graph-Based LLM to Support the Analysis of Supply Chain Information

Abstract

1. Introduction

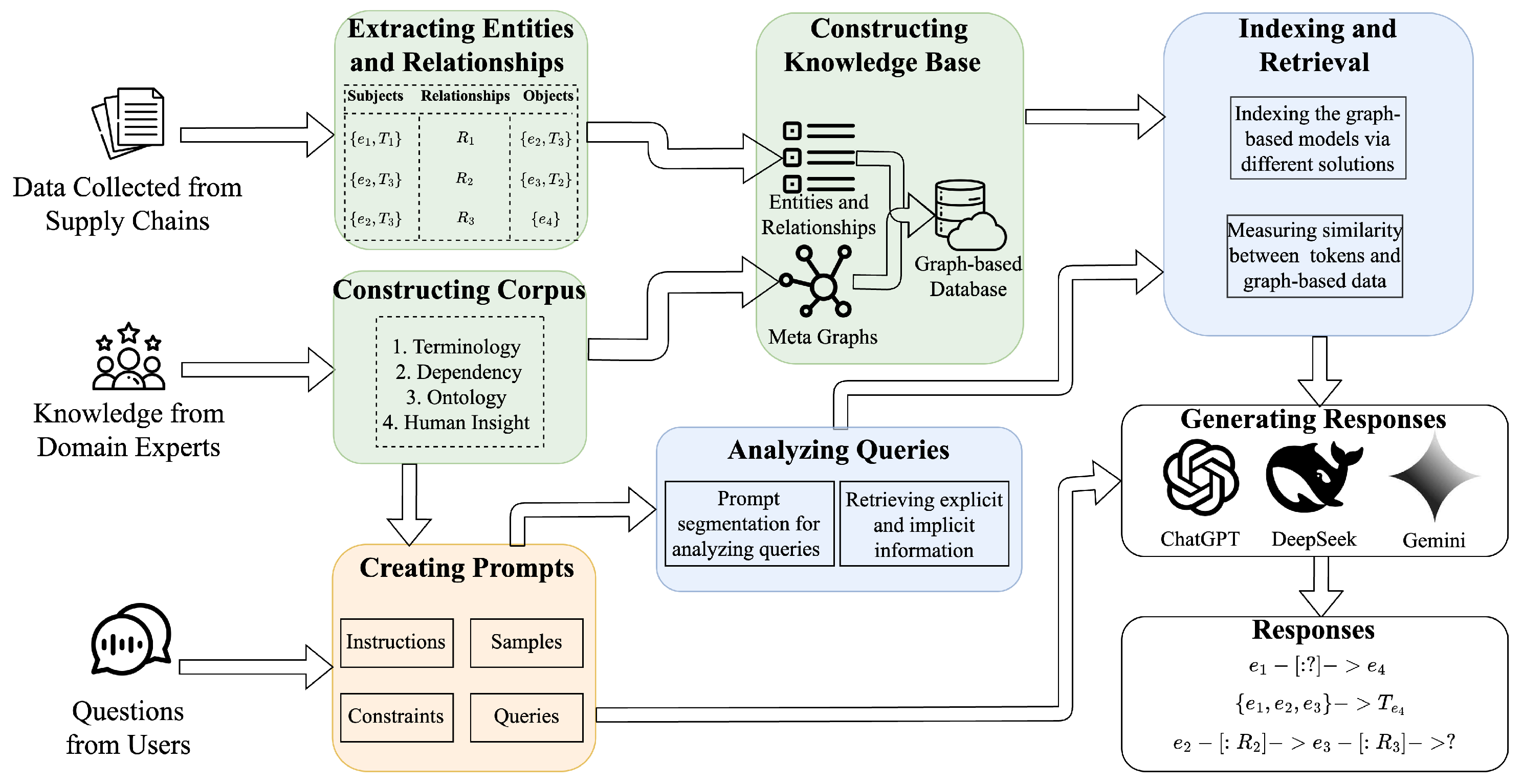

- Proposing a framework that integrates the knowledge base and LLM to support the analysis and reasoning across the graph-based data in the context of supply chain management;

- Constructing graph-based models of the domain knowledge to build a knowledge base that supports the LLM, together with different indexing and retrieval solutions;

- Specifying and supporting the various tasks handled by the LLM by formulating the prompts.

2. Related Work

2.1. Overview of Graph-Based LLM

2.2. Overview of RAG

2.3. Applying LLM to Support Analysis of Supply Chain Information

3. Proposed Experimental Framework

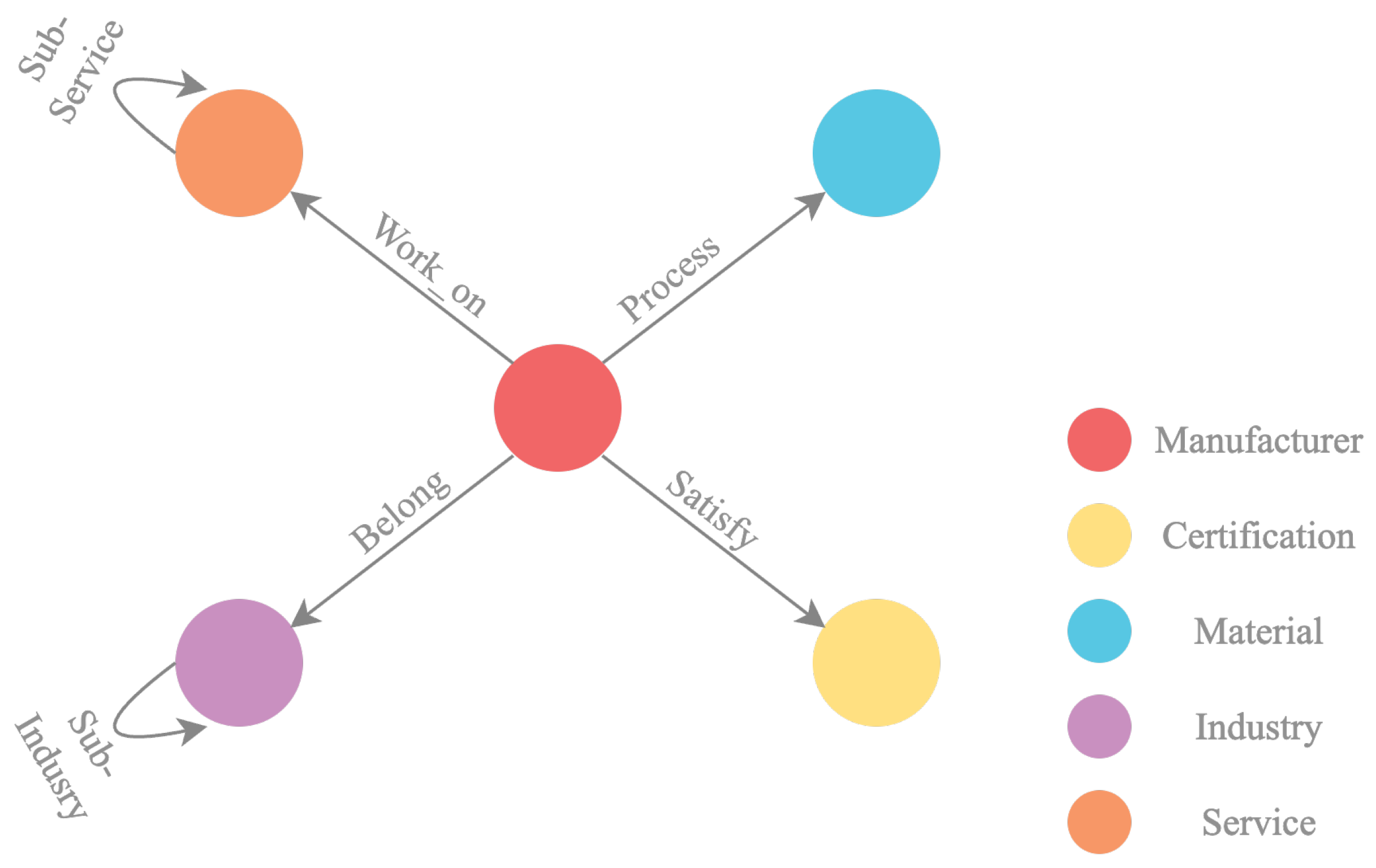

3.1. Graph-Based Models for Knowledge Construction

3.2. Indexing of the Graph-Based Models

3.3. Retrieval of Information from Knowledge Base

3.4. Prompt Creation to Support Query Analysis

4. Experimental Results

4.1. Constructing the Graph-Based Knowledge Base

4.2. Indexing and Retrieving Graph-Based Models

4.3. Creating Prompts for Generating Responses

- You are an expert AI assistant specializing in supply chain management;

- Your task is to give responses based on the retrieved multi-level graph-based models.

- The generated responses should follow this format: (Entity A)-[:Relationship]→(Entity B).

- Based on the generated responses, we need you to provide a detailed reasoning process with human-understandable representation.

- If the retrieved models yield no direct matches for the queries, we need you to infer the possible results based on the known situations.

- User Queries: Which manufacturers work in the material field and satisfy ISO 9001?

- Try to retrieve the entities which obtain manufacturers in the material field from the knowledge base. For example, Entity Name 149401-us.all.biz is working in the material industry, and it satisfies ISO 9001.

- The generated responses should contain all the relationships and entities related to the company 149401-us.all.biz, formulated as follows: (149401-us.all.biz)-[:Certification]→(ISO9001), (149401-us.all.biz)-[:Process]→(Woods).
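The prompt components listed above can be assembled programmatically. The following is a minimal sketch, not the authors' implementation: the function names `format_triple` and `build_prompt`, the section labels inside the prompt, and the `(none)` placeholder are assumptions introduced for illustration. Only the instruction wording, the output format, and the few-shot example come from the list above.

```python
from typing import List, Tuple

Triple = Tuple[str, str, str]  # (head entity, relationship, tail entity)

# Instruction lines paraphrased from the prompt components listed above.
INSTRUCTIONS = [
    "You are an expert AI assistant specializing in supply chain management.",
    "Your task is to give responses based on the retrieved multi-level graph-based models.",
    "The generated responses should follow this format: (Entity A)-[:Relationship]→(Entity B).",
    "Provide a detailed reasoning process with human-understandable representation.",
    "If the retrieved models yield no direct matches, infer possible results from the known situations.",
]

# Few-shot example taken from the prompt description above.
FEW_SHOT: List[Triple] = [
    ("149401-us.all.biz", "Certification", "ISO9001"),
    ("149401-us.all.biz", "Process", "Woods"),
]


def format_triple(triple: Triple) -> str:
    """Render one triple in the (Entity A)-[:Relationship]→(Entity B) format."""
    head, relationship, tail = triple
    return f"({head})-[:{relationship}]→({tail})"


def build_prompt(query: str, retrieved: List[Triple]) -> str:
    """Concatenate instructions, few-shot example, retrieved context, and query."""
    parts = ["\n".join(INSTRUCTIONS), "Example output:"]
    parts += [format_triple(t) for t in FEW_SHOT]
    parts.append("Retrieved graph context:")
    # Hypothetical placeholder when retrieval returns nothing.
    parts += [format_triple(t) for t in retrieved] or ["(none)"]
    parts.append(f"User query: {query}")
    return "\n".join(parts)


if __name__ == "__main__":
    print(build_prompt(
        "Which manufacturers work in the material field and satisfy ISO 9001?",
        [("149401-us.all.biz", "Certification", "ISO9001")],
    ))
```

Keeping the few-shot example and the retrieved context in separate, labeled sections is one way to make it easier to spot responses that merely reproduce the example.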

4.4. Performance Evaluation

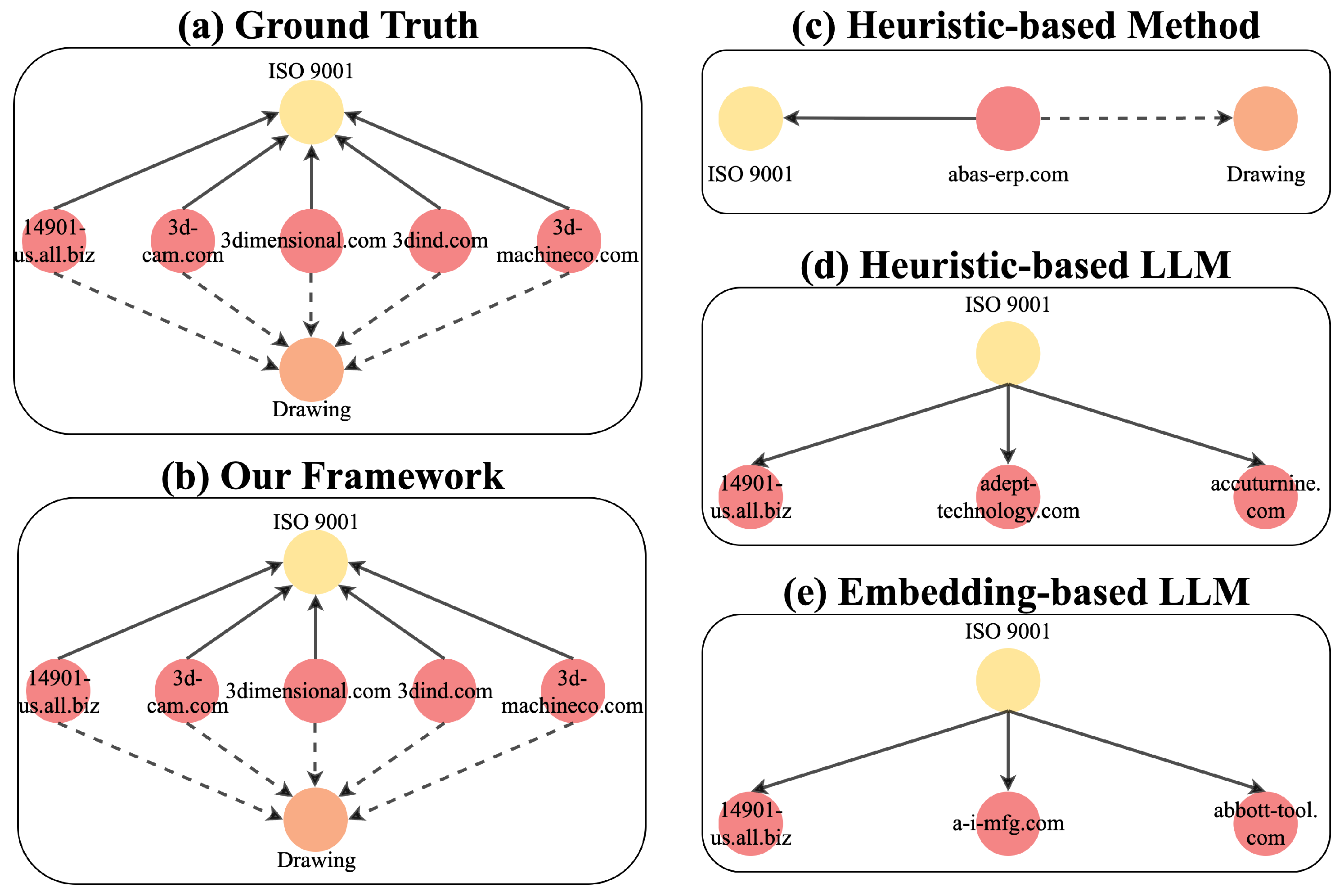

5. Error Analysis and Discussion

- Factual hallucination refers to responses in which the LLM follows the schema defined in the prompts but produces incorrect information that violates factual accuracy. We commonly observe this type of error in LLMs that adopt embedding-based retrieval. For example, although the manufacturer actually meets the ISO 9001 standard, the LLM incorrectly states that it meets the ISO 14001 standard, as these two standards have closely related embeddings.

- Faithfulness hallucination refers to responses in which the LLM fails to provide valid answers. We observe this type of error frequently in LLMs that adopt heuristic-based retrieval, particularly when the model cannot retrieve relevant information from the knowledge base. For example, when an input prompt contains keywords that do not exist in the corpus, the LLM is unable to generate valid responses through heuristic-based retrieval and may instead produce incorrect answers (e.g., reproducing examples from few-shot learning in the prompts).

- The omission of intermediate relationships within multi-depth reasoning refers to responses in which the LLM imprecisely merges the multi-depth relationships of the graph-based data retrieved from the knowledge base. We observe this type of error frequently in our proposed framework. For example, while the expected output should be [A]–(:r1)–[B]–>(:r2)–>[C], the actual output is [A]–>(:r3)–>[C], where the relationship r3 could be related to the semantics of r1 and r2; nevertheless, the intermediate relationships –(:r1)–[B]–>(:r2) are omitted. We believe this issue may arise because the generated responses contain multi-depth relationships (e.g., one or more hops across entities), which can confuse the LLM about the expected output pattern.
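The first and third error types above can be screened for automatically by checking each generated triple against the knowledge base. The sketch below is illustrative only and is not part of the evaluated framework: the names `parse_triples` and `classify`, the three status labels, and the toy knowledge base (entity `M1`, industry `Textiles`) are all hypothetical. It assumes responses use the (Entity A)-[:Relationship]→(Entity B) format from Section 4.3.

```python
import re
from typing import List, Set, Tuple

Triple = Tuple[str, str, str]

# Matches "(head)-[:relationship]→(tail)", allowing a space before the tail.
TRIPLE_RE = re.compile(r"\(([^()]+)\)-\[:([^\]]+)\]→\s*\(([^()]+)\)")


def parse_triples(text: str) -> List[Triple]:
    """Extract every triple written in the prompt's output format."""
    return [(h.strip(), r.strip(), t.strip()) for h, r, t in TRIPLE_RE.findall(text)]


def classify(triple: Triple, kb: Set[Triple]) -> str:
    """Label a generated triple against the knowledge base.

    "supported"      : the exact triple exists in the knowledge base.
    "collapsed_path" : no direct edge exists, but head and tail are linked by
                       a two-hop path, i.e. an intermediate entity was omitted.
    "unsupported"    : neither holds, a candidate factual hallucination.
    """
    if triple in kb:
        return "supported"
    head, _, tail = triple
    intermediates = {t for (h, _, t) in kb if h == head}
    if any(h in intermediates and t == tail for (h, _, t) in kb):
        return "collapsed_path"
    return "unsupported"


if __name__ == "__main__":
    # Toy knowledge base; entities are hypothetical.
    kb = {
        ("M1", "Certification", "ISO9001"),
        ("M1", "Belong", "Textiles"),
        ("Textiles", "Sub-Industry", "Materials"),
    }
    response = (
        "(M1)-[:Certification]→(ISO14001)\n"
        "(M1)-[:Belong]→(Materials)\n"
        "(M1)-[:Certification]→ (ISO9001)"
    )
    for triple in parse_triples(response):
        print(triple, classify(triple, kb))
```

The two-hop check only covers paths with a single omitted entity; longer paths would need a breadth-first search over the knowledge base.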

6. Conclusions and Future Work

- While our framework adopts two different indexing and retrieval methods, these approaches can be further integrated into a unified solution to support the RAG process. This solution can simultaneously support the analysis of keywords and embedding-based data.

- While our framework currently relies solely on the inference and generation capabilities of the LLM, it can be further extended with domain-specific DL models that are invoked through API calls triggered by special tokens. Such integration has the potential to further enhance overall performance.

- While our framework provides initial demonstrations, future work can explore integration with LLM frameworks such as LangChain to support broader industrial applications.

- The real-time performance needs to be further evaluated and optimized by applying pruning techniques to the LLM. Such pruning enables the deployment of our framework on edge devices.
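As an illustration of the first direction above, a unified retriever could combine a keyword score and an embedding score into a single ranking. The sketch below is one possible design under stated assumptions, not a method from this work: the weighted-sum fusion, the parameter `alpha`, the function names, and the two-dimensional toy vectors are all hypothetical, and a real system would obtain the vectors from an embedding model.

```python
import math
from typing import Dict, List, Sequence


def keyword_score(query: str, text: str) -> float:
    """Fraction of query tokens that also appear in the text."""
    q_tokens = set(query.lower().split())
    d_tokens = set(text.lower().split())
    return len(q_tokens & d_tokens) / len(q_tokens) if q_tokens else 0.0


def cosine(u: Sequence[float], v: Sequence[float]) -> float:
    """Cosine similarity between two equal-length vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v) if norm_u and norm_v else 0.0


def hybrid_rank(query: str, query_vec: Sequence[float],
                docs: List[Dict], alpha: float = 0.5) -> List[str]:
    """Rank documents by alpha * keyword score + (1 - alpha) * cosine score."""
    scored = [
        (alpha * keyword_score(query, d["text"])
         + (1 - alpha) * cosine(query_vec, d["vec"]), d["id"])
        for d in docs
    ]
    return [doc_id for _, doc_id in sorted(scored, reverse=True)]


if __name__ == "__main__":
    # Toy documents with hand-written vectors (hypothetical values).
    docs = [
        {"id": "a", "text": "manufacturer satisfies ISO 9001", "vec": [1.0, 0.0]},
        {"id": "b", "text": "manufacturer satisfies ISO 14001", "vec": [0.9, 0.1]},
    ]
    print(hybrid_rank("ISO 9001", [1.0, 0.0], docs))
```

In this toy case the keyword term separates ISO 9001 from ISO 14001 even though their vectors are nearly identical, which is the kind of confusion described for embedding-based retrieval in Section 5.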

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest


| Source Stereotype Label | Relationship | Target Stereotype Label |
|---|---|---|
| Manufacturers | Process | Materials |
| Manufacturers | Satisfy | Certifications |
| Manufacturers | Work on | Services |
| Manufacturers | Belong | Industries |
| Industries | Sub-Industry | Industries |
| Services | Sub-Services | Services |
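The stereotype table above can be read as a schema of allowed (source label, relationship, target label) combinations. The following sketch encodes it as a Python set and checks candidate edges against it. The names `SCHEMA`, `is_valid_edge`, and `allowed_relationships` are assumptions made for illustration, and the knowledge base in this work may be stored quite differently.

```python
from typing import List, Set, Tuple

# Allowed (source label, relationship, target label) combinations,
# copied from the stereotype table above.
SCHEMA: Set[Tuple[str, str, str]] = {
    ("Manufacturers", "Process", "Materials"),
    ("Manufacturers", "Satisfy", "Certifications"),
    ("Manufacturers", "Work on", "Services"),
    ("Manufacturers", "Belong", "Industries"),
    ("Industries", "Sub-Industry", "Industries"),
    ("Services", "Sub-Services", "Services"),
}


def is_valid_edge(source_label: str, relationship: str, target_label: str) -> bool:
    """Return True if the schema permits this kind of edge."""
    return (source_label, relationship, target_label) in SCHEMA


def allowed_relationships(source_label: str) -> List[str]:
    """List the relationships that may start from a given stereotype."""
    return sorted(r for s, r, _ in SCHEMA if s == source_label)


if __name__ == "__main__":
    print(is_valid_edge("Manufacturers", "Satisfy", "Certifications"))
    print(is_valid_edge("Materials", "Process", "Manufacturers"))
    print(allowed_relationships("Manufacturers"))
```

A check of this kind can be applied when populating the knowledge base, so that edges with a reversed direction or an undefined relationship are rejected early.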

| Method | Template A | | | Template B | | | Template C | | | Average | | |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Our Framework | 0.59 | 0.67 | 0.63 | 0.63 | 0.62 | 0.67 | 0.64 | 0.72 | 0.70 | 0.62 | 0.67 | 0.67 |
| LLM-Based Solution, Without RAG | 0.28 | 0.25 | 0.26 | 0.25 | 0.22 | 0.23 | 0.23 | 0.20 | 0.21 | 0.25 | 0.22 | 0.23 |
| LLM-Based Solution, Embeddings Retrieval | 0.45 | 0.39 | 0.42 | 0.42 | 0.38 | 0.40 | 0.48 | 0.43 | 0.45 | 0.45 | 0.40 | 0.42 |
| LLM-Based Solution, Heuristic Retrieval | 0.35 | 0.29 | 0.31 | 0.28 | 0.23 | 0.25 | 0.36 | 0.30 | 0.33 | 0.33 | 0.27 | 0.30 |
| Heuristic Search | 0.33 | 0.28 | 0.30 | 0.19 | 0.16 | 0.17 | 0.34 | 0.29 | 0.31 | 0.29 | 0.24 | 0.26 |
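The Average columns in the table above appear to be the arithmetic mean of the corresponding columns for Templates A, B, and C. The short check below recomputes them from the reported values. The dictionary layout and the function name `column_means` are assumptions for illustration, and each template's three columns are treated only by position.

```python
from typing import Dict, List

# Reported scores per method: three values each for Templates A, B, C,
# followed by the three reported Average values (copied from the table above).
RESULTS: Dict[str, List[float]] = {
    "Our Framework":        [0.59, 0.67, 0.63, 0.63, 0.62, 0.67, 0.64, 0.72, 0.70, 0.62, 0.67, 0.67],
    "Without RAG":          [0.28, 0.25, 0.26, 0.25, 0.22, 0.23, 0.23, 0.20, 0.21, 0.25, 0.22, 0.23],
    "Embeddings Retrieval": [0.45, 0.39, 0.42, 0.42, 0.38, 0.40, 0.48, 0.43, 0.45, 0.45, 0.40, 0.42],
    "Heuristic Retrieval":  [0.35, 0.29, 0.31, 0.28, 0.23, 0.25, 0.36, 0.30, 0.33, 0.33, 0.27, 0.30],
    "Heuristic Search":     [0.33, 0.28, 0.30, 0.19, 0.16, 0.17, 0.34, 0.29, 0.31, 0.29, 0.24, 0.26],
}


def column_means(row: List[float]) -> List[float]:
    """Mean of each of the three column positions across Templates A, B, and C."""
    templates = [row[0:3], row[3:6], row[6:9]]
    return [sum(t[i] for t in templates) / 3 for i in range(3)]


def matches_reported(row: List[float], tol: float = 0.0051) -> bool:
    """True if the recomputed means agree with the reported Average values
    to within two-decimal rounding."""
    return all(abs(m - r) <= tol for m, r in zip(column_means(row), row[9:12]))


if __name__ == "__main__":
    for method, row in RESULTS.items():
        means = ", ".join(f"{m:.3f}" for m in column_means(row))
        print(f"{method}: recomputed means = {means}; consistent = {matches_reported(row)}")
```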

| Metric | The Proposed Framework | LLM-Based Solutions: Without RAG | LLM-Based Solutions: Embeddings Retrieval | LLM-Based Solutions: Heuristic Retrieval | The Heuristic Search |
|---|---|---|---|---|---|
| Average Elapsed Time (s) | 2.43 | 2.13 | 4.35 | 2.66 | 6.73 |
| Worst-Case Execution Time (s) | 3.53 | 2.57 | 6.14 | 4.46 | 7.18 |

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Su, P.; Xu, R.; Chen, D. Leveraging the Graph-Based LLM to Support the Analysis of Supply Chain Information. Informatics 2025, 12, 124. https://doi.org/10.3390/informatics12040124
