GraderAssist: A Graph-Based Multi-LLM Framework for Transparent and Reproducible Automated Evaluation

Abstract

1. Introduction

1.1. Background and Motivation

1.2. Research Gap, Objectives, and Contributions

- It introduces GraderAssist, a rubric-guided multi-LLM framework for evaluating open-ended tasks;

- It formalizes a multi-evaluator setting with several LLMs as independent graders and GPT-4 as a reference for consistency analyses;

- It profiles model feedback (embeddings and clustering) to compare style, rubric adherence, and evaluative depth;

- It provides graph-based storage for traceable, reproducible, and queryable evaluation;

- It demonstrates the pedagogical relevance of rubric-guided multi-model assessment, emphasizing fairness, reliability, and interpretability.
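
The graph-based storage named in the contributions above can be pictured with a minimal in-memory sketch. It is illustrative only: it does not use a graph database, and the node labels, relation names (`PRODUCED_BY`, `EVALUATES`, `USES_RUBRIC`), identifiers, and the score value are hypothetical, not taken from the framework itself.

```python
from dataclasses import dataclass, field


@dataclass
class EvaluationGraph:
    """Tiny in-memory property graph: nodes keyed by id, edges stored as triples."""
    nodes: dict = field(default_factory=dict)
    edges: list = field(default_factory=list)

    def add_node(self, node_id, label, **props):
        self.nodes[node_id] = {"label": label, **props}

    def add_edge(self, source, relation, target):
        # Refuse dangling edges so every evaluation can be traced back.
        if source not in self.nodes or target not in self.nodes:
            raise KeyError("both endpoints must exist before linking")
        self.edges.append((source, relation, target))

    def neighbors(self, source, relation):
        return [t for s, r, t in self.edges if s == source and r == relation]

    def trace(self, evaluation_id):
        """Return the model, answer, and rubric linked to one evaluation."""
        relations = ("PRODUCED_BY", "EVALUATES", "USES_RUBRIC")
        return {rel: self.neighbors(evaluation_id, rel) for rel in relations}


graph = EvaluationGraph()
graph.add_node("a1", "Answer", question_id="Q1")
graph.add_node("gpt-4", "Model")
graph.add_node("rubric-technical", "Rubric",
               criteria=["Accuracy", "Clarity", "Completeness", "Terminology"])
# Placeholder score, not a result from the study.
graph.add_node("e1", "Evaluation", scores={"Accuracy": 6})
graph.add_edge("e1", "PRODUCED_BY", "gpt-4")
graph.add_edge("e1", "EVALUATES", "a1")
graph.add_edge("e1", "USES_RUBRIC", "rubric-technical")

print(graph.trace("e1"))
```

Storing each evaluation as a node linked to its model, answer, and rubric is what makes a single score traceable: one lookup recovers everything that produced it.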

1.3. Related Work

2. Materials and Methods

2.1. Dataset and Rubric Design

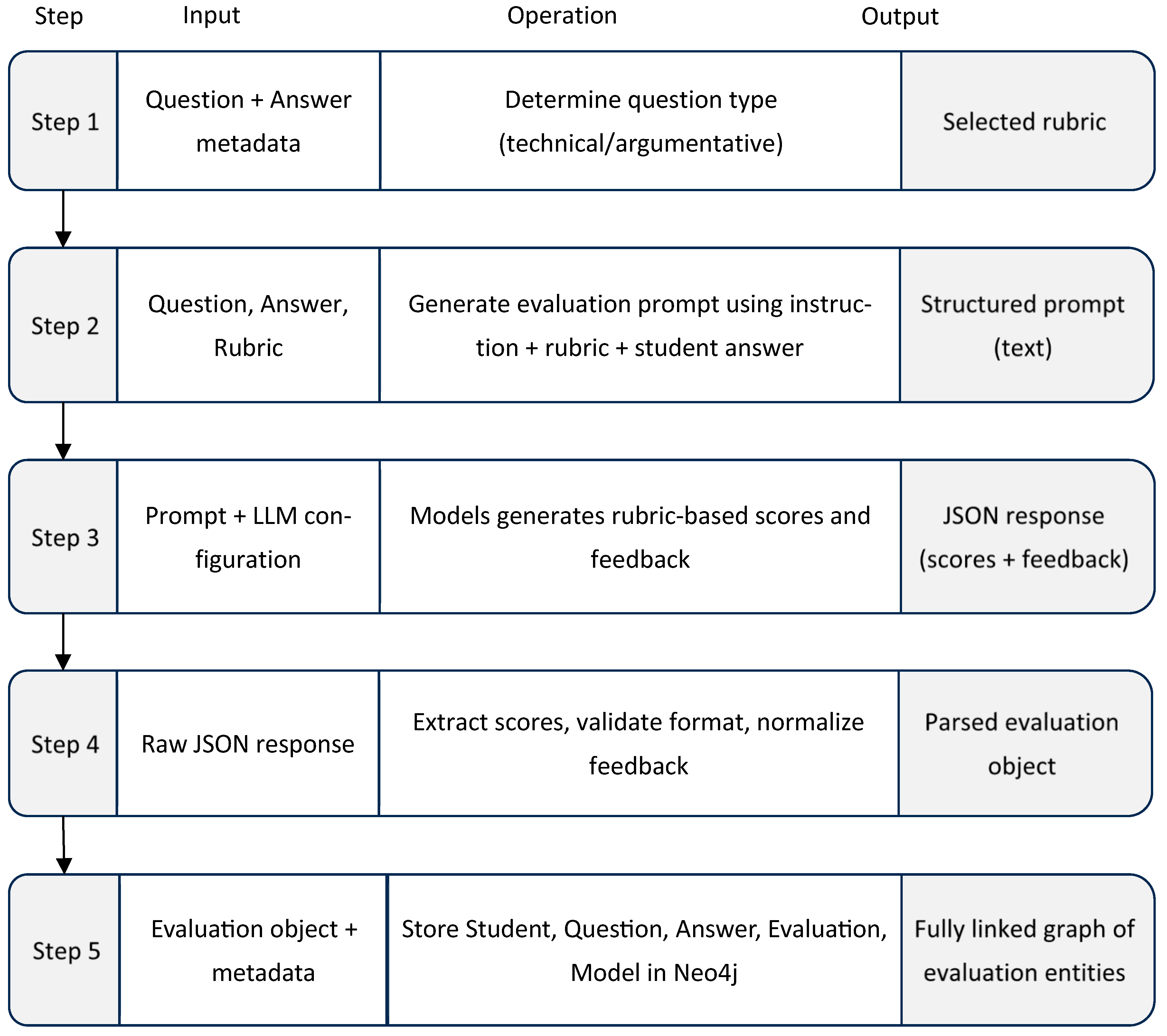

2.2. Evaluation Pipeline and Prompt Structure

2.3. Model Configuration and Scoring Protocol

2.4. Graph-Based Storage and Evaluation Traceability

3. Results

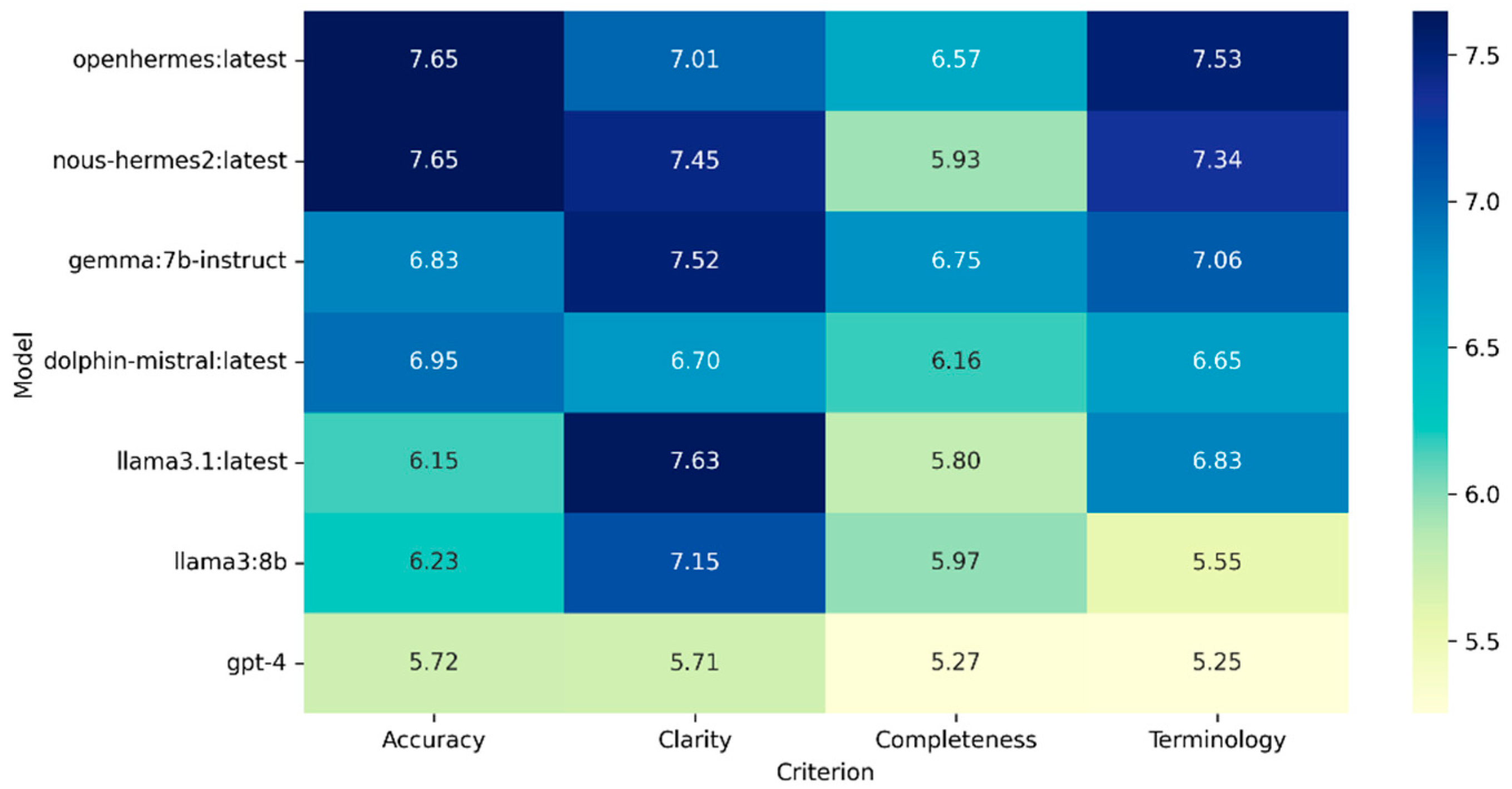

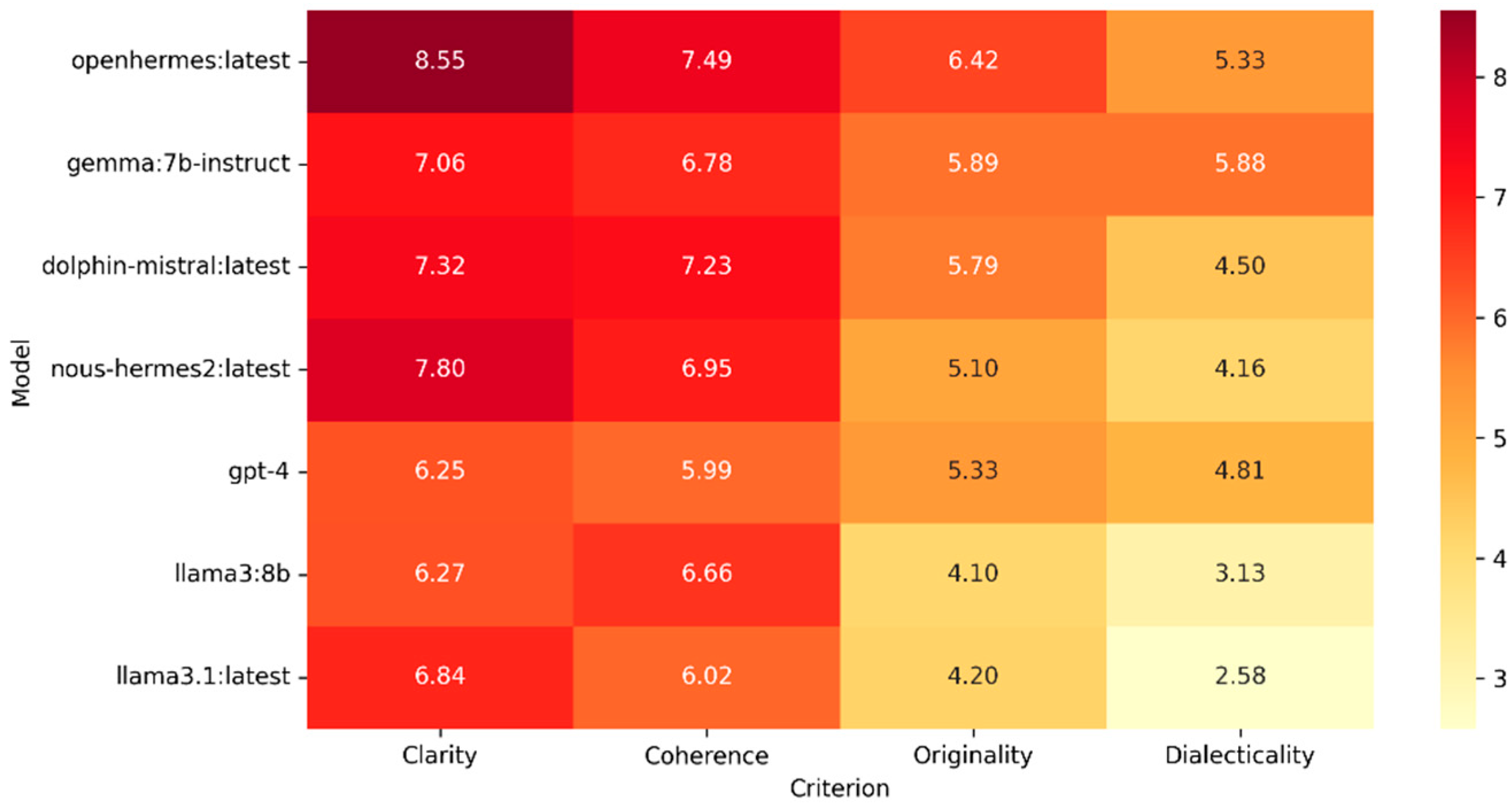

3.1. Cross-Model Scoring Drift per Rubric Criterion

3.2. Rubric Alignment and Stylistic Variation in Model-Generated Feedback

3.3. Semantic Divergence in Feedback Across LLMs

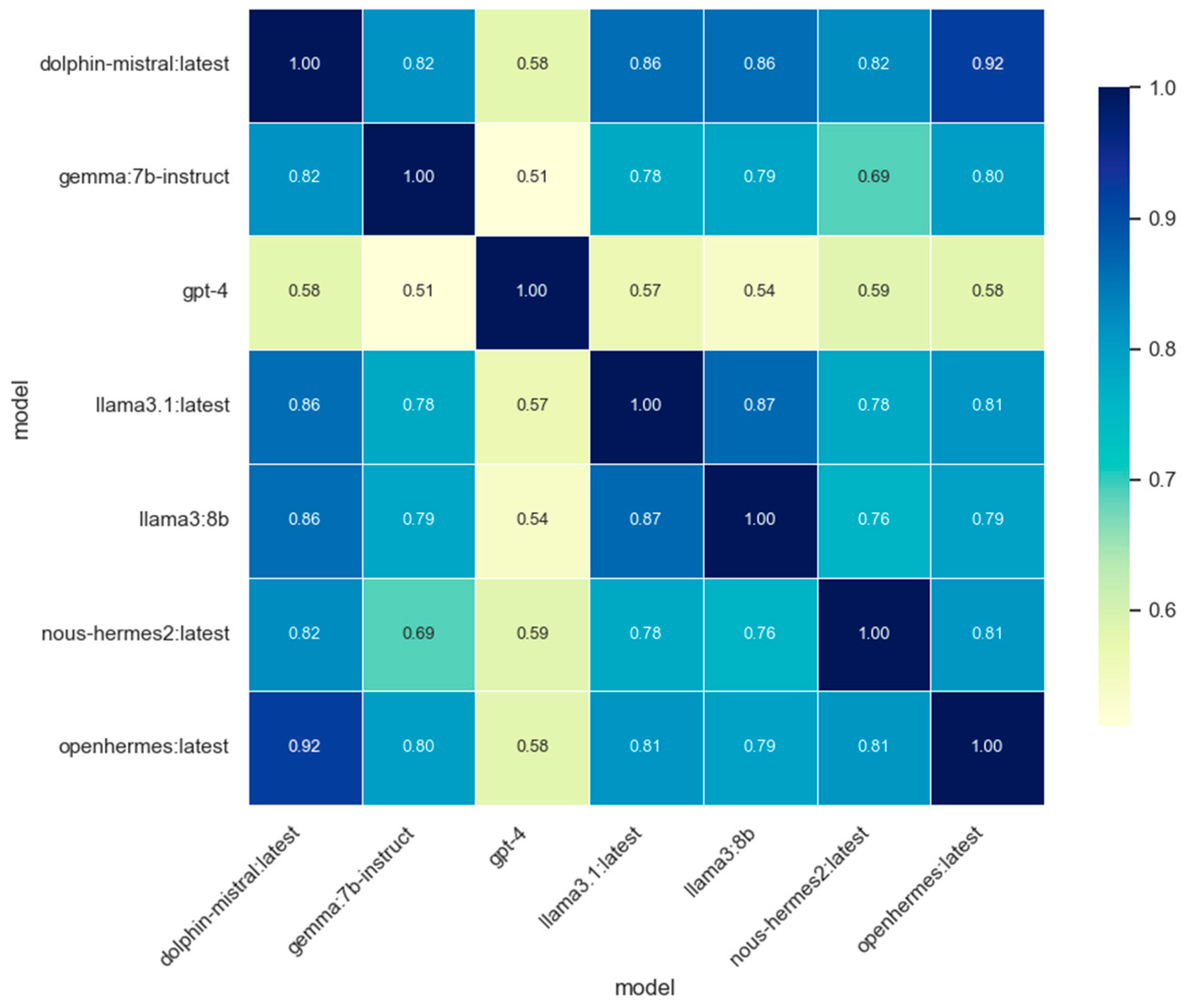

3.4. Inter-Model Scoring Agreement Across Shared Rubric Criteria

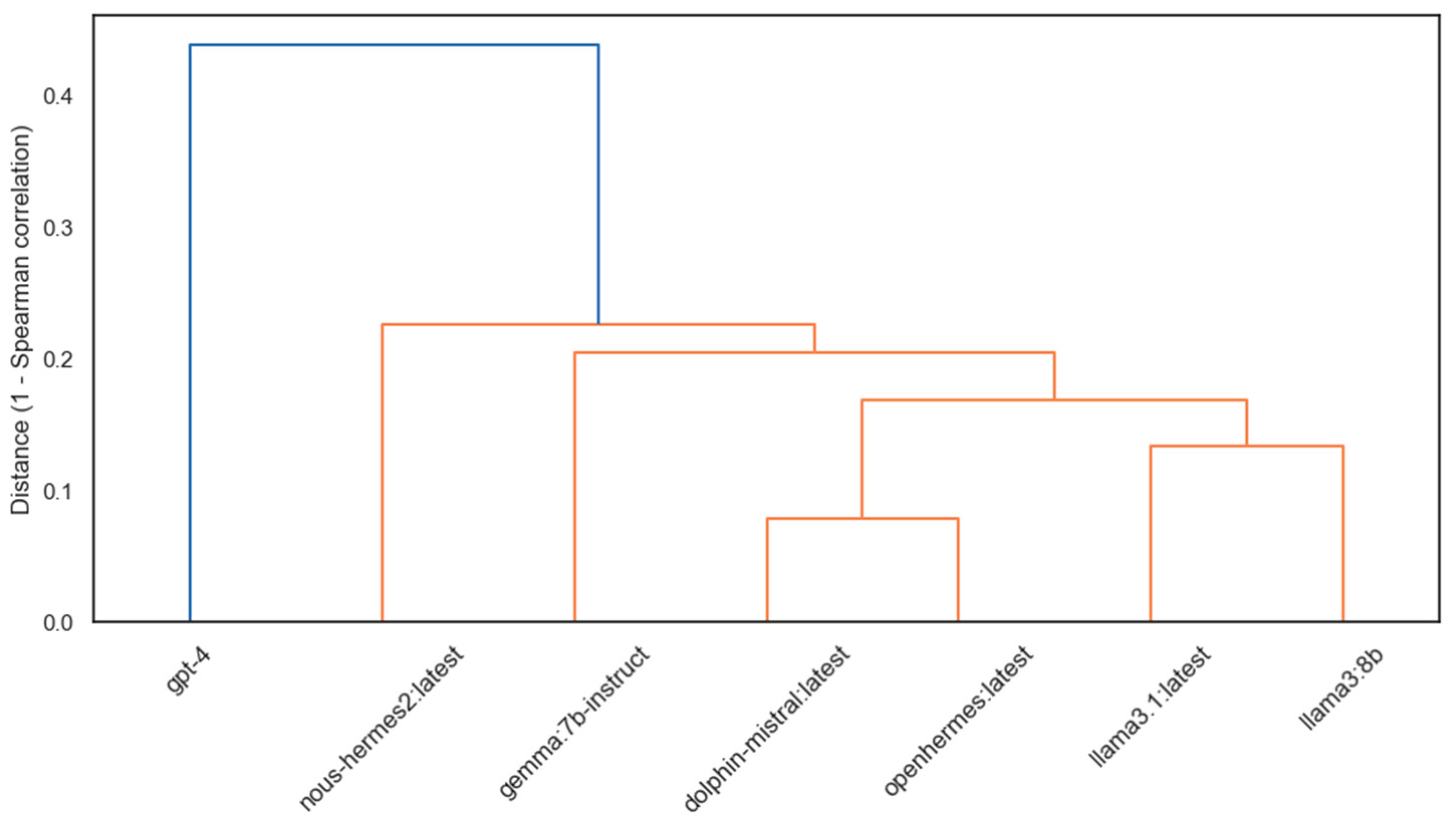

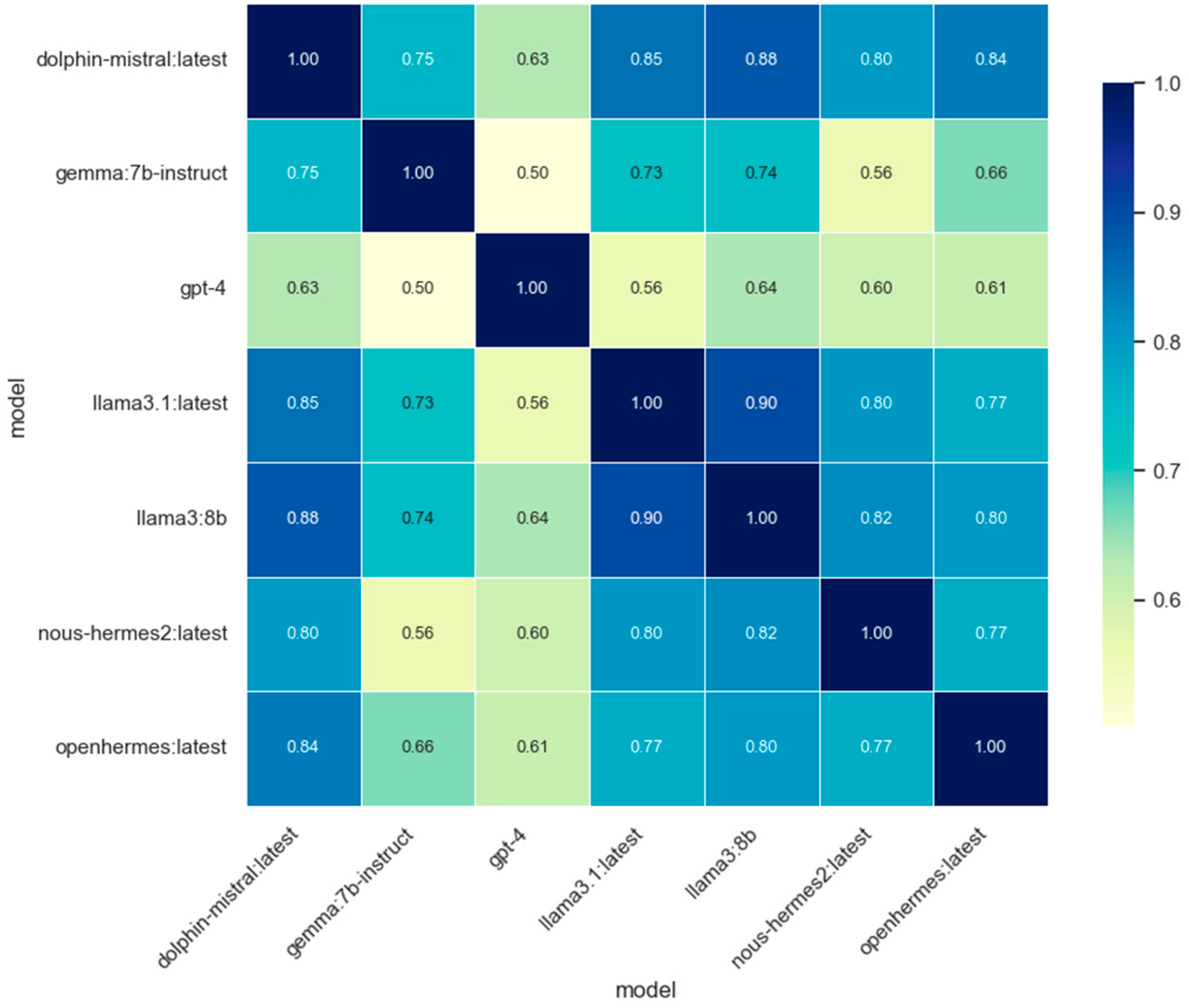

3.4.1. Technical Rubric

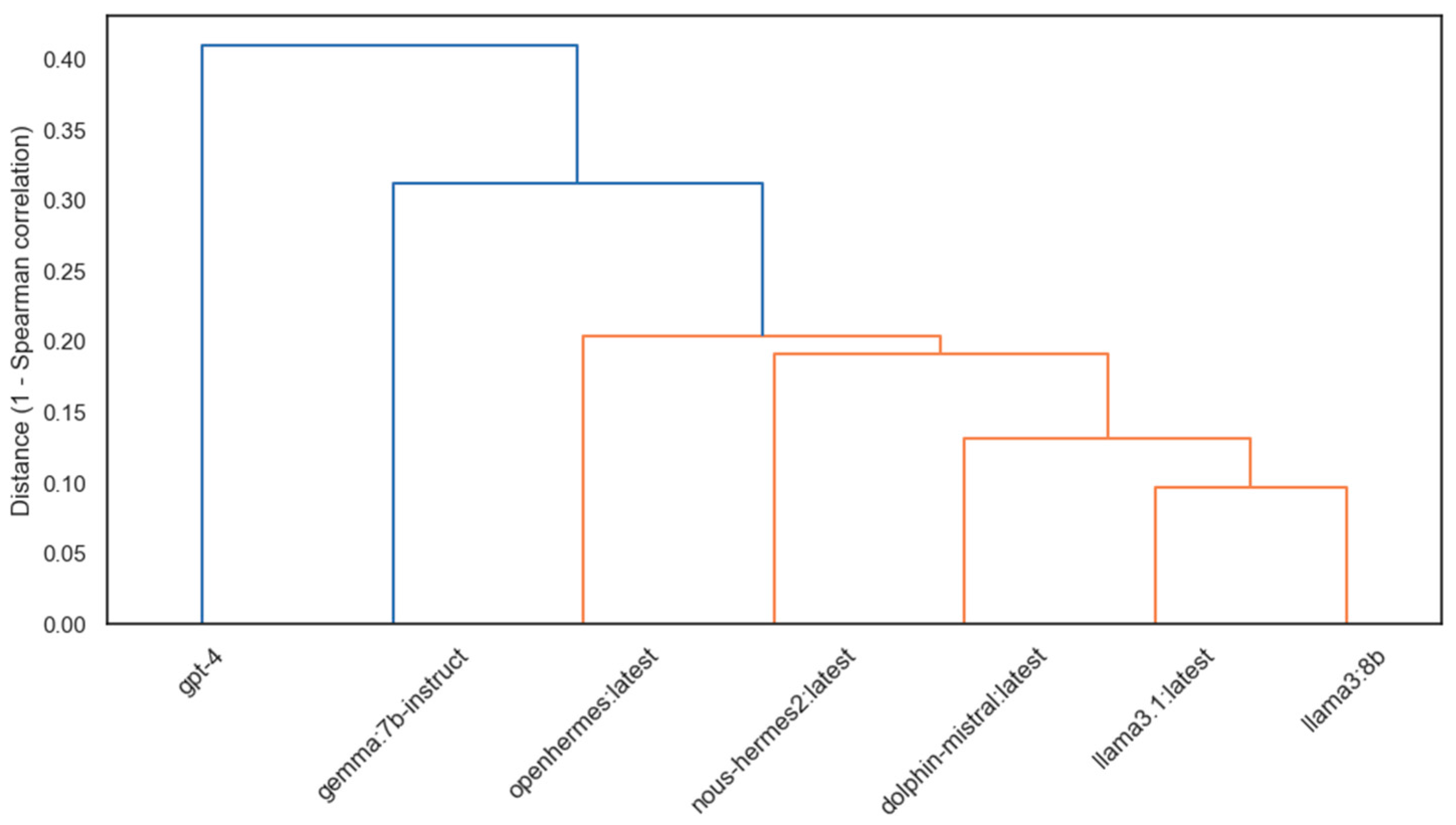

3.4.2. Argumentative Rubric

Interpretation and Implications

3.5. Evaluation Latency and Response Length

4. Discussion

4.1. Interpretation of Results

4.2. Implications for Automated Assessment

4.3. Methodological Considerations

4.4. Limitations

4.5. Future Work

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest


| Question ID | Rubric Type | Question Text |
|---|---|---|
| Q1 | Technical | What is a version control system and why is it important in software development? |
| Q2 | Technical | Explain the difference between narrow AI and general AI. Provide examples for each. |
| Q3 | Technical | What is the Naive Bayes classifier and how is it applied in decision-making? |
| Q4 | Technical | Describe the main steps of the search algorithm used in an expert system. |
| Q5 | Technical | What role does facial recognition play in modern security applications? |
| Q6 | Argumentative | Should the use of facial recognition systems be restricted in public spaces? Justify your answer. |
| Q7 | Argumentative | Should teachers accept the use of GitHub by students instead of uploading assignments to Moodle? |
| Q8 | Argumentative | Can an AI-based system make ethical decisions responsibly? Argue. |
| Q9 | Argumentative | To what extent do online personalization algorithms affect users’ autonomy? |
| Q10 | Argumentative | Is it correct to consider that a machine that passes the Turing Test is “intelligent”? Why or why not? |

| Rubric Type | Criterion 1 | Criterion 2 | Criterion 3 | Criterion 4 |
|---|---|---|---|---|
| Technical | Accuracy | Clarity | Completeness | Terminology |
| Argumentative | Clarity | Coherence | Originality | Dialecticality |
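
The two rubrics above can be written down as a small data structure with a check that a grader's scores cover exactly the expected criteria. This is a sketch under assumptions: the 1 to 10 integer scale and the function names are hypothetical, since the table does not state a score range.

```python
RUBRICS = {
    "Technical": ("Accuracy", "Clarity", "Completeness", "Terminology"),
    "Argumentative": ("Clarity", "Coherence", "Originality", "Dialecticality"),
}

# Assumption: scores lie on a 1-10 scale; the table does not state the range.
SCORE_MIN, SCORE_MAX = 1, 10


def validate_scores(rubric_type, scores):
    """Return a list of problems; an empty list means the scores fit the rubric."""
    expected = RUBRICS[rubric_type]
    problems = []
    for criterion in expected:
        if criterion not in scores:
            problems.append(f"missing criterion: {criterion}")
        elif not SCORE_MIN <= scores[criterion] <= SCORE_MAX:
            problems.append(
                f"out-of-range score for {criterion}: {scores[criterion]}"
            )
    for criterion in scores:
        if criterion not in expected:
            problems.append(f"unexpected criterion: {criterion}")
    return problems


def shared_criteria():
    """Criteria that appear in both rubrics and can be compared across them."""
    return sorted(set(RUBRICS["Technical"]) & set(RUBRICS["Argumentative"]))


print(shared_criteria())
print(validate_scores("Argumentative", {"Clarity": 11, "Accuracy": 3}))
```

Only Clarity appears in both rubrics, so it is the one criterion on which technical and argumentative scores can be compared directly.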

| Model Name | Developer | Size | Deployment | Temperature | Context Length | Execution Environment |
|---|---|---|---|---|---|---|
| GPT-4 | OpenAI | Unknown | API (OpenAI) | N/A | 8192+ | Cloud |
| LLaMA3:8B | Meta | 8B | Ollama (local) | 0.0 | 8192 | Local |
| LLaMA3.1:latest | Meta | ~7B | Ollama (local) | 0.0 | 8192 | Local |
| Nous-Hermes2 | Nous Research | ~7B | Ollama (local) | 0.0 | 8192 | Local |
| OpenHermes:latest | Teknium | ~7B | Ollama (local) | 0.0 | 8192 | Local |
| Dolphin-Mistral:latest | Eric Hartford | ~7B | Ollama (local) | 0.0 | 8192 | Local |
| Gemma:7B-Instruct | Google | ~7B | Ollama (local) | 0.0 | 8192 | Local |
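
The local configuration in the table above (temperature 0.0, context length 8192) could be expressed as request payloads along the following lines. This is a sketch, not the authors' code: the payload follows the general shape of Ollama's `/api/generate` request body, the lowercase model tags are assumed spellings of the table's model names, and the prompt text is a placeholder. No request is sent.

```python
import json

# Assumed Ollama tags for the six locally deployed models in the table.
LOCAL_MODELS = [
    "llama3:8b",
    "llama3.1:latest",
    "nous-hermes2",
    "openhermes:latest",
    "dolphin-mistral:latest",
    "gemma:7b-instruct",
]


def build_request(model, prompt):
    """Build one generation payload with the settings listed in the table."""
    return {
        "model": model,
        "prompt": prompt,
        "stream": False,  # ask for a single JSON response instead of chunks
        "options": {
            "temperature": 0.0,  # temperature from the table
            "num_ctx": 8192,     # context length from the table
        },
    }


# Placeholder prompt; the study's actual prompt is not reproduced here.
prompt = "Grade the student answer against the rubric."
payloads = [build_request(model, prompt) for model in LOCAL_MODELS]

print(json.dumps(payloads[0], indent=2))
```

Building every payload from one function keeps the decoding settings identical across models, so score differences are less likely to stem from configuration differences.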

| Model Name | Overall Mean Score |
|---|---|
| GPT-4 | 5.54 |
| LLaMA3:8B | 5.63 |
| LLaMA3.1:latest | 5.75 |
| Nous-Hermes2 | 6.55 |
| OpenHermes:latest | 7.07 |
| Dolphin-Mistral:latest | 6.41 |
| Gemma:7B-Instruct | 6.72 |
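
With GPT-4 as the reference grader, the overall means above can be turned into a per-model offset. The sketch below uses only the values from the table; the function name and the choice of a simple difference of means are illustrative assumptions, not the paper's drift metric.

```python
MEAN_SCORES = {
    "GPT-4": 5.54,
    "LLaMA3:8B": 5.63,
    "LLaMA3.1:latest": 5.75,
    "Nous-Hermes2": 6.55,
    "OpenHermes:latest": 7.07,
    "Dolphin-Mistral:latest": 6.41,
    "Gemma:7B-Instruct": 6.72,
}


def drift_from_reference(scores, reference="GPT-4"):
    """Difference between each model's mean score and the reference mean."""
    ref = scores[reference]
    return {
        model: round(score - ref, 2)
        for model, score in scores.items()
        if model != reference
    }


drift = drift_from_reference(MEAN_SCORES)
# Print models from closest to farthest from the reference.
for model, delta in sorted(drift.items(), key=lambda item: item[1]):
    print(f"{model:<24} {delta:+.2f}")
```

Every local model's mean lies above GPT-4's. The two LLaMA variants stay closest to the reference, and OpenHermes:latest is farthest at +1.53.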

| Model | Feedback (Excerpt) |
|---|---|
| Gemma:7B-Instruct | “The answer is somewhat vague. It doesn’t clearly explain what a version control system is or how it helps manage changes over time.” |
| LLaMA3.1:latest | “A version control system is not just about storing code, but also tracking changes, collaborating with others, and managing different versions.” |
| Nous-Hermes2 | “The answer is partially accurate as it does not explain what a version control system actually does. It lacks clarity and completeness, as well as the use of proper terminology.” |
| LLaMA3:8B | “This answer provides a good general understanding of version control systems but would benefit from more technical terminology and structured explanation.” |
| OpenHermes:latest | “Your answer is mostly accurate but lacks specific examples and technical terminology. Including version history or branching would have improved completeness.” |
| Dolphin-Mistral:latest | “This is a decent explanation, but it misses key aspects like change tracking and team collaboration. Completeness and terminology are limited.” |
| GPT-4 | “Your answer is partially accurate. It identifies the function of version control systems but lacks details on how changes are tracked and managed.” |

| Model | Feedback (Excerpt) |
|---|---|
| Gemma:7B-Instruct | “The answer lacks a nuanced exploration of the topic. No counterpoints are considered, and the reasoning feels one-sided.” |
| LLaMA3.1:latest | “Your answer is clear in its main points, but could benefit from more originality and consideration of alternative views.” |
| Nous-Hermes2 | “The answer is clear and easy to understand. There is some attempt at structure, but no dialectical development or contrast of perspectives.” |
| LLaMA3:8B | “The answer is clear and easy to follow, but contains no critical discussion or originality. It reads more like a summary than an argument.” |
| OpenHermes:latest | “Your answer is clear and well-structured. However, it lacks depth and fails to address potential counterarguments.” |
| Dolphin-Mistral:latest | “The response makes a valid point but misses the opportunity to explore opposing arguments or demonstrate dialectical reasoning.” |
| GPT-4 | “The answer is concise and grammatically correct but underdeveloped in terms of argumentative structure. It does not consider opposing views or justify claims.” |
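
Feedback excerpts like those in the table above can be compared pairwise once each is mapped to a vector. The sketch below substitutes plain term-count vectors for a learned sentence-embedding model, so it captures only word overlap, not meaning. It shows the cosine-similarity step alone, and the function names are hypothetical.

```python
import math
import re
from collections import Counter

# Three excerpts copied from the table above.
FEEDBACK = {
    "LLaMA3:8B": "The answer is clear and easy to follow, but contains no "
                 "critical discussion or originality. It reads more like a "
                 "summary than an argument.",
    "OpenHermes:latest": "Your answer is clear and well-structured. However, "
                         "it lacks depth and fails to address potential "
                         "counterarguments.",
    "GPT-4": "The answer is concise and grammatically correct but "
             "underdeveloped in terms of argumentative structure. It does not "
             "consider opposing views or justify claims.",
}


def term_counts(text):
    """Lowercase bag-of-words vector (stand-in for a sentence embedding)."""
    return Counter(re.findall(r"[a-z]+", text.lower()))


def cosine_similarity(a, b):
    """Cosine of the angle between two sparse count vectors."""
    dot = sum(a[term] * b[term] for term in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


vectors = {model: term_counts(text) for model, text in FEEDBACK.items()}
reference = vectors["GPT-4"]
for model, vector in vectors.items():
    if model != "GPT-4":
        print(f"{model:<20} {cosine_similarity(vector, reference):.3f}")
```

Swapping `term_counts` for a real embedding function would leave the similarity computation unchanged, which is why the two steps are kept separate here.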

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Anghel, C.; Anghel, A.A.; Pecheanu, E.; Cocu, A.; Craciun, M.V.; Iacobescu, P.; Balau, A.S.; Andrei, C.A. GraderAssist: A Graph-Based Multi-LLM Framework for Transparent and Reproducible Automated Evaluation. Informatics 2025, 12, 123. https://doi.org/10.3390/informatics12040123

