Heart Attack Risk Prediction via Stacked Ensemble Metamodeling: A Machine Learning Framework for Real-Time Clinical Decision Support

Abstract

1. Introduction

2. Related Work

2.1. Machine Learning Models

2.2. System Applications

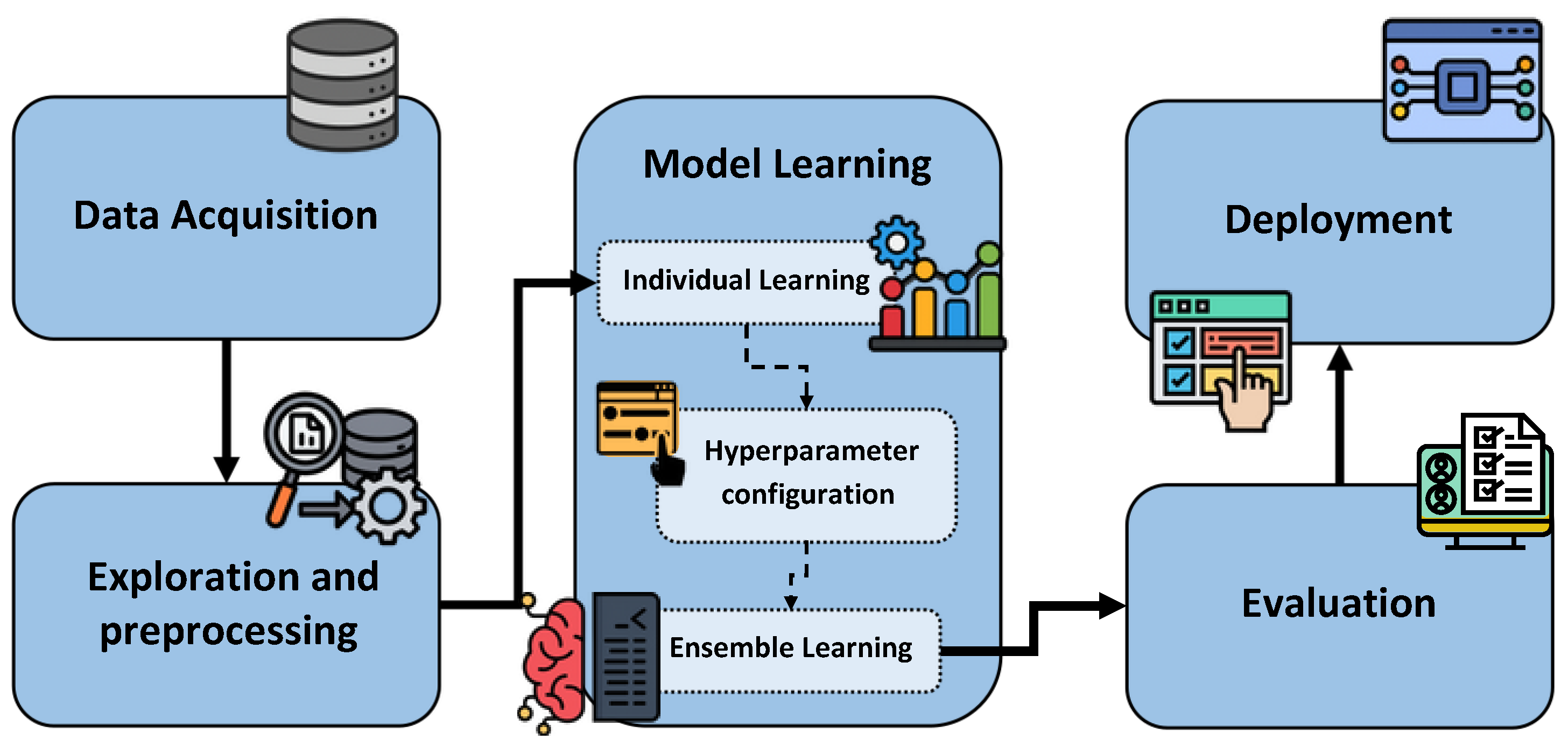

3. Proposed Methodology

3.1. Data Acquisition

- Dataset 1. This dataset was obtained from [35]. It was collected at Zheen Hospital in Erbil, Iraq, from January to May 2019. The dataset contains 1319 instances and includes nine key attributes: age, gender, heart rate, systolic blood pressure, diastolic blood pressure, blood sugar, CK-MB, troponin, and an output label indicating the presence (1) or absence (0) of a heart attack. Some features are encoded as binary values; for example, gender is represented as male = 1 and female = 0, while blood sugar is coded as 1 if it exceeds 120 and 0 otherwise. A fragment of Dataset 1 is shown in Table 1.

- Dataset 2. This dataset was obtained online from [36]. It was compiled from four medical databases (Cleveland, Hungary, Switzerland, and Long Beach V.) and consolidated into a single resource containing 1025 instances. Originally, it included 76 attributes; however, this version comprises a curated subset of 14 key features. Commonly used in cardiovascular studies, the dataset contains variables such as age, sex, chest pain type, resting blood pressure, cholesterol, fasting blood sugar, and electrocardiographic results. The main objective is to predict the likelihood of a patient having a heart attack based on these features, with the “target” attribute indicating the risk level. A fragment of Dataset 2 is shown in Table 2.

- Dataset 3. This dataset was obtained from [37]. It was collected in 2015 at the Faisalabad Institute of Cardiology and the Allied Hospital in Faisalabad, Punjab, Pakistan. It contains 299 instances and includes 13 features that provide clinical and lifestyle information, such as diabetes, creatinine phosphokinase, and platelet counts, among others. A fragment of Dataset 3 is shown in Table 3.

3.2. Exploration and Preprocessing

- Data verification. A general inspection of the dataset structure was conducted to identify potential data integrity issues, such as columns with missing values or data types incompatible with the classification models. Additionally, the target variable of Dataset 2 was binarized for binary classification, assigning 1 if its value is ≥50 and 0 otherwise.

- Deduplication. Duplicate rows were identified and removed from each dataset. Removing repeated entries reduces noise and prevents the same record from being counted more than once during training and evaluation.
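The verification and deduplication steps can be sketched with pandas as follows. This is a minimal sketch, not the authors' code: the helper name `verify_and_deduplicate`, the column name `target`, the toy data frame, and the 0.5 threshold used in the example call are all hypothetical. The binarization threshold is passed in as a parameter so it can be matched to the scale of the target column in the real file.

```python
import pandas as pd


def verify_and_deduplicate(df: pd.DataFrame, target: str, threshold: float) -> pd.DataFrame:
    """Report integrity issues, binarize the target, and drop duplicate rows."""
    # Columns that contain missing values.
    missing = df.isna().sum()
    print("Missing values per column:\n", missing[missing > 0])

    # Non-numeric columns would need encoding before most scikit-learn models.
    non_numeric = df.select_dtypes(exclude="number").columns.tolist()
    print("Non-numeric columns:", non_numeric)

    out = df.copy()
    # Binarize the target: 1 if the value is >= threshold, 0 otherwise.
    out[target] = (out[target] >= threshold).astype(int)

    # Remove exact duplicate rows, keeping the first occurrence.
    before = len(out)
    out = out.drop_duplicates().reset_index(drop=True)
    print(f"Removed {before - len(out)} duplicate rows")
    return out


# Hypothetical toy data: the second and third rows are exact duplicates.
toy = pd.DataFrame({
    "age": [52, 53, 53, 70],
    "chol": [212, 203, 203, 174],
    "target": [0.23, 0.71, 0.71, 0.55],
})
clean = verify_and_deduplicate(toy, target="target", threshold=0.5)
print(clean)
```

Binarizing before deduplication follows the order described above. Note that this order can make two rows identical when they differed only in their raw target value.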

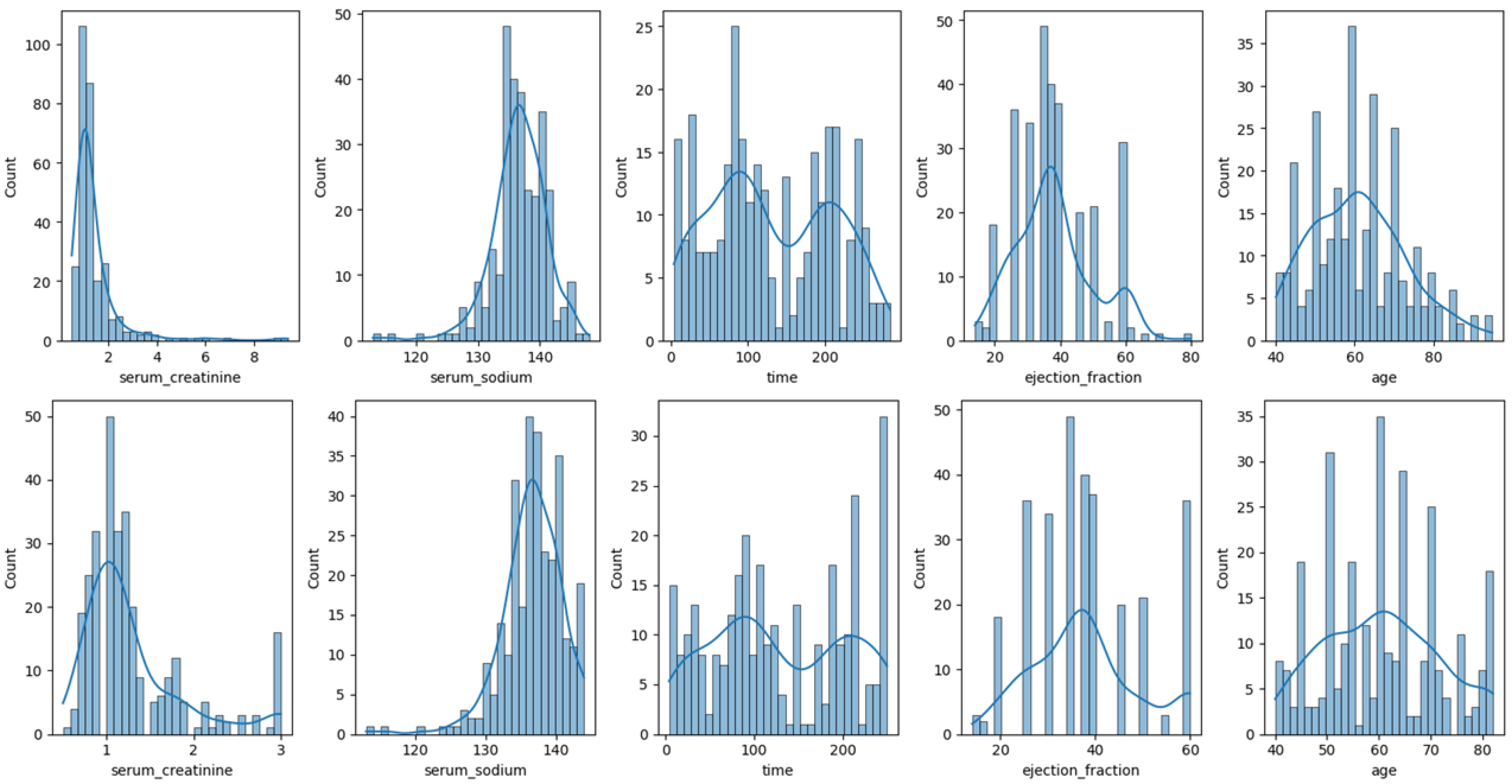

- Data distribution analysis. To better understand the nature of the data and identify possible anomalies, such as outliers, an exploratory analysis was conducted on all numerical variables in the dataset. Distribution plots were used to detect skewness and outliers and to identify features that might benefit from a transformation.

- Data transformation. A data transformation was performed to reduce the influence of outliers and skewness. Two techniques were applied:

  - Winsorization. Following Dash et al. [39], we apply winsorization to handle outliers. This technique caps extreme values by replacing the lowest and highest 1% of data points with the nearest remaining values. It reduces the influence of outliers on subsequent analyses while keeping every record, resulting in a more robust dataset.

  - Log transformation. After winsorization, a log transformation is applied using np.log1p from NumPy, which computes the natural logarithm of (1 + value). This technique reduces right skew, stabilizes variance, and makes features more suitable for modeling algorithms that assume normality [40].
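The two transformations can be chained as in the sketch below. It assumes that `scipy.stats.mstats.winsorize` is the SciPy routine used, since the text names only SciPy. It applies symmetric 1% limits as described, and the helper name `winsorize_then_log` and the toy data are hypothetical.

```python
import numpy as np
from scipy.stats.mstats import winsorize


def winsorize_then_log(values, limit: float = 0.01) -> np.ndarray:
    """Cap the lowest and highest `limit` fraction of values, then apply log(1 + x)."""
    # winsorize returns a masked array in which the lowest/highest `limit`
    # fraction is replaced by the nearest remaining value on each side.
    clipped = np.asarray(winsorize(np.asarray(values, dtype=float), limits=(limit, limit)))
    # log1p computes log(1 + x) accurately for small x; it requires x > -1.
    return np.log1p(clipped)


# Hypothetical skewed feature: the values 0..98 plus one extreme value (100 values in total).
raw = np.append(np.arange(99, dtype=float), 10_000.0)
clipped = np.asarray(winsorize(raw, limits=(0.01, 0.01)))
transformed = winsorize_then_log(raw)
print(clipped.min(), clipped.max())          # extremes after winsorization
print(transformed.min(), transformed.max())  # extremes after log1p
```

With 100 values, 1% limits affect exactly one value at each end. The smallest value (0) is raised to the next smallest (1), and the outlier (10,000) is lowered to 98. `log1p` then compresses the remaining range.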

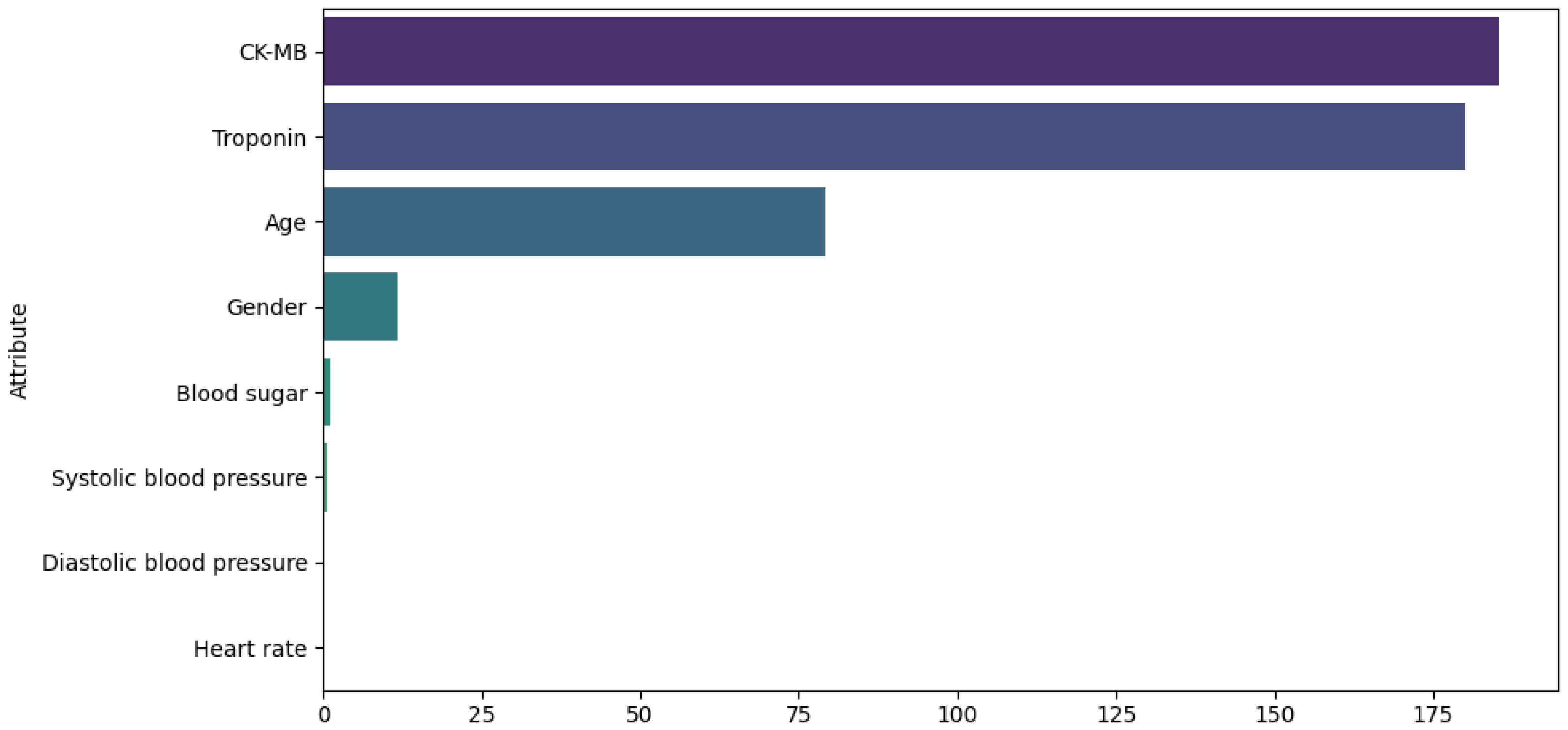

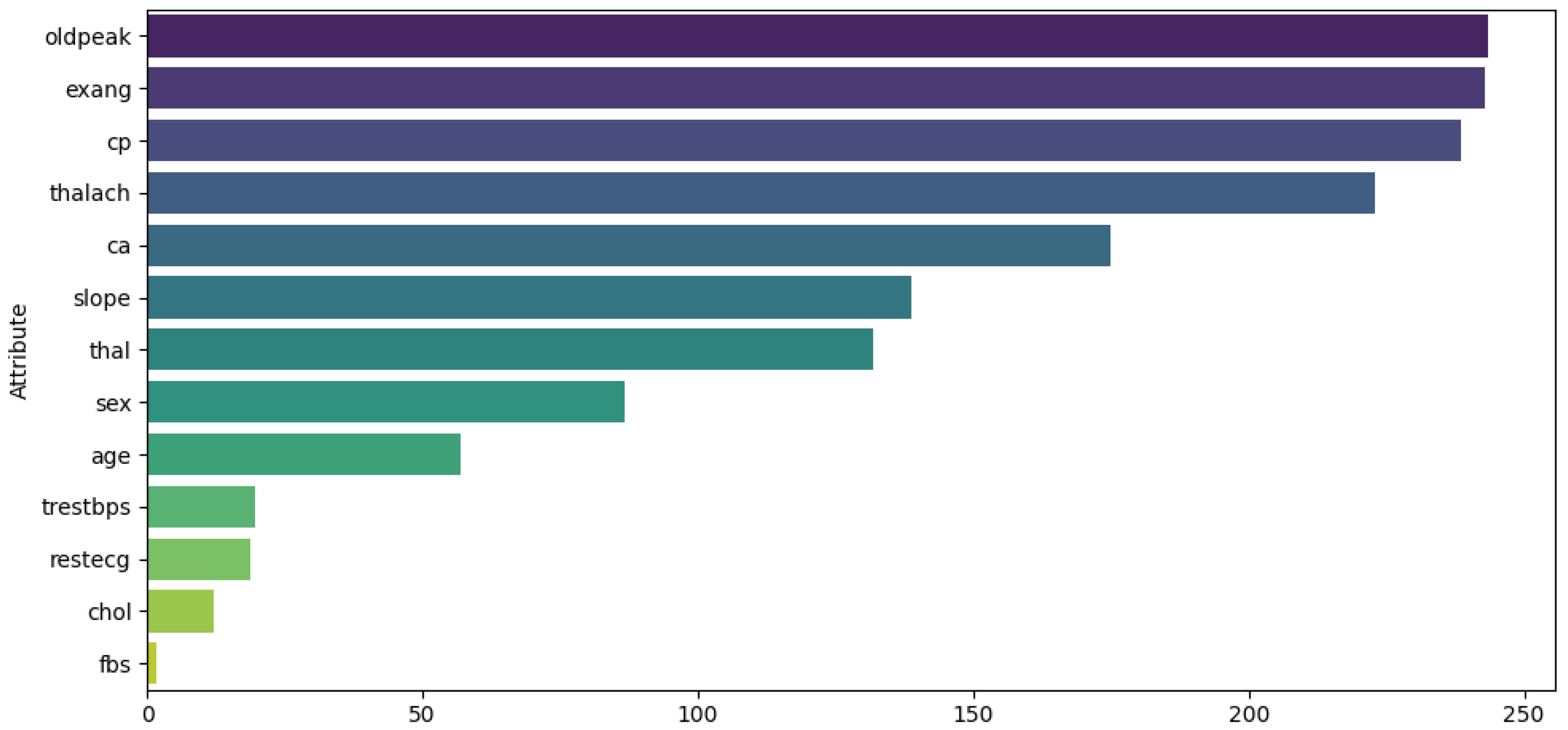

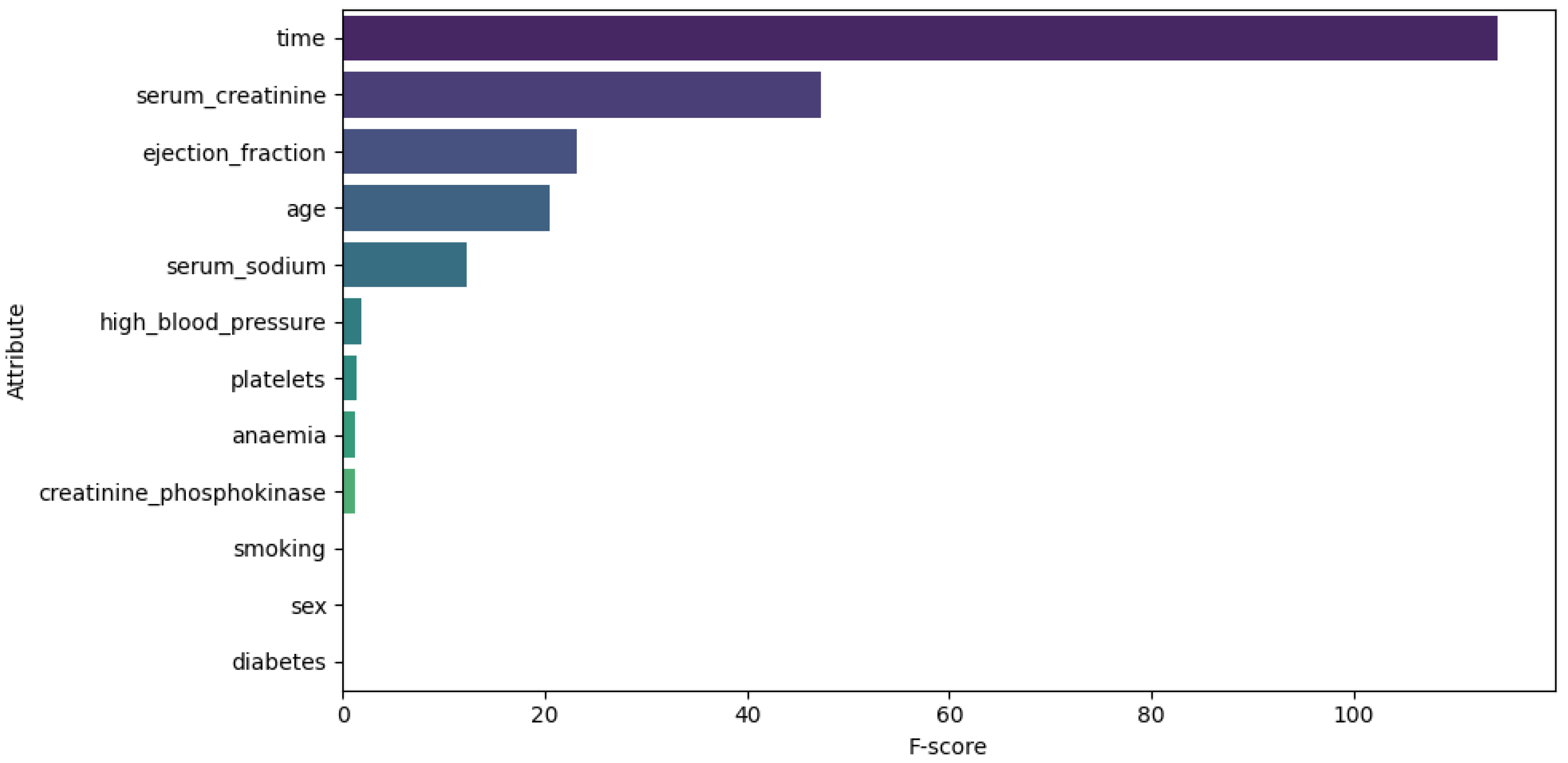

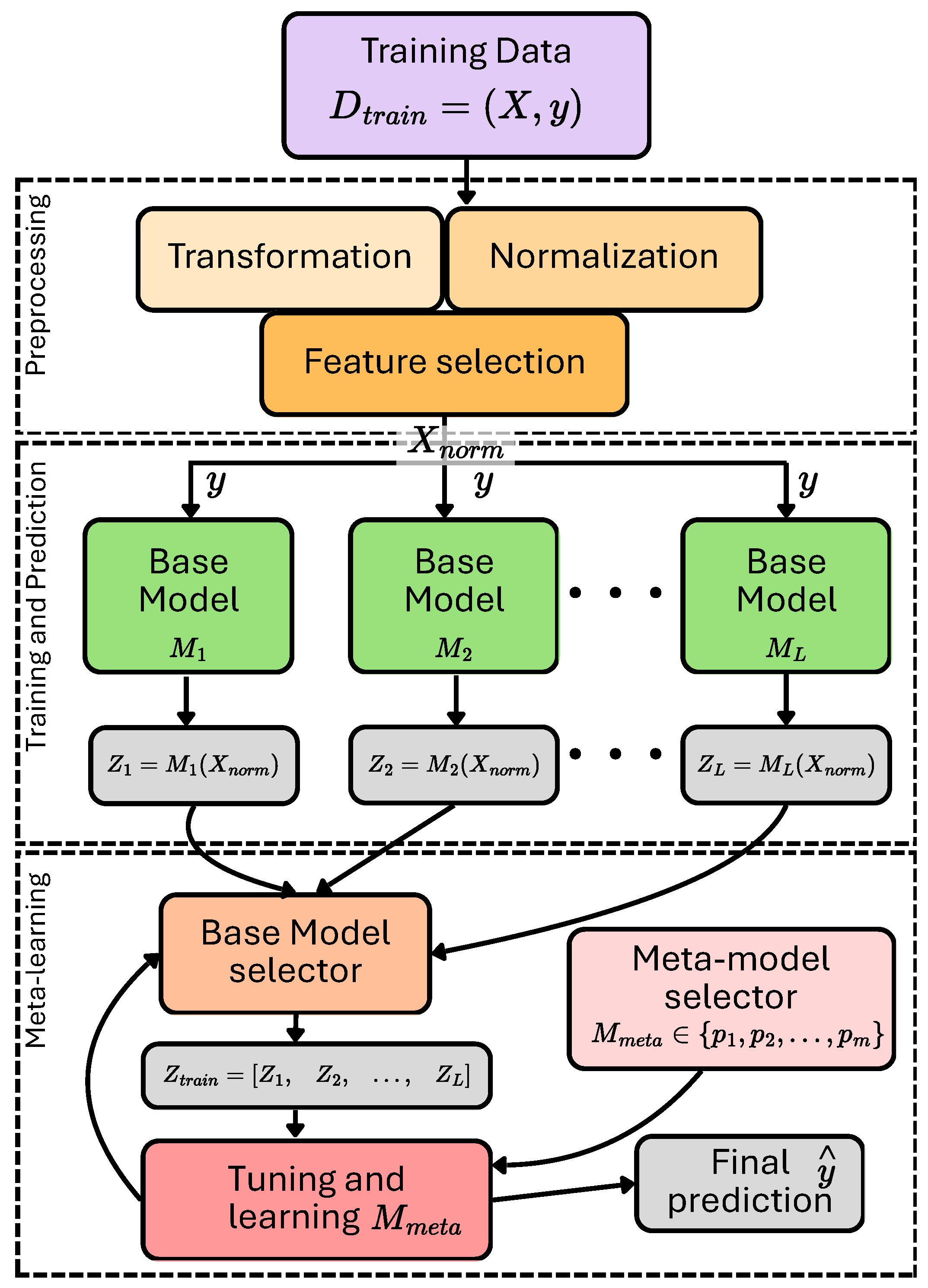

These techniques were applied exclusively to continuous attributes to reduce outliers and skewness. For Dataset 1, the attributes analyzed were heart rate, CK-MB, diastolic blood pressure, blood sugar, and troponin; Figure 2 illustrates their distributions before (top) and after (bottom) the transformations. For Dataset 2, only the log transformation was applied, to the trestbps and chol attributes; Figure 3 illustrates their distributions before (top) and after (bottom) the transformation. For Dataset 3, transformations were applied to the time, ejection fraction, serum creatinine, serum sodium, and age features; Figure 4 illustrates their distributions before (top) and after (bottom) the transformations.

- Feature selection. The ANOVA F-test was used to identify the features with the greatest discriminatory power with respect to the target variable [41]. For each feature, the F-statistic compares the variance between the class means with the variance within the classes, so a larger value indicates that the feature separates the classes more strongly. The features were ranked by this statistic and the ranking was plotted. The final subset was chosen at the inflection point of this plot, following the elbow method, to retain only highly informative features. Figure 5, Figure 6, and Figure 7 compare the most important attributes for Datasets 1, 2, and 3, respectively. For Dataset 1, the selected attributes were CK-MB, troponin, age, and gender. For Dataset 2, the selected attributes were exang, cp, oldpeak, thalach, and ca. For Dataset 3, the selected attributes were time, ejection fraction, serum creatinine, serum sodium, and age.

- Data split. The predictor variables were separated from the target variable, so that the model learns to predict the target using only the explanatory variables. The data were then randomly divided into a training subset (80%) and a test subset (20%). Random assignment makes both subsets representative samples of the same data.

- Normalization. Because some machine learning algorithms are sensitive to the scale of the variables, the input features were standardized: each feature was centered on its mean and divided by its standard deviation. To avoid data leakage, the mean and standard deviation were computed exclusively from the training set. The same parameters were then applied to the test data and to any new data. This practice supports proper generalization and keeps the evaluation objective and consistent [16].
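The feature-selection, split, and normalization steps can be chained as in the following sketch. It is illustrative only:

- Synthetic data from `make_classification` stands in for the three datasets.
- The hypothetical `anova_f` helper recomputes the F-statistic to show what `sklearn.feature_selection.f_classif` calculates.
- The "largest gap between consecutive ranked scores" rule is a hypothetical automatic stand-in for the visual elbow inspection described above.
- The `random_state` values are arbitrary.

```python
import numpy as np
from sklearn.datasets import make_classification
from sklearn.feature_selection import f_classif
from sklearn.model_selection import train_test_split
from sklearn.preprocessing import StandardScaler


def anova_f(X: np.ndarray, y: np.ndarray) -> np.ndarray:
    """One-way ANOVA F-statistic per feature: between-class / within-class mean squares."""
    classes = np.unique(y)
    n, k = X.shape[0], len(classes)
    grand_mean = X.mean(axis=0)
    ss_between = sum((y == c).sum() * (X[y == c].mean(axis=0) - grand_mean) ** 2 for c in classes)
    ss_within = sum(((X[y == c] - X[y == c].mean(axis=0)) ** 2).sum(axis=0) for c in classes)
    return (ss_between / (k - 1)) / (ss_within / (n - k))


# Hypothetical synthetic data standing in for one of the datasets.
X, y = make_classification(n_samples=500, n_features=8, n_informative=3,
                           n_redundant=0, random_state=0)

f_manual = anova_f(X, y)
f_sklearn, _ = f_classif(X, y)

# Rank features by F-score, highest first.
order = np.argsort(f_sklearn)[::-1]
sorted_scores = f_sklearn[order]

# Hypothetical elbow rule: cut where the drop between consecutive ranked scores is largest.
cut = int(np.argmax(sorted_scores[:-1] - sorted_scores[1:])) + 1
selected = order[:cut]
print("Ranked F-scores:", np.round(sorted_scores, 1))
print("Selected feature indices:", selected)

# 80/20 random split on the selected features.
X_train, X_test, y_train, y_test = train_test_split(
    X[:, selected], y, test_size=0.2, random_state=42)

# Fit the scaler on the training split only, then reuse it unchanged for the test data.
scaler = StandardScaler().fit(X_train)
X_train_s = scaler.transform(X_train)
X_test_s = scaler.transform(X_test)
```

As in the order described above, feature selection here runs before the split. At deployment time, the same fitted `scaler` would be applied to new patient records before prediction.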

3.3. Model Learning

3.3.1. Individual Learning

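- K-Nearest Neighbors (KNN): Classifies a sample (or predicts a value) from the training points closest to it, its neighbors [42]. The classification rule is presented in Equation (1):

  $$\hat{y}(x) = \arg\max_{c \in C} \sum_{x_i \in N_k(x)} \mathbb{1}(y_i = c) \tag{1}$$

  where $\hat{y}(x)$ is the predicted class of a sample $x$, $C$ is the set of labels or classes, and $k$ is the number of neighbors. $N_k(x)$ is the subset of the input $X$ that contains the $k$ points closest to $x$, using a distance function such as the Euclidean distance. $y_i$ is the label of $x_i$, and $\mathbb{1}(\cdot)$ is the indicator function. Thus, $\hat{y}(x)$ is determined by the majority vote of the labels in $N_k(x)$.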

- Support Vector Machine (SVM): Its objective is to find the optimal hyperplane that separates the data into different classes by maximizing the margin, that is, the distance between the hyperplane and the closest points of each class [43]. A concise SVM decision function is presented in Equation (2):

  $$f(x) = \operatorname{sign}\left(w^{\top}\phi(x) + b\right) \tag{2}$$

  where $f(x)$ is the decision function given by the best-fitting (maximum-margin) hyperplane, $w$ and $b$ are the weight vector and bias, respectively, and $\phi$ is a non-linear mapping function.

- Decision Tree (DT): DTs divide the data into branches based on questions or conditions on the features, creating a tree-like structure. At each internal node, the algorithm tests a feature of the sample, and the prediction is read from the leaf that the sample reaches [44]. Given a dataset $D$, the tree is recursively split into two parts based on a feature $i$ and a threshold $t$: $D_{\text{left}} = \{(x, y) \in D : x_i \le t\}$ and $D_{\text{right}} = D \setminus D_{\text{left}}$. The splitting criterion is defined by a function $S$, as shown in Equation (3):

  $$S(i, t) = \frac{|D_{\text{left}}|}{|D|}\, G(D_{\text{left}}) + \frac{|D_{\text{right}}|}{|D|}\, G(D_{\text{right}}) \tag{3}$$

  where $G$ is an impurity measure or loss function, such as Gini impurity, entropy, or variance (for variance reduction). The best split is then obtained as $(i^{*}, t^{*}) = \arg\min_{i,\,t} S(i, t)$.

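- Multi-layer Perceptron (MLP): It is a type of artificial neural network that consists of multiple layers. The input layer receives the dataset features, the hidden layers apply transformations using neurons and activation functions, and the output layer generates the final prediction [45]. The output of layer $l$ is defined recursively as shown in Equations (4)–(8):

  $$\begin{aligned} h^{(0)} &= x \\ z^{(l)} &= W^{(l)} h^{(l-1)} + b^{(l)}, \qquad l = 1, \dots, L \\ h^{(l)} &= \sigma\big(z^{(l)}\big) \\ \hat{y} &= h^{(L)} \end{aligned}$$

  where $x$ is a sample from the input $X$, $W^{(l)}$ is the weight matrix for layer $l$, $b^{(l)}$ is the bias vector, $z^{(l)}$ is the pre-activation, $\sigma$ is a non-linear activation function (e.g., sigmoid), $L$ is the number of layers, and $\hat{y}$ is the prediction.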

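- Logistic Regression (LR): This algorithm is based on probability. It uses the sigmoid function to map a linear combination of the input values to a probability between 0 and 1. A sample is assigned to the positive class when this probability exceeds a decision threshold [46]. The sigmoid function and the probabilistic model are presented in Equations (9) and (10):

  $$\sigma(z) = \frac{1}{1 + e^{-z}} \tag{9}$$

  $$h_{\theta}(x) = \sigma\big(\theta^{\top} x\big) = P(y = 1 \mid x; \theta) \tag{10}$$

  where $\theta$ is the parameter vector and $h_{\theta}(x)$ is the hypothesis obtained with the given parameters.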

- Naïve Bayes (NB): It applies Bayes’ theorem under the naïve assumption that all features are conditionally independent given the class label [47]. The prediction is given by Equation (11):

  $$\hat{y} = \arg\max_{c \in C} P(c) \prod_{j=1}^{d} P(x_j \mid c) \tag{11}$$

  where $d$ is the number of features (the dimension of the feature space), $x_j$ is the $j$-th feature of the sample $x$, and the maximum a posteriori (MAP) rule determines the class prediction.
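The decision rules in Equations (1) and (9)–(11) can be illustrated with a few lines of NumPy. This is a minimal sketch, not the implementation used in the study, which relied on scikit-learn. The helper names (`knn_predict`, `sigmoid`, `lr_probability`, `gaussian_nb_predict`) and the toy data are hypothetical. The Naïve Bayes function also assumes Gaussian class-conditional likelihoods $P(x_j \mid c)$, a choice that Equation (11) leaves open.

```python
import numpy as np


def knn_predict(X_train, y_train, x, k=3):
    """Eq. (1): majority vote among the k nearest training points (Euclidean distance)."""
    dists = np.linalg.norm(X_train - x, axis=1)
    neighbors = y_train[np.argsort(dists)[:k]]
    labels, counts = np.unique(neighbors, return_counts=True)
    return labels[np.argmax(counts)]


def sigmoid(z):
    """Eq. (9): maps any real number into (0, 1)."""
    return 1.0 / (1.0 + np.exp(-z))


def lr_probability(theta, x):
    """Eq. (10): P(y = 1 | x; theta); x carries a leading 1 for the intercept."""
    return sigmoid(theta @ x)


def gaussian_nb_predict(X_train, y_train, x):
    """Eq. (11) with Gaussian likelihoods, evaluated in log space for stability."""
    best_class, best_score = None, -np.inf
    for c in np.unique(y_train):
        Xc = X_train[y_train == c]
        prior = len(Xc) / len(X_train)
        mu, var = Xc.mean(axis=0), Xc.var(axis=0) + 1e-9  # small term avoids division by zero
        # Sum of log P(x_j | c): the logarithm of the product in Eq. (11).
        log_lik = -0.5 * np.sum(np.log(2 * np.pi * var) + (x - mu) ** 2 / var)
        score = np.log(prior) + log_lik
        if score > best_score:
            best_class, best_score = c, score
    return best_class


# Hypothetical toy data: two well-separated clusters.
X_train = np.array([[0.0, 0.0], [0.2, 0.1], [0.1, 0.3],
                    [3.0, 3.0], [3.2, 2.9], [2.9, 3.1]])
y_train = np.array([0, 0, 0, 1, 1, 1])
query = np.array([2.8, 3.0])

print(knn_predict(X_train, y_train, query, k=3))
print(gaussian_nb_predict(X_train, y_train, query))
print(lr_probability(np.array([-3.0, 1.0, 1.0]), np.array([1.0, 2.8, 3.0])))
```

In the logistic example, $\theta^{\top}x = -3 + 2.8 + 3 = 2.8$, so the probability is $\sigma(2.8) \approx 0.94$. That value is above the usual 0.5 threshold.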

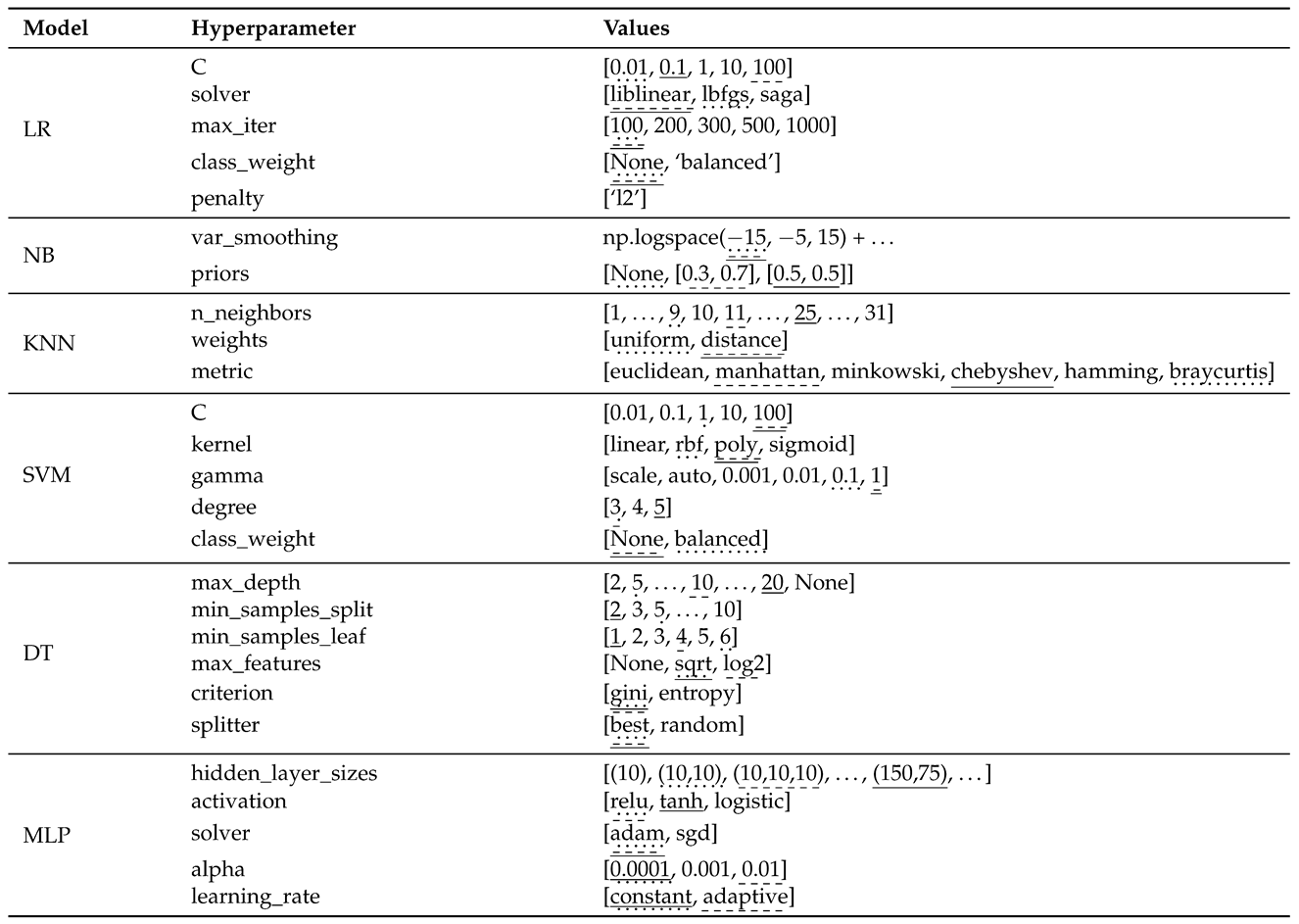
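Equation (3) and the layer recursion of the MLP can likewise be sketched in NumPy. This is an illustrative sketch under stated assumptions:

- The split criterion uses Gini impurity as $G$ and searches only thresholds equal to observed feature values.
- The MLP applies the sigmoid activation in every layer, with randomly initialized, untrained weights.
- All function names are hypothetical.

```python
import numpy as np


def gini(y):
    """Gini impurity G(D) = 1 - sum_c p_c^2 (0 for an empty set)."""
    if len(y) == 0:
        return 0.0
    _, counts = np.unique(y, return_counts=True)
    p = counts / counts.sum()
    return 1.0 - np.sum(p ** 2)


def split_score(X, y, i, t):
    """Eq. (3): size-weighted impurity of the left (x_i <= t) and right (x_i > t) parts."""
    left = X[:, i] <= t
    n = len(y)
    return left.sum() / n * gini(y[left]) + (~left).sum() / n * gini(y[~left])


def best_split(X, y):
    """Exhaustive search for (i*, t*) = argmin S(i, t) over observed thresholds."""
    candidates = ((i, t) for i in range(X.shape[1]) for t in np.unique(X[:, i]))
    return min(candidates, key=lambda it: split_score(X, y, *it))


def mlp_forward(x, weights, biases):
    """MLP recursion: h0 = x, h_l = sigmoid(W_l h_{l-1} + b_l), prediction = h_L."""
    h = x
    for W, b in zip(weights, biases):
        h = 1.0 / (1.0 + np.exp(-(W @ h + b)))  # sigmoid activation
    return h


# Hypothetical toy data: low values of feature 1 belong to class 0, high values to class 1.
X = np.array([[0.3, 0.1], [0.9, 0.2], [0.4, 0.8], [0.8, 0.9]])
y = np.array([0, 0, 1, 1])
print(best_split(X, y))

rng = np.random.default_rng(0)
weights = [rng.normal(size=(3, 2)), rng.normal(size=(1, 3))]  # layer sizes 2 -> 3 -> 1
biases = [np.zeros(3), np.zeros(1)]
print(mlp_forward(np.array([0.5, -1.0]), weights, biases))
```

The search returns feature 1 with threshold 0.2, for which both children are pure and $S(i, t) = 0$. Real tree learners usually place thresholds midway between consecutive values, but the resulting partition is the same.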

3.3.2. Hyperparameter Configuration

3.3.3. Ensemble Learning

3.4. Evaluation

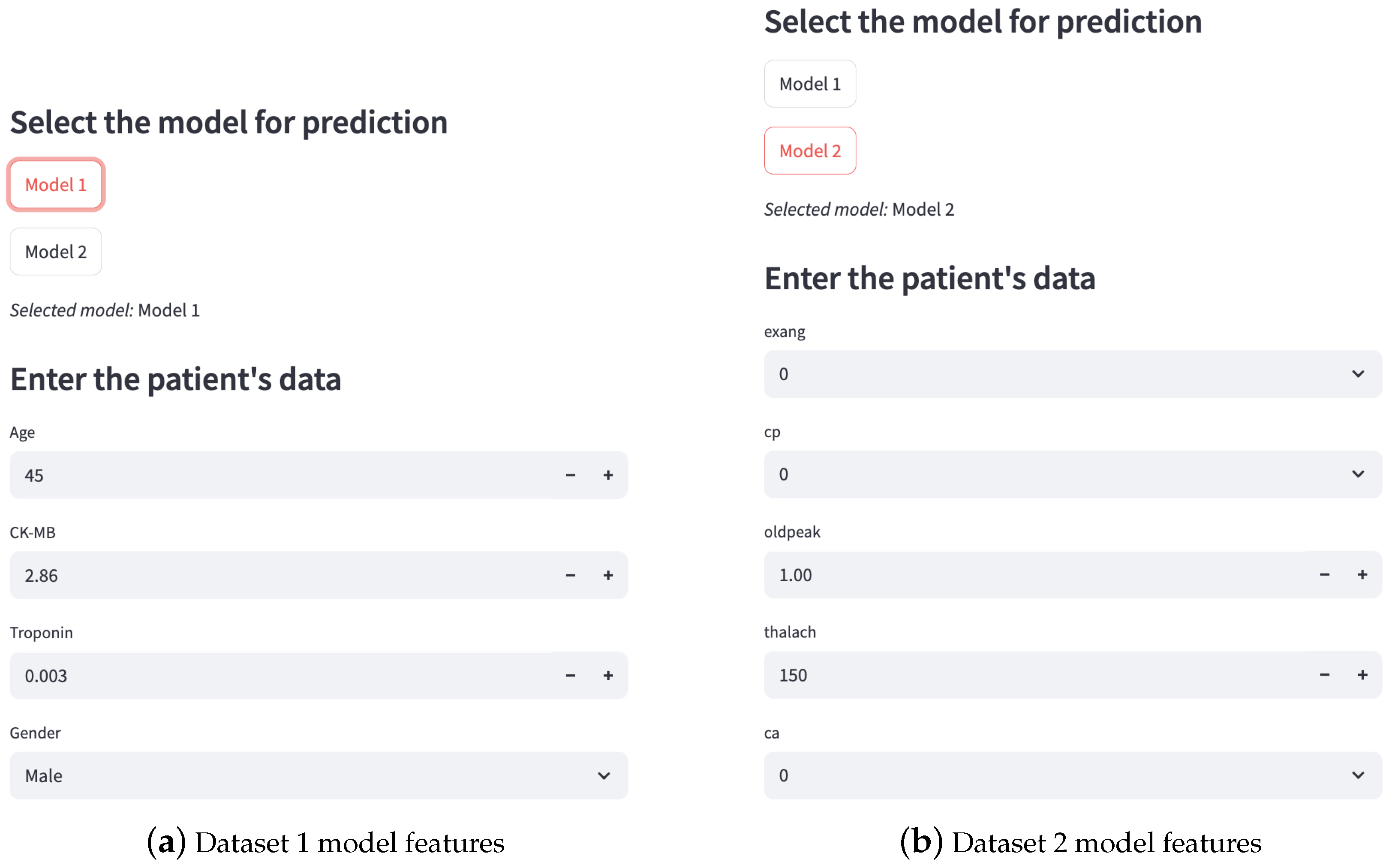

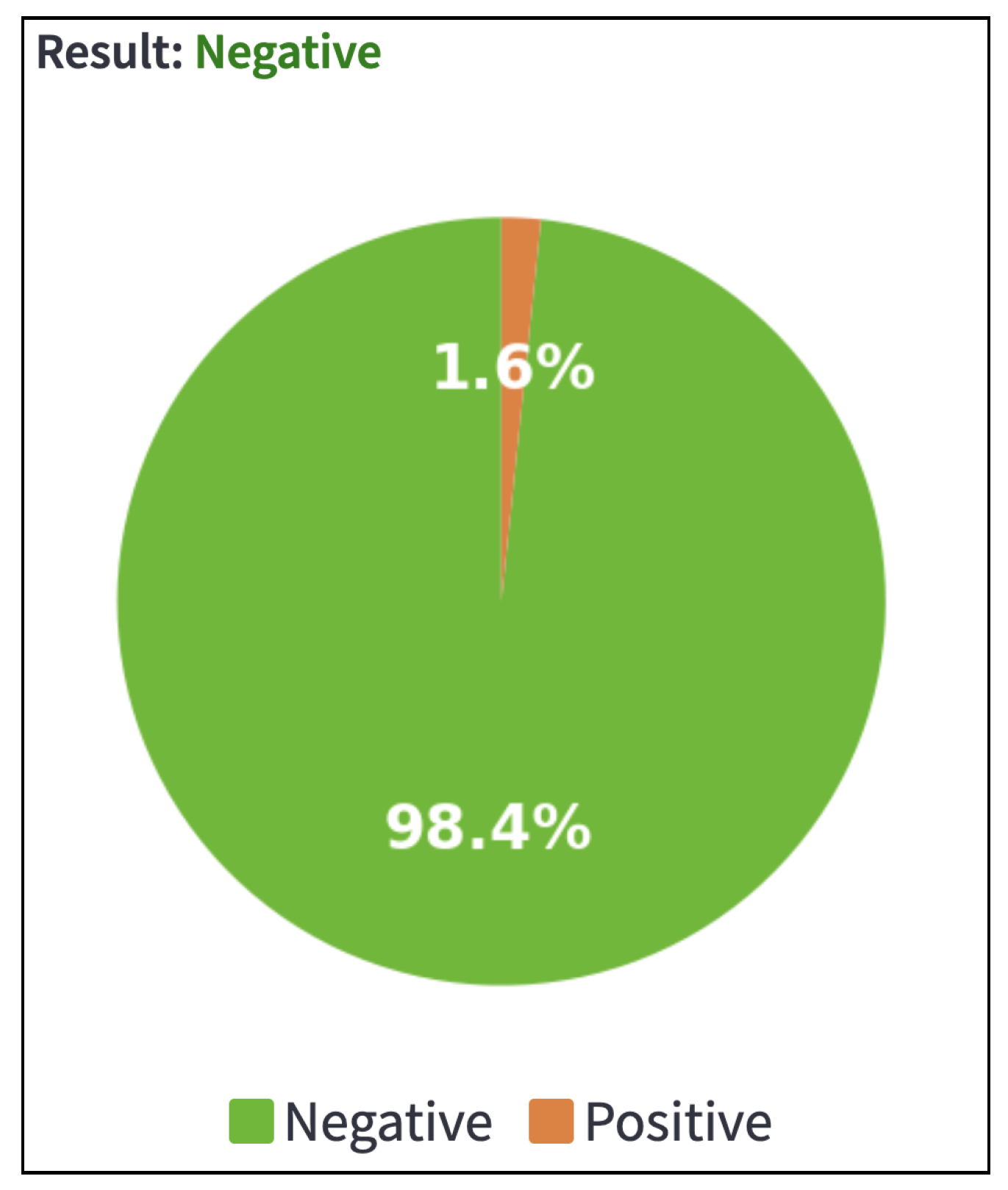

3.5. Deployment

4. Experiments

4.1. Dataset and Metrics

- True Positives (TP): Cases correctly classified as positive.

- False Positives (FP): Cases incorrectly classified as positive.

- True Negatives (TN): Cases correctly classified as negative.

- False Negatives (FN): Cases incorrectly classified as negative.
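These four counts determine the threshold-based metrics used in the evaluation. The sketch below uses the standard definitions for the positive class. The counts are hypothetical, and returning 0.0 when a denominator is zero is an assumed convention; scikit-learn exposes the same choice through the `zero_division` parameter of its metric functions.

```python
def classification_metrics(tp: int, fp: int, tn: int, fn: int) -> dict:
    """Accuracy, precision, recall, and F1 for the positive class from confusion counts."""
    accuracy = (tp + tn) / (tp + fp + tn + fn)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return {"accuracy": accuracy, "precision": precision, "recall": recall, "f1": f1}


# Hypothetical counts for a test set of 200 patients.
m = classification_metrics(tp=90, fp=10, tn=80, fn=20)
print(m)
```

With these counts:

- Accuracy is 170/200 = 0.85.
- Precision is 90/100 = 0.90.
- Recall is 90/110 ≈ 0.818.
- F1 equals 2·90/(2·90 + 10 + 20) ≈ 0.857.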

4.2. Implementation Details

- Exploration and preprocessing: The libraries pandas, numpy, matplotlib, and seaborn (versions 2.3, 2.3.2, 3.10.3, and 0.13.2, respectively) were used for data loading, exploration, visualization, and cleaning. Feature scaling was performed using StandardScaler, and winsorization techniques were applied with scipy (version 1.16.1).

- Modeling: The individual models, including Logistic Regression, Naive Bayes, K-Nearest Neighbors, Decision Trees, Support Vector Machines, and Multi-Layer Perceptrons, were implemented using the scikit-learn library (version 1.7.0).

- Tuning: Hyperparameter optimization was conducted using GridSearchCV from scikit-learn, with cross-validation folds set to .

- Evaluation: Model performance was assessed with accuracy, precision, recall, F1-score, and AUC, using functions from scikit-learn's sklearn.metrics module.

- Ensemble: The best-performing models from the hyperparameter tuning were selected and combined using scikit-learn's StackingClassifier and VotingClassifier classes.

- Deployment: The web application was deployed using the Streamlit framework (version 1.46.0). The models and scalers were loaded with joblib (version 1.5.0), while the visual components were handled with matplotlib.

- Platform: All experiments were executed on a personal HP Victus computer equipped with an AMD Ryzen 7 processor (3.80 GHz), 32 GB of RAM, an Nvidia RTX 4070 GPU, and running Windows OS.

- Code: The source code, including training, validation, and the web interface, is available in the repository: https://github.com/SahidHernandez/Heart-Attack-Risk-Prediction-for-Real-Time-Clinical-Decision-Support (accessed on 7 October 2025).
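The tuning and persistence steps can be sketched as follows. This is a minimal sketch on synthetic data, not the authors' code. The parameter grid is hypothetical, and `cv=5` is an assumption because the number of folds is not given in the text. Wrapping `StandardScaler` and the classifier in a `Pipeline` means the scaler is refit on each training fold. This is consistent with computing normalization parameters from training data only.

```python
import tempfile
from pathlib import Path

import joblib
from sklearn.datasets import make_classification
from sklearn.model_selection import GridSearchCV, train_test_split
from sklearn.pipeline import Pipeline
from sklearn.preprocessing import StandardScaler
from sklearn.svm import SVC

# Hypothetical synthetic data.
X, y = make_classification(n_samples=300, n_features=5, random_state=0)
X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.2, random_state=0)

# The scaler inside the pipeline is refit on each CV training fold, so validation folds never leak into it.
pipe = Pipeline([("scaler", StandardScaler()), ("svm", SVC())])
param_grid = {"svm__C": [0.1, 1, 10], "svm__kernel": ["linear", "rbf"]}  # hypothetical grid
search = GridSearchCV(pipe, param_grid, cv=5, scoring="accuracy")  # cv=5 is an assumption
search.fit(X_train, y_train)
print(search.best_params_, round(search.best_score_, 3))

# Persist the tuned pipeline (scaler + model) so that a front end can reload it.
path = Path(tempfile.mkdtemp()) / "svm_pipeline.joblib"
joblib.dump(search.best_estimator_, str(path))
model = joblib.load(str(path))
print(model.predict(X_test[:5]))
```

Saving the whole pipeline as a single joblib file keeps the scaler and model in sync. Saving them as separate files also works, provided the front end applies the scaler before calling the model.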

5. Results

5.1. Individual and Ensemble Results

- RF, DT, and Voting ensembles: LR, NB, KNN, and MLP.

- XGB and GBC ensembles: LR, NB, KNN, SVM, and MLP.

- LR ensemble: LR, KNN, SVM, and MLP.
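The base-model combinations listed above can be assembled with scikit-learn's `StackingClassifier` and `VotingClassifier`. This is a sketch under several assumptions:

- It uses synthetic data and default (untuned) hyperparameters rather than the tuned models.
- It omits the XGB meta-learner, because that requires the separate xgboost package.
- The dictionary names `BASE` and `CONFIGS`, the `cv=5` setting, and hard voting for ENS-Vot are illustrative choices, not details taken from the study.

```python
from sklearn.datasets import make_classification
from sklearn.ensemble import (GradientBoostingClassifier, RandomForestClassifier,
                              StackingClassifier, VotingClassifier)
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import train_test_split
from sklearn.naive_bayes import GaussianNB
from sklearn.neighbors import KNeighborsClassifier
from sklearn.neural_network import MLPClassifier
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler
from sklearn.svm import SVC
from sklearn.tree import DecisionTreeClassifier

# Base learners with default hyperparameters (hypothetical; the study used tuned ones).
BASE = {
    "LR": LogisticRegression(max_iter=1000),
    "NB": GaussianNB(),
    "KNN": KNeighborsClassifier(),
    "SVM": SVC(),
    "MLP": MLPClassifier(max_iter=1000, random_state=0),
}

# Meta-learner -> (base learners, meta-model), following the combinations listed above.
CONFIGS = {
    "ENS-RF": (["LR", "NB", "KNN", "MLP"], RandomForestClassifier(random_state=0)),
    "ENS-DT": (["LR", "NB", "KNN", "MLP"], DecisionTreeClassifier(random_state=0)),
    "ENS-GBC": (["LR", "NB", "KNN", "SVM", "MLP"], GradientBoostingClassifier(random_state=0)),
    "ENS-LR": (["LR", "KNN", "SVM", "MLP"], LogisticRegression(max_iter=1000)),
}


def base_estimators(names):
    # Each base model gets its own scaler; the ensembles clone these before fitting.
    return [(n, make_pipeline(StandardScaler(), BASE[n])) for n in names]


def build_stacking(names, meta):
    # The meta-learner is trained on out-of-fold predictions of the base models (cv folds).
    return StackingClassifier(estimators=base_estimators(names), final_estimator=meta, cv=5)


X, y = make_classification(n_samples=300, n_features=6, random_state=1)
X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.2, random_state=1)

models = {name: build_stacking(names, meta) for name, (names, meta) in CONFIGS.items()}
models["ENS-Vot"] = VotingClassifier(base_estimators(["LR", "NB", "KNN", "MLP"]))  # hard voting

for name, model in models.items():
    model.fit(X_train, y_train)
    print(name, round(model.score(X_test, y_test), 3))
```

Training the meta-learner on out-of-fold predictions limits the leakage that would occur if it saw base-model predictions on the same rows those models were fitted on.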

5.2. Model Comparison

6. Discussion

7. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

1. WHO. Cardiovascular Diseases (CVDs). 2021. Available online: https://www.who.int/news-room/fact-sheets/detail/cardiovascular-diseases-(cvds) (accessed on 14 May 2025).

2. Alotaibi, A. Ensemble Deep Learning Approaches in Health Care: A Review. Comput. Mater. Contin. 2025, 82, 3741.

3. Topol, E.J. High-performance medicine: The convergence of human and artificial intelligence. Nat. Med. 2019, 25, 44–56.

4. Berdaly, A.; Abdiakhmetova, Z. Predicting heart disease using machine learning algorithms. J. Math. Mech. Comput. Sci. 2022, 115, 101–111.

5. Dritsas, E.; Trigka, M. Application of Deep Learning for Heart Attack Prediction with Explainable Artificial Intelligence. Computers 2024, 13, 244.

6. Dafni Rose, J.; Mohanaprakash, T.; Jeyamohan, H.; Jerusalin Carol, J. Enhancing Cardiovascular Disease Diagnosis through Bioinformatics and Machine Learning. ResearchSquare 2024, 1–25.

7. Doppala, B.P.; Bhattacharyya, D.; Janarthanan, M.; Baik, N. A reliable machine intelligence model for accurate identification of cardiovascular diseases using ensemble techniques. J. Healthc. Eng. 2022, 2022, 2585235.

8. Alizadehsani, R.; Abdar, M.; Roshanzamir, M.; Khosravi, A.; Kebria, P.M.; Khozeimeh, F.; Nahavandi, S.; Sarrafzadegan, N.; Acharya, U.R. Machine learning-based coronary artery disease diagnosis: A comprehensive review. Comput. Biol. Med. 2019, 111, 103346.

9. Brandt, V.; Schoepf, U.J.; Aquino, G.J.; Bekeredjian, R.; Varga-Szemes, A.; Emrich, T.; Bayer, R.R.; Schwarz, F.; Kroencke, T.J.; Tesche, C.; et al. Impact of machine-learning-based coronary computed tomography angiography–derived fractional flow reserve on decision-making in patients with severe aortic stenosis undergoing transcatheter aortic valve replacement. Eur. Radiol. 2022, 32, 6008–6016.

10. Sheakh, M.A.; Tahosin, M.S.; Akter, L.; Jahan, I.; Islam, M.N.; Siddiky, M.R.; Hasan, M.M.; Hasan, S. Comparative analysis of machine learning algorithms for ECG-based heart attack prediction: A study using Bangladeshi patient data. World J. Adv. Res. Rev. 2024, 23, 2572–2584.

11. Alshraideh, M.; Alshraideh, N.; Alshraideh, A.; Alkayed, Y.; Al Trabsheh, Y.; Alshraideh, B. Enhancing heart attack prediction with machine learning: A study at Jordan University Hospital. Appl. Comput. Intell. Soft Comput. 2024, 2024, 5080332.

12. Wang, Y. AI-Based Methods of Cardiovascular Disease Prediction and Analysis. In Proceedings of the International Conference on Engineering Management, Information Technology and Intelligence, Shanghai, China, 14 June 2024; pp. 724–729.

13. Budholiya, K.; Shrivastava, S.K.; Sharma, V. An optimized XGBoost based diagnostic system for effective prediction of heart disease. J. King Saud Univ.-Comput. Inf. Sci. 2022, 34, 4514–4523.

14. Yang, J. The prediction and analysis of heart disease using XGBoost algorithm. Appl. Comput. Eng. 2024, 41, 61–68.

15. Assegie, T.A. Heart disease prediction model with k-nearest neighbor algorithm. Int. J. Inform. Commun. Technol. (IJ-ICT) 2021, 10, 225.

16. Bouqentar, M.A.; Terrada, O.; Hamida, S.; Saleh, S.; Lamrani, D.; Cherradi, B.; Raihani, A. Early heart disease prediction using feature engineering and machine learning algorithms. Heliyon 2024, 10, e38731.

17. Mahajan, P.; Uddin, S.; Hajati, F.; Moni, M.A. Ensemble learning for disease prediction: A review. Healthcare 2023, 11, 1808.

18. Dinh, A.; Miertschin, S.; Young, A.; Mohanty, S.D. A data-driven approach to predicting diabetes and cardiovascular disease with machine learning. BMC Med. Inform. Decis. Mak. 2019, 19, 211.

19. Mienye, I.D.; Sun, Y.; Wang, Z. An improved ensemble learning approach for the prediction of heart disease risk. Inform. Med. Unlocked 2020, 20, 100402.

20. Ali, L.; Niamat, A.; Khan, J.A.; Golilarz, N.A.; Xingzhong, X.; Noor, A.; Nour, R.; Bukhari, S.A.C. An optimized stacked Support Vector Machines based expert system for the effective prediction of heart failure. IEEE Access 2019, 7, 54007–54014.

21. Karadeniz, T.; Tokdemir, G.; Maraş, H.H. Ensemble methods for heart disease prediction. New Gener. Comput. 2021, 39, 569–581.

22. Almulihi, A.; Saleh, H.; Hussien, A.M.; Mostafa, S.; El-Sappagh, S.; Alnowaiser, K.; Ali, A.A.; Refaat Hassan, M. Ensemble learning based on hybrid deep learning model for heart disease early prediction. Diagnostics 2022, 12, 3215.

23. Tiwari, A.; Chugh, A.; Sharma, A. Ensemble framework for cardiovascular disease prediction. Comput. Biol. Med. 2022, 146, 105624.

24. Islam, M.N.; Raiyan, K.R.; Mitra, S.; Mannan, M.R.; Tasnim, T.; Putul, A.O.; Mandol, A.B. Predictis: An IoT and machine learning-based system to predict risk level of cardio-vascular diseases. BMC Health Serv. Res. 2023, 23, 171.

25. Ganesan, M.; Sivakumar, N. IoT based heart disease prediction and diagnosis model for healthcare using machine learning models. In Proceedings of the 2019 IEEE International Conference on System, Computation, Automation and Networking (ICSCAN), Pondicherry, India, 29–30 March 2019; pp. 1–5.

26. Gupta, A.; Yadav, S.; Shahid, S.; U, V. HeartCare: IoT Based Heart Disease Prediction System. In Proceedings of the 2019 International Conference on Information Technology (ICIT), Shanghai, China, 20–23 December 2019; pp. 88–93.

27. Ahdal, A.A.; Rakhra, M.; Badotra, S.; Fadhaeel, T. An integrated Machine Learning Techniques for Accurate Heart Disease Prediction. In Proceedings of the 2022 International Mobile and Embedded Technology Conference (MECON), Noida, India, 10–11 March 2022; pp. 594–598.

28. Nancy, A.A.; Ravindran, D.; Raj Vincent, P.M.D.; Srinivasan, K.; Gutierrez Reina, D. IoT-Cloud-Based Smart Healthcare Monitoring System for Heart Disease Prediction via Deep Learning. Electronics 2022, 11, 2292.

29. Velmurugan, A.; Padmanaban, K.; Kumar, A.S.; Azath, H.; Subbiah, M. Machine learning IoT based framework for analysing heart disease prediction. AIP Conf. Proc. 2023, 2523, 020038.

30. Desai, F.; Chowdhury, D.; Kaur, R.; Peeters, M.; Arya, R.C.; Wander, G.S.; Gill, S.S.; Buyya, R. HealthCloud: A system for monitoring health status of heart patients using machine learning and cloud computing. Internet Things 2022, 17, 100485.

31. Golec, M.; Gill, S.S.; Parlikad, A.K.; Uhlig, S. HealthFaaS: AI-Based Smart Healthcare System for Heart Patients Using Serverless Computing. IEEE Internet Things J. 2023, 10, 18469–18476.

32. Shrestha, R.; Chatterjee, J.M. Heart disease prediction system using machine learning. LBEF Res. J. Sci. Technol. Manag. 2019, 1, 115–132.

33. Effati, S.; Kamarzardi-Torghabe, A.; Azizi-Froutaghe, F.; Atighi, I.; Ghiasi-Hafez, S. Web application using machine learning to predict cardiovascular disease and hypertension in mine workers. Sci. Rep. 2024, 14, 31662.

34. Nashif, S.; Raihan, M.R.; Islam, M.R.; Imam, M.H. Heart disease detection by using machine learning algorithms and a real-time cardiovascular health monitoring system. World J. Eng. Technol. 2018, 6, 854–873.

35. Rashid, T.A.; Hassan, B. Heart Attack Dataset. Mendeley Data, V1. 2022. Available online: https://data.mendeley.com/datasets/wmhctcrt5v/1 (accessed on 7 October 2025).

36. Zaganjori, J. Heart Attack Prediction - Analyzing Key Factors for Predicting Heart Disease Risk. 2024. Available online: https://www.kaggle.com/datasets/juledz/heart-attack-prediction (accessed on 5 June 2025).

37. Chicco, D.; Jurman, G. Machine learning can predict survival of patients with heart failure from serum creatinine and ejection fraction alone. BMC Med. Inform. Decis. Mak. 2020, 20, 16.

38. El-Morr, C.; Jammal, M.; Ali-Hassan, H.; EI-Hallak, W. Machine Learning for Practical Decision Making; International Series in Operations Research & Management Science; Springer International Publishing: Cham, Switzerland, 2022.

39. Dash, C.S.K.; Behera, A.K.; Dehuri, S.; Ghosh, A. An outliers detection and elimination framework in classification task of data mining. Decis. Anal. J. 2023, 6, 100164.

40. Wang, Q.; Shrestha, D.L.; Robertson, D.; Pokhrel, P. A log-sinh transformation for data normalization and variance stabilization. Water Resour. Res. 2012, 48, 1–7.

41. Ali, M.M.; Islam, M.S.; Uddin, M.N.; Uddin, M.A. A conceptual IoT framework based on Anova-F feature selection for chronic kidney disease detection using deep learning approach. Intell.-Based Med. 2024, 10, 100170.

42. Zhang, S.; Li, J. KNN classification with one-step computation. IEEE Trans. Knowl. Data Eng. 2021, 35, 2711–2723.

43. Guido, R.; Ferrisi, S.; Lofaro, D.; Conforti, D. An overview on the advancements of support vector machine models in healthcare applications: A review. Information 2024, 15, 235.

44. Helmud, E.; Fitriyani, F.; Romadiana, P. Classification comparison performance of supervised machine learning Random Forest and Decision Tree algorithms using confusion matrix. J. Sisfokom (Sistem Inf. Dan Komput.) 2024, 13, 92–97.

45. Rashedi, K.A.; Ismail, M.T.; Al Wadi, S.; Serroukh, A.; Alshammari, T.S.; Jaber, J.J. Multi-layer perceptron-based classification with application to outlier detection in Saudi Arabia stock returns. J. Risk Financ. Manag. 2024, 17, 69.

46. Ambrish, G.; Ganesh, B.; Ganesh, A.; Srinivas, C.; Dhanraj; Mensinkal, K. Logistic regression technique for prediction of cardiovascular disease. Glob. Transitions Proc. 2022, 3, 127–130.

47. Veziroğlu, M.; Veziroğlu, E.; Bucak, İ.Ö. Performance comparison between Naive Bayes and machine learning algorithms for news classification. In Bayesian Inference-Recent Trends; IntechOpen: London, UK, 2024.

48. Géron, A. Hands-on Machine Learning with Scikit-Learn, Keras, and TensorFlow; O’Reilly Media, Inc.: Sebastopol, CA, USA, 2022.

49. Shukla, S.S.P.; Singh, M.P. Exploring ensemble optimized voting and stacking classifiers through Cross-validation for early detection of suicidal ideation. J. Intell. Fuzzy Syst. 2024, 47, 335–349.

50. Nti, I.K.; Adekoya, A.F.; Weyori, B.A. A comprehensive evaluation of ensemble learning for stock-market prediction. J. Big Data 2020, 7, 20.

51. Richards, T. Streamlit for Data Science: Create Interactive Data Apps in Python; Packt Publishing Ltd.: Birmingham, UK, 2023.

52. Vujović, Ž. Classification model evaluation metrics. Int. J. Adv. Comput. Sci. Appl. 2021, 12, 599–606.

53. Anshori, M.; Haris, M.S. Predicting heart disease using Logistic Regression. Knowl. Eng. Data Sci. (KEDS) 2022, 5, 188–196.

54. Abubaker, H.; Singh, J.; Muchtar, F.; Fattah, S. An Ensemble-Based Extra Feature Selection Approach for Predicting Heart Disease. In Proceedings of the International Conference on Recent Innovations in Computing, Jammu, India, 26–27 October 2023; Springer: Berlin/Heidelberg, Germany, 2023; pp. 551–563.

55. Sharma, N.; Lalwani, P. Increasing the reliability of Heart Disease classification models using Class Balancing techniques with Feature Engineering. ResearchSquare 2023.

56. Tunç, Z.; Çiçek, İ.B.; Güldoğan, E.; Çolak, C. Assessment of associative classification approach for predicting mortality by heart failure. J. Cogn. Syst. 2020, 5, 41–45.

57. Song, C.; Shi, X. ReActHE: A homomorphic encryption friendly deep neural network for privacy-preserving biomedical prediction. Smart Health 2024, 32, 100469.

58. Mohammed, A.; Kora, R. A comprehensive review on ensemble deep learning: Opportunities and challenges. J. King Saud Univ.-Comput. Inf. Sci. 2023, 35, 757–774.

59. Elo, G.; Ghansah, B.; Kwaa-Aidoo, E. Critical Review of Stack Ensemble Classifier for the Prediction of Young Adults’ Voting Patterns Based on Parents’ Political Affiliations. Informing Sci. 2024, 27.

60. Jeffares, A.; Liu, T.; Crabbé, J.; van der Schaar, M. Joint Training of Deep Ensembles Fails Due to Learner Collusion. In Proceedings of the Advances in Neural Information Processing Systems; Oh, A., Naumann, T., Globerson, A., Saenko, K., Hardt, M., Levine, S., Eds.; Curran Associates, Inc.: Red Hook, NY, USA, 2023; Volume 36, pp. 13559–13589.

| Age | Gender | Heart Rate | Systolic Blood Pressure | Diastolic Blood Pressure | Blood Sugar | CK-MB | Troponin | Result |
|---|---|---|---|---|---|---|---|---|
| 64 | 1 | 66 | 160 | 83 | 160 | 1.8 | 0.012 | negative |
| 21 | 1 | 94 | 98 | 46 | 296 | 6.75 | 1.06 | positive |
| 55 | 1 | 64 | 160 | 77 | 270 | 1.99 | 0.003 | negative |
| 64 | 1 | 70 | 120 | 55 | 270 | 13.87 | 0.122 | positive |
| 55 | 1 | 64 | 112 | 65 | 300 | 1.08 | 0.003 | negative |

| Age | Sex | Cp | Trestbps | Chol | fbs | Restecg | Thalach | Exang | Oldpeak | Slope | Ca | Thal | Target |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 52 | 1 | 0 | 125 | 212 | 0 | 1 | 168 | 0 | 1 | 2 | 2 | 3 | 0.23 |
| 53 | 1 | 0 | 140 | 203 | 1 | 0 | 155 | 1 | 3.1 | 0 | 0 | 3 | 0.37 |
| 70 | 1 | 0 | 145 | 174 | 0 | 1 | 125 | 1 | 2.6 | 0 | 0 | 3 | 0.24 |
| 61 | 1 | 0 | 148 | 203 | 0 | 1 | 161 | 0 | 0 | 2 | 1 | 3 | 0.28 |
| 62 | 0 | 0 | 138 | 294 | 1 | 1 | 106 | 0 | 1.9 | 1 | 3 | 2 | 0.21 |

| Age | Anemia | Creatinine Phosphokinase | Diabetes | Ejection Fraction | High Blood Pressure | Platelets | Serum Creatinine | Serum Sodium | Sex | Smoking | Time | Death Event |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 75 | 0 | 582 | 0 | 20 | 1 | 265,000 | 1.9 | 130 | 1 | 0 | 4 | 1 |
| 55 | 0 | 7861 | 0 | 38 | 0 | 263,358 | 1.1 | 136 | 1 | 0 | 6 | 1 |
| 65 | 0 | 146 | 0 | 20 | 0 | 162,000 | 1.3 | 129 | 1 | 1 | 7 | 1 |
| 50 | 1 | 111 | 0 | 20 | 0 | 210,000 | 1.9 | 137 | 1 | 0 | 7 | 1 |
| 65 | 1 | 160 | 1 | 20 | 0 | 327,000 | 2.7 | 116 | 0 | 0 | 8 | 1 |

| Model | Precision | | Recall | | F1-Score | | Accuracy | | AUC-ROC | |
|---|---|---|---|---|---|---|---|---|---|---|
| LR | 0.929 | 0.901 | 0.866 | 0.898 | 0.896 | 0.898 | 0.877 | 0.898 | 0.943 | 0.958 |
| NB | 0.979 | 0.837 | 0.654 | 0.735 | 0.784 | 0.733 | 0.779 | 0.735 | 0.889 | 0.898 |
| KNN | 1.000 | 0.845 | 1.000 | 0.814 | 1.000 | 0.817 | 1.000 | 0.814 | 1.000 | 0.913 |
| SVM | 0.989 | 0.949 | 0.961 | 0.947 | 0.975 | 0.947 | 0.970 | 0.947 | 0.996 | 0.962 |
| DT | 0.994 | 0.989 | 0.995 | 0.989 | 0.995 | 0.989 | 0.993 | 0.989 | 0.999 | 0.989 |
| MLP | 0.997 | 0.959 | 0.972 | 0.958 | 0.984 | 0.959 | 0.981 | 0.958 | 0.997 | 0.981 |
| ENS-XGB | 0.992 | 0.977 | 0.992 | 0.977 | 0.992 | 0.977 | 0.992 | 0.977 | 0.999 | 0.984 |
| ENS-DT | 0.993 | 0.978 | 0.993 | 0.977 | 0.993 | 0.977 | 0.993 | 0.977 | 0.994 | 0.972 |
| ENS-LR | 0.994 | 0.981 | 0.994 | 0.981 | 0.994 | 0.981 | 0.994 | 0.981 | 1.000 | 0.981 |
| ENS-RF | 0.995 | 0.989 | 0.995 | 0.989 | 0.995 | 0.989 | 0.995 | 0.989 | 1.000 | 0.989 |
| ENS-Vot | 0.996 | 0.967 | 0.996 | 0.966 | 0.996 | 0.966 | 0.996 | 0.966 | 1.000 | 0.987 |
| ENS-GBC | 0.994 | 0.989 | 0.994 | 0.989 | 0.994 | 0.989 | 0.994 | 0.989 | 0.999 | 0.981 |

| Model | Precision | | Recall | | F1-Score | | Accuracy | | AUC-ROC | |
|---|---|---|---|---|---|---|---|---|---|---|
| LR | 0.812 | 0.778 | 0.892 | 0.776 | 0.850 | 0.775 | 0.837 | 0.776 | 0.888 | 0.865 |
| NB | 0.798 | 0.771 | 0.838 | 0.771 | 0.817 | 0.771 | 0.806 | 0.771 | 0.868 | 0.847 |
| KNN | 0.995 | 0.976 | 1.000 | 0.976 | 0.998 | 0.976 | 0.998 | 0.976 | 1.000 | 0.999 |
| SVM | 0.974 | 0.951 | 0.986 | 0.951 | 0.980 | 0.951 | 0.979 | 0.951 | 0.995 | 0.974 |
| DT | 0.995 | 0.976 | 1.000 | 0.976 | 0.998 | 0.976 | 0.998 | 0.976 | 1.000 | 0.985 |
| MLP | 0.954 | 0.946 | 0.976 | 0.946 | 0.965 | 0.946 | 0.963 | 0.946 | 0.995 | 0.989 |
| ENS-XGB | 0.995 | 0.986 | 0.995 | 0.985 | 0.995 | 0.985 | 0.995 | 0.985 | 1.000 | 0.985 |
| ENS-DT | 0.995 | 0.986 | 0.995 | 0.985 | 0.995 | 0.985 | 0.995 | 0.985 | 0.995 | 0.985 |
| ENS-LR | 0.998 | 0.976 | 0.998 | 0.976 | 0.998 | 0.976 | 0.998 | 0.976 | 1.000 | 0.995 |
| ENS-RF | 0.998 | 0.962 | 0.998 | 0.961 | 0.998 | 0.961 | 0.998 | 0.961 | 1.000 | 0.999 |
| ENS-Vot | 0.998 | 0.976 | 0.998 | 0.976 | 0.998 | 0.976 | 0.998 | 0.976 | 1.000 | 0.999 |
| ENS-GBC | 0.998 | 0.976 | 0.998 | 0.976 | 0.998 | 0.976 | 0.998 | 0.976 | 1.000 | 0.980 |

| Model | Precision | | Recall | | F1-Score | | Accuracy | | AUC-ROC | |
|---|---|---|---|---|---|---|---|---|---|---|
| LR | 0.785 | 0.882 | 0.646 | 0.883 | 0.708 | 0.882 | 0.824 | 0.883 | 0.880 | 0.948 |
| NB | 0.746 | 0.848 | 0.633 | 0.850 | 0.685 | 0.849 | 0.808 | 0.850 | 0.875 | 0.938 |
| KNN | 0.736 | 0.841 | 0.810 | 0.833 | 0.771 | 0.836 | 0.841 | 0.833 | 0.913 | 0.927 |
| SVM | 0.714 | 0.846 | 0.886 | 0.800 | 0.791 | 0.809 | 0.845 | 0.800 | 0.920 | 0.930 |
| DT | 0.695 | 0.850 | 0.924 | 0.767 | 0.793 | 0.778 | 0.841 | 0.767 | 0.944 | 0.888 |
| MLP | 0.915 | 0.873 | 0.823 | 0.867 | 0.867 | 0.869 | 0.916 | 0.867 | 0.967 | 0.947 |
| ENS-XGB | 0.873 | 0.785 | 0.848 | 0.838 | 0.860 | 0.810 | 0.909 | 0.888 | 0.968 | 0.931 |
| ENS-DT | 0.806 | 0.730 | 0.755 | 0.776 | 0.774 | 0.744 | 0.855 | 0.848 | 0.888 | 0.883 |
| ENS-LR | 0.826 | 0.722 | 0.725 | 0.764 | 0.772 | 0.742 | 0.858 | 0.850 | 0.940 | 0.929 |
| ENS-RF | 0.872 | 0.826 | 0.798 | 0.833 | 0.833 | 0.829 | 0.894 | 0.902 | 0.963 | 0.963 |
| ENS-Vot | 0.759 | 0.748 | 0.735 | 0.705 | 0.746 | 0.705 | 0.835 | 0.849 | 0.917 | 0.937 |
| ENS-GBC | 0.844 | 0.746 | 0.778 | 0.836 | 0.810 | 0.788 | 0.879 | 0.872 | 0.959 | 0.932 |

| Dataset | Method Used | Author | Accuracy (%) | AUC (%) |
|---|---|---|---|---|
| 1 | LR Classifier | [53] | 81.0 | 89.3 |
| 1 | Extra Trees | [54] | 97.0 | 97.0 |
| 1 | Ensemble-Voting (LR, ADB, XGB, RF) | [55] | 98.0 | 98.0 |
| 1 | Ensemble-Stacking-RF (LR, KNN, SVM, DT, MLP) | Proposed Model | 98.9 | 98.9 |
| 2 | KNN Classifier | [15] | 93.0 | – |
| 2 | MLP | [54] | 85.0 | 85.0 |
| 2 | XGB Classifier | [14] | 88.0 | – |
| 2 | Ensemble-Stacking-DT (KNN, SVM, DT, MLP) | Proposed Model | 98.5 | 98.5 |
| 3 | Logistic Regression | [37] | 83.8 | 82.2 |
| 3 | Associative Classification | [56] | 86.6 | – |
| 3 | Homomorphic encryption Deep Learning | [57] | 91.1 | 89.7 |
| 3 | Ensemble-Stacking-RF (LR, NB, KNN, MLP) | Proposed Model | 90.2 | 96.3 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Nava-Martinez, B.N.; Hernandez-Hernandez, S.S.; Rodriguez-Ramirez, D.A.; Martinez-Rodriguez, J.L.; Rios-Alvarado, A.B.; Diaz-Manriquez, A.; Martinez-Angulo, J.R.; Guerrero-Melendez, T.Y. Heart Attack Risk Prediction via Stacked Ensemble Metamodeling: A Machine Learning Framework for Real-Time Clinical Decision Support. Informatics 2025, 12, 110. https://doi.org/10.3390/informatics12040110