1. Introduction

Several crucial improvements in neural networks have been made in recent years. One of them is the attention mechanism, which has significantly improved classification and regression models in many machine learning areas, including the natural language processing models, computer vision, etc. [

1,

2,

3,

4,

5]. The idea behind the attention mechanism is to assign weights to features or examples in accordance with their importance and their impact on the model predictions. The attention weights are learned by incorporating an additional feedforward neural network within a neural network architecture. Additionally, the success of the attention models as components of the neural network motivates one to extend this approach to other machine learning models different from neural networks, for example, to random forests (RFs) [

6]. Following this idea, a new model called the attention-based random forest (ABRF) has been developed [

7,

8]. This model incorporates the attention mechanism into ensemble-based models such as RFs and the gradient boosting machine [

9,

10]. The ABRF models stem from the interesting interpretation [

1,

11] of the attention mechanism through the Nadaraya–Watson kernel regression model [

12,

13]. The Nadaraya–Watson regression model learns a non-linear function by using a weighted average of data using a specific normalized kernel as a weighting function. A detailed description of the model can be found in

Section 3. According to [

7,

8], attention weights in the Nadaraya–Watson regression are assigned to decision trees in an RF depending on examples which fall into leaves of trees. Weights in ABRF have trainable parameters and use Huber’s

-contamination model [

14] for defining the attention weights. Huber’s

-contamination model can be regarded as a set of convex combinations of probability distributions, where one of the distributions is considered as a set of trainable attention parameters. A detailed description of the model is provided in

Section 5.1. In accordance with the

-contamination model, each attention weight consists of two parts: the softmax operation with the tuning coefficient

and the trainable bias of the softmax weight with coefficient

. One of the improvements of ABRF, which has been proposed in [

15], is based on joint incorporating self-attention and attention mechanisms into the RF. The proposed models outperform ABRF, but this outperformance is not sufficient, because this model provided inferior results for several datasets. Therefore, we propose a set of models which can be regarded as extensions of ABRF and which are based on two main ideas.

The first idea is to introduce a two-level attention, where one of the levels is the “leaf” attention, i.e., the attention mechanism is applied to every leaf of a tree. As a result, we obtain the attention weights assigned to leaves and the attention weights assigned to trees. The attention weights of trees depend on the corresponding weights of leaves which belong to these trees. In other words, the attention at the second level depends on the attention at the first level, i.e., we obtain the attention of the attention. Due to the “leaf” attention, the proposed model will be abbreviated as LARF (leaf attention-based random forest).

One of the peculiarities of LARFs is using a mixture of Huber’s

-contamination models instead of the single contamination model, as has been conducted in ABRF. This peculiarity stems from the second idea behind the model, which takes into account the softmax operation with different parameters. In fact, we replace the standard softmax operation by the weighted sum of the softmax operations with different parameters. With this idea, we achieve two goals. First of all, we partially solve the problem of the tuning parameters of the softmax operations which are a part of the attention operations. Each value of the tuning parameter from the predefined set (from the predefined grid) is used in a separate softmax operation. Then, weights of the softmax operations in the sum are trained jointly while training other parameters. This approach can also be interpreted as the linear approximation of the softmax operations with trainable weights and with different values of tuning parameters. However, a more interesting goal is that some analogs of the multi-head attention [

16] are implemented by using the mixture of contamination models, where “heads” are defined by selecting a value of the corresponding softmax operation parameter.

Additionally, in contrast to ABRF [

8], where the contamination parameter

of Huber’s model was a tuning parameter, the LARF model considers this parameter as the training one. This allows us to significantly reduce the model tuning time and avoid the enumeration of the parameter values in accordance with the grid. The same is implemented for the mixture of the Huber’s models.

Different configurations of LARF produce a set of models, which depend on trainable and tuning parameters of the two-level attention and on algorithms for their calculation.

We investigate two types of RFs in the experiments: original RFs and Extremely Randomized Trees (ERT) [

17]. According to [

17], the ERT algorithm chooses a split point randomly for each feature at each node and then selects the best split among these. In contrast to ERTs, original RFs choose the most optimal (not random) split of a set of features at each node in accordance with a criterion, for example, with the Gini impurity [

6].

Our contributions can be summarized as follows:

We propose new two-level attention-based RF models, where the attention mechanism at the first level is applied to every leaf of trees, the attention at the second level incorporates the “leaf” attention, and it is applied to trees. The training of the two-level attention is reduced to solving the standard quadratic optimization problem.

A mixture of Huber’s -contamination models is used to implement the attention mechanism at the second level. The mixture allows us to replace a set of tuning attention parameters (the temperature parameters of the softmax operations) with trainable parameters, whose optimal values are computed by solving the quadratic optimization problem. Moreover, this approach can be regarded as an analog of the multi-head attention.

We propose an approach to convert the tuning contamination parameters ( parameters) in the mixture of the -contamination models into trainable parameters. Their optimal values are also computed by solving the quadratic optimization problem.

Many numerical experiments with real datasets are performed for studying LARFs. They demonstrate outperforming results of some LARF modifications. The code of the proposed algorithms can be found at

https://github.com/andruekonst/leaf-attention-forest (accessed on 20 April 2023).

This paper is organized as follows. The related work can be found in

Section 2. A brief introduction to the attention mechanism as the Nadaraya–Watson kernel regression is given in

Section 3. A general approach to incorporating the two-level attention mechanism into the RF is provided in

Section 4. Ways for the implementation of the two-level attention mechanism and constructing several attention-based models by using the mixture of Huber’s e-contamination models are considered in

Section 5. Numerical experiments with real data, which illustrate properties of the proposed models, are provided in

Section 6. The concluding remarks can be found in

Section 7.

4. Two-Level Attention-Based Random Forest

One of the powerful machine learning models handling tabular data is the RF, which can be regarded as an ensemble of T decision trees so that each tree is trained on a subset of examples randomly selected from the training set. In the original RF, the final RF prediction for the testing example is determined by averaging predictions obtained for all trees.

Let

be the index set of examples which fall into the same leaf in the

k-th tree as

. One of the ways to construct the attention-based RF is to introduce the mean vector

defined as the mean of the training vectors

which fall into the same leaf as

. However, this simple definition can be extended by incorporating the Nadaraya–Watson regression into the leaf. In this case, we can write

where

is the attention weight in accordance with the Nadaraya–Watson kernel regression.

In fact, (

4) can be regarded as the self-attention. The idea behind (

4) is that we find the mean value of

by assigning weights to training examples which fall into the corresponding leaf in accordance with their vicinity to the vector

.

In the same way, we can define the mean value of regression outputs corresponding to examples falling into the same leaf as

:

Expression (

5) can be regarded as the attention. The idea behind (

5) is to obtain the prediction provided by the corresponding leaf by using the standard attention mechanism or Nadaraya–Watson regression. In other words, we weigh predictions provided by the

k-th leaf of a tree in accordance with the distance between the feature vector

, which falls into the

k-th leaf, and all the feature vectors

which fall into the same leaf. It should be noted that the original regression tree provides the averaged prediction; i.e., it corresponds to the case when all

are identical for all

and equal to

.

We suppose that the attention mechanisms used above are non-parametric. This implies that weights do not have trainable parameters. It is assumed that

The “leaf” attention introduced above can be regarded as the first-level attention in a hierarchy of the attention mechanisms. It characterizes how the feature vector fits the corresponding tree.

If we suppose that the whole RF consists of

T decision trees, then, the set of

,

, in the framework of the attention mechanism, can be regarded as a set of keys for every

, the set of

,

, can be regarded as a set of values. This implies that the final prediction

of the RF can be computed by using the Nadaraya–Watson regression, namely,

Here,

is the attention weight with the vector

of trainable parameters which belong to a set

so that they are assigned to each tree. The attention weight

is defined by the distance between

and

. It is assumed due to properties of the attention weights in the Nadaraya–Watson regression that the following condition is valid:

The above “random forest” attention can be regarded as the second-level attention which assigns weights to trees in accordance with their impact on the RF prediction corresponding to .

The main idea behind the approach is to use the above attention mechanisms jointly. After substituting (

4) and (

5) into (

7), we obtain

or

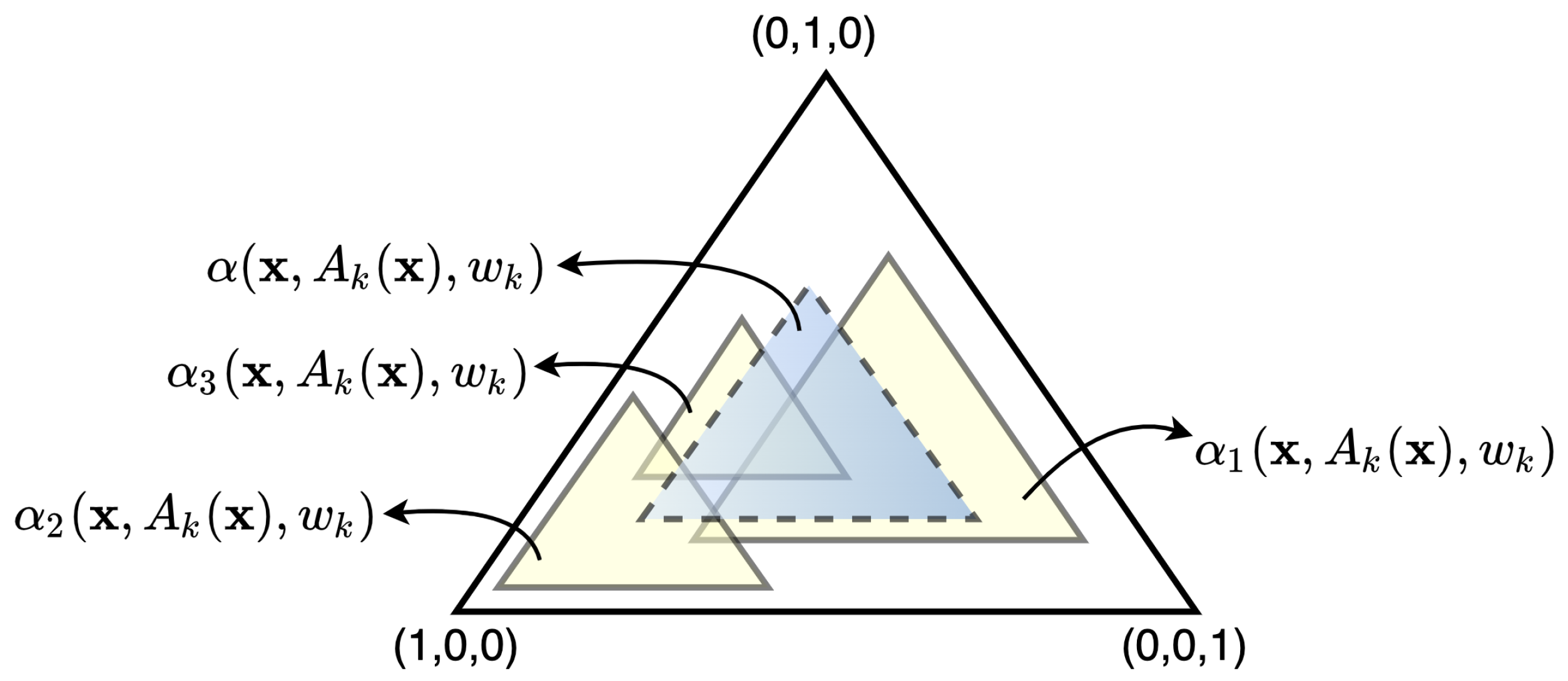

A scheme of the two-level attention is shown in

Figure 1. It is observed from

Figure 1 how the attention at the second level depends on the “leaf” attention at the first level.

In total, we obtain the trainable attention-based RF with parameters

, which are defined by minimizing the expected loss function over the set

of parameters, respectively, as follows:

The loss function can be rewritten as the following:

The optimal trainable parameters

are computed depending on forms of the attention weights

in the optimization problem (

12). It should be noted that the problem (

12) may be complex from the computation point of view. Therefore, one of our results is to propose such a form of the attention weights

that reduces the problem (

12) to a convex quadratic optimization problem, whose solution does not meet any difficulties.

It is important to point out that the additional sets of trainable parameters can be introduced into the definition of the attention weights

. On the one hand, we obtain more flexible attention mechanisms in this case due to the parametrization of the training weights

. On the other hand, many trainable parameters lead to the increasing complexity of the optimization problem (

11) and the possible overfitting of the whole RF.

6. Numerical Experiments

Let us introduce notations for different models of the attention-based RFs.

RF (ERT): the original RF (the ERT) without applying attention mechanisms;

ARF (LARF): the attention-based forest which has the following modifications: -ARF, -LARF, -w-ARF, and -w-LARF.

In all experiments, RFs as well as ERTs consist of 100 trees. To select the best tuning parameters in numerical experiments, a 3-fold cross-validation on the training set consisting of examples with 100 repetitions is performed. The search for the best parameter is carried out by considering all its values in a predefined grid. A cross-validation procedure is subsequently used to select their appropriate values. The testing set for computing the accuracy measures is comprised of examples. In order to obtain desirable estimates of the vectors and , all trees in the experiments are trained so that at least 10 examples fall into every leaf of a tree. Value 10 for the number of examples is taken for two reasons. On the one hand, we have to compute the mean vectors and and to obtain unbiased estimators. On the other hand, it is difficult to expect that a large number of examples will fall into every leaf by a small number of training examples. Therefore, our prior experiments have demonstrated that this parameter should be 10. We also use all features at each split of decision trees.

We do not consider models -ARF, -ARF, -LARF, and -LARF, because they can be regarded as special cases of the corresponding models M-ARF, --ARF, M-LARF, and --LARF when . The value of M is an integer tuning parameter, and it is tuned in the interval from 1 to 20. Set of the softmax operation parameters is defined as . In particular, if , then the set of consists of one element . The parameter of the first-level attention in the “leaf” is taken equal to 1.

Numerical results are presented in tables where the best results are shown in bold. The coefficient of determination denoted and the mean absolute error (MAE) are used for the regression evaluation. The greater value of the coefficient of determination and the smaller MAE we have, the better results we achieve.

The proposed approach is studied by applying datasets which are taken from open sources. The dataset Diabetes is downloaded from the R Packages; datasets Friedman 1, 2 and 3 are retrieved from the site:

https://www.stat.berkeley.edu/~breiman/bagging.pdf (accessed on 20 April 2023); datasets Regression and Sparse are taken from package “Scikit-Learn”; datasets Wine Red, Boston Housing, Concrete, Yacht Hydrodynamics, Airfoil can be found in the UCI Machine Learning Repository [

44]. These datasets with their numbers of features

m and numbers of examples

n are given in

Table 2. A more detailed information can be found from the aforementioned data resources.

Values of the measure

for several models, including RF,

-

-ARF,

-

-LARF,

-ARF, and

-LARF, are shown in

Table 3. The results are obtained by training the RF. The optimal values of

are also given in the table. It can be observed from

Table 3 that

-

-LARF outperforms all models for most datasets. Moreover,

Table 3 shows that the two-level attention models (

-

-LARF and

-LARF) provide better results than models which do not use the “leaf” attention (

-

-ARF and

-ARF). It should be also noted that all attention-based models outperform the original RF. The same relationship between the models occurs for another accuracy measure (MAE). It is clearly shown in

Table 4.

Another important question is how the attention-based models perform when the ERT is used. The corresponding values of

and MAE are shown in

Table 5 and

Table 6, respectively.

Table 5 also contains the optimal values

. In contrast to the case of using the RF, it can be observed from the tables that

-LARF outperforms

-

-LARF for several models. It can be explained by reducing the accuracy due to a larger number of training parameters (parameters

) and overfitting for small datasets. It is also worth noting that models based on ERTs provide better results than models based on RFs. However, this improvement is not significant. This is observed in

Table 7, where the best results are collected for models based on ERTs and RFs.

Table 7 shows that the results are identical for several datasets, namely, for datasets Friedman 1, 2, 3, Concrete, and Yacht. If one is to apply the

t-test to compare the values of

obtained for two models, then, according to [

45], the

t-statistics is distributed in accordance with the Student distribution with the

degrees of freedom (11 datasets). The obtained

p-value is

. We can conclude that the outperformance of the ERT is not statistically significant because

.

We take the number of trees in RFs equal to 100, because our goal is to compare RFs and the proposed modifications of LARF with the same parameters of numerical experiments. We also study how values of

depend on different numbers of decision trees for the considered datasets. The corresponding numerical results for the RF and the ERT are shown in

Table 8 and

Table 9, respectively, by 100, 400, 700, and 1000 trees. It can be observed in

Table 8 and

Table 9 that

insignificantly increases with the number of trees. However, it is important to point out that the largest values of

for RFs and ERTs obtained by

do not exceed the values of

for LARF modifications presented in

Table 3,

Table 4,

Table 5 and

Table 6.

It should be pointed out that the proposed models can be regarded as extensions of the attention-based RF (

-ABRF) presented in [

8]. Therefore, it is also worth comparing the two-level attention models with

-ABRF.

Table 10 shows the values of

obtained by using

-ABRF and the best values of the proposed models when the RF and the ERT are used.

If we compare the results presented in

Table 10 by applying the

t-tests in accordance with [

45], then tests for the proposed models and

-ABRF based on the RF and the ERT provide

p-values equal to

and

, respectively. The tests demonstrate the clear outperformance of the proposed models in comparison with

-ABRF.

It is obvious that the tuning parameters of the proposed modifications, for example, , are not optimal due to the validation procedure and due to the grid of values used in experiments. However, we can observe from the numerical results that the proposed modifications outperform RFs or -ABRF even with suboptimal values of the tuning parameters.

_Bryant.png)