1. Introduction

The theory of information-based asset pricing proposed by Brody et al. (2007, 2008a, 2008b) and Macrina (2006) is concerned with the determination of the price processes of financial assets from first principles. In particular, the market filtration is constructed explicitly, rather than simply assumed, as it is in traditional approaches. The simplest version of the model is as follows. We fix a probability space $(\Omega, \mathcal{F}, \mathbb{P})$. An asset delivers a single random cash flow $H_T$ at some specified time $T > 0$, where time 0 denotes the present. The cash flow is a function of a random variable $X_T$, which we can think of as a “market factor” that is in some sense revealed at time $T$. In the general situation there will be many factors and many cash flows, but for the present we assume that there is a single factor $X_T : \Omega \to \mathbb{R}$ such that the sole cash flow at time $T$ is given by $H_T = h(X_T)$ for some Borel function $h : \mathbb{R} \to \mathbb{R}^+$. For simplicity we assume that interest rates are constant and that $\mathbb{P}$ is the risk-neutral measure. We require that $H_T$ should be integrable. Under these assumptions, the value of the asset at time 0 is

$$S_0 = e^{-rT}\, \mathbb{E}[h(X_T)], \tag{1}$$

where $\mathbb{E}$ denotes expectation under $\mathbb{P}$ and $r$ is the short rate. Since the single “dividend” is paid at time $T$, the value of the asset at any time $t \geq 0$ is of the form

$$S_t = e^{-r(T-t)}\, \mathbb{1}_{\{t<T\}}\, \mathbb{E}[h(X_T) \mid \mathcal{F}_t], \tag{2}$$

where $\{\mathcal{F}_t\}_{t \geq 0}$ is the market filtration. The task now is to model the filtration, and this will be done explicitly.
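As a small illustration of the time-0 valuation described above, the following sketch computes a discounted risk-neutral expectation of a payoff for a market factor with finitely many outcomes. The function name `time_zero_value` and the numerical inputs are hypothetical choices made for this example only.

```python
import math

def time_zero_value(outcomes, probs, payoff, r, T):
    """Discounted expectation exp(-r*T) * E[h(X_T)] for a discrete factor.

    outcomes, probs: possible values of the market factor and their
    risk-neutral probabilities (illustrative inputs, not from the paper).
    payoff: the Borel function h applied to the factor.
    """
    expected_payoff = sum(p * payoff(x) for x, p in zip(outcomes, probs))
    return math.exp(-r * T) * expected_payoff

# Hypothetical binary factor: the asset pays 1 with probability 0.9, else 0.
s0 = time_zero_value([0.0, 1.0], [0.1, 0.9], lambda x: x, r=0.05, T=2.0)
print(round(s0, 6))
```

For times between 0 and $T$ the same expectation is taken conditionally on the market filtration, which is the object constructed in the remainder of the paper.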

In traditional financial modelling, the filtration is usually taken to be fixed in advance. For example, in the widely-applied Brownian-motion-driven model for financial markets, the filtration is generated by an n-dimensional Brownian motion. A detailed account of the Brownian framework can be found, for example, in Karatzas and Shreve (1998). In the information-based approach, however, we do not assume the filtration to be given a priori. Instead, the filtration is constructed in a way that specifically takes into account the structures of the information flows associated with the cash flows of the various assets under consideration.

In the case of a single asset generating a single cash flow, the idea is that the filtration should contain partial or “noisy” information about the market factor $X_T$, and hence the impending cash flow, in such a way that $X_T$ is $\mathcal{F}_T$-measurable. This can be achieved by allowing $\{\mathcal{F}_t\}$ to be generated by a so-called information process $\{\xi_t\}_{t \geq 0}$ with the property that for each $t$ such that $t \geq T$ the random variable $\xi_t$ is $\sigma\{X_T\}$-measurable. Then by constructing specific examples of càdlàg processes having this property, we are able to formulate a variety of specific models. The resulting models are finely tuned to the structures of the assets that they represent, and therefore offer scope for a useful approach to financial risk management. In previous work on information-based asset pricing, where precise definitions can be found that expand upon the ideas summarized above, such models have been constructed using Brownian bridge information processes (Brody et al. (2007, 2008a, 2009, 2010, 2011), Filipović et al. (2012), Hughston and Macrina (2012), Macrina (2006), Mengütürk (2013), Rutkowski and Yu (2007)), gamma bridge information processes (Brody et al. (2008b)), Lévy random bridge information processes (Hoyle (2010), Hoyle et al. (2011, 2015, 2020), Mengütürk (2018)) and Markov bridge information processes (Macrina (2019)). In what follows we present a new model for the market filtration, based on the variance-gamma process. The idea is to create a two-parameter family of information processes associated with the random market factor $X_T$. One of the parameters is the information flow-rate $\sigma$. The other is an intrinsic parameter $m$ associated with the variance-gamma process. In the limit as $m$ tends to infinity, the variance-gamma information process reduces to the type of Brownian bridge information process considered by Brody et al. (2007, 2008a) and Macrina (2006).

The plan of the paper is as follows. In Section 2 we recall properties of the gamma process, introducing the so-called scale parameter $\kappa > 0$ and shape parameter $m > 0$. A standard gamma subordinator is defined to be a gamma process with $\kappa = 1/m$. The mean at time $t$ of a standard gamma subordinator is $t$. In Theorem 1 we prove that an increase in the shape parameter $m$ results in a transfer of weight from the Lévy measure of any interval $[c, d]$ in the space of jump size to the Lévy measure of any interval $[a, b]$ such that $b - a = d - c$ and $c > a$. Thus, roughly speaking, an increase in $m$ results in an increase in the rate at which small jumps occur relative to the rate at which large jumps occur. This result concerning the interpretation of the shape parameter for a standard gamma subordinator is new as far as we are aware.

In Section 3 we recall properties of the variance-gamma process and the gamma bridge, and in Definition 1 we introduce a new type of process, which we call a normalized variance-gamma bridge. This process plays an important role in the material that follows. In Lemmas 1 and 2 we work out various properties of the normalized variance-gamma bridge. Then in Theorem 2 we show that the normalized variance-gamma bridge and the associated gamma bridge are jointly Markov, a property that turns out to be crucial in our pricing theory. In Section 4, at Definition 2, we introduce the so-called variance-gamma information process. The information process carries noisy information about the value of a market factor $X_T$ that will be revealed to the market at time $T$, where the noise is represented by the normalized variance-gamma bridge. In Equation (58) we present a formula that relates the values of the information process at different times, and by use of that we establish in Theorem 3 that the information process and the associated gamma bridge are jointly Markov.

In Section 5, we consider a market where the filtration is generated by a variance-gamma information process along with the associated gamma bridge. In Lemma 3 we work out a version of the Bayes formula in the form that we need for asset pricing in the present context. Then in Theorem 4 we present a general formula for the price process of a financial asset that at time $T$ pays a single dividend given by a function $h(X_T)$ of the market factor. In particular, the a priori distribution of the market factor can be quite arbitrary, specified by a measure $F_{X_T}(dx)$ on $\mathbb{R}$, the only requirement being that $h(X_T)$ should be integrable. In Section 6 we present a number of examples, based on various choices of the payoff function and the distribution for the market factor, the results being summarized in Propositions 1–4. We conclude with comments on calibration, derivatives, and how one determines the trajectory of the information process from market prices.

2. Gamma Subordinators

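We begin with some remarks about the gamma process. Let us as usual write $\mathbb{R}^+$ for the non-negative real numbers. Let $\kappa$ and $m$ be strictly positive constants. A continuous random variable $G : \Omega \to \mathbb{R}^+$ on a probability space $(\Omega, \mathcal{F}, \mathbb{P})$ will be said to have a gamma distribution with scale parameter $\kappa$ and shape parameter $m$ if

$$\mathbb{P}[G \in dx] = \mathbb{1}_{\{x>0\}}\, \frac{1}{\Gamma(m)}\, \kappa^{-m}\, x^{m-1}\, e^{-x/\kappa}\, dx,$$

where

$$\Gamma(a) = \int_0^\infty x^{a-1}\, e^{-x}\, dx$$

denotes the standard gamma function for $a > 0$, and we recall the relation $\Gamma(a+1) = a\,\Gamma(a)$. A calculation shows that $\mathbb{E}[G] = \kappa m$, and $\mathrm{Var}[G] = \kappa^2 m$. There exists a two-parameter family of gamma processes of the form $\Gamma : \Omega \times \mathbb{R}^+ \to \mathbb{R}^+$ on $(\Omega, \mathcal{F}, \mathbb{P})$. By a gamma process with scale $\kappa$ and shape $m$ we mean a Lévy process $\{\Gamma_t\}_{t \geq 0}$ such that for each $t > 0$ the random variable $\Gamma_t$ is gamma distributed with

$$\mathbb{P}[\Gamma_t \in dx] = \mathbb{1}_{\{x>0\}}\, \frac{1}{\Gamma(mt)}\, \kappa^{-mt}\, x^{mt-1}\, e^{-x/\kappa}\, dx.$$

If we write $(a)_0 = 1$ and $(a)_k = a(a+1)(a+2)\cdots(a+k-1)$ for the so-called Pochhammer symbol, we find that $\mathbb{E}[\Gamma_t^n] = \kappa^n (mt)_n$. It follows that $\mathbb{E}[\Gamma_t] = \mu t$ and $\mathrm{Var}[\Gamma_t] = \nu^2 t$, where $\mu = \kappa m$ and $\nu^2 = \kappa^2 m$, or equivalently $m = \mu^2/\nu^2$, and $\kappa = \nu^2/\mu$.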
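The moment relations for a gamma process with scale $\kappa$ and shape $m$ (mean $\kappa m t$, variance $\kappa^2 m t$) can be checked by simulation. The sketch below is illustrative only: the helper names are hypothetical, and sampling uses Python's `random.gammavariate(alpha, beta)`, whose second argument is a scale parameter.

```python
import random

def gamma_process_moments(kappa, m, t):
    """Mean and variance of a gamma process at time t (scale kappa, shape m)."""
    return kappa * m * t, kappa ** 2 * m * t

def shape_scale_from_rates(mu, nu2):
    """Invert mu = kappa*m and nu^2 = kappa^2*m, returning (m, kappa)."""
    return mu ** 2 / nu2, nu2 / mu

def sample_gamma_process(kappa, m, t, rng):
    """One draw of the process at time t: gamma with shape m*t and scale kappa."""
    return rng.gammavariate(m * t, kappa)

# Illustrative parameters: mean rate 2.0 and variance rate 0.5 per unit time.
m, kappa = shape_scale_from_rates(2.0, 0.5)
rng = random.Random(0)
draws = [sample_gamma_process(kappa, m, 1.0, rng) for _ in range(50_000)]
sample_mean = sum(draws) / len(draws)
sample_var = sum((x - sample_mean) ** 2 for x in draws) / len(draws)
print(gamma_process_moments(kappa, m, 1.0), sample_mean, sample_var)
```

Setting $\kappa = 1/m$ in these helpers gives the standard gamma subordinator used in the rest of the paper.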

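The Lévy exponent for such a process is given for $\alpha < 1/\kappa$ by

$$\psi_\Gamma(\alpha) = \frac{1}{t} \log \mathbb{E}\left[\exp(\alpha \Gamma_t)\right] = -m \log(1 - \kappa \alpha),$$

and for the corresponding Lévy measure we have

$$\nu_\Gamma(dx) = \mathbb{1}_{\{x>0\}}\, m\, x^{-1}\, e^{-x/\kappa}\, dx.$$

One can then check that the Lévy-Khinchine relation

$$\psi_\Gamma(\alpha) = \int_{\mathbb{R}} \left(e^{\alpha x} - 1 - \mathbb{1}_{\{|x|<1\}}\, \alpha x\right) \nu_\Gamma(dx) + p\,\alpha$$

holds for an appropriate choice of $p$ (Kyprianou 2014, Lemma 1.7).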
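The Lévy-Khinchine relation for the gamma process can be checked numerically. The sketch below assumes the standard forms $\psi(\alpha) = -m\log(1-\kappa\alpha)$ for the Lévy exponent and $m\,x^{-1}e^{-x/\kappa}\,dx$ for the Lévy measure, and it takes the drift constant to be $p = \int_0^1 x\,\nu(dx) = m\kappa(1 - e^{-1/\kappa})$. That choice of $p$ is our own working assumption for the truncation function $\mathbb{1}_{\{|x|<1\}}$ and is not quoted from the text. The integral is approximated by a simple midpoint rule.

```python
import math

def levy_exponent(alpha, kappa, m):
    """Closed-form Levy exponent of a gamma process, valid for alpha < 1/kappa."""
    return -m * math.log(1.0 - kappa * alpha)

def levy_khinchine_rhs(alpha, kappa, m, upper=40.0, n=200_000):
    """Midpoint-rule approximation of the Levy-Khinchine integral plus drift."""
    h = upper / n
    total = 0.0
    for k in range(n):
        x = (k + 0.5) * h
        compensator = alpha * x if x < 1.0 else 0.0  # small-jump truncation term
        density = m * math.exp(-x / kappa) / x       # Levy density at x
        total += (math.expm1(alpha * x) - compensator) * density
    p = m * kappa * (1.0 - math.exp(-1.0 / kappa))   # assumed drift constant
    return total * h + p * alpha

lhs = levy_exponent(0.5, 0.5, 2.0)
rhs = levy_khinchine_rhs(0.5, 0.5, 2.0)
print(lhs, rhs)
```

The truncation term and the drift cancel exactly for this choice of $p$, so the check reduces to the Frullani-type identity $\int_0^\infty (e^{\alpha x} - 1)\, m\, x^{-1} e^{-x/\kappa}\, dx = -m \log(1 - \kappa\alpha)$.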

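By a standard gamma subordinator we mean a gamma process $\{\gamma_t\}_{t \geq 0}$ for which $\kappa = 1/m$. This implies that $\mathbb{E}[\gamma_t] = t$ and $\mathrm{Var}[\gamma_t] = t/m$. The standard gamma subordinators thus constitute a one-parameter family of processes labelled by $m$. An interpretation of the parameter $m$ is given by the following:

Theorem 1. Let $\{\gamma_t\}_{t \geq 0}$ be a standard gamma subordinator with parameter $m$. Let $\nu_m[c, d]$ be the Lévy measure of the interval $[c, d]$ for $0 < c < d$. Then for any interval $[a, b]$ such that $0 < a < c$ and $b - a = d - c$ the ratio

$$R_m(a, b\,; c, d) = \frac{\nu_m[a, b]}{\nu_m[c, d]}$$

is strictly greater than one and strictly increasing as a function of $m$.

Proof. By the definition of a standard gamma subordinator we have

$$\nu_m[c, d] = \int_c^d m\, x^{-1}\, e^{-mx}\, dx. \tag{10}$$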

Let

and note that the integrand in the right hand side of (

10) is a decreasing function of the variable of integration. This allows one to conclude that

from which it follows that

and hence

. To show that

is strictly increasing as a function of

m we observe that

where the so-called exponential integral function $E_1(z)$ is defined for $z > 0$ by

$$E_1(z) = \int_z^\infty \frac{e^{-x}}{x}\, dx.$$

See Abramowitz and Stegun (1972), Section 5.1.1, for properties of the exponential integral. Next, we compute the derivative of

, which gives

where

We note that

which shows that the sign of the derivative in (

14) is strictly positive if and only if

But clearly

for

, which after a change of integration variables and use of (

15) implies

which is equivalent to (

17), and that completes the proof. □

We see therefore that the effect of an increase in the value of m is to transfer weight from the Lévy measure of any jump-size interval to any possibly-overlapping smaller-jump-size interval of the same length. The Lévy measure of such an interval is the rate of arrival of jumps for which the jump size lies in that interval.
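The content of Theorem 1 can be illustrated numerically. The sketch below assumes that the Lévy measure of a standard gamma subordinator has density $m\,x^{-1}e^{-mx}$ on $x > 0$, and compares the measure of a smaller-jump interval with that of a larger-jump interval of the same length for several values of $m$. The interval end points and the values of $m$ are arbitrary illustrative choices.

```python
import math

def levy_measure(a, b, m, n=20_000):
    """Midpoint-rule approximation of the integral of m*exp(-m*x)/x over [a, b]."""
    h = (b - a) / n
    total = 0.0
    for k in range(n):
        x = a + (k + 0.5) * h
        total += m * math.exp(-m * x) / x
    return total * h

def ratio(a, b, c, d, m):
    """Levy measure of [a, b] divided by that of [c, d], with c > a, b - a = d - c."""
    return levy_measure(a, b, m) / levy_measure(c, d, m)

# Overlapping intervals of equal length 0.5, the second shifted to larger jumps.
ratios = [ratio(0.1, 0.6, 0.3, 0.8, m) for m in (0.5, 1.0, 2.0, 4.0)]
print(ratios)
```

Each ratio exceeds one, and the sequence grows with $m$, in line with the statement that a larger $m$ favours small jumps relative to large ones.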

3. Normalized Variance-Gamma Bridge

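Let us fix a standard Brownian motion $\{W_t\}_{t \geq 0}$ on $(\Omega, \mathcal{F}, \mathbb{P})$ and an independent standard gamma subordinator $\{\gamma_t\}_{t \geq 0}$ with parameter $m$. By a standard variance-gamma process with parameter $m$ we mean a time-changed Brownian motion $\{V_t\}_{t \geq 0}$ of the form

$$V_t = W_{\gamma_t}.$$

It is straightforward to check that $\{V_t\}$ is itself a Lévy process, with Lévy exponent

$$\psi_V(\alpha) = -m \log\left(1 - \frac{\alpha^2}{2m}\right).$$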
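A minimal simulation sketch of the time-changed construction follows. It assumes that the standard gamma subordinator at time $t$ is gamma distributed with shape $mt$ and scale $1/m$, and that, conditionally on the gamma time, the Brownian motion evaluated there is Gaussian with that variance. Under these assumptions the Lévy exponent works out to $-m\log(1 - \alpha^2/(2m))$, which the code compares with a Monte Carlo estimate. The function names and parameter values are hypothetical.

```python
import math
import random

def sample_vg(t, m, rng):
    """One draw of a standard variance-gamma process at time t."""
    gamma_t = rng.gammavariate(m * t, 1.0 / m)  # subordinator: mean t, variance t/m
    return math.sqrt(gamma_t) * rng.gauss(0.0, 1.0)

def vg_levy_exponent(alpha, m):
    """Levy exponent implied by subordination, valid for alpha**2 < 2*m."""
    return -m * math.log(1.0 - alpha ** 2 / (2.0 * m))

rng = random.Random(1)
samples = [sample_vg(1.0, 2.0, rng) for _ in range(100_000)]
sample_var = sum(v * v for v in samples) / len(samples)
sample_mgf = sum(math.exp(0.5 * v) for v in samples) / len(samples)
print(sample_var, sample_mgf, math.exp(vg_levy_exponent(0.5, 2.0)))
```

The sample second moment should be close to $t = 1$, since the variance of the time-changed process equals the mean of the subordinator.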

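The other object we require going forward is the gamma bridge (Brody et al. (2008b), Emery and Yor (2004), Yor (2007)). Let $\{\gamma_t\}_{t \geq 0}$ be a standard gamma subordinator with parameter $m$. For fixed $T > 0$ the process $\{\gamma_{tT}\}_{t \geq 0}$ defined by

$$\gamma_{tT} = \frac{\gamma_t}{\gamma_T}$$

for $0 \leq t \leq T$ and $\gamma_{tT} = 1$ for $t > T$ will be called a standard gamma bridge, with parameter $m$, over the interval $[0, T]$. One can check that for $0 < t < T$ the random variable $\gamma_{tT}$ has a beta distribution (Brody et al. 2008b, pp. 6–9). In particular, one finds that its density is given by

$$\mathbb{P}[\gamma_{tT} \in dy] = \mathbb{1}_{\{0<y<1\}}\, \frac{y^{mt-1}(1-y)^{m(T-t)-1}}{B(mt,\, m(T-t))}\, dy,$$

where

$$B(a, b) = \frac{\Gamma(a)\,\Gamma(b)}{\Gamma(a+b)}.$$

It follows then by use of the integral formula

$$\int_0^1 y^{a-1}(1-y)^{b-1}\, dy = B(a, b)$$

that for all $n \in \mathbb{N}$ we have

$$\mathbb{E}[\gamma_{tT}^n] = \frac{B(mt+n,\, m(T-t))}{B(mt,\, m(T-t))},$$

and hence

$$\mathbb{E}[\gamma_{tT}^n] = \frac{(mt)_n}{(mT)_n}.$$

Accordingly, one has

$$\mathbb{E}[\gamma_{tT}] = \frac{t}{T}, \qquad \mathbb{E}[\gamma_{tT}^2] = \frac{t(mt+1)}{T(mT+1)},$$

and therefore

$$\mathrm{Var}[\gamma_{tT}] = \frac{t(T-t)}{T^2(1+mT)}.$$

One observes, in particular, that the expectation of $\gamma_{tT}$ does not depend on $m$, whereas the variance of $\gamma_{tT}$ decreases as $m$ increases.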
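The dependence of the gamma bridge moments on $m$ can be sketched as follows. The code assumes the bridge is the ratio $\gamma_t/\gamma_T$ of a standard gamma subordinator, so that it is beta distributed with parameters $mt$ and $m(T-t)$, mean $t/T$ and variance $t(T-t)/(T^2(1+mT))$. The helper names and numerical inputs are hypothetical.

```python
import random

def gamma_bridge_moments(t, T, m):
    """Mean and variance of the standard gamma bridge at time t in (0, T)."""
    mean = t / T
    var = t * (T - t) / (T ** 2 * (1.0 + m * T))
    return mean, var

def sample_gamma_bridge(t, T, m, rng):
    """Draw gamma_t / gamma_T using independent gamma increments."""
    g_t = rng.gammavariate(m * t, 1.0 / m)           # gamma_t
    g_rest = rng.gammavariate(m * (T - t), 1.0 / m)  # gamma_T - gamma_t
    return g_t / (g_t + g_rest)

rng = random.Random(2)
draws = [sample_gamma_bridge(0.5, 2.0, 3.0, rng) for _ in range(50_000)]
mc_mean = sum(draws) / len(draws)
mc_var = sum((x - mc_mean) ** 2 for x in draws) / len(draws)
print(gamma_bridge_moments(0.5, 2.0, 3.0), mc_mean, mc_var)

# The variance shrinks as m grows, while the mean stays at t/T.
variances = [gamma_bridge_moments(0.5, 2.0, m)[1] for m in (0.5, 1.0, 5.0, 50.0)]
```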

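Definition 1. For fixed $T > 0$, the process $\{\Gamma_{tT}\}_{t \geq 0}$ defined by

$$\Gamma_{tT} = \gamma_T^{-1/2}\left(W_{\gamma_t} - \gamma_{tT}\, W_{\gamma_T}\right)$$

for $0 \leq t \leq T$ and $\Gamma_{tT} = 0$ for $t > T$ will be called a normalized variance-gamma bridge.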

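We proceed to work out various properties of this process. We observe that $\Gamma_{tT}$ is conditionally Gaussian, from which it follows that $\mathbb{E}[\Gamma_{tT} \mid \gamma_t, \gamma_T] = 0$ and $\mathbb{E}[\Gamma_{tT}^2 \mid \gamma_t, \gamma_T] = \gamma_{tT}(1 - \gamma_{tT})$. Therefore $\mathbb{E}[\Gamma_{tT}] = 0$ and $\mathbb{E}[\Gamma_{tT}^2] = \mathbb{E}[\gamma_{tT}] - \mathbb{E}[\gamma_{tT}^2]$; and thus by use of (28) we have

$$\mathrm{Var}[\Gamma_{tT}] = \frac{m\, t\, (T-t)}{T(1+mT)}.$$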

Now, recall (Yor (2007), Emery and Yor (2004)) that the gamma process and the associated gamma bridge have the following fundamental independence property. Define

Then, for every it holds that and are independent. In particular and are independent for and . It also holds that and are independent for and . Furthermore, we have:

Lemma 1. If and then and are independent.

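Proof. We recall that if a random variable $X$ is normally distributed with mean $\mu$ and variance $\nu^2$ then

$$\mathbb{P}[X < x] = N\!\left(\frac{x - \mu}{\nu}\right),$$

where $N : \mathbb{R} \to (0, 1)$ is defined by

$$N(x) = \frac{1}{\sqrt{2\pi}} \int_{-\infty}^{x} \exp\left(-\tfrac{1}{2} y^2\right) dy. \tag{34}$$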

Since

is conditionally Gaussian, by use of the tower property we find that

where the last line follows from the independence of

and

. □

By a straightforward extension of the argument we deduce that if and then and are independent. Further, we have:

Lemma 2. If and then and are independent.

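Proof. We recall that the Brownian bridge $\{\beta_{tT}\}_{t \geq 0}$ defined by

$$\beta_{tT} = W_t - \frac{t}{T}\, W_T$$

for $0 \leq t \leq T$ and $\beta_{tT} = 0$ for $t > T$ is Gaussian with $\mathbb{E}[\beta_{tT}] = 0$, $\mathrm{Var}[\beta_{tT}] = t(T-t)/T$, and $\mathrm{Cov}[\beta_{sT}, \beta_{tT}] = s(T-t)/T$ for $0 \leq s \leq t \leq T$. Using the tower property we find that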

where in the final step we use (

30) along with properties of the Brownian bridge. □

A straightforward calculation shows that if

and

then

With this result at hand we obtain the following:

Theorem 2. The processes $\{\Gamma_{tT}\}_{t \geq 0}$ and $\{\gamma_{tT}\}_{t \geq 0}$ are jointly Markov.

Proof. To establish the Markov property it suffices to show that for any bounded measurable function

, any

, and any

, we have

We present the proof for

. Thus we need to show that

As a consequence of (

38) we have

Therefore, it suffices to show that

Let us write

for the joint density of

. Then for the conditional density of

and

given

we have

Thus,

Similarly,

where for the conditional density of

and

given

we have

Note that the conditional probability densities that we introduce in formulae such as those above are “regular” conditional densities (Williams 1991, p. 91). We shall show that

Writing

for the joint distribution function, we see that

where the last step follows as a consequence of Lemma 2. Thus we have

where the next to last step follows by virtue of the fact that

and

are independent for

and

. Similarly,

and hence

Thus we deduce that

and

and the theorem follows. □

5. Information Based Pricing

Now we are in a position to consider the valuation of a financial asset in the setting just discussed. One recalls that $\mathbb{P}$ is understood to be the risk-neutral measure and that the interest rate is constant. The payoff of the asset at time $T$ is taken to be an integrable random variable of the form $h(X_T)$ for some Borel function $h$, where $X_T$ is the information revealed at $T$. The filtration is generated jointly by the variance-gamma information process $\{\xi_t\}$ and the associated gamma bridge $\{\gamma_{tT}\}$. The value of the asset at time $t \in [0, T)$ is then given by the general expression (2), which on account of Theorem 3 reduces in the present context to

$$S_t = e^{-r(T-t)}\, \mathbb{E}\left[h(X_T) \mid \xi_t, \gamma_{tT}\right],$$

and our goal is to work out this expectation explicitly.

Let us write

for the

a priori distribution function of

. Thus

and we have

Occasionally, it will be typographically convenient to write in place of , and similarly for other distribution functions. To proceed, we require the following:

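Lemma 3. Let $X$ be a random variable with distribution $\{F^X(dx)\}_{x \in \mathbb{R}}$ and let $Y$ be a continuous random variable with distribution $\{F^Y(dy)\}_{y \in \mathbb{R}}$ and density $\{f^Y(y)\}_{y \in \mathbb{R}}$. Then for all $y \in \mathbb{R}$ for which $f^Y(y) \neq 0$ we have

$$F^{X \mid Y=y}(dx) = \frac{f^{\,Y \mid X=x}(y)\, F^X(dx)}{\int_{x \in \mathbb{R}} f^{\,Y \mid X=x}(y)\, F^X(dx)}, \tag{65}$$

where $F^{X \mid Y=y}(dx)$ denotes the conditional distribution $\mathbb{P}(X \in dx \mid Y = y)$, and where

$$f^{\,Y \mid X=x}(y) = \frac{d}{dy}\, \mathbb{P}(Y \leq y \mid X = x).$$

Proof. For any two random variables $X$ and $Y$ it holds that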

Here we have used the fact that for each

there exists a Borel measurable function

such that

. Then for

we define

Hence

By symmetry, we have

from which it follows that we have the relation

Moving ahead, let us consider the measure

on

defined for each

by setting

for any

. Then

is absolutely continuous with respect to

. Indeed, suppose that

for some

. Now,

. But if

, then

, and hence

, and therefore

. Thus

vanishes for any

for which

vanishes. It follows by the Radon-Nikodym theorem that for each

there exists a density

such that

Note that

is determined uniquely apart from its values on

-null sets. Inserting (

73) into (

71) we obtain

and thus by Fubini’s theorem we have

It follows then that

is determined uniquely apart from its values on

-null sets, and we have

This relation holds quite generally and is symmetrical between X and Y. Indeed, we have not so far assumed that Y is a continuous random variable. If Y is, in fact, a continuous random variable, then its distribution function is absolutely continuous and admits a density

. In that case, (

76) can be written in the form

from which it follows that for each value of

x the conditional distribution function

is absolutely continuous and admits a density

such that

The desired result (

65) then follows from (

73) and (

78) if we observe that

and that concludes the proof. □
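The Bayes formula of Lemma 3 takes a particularly simple form when $X$ has finitely many values: the posterior weight of each value is its prior weight times the conditional density of $Y$ at the observed point, normalized to sum to one. The sketch below illustrates this special case only. The Gaussian conditional density, the prior, and the function names are hypothetical choices for the example.

```python
import math

def normal_pdf(y, mean, var):
    """Density of a normal random variable with the given mean and variance."""
    return math.exp(-(y - mean) ** 2 / (2.0 * var)) / math.sqrt(2.0 * math.pi * var)

def posterior(prior, cond_density, y):
    """Posterior weights of a discrete X given Y = y.

    prior: dict mapping each value x to its prior probability.
    cond_density: function (y, x) giving the density of Y at y given X = x.
    """
    weights = {x: p * cond_density(y, x) for x, p in prior.items()}
    norm = sum(weights.values())
    if norm == 0.0:
        raise ValueError("the density of Y vanishes at the observed value")
    return {x: w / norm for x, w in weights.items()}

# Illustrative case: Y is X plus Gaussian noise of variance 0.25.
prior = {0.0: 0.3, 1.0: 0.7}
post = posterior(prior, lambda y, x: normal_pdf(y, x, 0.25), y=0.9)
print(post)
```

The guard on the normalizing constant mirrors the requirement in the lemma that the density of $Y$ be non-zero at the observed value.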

Armed with Lemma 3, we are in a position to work out the conditional expectation that leads to the asset price, and we obtain the following:

Theorem 4. The variance-gamma information-based price of a financial asset with payoff $h(X_T)$ at time $T$ is given for $t < T$ by

Proof. To calculate the conditional expectation of

, we observe that

by the tower property, where the inner expectation takes the form

Here by Lemma 3 the conditional distribution function is

Therefore, the inner expectation in Equation (

81) is given by

But the right hand side of (

84) depends only on

and

. It follows immediately that

which translates into Equation (

80), and that concludes the proof. □
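To make the structure of the pricing calculation concrete, here is a hedged sketch for a market factor with finitely many values. It rests on assumptions that are not spelled out in this section: that, conditionally on $X_T = x$ and on the gamma bridge value $g$, the information process at time $t$ is Gaussian with mean $\sigma g x$ and variance $g(1-g)$. Applying the Bayes formula under that assumption gives posterior weights proportional to the prior weight times $\exp[(\sigma \xi x - \tfrac{1}{2}\sigma^2 x^2 g)/(1-g)]$. The function `vg_price` and all parameter values are hypothetical.

```python
import math

def vg_price(xi, g, t, prior, payoff, sigma, r, T):
    """Discounted posterior mean of the payoff, given xi_t = xi and gamma_tT = g.

    prior: dict mapping each value x of the factor to its a priori probability.
    The Gaussian conditional law of xi_t is an assumption of this sketch.
    """
    if not 0.0 < g < 1.0:
        raise ValueError("gamma bridge value must lie strictly between 0 and 1")
    exponents = {x: (sigma * xi * x - 0.5 * sigma ** 2 * x ** 2 * g) / (1.0 - g)
                 for x in prior}
    shift = max(exponents.values())  # subtract the maximum to avoid overflow
    weights = {x: p * math.exp(exponents[x] - shift) for x, p in prior.items()}
    norm = sum(weights.values())
    cond_mean = sum(payoff(x) * w for x, w in weights.items()) / norm
    return math.exp(-r * (T - t)) * cond_mean

# Usage: a unit-principal bond that pays 1 with a priori probability 0.8.
bond_prior = {0.0: 0.2, 1.0: 0.8}
low = vg_price(0.2, 0.5, 1.0, bond_prior, lambda x: x, sigma=1.0, r=0.05, T=2.0)
high = vg_price(0.8, 0.5, 1.0, bond_prior, lambda x: x, sigma=1.0, r=0.05, T=2.0)
print(low, high)
```

With $\sigma = 0$ the information process carries no signal and the sketch returns the discounted a priori mean of the payoff, which is a useful sanity check.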

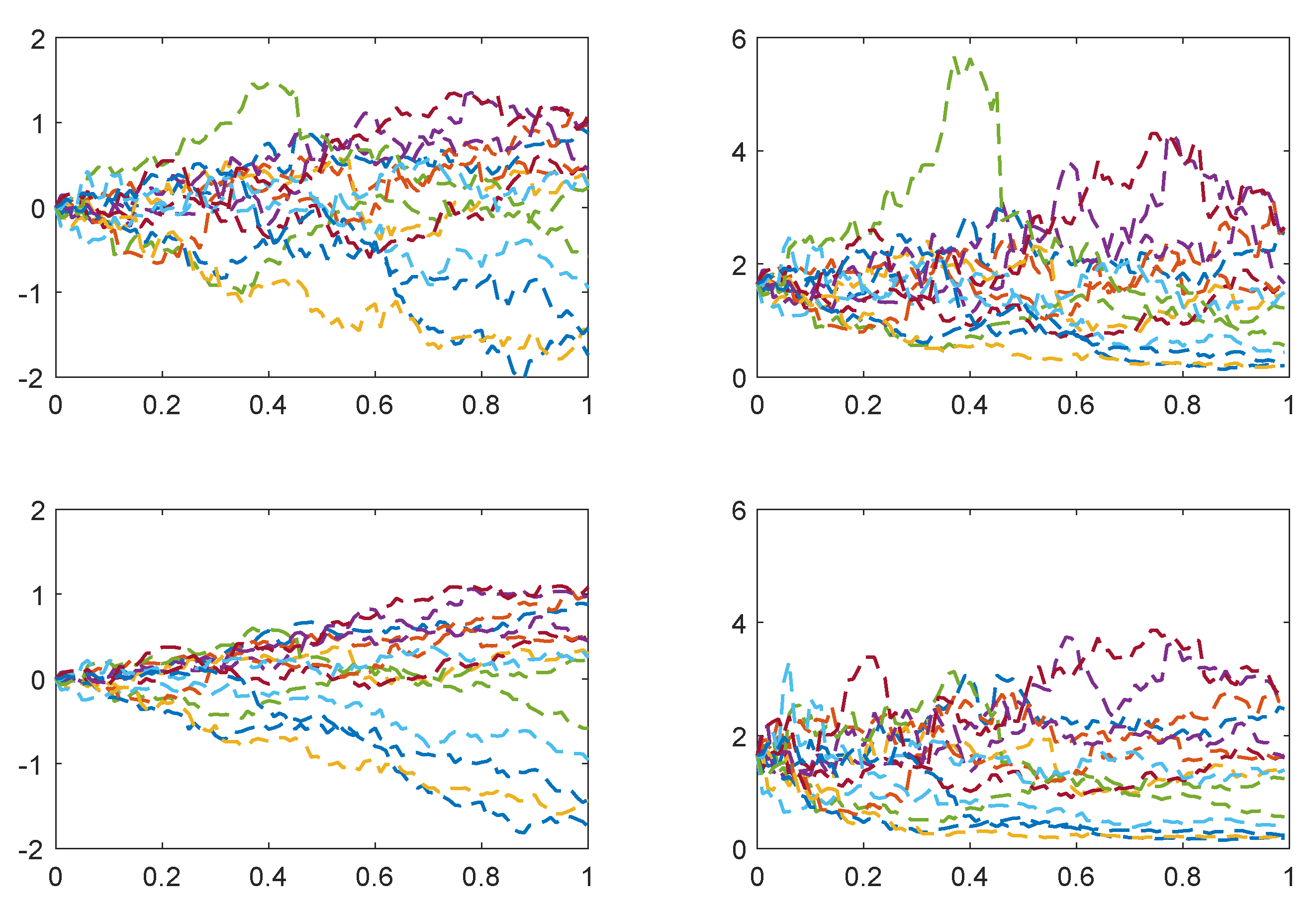

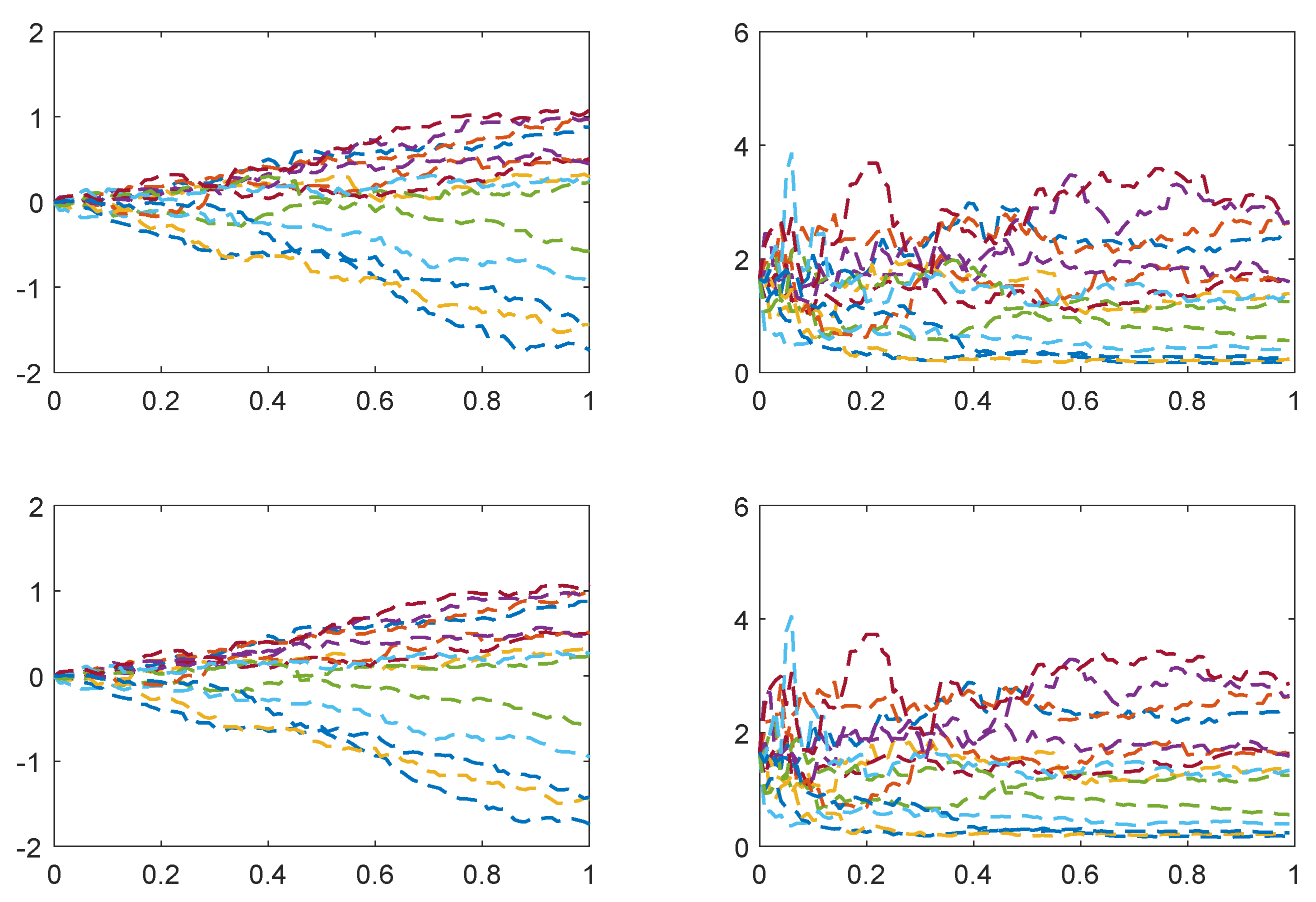

6. Examples

Going forward, we present some examples of variance-gamma information pricing for specific choices of (a) the payoff function $h$ and (b) the distribution of the market factor $X_T$. In the figures, we display sample paths for the information processes and the corresponding prices. These paths are generated as follows. First, we simulate outcomes for the market factor $X_T$. Second, we simulate paths for the gamma process $\{\gamma_t\}$ over the interval $[0, T]$ and an independent Brownian motion $\{W_t\}$. Third, we evaluate the variance-gamma process $\{W_{\gamma_t}\}$ over the interval $[0, T]$ by subordinating the Brownian motion with the gamma process, and we evaluate the resulting gamma bridge $\{\gamma_{tT}\}$. Fourth, we use these ingredients to construct sample paths of the information processes, where these processes are given as in Definition 2. Finally, we evaluate the pricing formula in Equation (80) for each of the simulated paths and for each time step.
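The simulation steps listed above can be sketched on a uniform time grid as follows. Two ingredients are assumptions of this sketch, since they are not written out in this section: the noise is taken to be $\gamma_T^{-1/2}(W_{\gamma_t} - \gamma_{tT} W_{\gamma_T})$, and the information process is taken to be $\sigma X_T \gamma_{tT}$ plus that noise. The function name, grid size and parameters are hypothetical.

```python
import math
import random

def simulate_information_path(x, sigma, m, T, n_steps, rng):
    """Simulate gamma bridge, bridge noise and information process on a grid.

    x: simulated outcome of the market factor.
    Returns three lists of length n_steps + 1, indexed by grid time.
    """
    dt = T / n_steps
    gamma = [0.0]  # standard gamma subordinator on the grid
    vg = [0.0]     # Brownian motion evaluated at the gamma times
    for _ in range(n_steps):
        d_gamma = rng.gammavariate(m * dt, 1.0 / m)  # increment: mean dt
        gamma.append(gamma[-1] + d_gamma)
        vg.append(vg[-1] + math.sqrt(d_gamma) * rng.gauss(0.0, 1.0))
    gamma_T, vg_T = gamma[-1], vg[-1]
    bridge = [g / gamma_T for g in gamma]  # gamma bridge
    noise = [(v - b * vg_T) / math.sqrt(gamma_T) for v, b in zip(vg, bridge)]
    info = [sigma * x * b + z for b, z in zip(bridge, noise)]  # assumed form
    return bridge, noise, info

rng = random.Random(3)
bridge, noise, info = simulate_information_path(
    x=1.0, sigma=2.0, m=2.0, T=1.0, n_steps=200, rng=rng)
print(bridge[-1], noise[-1], info[-1])
```

At the terminal grid point the bridge equals one and the noise vanishes, so the simulated information process ends at $\sigma$ times the simulated factor. A price path would then follow by evaluating the pricing formula at each grid time along the simulated pair of information process and gamma bridge.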

Example 1: Credit-risky bond. We begin with the simplest case, that of a unit-principal credit-risky bond without recovery. We set $h(x) = x$, with $\mathbb{P}(X_T = 0) = p_0$ and $\mathbb{P}(X_T = 1) = p_1$, where $p_0 + p_1 = 1$. Thus, we have

where

and

denotes the Dirac measure concentrated at the point

a, and we are led to the following:

Proposition 1. The variance-gamma information-based price of a unit-principal credit-risky discount bond with no recovery is given by

Now let $\omega \in \Omega$ denote the outcome of chance. By use of Equation (57) one can check rather directly that if $X_T(\omega) = 1$, then $\lim_{t \to T} S_t = 1$, whereas if $X_T(\omega) = 0$, then $\lim_{t \to T} S_t = 0$

. More explicitly, we find that

whereas

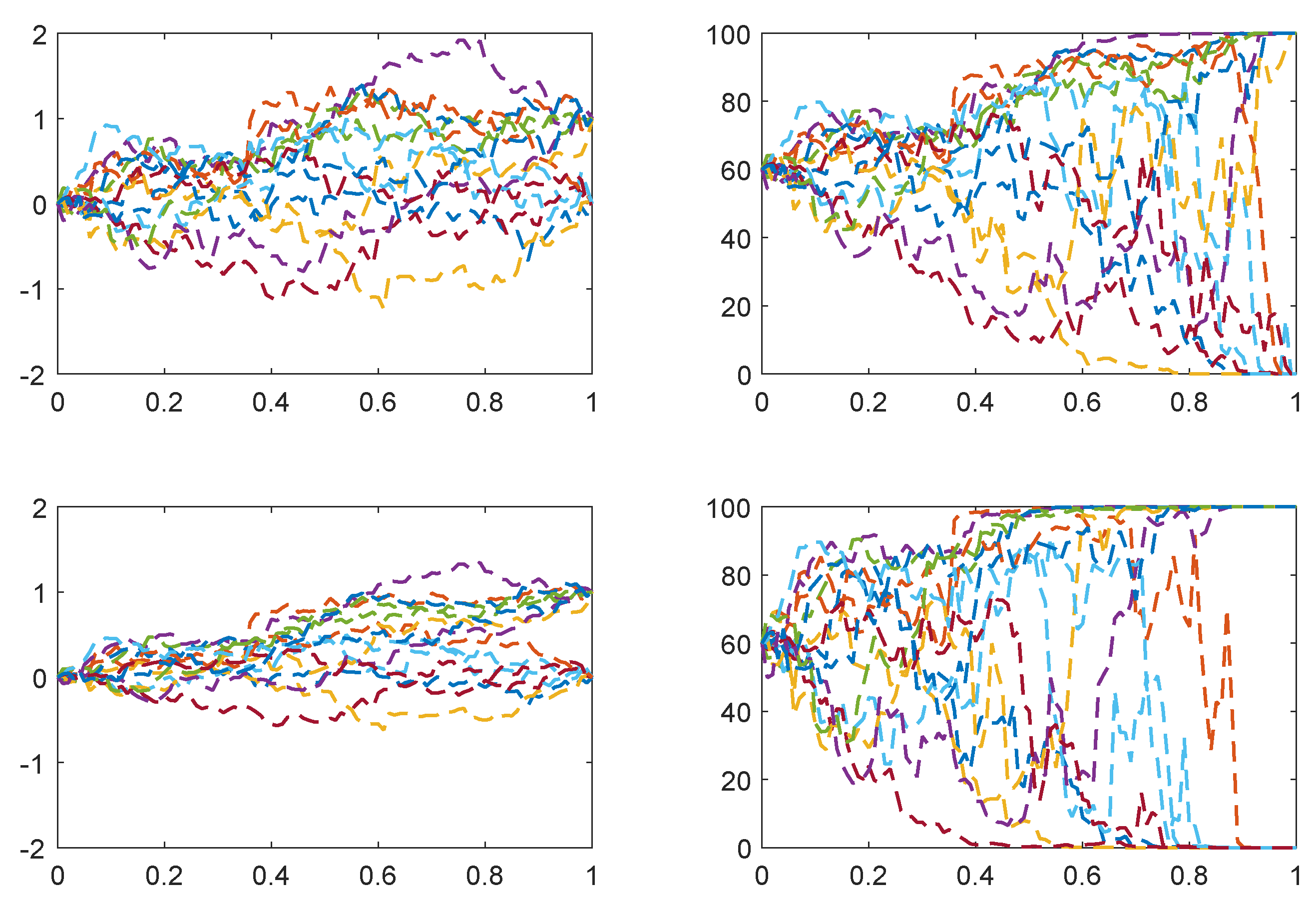

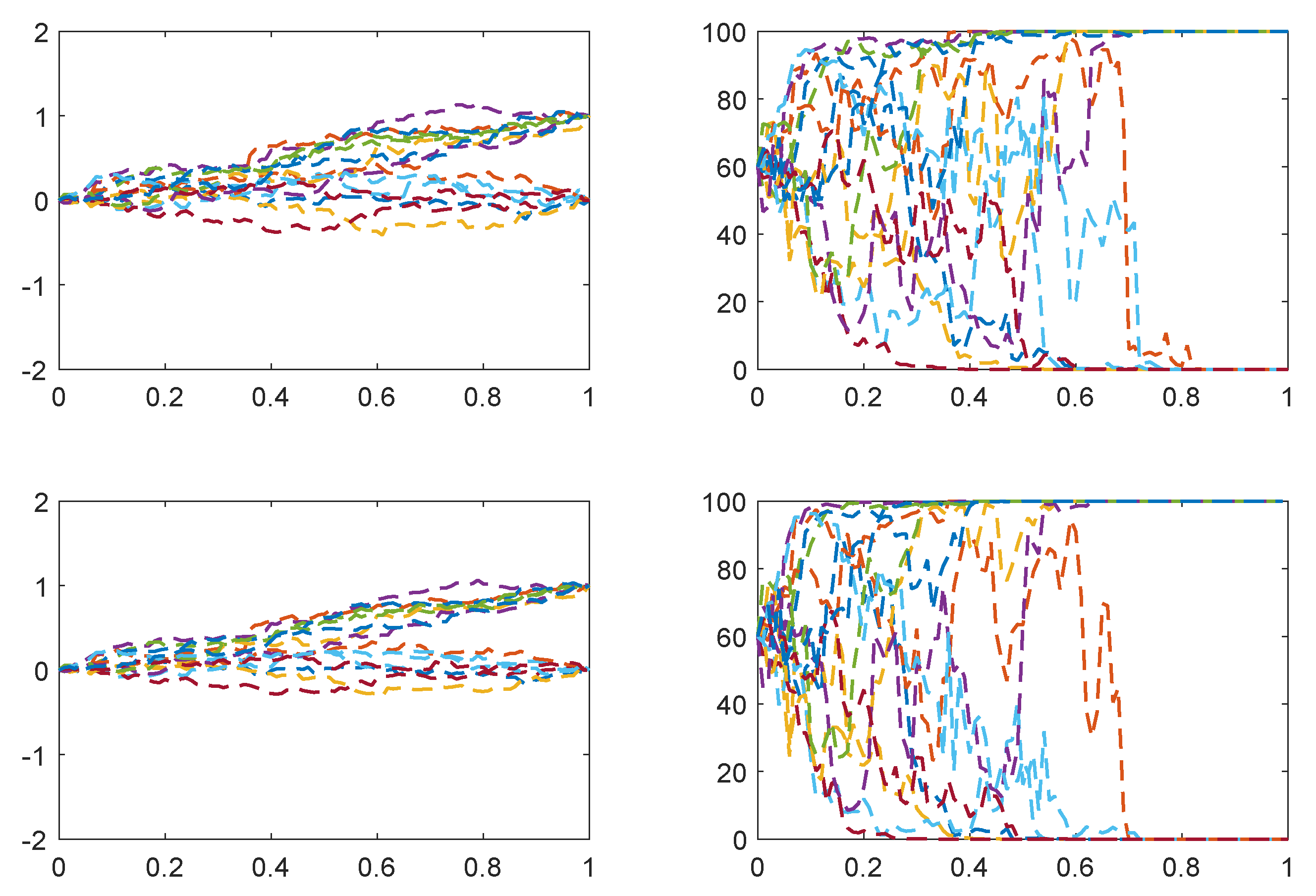

and the claimed limiting behaviour of the asset price follows by inspection. In Figure 1 and Figure 2 we plot sample paths for the information processes and price processes of credit-risky bonds for various values of the information flow-rate parameter. One observes that for

the information processes diverge, thus distinguishing those bonds that default from those that do not, only towards the end of the relevant time frame; whereas for higher values of $\sigma$ the divergence occurs progressively earlier, and one sees a corresponding effect in the price processes. Thus, when the information flow rate is higher, the final outcome of the bond payment is anticipated earlier, and with greater certainty. Similar conclusions hold for the interpretation of Figure 3 and Figure 4.

Example 2: Random recovery. As a somewhat more sophisticated version of the previous example, we consider the case of a defaultable bond with random recovery. We shall work out the case where

and the market factor

takes the value

c with probability

and

is uniformly distributed over the interval

with probability

, where

. Thus, for the probability measure of

we have

and for the distribution function we obtain

The bond price at time

t is then obtained by working out the expression

and it should be evident that one can obtain a closed-form solution. To work this out in detail, it will be convenient to have an expression for the incomplete first moment of a normally-distributed random variable with mean

and variance

. Thus we set

and for convenience we set

Then we have

and of course

where

is defined by (

34). We also set

Finally, we obtain the following:

Proposition 2. The variance-gamma information-based price of a defaultable discount bond with a uniformly-distributed fraction of the principal paid on recovery is given by

where

Example 3: Lognormal payoff. Next we consider the case when the payoff of an asset at time

T is log-normally distributed. This will hold if

and

. It will be convenient to look at the slightly more general payoff obtained by setting

with

. If we recall the identity

which holds for

and

, a calculation gives

where

For

, the price is thus given in accordance with Theorem 4 by

Then clearly we have

and a calculation leads to the following:

Proposition 3. The variance-gamma information-based price of a financial asset with a log-normally distributed payoff such that is given for by

More generally, one can consider the case of a so-called power-payoff derivative for which

where

is the payoff of the asset priced above in Proposition 3. See Bouzianis and Hughston (2019) for aspects of the theory of power-payoff derivatives. In the present case if we write

for the value of the power-payoff derivative at time

t, we find that

where

Example 4: Exponentially distributed payoff. Next we consider the case where the payoff is exponentially distributed. We let

, so

, and take

. A calculation shows that

where we set

and

As a consequence we obtain:

Proposition 4. The variance-gamma information-based price of a financial asset with an exponentially distributed payoff is given by

where and are defined as in Example 2.

7. Conclusions

In the examples considered in the previous section, we have looked at the situation where there is a single market factor $X_T$, which is revealed at time $T$, and where the single cash flow occurring at $T$ depends on the outcome for $X_T$. The value of a security with that cash flow is determined by the information available at time $t$. Given the Markov property of the extended information process $\{\xi_t, \gamma_{tT}\}$ it follows that there exists a function $F$ of three variables such that $S_t = F(t, \xi_t, \gamma_{tT})$, and we have worked out this expression explicitly for a number of different cases, given in Examples 1–4. The general valuation formula is presented in Theorem 4.

It should be evident that once we have specified the functional dependence of the resulting asset prices on the extended information process, then we can back out values of the information process and the gamma bridge from the price data. So in that sense the process $\{\xi_t\}$ is “visible” in the market, and can be inferred directly, at any time, from a suitable collection of prices. This means, in particular, that given the prices of a certain minimal collection of assets in the market, we can then work out the values of other assets in the market, such as derivatives. In the special case we have just been discussing, there is only a single market factor; but one can see at once that the ideas involved readily extend to the situation where there are multiple market factors and multiple cash flows, as one expects for general securities analysis, following the principles laid out in Brody et al. (2007, 2008a), where the merits and limitations of modelling in an information-based framework are discussed in some detail.

The potential advantages of working with the variance-gamma information process, rather than the highly tractable but more limited Brownian information process, should be evident: these include the additional parametric freedom in the model, with more flexibility in the distributions of returns, and, equally important, the scope for jumps. It comes as a pleasant surprise that the resulting formulae are to a large extent analytically explicit, but this is on account of the remarkable properties of the normalized variance-gamma bridge process that we have exploited in our constructions. Keep in mind that in the limit as the parameter $m$ goes to infinity our model reduces to that of the Brownian bridge information-based model considered in Brody et al. (2007, 2008a), which in turn contains the standard geometric Brownian motion model (and hence the Black-Scholes option pricing model) as a special case. In the case of a single market factor $X_T$, the distribution of the random variable $X_T$ can be inferred by observing the current prices of derivatives for which the payoff is of the form

for

. The information flow-rate parameter $\sigma$ and the shape parameter $m$ can then be inferred from option prices. When multiple factors are involved, similar calibration methodologies are applicable.