Abstract

Traditionally, actuaries have used run-off triangles to estimate reserves (“macro” models, on aggregated data). However, it is possible to model payments related to individual claims. If those models provide similar estimations, we investigate uncertainty related to reserves with “macro” and “micro” models. We study theoretical properties of econometric models (Gaussian, Poisson and quasi-Poisson) on individual data, and clustered data. Finally, applications in claims reserving are considered.

1. Introduction

1.1. Macro and Micro Methods

For more than a century, actuaries have been using run-off triangles to project future payments in non-life insurance. In the 1930s, [1] formalized this technique that originated from the popular chain ladder algorithm. In the 1990s, [2] proved that the chain ladder estimate can be motivated by a simple stochastic model, and later on [3] provided a comprehensive overview of stochastic models that can be connected with the chain ladder method, including regression models that could be seen as extensions of the so-called “factor” methods used in the 1970s.

In the terminology of [4] and [5], these are macro-level models for reserving. In the 1970s, [6] suggested using some marked point process of claims to project future payments, and quantify the reserves. More recently, [7,8,9,10] or [11] (among many others) investigated further some probabilistic micro-level models. These models handle claims-related data on an individual basis, rather than aggregating by underwriting year and development period. As mentioned in [12], these methods have not (yet) found great popularity in practice, since they are more difficult to apply. Nevertheless, several papers address that issue, with several stochastic processes to model the dynamics of payments, such as [13] (extended in [14,15] or [16]), and more recently [17] and [18].

All macro-level models are based on aggregate data found in a run-off triangle, which is their strength, but also probably their weakness. Indeed, with regulation rules, such as Solvency II or IFRS 4—Phase 2 norms, the goal is no longer to get (only) a best-estimate, but it becomes necessary to have the full conditional distribution of future cash-flows.

Macro-level approaches, such as the popular chain ladder model, have undeniable merits. Those models are part of the actuarial folklore and extensions have been derived to have more general and more realistic models. They are easy to understand, and can be mentioned in financial communication, without disclosing too much information. From a computational perspective, those models can also be implemented in a single spreadsheet, and more advanced libraries, such as the ChainLadder package in R, can also be used.

Nevertheless, those macro-level models also have major drawbacks, as discussed for instance in [16] or [17], and references therein. More specifically, we wish to highlight two important points:

- Those models neglect a lot of information that is available on a micro-level (per individual claim). Some additional covariates can be used, as well as exposure, etc. In most applications, not only is that information available, but usually, it has a valuable predictive power. To use that additional information, one cannot simply modify macro-level models, and it is necessary to change the general framework of the model. It becomes possible to emphasize large losses and to distinguish them from regular claims, to get more detailed information about future payments, etc.

- As discussed in this paper, macro-level models on aggregated data can be seen as models on clusters and not on individual observations, as we will do with micro-level models. In the context of macro-level models for loss reserving, [3] mention that prediction errors can be large, because of the small number of observations used in run-off triangles and the fact that clusters are usually not homogeneous. Quantifying uncertainty in claim reserving methods is not only important in actuarial practice and to assess accuracy of predictive models, it is also a regulatory issue. Finally, a small sample size can cause a lack of robustness and a risk of over-parametrization for macro-level models.

On the other hand, micro-level models have many pros. They can incorporate micro-level covariates, and model micro-structure of claims development. Thus, they can take into account heterogeneity, structural changes, etc. (see [19] for a discussion). Several studies, with empirical comparisons between macro- and micro-level models (see, e.g., [17]), illustrate that in many scenarios, reserves obtained with micro-level models have higher precision than macro-level ones. Further, since those models are fitted on much more data than aggregated ones, variability of predictions is usually smaller, which increases model robustness and reduces the risk of over-fitting. More specifically, [15] and [16] obtained, in real data analyses, lower variance on the total amount of reserves with “micro” models than with “macro” ones. A natural question concerns the generality of such a result. Should “micro” models generate less variability than standard “macro” ones? That is the question that initiated this paper.

1.2. Agenda

In Section 2, we detail intuitive results we expect when aggregating data by clusters, moving from micro-level models to macro-level ones. More precisely, we explain why with a linear model and a Poisson regression, macro- and micro-level models are equivalent. We also discuss the case of the Poisson regression model with random intercept. In Section 3, we study “micro” and “macro” models in the context of claims reserving, on real data, as well as simulated ones. More specifically, we present a methodology to generate, by simulation, micro-level datasets from macro-level ones. We also investigate the impact of adding micro-type covariates, allowing for various types of correlations. Finally, we present concluding remarks in Section 4.

2. Clustering in Generalized Linear Mixed Models

In the economic literature, several papers discuss the use of “micro” vs. “macro” data, for instance in the context of unemployment duration in [20] or in the context of inflation in [21]. In [20], it is mentioned that both models are interesting, since “micro” data can be used to capture heterogeneity while “macro” data can capture cycle and more structural patterns. In [21], it is demonstrated that both heterogeneity and aggregation might explain the persistence of inflation at the macroeconomic level.

In order to clarify notation, and make sure that objects are well defined, we use small letters for sample values, e.g., $y_i$, and capital letters for underlying random variables, e.g., $Y_i$, in the sense that $y_i$ is a realisation of random variable $Y_i$. Hence, in the case of the linear model (see Subsection 2.1), we usually assume that $Y_i \sim \mathcal{N}(x_i^\top\beta, \sigma^2)$, and then $\hat{\beta}$ is the vector of estimated parameters, in the sense that $\hat{\beta} = (X^\top X)^{-1}X^\top y$ while $\hat{B} = (X^\top X)^{-1}X^\top Y$ (here covariates $x_i$ are given, and non stochastic). Since $\hat{B}$ is seen as a random variable, we can write $E[\hat{B}] = \beta$.

With a Poisson regression, with . In that case, . The estimate parameter is a function of the observations, ’s, while is a function of the observations and of the random variable . In the context of the Poisson regression, recall that as n, the number of observations, goes to infinity. With a quasi-Poisson regression, does not have, per se, a proper distribution. Nevertheless, its moments are well defined, in the sense that , where φ denotes the dispersion parameter (see Subsection 2.2). For convenience, we will denote , with an abuse of notation.

In this section, we will derive some theoretical results regarding aggregation in econometric models.

2.1. The Multiple Linear Regression Model

For a vector of parameters , we consider a (multiple) linear regression model,

where observations belong to a cluster g and are indexed by i within a cluster g, , . Assume further assumptions of the classical linear regression model [22], i.e.,

- (LRM1) no multicollinearity in the data matrix;

- (LRM2) exogeneity of the independent variables; and

- (LRM3) homoscedasticity and nonautocorrelation of error terms.

Stacking observations within a cluster yields the following model

where

with similar assumptions except for . Those two models are equivalent, in the sense that the following proposition holds.

Proposition 1.

Proof.

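- (i) The ordinary least-squares estimator for $\beta$ from Model (1) is defined as

$$\hat{\beta} = \underset{\beta}{\arg\min} \sum_{g}\sum_{i=1}^{n_g} \left(y_{i,g} - x_g^\top\beta\right)^2,$$

where $n_g$ denotes the number of observations in cluster $g$ and $x_g$ the covariates shared by that cluster, which can also be written

$$\hat{\beta} = \underset{\beta}{\arg\min} \sum_{g}\sum_{i=1}^{n_g} \left(y_{i,g} - \bar{y}_g + \bar{y}_g - x_g^\top\beta\right)^2, \qquad \bar{y}_g = \frac{1}{n_g}\sum_{i=1}^{n_g} y_{i,g}.$$

Now, observe that

$$\sum_{i=1}^{n_g} \left(y_{i,g} - \bar{y}_g + \bar{y}_g - x_g^\top\beta\right)^2 = \sum_{i=1}^{n_g} \left(y_{i,g} - \bar{y}_g\right)^2 + n_g\left(\bar{y}_g - x_g^\top\beta\right)^2 + 2\left(\bar{y}_g - x_g^\top\beta\right)\sum_{i=1}^{n_g}\left(y_{i,g} - \bar{y}_g\right),$$

where the first term is independent of $\beta$ (and can be removed from the optimization program), and the term with cross-elements sums to 0. Hence,

$$\hat{\beta} = \underset{\beta}{\arg\min} \sum_{g} n_g\left(\bar{y}_g - x_g^\top\beta\right)^2 = \hat{\beta}^{(m)},$$

where $\hat{\beta}^{(m)}$ is the least square estimator of $\beta$ from Model (2), when weights $n_g$ are considered.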

- (ii)

In the proposition above, the equality should be understood as the equality between estimators. Hence we have the following corollary.

Corollary 2.

Proof.

Straightforward calculations lead to and .

- (i)

- LetFor the equality of variances, we have

- (ii)

- LetThe proof of the equality of variances is similar.
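The equivalence discussed in this subsection can be checked numerically. The following is a minimal sketch, assuming a single covariate that is constant within each cluster and entirely hypothetical data; the helper `wls_simple` is illustrative and not part of any package. It fits ordinary least squares on the individual observations and weighted least squares on the cluster averages, with weights equal to the cluster sizes.

```python
def wls_simple(xs, ys, ws=None):
    """Weighted least squares fit of y = b0 + b1 * x; returns (b0, b1)."""
    if ws is None:
        ws = [1.0] * len(xs)  # unweighted case: ordinary least squares
    sw = sum(ws)
    xbar = sum(w * x for w, x in zip(ws, xs)) / sw
    ybar = sum(w * y for w, y in zip(ws, ys)) / sw
    sxy = sum(w * (x - xbar) * (y - ybar) for w, x, y in zip(ws, xs, ys))
    sxx = sum(w * (x - xbar) ** 2 for w, x in zip(ws, xs))
    b1 = sxy / sxx
    return ybar - b1 * xbar, b1


# Hypothetical data: cluster-level covariate -> individual observations
clusters = {0.0: [1.2, 0.8, 1.1], 1.0: [2.9, 3.3], 2.0: [5.1, 4.7, 5.0, 5.4]}

# Micro-level fit: one row per individual observation
micro_x = [x for x, ys in clusters.items() for _ in ys]
micro_y = [y for ys in clusters.values() for y in ys]
micro_fit = wls_simple(micro_x, micro_y)

# Macro-level fit: one row per cluster (average), weighted by cluster size
macro_x = list(clusters)
macro_y = [sum(ys) / len(ys) for ys in clusters.values()]
macro_w = [len(ys) for ys in clusters.values()]
macro_fit = wls_simple(macro_x, macro_y, macro_w)

print(micro_fit, macro_fit)
```

Both fits return the same coefficients up to rounding error. Dropping the weights in the macro-level fit would in general give a different answer, which is why the weights matter for the equivalence.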

2.2. The Quasi-Poisson Regression

A similar result can be obtained in the context of Poisson regressions. A generalized linear model [23] is made up of a linear predictor $\eta_i = x_i^\top\beta$, a link function $g(\cdot)$ that describes how the expected value $\mu_i = E[Y_i]$ depends on this linear predictor, $g(\mu_i) = \eta_i$, and a variance function $V(\cdot)$ that describes how the variance depends on the expected value, $\mathrm{Var}[Y_i] = \varphi V(\mu_i)$, where $\varphi$ denotes the dispersion parameter. For the Poisson model, the variance is equal to the mean, i.e., $\varphi = 1$ and $V(\mu_i) = \mu_i$. This may be too restrictive for many actuarial illustrations, which often show more variation than given by expected values. We use the term over-dispersed for a model where the variance exceeds the expected value. A common way to deal with over-dispersion is a quasi-likelihood approach (see [23] for further discussion) where a model is characterized by its first two moments.

Consider either a Poisson regression model, or a quasi-Poisson one,

In the case of a Poisson regression,

and in the context of a quasi-Poisson regression,

with for a quasi-Poisson regression ( for over-dispersion). Here again, stacking observations within a cluster yield the following model (on the sum and not the average value, to have a valid interpretation with a Poisson distribution)

In the context of a Poisson regression,

and in the context of a quasi-Poisson regression,

with for a quasi-Poisson regression. Here again, those two models (“micro” and “macro”) are equivalent, in the sense that the following proposition holds.

Proposition 3.

Proof.

- (i)

- Maximum likelihood estimator of is the solution ofor equivalentlyWith offsets , , maximum likelihood estimator of is the solution (as previously, we can remove ) ofHence, , as (unique) solutions of the same system of equations.

- (ii)

- The sum of predicted values is☐
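The argument can be illustrated numerically. The sketch below is only an illustration under stated assumptions: a log link, an intercept plus one covariate that is constant within each cluster, hypothetical counts, and a hand-written Newton-Raphson routine (`fit_poisson` is not a library function). The micro-level fit uses one row per observation with a zero offset; the macro-level fit uses one row per cluster sum with an offset equal to the logarithm of the cluster size.

```python
import math


def fit_poisson(rows, iters=50):
    """Newton-Raphson for a log-link Poisson model with mean
    exp(offset + b0 + b1 * x); rows are (y, x, offset) triples."""
    total_y = sum(y for y, _, _ in rows)
    total_exposure = sum(math.exp(o) for _, _, o in rows)
    b0, b1 = math.log(total_y / total_exposure), 0.0  # intercept-only start
    for _ in range(iters):
        g0 = g1 = h00 = h01 = h11 = 0.0
        for y, x, o in rows:
            mu = math.exp(o + b0 + b1 * x)
            g0 += y - mu            # score, intercept
            g1 += (y - mu) * x      # score, slope
            h00 += mu               # Fisher information entries
            h01 += mu * x
            h11 += mu * x * x
        det = h00 * h11 - h01 * h01
        d0 = (h11 * g0 - h01 * g1) / det
        d1 = (h00 * g1 - h01 * g0) / det
        b0, b1 = b0 + d0, b1 + d1
        if abs(d0) + abs(d1) < 1e-12:
            break
    return b0, b1


# Hypothetical counts: cluster-level covariate -> individual observations
clusters = {0.0: [1, 2, 0], 1.0: [3, 2], 2.0: [5, 7, 6, 4]}

micro_rows = [(y, x, 0.0) for x, ys in clusters.items() for y in ys]
macro_rows = [(sum(ys), x, math.log(len(ys))) for x, ys in clusters.items()]

micro = fit_poisson(micro_rows)
macro = fit_poisson(macro_rows)

# Sum of fitted values over the whole portfolio, from the macro-level fit
fitted_total = sum(math.exp(o + macro[0] + macro[1] * x) for _, x, o in macro_rows)
print(micro, macro, fitted_total)
```

The two coefficient vectors agree up to rounding error because both fits solve the same score equations, and the fitted total reproduces the observed total, as expected for a log-link model with an intercept.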

Nevertheless, as we will see later on, the Corollary obtained in the context of a Gaussian linear model does not hold in the context of a quasi-Poisson regression.

Corollary 4.

Proof.

- (i)

- (ii)

- By using a similar argument, we have when n goes to infinity☐

In small or moderate-sized samples, it should be noted that and may be biased for and , respectively. Generally, this bias is negligible compared with the standard errors (see [24,25]).

In the quasi-Poisson micro-level model (from Model (7)), as discussed above, the estimator of is the solution of the quasi-score function

which implies . The classical Pearson estimator for the dispersion parameter is

Empirical evidence (see [26]) supports the use of the Pearson estimator for estimating φ because it is the most robust against the distributional assumption. In a similar way, in the quasi-Poisson macro-level model (from Model (10)), the estimator of the regression parameters is the solution of

which implies here also . The dispersion parameter is estimated by

Clearly, the two dispersion estimates generally differ, which leads to the following results.

Corollary 5.

Proof.

- (i)

- The property that variances are not equal is a direct consequence of classical results from the theory of generalized linear models (see [23]), since the covariance matrices of estimators are given byandwhen n goes to infinity. Thus, covariance matrices of estimators are asymptotically equal for the Poisson regression model but differ for the quasi-Poisson model because .

- (ii)

- Since the MLE and the QLE share the same asymptotic distribution (see [23]), the proof is similar to Corollary 4(ii).
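To make the role of the dispersion parameter concrete, the sketch below computes Pearson-type dispersion estimates at both levels. It relies on simplifying assumptions that are not taken from the paper: an intercept-only model (so that the fitted mean per payment is simply the overall average and one parameter is estimated), and hypothetical data.

```python
# Hypothetical payments, grouped by cluster
clusters = [[1, 2, 0], [3, 2], [5, 7, 6, 4]]

ys = [y for c in clusters for y in c]
n, G, p = len(ys), len(clusters), 1   # observations, clusters, parameters
mu = sum(ys) / n                      # fitted mean per payment (both levels)

# Micro level: Pearson residuals on individual payments
phi_micro = sum((y - mu) ** 2 / mu for y in ys) / (n - p)

# Macro level: Pearson residuals on cluster sums, fitted value n_g * mu
phi_macro = sum(
    (sum(c) - len(c) * mu) ** 2 / (len(c) * mu) for c in clusters
) / (G - p)

print(phi_micro, phi_macro)
```

The fitted means coincide at both levels, but the two dispersion estimates do not, which is the source of the difference in estimated variances for the quasi-Poisson model. In this toy example the clusters are heterogeneous, so the macro-level estimate is the larger of the two.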

2.3. Poisson Regression with Random Effect

In the micro-level model described by Equation (7), observations made for the same event (subject) at different periods are supposed to be independent. Within-subject correlation can be included in the model by adding random, or subject-specific, effects in the linear predictor. In the Poisson regression model with random intercept, the between-subject variation is modeled by a random intercept γ which represents the combined effects of all omitted covariates.

Let represent the sum of all observations from subject t, in the cluster g and

where is the identity matrix, and the T-dimensional Gaussian distribution with mean μ and covariance matrix Σ. Straightforward calculations lead to

Hence,

This last equation shows that the Poisson regression model with random intercept leads to an over-dispersed marginal distribution for the response variable. The maximum likelihood estimation of the parameters requires Laplace approximation and numerical integration (see Chapter 4 of [27] for more details). This model is a special case of the multilevel Poisson regression model, and estimation can be performed with various statistical software packages such as HLM, SAS, Stata and R (with package lme4).
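For reference, the over-dispersion can be made explicit with the standard moments of a Poisson variable mixed over a Gaussian random intercept. The following is a sketch in assumed notation: a single response $Y$ with linear predictor $x^\top\beta$ and a random intercept $\gamma$ with variance $\sigma^2$. The symbols $x$, $\beta$, $\sigma^2$ and $\mu$ are illustrative and need not match the notation of the model above.

```latex
\begin{align*}
Y \mid \gamma &\sim \mathrm{Poisson}\!\left(\exp\!\left(x^\top\beta + \gamma\right)\right),
  \qquad \gamma \sim \mathcal{N}(0, \sigma^2), \\
\mu := E[Y] &= E\!\left[E[Y \mid \gamma]\right]
  = \exp\!\left(x^\top\beta + \tfrac{1}{2}\sigma^2\right), \\
\mathrm{Var}[Y] &= E\!\left[\mathrm{Var}[Y \mid \gamma]\right]
  + \mathrm{Var}\!\left[E[Y \mid \gamma]\right]
  = \mu + \mu^2\left(e^{\sigma^2} - 1\right).
\end{align*}
```

Since $e^{\sigma^2} - 1 > 0$ whenever $\sigma^2 > 0$, the marginal variance exceeds the marginal mean, and the excess grows with the square of the mean.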

One may be interested in verifying the need for a source of between-subject variation. Statistically, this is equivalent to testing whether the variance of γ is zero. In this particular case, the null hypothesis places the variance on the boundary of the model parameter space, which complicates the evaluation of the asymptotic distribution of the classical likelihood ratio test (LRT) statistic. From the very general result of [28], it can be demonstrated (see [29]) that the asymptotic null distribution of the LRT statistic is a mixture of $\chi^2_0$ and $\chi^2_1$ as $n \to \infty$. In this case, obtaining an equivalent macro-level model is of little practical interest since the construction of the variance-covariance matrix would require knowledge of the individual (“micro”) data.
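As an illustration of the boundary issue, the p-value of the test can be computed from a mixture rather than from a plain chi-square distribution with one degree of freedom. The sketch below assumes the equal-weight (50:50) mixture of a point mass at zero and a chi-square with one degree of freedom, which is the usual result for a single variance component; the function name is hypothetical.

```python
import math


def lrt_pvalue_boundary(lrt):
    """P-value for testing a single variance component equal to zero,
    assuming a 50:50 mixture of chi2(0) and chi2(1) under the null."""
    if lrt <= 0.0:
        return 1.0
    # P(chi2_1 > x) = erfc(sqrt(x / 2)); the chi2(0) part contributes 0
    return 0.5 * math.erfc(math.sqrt(lrt / 2.0))


stat = 3.841458820694124          # 95% quantile of chi2(1)
p_mixture = lrt_pvalue_boundary(stat)
p_naive = math.erfc(math.sqrt(stat / 2.0))
print(p_mixture, p_naive)
```

Under this assumption the naive chi-square p-value is twice the mixture-based one, so ignoring the boundary makes the test conservative.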

3. Clustering and Loss Reserving Models

A loss reserving macro-level model is constructed from data summarized in a table called a run-off triangle. Aggregation is performed by occurrence and development periods (typically years). For occurrence period i, $i = 1, \ldots, I$, and for development period j, $j = 1, \ldots, J$, let $C_{i,j}$ and $Y_{i,j}$ represent the total cumulative paid amount and the incremental paid amount, respectively, with $C_{i,j} = \sum_{k=1}^{j} Y_{i,k}$.

where columns, rows and diagonals represent development, occurrence and calendar periods, respectively. Each incremental cell can be seen as a cluster stacking amounts paid in the same development period j for the occurrence period i. These payments come from M claims and let represent the sum of all observations from claims k in the cluster . It should be noted that all claims are not necessarily represented in each of the clusters.
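The relation between cumulative and incremental triangles can be sketched as follows. This is an illustration on a tiny hypothetical triangle, stored as a ragged list with one row per occurrence period; the function names are assumptions made for the example.

```python
def to_incremental(cumulative):
    """Convert a cumulative run-off triangle (ragged list of rows)
    into incremental payments, row by row."""
    return [
        [row[0]] + [row[j] - row[j - 1] for j in range(1, len(row))]
        for row in cumulative
    ]


def to_cumulative(incremental):
    """Inverse operation: running sums along development periods."""
    out = []
    for row in incremental:
        acc, cum_row = 0.0, []
        for value in row:
            acc += value
            cum_row.append(acc)
        out.append(cum_row)
    return out


# Hypothetical cumulative triangle: 3 occurrence periods
cumulative = [[100.0, 150.0, 165.0], [110.0, 165.0], [120.0]]
incremental = to_incremental(cumulative)

# Cells sharing the same calendar period lie on the same diagonal (i + j)
latest_diagonal = [row[-1] for row in incremental]
print(incremental, latest_diagonal)
```

Each incremental cell corresponds to one cluster in the sense used here, and the cells that remain to be predicted are those to the right of the last observed entry of each row.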

To calculate a best estimate for the reserve, the lower part of the triangle must be predicted and the total reserve amount is

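To quantify uncertainty in estimated claims reserve, we consider the mean square error of prediction (MSEP). Let $\hat{R}$ be a $\mathcal{D}$-measurable estimator for $E[R \mid \mathcal{D}]$ and a $\mathcal{D}$-measurable predictor for $R$, where $\mathcal{D}$ represents the set of observed clusters. The MSEP is

$$\mathrm{MSEP}_{R \mid \mathcal{D}}(\hat{R}) = E\left[(R - \hat{R})^2 \mid \mathcal{D}\right] = \mathrm{Var}[R \mid \mathcal{D}] + \left(E[R \mid \mathcal{D}] - \hat{R}\right)^2.$$

Independence between $R$ and $\mathcal{D}$ is assumed, so the equation is simplified as follows

$$\mathrm{MSEP}_{R \mid \mathcal{D}}(\hat{R}) = \mathrm{Var}[R] + \left(E[R] - \hat{R}\right)^2,$$

and the unconditional MSEP is

$$\mathrm{MSEP}_{R}(\hat{R}) = \mathrm{Var}[R] + E\left[\left(E[R] - \hat{R}\right)^2\right].$$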

3.1. The Quasi-Poisson Model for Reserves

3.1.1. Construction

From the theory presented in Subsection 2.2, we construct quasi-Poisson macro- and micro-level models for reserves. For both models, constitutive elements are defined in Table 1.

Table 1.

Quasi-Poisson macro- and micro-level models for reserve (). All clusters and all payments are independent.

As a direct consequence of Proposition 3, the best estimate for the total reserve amount is

where represents unobserved clusters. For both models, the Proposition 6 gives results for the unconditional MSEP.

Proposition 6.

In the quasi-Poisson macro-level model, the unconditional MSEP is given by

where and are defined by Equation (12). The unconditional MSEP for the quasi-Poisson micro-level model is similar with replaced by .

Proof.

The proof for the macro-level model is done in [25]. For the micro-level model, we have

Although is not an unbiased estimator of , the bias is generally of small order and by using the approximation for , we obtain

By using the fact that and the remark at the end of Subsection 2.2, we obtain

☐

Thus, the difference between the variability in macro- and micro-level models results from the difference between dispersion parameters. Define standardized residuals for both models

Direct calculations lead to

Thus, if the total number of payments () is greater than the value , then the micro-level Model (7) will lead to a greater precision for the best estimate of the total reserve amount and conversely. Adding one or more covariate(s) at the micro level will decrease the numerator of Ψ and will increase the interest of the micro-level model.

3.1.2. Illustration and Discussion

To illustrate these results, we consider the incremental run-off triangle from UK Motor Non-Comprehensive account (published by [30]) presented in Table 2 where each cell , , is assumed to be a cluster g, i.e., the value is the sum of independent payments. Simulations and computations were performed in R, using packages ChainLadder and gtools. The final reserve amount obtained from the Mack’s model [2] is $28,655,773.

Table 2.

Incremental run-off triangle for macro-level model (in 000’s).

Based on the construction detailed in Table 1, we consider 2 macro-level models

and 2 micro-level models

To create micro-level datasets from the "macro" one, we perform the following procedure:

- simulate the number of payments for each cluster assuming , ;

- for each cluster, simulate a vector of proportions assuming , ;

- for each cluster, define

- fit Model C and Model D; and

- calculate the best estimate and the MSEP of the reserve by using Proposition 6.
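The first three steps of this procedure can be sketched as follows. Several choices below are assumptions made for the illustration rather than details confirmed by the text: the number of payments per cluster is drawn from a Poisson distribution with mean θ and forced to be at least one, the proportions come from a symmetric Dirichlet distribution with unit concentration (obtained by normalizing Gamma draws), and the macro-level cell values are hypothetical, not those of Table 2.

```python
import math
import random


def rpois(lam, rng):
    """Poisson draw by Knuth's multiplication method (moderate lam only)."""
    limit, k, prod = math.exp(-lam), 0, rng.random()
    while prod > limit:
        k += 1
        prod *= rng.random()
    return k


def split_cluster(total, theta, rng):
    """Split one macro-level cell into individual payments."""
    n = max(1, rpois(theta, rng))   # assumption: at least one payment
    gammas = [rng.gammavariate(1.0, 1.0) for _ in range(n)]
    scale = sum(gammas)             # normalized Gamma(1, 1) ~ Dirichlet(1, ..., 1)
    return [total * g / scale for g in gammas]


rng = random.Random(2016)
# Hypothetical incremental cells, keyed by (occurrence, development) period
macro_cells = {(1, 1): 1000.0, (1, 2): 800.0, (2, 1): 1200.0}
micro = {
    cell: split_cluster(total, theta=10, rng=rng)
    for cell, total in macro_cells.items()
}

for cell, payments in micro.items():
    print(cell, len(payments), round(sum(payments), 6))
```

By construction, the simulated payments of each cluster add up to the observed macro-level amount, so the macro-level triangle is unchanged while the micro-level dataset varies from one simulation to the next.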

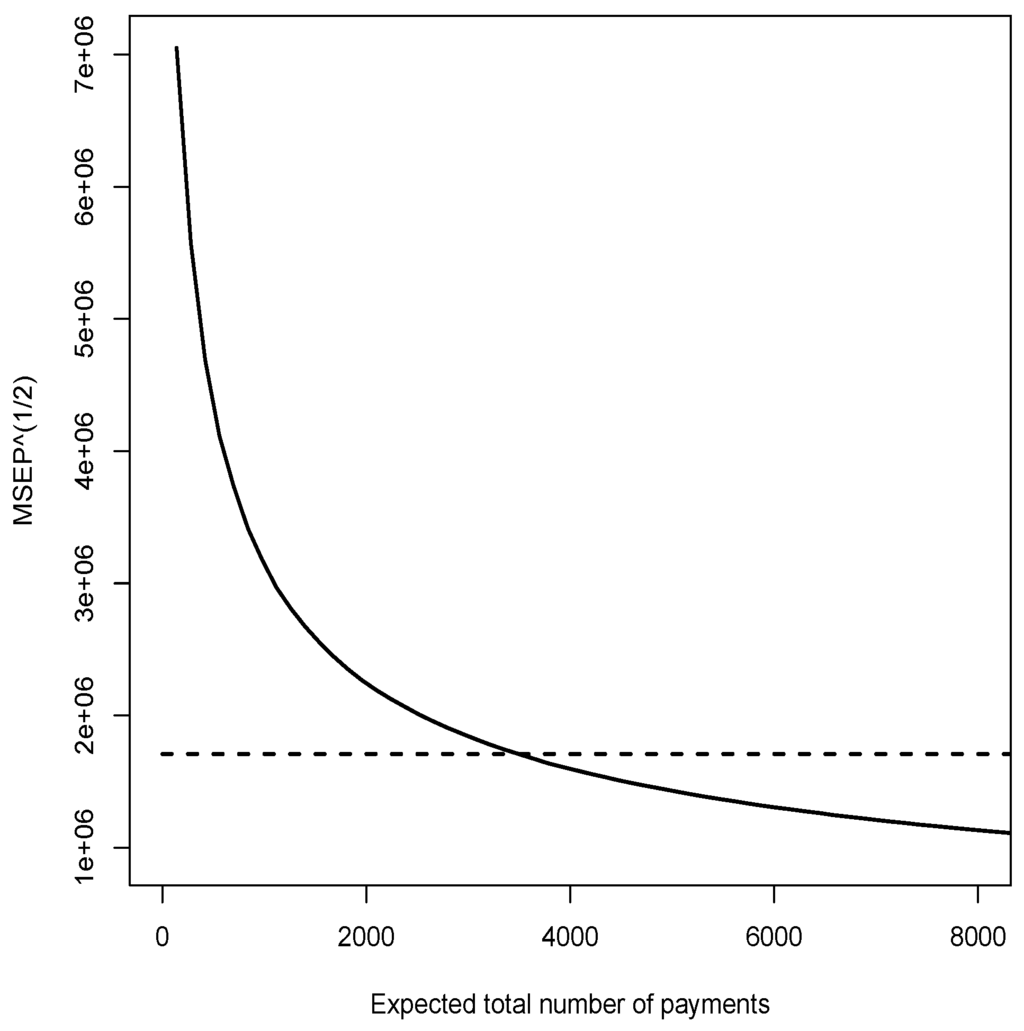

For each value of θ, we repeat this procedure 1000 times and we calculate the average best estimate and the average MSEP. Results are presented in Table 3 and Figure 1. For Poisson regression (Models A and C), results are similar, which is consistent with Corollary 4. For micro-level models, convergence of towards (11622) is fast. For quasi-Poisson regression (Models B and D), expected values are equal and Figure 1 shows as a function of the expected total number of payments for the portfolio. Above a certain level (close to 3400 here), accuracy of the “micro” approach exceeds the “macro”. Again, those results are consistent with Corollary 5. Here, we consider that the expected number of payments by cluster (θ) is constant but it would also be possible to consider a mixture model where , , and . This modification does not change the conclusions. Finally, a comparison of estimated MSEP for both Poisson and quasi-Poisson models confirms the presence of over-dispersion in the data.

Table 3.

Results.

Figure 1.

Square root of the mean square error of prediction obtained for Model D (solid line) and Model B (broken line) from simulated values for increasing expected number of payments for the portfolio.

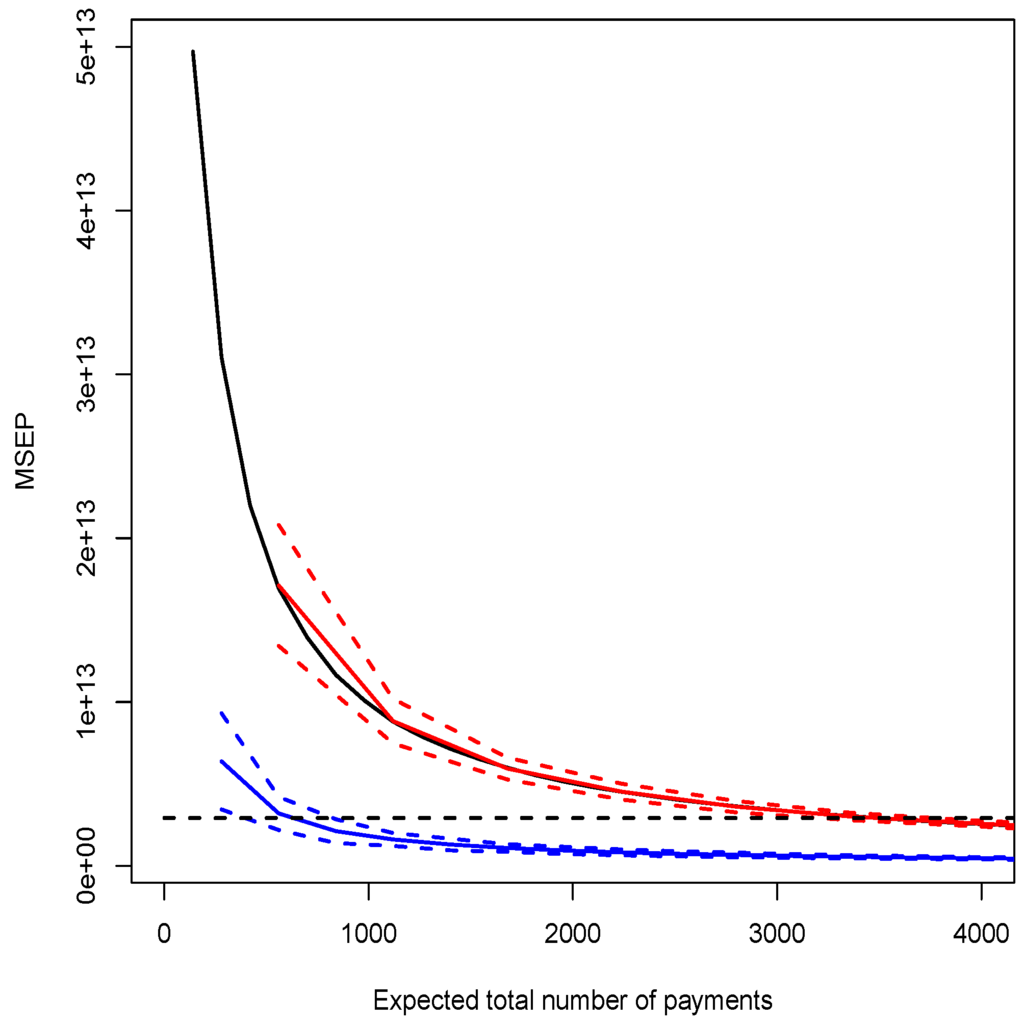

In order to illustrate the impact of adding a covariate at the micro-level, we define a quasi-Poisson micro-level model with a weakly correlated covariate (Model E) and with a strongly correlated covariate (Model F). Following a similar procedure, we obtain results presented in the bottom part of Table 3 and in Figure 2.

Figure 2.

Mean square error of prediction () obtained from simulated values as a function of the expected number of payments for Model E (red lines) and Model F (blue lines). For comparison purposes, the MSEP obtained for the Model D (solid black line) and the Model B (broken black line) are added.

As opposed to standard classical results on hierarchical models, the average of explanatory variable within a cluster () has not been added to the macro-level model (Model B), for several reasons,

- (i) it is impossible to compute that average without individual data;

- (ii) discrete explanatory variables are used in the micro-level model; and

- (iii) since claims reserve models have a predictive motivation, it is risky to project the value of an aggregated variable on future clusters.

With an explanatory variable weakly correlated with the response variable (Model E), results obtained with Models D and E are very close. As claimed by Proposition 6 and Equation (14), an explanatory variable highly correlated with the response variable will decrease the value of Ψ and lower the threshold above which the micro-level model is more accurate than the macro-level one.

The quasi-Poisson macro-level model (Model B) with maximum likelihood estimators leads to the same reserves as the chain-ladder algorithm and the Mack’s model (see [31]), assuming the clusters exposure, for , is one. To obtain similar results with a quasi-Poisson micro-level model (Model D), a similar assumption is necessary: exposure of each claim within cluster is . That assumption implies, on a micro level, that predicted individual payments are proportional to . That assumption has unfortunately no foundation.
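For readers unfamiliar with it, the chain-ladder algorithm referred to here can be sketched in a few lines. The code below is a minimal illustration on a tiny hypothetical cumulative triangle (not the data of Table 2), using volume-weighted development factors; the function name is an assumption made for the example.

```python
def chain_ladder_reserve(triangle):
    """Chain-ladder reserves from a cumulative run-off triangle given as a
    ragged list of rows (row i holds the observed cumulative payments)."""
    n_dev = len(triangle[0])
    factors = []
    for j in range(n_dev - 1):
        # Rows for which both development periods j and j + 1 are observed
        rows = [r for r in triangle if len(r) > j + 1]
        factors.append(sum(r[j + 1] for r in rows) / sum(r[j] for r in rows))
    reserves = []
    for row in triangle:
        ultimate = row[-1]
        for j in range(len(row) - 1, n_dev - 1):
            ultimate *= factors[j]      # project to the last development period
        reserves.append(ultimate - row[-1])
    return factors, reserves


# Hypothetical cumulative triangle: 3 occurrence periods
triangle = [[100.0, 150.0, 165.0], [110.0, 165.0], [120.0]]
factors, reserves = chain_ladder_reserve(triangle)
total_reserve = sum(reserves)
print(factors, reserves, total_reserve)
```

In this toy example the development factors are 1.5 and 1.1, and the reserves per occurrence period are 0, 16.5 and 78, up to floating-point rounding.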

In the Poisson and quasi-Poisson micro-level models (Model C and D), payments related to the same claim, in two different clusters are supposed to be non-correlated. As discussed in the previous Section, it is possible to include dependencies among payments for a given claim using a Poisson regression with random effects.

3.2. The Mixed Poisson Model for Reserves

3.2.1. Construction

From the results obtained in Subsection 2.3, it is possible to construct a micro-level model for the reserves that includes a random intercept. The latter makes it possible to model dependence between payments from a given claim. Note that it is not relevant to construct an aggregated model with random effects that could be compared with individual ones. In the context of claims reserves, the response is the sum of payments made for claim t within cluster g. The assumptions of that model (called Model G) are

Because of the hierarchical structure of the model, predictions can be derived from several philosophical perspectives (see [32], and references therein). In our example, we focus on the following unconditional predictions

and on the conditional ones, where the unknown component of claim t is predicted by the so-called best linear estimate (that minimizes the MSEP) (see [25])

It is then possible to compute the overall best estimate for the total amount of reserves.

3.2.2. Illustration and Discussion

We illustrate these results with the same macro-level dataset. In order to construct a micro-level model from Table 2, we follow a procedure similar to the one described in the previous section,

- 1-3. see previous section;

- 4. for each accident year, allocate randomly the source (t) of each payment;

- 5. fit model G; and

- 6. compute the best estimate and the MSEP of the reserve.

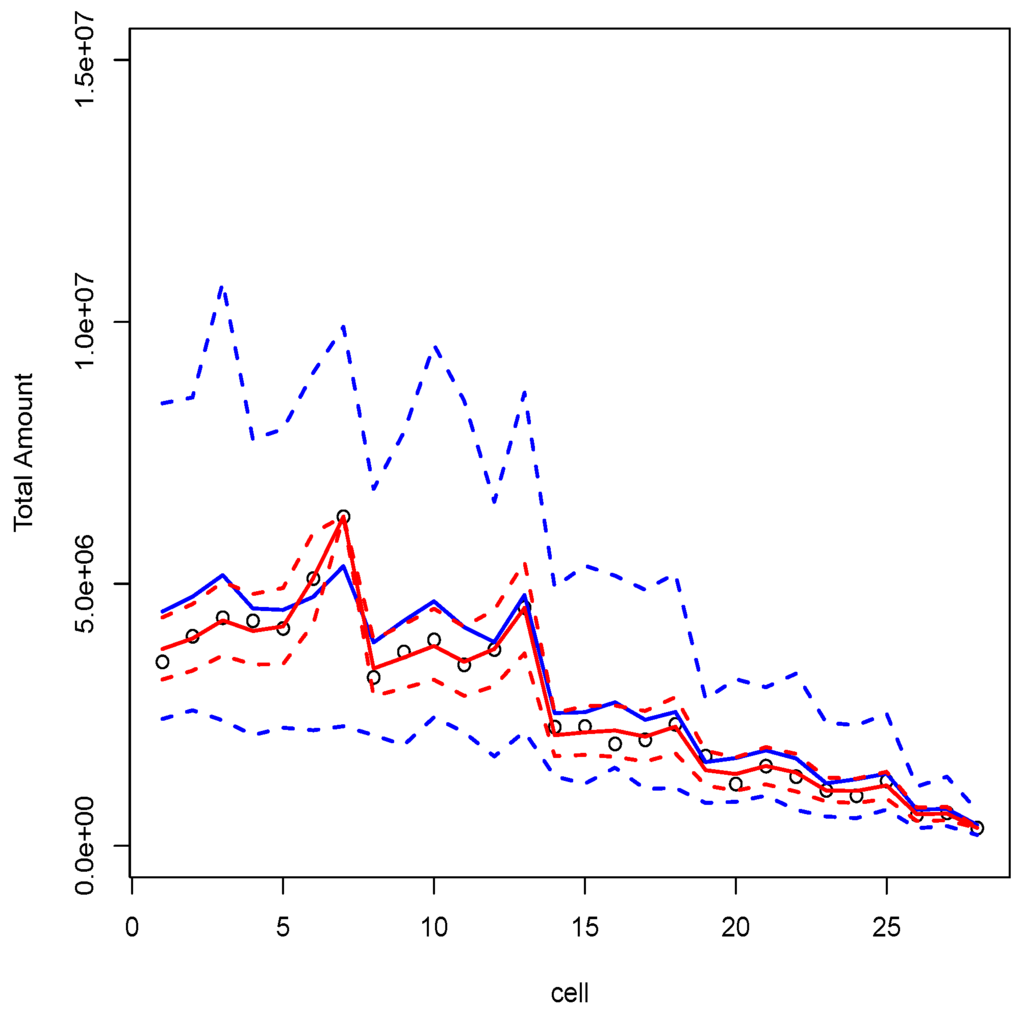

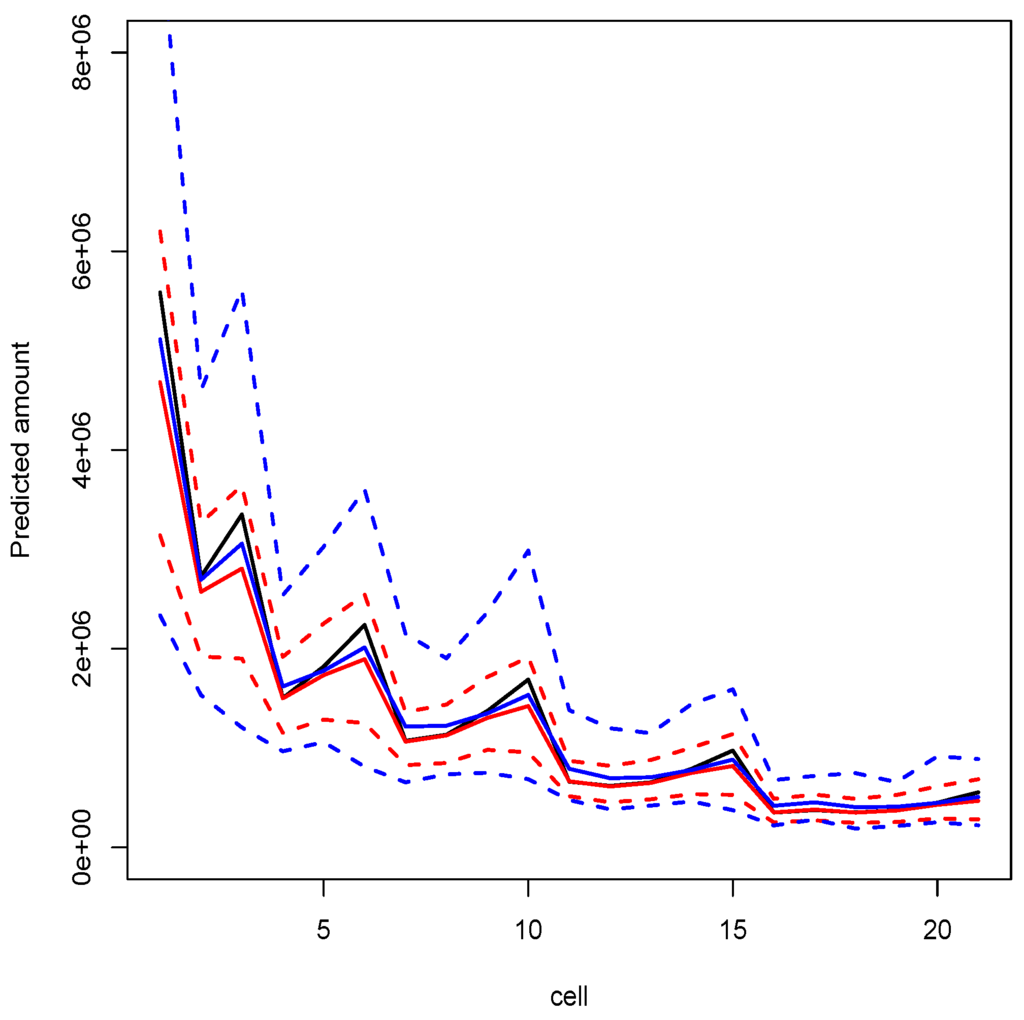

For a fixed value of θ, the procedure is repeated 1000 times. Various values were considered for θ (10, 25, 50, 100 and 250), and results were similar. In order to avoid heavy tables, only one value of θ is reported here. Simulations and computations were performed with R, relying on package lme4. Final results are reported in Table 4. In Figure 3 we can see predictions of the model on observed data, while in Figure 4 we can see predictions of the model for non-observed cells (with the same value of θ in both cases). For each simulation, a LRT is performed to check the non-nullity of the variance term and to confirm the necessity of including a random intercept in the model, which means that correlation among payments (related to the same claim) is positive. Observe that with the mixed model, the log-likelihood is approximated using numerical integration, which might bias p-values of the test. To avoid that, p-values have been confirmed using a bootstrap procedure (using package glmmML).

Table 4.

Numerical results for . Results for different values of θ are similar.

Figure 3.

Observed data (circles) with conditional predictions (red lines) and unconditional ones (blue lines) from Model G with .

Figure 4.

Predictions with the quasi-Poisson macro-level model (strong black line), with conditional predictions (red lines) and unconditional ones (blue lines) from Model G with .

4. Conclusions

In this article, we study equivalence (as well as non-equivalence) between Poisson and quasi-Poisson regression models, obtained on aggregated (so called macro-level) and non-aggregated (micro-level) datasets, in order to understand when micro-level models might outperform macro-level ones. Those models are used here in the context of estimating claims reserves. The uncertainty is quantified using the MSEP, as in standard macro-level approaches. We also investigate the impact of adding micro-level covariates in the model. Finally, we discuss the use of mixed Poisson regression, in the case of micro-level data, that might take into account possible dependence between observations in different clusters.

We illustrate theoretical results on simulated data, generated from cumulated payments, in R. A methodology that allows us to generate such micro-level datasets is described. In a first part, we compare results obtained with Poisson and quasi-Poisson regression, on micro- and macro-level datasets. That comparison reveals that in the context of a quasi-Poisson regression model, the expected number of claims plays a crucial role, since above a given threshold, the micro-level model is more robust than the macro-level one. Moreover, the presence (or absence) of covariates can affect this threshold.

In a second part, we analyse predictions obtained using a mixed Poisson regression model, i.e., a Poisson regression model with a random component that characterizes dependence among payments on the same claim, at different dates. The necessity of this random component is verified by using a likelihood-ratio test. That study reveals that such a dependence might have a non-negligible impact on predictions.

Of course, that study is only the first step and several directions for future research can be envisaged. For instance, as micro-level models are based on many more observations than macro-level ones, more robust estimation techniques, such as generalized method of moments, can be considered.

Acknowledgments

The authors thank two anonymous referees and the associate editor for useful comments which helped to improve the paper substantially. The first author received financial support from Natural Sciences and Engineering Research Council of Canada (NSERC) and the ACTINFO chair. The second author received financial support from Natural Sciences and Engineering Research Council of Canada (NSERC).

Author Contributions

Both authors contributed equally to this work.

Conflicts of Interest

The authors declare no conflict of interest.

References

- [1] E. Astesan. Les réserves techniques des sociétés d’assurances contre les accidents automobiles. Paris, France: Librairie générale de droit et de jurisprudence, 1938.

- [2] T. Mack. “Distribution-free calculation of the standard error of chain ladder reserve estimates.” ASTIN Bull. 23 (1993): 213–225.

- [3] P.D. England, and R.J. Verrall. “Stochastic claims reserving in general insurance.” Br. Actuar. J. 8 (2003): 443–518.

- [4] J. Van Eeghen. “Loss reserving methods.” In Surveys of Actuarial Studies 1. The Hague, The Netherlands: Nationale-Nederlanden, 1981.

- [5] G.C. Taylor. Claims Reserving in Non-Life Insurance. Amsterdam, The Netherlands: North-Holland, 1986.

- [6] J.E. Karlsson. “The expected value of IBNR claims.” Scand. Actuar. J. 1976 (1976): 108–110.

- [7] E. Arjas. “The claims reserving problem in nonlife insurance: Some structural ideas.” ASTIN Bull. 19 (1989): 139–152.

- [8] W.S. Jewell. “Predicting IBNYR events and delays I. Continuous time.” ASTIN Bull. 19 (1989): 25–55.

- [9] R. Norberg. “Prediction of outstanding liabilities in non-life insurance.” ASTIN Bull. 23 (1993): 95–115.

- [10] O. Hesselager. “A Markov model for loss reserving.” ASTIN Bull. 24 (1994): 183–193.

- [11] R. Norberg. “Prediction of outstanding liabilities II: Model variations and extensions.” ASTIN Bull. 29 (1999): 5–25.

- [12] O. Hesselager, and R.J. Verrall. “Reserving in Non-Life Insurance.” Available online: http://onlinelibrary.wiley.com (accessed on 29 February 2016).

- [13] X.B. Zhao, X. Zhou, and J.L. Wang. “Semiparametric model for prediction of individual claim loss reserving.” Insur. Math. Econ. 45 (2009): 1–8.

- [14] X. Zhao, and X. Zhou. “Applying copula models to individual claim loss reserving methods.” Insur. Math. Econ. 46 (2010): 290–299.

- [15] M. Pigeon, K. Antonio, and M. Denuit. “Individual loss reserving using paid-incurred data.” Insur. Math. Econ. 58 (2014): 121–131.

- [16] K. Antonio, and R. Plat. “Micro-level stochastic loss reserving for general insurance.” Scand. Actuar. J. 2014 (2014): 649–669.

- [17] X. Jin, and E.W. Frees. “Comparing Micro- and Macro-Level Loss Reserving Models.” Madison, WI, USA: Presentation at ARIA, 2015.

- [18] A. Johansson. “Claims Reserving on Macro- and Micro-Level.” Master’s Thesis, Royal Institute of Technology, Stockholm, Sweden, 2015.

- [19] J. Friedland. Estimating Unpaid Claims Using Basic Techniques. Arlington, VA, USA: Casualty Actuarial Society, 2010.

- [20] G.J. Van den Berg, and B. van der Klaauw. “Combining micro and macro unemployment duration data.” J. Econom. 102 (2001): 271–309.

- [21] F. Altissimo, B. Mojon, and P. Zaffaroni. Fast Micro and Slow Macro: Can Aggregation Explain the Persistence of Inflation? European Central Bank Working Papers; 2007, Volume 0729.

- [22] W.H. Greene. Econometric Analysis, 5th ed. Upper Saddle River, NJ, USA: Prentice Hall, 2003.

- [23] P. McCullagh, and J.A. Nelder. Generalized Linear Models. London, UK: Chapman & Hall, 1989.

- [24] G.M. Cordeiro, and P. McCullagh. “Bias correction in generalized linear models.” J. R. Stat. Soc. B 53 (1991): 629–643.

- [25] M. Wüthrich, and M. Merz. Stochastic Claims Reserving Methods. Hoboken, NJ, USA: Wiley Interscience, 2008.

- [26] M. Ruoyan. “Estimation of Dispersion Parameters in GLMs with and without Random Effects.” Stockholm University, 2004. Available online: http://www2.math.su.se/matstat/reports/serieb/2004/rep5/report.pdf (accessed on 29 February 2016).

- [27] T.A.B. Snijders, and R.J. Bosker. Multilevel Analysis: An Introduction to Basic and Advanced Multilevel Modeling. Thousand Oaks, CA, USA: Sage Publishing, 2012.

- [28] S.G. Self, and K.Y. Liang. “Asymptotic properties of maximum likelihood estimators and likelihood ratio tests under nonstandard conditions.” J. Am. Stat. Assoc. 82 (1987): 605–610.

- [29] D. Dunson. Random Effect and Latent Variable Model Selection. Lecture Notes in Statistics; New York, NY, USA: Springer-Verlag, 2008, Volume 192.

- [30] S. Christofides. “Regression models based on log-incremental payments.” Claims Reserv. Man. 2 (1997): D5.1–D5.53.

- [31] T. Mack, and G. Venter. “A comparison of stochastic models that reproduce chain ladder reserve estimates.” Insur. Math. Econ. 26 (2000): 101–107.

- [32] A. Skrondal, and S. Rabe-Hesketh. “Prediction in multilevel generalized linear models.” J. R. Stat. Soc. A 172 (2009): 659–687.

© 2016 by the authors; licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC-BY) license (http://creativecommons.org/licenses/by/4.0/).