1. Introduction

In the field of risk and insurance, probability theory and statistical models play important roles in various aspects, such as determining insurance pricing, assessing financial risks, and understanding claim size, claim count, and aggregate-loss distributions (Bahnemann 1996). Due to the stochastic nature of insurance data, the underlying data-generating mechanism is generally unknown, and choosing a suitable probability model to fit insurance data is a challenging problem. The statistical literature has considered different approaches to handling model uncertainty in such cases. For instance, one may use a statistical model with a larger number of parameters to increase flexibility and then test the significance of the added parameters. Another approach is to impose prior probability distributions on the model parameters in a Bayesian paradigm. While these methods offer benefits, they also have drawbacks. For instance, increasing the number of model parameters increases model complexity and complicates parameter estimation. Additionally, the roles of the added parameters in determining the distribution’s location, scale, and shape may become ambiguous. Furthermore, misspecification of the underlying probability model in fitting insurance data may lead to a substantial loss of efficiency in statistical analysis, inaccurate conclusions, and improper decisions. Therefore, it is crucial to identify the best-fitting model from a pool of candidate models and to understand the consequences of model misspecification in analyzing insurance risk data. This understanding helps mitigate the negative impact of model misspecification and leads to more reliable estimates of the characteristics under study. An interesting insight into the discrepancies arising from incorrect model identification followed by misspecified inferences can be found in Gustafson (2001).

The consequences of model misspecification have been explored across different domains in the statistical literature. Dumonceaux and Antle (1973) were the first to discuss the effects of model misspecification, framed as a statistical hypothesis testing problem, in analyzing the lifetimes of ball bearings using two competing probability models. Several subsequent studies have been devoted to model discrimination and misspecification between two competing models. An interesting application analyzing electromigration failure in the interconnects of integrated circuits (ICs) was presented by Basavalingappa et al. (2017). In addition to such experiments being conducted under accelerated conditions, with the observed failures subsequently transformed to represent lifetimes under normal operating conditions, failures in ICs occur only sporadically. Another study in the context of rare events, namely modeling high-magnitude earthquakes in the Himalayan region and the effects of model misspecification, can be found in Pasari (2018). The problem of model misspecification has also been explored for censored samples (Block and Leemis 2008; Dey and Kundu 2012). Other intriguing directions of model misspecification research include the analysis of strength distributions for brittle materials by Basu et al. (2009) and of one-shot device test data by Ling and Balakrishnan (2017). A comprehensive investigation into the impact of statistical model misspecification in insurance and risk management, particularly for censored, truncated, and outlier-laden insurance loss and claims data, is of practical interest. Following the insights of scholars such as White (1982), Chow (1984), and Fomby and Hill (2003), we examine the misspecification of statistical models and investigate the behavior of point estimators for key risk measures.

Before conducting statistical inference (such as estimation, prediction, or hypothesis testing) on insurance data, it is often assumed that the data originate from a specific parametric probability model on which the analysis is based. A vast array of probability distributions is available in the literature for fitting insurance data. For instance, distributions such as the gamma, log-gamma, lognormal, and Weibull are commonly employed to model insurance claim size, while the Poisson, negative binomial, and Delaporte distributions are often used to model insurance claim counts (Brazauskas and Kleefeld 2011; Hewitt and Lefkowitz 1979; Rolski et al. 1999; Zelinková 2015). However, in practical applications, the underlying probability distributions of the various risk elements and the stochastic mechanisms that generate loss and claims data are often unknown. Additionally, the probability model may be misspecified, and insurance datasets may be contaminated or contain outliers.

Broadly, an insurance company encounters two types of claim variables. One is the size of the payout in monetary terms, commonly referred to as the claim severity (size) variable, and the other is the number of claims (also known as the claim frequency) arising in a given period (Loss Data Analytics Core Team 2020). In this study, our interest lies in exploring the risks associated with the amount paid for a claim, that is, the claim size. An important characteristic of a claim severity model is its ability to represent the worst potential loss, due to adverse events such as natural disasters, accidents, or other unforeseen circumstances, that an insurer might experience over a specified duration at a certain confidence level $p$; this quantity is commonly referred to as Value-at-Risk (VaR) (Jorion 2006, chap. 5; Christoffersen 2012). Thus, VaR helps assess the potential financial impact of extreme but plausible events over a specific time horizon. By quantifying the loss threshold that is not exceeded at a given confidence level (e.g., 95% or 99%), insurers can better understand their exposure to risk and make informed decisions regarding capital reserves, reinsurance arrangements, and pricing strategies. Let $X$ be the random variable representing the loss size. For a pre-specified value $p \in (0, 1)$ (e.g., $p = 0.95$), a general definition of VaR with confidence level $p$ is $\mathrm{VaR}_p(X) = \inf\{x \in \mathbb{R} : \Pr(X \le x) \ge p\}$. Thus, for a confidence level of $p$, the VaR can be interpreted as the threshold loss such that the probability of incurring a loss greater than this amount is at most $1 - p$. If the loss variable $X$ has CDF $F(x; \boldsymbol{\theta})$ with parameter vector $\boldsymbol{\theta}$, then its quantile function is denoted by $F^{-1}(u; \boldsymbol{\theta}) = \inf\{x : F(x; \boldsymbol{\theta}) \ge u\}$, $0 < u < 1$ (Tse 2023). Therefore, the VaR with confidence level $p$ corresponds to the $p$-quantile of the loss distribution, i.e., $\mathrm{VaR}_p(\boldsymbol{\theta}) = F^{-1}(p; \boldsymbol{\theta})$, for a given time horizon.
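As a small illustration of the definition $\mathrm{VaR}_p(X) = \inf\{x : \Pr(X \le x) \ge p\}$, the Python sketch below applies it to the empirical CDF of a sample, which yields a simple nonparametric VaR estimate. The function name `empirical_var` and the toy losses are hypothetical and are not part of the study.

```python
import math


def empirical_var(losses, p):
    """Empirical VaR_p: the smallest observed loss x with F_n(x) >= p.

    The empirical CDF F_n equals k/n at the k-th order statistic, so the
    infimum in VaR_p = inf{x : F_n(x) >= p} is the ceil(n*p)-th order statistic.
    """
    if not 0.0 < p < 1.0:
        raise ValueError("p must lie in (0, 1)")
    xs = sorted(losses)
    # A small tolerance guards against floating-point error in n * p.
    k = max(1, math.ceil(len(xs) * p - 1e-9))
    return xs[k - 1]


losses = list(range(1, 101))          # toy losses 1, 2, ..., 100
print(empirical_var(losses, 0.95))    # -> 95
print(empirical_var(losses, 0.99))    # -> 99
```

The parametric VaR formulas in Section 2 replace the empirical CDF with a fitted $F(x; \boldsymbol{\theta})$, which is what makes the tail estimate depend on the chosen model.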

In the context of the importance of selecting a suitable distribution for analyzing insurance data, Yu (2020) considered six parametric distributions for modeling and analyzing operational loss data related to external fraud in the retail banking branches of major commercial banks in China from 2009 to 2015, and observed that different distributional assumptions yielded significantly different VaR estimates, leading to inconsistent conclusions. As emphasized by Wang et al. (2020), selecting an appropriate claim severity distribution is crucial, especially in the presence of extreme values.

Hogg and Klugman (1984) investigated claim severities in non-life insurance, such as those resulting from hurricane damage or automobile accidents, and noted their tendency to be heavy-tailed and skewed. Various heavy-tailed as well as regular models, including the gamma, lognormal, log-gamma, Weibull, Pareto, and log-logistic distributions, have been employed in modeling non-life insurance data (see, for example, Kleiber and Kotz 2003; Klugman et al. 2012, and the references cited therein). Additionally, when claim sizes are large, settlements are often delayed due to case-by-case handling, which necessitates accounting for inflation, as it can contaminate claim sizes (Hogg and Klugman 1984; Kaas et al. 2008). Hence, a robust estimation procedure is required to analyze claim severities.

In this paper, we aim to examine the effects of model misspecification on modeling insurance data. Since the underlying data-generating mechanism of insurance data is often unknown, and such data frequently contain influential observations and/or outliers, we propose two robust weighted estimation criteria based on likelihood and divergence measures. We then compare the performance of the proposed methods through Monte Carlo simulation. Our goal is to advance the understanding of model fitting and analysis for insurance and risk data, as well as the related inferential methods, and to help both practicing and academic actuaries better understand the effects and consequences of model misspecification when model uncertainty is present. With an emphasis on practical implementation and pedagogical clarity, this paper provides accessible and robust tools for practitioners and educators in actuarial science and risk analysis.

The rest of this paper is organized as follows. Section 2 provides an overview of the probability models used in this study, along with a brief review of their applications to non-life insurance claim size data. In Section 3, we discuss the estimation of model parameters and VaR using the likelihood method and the density power divergence (DPD) method. Section 4 introduces the rationale for the proposed data-driven model ranking and selection approach based on likelihood and DPD measures, followed by a Monte Carlo simulation study in Section 5 that details the performance of the proposed procedures in both the presence and absence of data contamination. Section 6 presents two real data analyses to illustrate the proposed model ranking and selection method. Finally, in Section 8, we provide concluding remarks and directions for future work.

2. Probability Models for Claim Severity

In this section, we consider six commonly used probability models in insurance and finance, namely the Fisk, Fréchet, Lomax, lognormal, paralogistic, and Weibull distributions, to model the severity of claims for non-life insurance data. For notational convenience, we denote these six models as $M_j$, $j = 1, \ldots, 6$, in the remainder of the article, where $M_1, M_2, M_3, M_4, M_5$, and $M_6$ represent the Fisk, Fréchet, Lomax, lognormal, paralogistic, and Weibull distributions, respectively. The corresponding probability density functions (PDFs), cumulative distribution functions (CDFs), and VaR values of model $M_j$ with parameter vector $\boldsymbol{\theta}_j$ are denoted as $f_j(x; \boldsymbol{\theta}_j)$, $F_j(x; \boldsymbol{\theta}_j)$, and $\mathrm{VaR}_{j,p}(\boldsymbol{\theta}_j)$ ($j = 1, \ldots, 6$), respectively. Although we primarily consider six probability distributions in this paper, the methodologies developed can be applied to any set of candidate models with different probability distributions. Moreover, the methodologies can be extended to candidate statistical models with different numbers of parameters by suitably penalizing models with more parameters based on the practitioner’s preference and the parsimony principle. Additionally, the methodologies discussed here can be extended directly to truncated probability distributions, which are often more appropriate for claims subject to deductibles or policy limits.

2.1. Fisk Distribution

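The Fisk distribution was originally proposed by Fisk (1961) as a limiting form of the Champernowne distribution (Champernowne 1952) in connection with modeling income. A random variable $X$ following a Fisk distribution (denoted as $X \sim \mathrm{Fisk}(\sigma, \alpha)$) has PDF, CDF, and VaR

$$f_1(x; \boldsymbol{\theta}_1) = \frac{(\alpha/\sigma)(x/\sigma)^{\alpha - 1}}{\left[1 + (x/\sigma)^{\alpha}\right]^{2}}, \quad x > 0,$$

$$F_1(x; \boldsymbol{\theta}_1) = \frac{1}{1 + (x/\sigma)^{-\alpha}}, \quad x > 0,$$

$$\mathrm{VaR}_{1,p}(\boldsymbol{\theta}_1) = \sigma \left(\frac{p}{1 - p}\right)^{1/\alpha},$$

respectively, where $\boldsymbol{\theta}_1 = (\sigma, \alpha)^{\top}$ is the parameter vector with $\alpha > 0$ as the shape parameter and $\sigma > 0$ representing the median of the distribution.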
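A quick numerical check of the closed-form Fisk VaR is to confirm that the CDF evaluated at $\mathrm{VaR}_{1,p}$ returns $p$, and that $p = 0.5$ returns the median. The Python sketch below assumes the median-shape parameterization $F(x) = [1 + (x/\sigma)^{-\alpha}]^{-1}$; the function names and parameter values are illustrative choices, not taken from the study.

```python
def fisk_cdf(x, sigma, alpha):
    """Fisk (log-logistic) CDF, assuming median sigma > 0 and shape alpha > 0."""
    if x <= 0:
        return 0.0
    return 1.0 / (1.0 + (x / sigma) ** (-alpha))


def fisk_var(p, sigma, alpha):
    """Fisk VaR_p = sigma * (p / (1 - p)) ** (1 / alpha)."""
    return sigma * (p / (1.0 - p)) ** (1.0 / alpha)


sigma, alpha = 2.0, 3.0
print(fisk_var(0.5, sigma, alpha))             # the median, sigma
for p in (0.95, 0.99):
    q = fisk_var(p, sigma, alpha)
    print(p, q, fisk_cdf(q, sigma, alpha))     # CDF at VaR_p recovers p
```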

The Fisk distribution is also known as the log-logistic distribution because its logarithm follows a logistic distribution; equivalently, it is obtained by exponentiating a logistic random variable. The Fisk distribution offers insight into the distribution of income sizes, derived from reasoning about income-generation processes (Singh and Maddala 1976). In the context of income inequality, the Fisk distribution provides a mathematical representation of the unequal distribution of income. For more details on income distribution and income inequality, readers may refer to Kakwani (1980) and Kakwani and Son (2022).

Buch-Larsen et al. (2005) discussed the suitability of the Fisk distribution for modeling non-life insurance data, since its heavy-tailed nature allows it to accommodate both small and large claim sizes, which is otherwise a challenge for regular models.

2.2. Fréchet Distribution

The Fréchet distribution, named after the mathematician Maurice René Fréchet, arises as one of the limiting distributions of suitably normalized sample maxima in extreme value theory. It is also known as the inverse Weibull distribution and has various applications in economics, hydrology, and meteorology for modeling extreme events or phenomena. It is often employed to describe the distribution of extreme values in a dataset, such as maximum wind speeds, flood levels, or income levels (Kotz and Nadarajah 2000).

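A random variable $X$ follows a Fréchet distribution (denoted as $X \sim \text{Fréchet}(\sigma, \alpha)$) with PDF, CDF, and VaR

$$f_2(x; \boldsymbol{\theta}_2) = \frac{\alpha}{\sigma}\left(\frac{x}{\sigma}\right)^{-\alpha - 1} \exp\left[-\left(\frac{x}{\sigma}\right)^{-\alpha}\right], \quad x > 0,$$

$$F_2(x; \boldsymbol{\theta}_2) = \exp\left[-\left(\frac{x}{\sigma}\right)^{-\alpha}\right], \quad x > 0,$$

$$\mathrm{VaR}_{2,p}(\boldsymbol{\theta}_2) = \sigma\left(-\ln p\right)^{-1/\alpha},$$

respectively, where $\boldsymbol{\theta}_2 = (\sigma, \alpha)^{\top}$ is a parameter vector, $\sigma > 0$ is the scale parameter, and $\alpha > 0$ is the shape parameter.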
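Since $-\ln p \approx 1 - p$ as $p \to 1$, the Fréchet VaR grows roughly like $\sigma (1-p)^{-1/\alpha}$, so small shape values produce very large extreme quantiles. The Python sketch below, assuming the scale-shape form $F(x) = \exp[-(x/\sigma)^{-\alpha}]$, checks the round trip and shows this effect; names and values are illustrative.

```python
import math


def frechet_cdf(x, sigma, alpha):
    """Frechet CDF exp(-(x / sigma) ** (-alpha)) for x > 0 (assumed scale sigma, shape alpha)."""
    if x <= 0:
        return 0.0
    return math.exp(-((x / sigma) ** (-alpha)))


def frechet_var(p, sigma, alpha):
    """Frechet VaR_p = sigma * (-ln p) ** (-1 / alpha), the inverse of the CDF above."""
    return sigma * (-math.log(p)) ** (-1.0 / alpha)


# Smaller shape values give a heavier tail and a much larger 99% quantile.
for alpha in (1.0, 2.0, 4.0):
    q = frechet_var(0.99, sigma=1.0, alpha=alpha)
    print(alpha, round(q, 3), round(frechet_cdf(q, 1.0, alpha), 6))
```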

2.3. Lomax Distribution

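The Lomax distribution, introduced by K. S. Lomax (1954), is commonly employed in modeling income distributions, insurance claim sizes, and other phenomena in which observations are bounded from below and exhibit heavy-tailed behavior. This distribution offers insights into extreme events and tail behavior, making it a valuable tool in various analytical and modeling contexts. A random variable $X$ has a Lomax distribution (denoted as $X \sim \mathrm{Lomax}(\sigma, \alpha)$) with PDF, CDF, and VaR

$$f_3(x; \boldsymbol{\theta}_3) = \frac{\alpha}{\sigma}\left(1 + \frac{x}{\sigma}\right)^{-(\alpha + 1)}, \quad x > 0,$$

$$F_3(x; \boldsymbol{\theta}_3) = 1 - \left(1 + \frac{x}{\sigma}\right)^{-\alpha}, \quad x > 0,$$

$$\mathrm{VaR}_{3,p}(\boldsymbol{\theta}_3) = \sigma\left[(1 - p)^{-1/\alpha} - 1\right],$$

respectively, where $\boldsymbol{\theta}_3 = (\sigma, \alpha)^{\top}$ is a parameter vector, $\sigma > 0$ is the scale parameter, and $\alpha > 0$ is the shape parameter.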
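The Lomax quantile grows like $(1-p)^{-1/\alpha}$, so for large $p$ each tenfold reduction in $1 - p$ multiplies the VaR by roughly $10^{1/\alpha}$; this polynomial tail is what makes the model attractive for large claims. The Python sketch below assumes the scale-shape form $F(x) = 1 - (1 + x/\sigma)^{-\alpha}$ and uses illustrative parameter values.

```python
def lomax_cdf(x, sigma, alpha):
    """Lomax CDF 1 - (1 + x / sigma) ** (-alpha) for x > 0 (assumed scale sigma, shape alpha)."""
    if x <= 0:
        return 0.0
    return 1.0 - (1.0 + x / sigma) ** (-alpha)


def lomax_var(p, sigma, alpha):
    """Lomax VaR_p = sigma * ((1 - p) ** (-1 / alpha) - 1)."""
    return sigma * ((1.0 - p) ** (-1.0 / alpha) - 1.0)


sigma, alpha = 1000.0, 2.5
for p in (0.95, 0.99, 0.999):
    q = lomax_var(p, sigma, alpha)
    print(p, round(q, 1), round(lomax_cdf(q, sigma, alpha), 6))
```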

2.4. Lognormal Distribution

The lognormal distribution is often used to model phenomena in which the logarithm of the variable of interest is normally distributed, such as stock prices, income levels, or the sizes of biological populations. Mathematically, if $\ln X$ is normally distributed, then the distribution of $X$ is said to be lognormal. It is particularly useful for variables that are inherently positive and right-skewed, as the lognormal distribution naturally accommodates these characteristics. The lognormal distribution is also known as the Galton distribution. For more details on the lognormal distribution, one may refer to Chapter 14 of Johnson et al. (1994), the book by Crow and Shimizu (1988), and the references therein.

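The PDF, CDF, and VaR of a lognormal variate $X$ (denoted as $X \sim \mathrm{LN}(\mu, \sigma)$) are

$$f_4(x; \boldsymbol{\theta}_4) = \frac{1}{x \sigma \sqrt{2\pi}} \exp\left[-\frac{(\ln x - \mu)^2}{2\sigma^2}\right], \quad x > 0,$$

$$F_4(x; \boldsymbol{\theta}_4) = \Phi\left(\frac{\ln x - \mu}{\sigma}\right), \quad x > 0,$$

$$\mathrm{VaR}_{4,p}(\boldsymbol{\theta}_4) = \exp\left[\mu + \sigma \Phi^{-1}(p)\right],$$

respectively, where $\boldsymbol{\theta}_4 = (\mu, \sigma)^{\top}$ is a parameter vector with $\mu \in \mathbb{R}$ as the scale parameter (on the logarithmic scale), $\sigma > 0$ as the shape parameter, and $\Phi(\cdot)$ and $\Phi^{-1}(\cdot)$ representing the CDF and inverse CDF (quantile function) of the standard normal distribution.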
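The lognormal VaR only needs the standard normal quantile $\Phi^{-1}(p)$, which Python's standard library provides through `statistics.NormalDist.inv_cdf`. The sketch below assumes the $(\mu, \sigma)$ parameterization on the log scale, checks that $p = 0.5$ gives the median $e^{\mu}$, and confirms the CDF round trip; parameter values are illustrative.

```python
import math
from statistics import NormalDist


def lognormal_cdf(x, mu, sigma):
    """Lognormal CDF Phi((ln x - mu) / sigma) for x > 0."""
    if x <= 0:
        return 0.0
    return NormalDist(mu, sigma).cdf(math.log(x))


def lognormal_var(p, mu, sigma):
    """Lognormal VaR_p = exp(mu + sigma * Phi^{-1}(p))."""
    return math.exp(mu + sigma * NormalDist().inv_cdf(p))


mu, sigma = 1.0, 0.8
print(lognormal_var(0.5, mu, sigma), math.exp(mu))   # median equals e^mu
q99 = lognormal_var(0.99, mu, sigma)
print(q99, lognormal_cdf(q99, mu, sigma))            # CDF at VaR_0.99 recovers 0.99
```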

2.5. Paralogistic Distribution

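The paralogistic distribution is a statistical distribution used primarily in econometrics, finance, and risk management and is characterized by its flexibility in modeling skewed or heavy-tailed data. As demonstrated by Kleiber and Kotz (2003), the paralogistic distribution can be applied to the economic modeling of wealth and income. It allows for asymmetry and heavier tails, which provides flexibility in capturing the tail behavior of the data.

A random variable $X$ has a paralogistic distribution (denoted as $X \sim \mathrm{Paralogistic}(\sigma, \alpha)$) with PDF, CDF, and VaR

$$f_5(x; \boldsymbol{\theta}_5) = \frac{\alpha^2 (x/\sigma)^{\alpha}}{x\left[1 + (x/\sigma)^{\alpha}\right]^{\alpha + 1}}, \quad x > 0,$$

$$F_5(x; \boldsymbol{\theta}_5) = 1 - \left[1 + (x/\sigma)^{\alpha}\right]^{-\alpha}, \quad x > 0,$$

$$\mathrm{VaR}_{5,p}(\boldsymbol{\theta}_5) = \sigma\left[(1 - p)^{-1/\alpha} - 1\right]^{1/\alpha},$$

respectively, where $\boldsymbol{\theta}_5 = (\sigma, \alpha)^{\top}$ is a parameter vector, $\sigma > 0$ is the scale parameter, and $\alpha > 0$ is the shape parameter.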
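The paralogistic VaR follows by solving $1 - [1 + (x/\sigma)^{\alpha}]^{-\alpha} = p$ for $x$. With $\alpha = 1$ the model reduces to a Lomax model with shape 1, whose VaR is $\sigma[(1-p)^{-1} - 1]$, which gives a convenient sanity check. The Python sketch below assumes this scale-shape form; names and values are illustrative.

```python
def paralogistic_cdf(x, sigma, alpha):
    """Paralogistic CDF 1 - (1 + (x / sigma) ** alpha) ** (-alpha) for x > 0 (assumed form)."""
    if x <= 0:
        return 0.0
    return 1.0 - (1.0 + (x / sigma) ** alpha) ** (-alpha)


def paralogistic_var(p, sigma, alpha):
    """Solve F(x) = p: x = sigma * ((1 - p) ** (-1 / alpha) - 1) ** (1 / alpha)."""
    return sigma * ((1.0 - p) ** (-1.0 / alpha) - 1.0) ** (1.0 / alpha)


for p in (0.95, 0.99):
    q = paralogistic_var(p, sigma=2.0, alpha=1.5)
    print(p, round(q, 3), round(paralogistic_cdf(q, 2.0, 1.5), 6))
# alpha = 1 matches the Lomax(sigma, 1) quantile: 2 * (1 / 0.25 - 1) = 6
print(paralogistic_var(0.75, 2.0, 1.0))
```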

2.6. Weibull Distribution

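A random variable $X$ is said to follow a Weibull distribution (denoted as $X \sim \mathrm{Weibull}(\sigma, \alpha)$) with PDF, CDF, and VaR

$$f_6(x; \boldsymbol{\theta}_6) = \frac{\alpha}{\sigma}\left(\frac{x}{\sigma}\right)^{\alpha - 1} \exp\left[-\left(\frac{x}{\sigma}\right)^{\alpha}\right], \quad x > 0,$$

$$F_6(x; \boldsymbol{\theta}_6) = 1 - \exp\left[-\left(\frac{x}{\sigma}\right)^{\alpha}\right], \quad x > 0,$$

$$\mathrm{VaR}_{6,p}(\boldsymbol{\theta}_6) = \sigma\left[-\ln(1 - p)\right]^{1/\alpha},$$

respectively, where $\boldsymbol{\theta}_6 = (\sigma, \alpha)^{\top}$ is a parameter vector, $\sigma > 0$ is the scale parameter, and $\alpha > 0$ is the shape parameter.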
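For the Weibull model, $\alpha = 1$ gives the exponential distribution with $\mathrm{VaR}_p = -\sigma \ln(1-p)$, while $\alpha < 1$ gives a tail heavier than exponential. The Python sketch below assumes the scale-shape form above and uses `math.log1p` and `math.expm1`, which stay accurate when their arguments are small; the parameter values are illustrative.

```python
import math


def weibull_cdf(x, sigma, alpha):
    """Weibull CDF 1 - exp(-(x / sigma) ** alpha) for x > 0 (assumed scale sigma, shape alpha)."""
    if x <= 0:
        return 0.0
    return -math.expm1(-((x / sigma) ** alpha))


def weibull_var(p, sigma, alpha):
    """Weibull VaR_p = sigma * (-ln(1 - p)) ** (1 / alpha)."""
    return sigma * (-math.log1p(-p)) ** (1.0 / alpha)


for alpha in (0.7, 1.0, 2.0):
    q = weibull_var(0.99, sigma=1.0, alpha=alpha)
    print(alpha, round(q, 3), round(weibull_cdf(q, 1.0, alpha), 6))
```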

3. Motivation and Estimation of Model Parameters

Given the pivotal role of statistical distributions in modeling insurance and claims data, this study aims to investigate the implications and consequences of model misspecification and offer practical and transparent solutions to mitigate its adverse effects. In this section, we use the real dataset

dataCar from the

insuranceData package (

Wolny-Dominiak and Trzesiok 2014) in R (

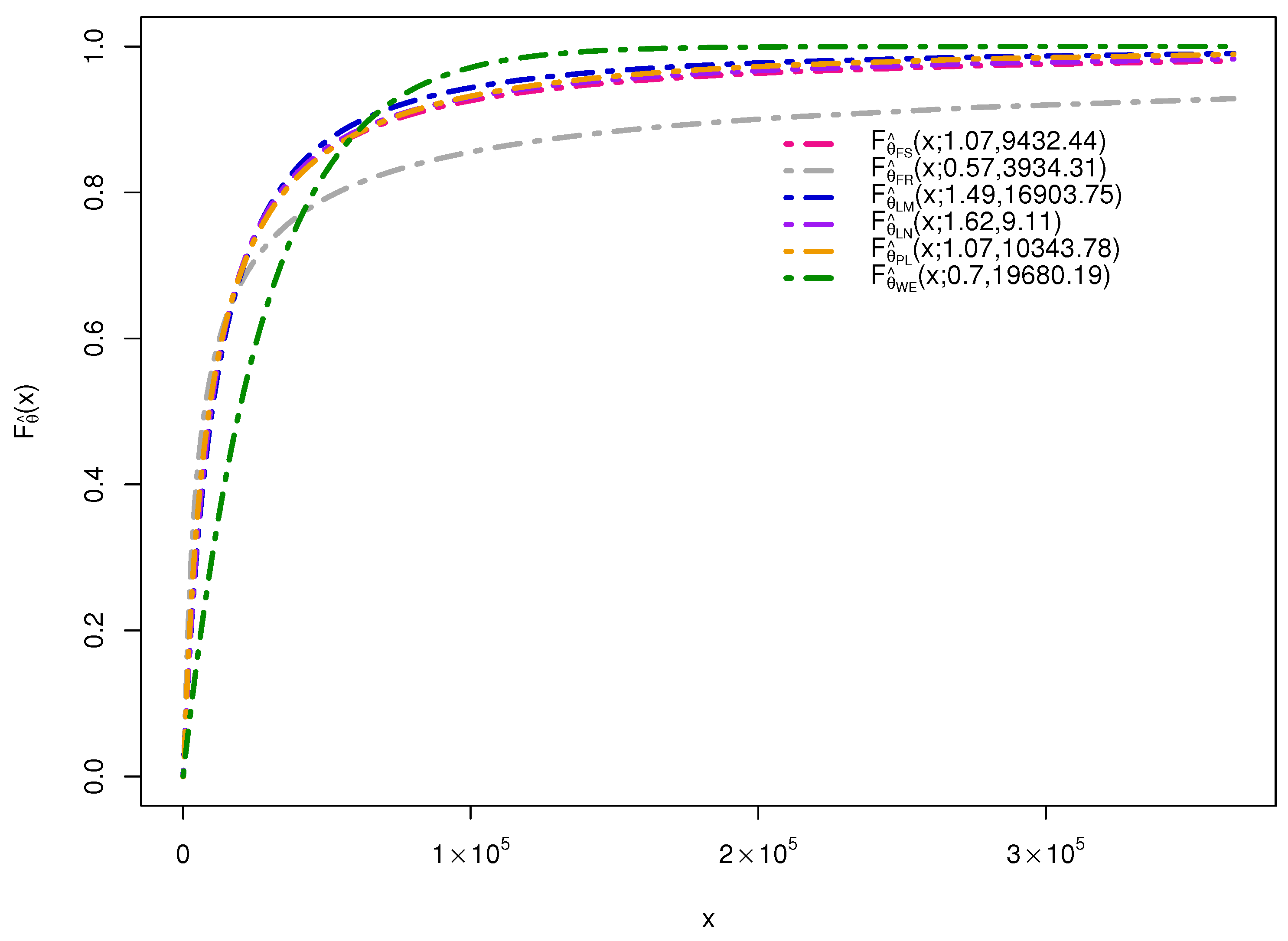

R Core Team 2025) to motivate the study by examining the behavior of the estimated PDFs and CDFs for all candidate models described in

Section 2, as illustrated in

Figure 1. The dataset contains information on vehicle insurance policies issued between 2004 and 2005, with variables such as vehicle value (in

$10,000), the number of claims filed, and the amount of claims. The variable of interest is the claim amount. Specifically, we use the numeric variable

clmcst0 for cases in which a claim was filed (based on the

clm variable, where 0 indicates no claim, and 1 indicates at least one claim). From

Figure 1, the fitted PDFs and CDFs appear broadly similar. However, the maximum likelihood estimates (MLEs) of the parameters, presented in

Table 1, differ substantially among different models. Because tail behavior affects the value of VaR with confidence level

, especially when

is close to 1, and is sensitive to model choice, we also report parameter estimates from the minimum density power divergence (MDPD) estimator, a more robust alternative, which will be introduced in subsequent sections and used later in our selection and ranking procedures (

Table 2).

In such cases, fitting candidate models to a real dataset and comparing the fits with the empirical density or distribution may suggest that all the models are suitable for explaining the random phenomenon of interest. However, these models can differ substantially, especially in their tail behavior. Given the heavy-tailed nature of non-life insurance claims, parameter estimation or model discrimination based solely on likelihood-based criteria can be restrictive due to their lack of robustness. Tail-related statistics, such as VaR, are particularly sensitive to the choice of the underlying model, which may lead to misleading conclusions and decisions. To illustrate this point, we report in Table 1 the VaR values at the 95% and 99% levels implied by the fitted candidate distributions, where it is evident that the VaR estimates differ considerably across models. In such situations, it is intuitively appealing to estimate model parameters using robust procedures, such as the MDPD estimation method.

Furthermore, since the VaR values of the considered models differ significantly (see Table 1), estimating the VaR from the given data using a single model is likely to carry a much higher risk of incorrect conclusions. Intuitively, adopting a model averaging method for estimating the quantities of interest in such cases may substantially reduce the risks associated with model uncertainty and misspecification (Steel 2020).

In the following, we first discuss the procedures used to estimate the parameters of the considered models. Subsequently, we provide a mathematical framework for the proposed model averaging techniques used to obtain VaR estimates. To estimate the unknown parameter vector $\boldsymbol{\theta}_j$ of model $M_j$ under the assumption that the data originated from $M_j$, we adopt the method of maximum likelihood and the method of minimum density power divergence. Since the true population distribution is unknown, any of the six models above is a plausible assumption for the type of random variable studied here, irrespective of the true underlying model.

3.1. Likelihood-Based Estimation

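Let $\mathbf{x} = (x_1, x_2, \ldots, x_n)$ be an independent and identically distributed (i.i.d.) sample of size $n$ from $M_j$, $j = 1, \ldots, 6$. Then, the likelihood function (LF) for the parameter vector $\boldsymbol{\theta}_j$ based on the observed sample $\mathbf{x}$ is

$$L_j(\boldsymbol{\theta}_j \mid \mathbf{x}) = \prod_{i=1}^{n} f_j(x_i; \boldsymbol{\theta}_j).$$

The maximum likelihood estimate (MLE) of the parameter vector $\boldsymbol{\theta}_j$, denoted as $\hat{\boldsymbol{\theta}}_j$, is obtained by maximizing $L_j(\boldsymbol{\theta}_j \mid \mathbf{x})$ or, equivalently, the log-likelihood $\ell_j(\boldsymbol{\theta}_j \mid \mathbf{x}) = \ln L_j(\boldsymbol{\theta}_j \mid \mathbf{x})$ with respect to $\boldsymbol{\theta}_j$, i.e.,

$$\hat{\boldsymbol{\theta}}_j = \arg\max_{\boldsymbol{\theta}_j} \ell_j(\boldsymbol{\theta}_j \mid \mathbf{x}) = \arg\max_{\boldsymbol{\theta}_j} \sum_{i=1}^{n} \ln f_j(x_i; \boldsymbol{\theta}_j).$$

Owing to the invariance property of MLEs, we can obtain the MLE of a function $g(\boldsymbol{\theta}_j)$ by substituting $\hat{\boldsymbol{\theta}}_j$ into $g(\cdot)$, i.e., $g(\hat{\boldsymbol{\theta}}_j)$ is the MLE of $g(\boldsymbol{\theta}_j)$. Hence, the MLE of VaR for a specific confidence level $p$ of the $j$-th model can be obtained as $\mathrm{VaR}_{j,p}(\hat{\boldsymbol{\theta}}_j)$. For notational convenience, we denote the MLE of VaR with confidence level $p$ under distribution $M_j$ as $\widehat{\mathrm{VaR}}_{j,p}$.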
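The invariance step can be illustrated with the lognormal model, whose MLEs have a closed form: $\hat{\mu}$ is the mean of $\ln x_i$ and $\hat{\sigma}^2$ is the average squared deviation of $\ln x_i$ (divisor $n$). The Python sketch below simulates a hypothetical lognormal sample and plugs the MLEs into the VaR formula; the other five models would need numerical maximization of the log-likelihood, which is not shown here.

```python
import math
import random
from statistics import NormalDist


def lognormal_mle(x):
    """Closed-form MLE (mu_hat, sigma_hat) for an i.i.d. lognormal sample."""
    logs = [math.log(v) for v in x]
    n = len(logs)
    mu_hat = sum(logs) / n
    # The MLE of sigma^2 divides by n, not n - 1.
    sigma_hat = math.sqrt(sum((y - mu_hat) ** 2 for y in logs) / n)
    return mu_hat, sigma_hat


def lognormal_var(p, mu, sigma):
    return math.exp(mu + sigma * NormalDist().inv_cdf(p))


random.seed(2024)
sample = [random.lognormvariate(0.0, 1.0) for _ in range(20000)]  # simulated; true (mu, sigma) = (0, 1)
mu_hat, sigma_hat = lognormal_mle(sample)
# Invariance: the MLE of VaR_p is VaR_p evaluated at the MLE of the parameters.
var99_hat = lognormal_var(0.99, mu_hat, sigma_hat)
var99_true = lognormal_var(0.99, 0.0, 1.0)
print(round(mu_hat, 3), round(sigma_hat, 3), round(var99_hat, 3), round(var99_true, 3))
```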

3.2. Divergence-Based Estimation

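The minimum density power divergence estimation method was proposed by Basu et al. (1998) as an addition to the existing estimation methods based on density-based divergence measures. The density power divergence (DPD) measures the difference between a data-driven PDF $g$ and an assumed parametric model $f_{\boldsymbol{\theta}} \in \mathcal{F}$ with parameter vector $\boldsymbol{\theta}$, $\mathcal{F}$ being the set of distributions having densities with respect to a dominating measure. Mathematically, for a tuning parameter $\gamma > 0$, it is defined as

$$d_{\gamma}(g, f_{\boldsymbol{\theta}}) = \int \left\{ f_{\boldsymbol{\theta}}^{1+\gamma}(x) - \left(1 + \frac{1}{\gamma}\right) g(x) f_{\boldsymbol{\theta}}^{\gamma}(x) + \frac{1}{\gamma} g^{1+\gamma}(x) \right\} dx, \tag{21}$$

where $d_{\gamma}(g, f_{\boldsymbol{\theta}}) \ge 0$ for all PDFs $g$ and $f_{\boldsymbol{\theta}}$ with respect to the Lebesgue measure, with equality if and only if $g = f_{\boldsymbol{\theta}}$ almost everywhere. The MDPD estimator of the parameter vector $\boldsymbol{\theta}$, denoted as $\tilde{\boldsymbol{\theta}}$, is obtained by minimizing the DPD measure in Equation (21) with respect to $\boldsymbol{\theta}$, i.e., $\tilde{\boldsymbol{\theta}} = \arg\min_{\boldsymbol{\theta}} d_{\gamma}(g, f_{\boldsymbol{\theta}})$.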

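For general families of distributions, the MDPD estimating equation has the form

$$\int u_{\boldsymbol{\theta}}(x) f_{\boldsymbol{\theta}}^{1+\gamma}(x)\, dx - \int u_{\boldsymbol{\theta}}(x) f_{\boldsymbol{\theta}}^{\gamma}(x)\, g(x)\, dx = \mathbf{0},$$

where $u_{\boldsymbol{\theta}}(x) = \partial \ln f_{\boldsymbol{\theta}}(x) / \partial \boldsymbol{\theta}$ is the score function. For $\gamma > 0$, the factor $f_{\boldsymbol{\theta}}^{\gamma}(x)$ induces a down-weighting mechanism in which observations that are outliers under the assumed model receive smaller weights. As $\gamma \to 0$, minimizing Equation (21) reduces to maximum likelihood estimation, yielding fully efficient estimators in which all observations (outliers included) are weighted equally. At $\gamma = 1$, minimizing Equation (21) corresponds to the minimum $L_2$ distance criterion. Thus, $\gamma$ acts as a tuning parameter that controls the trade-off between the robustness and efficiency of the resulting estimators.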

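Thus, based on a random sample $\mathbf{x}$ of size $n$ from $M_j$, the MDPD estimate $\tilde{\boldsymbol{\theta}}_j$ can be obtained by minimizing the empirical form of Equation (21) given below:

$$H_n(\boldsymbol{\theta}_j) = \int f_j^{1+\gamma}(x; \boldsymbol{\theta}_j)\, dx - \left(1 + \frac{1}{\gamma}\right) \frac{1}{n} \sum_{i=1}^{n} f_j^{\gamma}(x_i; \boldsymbol{\theta}_j),$$

or by solving

$$\frac{1}{n} \sum_{i=1}^{n} u_{\boldsymbol{\theta}_j}(x_i)\, f_j^{\gamma}(x_i; \boldsymbol{\theta}_j) - \int u_{\boldsymbol{\theta}_j}(x)\, f_j^{1+\gamma}(x; \boldsymbol{\theta}_j)\, dx = \mathbf{0},$$

where $u_{\boldsymbol{\theta}_j}(x)$ is the score function corresponding to the density $f_j(x; \boldsymbol{\theta}_j)$. The empirical form is used instead of Equation (21) itself because the term $\frac{1}{\gamma}\int g^{1+\gamma}(x)\, dx$ in Equation (21) does not depend on $\boldsymbol{\theta}_j$, and the term $\int g(x) f_j^{\gamma}(x; \boldsymbol{\theta}_j)\, dx = \int f_j^{\gamma}(x; \boldsymbol{\theta}_j)\, dG(x)$ can be replaced by $\int f_j^{\gamma}(x; \boldsymbol{\theta}_j)\, dG_n(x) = n^{-1} \sum_{i=1}^{n} f_j^{\gamma}(x_i; \boldsymbol{\theta}_j)$, where the empirical distribution function $G_n$ serves as an estimate of the true distribution function $G$, owing to the linear involvement of $g$ in this term. Note that, as a limiting case when $\gamma \to 0$, the DPD reduces to the Kullback-Leibler divergence and yields an estimator equivalent to the MLE. On the other hand, for $\gamma = 1$, the MDPD estimator coincides with the minimum $L_2$ distance (integrated squared error) estimator. Regarding the choice of $\gamma$, a reasonable value lies between 0 and 1, since the efficiency of the estimators gradually decreases as $\gamma$ increases (Ghosh et al. 2017; Jones et al. 2001).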

One of the interesting properties of the MDPD estimation method is that the MDPD estimators also adhere to the invariance principle, akin to the MLEs. This property allows us to study the behavior of VaR or any other characteristic of a probability model based on MDPD estimates without delving into any complicated mathematical framework. Thus, the MDPD estimator of VaR for model $M_j$ can be obtained as $\mathrm{VaR}_{j,p}(\tilde{\boldsymbol{\theta}}_j)$, corresponding to the chosen confidence level $p$. Once again, for notational convenience, we denote the MDPD estimate of VaR with confidence level $p$ under $M_j$ as $\widetilde{\mathrm{VaR}}_{j,p}$.
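To make the down-weighting concrete, the Python sketch below minimizes the empirical DPD objective (Equation (21) with the $\boldsymbol{\theta}$-free term dropped and the $g$-weighted integral replaced by a sample average) for the one-parameter exponential model, i.e., the Weibull model with shape fixed at 1. That restriction is an assumption made here so that $\int f^{1+\gamma}\,dx = \lambda^{\gamma}/(1+\gamma)$ has a closed form; the contamination pattern, $\gamma = 0.5$, and the golden-section optimizer are likewise illustrative choices, not the study's implementation.

```python
import math
import random


def dpd_objective(lam, x, gamma):
    """Empirical DPD objective H_n(lam) for the exponential density lam * exp(-lam * x).

    For this density, the integral of f^(1 + gamma) over (0, inf) is lam^gamma / (1 + gamma).
    """
    mean_f_gamma = sum((lam * math.exp(-lam * xi)) ** gamma for xi in x) / len(x)
    return lam ** gamma / (1.0 + gamma) - (1.0 + 1.0 / gamma) * mean_f_gamma


def mdpd_rate(x, gamma, lo=1e-3, hi=1e3, iters=200):
    """Minimize H_n over log(lam) by golden-section search (assumes a unimodal objective)."""
    a, b = math.log(lo), math.log(hi)
    g = (math.sqrt(5.0) - 1.0) / 2.0
    c, d = b - g * (b - a), a + g * (b - a)
    fc, fd = dpd_objective(math.exp(c), x, gamma), dpd_objective(math.exp(d), x, gamma)
    for _ in range(iters):
        if fc < fd:                      # minimum lies in [a, d]
            b, d, fd = d, c, fc
            c = b - g * (b - a)
            fc = dpd_objective(math.exp(c), x, gamma)
        else:                            # minimum lies in [c, b]
            a, c, fc = c, d, fd
            d = a + g * (b - a)
            fd = dpd_objective(math.exp(d), x, gamma)
    return math.exp((a + b) / 2.0)


random.seed(7)
clean = [random.expovariate(1.0) for _ in range(1000)]   # true scale = 1
data = clean + [50.0] * 50                                # 50 hypothetical gross outliers
p = 0.99
mle_scale = sum(data) / len(data)                 # exponential MLE of the scale (sample mean)
mdpd_scale = 1.0 / mdpd_rate(data, gamma=0.5)     # MDPD estimate of the scale
# Invariance: VaR_p = -scale * ln(1 - p) evaluated at each estimate.
var_mle = -mle_scale * math.log1p(-p)
var_mdpd = -mdpd_scale * math.log1p(-p)
print(round(mle_scale, 3), round(mdpd_scale, 3))
print(round(var_mle, 2), round(var_mdpd, 2), round(-math.log1p(-p), 2))  # last value: true VaR
```

With these outliers, the MLE of the scale is pulled above 3, whereas the MDPD estimate stays near the true scale of 1 because each outlier's term $f^{\gamma}(x_i)$ is essentially zero; by invariance, the same contrast carries over to the VaR estimates.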

4. Estimating Value-at-Risk via Model Averaging

Based on the earlier discussion, it is essential to identify the best-fitting model to minimize the degree of uncertainty and inconclusiveness in estimating the quantity of interest. However, because these models are close to one another in high-density regions yet have highly dissimilar tail behaviors, the effect of misspecification on the VaR for claim size data requires special attention. Two significant kinds of model misspecification can be considered: (i) a wrongly specified model (e.g., the underlying model is lognormal, but a Weibull model is assumed) and (ii) a contaminated-observation model (e.g., the underlying model is Weibull, but some contaminated observations or outliers follow a different distribution or the same class of distributions with different parameters). For empirical investigation, following White (1982) and Chow (1984), we examine the consequences of model misspecification in terms of the relative bias and relative variability when the MLE and MDPD estimators are used to estimate the VaR through a Monte Carlo simulation study.

This section describes the proposed model selection and ranking approaches based on the maximum likelihood method and the minimum density power divergence method. We consider the practical situation in which a specific model $M_j$ with PDF $f_j(x; \boldsymbol{\theta}_j)$ ($j = 1, \ldots, k$) is taken as the assumed model for a given dataset. Based on this assumption, the MLEs and MDPD estimates of the model parameters, as well as of the VaR, can be obtained, along with the associated maximized value of the LF and minimized value of the DPD measure for the given dataset.

4.1. Model Selection Approach

We consider a model selection approach to select the best-fitting model for a particular dataset. Here, we consider two different approaches for model selection, based on the maximized value of the LF and the minimized value of the DPD function for a given dataset $\mathbf{x}$.

For the approach based on the maximized LF, the probability distribution with the largest maximized likelihood has an intuitive appeal and can reasonably be claimed to be the best among all the considered models. Thus, for a given dataset, we can order all $k$ candidate models by the magnitude of the maximized value of the LF if these models have the same number of parameters. Specifically, we denote $M_{(1)}^{\mathrm{L}} > M_{(2)}^{\mathrm{L}} > \cdots > M_{(k)}^{\mathrm{L}}$, where the subscript $(j)$ indicates the ordered position of a model, and the notation “>” means “is preferable to”. The same idea can be implemented using the DPD measure. For a given dataset $\mathbf{x}$, the model yielding the minimum value of the DPD statistic $D_j$, i.e., the minimized value of the empirical version of Equation (21) under model $M_j$, can be considered the closest to the true model $g$ among all the candidate models. Hence, based on the minimized value of the DPD statistic, one can order the models such that $M_{(1)}^{\mathrm{D}} > M_{(2)}^{\mathrm{D}} > \cdots > M_{(k)}^{\mathrm{D}}$, where $M_{(1)}^{\mathrm{D}}$ is the model with the smallest value of the DPD statistic, $M_{(2)}^{\mathrm{D}}$ is the model with the second smallest value, and so on. In other words, $M_{(1)}^{\mathrm{L}} = M_j$ means that model $M_j$ is the most preferable model under the likelihood approach, and $M_{(1)}^{\mathrm{D}} = M_l$ means that model $M_l$ is the most preferable model under the DPD approach. For the model selection approach, after selecting the most preferable model, we estimate the VaR with the selected models based on the likelihood function and the DPD measure, i.e., models $M_{(1)}^{\mathrm{L}}$ and $M_{(1)}^{\mathrm{D}}$, respectively.
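The ordering step of the model selection approach can be sketched as follows. Ranking by the maximized log-likelihood gives the same order as ranking by the maximized likelihood because the logarithm is increasing, and all six candidates have two parameters, so no penalty is applied. The criterion values below are hypothetical numbers for illustration only, not results from Table 1 or Table 2, and `rank_models` is our own helper name.

```python
def rank_models(scores, larger_is_better):
    """Order model names from most to least preferable by their fitted criterion."""
    return sorted(scores, key=scores.get, reverse=larger_is_better)


# Hypothetical fitted criteria for illustration only (not results from the study).
max_loglik = {"Fisk": -812.4, "Frechet": -820.9, "Lomax": -815.2,
              "lognormal": -810.7, "paralogistic": -813.8, "Weibull": -818.3}
min_dpd = {"Fisk": -0.412, "Frechet": -0.398, "Lomax": -0.405,
           "lognormal": -0.409, "paralogistic": -0.415, "Weibull": -0.401}

order_L = rank_models(max_loglik, larger_is_better=True)   # larger maximized log-likelihood first
order_D = rank_models(min_dpd, larger_is_better=False)     # smaller minimized DPD first
print("likelihood ranking:", order_L)
print("DPD ranking:", order_D)
print("selected models:", order_L[0], order_D[0])
```

As in this made-up example, the two criteria need not select the same model, which is one motivation for the ranking approach below.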

4.2. Model Ranking Approach

To reduce the risk of misspecification and improve the accuracy of estimating the quantity of interest, model averaging methods have been widely adopted and found useful across various domains (see, for example, Chatfield 1995; Dormann et al. 2018; Okoli et al. 2018; Wintle et al. 2003). One argument for using VaR estimates from multiple models for a given dataset is to mitigate the potential risks associated with relying on a single selected model, especially since the true model is unknown in practice. Model averaging essentially protects against relying on a suboptimal or misspecified model. Moreover, estimates from an individual model may exhibit high variance, whereas combining estimates from multiple models can reduce this variance, leading to a more stable and accurate overall estimate. An ensemble estimate is typically less susceptible to the limitations of any single model and leverages the collective strengths of multiple models.

Kass and Raftery (1995) and Raftery (1995) discussed the implementation of model averaging in the Bayesian paradigm through the use of posterior odds and/or the Bayes factor. However, Bayesian model averaging methods can be sensitive to the choice of priors. An interesting yet simple method for model averaging in the frequentist framework was explored by Buckland et al. (1997), in which scaled weights $w_j$ are assigned to each of the candidate models $M_j$ ($j = 1, \ldots, k$) and the weighted average is the final estimate of the quantity of interest.

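Following Buckland et al. (1997), we compute a weighted estimate of the quantity of interest. For instance, if the quantity of interest is VaR, instead of estimating the VaR based on the model with the maximized value of the LF or the model with the minimum value of the DPD statistic (i.e., the model selection approach in Section 4.1), we consider the best $m$ ($1 \le m \le k$) models to obtain an estimate of VaR. Suppose the MLEs and MDPD estimates of VaR at level $p$ based on models $M_{(j)}^{\mathrm{L}}$ and $M_{(j)}^{\mathrm{D}}$ are $\widehat{\mathrm{VaR}}_{(j),p}$ and $\widetilde{\mathrm{VaR}}_{(j),p}$, respectively. Then, using the model ranking approach, the weighted VaR estimates based on maximum likelihood and DPD, denoted as $\widehat{\mathrm{VaR}}_{p}^{\mathrm{W}}$ and $\widetilde{\mathrm{VaR}}_{p}^{\mathrm{W}}$, can be obtained as

$$\widehat{\mathrm{VaR}}_{p}^{\mathrm{W}} = \sum_{j=1}^{m} w_j^{\mathrm{L}}\, \widehat{\mathrm{VaR}}_{(j),p} \quad \text{and} \quad \widetilde{\mathrm{VaR}}_{p}^{\mathrm{W}} = \sum_{j=1}^{m} w_j^{\mathrm{D}}\, \widetilde{\mathrm{VaR}}_{(j),p},$$

respectively, with weights $w_j^{\mathrm{L}}$ and $w_j^{\mathrm{D}}$, $j = 1, \ldots, m$, such that

$$\sum_{j=1}^{m} w_j^{\mathrm{L}} = \sum_{j=1}^{m} w_j^{\mathrm{D}} = 1.$$

The associated weights $w_j^{\mathrm{L}}$ ($w_j^{\mathrm{D}}$) are taken to be proportional to the maximized (minimized) value of the LF (DPD), i.e., $w_j^{\mathrm{L}} \propto L_{(j)}$ ($w_j^{\mathrm{D}} \propto D_{(j)}$). Specifically, for the likelihood approach, suppose $L_{(j)}$ is the maximized likelihood based on model $M_{(j)}^{\mathrm{L}}$, so that $L_{(1)} \ge L_{(2)} \ge \cdots \ge L_{(k)}$. Then, for the top $m$ models, the weights in terms of the maximized value of the LF can be expressed as

$$w_j^{\mathrm{L}} = \frac{L_{(j)}}{\sum_{l=1}^{m} L_{(l)}}$$

for $j = 1, \ldots, m$, such that $w_1^{\mathrm{L}} \ge w_2^{\mathrm{L}} \ge \cdots \ge w_m^{\mathrm{L}}$ and $\sum_{j=1}^{m} w_j^{\mathrm{L}} = 1$. Similarly, for the divergence approach, suppose $D_{(j)}$ is the minimized value of the DPD statistic based on model $M_{(j)}^{\mathrm{D}}$, so that $D_{(1)} \le D_{(2)} \le \cdots \le D_{(k)}$. Since the minimized DPD values for all considered models are less than 0, for the top $m$ models, the weights in terms of the minimized values of the DPD can be expressed as

$$w_j^{\mathrm{D}} = \frac{D_{(j)}}{\sum_{l=1}^{m} D_{(l)}}$$

for $j = 1, \ldots, m$, such that $w_1^{\mathrm{D}} \ge w_2^{\mathrm{D}} \ge \cdots \ge w_m^{\mathrm{D}}$ and $\sum_{j=1}^{m} w_j^{\mathrm{D}} = 1$.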
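The two weighting schemes and the weighted VaR can be sketched in a few lines of Python. Raw likelihoods are products of $n$ densities and can underflow, so the sketch computes $L_{(j)}/\sum_l L_{(l)}$ from log-likelihoods by subtracting the largest one first, which leaves the normalized weights unchanged; this numerical device is our choice, not necessarily the study's. All inputs are hypothetical.

```python
import math


def likelihood_weights(logliks):
    """w_j = L_(j) / sum_l L_(l), computed from log-likelihoods ln L_(j) for stability."""
    top = max(logliks)
    ratios = [math.exp(ll - top) for ll in logliks]   # L_(j) / L_(1)
    total = sum(ratios)
    return [r / total for r in ratios]


def dpd_weights(dpd_values):
    """w_j = D_(j) / sum_l D_(l); requires every minimized DPD value to be negative."""
    if any(d >= 0 for d in dpd_values):
        raise ValueError("DPD-based weights assume negative DPD values")
    total = sum(dpd_values)
    return [d / total for d in dpd_values]


def weighted_var(var_estimates, weights):
    """Model-averaged VaR: sum_j w_j * VaR_(j),p."""
    return sum(w * v for w, v in zip(weights, var_estimates))


# Hypothetical top-3 results (m = 3), ordered from most to least preferable.
w_L = likelihood_weights([-810.7, -812.4, -813.8])
w_D = dpd_weights([-0.415, -0.412, -0.409])
var_L = weighted_var([10.2, 11.5, 12.1], w_L)
var_D = weighted_var([10.6, 11.0, 11.9], w_D)
print([round(w, 3) for w in w_L], round(var_L, 3))
print([round(w, 3) for w in w_D], round(var_D, 3))
```

With these made-up inputs, the likelihood weights concentrate on the top model (a log-likelihood gap of 1.7 already shrinks the second weight to roughly 0.15), whereas the DPD weights are nearly equal, so the two averaged VaR estimates can behave quite differently.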

Note that the model selection approach discussed in Section 4.1 can be formulated as a special case of the model ranking approach by setting $m = 1$ and $w_1^{\mathrm{L}} = 1$ (or $m = 1$ and $w_1^{\mathrm{D}} = 1$). Furthermore, employing a specific model $M_j$ without model selection can be viewed as a special case within the framework of the proposed model ranking approach, achieved by assigning $w_j = 1$ and $w_l = 0$ for $l \ne j$. Consequently, when suitable values of $m$ and the weights are chosen, the proposed model ranking approach performs identically to the non-model-selection approach. See Li et al. (2020), Mehrabani and Ullah (2020), Miljkovic (2025), Miljkovic and Grün (2021), and related work for further discussion of weighted estimates from multiple models.

5. Monte Carlo Simulation Study

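In this section, a Monte Carlo simulation study is used to evaluate the performance of the proposed methodologies in the presence and absence of contamination. To compare the performance of different approaches and estimators, we consider the simulated biases and root mean square errors (RMSEs) of the MLE and MDPD estimates of VaR with confidence level $p$ under statistical distribution $M_j$ based on $S$ simulations, which can be computed as

$$\mathrm{Bias}(\widehat{\mathrm{VaR}}_{j,p}) = \frac{1}{S} \sum_{s=1}^{S} \left(\widehat{\mathrm{VaR}}_{j,p}^{(s)} - \mathrm{VaR}_{j,p}\right), \qquad \mathrm{RMSE}(\widehat{\mathrm{VaR}}_{j,p}) = \left[\frac{1}{S} \sum_{s=1}^{S} \left(\widehat{\mathrm{VaR}}_{j,p}^{(s)} - \mathrm{VaR}_{j,p}\right)^{2}\right]^{1/2},$$

$$\mathrm{Bias}(\widetilde{\mathrm{VaR}}_{j,p}) = \frac{1}{S} \sum_{s=1}^{S} \left(\widetilde{\mathrm{VaR}}_{j,p}^{(s)} - \mathrm{VaR}_{j,p}\right), \qquad \mathrm{RMSE}(\widetilde{\mathrm{VaR}}_{j,p}) = \left[\frac{1}{S} \sum_{s=1}^{S} \left(\widetilde{\mathrm{VaR}}_{j,p}^{(s)} - \mathrm{VaR}_{j,p}\right)^{2}\right]^{1/2},$$

where $\widehat{\mathrm{VaR}}_{j,p}^{(s)}$ and $\widetilde{\mathrm{VaR}}_{j,p}^{(s)}$ are the MLE and MDPD estimates of the VaR of $M_j$ with confidence level $p$ in the $s$-th simulation, and $\mathrm{VaR}_{j,p}$ is the true value of the VaR of $M_j$ with confidence level $p$.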

To facilitate the comparison of the various estimation methods and the proposed model selection/ranking strategies for addressing model uncertainty, we compute the relative bias and relative RMSE of two estimators, say $\hat{\eta}_1$ and $\hat{\eta}_2$, defined as

$$\mathrm{RBias}(\hat{\eta}_1, \hat{\eta}_2) = \frac{\mathrm{Bias}(\hat{\eta}_1)}{\mathrm{Bias}(\hat{\eta}_2)} \tag{30}$$

and

$$\mathrm{RRMSE}(\hat{\eta}_1, \hat{\eta}_2) = \frac{\mathrm{RMSE}(\hat{\eta}_1)}{\mathrm{RMSE}(\hat{\eta}_2)}, \tag{31}$$

respectively. An absolute value of the relative bias in Equation (30) and a value of the relative RMSE in Equation (31) less than 1 indicate that $\hat{\eta}_1$ is a better estimator than $\hat{\eta}_2$. The sign of the relative bias in Equation (30) indicates whether the biases of the two estimators $\hat{\eta}_1$ and $\hat{\eta}_2$ are in the same direction (positive RBias) or in opposite directions (negative RBias). The simulation results are based on $S$ simulation runs and are reported in the Appendices.
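The bias, RMSE, and the relative measures in Equations (30) and (31) translate directly into code. The Python sketch below uses hypothetical VaR estimates from three simulation runs; function names are ours, and no guard is added for a zero denominator bias.

```python
import math


def bias(estimates, true_value):
    """Simulated bias: average of (estimate - true value) over the S runs."""
    return sum(e - true_value for e in estimates) / len(estimates)


def rmse(estimates, true_value):
    """Simulated root mean square error over the S runs."""
    return math.sqrt(sum((e - true_value) ** 2 for e in estimates) / len(estimates))


def relative_bias(est1, est2, true_value):
    """RBias = Bias(est1) / Bias(est2); |RBias| < 1 favors est1, sign compares directions."""
    return bias(est1, true_value) / bias(est2, true_value)


def relative_rmse(est1, est2, true_value):
    """RRMSE = RMSE(est1) / RMSE(est2); values below 1 favor est1."""
    return rmse(est1, true_value) / rmse(est2, true_value)


true_var = 10.0
mle_runs = [11.0, 12.0, 10.6]     # hypothetical VaR estimates across S = 3 runs
mdpd_runs = [9.5, 10.5, 10.2]
print(relative_bias(mdpd_runs, mle_runs, true_var), relative_rmse(mdpd_runs, mle_runs, true_var))
```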

First, considering the case in which the assumed model and the true model agree (i.e., without model misspecification), we compare the performance of the likelihood-based and divergence-based parameter estimation procedures presented in Section 3. The average MLEs and MDPD estimates of $\boldsymbol{\theta}_j$ for the six probability models in Section 2 ($j = 1, \ldots, 6$), the biases and RMSEs of the MLEs, and the relative biases and relative RMSEs of the MDPD estimates with respect to the MLEs (i.e., $\mathrm{RBias}(\tilde{\boldsymbol{\theta}}_j, \hat{\boldsymbol{\theta}}_j)$ and $\mathrm{RRMSE}(\tilde{\boldsymbol{\theta}}_j, \hat{\boldsymbol{\theta}}_j)$) are presented in Table A1. From Table A1, it is evident that when the assumed model is indeed the true model and no contaminated observations are present, the MLE performs better than the MDPD estimator in terms of RMSE, while the MDPD estimator provides smaller biases than the MLE in some situations.

The following subsections present the simulation results on the estimation procedures and on the proposed model selection and ranking approaches under model misspecification, with and without contamination. Section 5.1 compares the likelihood-based and divergence-based model selection and model ranking procedures when no contamination is present. Estimators based on DPD measures are known to be robust. Section 5.2 therefore introduces a contamination model and examines whether the DPD-based methods perform better than those based on the LF.

5.1. Performance of the Proposed Methods in the Absence of Contamination

To assess the performance of the proposed methods in the absence of contamination, we assume that the data are generated by one of the six candidate distributions discussed in Section 2. Specifically, in each simulation run we generate a random sample of size n from each of the six candidate distributions.
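The sketch below shows one way samples and true VaR values could be produced for the six candidate models, using inverse-transform sampling. Several parts are assumptions and may differ from Section 2:

- the parameterizations: shape `a` and scale `s` in common loss-model conventions, and a lognormal with log-scale parameters `mu` and `sigma`;
- the mapping of "Fisk" to the log-logistic and "Lomax" to Pareto type II;
- the parameter values, which are placeholders rather than the study's settings.

```python
import math
import random
from statistics import NormalDist

# Quantile functions Q(p); the VaR at level p of each model is Q(p).
# Assumed parameterizations: lognormal(mu, sigma); the others use (shape a, scale s).
QUANTILES = {
    "lognormal": lambda p, mu, sigma: math.exp(mu + sigma * NormalDist().inv_cdf(p)),
    "weibull": lambda p, a, s: s * (-math.log(1 - p)) ** (1 / a),
    "frechet": lambda p, a, s: s * (-math.log(p)) ** (-1 / a),
    "fisk": lambda p, a, s: s * (p / (1 - p)) ** (1 / a),       # log-logistic
    "lomax": lambda p, a, s: s * ((1 - p) ** (-1 / a) - 1),     # Pareto type II
    "paralogistic": lambda p, a, s: s * ((1 - p) ** (-1 / a) - 1) ** (1 / a),
}


def true_var(model, p, params):
    """True VaR at level p for the given model and parameter tuple."""
    return QUANTILES[model](p, *params)


def simulate(model, n, params, rng):
    """Draw n observations as Q(U), with U uniform on the open interval (0, 1)."""
    out = []
    while len(out) < n:
        u = rng.random()          # in [0, 1); reject the endpoint 0
        if u > 0.0:
            out.append(QUANTILES[model](u, *params))
    return out


rng = random.Random(42)
data = simulate("weibull", 20000, (1.0, 1.0), rng)   # Weibull(1, 1) is Exp(1)
emp = sorted(data)[int(0.95 * len(data))]           # empirical 95% quantile
print(true_var("weibull", 0.95, (1.0, 1.0)), emp)
```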

Before evaluating the proposed model selection and model ranking approaches, we study the MLE and MDPD estimation methods for VaR under model misspecification using the non-model-selection approach (i.e., the VaR is estimated under a single assumed model). For each dataset simulated from a true model, we estimate the parameter vector and the corresponding VaR under each assumed model, using both estimation methods. The results are reported as follows:

- Table A2: the average VaR estimates at the chosen confidence level;
- Table A3: the biases relative to the bias of the MLE when the assumed model is the true model;
- Table A4: the RMSEs relative to the RMSE of the MLE when the assumed model is the true model.

Consequently, when the assumed model is the true model (values highlighted in boldface), the relative bias and relative RMSE of the likelihood method equal 1 by construction.
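The sketch below makes the non-model-selection setting concrete. It simulates data from a Lomax model but estimates the VaR under an assumed lognormal model, whose MLE has a closed form. It then reports the Monte Carlo bias and RMSE against the true Lomax VaR. The models, parameter values, sample size and number of runs are illustrative assumptions and do not reproduce any table in the Appendices.

```python
import math
import random
from statistics import NormalDist, fmean


def lomax_quantile(p, a, s):
    """VaR of a Lomax (Pareto II) model with shape a and scale s (assumed form)."""
    return s * ((1 - p) ** (-1 / a) - 1)


def lognormal_mle_var(data, p):
    """Fit a lognormal by MLE (closed form) and return the fitted VaR at level p."""
    logs = [math.log(x) for x in data]
    mu = fmean(logs)
    sigma = math.sqrt(fmean([(v - mu) ** 2 for v in logs]))  # MLE uses divisor n
    return math.exp(mu + sigma * NormalDist().inv_cdf(p))


def uniform_open(rng):
    u = rng.random()
    while u == 0.0:               # keep U strictly inside (0, 1)
        u = rng.random()
    return u


rng = random.Random(2024)
p, a, s, n, S = 0.99, 2.5, 1.0, 200, 500   # illustrative settings only
true_var = lomax_quantile(p, a, s)
estimates = []
for _ in range(S):
    sample = [lomax_quantile(uniform_open(rng), a, s) for _ in range(n)]
    estimates.append(lognormal_mle_var(sample, p))  # assumed (misspecified) model

errors = [e - true_var for e in estimates]
bias = fmean(errors)
rmse = math.sqrt(fmean([e * e for e in errors]))
print(f"true VaR {true_var:.3f}, bias {bias:.3f}, RMSE {rmse:.3f}")
```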

Table A2, Table A3 and Table A4 show that biases arise when the model is misspecified, regardless of the method used to estimate the VaR. In a few instances the RMSEs under misspecification are smaller than under the correct specification, but in general they are higher. Biases and RMSEs increase most markedly when the model is misspecified as a Fréchet distribution. These findings underscore the need for effective strategies to mitigate the impact of model misspecification.

Next, we evaluate the VaR estimates obtained with the model selection and model ranking approaches proposed in Section 4. For each simulated dataset, we apply the model selection approach with both the likelihood-based and the divergence-based criteria. Under the selected (i.e., most probable) model, we then estimate the VaR with the MLE or the MDPD estimation method, respectively. To evaluate these estimates, we compute their biases and RMSEs relative to the bias and RMSE of the MLE under the correctly specified model. We do this for both the likelihood-based version of the approach and the version based on the DPD measure.
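The sketch below shows the likelihood-based model selection step for three of the six candidates: lognormal, Weibull and Fréchet. These three were chosen only because their MLEs can be computed with the Python standard library. The function names and the bisection-based Weibull fit are illustrative choices, not the authors' implementation. The Fréchet fit relies on the fact that 1/X is Weibull when X is Fréchet.

```python
import math
import random
from statistics import NormalDist, fmean


def fit_weibull(x):
    """Weibull MLE (shape k, scale lam) by bisection on the profile score in k."""
    m = max(x)
    y = [v / m for v in x]                    # rescale to (0, 1] for numerical stability
    ly = [math.log(v) for v in y]
    mean_ly = fmean(ly)

    def score(k):                             # increasing in k; its root is the MLE
        w = [v ** k for v in y]
        return sum(wi * li for wi, li in zip(w, ly)) / sum(w) - 1 / k - mean_ly

    lo, hi = 1e-3, 1.0
    while score(hi) < 0:                      # expand until the root is bracketed
        hi *= 2
    for _ in range(100):
        mid = 0.5 * (lo + hi)
        lo, hi = (mid, hi) if score(mid) < 0 else (lo, mid)
    k = 0.5 * (lo + hi)
    return k, m * fmean([v ** k for v in y]) ** (1 / k)


def weibull_loglik(x, k, lam):
    return sum(math.log(k / lam) + (k - 1) * math.log(v / lam) - (v / lam) ** k for v in x)


def frechet_loglik(x, a, s):
    return sum(math.log(a / s) - (1 + a) * math.log(v / s) - (v / s) ** (-a) for v in x)


def lognormal_loglik(x, mu, sigma):
    return sum(-math.log(v) - math.log(sigma * math.sqrt(2 * math.pi))
               - (math.log(v) - mu) ** 2 / (2 * sigma ** 2) for v in x)


def select_and_var(x, p):
    """Fit each candidate by MLE, select the largest maximized log-likelihood,
    and return (selected model, its VaR at level p, all fits)."""
    fits = {}
    logs = [math.log(v) for v in x]
    mu = fmean(logs)
    sigma = math.sqrt(fmean([(lv - mu) ** 2 for lv in logs]))
    fits["lognormal"] = (lognormal_loglik(x, mu, sigma),
                         math.exp(mu + sigma * NormalDist().inv_cdf(p)))
    k, lam = fit_weibull(x)
    fits["weibull"] = (weibull_loglik(x, k, lam), lam * (-math.log(1 - p)) ** (1 / k))
    # If X is Frechet(a, s), then 1/X is Weibull(a, 1/s), so reuse the Weibull MLE.
    a, inv_s = fit_weibull([1 / v for v in x])
    s = 1 / inv_s
    fits["frechet"] = (frechet_loglik(x, a, s), s * (-math.log(p)) ** (-1 / a))
    best = max(fits, key=lambda name: fits[name][0])
    return best, fits[best][1], fits


rng = random.Random(7)
data = [rng.weibullvariate(1.0, 2.0) for _ in range(2000)]  # scale 1, shape 2
best, var_hat, fits = select_and_var(data, 0.95)
print(best, round(var_hat, 3))
```

A divergence-based variant would select the fit with the smallest minimized DPD value instead of the largest maximized log-likelihood.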

Table A5 reports the average VaR estimates based on the model selection approach. It also gives the relative biases and relative RMSEs of all the proposed estimators with respect to the bias and RMSE of the MLE under the correctly specified model.

Table A6 reports how often each competing model is selected as the best-fitting model (in terms of maximized likelihood or minimized DPD) across the simulation runs. The boldfaced proportions are the proportions of runs in which the true model, from which the data are generated, is selected as the best model. The remaining proportions show, for a given true model, how often each other assumed model is selected as the best model. The selected model is then used to compute the final VaR estimate with Equation (24).

For the model ranking approach, we compute the weighted average estimate of VaR from the top $k$ most probable models, with $k = 2$ and $k = 3$. Table A7 ($k = 2$) and Table A8 ($k = 3$) present the average estimates from the likelihood-based and DPD-based weighted estimators. They also give the relative biases and relative RMSEs of these estimates with respect to the MLE under the true model.

Table A9 and Table A10 evaluate how well the model ranking approach identifies the true model. They report the proportion of runs in which the true model is ranked among the top $k$ models, recorded by its order of preference (1 or 2 for $k = 2$; 1, 2 or 3 for $k = 3$). They also report the proportion of runs in which the true model is not ranked among the top $k$, recorded as order of preference 0. In other words, an order of preference of 0 means that the true model is not used to compute the weighted average VaR estimate.
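The ranking and averaging steps can be sketched as follows, taking the per-model maximized log-likelihoods and VaR estimates as inputs. The weighting rule here is only an assumption for illustration: weights are proportional to the maximized likelihood and renormalized over the top k models. The weights defined in Section 4 may differ. The helper `order_of_preference` (a hypothetical name) returns the true model's rank within the top k, or 0 when it is excluded, matching the definition used for Table A9 and Table A10.

```python
import math


def top_k_weighted_var(logliks, var_estimates, k):
    """Average the VaR estimates of the k highest-likelihood models.

    Assumed weights: proportional to exp(loglik), renormalized over the top k.
    With k = 1 this reduces to the model selection approach.
    """
    ranked = sorted(logliks, key=logliks.get, reverse=True)[:k]
    top = logliks[ranked[0]]
    raw = {m: math.exp(logliks[m] - top) for m in ranked}  # shift avoids underflow
    total = sum(raw.values())
    weights = {m: w / total for m, w in raw.items()}
    estimate = sum(weights[m] * var_estimates[m] for m in ranked)
    return estimate, ranked, weights


def order_of_preference(ranked, true_model):
    """Position (1, 2, ...) of the true model in the top-k list, or 0 if absent."""
    return ranked.index(true_model) + 1 if true_model in ranked else 0


# Hypothetical per-model results for one simulated dataset.
logliks = {"lognormal": -100.0, "weibull": -100.0 - math.log(3.0), "frechet": -110.0}
var_estimates = {"lognormal": 10.0, "weibull": 14.0, "frechet": 20.0}

est, ranked, w = top_k_weighted_var(logliks, var_estimates, k=2)
print(est, ranked, order_of_preference(ranked, "weibull"), order_of_preference(ranked, "frechet"))
```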

The results in Table A7, Table A8, Table A9 and Table A10 indicate that, as expected, estimation procedures without knowledge of the true model generally perform worse than the MLE under the true model. Among the model selection (

) and model ranking approaches (

and

), the relative MSEs are smaller for

compared to

or

for most models considered, except for the Weibull distribution. The number of top-ranked models used in the weighted average is a subjective choice that reflects the degree of confidence in the performance of the estimator under study. When the uncertainty about the quantity to be estimated is high, including more models is more appropriate, and vice versa.

These estimators do not perform as well as those based on the true model. Model averaging is nevertheless more reliable, because relying on a single incorrect model can yield highly inconsistent estimates. In addition, Table A9 and Table A10 show that the proposed model selection and ranking techniques do not have high discriminating power for every model. For example, when the true model is the Fisk, Lomax, or paralogistic distribution, the true model is used in the model-ranking VaR calculation in fewer than half of the runs. This is likely due to the similarity among the candidate distributions discussed in Section 3.

5.2. Performance of the Proposed Methods in the Presence of Contamination

Non-life insurance claims often include some exceptionally large observations. Although such observations are infrequent, they can substantially distort model fitting and model selection if the criteria used are not robust. The literature shows that the density power divergence provides a robust estimation criterion (see, for example, Basu et al. 1998, 2013; Jones et al. 2001, and the articles cited therein).

When fitting probability models to contaminated datasets, two key questions often arise. The first is how to identify contamination or outliers and estimate their effect, especially when they are of practical importance. The second is how to design an estimator that is minimally affected by outliers, so that the characteristics of the uncontaminated data can be studied accurately. In practical applications, contamination models can be treated as a convex mixture of two distributions (Brownie et al. 1983; Punzo and Tortora 2021).

In this subsection, we evaluate the model selection and model ranking approaches under contamination models. We generate contaminated data under a fixed contamination model. A fixed proportion $\varepsilon$ of the sample is drawn from a contamination distribution $G$, and the remaining observations come from the original distribution of interest $F$. The observed random sample from the original model is denoted by $(X_1, \ldots, X_m)$, and the observed random sample from the contamination model by $(Y_1, \ldots, Y_{n-m})$. The following algorithm generates a contaminated sample of size $n$ for a given $\varepsilon$:

1. Generate $(X_1, \ldots, X_m)$ from $F$, where $m$ is the integer part of $n(1 - \varepsilon)$;

2. Generate $(Y_1, \ldots, Y_{n-m})$ from $G$;

3. Return the sample $(X_1, \ldots, X_m, Y_1, \ldots, Y_{n-m})$.
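The fixed-proportion contamination scheme can be sketched as follows. Two parts are assumptions:

- the number of draws from the original model F is the integer part of n(1 − ε);
- F and G are placeholder Weibull laws with a common shape, where G has a ten times larger scale.

The function name `contaminated_sample` is hypothetical.

```python
import random


def contaminated_sample(n, eps, draw_original, draw_contamination, rng):
    """Fixed-proportion contamination: m draws from F and n - m draws from G.

    Assumption: m is the integer part of n * (1 - eps).
    """
    m = int(n * (1 - eps))
    xs = [draw_original(rng) for _ in range(m)]              # from F
    ys = [draw_contamination(rng) for _ in range(n - m)]     # from G
    return xs + ys, m


# Placeholder laws: same Weibull shape, contamination scale ten times larger.
draw_f = lambda r: r.weibullvariate(1.0, 2.0)    # scale 1, shape 2
draw_g = lambda r: r.weibullvariate(10.0, 2.0)   # scale 10, shape 2

rng = random.Random(11)
sample, m = contaminated_sample(100, 0.1, draw_f, draw_g, rng)
print(len(sample), m)
```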

For the original distribution $F$ and the contamination distribution $G$, we consider the six probability distributions described in Section 2. In each case, $G$ belongs to the same family as $F$ but has a different scale parameter. The contamination parameters were chosen to produce substantially larger claim sizes, which would mostly appear as outliers relative to the original model (Wellmann and Gather 1999).

We evaluate the proposed methods by their relative biases and relative RMSEs with respect to the bias and RMSE of the MLE of the VaR under the correctly specified model, in the presence of contamination. The MLE is a natural benchmark here because divergence-based measures are known to be robust. One would therefore expect a few deviant observations not to substantially affect the divergence-based estimate of the characteristic of interest, namely the VaR of the original model.
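The sketch below illustrates why DPD-based estimators are expected to resist outliers, by comparing the MLE and the MDPD estimate for the one-parameter exponential model. The exponential is not one of the six candidates but keeps the example short. The code minimizes the DPD objective of Basu et al. (1998):

H(λ) = ∫ f^(1+α) − (1 + 1/α)·(1/n)·Σ f(X_i)^α,

where α > 0 is the tuning parameter (called `alpha` here). As α tends to 0, the MDPD estimator approaches the MLE. For an exponential density with rate λ, the integral term equals λ^α/(1 + α). The data, the value α = 0.5 and the golden-section optimizer are illustrative assumptions.

```python
import math
import random


def dpd_objective(lam, x, alpha):
    """DPD objective (Basu et al. 1998) for an exponential density with rate lam."""
    integral = lam ** alpha / (1 + alpha)     # closed form of the integral of f^(1+alpha)
    emp = sum((lam * math.exp(-lam * v)) ** alpha for v in x) / len(x)
    return integral - (1 + 1 / alpha) * emp


def mdpd_rate(x, alpha, lo=1e-3, hi=1e2, iters=200):
    """Minimize the DPD objective over log(rate) by golden-section search."""
    g = (math.sqrt(5) - 1) / 2
    f = lambda t: dpd_objective(math.exp(t), x, alpha)
    a, b = math.log(lo), math.log(hi)
    c, d = b - g * (b - a), a + g * (b - a)
    fc, fd = f(c), f(d)
    for _ in range(iters):
        if fc < fd:                           # minimum lies in [a, d]
            b, d, fd = d, c, fc
            c = b - g * (b - a)
            fc = f(c)
        else:                                 # minimum lies in [c, b]
            a, c, fc = c, d, fd
            d = a + g * (b - a)
            fd = f(d)
    return math.exp(0.5 * (a + b))


rng = random.Random(1)
clean = [rng.expovariate(1.0) for _ in range(190)]     # original model: rate 1
outliers = [rng.expovariate(0.01) for _ in range(10)]  # contamination: mean 100
x = clean + outliers

mle = len(x) / sum(x)                                  # MLE of the exponential rate
mdpd = mdpd_rate(x, alpha=0.5)
p = 0.95
print(f"rate: MLE {mle:.3f}, MDPD {mdpd:.3f}")
print(f"VaR at 0.95: MLE {-math.log(1 - p) / mle:.2f}, MDPD {-math.log(1 - p) / mdpd:.2f}")
```

The ten large observations pull the MLE of the rate far below 1. Their contribution to the DPD objective is negligible, so the MDPD estimate stays close to the rate of the uncontaminated part.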

As in the contamination-free case in Section 5.1, we first examine the MLE and MDPD estimation methods for VaR under model misspecification and contamination without model selection, before turning to the proposed model selection and model ranking approaches.

Table A18 presents, for some selected models, the average VaR estimates along with the biases and RMSEs of the MLEs and the relative biases and relative RMSEs of the MDPD estimates. The table shows substantial deviations of the VaR estimates from those obtained under the correctly specified model. In addition, the biases and RMSEs of the MLEs of VaR are notably higher with contamination than without it.

We then evaluate the MLE and MDPD estimation methods for VaR under model misspecification with data contamination. Specifically, for 10% contamination (i.e., a contamination proportion of 0.1), Table A19, Table A20 and Table A21 present the average VaR estimates along with their relative biases and relative RMSEs. These are computed with respect to the bias and RMSE of the MLE under the correctly specified model. The results indicate that the MDPD estimator of VaR outperforms the MLE, yielding smaller relative biases and relative RMSEs, particularly for values of the tuning parameter away from 0. The findings also show that the non-model-selection approach can lead to misleading VaR estimates when the model is misspecified.

Table A22, Table A23 and Table A24 present the average VaR estimates under 10% data contamination for two approaches:

- the model selection approach, i.e., the model ranking approach using only the top-ranked model;
- the model ranking approach using the top two and top three models.

The tables also give the relative biases and relative RMSEs of these estimates with respect to the MLE under the correctly specified model. The proportions of simulation runs in which the true model is included in the final VaR calculation, for each of these approaches, are shown in Table A19, Table A20 and Table A21.

These results show that the proposed model selection and ranking approaches, particularly those based on the minimized DPD, provide more accurate VaR estimates than a prespecified model when the dataset is contaminated. Interestingly, in some cases (e.g., when the true model is lognormal or Weibull), the true model is rarely used in the final VaR estimates. Overall, model averaging based on the minimized DPD gives more accurate VaR estimates and noticeably better reliability under contamination, even though the true model may seldom be correctly identified.

To keep this paper concise, we present only some representative simulation results here; additional simulation results are provided in Appendix B and Appendix D.

7. Extension to Candidate Models with Unequal Number of Parameters

In the preceding sections, the candidate probability models (see Section 2) are all two-parameter models and therefore have equal model complexity. This choice is common in comparative studies, as models with the same number of parameters allow for a fair comparison.

However, in some situations, researchers may consider probability models with different numbers of parameters. In such cases, model complexity must be explicitly accounted for when comparing model fits. In this section, we address the question of whether the model selection and ranking procedure based on either the LF or the DPD measure remains valid and practical when the candidate set contains models with unequal numbers of parameters. Although our earlier development assumed that all candidate models have the same number of unknown parameters, this section demonstrates how the proposed method can be extended to accommodate models with differing parameter counts.

When the likelihood-based estimation method described in Section 3.1 is used for parameter estimation, information criteria are widely adopted for model selection. Examples include the Akaike information criterion (AIC), the Bayesian information criterion (BIC), and the deviance information criterion (DIC) (see, for example, Burnham and Anderson 2002; Castilla et al. 2020; Karagrigoriou and Mattheou 2009). These criteria include a penalty for the number of model parameters, thereby accounting for differences in model complexity. For example, the AIC is given by

$$\mathrm{AIC} = -2 \log L(\hat{\boldsymbol{\theta}}) + 2d,$$

where $d$ is the number of parameters in the model and $L(\hat{\boldsymbol{\theta}})$ is the maximized value of the likelihood function defined in Equation (19), evaluated at the MLE $\hat{\boldsymbol{\theta}}$ defined in Equation (20). The AIC balances goodness of fit with parsimony by penalizing excessive parameters. It therefore favors models that remain simple yet adequately explain the data. A smaller AIC value reflects a more desirable trade-off between fit and complexity.
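As a small illustration of how the AIC handles unequal parameter counts, the sketch below compares a one-parameter exponential model with a two-parameter lognormal model. Both have closed-form MLEs. The lognormal data-generating choice and the function names are hypothetical. The example shows the computation only and does not reproduce Table 4.

```python
import math
import random
from statistics import fmean


def aic(loglik, d):
    """AIC = -2 * maximized log-likelihood + 2 * (number of parameters)."""
    return -2.0 * loglik + 2.0 * d


def exponential_max_loglik(x):
    lam = 1.0 / fmean(x)                      # closed-form MLE of the rate (d = 1)
    return sum(math.log(lam) - lam * v for v in x)


def lognormal_max_loglik(x):
    logs = [math.log(v) for v in x]
    mu = fmean(logs)                          # closed-form MLEs (d = 2)
    s2 = fmean([(lv - mu) ** 2 for lv in logs])
    return sum(-lv - 0.5 * math.log(2 * math.pi * s2) - (lv - mu) ** 2 / (2 * s2)
               for lv in logs)


rng = random.Random(3)
x = [rng.lognormvariate(0.0, 2.0) for _ in range(500)]
scores = {
    "exponential (d = 1)": aic(exponential_max_loglik(x), 1),
    "lognormal (d = 2)": aic(lognormal_max_loglik(x), 2),
}
for name, value in sorted(scores.items(), key=lambda kv: kv[1]):
    print(f"{name}: AIC = {value:.1f}")
```

The lognormal model pays a larger penalty for its extra parameter. It is still preferred here because its gain in maximized log-likelihood far exceeds that penalty.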

When the divergence-based estimation method described in Section 3.2 is employed, the robust divergence-based Bayesian criterion (DBBC) proposed by Kurata and Hamada (2020) can serve as a model selection and ranking tool. It is computed from two quantities: the minimized value of the DPD measure given in Equation (22), evaluated at the MDPD estimate, and the number of parameters in the model. Like the AIC, the DBBC penalizes models with more parameters, and a lower value indicates a better compromise between model fit and complexity.

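For illustration, consider a set of four candidate models, two of which generalize the other two and include them as special cases. Specifically, we use the exponentiated Weibull distribution, denoted EW (Mudholkar and Srivastava 1993), and the exponentiated Fréchet distribution, denoted EF (Nadarajah and Kotz 2003), to analyze the vehicle insurance dataset discussed in Section 3 and Section 6.1. The PDFs of EW and EF are given by

$$f_{\mathrm{EW}}(x; \boldsymbol{\theta}_{\mathrm{EW}}) = \frac{\alpha_1 \beta_1}{\sigma_1}\left(\frac{x}{\sigma_1}\right)^{\beta_1 - 1} \exp\left[-\left(\frac{x}{\sigma_1}\right)^{\beta_1}\right]\left\{1 - \exp\left[-\left(\frac{x}{\sigma_1}\right)^{\beta_1}\right]\right\}^{\alpha_1 - 1}, \quad x > 0,$$

$$f_{\mathrm{EF}}(x; \boldsymbol{\theta}_{\mathrm{EF}}) = \frac{\alpha_2 \beta_2}{\sigma_2}\left(\frac{\sigma_2}{x}\right)^{\beta_2 + 1} \exp\left[-\left(\frac{\sigma_2}{x}\right)^{\beta_2}\right]\left\{1 - \exp\left[-\left(\frac{\sigma_2}{x}\right)^{\beta_2}\right]\right\}^{\alpha_2 - 1}, \quad x > 0,$$

where $\boldsymbol{\theta}_{\mathrm{EW}} = (\sigma_1, \alpha_1, \beta_1)$ and $\boldsymbol{\theta}_{\mathrm{EF}} = (\sigma_2, \alpha_2, \beta_2)$ are the respective parameter vectors. Here $\sigma_1$ and $\sigma_2$ are the scale parameters, while $\alpha_1, \beta_1$ and $\alpha_2, \beta_2$ are the shape parameters. Setting $\alpha_1 = 1$ and $\alpha_2 = 1$ recovers the Weibull and Fréchet distributions, respectively.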
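The sketch below codes the EW and EF densities together with their CDFs and quantile functions; the quantile at level p is the VaR. It assumes the standard parameterizations, with scale `sigma` and shape parameters `alpha` and `beta`:

- EW CDF: [1 − exp{−(x/σ)^β}]^α;
- EF CDF: 1 − [1 − exp{−(σ/x)^β}]^α.

Setting `alpha = 1` gives the Weibull and Fréchet special cases. This is an illustration only, not the code used to produce Table 4 or Table 5, and the parameter values are arbitrary.

```python
import math


def ew_cdf(x, sigma, alpha, beta):
    """Exponentiated Weibull CDF: [1 - exp(-(x/sigma)^beta)]^alpha."""
    return (1.0 - math.exp(-((x / sigma) ** beta))) ** alpha


def ew_pdf(x, sigma, alpha, beta):
    z = (x / sigma) ** beta
    return ((alpha * beta / sigma) * (x / sigma) ** (beta - 1)
            * math.exp(-z) * (1.0 - math.exp(-z)) ** (alpha - 1))


def ew_var(p, sigma, alpha, beta):
    """Quantile (VaR) obtained by inverting the EW CDF."""
    return sigma * (-math.log(1.0 - p ** (1.0 / alpha))) ** (1.0 / beta)


def ef_cdf(x, sigma, alpha, beta):
    """Exponentiated Frechet CDF: 1 - [1 - exp(-(sigma/x)^beta)]^alpha."""
    return 1.0 - (1.0 - math.exp(-((sigma / x) ** beta))) ** alpha


def ef_pdf(x, sigma, alpha, beta):
    w = (sigma / x) ** beta
    return ((alpha * beta / sigma) * (sigma / x) ** (beta + 1)
            * math.exp(-w) * (1.0 - math.exp(-w)) ** (alpha - 1))


def ef_var(p, sigma, alpha, beta):
    """Quantile (VaR) obtained by inverting the EF CDF."""
    w = -math.log(1.0 - (1.0 - p) ** (1.0 / alpha))
    return sigma * w ** (-1.0 / beta)


# Illustrative parameter values (not estimates from the vehicle insurance data).
print(ew_var(0.95, 2.0, 1.7, 0.8), ef_var(0.95, 2.0, 1.7, 0.8))
```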

Table 4 presents the model ranking results based on the AIC and the DBBC for two values of the DPD tuning parameter. The four candidate models are two basic two-parameter models, the Fréchet (FR) and Weibull (WE) distributions, and their three-parameter generalizations, the exponentiated Fréchet (EF) and exponentiated Weibull (EW) distributions.

Under both the AIC and the DBBC, the EF and EW models consistently outperform their simpler counterparts (FR and WE, respectively) for all tuning parameter values considered here. This improvement can be attributed to the additional shape parameter in the EF and EW distributions. The extra parameter increases model flexibility and lets these models adapt better to the tail behavior and overall shape of the data distribution.

Importantly, both the AIC and the DBBC include a penalty term for model complexity, so a model is not favored solely because it has more parameters. EF and EW achieve lower (better) values of these penalized criteria. This indicates that the improvement in goodness of fit more than compensates for the increase in the number of parameters. In other words, the additional shape parameter provides meaningful explanatory power rather than fitting noise in the data.

These results suggest that, in this application, an additional shape parameter can lead to a statistically justifiable improvement in model performance, even after penalizing for the increased complexity. For illustration, the VaR estimates at the chosen confidence level for the four candidate models are reported in Table 5.

The methodology proposed here can also be applied with model selection criteria other than the AIC and the DBBC. For example, goodness-of-fit measures such as the Kolmogorov-Smirnov distance and the Anderson-Darling statistic can be used.
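Both statistics can be computed from the sorted sample and a fitted CDF with their standard computing formulas:

D_n = max_i max{i/n − F(x_(i)), F(x_(i)) − (i − 1)/n},

A² = −n − (1/n)·Σ_i (2i − 1)[ln F(x_(i)) + ln(1 − F(x_(n+1−i)))].

The sketch below is a minimal illustration with hypothetical function names. It treats the fitted CDF as given and does not compute p-values; with estimated parameters, those would require adjusted critical values or a parametric bootstrap.

```python
import math


def ks_distance(data, cdf):
    """Kolmogorov-Smirnov distance between the empirical CDF and a fitted CDF."""
    x = sorted(data)
    n = len(x)
    return max(max(i / n - cdf(v), cdf(v) - (i - 1) / n) for i, v in enumerate(x, start=1))


def anderson_darling(data, cdf):
    """Anderson-Darling statistic A^2 for a fitted CDF."""
    u = [cdf(v) for v in sorted(data)]
    n = len(u)
    s = sum((2 * i - 1) * (math.log(u[i - 1]) + math.log(1.0 - u[n - i]))
            for i in range(1, n + 1))
    return -n - s / n


# Toy check against the uniform CDF on (0, 1).
uniform_cdf = lambda v: min(max(v, 0.0), 1.0)
sample = [0.25, 0.5, 0.75]
print(ks_distance(sample, uniform_cdf), anderson_darling(sample, uniform_cdf))
```

Smaller values of either statistic indicate a closer fit. Either one could therefore replace the maximized likelihood when ranking candidate models.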

8. Concluding Remarks

The findings presented in this article provide significant insights into the implications and mitigation strategies for model misspecification in insurance claims data analysis. By exploring various probability models and adopting both likelihood-based and divergence-based estimation methods, this study highlights the importance of accurate model selection and ranking in minimizing the adverse effects of model uncertainty. The numerical examples and simulation studies demonstrate that the proposed model selection and ranking approaches, particularly those based on the divergence measure, offer robust solutions for estimating risk measures such as VaR.

This research has direct practical implications, as these methods help address inaccuracies arising from model misspecification. They give practicing and academic actuaries tools to mitigate risks and obtain more reliable risk assessments, even when the model is misspecified. In practice, relying on a single, potentially misspecified model can yield large errors and misleading conclusions about VaR. Our approach aggregates across competing models and uses robust estimators when appropriate. It thereby yields more stable VaR estimates and decisions that are less sensitive to outliers, which are common in insurance claims data.

This, in turn, enhances the reliability of insurance data analysis, ultimately leading to better decision-making in the insurance industry. Future research could expand on these methods to refine the accuracy and applicability of model selection and ranking approaches in various actuarial contexts.

A few limitations of the proposed methodologies should also be acknowledged. Under model uncertainty, the true data-generating process is unknown, and all candidate parametric models are approximations; likelihood-based and divergence-based estimators target pseudo-true parameters, and the resulting risk measures may still be biased if the candidate models are poorly specified. Although aggregating across multiple probability distributions and employing robust estimators such as the MDPD can substantially reduce sensitivity to misspecification and outliers, the performance of the approach ultimately depends on the researcher’s ability to select a reasonably rich and flexible set of candidate models. If these candidates are uniformly inadequate, the resulting estimates may remain unreliable.