Online Assessment of Motor, Cognitive, and Communicative Achievements in 4-Month-Old Infants

Abstract

1. Introduction

2. Materials and Methods

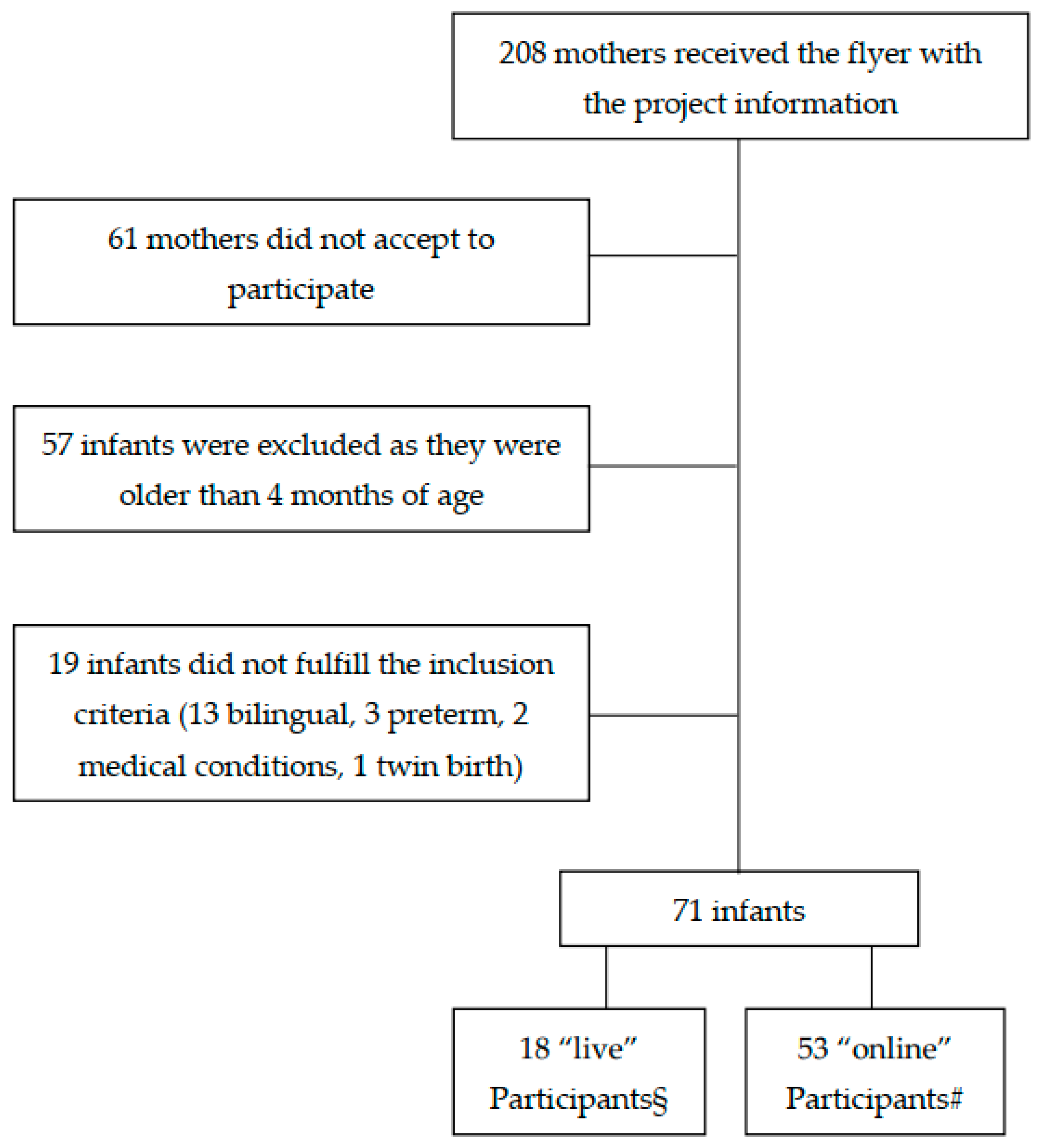

2.1. Participants

2.2. Measures

2.3. Procedure

2.4. Data Analyses

3. Results

4. Discussion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

| Variable | Group | Testing Modality: Online (N = 53) | Testing Modality: Live (N = 18) | Student's t-test / Chi-square |
|---|---|---|---|---|
| Infant age (months, mean ± standard deviation) | | 4.11 ± 0.21 | 4.19 ± 0.15 | t(69) = −1.217, p = 0.228 |
| Infant gender (n and %) | Males | 27 (50.9) | 10 (55.6) | χ²(1) = 0.115, p = 0.735 |
| | Females | 26 (49.1) | 8 (44.4) | |
| Number of siblings (n and %) | Zero | 30 (56.6) | 9 (50.0) | χ²(3) = 3.154, p = 0.368 |
| | One | 21 (39.6) | 7 (38.9) | |
| | Two | 2 (3.8) | 1 (5.6) | |
| | Three | 0 | 1 (5.6) | |
| Infant birth weight for gestational age (n and %) | Adequate for gestational age | 42 (79.2) | 14 (77.8) | χ²(2) = 2.836, p = 0.242 |
| | Small for gestational age | 5 (9.4) | 0 | |
| | Large for gestational age | 6 (11.3) | 4 (22.2) | |
| Maternal education (n and %) | Middle school | 0 | 1 (5.6) | χ²(4) = 3.348, p = 0.501 |
| | High school | 2 (3.8) | 1 (5.6) | |
| | Bachelor's | 9 (17.0) | 2 (11.1) | |
| | Master's | 33 (62.3) | 11 (61.1) | |
| | PhD or equivalent | 9 (17.0) | 3 (16.7) | |
| Income (EUR) (n and %) | <EUR 10,000 | 1 (1.9) | 0 | χ²(9) = 6.134, p = 0.726 |
| | EUR 10,000–19,000 | 6 (11.3) | 1 (5.6) | |
| | EUR 20,000–29,000 | 5 (9.4) | 2 (11.1) | |
| | EUR 30,000–39,000 | 7 (13.2) | 1 (5.6) | |
| | EUR 40,000–49,000 | 8 (15.1) | 3 (16.7) | |
| | EUR 50,000–59,000 | 4 (7.5) | 4 (22.2) | |
| | EUR 60,000–69,000 | 2 (3.8) | 1 (5.6) | |
| | EUR 70,000–79,000 | 2 (3.8) | 1 (5.6) | |
| | EUR 80,000–89,000 | 3 (5.7) | 0 | |
| | >EUR 100,000 | 2 (3.8) | 0 | |
| | Missing | 13 (24.5) | 5 (27.8) | |
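
As a check on how the chi-square entries in this table can be read, the infant gender comparison can be recomputed from the reported counts. This is a minimal sketch that assumes a Pearson chi-square without continuity correction (the source does not state which variant was used); the function names `pearson_chi_square` and `p_value_df1` are illustrative, not taken from the article.

```python
import math


def pearson_chi_square(table):
    """Return the Pearson chi-square statistic (no continuity correction,
    an assumption) and the degrees of freedom for a contingency table."""
    row_totals = [sum(row) for row in table]
    col_totals = [sum(col) for col in zip(*table)]
    grand_total = sum(row_totals)
    chi2 = 0.0
    for i, row in enumerate(table):
        for j, observed in enumerate(row):
            # Expected count under independence of row and column factors.
            expected = row_totals[i] * col_totals[j] / grand_total
            chi2 += (observed - expected) ** 2 / expected
    df = (len(table) - 1) * (len(table[0]) - 1)
    return chi2, df


def p_value_df1(chi2):
    """Upper-tail p-value of a chi-square statistic; valid only for df = 1."""
    return math.erfc(math.sqrt(chi2 / 2.0))


# Counts from the table: rows = males, females; columns = online, live.
gender_counts = [[27, 10], [26, 8]]
chi2, df = pearson_chi_square(gender_counts)
p = p_value_df1(chi2)
print(f"chi2({df}) = {chi2:.3f}, p = {p:.3f}")
# prints: chi2(1) = 0.115, p = 0.735
```

Under that assumption the output agrees with the reported χ²(1) = 0.115, p = 0.735. The closed-form p-value used here holds only for one degree of freedom; the other rows of the table would need a general chi-square distribution function.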

| Online Assessment Subscale | Test Modality | N | Mean | Standard Deviation | Levene's Test (F) | Levene's Test (p) | t | df | p | Observed Cohen's d | Minimum Detectable Cohen's d # |
|---|---|---|---|---|---|---|---|---|---|---|---|
| Cognitive | Live | 18 | 7.389 | 0.916 | 2.273 | 0.137 | −1.377 | 62 | 0.174 | 0.38 | 0.79 |
| | Online | 46 | 7.674 | 0.668 | | | | | | | |
| Receptive Communication | Live | 18 | 4.440 | 0.511 | 0.101 | 0.752 | 1.848 | 43 | 0.072 | 0.56 | 0.87 |
| | Online | 27 | 4.110 | 0.641 | | | | | | | |
| Expressive Communication | Live | 18 | 5.670 | 0.485 | 4.354 | 0.041 | 1.458 | 47.853 | 0.151 § | 0.42 | 0.79 |
| | Online | 46 | 5.430 | 0.750 | | | | | | | |
| Fine Motor | Live | 18 | 5.610 | 1.577 | 3.945 | 0.051 | −1.159 | 62 | 0.251 § | 0.30 | 0.79 |
| | Online | 46 | 6.070 | 1.340 | | | | | | | |
| Gross Motor | Live | 17 | 8.240 | 1.821 | 0.096 | 0.759 | 0.825 | 45 | 0.414 | 0.25 | 0.87 |
| | Online | 30 | 7.800 | 1.690 | | | | | | | |
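
The t and Cohen's d columns above can be related to the reported means, standard deviations, and group sizes. The sketch below recomputes the Cognitive row, assuming an equal-variance Student's t-test and a pooled-standard-deviation Cohen's d; the source does not spell out either formula, so both are assumptions, and the helper names are illustrative.

```python
import math


def pooled_sd(n1, sd1, n2, sd2):
    """Pooled standard deviation of two independent groups."""
    return math.sqrt(((n1 - 1) * sd1**2 + (n2 - 1) * sd2**2) / (n1 + n2 - 2))


def student_t(n1, m1, sd1, n2, m2, sd2):
    """Equal-variance Student's t statistic and its degrees of freedom,
    computed from summary statistics only."""
    sp = pooled_sd(n1, sd1, n2, sd2)
    t = (m1 - m2) / (sp * math.sqrt(1.0 / n1 + 1.0 / n2))
    return t, n1 + n2 - 2


def cohens_d(n1, m1, sd1, n2, m2, sd2):
    """Cohen's d using the pooled standard deviation (assumed variant)."""
    return (m1 - m2) / pooled_sd(n1, sd1, n2, sd2)


# Cognitive subscale, as reported in the table: (N, mean, standard deviation).
live = (18, 7.389, 0.916)
online = (46, 7.674, 0.668)

t, df = student_t(*live, *online)
d = cohens_d(*live, *online)
print(f"t({df}) = {t:.3f}, |d| = {abs(d):.2f}")
# prints: t(62) = -1.377, |d| = 0.38
```

With these assumptions the Cognitive row is reproduced (t(62) = −1.377, observed d = 0.38). The Expressive Communication row reports a fractional df (47.853), which suggests an unequal-variance correction was applied there; this sketch does not cover that case.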

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Cite

Gasparini, C.; Caravale, B.; Focaroli, V.; Paoletti, M.; Pecora, G.; Bellagamba, F.; Chiarotti, F.; Gastaldi, S.; Addessi, E. Online Assessment of Motor, Cognitive, and Communicative Achievements in 4-Month-Old Infants. Children 2022, 9, 424. https://doi.org/10.3390/children9030424
