Assessing the Predictive Validity of Risk Assessment Tools in Child Health and Well-Being: A Meta-Analysis

Abstract

1. Introduction

- (a)

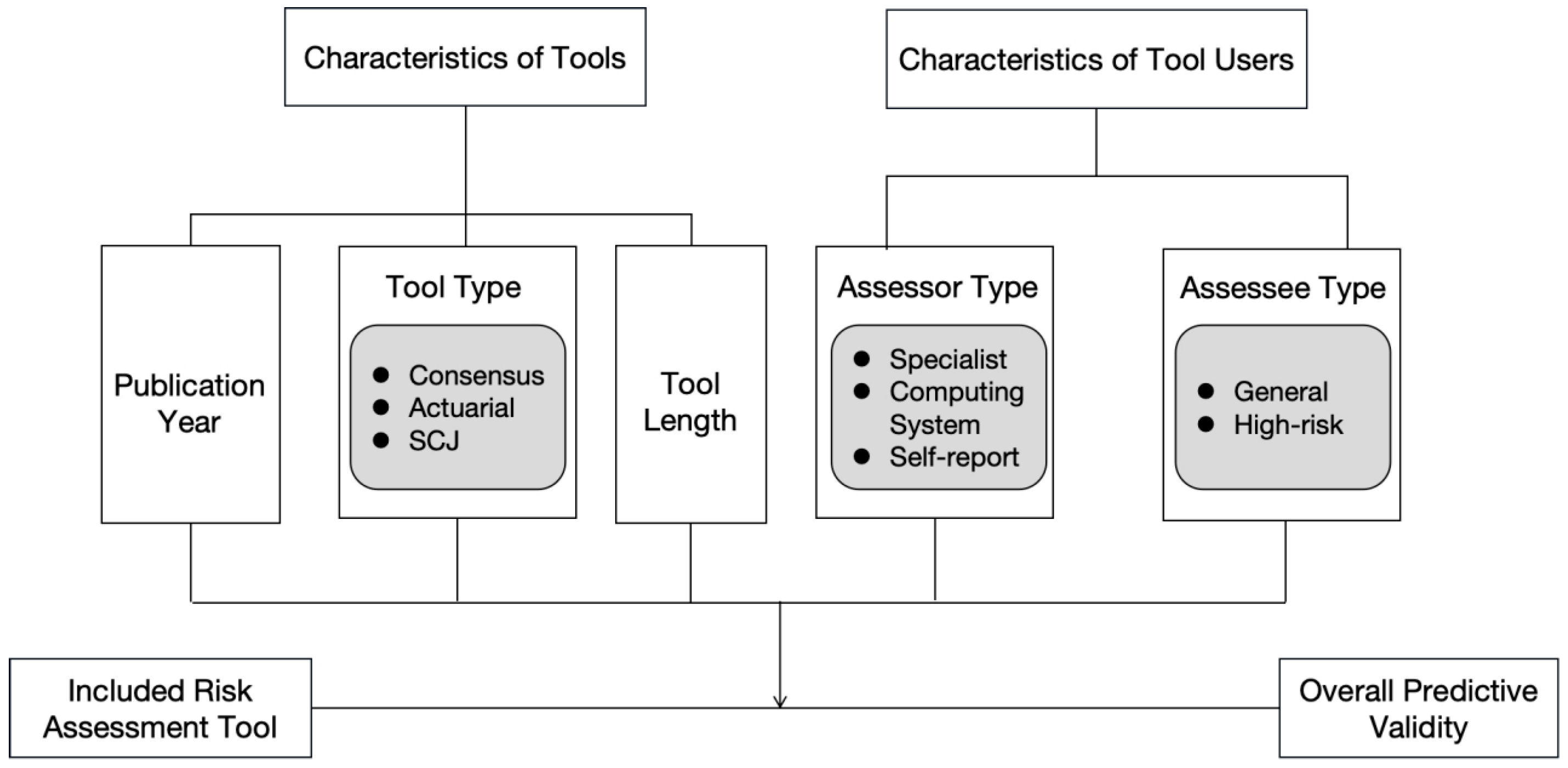

- Consensus instruments are developed by compiling and refining risk factors through expert analyses of various case types, resulting in a consensus-based checklist. These tools support child healthcare and well-being professionals in identifying both the conditions that contribute to harmful behaviors and the family strengths that enhance caregivers’ protective capacities [8,9,10]. Such assessments rely on the evaluator’s values, professional expertise, and capacity to integrate and apply knowledge to form subjective, descriptive judgments [11]. These tools are particularly valuable in addressing complex cases [12,13].

- (b)

- Actuarial instruments are grounded in utility theory and rely on equations, formulas, charts, algorithms, or actuarial tables to generate graded estimates of the likelihood of harm to a child, typically expressed through standardized scoring systems [14,15,16]. These tools are valued for their predictive validity and their capacity to enhance consistency in decisions related to family intervention and service provision [17]. However, the predictive variables used in these instruments are derived from large-scale studies or meta-analyses, and their inclusion is contingent upon the quality and robustness of existing research [18].

- (c)

- Structured clinical judgment (SCJ) instruments, a more recent development in the field, combine professional judgment methodologies to bridge the gap between clinical practice and scientifically grounded (actuarial) approaches to risk assessment [16,19]. These instruments are not direct hybrids of consensus- and actuarial-based tools; rather, they are purposefully designed to mitigate the limitations of both while preserving their respective strengths [20]. SCJ tools provide structured guidelines informed by the operationalization of variables across multiple dimensions. While some of these guidelines are empirically supported, the final decision-making authority remains with the practitioner [15,18]. Unlike actuarial instruments, the specific items included in SCJ tools are derived from comprehensive literature reviews rather than specific datasets [20].

2. Literature Review and Framework

3. Methods and Materials

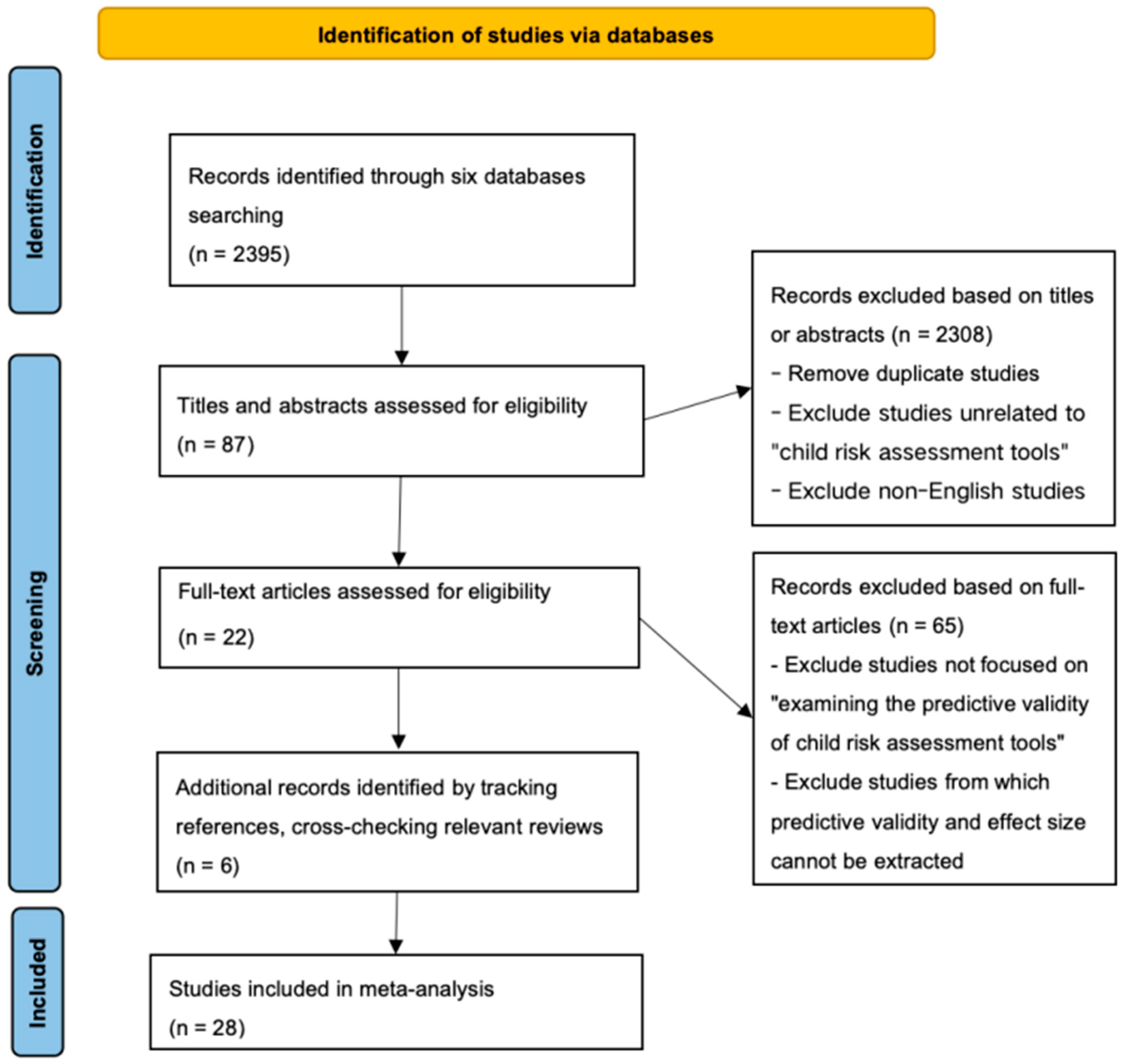

3.1. Data Collection

3.2. Coding and Quality Assessment

3.3. Statistical Analysis Process

4. Results

4.1. Descriptive Analysis

4.2. Bias Testing

4.3. Main Effect Analysis

4.4. Moderation Effect Analysis

5. Discussion

5.1. Implications for Practice

5.2. Strengths and Limitations of This Study

6. Conclusions

Funding

Conflicts of Interest

Appendix A

| Tool Abbreviation Name | Tool Full Name |
|---|---|
| AAPI–2 | The Adult-Adolescent Parenting Inventory–2 |
| ARIJ | Actuarial Risk Assessment Instrument for Child Protection |
| CAP | Child Abuse Potential Inventory |
| CARE-NL | Child Abuse Risk Evaluation—Dutch version |
| CARAS | Child Abuse Risk Assessment Scale |
| C-CAPS | Cleveland Child Abuse Potential Scale |
| CFAFA | California Family Assessment Factor Analysis |
| CFRA | California Family Risk Assessment |
| CGRA | Concept-guided risk assessment |
| CLCS | Check List of Child Safety |
| CT | Connecticut risk assessment |
| CTSPC | Parent-Child Conflict Tactics Scales |
| ERPANS | Early Risks of Physical Abuse and Neglect Scale |
| FACE-CARAS | Functional Analysis in Care Environments-Child and Adolescent Risk-Assessment Suite |
| IPARAN | Identification of Parents at Risk for child Abuse and Neglect |
| M-SDM FRA | Minnesota SDM Family Risk Assessment |
| ORA | Ontario’s risk assessment tool |
| PMCTR-J | Prediction model for child maltreatment recurrence in Japan |
| PRM | Predictive risk model |
| RAM | Risk Assessment Matrix |
| SDM | Michigan Structured Decision-Making System’s Family Risk Assessment of Abuse and Neglect |
| SPARK | Structured Problem Analysis of Raising Kids |
| SPUTOVAMO-R2/R3 | SPUTOVAMO-R2/R3 |
| TeenHITSS | Teen Hurt-Insult-Threaten-Scream-Sex |
| WRAM | Washington Risk Assessment Matrix |

References

1. WHO. Countries Pledge to Act on Childhood Violence Affecting Some 1 Billion Children. Available online: https://www.who.int/news/item/07-11-2024-countries-pledge-to-act-on-childhood-violence-affecting--some-1-billion-children (accessed on 3 January 2025).
2. UNICEF China. Child Protection. Available online: https://www.unicef.cn/en/what-we-do/child-protection (accessed on 3 January 2025).
3. Benjelloun, G. Hidden Scars: How Violence Harms the Mental Health of Children; Office of the Special Representative of the Secretary-General on Violence against Children, United Nations: San Francisco, CA, USA, 2020; Available online: https://violenceagainstchildren.un.org/news/hidden-scars-how-violence-harms-mental-health-children (accessed on 3 January 2025).
4. Pecora, P.J. Investigating Allegations of Child Maltreatment: The Strengths and Limitations of Current Risk Assessment Systems. Child. Youth Serv. 1991, 15, 73–92.
5. Parton, N.; Thorpe, D.; Wattam, C. Child Protection: Risk and the Moral Order, 1st ed.; Bloomsbury Academic: London, UK, 1997; ISBN 978-1-350-36265-9.
6. English, D.J.; Pecora, P.J. Risk Assessment as a Practice Method in Child Protective Services. Child. Welf. 1994, 73, 451–473.
7. van der Put, C.E.; Assink, M.; Boekhout van Solinge, N.F. Predicting Child Maltreatment: A Meta-Analysis of the Predictive Validity of Risk Assessment Instruments. Child Abus. Negl. 2017, 73, 71–88.
8. Baird, C.; Wagner, D. The Relative Validity of Actuarial- and Consensus-Based Risk Assessment Systems. Child. Youth Serv. Rev. 2000, 22, 839–871.
9. Cash, S.J. Risk Assessment in Child Welfare: The Art and Science. Child. Youth Serv. Rev. 2001, 23, 811–830.
10. Barber, J.; Trocmé, N.; Goodman, D.; Shlonsky, A.; Black, T.; Leslie, B. The Reliability and Predictive Validity of Consensus-Based Risk Assessment; Centre of Excellence for Child Welfare: Toronto, ON, Canada, 2000.
11. Doueck, H.J.; Levine, M.; Bronson, D.E. Risk Assessment in Child Protective Services: An Evaluation of the Child at Risk Field System. J. Interpers. Violence 1993, 8, 446–467.
12. Schwalbe, C. Re-Visioning Risk Assessment for Human Service Decision Making. Child. Youth Serv. Rev. 2004, 26, 561–576.
13. Stewart, A.; Thompson, C. Comparative Evaluation of Child Protection Assessment Tools; Griffith University: Brisbane, Australia, 2004.
14. Grove, W.M.; Meehl, P.E. Comparative Efficiency of Informal (Subjective, Impressionistic) and Formal (Mechanical, Algorithmic) Prediction Procedures: The Clinical–Statistical Controversy. Psychol. Public. Policy Law. 1996, 2, 293–323.
15. Barlow, J.; Fisher, J.D.; Jones, D. Systematic Review of Models of Analysing Significant Harm; Department for Education: London, UK, 2012.
16. Doyle, M.; Dolan, M. Violence Risk Assessment: Combining Actuarial and Clinical Information to Structure Clinical Judgements for the Formulation and Management of Risk. J. Psychiatr. Ment. Health Nurs. 2002, 9, 649–657.
17. Hart, S.D. The Role of Psychopathy in Assessing Risk for Violence: Conceptual and Methodological Issues. Leg. Criminol. Psychol. 1998, 3, 121–137.
18. Heilbrun, K.; Yasuhara, K.; Shah, S. Violence Risk Assessment Tools: Overview and Critical Analysis. In Handbook of Violence Risk Assessment; International Perspectives on Forensic Mental Health; Routledge/Taylor & Francis Group: New York, NY, USA, 2010; pp. 1–17. ISBN 978-0-415-96214-8.
19. Dolan, M.; Doyle, M. Violence Risk Prediction. Clinical and Actuarial Measures and the Role of the Psychopathy Checklist. Br. J. Psychiatry 2000, 177, 303–311.
20. Douglas, K.S.; Reeves, K.A. Historical-Clinical-Risk Management-20 (HCR-20) Violence Risk Assessment Scheme: Rationale, Application, and Empirical Overview. In Handbook of Violence Risk Assessment; International Perspectives on Forensic Mental Health; Routledge/Taylor & Francis Group: New York, NY, USA, 2010; pp. 147–185. ISBN 978-0-415-96214-8.
21. Russell, J. Predictive Analytics and Child Protection: Constraints and Opportunities. Child Abus. Negl. 2015, 46, 182–189.
22. D’andrade, A.; Austin, M.J.; Benton, A. Risk and Safety Assessment in Child Welfare: Instrument Comparisons. J. Evid.-Based Soc. Work 2008, 5, 31–56.
23. Johnson, W. Post-Battle Skirmish in the Risk Assessment Wars: Rebuttal to the Response of Baumann and Colleagues to Criticism of Their Paper, “Evaluating the Effectiveness of Actuarial Risk Assessment Models”. Child. Youth Serv. Rev. 2006, 28, 1124–1132.
24. Johnson, W. The Risk Assessment Wars: A Commentary: Response to “Evaluating the Effectiveness of Actuarial Risk Assessment Models” by Donald Baumann, J. Randolph Law, Janess Sheets, Grant Reid, and J. Christopher Graham, Children and Youth Services Review, 27, pp. 465–490. Child. Youth Serv. Rev. 2006, 28, 704–714.
25. Hanson, R.K.; Morton-Bourgon, K.E. The Accuracy of Recidivism Risk Assessments for Sexual Offenders: A Meta-Analysis of 118 Prediction Studies. Psychol. Assess. 2009, 21, 1–21.
26. Baumann, D.J.; Law, J.R.; Sheets, J.; Reid, G.; Graham, J.C. Evaluating the Effectiveness of Actuarial Risk Assessment Models. Child. Youth Serv. Rev. 2005, 27, 465–490.
27. Bartelink, C.; van Yperen, T.A.; ten Berge, I.J. Deciding on Child Maltreatment: A Literature Review on Methods That Improve Decision-Making. Child Abus. Negl. 2015, 49, 142–153.
28. van der Put, C.E.; Assink, M.; Stams, G.J.J.M. The Effectiveness of Risk Assessment Methods: Commentary on “Deciding on Child Maltreatment: A Literature Review on Methods That Improve Decision-Making”. Child Abus. Negl. 2016, 59, 128–129.
29. Bartelink, C.; van Yperen, T.A.; Ten Berge, I.J. Reply to the Letter to the Editor of Van Der Put, Assink, & Stams about “Deciding on Child Maltreatment: A Literature Review on Methods That Improve Decision-Making”. Child Abus. Negl. 2016, 59, 130–132.
30. Saini, S.M.; Hoffmann, C.R.; Pantelis, C.; Everall, I.P.; Bousman, C.A. Systematic Review and Critical Appraisal of Child Abuse Measurement Instruments. Psychiatry Res. 2019, 272, 106–113.
31. McNellan, C.R.; Gibbs, D.J.; Knobel, A.S.; Putnam-Hornstein, E. The Evidence Base for Risk Assessment Tools Used in U.S. Child Protection Investigations: A Systematic Scoping Review. Child Abus. Negl. 2022, 134, 105887.
32. Schwalbe, C.S. Risk Assessment for Juvenile Justice: A Meta-Analysis. Law Hum. Behav. 2007, 31, 449–462.
33. Glass, G.V. Primary, Secondary, and Meta-Analysis of Research. Educ. Res. 1976, 5, 3–8.
34. Zeng, X.; Zhuang, L.; Yang, Z.; Dong, S. Quality Assessment Tools for Non-Randomized Experimental Studies, Diagnostic Test Studies, and Animal Experiments: A Series on Meta-Analysis (No. 7). Chin. J. Evid.-Based Cardiovasc. Med. 2012, 4, 496–499.
35. Swets, J.A.; Dawes, R.M.; Monahan, J. Psychological Science Can Improve Diagnostic Decisions. Psychol. Sci. Public Interest 2000, 1, 1–26.
36. Rice, M.E.; Harris, G.T. Comparing Effect Sizes in Follow-up Studies: ROC Area, Cohen’s d, and r. Law Hum. Behav. 2005, 29, 615–620.
37. Ruscio, J. A Probability-Based Measure of Effect Size: Robustness to Base Rates and Other Factors. Psychol. Methods 2008, 13, 19–30.
38. Van der Put, C.E.; Stolwijk, I.J.; Staal, I.I.E. Early Detection of Risk for Maltreatment within Dutch Preventive Child Health Care: A Proxy-Based Evaluation of the Long-Term Predictive Validity of the SPARK Method. Child Abus. Negl. 2023, 143, 106316.
39. Day, P.; Woods, S.; Gonzalez, L.; Fernandez-Criado, R.; Shakil, A. Validating the TeenHITSS to Assess Child Abuse in Adolescent Populations. Fam. Med. 2023, 55, 12–19.
40. Moon, C.A. Construct and Predictive Validity of the AAPI-2 in a Low-Income, Urban, African American Sample. Child. Youth Serv. Rev. 2022, 142, 106646.
41. Vial, A.; van der Put, C.; Stams, G.J.J.M.; Dinkgreve, M.; Assink, M. Validation and Further Development of a Risk Assessment Instrument for Child Welfare. Child Abus. Negl. 2021, 117, 105047.
42. de Ruiter, C.; Hildebrand, M.; van der Hoorn, S. The Child Abuse Risk Evaluation Dutch Version (CARE-NL): A Retrospective Validation Study. J. Fam. Trauma Child Custody Child Dev. 2020, 17, 37–57.
43. Schols, M.W.A.; Serie, C.M.B.; Broers, N.J.; de Ruiter, C. Factor Analysis and Predictive Validity of the Early Risks of Physical Abuse and Neglect Scale (ERPANS): A Prospective Study in Dutch Public Youth Healthcare. Child Abus. Negl. 2019, 88, 71–83.
44. Evans, S.A.; Young, D.; Tiffin, P.A. Predictive Validity and Interrater Reliability of the FACE-CARAS Toolkit in a CAMHS Setting. Crim. Behav. Ment. Health 2019, 29, 47–56.
45. Lo, W.C.; Fung, G.P.; Cheung, P.C. Factors Associated with Multidisciplinary Case Conference Outcomes in Children Admitted to a Regional Hospital in Hong Kong with Suspected Child Abuse: A Retrospective Case Series with Internal Comparison. Hong Kong Med. J. 2017, 23, 454–461.
46. van der Put, C.E.; Bouwmeester-Landweer, M.B.R.; Landsmeer-Beker, E.A.; Wit, J.M.; Dekker, F.W.; Kousemaker, N.P.J.; Baartman, H.E.M. Screening for Potential Child Maltreatment in Parents of a Newborn Baby: The Predictive Validity of an Instrument for Early Identification of Parents At Risk for Child Abuse and Neglect (IPARAN). Child Abus. Negl. 2017, 70, 160–168.
47. Schouten, M.C.M.; van Stel, H.F.; Verheij, T.J.M.; Houben, M.L.; Russel, I.M.B.; Nieuwenhuis, E.E.S.; van de Putte, E.M. The Value of a Checklist for Child Abuse in Out-of-Hours Primary Care: To Screen or Not to Screen. PLoS ONE 2017, 12, e0165641.
48. van der Put, C.E.; Hermanns, J.; van Rijn-van Gelderen, L.; Sondeijker, F. Detection of Unsafety in Families with Parental and/or Child Developmental Problems at the Start of Family Support. BMC Psychiatry 2016, 16, 15.
49. Horikawa, H.; Suguimoto, S.P.; Musumari, P.M.; Techasrivichien, T.; Ono-Kihara, M.; Kihara, M. Development of a Prediction Model for Child Maltreatment Recurrence in Japan: A Historical Cohort Study Using Data from a Child Guidance Center. Child Abus. Negl. 2016, 59, 55–65.
50. van der Put, C.E.; Assink, M.; Stams, G.J.J.M. Predicting Relapse of Problematic Child-Rearing Situations. Child. Youth Serv. Rev. 2016, 61, 288–295.
51. Johnson, W.; Clancy, T.; Bastian, P. Child Abuse/Neglect Risk Assessment under Field Practice Conditions: Tests of External and Temporal Validity and Comparison with Heart Disease Prediction. Child. Youth Serv. Rev. 2015, 56, 76–85.
52. Dankert, E.W.; Cuddeback, G.S.; Scheurich, M.J.; Green, D.A.; Crichton, K. Risk Assessment Validation: A Prospective Study; California Department of Social Services, Children and Family Services Division: Fort Bragg, CA, USA, 2014.
53. Vaithianathan, R.; Maloney, T.; Putnam-Hornstein, E.; Jiang, N. Children in the Public Benefit System at Risk of Maltreatment: Identification Via Predictive Modeling. Am. J. Prev. Med. 2013, 45, 354–359.
54. Coohey, C.; Johnson, K.; Renner, L.M.; Easton, S.D. Actuarial Risk Assessment in Child Protective Services: Construction Methodology and Performance Criteria. Child. Youth Serv. Rev. 2013, 35, 151–161.
55. Staal, I.I.E.; Hermanns, J.M.A.; Schrijvers, A.J.P.; van Stel, H.F. Risk Assessment of Parents’ Concerns at 18 Months in Preventive Child Health Care Predicted Child Abuse and Neglect. Child Abus. Negl. 2013, 37, 475–484.
56. Chan, K.L. Evaluating the Risk of Child Abuse: The Child Abuse Risk Assessment Scale (CARAS). J. Interpers. Violence 2012, 27, 951–973.
57. Ezzo, F.; Young, K. Child Maltreatment Risk Inventory: Pilot Data for the Cleveland Child Abuse Potential Scale. J. Fam. Violence 2012, 27, 145–155.
58. Baumann, D.J.; Grigsby, C.; Sheets, J.; Reid, G.; Graham, J.C.; Robinson, D.; Holoubek, J.; Farris, J.; Jeffries, V.; Wang, E. Concept Guided Risk Assessment: Promoting Prediction and Understanding. Child. Youth Serv. Rev. 2011, 33, 1648–1657.
59. Johnson, W.L. The Validity and Utility of the California Family Risk Assessment under Practice Conditions in the Field: A Prospective Study. Child Abus. Negl. 2011, 35, 18–28.
60. Barber, J.G.; Shlonsky, A.; Black, T.; Goodman, D.; Trocmé, N. Reliability and Predictive Validity of a Consensus-Based Risk Assessment Tool. J. Public Child Welf. 2008, 2, 173–195.
61. Sledjeski, E.M.; Dierker, L.C.; Brigham, R.; Breslin, E. The Use of Risk Assessment to Predict Recurrent Maltreatment: A Classification and Regression Tree Analysis (CART). Prev. Sci. 2008, 9, 28–37.
62. Ondersma, S.J.; Chaffin, M.J.; Mullins, S.M.; LeBreton, J.M. A Brief Form of the Child Abuse Potential Inventory: Development and Validation. J. Clin. Child Adolesc. Psychol. 2005, 34, 301–311.
63. Loman, L.A.; Siegel, G.L. Predictive Validity of the Family Risk Assessment Instrument-An Evaluation of the Minnesota SDM Family Risk Assessment: Final Report; Institute of Applied Research: St. Louis, MO, USA, 2004.
64. Chaffin, M.; Valle, L.A. Dynamic Prediction Characteristics of the Child Abuse Potential Inventory. Child Abus. Negl. 2003, 27, 463–481.
65. Rosenthal, R. The File Drawer Problem and Tolerance for Null Results. Psychol. Bull. 1979, 86, 638–641.
66. Egger, M.; Smith, G.D.; Schneider, M.; Minder, C. Bias in Meta-Analysis Detected by a Simple, Graphical Test. BMJ 1997, 315, 629–634.
67. Flynn, G.; O’Neill, C.; McInerney, C.; Kennedy, H.G. The DUNDRUM-1 Structured Professional Judgment for Triage to Appropriate Levels of Therapeutic Security: Retrospective-Cohort Validation Study. BMC Psychiatry 2011, 11, 43.
68. Gillingham, P. Can Predictive Algorithms Assist Decision-Making in Social Work with Children and Families? Child. Abus. Rev. 2019, 28, 114–126.
69. Hirschman, D.; Bosk, E.A. Standardizing Biases: Selection Devices and the Quantification of Race. Sociol. Race Ethn. 2019, 6, 348–364.
70. Cash, S.J.; Berry, M. Family Characteristics and Child Welfare Services: Does the Assessment Drive Service Provision? Fam. Soc. 2002, 83, 499–507.
71. White, A.; Walsh, P. Risk Assessment in Child Welfare: An Issues Paper; Ashfield, N.S.W., Ed.; Centre for Parenting and Research: Melbourne, Australia, 2006; ISBN 978-1-74190-016-3.
72. Boer, D.P.; Hart, S.D. Sex Offender Risk Assessment: Research, Evaluation, ‘Best-Practice’ Recommendations and Future Directions. In Violent and Sexual Offenders; Willan: London, UK, 2009; ISBN 978-0-203-72240-4.

| Study | Tool Abbreviation Name | Tool Type | Tool Length | Assessor Type | Assessee Type | Sample Size |
|---|---|---|---|---|---|---|
| Van der Put 2023 [38] | SPARK | SCJ | 24 | Specialists | General Groups | 1582 |
| Day 2023 [39] | TeenHITSS | Actuarial | 5 | Self-report | General Groups | 251 |
| Day 2023 [39] | CTSPC | Actuarial | 22 | Self-report | General Groups | 251 |
| Moon 2022 [40] | AAPI–2 | Consensus | 40 | Self-report | High-risk Groups | 218 |
| Vial 2021 [41] | ARIJ | Actuarial | 30 | Specialists | High-risk Groups | 3681 |
| Ruiter 2020 [42] | CARE-NL | SCJ | 18 | Specialists | High-risk Groups | 211 |
| Schols 2019 [43] | ERPANS | Actuarial | 31 | Specialists | General Groups | 1257 |
| Evans 2019 [44] | FACE-CARAS | Actuarial | 48 | Specialists | High-risk Groups | 123 |
| Lo 2017 [45] | RAM | SCJ | 15 | Specialists | High-risk Groups | 265 |
| Van der Put 2017 [46] | IPARAN | Actuarial | 16 | Self-report | General Groups | 4692 |
| Schouten 2017 [47] | SPUTOVAMO-R2/R3 | Actuarial | 5 | Self-report | General Groups | 50,671 |
| Van der Put 2016 [48] | CFRA | Actuarial | 25 | Specialists | High-risk Groups | 491 |
| Horikawa 2016 [49] | PMCTR-J | Actuarial | 6 | Specialists | High-risk Groups | 716 |
| Van der Put 2016 [50] | CLCS | SCJ | 75 | Specialists | High-risk Groups | 3963 |
| Johnson 2015 [51] | CFRA | Actuarial | 25 | Specialists | General Groups | 236 |
| Dankert 2014 [52] | CFRA | Actuarial | 25 | Specialists | High-risk Groups | 11,444 |
| Vaithianathan 2013 [53] | PRM | Actuarial | 132 | Computing System | High-risk Groups | 17,396 |
| Coohey 2013 [54] | CFRA | Actuarial | 21 | Specialists | High-risk Groups | 6832 |
| Staal 2013 [55] | SPARK | SCJ | 16 | Specialists | General Groups | 1850 |
| Chan 2012 [56] | CARAS | Actuarial | 64 | Self-report | General Groups | 2363 |
| Ezzo 2012 [57] | C-CAPS | Actuarial | 40 | Specialists | High-risk Groups | 118 |
| Baumann 2011 [58] | CGRA | SCJ | 77 | Specialists | High-risk Groups | 1199 |
| Johnson 2011 [59] | CFRA | Actuarial | 20 | Specialists | High-risk Groups | 6543 |
| Barber 2008 [60] | ORA | Consensus | 22 | Specialists | High-risk Groups | 1118 |
| Sledjeski 2008 [61] | CT | Actuarial | 24 | Specialists | High-risk Groups | 244 |
| Ondersma 2005 [62] | CAP | Actuarial | 160 | Self-report | High-risk Groups | 713 |
| Loman 2004 [63] | M-SDM FRA | SCJ | 25 | Specialists | High-risk Groups | 15,100 |
| Chaffin 2003 [64] | CAP | Actuarial | 160 | Self-report | High-risk Groups | 459 |
| Baird 2000 [8] | SDM | Actuarial | NR | Specialists | High-risk Groups | 929 |
| Baird 2000 [8] | WRAM | Consensus | NR | Specialists | High-risk Groups | 908 |
| Baird 2000 [8] | CFAFA | Consensus | NR | Specialists | High-risk Groups | 876 |

| Moderating Variables | Studies (N) | Effect Sizes (N) | Mean Fisher’s z (95% CI) | SE | Mean AUC | F (df1, df2) | p | Level 2 | Level 3 |
|---|---|---|---|---|---|---|---|---|---|
| Overall effect | 28 | 65 | 0.336 (0.259, 0.412) | 0.038 | 0.686 | | <0.001 | | |
| Tool type | | | | | | 5.499 | 0.006 ** | 0.043 | 0.002 |
| Actuarial | 19 | 44 | 0.291 (0.223, 0.359) | 0.034 | 0.662 | | | | |
| SCJ | 10 | 13 | 0.463 (0.323, 0.603) | 0.070 | 0.751 | | | | |
| Consensus | 4 | 8 | 0.142 (−0.029, 0.313) | 0.086 | 0.580 | | | | |
| Tool length | 28 | 65 | 0.341 (0.339, 0.343) | 0.001 | 0.689 | 1.927 | 0.170 | 0.031 | 0.028 |
| Publication year | 28 | 65 | 0.399 (0.387, 0.411) | 0.006 | 0.719 | 0.943 | 0.335 | 0.036 | 0.019 |
| Assessor type | | | | | | 0.215 | 0.807 | 0.035 | 0.023 |
| Specialists | 24 | 48 | 0.339 (0.155, 0.523) | 0.092 | 0.687 | | | | |
| Self-report | 7 | 16 | 0.316 (0.156, 0.475) | 0.080 | 0.675 | | | | |
| Computing system | 1 | 1 | 0.481 (−0.026, 0.989) | 0.254 | 0.760 | | | | |
| Assessee type | | | | | | 0.684 | 0.411 | 0.035 | 0.021 |
| General | 8 | 11 | 0.392 (0.235, 0.549) | 0.078 | 0.715 | | | | |
| High-risk | 21 | 54 | 0.318 (0.138, 0.497) | 0.090 | 0.676 | | | | |
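The table above reports each pooled effect both as a mean Fisher’s z and as a mean AUC. The conversion formula is not shown in this excerpt, so the following is only a minimal sketch under stated assumptions: it back-transforms z to a correlation r, converts r to Cohen’s d assuming equal base rates, and maps d to AUC assuming normally distributed scores with equal variances (the kind of conversion discussed in the Rice and Harris reference listed above). The function name `fisher_z_to_auc` is hypothetical. For the rows checked (overall effect and the three tool types), this sketch agrees with the tabulated AUC values to roughly three decimals, but that does not confirm it is the authors’ exact procedure.

```python
from math import erf, sqrt, tanh


def fisher_z_to_auc(z: float) -> float:
    """Approximate AUC implied by a Fisher's z effect size.

    Assumptions (not stated in the source): equal base rates for the
    r -> d step, and normal scores with equal variances for d -> AUC.
    """
    r = tanh(z)                    # inverse Fisher transform: z -> r
    d = 2 * r / sqrt(1 - r ** 2)   # r -> Cohen's d (equal group sizes)
    # AUC = Phi(d / sqrt(2)); with Phi(x) = 0.5 * (1 + erf(x / sqrt(2)))
    # this simplifies to 0.5 * (1 + erf(d / 2)).
    return 0.5 * (1 + erf(d / 2))


# Mean Fisher's z values copied from the table above.
table_rows = {
    "Overall effect": 0.336,
    "Actuarial": 0.291,
    "SCJ": 0.463,
    "Consensus": 0.142,
}

for label, z in table_rows.items():
    print(f"{label}: z = {z:.3f} -> AUC ~ {fisher_z_to_auc(z):.3f}")
```

Under these assumptions a z of 0 maps to an AUC of 0.5 (chance-level discrimination), which is why the consensus row, whose confidence interval includes zero, sits closest to 0.5.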

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Zhu, N.; Pan, X.; Zhao, F. Assessing the Predictive Validity of Risk Assessment Tools in Child Health and Well-Being: A Meta-Analysis. Children 2025, 12, 478. https://doi.org/10.3390/children12040478
