Machine Learning for ADHD Diagnosis: Feature Selection from Parent Reports, Self-Reports and Neuropsychological Measures

Highlights

- Social problems, executive dysfunction, and self-regulation were top predictors of ADHD beyond core symptoms across machine learning models.

- Ex-Gaussian reaction-time parameters outperformed traditional indices of the continuous performance task.

- Prioritizing these key predictors can streamline ADHD assessment and support earlier referral and intervention.

- Interpretable machine learning models can support clinical decision-making by highlighting the most informative features.

Abstract

1. Introduction

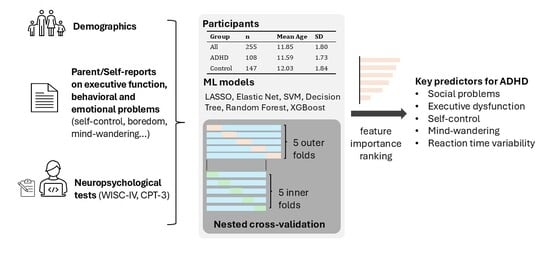

2. Materials and Methods

2.1. Participants

2.2. Measures

2.2.1. Demographics

2.2.2. Cognitive Task Performance

2.2.3. Self and Parent Ratings

2.3. Data Preparation

2.4. Models

- LASSO and Elastic Net regularized logistic regression models were fit using the glmnet package (v4.1-8) with penalty strength (and the mixing parameter for Elastic Net) tuned over log-scaled grids.

- An SVM with a radial basis function (RBF) kernel was tuned over log-scaled grids of cost and kernel width using the kernlab engine (v0.9.33).

- Random Forests (ranger, v0.17.0) were tuned on the number of predictors sampled at each split, while the number of trees was fixed at 300.

- Gradient-boosted trees were fit using xgboost (v1.7.11.1) with a logistic objective; tree count was fixed at 300, and tuning was performed for maximum depth and learning rate.

- A single decision tree (rpart, v4.1.23) was tuned on the cost-complexity pruning parameter and maximum depth.
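The log-scaled tuning grids mentioned above can be illustrated with a minimal sketch. The bounds and grid sizes below are hypothetical, since the source does not report them, and the helper `log_grid` is an illustrative name rather than part of any package the authors used.

```python
import math
from itertools import product


def log_grid(low, high, num):
    """Return `num` values evenly spaced on a log10 scale from `low` to `high`."""
    if num < 2:
        return [low]
    lo, hi = math.log10(low), math.log10(high)
    step = (hi - lo) / (num - 1)
    return [10 ** (lo + i * step) for i in range(num)]


# Hypothetical ranges: the paper does not state the grid bounds or sizes.
cost_grid = log_grid(1e-2, 1e2, 5)    # SVM cost
sigma_grid = log_grid(1e-3, 1e0, 4)   # RBF kernel width

# Every (cost, sigma) pair that a grid search would evaluate.
svm_grid = list(product(cost_grid, sigma_grid))
```

Spacing candidates on a log scale covers several orders of magnitude with few points, which is a common choice for penalty, cost, and kernel-width parameters.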

2.5. Nested Cross-Validation

2.6. Model-Specific Feature Importance

- For LASSO and Elastic Net, absolute standardized coefficients from tuned fits on the full dataset (with the same preprocessing procedure) were used as direct measures of feature importance.

- For the SVM with RBF kernel, permutation importance was estimated as the drop in AUC after permuting each feature.

- Random Forest and decision tree models provided impurity-based importances, which were averaged across outer folds.

- For XGBoost, gain-based importances were computed within each outer fold and then averaged.
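Permutation importance measured as a drop in AUC can be sketched in a few lines. This is a generic illustration under stated assumptions, not the authors' implementation: the functions `auc` and `permutation_importance`, the number of repeats, and the toy data are all hypothetical.

```python
import random


def auc(y_true, scores):
    """Area under the ROC curve via pairwise comparison (ties count 0.5)."""
    pos = [s for y, s in zip(y_true, scores) if y == 1]
    neg = [s for y, s in zip(y_true, scores) if y == 0]
    wins = sum((p > n) + 0.5 * (p == n) for p in pos for n in neg)
    return wins / (len(pos) * len(neg))


def permutation_importance(predict, X, y, n_repeats=20, seed=0):
    """Mean drop in AUC when each feature column is shuffled in turn."""
    rng = random.Random(seed)
    baseline = auc(y, predict(X))
    importances = []
    for j in range(len(X[0])):
        drops = []
        for _ in range(n_repeats):
            column = [row[j] for row in X]
            rng.shuffle(column)
            # Rebuild the data with only column j permuted.
            X_perm = [row[:j] + [v] + row[j + 1:] for row, v in zip(X, column)]
            drops.append(baseline - auc(y, predict(X_perm)))
        importances.append(sum(drops) / n_repeats)
    return importances


def score_with_first_feature(X):
    """Toy stand-in for a fitted model: the score is simply feature 0."""
    return [row[0] for row in X]


# Toy data (hypothetical): feature 0 separates the classes, feature 1 is noise.
X = [[0.1, 5.0], [0.2, 1.0], [0.3, 4.0], [0.4, 2.0],
     [0.6, 3.0], [0.7, 5.0], [0.8, 1.0], [0.9, 2.0]]
y = [0, 0, 0, 0, 1, 1, 1, 1]

importances = permutation_importance(score_with_first_feature, X, y)
```

In this toy case, shuffling the unused second feature leaves the AUC unchanged, so its importance is zero, while shuffling the first feature lowers the AUC. With nested cross-validation, such values would typically be computed on held-out data within each outer fold and then averaged.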

2.7. Model-Agnostic Feature Importance

3. Results

3.1. Sample Characteristics

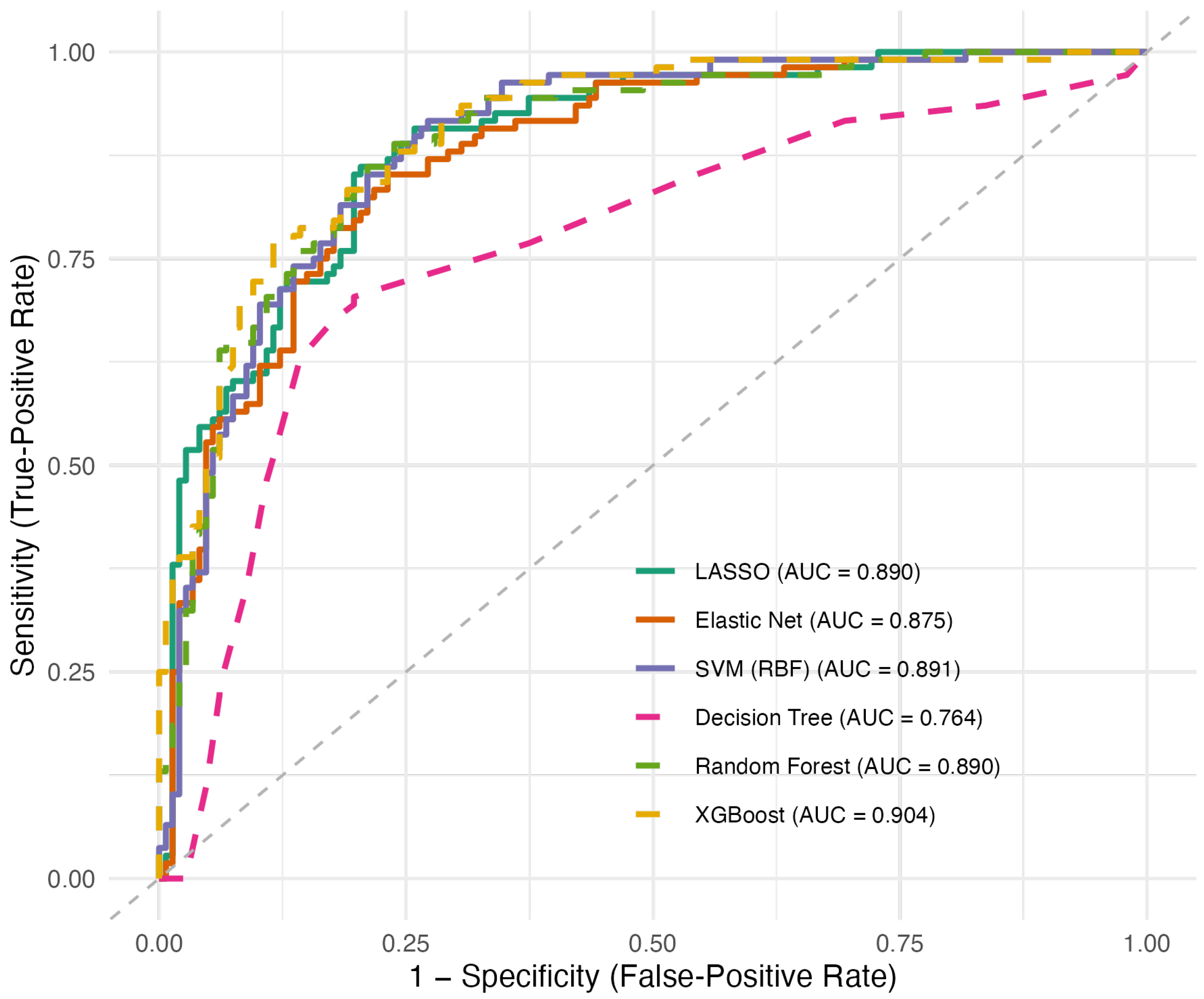

3.2. Model Performance

3.3. Model-Specific Feature Contributions

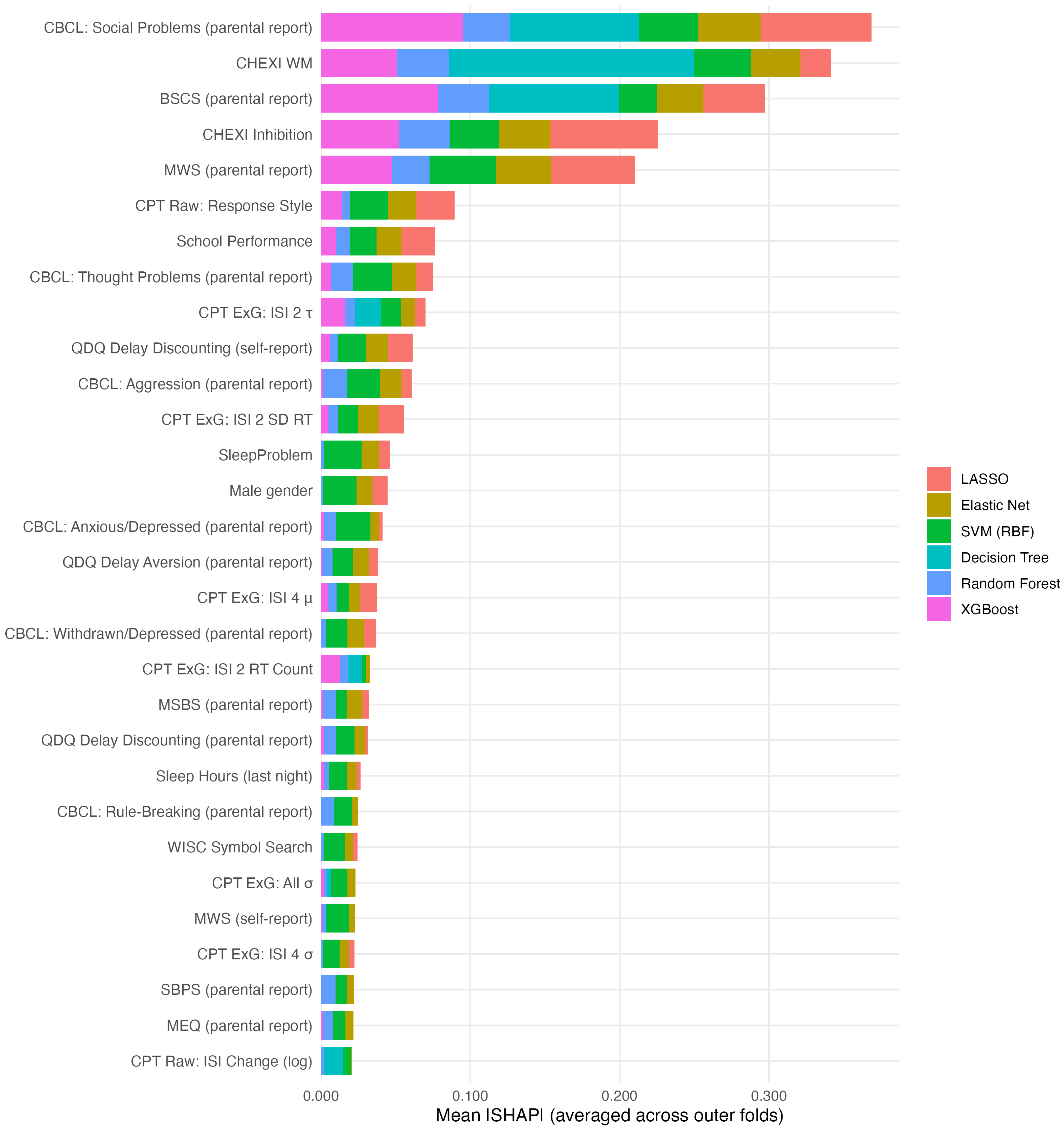

3.4. Model-Agnostic Feature Contributions

4. Discussion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

| Domain | Tools | Variables |
|---|---|---|
| Demographics | Demographic questionnaire | Age, Education, Gender, Handedness, and School performance; Sleep hours (last night), Sleep hours (typical night), and Sleep problems; History of preterm birth, epilepsy, or head trauma. |
| Cognitive Performance | Wechsler Intelligence Scale for Children, 4th Edition (WISC-IV) | Scaled scores on Digit Span, Matrix Reasoning, Similarities, Symbol Search, and Estimated IQ. |
| | Conners’ Continuous Performance Test (CPT) | CPT Raw scores: Response Style, Hits, Omissions, Commissions, and Perseverations; Hit Reaction Time (HRT), Hit Reaction Time Standard Deviation (HRT SD), Variability, HRT Block Change, and HRT Inter-Stimulus Interval (ISI) Change, analyzed in both raw and log-transformed form. ISI Change: change in RT across ISIs. |
| | | CPT ex-Gaussian parameters: Mean RT (all trials), RT Count (total number of RT observations included across all trials), and SD RT; μ (Gaussian mean component across all trials); σ (Gaussian SD component across all trials); τ (exponential tail). The ex-Gaussian metrics are computed for all trials, for the first half and last half of all trials, and across ISIs of 1, 2, and 4 s. Variable names follow the convention CPT ExG 〈condition〉 〈metric〉, e.g., CPT ExG: ISI 1. |
| Self-ratings | Brief Self-Control Scale (BSCS), Quick Delay Questionnaire (QDQ), Multidimensional State Boredom Scale (MSBS), Short Boredom Proneness Scale (SBPS), and Mind-Wandering: Spontaneous Scale (MWS) | The QDQ includes two subscales: delay aversion and delay discounting. For all other measures, total scores were used. |
| Parent-ratings | Childhood Executive Function Inventory (CHEXI), Child Behavior Checklist (CBCL), BSCS, QDQ, MSBS, SBPS, and MWS | CHEXI subscales: Inhibition and Working Memory (WM). CBCL subscales: Aggression, Anxious/Depressed, Rule-Breaking, Social Problems, Somatic Complaints, Thought Problems, and Withdrawn/Depressed. For all other measures, total scores were used. |
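For readers unfamiliar with the ex-Gaussian parameters listed in the table, the standard form of the distribution is given here as background. This assumes the conventional parameterization (a Gaussian with mean μ and SD σ convolved with an exponential of mean τ); the source does not state which fitting procedure or parameterization was used.

```latex
f(x \mid \mu, \sigma, \tau) = \frac{1}{\tau}
  \exp\!\left(\frac{\mu}{\tau} + \frac{\sigma^{2}}{2\tau^{2}} - \frac{x}{\tau}\right)
  \Phi\!\left(\frac{x - \mu - \sigma^{2}/\tau}{\sigma}\right),
\qquad
\mathbb{E}[X] = \mu + \tau, \quad
\operatorname{Var}(X) = \sigma^{2} + \tau^{2}
```

Here Φ is the standard normal cumulative distribution function. Under this form, τ captures the slow tail of the reaction-time distribution separately from the Gaussian location and spread, which is why it is often reported alongside the overall mean and SD of RT.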

| Characteristic | Overall (N = 255) | Control (N = 147) | ADHD (N = 108) | Statistic (t/OR) | p-Value |
|---|---|---|---|---|---|
| Age | 11.85 (1.80) | 12.03 (1.84) | 11.59 (1.73) | 1.96 | .051 |
| Gender | | | | 2.87 | <.001 |
| Female | 75 (29%) | 56 (38%) | 19 (18%) | | |
| Male | 180 (71%) | 91 (62%) | 89 (82%) | | |
| Handedness | | | | 0.86 | .829 |
| Left | 24 (9.4%) | 13 (8.8%) | 11 (10%) | | |
| Right | 231 (91%) | 134 (91%) | 97 (90%) | | |

| Model | Accuracy | AUC | F1 |
|---|---|---|---|
| LASSO | 0.799 (0.067) | 0.898 (0.052) | 0.755 (0.076) |
| Elastic Net | 0.799 (0.071) | 0.886 (0.056) | 0.755 (0.081) |
| SVM (RBF) | 0.804 (0.068) | 0.897 (0.055) | 0.764 (0.081) |
| Decision Tree | 0.761 (0.028) | 0.747 (0.038) | 0.690 (0.041) |
| Random Forest | 0.808 (0.050) | 0.900 (0.034) | 0.767 (0.045) |
| XGBoost | 0.824 (0.023) | 0.906 (0.032) | 0.787 (0.019) |

| Feature | LASSO | Elastic Net | SVM (RBF) | Random Forest | XGBoost | Decision Tree |
|---|---|---|---|---|---|---|
| CBCL Syndrome: Social Problems (parent-reported) | 2 | 1 | 2 | 3 | 1 | 4 |
| CHEXI Inhibition Total | 1 | 2 | 14 | 1 | 4 | 2 |
| MWS Total (parent-reported) | 3 | 3 | 1 | 5 | 5 | 9 |
| CHEXI WM Total | 7 | 5 | 17 | 2 | 3 | 1 |
| BSCS Total (parent-reported) | 4 | 4 | 32 | 4 | 2 | 3 |
| CPT Raw: Response Style | 9 | 6 | 6 | 17 | 11 | — |
| School Performance | 5 | 7 | 31 | 12 | 10 | — |
| CBCL Syndrome: Thought Problems (parent-reported) | 10 | 9 | 28 | 7 | 18 | 10 |
| CPT ExG: ISI 2 SD RT | 6 | 10 | 23 | 19 | 19 | 17 |
| QDQ Delay Discounting (self-reported) | 8 | 8 | 16 | 20 | 27 | 41 |
| CPT ExG: ISI 2 | 64 | 13 | 19 | 24 | 8 | 13 |
| Delay Aversion (self-reported) | 22 | 36 | 38 | 27 | 16 | — |
| CBCL Syndrome: Anxious/Depressed (parent-reported) | 80 | 14 | 15 | 15 | 33 | 12 |
| WISC Similarities (SS) | 13 | 28 | 20 | 54 | 28 | — |
| MWS Total (self-reported) | 19 | 33 | 13 | 34 | 47 | — |
| QDQ Delay Discounting (parent-reported) | 78 | 19 | 22 | 10 | 41 | 7 |
| CBCL Syndrome: Aggression (parent-reported) | 84 | 18 | 35 | 6 | 30 | 5 |
| CPT ExG: All | 39 | 16 | 10 | 65 | 26 | 23 |
| CPT Raw: Hits | 24 | 38 | 50 | 32 | 22 | 22 |
| CPT ExG: ISI 1 | 58 | 23 | 12 | 57 | 17 | — |
| SBPS Total (self-reported) | 21 | 35 | 59 | 36 | 20 | 36 |
| CPT ExG: ISI 4 | 67 | 17 | 55 | 26 | 13 | — |
| CPT Raw: Block Change (log) | 35 | 47 | 37 | 30 | 29 | — |
| CPT ExG: All RT Count | 41 | 52 | 69 | 18 | 15 | 20 |
| CPT Raw: RT SD (log) | 31 | 44 | 66 | 16 | 23 | — |
| CPT Raw: ISI Change (log) | 37 | 49 | 42 | 37 | — | 16 |
| CPT Raw: Omissions | 26 | 20 | 52 | 62 | — | 27 |
| CPT Raw: Hit RT | 29 | 42 | 18 | 45 | 54 | — |
| CPT Raw: Perseverations | 28 | 41 | 43 | 55 | 21 | — |
| WISC Digit Span (SS) | 14 | 29 | 25 | 68 | 56 | 37 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Dai, Y.-W.; Hsu, C.-F. Machine Learning for ADHD Diagnosis: Feature Selection from Parent Reports, Self-Reports and Neuropsychological Measures. Children 2025, 12, 1448. https://doi.org/10.3390/children12111448
