Sentiment Analysis Methods for HPV Vaccines Related Tweets Based on Transfer Learning

Abstract

1. Introduction

2. Related Work

3. Methods

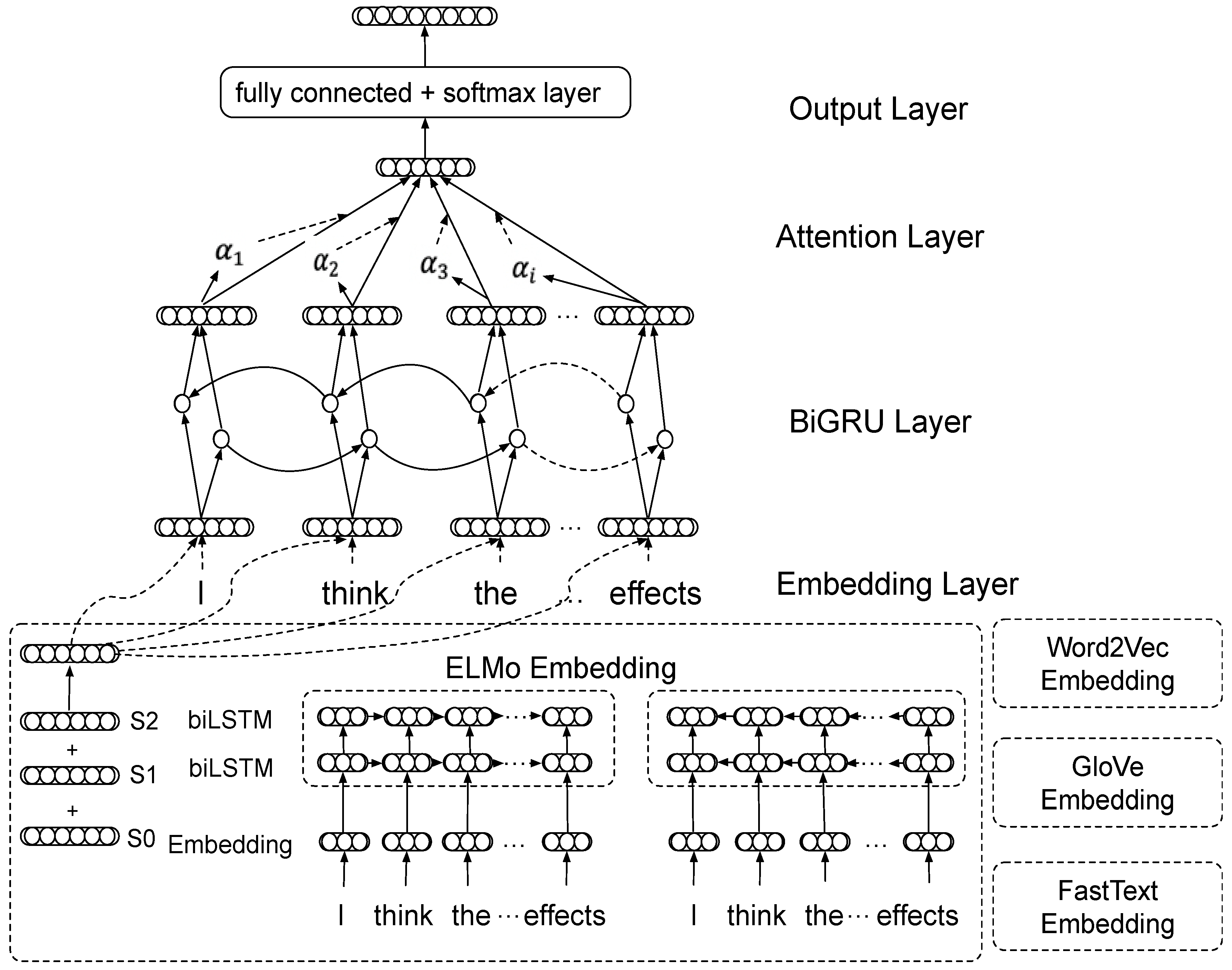

3.1. Diverse Word Embeddings Processed by BiGRU-Att

3.1.1. Embedding Layer

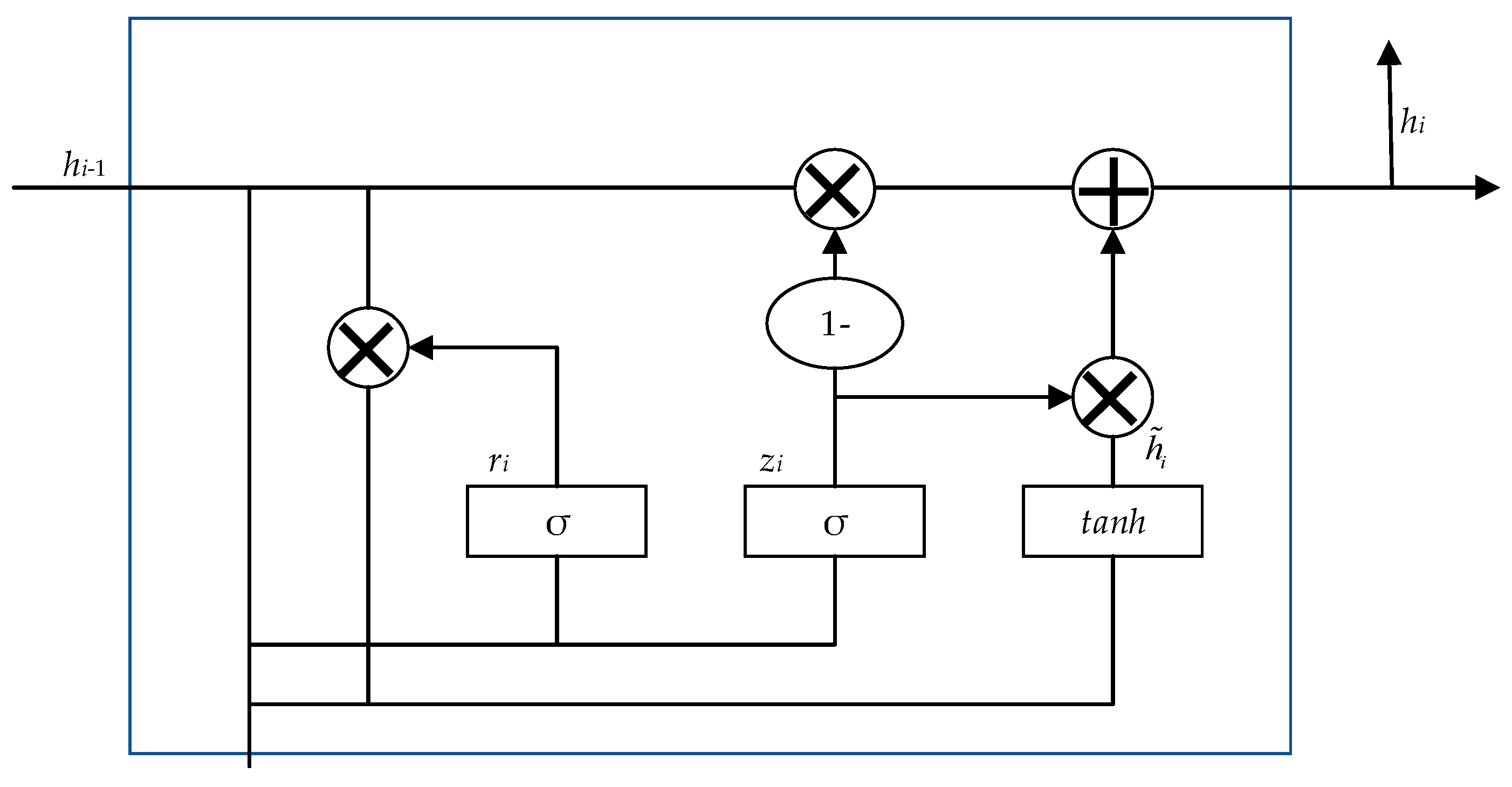

3.1.2. BiGRU Layer

3.1.3. Attention Layer

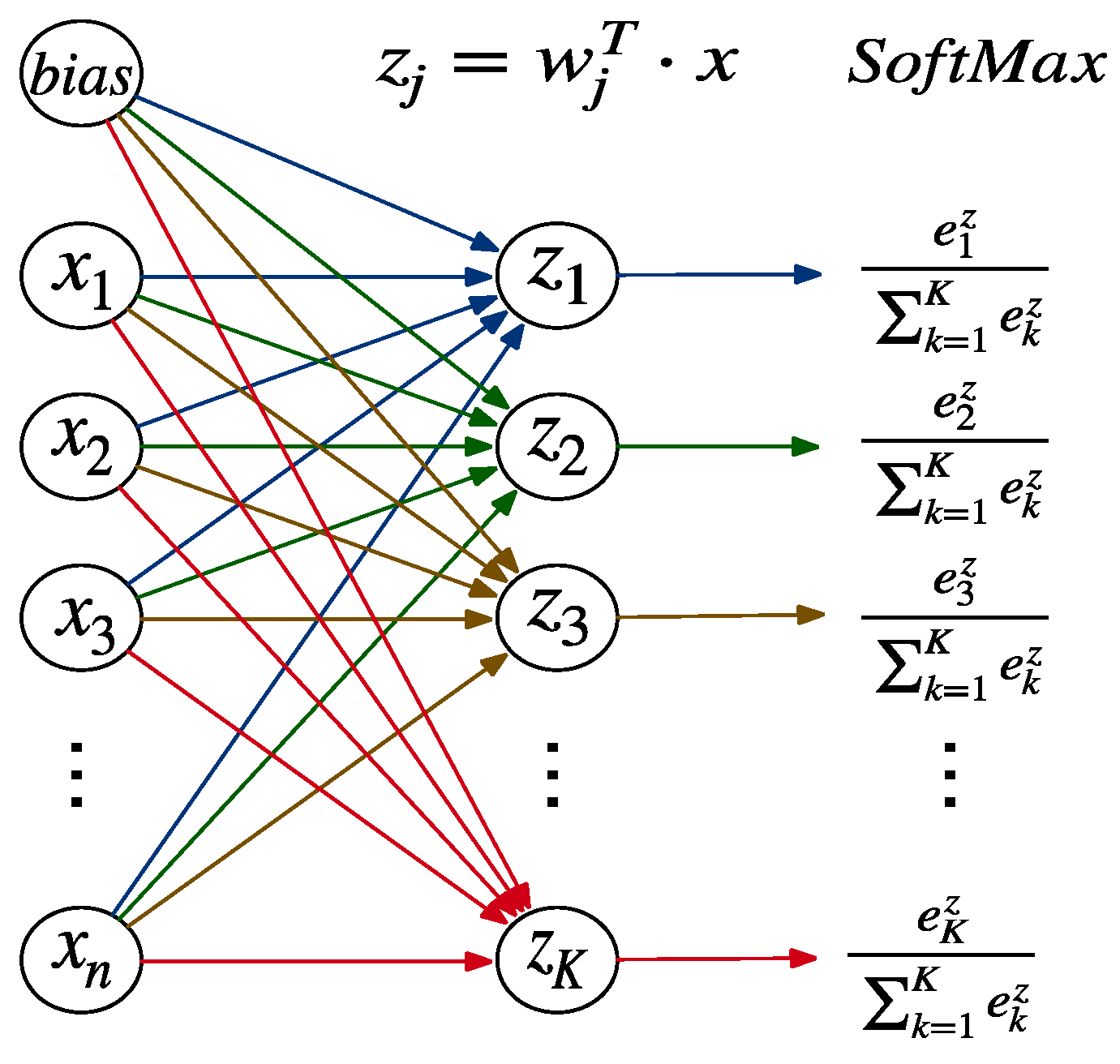

3.1.4. Output Layer

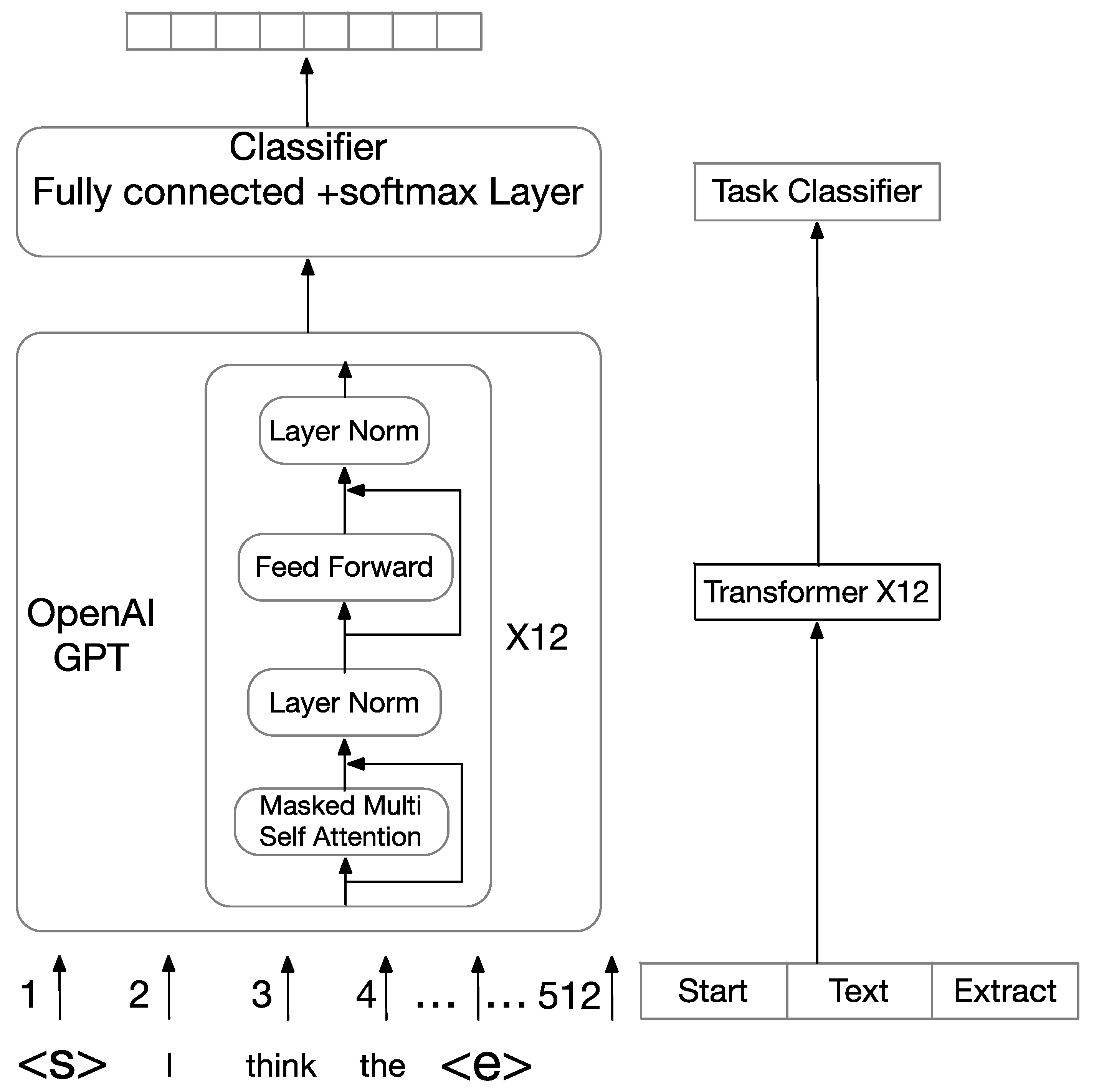

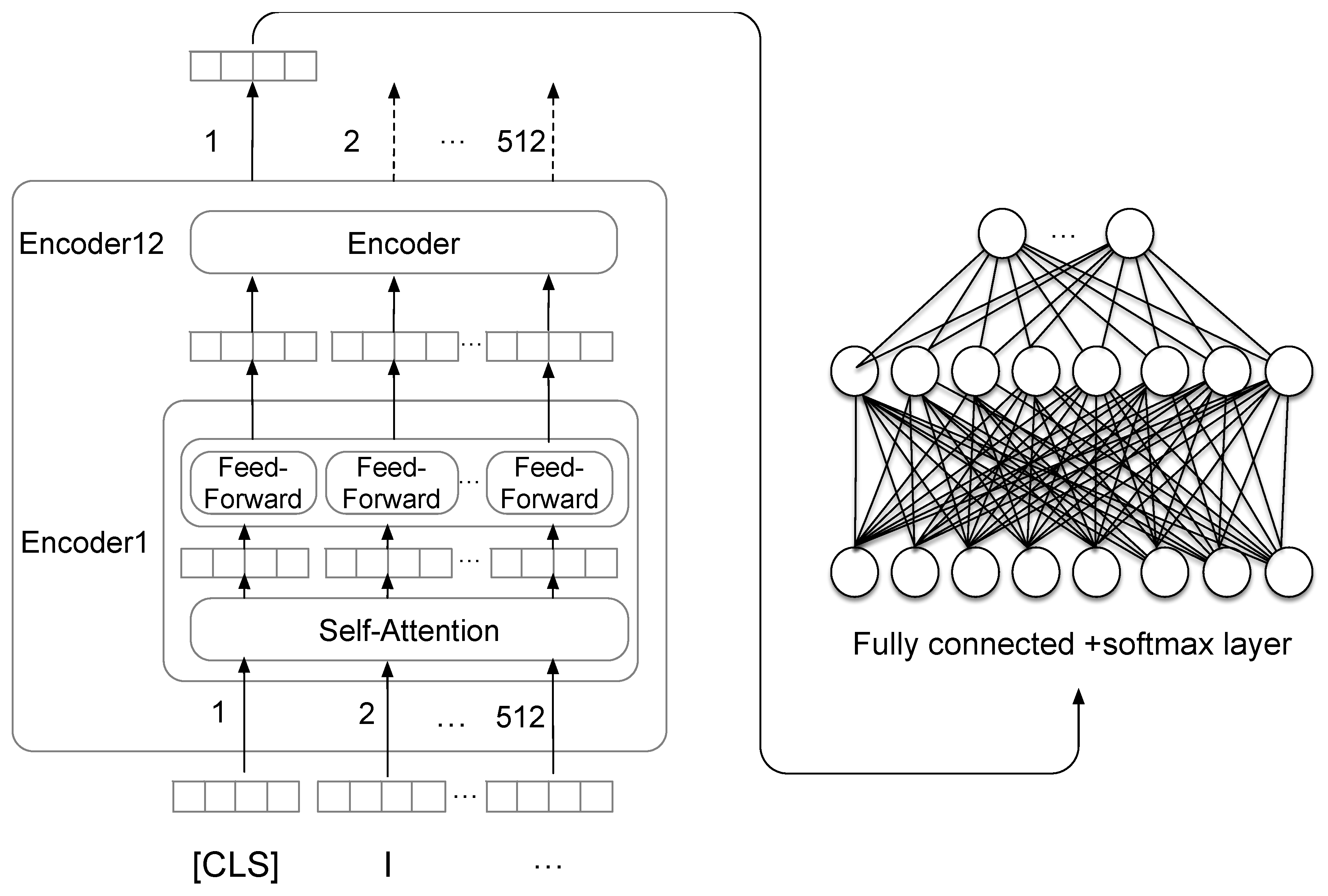

3.2. Fine-Tuning Pre-trained Models

3.2.1. Fine-Tuning GPT

3.2.2. Fine-Tuning BERT

4. Experiments and Results

4.1. Data Source and Data Processing

4.1.1. Data Source

4.1.2. Data Processing

4.2. Experimental Setup

4.3. Baselines

4.4. Results

4.4.1. Average of Micro Index

4.4.2. Standard Deviation and Root Mean Square Error

5. Discussion

5.1. Micro-F1 Scores in Each Fold

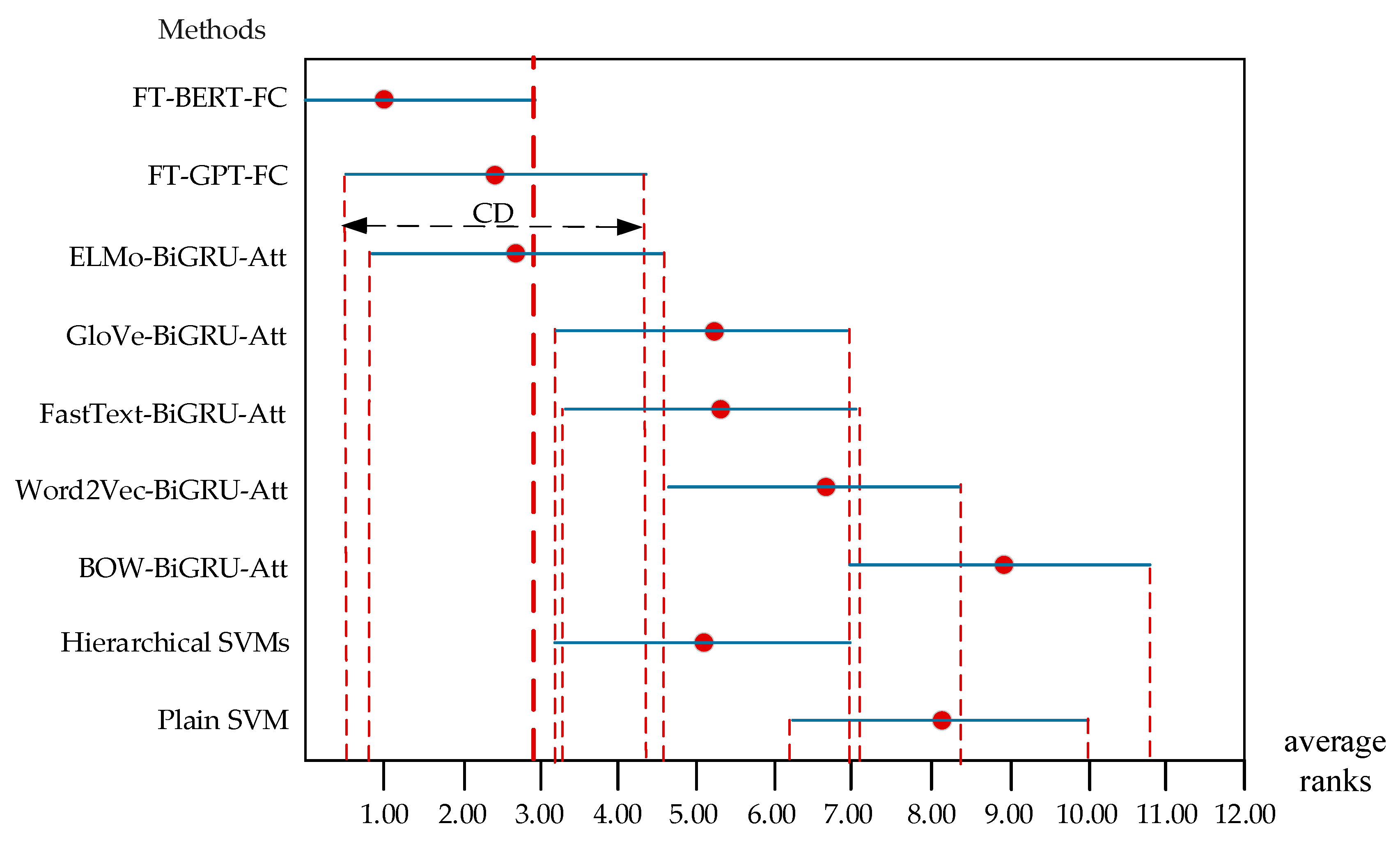

5.2. Statistical Test

5.3. Limitations and Future Research

6. Conclusions

Author Contributions

Funding

Conflicts of Interest

References

1. Stanley, M. Pathology and epidemiology of HPV infection in females. Gynecol. Oncol. 2010, 117, S5–S10.

2. Gianfredi, V.; Bragazzi, N.L.; Mahamid, M.; Bisharat, B.; Mahroum, N.; Amital, H.; Adawi, M. Monitoring public interest toward pertussis outbreaks: An extensive Google Trends–based analysis. Public Health 2018, 165, 9–15.

3. Tekumalla, R.; Banda, J.M. A large-scale Twitter dataset for drug safety applications mined from publicly existing resources. arXiv 2020, arXiv:2003.13900.

4. Kim, S.J.; Marsch, L.A.; Hancock, J.; Das, A. Scaling up research on drug abuse and addiction through social media big data. J. Med. Internet Res. 2017, 19, e353.

5. Myslín, M.; Zhu, S.-H.; Chapman, W.; Conway, M.; Cobb, N.; Emery, S.; Hernández, T. Using Twitter to examine smoking behavior and perceptions of emerging tobacco products. J. Med. Internet Res. 2013, 15, e174.

6. Rao, G.; Zhang, Y.; Zhang, L.; Cong, Q.; Feng, Z. MGL-CNN: A hierarchical posts representations model for identifying depressed individuals in online forums. IEEE Access 2020, 8, 32395–32403.

7. Cong, Q.; Feng, Z.; Li, F.; Xiang, Y.; Rao, G.; Tao, C. X-A-BiLSTM: A deep learning approach for depression detection in imbalanced data. In Proceedings of the 2018 IEEE International Conference on Bioinformatics and Biomedicine (BIBM), Madrid, Spain, 3–6 December 2018; pp. 1624–1627.

8. Franco, E.; Harper, D.M. Vaccination against human papillomavirus infection: A new paradigm in cervical cancer control. Vaccine 2005, 23, 2388–2394.

9. HealthyPeople.gov, Immunization and Infectious Diseases. Available online: https://www.healthypeople.gov/2020/topics-objectives/topic/immunization-and-infectious-diseases/national-snapshot (accessed on 6 May 2020).

10. Du, J.; Xu, J.; Song, H.-Y.; Liu, X.; Tao, C. Optimization on machine learning based approaches for sentiment analysis on HPV vaccines related tweets. J. Biomed. Semant. 2017, 8, 9.

11. Dunn, A.G.; Leask, J.; Zhou, X.; Mandl, K.D.; Coiera, E.; Zhang, C.; Briones, R. Associations between exposure to and expression of negative opinions about human papillomavirus vaccines on social media: An observational study. J. Med. Internet Res. 2015, 17, e144.

12. Dunn, A.G.; Surian, D.; Leask, J.; Dey, A.; Mandl, K.D.; Coiera, E. Mapping information exposure on social media to explain differences in HPV vaccine coverage in the United States. Vaccine 2017, 35, 3033–3040.

13. Rao, G.; Huang, W.; Feng, Z.; Cong, Q. LSTM with sentence representations for document-level sentiment classification. Neurocomputing 2018, 308, 49–57.

14. Le, G.M.; Radcliffe, K.; Lyles, C.; Lyson, H.C.; Wallace, B.; Sawaya, G.; Pasick, R.; Centola, D.; Sarkar, U. Perceptions of cervical cancer prevention on Twitter uncovered by different sampling strategies. PLoS ONE 2019, 14, e0211931.

15. Heaton, J.; Goodfellow, I.; Bengio, Y.; Courville, A. Deep learning. Genet. Program. Evolvable Mach. 2017, 19, 305–307.

16. Weiss, K.; Khoshgoftaar, T.M.; Wang, D. A survey of transfer learning. J. Big Data 2016, 3, 9.

17. Devlin, J.; Chang, M.-W.; Lee, K.; Toutanova, K. BERT: Pre-training of deep bidirectional transformers for language understanding. In Proceedings of the 2019 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies, Volume 1 (Long and Short Papers), Minneapolis, MN, USA, 2–7 June 2019; pp. 4171–4186.

18. Howard, J.; Ruder, S. Universal language model fine-tuning for text classification. In Proceedings of the 56th Annual Meeting of the Association for Computational Linguistics (Volume 1: Long Papers), Melbourne, Australia, 15–20 July 2018; Association for Computational Linguistics (ACL): Stroudsburg, PA, USA, 2018; pp. 328–339.

19. Adhikari, A.; Ram, A.; Tang, R.; Lin, J. DocBERT: BERT for document classification. arXiv 2019, arXiv:1904.08398.

20. Salathé, M.; Khandelwal, S. Assessing vaccination sentiments with online social media: Implications for infectious disease dynamics and control. PLoS Comput. Biol. 2011, 7, e1002199.

21. Sarker, A.; Ginn, R.; Nikfarjam, A.; Pimpalkhute, P.; O'Connor, K.; Gonzalez, G. Mining Twitter for adverse drug reaction mentions: A corpus and classification benchmark. In Proceedings of the Fourth Workshop on Building and Evaluating Resources for Health and Biomedical Text Processing (BioTxtM 2014), Reykjavík, Iceland, 31 May 2014.

22. Kim, Y. Convolutional neural networks for sentence classification. In Proceedings of the 2014 Conference on Empirical Methods in Natural Language Processing (EMNLP), Doha, Qatar, 25–29 October 2014; pp. 1746–1751.

23. Zhang, Y.; Roller, S.; Wallace, B.C. MGNC-CNN: A simple approach to exploiting multiple word embeddings for sentence classification. In Proceedings of the 2016 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies, San Diego, CA, USA, 12–17 June 2016; Association for Computational Linguistics (ACL): Stroudsburg, PA, USA, 2016; pp. 1522–1527.

24. Mikolov, T.; Sutskever, I.; Chen, K.; Corrado, G.; Dean, J. Distributed representations of words and phrases and their compositionality. arXiv 2013, arXiv:1310.4546, 3111–3119.

25. Joulin, A.; Grave, E.; Bojanowski, P.; Mikolov, T. Bag of tricks for efficient text classification. In Proceedings of the 15th Conference of the European Chapter of the Association for Computational Linguistics: Volume 2, Short Papers, Valencia, Spain, 3–7 April 2017; Association for Computational Linguistics (ACL): Stroudsburg, PA, USA, 2017; pp. 427–431.

26. McCann, B.; Bradbury, J.; Xiong, C.; Socher, R. Learned in translation: Contextualized word vectors. In Proceedings of the Advances in Neural Information Processing Systems. arXiv 2017, 6294–6305.

27. Peters, M.; Neumann, M.; Iyyer, M.; Gardner, M.; Clark, C.; Lee, K.; Zettlemoyer, L. Deep contextualized word representations. In Proceedings of the 2018 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies, Volume 1 (Long Papers), New Orleans, LA, USA, 1–6 June 2018; Association for Computational Linguistics (ACL): Stroudsburg, PA, USA, 2018; pp. 2227–2237.

28. Zheng, J.; Chen, X.; Du, Y.; Li, X.; Zhang, J. Short text sentiment analysis of micro-blog based on BERT. In Lecture Notes in Electrical Engineering; Springer Science and Business Media LLC: Berlin/Heidelberg, Germany, 2019; Volume 590, pp. 390–396.

29. Wang, T.; Lu, K.; Chow, K.P.; Zhu, Q. COVID-19 sensing: Negative sentiment analysis on social media in China via BERT model. IEEE Access 2020, 8, 138162–138169.

30. Müller, M.; Salathé, M.; Kummervold, P. COVID-Twitter-BERT: A natural language processing model to analyse COVID-19 content on Twitter. arXiv 2020, arXiv:2005.07503.

31. Azzouza, N.; Akli-Astouati, K.; Ibrahim, R. TwitterBERT: Framework for Twitter sentiment analysis based on pre-trained language model representations. In Advances in Intelligent Systems and Computing; Springer Science and Business Media LLC: Berlin/Heidelberg, Germany, 2019; Volume 1073, pp. 428–437.

32. Myagmar, B.; Li, J.; Kimura, S. Cross-domain sentiment classification with bidirectional contextualized transformer language models. IEEE Access 2019, 7, 163219–163230.

33. Rao, G.; Peng, C.; Zhang, L.; Wang, X.; Zhiyong, F. A knowledge enhanced ensemble learning model for mental disorder detection on social media. In Proceedings of the 13th International Conference on Knowledge Science, Engineering and Management (KSEM 2020), Hangzhou, China, 28–30 August 2020; Springer: Berlin/Heidelberg, Germany, 2020; pp. 181–192.

34. Biseda, B.; Mo, K. Enhancing pharmacovigilance with drug reviews and social media. arXiv 2020, arXiv:2004.08731.

35. API Overview. Available online: https://dev.twitter.com/overview/api (accessed on 6 August 2020).

36. Parambath, S.P.; Usunier, N.; Grandvalet, Y. Optimizing F-measures by cost-sensitive classification. In Proceedings of the Advances in Neural Information Processing Systems, Montreal, QC, Canada, 8–13 December 2014; pp. 2123–2131.

37. Yang, Z.; Yang, D.; Dyer, C.; He, X.; Smola, A.; Hovy, E. Hierarchical attention networks for document classification. In Proceedings of the 2016 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies, San Diego, CA, USA, 12–17 June 2016; Association for Computational Linguistics (ACL): Stroudsburg, PA, USA, 2016; pp. 1480–1489.

38. Demšar, J. Statistical comparisons of classifiers over multiple data sets. J. Mach. Learn. Res. 2006, 7, 1–30.

39. Friedman, M. The use of ranks to avoid the assumption of normality implicit in the analysis of variance. J. Am. Stat. Assoc. 1937, 32, 675–701.

40. Iman, R.L.; Davenport, J.M. Approximations of the critical region of the Friedman statistic. Commun. Stat. Theory Methods 1980, 9, 571–595.

41. Nemenyi, P.B. Distribution-Free Multiple Comparisons; Princeton University: Princeton, NJ, USA, 1963.

| Category | Topic (HPV) | Sentiment | Sentiment (Subclass) | Tweet Numbers (Proportion) | Example |
|---|---|---|---|---|---|
| 1 | Unrelated | / | / | 2016 (33.6%) | Only three U.S. states mandate recommended HPV vaccine http://t.co/YCInira89m via @Reuters |
| 2 | Related | Positive | / | 1153 (19.2%) | RT @GlowHQ: Dear #HPV Vaccination. You are safe & effective. Why don’t more states require you? @VICE http://t.co/QRL26SA4GO http://t.co/gY. |
| 3 | Related | Neutral | / | 1386 (23.1%) | Gardasil HPV Vaccine Safety Assessed In Most Comprehensive Study To Date http://t.co/4g3ztZdSU4 via @forbes. |
| 4 | Related | Negative | NegSafety | 912 (15.2%) | Worries about HPV vaccine: European Union medicines agency investigating reports of rar http://t.co/bMOr3XveVC http://t.co/jZeHFkCDpl. |
| 5 | Related | Negative | NegEfficacy | 46 (0.77%) | ACOG is now “recommending” ob/gyn’s to push HPV vaccine despite its ineffectiveness & it’s notorious track record of killing &maiming ppl. |
| 6 | Related | Negative | NegResistant | 6 (0.1%) | #HPVvaccine “would introduce sexual activity in young women, that would inappropriately introduce promiscuity” http://t.co/zEnDdyVP8a. |
| 7 | Related | Negative | NegCost | 6 (0.1%) | RT @kylekirkup: I’m no public health expert, but huh?! If you’re male & want free HPV vax in BC, you have to come out. At age 11. http://. |
| 8 | Related | Negative | NegOthers | 475 (7.93%) | Sanofi Sued in France over Gardasil #HPV #Vaccine –http://t.co/LruYf4c0co. |
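The category table describes a three-level label hierarchy (topic, sentiment, negative subclass). As a rough illustration only, the sketch below encodes that hierarchy and the tweet counts from the table and aggregates them per level. The names `LABELS` and `counts_at_level` are hypothetical and do not come from the paper.

```python
from collections import Counter

# (topic, sentiment, subclass, tweet count), copied from the category table.
# None marks a level the table leaves empty ("/").
LABELS = [
    ("Unrelated", None, None, 2016),
    ("Related", "Positive", None, 1153),
    ("Related", "Neutral", None, 1386),
    ("Related", "Negative", "NegSafety", 912),
    ("Related", "Negative", "NegEfficacy", 46),
    ("Related", "Negative", "NegResistant", 6),
    ("Related", "Negative", "NegCost", 6),
    ("Related", "Negative", "NegOthers", 475),
]


def counts_at_level(level):
    """Sum tweet counts at level 0 (topic), 1 (sentiment) or 2 (subclass)."""
    totals = Counter()
    for *path, n in LABELS:
        key = path[level]
        if key is not None:
            totals[key] += n
    return dict(totals)


total_tweets = sum(n for *_, n in LABELS)

print(total_tweets)        # total number of annotated tweets
print(counts_at_level(0))  # Unrelated vs. Related
print(counts_at_level(1))  # Positive / Neutral / Negative
```

Aggregating this way makes the class imbalance visible: two of the negative subclasses (NegResistant and NegCost) contain only six tweets each.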

| Unprocessed Tweets | Processed Tweets |
|---|---|
| @margin What’s your attitude about the vaccination? https://stamp.jsp?tp=&arnumber=897 Please write me back @Daviadaxa soon!!!!! http://#view=home&op=translate&sl=auto | What’s your attitude about the vaccination url please write me back soon url |
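The example row suggests that preprocessing removes user mentions, replaces links with the token `url`, and drops punctuation. The following is a minimal sketch of such a cleaning step, not the authors' pipeline: the regular expressions and the function name `preprocess_tweet` are assumptions, and the sketch lowercases every token, whereas the example in the table keeps the capital in "What’s".

```python
import re

# Assumed patterns; the paper's exact rules are not given in this section.
URL_RE = re.compile(r"https?://\S+")   # any http(s) link up to the next space
MENTION_RE = re.compile(r"@\w+")       # @username mentions
PUNCT_RE = re.compile(r"[^\w\s'’]")    # punctuation except apostrophes


def preprocess_tweet(text: str) -> str:
    """Replace links with 'url', drop mentions and punctuation, lowercase."""
    text = URL_RE.sub(" url ", text)
    text = MENTION_RE.sub(" ", text)
    text = PUNCT_RE.sub(" ", text)
    return " ".join(text.lower().split())


raw = ("@margin What's your attitude about the vaccination? "
       "https://stamp.jsp?tp=&arnumber=897 Please write me back "
       "@Daviadaxa soon!!!!! http://#view=home&op=translate&sl=auto")
print(preprocess_tweet(raw))
```

Links are replaced before mentions and punctuation are stripped, so that characters inside a URL are never treated as separate tokens.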

| Parameter | Value |
|---|---|
| Loss Function | Categorical cross-entropy |
| Train-Test Split | 10-fold cross-validation |
| Optimizer | Adam |
| Learning Rate | 0.001 |
| Back-Propagation | ReLU |
| Batch Size | 32 |
| Dropout | 0.25 |
| Hidden State GRU | 64 |
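To make the setup concrete, the sketch below collects the listed hyperparameters in a configuration object and generates 10-fold train/test index splits using only the Python standard library. `TrainConfig` and `k_fold_indices` are hypothetical names, the shuffling seed is arbitrary, and ReLU (listed under "Back-Propagation" in the table) is treated here as the activation function, which is an assumption. The sample count of 6000 is the sum of the tweet counts in the category table.

```python
import random
from dataclasses import dataclass


@dataclass(frozen=True)
class TrainConfig:
    """Hyperparameters copied from the parameter table (field names are illustrative)."""
    loss: str = "categorical_crossentropy"
    n_folds: int = 10
    optimizer: str = "adam"
    learning_rate: float = 0.001
    activation: str = "relu"      # assumption: the table lists ReLU under "Back-Propagation"
    batch_size: int = 32
    dropout: float = 0.25
    gru_hidden_units: int = 64


def k_fold_indices(n_samples, n_folds, seed=0):
    """Yield (train_indices, test_indices) for each fold after one shuffle."""
    indices = list(range(n_samples))
    random.Random(seed).shuffle(indices)
    folds = [indices[i::n_folds] for i in range(n_folds)]
    for i, test in enumerate(folds):
        train = [j for f, fold in enumerate(folds) if f != i for j in fold]
        yield train, test


config = TrainConfig()
splits = list(k_fold_indices(6000, config.n_folds))
print(len(splits), len(splits[0][0]), len(splits[0][1]))
```

Each tweet appears in exactly one test fold, so every reported per-fold score is computed on data the corresponding model did not see during training.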

| Fold | Methods-Other Teams | | Methods-Our Works | | | | | | | Average/Fold |
|---|---|---|---|---|---|---|---|---|---|---|
| | Plain SVM [10] | Hierarchical SVMs [10] | BOW-BiGRU-Att | Word2Vec-BiGRU-Att | FastText-BiGRU-Att | GloVe-BiGRU-Att | ELMo-BiGRU-Att | FT-GPT-FC | FT-BERT-FC | |
| F-1 | 0.682 | 0.739 | 0.658 | 0.710 | 0.728 | 0.719 | 0.750 | 0.743 | 0.7891 | 0.724 |
| F-2 | 0.671 | 0.698 | 0.650 | 0.699 | 0.701 | 0.704 | 0.724 | 0.722 | 0.755 | 0.702 |
| F-3 | 0.639 | 0.682 | 0.643 | 0.677 | 0.673 | 0.680 | 0.707 | 0.721 | 0.750 | 0.686 |
| F-4 | 0.693 | 0.743 | 0.669 | 0.724 | 0.737 | 0.727 | 0.745 | 0.768 | 0.778 | 0.732 |
| F-5 | 0.658 | 0.721 | 0.645 | 0.681 | 0.712 | 0.691 | 0.722 | 0.730 | 0.762 | 0.702 |
| F-6 | 0.677 | 0.728 | 0.662 | 0.700 | 0.680 | 0.703 | 0.731 | 0.735 | 0.771 | 0.710 |
| F-7 | 0.642 | 0.690 | 0.631 | 0.686 | 0.719 | 0.695 | 0.712 | 0.721 | 0.753 | 0.694 |
| F-8 | 0.669 | 0.729 | 0.660 | 0.712 | 0.723 | 0.719 | 0.736 | 0.744 | 0.776 | 0.719 |
| F-9 | 0.690 | 0.735 | 0.668 | 0.703 | 0.718 | 0.702 | 0.749 | 0.747 | 0.791 | 0.723 |
| F-10 | 0.678 | 0.723 | 0.649 | 0.681 | 0.691 | 0.677 | 0.721 | 0.730 | 0.762 | 0.701 |
| Average/Method | 0.670 | 0.719 | 0.654 | 0.697 | 0.708 | 0.702 | 0.730 | 0.736 | 0.769 | / |
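Assuming the per-fold scores above are micro-averaged F1 values, the sketch below shows how such a score can be computed for a single-label, multi-class prediction task. The function name `micro_f1` and the toy labels are illustrative only and are not data from the paper.

```python
from collections import Counter


def micro_f1(y_true, y_pred):
    """Micro-averaged F1: pool TP, FP and FN over all classes, then compute F1."""
    tp, fp, fn = Counter(), Counter(), Counter()
    for truth, pred in zip(y_true, y_pred):
        if truth == pred:
            tp[truth] += 1
        else:
            fp[pred] += 1   # predicted class gains a false positive
            fn[truth] += 1  # true class gains a false negative
    total_tp = sum(tp.values())
    total_fp = sum(fp.values())
    total_fn = sum(fn.values())
    precision = total_tp / (total_tp + total_fp) if total_tp + total_fp else 0.0
    recall = total_tp / (total_tp + total_fn) if total_tp + total_fn else 0.0
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


# Toy example with five tweets (labels are made up for illustration).
y_true = ["Positive", "Neutral", "NegSafety", "Unrelated", "Neutral"]
y_pred = ["Positive", "Neutral", "NegOthers", "Neutral", "Neutral"]
score = micro_f1(y_true, y_pred)
print(round(score, 3))
```

When every tweet receives exactly one label, each error counts once as a false positive and once as a false negative, so micro-averaged precision, recall and F1 all coincide with accuracy.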

| Research Team | Methods | SD | RMSE |
|---|---|---|---|
| Other Teams | Plain SVM [10] | 0.018 | 0.017 |
| Other Teams | Hierarchical SVMs [10] | 0.022 | 0.021 |
| Our Works | BOW-BiGRU-Att | 0.013 | 0.012 |
| Our Works | Word2Vec-BiGRU-Att | 0.014 | 0.013 |
| Our Works | FastText-BiGRU-Att | 0.023 | 0.021 |
| Our Works | GloVe-BiGRU-Att | 0.017 | 0.016 |
| Our Works | ELMo-BiGRU-Att | 0.016 | 0.015 |
| Our Works | FT-GPT-FC | 0.016 | 0.015 |
| Our Works | FT-BERT-FC | 0.015 | 0.014 |
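One plausible reading of the two columns is that SD is the sample standard deviation of the ten fold scores (divisor n − 1) and RMSE is the root mean square deviation from the fold mean (divisor n). Under that assumption, which this section does not confirm, the sketch below recomputes both quantities for the Plain SVM scores from the per-fold results table.

```python
import math

# Plain SVM scores for folds F-1 to F-10, copied from the per-fold results table.
plain_svm = [0.682, 0.671, 0.639, 0.693, 0.658, 0.677, 0.642, 0.669, 0.690, 0.678]


def mean(xs):
    return sum(xs) / len(xs)


def sample_sd(xs):
    """Standard deviation with divisor n - 1."""
    m = mean(xs)
    return math.sqrt(sum((x - m) ** 2 for x in xs) / (len(xs) - 1))


def rmse_about_mean(xs):
    """Root mean square deviation from the mean, with divisor n (assumed definition)."""
    m = mean(xs)
    return math.sqrt(sum((x - m) ** 2 for x in xs) / len(xs))


print(round(mean(plain_svm), 3))
print(round(sample_sd(plain_svm), 4))
print(round(rmse_about_mean(plain_svm), 4))
```

With these definitions the two quantities differ only by the factor sqrt((n − 1)/n), about 0.95 for ten folds, which is in line with the RMSE column sitting slightly below the SD column for every method.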

| Fold | Methods-Other Teams | | Methods-Our Works | | | | | | |
|---|---|---|---|---|---|---|---|---|---|
| | Plain SVM [10] | Hierarchical SVMs [10] | BOW-BiGRU-Att | Word2Vec-BiGRU-Att | FastText-BiGRU-Att | GloVe-BiGRU-Att | ELMo-BiGRU-Att | FT-GPT-FC | FT-BERT-FC |
| F-1 | 8 | 4 | 9 | 7 | 5 | 6 | 2 | 3 | 1 |
| F-2 | 8 | 7 | 9 | 6 | 5 | 4 | 2 | 3 | 1 |
| F-3 | 9 | 4 | 8 | 6 | 7 | 5 | 3 | 2 | 1 |
| F-4 | 8 | 4 | 9 | 7 | 5 | 6 | 3 | 2 | 1 |
| F-5 | 8 | 7 | 9 | 6 | 5 | 4 | 2 | 3 | 1 |
| F-6 | 8 | 4 | 9 | 7 | 5 | 6 | 3 | 2 | 1 |
| F-7 | 8 | 4 | 9 | 6 | 7 | 5 | 3 | 2 | 1 |
| F-8 | 8 | 6 | 9 | 7 | 3 | 5 | 4 | 2 | 1 |
| F-9 | 8 | 7 | 9 | 6 | 5 | 4 | 2 | 3 | 1 |
| F-10 | 8 | 4 | 9 | 7 | 5 | 6 | 3 | 2 | 1 |
| Average rank | 8.1 | 5.1 | 8.9 | 6.5 | 5.2 | 5.1 | 2.7 | 2.4 | 1.0 |
| Squared average rank | 65.61 | 26.01 | 79.21 | 42.25 | 27.04 | 26.01 | 7.29 | 5.76 | 1.00 |
| Average rank ± 1.90 | 8.1 ± 1.90 | 5.1 ± 1.90 | 8.9 ± 1.90 | 6.5 ± 1.90 | 5.2 ± 1.90 | 5.1 ± 1.90 | 2.7 ± 1.90 | 2.4 ± 1.90 | 1.0 ± 1.90 |
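The rank table feeds the statistical test, for which the reference list cites Friedman, Iman and Davenport, and Nemenyi. As a hedged sketch, the code below applies the standard textbook formulas for the Friedman statistic, the Iman-Davenport correction and the Nemenyi critical difference (CD) to the average ranks in the table. The critical value 3.102 (nine methods, α = 0.05) is taken from Demšar's published table and is an assumption about the setting used here; the function names are illustrative.

```python
import math

# Average ranks of the nine methods over the ten folds, copied from the rank table.
avg_ranks = [8.1, 5.1, 8.9, 6.5, 5.2, 5.1, 2.7, 2.4, 1.0]
N_FOLDS = 10
Q_ALPHA = 3.102  # assumed Nemenyi critical value for k = 9, alpha = 0.05


def friedman_chi2(ranks, n):
    """Friedman statistic computed from average ranks over n data sets (folds)."""
    k = len(ranks)
    return 12 * n / (k * (k + 1)) * (sum(r * r for r in ranks) - k * (k + 1) ** 2 / 4)


def iman_davenport_f(chi2, k, n):
    """Iman-Davenport F statistic derived from the Friedman statistic."""
    return (n - 1) * chi2 / (n * (k - 1) - chi2)


def nemenyi_cd(k, n, q_alpha):
    """Critical difference between average ranks for the Nemenyi post-hoc test."""
    return q_alpha * math.sqrt(k * (k + 1) / (6 * n))


k = len(avg_ranks)
chi2 = friedman_chi2(avg_ranks, N_FOLDS)
f_stat = iman_davenport_f(chi2, k, N_FOLDS)
cd = nemenyi_cd(k, N_FOLDS, Q_ALPHA)
print(round(chi2, 2), round(f_stat, 2), round(cd, 2), round(cd / 2, 2))
```

Under these assumptions half the critical difference comes out at about 1.90, which matches the ± 1.90 shown in the last row of the rank table. Two methods differ significantly when their average ranks are more than one full CD apart.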

| No. | Tweet | Annotated Category | The Category Identified by FT-BERT-FC |
|---|---|---|---|
| 1 | Warts are cause by HPV | Unrelated | Neutral |
| 2 | @handronicus she is not pleased with me. She hasn’t been this mad since I got the cervical cancer vaccine (only sluts get HPV duh) | NegResistant | NegOthers |
| 3 | RT @kylekirkup: I’m no public health expert, but huh?! If you’re male & want free HPV vax in BC, you have to come out. At age 11. http://… | NegCost | NegSafety |
| … | … | … | … |

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Zhang, L.; Fan, H.; Peng, C.; Rao, G.; Cong, Q. Sentiment Analysis Methods for HPV Vaccines Related Tweets Based on Transfer Learning. Healthcare 2020, 8, 307. https://doi.org/10.3390/healthcare8030307