A Taxonomy for Augmented and Mixed Reality Applications to Support Physical Exercises in Medical Rehabilitation—A Literature Review

Abstract

1. Introduction

- Identifies different forms of Visual Guidance aimed at helping patients in their rehabilitation, for example, by allowing them to perform exercises autonomously at home without supervision by a therapist;

- Provides an overview of the AR/MR Technologies currently used for visual output in the field of physical rehabilitation;

- Derives insights regarding the relations between Patient Types, Medical Purposes, Technologies, and specific Visual Guidance approaches used to support the patient in their rehabilitation process.

2. Materials and Methods

2.1. Eligibility Criteria

- The performance of physical exercises in medical rehabilitation. As mentioned in the introduction, medical rehabilitation consists of a variety of treatment methods, including the fields of physiotherapy, occupational therapy, and physical therapy [2]. As these types of therapy are interrelated, we refer to all kinds of physical exercises performed by patients within these fields by the term physical rehabilitation.

- The output technologies Augmented and Mixed Reality (AR/MR) as means to support the different types of physical exercises. We think that the vast amount of available research, combined with the potential of future developments of the technology, merits this rather strict technological focus. Following the approach of other researchers (e.g., [18]), we do not distinguish between AR and MR but use the terms interchangeably or refer to them as AR/MR. As a foundation, we mostly follow the definition of Azuma, who summarized in his early survey of 1997 that AR (a) combines the real and virtual, (b) is interactive in real time, and (c) is registered in 3D [19]. The last requirement in particular is not met by all types of output technology. For example, smart glasses often superimpose a digital layer without registering it in 3D. For the sake of thoroughness, and because the practical definitions of AR and MR are also evolving [20], we still included such approaches in our review. Note that we did not actively exclude applications employing Virtual Reality (VR) as long as our eligibility criteria were met. However, the number of VR applications found may not be representative, since we did not explicitly search for the term Virtual Reality during our paper selection process.

- The actual use of visual stimuli or visual guidance to support patients during the rehabilitation task. Here, we did not limit our review to graphical stimuli but included representations with text as well.

2.2. Literature Sources and Search Strategies

- The research must take place in the context of physical rehabilitation, i.e., physical exercises in medical rehabilitation. Therefore, at least one of the following search terms had to be included in the title, keywords, or abstract: “Physical Therapy”, “Physiotherapy”, “Physical exercise”, “Physical rehabilitation”, “Occupational Therapy”, “Stroke rehabilitation”.

- Furthermore, the research must use some kind of AR-/MR-based visual guidance or stimuli to support the patient in their rehabilitation. Consequently, one of these search terms must be found in the title, keywords, or abstract in addition to the first requirement: “Mixed Reality”, “Extended Reality”, “Augmented Reality”, “Visual Cues”, “Visualization”, “Visualisation”, “Video-based”.
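The two requirements above amount to a conjunction of two term groups. A minimal sketch of such a filter follows, assuming each record is a plain dictionary with hypothetical `title`, `keywords`, and `abstract` fields and that matching is a case-insensitive substring test. The actual query syntax of the databases used is not given here, so this is an illustration rather than the search as it was run.

```python
# Term groups copied from the two search requirements above.
CONTEXT_TERMS = [
    "physical therapy", "physiotherapy", "physical exercise",
    "physical rehabilitation", "occupational therapy", "stroke rehabilitation",
]
GUIDANCE_TERMS = [
    "mixed reality", "extended reality", "augmented reality",
    "visual cues", "visualization", "visualisation", "video-based",
]

# Hypothetical field names; real database exports will name these differently.
SEARCHED_FIELDS = ("title", "keywords", "abstract")


def contains_any(text, terms):
    """Return True if at least one term occurs in the text (case-insensitive)."""
    lowered = text.lower()
    return any(term in lowered for term in terms)


def hits_group(record, terms):
    """Return True if any searched field of the record contains a term."""
    return any(contains_any(record.get(field, ""), terms) for field in SEARCHED_FIELDS)


def matches_search(record):
    """A record qualifies only if it hits both term groups."""
    return hits_group(record, CONTEXT_TERMS) and hits_group(record, GUIDANCE_TERMS)
```

A record mentioning only "Augmented Reality" or only "Physiotherapy" is rejected; both groups have to be represented somewhere in the title, keywords, or abstract.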

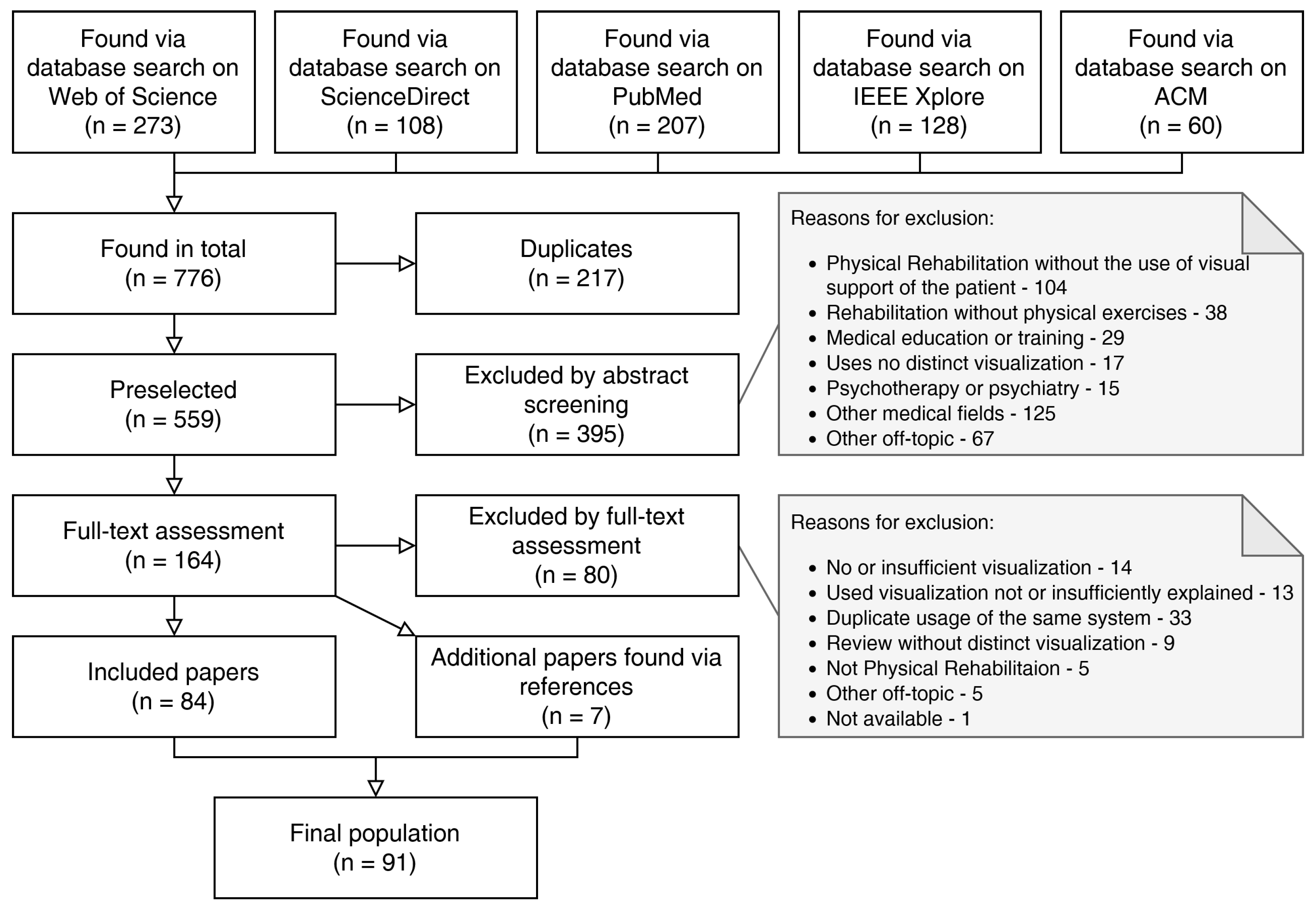

2.3. Study Selection

- The reference addresses physical exercises in medical rehabilitation, but without the use of AR/MR-based or comparable visual guidance or support for the patient (104).

- The reference addresses medical rehabilitation but not physical exercise (38).

- The reference addresses the use of visual support in medical education or training, aimed at the medical professional (29).

- The reference uses no distinct visualization, but instead either considers the feasibility of conventional rehabilitation delivered via video calls or the effect of commercially available mobile games on general activity (17).

- The reference explicitly considers psychotherapy or psychiatry without physical exercise (15).

- The reference addresses other medical fields, such as medical imaging or surgery (125).

- The reference is off-topic in other ways, for example, including other data visualization in Machine Learning, Artificial Intelligence, Veterinary Sciences, applications not employing AR/MR or VR, and game reviews (67).

- The references utilize no or an insufficient level of visualization (14).

- The references mention a system using visualization but do not explain it in enough detail for other researchers and practitioners to build upon the results (13).

- The references utilize a system or application which was already (and better) explained in another paper included in the dataset. This occurred frequently, as research groups commonly use a single application for a number of distinct papers, such as a preliminary report, a feasibility study, a paper explaining the technical development, and a clinical long-term study (33).

- The references are literature reviews showing no distinct visualization, but refer to other potentially interesting papers (9).

- The references are off-topic in other ways (5).

- In addition, one single reference could not be procured (1).
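The counts in the list above can be tallied mechanically. The sketch below is only a bookkeeping aid: the short reason labels are paraphrases introduced here, and because this excerpt does not say whether the counts belong to one screening stage or several, the total is simply the sum of the numbers as listed.

```python
# Exclusion counts as listed above, in document order.
# The labels are shortened paraphrases, not the authors' wording.
EXCLUSIONS = [
    ("no AR/MR-based or comparable visual guidance", 104),
    ("medical rehabilitation without physical exercise", 38),
    ("visual support aimed at medical professionals", 29),
    ("video calls or commercial mobile games only", 17),
    ("psychotherapy or psychiatry without physical exercise", 15),
    ("other medical fields", 125),
    ("off-topic in other ways (first mention)", 67),
    ("no or insufficient visualization", 14),
    ("visualization not explained in enough detail", 13),
    ("system already covered by another included paper", 33),
    ("literature review without distinct visualization", 9),
    ("off-topic in other ways (second mention)", 5),
    ("could not be procured", 1),
]


def total_excluded(exclusions):
    """Sum of all listed exclusion counts."""
    return sum(count for _, count in exclusions)


def largest_reason(exclusions):
    """The (label, count) pair with the highest count."""
    return max(exclusions, key=lambda item: item[1])


if __name__ == "__main__":
    print("listed exclusions:", total_excluded(EXCLUSIONS))
    print("most frequent reason:", largest_reason(EXCLUSIONS))
```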

3. Results

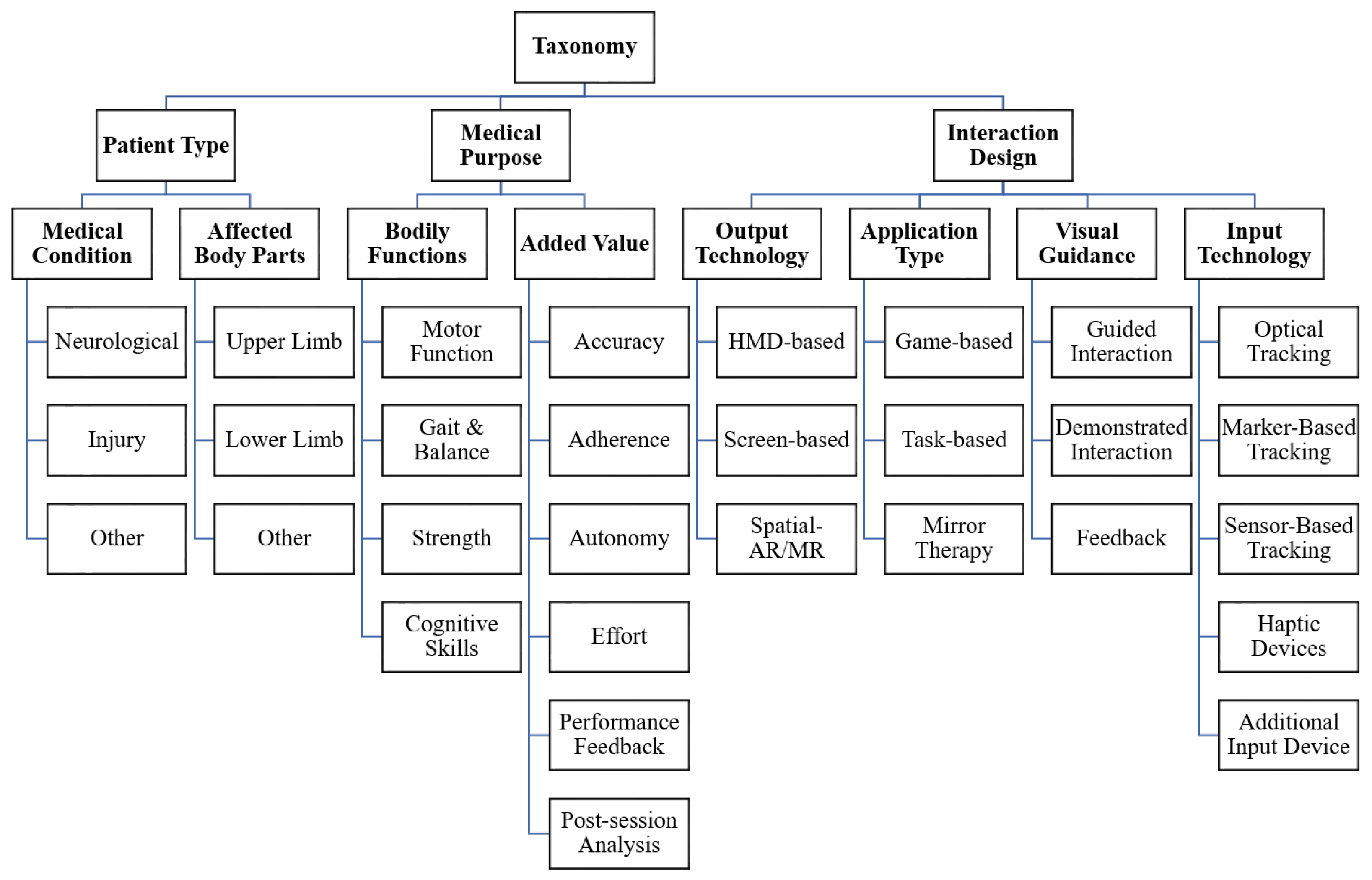

3.1. Taxonomy Construction

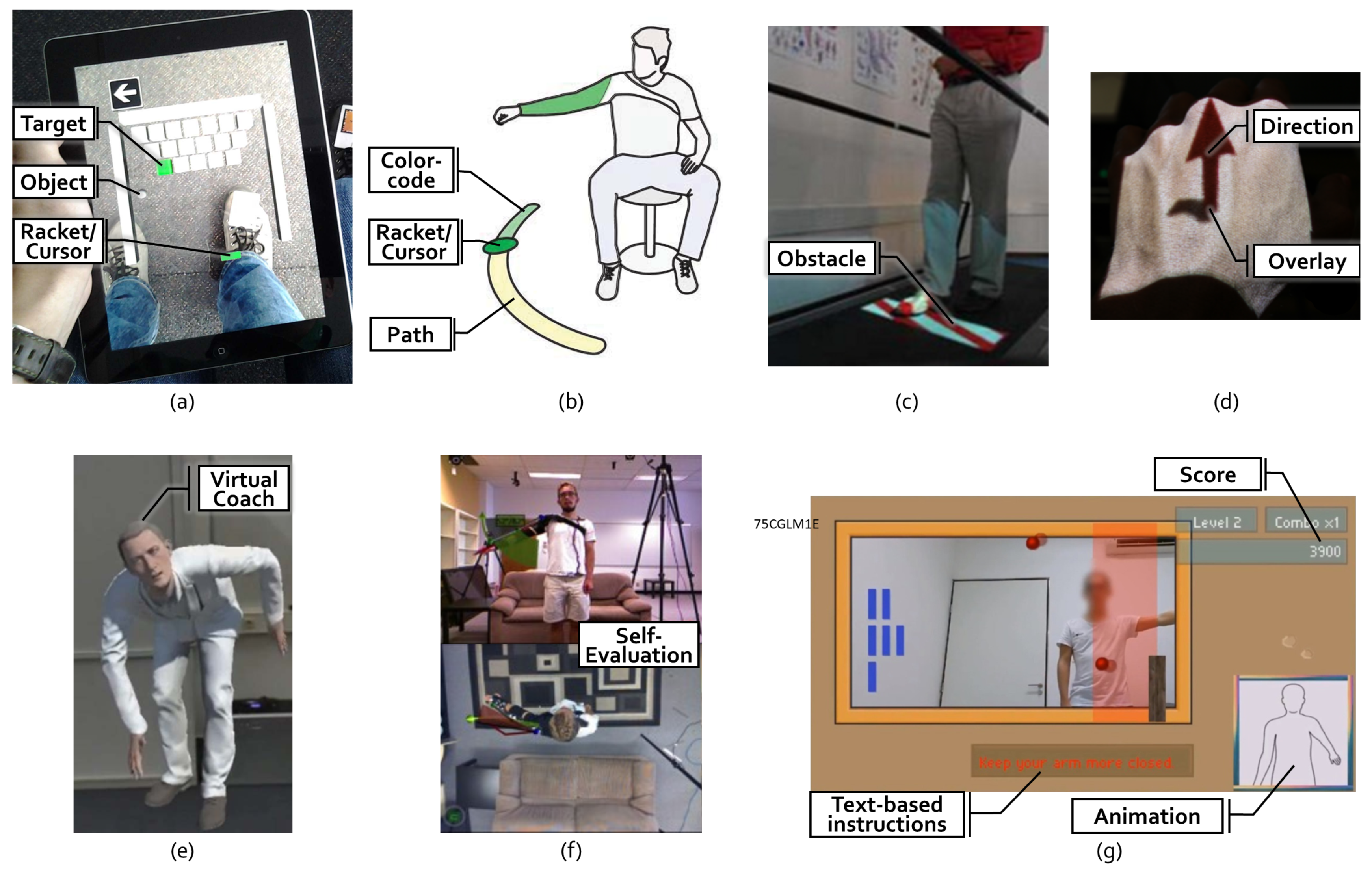

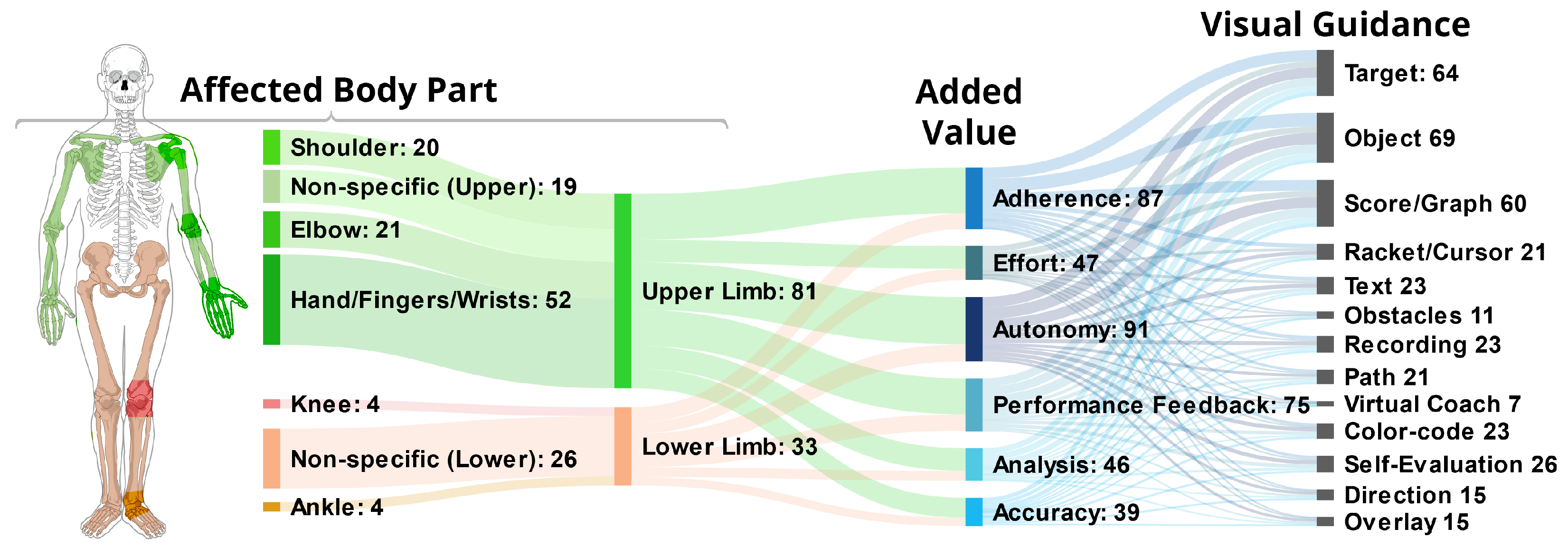

- Target: A total of 64 (56%) of the applications use some form of Target, which we define as a spatial destination to be reached by the patient. For example, in Garcia and Navarro [13], the user controls a ball to hit bricks that act as a target with a paddle controlled by the foot movement (see Figure 3a). Similarly, another game in Bouteraa et al. [27] shows randomly appearing targets that patients need to grasp with their hand and the help of an exoskeleton.

- Path: Related to targets are Paths, which not only show the destination but also a trajectory to be followed. Compared to targets, paths provide information during the entire execution of the exercise. In total, 21 (18%) of the applications use this type of visualization. In SleeveAR [12], a path is shown to direct the arm movement in order to execute the exercise (see Figure 3b). Physio@Home [28] visualizes a wedge that includes a movement arc for proper arm guidance (see Figure 3f).

- Direction: Overall, 15 (13%) applications do not show an entire path but indicate a Direction to be followed instead, usually visualized by an arrow. In the ARDance game [29], the user has to move the AR marker towards the left or right diagonal directions to complete dance moves. LightGuide [30] uses a simple 3D arrow projected onto the user’s hand to visualize the direction of movement. It also uses the Hue cue, a visual hint that uses spatial coloring to indicate direction (see Figure 3d). Tang et al. [28] also visualize such an arrow in addition to their path (see Figure 3f).

- Object: The most common element is Objects, found in 69 (61%) of the analyzed applications. Objects, unlike targets, can be interacted with in some way. For example, in the Ocean Catch Game by Park et al. [31], the user trains grasping movements by catching virtual fish. Similarly, Alamri et al. created a Shelf Exercise [32], which reenacts the motion of placement by showing a virtual cup that can be placed on different spots in a shelf by the user.

- Racket/Cursor: A Racket or Cursor is an object directly controlled by the patient which allows them to manipulate other objects; 21 (18%) of the analyzed solutions utilize such a racket. For example, with the help of a marker, the bar is used to hit the ball in a game of Pong as shown by Dinevan et al. [33]. FruitNinja as applied by Seyedebrahimi et al. [34] shows a circle to depict the current position of the hand to control fruit targets appearing on the screen.

- Obstacle: Obstacles describe hindrances to be avoided or bypassed. They are employed in 11 (10%) of the applications. To facilitate practicing gait and balance exercises, the obstacles can be projected directly onto the treadmill [35] (see Figure 3c) or shown in the form of virtual objects such as blocks or tree trunks [36] to perform certain leg movements.

- Recording: The demonstration may be delivered by a prerecorded Video, Animation, or Picture, as was the case in 23 (20%) of the analyzed applications. For instance, UNICARE Home+ by Yeo et al. [38] shows a guide video on the side of the screen to depict exercise movement. Another example from Khan et al. [39] uses the “follow the leader” approach by showing an animated virtual avatar to be followed by the user.

- Virtual Coach: Unlike an unchanging recording, a Virtual Coach provides interaction with the user. Such a coach was used in seven (6%) of the analyzed solutions. The holographic representation of a virtual coach as depicted in Mostajeran et al. [37] allows users to look at the coach from different angles and see instructions from the same perspective, and it fosters interaction (see Figure 3e).

- Overlay: An Overlay displays a visual instruction directly perceived on the user’s body. In total, 15 (13%) applications employed such an Overlay. In a Kinect-based system as shown by Pachoulakis et al. [40], the user’s body is overlaid with skeleton joints displaying the trainer’s movement, allowing them to follow the exercise.

- Text: In total, 23 (20%) applications deliver Text-Based Instructions or Feedback. ARKanoidAR [11] uses text-based instructions to guide the user in performing the exercise correctly (see Figure 3g). Additionally, a virtual piano game [41] motivates patients by showing text-based feedback such as “Well Done” based on the performance of the user.

- Score/Graph: A total of 60 (53%) of the analyzed solutions provide the patients with some kind of informative Graph, Score or Progress Bar related to their performance. When the task is performed correctly, the score is increased, as depicted in FruitNinja [34] or ARKanoidAR [11] games. Such game elements increase the user’s motivation to keep performing the exercise.

- Color-code: Information can also be conveyed using a Color-Code, which is used by 23 (20%) applications. To illustrate whether the user has reached the target appropriately, interACTION [42] uses a color-coded mechanism to inform the patient. The Target is originally displayed in red. Once the user approaches the target, it changes its color to yellow, and once the target is reached, it turns green. Another approach used by [15] is to compare the user’s pose and the desired pose with a color code. Once the user’s pose matches the desired pose, the circular glyphs are highlighted in green.

- Self-Evaluation: Altogether, 26 (23%) applications grant the patient some kind of improved Self-Evaluation delivered by either another camera angle or mirror to visualize the real affected body part better as shown in Physio@Home [28] (see Figure 3f), or by displaying an avatar or skeleton based on motion tracking data to grant information on the patient’s own movement. One such example is depicted in [26] for gait symmetry, where the user’s whole body movement is shown via an avatar with different views for a better understanding of gait deviations.
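The list above reports each element as a count with a rounded percentage but does not restate the total number of analyzed applications. As a consistency check, one can search for totals that reproduce every reported percentage. The sketch below assumes percentages were rounded half up to whole numbers, which is an assumption about the reporting, not something stated in the text.

```python
# (element, applications, reported percent) as given in the list above.
REPORTED = [
    ("Target", 64, 56), ("Path", 21, 18), ("Direction", 15, 13),
    ("Object", 69, 61), ("Racket/Cursor", 21, 18), ("Obstacle", 11, 10),
    ("Recording", 23, 20), ("Virtual Coach", 7, 6), ("Overlay", 15, 13),
    ("Text", 23, 20), ("Score/Graph", 60, 53), ("Color-code", 23, 20),
    ("Self-Evaluation", 26, 23),
]


def rounded_percent(count, total):
    """Percentage rounded half up, using integer arithmetic only."""
    return (200 * count + total) // (2 * total)


def consistent_totals(reported, candidates):
    """Return every candidate total that reproduces all reported percentages."""
    return [
        total
        for total in candidates
        if all(rounded_percent(count, total) == pct for _, count, pct in reported)
    ]


if __name__ == "__main__":
    # The smallest candidate must be at least the largest single count (69).
    print(consistent_totals(REPORTED, range(70, 201)))
```

Under these assumptions, the only total between 70 and 200 that matches all thirteen percentages is 114. Since applications usually combine several elements, the percentages are not expected to sum to 100.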

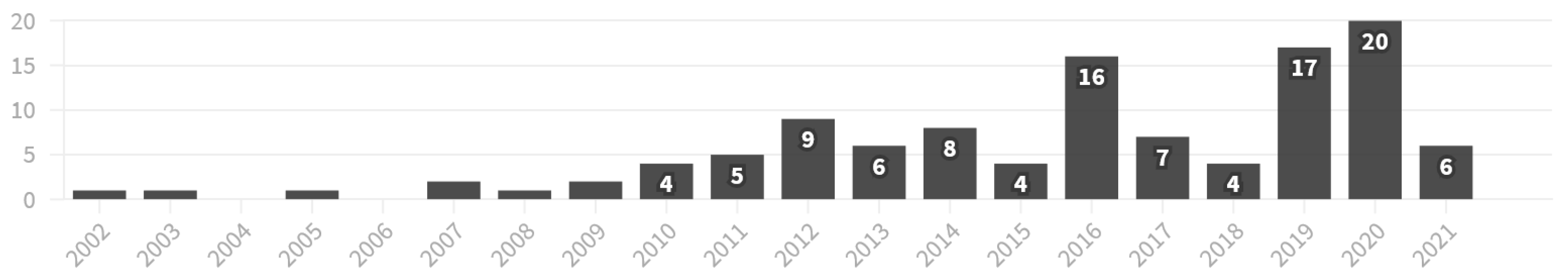

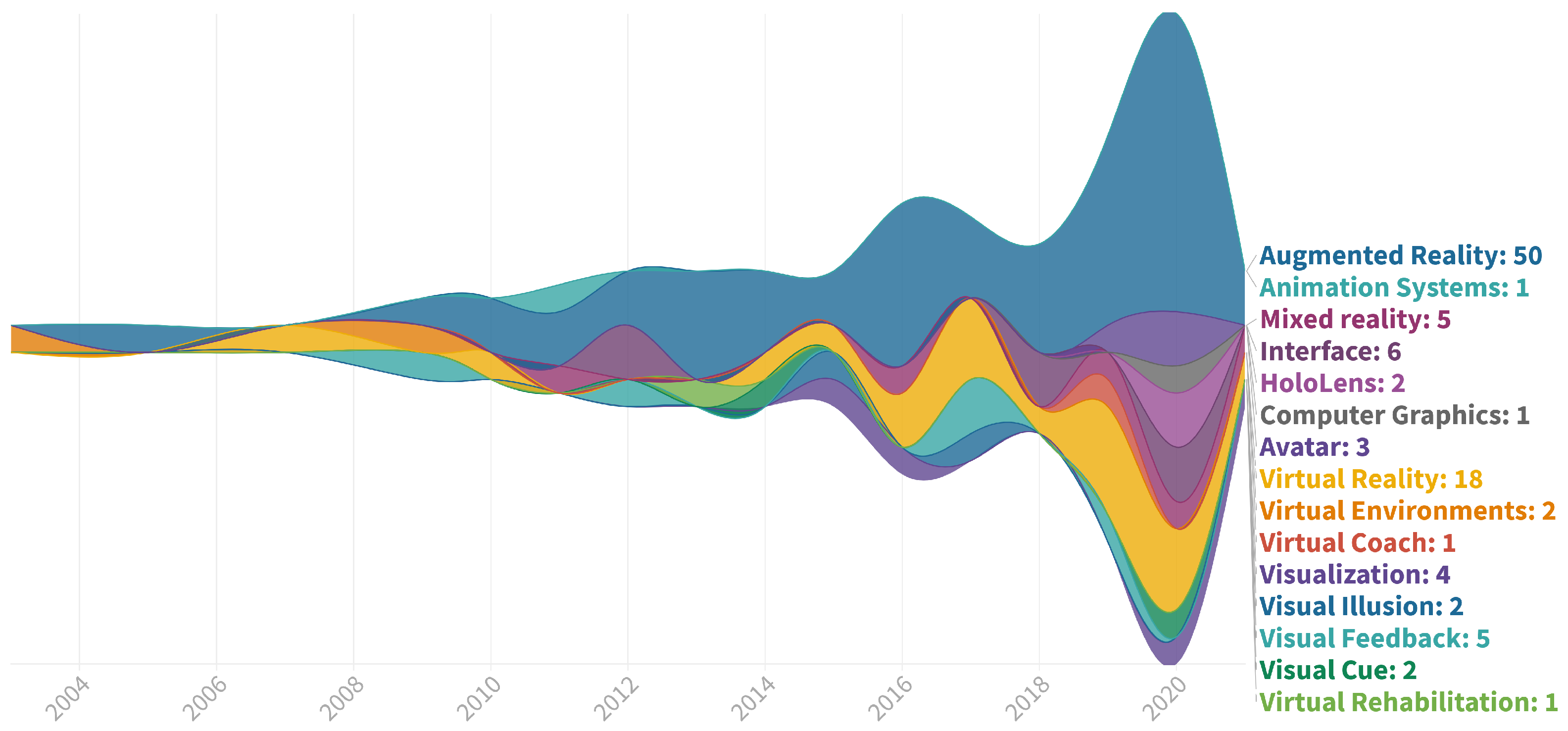

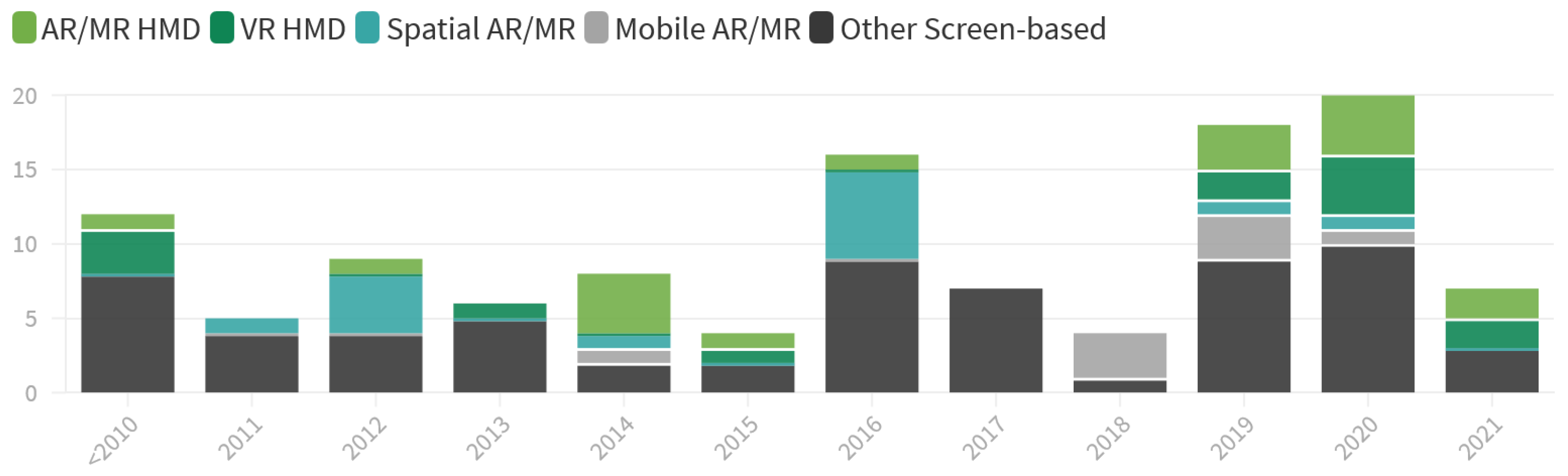

3.2. Time-Based Analysis

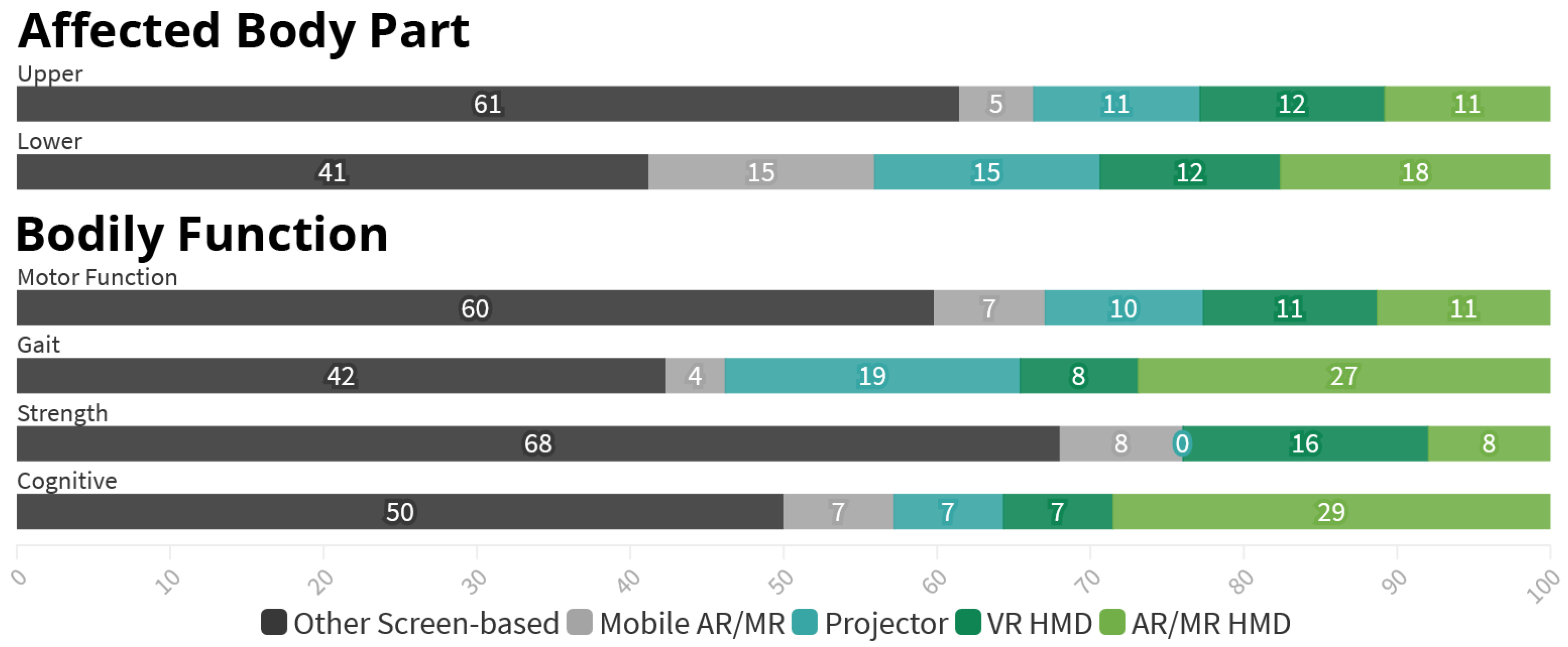

3.3. Patient Type and Bodily Functions

- First, studies aimed at Upper Limb predominantly focus on individual parts of the limb, sometimes multiple, as seen in Figure 6. Among the 81 references covering Upper Limb, 51 (63%) target either the Hand, Fingers or Wrist, 21 (26%) the Elbow and 20 (25%) the Shoulder, leaving only 19 (23%) references without a specific focus. In contrast, the 33 references regarding Lower Limb are overwhelmingly nonspecific (79%), with only 7 applications (21%) focusing on either the Knee, Ankle or both.

- Further, there is a difference in the targeted Bodily Functions depending on the considered Body Part. Of the 81 Upper Limb applications, 79 (98%) target the restoration or improvement of specific Motor Functions, while 19 (23%) aim to increase the patient’s muscle Strength. In comparison, the largest fraction of the 33 Lower Limb references, 24 (73%), addresses the patient’s Gait or Balance, especially in order to prevent future falls. Improvement of specific Motor Functions, however, is targeted in only 19 (58%) references here. Muscle Strength is also considered less often and is explicitly mentioned in five (15%) of the references.

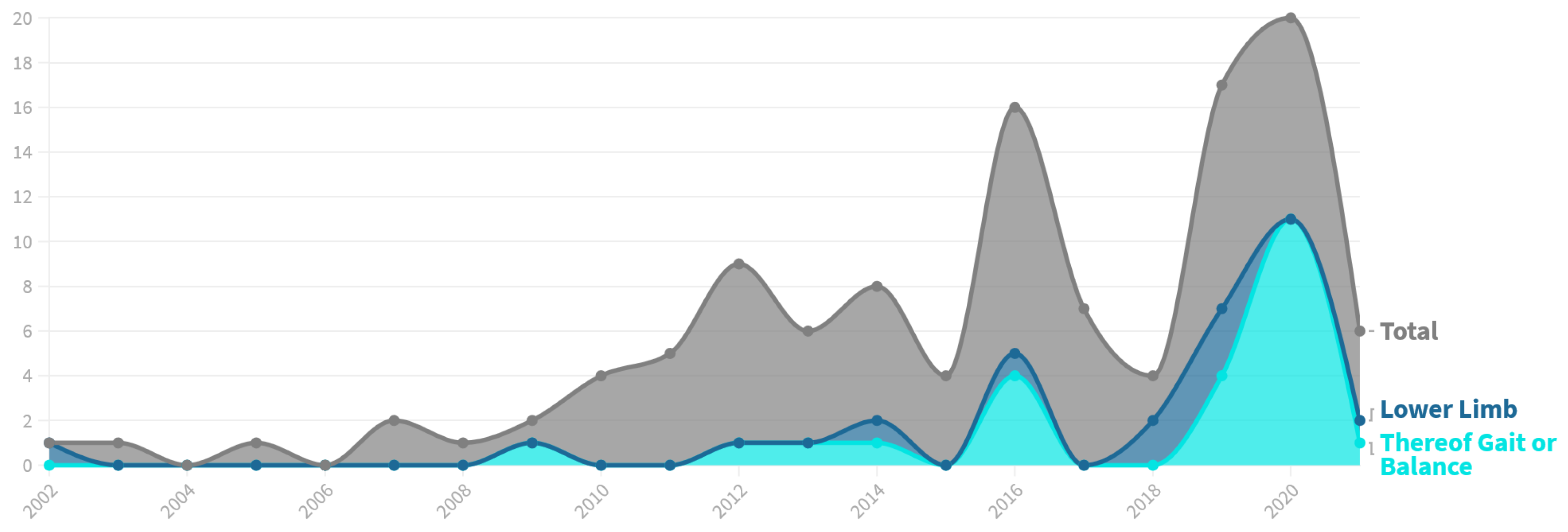

- Lastly, the research focused on both Lower Limb and Gait has increased immensely in recent years, as illustrated in Figure 7. Prior to 2019, we identified a total of 13 references targeting Lower Limb, representing only 18% of the research papers published in this time frame. Further, only eight of these (62%) aim at Gait or Balance. By comparison, 20 out of 43 applications published since 2019 target Lower Limb (47%). Additionally, the share of Lower Limb research focusing on Gait or Balance rose to 80%.
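The period figures in the last bullet can be cross-checked against the overall Lower Limb totals given earlier in this subsection (33 references, 24 of them on Gait or Balance). The sketch below uses only numbers quoted in the text. The one assumption is that the reported 80% corresponds to exactly 16 of the 20 Lower Limb applications published since 2019.

```python
from fractions import Fraction

# Figures quoted in the bullets above.
LOWER_LIMB = {"before_2019": 13, "since_2019": 20}
GAIT_BEFORE_2019 = 8                       # stated as a count
GAIT_SHARE_SINCE_2019 = Fraction(80, 100)  # stated only as a share (assumed exact)
APPLICATIONS_SINCE_2019 = 43


def percent(part, whole):
    """Share in percent, rounded to the nearest whole number."""
    return round(100 * Fraction(part, whole))


def lower_limb_summary():
    """Derive totals and shares from the per-period figures."""
    gait_since = int(GAIT_SHARE_SINCE_2019 * LOWER_LIMB["since_2019"])
    lower_limb_total = sum(LOWER_LIMB.values())
    gait_total = GAIT_BEFORE_2019 + gait_since
    return {
        "lower_limb_total": lower_limb_total,
        "gait_total": gait_total,
        "share_since_2019": percent(LOWER_LIMB["since_2019"], APPLICATIONS_SINCE_2019),
        "gait_share_before_2019": percent(GAIT_BEFORE_2019, LOWER_LIMB["before_2019"]),
        "gait_share_overall": percent(gait_total, lower_limb_total),
    }


if __name__ == "__main__":
    for key, value in lower_limb_summary().items():
        print(f"{key}: {value}")
```

With these inputs the two periods add up to the 33 Lower Limb references and 24 Gait or Balance references reported above, and the derived shares round to the quoted 47%, 62%, and 73%.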

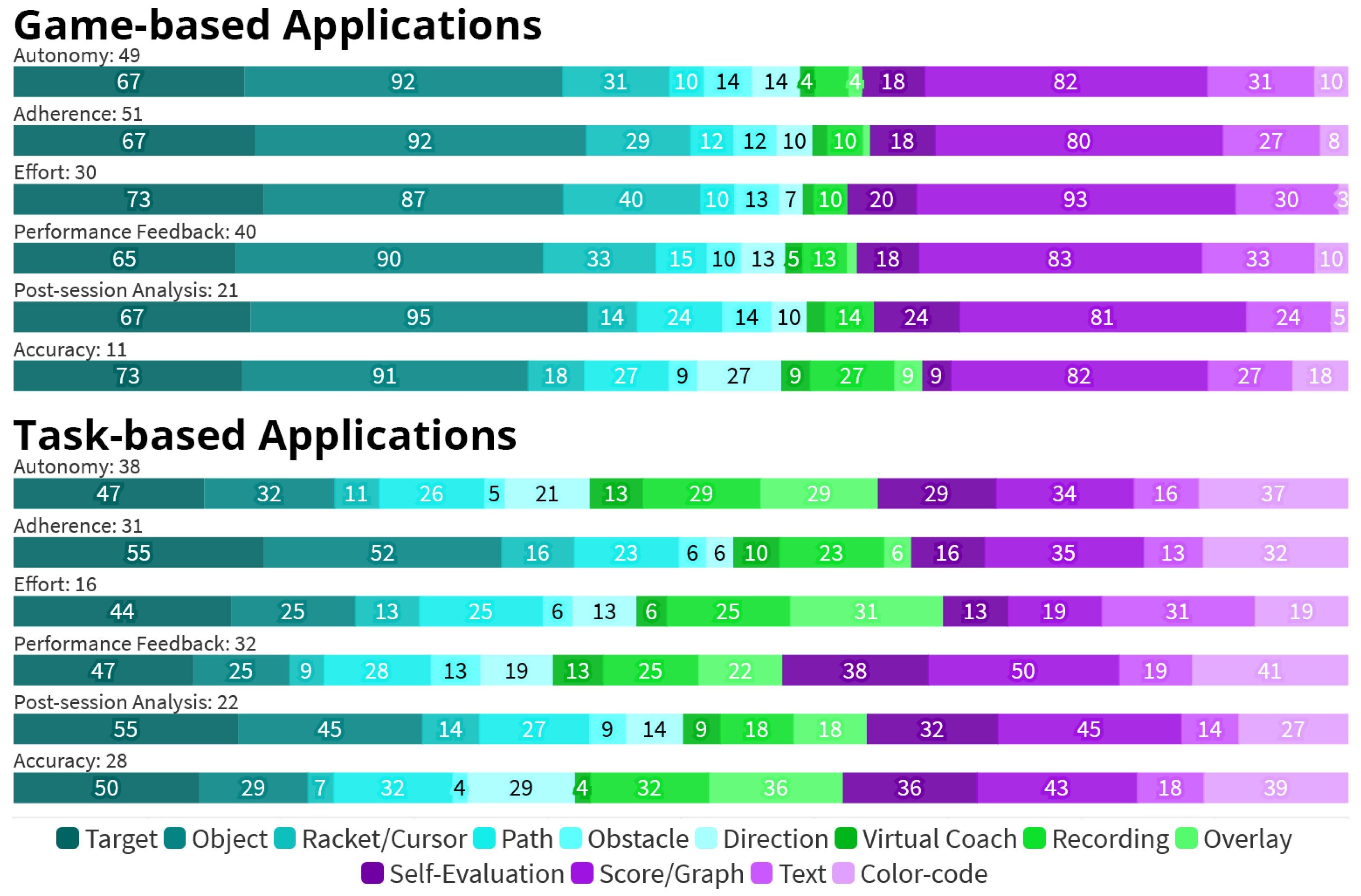

3.4. Medical Purpose and Application Types

- Regarding the Bodily Functions, 11 out of the 13 (85%) applications aiming to provide Cognitive training do so by employing Game elements.

- The 55 Game-based applications are especially often used to increase the patients’ motivation, with 51 (93%) of them targeting increased Adherence. For the 53 Task-based applications, Adherence is a much less prominent goal, which is only addressed by 31 (58%) applications. In total, 30 (55%) of the Game-based applications further aim to increase the patients’ Effort during the session, compared to only 16 (30%) within the set of Task-based approaches.

- Instead, 28 (53%) Task-based applications focus on increased Accuracy, compared to only 11 (20%) within the set of Game-based applications.

- In general, Task-based applications are more balanced in their use of visual elements, while Game-based applications concentrate on certain elements, namely Objects (91%), Scores or Graphs (80%), and Targets (65%).

- The most common visual elements implemented in Game-based applications are from the Guided Interaction group. Elements from this group are used in 53 Game-based applications (96%) and make up 59% of all elements used here. In comparison, they are used in 38 Task-based applications (72%) and account for 46% of elements used.

- In contrast, only nine Game-based applications (16%) utilize one form of Demonstrated Interaction each, accounting for 4% of elements. Within Task-based applications, 24 (47%) employ at least one Demonstrated Interaction element, accounting for 19% of all elements.
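The contrast between Game-based and Task-based applications in the list above is easiest to read as a difference in percentage points. The following sketch recomputes the shares for the three goals from the quoted counts. The function and variable names are illustrative only.

```python
# Totals and goal counts quoted in the list above.
GAME_TOTAL, TASK_TOTAL = 55, 53
GOALS = {
    # goal: (Game-based count, Task-based count)
    "Adherence": (51, 31),
    "Effort": (30, 16),
    "Accuracy": (11, 28),
}


def share(count, total):
    """Share in percent, rounded to the nearest whole number."""
    return round(100 * count / total)


def compare_goals(goals):
    """Return (goal, game %, task %, difference in percentage points) rows."""
    rows = []
    for goal, (game_count, task_count) in goals.items():
        game_pct = share(game_count, GAME_TOTAL)
        task_pct = share(task_count, TASK_TOTAL)
        rows.append((goal, game_pct, task_pct, game_pct - task_pct))
    return rows


if __name__ == "__main__":
    for goal, game_pct, task_pct, diff in compare_goals(GOALS):
        print(f"{goal:<10} game {game_pct:>3}%  task {task_pct:>3}%  diff {diff:+d} pp")
```

A positive difference means the goal is more common among Game-based applications. Accuracy is the only one of the three goals for which the sign flips.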

4. Discussion

4.1. Implications for Visual Guidance

- Even for non-game-like applications, the use of AR/MR still provides a certain fascination and helps to keep users engaged. Whether this is merely a short-lived novelty effect or something that can be sustained over time remains to be seen.

- There is some evidence of a closer relation between specific types of Visual Guidance elements and certain Medical Purposes, such as Gait & Balance being addressed through Obstacle elements. However, the current body of work again does not provide systematic studies of such aspects but bases this conclusion on the overall evaluation of an application.

- There is growing evidence that simple video-based tele-rehabilitation approaches cannot compete with AR/MR approaches, which should provide an interesting opportunity for future business models, as tele-rehabilitation and tele-fitness courses currently dominate the home market.

4.2. Implications for Output Technology

4.3. Research Opportunities

- The analyzed literature reveals a strong focus on rehabilitation following Neurological conditions, especially Stroke. This may be because research grants are more readily awarded to researchers who tackle such universal problems. Still, it would help if more research tried to generalize the findings to other Medical Conditions. In particular, research dealing with AR/MR-based interventions following Injury or physical Disabilities is severely lacking.

- Research on Upper Limb is both more prevalent in general and more diverse, routinely focusing on individual joints and muscles, which can at least partly be explained by the importance of the Hands (and related body parts) for everyday activities and independence. In contrast, a majority of Lower Limb research targets Gait or Balance. Research on the use of AR/MR technology in rehabilitation and exercises of individual joints and muscles of the Lower Limb remains scarce. Additionally, research aimed at muscle Strength is severely underrepresented as well, in particular for Lower Limb.

- Regarding the utilized Output Technology, Screens still account for almost half of all display types used, even in most recent years. In contrast, there is ample research opportunity for more advanced AR/MR technologies such as HMDs and Spatial-AR through projection. HMDs might have an advantage for home-based exercise, but Spatial-AR could be installed in facilities and provide much of the same experience without the drawbacks of a (currently) heavy-weight head-worn device.

- The Visual Guidance elements Virtual Coach and on-body Overlays are relatively rare types of demonstration, although the existing research shows promising results (e.g., [48,49]). While both Virtual Coaches and on-body Overlays are quite complex to realize and require tracking of the environment or body, we think there is an opportunity here, in particular in combination with modern AR/MR-HMDs and their integrated inside-out tracking functionality.

- Furthermore, there is a lack of research explicitly comparing the effectiveness and advantages of selected Visual Guidance elements. While a few references did compare elements [14,28,30], they only do so aimed at very specific use cases. Therefore, this literature review cannot provide more guidance towards the usefulness and appropriateness of certain elements. Still, our review can serve as an inspirational starting point for practitioners and other researchers alike.

4.4. Limitations and Weaknesses

- Firstly, in this scoping literature review, information was gathered from different studies and sources without assessing or weighing the quality, accuracy, and validity of the papers. Instead, the focus was on collecting evidence to provide an overview of the research topic and assist researchers. Therefore, no assertions or suggestions can be made about the feasibility of the employed visualisations, nor is it possible to derive a fair general comparison between them.

- Furthermore, the review was exploratory, employing an open-coding process. Therefore, the chosen categories, codes, and assignments could be considered arbitrary. In order to mitigate this factor, all steps leading to and including the final coding process were performed by at least two independent researchers and discussed with a third reviewer before a final call was made.

- The selection of search keywords is, as with every literature review, a topic for debate. We deliberately did not impose a predefined set of keywords on the search process, but rather applied an iterative process, adding relevant keywords along the way.

- Lastly, within this review, we identified distinct applications. Occasionally, this led to an inclusion of several such applications presented within a single reference or by the same research group. Although distinct, these routinely share many similarities, such as using the same technology, thereby distorting results. Therefore, the results do not accurately describe the amount and quality of research in each category. In order to limit this bias, we did not include papers describing an identical system, but only the one best representing it.

4.5. Strengths

- The review addresses the broad topic of the use of Augmented and Mixed Reality applications in several areas of medical rehabilitation and for multiple conditions and affected body parts. Further, it takes into consideration a broad spectrum of sources, employing different methods and study designs. It is therefore able to provide an overview of the extent, range and nature of currently evolving areas of research as well as summarize research findings.

- By doing this, it also helps to identify trends, needs and research gaps to inform future research.

- The review further provides a first taxonomy to classify the research area. The advantage of the exploratory approach employing an open-coding method lies in its bottom-up construction of categories and items based on actual research and relevant items within the sources.

- Additionally, several in-depth qualitative comparisons of selected recent studies were conducted to provide a better understanding of the reasoning behind choices of Output Technology. With these, this paper aims to further guide future research and experiment set-ups.

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Abbreviations

| ACM | Association for Computing Machinery |

| AR | Augmented Reality |

| HCI | Human Computer Interaction |

| HMD | Head-Mounted Display |

| IEEE | Institute of Electrical and Electronics Engineers |

| MR | Mixed Reality |

| VR | Virtual Reality |

| WHO | World Health Organization |

Appendix A

| 1st Author | Ref. | Med. Condition | Aff. Body Part | Bodily Function | Added Value |

|---|---|---|---|---|---|

| Achanccaray | [52] | NE, NS | UL, FH | MF | AC, AH, EF |

| Agopyan | [55] | NE, NS | LL, KN | MF, GB | AC, EF |

| Alamri | [32] a | NE, NS | UL, FH | MF | AH, AU, PA |

| Alamri | [32] b | NE, NS | UL, FH | MF | AH, AU, PA, PF |

| Alexandre | [56] | NE, NS, CO | UL, FH | MF, ST | AC, AH, AU, PA, PF |

| Aung | [57] a | NE, NS | UL | MF, ST | AH, AU, EF, PF |

| Aung | [57] b | NE, NS | UL, FH, SH, EL | MF, ST | AH, AU, EF, PF |

| Aung | [58] | NE, NS | UL, FH, SH, EL | MF | AU, EF |

| Bakker | [23] | NE, NS | BO | CG | AH, AU, EF, PF |

| Baran | [59] | NE, NS | UL, FH | MF | AH, AU, PA, PF |

| Bell | [42] | IS | LL, KN | MF | AC, AH, AU, PF |

| Bouteraa | [27] | NE, NS | UL, FH | MF | AH, PA, PF |

| Broeren | [60] | NE, NS | UL | MF | AH, AU, PF |

| Broeren | [61] | NE, NS | UL, FH | MF, CG | AH, AU, EF |

| Burke | [62] a | NE, NS | UL | MF, ST | AH, AU, EF, PF |

| Burke | [62] b | NE, NS | UL | MF, ST, CG | AH, AU, PF |

| Camporesi | [63] | CO | UL | MF | AH, AU, PA, PF |

| Cavalcanti | [11] | NE | UL, SH, EL | MF | AC, AH, AU, EF, PA, PF |

| Chang | [51] | NE, NS | LL, KN | MF, GB | AH, EF, PA, PF |

| Chen | [43] | NE, NS | LL | GB | AU |

| Colomer | [14] a | NE, NS | UL, FH | MF | AH, AU, EF, PF |

| Colomer | [14] b | NE, NS | UL, FH, SH, EL | MF | AH, AU, EF, PF |

| Corrêa | [64] | CO | UL, FH, EL | MF | AH, EF |

| Da Gama | [65] | NE, NS | UL, FH, SH, EL | MF | AC, AH, AU, EF, PF |

| Dancu | [66] | NE, NS | UL, FH | MF | AC, AU, PF |

| David | [67] | NE, NS | UL, SH, EL | MF | AH, AU, EF, PF |

| Desai | [68] a | NE, NS | LL | GB | AH, AU, EF, PA, PF |

| Desai | [68] b | NE, NS | UL, EL | MF | AH, AU, EF, PA, PF |

| Desai | [68] c | NE, NS | UL, EL | MF, CG | AH, AU, EF, PA, PF |

| Dinevan | [33] a | NE, NS | UL, FH | MF | AH, AU, PF |

| Dinevan | [33] b | NE, NS | UL, SH | MF, ST | AH, AU, PF |

| Enam | [69] | NE, NS | LL, AN | GB | AH, EF |

| Fonteyn | [47] | NE | LL | MF, GB | AU, PA, PF |

| Garcia | [13] | IS | LL, AN | MF | AH, AU, EF, PF |

| Gauthier | [70] | NE, NS | UL, FH, SH, EL | MF, ST, CG | AH, AU, EF |

| Grimm | [71] | NE, NS | UL, FH, SH, EL | MF | AC, AH, PA |

| Guo | [72] | NE, NS | UL, LL | MF | AH, AU, EF, PA, PF |

| Hacioglu | [73] | CO | UL, FH | MF | AH |

| Halic | [74] | CO | UL, FH | MF, ST | AH, AU, PA, PF |

| Han | [49] | CO | UL | MF | AC, AU, PF |

| Mohd Hashim | [53] | NE, NS | LL | MF, CG | AH, AU |

| Held | [36] | NE, NS | LL | MF, GB, CG | AU, PF |

| Hoermann | [75] | NE, NS | UL, FH | MF, CG | AH, AU, EF |

| Hoermann | [76] | NE, NS | UL, FH | MF | AH, EF |

| 1st Author | Ref. | Output Tech. | Application Type | Visual Guidance | Input Tech. |

|---|---|---|---|---|---|

| Achanccaray | [52] | HVR | TB, MI | OB, RE | AD |

| Agopyan | [55] | SC | TB | SE | MBT, AD |

| Alamri | [32] a | HVR | TB | TA, OB | MBT |

| Alamri | [32] b | HVR | TB | OB, PT | MBT |

| Alexandre | [56] | SC | GA | TA, OB, RC, GS | SBT, HD |

| Aung | [57] a | SC | GA | OB, RC, SE, GS | MBT, AD |

| Aung | [57] b | SC | GA | TA, OB, DI, SE, GS | MBT, AD |

| Aung | [58] | SC | TB, MI | TA, PT, OV | MBT, AD |

| Baker | [23] | HAR | GA | TA, OB, RC, RE, GS | SBT |

| Baran | [59] | SC | TB | OB, PT | MBT, AD |

| Bell | [42] | SC | TB | RE, GS, CC | MBT, SBT |

| Bouteraa | [27] | SC | GA | TA, OB, SE, GS | OT, AD |

| Broeren | [60] | SC | GA | TA, OB, GS | HD |

| Broeren | [61] | SC | GA | TA, OB, RC, OS, GS | HD |

| Burke | [62] a | SC | GA | TA, OB, RC, GS | MBT |

| Burke | [62] b | SC | TB | TA, OB, GS, CC | MBT |

| Camporesi | [63] | SC | TB | VT, RE, SE, GS | OT, MBT |

| Cavalcanti | [11] | SC | GA | TA, OB, RC, RE, GS, TE | OT |

| Chang | [51] | HAR | GA | TA, OB, PT, GS, TE | SBT, AD |

| Chen | [43] | SC | GA | OB, DI, OV, SE, GS, TE | OT |

| Colomer | [14] a | SAR | GA | TA, OB, GS, TE | OT |

| Colomer | [14] b | SAR | GA | OB, RC, GS | OT |

| Corrêa | [64] | SC | TB | OB, CC | MBT |

| Da Gama | [65] | SC | GA | TA, OB, RE, GS, TE | OT |

| Dancu | [66] | SC | TB | TA, VT, RE, SE, GS | OT |

| David | [67] | SC | GA | TA, GS | OT |

| Desai | [68] a | SC | GA | OB, SE, GS | OT |

| Desai | [68] b | SC | GA | OB, SE, GS | OT |

| Desai | [68] c | SC | GA | TA, OB, SE, GS, TE | OT |

| Dinevan | [33] a | SC | GA | OB, RC, GS | MBT |

| Dinevan | [33] b | SC | GA | OB, RC, GS, TE | MBT, AD |

| Enam | [69] | SAR | TB | TA | AD |

| Fonteyn | [47] | SAR | TB | TA, OS | MBT, AD |

| Garcia | [13] | MAR | GA | TA, OB, RC, PT | MBT |

| Gauthier | [70] | SC | GA | TA, OB, OS, SE, GS | OT |

| Grimm | [71] | SC | TB | TA, OB | SBT, HD |

| Guo | [72] | MAR | GA | TA, OB, PT, GS, TE | OT |

| Hacioglu | [73] | SC | GA | OB, PT | OT |

| Halic | [74] | SC | GA | OB, OS, GS | SBT |

| Han | [49] | HAR | TB | RE, OV | OT, SBT, AD |

| Mohd Hashim | [53] | HVR | GA | OB, GS, TE | OT |

| Held | [36] | HAR | GA | TA, OB, PT, OS, DI, TE, CC | SBT |

| Hoermann | [75] | SC | GA, MI | OB, GS, CC | OT, AD |

| Hoermann | [76] | SC | MI | RE | AD |

| 1st Author | Ref. | Med. Condition | Aff. Body Part | Bodily Function | Added Value |

|---|---|---|---|---|---|

| Ines | [77] | NE, NS | UL, FH, EL | MF | AH, AU, EF, PA |

| Jin | [78] | NE, NS | LL | GB, ST | AC, AH, AU, PA |

| Jung | [79] | NE, NS | LL, AN | MF, GB, ST | |

| Keskin | [44] a | NE, NS | UL, FH | MF | AH, AU, EF, PA |

| Keskin | [44] b | NE, NS | UL, FH, EL | MF | AH, AU, EF, PA, PF |

| Khan | [39] | CO | UL, LL | GB | AC, AU |

| King | [80] | NE, NS | UL, FH | MF | AH, AU, EF |

| Klein | [81] | NE, NS | UL, SH | MF | EF |

| Kloster | [21] | IS | BO | | AH, AU, PF |

| Koroleva | [82] a | NE, NS | LL | MF, GB | AC, PA, PF |

| Koroleva | [82] b | NE, NS | UL, FH | MF, ST | AC, PA, PF |

| Kowatsch | [24] | CO | UL, LL, BO | ST | AH, AU, EF, PF |

| LaPiana | [83] | NE, NS | UL, FH | MF, ST | AH, AU, EF |

| Lee | [84] | NE, NP | LL | GB | AH, AU, EF |

| Liu | [85] a | NE, NS | UL, FH | MF, ST | AH, AU, PF |

| Liu | [85] b | NE, NS | UL, FH | MF, ST | AH, AU, PF |

| Liu | [85] c | NE, NS | UL, FH, SH, EL | MF, ST | AC, AH, AU, PF |

| Liu | [26] a | NE, NS | LL | GB | AU, PF |

| Liu | [26] b | NE, NS | UL, FH | MF | AH, AU |

| Lledó | [86] | NE, NS | UL | MF | AC, AH, AU, PA, PF |

| Loureiro | [87] | NE, NS | UL, FH, SH, EL | MF | AC, AH |

| Luo | [88] | NE, NS | UL, FH | MF | AH |

| Manuli | [89] | NE, NS | LL | MF, GB, ST, CG | AH, EF, PF |

| Monge | [90] | NE, NS, IS, CO | LL | MF | AH, AU, EF, PF |

| Mostajeran | [48] | CO | | GB | AU, PA, PF |

| Mostajeran | [37] | CO | | MF, GB, CG | AC, AH, AU, PA, PF |

| Mouraux | [91] | NE, IS | UL | MF | AH, AU |

| Muñoz | [92] | NE, IS | UL, FH, SH, EL | MF | AC, AH, AU, PA, PF |

| Nanapragasam | [93] | NE, NS | LL | GB | |

| Pachoulakis | [40] | NE, NP | UL, FH, SH, EL, LL | MF | AC, AH, AU, EF, PA, PF |

| Paredes | [54] | CO | LL | GB, CG | AH, AU, EF, PA, PF |

| Park | [31] | NE, NS | UL, FH | MF | AH, AU |

| Phongamwong | [46] | NE, NS | LL | MF, GB | AC, PA, PF |

| Ramirez | [94] | NE, NS | LL | ST | AH, AU, EF |

| Regenbrecht | [95] | NE, NS | UL, FH | MF | AH |

| Saraee | [96] | NE, NS, IS, CO | UL | MF, ST | AH, EF, PA |

| Seyedebrahimi | [34] | NE, NS, NP | UL, FH | MF, CG | EF |

| Shen | [41] | NE, NS | UL, FH | MF | AC, AH, AU, PF |

| Sodhi | [30] a | CO | UL, FH | MF | AC, AU |

| Sodhi | [30] b | CO | UL, FH | MF | AC, AU |

| Sodhi | [30] c | CO | UL, FH | MF | AC, AU |

| Sodhi | [30] d | CO | UL, FH | MF | AC, AU, PF |

| Song | [97] a | NE, NS | UL | MF | AH, AU, EF, PF |

| Song | [97] b | NE, NS | UL | MF, CG | AH, AU, PF |

| Sousa | [12] | IS | UL, SH, EL | MF | AC, AH, AU, EF, PA, PF |

| Sror | [98] | NE, NS | UL, EL | MF | AC, EF, PF |

| 1st Author | Ref. | Output Tech. | Application Type | Visual Guidance | Input Tech. |

|---|---|---|---|---|---|

| Ines | [77] | SAR | GA | TA, OB | MBT |

| Jin | [78] | HAR | GA | TA, OB, PT, OS, DI, GS | OT, AD |

| Jung | [79] | HVR | TB | RE, SE | AD |

| Keskin | [44] a | SC | TB | TA, OB | OT |

| Keskin | [44] b | SC | GA | OB, RC, GS | OT |

| Khan | [39] | HVR | TB | RE | MBT |

| King | [80] | SC | GA | TA, OB, RC, GS | MBT |

| Klein | [81] | SC | TB, MI | OV | MBT, AD |

| Kloster | [21] | HVR | GA | TA, TE, CC | SBT |

| Koroleva | [82] a | SC | TB | PT, OS, GS | OT |

| Koroleva | [82] b | SC | GA | OB, PT, GS, CC | OT |

| Kowatsch | [24] | HAR, SC | TB | VT, TE | SBT |

| LaPiana | [83] | HVR | GA | TA, OB, GS | MBT |

| Lee | [84] | HAR | TB | RE, TE | |

| Liu | [85] a | SC | TB | TA, OB, RC, GS, CC | MBT |

| Liu | [85] b | SC | GA | OB, RC, GS | MBT |

| Liu | [85] c | SC | TB | TA, OB, RC, PT, GS, CC | MBT |

| Liu | [26] a | SC | TB | SE | MBT, AD |

| Liu | [26] b | SC | MI | RE, OV | MBT, AD |

| Lledó | [86] | SC | TB | TA, OB, GS, CC | HD |

| Loureiro | [87] | SC | TB | TA, OB, PT | HD |

| Luo | [88] | HAR | TB | OB | SBT |

| Manuli | [89] | SC | TB | TA, OS | AD |

| Monge | [90] | MAR | GA | VT, GS | SBT, AD |

| Mostajeran | [48] | HAR | TB | VT | OT |

| Mostajeran | [37] | HAR | GA | TA, OB, PT, VT | OT, AD |

| Mouraux | [91] | SC | GA, MI | TA, OB, SE, CC | OT |

| Muñoz | [92] | SC | GA | OB, DI, RE, SE, GS | OT |

| Nanapragasam | [93] | SC | | TA, SE | MBT, AD |

| Pachoulakis | [40] | SC | TB | PT, DI, RE, OV, GS, TE | OT |

| Paredes | [54] | HAR | GA | TA, OB, OS, GS, TE | OT, SBT, AD |

| Park | [31] | HVR | GA | TA, OB, GS | OT |

| Phongamwong | [46] | SC | TB | SE, GS | MBT, AD |

| Ramirez | [94] | MAR | GA | TA, OB, GS, TE | SBT |

| Regenbrecht | [95] | SC | GA | OB | OT |

| Saraee | [96] | SC | TB | TA, OB, RC, PT | HD |

| Seyedebrahimi | [34] | SAR, SC | GA | TA, RC, GS | MBT |

| Shen | [41] | HAR | GA | TA, OB, DI, OV, GS, TE, CC | MBT, SBT |

| Sodhi | [30] a | SAR | TB | DI, OV | OT |

| Sodhi | [30] b | SAR | TB | DI, OV | OT |

| Sodhi | [30] c | SAR | TB | TA, PT, OV, CC | OT |

| Sodhi | [30] d | SAR | TB | DI, OV, CC | OT |

| Song | [97] a | MAR | GA | TA, OB, RC, GS | OT |

| Song | [97] b | MAR | GA | TA, OB, GS, TE | OT |

| Sousa | [12] | SAR | TB | TA, RC, PT, OV, GS, CC | MBT |

| Sror | [98] | SC | TB | TA, TE | OT, HD |

| 1st Author | Ref. | Med. Condition | Aff. Body Part | Bodily Function | Added Value |

|---|---|---|---|---|---|

| Syed Ali Fathima | [99] | CO | UL, FH, SH, EL | MF | AH, AU, PA, PF |

| Tang | [28] | IS | UL, SH | MF, ST | AC, AU, PF |

| Theunissen | [50] a | NE, NS | LL | MF, GB | AC, AH, AU, EF, PA, PF |

| Theunissen | [50] b | NE, NS | LL | MF, GB | AC, AH, AU, EF, PA, PF |

| Theunissen | [50] c | NE, NS | LL | MF, GB | AC, AH, AU, EF, PA, PF |

| Thikey | [100] | NE, NS | LL, AN, KN | MF, GB | AC, AH, PA, PF |

| Timmermans | [35] a | NE, NS | LL | GB | AH, AU, PF |

| Timmermans | [35] b | NE, NS | LL | GB | AH, AU, PF |

| Timmermans | [35] c | NE, NS | LL | GB | AH, AU, PF |

| Toh | [29] | NE, NS | UL, FH | MF | AH, AU, EF, PF |

| Trojan | [101] a | NE, NS, CO | UL, FH | MF | AH, AU, PA, PF |

| Trojan | [101] b | NE, NS, CO | UL, FH | MF | AH, AU, PA, PF |

| Trojan | [101] c | NE, NS, CO | UL, FH | MF | AH, AU, PA, PF |

| Trojan | [101] d | NE, NS, CO | UL, FH | MF | AH, AU, PA, PF |

| Velloso | [22] | CO | | ST | AC, AU, EF, PA, PF |

| Vidrios-Serrano | [45] | NE, NS | UL | MF | AH, AU, PA |

| Voinea | [102] | NE, NS | UL, FH | MF | AH, AU |

| Wei | [103] | NE, NS | UL | MF | AH, AU, PA, PF |

| Xue | [104] | NE, NS, IS, CO | UL, FH, SH, EL | MF, ST | AC, AH, AU, PA, PF |

| Yang | [105] | CO | UL, LL | MF | AC, AU, PA, PF |

| Yeh | [106] | NE, NS | UL, FH, SH | MF | AC, AH, AU, PA |

| Yeo | [38] | CO | UL, SH | MF | AC, AH, AU, PA, PF |

| Yu | [15] a | CO | UL | MF, ST | AC, AU, PF |

| Yu | [15] b | CO | UL | MF, ST | AC, AU, PF |

| 1st Author | Ref. | Output Tech. | Application Type | Visual Guidance | Input Tech. |

|---|---|---|---|---|---|

| Syed Ali Fathima | [99] | SC | GA | TA, OB, GS | OT |

| Tang | [28] | SC | TB | TA, PT, DI, OV, SE, GS, TE, CC | MBT |

| Theunissen | [50] a | SC | GA | GS | MBT, SBT, AD |

| Theunissen | [50] b | MAR | GA | TA, OB, GS | SBT |

| Theunissen | [50] c | SC | TB | | SBT |

| Thikey | [100] | SC | TB | TA, SE, GS, CC | MBT |

| Timmermans | [35] a | SAR | TB | OS | AD |

| Timmermans | [35] b | SAR | TB | TA | AD |

| Timmermans | [35] c | SAR | GA | OB | AD |

| Toh | [29] | SC | GA | TA, OB, RC, OS, DI, GS, TE | MBT |

| Trojan | [101] a | HAR | MI | TA, RE, CC | OT |

| Trojan | [101] b | HAR | MI | TA, RE | OT |

| Trojan | [101] c | HAR | GA, MI | TA, OB, RE | OT |

| Trojan | [101] d | HAR | MI | OB, RE | OT |

| Velloso | [22] | SC | TB | DI, RE, OV, SE, GS, TE, CC | OT, SBT |

| Vidrios-Serrano | [45] | HAR | TB | TA, OB, RC, CC | MBT, HD |

| Voinea | [102] | HVR | TB, MI | VT, RE, SE | |

| Wei | [103] | SC | TB | SE, GS, CC | AD |

| Xue | [104] | MAR | GA | TA, OB | OT |

| Yang | [105] | HVR | TB | TA, OB, OV, SE | SBT |

| Yeh | [106] | SC | TB | TA, OB | MBT |

| Yeo | [38] | SC | TB | TA, DI, RE, SE, GS, TE | OT |

| Yu | [15] a | HVR | TB | TA, OB, PT, DI, RE, SE, CC | OT |

| Yu | [15] b | HVR | TB | PT, SE, CC | OT, MBT |

| Category | Abbreviations | | |

|---|---|---|---|

| Medical Condition | NE = Neurological | NS = Stroke | NP = Parkinson |

| | IS = Injury/Surgery | CO = Other Condition | |

| Affected Body Part | UL = Upper Limb | FH = Hand/Fingers/Wrists | SH = Shoulder |

| | EL = Elbow | LL = Lower Limb | AN = Ankle |

| | KN = Knee | BO = Other | |

| Bodily Function | MF = Motor function | GB = Gait/Balance | ST = Strength |

| | CG = Cognitive function | | |

| Added Value | AH = Adherence | PA = Post-session Analysis | AC = Accuracy |

| | AU = Autonomy | PF = Performance Feedback | EF = Effort |

| Output Technology | HVR = VR HMD | HAR = AR/MR HMD | SAR = Spatial AR/MR |

| | MAR = Mobile AR/MR | SC = Other Screen-based | |

| Application Type | GA = Game-based | TB = Task-based | MI = Mirror Therapy |

| Visual Guidance | TA = Target | OB = Object | RC = Racket/Cursor |

| | PT = Path | OS = Obstacle | DI = Direction |

| | VT = Virtual Coach | RE = Recording | OV = Overlay |

| | SE = Self-Evaluation | GS = Score/Graph | TE = Text |

| | CC = Color-code | | |

| Input Technology | OT = Optical Tracking | MBT = Marker-based Tracking | AD = Additional Device |

| | HD = Haptic Device | SBT = Sensor-based Tracking | |

References

- Gimigliano, F.; Negrini, S. The World Health Organization “Rehabilitation 2030: A call for action”. Eur. J. Phys. Rehabil. Med. 2017, 53, 155–168. [Google Scholar] [CrossRef] [PubMed]

- Lenz, G. Rehabilitation Delivery. In Encyclopedia of Public Health; Springer: Dordrecht, The Netherlands, 2008; pp. 1243–1246. [Google Scholar] [CrossRef]

- WHO. Global Health Estimates: Life Expectancy and Leading Causes of Death and Disability; WHO: Genava, Switzerland, 2021. [Google Scholar]

- Virani, S.S.; Alonso, A.; Benjamin, E.J.; Bittencourt, M.S.; Callaway, C.W.; Carson, A.P.; Chamberlain, A.M.; Chang, A.R.; Cheng, S.; Delling, F.N.; et al. Heart disease and stroke statistics—2020 update: A report from the American Heart Association. Circulation 2020, 141, 139–596. [Google Scholar] [CrossRef] [PubMed]

- McKenna, C.; Chen, P.; Barrett, A.M. Stroke: Impact on Life and Daily Function. In Changes in the Brain: Impact on Daily Life; Springer: Berlin, Germany, 2017; pp. 87–115. [Google Scholar] [CrossRef]

- Sjölund, B.H. Physical Medicine and Rehabilitation. In Encyclopedia of Pain; Springer: Berlin/Heidelberg, Germany, 2013; Volume 4, pp. 2912–2916. [Google Scholar] [CrossRef]

- Kirch, W. Physical Therapy. In Encyclopedia of Public Health; Springer: Dordrecht, The Netherlands, 2008; pp. 1108–1109. [Google Scholar]

- Bulat, P. Occupational Therapy. In Encyclopedia of Public Health; Springer: Dordrecht, The Netherlands, 2008. [Google Scholar] [CrossRef]

- Langer, A.; Gassner, L.; Flotz, A.; Hasenauer, S.; Gruber, J.; Wizany, L.; Pokan, R.; Maetzler, W.; Zach, H. How COVID-19 will boost remote exercise-based treatment in Parkinson’s disease: A narrative review. NPJ Parkinson’s Dis. 2021, 7, 1–9. [Google Scholar] [CrossRef] [PubMed]

- Cavalcanti, V.C.; Santana, M.I.D.; Gama, A.E.D.; Correia, W.F. Usability assessments for augmented reality motor rehabilitation solutions: A systematic review. Int. J. Comput. Games Technol. 2018, 2018, 5387896. [Google Scholar] [CrossRef]

- Cavalcanti, V.C.; Ferreira, M.I.d.S.; Teichrieb, V.; Barioni, R.R.; Correia, W.F.M.; Da Gama, A.E.F. Usability and effects of text, image and audio feedback on exercise correction during augmented reality based motor rehabilitation. Comput. Graph. 2019, 85, 100–110. [Google Scholar] [CrossRef]

- Sousa, M.; Vieira, J.; Medeiros, D.; Arsénio, A.; Jorge, J. SleeveAR: Augmented reality for rehabilitation using realtime feedback. In Proceedings of the International Conference on Intelligent User Interfaces, Sonoma, CA, USA, 7–10 March 2016; pp. 175–185. [Google Scholar] [CrossRef]

- Garcia, J.A.; Navarro, K.F. The mobile RehApp™: An AR-based mobile game for ankle sprain rehabilitation. In Proceedings of the SeGAH 2014—IEEE 3rd International Conference on Serious Games and Applications for Health, Rio de Janeiro, Brazil, 14–16 May 2014. [Google Scholar] [CrossRef]

- Colomer, C.; Llorens, R.; Noé, E.; Alcañiz, M. Effect of a mixed reality-based intervention on arm, hand, and finger function on chronic stroke. J. NeuroEng. Rehabil. 2016, 13, 45. [Google Scholar] [CrossRef]

- Yu, X.; Angerbauer, K.; Mohr, P.; Kalkofen, D.; Sedlmair, M. Perspective Matters: Design Implications for Motion Guidance in Mixed Reality. In Proceedings of the 2020 IEEE International Symposium on Mixed and Augmented Reality, ISMAR 2020, Virtual, 9–13 November 2020; pp. 577–587. [Google Scholar] [CrossRef]

- von Elm, E.; Schreiber, G.; Haupt, C.C. Methodische Anleitung für Scoping Reviews (JBI-Methodologie). Z. Evid. Fortbild. Qual. Gesundhwes. 2019, 143, 1–7. [Google Scholar] [CrossRef]

- Arksey, H.; O’Malley, L. Scoping studies: Towards a methodological framework. Int. J. Soc. Res. Methodol. Theory Pract. 2005, 8, 19–32. [Google Scholar] [CrossRef]

- Merino, L.; Schwarzl, M.; Kraus, M.; Sedlmair, M.; Schmalstieg, D.; Weiskopf, D. Evaluating Mixed and Augmented Reality: A Systematic Literature Review (2009–2019). arXiv 2020, arXiv:2010.05988. [Google Scholar]

- Azuma, R.T. A Survey of Augmented Reality. Presence Teleoperators Virtual Environ. 1997, 6, 355–385. [Google Scholar] [CrossRef]

- Speicher, M.; Hall, B.D.; Nebeling, M. What is Mixed Reality? In Proceedings of the 2019 CHI Conference on Human Factors in Computing Systems, Glasgow, UK, 4–9 May 2019; Volume 15. [Google Scholar] [CrossRef]

- Kloster, M.; Babic, A. Mobile VR-Application for Neck Exercises. In Studies in Health Technology and Informatics; IOS Press: Amsterdam, The Netherlands, 2019; Volume 262, pp. 206–209. [Google Scholar] [CrossRef]

- Velloso, E.; Bulling, A.; Gellersen, H. MotionMA. In Proceedings of the SIGCHI Conference on Human Factors in Computing Systems, Montréal, QC, Canada, 22–27 April 2006; ACM: New York, NY, USA, 2013; pp. 1309–1318. [Google Scholar] [CrossRef]

- Bakker, M.; Boonstra, N.; Nijboer, T.; Holstege, M.; Achterberg, W.; Chavannes, N. The design choices for the development of an Augmented Reality game for people with visuospatial neglect. Clin. eHealth 2020, 3, 82–88. [Google Scholar] [CrossRef]

- Kowatsch, T.; Lohse, K.M.; Erb, V.; Schittenhelm, L.; Galliker, H.; Lehner, R.; Huang, E.M. Hybrid ubiquitous coaching with a novel combination of mobile and holographic conversational agents targeting adherence to home exercises: Four design and evaluation studies. J. Med. Internet Res. 2021, 23, e23612. [Google Scholar] [CrossRef] [PubMed]

- Raskar, R.; Welch, G.; Fuchs, H. Spatially augmented reality. In Augmented Reality: Placing Artificial Objects in Real Scenes; CRC Press: Boca Raton, FL, USA, 1999; pp. 64–71. [Google Scholar]

- Liu, L.Y.; Sangani, S.; Patterson, K.K.; Fung, J.; Lamontagne, A. Real-Time Avatar-Based Feedback to Enhance the Symmetry of Spatiotemporal Parameters after Stroke: Instantaneous Effects of Different Avatar Views. IEEE Trans. Neural Syst. Rehabil. Eng. 2020, 28, 878–887. [Google Scholar] [CrossRef] [PubMed]

- Bouteraa, Y.; Abdallah, I.B.; Elmogy, A.M. Training of Hand Rehabilitation Using Low Cost Exoskeleton and Vision-Based Game Interface. J. Intell. Robot. Syst. Theory Appl. 2019, 96, 31–47. [Google Scholar] [CrossRef]

- Tang, R.; Yang, X.D.; Bateman, S.; Jorge, J.; Tang, A. Physio@Home: Exploring visual guidance and feedback techniques for physiotherapy exercises. In Proceedings of the Conference on Human Factors in Computing Systems, Seoul, Korea, 18–23 April 2015; pp. 4123–4132. [Google Scholar] [CrossRef]

- Toh, A.; Jiang, L.; Keong Lua, E. Augmented Reality Gaming for Rehab@Home; Technical report; ACM: New York, NY, USA, 2011. [Google Scholar]

- Sodhi, R.; Benko, H.; Wilson, A. LightGuide. In Proceedings of the SIGCHI Conference on Human Factors in Computing Systems, Austin, TX, USA, 5–10 May 2012; pp. 179–188. [Google Scholar] [CrossRef]

- Park, W.; Kim, J.; Kim, M. Efficacy of virtual reality therapy in ideomotor apraxia rehabilitation. Medicine 2021, 100, e26657. [Google Scholar] [CrossRef]

- Alamri, A.; Kim, H.N.; El Saddik, A. A decision model of stroke patient rehabilitation with augmented reality-based games. In Proceedings of the IEEE 2010 International Conference on Autonomous and Intelligent Systems, Povoa de Varzim, Portugal, 21–23 June 2010. [Google Scholar] [CrossRef]

- Dinevan, A.; Aung, Y.M.; Al-Jumaily, A. Human computer interactive system for fast recovery based stroke rehabilitation. In Proceedings of the 2011 11th International Conference on Hybrid Intelligent Systems (HIS), Malacca, Malaysia, 5–8 December 2011; pp. 647–652. [Google Scholar] [CrossRef]

- Seyedebrahimi, A.; Khosrowabadi, R.; Hondori, H.M. Brain Mechanism in the Human-Computer Interaction Modes Leading to Different Motor Performance. In Proceedings of the 2019 27th Iranian Conference on Electrical Engineering (ICEE), Yazd, Iran, 30 April–2 May 2019; pp. 1802–1806. [Google Scholar] [CrossRef]

- Timmermans, C.; Roerdink, M.; van Ooijen, M.W.; Meskers, C.G.; Janssen, T.W.; Beek, P.J. Walking adaptability therapy after stroke: Study protocol for a randomized controlled trial. Trials 2016, 17, 425. [Google Scholar] [CrossRef]

- Held, J.P.O.; Yu, K.; Pyles, C.; Veerbeek, J.M.; Bork, F.; Heining, S.M.; Navab, N.; Luft, A.R. Augmented reality-based rehabilitation of gait impairments: Case report. JMIR mHealth uHealth 2020, 8, e17804. [Google Scholar] [CrossRef]

- Mostajeran, F.; Steinicke, F.; Ariza Nunez, O.J.; Gatsios, D.; Fotiadis, D. Augmented Reality for Older Adults: Exploring Acceptability of Virtual Coaches for Home-based Balance Training in an Aging Population. In Proceedings of the Conference on Human Factors in Computing Systems, Online, 23 April 2020. [Google Scholar] [CrossRef]

- Yeo, S.M.; Lim, J.Y.; Do, J.G.; Lim, J.Y.; In Lee, J.; Hwang, J.H. Effectiveness of interactive augmented reality-based telerehabilitation in patients with adhesive capsulitis: Protocol for a multi-center randomized controlled trial. BMC Musculoskelet. Disord. 2021, 22, 386. [Google Scholar] [CrossRef]

- Khan, O.; Ahmed, I.; Cottingham, J.; Rahhal, M.; Arvanitis, T.N.; Elliott, M.T. Timing and correction of stepping movements with a virtual reality avatar. PLoS ONE 2020, 15, e0229641. [Google Scholar] [CrossRef]

- Pachoulakis, I.; Xilourgos, N.; Papadopoulos, N.; Analyti, A. A Kinect-Based Physiotherapy and Assessment Platform for Parkinson’s Disease Patients. J. Med. Eng. 2016, 2016, 9413642. [Google Scholar] [CrossRef]

- Shen, Y.; Gu, P.W.; Ong, S.K.; Nee, A.Y. A novel approach in rehabilitation of hand-eye coordination and finger dexterity. Virtual Real. 2012, 16, 161–171. [Google Scholar] [CrossRef]

- Bell, K.M.; Onyeukwu, C.; McClincy, M.P.; Allen, M.; Bechard, L.; Mukherjee, A.; Hartman, R.A.; Smith, C.; Lynch, A.D.; Irrgang, J.J. Verification of a portable motion tracking system for remote management of physical rehabilitation of the knee. Sensors 2019, 19, 1021. [Google Scholar] [CrossRef] [PubMed]

- Chen, S.; Hu, B.; Gao, Y.; Liao, Z.; Li, J.; Hao, A. Lower Limb Balance Rehabilitation of Post-stroke Patients Using an Evaluating and Training Combined Augmented Reality System. In Proceedings of the 2020 IEEE International Symposium on Mixed and Augmented Reality Adjunct (ISMAR-Adjunct), Recife, Brazil, 9–13 November 2020; pp. 217–218. [Google Scholar] [CrossRef]

- Keskin, Y.; Gürcan Atçi, A.; Ürkmez, B.; Akgül, Y.S.; Özaras, N.; Aydin, T. Efficacy of a video-based physical therapy and rehabilitation system in patients with post-stroke hemiplegia: A randomized, controlled, pilot study. Turk Geriatr Dergisi 2020, 23, 118–128. [Google Scholar] [CrossRef]

- Vidrios-Serrano, C.; Bonilla, I.; Vigueras-Gomez, F.; Mendoza, M. Development of a haptic interface for motor rehabilitation therapy using augmented reality. In Proceedings of the Annual International Conference of the IEEE Engineering in Medicine and Biology Society, Milan, Italy, 25–29 August 2015; pp. 1156–1159. [Google Scholar] [CrossRef]

- Phongamwong, C.; Rowe, P.; Chase, K.; Kerr, A.; Millar, L. Treadmill training augmented with real-time visualisation feedback and function electrical stimulation for gait rehabilitation after stroke: A feasibility study. BMC Biomed. Eng. 2019, 1, 20. [Google Scholar] [CrossRef]

- Fonteyn, E.M.; Heeren, A.; Engels, J.J.C.; Boer, J.J.; van de Warrenburg, B.P.; Weerdesteyn, V. Gait adaptability training improves obstacle avoidance and dynamic stability in patients with cerebellar degeneration. Gait Posture 2014, 40, 247–251. [Google Scholar] [CrossRef]

- Mostajeran, F.; Katzakis, N.; Ariza, O.; Freiwald, J.P.; Steinicke, F. Welcoming a holographic virtual coach for balance training at home: Two focus groups with older adults. In Proceedings of the 26th IEEE Conference on Virtual Reality and 3D User Interfaces (VR), Osaka, Japan, 23–27 March 2019; pp. 1465–1470. [Google Scholar] [CrossRef]

- Han, P.H.; Chen, K.W.; Hsieh, C.H.; Huang, Y.J.; Hung, Y.P. AR-Arm: Augmented visualization for guiding arm movement in the first-person perspective. In Proceedings of the ACM International Conference Proceeding Series. Association for Computing Machinery, Geneva, Switzerland, 25–27 February 2016. [Google Scholar] [CrossRef]

- Theunissen, T.; Ensink, C.; Bakker, R.; Keijsers, N. Movin(g) Reality: Rehabilitation after a CVA with Augmented Reality. In Proceedings of the European Conference on Pattern Languages of Programs, Virtual. 1–4 July 2020. [Google Scholar] [CrossRef]

- Chang, W.C.; Ko, L.W.; Yu, K.H.; Ho, Y.C.; Chen, C.H.; Jong, Y.J.; Huang, Y.P. EEG analysis of mixed-reality music rehabilitation system for post-stroke lower limb therapy. J. Soc. Inf. Disp. 2019, 27, 372–380. [Google Scholar] [CrossRef]

- Achanccaray, D.; Izumi, S.I.; Hayashibe, M. Visual-Electrotactile Stimulation Feedback to Improve Immersive Brain-Computer Interface Based on Hand Motor Imagery. Comput. Intell. Neurosc. 2021, 2021, 8832686. [Google Scholar] [CrossRef]

- Mohd Hashim, S.H.; Ismail, M.; Manaf, H.; Hanapiah, F.A. Development of dual cognitive task virtual reality game addressing stroke rehabilitation. In Proceedings of the 2019 3rd International Conference on Virtual and Augmented Reality, Perth, WN, Australia, 23–25 February 2019; pp. 21–25. [Google Scholar] [CrossRef]

- Paredes, T.V.; Postolache, O.; Monge, J.; Girao, P.S. Gait rehabilitation system based on mixed reality. In Proceedings of the 2021 Telecoms Conference (ConfTELE), Leiria, Portugal, 11–12 February 2021. [Google Scholar] [CrossRef]

- Agopyan, H.; Griffet, J.; Poirier, T.; Bredin, J. Modification of knee flexion during walking with use of a real-time personalized avatar. Heliyon 2019, 5, e02797. [Google Scholar] [CrossRef]

- Alexandre, R.; Postolache, O.; Girao, P.S. Physical Rehabilitation based on Smart Wearable and Virtual Reality Serious Game. In Proceedings of the 2019 IEEE International Instrumentation and Measurement Technology Conference (I2MTC), Auckland, New Zealand, 20–23 May 2019; pp. 1–6. [Google Scholar] [CrossRef]

- Aung, Y.M.; Al-Jumaily, A. AR based upper limb rehabilitation system. In Proceedings of the 2012 4th IEEE RAS & EMBS International Conference on Biomedical Robotics and Biomechatronics (BioRob), Rome, Italy, 24–27 June 2012; pp. 213–218. [Google Scholar] [CrossRef]

- Aung, Y.M.; Al-Jumaily, A.; Anam, K. A novel upper limb rehabilitation system with self-driven virtual arm illusion. Annu. Int. Conf. IEEE Eng. Med. Biol. Soc. 2014, 2014, 3614–3617. [Google Scholar] [CrossRef]

- Baran, M.; Lehrer, N.; Siwiak, D.; Chen, Y.; Duff, M.; Ingalls, T.; Rikakis, T. Design of a home-based adaptive mixed reality rehabilitation system for stroke survivors. Annu. Int. Conf. IEEE Eng. Med. Biol. Soc. 2011, 2011, 7602–7605. [Google Scholar] [CrossRef]

- Broeren, J.; Rydmark, M.; Björkdahl, A.; Sunnerhagen, K.S. Assessment and training in a 3-dimensional virtual environment with haptics: A report on 5 cases of motor rehabilitation in the chronic stage after stroke. Neurorehabil. Neural Repair 2007, 21, 180–189. [Google Scholar] [CrossRef] [PubMed]

- Broeren, J.; Claesson, L.; Goude, D.; Rydmark, M.; Sunnerhagen, K.S. Virtual rehabilitation in an activity centre for community-dwelling persons with stroke: The possibilities of 3-dimensional computer games. Cerebrovasc. Dis. 2008, 26, 289–296. [Google Scholar] [CrossRef] [PubMed]

- Burke, J.W.; McNeill, M.D.; Charles, D.K.; Morrow, P.J.; Crosbie, J.H.; McDonough, S.M. Augmented reality games for upper-limb stroke rehabilitation. In Proceedings of the 2010 Second International Conference on Games and Virtual Worlds for Serious Applications, Braga, Portugal, 25–26 March 2010; pp. 75–78. [Google Scholar] [CrossRef]

- Camporesi, C.; Kallmann, M.; Han, J.J. VR solutions for improving physical therapy. In Proceedings of the 2013 IEEE Virtual Reality (VR), Lake Buena Vista, FL, USA, 18–20 March 2013; pp. 77–78. [Google Scholar] [CrossRef]

- Corrêa, D.; Ficheman, I.K.; De, R.; Lopes, D.; Klein, A.N.; Nakazune, S.J. Augmented Reality in Occupacional Therapy. In Proceedings of the 2013 8th Iberian Conference on Information Systems and Technologies (CISTI), Lisboa, Portugal, 19–22 June 2013. [Google Scholar]

- Da Gama, A.E.F.; Chaves, T.M.; Figueiredo, L.S.; Baltar, A.; Meng, M.; Navab, N.; Teichrieb, V.; Fallavollita, P. MirrARbilitation: A clinically-related gesture recognition interactive tool for an AR rehabilitation system. Comput. Methods Programs Biomed. 2016, 135, 105–114. [Google Scholar] [CrossRef] [PubMed]

- Dancu, A. Motor learning in a mixed reality environment. In Proceedings of the 7th Nordic Conference on Human-Computer Interaction Making Sense Through Design—NordiCHI ’12, Copenhagen, Denmark, 14–17 October 2012; ACM Press: New York, NY, USA, 2012; p. 811. [Google Scholar] [CrossRef]

- David, L.; Bouyer, G.; Otmane, S. Towards an upper limb self-rehabilitation assistance system after stroke. In Proceedings of the Virtual Reality International Conference—Laval Virtual 2017, Laval, France, 22–24 March 2017; ACM: New York, NY, USA, 2017; pp. 1–4. [Google Scholar] [CrossRef]

- Desai, K.; Bahirat, K.; Ramalingam, S.; Prabhakaran, B.; Annaswamy, T.; Makris, U.E. Augmented reality-based exergames for rehabilitation. In Proceedings of the 7th International Conference on Multimedia Systems, MMSys 2016, Klagenfurt, Austria, 10–13 May 2016; Association for Computing Machinery, Inc.: New York, NY, USA, 2016; pp. 232–241. [Google Scholar] [CrossRef]

- Enam, N.; Veerubhotla, A.; Ehrenberg, N.; Kirshblum, S.; Nolan, K.J.; Pilkar, R. Augmented-reality guided treadmill training as a modality to improve functional mobility post-stroke: A proof-of-concept case series. Top. Stroke Rehabil. 2021, 28, 624–630. [Google Scholar] [CrossRef] [PubMed]

- Gauthier, L.V.; Kane, C.; Borstad, A.; Strahl, N.; Uswatte, G.; Taub, E.; Morris, D.; Hall, A.; Arakelian, M.; Mark, V. Video Game Rehabilitation for Outpatient Stroke (VIGoROUS): Protocol for a multi-center comparative effectiveness trial of in-home gamified constraint-induced movement therapy for rehabilitation of chronic upper extremity hemiparesis. BMC Neurol. 2017, 17, 109. [Google Scholar] [CrossRef]

- Grimm, F.; Naros, G.; Gharabaghi, A. Compensation or restoration: Closed-loop feedback of movement quality for assisted reach-to-grasp exercises with a multi-joint arm exoskeleton. Front. Neurosci. 2016, 10, 280. [Google Scholar] [CrossRef][Green Version]

- Guo, G.; Segal, J.; Zhang, H.; Xu, W. ARMove: A smartphone augmented reality exergaming system for upper and lower extremities stroke rehabilitation: Demo abstract. In SenSys 2019—Proceedings of the 17th Conference on Embedded Networked Sensor Systems; Association for Computing Machinery, Inc.: New York, NY, USA, 2019; pp. 384–385. [Google Scholar] [CrossRef]

- Hacioglu, A.; Ozdemir, O.F.; Sahin, A.K.; Akgul, Y.S. Augmented reality based wrist rehabilitation system. In Proceedings of the 2016 24th Signal Processing and Communication Application Conference (SIU), Zonguldak, Turkey, 16–19 May 2016; pp. 1869–1872. [Google Scholar] [CrossRef]

- Halic, T.; Kockara, S.; Demirel, D.; Willey, M.; Eichelberger, K. MoMiReS: Mobile mixed reality system for physical & occupational therapies for hand and wrist ailments. In Proceedings of the 2014 IEEE Innovations in Technology Conference, Warwick, RI, USA, 16 May 2014. [Google Scholar] [CrossRef]

- Hoermann, S.; Santos, L.F.D.; Morkisch, N.; Jettkowski, K.; Sillis, M.; Cutfield, N.J.; Schmidt, H.; Hale, L.; Kruger, J.; Regenbrecht, H.; et al. Computerized mirror therapy with augmented reflection technology for stroke rehabilitation: A feasibility study in a rehabilitation center. In Proceedings of the 2015 International Conference on Virtual Rehabilitation (ICVR), Valencia, Spain, 9–12 June 2015; pp. 199–206. [Google Scholar] [CrossRef]

- Hoermann, S.; Ferreira dos Santos, L.; Morkisch, N.; Jettkowski, K.; Sillis, M.; Devan, H.; Kanagasabai, P.S.; Schmidt, H.; Krüger, J.; Dohle, C.; et al. Computerised mirror therapy with Augmented Reflection Technology for early stroke rehabilitation: Clinical feasibility and integration as an adjunct therapy. Disabil. Rehabil. 2017, 39, 1503–1514. [Google Scholar] [CrossRef]

- Ines, D.L.; Abdelkader, G.; Hocine, N. Mixed reality serious games for post-stroke rehabilitation. In Proceedings of the 2011 5th International Conference on Pervasive Computing Technologies for Healthcare (PervasiveHealth) and Workshops, Dublin, Ireland, 23–26 May 2011; pp. 530–537. [Google Scholar] [CrossRef][Green Version]

- Jin, Y.; Monge, J.; Postolache, O.; Niu, W. Augmented Reality with Application in Physical Rehabilitation. In Proceedings of the 2019 International Conference on Sensing and Instrumentation in IoT Era (ISSI), Lisbon, Portugal, 29–30 August 2019; pp. 1–6. [Google Scholar] [CrossRef]

- Jung, G.U.; Moon, T.H.; Park, G.W.; Lee, J.Y.; Lee, B.H. Use of Augmented Reality-Based Training with EMG-Triggered Functional Electric Stimulation in Stroke Rehabilitation. J. Phys. Therapy Sci. 2013, 25, 147–151. [Google Scholar] [CrossRef]

- King, M.; Hale, L.; Pekkari, A.; Persson, M. An affordable, computerized, table-based exercise system for stroke survivors. Disabil. Rehabil. Assist. Technol. 2010, 5, 288–293. [Google Scholar] [CrossRef]

- Klein, A.; De Assis, G.A. A markeless augmented reality tracking for enhancing the user interaction during virtual rehabilitation. In Proceedings of the 2013 XV Symposium on Virtual and Augmented Reality, Cuiaba, Brazil, 28–31 May 2013; pp. 117–124. [Google Scholar] [CrossRef]

- Koroleva, E.S.; Tolmachev, I.V.; Alifirova, V.M.; Boiko, A.S.; Levchuk, L.A.; Loonen, A.J.M.; Ivanova, S.A. Serum BDNF’s Role as a Biomarker for Motor Training in the Context of AR-Based Rehabilitation after Ischemic Stroke. Brain Sci. 2020, 10, 623. [Google Scholar] [CrossRef]

- LaPiana, N.; Duong, A.; Lee, A.; Alschitz, L.; Silva, R.M.; Early, J.; Bunnell, A.; Mourad, P. Acceptability of a mobile phone–based augmented reality game for rehabilitation of patients with upper limb deficits from stroke: Case study. JMIR Rehabil. Assist. Technol. 2020, 7, e17822. [Google Scholar] [CrossRef] [PubMed]

- Lee, A.; Hellmers, N.; Vo, M.; Wang, F.; Popa, P.; Barkan, S.; Patel, D.; Campbell, C.; Henchcliffe, C.; Sarva, H. Can google glass™ technology improve freezing of gait in parkinsonism? A pilot study. Disabil. Rehabil. Assist. Technol. 2020, 1–11. [Google Scholar] [CrossRef] [PubMed]

- Liu, J.; Mei, J.; Zhang, X.; Lu, X.; Huang, J. Augmented reality-based training system for hand rehabilitation. Multimed. Tools Appl. 2017, 76, 14847–14867. [Google Scholar] [CrossRef]

- Lledó, L.D.; Díez, J.A.; Bertomeu-Motos, A.; Ezquerro, S.; Badesa, F.J.; Sabater-Navarro, J.M.; García-Aracil, N. A comparative analysis of 2D and 3D tasks for virtual reality therapies based on robotic-assisted neurorehabilitation for post-stroke patients. Front. Aging Neurosci. 2016, 8, 205. [Google Scholar] [CrossRef] [PubMed]

- Loureiro, R.; Amirabdollahian, F.; Topping, M.; Driessen, B.; Harwin, W. Upper Limb Robot Mediated Stroke Therapy-GENTLE/s Approach. Auton. Rob. 2003, 15, 35–51. [Google Scholar] [CrossRef]

- Luo, X.; Kline, T.; Fischer, H.C.; Stubblefield, K.A.; Kenyon, R.V.; Kamper, D.G. Integration of augmented reality and assistive devices for post-stroke hand opening rehabilitation. Conf. Proc. IEEE Eng. Med. Biol. Soc. 2005, 2005, 6855–6858. [Google Scholar] [CrossRef]

- Manuli, A.; Maggio, M.G.; Latella, D.; Cannavò, A.; Balletta, T.; De Luca, R.; Naro, A.; Calabrò, R.S. Can robotic gait rehabilitation plus Virtual Reality affect cognitive and behavioural outcomes in patients with chronic stroke? A randomized controlled trial involving three different protocols. J. Stroke Cerebrovasc. Dis. 2020, 29, 104994. [Google Scholar] [CrossRef]

- Monge, J.; Postolache, O. Augmented Reality and Smart Sensors for Physical Rehabilitation. In Proceedings of the 2018 International Conference and Exposition on Electrical And Power Engineering (EPE), Iasi, Romania, 18–19 October 2018; pp. 1010–1014. [Google Scholar] [CrossRef]

- Mouraux, D.; Brassinne, E.; Sobczak, S.; Nonclercq, A.; Warzée, N.; Sizer, P.S.; Tuna, T.; Penelle, B. 3D augmented reality mirror visual feedback therapy applied to the treatment of persistent, unilateral upper extremity neuropathic pain: A preliminary study. J. Man. Manip. Therapy 2017, 25, 137–143. [Google Scholar] [CrossRef]

- Muñoz, G.F.; Mollineda, R.A.; Casero, J.G.; Pla, F. A rgbd-based interactive system for gaming-driven rehabilitation of upper limbs. Sensors 2019, 19, 3478. [Google Scholar] [CrossRef]

- Nanapragasam, A.; Pelah, A.; Cameron, J.; Lasenby, J. Visualizations for locomotor learning with real time feedback in VR. In Proceedings of the 6th Symposium on Applied Perception in Graphics and Visualization—APGV ’09, Chania, Crete, Greece, 30 September–2 October 2009; ACM Press: New York, NY, USA, 2009; p. 139. [Google Scholar] [CrossRef]

- Ramírez, E.R.; Petrie, R.; Chan, K.; Signal, N. A Tangible Interface and Augmented Reality Game for Facilitating Sit-to-Stand Exercises for Stroke Rehabilitation. In Proceedings of the ACM International Conference Proceeding Series—IOT ’18, Santa Barbara, CA, USA, 15–18 October 2018; Association for Computing Machinery: New York, NY, USA, 2018. [Google Scholar] [CrossRef]

- Regenbrecht, H.; Collins, J.; Hoermann, S. A leap-supported, hybrid AR interface approach. In Proceedings of the 25th Australian Computer-Human Interaction Conference on Augmentation, Application, Innovation, Collaboration—OzCHI ’13, Adelaide, Australia, 25–29 November 2013; ACM Press: New York, NY, USA, 2013; pp. 281–284. [Google Scholar] [CrossRef]

- Saraee, E.; Betke, M. Dynamic Adjustment of Physical Exercises Based on Performance Using the Proficio Robotic Arm. In Proceedings of the ACM International Conference Proceeding Series—PETRA’16, Corfu Island, Greece, 29 June–1 July 2016; Association for Computing Machinery: New York, NY, USA, 2016. [Google Scholar] [CrossRef]

- Song, X.; Ding, L.; Zhao, J.; Jia, J.; Shull, P. Cellphone Augmented Reality Game-based Rehabilitation for Improving Motor Function and Mental State after Stroke. In Proceedings of the 2019 IEEE 16th International Conference on Wearable and Implantable Body Sensor Networks (BSN), Chicago, IL, USA, 19–22 May 2019; pp. 1–4. [Google Scholar] [CrossRef]

- Sror, L.; Vered, M.; Treger, I.; Levy-Tzedek, S.; Levin, M.F.; Berman, S. A virtual reality-based training system for error-augmented treatment in patients with stroke. In Proceedings of the 2019 International Conference on Virtual Rehabilitation (ICVR), Tel Aviv, Israel, 21–24 July 2019; pp. 1–2. [Google Scholar] [CrossRef]

- Syed Ali Fathima, S.J.; Shankar, S. AR using NUI based physical therapy rehabilitation framework with mobile decision support system:A global solution for remote assistance. J. Glob. Inf. Manag. 2018, 26, 36–51. [Google Scholar] [CrossRef]

- Thikey, H.; Grealy, M.; van Wijck, F.; Barber, M.; Rowe, P. Augmented visual feedback of movement performance to enhance walking recovery after stroke: Study protocol for a pilot randomised controlled trial. Trials 2012, 13, 163. [Google Scholar] [CrossRef] [PubMed]

- Trojan, J.; Diers, M.; Fuchs, X.; Bach, F.; Bekrater-Bodmann, R.; Foell, J.; Kamping, S.; Rance, M.; Maaß, H.; Flor, H. An augmented reality home-training system based on the mirror training and imagery approach. Behav. Res. Methods 2014, 46, 634–640. [Google Scholar] [CrossRef] [PubMed]

- Voinea, A.; Moldoveanu, A.; Moldoveanu, F. 3D visualization in IT systems used for post stroke recovery: Rehabilitation based on virtual reality. In Proceedings of the 2015 20th International Conference on Control Systems and Computer Science, Bucharest, Romania, 27–29 May 2015; pp. 856–862. [Google Scholar] [CrossRef]

- Wei, X.; Chen, Y.; Jia, X.; Chen, Y.; Xie, L. Muscle Activation Visualization System Using Adaptive Assessment and Forces-EMG Mapping. IEEE Access 2021, 9, 46374–46385.

- Xue, Y.; Zhao, L.; Xue, M.; Fu, J. Gesture Interaction and Augmented Reality based Hand Rehabilitation Supplementary System. In Proceedings of the 2018 IEEE 3rd Advanced Information Technology, Electronic and Automation Control Conference (IAEAC), Chongqing, China, 12–14 October 2018; pp. 2572–2576.

- Yang, U.; Kim, G.J. Implementation and Evaluation of “Just Follow Me”: An Immersive, VR-Based, Motion-Training System. Presence Teleoperators Virtual Environ. 2002, 11, 304–323.

- Yeh, S.C.; Rizzo, A.; McLaughlin, M.; Parsons, T. VR enhanced upper extremity motor training for post-stroke rehabilitation: Task design, clinical experiment and visualization on performance and progress. Stud. Health Technol. Inf. 2007, 125, 506–511.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Butz, B.; Jussen, A.; Rafi, A.; Lux, G.; Gerken, J. A Taxonomy for Augmented and Mixed Reality Applications to Support Physical Exercises in Medical Rehabilitation—A Literature Review. Healthcare 2022, 10, 646. https://doi.org/10.3390/healthcare10040646