Abstract

Medical image semantic segmentation is essential in computer-aided diagnosis systems. It can separate tissues and lesions in the image and provide valuable information to radiologists and doctors. Breast ultrasound (BUS) imaging has several advantages: it involves no radiation, and it is low-cost and portable. However, BUS images have two unfavorable characteristics: (1) the datasets are often small because ground truths are difficult to obtain, and (2) the images are usually of poor quality. Trustworthy BUS image segmentation is urgently needed in breast cancer computer-aided diagnosis systems, especially for fully understanding BUS images and segmenting the breast anatomy, which supports breast cancer risk assessment. The main challenge for this task is the uncertainty in both the pixels and the channels of BUS images. In this paper, we propose a Spatial and Channel-wise Fuzzy Uncertainty Reduction Network (SCFURNet) for BUS image semantic segmentation. The proposed architecture can reduce the uncertainty in the original segmentation frameworks. We apply the proposed method to four datasets (1830 images in total): (1) a five-category BUS image dataset with 325 images, and (2) three BUS image datasets with only the tumor category. The proposed approach is compared with state-of-the-art methods such as U-Net with VGG-16, ResNet-50/ResNet-101, Deeplab, FCN-8s, PSPNet, U-Net with information extension, attention U-Net, and U-Net with the self-attention mechanism. Compared with the original U-shape network with ResNet-101, it achieves 2.03%, 1.84%, and 2.88% improvements in the Jaccard index on the three public BUS datasets, and a 6.72% improvement in the tumor category and a 4.32% improvement in overall performance on the five-category dataset, since it can handle the uncertainty effectively and efficiently.

1. Introduction

Medical imaging is the most important approach in the early detection and diagnosis of diseases. A trustworthy computer-aided diagnosis (CAD) system is designed to assist doctors and radiologists in making a diagnostic decision. Image segmentation is one of the most important steps in a CAD system. It can detect lesions and separate them from the background. The accuracy of the segmentation affects whether the CAD system can be trusted. Image segmentation has been applied to computed tomography (CT) imaging for lung and nasopharyngeal cancer [1,2], magnetic resonance (MR) imaging for breast, musculoskeletal, and brain [3,4], chest and dental X-ray imaging [5,6], and ultrasound imaging [7]. Before the advent of deep convolutional neural networks, medical image segmentation methods were based on classic machine learning and computer vision methods such as the watershed-based method [1], thresholding method [8], clustering method [9], active contour model [10], Markov model [11], etc.

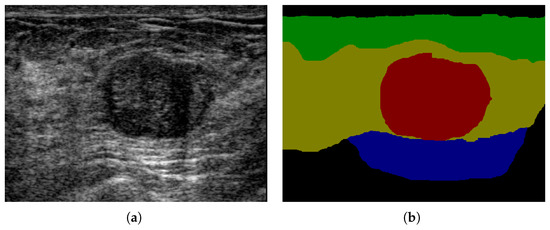

Compared with CT, MR, and X-ray imaging, ultrasound imaging is harmless, low-cost, and portable. Breast ultrasound (BUS) imaging is one of the most important modalities for early breast cancer detection [12,13]. However, BUS images usually have low contrast and poor quality, and they contain inherent speckle noise and shadows [14]. It is critical to develop computer-aided diagnosis systems for breast ultrasound images, especially for breast anatomy segmentation (multi-category BUS semantic segmentation). The location relation between the breast tissues and the tumor can provide important context information in breast cancer diagnosis (shown in Figure 1). For example, the tumor region (red) is much more likely to be located in the mammary layer (yellow) than in other layers. The breast anatomy can also provide important information for breast density calculation, which is highly correlated with cancer risk [15]. There are only a few studies on multi-category BUS semantic segmentation because most BUS image datasets only contain ground truths for tumors. In [16], U-Net was applied to BUS image segmentation with three categories: tumor, mammary layer, and background. The location relation between the mammary layer and the tumor was employed to refine the segmentation results. An encoder-decoder network with a deep boundary regularized constraint and adaptive domain transfer was proposed in [17] to segment four layers in BUS images. In [18], a deep learning method based on a self-attention mechanism was proposed for breast anatomy layer segmentation.

Figure 1.

Breast anatomy: (a) BUS image; (b) ground truth; green: fat layer, yellow: mammary layer, blue: muscle layer, red: tumor, and black: background.

Although some segmentation networks for BUS images [19,20,21,22] have increased the performance of BUS image segmentation, three main challenges remain: (1) The edges of the lesion area in BUS images are generally blurred (as shown in Figure 1a). (2) The background regions in BUS images have intensities similar to those of the lesion area. (3) The boundaries between different breast tissue layers are hard to classify, which hinders the segmentation of breast tissues. These challenges constitute the uncertainty in BUS images. Meanwhile, deep learning algorithms also contain uncertainty. Epistemic and aleatoric uncertainty in deep learning architectures and medical images are described in [23,24]. The entropy [24] and hierarchical resolution segmentation [23] are used to estimate the uncertainty. Attention mechanisms in convolutional neural networks demonstrate that different pixels and channels in a feature map have different degrees of importance in making the final classification decision. They can provide context information to generate novel features and reduce noise in the original feature map. Spatial-wise and channel-wise attention mechanisms can also reduce the random uncertainty in the pixels and channels of the convolutional features [25,26]. The uncertainty in the pixels and channels measures the difficulty in classifying the pixels and channels into different categories. However, attention mechanisms cannot handle non-random (non-statistical) uncertainty. Fuzzy logic methods [27,28] are used to handle non-random uncertainty in many classic machine learning and deep learning algorithms.

To increase the accuracy of BUS image segmentation in CAD systems and to take advantage of both attention mechanisms and fuzzy logic, two novel fuzzy attention mechanisms, the spatial-wise and channel-wise fuzzy blocks, are added to the classic U-shape network with a ResNet-101 network structure. The resulting Spatial and Channel-wise Fuzzy Uncertainty Reduction Network (SCFURNet) is proposed to reduce uncertainty and noise in BUS images and to conduct semantic segmentation. The major contributions of this research are:

- The proposed spatial-wise fuzzy blocks (SFBs) measure and reduce the spatial uncertainties (spatial dimension) in convolutional feature maps, and the proposed channel-wise fuzzy blocks (CFBs) handle the channel uncertainties (channel dimension) in convolutional feature maps.

- A novel membership function in deep learning is designed. Membership functions in the fuzzy blocks are defined by convolutional operators with a Sigmoid activation function to increase the non-linearity of the membership function.

- A novel fuzzy logic uncertainty measurement method is proposed. Fuzzy entropy [29,30,31], calculated from the memberships of different categories, is utilized to measure the uncertainties of pixels and channels. Uncertain pixels and channels are those with higher fuzzy entropies (details are discussed in Section 3).

The paper is organized as follows: We briefly review the related works in Section 2. Section 3 introduces the proposed spatial and channel-wise fuzzy uncertainty reduction method. Section 4 shows the semantic segmentation results on four datasets and compares the proposed method with state-of-the-art methods. Discussions based on experimental results are presented in Section 5. The conclusions are in Section 6.

2. Related Works

2.1. BUS Image Segmentation

Classic machine learning and computer vision approaches have been applied to BUS image segmentation and classification [32,33]. A gray-level thresholding method was proposed to find the regions of interest (ROIs) of tumors, and the region growing method was employed for tumor segmentation on the ROIs [8]. A method based on k-means clustering [34] was reported. The classic k-means clustering was enhanced by Ant Colony Optimization (ACO) for initializing the cluster centroids, and a regularization term was added to the k-means clustering function to increase the stability of the clustering method. These non-deep learning methods apply classic machine learning algorithms and computer vision methods to BUS image segmentation. Their performance depends on the datasets and on manually extracted features, such as texture, gray-level intensity, frequency features, etc.

Recently, deep convolutional neural network-based approaches have been widely utilized in image semantic segmentation because they can learn features automatically. This characteristic avoids manual feature selection and reduces the effect of noise in some cases. There are also studies on BUS image semantic segmentation using deep learning. Deep learning-based semantic segmentation of BUS images can provide a better understanding of the image and the category information of each pixel, which is important in trustworthy CAD systems. However, most BUS image semantic segmentation methods focus only on the tumor area and the background area. In [35], a fully convolutional network (FCN) [36] was utilized for tumor segmentation in BUS images. Three networks were compared: LeNet [37], U-Net [38], and a pre-trained FCN with AlexNet [39]. A stacked denoising auto-encoder (SDAE) was employed to diagnose breast ultrasound lesions and lung CT nodules in [40]. In [41], a deep learning approach was specifically designed for small tumors. Convolutional kernels of different sizes were employed in the convolutional blocks to detect tumors. Multi-category BUS semantic segmentation is important for breast cancer diagnosis. The experimental results show that deep learning methods achieve good results on BUS image semantic segmentation. However, deep learning methods require a great number of training samples. Moreover, most of the previous deep learning methods do not consider the non-random uncertainty inside deep learning architectures.

2.2. Attention Mechanisms

Attention mechanisms are widely used in convolutional neural networks [42] to reduce noise and uncertainty. They assign weights to the pixels or channels of feature maps to express their importance. In [43], a spatial-wise attention gate was proposed for the decoder of U-Net. The encoder and decoder information were combined to calculate a weight tensor before the encoder feature map was concatenated with the decoder information, and the weight tensor was multiplied with the encoder feature map. The attention coefficients were larger in the target areas than in the background, and the results were better than those of the original U-Net. In [44], Hu et al. proposed a channel-wise attention mechanism, Squeeze-and-Excitation Networks (SE-Nets). A convolutional operator transformed the feature map in each convolutional block. Then, global average pooling was performed to calculate the mean value of each channel. The results were used as the weight values for the channels in the original feature map. The SE block was applied to network architectures such as VGG-16 and ResNet-101 and achieved good improvement. In [45], both spatial-wise and channel-wise attention mechanisms were applied to image captioning. The network structure followed VGG-19 [46] and ResNet-152 [47]. In each convolutional block, the weights of the spatial-wise attention were based on the original feature map and the context information of the last sentence. The mean value of each channel of the original feature map and the last-sentence context information were used to calculate the channel-wise attention weights. Another spatial and channel-wise attention FCN [48] was proposed for crowd counting. The network structure followed the VGG-16 [46] architecture. The spatial-wise and channel-wise attention weights were computed from the original feature map in the same convolutional block. In the spatial-wise attention, the original feature map was fed to three convolutional kernels. Then, reshaping and transposing operators were applied to the outputs of the convolutional kernels to obtain three new features. For the channel-wise attention weights, only one convolutional kernel was utilized, and its output was reshaped and transposed to three different sizes. The attention weights were computed by multiplying and adding the three features of different sizes.

2.3. Fuzzy Logic in Deep Learning

The attention mechanism can reduce uncertainty and noise in convolutional feature maps; however, uncertainties are not caused by randomness alone, and non-random uncertainties cannot be handled well by statistics, probabilities, or attention mechanisms. Fuzzy logic has been utilized successfully to handle uncertainties in image processing. A fuzzy clustering method, fuzzy c-means clustering [49], was applied to image segmentation and achieved better performance than the non-fuzzy version. A fuzzy contrast enhancement method [50] was proposed, in which the maximum entropy principle was utilized to map the image from the feature domain to the fuzzy domain. A fuzzy cellular automata framework [51] was proposed to handle the uncertainty in BUS images. The cellular automata results were transformed into the fuzzy domain, and a majority voting strategy was utilized. In order to remove speckle noise and inhomogeneous echoes, two kinds of texture features were involved. In [52], an adaptive fuzzy neural network was proposed, in which input samples were mapped into the fuzzy domain by a trainable Gaussian membership function. Huang et al. [53] proposed a fuzzy logic-based FCN for multi-layer BUS image segmentation and a conditional random field-based method for post-processing. However, the fuzzy logic operator was only applied to the input image and the first convolutional feature, and the method only addressed uncertainty in the pixel dimension.

3. Methods

3.1. Overview

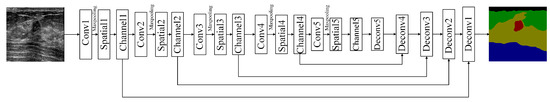

Figure 2 illustrates the entire network structure of the proposed SCFURNet. SCFURNet is based on a U-shape network that contains an encoder branch for feature extraction and a decoder branch for segmentation. The encoder network contains five convolutional blocks, and the decoder network contains five deconvolutional blocks. SCFURNet consists of the U-shape network and two novel components: (1) the spatial-wise fuzzy block (SFB) and (2) the channel-wise fuzzy block (CFB). We add five SFBs and five CFBs to the five convolutional blocks in the encoder network. The output of each convolutional block is processed by an SFB and a CFB sequentially and is then fed to the next convolutional block. In this way, the SFBs and the CFBs reduce the uncertainty of the convolutional features from all five convolutional blocks. The convolutional blocks of two network structures, VGG-16 [46] and ResNet-101 [47], are used as the encoder of the proposed network to compare the performance of different kinds of convolutional blocks and to show the effectiveness of the proposed SFB and CFB with each of them. The SFB and the CFB are explained in detail in Section 3.2 and Section 3.3, respectively.

Figure 2.

The proposed network structure.
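The encoder data flow described in the overview (convolutional block, then SFB, then CFB, repeated for each of the five blocks) can be sketched as follows. This is only an illustration of the ordering: `encode` and the toy stand-in blocks are hypothetical names, and keeping the refined output of every block in a list is an assumption, since the text does not state which features are passed to the decoder.

```python
def encode(x, conv_blocks, sfbs, cfbs):
    """Run the encoder: conv block -> SFB -> CFB, repeated for each block."""
    refined_features = []
    for conv, sfb, cfb in zip(conv_blocks, sfbs, cfbs):
        x = cfb(sfb(conv(x)))        # the refined output feeds the next block
        refined_features.append(x)   # kept for illustration (assumption)
    return x, refined_features


# Toy stand-ins (hypothetical): real blocks would be neural network layers.
conv_blocks = [lambda v, k=k: v + k for k in range(1, 6)]
sfbs = [lambda v: v * 2] * 5
cfbs = [lambda v: v - 1] * 5

out, feats = encode(0, conv_blocks, sfbs, cfbs)
```

With these stand-ins, the only point being made is that each block's output passes through its SFB and then its CFB before reaching the next block.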

3.2. Spatial-Wise Fuzzy Block

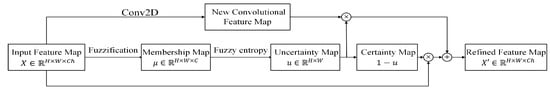

An SFB is utilized to calculate the uncertainty of each pixel and reduce the uncertainty in each convolutional feature map. In the SFB, there are three major components: fuzzification, uncertainty representation, and uncertainty reduction. The flowchart of the SFB is shown in Figure 3.

Figure 3.

Spatial-wise fuzzy block.

3.2.1. Fuzzification

Each input node from the original feature map is mapped to the fuzzy domain by a membership function $\mu(\cdot)$:

$$\mathbf{m}_i = \mu(x_i) \tag{1}$$

where $\mu(\cdot)$ represents the membership function; $x_i$ represents the input node $i$ (here, a pixel in the input feature map $X \in \mathbb{R}^{H \times W \times N}$, where $H$, $W$, and $N$ represent the height, width, and number of channels of the feature map, respectively); and $\mathbf{m}_i \in \mathbb{R}^{C}$ represents the memberships of the input node, where $C$ is the number of categories. In some studies [50,52], $\mu(\cdot)$ was an S-shape function, a Sigmoid function, or a Gaussian function.

In this research, the original features are transformed into the fuzzy domain by a trainable Sigmoid membership function:

$$m_{i,r} = \frac{1}{1 + e^{-(a_r^{\top} x_i + b_r)}} \tag{2}$$

where $x_i$ is the pixel $i$ in the input feature map, $a_r$ and $b_r$ are the two trainable parameters of the trainable Sigmoid function, and $m_{i,r}$ represents the membership of $x_i$ in the $r$-th category.

The Sigmoid membership function can be implemented by a convolutional operation. In this research, two convolutional layers are used as the membership function:

$$M_S = \mathrm{Conv}_{1\times1}\big(\mathrm{Conv}_{1\times1}(X)\big) \tag{3}$$

where $M_S \in \mathbb{R}^{H \times W \times C}$ represents the spatial membership map for the input feature map $X$; $\mathrm{Conv}_{1\times1}$ represents a 2-dimensional (2D) convolutional layer with $1 \times 1$ kernels; and both convolutional layers contain $C$ kernels. Two convolutional layers are used so that the memberships can fit the different categories. The vector $\mathbf{m}_i \in \mathbb{R}^{C}$ at position $i$ of $M_S$ is defined as the membership vector of pixel $i$ in $X$. The outputs are normalized by the Soft-max function.
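The fuzzification step can be sketched in NumPy as below, treating a 1 × 1 convolution as a per-pixel linear map. This is a minimal sketch under stated assumptions, not the authors' implementation: the function names, the random weights, and in particular the placement of the Sigmoid between the two 1 × 1 layers are assumptions, since the text only states that two convolutional layers with C kernels are used and that the outputs are normalized by Soft-max.

```python
import numpy as np


def conv1x1(x, weight, bias):
    """1x1 convolution on an (H, W, C_in) map, i.e. a per-pixel linear map."""
    return x @ weight + bias  # weight: (C_in, C_out), bias: (C_out,)


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


def softmax(z, axis=-1):
    z = z - z.max(axis=axis, keepdims=True)  # subtract the max for stability
    e = np.exp(z)
    return e / e.sum(axis=axis, keepdims=True)


def spatial_memberships(x, w1, b1, w2, b2):
    """Map an (H, W, N) feature map to (H, W, C) memberships."""
    hidden = sigmoid(conv1x1(x, w1, b1))     # assumed Sigmoid placement
    return softmax(conv1x1(hidden, w2, b2))  # memberships sum to 1 per pixel


rng = np.random.default_rng(0)
H, W, N, C = 4, 6, 8, 5                      # toy sizes, C = 5 categories
x = rng.normal(size=(H, W, N))
w1, b1 = rng.normal(size=(N, C)), np.zeros(C)
w2, b2 = rng.normal(size=(C, C)), np.zeros(C)
m = spatial_memberships(x, w1, b1, w2, b2)
```

Each pixel of `m` holds one membership per category, which is the input expected by the fuzzy entropy in the next subsection.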

3.2.2. Uncertainty Representation

Fuzzy logic is used to handle uncertainty. The memberships express the degrees to which a pixel belongs to the categories and can therefore measure the uncertainty. Uncertain pixels share one property: a pixel is hard to assign to a category if it has similar memberships for different categories. Fuzzy entropy is utilized to reflect this observation, i.e., an uncertain pixel is defined as a pixel with high fuzzy entropy (close to 1), and a certain pixel is defined as a pixel with low fuzzy entropy (close to 0).

For a membership vector $\mathbf{m} = [m_1, \dots, m_C]$, the fuzzy entropy is defined as below [54]:

$$E(\mathbf{m}) = -\frac{1}{\log C}\sum_{r=1}^{C} m_r \log m_r \tag{4}$$

where $C$ represents the number of categories and $m_r$ represents the membership of category $r$. If the memberships for all categories are the same ($m_r = 1/C$), the entropy is the highest ($E(\mathbf{m}) = 1$). It is hard to assign a category when the memberships for all categories are the same.

In the SFB, the memberships are utilized to calculate the fuzzy entropy as Equation (5):

$$u_i = -\frac{1}{\log C}\sum_{r=1}^{C} m_{i,r} \log m_{i,r} \tag{5}$$

where $u_i$ is the uncertainty degree of pixel $i$, which lies in [0, 1]; 0 represents low uncertainty, and 1 represents high uncertainty. Every pixel in the input feature map has a corresponding uncertainty degree, and the uncertainty degrees of all pixels constitute the uncertainty map $u$. The uncertainty map has the same height and width as the input feature map.
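The behavior of the fuzzy entropy at its two extremes can be checked numerically. The sketch below assumes the normalized form described in the text (entropy divided by log C so that equal memberships give 1); the function name `fuzzy_entropy` and the clipping constant `eps` are illustrative choices.

```python
import numpy as np


def fuzzy_entropy(memberships, eps=1e-12):
    """Normalized entropy over the last axis; the result lies in [0, 1].

    memberships: array of shape (..., C) whose last axis sums to 1.
    """
    c = memberships.shape[-1]
    m = np.clip(memberships, eps, 1.0)  # avoid log(0)
    return -(m * np.log(m)).sum(axis=-1) / np.log(c)


# A 1 x 2 "image" with C = 5 categories per pixel.
memberships = np.array([[[0.2, 0.2, 0.2, 0.2, 0.2],    # all categories equal
                         [1.0, 0.0, 0.0, 0.0, 0.0]]])  # one clear category
u = fuzzy_entropy(memberships)  # uncertainty map of shape (1, 2)
```

The first pixel receives an uncertainty close to 1 and the second close to 0, matching the definition of uncertain and certain pixels.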

3.2.3. Uncertainty Reduction

If the uncertainty degree is close to 1, the feature of pixel i generated in the convolutional block is uncertain. If the uncertainty degree is close to 0, the feature of pixel i obtained in the convolutional block is useful for the final decision. The features of uncertain pixels should therefore receive lower weights in the refined feature map, and newly extracted features replace them to reduce the uncertainty.

As shown in Figure 3, the uncertainty map ($u$), which consists of the uncertainty degrees ($u_i$) in Equation (5), is utilized as the weight in the combination of the input feature map and a novel feature map; $(1 - u)$ represents the certainty map:

$$X' = \big(u \otimes \mathrm{Conv2D}(X)\big) \oplus \big((1 - u) \otimes X\big) \tag{6}$$

where $X'$ represents the refined feature map after reducing uncertainty; Conv2D represents a 2D convolutional layer with $N$ kernels of size 3 × 3, stride = 1, and padding = 1; ⊗ represents the pixel-wise multiplication between $u$ or $(1 - u)$ and each channel of Conv2D($X$) or $X$; and ⊕ represents the pixel-wise summation of matrices. This uncertainty reduction operator indicates that if $u$ is close to 0, i.e., $X$ has low uncertainty, the weights of the original features remain high. If $u$ is close to 1, i.e., $X$ has high uncertainty, the weights of the original features are reduced, and the original features should be replaced. Therefore, a novel feature is extracted by a 3 × 3 convolutional layer. The refined feature map is passed to the next operator.

In this section, a novel fuzzification method is utilized to transform the original convolutional feature maps into the fuzzy domain. Then, uncertainty is computed using fuzzy entropy. New convolutional features and original features are combined to reduce the uncertainties.
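The uncertainty reduction step of the SFB, a per-pixel blend of the original features and newly extracted 3 × 3 convolutional features, can be sketched as follows. The helper names and the random kernels are illustrative assumptions; the convolution uses stride 1 and zero padding 1 as stated in the text, and it keeps the number of channels unchanged so that the two terms can be summed.

```python
import numpy as np


def conv3x3(x, kernels):
    """3x3 convolution with stride 1 and zero padding 1.

    x: (H, W, N); kernels: (3, 3, N, N_out); returns (H, W, N_out).
    """
    h, w, _ = x.shape
    padded = np.pad(x, ((1, 1), (1, 1), (0, 0)))
    out = np.zeros((h, w, kernels.shape[-1]))
    for dy in range(3):
        for dx in range(3):
            out += padded[dy:dy + h, dx:dx + w, :] @ kernels[dy, dx]
    return out


def reduce_spatial_uncertainty(x, u, kernels):
    """Blend new and original features with the (H, W) uncertainty map u."""
    u = u[..., None]  # broadcast the map over all channels
    return u * conv3x3(x, kernels) + (1.0 - u) * x


rng = np.random.default_rng(0)
x = rng.normal(size=(5, 5, 3))
kernels = rng.normal(size=(3, 3, 3, 3))
kept = reduce_spatial_uncertainty(x, np.zeros((5, 5)), kernels)
replaced = reduce_spatial_uncertainty(x, np.ones((5, 5)), kernels)
```

With an all-zero uncertainty map the input is returned unchanged, and with an all-one map it is fully replaced by the new convolutional features.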

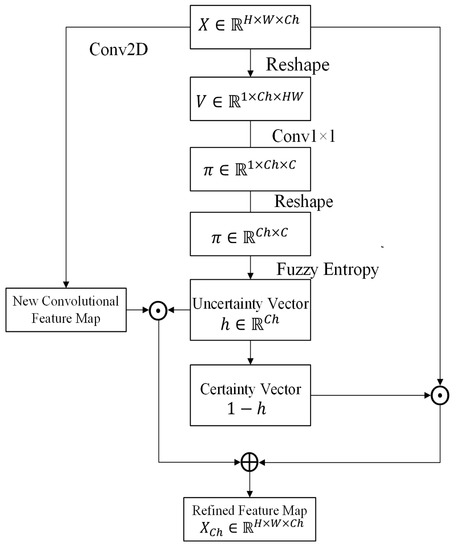

3.3. Channel-Wise Fuzzy Block

After reducing the uncertainty in pixels, the uncertainty in channels is processed by the proposed CFBs. Motivated by the channel-wise attention mechanisms [44,45] and fuzzy logic, the CFB utilizes the fuzzy entropy to measure the uncertainty degree of the channels of feature maps. An uncertain channel is a channel with higher fuzzy entropy (close to 1). There are also three major components in the CFB: fuzzification, uncertainty representation, and uncertainty reduction (Figure 4).

Figure 4.

Channel-wise fuzzy block.

3.3.1. Fuzzification

Let $X \in \mathbb{R}^{H \times W \times N}$ be the input feature map, where $H$ and $W$ represent the height and width of the feature map, respectively, and $N$ is the number of channels. To calculate the uncertainty degree of each channel, the CFB first transforms the input feature map into the fuzzy domain in the channel dimension. It reshapes $X$ to $V = [v_1, v_2, \dots, v_N]$, where $v_j \in \mathbb{R}^{HW}$ is the feature vector of channel $j$. For each $v_j$, a trainable Sigmoid membership function is utilized to transfer the feature vector to the fuzzy domain:

$$m_{j,r} = \frac{1}{1 + e^{-(a_{j,r}^{\top} v_j + b_{j,r})}} \tag{7}$$

where $m_{j,r}$ represents the membership of category $r$ for channel $j$, and $a_{j,r}$ and $b_{j,r}$ are the parameters of channel $j$. The membership function is also implemented by two convolutional layers with $C$ kernels. In order to process $V$ using 2D convolutional layers, $V$ is reshaped to a three-dimensional tensor before the convolutional operators. Then, the convolutional operators are applied:

$$M_C = \mathrm{Conv}_{1\times1}\big(\mathrm{Conv}_{1\times1}(V)\big) \tag{8}$$

where $M_C$ represents the channel membership map for the input feature map $X$, and $C$ represents the number of categories. Then, $M_C$ is reshaped to $\mathbb{R}^{N \times C}$, and for each channel $j$, there is a membership vector $\mathbf{m}_j \in \mathbb{R}^{C}$.

3.3.2. Uncertainty Representation

After obtaining the memberships, the fuzzy entropy is computed:

$$h_j = -\frac{1}{\log C}\sum_{r=1}^{C} m_{j,r} \log m_{j,r} \tag{9}$$

where $h_j$ represents the fuzzy entropy of channel $j$, which measures the uncertainty degree of channel $j$. Finally, the uncertainty degrees of all channels in the feature map constitute the uncertainty vector $h = [h_1, \dots, h_N]$.

3.3.3. Uncertainty Reduction

Similar to the SFB, the uncertainty vector $h$ is utilized as the weight vector for combining the input feature map and a novel feature map. The novel feature map is generated by a convolutional operator. Each element in $h$ is the weight value of the corresponding channel:

$$X'' = \big(h \odot \mathrm{Conv2D}(X)\big) \oplus \big((1 - h) \odot X\big) \tag{10}$$

where $X''$ is the feature map after applying the CFB; ⊙ represents the multiplication between the $j$-th channel of the feature map and the corresponding scalar $h_j$ (or $1 - h_j$), where $j = 1, \dots, N$. The channel-wise uncertainty reduction operator indicates that if $h_j$ is close to 0, the corresponding channel in the input feature map has low uncertainty and should keep a high weight. If $h_j$ is close to 1, the corresponding channel has high uncertainty; its weight is reduced, and the input feature should be replaced by a new feature.
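The channel-wise counterpart can be sketched in the same way: one entropy value per channel, used as a per-channel blending weight. In this sketch the channel memberships and the new feature map are passed in directly rather than produced by convolutional layers, and all function names are illustrative assumptions.

```python
import numpy as np


def channel_uncertainty(memberships, eps=1e-12):
    """memberships: (N, C), rows summing to 1 -> (N,) normalized entropies."""
    m = np.clip(memberships, eps, 1.0)  # avoid log(0)
    return -(m * np.log(m)).sum(axis=1) / np.log(m.shape[1])


def reduce_channel_uncertainty(x, h, new_features):
    """x, new_features: (H, W, N); h: (N,), broadcast over H and W."""
    return h * new_features + (1.0 - h) * x


# Two channels, five categories: channel 0 is certain, channel 1 is not.
memberships = np.array([[1.0, 0.0, 0.0, 0.0, 0.0],
                        [0.2, 0.2, 0.2, 0.2, 0.2]])
h = channel_uncertainty(memberships)

x = np.ones((2, 2, 2))              # original features
new_features = np.zeros((2, 2, 2))  # stand-in for convolutional output
out = reduce_channel_uncertainty(x, h, new_features)
```

The certain channel keeps its original values, while the uncertain channel is almost entirely replaced by the new features.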

3.4. Loss Function

The loss function for the proposed SCFURNet can be expressed as the summation of the cross-entropy and the fuzzy entropies from the spatial and channel fuzzy blocks:

$$L = L_{CE} + L_S + L_C \tag{11}$$

$L_{CE}$ is the classic cross-entropy loss function:

$$L_{CE} = -\sum_{r=1}^{C} y_r \log p_r(x) \tag{12}$$

where $x$ is the input of the network; $y$ is the label of $x$ in one-hot encoding, i.e., if $x$ is in the $r$-th category, the corresponding element $y_r$ is 1 and the other elements are 0; and $p(x)$ represents the proposed network followed by the Soft-max function:

$$p_r(x) = \frac{e^{f_r(x)}}{\sum_{k=1}^{C} e^{f_k(x)}} \tag{13}$$

where $f(x)$ represents the output of the network; $r$ represents the class index, and $C$ represents the number of categories.

$L_S$ is computed from the fuzzy entropy ($u_i$) in the SFBs in Equation (5). Because the SFBs are applied to five convolutional blocks, there are five fuzzy entropy maps from the five convolutional blocks, and $L_S$ is defined by the summation of the fuzzy entropy maps:

$$L_S = \sum_{l=1}^{5}\sum_{i} u_i^{(l)} \tag{14}$$

where $i$ represents the pixel index and $l$ represents the index of the convolutional blocks.

The loss terms $L_S$ and $L_C$ are the uncertainty degrees in the five spatial-wise and channel-wise fuzzy blocks, respectively. Adding these two loss terms minimizes the classification loss and the uncertainty in the pixel and channel dimensions simultaneously, which yields feature maps with less uncertainty. The error is propagated using the standard back-propagation algorithm [55].
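The combined loss can be sketched as the cross-entropy plus the summed uncertainty degrees from the spatial and channel fuzzy blocks. This is a minimal sketch under stated assumptions: the cross-entropy is averaged over pixels, the two uncertainty terms are plain unweighted sums, and the function names are hypothetical; the text does not specify any normalization or weighting.

```python
import numpy as np


def cross_entropy(logits, labels):
    """Mean cross-entropy over pixels. logits: (P, C); labels: (P,) ints."""
    z = logits - logits.max(axis=1, keepdims=True)            # stable softmax
    log_p = z - np.log(np.exp(z).sum(axis=1, keepdims=True))
    return -log_p[np.arange(len(labels)), labels].mean()


def total_loss(logits, labels, spatial_maps, channel_vectors):
    """Cross-entropy plus uncertainty sums over all fuzzy blocks."""
    l_s = sum(u.sum() for u in spatial_maps)     # spatial fuzzy entropies
    l_c = sum(h.sum() for h in channel_vectors)  # channel fuzzy entropies
    return cross_entropy(logits, labels) + l_s + l_c


# Toy values: 4 pixels, 5 categories, 5 fuzzy blocks of each kind.
logits = np.zeros((4, 5))                     # uniform predictions
labels = np.array([0, 1, 2, 3])
spatial_maps = [np.full((2, 2), 0.5)] * 5     # each map sums to 2.0
channel_vectors = [np.full(3, 0.1)] * 5       # each vector sums to 0.3
loss = total_loss(logits, labels, spatial_maps, channel_vectors)
```

Because the uncertainty terms enter the loss directly, gradient descent is pushed toward memberships with lower fuzzy entropy as well as toward correct class predictions.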

4. Experimental Results

4.1. Datasets

To show the effectiveness of the proposed network in BUS image semantic segmentation, two kinds of experiments are designed: (1) multi-object (multi-layer) semantic segmentation, and (2) binary semantic segmentation (tumor and background). The multi-object semantic segmentation is performed on a dataset of 325 BUS images. The dataset was collected by the Second Affiliated Hospital of Harbin Medical University and the First Affiliated Hospital of Harbin Medical University. An experienced radiologist from the First Affiliated Hospital of Harbin Medical University delineated the boundaries of the four breast layers and tumors. The privacy of the patients is well protected. The pixel-wise ground truths for five categories (fat layer, mammary layer, muscle layer, tumor, and background) are generated according to the manually delineated boundaries. In the multi-object semantic segmentation task, the proposed method is compared with state-of-the-art deep learning segmentation methods such as U-Net with VGG-16 [46], U-Net with ResNet-50/ResNet-101 [47], Deeplab [56], FCN-8s [36], PSPNet [57], and U-Net with information extension [16]. We also compare the proposed method with spatial and channel-wise attention modules such as attention U-Net [43], SE-Net [44], and the self-attention mechanism [58].

The binary semantic segmentation is performed on three public BUS image datasets [35,59,60]. Dataset [35] contains 163 BUS images, including 109 benign samples and 54 malignant samples. Dataset [60] contains 780 BUS images, including 437 benign, 210 malignant, and 133 no-tumor images. Ref. [59] is a BUS image benchmark with 562 images that lists five non-deep learning methods [10,61,62,63,64] for BUS image segmentation. In this task, state-of-the-art semantic segmentation network structures are also applied for comparison, together with the five traditional tumor segmentation methods [10,61,62,63,64]. The four datasets used in the experiments are summarized in Table 1.

Table 1.

Dataset Properties.

4.2. Experiment Details

4.2.1. Preprocessing and Augmentation

Because the number of samples is limited, the training samples are augmented by horizontal flip, horizontal shift, vertical shift, rotation, zooming, and shear mapping. The input images are all gray-level images, and the intensities are mapped into [−1, 1] by ($x/127.5 - 1$) [65], where $x$ represents the original intensity. No other preprocessing or augmentation methods are used, except for U-Net with information extension [16]. In [16], the input images are first preprocessed by histogram equalization. Then, the images are transformed into the wavelet domain. New three-channel images with the grey-level intensity in the first channel, the wavelet approximation coefficients in the second channel, and the wavelet detail coefficients in the third channel are utilized for training the original U-Net with ResNet-101 network.
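The intensity mapping and one of the listed augmentations can be sketched as below. The sketch assumes 8-bit gray-level inputs in [0, 255], for which the linear map onto [−1, 1] is x / 127.5 − 1; the function names are illustrative. For segmentation, a geometric augmentation such as a flip has to be applied to the image and to its ground-truth mask together.

```python
import numpy as np


def normalize_intensity(image):
    """Map 8-bit gray levels in [0, 255] linearly onto [-1, 1] (assumption)."""
    return np.asarray(image, dtype=np.float64) / 127.5 - 1.0


def horizontal_flip(image, mask):
    """Flip the image and its label mask together along the width axis."""
    return image[:, ::-1], mask[:, ::-1]


image = np.array([[0, 255],
                  [51, 204]], dtype=np.uint8)
mask = np.array([[0, 1],
                 [0, 0]])

normalized = normalize_intensity(image)
flipped_image, flipped_mask = horizontal_flip(normalized, mask)
```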

4.2.2. Experiment Environment

None of the networks in this section is pre-trained on other datasets; the network weights are initialized randomly. All input images are resized to the same fixed size. The batch size is 12. The optimizer is stochastic gradient descent (SGD) with a learning rate of 0.001 and a momentum of 0.99. The number of training epochs is 80. All the comparison networks and the proposed method are trained on a computer running Ubuntu 18.04 with an Intel(R) Xeon(R) E5-2620 CPU at 2.10 GHz and 8 NVIDIA GeForce 1080 graphics cards, each with 8 GB of memory. The implementation uses PyTorch 1.6.0.
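For reference, a single SGD-with-momentum update with the stated hyperparameters (learning rate 0.001, momentum 0.99) can be written out in plain Python. The sketch follows the update convention used by PyTorch's SGD optimizer with zero dampening (the velocity accumulates the raw gradient); the function name and the toy objective f(p) = p² are illustrative assumptions.

```python
def sgd_momentum_step(param, grad, velocity, lr=0.001, momentum=0.99):
    """One SGD step with momentum: v <- momentum * v + grad; p <- p - lr * v."""
    velocity = momentum * velocity + grad
    param = param - lr * velocity
    return param, velocity


# Two steps on the toy objective f(p) = p**2, whose gradient is 2 * p.
p, v = 1.0, 0.0
for _ in range(2):
    p, v = sgd_momentum_step(p, 2.0 * p, v)
```

With a momentum as high as 0.99, the velocity retains most of its previous value at every step, so past gradients keep contributing to the update for many iterations.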

4.3. Metrics

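In the binary semantic segmentation task, the metrics in [59] are utilized to evaluate the performance. There are five area metrics: true positive ratio (TPR), false positive ratio (FPR), Jaccard index (JI), Dice's coefficient (DSC), and area error ratio (AER). The area metrics are defined in the following equation:

$$\mathrm{TPR} = \frac{|A_m \cap A_r|}{|A_m|}, \quad \mathrm{FPR} = \frac{|A_m \cup A_r - A_m|}{|A_m|}, \quad \mathrm{JI} = \frac{|A_m \cap A_r|}{|A_m \cup A_r|}, \quad \mathrm{DSC} = \frac{2|A_m \cap A_r|}{|A_m| + |A_r|}, \quad \mathrm{AER} = \frac{|A_m \cup A_r| - |A_m \cap A_r|}{|A_m|} \tag{15}$$

where $A_r$ is the set of pixels generated by the proposed method or existing methods, and $A_m$ is the set of pixels in the ground truths.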
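The five area metrics can be computed from a pair of binary masks as sketched below, following the benchmark definitions in [59] as recalled here. The function name is illustrative, and the sketch assumes that the ground-truth mask is not empty; images without a tumor would need separate handling because every ratio except JI and DSC divides by the ground-truth area.

```python
import numpy as np


def area_metrics(pred, truth):
    """Area metrics for binary masks (True marks tumor pixels)."""
    pred = np.asarray(pred, dtype=bool)
    truth = np.asarray(truth, dtype=bool)
    inter = np.logical_and(pred, truth).sum()
    union = np.logical_or(pred, truth).sum()
    a_m, a_r = truth.sum(), pred.sum()   # ground-truth and predicted areas
    return {
        "TPR": inter / a_m,
        "FPR": (union - a_m) / a_m,
        "JI": inter / union,
        "DSC": 2 * inter / (a_m + a_r),
        "AER": (union - inter) / a_m,
    }


truth = np.zeros((4, 4), dtype=bool)
truth[0:2, 0:2] = True                   # 4 ground-truth pixels
pred = np.zeros((4, 4), dtype=bool)
pred[0:2, 1:3] = True                    # 4 predicted pixels, 2 overlapping
metrics = area_metrics(pred, truth)
```

In this toy case the intersection has 2 pixels and the union has 6, so TPR = 0.5, FPR = 0.5, JI = 1/3, DSC = 0.5, and AER = 1.0.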

In the multi-object semantic segmentation task, intersection over union (IoU, also known as the Jaccard index in the binary task), a typical metric in semantic segmentation, is chosen as the metric. It is computed by:

$$\mathrm{IoU} = \frac{|A_r \cap A_m|}{|A_r \cup A_m|} \tag{16}$$

where $A_r$ and $A_m$ are the sets of pixels generated by the algorithms and in the ground truths, respectively. The mean IoU ($\mathrm{mIoU} = \frac{1}{C}\sum_{r=1}^{C} \mathrm{IoU}_r$, where $C$ represents the number of categories) over the five categories is used to evaluate the overall performance.
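For label maps with several categories, the per-category IoU and the mean IoU can be sketched as follows. The function name is illustrative, and skipping a category that appears in neither map is an assumption made to avoid a division by zero; the text does not describe how such cases are handled.

```python
import numpy as np


def mean_iou(pred, truth, num_classes):
    """Return (mIoU, per-class IoUs) for integer label maps."""
    ious = []
    for r in range(num_classes):
        p, t = (pred == r), (truth == r)
        union = np.logical_or(p, t).sum()
        if union == 0:
            continue  # category absent from both maps: skipped (assumption)
        ious.append(np.logical_and(p, t).sum() / union)
    return float(np.mean(ious)), ious


pred = np.array([[0, 0],
                 [1, 2]])
truth = np.array([[0, 1],
                  [1, 2]])
miou, per_class = mean_iou(pred, truth, num_classes=3)
```

Here the per-category IoUs are 0.5, 0.5, and 1.0, so the mean IoU is 2/3.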

4.4. Multi-Object Semantic Segmentation of BUS Images

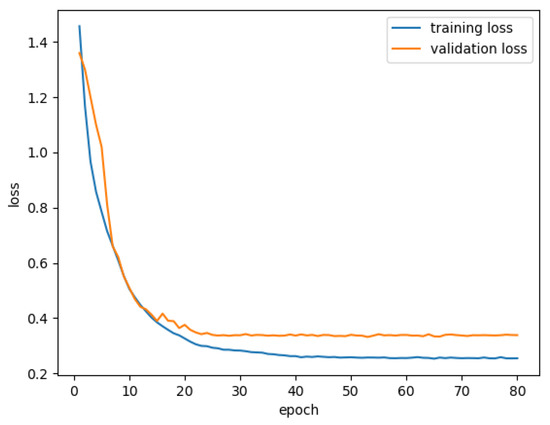

In this section, we discuss the performance of SCFURNet on the multi-layer dataset. We first present the segmentation results of SCFURNet with different numbers of fuzzy blocks (SFBs and CFBs), then describe the ablation study for the proposed fuzzy blocks, then visualize the uncertainty maps obtained by the fuzzy blocks, and finally discuss the quantitative semantic segmentation results of SCFURNet and all compared methods. The dataset with 325 BUS images is utilized, and each image has pixel-wise ground truths for five categories. 10-fold cross-validation is utilized. The proposed SFBs and CFBs are applied to U-Net with VGG-16/ResNet-101 as the encoder. The training and validation loss curves are shown in Figure 5. The loss is calculated as the average over the 10 folds.

Figure 5.

Training and validation loss curves for multi-object semantic segmentation of BUS images.
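The 10-fold cross-validation protocol used for the 325-image dataset can be sketched as an index split. The shuffling, the seed, and the function name are illustrative assumptions; the text does not say how the folds were formed, for example whether images from the same patient were kept in the same fold.

```python
import numpy as np


def k_fold_indices(n_samples, k=10, seed=0):
    """Yield (train_indices, validation_indices) for each of the k folds."""
    rng = np.random.default_rng(seed)
    folds = np.array_split(rng.permutation(n_samples), k)
    for i in range(k):
        train = np.concatenate([f for j, f in enumerate(folds) if j != i])
        yield train, folds[i]


splits = list(k_fold_indices(325, k=10))
fold_sizes = [len(val) for _, val in splits]
```

With 325 images, five folds hold 33 validation images and five hold 32, and the reported loss or IoU would then be averaged over the ten runs.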

4.4.1. Segmentation Performance and the Number of Fuzzy Blocks

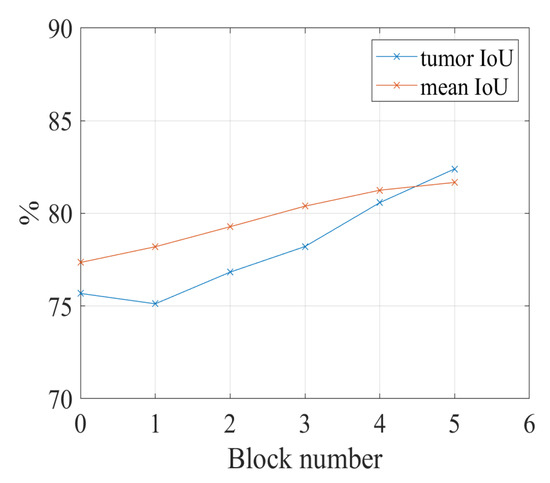

In this subsection, we discuss the relation between the number of fuzzy blocks used in the network and the segmentation performance. The U-Net with ResNet-101 is utilized, and the proposed SFB and CFB are applied to its encoder. ResNet-101 contains 5 convolutional blocks; therefore, 5 is the maximum number of fuzzy blocks in the comparison experiments. In the first experiment, no fuzzy block is applied to the U-Net with ResNet-101. In the second experiment, the proposed spatial and channel-wise fuzzy blocks are applied to the first convolutional block. We then continue adding the spatial and channel-wise fuzzy blocks to the second, third, fourth, and fifth convolutional blocks while keeping the fuzzy blocks in the previous convolutional blocks.

Figure 6 shows the IoU results versus the number of convolutional blocks equipped with fuzzy blocks. When the spatial and channel-wise fuzzy blocks are applied to all five convolutional blocks, the proposed network achieves the best performance both on the tumor category and overall.

Figure 6.

The relation between the number of fuzzy blocks and the segmentation performance. Block number = 1: the fuzzy blocks are applied to the first convolutional block; block number = 2: the fuzzy blocks are applied to the first and second convolutional blocks together; block number = 3: the fuzzy blocks are applied to the convolutional blocks 1, 2, and 3; block number = 4: the fuzzy blocks are applied to the convolutional blocks 1, 2, 3, and 4; block number = 5: the fuzzy blocks are applied to the convolutional blocks 1, 2, 3, 4, and 5. The reason why the maximum number of blocks is 5 is given in Section 4.4.1.

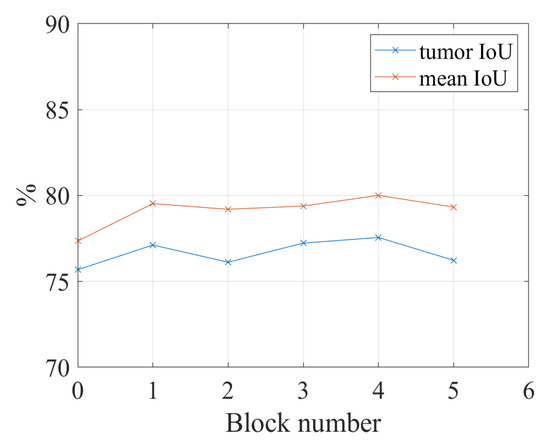

To determine whether the performance increase in Figure 6 is caused by the fuzzy blocks in deeper convolutional blocks or by the combination of the earlier fuzzy blocks and the newly added ones, another experiment is conducted. In this experiment, the fuzzy blocks are added to the five convolutional blocks of ResNet-101 individually. For example, when the fuzzy blocks are added to the second convolutional block of ResNet-101, there is no fuzzy block in convolutional blocks 1, 3, 4, and 5. The experimental results in Figure 7 show a slight increase in performance when the fuzzy blocks are applied to any single convolutional block from 1 to 5; however, none of these settings outperforms using fuzzy blocks in all five convolutional blocks together. When the fuzzy blocks are added only to the fourth convolutional block, the IoU for the tumor is the highest among the individual settings, at 77.56%; however, when fuzzy blocks are added to all five convolutional blocks, the IoU for the tumor is 82.40%. Therefore, the spatial and channel-wise fuzzy blocks are applied to all five convolutional blocks in the following experiments.

Figure 7.

The relation between the number of fuzzy blocks and the segmentation performance. The fuzzy blocks are applied to the convolutional blocks individually. Block number = 1: the fuzzy blocks are applied to the first convolutional block; block number = 2: the fuzzy blocks are applied to the second convolutional block; block number = 3: the fuzzy blocks are applied to the third convolutional block; block number = 4: the fuzzy blocks are applied to the fourth convolutional block; block number = 5: the fuzzy blocks are applied to the fifth convolutional block.

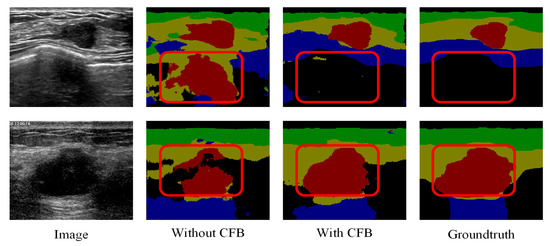
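The two placement schemes compared in Figures 6 and 7 can be summarized with a short sketch. The function names `cumulative_placement` and `individual_placement` are hypothetical and only illustrate which convolutional blocks receive fuzzy blocks for a given block number; they are not part of the authors' implementation.

```python
from typing import List

NUM_CONV_BLOCKS = 5  # the backbone is treated as five convolutional blocks


def _check(block_number: int) -> None:
    if not 1 <= block_number <= NUM_CONV_BLOCKS:
        raise ValueError("block_number must be between 1 and 5")


def cumulative_placement(block_number: int) -> List[int]:
    """Figure 6 setting: fuzzy blocks in convolutional blocks 1..block_number."""
    _check(block_number)
    return list(range(1, block_number + 1))


def individual_placement(block_number: int) -> List[int]:
    """Figure 7 setting: fuzzy blocks only in the given convolutional block."""
    _check(block_number)
    return [block_number]


if __name__ == "__main__":
    for k in range(1, NUM_CONV_BLOCKS + 1):
        print(k, cumulative_placement(k), individual_placement(k))
```

With block number 5, the cumulative scheme corresponds to the configuration used in the remaining experiments.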

4.4.2. Ablation Study for Fuzzy Blocks

We employ the SFB and the CFB in five convolutional blocks to reduce the uncertainty in the feature maps. To verify the contribution of each fuzzy block, we conduct experiments with the settings listed in Table 2.

Table 2.

Ablation Study on Multi-object Dataset. SFB: Spatial-wise Fuzzy Block, CFB: Channel-wise Fuzzy Block.

Table 2 compares two convolutional structures, VGG-16 and ResNet-101, and adopts the SFB and the CFB individually in each network. Compared with the U-Net with VGG-16, employing the SFB brings a 1.94% increase in tumor IoU and a 2.41% increase in mean IoU. Employing the CFB in the U-Net with VGG-16 outperforms the baseline by 0.97% in tumor IoU and 3.68% in mean IoU. When the two fuzzy blocks are used together with the U-Net with VGG-16, the performance further improves to 78.34% in tumor IoU and 79.36% in mean IoU. When the convolutional structure is changed to ResNet-101, using the two fuzzy blocks together yields 82.40% in tumor IoU and 81.67% in mean IoU. We report the tumor segmentation results and the overall performance because tumors are the most important objects in BUS image segmentation. The results show that each fuzzy block can reduce the uncertainty in the feature maps and increase the performance of tumor segmentation.

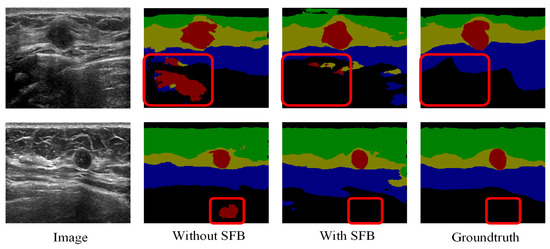

The effectiveness of the proposed channel-wise and spatial-wise fuzzy blocks is shown in Figure 8 and Figure 9, respectively. The most common misclassification is between the tumor area and the background area because both contain low intensities. The misclassified patches are marked by red rectangles in Figure 8 and Figure 9. They are correctly classified when the CFB or the SFB is applied individually.

Figure 8.

Segmentation results of U-Net with ResNet-101 and CFB on the multi-object dataset. Green: fat layer, yellow: mammary layer, blue: muscle layer, red: tumor, and black: background. The red rectangles mark the regions mis-segmented by the baseline model.

Figure 9.

Segmentation results of U-Net with ResNet-101 and SFB on the multi-object dataset. Green: fat layer, yellow: mammary layer, blue: muscle layer, red: tumor, and black: background. The red rectangles mark the regions mis-segmented by the baseline model.

4.4.3. Visualization of Fuzzy Blocks

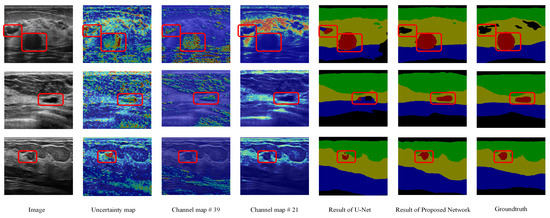

In this part, the uncertainty maps obtained by the SFB and selected channels in the processed feature maps are visualized for a better understanding of the proposed SFB and the CFB.

The SFB is utilized to measure the uncertainty degree of pixels in the input feature map and reduce the effect of the uncertain pixels. Therefore, the uncertainty map generated in the SFB can show the uncertain pixels and corresponding uncertainty degrees (refer to Figure 10). For example, the areas marked by red rectangles are background and tumor areas in the first row. They have similar intensities. In the uncertainty map, these areas are high uncertainty areas. The original U-Net misclassifies the background area; however, the proposed method can correct it (shown in columns 5 and 6). In the second row and third row, the tumor areas are also marked as the uncertain areas, i.e., the original U-Net cannot handle these areas. The heatmaps indicate that the proposed SFB can find the uncertain areas of the input feature maps, and it can also measure the uncertainty degree of the pixels.
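As a rough illustration of how a per-pixel uncertainty map can be derived from membership values, the sketch below uses the normalized De Luca-Termini fuzzy entropy. This is an assumption made for illustration: the membership function and the exact entropy form used inside the SFB are not restated in this section, and the names `fuzzy_entropy` and `uncertainty_map` are hypothetical.

```python
import math
from typing import List, Sequence


def fuzzy_entropy(memberships: Sequence[float]) -> float:
    """Normalized De Luca-Termini fuzzy entropy of membership degrees in [0, 1].

    Returns 0.0 when every membership is crisp (0 or 1) and 1.0 when every
    membership equals 0.5, i.e., the most uncertain case.
    """
    total = 0.0
    for mu in memberships:
        if 0.0 < mu < 1.0:  # the limit of mu * ln(mu) is 0 at the end points
            total -= mu * math.log(mu) + (1.0 - mu) * math.log(1.0 - mu)
    return total / (len(memberships) * math.log(2.0))


def uncertainty_map(membership_maps: List[List[List[float]]]) -> List[List[float]]:
    """Compute an H x W uncertainty map.

    membership_maps[c][i][j] is the (assumed) membership of pixel (i, j)
    to category c.
    """
    n_cat = len(membership_maps)
    height = len(membership_maps[0])
    width = len(membership_maps[0][0])
    return [
        [
            fuzzy_entropy([membership_maps[c][i][j] for c in range(n_cat)])
            for j in range(width)
        ]
        for i in range(height)
    ]


if __name__ == "__main__":
    # Two categories, one row of two pixels: a crisp pixel and an ambiguous one.
    maps = [[[1.0, 0.5]], [[0.0, 0.5]]]
    print(uncertainty_map(maps))
```

In this toy input, the first pixel has crisp memberships and receives zero uncertainty, while the second pixel, whose memberships are both 0.5, receives the maximum uncertainty. This mirrors the behavior described above, where tumor and background areas with similar intensities appear as high-uncertainty regions.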

Figure 10.

Visualization results of fuzzy blocks on the multi-object dataset. For each row, we show an input image and an uncertainty map from the SFB, where red represents a high value and blue represents a low value in the heatmap. We also provide two channel maps from the outputs of the CFB, the results of the original U-Net and the proposed method, and the ground truths. Green: fat layer, yellow: mammary layer, blue: muscle layer, red: tumor, and black: background. The red rectangles mark the tumor regions or the regions mis-segmented by the baseline model.

For the CFB, it is hard to visualize the uncertainty directly because each channel of the input feature map has only a single uncertainty value. Instead, we show some processed channels to see whether they highlight clear semantic areas. In Figure 10, we display the 39th and 21st channels of each feature map after employing a CFB. In the 21st channel of the feature map, the highlighted areas are in the mammary layers. The 39th channel of the feature map highlights the area of the tumor. However, some areas in other categories also have a high response in the 39th channel (such as the muscle layer in the first and third rows and the fat layer in the second row). These results indicate that the proposed fuzzy blocks can help generate feature maps with clear semantic information.
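Since the CFB assigns one uncertainty value to each channel, its effect can be pictured as a per-channel blend between the original and the newly generated feature maps. The linear blend below is only an assumed form chosen for illustration; this section does not give the exact combination rule, and `blend_channels` is a hypothetical name.

```python
from typing import List, Sequence

FeatureMaps = List[List[List[float]]]  # indexed as [channel][row][column]


def blend_channels(
    original: FeatureMaps,
    refined: FeatureMaps,
    channel_uncertainty: Sequence[float],
) -> FeatureMaps:
    """Blend feature maps channel by channel.

    Assumed rule: out_c = (1 - u_c) * original_c + u_c * refined_c, where u_c
    in [0, 1] is the uncertainty of channel c. An uncertain channel therefore
    relies more on the refined features and less on the original ones.
    """
    if not (len(original) == len(refined) == len(channel_uncertainty)):
        raise ValueError("inputs must have the same number of channels")
    blended = []
    for orig_ch, new_ch, u in zip(original, refined, channel_uncertainty):
        blended.append(
            [
                [(1.0 - u) * o + u * n for o, n in zip(orig_row, new_row)]
                for orig_row, new_row in zip(orig_ch, new_ch)
            ]
        )
    return blended


if __name__ == "__main__":
    original = [[[4.0, 4.0]], [[4.0, 4.0]]]
    refined = [[[8.0, 8.0]], [[8.0, 8.0]]]
    print(blend_channels(original, refined, [0.0, 0.25]))
```

A channel with zero uncertainty is passed through unchanged, whereas a channel with uncertainty 0.25 moves a quarter of the way toward the refined features.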

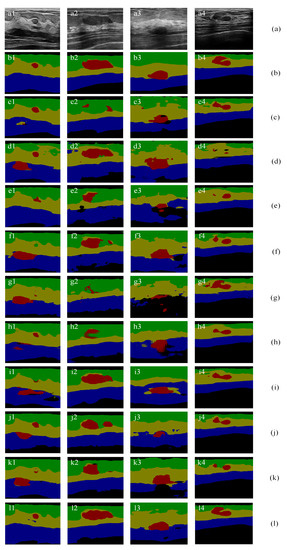

4.4.4. Semantic Segmentation Results

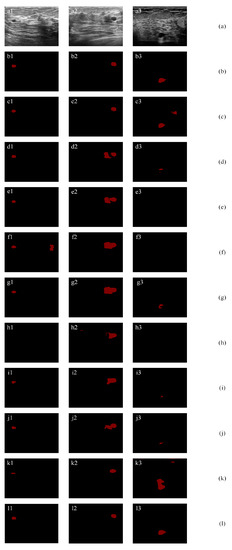

Figure 11 illustrates the segmentation results of SCFURNet and nine compared methods for four representative BUS images in the multi-object dataset. Figure 11b shows the pixel-wise ground truths: the green areas are fat layers, the yellow areas are mammary layers, the blue areas are muscle layers, the red areas are tumors, and the black areas are background.

Figure 11.

Multi-object semantic segmentation of BUS images: (a) original images; (b) ground truths; (c) results of ResNet-101 + self-attention mechanism; (d) results of attention U-Net; (e) results of ResNet-50; (f) results of ResNet-101; (g) results of Deeplab; (h) results of PSPNet; (i) results of U-Net with wavelet transform; (j) results of FCN-8s; (k) results of SE-Net (ResNet-101); and (l) results of the proposed method.

The results in Figure 11i are obtained with three-channel input images (gray-level intensity in the first channel, wavelet approximation coefficients in the second channel, and wavelet detail coefficients in the third channel) and a U-shape network with ResNet-101. The results in Figure 11f are obtained with the original gray-level images and the same network structure as in Figure 11i. Comparing Figure 11i with Figure 11f, the tumor segmentation results in Figure 11i2,i4 are better than those in Figure 11f2,f4. However, the results in Figure 11i1,i3 are not improved. These results indicate that adding wavelet features to the input layer cannot resolve some misclassifications, such as those between the background area and the tumor area, because the two areas have similar feature values in both the wavelet domain and the spatial domain.
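The three-channel wavelet input can be sketched as follows. The choice of a one-level Haar transform, the merging of the three detail sub-bands into a single magnitude, and the nearest-neighbor upsampling back to the image size are all assumptions made for this illustration; the section does not state which wavelet or layout was used, and `haar_three_channel` is a hypothetical name.

```python
import math
from typing import List

Image = List[List[float]]


def haar_three_channel(gray: Image) -> List[Image]:
    """Build [intensity, approximation, detail] channels from a gray image.

    gray is an H x W list of rows with even H and W. Each 2 x 2 block yields
    one approximation value and one detail magnitude (assumed combination of
    the three Haar detail sub-bands), both copied to all four block pixels.
    """
    height, width = len(gray), len(gray[0])
    if height % 2 or width % 2:
        raise ValueError("image height and width must be even")
    approx = [[0.0] * width for _ in range(height)]
    detail = [[0.0] * width for _ in range(height)]
    for i in range(0, height, 2):
        for j in range(0, width, 2):
            a, b = gray[i][j], gray[i][j + 1]
            c, d = gray[i + 1][j], gray[i + 1][j + 1]
            ll = (a + b + c + d) / 2.0       # approximation coefficient
            col_diff = (a - b + c - d) / 2.0  # difference across columns
            row_diff = (a + b - c - d) / 2.0  # difference across rows
            diag = (a - b - c + d) / 2.0      # diagonal difference
            mag = math.sqrt(col_diff ** 2 + row_diff ** 2 + diag ** 2)
            for di in (0, 1):
                for dj in (0, 1):
                    approx[i + di][j + dj] = ll
                    detail[i + di][j + dj] = mag
    intensity = [[float(v) for v in row] for row in gray]
    return [intensity, approx, detail]


if __name__ == "__main__":
    channels = haar_three_channel([[1, 0], [1, 0]])
    print(channels[1], channels[2])
```

A flat patch produces zero detail, so two regions with equally flat, low intensities remain indistinguishable in all three channels, which is consistent with the observation above that wavelet features do not separate the background from the tumor in some images.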

The proposed method generates new convolutional features. The new and the original convolutional feature maps are combined using the uncertainty degree as the weights in pixels and channels, which reduces the effect of uncertain pixels and uncertain channels. This mechanism overcomes the drawback seen in Figure 11i. For example, in Figure 11f3, the original U-Net with ResNet-101 can segment the tumor. In Figure 11i3, when wavelet features are added, the segmentation results for the tumor and the mammary layer become worse. Other network structures also do not handle these images well. The quantitative results for Figure 11 show that the proposed method outperforms the second-best method by 2.45%, 3.38%, and 14.36% in mIoU for Figure 11a1–a3. For Figure 11a4, the overall mIoU improves by only 0.53%; however, the proposed method outperforms the second-best method by 11.71% in tumor IoU. The performances are shown in Table 3, where bold numbers represent the best results. The tumor IoU increases by 6.72% compared with the original U-Net with ResNet-101 and by 7.52% compared with the U-Net with ResNet-101 and wavelet transform. The proposed method achieves 4.32% and 4.05% improvements in overall mIoU compared with the U-Net with gray-level intensity and the U-Net with wavelet transform, respectively. The proposed method achieves the best performance in tumor segmentation and the best overall performance among all methods. The overall performance indicates that the proposed method can handle misclassification caused by similar feature values of different layers because it reduces the weights of the similar features and adds novel features.

Table 3.

Results of Multi-object Semantic Segmentation. Evaluation Metric is IoU (%).

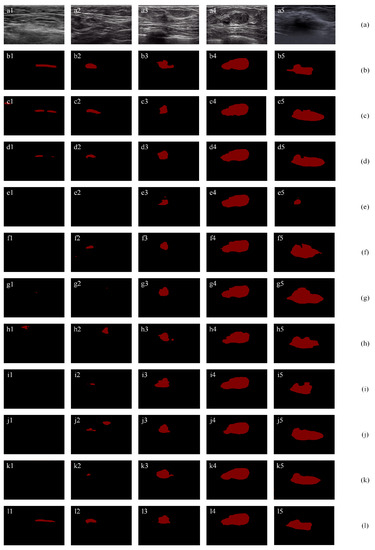
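For reference, the IoU and mIoU values reported in Table 3 follow the usual intersection-over-union definition, which can be sketched as below. The label order (0: background, 1: fat, 2: mammary, 3: muscle, 4: tumor) and the choice to skip classes that are absent from both maps when averaging are assumptions of this sketch, not details confirmed by the paper.

```python
from typing import List, Optional

LabelMap = List[List[int]]


def per_class_iou(
    pred: LabelMap, truth: LabelMap, num_classes: int
) -> List[Optional[float]]:
    """Return the IoU of each class, or None if the class is absent in both maps."""
    inter = [0] * num_classes
    union = [0] * num_classes
    for pred_row, truth_row in zip(pred, truth):
        for p, t in zip(pred_row, truth_row):
            if p == t:
                inter[p] += 1
                union[p] += 1
            else:
                # The pixel belongs to the union of both classes involved.
                union[p] += 1
                union[t] += 1
    return [inter[c] / union[c] if union[c] else None for c in range(num_classes)]


def mean_iou(ious: List[Optional[float]]) -> float:
    """Average the IoU over the classes that occur (assumed convention)."""
    valid = [v for v in ious if v is not None]
    return sum(valid) / len(valid)


if __name__ == "__main__":
    truth = [[0, 0], [4, 4]]
    pred = [[0, 4], [4, 4]]
    ious = per_class_iou(pred, truth, num_classes=5)
    print(ious, mean_iou(ious))
```

In the toy example, one background pixel is predicted as tumor, so the background IoU is 1/2 and the tumor IoU is 2/3.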

4.5. Semantic Segmentation on Three Public Two-Category Datasets

We also conduct experiments on three two-category public datasets to evaluate the performance of SCFURNet on the binary segmentation (tumor and background) task.

4.5.1. Overall Performance on Three Public Datasets

The proposed SFB and CFB are applied to a U-Net with ResNet-101 because it achieves better results than the U-Net with VGG-16 in Section 4.4.2. All other compared deep networks, such as ResNet-50, ResNet-101, and FCN-8s, are trained to segment tumors in these three datasets. Because the number of samples is limited (only 1505 in total across the three datasets), 10-fold cross-validation is used: (1) each of the three datasets is randomly divided into 10 groups; (2) nine groups of each dataset form the training set and the remaining group forms the testing set; and (3) the final evaluation metrics are the averages over the 10 experiments.
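The three steps above can be sketched as a small splitting routine. This is a minimal illustration under assumptions: the function name `k_fold_splits`, the fixed random seed, and the toy sample identifiers are hypothetical, and the routine would be called once per dataset.

```python
import random
from typing import Iterator, List, Sequence, Tuple


def k_fold_splits(
    sample_ids: Sequence, n_folds: int = 10, seed: int = 0
) -> Iterator[Tuple[List, List]]:
    """Yield (train_ids, test_ids) pairs for k-fold cross-validation.

    The samples are shuffled once, divided into n_folds groups, and each
    group serves as the testing set exactly once.
    """
    ids = list(sample_ids)
    if len(ids) < n_folds:
        raise ValueError("need at least one sample per fold")
    random.Random(seed).shuffle(ids)
    folds = [ids[i::n_folds] for i in range(n_folds)]
    for k in range(n_folds):
        test_ids = folds[k]
        train_ids = [s for i, fold in enumerate(folds) if i != k for s in fold]
        yield train_ids, test_ids


def average(values: Sequence[float]) -> float:
    """Average a metric over the folds (step 3)."""
    return sum(values) / len(values)


if __name__ == "__main__":
    toy_dataset = ["img_%02d" % i for i in range(25)]  # hypothetical sample names
    for train_ids, test_ids in k_fold_splits(toy_dataset):
        print(len(train_ids), len(test_ids))
```

Every sample appears in exactly one testing group, so each image is evaluated once by a model that did not see it during training.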

Figure 12 shows the segmentation results on the three two-category datasets [35,59,60]. Figure 12a shows the original images and Figure 12b shows the ground truths. For Figure 12a1, which contains a narrow and long tumor, most methods (e1, f1, g1, i1, and j1) fail to segment the tumor; h1 segments a wrong tumor region; c1 and d1 segment the tumor region with low JI values of 41.65% and 17.61%, respectively; and the proposed method (l1) achieves the highest JI value of 55.19%. For Figure 12a2, most methods (e2, f2, g2, h2, i2, j2, and k2) fail to segment the tumor or segment a wrong tumor region, with JI values below 26%. Among the three methods that correctly segment the tumor region (c2, d2, and l2), the proposed method (l2) achieves the highest JI value of 88.38%, which significantly outperforms c2 (44.28%) and d2 (35.01%). For Figure 12a3, which contains an irregular tumor, the proposed method (l3) achieves the highest TPR, JI, and DSC values and the lowest AER value. Specifically, it outperforms the second-best method by 2.78%, 15.41%, 8.55%, and 35.02% for TPR, JI, DSC, and AER, respectively. For Figure 12a4, which contains a big tumor, all methods achieve good segmentation results. The proposed method (l4) achieves the highest TPR, JI, and DSC values of 97.57%, 93.17%, and 96.47%, and the lowest FPR and AER values of 4.72% and 7.15%. For Figure 12a5, which contains an irregular tumor with an unclear contour, the proposed method (l5) achieves the best segmentation results, with the highest JI of 84.86%, the highest DSC of 91.81%, and the lowest AER of 17.30%.

Figure 12.

Segmentation results using public datasets: (a) original images; (b) ground truths; (c) results of ResNet-101 with self-attention mechanism; (d) results of SE-Net (ResNet-101); (e) results of attention U-Net; (f) results of ResNet-50; (g) results of ResNet-101; (h) results of Deeplab; (i) results of PSPNet; (j) results of U-Net with wavelet transform; (k) results of FCN-8s; and (l) results of the proposed method.

Table 4 summarizes the segmentation results of SCFURNet, nine deep learning methods, and five classic (non-deep learning) methods [10,61,62,63,64] in terms of five measures on the dataset [59]. The results in Table 4 show that: (1) deep learning methods obtain improvements compared with the traditional BUS image segmentation methods listed in [59]; (2) some well-known deep learning architectures, such as Deeplab and PSPNet, do not obtain improvements on dataset [59], possibly because of the limited number of samples; and (3) the proposed method achieves the best results since it can handle small targets and uncertainties in the boundary areas.

Table 4.

Results of Two-class Semantic Segmentation on Dataset [59].

Table 5 summarizes the segmentation results of SCFURNet and nine peer deep learning methods on Dataset [35] and Dataset [60]. Compared with state-of-the-art deep learning methods, the proposed method achieves the best results for all evaluation metrics on the three public datasets except the FPR and AER on dataset [35], where ResNet-101 with the self-attention mechanism obtains lower values. The lower FPR and AER indicate that the non-local context information provided by the self-attention mechanism can help reduce segmentation errors. However, the proposed method achieves the best overall performance by reducing uncertainty in pixels and channels. The proposed method achieves 2.03%, 1.84%, and 2.88% improvements in the Jaccard index on the three public BUS datasets, respectively, compared with the original U-shape network with ResNet-101.
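The five measures used in Tables 4 and 5 (TPR, FPR, JI, DSC, and AER) can be computed from a predicted mask and a ground-truth mask as sketched below. The definitions are an assumption of this sketch, since they are not restated in this section: TPR, FPR, and AER are all normalized by the ground-truth tumor area. Under these definitions, the values reported for Figure 12a4 are mutually consistent (TPR 97.57% and FPR 4.72% imply AER 7.15%, JI 93.17%, and DSC 96.47%).

```python
from typing import Dict, List

Mask = List[List[int]]  # 1 (or True) marks tumor pixels, 0 marks background


def bus_metrics(pred: Mask, truth: Mask) -> Dict[str, float]:
    """Compute TPR, FPR, JI, DSC, and AER for a binary tumor segmentation."""
    tp = fp = fn = 0
    for pred_row, truth_row in zip(pred, truth):
        for p, t in zip(pred_row, truth_row):
            if p and t:
                tp += 1
            elif p:
                fp += 1
            elif t:
                fn += 1
    gt_area = tp + fn
    if gt_area == 0:
        raise ValueError("ground truth contains no tumor pixels")
    return {
        "TPR": tp / gt_area,                  # overlap over ground-truth area
        "FPR": fp / gt_area,                  # false positives over ground-truth area
        "JI": tp / (tp + fp + fn),            # intersection over union
        "DSC": 2 * tp / (2 * tp + fp + fn),   # Dice similarity coefficient
        "AER": (fp + fn) / gt_area,           # area error ratio
    }


if __name__ == "__main__":
    # One-row masks: 9757 true positives, 243 misses, 472 false positives.
    truth = [[1] * 10000 + [0] * 1000]
    pred = [[1] * 9757 + [0] * 243 + [1] * 472 + [0] * 528]
    for name, value in bus_metrics(pred, truth).items():
        print(name, round(100 * value, 2))
```

Because FPR is normalized by the tumor area rather than by the background area in this convention, AER equals FPR plus (1 - TPR).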

Table 5.

Results of Two-class Semantic Segmentation on Dataset [35] and Dataset [60].

4.5.2. Small Tumor Segmentation

In this section, we show the effectiveness of the proposed method on small tumor segmentation. Small tumors are hard to segment because of their small size, their low intensity, and the presence of tumor-like regions in BUS images. Figure 13a1 contains a very small tumor. The proposed method (l1) achieves the highest TPR, JI, and DSC values of 92.78%, 81.82%, and 90.00%, and the lowest AER value of 20.62%. It improves on the second-best method by 1.12%, 1.78%, 0.98%, and 0.54% in terms of TPR, JI, DSC, and AER, respectively. Figure 13a2 contains a small tumor close to a tumor-like region. Most existing methods misclassify the tumor-like region. The proposed method (l2) achieves the highest JI and DSC values of 85.11% and 91.95%, and the lowest AER value of 16.91%. It improves on the second-best method by 5.16%, 2.78%, and 14.64% in terms of JI, DSC, and AER, respectively. Figure 13a3 contains a small tumor located in a low-intensity region, which makes it hard to distinguish from the background. The proposed method (l3) achieves the highest JI and DSC values of 70.63% and 82.79%, and the lowest AER value of 39.50%. It improves on the second-best method by 9.75%, 5.71%, and 17.16% in terms of JI, DSC, and AER, respectively. These cases are difficult because the small tumor has feature values similar to those of noise patches or background patches. Nevertheless, the proposed method achieves the best results on the small tumor images, which helps it achieve the best overall performance on all datasets.

Figure 13.

Small tumor segmentation: (a) original images; (b) ground truths; (c) results of ResNet-101 with self-attention mechanism; (d) results of SE-Net (ResNet-101); (e) results of attention U-Net; (f) results of ResNet-50; (g) results of ResNet-101; (h) results of Deeplab; (i) results of PSPNet; (j) results of U-Net with wavelet transform; (k) results of FCN-8s; and (l) results of the proposed method.

5. Discussion

5.1. Comparison with Previous Studies and Potential Usefulness

We propose a novel SCFURNet with SFBs and CFBs to reduce the uncertainty in convolutional feature maps. The proposed method can perform anatomy segmentation on BUS images. In general, the proposed network has four significant advantages over previous BUS image segmentation methods.

First, the proposed SFBs and CFBs are individual blocks that do not depend on network structures. They can be easily integrated into different network structures, such as VGG-16 and ResNet. Most other attention mechanisms are either designed with new network structures or have limitations when applied to other networks. Second, as shown in Table 2, the proposed SFBs and CFBs can be used with different networks, and removing either block leads to worse performance on BUS image segmentation. That is because the SFBs and CFBs can find the fuzzy regions and channels in the feature maps and reduce their weights (refer to Figure 10). Third, our SFBs and CFBs can also be used in the semantic segmentation of other datasets besides BUS images. Fourth, in Section 4.5.2, we show that the proposed SFBs and CFBs can help detect small tumor regions. Small tumors and low-intensity background regions have high uncertainty degrees (Figure 10). We focus on those uncertain regions and refine their feature maps to get better segmentation results.

Potential usefulness: the proposed SCFURNet can be applied to build trustworthy ultrasound image CAD systems from a clinical perspective. The proposed method can be used to split BUS images into breast layer structures, which is helpful for diagnosing benign and malignant tumors in BUS images in clinical applications. We also explain the attention mechanism based on fuzzy logic and uncertainty, while existing attention methods are based on statistics and probability.

5.2. Limitations

While the proposed SCFURNet can measure the uncertainty and refine convolutional feature maps to get better segmentation results, it has some limitations. First, the proposed method is built on supervised deep learning networks, which means that pixel-wise ground truths are still needed to train the SCFURNet to classify five classes on BUS images. The labor cost of generating pixel-wise ground truths is high. Second, the evaluation of the proposed method is limited. Because only a limited number of samples are available for training and validation, we use 10-fold cross-validation in the experiment section. There is no independent test set, which means that our experimental results might overfit to specific datasets and that the generalizability of the proposed method is untested.

6. Conclusions

In this paper, we design a trustworthy SCFURNet for BUS image semantic segmentation. SCFURNet consists of two kinds of fuzzy blocks: spatial-wise fuzzy blocks (SFBs) and channel-wise fuzzy blocks (CFBs). The proposed method can segment five breast layer structures in BUS images. The proposed SCFURNet achieves 2.03%, 1.84%, and 2.88% improvements in the Jaccard index on three public BUS datasets compared with the original U-shape network with ResNet-101. SCFURNet also improves on the original U-shape network with ResNet-101 by 6.72% in tumor IoU and by 4.32% in mean IoU on the five-category BUS dataset.

SCFURNet achieves the best results for the following reasons: (1) the proposed spatial and channel-wise fuzzy blocks can locate uncertain pixels and uncertain channels in feature maps and reduce their influence; (2) by reducing the uncertainty in feature maps, some patches with features similar to those of tumor areas can be classified correctly, especially for small tumors; and (3) the fuzzy entropy of memberships can measure the uncertainty degree of pixels and channels accurately. The experimental results validate the following claims: (1) there are uncertainty and noise in BUS images, especially for small tumors and background areas; (2) the proposed method can reflect the uncertain pixels and uncertain channels and generate better feature maps; and (3) the proposed method can handle the small target problem.

In the future, we plan to explore novel methods for extracting more certain features, that is, features that directly have lower fuzzy entropy than those produced by convolutional operators. We also plan to develop different uncertainty representation methods and compare them with fuzzy entropy. Another research direction is designing weakly supervised methods to reduce the labor cost of ground truth generation. Finally, we will try to extend the proposed network to other image segmentation datasets with more training samples, such as the nuclei image classification and segmentation dataset PanNuke [66].

Author Contributions

Conceptualization, K.H. and H.-D.C.; Data curation, Y.Z. and P.X.; Investigation, Y.Z. and P.X.; Methodology, K.H. and H.-D.C.; Resources, Y.Z.; Software, K.H.; Supervision, H.-D.C.; Validation, K.H.; Visualization, K.H.; Writing—original draft, K.H. and H.-D.C.; Writing—review & editing, K.H., Y.Z., H.-D.C. and P.X. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Publicly available datasets were analyzed in this study. Datasets can be found here: Dataset 1 [35], link: http://www2.docm.mmu.ac.uk/STAFF/m.yap/dataset.php, Dataset 2 [60], link: https://scholar.cu.edu.eg/?q=afahmy/pages/dataset, Dataset 3 [59], link: http://cvprip.cs.usu.edu/busbench/. Data sharing is not applicable for Multi-layer Dataset.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Shojaii, R.; Alirezaie, J.; Babyn, P. Automatic lung segmentation in CT images using watershed transform. In Proceedings of the IEEE International Conference on Image Processing 2005, Genoa, Italy, 11–14 September 2005; Volune 2, p. II–1270. [Google Scholar] [CrossRef]

- Gu, Z.; Cheng, J.; Fu, H.; Zhou, K.; Hao, H.; Zhao, Y.; Liu, J. CE-Net: Context encoder network for 2D medical image segmentation. IEEE Trans. Med. Imaging 2019, 38, 2281–2292. [Google Scholar] [CrossRef] [PubMed]

- Yu, Q.; Huang, K.; Zhu, Y.; Chen, X.; Meng, W. Preliminary results of computer-aided diagnosis for magnetic resonance imaging of solid breast lesions. Breast Cancer Res. Treat. 2019, 177, 419–426. [Google Scholar] [CrossRef] [PubMed]

- Gilles, B.; Magnenat-Thalmann, N. Musculoskeletal MRI segmentation using multi-resolution simplex meshes with medial representations. Med. Image Anal. 2010, 14, 291–302. [Google Scholar] [CrossRef]

- Saad, M.N.; Muda, Z.; Ashaari, N.S.; Hamid, H.A. Image segmentation for lung region in chest X-ray images using edge detection and morphology. In Proceedings of the 2014 IEEE International Conference on Control System, Computing and Engineering (ICCSCE 2014), Penang, Malaysia, 28–30 November 2014; pp. 46–51. [Google Scholar] [CrossRef]

- Son, L.H.; Tuan, T.M. A cooperative semi-supervised fuzzy clustering framework for dental X-ray image segmentation. Expert Syst. Appl. 2016, 46, 380–393. [Google Scholar] [CrossRef]

- Huang, Q.; Luo, Y.; Zhang, Q. Breast ultrasound image segmentation: A survey. Int. J. Comput. Assist. Radiol. Surg. 2017, 12, 493–507. [Google Scholar] [CrossRef] [PubMed]

- Huang, Q.; Yang, F.; Liu, L.; Li, X. Automatic segmentation of breast lesions for interaction in ultrasonic computer-aided diagnosis. Inf. Sci. 2015, 314, 293–310. [Google Scholar] [CrossRef]

- Moon, W.K.; Lo, C.M.; Chen, R.T.; Shen, Y.W.; Chang, J.M.; Huang, C.S.; Chen, J.H.; Hsu, W.-W.; Chang, R.F. Tumor detection in automated breast ultrasound images using quantitative tissue clustering. Med. Phys. 2014, 41, 042901. [Google Scholar] [CrossRef]

- Liu, B.; Cheng, H.D.; Huang, J.; Tian, J.; Tang, X.; Liu, J. Probability density difference-based active contour for ultrasound image segmentation. Pattern Recognit. 2010, 43, 2028–2042. [Google Scholar] [CrossRef]

- Xian, M.; Huang, J.; Zhang, Y.; Tang, X. Multiple-domain knowledge based MRF model for tumor segmentation in breast ultrasound images. In Proceedings of the 2012 19th IEEE International Conference on Image Processing, Orlando, FL, USA, 30 September–3 October 2012; pp. 2021–2024. [Google Scholar] [CrossRef]

- Cheng, H.D.; Shan, J.; Ju, W.; Guo, Y.; Zhang, L. Automated breast cancer detection and classification using ultrasound images: A survey. Pattern Recognit. 2010, 43, 299–317. [Google Scholar] [CrossRef]

- Xian, M.; Zhang, Y.; Cheng, H.D.; Xu, F.; Zhang, B.; Ding, J. Automatic breast ultrasound image segmentation: A survey. Pattern Recognit. 2018, 79, 340–355. [Google Scholar] [CrossRef]

- Bian, C.; Lee, R.; Chou, Y.H.; Cheng, J.Z. Boundary regularized convolutional neural network for layer parsing of breast anatomy in automated whole breast ultrasound. In Proceedings of the International Conference on Medical Image Computing and Computer-Assisted Intervention, Quebec City, QC, Canada, 10–14 September 2017; pp. 259–266. [Google Scholar] [CrossRef]

- Vourtsis, A.; Berg, W.A. Breast density implications and supplemental screening. Eur. Radiol. 2019, 29, 1762–1777. [Google Scholar] [CrossRef] [PubMed]

- Huang, K.; Cheng, H.D.; Zhang, Y.; Zhang, B.; Xing, P.; Ning, C. Medical Knowledge Constrained Semantic Breast Ultrasound Image Segmentation. In Proceedings of the 2018 24th International Conference on Pattern Recognition (ICPR), Beijing, China, 20–24 August 2018; pp. 1193–1198. [Google Scholar] [CrossRef]

- Lei, B.; Huang, S.; Li, R.; Bian, C.; Li, H.; Chou, Y.H.; Cheng, J.Z. Segmentation of breast anatomy for automated whole breast ultrasound images with boundary regularized convolutional encoder–decoder network. Neurocomputing 2018, 321, 178–186. [Google Scholar] [CrossRef]

- Lei, B.; Huang, S.; Li, H.; Li, R.; Bian, C.; Chou, Y.H.; Qin, J.; Zhou, P.; Gong, X.; Cheng, J.Z. Self-co-attention neural network for anatomy segmentation in whole breast ultrasound. Med. Image Anal. 2020, 64, 101753. [Google Scholar] [CrossRef] [PubMed]

- Shen, X.; Wang, L.; Zhao, Y.; Liu, R.; Qian, W.; Ma, H. Dilated transformer: Residual axial attention for breast ultrasound image segmentation. Quant. Imaging Med. Surg. 2022, 12, 4512–4528. [Google Scholar] [CrossRef] [PubMed]

- Shi, J.; Vakanski, A.; Xian, M.; Ding, J.; Ning, C. EMT-NET: Efficient multitask network for computer-aided diagnosis of breast cancer. In Proceedings of the 2022 IEEE 19th International Symposium on Biomedical Imaging (ISBI), Kolkata, India, 28–31 March 2022; pp. 1–5. [Google Scholar]

- Wang, K.; Liang, S.; Zhong, S.; Feng, Q.; Ning, Z.; Zhang, Y. Breast ultrasound image segmentation: A coarse-to-fine fusion convolutional neural network. Med. Phys. 2021, 48, 4262–4278. [Google Scholar] [CrossRef] [PubMed]

- Tang, F.; Wang, L.; Ning, C.; Xian, M.; Ding, J. CMU-Net: A Strong ConvMixer-based Medical Ultrasound Image Segmentation Network. arXiv 2022, arXiv:2210.13012. [Google Scholar]

- Baumgartner, C.F.; Tezcan, K.C.; Chaitanya, K.; Hötker, A.M.; Muehlematter, U.J.; Schawkat, K.; Becker, A.S.; Donati, O.; Konukoglu, E. PHiSeg: Capturing Uncertainty in Medical Image Segmentation. In Proceedings of the Medical Image Computing and Computer Assisted Intervention—MICCAI 2019, Shenzhen, China, 13–17 October 2019; pp. 119–127. [Google Scholar] [CrossRef]

- Wang, G.; Li, W.; Aertsen, M.; Deprest, J.; Ourselin, S.; Vercauteren, T. Aleatoric uncertainty estimation with test-time augmentation for medical image segmentation with convolutional neural networks. Neurocomputing 2019, 338, 34–45. [Google Scholar] [CrossRef]

- Gao, C.; Ye, H.; Cao, F.; Wen, C.; Zhang, Q.; Zhang, F. Multiscale fused network with additive channel–spatial attention for image segmentation. Knowl.-Based Syst. 2021, 214, 106754. [Google Scholar] [CrossRef]

- Punn, N.S.; Agarwal, S. RCA-IUnet: A residual cross-spatial attention-guided inception U-Net model for tumor segmentation in breast ultrasound imaging. Mach. Vis. Appl. 2022, 33, 27. [Google Scholar] [CrossRef]

- Zhang, H.; Li, H.; Chen, N.; Chen, S.; Liu, J. Novel fuzzy clustering algorithm with variable multi-pixel fitting spatial information for image segmentation. Pattern Recognit. 2022, 121, 108201. [Google Scholar] [CrossRef]

- Alomoush, W.; Khashan, O.A.; Alrosan, A.; Houssein, E.H.; Attar, H.; Alweshah, M.; Alhosban, F. Fuzzy Clustering Algorithm Based on Improved Global Best-Guided Artificial Bee Colony with New Search Probability Model for Image Segmentation. Sensors 2022, 22, 8956. [Google Scholar] [CrossRef] [PubMed]

- Kittaneh, O.A.; Khan, M.A.U.; Akbar, M.; Bayoud, H.A. Average Entropy: A New Uncertainty Measure with Application to Image Segmentation. Am. Stat. 2016, 70, 18–24. [Google Scholar] [CrossRef]

- Maassen, H.; Uffink, J.B. Generalized entropic uncertainty relations. Phys. Rev. Lett. 1988, 60, 1103. [Google Scholar] [CrossRef]

- Wehner, S.; Winter, A. Entropic uncertainty relations—A survey. New J. Phys. 2010, 12, 025009. [Google Scholar] [CrossRef]

- Karthik, R.; Menaka, R.; Kathiresan, G.; Anirudh, M.; Nagharjun, M. Gaussian Dropout Based Stacked Ensemble CNN for Classification of Breast Tumor in Ultrasound Images. IRBM 2021, 43, 715–733. [Google Scholar] [CrossRef]

- Khanna, P.; Sahu, M.; Singh, B.K. Improving the classification performance of breast ultrasound image using deep learning and optimization algorithm. In Proceedings of the 2021 IEEE International Conference on Technology, Research, and Innovation for Betterment of Society (TRIBES), Raipur, India, 17–19 December 2021; pp. 1–6. [Google Scholar]

- Samundeeswari, E.S.; Saranya, P.K.; Manavalan, R. Segmentation of Breast Ultrasound image using Regularized K-Means (ReKM) clustering. In Proceedings of the 2016 International Conference on Wireless Communications, Signal Processing and Networking (WiSPNET), Chennai, India, 23–25 March 2016; pp. 1379–1383. [Google Scholar] [CrossRef]

- Yap, M.H.; Pons, G.; Marti, J.; Ganau, S.; Sentis, M.; Zwiggelaar, R.; Davison, A.K.; Marti, R.; Yap, M.H.; Pons, G.; et al. Automated Breast Ultrasound Lesions Detection Using Convolutional Neural Networks. IEEE J. Biomed. Health Inform. 2018, 22, 1218–1226. [Google Scholar] [CrossRef]

- Shelhamer, E.; Long, J.; Darrell, T. Fully Convolutional Networks for Semantic Segmentation. IEEE Trans. Pattern Anal. Mach. Intell. 2017, 39, 640–651. [Google Scholar] [CrossRef]

- Lecun, Y.; Bottou, L.; Bengio, Y.; Haffner, P. Gradient-based learning applied to document recognition. Proc. IEEE 1998, 86, 2278–2324. [Google Scholar] [CrossRef]

- Ronneberger, O.; Fischer, P.; Brox, T. U-net: Convolutional networks for biomedical image segmentation. In Proceedings of the International Conference on Medical Image Computing and Computer-Assisted Intervention, Munich, Germany, 5–9 October 2015; pp. 234–241. [Google Scholar] [CrossRef]

- Krizhevsky, A.; Sutskever, I.; Hinton, G.E. Imagenet classification with deep convolutional neural networks. In Advances in Neural Information Processing Systems; NeurIPS: Long Beach, CA, USA, 2017; pp. 1097–1105. [Google Scholar]

- Cheng, J.Z.; Ni, D.; Chou, Y.H.; Qin, J.; Tiu, C.M.; Chang, Y.C.; Huang, C.S.; Shen, D.; Chen, C.M. Computer-aided diagnosis with deep learning architecture: Applications to breast lesions in US images and pulmonary nodules in CT scans. Sci. Rep. 2016, 6, 1–13. [Google Scholar] [CrossRef]

- Shareef, B.; Xian, M.; Vakanski, A. Stan: Small tumor-aware network for breast ultrasound image segmentation. In Proceedings of the 2020 IEEE 17th International Symposium on Biomedical Imaging (ISBI), Iowa City, IA, USA, 3–7 April 2020; pp. 1–5. [Google Scholar] [CrossRef]

- Xu, K.; Ba, J.; Kiros, R.; Cho, K.; Courville, A.; Salakhudinov, R.; Zemel, R.; Bengio, Y. Show, Attend and Tell: Neural Image Caption Generation with Visual Attention. In Proceedings of the 32nd International Conference on Machine Learning, Lille, France, 6–11 July 2015; Bach, F., Blei, D., Eds.; PMLR: Lille, France, 2015; Volume 37, pp. 2048–2057. [Google Scholar]

- Oktay, O.; Schlemper, J.; Folgoc, L.L.; Lee, M.; Heinrich, M.; Misawa, K.; Rueckert, D. Attention u-net: Learning where to look for the pancreas. arXiv 2018, arXiv:1804.03999.

- Hu, J.; Shen, L.; Albanie, S.; Sun, G.; Wu, E. Squeeze-and-Excitation Networks. IEEE Trans. Pattern Anal. Mach. Intell. 2020, 42, 2011–2023.

- Chen, L.; Zhang, H.; Xiao, J.; Nie, L.; Shao, J.; Liu, W.; Chua, T.S. Sca-cnn: Spatial and channel-wise attention in convolutional networks for image captioning. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 5659–5667.

- Simonyan, K.; Zisserman, A. Very deep convolutional networks for large-scale image recognition. arXiv 2014, arXiv:1409.1556.

- He, K.; Zhang, X.; Ren, S.; Sun, J. Deep residual learning for image recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 770–778.

- Gao, J.; Wang, Q.; Yuan, Y. SCAR: Spatial-/channel-wise attention regression networks for crowd counting. Neurocomputing 2019, 363, 1–8.

- Chuang, K.S.; Tzeng, H.L.; Chen, S.; Wu, J.; Chen, T.J. Fuzzy c-means clustering with spatial information for image segmentation. Comput. Med. Imaging Graph. 2006, 30, 9–15.

- Cheng, H.D.; Xu, H. A novel fuzzy logic approach to contrast enhancement. Pattern Recognit. 2000, 33, 809–819.

- Liu, Y.; Chen, Y.; Han, B.; Zhang, Y.; Zhang, X.; Su, Y. Fully automatic Breast ultrasound image segmentation based on fuzzy cellular automata framework. Biomed. Signal Process. Control 2018, 40, 433–442.

- Deng, Y.; Ren, Z.; Kong, Y.; Bao, F.; Dai, Q. A Hierarchical Fused Fuzzy Deep Neural Network for Data Classification. IEEE Trans. Fuzzy Syst. 2017, 25, 1006–1012.

- Huang, K.; Zhang, Y.; Cheng, H.; Xing, P.; Zhang, B. Semantic segmentation of breast ultrasound image with fuzzy deep learning network and breast anatomy constraints. Neurocomputing 2021, 450, 319–335.

- Al-Sharhan, S.; Karray, F.; Gueaieb, W.; Basir, O. Fuzzy entropy: A brief survey. In Proceedings of the 10th IEEE International Conference on Fuzzy Systems (Cat. No. 01CH37297), Melbourne, Australia, 2–5 December 2001; Volume 3, pp. 1135–1139.

- Goodfellow, I.; Bengio, Y.; Courville, A. Deep Learning; MIT Press: Cambridge, MA, USA, 2016.

- Chen, L.C.; Zhu, Y.; Papandreou, G.; Schroff, F.; Adam, H. Encoder-decoder with atrous separable convolution for semantic image segmentation. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 801–818.

- Zhao, H.; Shi, J.; Qi, X.; Wang, X.; Jia, J. Pyramid scene parsing network. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 2881–2890.

- Zhang, H.; Goodfellow, I.; Metaxas, D.; Odena, A. Self-Attention Generative Adversarial Networks. In Proceedings of the 36th International Conference on Machine Learning, Long Beach, CA, USA, 10–15 June 2019; Chaudhuri, K., Salakhutdinov, R., Eds.; PMLR: Lille, France, 2019; Volume 97, pp. 7354–7363.

- Zhang, Y.; Xian, M.; Cheng, H.D.; Shareef, B.; Ding, J.; Xu, F.; Huang, K.; Zhang, B.; Ning, C.; Wang, Y. BUSIS: A Benchmark for Breast Ultrasound Image Segmentation. Healthcare 2022, 10, 729.

- Al-Dhabyani, W.; Gomaa, M.; Khaled, H.; Fahmy, A. Dataset of breast ultrasound images. Data Brief 2020, 28, 104863.

- Xian, M.; Zhang, Y.; Cheng, H.D. Fully automatic segmentation of breast ultrasound images based on breast characteristics in space and frequency domains. Pattern Recognit. 2015, 48, 485–497.

- Shan, J.; Cheng, H.D.; Wang, Y. Completely automated segmentation approach for breast ultrasound images using multiple-domain features. Ultrasound Med. Biol. 2012, 38, 262–275.

- Shao, H.; Zhang, Y.; Xian, M.; Cheng, H.D.; Xu, F.; Ding, J. A saliency model for automated tumor detection in breast ultrasound images. In Proceedings of the 2015 IEEE International Conference on Image Processing (ICIP), Quebec City, QC, Canada, 27–30 September 2015; pp. 1424–1428.

- Liu, Y.; Cheng, H.D.; Huang, J.; Zhang, Y.; Tang, X. An effective approach of lesion segmentation within the breast ultrasound image based on the cellular automata principle. J. Digit. Imaging 2012, 25, 580–590.

- LeCun, Y.A.; Bottou, L.; Orr, G.B.; Müller, K.R. Efficient backprop. In Neural Networks: Tricks of the Trade; Springer: New York, NY, USA, 2012; pp. 9–48.

- Gamper, J.; Koohbanani, N.A.; Benet, K.; Khuram, A.; Rajpoot, N. PanNuke: An open pan-cancer histology dataset for nuclei instance segmentation and classification. In Proceedings of the European Congress on Digital Pathology, Warwick, UK, 10–13 April 2019; Springer: New York, NY, USA, 2019; pp. 11–19.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).