1. Introduction

In several fields, such as computing and electronics engineering, it is of great interest to analyze complex devices with several macro-states that evolve by time. It is usual to consider Markov processes for this analysis, but in multiple occasions, the spent times in each macro-state are not exponentially distributed. In this context, a new approach is to consider that the spent time in each macro-state is phase-type (PH) distributed. In this case, it is assumed that each macro-state is composed of internal performance states that have a Markovian behavior. One interesting aspect is that the modeling of the stochastic process can only occur when the macro-state process can be observed. For this new process, the Markovianity is lost.

A phase-type distribution is defined as the distribution of the absorption time in an absorbing Markov chain. These probability distributions constitute a class of distributions on the positive real axis, which seems to strike a balance between generality and tractability, thanks to its good properties. This class of distributions was introduced by Neuts [

1,

2] and allows the modeling of complex problems in an algorithmic and computational way. It has been widely applied in fields such as engineering to model complex systems [

3,

4,

5], queueing theory [

6], risk theory [

7] and electronics engineering [

8].

The good properties of PH distributions due to their appealing probabilistic arguments constitute their main feature of being mathematically tractable. Several well-known probability distributions, e.g., exponential, Erlang, Erlang generalized, hyper-geometric, and Coxian distributions, among others, are particular cases of PH. One of the most important PH properties is that whatever nonnegative probability distribution can be approximated as much as desired through a PH, accounting for the fact that the PH class is dense in the set of probability distributions on the nonnegative half-line [

9]. It allows the consideration of general distributions through PH approximations. Exact solutions to many complex problems in stochastic modeling can be obtained either explicitly or numerically by using matrix-analytic methods. The main features of PH distributions are described in depth in [

10].

A macro-state stochastic process is built here to model the behavior of different random telegraph noise (RTN) signals. Given that the behavior of the different macro-states (levels) is not exponentially distributed, we assume each level to be composed of multiple internal phases that can be related with other levels. We show that if the internal process, by considering internal states inside the macro-states, has a Markovian behavior, the process between levels is not. For the latter, the sojourn time in each level is phase-type distributed depending on the initial time. This fact is of great interest and gives us more information about the device’s internal process.

Advanced statistical techniques are key tools to model complex physical and engineering problems in many different areas of expertise. In this context, a new statistical methodology is presented; we will concentrate in the study of resistive memories [

11,

12], also known as resistive random access memories (RRAMs), a subgroup of a wide class of electron devices named memristors [

13]. They constitute a promising technology with great potential in several applications in the integrated circuit realm.

Circuit design is a tough task because of the high number of electronic components included in integrated circuits, therefore, electronic design automation (EDA) tools are essential. These software tools need compact models that represent the physics of the devices in order to fulfil their role in aiding circuit design. Although there are many works in compact modeling in the RRAM literature [

14,

15], there is a lot to be done, in particular in certain areas related to variability and noise. Variability is essential in modeling due to the RRAM operation’s inherent stochasticity, since the physics is linked to random physical mechanisms [

16,

17]. Another issue of great importance in these devices is noise. Among the different types of noise, random telegraph noise is of great concern [

18]. The disturbances produced by one or several traps (physical active defects within the dielectric) inside the conductive filament or close to it alter charge transport mechanisms and consequently, current fluctuations can occur (

Figure 1) that lead to RTN [

14,

15,

19,

20]. This noise can affect the correct device operation in applications linked to memory-cell arrays and neuromorphic hardware [

14,

16,

21], posing important hurdles to the use of this technology in highly scaled integrated circuits. Nevertheless, RTN fluctuations can also be beneficial, for instance, when used as entropy sources in random number generators, an employment of most interest in cryptography [

22,

23].

The application in this issue is addressed in the current work, in particular with the statistical description of RTN signals in RRAMs. This study, in addition to physically characterizing the devices, can be used for compact modeling and, therefore, as explained above, for developing the software tools needed for circuit design. Accounting for the aforementioned intrinsic stochasticity of these devices, the choice of a correct statistical strategy to attack this problem is essential in the analysis. In this respect, we use the PH distributions, which have already been employed to depict some facets of RRAM variability [

24]; nonetheless, as far as we know, they have not been used in RTN analysis.

In our study, we deal with devices whose structure is based on the Ni/HfO

2/Si stack. The fabrication details, as well as their electric characterization, are given in [

25]. RTN measurements were recorded in the time domain by means of an HP-4155B semiconductor parameter analyzer (

Figure 1). The current fluctuation between levels (see the marked levels in red in

Figure 1), ΔI, and the spent time in each of the levels are key parameters to analyze. The associated time with the current low level is known as emission time (τ

e), which is linked to the time the active defect keeps a “captured” electron trapped until it releases it (through this time, it is said that the defect is occupied). On the other hand, the capture time (τ

c) represents the time taken to capture an electron by an empty defect.

The manuscript is organized as follows: in

Section 2, the proposed statistical procedure is described; in

Section 3, we deepen on the measures associated; and in

Section 4, the number of visits to a determined macro-state is presented. The parameter estimation is unfolded in

Section 5, and the application of the methodology developed here in

Section 6. Finally, conclusions related to the main contributions of this work are presented in

Section 7.

2. Statistical Methodology

Different RTN signals have been employed here, such as the ones shown in

Figure 1. We have determined the number of current levels in order to be able to calculate the emission and capture times for a certain time interval. The current thresholds (red lines in

Figure 1) were chosen to allow the extraction algorithm to calculate the time intervals corresponding to the levels defined previously.

In order to explain these issues, a new model is first built in transient and stationary regimes.

2.1. The Model

We assume a stochastic process {

X(

t);

t ≥ 0} with macro-state space

. Each macro-state

k, level

k, is composed of

nk internal phases or states denoted as

for

h = 1,…,

nk. We assume that the device internal performance is governed by a Markov process, {

J(

t);

t ≥ 0}, with state space

, and with an initial distribution

θ, and the following generator matrix expressed by blocks:

where the matrix block

Qij contains the transition intensities between the states of the macro-states from

i to

j.

Throughout this work, we will denote as the matrix with zeros except the column matrix block k of Q, and as the matrix Q with zeros in the k-th block column. These matrices give the transition intensities of macro-state k and of any macro-state different to k, respectively.

Thus:

It can be seen that the following equality holds:

Given the Q-matrix of the Markov process, the transition probability matrix for the process {J(t); t ≥ 0} is given by P(t) = exp{Qt}. From this and from the initial distribution θ, the transient distribution at time t is given by (P{X(t) = }, P{X(t) = },…, P{X(t) = }) = a(t) = θ⋅P(t). The order of this vector is .

The transition probabilities for the process {

X(

t);

t ≥ 0} can be obtained from the transition probabilities of the Markov process {

J(

t);

t ≥ 0}. If it is denoted as

H(⋅,⋅) where the transition between macro-states

i→

j is given by:

This transition probability can be expressed in a matrix-algorithmic form as follows. Denoting by

Ak a matrix of zeros with order

except the block corresponding to the macro-state

k, which is the identity matrix, then:

where

a(

t)

Aie is the probability of being in the macro-state

i at time

t, with

e being a column vector of ones with an appropriate order. A similar reasoning can be done with the embedded jumping probability.

Note that the matrix H is a stochastic matrix, and therefore it is a transition matrix of a Markov process. However, an important remark is that the Markov process related to the matrix H does not correspond to the process defined in this section. In fact, this process is non-homogeneous and not Markovian.

2.2. Stationary Distribution

The stationary distribution for the process {X(t); t ≥ 0} can be calculated by blocks from the stationary distribution for the process {J(t); t ≥ 0}. It is well known that this distribution verifies the balance equations and the normalization equation . We denote as the block of corresponding to the macro-state k. It is denoted as e (k = 1,...,r) to the stationary distribution for the macro-state k of the process {X(t); t ≥ 0}.

The stationary distribution has been worked out by blocks in order to reduce the computational cost, according to the macro-states, and applying matrix-analytic methods.

As stated above, the stationary distribution verifies the following system:

It has been solved by using matrix-analytics methods, and the solution is given by

where

for any

i,

j = 1,…,

r with

i ≠

jThe vector

is worked out as follows:

Where, given a matrix

A,

A* is the matrix

A without the last column, and:

Finally, the stationary distribution of the process {

X(

t);

t ≥ 0} is given by

.

| Algorithm to calculate the stationary distribution |

| Step 1. For i, j =1,…, r and i ≠ j |

| Compute |

| Step 2. For j = r−1,…, 2 { |

| For i = 1,…, j { |

| Compute } |

| For i = 1,…, j { |

| Compute (i ≠ j)}} |

| Step 3. Compute and B |

| Step 4. Compute |

| Step 5. Compute and |

| |

| Example. Case r = 4 |

| Step 1. |

| ; ; |

| ; ; |

| ; ; |

| Step 2. |

| ; ; |

| ; |

|

|

|

|

|

|

| Step 3. |

|

| Step 4. |

|

| Step 5. |

| ; ; |

5. Parameter Estimation

The likelihood function when the exact change time between macro-states is known is achieved. For a device

l we observe

ml transition times, denoted as:

where the last time is a complete or a censoring time.

The time corresponds to the transition from to . These macro-states are:.

This device contributes to the likelihood function: likelihood function:

where

is 0 if the last time is a censoring time, and is 1 otherwise.

When

m independent devices are considered:

This function is maximized by considering that Qaa is a square sub-stochastic matrix whose main diagonal is negative and the rest are positive values, and Qab a matrix with positive elements with for any a and b.

Other log-likelihood function is given from the transition probabilities of the process

6. Application of the Developed Methodology

We have made use of measurements of current-time traces in a unipolar RRAM. The RTN signals had an easy pattern, so different features of the device could be studied. Current traces showed, for instance, the number of current levels in the signal, and also the frequency at which each of these levels was active. The developed methodology allows the modeling of the internal states under an approach based on hidden Markov processes that produces the observed output (RTN signal measured). By analyzing the signals shown in

Figure 1, we are able to determine these states and characterize them, probabilistically speaking, in order to understand their nature. It is possible to reproduce similar signals in case they are needed for a circuit simulation or physical characterization of the traps that help to generate the signals.

Specifically, we are going to analyze the behavior of several different current-time traces (RTN25, RTN26, RTN27) that show RTN for the described devices in the introduction. In addition, a long (more than three hours with millions of measured data) RTN current-time trace was measured and used here for the same device. Due to the fact that all signals come from devices with the same characteristics of fabrication, the difference between them lies in the applied voltage that produces the variations of electric current. Naturally, the measurement time for the long RTN was different. These measurements have previously been characterized in different ways [

19,

20]; however, in this new approach, the internal Markov chain that leads to the observed data set was identified, and the model, whose methodology is developed in this work, was estimated. In particular, in this application, it was shown that the short signals (RTN25, RTN26, and RTN27) had a Markovian internal behavior, whereas the developed new methodology was applied to the long RTN trace for its modeling.

6.1. Series RTN25-26-27

As stated above, the signals RTN25, RTN26, and RTN27 were produced by devices whose structure is based on the Ni/HfO2/Si stack. Nevertheless, different voltages were applied: 0.34 volts, 0.35 volts, and 0.36 volts, respectively. On the other hand, the measurement time was similar for each one of these series.

Hidden Markov Models and the Latent Markov chain for RTN25/26/27 traces

To study the number of possible latent levels hidden into the signals, we considered hidden Markov models (HMMs) [

26,

27]. Whereas in simple Markov models, the state of the device is visible to the observer at each time, in HMMs, only the output of the device is visible, while the state that leads to a determined output is hidden. Each state is associated with a set of transition probabilities (one per each state) defining how likely it is for the system, being in a given state at a given instant of time, to switch to another possible state (including a transition to the same state) at the successive instants of time.

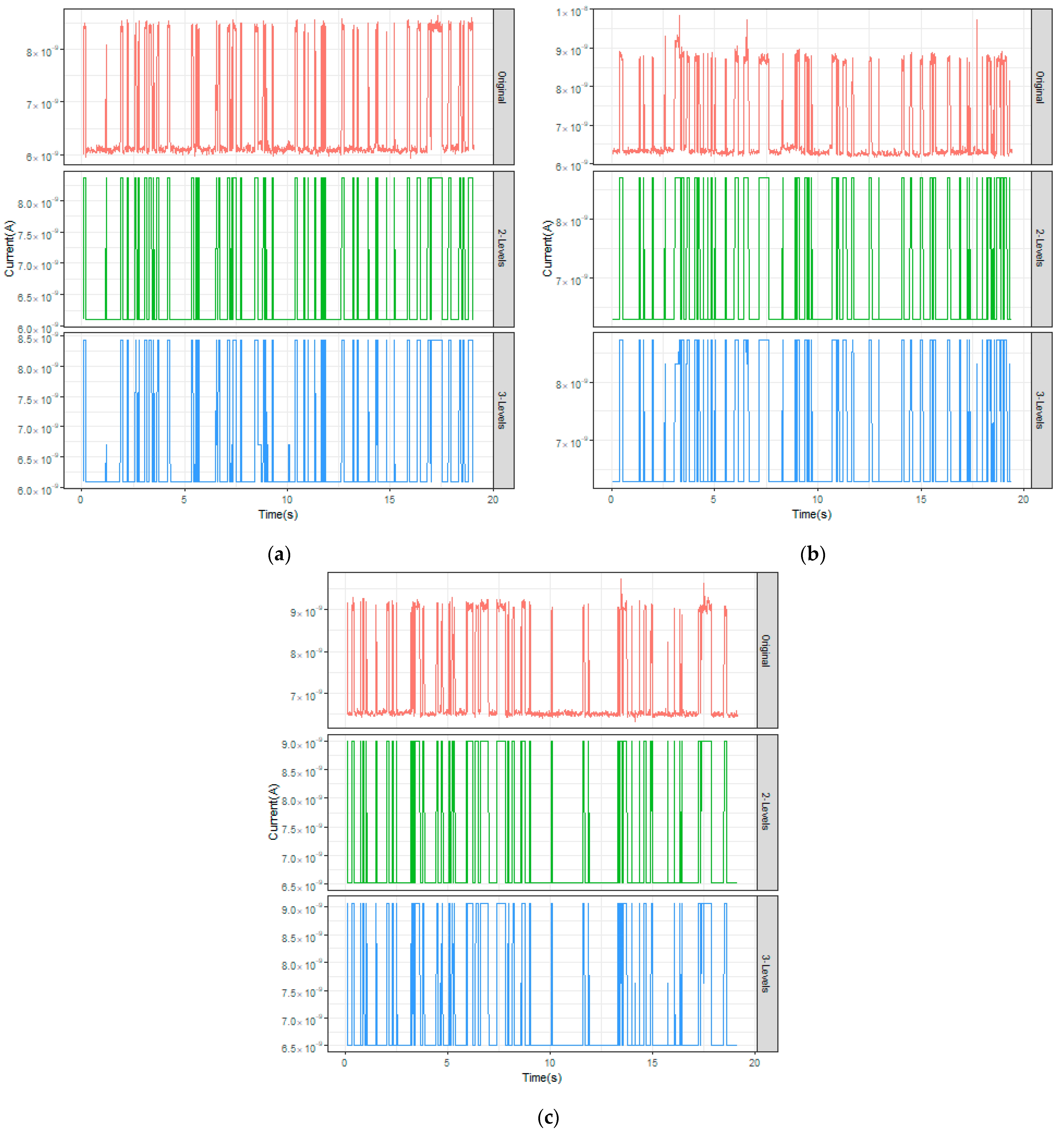

We analyzed different data sets for RTN25, RTN26, RTN27, and the hidden states from the corresponding signals were discriminated. The best fit was achieved for latent levels 2 and 3 for RTN25, RTN26, RTN27.

Figure 2 shows the original observed RTN signals and the corresponding latent levels given by the model for both cases. This analysis was performed using the depmixS4 package of R-cran [

28].

The RTN25/26/27 series was analyzed. The proportional number of times that the chain is in the latent states for the different proposed models is shown in

Table 1.

For each model, the continuous Markov chain associated to the latent states was estimated, and the corresponding stationary distribution was achieved. This is shown in

Table 2.

These values can be interpreted as the proportional time that the device is in each latent state in the stationary regime for the embedded continuous Markov chain. We observed that these values were very close to zero for the RTN25/26/27 devices. We tested this fact, and do not think it can be rejected.

Therefore, we assumed that the internal performance of the devices behaved as a Markov model with two latent states for the RTN25/26/27 traces. The Markov chains for two latent states for the different cases were estimated. The exponentiality of the sojourn time in each state cannot be rejected according to the p-values obtained by means of a Kolmogorov–Smirnov test, and the expected number of visits up to a certain time as explained in

Section 4.1 also was estimated. These estimations are shown in

Table 3.

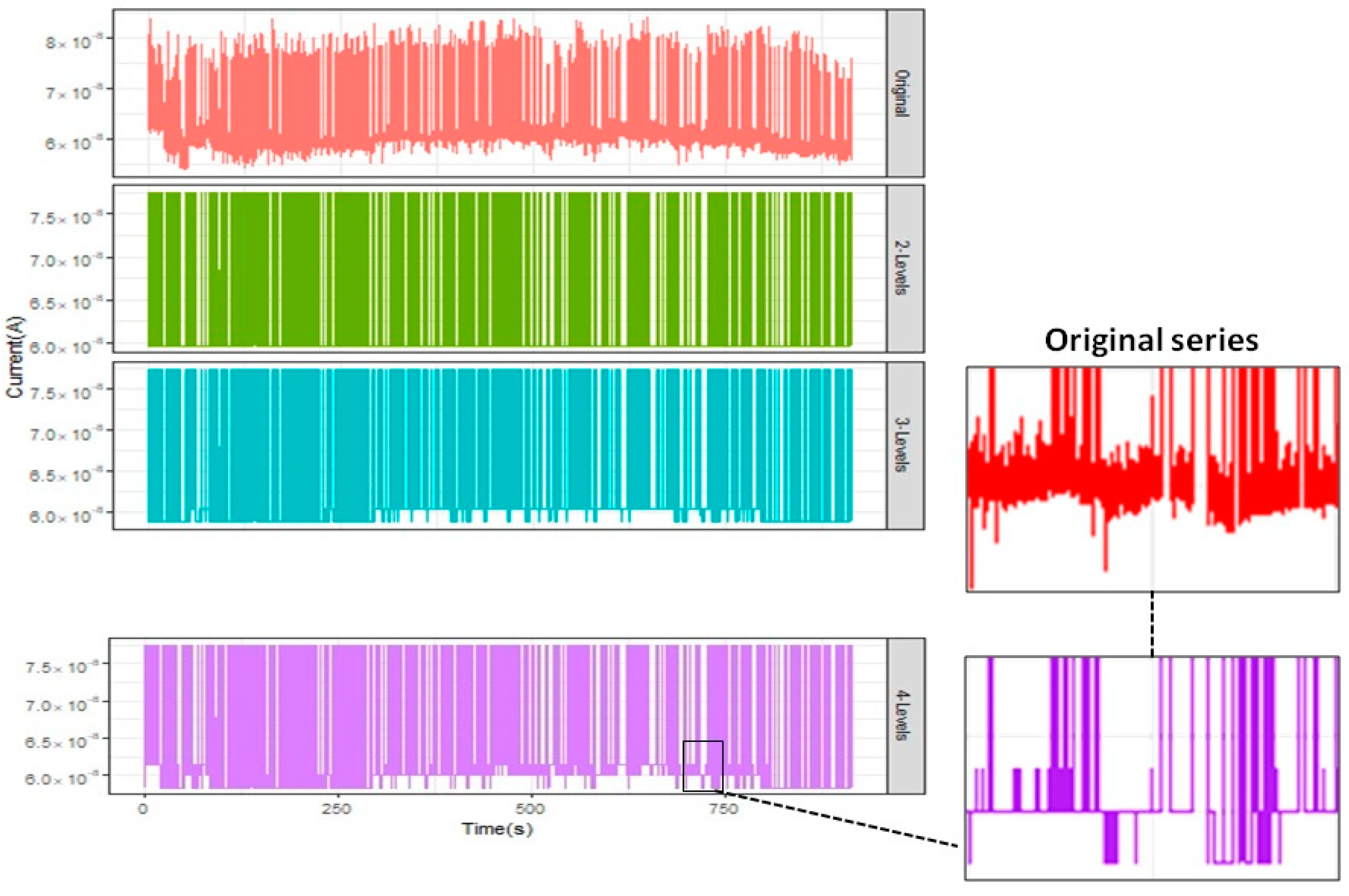

6.2. Long RTN Trace

A similar exploratory analysis for this trace was carried out. This signal was supplied with 0.5 V, and its behavior was measured for more than three hours. To study the number of possible latent levels hidden in the signal, we again considered hidden Markov chains. The best fit was achieved for latent levels 3 and 4 for this long RTN trace.

Figure 3 shows the original observed signal (a time interval of the whole signal was considered for the sake of computing feasibility) and the corresponding latent levels given by the model for both cases. This analysis also was performed using the depmixS4 package of R-cran.

We have focused on the four latent states. If the HMM is considered, the proportional time that the process was in each latent state was 0.3243048, 0.1219429, 0.1635429, and 0.3902095. If more latent states were assumed, negligible proportions would appear. Therefore, four different latent states were assumed. Before applying the new model to the data set, a statistical analysis was performed that considered classical techniques. For these latent states, the exponentiality of the sojourn time was tested and rejected through a Kolmogorov–Smirnov test for any latent state by obtaining the following p-values: 0.00027, 0.0045, 0.0000, and 0.0001, respectively. Next, we studied whether these times could be described as PH distributions. After multiple analyses, the best PH distributions for the latent states had 2, 2, 4, and 3 internal states, respectively. The structures of these PH distributions were generalized Coxian/Erlang distributions. Then, the internal behavior for each latent state passed across multiple internal states in a sequential way, one-by-one. The Anderson–Darling test was applied to test the goodness of fit, and obtained p-values of 0.8972, 0.4405, 0.0752, and 0.9876, respectively, for each latent state.

Generator of the Internal Markov Process

Given the previous analysis, we have observed that the sojourn time distribution in each macro-state (latent state) was phase-type distributed with a sequential degradation (generalized Coxian degradation). That is, the macro-states 1, 2 were composed of two phases (internal states), the macro-state 3 of four phases, and the macro-state 4 of three states. Thus, we assume the sojourn time in a macro-state i (level i) is PH distributed with representation

with the following structure:

| Macro-state 1 | Macro-state 2 |

| |

| Macro-state 3 | Macro-state 4 |

| |

We assume that when the device leaves the macro-state i, it goes to macro-state j with a probability of pij, where pii = 0 and , and the sojourn time in this new macro-state begins with the corresponding initial distribution αj.

Thus, the behavior of the device is governed by a stochastic process {

X(t); t ≥ 0} with an embedded Markov process {

J(

t);

t ≥ 0}, as described in

Section 2.1. The macro-state space is {

1, 2, 3, 4} and the state space

.

In this case, the matrix blocks are

and

for

i,

j = 1,...,

r and

I ≠

j. Therefore, the matrix generator is given by:

The parameters were estimated by considering the likelihood function built in

Section 5. The estimated parameters with a value of the logL = −4066.118120 are:

Therefore, the generator of the Markov process {

J(

t);

t ≥ 0} is:

with initial distribution

.

The stationary distribution was estimated for the process {

X(

t);

t ≥ 0} (given in

Table 4) from

Section 2.2. It can be interpreted as the proportional time in each macro-state (long-run).

Finally, the mean number of visits to each macro-state up to a certain time

t was obtained following

Section 4.1. It is shown in

Table 5.

7. Conclusions

A real problem motivates the construction of a new stochastic process that accounts for the internal performance of different macro-states by considering that it follows an internal Markovian behavior. We have shown that the homogeneity and Markovianity was lost in the developed new macro-state model. The sojourn time in each macro-state was phase-type distributed depending on the initial observed time. The stationary distribution was calculated through matrix-algorithmic methods, and the distribution of the number of visits to a determined macro-state between any two different times was calculated using a Laplace transform. The mean number of visits depending on times was worked out explicitly.

The new developed methodology enables the modeling of complex systems in an algorithmic way by solving classic calculus problems. Thanks to the proposed development, the results and measures were worked out in an easier way and could be interpreted. Matrix analysis and Laplace transform techniques were used to determine the properties of the model, and algorithms to obtain quantitative results were provided. Given that everything was carried out algorithmically, is the model was implemented computationally and was successfully applied to study different random telegraph noise signals measured for unipolar resistive memories.

Resistive memory random telegraph noise signals were analyzed in depth in order to characterize them from a probabilistic point of view. These signals are essential, since they can pose a limit in the performance of certain applications; in addition, this type of noise can be good as an entropy source in the design of random number generators for cryptography. From the proposed model, we showed that a latent state of a resistive memory RTN long signal is composed of multiple internal states. Of course, the applications of a phase-type model, as given in this work, are not restricted to the RRAM context.