Abstract

Confusion matrices are numerical structures that deal with the distribution of errors between different classes or categories in a classification process. From a quality perspective, it is of interest to know whether the confusion between the true class A and the predicted class B is the same as the confusion between the true class B and the predicted class A. If it is not, there may be a problem with the classifier or with the identifiability of the classes. In this paper, two statistical methods are considered to deal with this issue. Both of them focus on the study of the off-diagonal cells in confusion matrices. First, McNemar-type tests of marginal homogeneity are considered, which must be followed by a one versus all study for every category. Second, a Bayesian proposal based on the Dirichlet distribution is introduced, which allows us to assess the probabilities of misclassification in a confusion matrix. Three applications, including one on omic data, have been carried out using the R software.

1. Introduction

Confusion matrices are the standard way of summarizing the performance of a classification method. This is an issue of crucial interest in a variety of applied scientific disciplines, such as geostatistics, data mining, text mining, economics, biomedicine or bioinformatics, to cite only a few. A confusion matrix is obtained by applying control sampling to a dataset to which a classifier has been applied. Provided that the qualitative response to be predicted has r categories, the confusion matrix will be an $r \times r$ matrix, where the rows represent the actual or reference classes and the columns the predicted classes (or vice versa). Thus, the diagonal elements correspond to the items correctly classified, and the off-diagonal elements to the misclassified ones. If a classifier is fair or unbiased, then the classification errors between two given categories A and B must happen randomly; that is, they are expected to occur with approximately the same relative frequency in each direction. Quite often this is not the case, and a systematic error occurs in one direction; that is, the observed value in a cell is considerably greater (or smaller) than that of its symmetric cell in the confusion matrix. In this paper, by classification bias we mean this kind of systematic error, which happens between categories in a specific direction. As for the mechanism causing it, we distinguish:

1. The classification bias can be due to deficiencies in the classification method. For instance, it is well known [1] that an inappropriate choice of k in the k-nearest neighbor (k-nn) classifier may produce this effect. If such a bias is detected, the method for selecting k must be revised;
2. On the other hand, the classification bias may be caused by a unidirectional confusion between two or more categories, that is, the classes under consideration are not well separated. If such a bias is detected, additional predictors that help to distinguish between these specific classes may need to be incorporated into the classification process. Think, for instance, of a land-use classification problem and two given categories, such as water and rice: the probability of confusing water with rice is not the same as that of confusing rice with water.

For all the aforementioned reasons, we consider that it is of interest to pay attention to the structure of misclassifications. In this paper, marginal homogeneity tests are first proposed to identify this problem globally. These are based on the Stuart–Maxwell test [2] and the Bhapkar test [3]. If marginal homogeneity is rejected, a One versus All methodology [4] is proposed, in which McNemar tests are carried out for every class against the rest. Since in this context prior information is quite often available, and it should be incorporated into the estimation process [5], a Bayesian method based on the Dirichlet-multinomial distribution is developed to estimate the probabilities of confusion between the classes previously detected. To illustrate the use of our proposal, three applications are considered. Application 1 corresponds to the field of geostatistics [6]. There, a $4 \times 4$ matrix is considered and studied in detail. Classification bias is detected in two categories. Bayesian estimates of the probabilities of overprediction and underprediction in these categories are given, along with other Bayesian summaries. Application 2 corresponds to a classification problem in text mining, specifically literary genres [7]. In spite of the relatively large number of categories, our strategy allowed us to detect classification bias in several categories and to estimate the associated probabilities [8]. Finally, in Application 3, a really difficult problem of diagnosis of Inflammatory Bowel Disease based on omic data is considered [9]. In this case, $r = 3$, which, as a novelty, allows us to visualize the posterior distributions associated with the different classes. We highlight that a serious problem of overprediction of Crohn's disease has been detected and estimated.
As for recent works dealing with this topic in confusion matrices, we highlight that most papers focus on the assessment of the overall accuracy of the classification process and the kappa coefficient, and on methods to improve these measurements; see, for instance, [6,10,11] and references therein. Areas in which classification bias and its associated problems are of interest can be found in [1,12,13,14,15]. However, only a few papers consider the study of the off-diagonal cells in a confusion matrix. In this sense, the paper by Tsendbazar et al. [16] can be cited, where similarity matrices between classes are proposed as weights for the computation of global accuracy measurements. On the other hand, the problem of inference with misclassified multinomial data from a Bayesian point of view is addressed in [17]. All these references show that this topic is of interest for a better definition of classes and for the improvement of the global classification process. The statistical tools proposed here can be used for a better understanding of the information in a confusion matrix. As computational tools, the R software [18] and packages [19,20,21] have been used.

It is of interest to highlight that the results proposed in this paper can be considered as a new metric to be applied to multi-class classification problems in machine learning [22]. In this sense, the first technique introduced in this paper, that is, marginal homogeneity tests, can be used to detect systematic problems of a classifier. On the other hand, the second one, based on a Bayesian analysis of a confusion matrix, can be used as a micro technique which allows us to compare several classifiers. As a novelty, we propose measurements to assess the performance of a classifier along with summaries of the variability of these measurements, which is not usual in machine learning.

2. Materials and Methods

In this section, we propose considering a confusion matrix (or error matrix) as a table of paired observations, so that statistical tools for paired categorical data can be applied to it.

Let Y and Z be two categorical variables with r categories, where Y denotes the reference (or actual) category and Z the predicted class. As a result of the classification process, the confusion matrix given in Table 1 is obtained, where $n_{ij}$ denotes the number of observations in cell $(i, j)$, for $i, j = 1, \dots, r$.

Table 1.

Confusion matrix.

The accuracy of Table 1 is

$$\text{Accuracy} = \frac{1}{n} \sum_{i=1}^{r} n_{ii},$$

where $n = \sum_{i=1}^{r} \sum_{j=1}^{r} n_{ij}$ equals the total number of elements in the table; that is, the accuracy is the proportion of items correctly classified. Other common global measurements of the performance of a classifier are the kappa index, sensitivity, specificity, the Matthews correlation coefficient, the F1-score and, for $2 \times 2$ tables, the area under the ROC curve (AUC) [6]. All of them are global measurements that focus mainly on the proportion of items correctly classified and do not pay attention to the structure of the off-diagonal elements.

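Let us introduce the notation needed to address the problem at hand. Let $p_{ij} = P(Y = i, Z = j)$ denote the probability that an observation falls in the cell corresponding to the $i$th row and the $j$th column, $i, j = 1, \dots, r$. Then $\{p_{ij}\}$ is the joint probability mass function (pmf) of $(Y, Z)$.

The marginal pmfs of Y and Z, denoted as $\{p_{i+}\}$ and $\{p_{+j}\}$, respectively, are obtained as:

$$p_{i+} = P(Y = i) = \sum_{j=1}^{r} p_{ij}, \qquad p_{+j} = P(Z = j) = \sum_{i=1}^{r} p_{ij},$$

where $i, j = 1, \dots, r$. The marginal pmfs $\{p_{i+}\}$ and $\{p_{+j}\}$ will be the basis on which to propose marginal homogeneity tests.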
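As an illustration of the quantities just defined, the following minimal Python sketch (standard library only; the authors worked in R) estimates the accuracy and the two marginal pmfs by the sample proportions $n_{ij}/n$. The function name `summarize` and the $3 \times 3$ table are hypothetical and are not taken from the paper.

```python
from typing import List, Tuple

Matrix = List[List[int]]


def summarize(counts: Matrix) -> Tuple[float, List[float], List[float]]:
    """Return (accuracy, row marginals, column marginals) of a confusion matrix.

    Rows are reference classes (Y) and columns are predicted classes (Z);
    the marginals are the sample estimates of p_{i+} and p_{+j}.
    """
    r = len(counts)
    n = sum(sum(row) for row in counts)
    accuracy = sum(counts[i][i] for i in range(r)) / n
    row_marginals = [sum(counts[i]) / n for i in range(r)]
    col_marginals = [sum(counts[i][j] for i in range(r)) / n for j in range(r)]
    return accuracy, row_marginals, col_marginals


if __name__ == "__main__":
    # Hypothetical 3 x 3 confusion matrix (rows: actual, columns: predicted).
    table = [[40, 5, 5],
             [10, 30, 10],
             [2, 3, 45]]
    acc, rows, cols = summarize(table)
    print(f"accuracy = {acc:.4f}")       # 115 / 150
    print("row marginals:", rows)
    print("column marginals:", cols)
```

In this hypothetical table every row marginal equals 1/3, while the column marginals are 52/150, 38/150 and 60/150: a first, informal sign of the lack of marginal homogeneity that the tests in Section 3 formalize.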

3. Marginal Homogeneity

Taking into account that the cells in a confusion matrix can be seen as data for matched pairs of classes, we propose to test whether marginal homogeneity can be assumed between the rows and columns of this matrix, which is equivalent to testing whether the row and column probabilities agree for all the categories, that is:

$$H_0: p_{s+} = p_{+s}, \quad s = 1, \dots, r. \tag{1}$$

Note that (1) states that the proportion of items classified in the $s$th class agrees with the proportion of actual or reference items in this class. If this agreement holds for all the categories, this suggests that there are no systematic problems of classification (or classification bias) in our confusion matrix. This is the main idea on which our proposal is built.

3.1. 2 × 2 Table

Let us first introduce the method for a $2 \times 2$ confusion matrix. Here, we propose to apply the McNemar-type test [3], tailored to this context. So, for $r = 2$, let us consider:

$$H_0: p_{1+} = p_{+1} \quad \text{vs.} \quad H_1: p_{1+} \neq p_{+1}. \tag{2}$$

Note that, in a classification problem, one of the variables refers to the actual category and the other one to the predicted class; so, in this context, the null hypothesis establishes that, for each class $i = 1, 2$, the probability that class $i$ is predicted equals the proportion of actual elements in class $i$. This agreement suggests that the classifier does not systematically favour either class. On the other hand, the alternative hypothesis establishes that these probabilities differ. Therefore, if the null hypothesis is rejected, it can be concluded that there exists significant evidence of problems with this category. Nevertheless, we want to highlight that the emphasis must be on the method, since this test allows us to focus on the probabilities associated with the off-diagonal elements of a confusion matrix, that is, the probabilities of the misclassified elements, since (2) is equivalent to:

$$H_0: p_{12} = p_{21} \quad \text{vs.} \quad H_1: p_{12} \neq p_{21}. \tag{3}$$

To prove the equivalence between (2) and (3), it is enough to note that

$$p_{1+} - p_{+1} = (p_{11} + p_{12}) - (p_{11} + p_{21}) = p_{12} - p_{21}, \tag{4}$$

and therefore (2) can be reduced to (3).

Test (3) can be solved following an exact approach, based on the binomial test, or an asymptotic one, based on chi-squared type statistics.

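Binomial approach. Let us consider the number of misclassifications, $n^{*} = n_{12} + n_{21}$, and a new variable C defined, for each misclassified observation, as:

$$C = \begin{cases} 1, & \text{if the observation falls in cell } (1, 2),\\ 0, & \text{if the observation falls in cell } (2, 1). \end{cases} \tag{5}$$

C is a Bernoulli variable with success probability

$$\pi = P(C = 1) = \frac{p_{12}}{p_{12} + p_{21}}.$$

The test given in (3) is equivalent to:

$$H_0: \pi = \tfrac{1}{2} \quad \text{vs.} \quad H_1: \pi \neq \tfrac{1}{2}. \tag{6}$$

Let the statistic $T = n_{12}$ be the number of misclassified observations in cell $(1, 2)$. Under the null hypothesis in (6), and conditionally on $n^{*}$, T follows a binomial distribution, $T \sim B(n^{*}, 1/2)$. Therefore, the binomial test can be applied. Recall that the p-value of the test in (6) is

$$\text{p-value} = \min\left\{1,\; 2 \min\{P(T \le t),\, P(T \ge t)\}\right\},$$

where t is the observed value of T.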
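A point of practical interest is that the exact approach allows us to carry out one-sided tests, which can also be solved with the binomial test. The one-sided tests are

$$H_0: p_{12} \ge p_{21} \;\text{vs.}\; H_1: p_{12} < p_{21} \qquad \text{and} \qquad H_0: p_{12} \le p_{21} \;\text{vs.}\; H_1: p_{12} > p_{21}. \tag{7}$$

In terms of the variable C introduced in (5), the one-sided tests in (7) are equivalent to:

$$H_0: \pi \ge \tfrac{1}{2} \;\text{vs.}\; H_1: \pi < \tfrac{1}{2} \qquad \text{and} \qquad H_0: \pi \le \tfrac{1}{2} \;\text{vs.}\; H_1: \pi > \tfrac{1}{2},$$

respectively, with p-values $P(T \le t)$ and $P(T \ge t)$, where $T \sim B(n^{*}, 1/2)$. The interest of these one-sided tests will become clear in the practical applications.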
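The exact test reduces to binomial tail probabilities with success probability 1/2. The following Python sketch is not the authors' R code; `exact_mcnemar` and `binom_half_tail` are hypothetical names, and the counts are invented. It computes the two-sided p-value of (6) and the one-sided p-values for the two alternatives in (7), using exact integer arithmetic.

```python
from math import comb
from typing import Dict


def binom_half_tail(m: int, lo: int, hi: int) -> float:
    """P(lo <= T <= hi) for T ~ B(m, 1/2), computed exactly with integers."""
    lo, hi = max(lo, 0), min(hi, m)
    if lo > hi:
        return 0.0
    return sum(comb(m, k) for k in range(lo, hi + 1)) / 2 ** m


def exact_mcnemar(n12: int, n21: int) -> Dict[str, float]:
    """Exact (binomial) McNemar test on the off-diagonal cells of a 2 x 2 table.

    Conditionally on m = n12 + n21 misclassifications, T = n12 ~ B(m, 1/2)
    under H0: p12 = p21.
    """
    m = n12 + n21
    p_less = binom_half_tail(m, 0, n12)      # H1: p12 < p21, p-value P(T <= t)
    p_greater = binom_half_tail(m, n12, m)   # H1: p12 > p21, p-value P(T >= t)
    p_two_sided = min(1.0, 2.0 * min(p_less, p_greater))
    return {"two_sided": p_two_sided, "less": p_less, "greater": p_greater}


if __name__ == "__main__":
    # Hypothetical off-diagonal counts: 2 in cell (1, 2) and 10 in cell (2, 1).
    print(exact_mcnemar(2, 10))
```

With these hypothetical counts the one-sided p-value for $H_1: p_{12} < p_{21}$ is $79/4096 \approx 0.019$ and the two-sided one is about 0.039, so the direction of the imbalance, and not only its existence, is supported at the 5% level.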

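Asymptotic approach. Under this approach [23], the following statistic can be considered to solve (3):

$$M = \frac{(n_{12} - n_{21})^2}{n_{12} + n_{21}},$$

or the statistic with continuity correction proposed by Edwards [24]

$$M_c = \frac{(|n_{12} - n_{21}| - 1)^2}{n_{12} + n_{21}}.$$

In both cases, under $H_0$ the statistic is asymptotically distributed as $\chi^2_1$, and $\text{p-value} = P(\chi^2_1 \ge m)$, where m is the result of applying M (or $M_c$) to our observed confusion matrix.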
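A minimal Python sketch of the asymptotic version, assuming only the standard library (the function names are hypothetical and the counts invented): it computes M or Edwards' $M_c$ exactly as written above and obtains the $\chi^2_1$ upper tail from the identity $P(Z^2 \ge x) = \operatorname{erfc}(\sqrt{x/2})$ for a standard normal Z.

```python
from math import erfc, sqrt
from typing import Tuple


def chi2_1_sf(x: float) -> float:
    """P(chi^2_1 >= x): if Z ~ N(0, 1), then P(Z**2 >= x) = erfc(sqrt(x / 2))."""
    return erfc(sqrt(x / 2.0))


def asymptotic_mcnemar(n12: int, n21: int, correction: bool = False) -> Tuple[float, float]:
    """Return (statistic, p-value) for M or, if correction=True, Edwards' M_c."""
    m = n12 + n21
    if m == 0:
        return 0.0, 1.0            # no misclassifications: nothing to test
    diff = abs(n12 - n21)
    if correction:
        diff -= 1                  # (|n12 - n21| - 1)^2, as in M_c
    stat = diff ** 2 / m
    return stat, chi2_1_sf(stat)


if __name__ == "__main__":
    # Hypothetical off-diagonal counts: n12 = 2, n21 = 10.
    print(asymptotic_mcnemar(2, 10))                   # M   = 64 / 12
    print(asymptotic_mcnemar(2, 10, correction=True))  # M_c = 49 / 12
```

For these counts M gives a p-value of about 0.021 and $M_c$ about 0.043; the continuity correction makes the asymptotic test more conservative, closer to the exact binomial result for small numbers of misclassifications.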

3.2. General Case

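For an $r \times r$ confusion matrix resulting from a multi-class classifier, $r > 2$, the Stuart–Maxwell test [3], also known as the generalized McNemar test, can be considered. This test is aimed at finding evidence of significant differences between the actual and predicted probabilities in any of the categories, specifically

$$H_0: p_{i+} = p_{+i}, \; i = 1, \dots, r \quad \text{vs.} \quad H_1: p_{i+} \neq p_{+i} \text{ for some } i.$$

This test is based on the paired differences $d_i = n_{i+} - n_{+i}$, where $n_{i+} = \sum_{j} n_{ij}$, $n_{+i} = \sum_{j} n_{ji}$ and $i = 1, \dots, r - 1$. Note that $d_r$ is omitted since $d_r = -\sum_{i=1}^{r-1} d_i$, as a result of $\sum_{i} n_{i+} = \sum_{i} n_{+i} = n$. Under the null hypothesis of marginal homogeneity, $E(d_i) = 0$, and it was proven in [3] that the statistic

$$W_0 = \mathbf{d}^{\top} \hat{V}^{-1} \mathbf{d} \tag{10}$$

is asymptotically distributed as a chi-square variable with $r - 1$ degrees of freedom.

In (10), $\mathbf{d} = (d_1, \dots, d_{r-1})^{\top}$ and $\hat{V}$ is the estimated covariance matrix of $\mathbf{d}$, whose elements are given by

$$\hat{v}_{ii} = n_{i+} + n_{+i} - 2 n_{ii}, \qquad \hat{v}_{ij} = -(n_{ij} + n_{ji}), \quad i \neq j.$$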

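A similar test was proposed by Bhapkar [3], based on the statistic

$$B = \mathbf{d}^{\top} \hat{S}^{-1} \mathbf{d},$$

where the elements of $\hat{S}$ are estimated by

$$\hat{s}_{ii} = n_{i+} + n_{+i} - 2 n_{ii} - \frac{d_i^2}{n}, \qquad \hat{s}_{ij} = -(n_{ij} + n_{ji}) - \frac{d_i d_j}{n}, \quad i \neq j.$$

Both statistics are related via

$$B = \frac{W_0}{1 - W_0 / n},$$

and therefore they are asymptotically equivalent (note that $B \ge W_0$).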
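The following stdlib-only Python sketch (not the R code used by the authors; all names and the table are hypothetical) computes $W_0$ with $\hat V$ as in (10), Bhapkar's $B$ with $\hat S = \hat V - \mathbf{d}\mathbf{d}^{\top}/n$, and chi-square p-values with $r - 1$ degrees of freedom via the standard recurrence for the regularized upper incomplete gamma function. It assumes $\hat V$ is nonsingular; `solve` fails otherwise, for example when some category has no misclassifications in either direction.

```python
from math import erfc, exp, gamma, sqrt
from typing import List, Tuple


def chi2_sf(x: float, df: int) -> float:
    """P(chi^2_df >= x) for integer df >= 1, via Q(s+1, y) = Q(s, y) + y^s e^-y / Gamma(s+1)."""
    y = x / 2.0
    s, q = (1.0, exp(-y)) if df % 2 == 0 else (0.5, erfc(sqrt(y)))
    while s < df / 2.0:
        q += y ** s * exp(-y) / gamma(s + 1.0)
        s += 1.0
    return q


def solve(a: List[List[float]], b: List[float]) -> List[float]:
    """Solve a x = b by Gaussian elimination with partial pivoting."""
    m = len(b)
    aug = [list(map(float, a[i])) + [float(b[i])] for i in range(m)]
    for c in range(m):
        piv = max(range(c, m), key=lambda i: abs(aug[i][c]))
        aug[c], aug[piv] = aug[piv], aug[c]
        for i in range(c + 1, m):
            f = aug[i][c] / aug[c][c]
            for j in range(c, m + 1):
                aug[i][j] -= f * aug[c][j]
    x = [0.0] * m
    for i in reversed(range(m)):
        x[i] = (aug[i][m] - sum(aug[i][j] * x[j] for j in range(i + 1, m))) / aug[i][i]
    return x


def quad_form(mat: List[List[float]], d: List[float]) -> float:
    """d^T mat^{-1} d, computed without forming the inverse."""
    return sum(di * xi for di, xi in zip(d, solve(mat, d)))


def stuart_maxwell_bhapkar(counts: List[List[int]]) -> Tuple[float, float, float, float]:
    """Return (W0, p-value, B, p-value) for an r x r confusion matrix, df = r - 1."""
    r = len(counts)
    n = sum(map(sum, counts))
    row = [sum(counts[i]) for i in range(r)]
    col = [sum(counts[i][j] for i in range(r)) for j in range(r)]
    k = r - 1                                   # d_r is dropped
    d = [row[i] - col[i] for i in range(k)]
    v = [[row[i] + col[i] - 2 * counts[i][i] if i == j
          else -(counts[i][j] + counts[j][i]) for j in range(k)] for i in range(k)]
    s = [[v[i][j] - d[i] * d[j] / n for j in range(k)] for i in range(k)]
    w0, b = quad_form(v, d), quad_form(s, d)
    return w0, chi2_sf(w0, k), b, chi2_sf(b, k)


if __name__ == "__main__":
    table = [[40, 5, 5], [10, 30, 10], [2, 3, 45]]   # hypothetical, n = 150
    w0, p_w, b, p_b = stuart_maxwell_bhapkar(table)
    print(w0, p_w, b, p_b)
    print(b, w0 / (1 - w0 / 150))                    # the two values agree
```

For this hypothetical table, $W_0 = 2560/391 \approx 6.55$ and $B \approx 6.85$ on 2 degrees of freedom, and the last line checks numerically the relation between the two statistics.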

3.3. Post-Hoc Analysis

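If the null hypothesis is rejected in the previous tests, we do not know which particular differences between the probabilities of the categories are significant. Our proposal is to use post hoc tests to explore which categories are significantly different while controlling the experiment-wise error rate. To this end, a One versus All approach is proposed. Specifically, for the $i$th category, with $i = 1, \dots, r$, let us consider:

$$H_0: p_{i+} = p_{+i} \quad \text{vs.} \quad H_1: p_{i+} \neq p_{+i}. \tag{11}$$

Similarly to (4), note that:

$$p_{i+} - p_{+i} = \Big(p_{ii} + \sum_{j \neq i} p_{ij}\Big) - \Big(p_{ii} + \sum_{j \neq i} p_{ji}\Big) = \sum_{j \neq i} p_{ij} - \sum_{j \neq i} p_{ji}.$$

Therefore (11) is equivalent to testing:

$$H_0: \sum_{j \neq i} p_{ij} = \sum_{j \neq i} p_{ji} \quad \text{vs.} \quad H_1: \sum_{j \neq i} p_{ij} \neq \sum_{j \neq i} p_{ji}. \tag{12}$$

Note that $H_0$ states that the proportion of elements belonging to the $i$th class ($Y = i$) that are classified into other classes ($Z \neq i$) must agree with the proportion of elements that belong to the remaining classes ($Y \neq i$) and have been misclassified into the $i$th category ($Z = i$).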

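To carry out the test proposed in (12), consider the $2 \times 2$ confusion submatrix in Table 2, obtained by collapsing all the categories other than the $i$th one: its rows correspond to $Y = i$ and $Y \neq i$, and its columns to $Z = i$ and $Z \neq i$, so that its off-diagonal cells contain $\sum_{j \neq i} n_{ij}$ and $\sum_{j \neq i} n_{ji}$.

The McNemar test, given in Section 3.1, can be applied to Table 2 with test statistic $M_i$, computed from these two off-diagonal cells, which under the null hypothesis in (12) is asymptotically distributed as $\chi^2_1$. We highlight that one-sided tests can also be carried out straightforwardly by applying the results in Section 3.1, which allows us to draw conclusions about the specific problems with the categories under consideration.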

Table 2.

Table 2 × 2.
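A minimal Python sketch of the One versus All step, assuming the asymptotic McNemar statistic without continuity correction and a Bonferroni level $\alpha / r$ (both are illustrative choices; the authors' R implementation may differ). The helper names and the table are hypothetical. The direction label only reports which off-diagonal cell of the collapsed table is larger; a formal directional conclusion uses the one-sided tests of Section 3.1.

```python
from math import erfc, sqrt
from typing import List, Tuple


def collapse(counts: List[List[int]], i: int) -> List[List[int]]:
    """2 x 2 table for class i versus the rest (rows: Y = i, Y != i; cols: Z = i, Z != i)."""
    n = sum(map(sum, counts))
    a = counts[i][i]
    b = sum(counts[i]) - a                      # Y = i,  Z != i
    c = sum(row[i] for row in counts) - a       # Y != i, Z = i
    return [[a, b], [c, n - a - b - c]]


def one_vs_all(counts: List[List[int]], alpha: float = 0.05) -> List[Tuple[int, float, float, bool, str]]:
    """McNemar test of each class against the rest, at the Bonferroni level alpha / r."""
    r = len(counts)
    results = []
    for i in range(r):
        (_, b), (c, _) = collapse(counts, i)
        stat = (b - c) ** 2 / (b + c) if b + c > 0 else 0.0
        p_value = erfc(sqrt(stat / 2.0))        # P(chi^2_1 >= stat)
        if c > b:
            direction = "over"      # more items predicted as i than actually in i
        elif b > c:
            direction = "under"     # more items of class i predicted elsewhere
        else:
            direction = "balanced"
        results.append((i, stat, p_value, p_value < alpha / r, direction))
    return results


if __name__ == "__main__":
    table = [[40, 5, 5], [10, 30, 10], [2, 3, 45]]   # hypothetical
    for result in one_vs_all(table):
        print(result)
```

In this hypothetical table the second and third classes would be flagged at an unadjusted 5% level (p-values about 0.023 and 0.025), but neither reaches the Bonferroni level $0.05/3$, which illustrates why the multiple-comparison correction matters.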

4. Bayesian Methodology

In this section, a Bayesian approach, based on the multinomial-Dirichlet model, is proposed to estimate the probabilities of misclassification in the confusion matrix.

Definition 1

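(Multinomial distribution). Let r and n be positive integers and let $p_1, \dots, p_r$ be numbers satisfying $0 \le p_j \le 1$, $j = 1, \dots, r$, and $\sum_{j=1}^{r} p_j = 1$. The discrete random vector $\mathbf{X} = (X_1, \dots, X_r)$ follows a multinomial distribution with n trials and cell probabilities $p_1, \dots, p_r$ if the joint probability mass function (pmf) of $\mathbf{X}$ is:

$$P(X_1 = x_1, \dots, X_r = x_r) = \frac{n!}{x_1! \cdots x_r!} \, p_1^{x_1} \cdots p_r^{x_r} \tag{13}$$

on the set of $(x_1, \dots, x_r)$ such that each $x_j$ is a nonnegative integer and $\sum_{j=1}^{r} x_j = n$. (13) is denoted as $\mathbf{X} \sim \mathcal{M}(n; p_1, \dots, p_r)$.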

Recall that the multinomial distribution is used to describe an experiment consisting of n independent trials, where each trial results in one of r mutually exclusive outcomes. The probability of the $j$th outcome on every trial is $p_j$. For $j = 1, \dots, r$, $X_j$ is the number of times the $j$th outcome occurred in the n trials. Some properties of interest for our purposes are listed in the next lemma; additional details can be found in [25].

Lemma 1.

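Let $\mathbf{X} \sim \mathcal{M}(n; p_1, \dots, p_r)$ with $r \ge 2$. Then,

1. The marginal distributions are binomial, $X_j \sim B(n, p_j)$, $j = 1, \dots, r$.
2. $E(X_j) = n p_j$ and $\operatorname{Var}(X_j) = n p_j (1 - p_j)$, $j = 1, \dots, r$.
3. $\operatorname{Cov}(X_i, X_j) = -n p_i p_j$, $i \neq j$.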

Remark 1.

In the multinomial distribution, all the coordinates of the vector are related, since their sum must be n. As a result, all the pairwise covariances are negative, $\operatorname{Cov}(X_i, X_j) = -n p_i p_j < 0$, $i \neq j$. Moreover, the negative correlation, $\rho(X_i, X_j) = -\sqrt{p_i p_j / ((1 - p_i)(1 - p_j))}$, is stronger for variables with higher success probabilities. This makes sense: since the sum of the variables in the vector is constrained to n, if one of them becomes large, the others tend to become small. These observations will be of interest in our applications.
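To make Remark 1 concrete, the following Python sketch (hypothetical names and probabilities, standard library only) builds the multinomial covariance matrix from Lemma 1 and the implied correlation. It shows that the correlation does not depend on n, that it becomes more negative as the cell probabilities grow, and that each row of the covariance matrix sums to zero because the counts add up to n.

```python
from math import sqrt
from typing import List


def multinomial_cov(n: int, p: List[float]) -> List[List[float]]:
    """Covariance matrix of a multinomial vector: n p_j (1 - p_j) on the diagonal, -n p_i p_j elsewhere."""
    r = len(p)
    return [[n * p[i] * (1 - p[i]) if i == j else -n * p[i] * p[j]
             for j in range(r)] for i in range(r)]


def multinomial_corr(p: List[float], i: int, j: int) -> float:
    """Correlation between X_i and X_j (i != j, 0 < p_i, p_j < 1); it does not depend on n."""
    return -sqrt(p[i] * p[j] / ((1 - p[i]) * (1 - p[j])))


if __name__ == "__main__":
    p = [0.1, 0.2, 0.7]                   # hypothetical cell probabilities
    print(multinomial_corr(p, 0, 1))      # weak: both probabilities are small
    print(multinomial_corr(p, 1, 2))      # strong: p_3 = 0.7 is large
    # Each row of the covariance matrix sums to zero, because X_1 + ... + X_r = n.
    print([sum(row) for row in multinomial_cov(100, p)])
```

Here the correlation between the first two counts is $-1/6$, whereas between the second and third it is about $-0.76$.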

Next, the Dirichlet distribution is introduced. Recall that this model is the conjugate prior of the multinomial distribution [26].

Definition 2.

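Let $\boldsymbol{\theta} = (\theta_1, \dots, \theta_r)$ belong to the $(r-1)$-simplex, that is, $\theta_j \ge 0$, $j = 1, \dots, r$, and $\sum_{j=1}^{r} \theta_j = 1$. The random vector $\boldsymbol{\theta}$ follows a Dirichlet distribution with parameters $\alpha_1, \dots, \alpha_r$, $\alpha_j > 0$, if the joint probability density function (pdf) of $\boldsymbol{\theta}$ is

$$f(\boldsymbol{\theta}) = \frac{\Gamma(\alpha_0)}{\prod_{j=1}^{r} \Gamma(\alpha_j)} \prod_{j=1}^{r} \theta_j^{\alpha_j - 1}, \qquad \alpha_0 = \sum_{j=1}^{r} \alpha_j, \tag{14}$$

where $\Gamma(\cdot)$ is the gamma function. (14) is denoted as $\boldsymbol{\theta} \sim \mathcal{D}(\alpha_1, \dots, \alpha_r)$.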

Lemma 2.

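Let $\boldsymbol{\theta} \sim \mathcal{D}(\alpha_1, \dots, \alpha_r)$ and $\alpha_0 = \sum_{j=1}^{r} \alpha_j$. If $\alpha_j > 1$, $j = 1, \dots, r$, then the mode of $\boldsymbol{\theta}$ is reached at

$$\theta_j^{*} = \frac{\alpha_j - 1}{\alpha_0 - r}, \quad j = 1, \dots, r.$$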

Lemma 3.

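Let $\boldsymbol{\theta} \sim \mathcal{D}(\alpha_1, \dots, \alpha_r)$ and $\alpha_0 = \sum_{j=1}^{r} \alpha_j$. Then

1. The marginal distributions are Beta distributed, $\theta_j \sim \mathcal{B}e(\alpha_j, \alpha_0 - \alpha_j)$, $j = 1, \dots, r$.
2. The marginal means and variances are

$$E(\theta_j) = \frac{\alpha_j}{\alpha_0}, \qquad \operatorname{Var}(\theta_j) = \frac{\alpha_j (\alpha_0 - \alpha_j)}{\alpha_0^2 (\alpha_0 + 1)}, \quad j = 1, \dots, r. \tag{16}$$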
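Lemmas 2 and 3 give closed forms for the summaries reported in the applications. A minimal Python sketch under these formulas (the function name and parameters are hypothetical, not from the paper):

```python
from math import sqrt
from typing import Dict, List, Optional


def dirichlet_summary(alpha: List[float]) -> Dict[str, Optional[List[float]]]:
    """Marginal mean, variance and SD (Lemma 3) and the mode (Lemma 2) of D(alpha)."""
    r = len(alpha)
    a0 = sum(alpha)
    mean = [a / a0 for a in alpha]
    var = [a * (a0 - a) / (a0 ** 2 * (a0 + 1)) for a in alpha]
    sd = [sqrt(v) for v in var]
    # Lemma 2 requires alpha_j > 1 for every j; otherwise no interior mode is returned.
    mode = [(a - 1) / (a0 - r) for a in alpha] if all(a > 1 for a in alpha) else None
    return {"mean": mean, "var": var, "sd": sd, "mode": mode}


if __name__ == "__main__":
    print(dirichlet_summary([5.0, 3.0, 2.0]))   # hypothetical parameters
```

For $\mathcal{D}(5, 3, 2)$ the means are $(0.5, 0.3, 0.2)$ and the mode is $(4/7, 2/7, 1/7)$; the mode lies farther from the centre of the simplex than the mean, a difference that shrinks as $\alpha_0$ grows.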

The Dirichlet-multinomial model can be applied to a confusion matrix as follows. Note that, in the confusion matrix defined in Table 1, the number of elements in the $k$th row, denoted as $n_{k+}$, is fixed (since the rows are the actual or reference categories). Our proposal is to treat every row as a multinomial distribution with $n_{k+}$ trials and r possible outcomes (the r classes into which the elements can be classified), whose probabilities are denoted as $\boldsymbol{\theta}_k = (\theta_{k1}, \dots, \theta_{kr})$,

$$\mathbf{X}_k = (X_{k1}, \dots, X_{kr}) \sim \mathcal{M}(n_{k+}; \theta_{k1}, \dots, \theta_{kr}),$$

where $X_{kj}$ counts the number of elements in the $k$th reference category classified in the $j$th class, for $j = 1, \dots, r$.

Remark 2.

In terms of the notation introduced in Section 2, $\theta_{kj} = P(Z = j \mid Y = k) = p_{kj} / p_{k+}$.

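As prior distribution for $\boldsymbol{\theta}_k$, a Dirichlet distribution is proposed:

$$\boldsymbol{\theta}_k \sim \mathcal{D}(\alpha_{k1}, \dots, \alpha_{kr}).$$

Given a confusion matrix whose observed rows are denoted by $\mathbf{x}_k = (n_{k1}, \dots, n_{kr})$, $k = 1, \dots, r$, by applying Bayes' theorem, and since the Dirichlet distribution is a conjugate prior for the multinomial model, the posterior distribution for $\boldsymbol{\theta}_k$ is

$$\pi(\boldsymbol{\theta}_k \mid \mathbf{x}_k) \propto \prod_{j=1}^{r} \theta_{kj}^{n_{kj}} \prod_{j=1}^{r} \theta_{kj}^{\alpha_{kj} - 1} = \prod_{j=1}^{r} \theta_{kj}^{\alpha_{kj} + n_{kj} - 1},$$

where ∝ stands for "proportional to". Therefore,

$$\boldsymbol{\theta}_k \mid \mathbf{x}_k \sim \mathcal{D}(\alpha_{k1} + n_{k1}, \dots, \alpha_{kr} + n_{kr}).$$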
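Because of conjugacy, the posterior for each row is obtained by adding the observed counts to the prior parameters, and the Bayes estimates under quadratic loss are the posterior means. A minimal Python sketch of this row-by-row update, assuming by default the uniform prior $\alpha_{kj} = 1$ used in Application 1; the helper names and the table are hypothetical.

```python
from typing import List, Optional

Matrix = List[List[float]]


def posterior_parameters(counts: List[List[int]], prior: Optional[Matrix] = None) -> Matrix:
    """Dirichlet posterior parameters alpha_kj + n_kj for every row k of a confusion matrix.

    With prior=None, the uniform prior alpha_kj = 1 is used for every row.
    """
    r = len(counts)
    if prior is None:
        prior = [[1.0] * r for _ in range(r)]
    return [[prior[k][j] + counts[k][j] for j in range(r)] for k in range(r)]


def posterior_means(counts: List[List[int]], prior: Optional[Matrix] = None) -> Matrix:
    """Bayes estimates of theta_kj under quadratic loss: the posterior means."""
    post = posterior_parameters(counts, prior)
    return [[a / sum(row) for a in row] for row in post]


if __name__ == "__main__":
    table = [[40, 5, 5], [10, 30, 10], [2, 3, 45]]   # hypothetical
    for row in posterior_means(table):
        print([round(x, 3) for x in row])
```

For the first hypothetical row, $(40, 5, 5)$, the posterior is $\mathcal{D}(41, 6, 6)$ and the estimated probability of correct classification is $41/53 \approx 0.774$, slightly shrunk towards $1/3$ compared with the raw proportion $0.8$.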

5. Applications

5.1. Application 1

First, a confusion matrix taken from the fields of Geostatistics and Image Processing [6] is considered. The matrix has four categories ($r = 4$) and was obtained by applying an unsupervised classification method to a Landsat Thematic Mapper image. It is given in Table 3. The land-use categories are FallenLeaf, Conifers, Agricultural and Scrub. Rows correspond to the actual classes and columns to the predicted classes. The sample size is . As for a global measurement of classification, we have that the . Some asymmetry is observed in the off-diagonal elements, which suggests the existence of classification bias, that is, significant differences between pairs of categories. Let us formalize these observations.

Table 3.

Confusion matrix: Land use.

5.1.1. Marginal Homogeneity

Since we have a $4 \times 4$ matrix, the Stuart–Maxwell or Bhapkar tests must be applied to test multiple marginal homogeneity. Summaries are given in Table 4: the observed values of the statistics, the degrees of freedom (df) of their asymptotic $\chi^2$ distributions, and the corresponding p-values.

Table 4.

Marginal Homogeneity (Land use).

In both tests, we conclude that there exists significant evidence to reject the null hypothesis of marginal homogeneity. The next step is to look for those categories with serious deficiencies in the classification process. The One versus All methodology proposed in Section 3.3 is applied to every category. The necessary auxiliary submatrices are given in Table 5, Table 6, Table 7 and Table 8.

Table 5.

Auxiliary matrix FallenLeaf.

Table 6.

Auxiliary matrix Conifers.

Table 7.

Auxiliary matrix Agricultural.

Table 8.

Auxiliary matrix Scrub.

McNemar tests are applied to Table 5, Table 6, Table 7 and Table 8. The results for two-sided and one-sided tests are given in Table 9.

Table 9.

McNemar test for every category.

Remark 3.

In order to properly interpret the p-values in Table 9, the problem of multiple comparisons must be taken into account. For a global significance level $\alpha$ and by applying the Bonferroni correction, every test should be carried out at significance level $\alpha/4$, since one test is performed for each of the four categories. Other corrections could also be applied.

Note that, from the p-values in Table 9, there exists evidence to reject marginal homogeneity for the categories FallenLeaf and Scrub (see their two-sided p-values in Table 9). Let us go into detail and consider the following test for FallenLeaf:

$$H_0: p_{12} \ge p_{21} \quad \text{vs.} \quad H_1: p_{12} < p_{21}, \tag{17}$$

where $p_{12} = P(Y = \text{FallenLeaf}, Z \neq \text{FallenLeaf})$ and $p_{21} = P(Y \neq \text{FallenLeaf}, Z = \text{FallenLeaf})$ correspond to the off-diagonal cells of Table 5. The p-value of this test, given in Table 9, leads to the rejection of $H_0$; that is, there exists significant evidence to reject that the proportion of elements that belong to the category FallenLeaf and are misclassified into others, $p_{12}$, is greater than or equal to $p_{21}$. Therefore, $p_{12} < p_{21}$ may be assumed. As seen in Section 3.1, the McNemar test allows us to restrict our attention to cells (1, 2) and (2, 1) in Table 5. So $p_{12} < p_{21}$ is equivalent to $p_{1+} < p_{+1}$; that is, in this case the dominant probability is that of observations that actually belong to other categories and are predicted as FallenLeaf, $p_{21}$. So we may conclude that there exists confusion between the rest of the categories and FallenLeaf, since many more observations are assigned to the FallenLeaf class than actually belong to it. It could be said that there exists an overprediction of observations in the FallenLeaf class.

Analogously, for the Scrub class, the test, which has p-value = 0.0000022, is:

$$H_0: p_{12} \le p_{21} \quad \text{vs.} \quad H_1: p_{12} > p_{21}, \tag{18}$$

with $p_{12} = P(Y = \text{Scrub}, Z \neq \text{Scrub})$ and $p_{21} = P(Y \neq \text{Scrub}, Z = \text{Scrub})$, the off-diagonal cells of Table 8.

In this case, we have the opposite situation: since the null hypothesis is rejected, there exists evidence to reject that the probability of being actually Scrub and being classified into other categories, $p_{12}$, is less than or equal to $p_{21}$. Therefore, it can be concluded that $p_{12}$ is the dominant probability. So an important part of the actual Scrub observations give rise to confusion and are predicted into other classes, which causes an underprediction problem.

Since we have detected problems in certain categories, it is of interest to estimate the associated probabilities. This issue is studied in the next section from a Bayesian perspective.

5.1.2. Bayesian Approach

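In this subsection, for every category, a uniform prior distribution is considered, which corresponds to the Dirichlet distribution with $\alpha_1 = \dots = \alpha_4 = 1$. Given $\mathbf{x}_k$ as the $k$th row in Table 3, the posterior distribution is:

$$\boldsymbol{\theta}_k \mid \mathbf{x}_k \sim \mathcal{D}(1 + n_{k1}, \dots, 1 + n_{k4}), \tag{19}$$

for $k = 1, \dots, 4$.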

Explicitly, for the category Fallen Leaf, and by applying (19)

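From (16), the mean, variance and standard deviation of the posterior marginal distributions are given in Table 10. They are denoted by $E(\theta_{1j} \mid \mathbf{x}_1)$, $\operatorname{Var}(\theta_{1j} \mid \mathbf{x}_1)$ and $\operatorname{SD}(\theta_{1j} \mid \mathbf{x}_1)$, respectively.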

Table 10.

Bayesian summaries in A_FallenLeaf.

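The mean column in Table 10 provides the Bayes estimates, under the quadratic loss function, of the conditional probabilities in A_FallenLeaf, that is, $\theta_{1j} = P(Z = j \mid Y = \text{FallenLeaf})$, $j = 1, \dots, 4$. We highlight the good estimate obtained in this case for the probability of correct classification, $\theta_{11}$.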

Remark 4.

The mode of the posterior distribution can also be used as a Bayesian estimate of the conditional probabilities in A_FallenLeaf. For the distribution in (20), it would be

Similarly, the Bayesian summaries are obtained for the rest of the categories. They are listed in Table 11 for actual Conifers, in Table 12 for actual Agricultural, and in Table 13 for actual Scrub.

Table 11.

Bayesian summaries in A_Conifers.

Table 12.

Bayesian summaries in A_Agricultural.

Table 13.

Bayesian summaries in A_Scrub.

Conclusions

As a summary of the previous tables, Table 14 gives the Bayesian estimates of the probabilities in every conditional distribution.

Table 14.

Summary Bayesian estimates of conditional probabilities in the problem.

Let us look at these conditional distributions. First, we focus on the fourth column in Table 14, where the conditional probabilities associated with the Scrub category have been estimated. Note that the estimated probability of correct classification for Scrub is quite low. Moreover, we have that

It could be said that there exists an underprediction of the Scrub category, since observations that are actually Scrub are often misclassified as FallenLeaf or Agricultural. These observations are coherent with the result of test (18).

As for the first column, corresponding to the conditional probabilities in the FallenLeaf class, we highlight the high estimated probability of correct classification, 0.83. However, note that, in the first row of Table 14, we have

which is coherent with the results of test (17). It could be said that the elements that actually belong to the FallenLeaf class are properly classified, but there exist problems of confusion of other categories with FallenLeaf; specifically, actual Agricultural and Scrub observations are often misclassified as FallenLeaf. Both facts cause an overprediction of the FallenLeaf class.

Next, 95% credible intervals are given: equal-tail intervals and Highest Posterior Density (HPD) intervals. Both intervals are obtained from the marginal distributions of the posterior Dirichlet distribution given in (19), by using the R software [18] and package [19]. Table 15 provides these intervals for the posterior distribution in A_FallenLeaf, Table 16 in A_Conifers, Table 17 in A_Agricultural and Table 18 in A_Scrub.

Table 15.

95% credible intervals: A_FallenLeaf.

Table 16.

95% credible intervals: A_Conifers.

Table 17.

95% credible intervals: A_Agricultural.

Table 18.

95% credible intervals: A_Scrub.

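The two types of credible intervals are quite similar. Recall that, for a unimodal posterior, the HPD interval is the shortest interval with the given posterior probability, so it is more precise than the equal-tail interval.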
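Since each posterior marginal is a Beta distribution (Lemma 3), both intervals can be approximated from simulated draws. The following Python sketch swaps in a Monte Carlo approximation instead of the exact Beta quantiles and the R package used by the authors; the function name, seed and Beta parameters are hypothetical. The HPD interval is approximated by the shortest window containing 95% of the sorted draws, which is appropriate for a unimodal density.

```python
import random
from typing import Tuple

Interval = Tuple[float, float]


def beta_credible_intervals(a: float, b: float, level: float = 0.95,
                            draws: int = 50_000, seed: int = 1) -> Tuple[Interval, Interval]:
    """Monte Carlo equal-tail and (approximate) HPD intervals for a Beta(a, b) marginal."""
    rng = random.Random(seed)
    xs = sorted(rng.betavariate(a, b) for _ in range(draws))
    k = round(level * draws)                 # number of draws inside each interval
    lo = round((1 - level) / 2 * draws)      # draws left out in the lower tail
    equal_tail = (xs[lo], xs[lo + k - 1])
    # Shortest window of k consecutive sorted draws: Monte Carlo HPD for a unimodal density.
    _, start = min((xs[i + k - 1] - xs[i], i) for i in range(draws - k + 1))
    hpd = (xs[start], xs[start + k - 1])
    return equal_tail, hpd


if __name__ == "__main__":
    # Hypothetical posterior row D(41, 6, 6): theta_11 ~ Beta(41, 53 - 41).
    et, hpd = beta_credible_intervals(41, 12)
    print("equal-tail:", et)
    print("HPD:       ", hpd)
```

Because the equal-tail interval is itself one of the windows of k sorted draws, the simulated HPD interval can never be wider; for a skewed marginal such as Beta(41, 12) it is shifted towards the mode.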

5.2. Application 2

In this application, the confusion matrix in Table 19 is considered. This matrix is obtained as a result of applying classification processes of literary genres in books by using text mining techniques [7]. The categories under consideration are , , Fantasy(Fan), , and . We have 50 actual observations in every category. The interest of this application is to illustrate the performance of our proposal in a different field, text mining, and with a bigger confusion matrix.

Table 19.

Confusion matrix: Literary genres.

5.2.1. Marginal Homogeneity

In Table 20, the summaries of applying Stuart–Maxwell and Bhapkar tests to the confusion matrix proposed in Table 19 are listed. In both tests, the conclusion that there exists significant evidence to reject the null hypothesis of multiple marginal homogeneity is reached, and therefore the One versus All strategy based on the McNemar test is applied to every category listed in Table 19. The most relevant summaries of one-sided tests are given in Table 21 and Table 22.

Table 20.

Marginal homogeneity (Literary Genres).

Table 21.

Literary Genres: p-values of tests in which $H_1: p_{12} < p_{21}$ was accepted.

Table 22.

Literary Genres: p-values of tests in which $H_1: p_{12} > p_{21}$ was accepted.

From p-values in Table 21, it could be concluded that , and categories are overpredicted with problems of classification of some of the other categories to these ones. On the other hand, from p-values in Table 22, and are underpredicted, and actual observations in these categories are misassigned to other ones.

Next, Bayesian techniques are applied, which allow us to assess these findings.

5.2.2. Bayesian Approach

A process similar to the one explained in Application 1 has been followed; that is, a noninformative Dirichlet prior distribution is considered for every category. The Bayesian estimates of the conditional probabilities are summarized in Table 23.

Table 23.

Summary of Bayesian estimates of conditional probabilities in .

For those categories in which $H_1: p_{12} < p_{21}$ was accepted, an overprediction problem is expected. From Table 21, these are , and . It can be seen in Table 23 that in these categories the estimated probability of correct classification is high

Moreover, from the analysis by rows in these categories, we can observe that the estimated probabilities that actual observations in other categories are classified in these ones are high. As an illustration, consider the category , and note that:

A similar analysis can be carried out for and .

On the other hand, for those categories in which $H_1: p_{12} > p_{21}$ was accepted, an underprediction problem is expected. These are , , and , see Table 22. In these categories the estimated probabilities of correct classification are moderate; see the diagonal of Table 23. From the analysis by columns in Table 23, note that actual observations in these categories are classified into other ones also with moderate probabilities (around 0.10 or 0.20).

To conclude, take a look at and . In both cases, actual observations in (or ) are misclassified into , and , but the category also receives misclassified observations that actually belong to or (for Fiction, from History and Comedy). Because these two opposite streams balance each other, the tests in our proposal do not detect a problem in these categories.

5.3. Application 3

In this case, the confusion matrix given in Table 24 is considered. It is taken from [9] (Figure 1, E). This matrix is obtained as a result of applying an artificial intelligence classification method for the diagnosis of Inflammatory Bowel Disease (IBD) based on fecal multiomics data. The IBD types considered are Crohn's disease (CD) and ulcerative colitis (UC); nonIBD refers to the control group. We chose this example because IBDs are really difficult to diagnose and classify, and their accurate diagnosis is an important issue in medicine; details can be found in [9].

Table 24.

Confusion matrix: Inflammatory Bowel Disease (IBD).

Huang et al. [9] proposed a method with high accuracy for the diagnosis of different types of IBD. Specifically, the accuracy of Table 24 is , which in this context is considered high. However, some asymmetry is observed in the off-diagonal elements of Table 24, which, given the importance of the problem under consideration, deserves additional analysis.

5.3.1. Marginal Homogeneity

Similarly to the previous applications, the results of applying the multiple marginal homogeneity tests are given in Table 25. Marginal homogeneity is again rejected, so the One versus All strategy is applied; its summaries are listed in Table 26.

Table 25.

Marginal homogeneity test (IBD).

Table 26.

IBD: McNemar test for every category.

The analysis of results in Table 26 shows that:

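1. For the control group, nonIBD, there is no evidence to reject the null hypothesis of marginal homogeneity. Therefore, we do not detect any systematic errors in this category;
2. For UC, the test

$$H_0: p_{12} \le p_{21} \quad \text{vs.} \quad H_1: p_{12} > p_{21}, \tag{26}$$

with $p_{12} = P(Y = \text{UC}, Z \neq \text{UC})$ and $p_{21} = P(Y \neq \text{UC}, Z = \text{UC})$, is considered. The null hypothesis is rejected (see the p-value in Table 26), which suggests underprediction of the UC category;
3. For CD, the test $H_0: p_{12} \ge p_{21}$ vs. $H_1: p_{12} < p_{21}$, with $p_{12} = P(Y = \text{CD}, Z \neq \text{CD})$ and $p_{21} = P(Y \neq \text{CD}, Z = \text{CD})$, leads to the rejection of the null hypothesis (see Table 26), which suggests overprediction of CD.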

Since in this example we have evidence of problems of misclassification, the next step is to assess the conditional probabilities of interest.

5.3.2. Bayesian Approach

In this case, a noninformative prior distribution is considered first. Since other choices are possible, a sequential use of Bayes' theorem is illustrated later.

Noninformative Prior Distributions

Let us consider a Dirichlet prior distribution with $\alpha_1 = \alpha_2 = \alpha_3 = 1$, as in the previous applications. The Bayes estimates of the conditional probabilities are obtained along with the variances and standard deviations of the marginal distributions. As a novelty in this application, since we are dealing with $r = 3$ categories, the posterior distribution associated with each category can be represented in the two-dimensional simplex, which allows a visual analysis of these joint distributions. To obtain the graphical representation in the two-dimensional simplex, 1000 values have been generated by using the R software, a grid has been established, and the corresponding contour plots have been displayed.
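The simulation step behind these plots can be sketched in Python as follows. This is not the authors' R code: it draws Dirichlet vectors by the standard construction of normalizing independent Gamma($\alpha_j$, 1) variates and maps each point of the 2-simplex to planar coordinates of an equilateral triangle. The gridding and contour plotting are omitted, and the function names and parameters are hypothetical.

```python
import random
from math import sqrt
from typing import List, Optional, Tuple


def rdirichlet(alpha: List[float], size: int, seed: Optional[int] = None) -> List[List[float]]:
    """Draw `size` Dirichlet vectors by normalizing independent Gamma(alpha_j, 1) variates."""
    rng = random.Random(seed)
    draws = []
    for _ in range(size):
        g = [rng.gammavariate(a, 1.0) for a in alpha]
        total = sum(g)
        draws.append([x / total for x in g])
    return draws


def to_triangle(theta: List[float]) -> Tuple[float, float]:
    """Barycentric map of (theta_1, theta_2, theta_3) onto the triangle with
    vertices (0, 0), (1, 0) and (1/2, sqrt(3)/2) for categories 1, 2 and 3."""
    _, t2, t3 = theta
    return t2 + 0.5 * t3, sqrt(3) / 2 * t3


if __name__ == "__main__":
    # Hypothetical posterior parameters for one row of a 3 x 3 confusion matrix.
    sample = rdirichlet([41.0, 6.0, 6.0], size=1000, seed=0)
    points = [to_triangle(t) for t in sample]   # input for any contour or KDE plot
    print(points[:3])
```

A posterior concentrated near the vertex of its own category, as the text reports for the control group, shows up as points clustered around that corner of the triangle.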

From results in Table 27, we highlight that

Table 27.

Bayesian summaries in A_nonIBD.

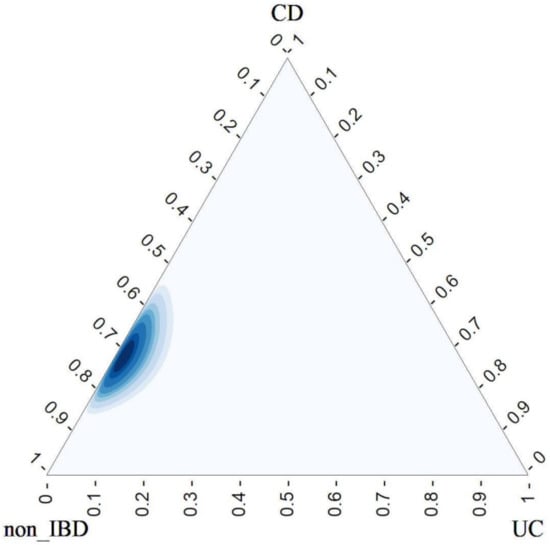

Although in the control group, nonIBD, there is no evidence of classification bias, the estimated probability of being classified as CD is relatively high. As for the plot given in Figure 1, note that the joint posterior distribution is quite concentrated and close to the nonIBD vertex. The mode of this posterior distribution can also be used as the Bayes estimate of the conditional probabilities; it is:

These estimates are quite close to the previous ones.

Figure 1.

Posterior Dirichlet in A_nonIBD.

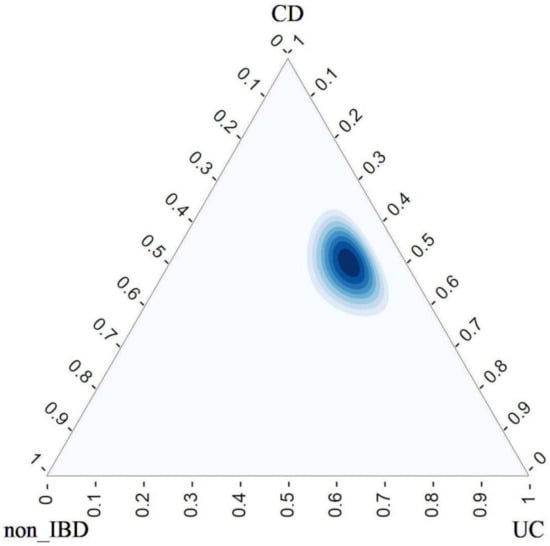

In the UC category (Table 28), we found that the estimated probability of correct classification is quite low, while the estimated probability of an individual with UC being diagnosed as CD is surprisingly high.

Table 28.

Bayesian summaries in A_UC.

As for the joint posterior distribution plotted in Figure 2, we highlight that the area of highest posterior density is closer to the CD vertex than to UC vertex. This is coherent with the result in test (26), and confirms the underprediction of UC category in favour of CD.

Figure 2.

Posterior Dirichlet in A_UC.

The mode of this posterior distribution is:

Again, these estimates are quite close to the previous ones.

Finally, let us study the CD category in Table 29.

Table 29.

Bayesian summaries in A_CD.

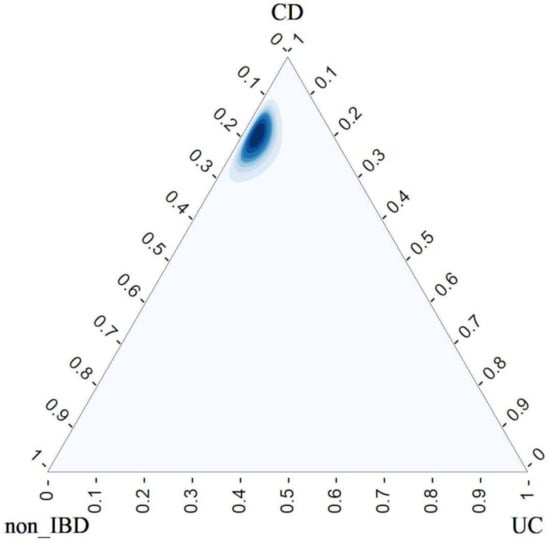

We highlight that the estimated probability of the right classification is the highest one, and the area of highest posterior density is close to CD vertex, see Figure 3. The mode of Figure 3 is .

Figure 3.

Posterior Dirichlet in A_CD.

All these facts allow us to conclude that there exists a serious problem of overprediction of CD and underprediction of UC. To assess this fact, estimates of the conditional probabilities have been given. As for the joint posterior distributions, note that for nonIBD and CD they are close to the corresponding vertex, as can be seen in Figure 1 and Figure 3, respectively, which is good for correct classification. However, for UC the confusion with the category CD is clear; see Figure 2.

For completeness, credible intervals are given in Appendix A along with results for a uniform discrete prior in r points.

5.3.3. Sequential Use of Bayes’ Theorem

In this subsection, we show that, when new information becomes available, Bayes’ theorem can be used sequentially to update our beliefs. Moreover, the resulting estimates exhibit less variability, as illustrated next.

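Step 1 (confusion matrix $C_1$). Consider the Dirichlet-multinomial model for every row of an $r \times r$ confusion matrix, denoted as $C_1 = (n_{kj}^{(1)})$. That is, for $k = 1, \ldots, r$, we have a prior Dirichlet distribution for $\mathbf{p}_k = (p_{k1}, \ldots, p_{kr})$,

$$\mathbf{p}_k \sim \operatorname{Dir}(\alpha_{k1}, \ldots, \alpha_{kr}),$$

and $\mathbf{n}_k^{(1)} = (n_{k1}^{(1)}, \ldots, n_{kr}^{(1)})$, the kth row with the counts in $C_1$, is distributed as

$$\mathbf{n}_k^{(1)} \mid \mathbf{p}_k \sim \operatorname{Mult}\big(n_{k\cdot}^{(1)};\, p_{k1}, \ldots, p_{kr}\big), \qquad n_{k\cdot}^{(1)} = \sum_{j=1}^{r} n_{kj}^{(1)}.$$

Given $\mathbf{n}_k^{(1)}$, the posterior distribution for $\mathbf{p}_k$ is

$$\mathbf{p}_k \mid \mathbf{n}_k^{(1)} \sim \operatorname{Dir}\big(\alpha_{k1} + n_{k1}^{(1)}, \ldots, \alpha_{kr} + n_{kr}^{(1)}\big). \tag{28}$$

Step 2 (confusion matrix $C_2$). If, in the same classification problem, a new confusion matrix $C_2$ is obtained from a set of observations independent of those used to build $C_1$, then the distribution given in (28) can be used as the prior in Step 2 to obtain a new posterior distribution. Specifically, let

$$\mathbf{p}_k \sim \operatorname{Dir}\big(\alpha_{k1} + n_{k1}^{(1)}, \ldots, \alpha_{kr} + n_{kr}^{(1)}\big)$$

and let $\mathbf{n}_k^{(2)} = (n_{k1}^{(2)}, \ldots, n_{kr}^{(2)})$ be the kth row with the counts in $C_2$, where

$$\mathbf{n}_k^{(2)} \mid \mathbf{p}_k \sim \operatorname{Mult}\big(n_{k\cdot}^{(2)};\, p_{k1}, \ldots, p_{kr}\big), \qquad n_{k\cdot}^{(2)} = \sum_{j=1}^{r} n_{kj}^{(2)}.$$

Given $\mathbf{n}_k^{(2)}$, the posterior distribution for $\mathbf{p}_k$ is

$$\mathbf{p}_k \mid \mathbf{n}_k^{(1)}, \mathbf{n}_k^{(2)} \sim \operatorname{Dir}\big(\alpha_{k1} + n_{k1}^{(1)} + n_{k1}^{(2)}, \ldots, \alpha_{kr} + n_{kr}^{(1)} + n_{kr}^{(2)}\big).$$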
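Because each step only adds counts to the Dirichlet parameters, updating first with one confusion matrix and then with a second, independent one gives the same posterior as a single update with the pooled counts. The Python sketch below checks this row by row. The flat prior and both 3 × 3 matrices are hypothetical examples, not the matrices of Tables 24 and 30.

```python
def update_rows(alpha_rows, count_rows):
    """Row-wise conjugate update: Dir(alpha_k) with counts n_k gives Dir(alpha_k + n_k)."""
    return [[a + n for a, n in zip(alpha, counts)]
            for alpha, counts in zip(alpha_rows, count_rows)]


def sequential_update(prior_rows, matrices):
    """Apply Bayes' theorem sequentially: each posterior becomes the next prior."""
    alpha_rows = [list(row) for row in prior_rows]
    for counts in matrices:
        alpha_rows = update_rows(alpha_rows, counts)
    return alpha_rows


r = 3
prior = [[1] * r for _ in range(r)]          # assumed flat prior for every row
c1 = [[40, 2, 18], [5, 15, 20], [8, 2, 50]]  # hypothetical Step 1 matrix
c2 = [[30, 1, 12], [4, 11, 16], [6, 1, 41]]  # hypothetical Step 2 matrix

step2 = sequential_update(prior, [c1, c2])
pooled = update_rows(prior, [[a + b for a, b in zip(x, y)] for x, y in zip(c1, c2)])
print(step2 == pooled)  # True
print(step2[0])         # [71, 4, 31]
```

The equivalence relies on the conditional independence of the two sets of observations given the row probabilities, which is the assumption stated in Step 2.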

To illustrate the sequential method, let us consider the matrix given in Table 24 as the first confusion matrix and the matrix given in Table 30 as the second one.

Table 30.

Second confusion matrix (IBD).

The different estimates are listed in the following tables.

We highlight the increase in precision obtained when the new information is incorporated into the estimation process. Note that the standard deviations of the posterior distributions listed in Table 31, Table 32 and Table 33 are smaller than those listed in Table 27, Table 28 and Table 29. This is the main merit of the sequential use of Bayes’ theorem.
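The reduction in standard deviation can be traced to a closed form: each marginal p_kj of a Dir(α_k1, ..., α_kr) distribution is Beta(α_kj, α_k0 − α_kj), with variance α_kj(α_k0 − α_kj)/(α_k0²(α_k0 + 1)), where α_k0 = Σ_j α_kj. This variance is at most 1/(4(α_k0 + 1)), a bound that decreases as Step 2 adds counts to α_k0. An individual standard deviation is not guaranteed to decrease, though: if the new counts move a mean towards 1/2, its variance can grow. The Python sketch below, with hypothetical posterior parameters, compares the standard deviations after each step and includes such a counterexample.

```python
import math


def dirichlet_sd(alpha):
    """Marginal standard deviations of a Dirichlet(alpha) distribution."""
    total = sum(alpha)
    return [math.sqrt(a * (total - a) / (total ** 2 * (total + 1))) for a in alpha]


# Hypothetical posterior parameters for one row after Step 1 and after Step 2.
after_step1 = [11, 8, 14]
after_step2 = [19, 14, 25]  # = after_step1 + hypothetical new counts [8, 6, 11]

sd1 = dirichlet_sd(after_step1)
sd2 = dirichlet_sd(after_step2)
for j, (s1, s2) in enumerate(zip(sd1, sd2)):
    print(f"p_{j + 1}: SD {s1:.4f} -> {s2:.4f}")

# Counterexample: new counts pulling a mean towards 1/2 can increase its SD.
print(dirichlet_sd([1, 100])[0] < dirichlet_sd([51, 100])[0])  # True
```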

Table 31.

Bayesian summaries, Step 2, in A_nonIBD.

Table 32.

Bayesian summaries, Step 2, in A_UC.

Table 33.

Bayesian summaries, Step 2, in A_CD.

6. Discussion

The aim of this paper is to propose methods to detect classification bias, as well as overprediction and underprediction problems associated with categories in a confusion matrix. The methods may be applied to confusion matrices obtained by applying supervised learning algorithms, such as logistic regression, linear and quadratic discriminant analysis, naive Bayes, k-nearest neighbors, classification trees, random forests, boosting or support vector machines, among others. First, marginal homogeneity tests are introduced. They are based on techniques for matched pairs of observations, tailored to this context. Second, a Bayesian methodology based on the multinomial-Dirichlet distribution is developed, which allows us to confirm and to assess the magnitude of these problems by using prior information. Three applications taken from different areas of the peer-reviewed scientific literature have been carried out. They illustrate relevant aspects of the performance of our proposal, mainly as the dimension r of the confusion matrix varies. In all of them, the results obtained have been satisfactory. We consider that these new methods are of interest for a better definition of classes, to improve the performance of classification methods, and to assess the global process of classification.

As for related work, we highlight the results given in [22], where an excellent review of metrics for multi-class classification tasks is given. There, usual indicators such as accuracy, recall, F1-Score and kappa coefficients, among others, can be found along with their properties. In this respect, the Bayesian results given in Section 4 can be used as a micro method, with the additional merit of providing measures of the variability of the proposed summaries, for which the standard deviation of the posterior distributions can be used. A comparison of the results in our paper with existing metrics may be of interest in future work. Additionally, we intend to study the structure of confusions in more depth, for instance, to analyze whether certain classes share a common confusion structure, their relationships, the effect of the sample size, and the handling of unbalanced classes.

Author Contributions

Conceptualization, methodology and writing, I.B.-C.; validation and software, R.M.C.-G. All authors have read and agreed to the published version of the manuscript.

Funding

The research of Rosa M. Carrillo-García has been funded by Grant PI3 “Programa IMUS de Iniciación a la Investigación”, IMUS, Seville, 2021.

Data Availability Statement

References have been given where the confusion matrices used in the applications can be found.

Conflicts of Interest

The authors declare no conflict of interest.

Appendix A. Application 3

In this Appendix, for completeness, 95% credible intervals are given for the setting studied in Section 5.3, that is, Application 3. Results for another prior distribution, the Perks prior or discrete uniform prior distribution in r points, are also included. Results similar to those for the continuous case are obtained.

Table A1.

95% credible intervals: A_nonIBD.


|  | Lower | Upper | Lower | Upper |
|---|---|---|---|---|
| P_nonIBD | 0.5518703 | 0.7931919 | 0.5564379 | 0.7971693 |
| P_UC | 0.0044345 | 0.0971910 | 0.0008478 | 0.0837975 |
| P_CD | 0.1762997 | 0.4096195 | 0.1716235 | 0.4040643 |

Table A2.

95% credible intervals: A_UC.


|  | Lower | Upper | Lower | Upper |
|---|---|---|---|---|
| P_nonIBD | 0.0547901 | 0.2302899 | 0.0477798 | 0.2195273 |
| P_UC | 0.2478722 | 0.5019668 | 0.2448073 | 0.4985839 |
| P_CD | 0.3683954 | 0.6316046 | 0.3683954 | 0.6316046 |

Table A3.

95% credible intervals: A_CD.


|  | Lower | Upper | Lower | Upper |
|---|---|---|---|---|
| P_nonIBD | 0.0973247 | 0.2421973 | 0.0933429 | 0.2370862 |
| P_UC | 0.0113483 | 0.0877318 | 0.0076403 | 0.0800716 |
| P_CD | 0.7111385 | 0.8692713 | 0.7155517 | 0.8728854 |

These credible intervals are useful to assess the plausible values of the quantities of interest in the problem under consideration.
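Credible intervals of this kind can be approximated by simulation, using the fact that each marginal p_kj of a Dir(α_k) posterior is Beta(α_kj, α_k0 − α_kj). The Python sketch below computes two common 95% intervals from draws: the equal-tailed interval and the shortest interval containing 95% of the draws (a sample-based highest posterior density interval, similar in spirit to what the HDInterval package [19] returns for a vector of draws). The posterior parameters are hypothetical, and we do not claim this is how Tables A1 to A3 were produced.

```python
import math
import random
import statistics


def marginal_draws(alpha, j, n_draws, seed=None):
    """Draws of p_j under Dirichlet(alpha), via its Beta(alpha_j, alpha_0 - alpha_j) marginal."""
    rng = random.Random(seed)
    a = alpha[j]
    b = sum(alpha) - a
    return [rng.betavariate(a, b) for _ in range(n_draws)]


def equal_tailed_95(draws):
    """Equal-tailed 95% interval: the 2.5% and 97.5% sample quantiles."""
    cuts = statistics.quantiles(draws, n=40, method="exclusive")  # 2.5%, 5%, ..., 97.5%
    return cuts[0], cuts[-1]


def shortest_interval(draws, mass=0.95):
    """Shortest interval containing ceil(mass * n) sorted draws (sample-based HPD)."""
    s = sorted(draws)
    k = math.ceil(mass * len(s))
    start = min(range(len(s) - k + 1), key=lambda i: s[i + k - 1] - s[i])
    return s[start], s[start + k - 1]


# Hypothetical posterior parameters for one row; the marginal of p_1 is right-skewed here.
alpha = [2, 25, 5]
draws = marginal_draws(alpha, 0, 20000, seed=1)
print("equal-tailed:", equal_tailed_95(draws))
print("shortest    :", shortest_interval(draws))
```

For a skewed marginal the shortest interval is shifted towards the mode, whereas for a symmetric Beta marginal the two intervals essentially coincide.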

Remark A1

(Perks prior or discrete prior distribution in r points). A study similar to the one conducted in Section 5.3 was carried out by using a discrete prior distribution in r points, that is, a Dirichlet prior with all its parameters equal to 1/r. Similar results were obtained, which are listed in Table A4.

Table A4.

Summaries (A discrete uniform prior).


|  | A_nonIBD | A_UC | A_CD |
|---|---|---|---|
| P_nonIBD | 0.691 | 0.122 | 0.16 |
| P_UC | 0.025 | 0.372 | 0.035 |
| P_CD | 0.284 | 0.506 | 0.806 |
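To see how the choice of prior enters such summaries, the Python sketch below computes posterior means under the Perks prior, Dir(1/r, ..., 1/r), and under a flat Dir(1, ..., 1) prior for the same hypothetical row of counts, using exact fractions. The counts are illustrative only and are not the data behind Table A4. Because the two priors add a fixed number of pseudo-counts (1 and r, respectively) to each row total, their influence fades as the row total grows.

```python
from fractions import Fraction


def posterior_means(counts, weight):
    """Posterior means under a symmetric Dirichlet(weight, ..., weight) prior."""
    alpha = [weight + n for n in counts]
    total = sum(alpha)
    return [a / total for a in alpha]


counts = [40, 2, 18]  # hypothetical row (r = 3 categories)
r = len(counts)
perks = posterior_means(counts, Fraction(1, r))  # Perks prior: every parameter 1/r
flat = posterior_means(counts, Fraction(1))      # flat prior: every parameter 1
for p, f in zip(perks, flat):
    print(f"Perks {float(p):.3f}   flat {float(f):.3f}")
```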

References

- Goin, J.E. Classification Bias of the k-Nearest Neighbor Algorithm. IEEE Trans. Pattern Anal. Mach. Intell. 1984, PAMI-6, 379–381.

- Black, S.; Gonen, M. A Generalization of the Stuart-Maxwell Test. In SAS Conference Proceedings: South-Central SAS Users Group 1997; Applied Logic Associates, Inc.: Houston, TX, USA, 1997.

- Sun, X.; Yang, Z. Generalized McNemar’s Test for Homogeneity of the Marginal Distributions. In Proceedings of the SAS Global Forum Proceedings, Statistics and Data Analysis, San Antonio, TX, USA, 16–19 March 2008; Volume 382, pp. 1–10.

- Hastie, T.; Tibshirani, R.; Friedman, J.H. The Elements of Statistical Learning: Data Mining, Inference, and Prediction, 2nd ed.; Springer: New York, NY, USA, 2009.

- Barranco-Chamorro, I.; Luque-Calvo, P.; Jiménez-Gamero, M.; Alba-Fernández, M. A study of risks of Bayes estimators in the generalized half-logistic distribution for progressively type-II censored samples. Math. Comput. Simul. 2017, 137, 130–147.

- Congalton, R.G.; Green, K. Assessing the Accuracy of Remotely Sensed Data. Principles and Practices, 3rd ed.; CRC Press: Boca Raton, FL, USA, 2020.

- Carrillo-García, R.M. Text Mining: Principios Básicos, Aplicaciones, Técnicas y Casos Prácticos. Master’s Thesis, Universidad de Sevilla, Sevilla, Spain, 2021.

- Carrillo-García, R.M. Algorithms and Applications in Statistical Data Mining; PI3: Programa IMUS de Iniciación a la Investigación; Instituto de Matemáticas de la Universidad de Sevilla: Sevilla, Spain, 2021.

- Huang, Q.; Zhang, X.; Hu, Z. Application of Artificial Intelligence Modeling Technology Based on Multi-Omics in Noninvasive Diagnosis of Inflammatory Bowel Disease. J. Inflamm. Res. 2021, 14, 1933–1943.

- Liu, C.; Frazier, P.; Kumar, L. Comparative Assessment of the Measures of Thematic Classification Accuracy. Remote Sens. Environ. 2007, 107, 606–616.

- Pontius, R.; Millones, M. Death to Kappa: Birth of quantity disagreement and allocation disagreement for accuracy assessment. Int. J. Remote Sens. 2011, 32, 4407–4429.

- Lance, R.F.; Kennedy, M.L.; Leberg, P.L. Classification Bias in Discriminant Function Analyses used to Evaluate Putatively Different Taxa. J. Mammal. 2000, 81, 245–249.

- Schmidt, R.L.; Walker, B.S.; Cohen, M.B. Verification and classification bias interactions in diagnostic test accuracy studies for fine-needle aspiration biopsy. Cancer Cytopathol. 2015, 123, 193–201.

- Rivas-Ruiz, F.; Pérez-Vicente, S.; González-Ramírez, A. Bias in clinical epidemiological study designs. Allergol. Immunopathol. 2013, 41, 54–59.

- Barranco-Chamorro, I.; Muñoz Armayones, S.; Romero-Losada, A.; Romero-Campero, F. Multivariate Projection Techniques to Reduce Dimensionality in Large Datasets. In Smart Data. State-of-the-Art Perspectives in Computing and Applications; CRC Press, Taylor & Francis Group: Boca Raton, FL, USA, 2019.

- Tsendbazar, N.; de Bruin, S.; Mora, B.; Schouten, L.; Herold, M. Comparative assessment of thematic accuracy of GLC maps for specific applications using existing reference data. Int. J. Appl. Earth Obs. Geoinf. 2016, 44, 124–135.

- Pérez, C.J.; Girón, F.J.; Martín, J.; Ruiz, M.; Rojano, C. Misclassified multinomial data: A Bayesian approach. Rev. Real Acad. Cienc. Exactas Fís. Nat. Ser. A Mat. (RACSAM) 2007, 101, 71–80.

- R Core Team. R: A Language and Environment for Statistical Computing; R Foundation for Statistical Computing: Vienna, Austria, 2021.

- Meredith, M.; Kruschke, J. HDInterval: Highest (Posterior) Density Intervals. R Package Version 0.2.2. 2020. Available online: https://cran.r-project.org/web/packages/HDInterval/index.html (accessed on 12 July 2021).

- Signorell, A.; Aho, K.; Alfons, A.; Anderegg, N.; Aragon, T.; Arachchige, C.; Arppe, A.; Baddeley, A.; Barton, K.; Bolker, B.; et al. DescTools: Tools for Descriptive Statistics. R Package Version 0.99.44. 2021. Available online: https://cran.r-project.org/web/packages/DescTools/index.html (accessed on 12 December 2021).

- Tsagris, M.; Athineou, G. Compositional: Compositional Data Analysis. R Package Version 4.8. 2021. Available online: https://cran.r-project.org/web/packages/Compositional/index.html (accessed on 10 August 2021).

- Grandini, M.; Bagli, E.; Visani, G. Metrics for Multi-Class Classification: An Overview. arXiv 2020, arXiv:2008.05756.

- McNemar, Q. Note on the sampling error of the difference between correlated proportions or percentages. Psychometrika 1947, 12, 153–157.

- Edwards, A. Note on the “correction for continuity” in testing the significance of the difference between correlated proportions. Psychometrika 1948, 13, 185–187.

- Balakrishnan, N.; Johnson, N.L.; Kotz, S. Multinomial Distributions. In Discrete Multivariate Distributions; Series in Probability and Statistics; Wiley: Hoboken, NJ, USA, 1997; Chapter 2.

- Kotz, S.; Balakrishnan, N.; Johnson, N.L. Dirichlet and Inverted Dirichlet Distributions. In Continuous Multivariate Distributions: Models and Applications; Series in Probability and Statistics; Wiley: Hoboken, NJ, USA, 2000; Volume 1, Chapter 49; pp. 458–527.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).