1. Introduction

Solving systems of linear equations is one of the most important subjects in numerical linear algebra. In particular, applied mathematics and engineering often require the solution of linear systems with tridiagonal coefficient matrices. Solving tridiagonal linear systems generally involves finding $N$-dimensional vectors $x$ such that $Ax = b$ for given $N$-by-$N$ tridiagonal matrices $A$ and $N$-dimensional vectors $b$. The cyclic reduction method is a finite-step direct method for computing the solutions $x$ [1,2]. It first transforms the tridiagonal coefficient matrices $A$ into pentadiagonal matrices whose subdiagonal and superdiagonal entries are all equal to 0. The right-hand side vectors $b$ are, of course, changed simultaneously. In iterative cyclic reductions, the two non-zero off-diagonals of the coefficient matrices gradually move away from the main diagonal, and the coefficient matrices are eventually reduced to diagonal matrices. Error analysis of the cyclic reduction method has been reported in [3], and a variant of the cyclic reduction method has also been presented, for example, in [4]. The stride reduction method is a generalization of the cyclic reduction method. It can solve problems where $A$ are $M$-tridiagonal matrices, that is, matrices with bandwidth $M$ whose two non-zero off-diagonals consist of the $(1, M+1), (2, M+2), \dots, (N-M, N)$ entries and the $(M+1, 1), (M+2, 2), \dots, (N, N-M)$ entries [5]. If $A$ are symmetric positive definite, each stride reduction, including cyclic reduction, narrows the distribution of the roots of the characteristic polynomials associated with the coefficient matrices [6,7]. This is a desirable property, because it means that the reductions do not make the linear systems more difficult to solve.
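The scalar cyclic reduction described above can be illustrated with a short NumPy sketch. It is a dense illustration only, not an efficient or reference implementation. The helper name `cyclic_reduction_solve` is hypothetical. At stride $s$, the sketch multiplies both sides by $L = 2I - AD^{-1}$ with $D = \operatorname{diag}(A)$. This matrix has a unit diagonal and entries $-a_{i,i+s}/a_{i+s,i+s}$ and $-a_{i+s,i}/a_{i,i}$, so it cancels the current stride-$s$ off-diagonals. The sketch also assumes that every intermediate diagonal entry is non-zero, which holds, for example, for symmetric positive definite $A$.

```python
import numpy as np


def cyclic_reduction_solve(A, b):
    """Solve A x = b for a tridiagonal A by repeated cyclic reductions.

    Dense illustration only. At stride s, both sides are multiplied from the
    left by L = 2I - A D^{-1} with D = diag(A); L has a unit diagonal and
    cancels the stride-s off-diagonals while creating stride-2s ones.
    Assumes every intermediate diagonal entry is non-zero.
    """
    A = np.array(A, dtype=float)
    b = np.array(b, dtype=float)
    n = A.shape[0]
    s = 1  # A is currently s-tridiagonal
    while s < n:
        L = 2.0 * np.eye(n) - A / np.diag(A)  # column j of A divided by a_jj
        A, b = L @ A, L @ b
        s *= 2
    # No stride s < n remains, so A is diagonal up to rounding errors.
    return b / np.diag(A)


n = 7
A = 4.0 * np.eye(n) - np.eye(n, k=1) - np.eye(n, k=-1)  # SPD tridiagonal
b = np.arange(1.0, n + 1)
x = cyclic_reduction_solve(A, b)
print(np.allclose(A @ x, b))  # True
```

Each pass doubles the stride, so about $\log_2 N$ passes are needed. A practical code would update only the non-zero diagonals rather than form $N$-by-$N$ products.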

Here, we consider positive integers $n_1, n_2, \dots, n_m$ satisfying $n_1 + n_2 + \cdots + n_m = N$. The cyclic reduction method has been extended to solve problems where the coefficient matrices $A$ are block tridiagonal matrices [2,8]. Such matrices are expressed using $m$ square matrices $A_1, A_2, \dots, A_m$ and $2(m-1)$ rectangular matrices $B_1, B_2, \dots, B_{m-1}$ and $C_1, C_2, \dots, C_{m-1}$ as:

$$A = \begin{pmatrix} A_1 & B_1 & & & \\ C_1 & A_2 & B_2 & & \\ & \ddots & \ddots & \ddots & \\ & & C_{m-2} & A_{m-1} & B_{m-1} \\ & & & C_{m-1} & A_m \end{pmatrix},$$

where $A_i$ is $n_i$-by-$n_i$, $B_i$ is $n_i$-by-$n_{i+1}$, and $C_i$ is $n_{i+1}$-by-$n_i$. A block tridiagonal matrix can be regarded as a block matrix obtained by replacing the diagonal entries of a tridiagonal matrix with square matrices and the off-diagonal entries with rectangular matrices. This extended method is called the block cyclic reduction method. The forward stability of iterative block cyclic reductions has been discussed in [9], but changes in the roots of the characteristic polynomials of the coefficient matrices have not been studied. Block cyclic reductions do not work well in floating-point arithmetic if they greatly disperse the roots. Thus, the main purpose of this paper is to clarify whether block cyclic reductions narrow the root distribution, as stride reductions do.

The remainder of this paper is organized as follows. Section 2 briefly explains the block cyclic reduction method used for solving block tridiagonal linear systems. Section 3 shows that block $M$-tridiagonal matrices can be transformed into block tridiagonal (1-tridiagonal) matrices without changing the eigenvalues. We then interpret the transformation from block $M$-tridiagonal matrices to block $2M$-tridiagonal matrices in the block cyclic reduction as a composite transformation. This composite consists of the block tridiagonalization, its inverse, and the transformation from block tridiagonal to block 2-tridiagonal matrices. In Section 4, we find the relationship between the inverses of block tridiagonal matrices and those of block 2-tridiagonal matrices. Section 5 compares the roots of the characteristic polynomials of coefficient matrices transformed by block cyclic reductions with those of the original coefficient matrices $A$. It treats the cases where $A$ are block tridiagonal and symmetric positive definite or negative definite. Section 6 gives two numerical examples for observing the coefficient matrices appearing in iterative block cyclic reductions, together with the roots of their characteristic polynomials. Section 7 concludes the paper.

2. Block Cyclic Reduction

In this section, we briefly explain the block cyclic reduction method used for solving linear systems with block tridiagonal coefficient matrices.

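We consider the following $N$-by-$N$ block-band matrix. It is given using $m$ square matrices $A_1^{(M)}, A_2^{(M)}, \dots, A_m^{(M)}$ and $2(m-M)$ rectangular matrices $B_1^{(M)}, B_2^{(M)}, \dots, B_{m-M}^{(M)}$ and $C_1^{(M)}, C_2^{(M)}, \dots, C_{m-M}^{(M)}$ as:

$$A^{(M)} = \begin{pmatrix}
A_1^{(M)} & & & B_1^{(M)} & & \\
& A_2^{(M)} & & & \ddots & \\
& & \ddots & & & B_{m-M}^{(M)} \\
C_1^{(M)} & & & \ddots & & \\
& \ddots & & & A_{m-1}^{(M)} & \\
& & C_{m-M}^{(M)} & & & A_m^{(M)}
\end{pmatrix},$$

where $A_i^{(M)}$ is $n_i$-by-$n_i$, $B_i^{(M)}$ is $n_i$-by-$n_{i+M}$, and $C_i^{(M)}$ is $n_{i+M}$-by-$n_i$. The superscript in parentheses appearing in $A^{(M)}$ specifies the position of the non-zero off-diagonal bands $B_i^{(M)}$ and $C_i^{(M)}$. If $A_i^{(M)}$, $B_i^{(M)}$, and $C_i^{(M)}$ are all 1-by-1 matrices for every $i$, then $A^{(M)}$ is the $M$-tridiagonal matrix [6]. In that case, $A_i^{(M)}$, $B_i^{(M)}$, and $C_i^{(M)}$ are the $(i,i)$, $(i,i+M)$, and $(i+M,i)$ entries of $A^{(M)}$, respectively. Thus, we hereinafter refer to $A^{(M)}$ as the block $M$-tridiagonal matrix. We also refer to $A_i^{(M)}$, $B_i^{(M)}$, and $C_i^{(M)}$ as the $(i,i)$, $(i,i+M)$, and $(i+M,i)$ blocks of $A^{(M)}$, respectively. Note that block 1-tridiagonal matrices are the usual block tridiagonal matrices. In the case where $A_i^{(M)}$, $B_i^{(M)}$, and $C_i^{(M)}$ are not 1-by-1 matrices, we must pay attention to their numbers of rows and columns. For example, we can compute the matrix product $B_i^{(M)}A_{i+M}^{(M)}$ but cannot define $A_{i+M}^{(M)}B_i^{(M)}$ if $n_i \neq n_{i+M}$. This is unlike the case where $A_i^{(M)}$, $B_i^{(M)}$, and $C_i^{(M)}$ are all 1-by-1 matrices.

We hereinafter consider the case where $A_1^{(M)}, A_2^{(M)}, \dots, A_m^{(M)}$ are all nonsingular. Here, we prepare an $N$-by-$N$ block $M$-tridiagonal matrix $L^{(M)}$ involving the non-zero blocks $I_{n_i}$, $-B_i^{(M)}(A_{i+M}^{(M)})^{-1}$, and $-C_i^{(M)}(A_i^{(M)})^{-1}$:

$$L^{(M)} = \begin{pmatrix}
I_{n_1} & & & -B_1^{(M)}(A_{1+M}^{(M)})^{-1} & & \\
& I_{n_2} & & & \ddots & \\
& & \ddots & & & -B_{m-M}^{(M)}(A_m^{(M)})^{-1} \\
-C_1^{(M)}(A_1^{(M)})^{-1} & & & \ddots & & \\
& \ddots & & & I_{n_{m-1}} & \\
& & -C_{m-M}^{(M)}(A_{m-M}^{(M)})^{-1} & & & I_{n_m}
\end{pmatrix},$$

where $I_{n_i}$ are the $n_i$-by-$n_i$ identity matrices. The numbers of rows and columns of $I_{n_i}$, $-B_i^{(M)}(A_{i+M}^{(M)})^{-1}$, and $-C_i^{(M)}(A_i^{(M)})^{-1}$ coincide with those of $A_i^{(M)}$, $B_i^{(M)}$, and $C_i^{(M)}$, respectively. In other words, $L^{(M)}$ has the same block structure as $A^{(M)}$. We can then easily observe that the $(i,i+M)$ and $(i+M,i)$ blocks of $L^{(M)}A^{(M)}$ are all zero. The $(i,i+2M)$ and $(i+2M,i)$ blocks of $L^{(M)}A^{(M)}$ are, in general, non-zero. Hence $L^{(M)}A^{(M)}$ becomes an $N$-by-$N$ block $2M$-tridiagonal matrix $A^{(2M)}$. The non-zero blocks $A_i^{(2M)}$, $B_i^{(2M)}$, and $C_i^{(2M)}$ appear in the $(i,i)$, $(i,i+2M)$, and $(i+2M,i)$ positions. They are expressed using $A_i^{(M)}$, $B_i^{(M)}$, and $C_i^{(M)}$ as:

$$\begin{aligned}
A_i^{(2M)} &= A_i^{(M)} - B_i^{(M)}(A_{i+M}^{(M)})^{-1}C_i^{(M)} - C_{i-M}^{(M)}(A_{i-M}^{(M)})^{-1}B_{i-M}^{(M)}, && i = 1, 2, \dots, m,\\
B_i^{(2M)} &= -B_i^{(M)}(A_{i+M}^{(M)})^{-1}B_{i+M}^{(M)}, && i = 1, 2, \dots, m-2M,\\
C_i^{(2M)} &= -C_{i+M}^{(M)}(A_{i+M}^{(M)})^{-1}C_i^{(M)}, && i = 1, 2, \dots, m-2M,
\end{aligned}$$

where terms involving $B_j^{(M)}$ or $C_j^{(M)}$ with $j < 1$ or $j > m-M$ are regarded as zero.

Thus, we multiply both sides of the block $M$-tridiagonal linear system $A^{(M)}x = b^{(M)}$ by $L^{(M)}$ from the left. This transforms it into the block $2M$-tridiagonal linear system $A^{(2M)}x = b^{(2M)}$, where $b^{(2M)} = L^{(M)}b^{(M)}$. This transformation is the block cyclic reduction [2]. We can apply the block cyclic reduction again to the system $A^{(2M)}x = b^{(2M)}$ if the diagonal blocks $A_1^{(2M)}, A_2^{(2M)}, \dots, A_m^{(2M)}$ of $A^{(2M)}$ are all nonsingular. Iterative block cyclic reductions therefore move the non-zero off-diagonal blocks gradually away from the diagonal blocks. They eventually generate linear systems with block diagonal matrices.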
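The iteration just described can be sketched as follows for arbitrary block sizes $n_1, \dots, n_m$. This is a minimal dense NumPy sketch, not the authors' implementation, since the paper's computations used MATLAB. It forms $L^{(M)}$ as $2I - A^{(M)}D^{-1}$, where $D = \operatorname{diag}(A_1^{(M)}, \dots, A_m^{(M)})$ is the block diagonal part of $A^{(M)}$. One can check block by block that this matrix has identity diagonal blocks and the off-diagonal blocks $-B_i^{(M)}(A_{i+M}^{(M)})^{-1}$ and $-C_i^{(M)}(A_i^{(M)})^{-1}$ given above. The helper names are hypothetical. The random test matrix is an assumption: it is symmetric and strictly diagonally dominant, hence positive definite with nonsingular diagonal blocks.

```python
import numpy as np


def offsets(sizes):
    """Block i spans rows/columns off[i]:off[i+1]."""
    return np.concatenate(([0], np.cumsum(sizes))).astype(int)


def block_tridiagonal(sizes, rng):
    """Random symmetric, strictly diagonally dominant block tridiagonal matrix
    (block sizes at most 3 are assumed so that the dominance margin holds)."""
    off = offsets(sizes)
    A = np.zeros((off[-1], off[-1]))
    for i, n in enumerate(sizes):
        s = slice(off[i], off[i + 1])
        S = rng.uniform(-1.0, 1.0, (n, n))
        A[s, s] = 10.0 * np.eye(n) + 0.5 * (S + S.T)       # A_i
        if i + 1 < len(sizes):
            t = slice(off[i + 1], off[i + 2])
            B = rng.uniform(-1.0, 1.0, (n, sizes[i + 1]))
            A[s, t], A[t, s] = B, B.T                       # B_i and C_i = B_i^T
    return A


def block_cyclic_reduction_step(A, b, sizes):
    """Return (L A, L b) with L = 2I - A D^{-1}, D = diag(A_1, ..., A_m)."""
    off = offsets(sizes)
    D_inv = np.zeros_like(A)
    for i in range(len(sizes)):
        s = slice(off[i], off[i + 1])
        D_inv[s, s] = np.linalg.inv(A[s, s])
    L = 2.0 * np.eye(A.shape[0]) - A @ D_inv
    return L @ A, L @ b


def block_cyclic_reduction_solve(A, b, sizes):
    """Reduce until block diagonal (M >= m), then solve the m small systems."""
    M = 1
    while M < len(sizes):          # A is block M-tridiagonal here
        A, b = block_cyclic_reduction_step(A, b, sizes)
        M *= 2
    off = offsets(sizes)
    x = np.empty_like(b)
    for i in range(len(sizes)):
        s = slice(off[i], off[i + 1])
        x[s] = np.linalg.solve(A[s, s], b[s])
    return x


rng = np.random.default_rng(0)
sizes = [2, 3, 1, 2]                     # n_1, ..., n_m with m = 4, N = 8
A = block_tridiagonal(sizes, rng)
b = rng.standard_normal(A.shape[0])
x = block_cyclic_reduction_solve(A, b, sizes)
print(np.allclose(A @ x, b))             # True
```

Because the block sizes differ, the sketch never multiplies blocks in an order that is only defined when $n_i = n_{i+M}$; all products go through the dense $N$-by-$N$ arrays. A practical code would store and update only the non-zero blocks.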

3. Composite Transformation

In this section, we first show that block $M$-tridiagonal matrices can be transformed into block tridiagonal (1-tridiagonal) matrices while preserving the eigenvalues. Next, we consider the transformation from the block $M$-tridiagonal matrix $A^{(M)}$ to the block $2M$-tridiagonal matrix $A^{(2M)}$. We express it through the transformation from block tridiagonal (1-tridiagonal) matrices to block 2-tridiagonal matrices, combined with two similarity transformations.

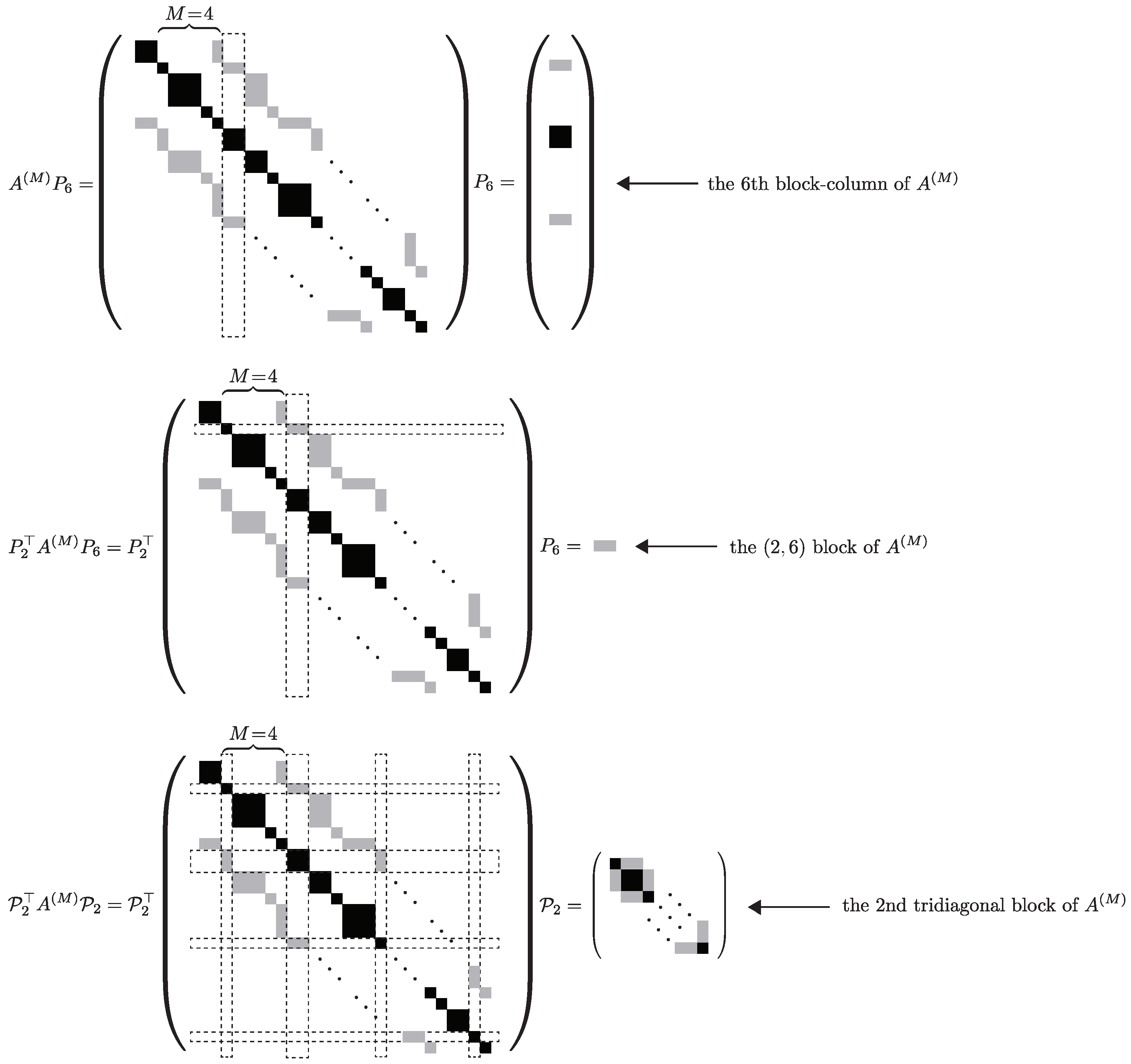

We consider

N-by-

matrices:

where

O denotes the zero matrix, whose entries are all 0, and the number of rows in the 1st, 2nd, ⋯,

mth blocks are equal to those in

,

, ⋯,

, respectively. Then, it is obvious that

,

, ⋯,

become the 1st, 2nd, ⋯,

mth block-columns of

, respectively. Furthermore, for

, it is observed that

,

, ⋯,

coincide with the 1st, 2nd, ⋯,

mth block-rows of

, respectively. Thus, we see that

are the

blocks of

for

—namely:

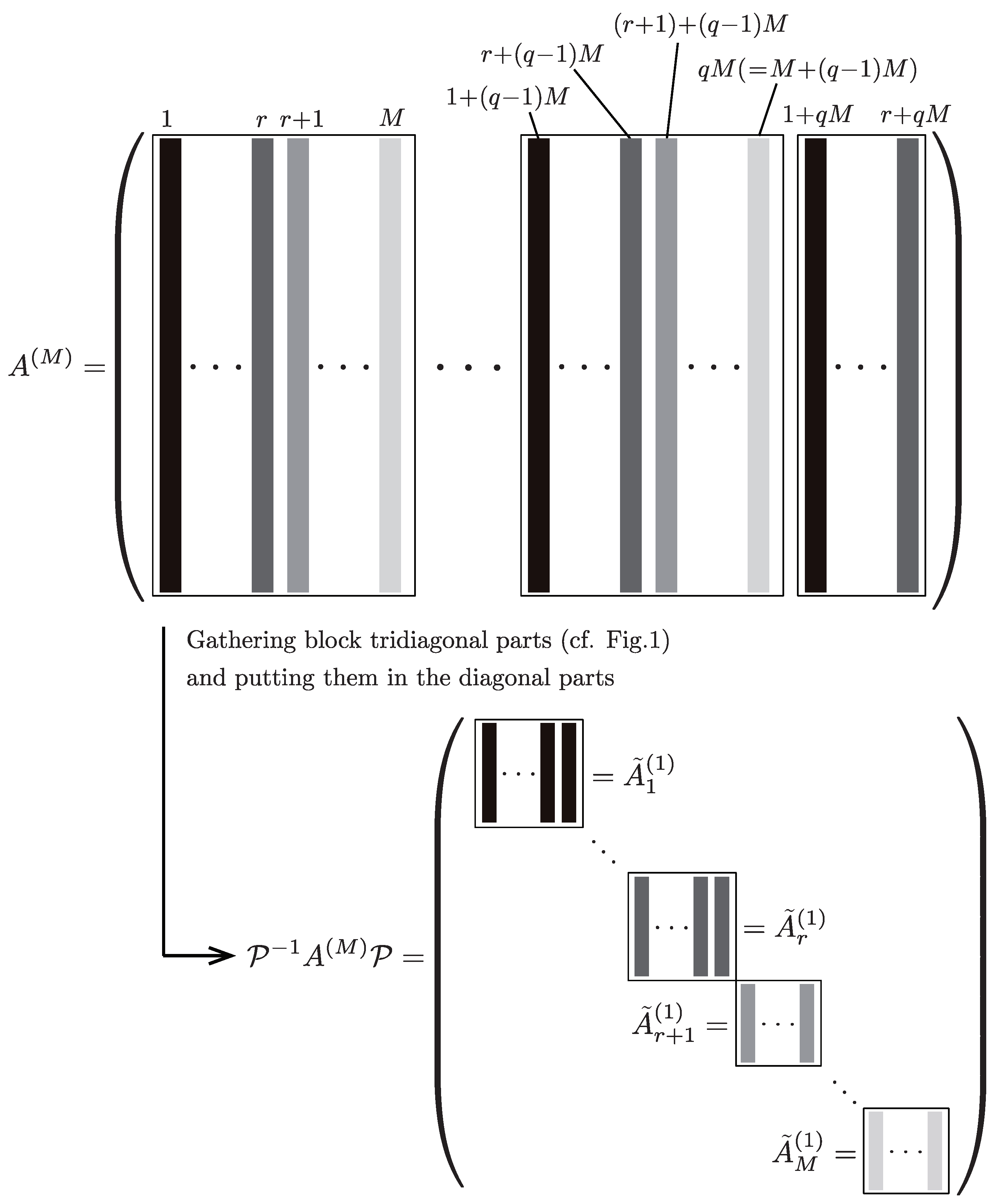

Here, we introduce

matrices

for

, where

q and

r are the quotient and the remainder after the division of

m by

M, respectively. Then, it follows that:

Thus, by gathering these, we can derive:

Similarly, by letting

for

, we obtain:

See, also,

Figure 1 for an auxiliary example of gathering a block tridiagonal part, as shown in (

6) and (

7). Therefore, using the permutation matrix

, we can complete a block tridiagonalization of

as:

where

. Here, we may regard

as a block diagonal matrix in terms of

. However, we emphasize that

,

are nothing but auxiliary matrices and are essentially block tridiagonal matrices in terms of realistic blocks

,

, and

. Furthermore, in the following sections, we should recognize that (

8) is a block tridiagonalization and not a block diagonalization of

.

Figure 2 gives a sketch of a block tridiagonalization of

. Noting that the

is an orthogonal matrix—namely,

, we can determine that

has the same eigenvalues as

. To summarize, we can divide a linear system with the block

M-tridiagonal coefficient matrix

into

M linear systems with the block tridiagonal coefficient matrices

,

, ⋯,

without the loss of the eigenvalues of

.

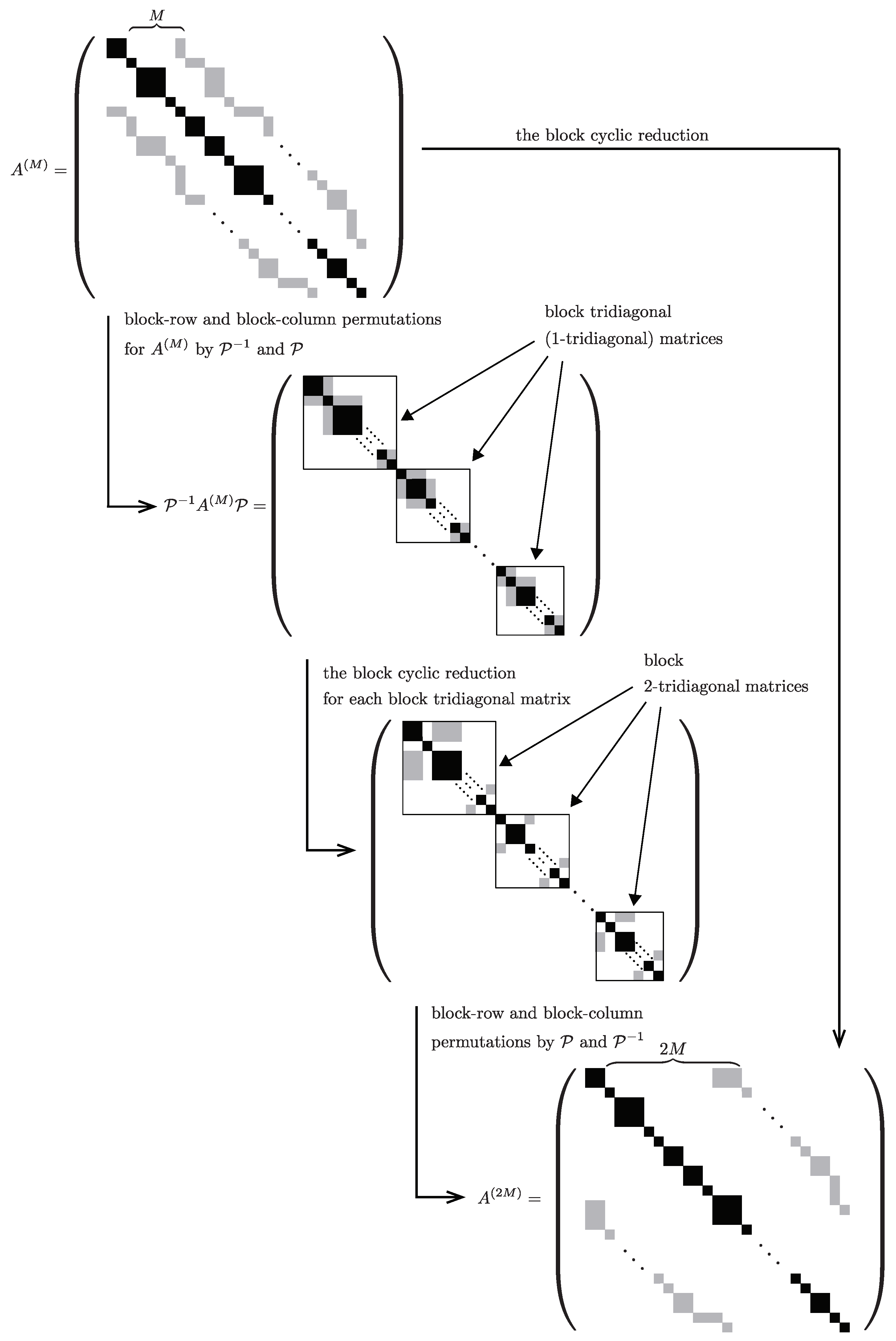

Recalling that

, we can decompose

into:

Noting that

has the same block structure as

, whose block tridiagonalization is shown in

Section 3, we can immediately derive:

where

Combining (

8) and (

10) with (

9), we obtain:

This implies that the transformation from

to

can also be completed using three transformations: (1) the block tridiagonalization from

to

; (2) the transformations from the block 1-tridiagonal matrices

to the block 2-tridiagonal matrices

; and (3) the block

-tridiagonalization from

to

. See

Figure 3 for the relationships among the block tridiagonal (1-tridiagonal), 2-tridiagonal,

M-tridiagonal, and

-tridiagonal matrices.
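Both the block tridiagonalization (8) and the composite form of $A^{(2M)}$ can be checked numerically. The NumPy sketch below does this for a random symmetric block 2-tridiagonal matrix with $m = 5$ blocks, so $q = 2$ and $r = 1$. The helper names and the test matrix are illustrative assumptions. Python indices are 0-based, so the group $k, k+M, k+2M, \dots$ of the text becomes `range(k, m, M)` for `k = 0, ..., M-1`. Each reduction uses $L = 2I - AD^{-1}$ with $D$ the block diagonal part, which reproduces $L^{(M)}$ and, applied to $T_k$, $L_k$.

```python
import numpy as np


def offsets(sizes):
    return np.concatenate(([0], np.cumsum(sizes))).astype(int)


def block_band(sizes, M, rng):
    """Random symmetric, strictly diagonally dominant block M-tridiagonal matrix
    (block sizes at most 3 assumed)."""
    off = offsets(sizes)
    A = np.zeros((off[-1], off[-1]))
    for i, n in enumerate(sizes):
        s = slice(off[i], off[i + 1])
        S = rng.uniform(-1.0, 1.0, (n, n))
        A[s, s] = 10.0 * np.eye(n) + 0.5 * (S + S.T)
        if i + M < len(sizes):
            t = slice(off[i + M], off[i + M + 1])
            B = rng.uniform(-1.0, 1.0, (n, sizes[i + M]))
            A[s, t], A[t, s] = B, B.T        # (i, i+M) and (i+M, i) blocks
    return A


def reduce_once(A, sizes):
    """One block cyclic reduction L A with L = 2I - A D^{-1}."""
    off = offsets(sizes)
    D_inv = np.zeros_like(A)
    for i in range(len(sizes)):
        s = slice(off[i], off[i + 1])
        D_inv[s, s] = np.linalg.inv(A[s, s])
    return (2.0 * np.eye(A.shape[0]) - A @ D_inv) @ A


def block_diag(blocks):
    N = sum(B.shape[0] for B in blocks)
    out, k = np.zeros((N, N)), 0
    for B in blocks:
        out[k:k + B.shape[0], k:k + B.shape[0]] = B
        k += B.shape[0]
    return out


rng = np.random.default_rng(1)
sizes, M = [1, 2, 2, 1, 3], 2               # m = 5 = qM + r with q = 2, r = 1
A = block_band(sizes, M, rng)
off = offsets(sizes)

# P = (P_1, ..., P_M), where P_k collects the block columns k, k+M, k+2M, ...
groups = [list(range(k, len(sizes), M)) for k in range(M)]
cols = [np.concatenate([np.arange(off[i], off[i + 1]) for i in g]) for g in groups]
P = np.eye(A.shape[0])[:, np.concatenate(cols)]

T = [A[np.ix_(c, c)] for c in cols]          # T_k = P_k^T A P_k
print(np.allclose(P.T @ A @ P, block_diag(T)))                      # (8): True
print(np.allclose(np.linalg.eigvalsh(A),
                  np.linalg.eigvalsh(block_diag(T))))               # True

# Composite form: A^{(2M)} = P diag(L_1 T_1, ..., L_M T_M) P^T.
A2M = reduce_once(A, sizes)
LT = [reduce_once(T[k], [sizes[i] for i in g]) for k, g in enumerate(groups)]
print(np.allclose(A2M, P @ block_diag(LT) @ P.T))                   # True
```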

4. Inverses of Block 1-Tridiagonal and 2-Tridiagonal Matrices

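In this section, we relate the inverse of the block 1-tridiagonal matrix $A^{(1)}$ to that of the block 2-tridiagonal matrix $A^{(2)} = L^{(1)}A^{(1)}$. Here $A^{(1)}$ has the diagonal blocks $A_i^{(1)}$ and the off-diagonal blocks $B_i^{(1)}$ and $C_i^{(1)}$. This relationship is useful for comparing the roots of the characteristic polynomials of $A^{(1)}$ and $A^{(2)}$ in the next section.

We introduce two auxiliary matrices $D$ and $J$:

$$D = \begin{pmatrix} A_1^{(1)} & & \\ & \ddots & \\ & & A_m^{(1)} \end{pmatrix}, \qquad J = \begin{pmatrix} I_{n_1} & & & \\ & -I_{n_2} & & \\ & & \ddots & \\ & & & (-1)^{m-1}I_{n_m} \end{pmatrix},$$

where $J$ involves the $n_i$-dimensional identity matrices $I_{n_i}$. We then derive the following lemma, which expresses the transformation matrix $L^{(1)}$ using $D$ and $J$.

Lemma 1. The transformation matrix $L^{(1)}$ can be decomposed using $D$ and $J$ into:

$$L^{(1)} = JA^{(1)}JD^{-1}. \tag{14}$$

Proof. It is obvious that $JA^{(1)}J$ and $2D - A^{(1)}$ are both block 1-tridiagonal matrices. It is easy to check that $[JA^{(1)}J]_{i,i} = A_i^{(1)} = [2D - A^{(1)}]_{i,i}$, where $[\,\cdot\,]_{i,j}$ denotes the $(i,j)$ block of a matrix. Similarly, it turns out that $[JA^{(1)}J]_{i,i+1} = -B_i^{(1)} = [2D - A^{(1)}]_{i,i+1}$ and $[JA^{(1)}J]_{i+1,i} = -C_i^{(1)} = [2D - A^{(1)}]_{i+1,i}$. All other blocks of both matrices are zero, so we can derive:

$$JA^{(1)}J = 2D - A^{(1)}. \tag{15}$$

Furthermore, it can easily be observed that $L^{(1)}D = 2D - A^{(1)}$. Since $D$ is nonsingular, we obtain (14). □

Since $J^{-1} = J$ is obvious, it holds that $JA^{(1)}J = JA^{(1)}J^{-1}$. This implies that the eigenvalues of $JA^{(1)}J$ coincide with those of $A^{(1)}$. Thus, $JA^{(1)}J$ is nonsingular if $A^{(1)}$ is nonsingular. From Lemma 1, it immediately follows that $\det L^{(1)} = \det A^{(1)}/\det D$. Therefore, the inverse of $L^{(1)}$ exists if $A^{(1)}$ is nonsingular. The following proposition gives the relationship of $(A^{(2)})^{-1}$ to $(A^{(1)})^{-1}$ and $J$.

Proposition 1. For the blocks $[(A^{(2)})^{-1}]_{i,j}$ and $[(A^{(1)})^{-1}]_{i,j}$, it holds that:

$$[(A^{(2)})^{-1}]_{i,j} = \begin{cases} [(A^{(1)})^{-1}]_{i,j}, & i - j \text{ even}, \\ O, & i - j \text{ odd}, \end{cases} \tag{16}$$

where $O$ denotes the zero matrix, whose entries are all 0, as introduced previously. Accordingly,

$$(A^{(2)})^{-1} = \frac{1}{2}\left((A^{(1)})^{-1} + J(A^{(1)})^{-1}J\right). \tag{17}$$

Proof. Observing the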

ith block-row on both sides of the trivial identity

, we can obtain

m matrix equations:

Multiplying both sides of the 1st, 2nd, ⋯,

mth equations in (

18) from the right by

,

, ⋯,

, respectively, we can rewrite (

18) as:

where

,

and

. Similarly, by focusing on the

jth block column of both sides of

and multiplying the 1st, 2nd, ⋯,

mth equations from the left by

,

, ⋯,

, respectively, we can derive:

Adding the

kth equation of (

19) to that of (

20), multiplying this by

, and letting

, we can thus obtain:

where

and

. The summation for

of (

21) leads to:

From Lemma 1, we can see that:

. Since it follows from

that

, we can obtain:

Consequently, by combining (

22) with (

23), we can derive:

which implies (

16). The matrix identity

also gives the relationship of blocks in

and

:

Considering (

24) and (

25), we then have (

17). □

Proposition 1 plays a key role in understanding the change in the roots of characteristic polynomials of coefficient matrices in linear systems after block cyclic reductions.
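Lemma 1 and Proposition 1 can be checked numerically on a random example. The NumPy sketch below is an illustration under assumptions. It uses a random, strictly diagonally dominant, not necessarily symmetric block tridiagonal $A^{(1)}$ with unequal block sizes. It takes $D = \operatorname{diag}(A_1^{(1)}, \dots, A_m^{(1)})$ and $J = \operatorname{diag}(I_{n_1}, -I_{n_2}, I_{n_3}, \dots)$. It builds $L^{(1)}$ directly from its blocks $I_{n_i}$, $-B_i^{(1)}(A_{i+1}^{(1)})^{-1}$, and $-C_i^{(1)}(A_i^{(1)})^{-1}$. All variable names are hypothetical.

```python
import numpy as np

rng = np.random.default_rng(2)
sizes = [2, 1, 3, 2, 1]                        # n_1, ..., n_m (m = 5)
off = np.concatenate(([0], np.cumsum(sizes))).astype(int)
N, m = off[-1], len(sizes)
blk = [slice(off[i], off[i + 1]) for i in range(m)]

# Random strictly diagonally dominant block tridiagonal A^{(1)} (not symmetric).
A = np.zeros((N, N))
for i in range(m):
    A[blk[i], blk[i]] = 10.0 * np.eye(sizes[i]) + rng.uniform(-1, 1, (sizes[i], sizes[i]))
    if i + 1 < m:
        A[blk[i], blk[i + 1]] = rng.uniform(-1, 1, (sizes[i], sizes[i + 1]))  # B_i
        A[blk[i + 1], blk[i]] = rng.uniform(-1, 1, (sizes[i + 1], sizes[i]))  # C_i

# Auxiliary matrices D = diag(A_1, ..., A_m) and J = diag(I, -I, I, ...).
D = np.zeros((N, N))
for s in blk:
    D[s, s] = A[s, s]
J = np.diag(np.repeat((-1.0) ** np.arange(m), sizes))

# L^{(1)} from its blocks: I on the diagonal, -B_i A_{i+1}^{-1}, -C_i A_i^{-1}.
L = np.eye(N)
for i in range(m - 1):
    s, t = blk[i], blk[i + 1]
    L[s, t] = -A[s, t] @ np.linalg.inv(A[t, t])
    L[t, s] = -A[t, s] @ np.linalg.inv(A[s, s])
A2 = L @ A                                      # A^{(2)}

print(np.allclose(J @ A @ J, 2 * D - A))                 # (15): True
print(np.allclose(L, J @ A @ J @ np.linalg.inv(D)))      # Lemma 1: True

Ainv, A2inv = np.linalg.inv(A), np.linalg.inv(A2)
print(np.allclose(A2inv, 0.5 * (Ainv + J @ Ainv @ J)))   # (17): True
block_of = np.repeat(np.arange(m), sizes)                 # block index of each row
odd = (block_of[:, None] + block_of[None, :]) % 2 == 1
print(np.allclose(A2inv[odd], 0.0), np.allclose(A2inv[~odd], Ainv[~odd]))  # (16): True True
```

The check does not use symmetry, which is consistent with Lemma 1 and Proposition 1 requiring only that $A^{(1)}$ and its diagonal blocks be nonsingular.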

5. Roots of Characteristic Polynomial Sequence

In this section, we first investigate the roots of the characteristic polynomial of the block 2-tridiagonal matrix $A^{(2)}$. This matrix is obtained from the block 1-tridiagonal matrix $A^{(1)}$ by the block cyclic reduction, under the assumption that $A^{(1)}$ is symmetric positive definite.

With the help of Proposition 1, we present a theorem for the roots of the characteristic polynomials of $A^{(1)}$ and $A^{(2)}$.

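Theorem 1. Assume that the block tridiagonal matrix $A^{(1)}$ is symmetric positive definite. Then, for the block 2-tridiagonal matrix $A^{(2)}$, it holds that:

$$\lambda_N(A^{(1)}) \le \lambda_N(A^{(2)}), \qquad \lambda_1(A^{(2)}) \le \lambda_1(A^{(1)}), \tag{26}$$

where $\lambda_k(\cdot)$ denotes the $k$th largest root of the characteristic polynomial of a matrix, that is, its $k$th largest eigenvalue.

Proof. Let $v$ be a normalized eigenvector corresponding to $\lambda_1((A^{(2)})^{-1})$. Both $(A^{(1)})^{-1}$ and $J(A^{(1)})^{-1}J$ are symmetric, so considering the Rayleigh quotient [10], we can derive:

$$v^\top(A^{(1)})^{-1}v \le \lambda_1((A^{(1)})^{-1}), \tag{27}$$

$$v^\top J(A^{(1)})^{-1}Jv \le \lambda_1(J(A^{(1)})^{-1}J) = \lambda_1((A^{(1)})^{-1}). \tag{28}$$

Equality holds in (27) if and only if $v$ is an eigenvector of $(A^{(1)})^{-1}$ corresponding to $\lambda_1((A^{(1)})^{-1})$. Equality holds in (28) if and only if $v$ is an eigenvector of $J(A^{(1)})^{-1}J$ corresponding to $\lambda_1(J(A^{(1)})^{-1}J)$. The inequalities (27) and (28) immediately lead to:

$$\frac{1}{2}\left(v^\top(A^{(1)})^{-1}v + v^\top J(A^{(1)})^{-1}Jv\right) \le \lambda_1((A^{(1)})^{-1}). \tag{29}$$

Using Proposition 1, we can rewrite the Rayleigh quotient $v^\top(A^{(2)})^{-1}v$ as:

$$v^\top(A^{(2)})^{-1}v = \frac{1}{2}\left(v^\top(A^{(1)})^{-1}v + v^\top J(A^{(1)})^{-1}Jv\right). \tag{30}$$

From (29) and (30), we can see that:

$$\lambda_1((A^{(2)})^{-1}) = v^\top(A^{(2)})^{-1}v \le \lambda_1((A^{(1)})^{-1}). \tag{31}$$

Similarly, let $w$ be a normalized eigenvector corresponding to the minimal eigenvalue $\lambda_N((A^{(2)})^{-1})$. Comparing the Rayleigh quotient $w^\top(A^{(2)})^{-1}w$ with $\lambda_N((A^{(1)})^{-1})$, it follows that:

$$\lambda_N((A^{(1)})^{-1}) \le \lambda_N((A^{(2)})^{-1}). \tag{32}$$

Thus, by combining (31) with (32), we can obtain:

$$\lambda_N((A^{(1)})^{-1}) \le \lambda_N((A^{(2)})^{-1}) \le \lambda_1((A^{(2)})^{-1}) \le \lambda_1((A^{(1)})^{-1}).$$

Since the eigenvalues of a matrix are the reciprocals of the eigenvalues of its inverse, we therefore have (26). □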
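Theorem 1 can be checked with a quick randomized test in NumPy. The sketch assumes random symmetric, strictly diagonally dominant (hence positive definite) block tridiagonal test matrices with $2 \le m \le 7$ blocks of sizes between 1 and 3. The generator and helper names are hypothetical. Each trial performs one block cyclic reduction and compares the smallest and largest eigenvalues before and after it.

```python
import numpy as np


def spd_block_tridiagonal(sizes, rng):
    """Random symmetric, strictly diagonally dominant (hence SPD) block
    tridiagonal matrix; block sizes at most 3 are assumed."""
    off = np.concatenate(([0], np.cumsum(sizes))).astype(int)
    A = np.zeros((off[-1], off[-1]))
    for i, n in enumerate(sizes):
        s = slice(off[i], off[i + 1])
        S = rng.uniform(-1.0, 1.0, (n, n))
        A[s, s] = 10.0 * np.eye(n) + 0.5 * (S + S.T)
        if i + 1 < len(sizes):
            t = slice(off[i + 1], off[i + 2])
            B = rng.uniform(-1.0, 1.0, (n, sizes[i + 1]))
            A[s, t], A[t, s] = B, B.T
    return A


def reduce_once(A, sizes):
    """One block cyclic reduction L A with L = 2I - A D^{-1}."""
    off = np.concatenate(([0], np.cumsum(sizes))).astype(int)
    D_inv = np.zeros_like(A)
    for i in range(len(sizes)):
        s = slice(off[i], off[i + 1])
        D_inv[s, s] = np.linalg.inv(A[s, s])
    return (2.0 * np.eye(A.shape[0]) - A @ D_inv) @ A


rng = np.random.default_rng(3)
violations = 0
for _ in range(200):
    sizes = rng.integers(1, 4, size=rng.integers(2, 8))   # m in [2, 7], n_i in [1, 3]
    A1 = spd_block_tridiagonal(sizes, rng)
    A2 = reduce_once(A1, sizes)
    e1 = np.linalg.eigvalsh(A1)
    e2 = np.linalg.eigvalsh(0.5 * (A2 + A2.T))              # A2 is symmetric up to rounding
    if e2[0] < e1[0] - 1e-10 or e2[-1] > e1[-1] + 1e-10:
        violations += 1
print("violations of (26):", violations)                    # Theorem 1 predicts 0
```

The small tolerance only absorbs rounding errors. It does not weaken the inequality being tested.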

The following theorem is a specialization of Theorem 1.

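Theorem 2. Assume that the block tridiagonal matrix $A^{(1)}$ is symmetric positive definite and that its eigenvalues are distinct from each other. Furthermore, let the non-zero blocks $A_i^{(1)}$, $B_i^{(1)}$, and $C_i^{(1)}$ all be nonsingular square matrices of the same size. Then, for the block 2-tridiagonal matrix $A^{(2)}$, it holds that:

$$\lambda_N(A^{(1)}) < \lambda_N(A^{(2)}), \qquad \lambda_1(A^{(2)}) < \lambda_1(A^{(1)}). \tag{33}$$

Proof. We begin by reconsidering the proof of Theorem 1. The proof of (33) is completed by proving $\lambda_1((A^{(2)})^{-1}) < \lambda_1((A^{(1)})^{-1})$ and $\lambda_N((A^{(2)})^{-1}) > \lambda_N((A^{(1)})^{-1})$. The equality in (31) does not hold if the equality in (29) does not hold. Recall that the equality in (29) fails if $v$ is not an eigenvector corresponding to $\lambda_1((A^{(1)})^{-1})$ of at least one of $(A^{(1)})^{-1}$ and $J(A^{(1)})^{-1}J$. Note that $\lambda_1(J(A^{(1)})^{-1}J) = \lambda_1((A^{(1)})^{-1})$ and that this eigenvalue is simple. We can thus see that $\lambda_1((A^{(2)})^{-1}) < \lambda_1((A^{(1)})^{-1})$ whenever the eigenvector of $(A^{(1)})^{-1}$ corresponding to $\lambda_1((A^{(1)})^{-1})$ differs from that of $J(A^{(1)})^{-1}J$ corresponding to $\lambda_1(J(A^{(1)})^{-1}J)$. Similarly, the inequality $\lambda_N((A^{(2)})^{-1}) > \lambda_N((A^{(1)})^{-1})$ holds whenever the eigenvector of $(A^{(1)})^{-1}$ corresponding to $\lambda_N((A^{(1)})^{-1})$ differs from that of $J(A^{(1)})^{-1}J$ corresponding to $\lambda_N(J(A^{(1)})^{-1}J)$.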

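Let us assume here that $v$ is an eigenvector of both $(A^{(1)})^{-1}$ and $J(A^{(1)})^{-1}J$ corresponding to $\lambda_1((A^{(1)})^{-1})$, that is, $(A^{(1)})^{-1}v = \lambda_1((A^{(1)})^{-1})v$ and $J(A^{(1)})^{-1}Jv = \lambda_1((A^{(1)})^{-1})v$. From $J^2 = I_N$, we can then derive:

$$(A^{(1)})^{-1}(Jv) = \lambda_1((A^{(1)})^{-1})(Jv).$$

This implies that $Jv$ is also an eigenvector of $(A^{(1)})^{-1}$ corresponding to $\lambda_1((A^{(1)})^{-1})$. Since $(A^{(1)})^{-1}$ does not have multiple eigenvalues, we obtain $Jv = \pm v$. Let $v_i$ denote the $n_i$-dimensional vector in the $i$th block row of $v$. We observe that:

$$Jv = \begin{pmatrix} v_1 \\ -v_2 \\ v_3 \\ \vdots \\ (-1)^{m-1}v_m \end{pmatrix}.$$

Hence we can specify $v$ as:

$$v = \begin{pmatrix} v_1 \\ \mathbf{0} \\ v_3 \\ \mathbf{0} \\ \vdots \end{pmatrix} \quad \text{or} \quad v = \begin{pmatrix} \mathbf{0} \\ v_2 \\ \mathbf{0} \\ v_4 \\ \vdots \end{pmatrix},$$

where $\mathbf{0}$ denotes the zero vector, whose entries are all 0. Thus, for odd $m$, by focusing on the 1st, 3rd, $\dots$, $m$th block rows on both sides of $A^{(1)}v = v/\lambda_1((A^{(1)})^{-1})$, we have: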

Since

,

, ⋯,

are all nonsingular, (34) immediately leads to

,

, ⋯,

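. Namely, $v$ is the zero vector. This contradicts the assumption that $v$ is an eigenvector of both $(A^{(1)})^{-1}$ and $J(A^{(1)})^{-1}J$ corresponding to $\lambda_1((A^{(1)})^{-1})$. The contradiction is derived similarly in the case where $m$ is even. Therefore, we conclude that $\lambda_1((A^{(2)})^{-1}) < \lambda_1((A^{(1)})^{-1})$. We also obtain $\lambda_N((A^{(2)})^{-1}) > \lambda_N((A^{(1)})^{-1})$ along the same lines as the above proof. □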

We recall here that the transformation from the block $M$-tridiagonal matrix $A^{(M)}$ to the block $2M$-tridiagonal matrix $A^{(2M)}$ can be regarded as a composite transformation. It consists of the transformations from the block tridiagonal matrices $T_k$ to the block 2-tridiagonal matrices $L_kT_k$ and two similarity transformations. By combining this with Theorems 1 and 2, we can derive the following theorem concerning the roots of the characteristic polynomials of $A^{(M)}$ and $A^{(2M)}$.

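Theorem 3. Assume that the block $M$-tridiagonal matrix $A^{(M)}$ is symmetric positive definite, where $M$ is a positive integer. Then, for the block $2M$-tridiagonal matrix $A^{(2M)}$, it holds that:

$$\lambda_N(A^{(M)}) \le \lambda_N(A^{(2M)}), \qquad \lambda_1(A^{(2M)}) \le \lambda_1(A^{(M)}).$$

Furthermore, assume that the roots of the characteristic polynomial of $A^{(M)}$ are distinct from each other. Assume also that the non-zero blocks $A_i^{(M)}$, $B_i^{(M)}$, and $C_i^{(M)}$ are all nonsingular square matrices of the same size. Then, it holds that:

$$\lambda_N(A^{(M)}) < \lambda_N(A^{(2M)}), \qquad \lambda_1(A^{(2M)}) < \lambda_1(A^{(M)}).$$

It is obvious that $A^{(2)}, A^{(4)}, \dots$ are symmetric if the block tridiagonal matrix $A^{(1)}$ in the original linear system $A^{(1)}x = b^{(1)}$ is symmetric. From Theorem 3, we can recursively see that $A^{(2)}, A^{(4)}, \dots$ are positive definite if the original coefficient matrix $A^{(1)}$ is positive definite. We then conclude that:

$$\lambda_N(A^{(1)}) \le \lambda_N(A^{(2)}) \le \lambda_N(A^{(4)}) \le \cdots \le \lambda_1(A^{(4)}) \le \lambda_1(A^{(2)}) \le \lambda_1(A^{(1)})$$

or

$$\lambda_N(A^{(1)}) < \lambda_N(A^{(2)}) < \lambda_N(A^{(4)}) < \cdots < \lambda_1(A^{(4)}) < \lambda_1(A^{(2)}) < \lambda_1(A^{(1)})$$

as long as the block cyclic reductions are repeated. The discussion in this section can easily be adapted to the case where the original coefficient matrix $A^{(1)}$ is negative definite.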
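The chain of inequalities can be observed by repeating the reduction until the matrix becomes block diagonal. The following NumPy sketch uses hypothetical helper names and assumes a random symmetric, strictly diagonally dominant block tridiagonal test matrix with $m = 7$ blocks. It records $(\lambda_N, \lambda_1)$ for $A^{(1)}, A^{(2)}, A^{(4)}, A^{(8)}$, both for $A^{(1)}$ and for the negative definite matrix $-A^{(1)}$. The transformation matrix is unchanged when $A^{(M)}$ is replaced by $-A^{(M)}$, so the reduced matrices simply change sign.

```python
import numpy as np


def sym_block_tridiagonal(sizes, rng):
    """Random symmetric, strictly diagonally dominant block tridiagonal matrix
    (block sizes at most 3 assumed)."""
    off = np.concatenate(([0], np.cumsum(sizes))).astype(int)
    A = np.zeros((off[-1], off[-1]))
    for i, n in enumerate(sizes):
        s = slice(off[i], off[i + 1])
        S = rng.uniform(-1.0, 1.0, (n, n))
        A[s, s] = 10.0 * np.eye(n) + 0.5 * (S + S.T)
        if i + 1 < len(sizes):
            t = slice(off[i + 1], off[i + 2])
            B = rng.uniform(-1.0, 1.0, (n, sizes[i + 1]))
            A[s, t], A[t, s] = B, B.T
    return A


def reduce_once(A, sizes):
    """One block cyclic reduction L A with L = 2I - A D^{-1}."""
    off = np.concatenate(([0], np.cumsum(sizes))).astype(int)
    D_inv = np.zeros_like(A)
    for i in range(len(sizes)):
        s = slice(off[i], off[i + 1])
        D_inv[s, s] = np.linalg.inv(A[s, s])
    return (2.0 * np.eye(A.shape[0]) - A @ D_inv) @ A


def extreme_eigs_along_reductions(A, sizes):
    """(lambda_N, lambda_1) of A^{(1)}, A^{(2)}, A^{(4)}, ... until block diagonal."""
    out, M = [], 1
    while True:
        e = np.linalg.eigvalsh(0.5 * (A + A.T))
        out.append((e[0], e[-1]))
        if M >= len(sizes):          # block diagonal: no further reduction
            return out
        A = reduce_once(A, sizes)
        M *= 2


rng = np.random.default_rng(4)
sizes = [3, 1, 2, 2, 3, 1, 2]        # m = 7: A^{(1)}, A^{(2)}, A^{(4)}, A^{(8)}
A1 = sym_block_tridiagonal(sizes, rng)
results = []
for A in (A1, -A1):                  # positive and negative definite cases
    chain = extreme_eigs_along_reductions(A, sizes)
    lows, highs = zip(*chain)
    narrowing = all(l1 >= l0 - 1e-10 for l0, l1 in zip(lows, lows[1:])) and \
                all(h1 <= h0 + 1e-10 for h0, h1 in zip(highs, highs[1:]))
    results.append((len(chain), narrowing))
print(results)                       # [(4, True), (4, True)]
```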

6. Numerical Examples

In this section, we give two examples. They show how the coefficient matrices change during iterative block cyclic reductions for block tridiagonal linear systems, and they numerically verify Theorems 1 and 2 with respect to the roots of the characteristic polynomials of the coefficient matrices. The computations were carried out on a computer running macOS Monterey (ver. 12.0.1) with a 2.4 GHz Intel Core i9 CPU, using MATLAB (R2021a). Computed values are given in floating-point arithmetic.

We first consider the case where $A^{(1)}$ is a 9-by-9 symmetric block tridiagonal and positive definite matrix with $m = 3$ diagonal blocks of sizes $n_1$, $n_2$, and $n_3$. Its eigenvalues are computed using the MATLAB function eig and rounded to 4 digits after the decimal point. The diagonal blocks are all symmetric, and their matrix sizes are distinct from one another. The determinants of the $(1,1)$, $(2,2)$, and $(3,3)$ blocks are 85, 322, and 89, respectively, so the diagonal blocks are all nonsingular. The non-zero subdiagonal (and superdiagonal) blocks are not square matrices. However, the transposes of the $(1,2)$ and $(2,3)$ blocks coincide with the $(2,1)$ and $(3,2)$ blocks, respectively. After the 1st block cyclic reduction, $A^{(1)}$ is transformed into the symmetric block 2-tridiagonal matrix $A^{(2)}$, whose non-zero entries are rounded to 4 digits after the decimal point. Using the MATLAB function eig to compute $\lambda_1(A^{(2)})$ and $\lambda_9(A^{(2)})$, we can see that $\lambda_9(A^{(1)}) \le \lambda_9(A^{(2)})$ and $\lambda_1(A^{(2)}) \le \lambda_1(A^{(1)})$. It is also easy to check that the diagonal blocks of $A^{(2)}$ are all nonsingular. Hence a block cyclic reduction can be applied again to the linear system with the coefficient matrix $A^{(2)}$. The 2nd block cyclic reduction then reduces $A^{(2)}$ to the block diagonal matrix $A^{(4)}$. The MATLAB function eig immediately returns $\lambda_1(A^{(4)})$ and $\lambda_9(A^{(4)})$. Therefore, $\lambda_9(A^{(1)}) \le \lambda_9(A^{(2)}) \le \lambda_9(A^{(4)}) \le \lambda_1(A^{(4)}) \le \lambda_1(A^{(2)}) \le \lambda_1(A^{(1)})$.

Next, we deal with the case where $A^{(1)}$ is a 9-by-9 symmetric block tridiagonal and negative definite matrix with $n_1 = n_2 = n_3 = 3$. This is an example matrix appearing in the discretization of Poisson's equation [11]. It differs from the first example matrix in that all the blocks have the same matrix size. It is obvious that the non-zero blocks are all 3-by-3 nonsingular matrices. A block cyclic reduction then transforms $A^{(1)}$ into the symmetric block 2-tridiagonal matrix $A^{(2)}$. The non-zero blocks in $A^{(2)}$ are all nonsingular, so we can reduce $A^{(2)}$ to the block diagonal matrix $A^{(4)}$. Thus, it can be observed numerically that:

$$\lambda_9(A^{(1)}) \le \lambda_9(A^{(2)}) \le \lambda_9(A^{(4)}) \le \lambda_1(A^{(4)}) \le \lambda_1(A^{(2)}) \le \lambda_1(A^{(1)}).$$
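The second example can be reproduced in spirit with the NumPy sketch below. It assumes the standard five-point discretization on a 3-by-3 interior grid, that is, $A_i^{(1)} = \operatorname{tridiag}(1, -4, 1)$ and $B_i^{(1)} = C_i^{(1)} = I_3$. This specific matrix, including its sign convention and scaling, is an assumption and may differ from the one used in the paper. The sketch prints the extreme eigenvalues of $A^{(1)}$, $A^{(2)}$, and $A^{(4)}$.

```python
import numpy as np


def reduce_once(A, sizes):
    """One block cyclic reduction L A with L = 2I - A D^{-1}."""
    off = np.concatenate(([0], np.cumsum(sizes))).astype(int)
    D_inv = np.zeros_like(A)
    for i in range(len(sizes)):
        s = slice(off[i], off[i + 1])
        D_inv[s, s] = np.linalg.inv(A[s, s])
    return (2.0 * np.eye(A.shape[0]) - A @ D_inv) @ A


# Assumed matrix: five-point Laplacian on a 3-by-3 grid (negative definite).
T = -4.0 * np.eye(3) + np.eye(3, k=1) + np.eye(3, k=-1)   # A_i^{(1)}
I3, O3 = np.eye(3), np.zeros((3, 3))                       # B_i^{(1)} = C_i^{(1)} = I_3
A = np.block([[T, I3, O3], [I3, T, I3], [O3, I3, T]])
sizes = [3, 3, 3]

extremes = []
for k in range(3):                  # A^{(1)}, A^{(2)}, A^{(4)}
    e = np.linalg.eigvalsh(0.5 * (A + A.T))
    extremes.append((e[0], e[-1]))
    print(f"A({2 ** k}): lambda_9 = {e[0]:.4f}, lambda_1 = {e[-1]:.4f}")
    A = reduce_once(A, sizes)
# A(1): lambda_9 = -6.8284, lambda_1 = -1.1716
# A(2): lambda_9 = -5.4142, lambda_1 = -1.8123
# A(4): lambda_9 = -5.2230, lambda_1 = -1.8123
```

For this assumed matrix, $\lambda_1(A^{(4)}) = \lambda_1(A^{(2)})$ holds exactly. With $m = 3$, the second reduction leaves the middle diagonal block $A_2^{(2)}$ unchanged, and that block carries $\lambda_1(A^{(2)})$. The assumed matrix also has repeated eigenvalues, so the strict inequalities of Theorem 2 are not expected for it.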

7. Concluding Remarks

This paper focused on the coefficient matrices of linear systems obtained from iterative block cyclic reductions. We showed that block $M$-tridiagonal coefficient matrices can be transformed into block tridiagonal matrices without changing the eigenvalues. We interpreted the transformations from block $M$-tridiagonal matrices to block $2M$-tridiagonal matrices as composite transformations. Each composite consists of a block tridiagonalization, its inverse, and the transformation from block tridiagonal to block 2-tridiagonal matrices that appears in the first step of the block cyclic reduction method. We then used this interpretation to consider the other steps of the block cyclic reduction method. We found a relationship between the inverses of block tridiagonal matrices and block 2-tridiagonal matrices in the first step. This relationship helped us to establish the main results of this paper. The first step narrows the distribution of the roots of the characteristic polynomials associated with the coefficient matrices, and the other steps do so as well, provided the original coefficient matrices are symmetric positive or negative definite. This property suggests that each block cyclic reduction does not make it more difficult to solve a linear system with a symmetric positive or negative definite block tridiagonal matrix. It should therefore be useful for dividing a large-scale linear system into several small-scale ones.

A notable feature of our approach is that it applies regardless of whether the coefficient matrices are tridiagonal or block tridiagonal. However, the coefficient matrices are currently limited to symmetric positive or negative definite ones. For example, in the nonsymmetric Toeplitz case [12], our approach cannot capture the root distribution of the characteristic polynomial. Future work thus involves extending our approach so that the root distribution can be examined for various classes of coefficient matrices.