A Mating Selection Based on Modified Strengthened Dominance Relation for NSGA-III

Abstract

1. Introduction

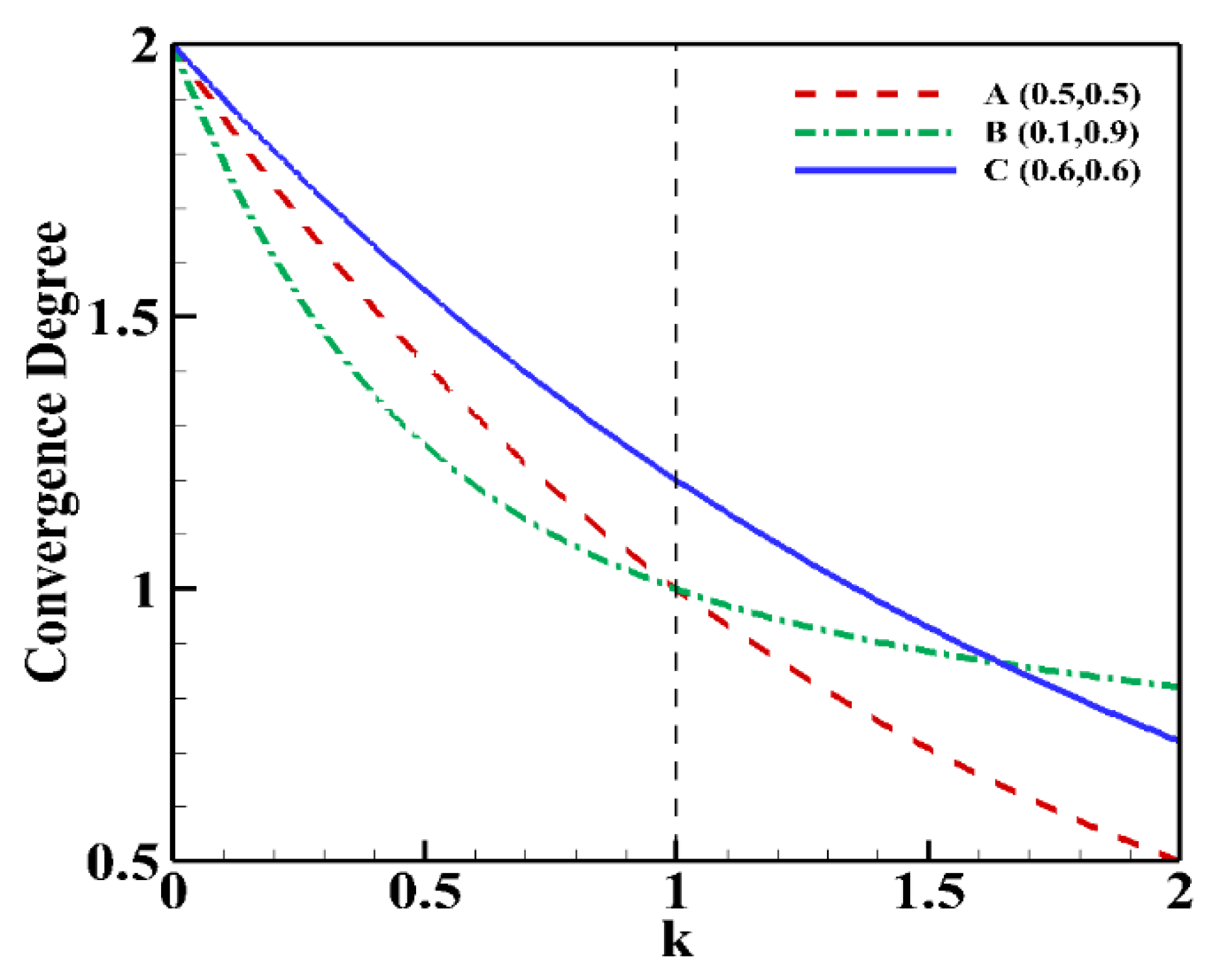

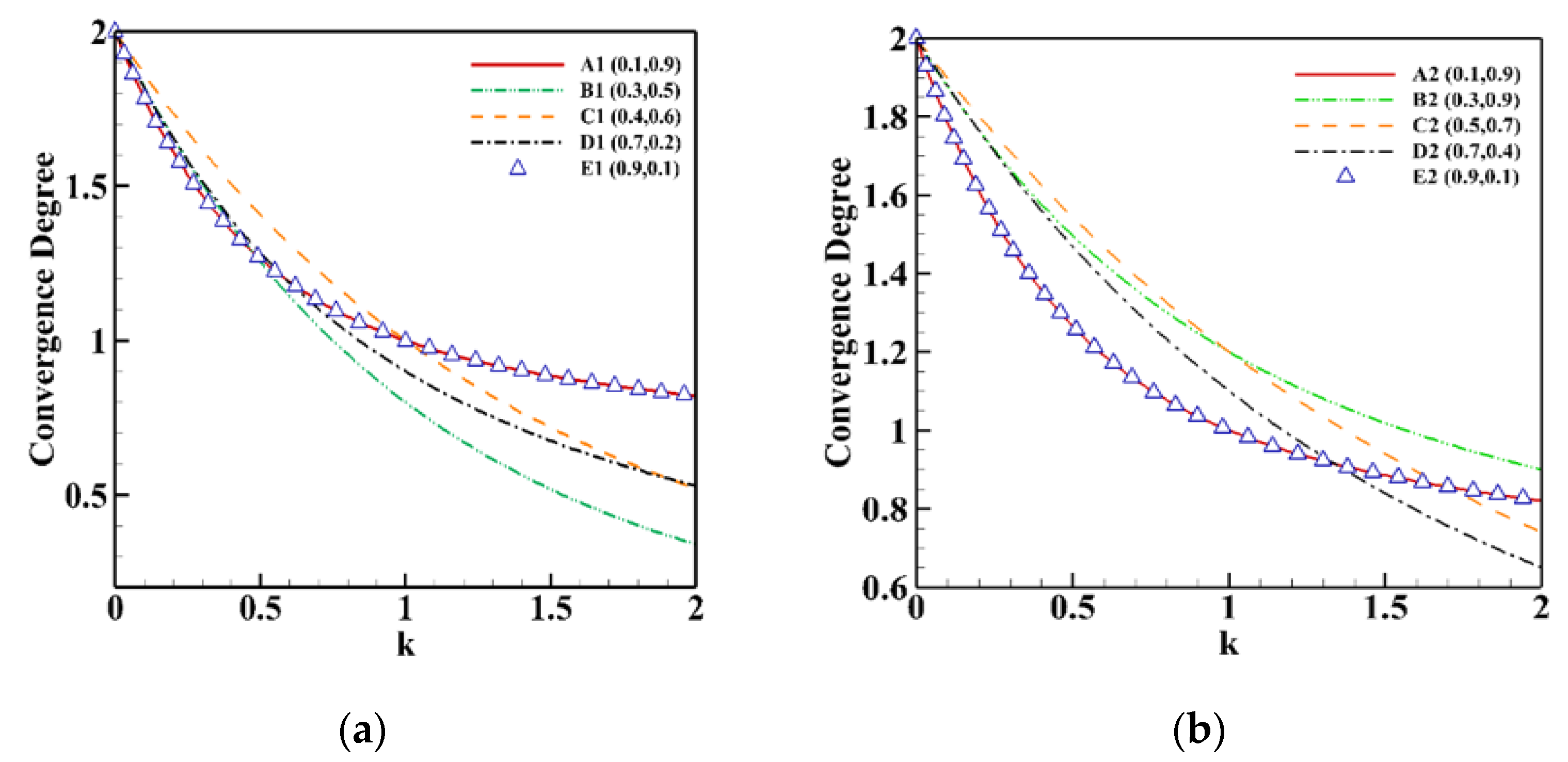

2. Related Study and Motivation

- Approaches that expand the dominance area by modifying the dominance relation, such as α-dominance and generalized Pareto optimality (GPO) [20].

- (a) Pareto dominance, (1-k)-dominance and L-dominance are good at maintaining diversity but poor at promoting convergence.

- (b) CDAS, GPO and grid-based methods are good at promoting convergence but poor at maintaining diversity.

- (c) S-CDAS is poor at promoting both convergence and diversity.
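To make the contrast between dominance relations concrete, the following Python sketch compares standard Pareto dominance with the dominance-area control of CDAS [16]. It follows our reading of Sato et al.: each objective is replaced by f'_i = r·sin(ω_i + S_i·π)/sin(S_i·π), where r is the norm of the objective vector and ω_i is its angle to the i-th axis. Minimization and non-negative objective values are assumed, and the function names are illustrative only.

```python
import math
from typing import Sequence


def pareto_dominates(fx: Sequence[float], fy: Sequence[float]) -> bool:
    """Pareto dominance for minimization: fx is no worse than fy in every
    objective and strictly better in at least one."""
    no_worse = all(a <= b for a, b in zip(fx, fy))
    strictly_better = any(a < b for a, b in zip(fx, fy))
    return no_worse and strictly_better


def cdas_transform(f: Sequence[float], s: Sequence[float]) -> list:
    """CDAS-style modification of an objective vector (our reading of [16]).

    f'_i = r * sin(w_i + S_i * pi) / sin(S_i * pi), with r = ||f|| and w_i the
    angle between f and the i-th axis. S_i = 0.5 leaves f unchanged; S_i < 0.5
    enlarges the region a solution dominates. Assumes f_i >= 0 and 0 < S_i < 1.
    """
    r = math.sqrt(sum(v * v for v in f))
    if r == 0.0:
        return list(f)
    out = []
    for fi, si in zip(f, s):
        w = math.acos(max(-1.0, min(1.0, fi / r)))  # angle to the i-th axis
        out.append(r * math.sin(w + si * math.pi) / math.sin(si * math.pi))
    return out


def cdas_dominates(fx, fy, s) -> bool:
    """Pareto dominance applied to the CDAS-modified objective vectors."""
    return pareto_dominates(cdas_transform(fx, s), cdas_transform(fy, s))


# Two mutually non-dominated points become comparable once S < 0.5:
print(pareto_dominates((1.0, 1.0), (0.9, 2.0)))            # False
print(cdas_dominates((1.0, 1.0), (0.9, 2.0), (0.3, 0.3)))  # True
```

Setting every S_i to 0.5 recovers Pareto dominance exactly; smaller values make more pairs comparable, which raises selection pressure toward convergence at the expense of diversity, in line with point (b).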

3. Controlled Strengthened Dominance-Based Mating Selection with Adaptive Ensemble of Parameters for NSGA-III (NSGA-III*)

- (1) Mating selection, which employs a modified SDR.

- (2) Environmental selection, which is similar to that of standard NSGA-III and uses weight vectors and traditional Pareto dominance.

| Algorithm 1: NSGA-III* pseudo-code |
|---|
| 01: Generate initial population (N) |
| 02: t = 1; |
| 03: While (t < t_max) do |
| 04: |
| 05: |
| 06: |
| 07: |
| 08: |
| 09: |
| 10: |
| 11: End While |

3.1. Initialization
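Section 3.1 gives no details in this version of the text. In standard NSGA-III [13], initialization also creates the reference point set W (an input of Algorithm 3) using the systematic approach of Das and Dennis [15]. Assuming NSGA-III* does likewise, the following sketch generates such a set; the function name is ours, and the two-layer variant that NSGA-III uses for larger numbers of objectives is not shown.

```python
from itertools import combinations
from math import comb


def das_dennis(m: int, h: int) -> list:
    """Reference points on the unit simplex with h divisions per objective
    (Das and Dennis [15]). Every coordinate is a multiple of 1/h and the
    coordinates of each point sum to 1; there are C(h + m - 1, m - 1) points."""
    points = []
    n_slots = h + m - 1
    # "Stars and bars": placing m - 1 bars among h + m - 1 slots splits the
    # h units into m non-negative parts, one part per objective.
    for bars in combinations(range(n_slots), m - 1):
        parts, prev = [], -1
        for b in bars:
            parts.append(b - prev - 1)
            prev = b
        parts.append(n_slots - prev - 1)
        points.append(tuple(p / h for p in parts))
    return points


W = das_dennis(3, 12)
print(len(W), comb(14, 2))  # 91 91
```

With three objectives and 12 divisions this yields the 91 reference points commonly used with NSGA-III.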

3.2. Mating Selection with Modified SDR and Offspring Generation

| Algorithm 2: |
|---|
| 01: Generate initial population (N) |
| 02: |
| 03: |
| 04: End For |
| 05: |
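The text of Section 3.2 and Algorithm 2 is incomplete here, so the exact modification of SDR used by NSGA-III* cannot be reproduced. For orientation, the sketch below implements the original SDR of Tian et al. [24], as we understand it, together with a binary tournament that uses it. The niche-size threshold `theta_bar` is taken as an input: how NSGA-III* sets it, and how the ensemble parameter k of Section 3.5 enters, is not shown. The tournament is a hypothetical illustration, not the paper's Algorithm 2.

```python
import math
import random
from typing import Sequence


def con(f: Sequence[float]) -> float:
    """Convergence measure Con(x): sum of the normalized objective values."""
    return sum(f)


def angle(fx: Sequence[float], fy: Sequence[float]) -> float:
    """Angle (radians) between two objective vectors."""
    dot = sum(a * b for a, b in zip(fx, fy))
    nx = math.sqrt(sum(a * a for a in fx))
    ny = math.sqrt(sum(b * b for b in fy))
    if nx == 0.0 or ny == 0.0:
        return 0.0
    return math.acos(max(-1.0, min(1.0, dot / (nx * ny))))


def sdr_dominates(fx, fy, theta_bar: float) -> bool:
    """Strengthened dominance relation of Tian et al. [24]: x dominates y iff
    Con(x) < Con(y)                          when theta_xy <= theta_bar, or
    Con(x) * theta_xy / theta_bar < Con(y)   when theta_xy >  theta_bar."""
    theta = angle(fx, fy)
    if theta <= theta_bar:
        return con(fx) < con(fy)
    return con(fx) * theta / theta_bar < con(fy)


def sdr_binary_tournament(objs, theta_bar: float, rng: random.Random) -> int:
    """Hypothetical mating-selection step: compare two random members with SDR
    and break ties (mutual non-dominance) at random."""
    i, j = rng.sample(range(len(objs)), 2)
    if sdr_dominates(objs[i], objs[j], theta_bar):
        return i
    if sdr_dominates(objs[j], objs[i], theta_bar):
        return j
    return rng.choice((i, j))


# (0.3, 0.1) and (0.1, 0.9) are Pareto non-dominated, but SDR prefers the first.
print(sdr_dominates((0.3, 0.1), (0.1, 0.9), math.pi / 4))  # True
print(sdr_dominates((0.1, 0.9), (0.3, 0.1), math.pi / 4))  # False
```

The second branch is what distinguishes SDR from Pareto dominance: a solution in a different direction can still be dominated if its convergence is sufficiently worse relative to the angular separation.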

3.3. Normalization
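Section 3.3 is not reproduced in this text. Because environmental selection follows standard NSGA-III, the normalization is presumably that of NSGA-III [13]; the sketch below is a simplified NumPy version of that procedure. The fallback used when the hyperplane is degenerate (dividing by the worst value of each objective) is a common simplification and not necessarily what NSGA-III* does.

```python
import numpy as np


def normalize(F: np.ndarray) -> np.ndarray:
    """Simplified NSGA-III-style normalization (after Deb and Jain [13]).

    F is an (n, m) matrix of objective values to be minimized. Objectives are
    translated by the ideal point, the m extreme points are found with an
    achievement scalarizing function (ASF), and each objective is divided by
    the intercept of the hyperplane through those extreme points.
    """
    m = F.shape[1]
    Fp = F - F.min(axis=0)                      # ideal point moves to the origin
    eps = 1e-6
    Wax = np.full((m, m), eps) + np.eye(m) * (1.0 - eps)  # row j ~ axis j
    # asf[k, j] = max_i Fp[k, i] / Wax[j, i]
    asf = np.max(Fp[:, None, :] / Wax[None, :, :], axis=2)
    extremes = Fp[np.argmin(asf, axis=0)]       # row j: extreme point for axis j
    try:
        # Hyperplane sum_i b_i * f_i = 1 through the extremes; intercept_i = 1 / b_i.
        b = np.linalg.solve(extremes, np.ones(m))
    except np.linalg.LinAlgError:
        b = None
    if b is None or np.any(b <= 1e-10):
        intercepts = Fp.max(axis=0)             # fallback: worst value per objective
    else:
        intercepts = 1.0 / b
    intercepts = np.maximum(intercepts, 1e-10)  # avoid division by zero
    return Fp / intercepts


F = np.array([[2.0, 1.0], [1.0, 3.0], [1.5, 2.0]])
print(normalize(F))  # rows ≈ [1, 0], [0, 1], [0.5, 0.5]
```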

3.4. Environmental Selection

| Algorithm 3: Environmental_selection |
|---|
| Input: (Merged Population at generation), W (Reference Point Set), N (Size of Population) |
| 01: |
| 02: |
| 03: |
| 04: Else |
| 05: |
| 06: |
| 07: |
| 08: |
| 09: |
| 10: |
| 11: End If |
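Algorithm 3 is incomplete here. Since the text states that environmental selection follows standard NSGA-III, the following sketch shows the two NSGA-III steps [13] that typically fill such a routine when the last accepted front must be truncated: associating normalized solutions with reference lines, and niche-preserving selection. Inputs are assumed to be already normalized and nondominated-sorted; the function names and signatures are illustrative, not taken from the paper.

```python
import numpy as np


def associate(Fn: np.ndarray, W: np.ndarray):
    """Associate each normalized solution (rows of Fn) with the reference line
    (through the origin and a row of W) at the smallest perpendicular distance."""
    U = W / np.linalg.norm(W, axis=1, keepdims=True)  # unit direction of each line
    proj = Fn @ U.T                                   # (n, r) scalar projections
    d2 = np.sum(Fn ** 2, axis=1, keepdims=True) - proj ** 2
    d = np.sqrt(np.maximum(d2, 0.0))                  # clip round-off below zero
    idx = np.argmin(d, axis=1)
    return idx, d[np.arange(len(Fn)), idx]


def niching(idx, dist, selected, last_front, k, n_ref, rng):
    """NSGA-III niche preservation: choose k members of last_front, favoring
    reference points associated with few already selected solutions."""
    assert k <= len(last_front)
    rho = np.bincount(np.asarray(idx)[np.asarray(selected, dtype=int)],
                      minlength=n_ref)                # niche counts
    active = np.ones(n_ref, dtype=bool)
    remaining, chosen = list(last_front), []
    while len(chosen) < k:
        least = rho[active].min()
        j = rng.choice(np.flatnonzero(active & (rho == least)))
        members = [s for s in remaining if idx[s] == j]
        if not members:
            active[j] = False                         # no candidate left for this niche
            continue
        if rho[j] == 0:
            pick = min(members, key=lambda s: dist[s])  # closest to the line
        else:
            pick = members[rng.integers(len(members))]
        chosen.append(pick)
        remaining.remove(pick)
        rho[j] += 1
    return chosen


Wref = np.array([[1.0, 0.0], [0.5, 0.5], [0.0, 1.0]])
Fn = np.array([[0.9, 0.1], [0.4, 0.45], [0.1, 0.8], [0.5, 0.5]])
idx, dist = associate(Fn, Wref)
print(idx)  # [0 1 2 1]
print(niching(idx, dist, selected=[0, 1], last_front=[2, 3], k=1,
              n_ref=len(Wref), rng=np.random.default_rng(0)))  # [2]
```

In the usage example, the third reference point has no selected member yet, so the last-front solution associated with it is taken first.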

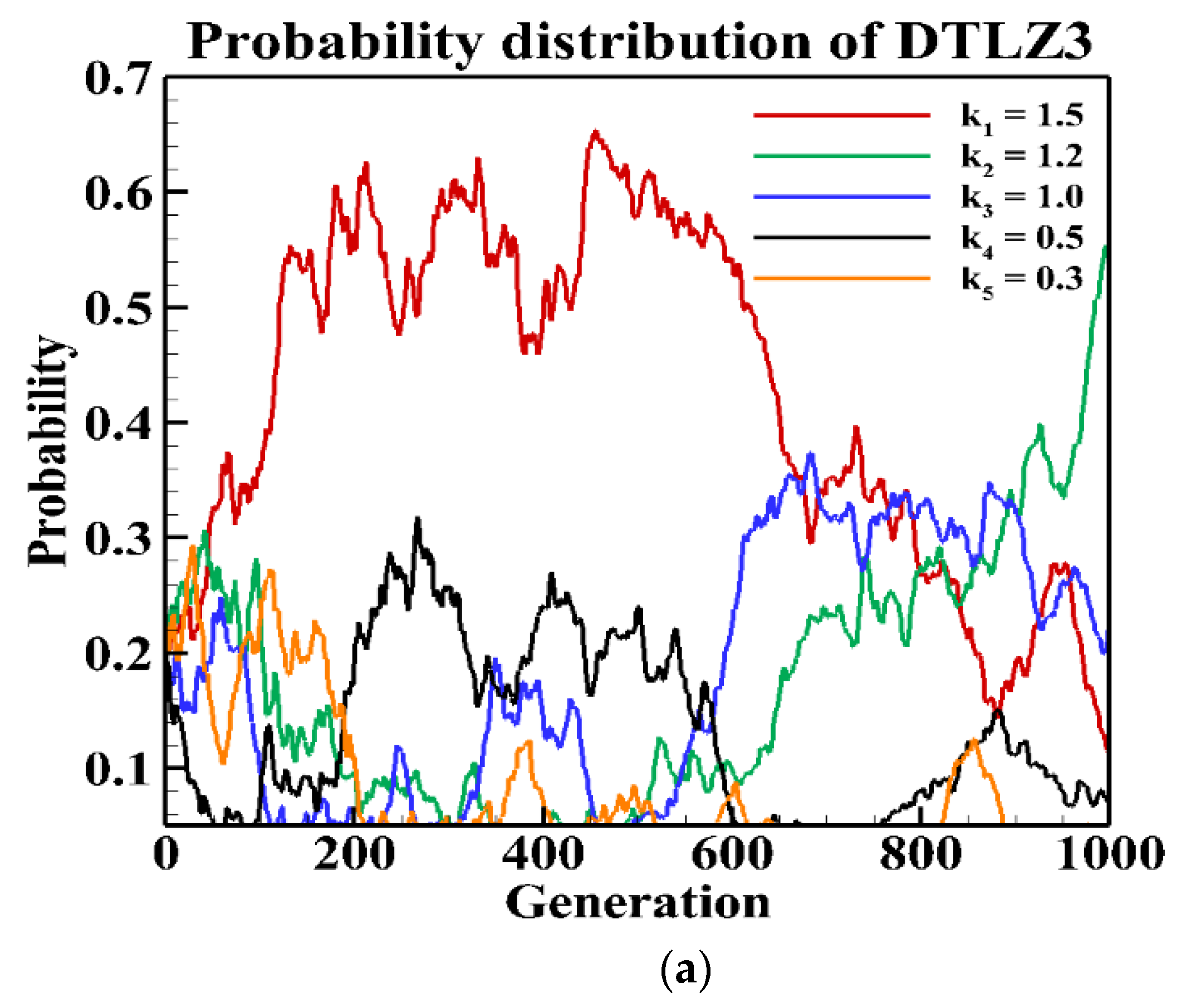

3.5. Adaptation of the Probability of Parameters in the Ensemble Pool

| Algorithm 4: |
|---|
| 01: Count the number of occurrences of solutions for each k. |
| 02: |
| 03: |
| 04: |
| 05: |
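Only the first step of Algorithm 4 survives in this text: counting, for each parameter value k, how many solutions (presumably among the survivors of environmental selection) were generated with it. The update that follows is not given. As one plausible rule, and purely an assumption, the sketch below sets each value's probability proportional to its count, with a small uniform share so that every k remains in play; the pool values and the `explore` rate are hypothetical.

```python
import random
from collections import Counter


def update_probabilities(pool, survivor_ks, explore=0.05):
    """Hypothetical adaptation rule: the selection probability of each parameter
    value k is proportional to how many surviving solutions were produced with
    it, mixed with a uniform share `explore` so no value is ever dropped."""
    counts = Counter(survivor_ks)                   # step 01 of Algorithm 4
    total = sum(counts[k] for k in pool)
    if total == 0:
        return {k: 1.0 / len(pool) for k in pool}
    return {k: explore / len(pool) + (1.0 - explore) * counts[k] / total
            for k in pool}


pool = [2, 3, 4]                                    # illustrative values of k
probs = update_probabilities(pool, survivor_ks=[2, 2, 3, 2])
k_next = random.choices(pool, weights=[probs[k] for k in pool])[0]
print({k: round(p, 4) for k, p in probs.items()})  # {2: 0.7292, 3: 0.2542, 4: 0.0167}
```

The probabilities always sum to one, because the uniform share contributes `explore` in total and the count-based share contributes `1 - explore`.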

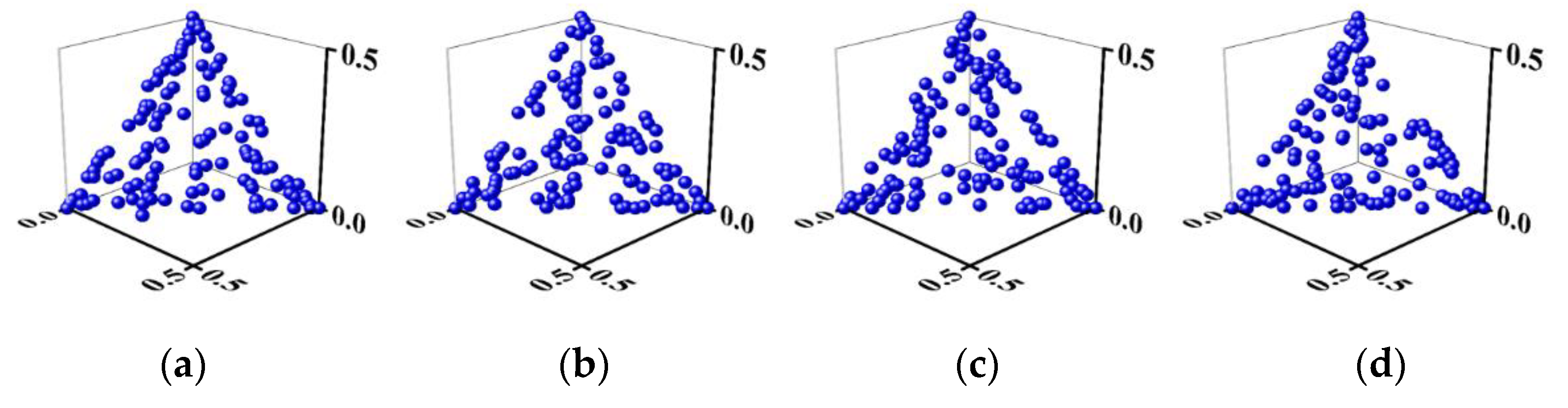

4. Experimental Setup, Results and Discussion

5. Conclusions

Supplementary Materials

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

1. Goldberg, D.E. Genetic Algorithms in Search, Optimization and Machine Learning; Addison-Wesley Longman Publishing Co., Inc.: Boston, MA, USA, 1989.

2. Ikeda, K.; Kita, H.; Kobayashi, S. Failure of Pareto-based MOEAs: Does non-dominated really mean near to optimal? In Proceedings of the 2001 Congress on Evolutionary Computation (IEEE Cat. No.01TH8546), Seoul, Korea, 27–30 May 2001; Volume 2, pp. 957–962.

3. Deb, K.; Pratap, A.; Agarwal, S.; Meyarivan, T. A fast and elitist multiobjective genetic algorithm: NSGA-II. IEEE Trans. Evol. Comput. 2002, 6, 182–197.

4. Knowles, J.; Corne, D. The Pareto archived evolution strategy: A new baseline algorithm for Pareto multiobjective optimisation. In Proceedings of the 1999 Congress on Evolutionary Computation-CEC99 (Cat. No. 99TH8406), Washington, DC, USA, 6–9 July 1999; Volume 1, pp. 98–105.

5. Zitzler, E.; Laumanns, M.; Thiele, L. SPEA2: Improving the Strength Pareto Evolutionary Algorithm; Eidgenössische Technische Hochschule Zürich (ETH), Institut für Technische Informatik und Kommunikationsnetze (TIK): Zurich, Switzerland, 2001; Volume 103.

6. Zhang, Q.; Li, H. MOEA/D: A Multiobjective Evolutionary Algorithm Based on Decomposition. IEEE Trans. Evol. Comput. 2007, 11, 712–731.

7. Liu, H.; Gu, F.; Zhang, Q. Decomposition of a Multiobjective Optimization Problem Into a Number of Simple Multiobjective Subproblems. IEEE Trans. Evol. Comput. 2014, 18, 450–455.

8. Li, K.; Deb, K.; Zhang, Q.; Kwong, S. An Evolutionary Many-Objective Optimization Algorithm Based on Dominance and Decomposition. IEEE Trans. Evol. Comput. 2015, 19, 694–716.

9. Ishibuchi, H.; Tsukamoto, N.; Sakane, Y.; Nojima, Y. Indicator-based evolutionary algorithm with hypervolume approximation by achievement scalarizing functions. In Proceedings of the 12th Annual Conference on Genetic and Evolutionary Computation, Portland, OR, USA, 11–13 July 2010.

10. Bader, J.; Zitzler, E. HypE: An Algorithm for Fast Hypervolume-Based Many-Objective Optimization. Evol. Comput. 2011, 19, 45–76.

11. Pamulapati, T.; Mallipeddi, R.; Suganthan, P.N. ISDE+—An Indicator for Multi and Many-Objective Optimization. IEEE Trans. Evol. Comput. 2019, 23, 346–352.

12. Palakonda, V.; Mallipeddi, R. Pareto Dominance-Based Algorithms With Ranking Methods for Many-Objective Optimization. IEEE Access 2017, 5, 11043–11053.

13. Deb, K.; Jain, H. An Evolutionary Many-Objective Optimization Algorithm Using Reference-Point-Based Nondominated Sorting Approach, Part I: Solving Problems With Box Constraints. IEEE Trans. Evol. Comput. 2014, 18, 577–601.

14. Cheng, R.; Jin, Y.; Olhofer, M.; Sendhoff, B. A Reference Vector Guided Evolutionary Algorithm for Many-Objective Optimization. IEEE Trans. Evol. Comput. 2016, 20, 773–791.

15. Das, I.; Dennis, J.E. Normal-Boundary Intersection: A New Method for Generating the Pareto Surface in Nonlinear Multicriteria Optimization Problems. SIAM J. Optim. 1998, 8, 631–657.

16. Sato, H.; Aguirre, H.E.; Tanaka, K. Controlling Dominance Area of Solutions and Its Impact on the Performance of MOEAs. In Evolutionary Multi-Criterion Optimization; Obayashi, S., Deb, K., Poloni, C., Hiroyasu, T., Murata, T., Eds.; Springer: Berlin/Heidelberg, Germany, 2007; pp. 5–20.

17. Wang, G.; Jiang, H. Fuzzy-Dominance and Its Application in Evolutionary Many Objective Optimization. In Proceedings of the 2007 International Conference on Computational Intelligence and Security Workshops (CISW 2007), Harbin, China, 15–19 December 2007; pp. 195–198.

18. Sato, H.; Aguirre, H.E.; Tanaka, K. Self-Controlling Dominance Area of Solutions in Evolutionary Many-Objective Optimization. In Simulated Evolution and Learning; Deb, K., Ed.; Springer: Berlin/Heidelberg, Germany, 2010; pp. 455–465.

19. Yuan, Y.; Xu, H.; Wang, B.; Yao, X. A New Dominance Relation-Based Evolutionary Algorithm for Many-Objective Optimization. IEEE Trans. Evol. Comput. 2016, 20, 16–37.

20. Zhu, C.; Xu, L.; Goodman, E.D. Generalization of Pareto-Optimality for Many-Objective Evolutionary Optimization. IEEE Trans. Evol. Comput. 2016, 20, 299–315.

21. Laumanns, M.; Thiele, L.; Deb, K.; Zitzler, E. Combining convergence and diversity in evolutionary multiobjective optimization. Evol. Comput. 2002, 10, 263–282.

22. Yang, S.; Li, M.; Liu, X.; Zheng, J. A Grid-Based Evolutionary Algorithm for Many-Objective Optimization. IEEE Trans. Evol. Comput. 2013, 17, 721–736.

23. Elarbi, M.; Bechikh, S.; Gupta, A.; Said, L.B.; Ong, Y. A New Decomposition-Based NSGA-II for Many-Objective Optimization. IEEE Trans. Syst. Man Cybern. Syst. 2018, 48, 1191–1210.

24. Tian, Y.; Cheng, R.; Zhang, X.; Su, Y.; Jin, Y. A Strengthened Dominance Relation Considering Convergence and Diversity for Evolutionary Many-Objective Optimization. IEEE Trans. Evol. Comput. 2019, 23, 331–345.

25. Shen, J.; Wang, P.; Wang, X. A Controlled Strengthened Dominance Relation for Evolutionary Many-Objective Optimization. IEEE Trans. Cybern. 2020, 1–13.

26. Srinivas, N.; Deb, K. Muiltiobjective Optimization Using Nondominated Sorting in Genetic Algorithms. Evol. Comput. 1994, 2, 221–248.

27. Li, M.; Yang, S.; Liu, X. Shift-Based Density Estimation for Pareto-Based Algorithms in Many-Objective Optimization. IEEE Trans. Evol. Comput. 2014, 18, 348–365.

28. Hernández-Díaz, A.G.; Santana-Quintero, L.V.; Coello, C.A.C.; Molina, J. Pareto-adaptive ε-dominance. Evol. Comput. 2007, 15, 493–517.

29. Batista, L.S.; Campelo, F.; Guimarães, F.G.; Ramírez, J.A. Pareto Cone ε-Dominance: Improving Convergence and Diversity in Multiobjective Evolutionary Algorithms. In Evolutionary Multi-Criterion Optimization; Takahashi, R.H.C., Deb, K., Wanner, E.F., Greco, S., Eds.; Springer: Berlin/Heidelberg, Germany, 2011; pp. 76–90.

30. Liu, Y.; Zhu, N.; Li, K.; Li, M.; Zheng, J.; Li, K. An angle dominance criterion for evolutionary many-objective optimization. Inf. Sci. 2020, 509, 376–399.

31. Farina, M.; Amato, P. A fuzzy definition of “optimality” for many-criteria optimization problems. IEEE Trans. Syst. Man Cybern. Part A Syst. Hum. 2004, 34, 315–326.

32. Zou, X.; Chen, Y.; Liu, M.; Kang, L. A New Evolutionary Algorithm for Solving Many-Objective Optimization Problems. IEEE Trans. Syst. Man Cybern. Part B Cybern. 2008, 38, 1402–1412.

33. He, Z.; Yen, G.G.; Zhang, J. Fuzzy-Based Pareto Optimality for Many-Objective Evolutionary Algorithms. IEEE Trans. Evol. Comput. 2014, 18, 269–285.

34. Zitzler, E.; Deb, K.; Thiele, L. Comparison of Multiobjective Evolutionary Algorithms: Empirical Results. Evol. Comput. 2000, 8, 173–195.

35. Tian, Y.; Cheng, R.; Zhang, X.; Jin, Y. PlatEMO: A MATLAB Platform for Evolutionary Multi-Objective Optimization [Educational Forum]. IEEE Comput. Intell. Mag. 2017, 12, 73–87.

| W/L/T | NSGA-III1* | NSGA-III2* | NSGA-III3* | NSGA-III4* | NSGA-III5* |
|---|---|---|---|---|---|
| NSGA-III1* | X | 7-9-64 | 4-3-73 | 4-4-72 | 8-7-65 |
| NSGA-III2* | 10-7-63 | X | 11-9-60 | 9-5-66 | 9-4-67 |
| NSGA-III3* | 3-4-73 | 10-7-63 | X | 2-4-74 | 3-4-73 |
| NSGA-III4* | 5-4-71 | 6-7-67 | 4-2-74 | X | 4-2-74 |
| NSGA-III5* | 7-5-68 | 4-7-69 | 4-3-73 | 3-3-74 | X |

| Problem | M | NSGA-III* | NSGA-II | NSGA-II/SDR | NSGA-II/CSDR | MOEA/D | MOEA/D-DE | NSGA-III | TDEA | ISDE+ |
|---|---|---|---|---|---|---|---|---|---|---|
| DTLZ1 | 2 | 5.83 × 10^−1 (5.40 × 10^−5) | 5.81 × 10^−1 (4.25 × 10^−4) + | 3.00 × 10^−1 (8.25 × 10^−2) + | 5.82 × 10^−1 (2.63 × 10^−4) + | 5.82 × 10^−1 (3.16 × 10^−4) + | 5.83 × 10^−1 (1.78 × 10^−6) − | 5.82 × 10^−1 (1.74 × 10^−4) + | 5.82 × 10^−1 (1.86 × 10^−4) + | 5.82 × 10^−1 (2.45 × 10^−4) + |
| | 4 | 9.45 × 10^−1 (2.41 × 10^−4) | 9.29 × 10^−1 (2.02 × 10^−3) + | 9.03 × 10^−1 (1.35 × 10^−2) + | 9.16 × 10^−1 (1.05 × 10^−1) ≈ | 9.45 × 10^−1 (3.35 × 10^−4) ≈ | 7.90 × 10^−1 (1.36 × 10^−1) + | 9.45 × 10^−1 (2.22 × 10^−4) ≈ | 9.45 × 10^−1 (2.31 × 10^−4) ≈ | 9.36 × 10^−1 (2.45 × 10^−3) + |
| | 6 | 9.90 × 10^−1 (1.13 × 10^−4) | 2.96 × 10^−1 (3.85 × 10^−1) + | 9.38 × 10^−1 (1.80 × 10^−2) + | 9.40 × 10^−1 (1.39 × 10^−1) + | 9.90 × 10^−1 (2.00 × 10^−4) + | 9.59 × 10^−1 (1.16 × 10^−2) + | 9.90 × 10^−1 (1.31 × 10^−4) ≈ | 9.90 × 10^−1 (1.40 × 10^−4) + | 9.83 × 10^−1 (1.70 × 10^−3) + |
| | 8 | 9.97 × 10^−1 (1.14 × 10^−3) | 8.71 × 10^−3 (4.77 × 10^−2) + | 9.53 × 10^−1 (1.40 × 10^−2) + | 9.88 × 10^−1 (2.31 × 10^−3) + | 9.93 × 10^−1 (1.43 × 10^−3) + | 9.67 × 10^−1 (2.02 × 10^−3) + | 9.97 × 10^−1 (1.11 × 10^−3) ≈ | 9.98 × 10^−1 (3.99 × 10^−4) ≈ | 9.95 × 10^−1 (8.43 × 10^−4) + |
| | 10 | 9.97 × 10^−1 (1.49 × 10^−2) | 0 (0) + | 9.35 × 10^−1 (2.11 × 10^−2) + | 9.99 × 10^−1 (5.24 × 10^−4) ≈ | 9.99 × 10^−1 (6.33 × 10^−5) ≈ | 9.72 × 10^−1 (1.72 × 10^−3) + | 9.97 × 10^−1 (1.39 × 10^−2) ≈ | 9.82 × 10^−1 (6.61 × 10^−2) ≈ | 9.98 × 10^−1 (4.31 × 10^−4) ≈ |
| DTLZ2 | 2 | 3.47 × 10^−1 (1.33 × 10^−7) | 3.47 × 10^−1 (1.64 × 10^−4) + | 1.80 × 10^−1 (1.09 × 10^−2) + | 3.47 × 10^−1 (1.85 × 10^−4) + | 3.47 × 10^−1 (1.11 × 10^−5) + | 3.47 × 10^−1 (3.19 × 10^−5) + | 3.47 × 10^−1 (6.72 × 10^−6) + | 3.47 × 10^−1 (9.83 × 10^−6) + | 3.47 × 10^−1 (1.46 × 10^−4) + |
| | 4 | 7.15 × 10^−1 (4.62 × 10^−4) | 6.40 × 10^−1 (7.59 × 10^−3) + | 6.16 × 10^−1 (1.50 × 10^−1) + | 6.64 × 10^−1 (5.85 × 10^−3) + | 7.14 × 10^−1 (5.36 × 10^−4) + | 6.20 × 10^−1 (3.30 × 10^−3) + | 7.14 × 10^−1 (5.32 × 10^−4) + | 7.15 × 10^−1 (4.34 × 10^−4) + | 7.12 × 10^−1 (1.68 × 10^−3) + |
| | 6 | 8.61 × 10^−1 (4.42 × 10^−4) | 8.38 × 10^−3 (3.19 × 10^−2) + | 8.45 × 10^−1 (3.80 × 10^−3) + | 7.81 × 10^−1 (1.12 × 10^−2) + | 8.58 × 10^−1 (5.49 × 10^−4) + | 6.49 × 10^−1 (1.98 × 10^−2) + | 8.57 × 10^−1 (7.28 × 10^−4) + | 8.59 × 10^−1 (4.61 × 10^−4) + | 8.65 × 10^−1 (1.71 × 10^−3) − |
| | 8 | 9.17 × 10^−1 (2.53 × 10^−2) | 3.29 × 10^−4 (1.38 × 10^−3) + | 9.26 × 10^−1 (4.04 × 10^−3) − | 9.05 × 10^−1 (3.46 × 10^−3) + | 9.17 × 10^−1 (1.50 × 10^−3) ≈ | 5.88 × 10^−1 (1.26 × 10^−2) + | 9.11 × 10^−1 (2.03 × 10^−2) ≈ | 9.23 × 10^−1 (7.14 × 10^−4) ≈ | 9.38 × 10^−1 (1.63 × 10^−3) − |
| | 10 | 9.66 × 10^−1 (1.17 × 10^−2) | 2.84 × 10^−3 (6.45 × 10^−3) + | 9.63 × 10^−1 (2.99 × 10^−3) ≈ | 9.57 × 10^−1 (1.45 × 10^−3) + | 9.70 × 10^−1 (3.57 × 10^−4) − | 5.49 × 10^−1 (1.60 × 10^−2) + | 9.58 × 10^−1 (1.71 × 10^−2) + | 9.68 × 10^−1 (2.54 × 10^−4) ≈ | 9.70 × 10^−1 (1.36 × 10^−3) − |
| DTLZ3 | 2 | 3.46 × 10^−1 (1.08 × 10^−3) | 3.46 × 10^−1 (9.23 × 10^−4) ≈ | 1.94 × 10^−1 (2.42 × 10^−2) + | 3.46 × 10^−1 (9.28 × 10^−4) ≈ | 3.46 × 10^−1 (8.47 × 10^−4) ≈ | 3.45 × 10^−1 (1.41 × 10^−3) ≈ | 3.46 × 10^−1 (1.15 × 10^−3) ≈ | 3.46 × 10^−1 (7.66 × 10^−4) − | 3.45 × 10^−1 (1.61 × 10^−3) ≈ |
| | 4 | 7.14 × 10^−1 (1.32 × 10^−3) | 6.53 × 10^−1 (9.16 × 10^−3) + | 6.85 × 10^−1 (6.78 × 10^−2) + | 6.75 × 10^−1 (4.53 × 10^−3) + | 7.13 × 10^−1 (2.53 × 10^−3) + | 5.96 × 10^−1 (3.10 × 10^−2) + | 7.13 × 10^−1 (1.57 × 10^−3) ≈ | 7.14 × 10^−1 (1.89 × 10^−3) ≈ | 7.11 × 10^−1 (2.98 × 10^−3) + |
| | 6 | 8.56 × 10^−1 (3.34 × 10^−3) | 0 (0) + | 8.48 × 10^−1 (3.19 × 10^−3) + | 8.09 × 10^−1 (8.03 × 10^−3) + | 8.57 × 10^−1 (4.06 × 10^−3) ≈ | 6.32 × 10^−1 (4.56 × 10^−2) + | 8.55 × 10^−1 (3.74 × 10^−3) ≈ | 8.59 × 10^−1 (1.15 × 10^−3) − | 8.60 × 10^−1 (4.00 × 10^−3) − |
| | 8 | 9.17 × 10^−1 (3.14 × 10^−2) | 0 (0) + | 9.27 × 10^−1 (4.02 × 10^−3) ≈ | 9.10 × 10^−1 (1.28 × 10^−2) ≈ | 9.21 × 10^−1 (3.85 × 10^−3) ≈ | 5.81 × 10^−1 (1.57 × 10^−2) + | 9.12 × 10^−1 (5.14 × 10^−2) ≈ | 9.15 × 10^−1 (5.61 × 10^−2) ≈ | 9.32 × 10^−1 (3.42 × 10^−3) − |
| | 10 | 8.85 × 10^−1 (2.30 × 10^−1) | 0 (0) + | 9.63 × 10^−1 (2.27 × 10^−3) − | 9.60 × 10^−1 (2.65 × 10^−3) − | 9.44 × 10^−1 (1.36 × 10^−1) ≈ | 5.42 × 10^−1 (3.11 × 10^−2) + | 8.86 × 10^−1 (2.42 × 10^−1) ≈ | 9.46 × 10^−1 (4.77 × 10^−2) ≈ | 9.62 × 10^−1 (2.68 × 10^−3) − |
| DTLZ4 | 2 | 3.47 × 10^−1 (1.20 × 10^−4) | 2.70 × 10^−1 (1.19 × 10^−1) + | 2.57 × 10^−1 (7.13 × 10^−2) + | 3.38 × 10^−1 (4.67 × 10^−2) ≈ | 2.51 × 10^−1 (1.24 × 10^−1) + | 3.47 × 10^−1 (8.80 × 10^−5) + | 3.13 × 10^−1 (8.86 × 10^−2) + | 3.04 × 10^−1 (9.71 × 10^−2) + | 3.47 × 10^−1 (1.56 × 10^−4) + |
| | 4 | 6.89 × 10^−1 (6.09 × 10^−2) | 6.47 × 10^−1 (7.08 × 10^−3) + | 3.37 × 10^−1 (5.62 × 10^−2) + | 6.64 × 10^−1 (4.82 × 10^−3) + | 5.34 × 10^−1 (1.89 × 10^−1) + | 6.07 × 10^−1 (1.47 × 10^−2) + | 7.09 × 10^−1 (2.96 × 10^−2) ≈ | 7.09 × 10^−1 (3.12 × 10^−2) ≈ | 7.12 × 10^−1 (1.59 × 10^−3) − |
| | 6 | 8.51 × 10^−1 (3.20 × 10^−2) | 8.29 × 10^−2 (1.52 × 10^−1) + | 3.95 × 10^−1 (9.04 × 10^−2) + | 7.97 × 10^−1 (7.67 × 10^−3) + | 7.25 × 10^−1 (9.07 × 10^−2) + | 6.95 × 10^−1 (2.35 × 10^−2) + | 8.52 × 10^−1 (2.64 × 10^−2) ≈ | 8.60 × 10^−1 (5.25 × 10^−4) ≈ | 8.58 × 10^−1 (1.58 × 10^−2) ≈ |
| | 8 | 9.12 × 10^−1 (3.05 × 10^−2) | 4.54 × 10^−4 (2.37 × 10^−3) + | 4.75 × 10^−1 (6.14 × 10^−2) + | 9.14 × 10^−1 (3.61 × 10^−3) ≈ | 7.79 × 10^−1 (1.19 × 10^−1) + | 6.50 × 10^−1 (2.23 × 10^−2) + | 9.06 × 10^−1 (2.98 × 10^−2) ≈ | 9.25 × 10^−1 (4.00 × 10^−4) − | 9.33 × 10^−1 (4.44 × 10^−3) − |
| | 10 | 9.66 × 10^−1 (1.08 × 10^−2) | 1.89 × 10^−4 (7.26 × 10^−4) + | 5.69 × 10^−1 (8.52 × 10^−2) + | 9.62 × 10^−1 (1.21 × 10^−3) + | 8.68 × 10^−1 (9.44 × 10^−2) + | 6.31 × 10^−1 (2.54 × 10^−2) + | 9.66 × 10^−1 (1.13 × 10^−2) ≈ | 9.70 × 10^−1 (2.42 × 10^−4) − | 9.66 × 10^−1 (1.26 × 10^−3) ≈ |
| DTLZ5 | 2 | 3.47 × 10^−1 (7.05 × 10^−7) | 3.47 × 10^−1 (1.82 × 10^−4) + | 1.79 × 10^−1 (7.76 × 10^−3) + | 3.47 × 10^−1 (2.15 × 10^−4) + | 3.47 × 10^−1 (1.04 × 10^−5) + | 3.47 × 10^−1 (3.63 × 10^−5) + | 3.47 × 10^−1 (5.63 × 10^−6) + | 3.47 × 10^−1 (5.76 × 10^−6) + | 3.47 × 10^−1 (1.73 × 10^−4) + |
| | 4 | 1.40 × 10^−1 (2.10 × 10^−3) | 1.42 × 10^−1 (2.19 × 10^−3) − | 1.30 × 10^−1 (4.39 × 10^−3) + | 1.40 × 10^−1 (2.22 × 10^−3) ≈ | 1.47 × 10^−1 (3.06 × 10^−4) − | 1.45 × 10^−1 (4.30 × 10^−4) − | 1.41 × 10^−1 (2.31 × 10^−3) − | 1.20 × 10^−1 (9.23 × 10^−3) + | 1.33 × 10^−1 (2.94 × 10^−3) + |
| | 6 | 9.75 × 10^−2 (4.12 × 10^−3) | 9.63 × 10^−2 (7.95 × 10^−3) ≈ | 9.66 × 10^−2 (2.68 × 10^−3) ≈ | 9.14 × 10^−2 (3.37 × 10^−3) + | 1.15 × 10^−1 (2.59 × 10^−4) − | 1.11 × 10^−1 (3.89 × 10^−4) − | 9.06 × 10^−2 (6.21 × 10^−3) ≈ | 1.00 × 10^−1 (2.45 × 10^−3) − | 9.20 × 10^−2 (1.98 × 10^−3) + |
| | 8 | 9.21 × 10^−2 (2.27 × 10^−3) | 6.82 × 10^−2 (2.39 × 10^−2) + | 8.85 × 10^−2 (2.04 × 10^−3) + | 8.06 × 10^−2 (6.63 × 10^−3) + | 1.04 × 10^−1 (2.98 × 10^−4) − | 1.02 × 10^−1 (3.17 × 10^−4) − | 7.82 × 10^−2 (1.15 × 10^−2) ≈ | 9.25 × 10^−2 (2.65 × 10^−3) ≈ | 8.29 × 10^−2 (4.70 × 10^−3) + |
| | 10 | 8.62 × 10^−2 (5.54 × 10^−3) | 4.73 × 10^−2 (2.31 × 10^−2) + | 8.41 × 10^−2 (4.54 × 10^−3) ≈ | 7.45 × 10^−2 (9.08 × 10^−3) + | 1.00 × 10^−1 (2.75 × 10^−4) − | 9.81 × 10^−2 (2.66 × 10^−4) − | 7.72 × 10^−2 (1.21 × 10^−2) ≈ | 9.28 × 10^−2 (1.36 × 10^−3) − | 7.55 × 10^−2 (6.32 × 10^−3) + |
| DTLZ6 | 2 | 3.47 × 10^−1 (4.08 × 10^−8) | 3.46 × 10^−1 (1.61 × 10^−4) + | 3.18 × 10^−1 (3.58 × 10^−2) + | 3.47 × 10^−1 (1.03 × 10^−4) − | 3.47 × 10^−1 (4.71 × 10^−5) ≈ | 3.47 × 10^−1 (9.23 × 10^−8) + | 3.47 × 10^−1 (3.30 × 10^−7) ≈ | 3.47 × 10^−1 (2.26 × 10^−7) + | 3.47 × 10^−1 (1.27 × 10^−4) ≈ |
| | 4 | 1.33 × 10^−1 (1.03 × 10^−2) | 1.14 × 10^−1 (2.35 × 10^−2) + | 1.33 × 10^−1 (3.60 × 10^−3) ≈ | 1.33 × 10^−1 (4.83 × 10^−3) ≈ | 1.47 × 10^−1 (6.94 × 10^−4) − | 1.45 × 10^−1 (4.78 × 10^−4) − | 1.37 × 10^−1 (4.57 × 10^−3) − | 1.13 × 10^−1 (9.61 × 10^−3) + | 1.27 × 10^−1 (5.53 × 10^−3) + |
| | 6 | 9.21 × 10^−2 (2.91 × 10^−3) | 0 (0) + | 9.92 × 10^−2 (4.32 × 10^−3) − | 8.38 × 10^−2 (1.50 × 10^−2) + | 1.14 × 10^−1 (3.19 × 10^−3) − | 1.12 × 10^−1 (3.07 × 10^−4) − | 6.05 × 10^−2 (4.13 × 10^−2) + | 9.12 × 10^−2 (5.20 × 10^−4) + | 9.63 × 10^−2 (2.85 × 10^−3) − |
| | 8 | 9.11 × 10^−2 (5.32 × 10^−4) | 0 (0) + | 8.87 × 10^−2 (1.57 × 10^−2) ≈ | 6.17 × 10^−2 (3.56 × 10^−2) + | 1.04 × 10^−1 (3.10 × 10^−4) − | 1.02 × 10^−1 (2.27 × 10^−4) − | 2.70 × 10^−2 (3.89 × 10^−2) + | 9.11 × 10^−2 (2.88 × 10^−4) ≈ | 9.11 × 10^−2 (1.49 × 10^−3) ≈ |
| | 10 | 8.18 × 10^−2 (2.77 × 10^−2) | 0 (0) + | 8.75 × 10^−2 (9.37 × 10^−3) ≈ | 5.66 × 10^−2 (3.71 × 10^−2) + | 9.97 × 10^−2 (1.42 × 10^−3) − | 9.82 × 10^−2 (2.33 × 10^−4) − | 5.83 × 10^−3 (2.08 × 10^−2) + | 9.11 × 10^−2 (3.68 × 10^−4) − | 8.38 × 10^−2 (1.98 × 10^−2) ≈ |
| DTLZ7 | 2 | 2.43 × 10^−1 (1.87 × 10^−5) | 2.43 × 10^−1 (4.22 × 10^−5) + | 2.42 × 10^−1 (3.55 × 10^−4) + | 2.43 × 10^−1 (3.61 × 10^−5) + | 2.16 × 10^−1 (3.12 × 10^−2) + | 2.08 × 10^−1 (3.34 × 10^−2) + | 2.43 × 10^−1 (3.30 × 10^−5) + | 2.43 × 10^−1 (3.05 × 10^−5) + | 2.42 × 10^−1 (2.84 × 10^−4) + |
| | 4 | 2.58 × 10^−1 (5.50 × 10^−3) | 2.42 × 10^−1 (2.97 × 10^−3) + | 2.69 × 10^−1 (2.33 × 10^−3) − | 2.61 × 10^−1 (2.17 × 10^−3) − | 1.88 × 10^−1 (4.91 × 10^−3) + | 3.01 × 10^−2 (1.72 × 10^−2) + | 2.54 × 10^−1 (6.27 × 10^−3) + | 2.68 × 10^−1 (5.30 × 10^−3) − | 2.73 × 10^−1 (6.21 × 10^−3) − |
| | 6 | 2.31 × 10^−1 (2.74 × 10^−3) | 5.10 × 10^−2 (1.56 × 10^−2) + | 2.37 × 10^−1 (3.43 × 10^−3) − | 1.74 × 10^−1 (5.64 × 10^−3) + | 9.88 × 10^−3 (2.47 × 10^−2) + | 1.35 × 10^−3 (2.01 × 10^−3) + | 2.19 × 10^−1 (3.85 × 10^−3) + | 1.73 × 10^−1 (2.43 × 10^−2) + | 2.40 × 10^−1 (7.17 × 10^−3) − |
| | 8 | 2.12 × 10^−1 (2.94 × 10^−3) | 2.36 × 10^−4 (4.00 × 10^−4) + | 2.00 × 10^−1 (3.27 × 10^−3) + | 1.12 × 10^−1 (1.28 × 10^−2) + | 5.61 × 10^−5 (2.46 × 10^−5) + | 1.25 × 10^−4 (3.90 × 10^−4) + | 1.80 × 10^−1 (5.63 × 10^−3) + | 1.76 × 10^−1 (1.63 × 10^−2) + | 2.12 × 10^−1 (8.96 × 10^−3) ≈ |
| | 10 | 1.81 × 10^−1 (7.78 × 10^−3) | 1.97 × 10^−6 (5.32 × 10^−6) + | 1.54 × 10^−1 (4.08 × 10^−2) + | 1.00 × 10^−1 (1.68 × 10^−2) + | 1.20 × 10^−4 (2.53 × 10^−4) + | 2.07 × 10^−4 (5.66 × 10^−4) + | 1.60 × 10^−1 (5.69 × 10^−3) + | 1.71 × 10^−1 (1.49 × 10^−2) + | 1.81 × 10^−1 (1.76 × 10^−2) ≈ |
| WFG1 | 2 | 6.77 × 10^−1 (5.95 × 10^−3) | 6.92 × 10^−1 (1.70 × 10^−3) − | 6.88 × 10^−1 (2.33 × 10^−3) − | 6.80 × 10^−1 (1.62 × 10^−2) ≈ | 6.58 × 10^−1 (8.94 × 10^−3) + | 5.46 × 10^−1 (8.38 × 10^−2) + | 6.74 × 10^−1 (1.03 × 10^−2) ≈ | 6.73 × 10^−1 (8.24 × 10^−3) + | 6.67 × 10^−1 (2.26 × 10^−2) + |
| | 4 | 9.84 × 10^−1 (8.16 × 10^−3) | 9.65 × 10^−1 (4.63 × 10^−3) + | 9.68 × 10^−1 (5.70 × 10^−3) + | 9.80 × 10^−1 (2.25 × 10^−3) + | 9.50 × 10^−1 (1.37 × 10^−2) + | 6.16 × 10^−1 (7.07 × 10^−2) + | 9.88 × 10^−1 (4.80 × 10^−3) − | 9.89 × 10^−1 (1.54 × 10^−3) − | 9.80 × 10^−1 (2.77 × 10^−3) + |
| | 6 | 8.70 × 10^−1 (2.21 × 10^−2) | 9.67 × 10^−1 (1.45 × 10^−2) − | 9.66 × 10^−1 (1.98 × 10^−2) − | 9.49 × 10^−1 (1.85 × 10^−2) − | 9.36 × 10^−1 (9.53 × 10^−3) − | 5.86 × 10^−1 (6.87 × 10^−2) + | 8.93 × 10^−1 (2.52 × 10^−2) − | 9.34 × 10^−1 (1.87 × 10^−2) − | 9.91 × 10^−1 (1.48 × 10^−3) − |
| | 8 | 9.83 × 10^−1 (2.32 × 10^−2) | 9.94 × 10^−1 (1.82 × 10^−3) − | 9.84 × 10^−1 (1.60 × 10^−2) ≈ | 9.98 × 10^−1 (6.47 × 10^−4) − | 9.16 × 10^−1 (4.01 × 10^−2) + | 5.22 × 10^−1 (7.13 × 10^−2) + | 9.98 × 10^−1 (8.33 × 10^−4) − | 9.96 × 10^−1 (1.03 × 10^−3) − | 9.94 × 10^−1 (1.25 × 10^−3) − |
| | 10 | 9.99 × 10^−1 (6.37 × 10^−4) | 9.97 × 10^−1 (1.10 × 10^−3) + | 9.88 × 10^−1 (1.13 × 10^−2) + | 9.99 × 10^−1 (2.30 × 10^−4) − | 7.24 × 10^−1 (1.04 × 10^−1) + | 9.81 × 10^−1 (1.74 × 10^−2) + | 9.99 × 10^−1 (2.49 × 10^−4) − | 9.96 × 10^−1 (8.08 × 10^−4) + | 9.95 × 10^−1 (1.33 × 10^−3) + |
| WFG2 | 2 | 6.32 × 10^−1 (3.82 × 10^−4) | 6.32 × 10^−1 (4.76 × 10^−4) − | 6.29 × 10^−1 (1.09 × 10^−3) + | 6.32 × 10^−1 (6.03 × 10^−4) ≈ | 6.20 × 10^−1 (3.14 × 10^−3) + | 6.29 × 10^−1 (8.18 × 10^−4) + | 6.32 × 10^−1 (4.99 × 10^−4) ≈ | 6.32 × 10^−1 (4.49 × 10^−4) + | 6.32 × 10^−1 (4.57 × 10^−4) ≈ |
| | 4 | 9.88 × 10^−1 (4.99 × 10^−4) | 9.74 × 10^−1 (1.88 × 10^−3) + | 9.60 × 10^−1 (5.88 × 10^−3) + | 9.79 × 10^−1 (1.75 × 10^−3) + | 9.58 × 10^−1 (1.03 × 10^−2) + | 9.05 × 10^−1 (1.07 × 10^−2) + | 9.86 × 10^−1 (7.99 × 10^−4) + | 9.87 × 10^−1 (6.80 × 10^−4) + | 9.77 × 10^−1 (3.25 × 10^−3) + |
| | 6 | 9.95 × 10^−1 (9.24 × 10^−4) | 9.90 × 10^−1 (1.88 × 10^−3) + | 9.68 × 10^−1 (5.14 × 10^−3) + | 9.92 × 10^−1 (9.60 × 10^−4) + | 9.25 × 10^−1 (1.76 × 10^−2) + | 9.62 × 10^−1 (1.04 × 10^−2) + | 9.92 × 10^−1 (1.58 × 10^−3) + | 9.87 × 10^−1 (1.55 × 10^−3) + | 9.83 × 10^−1 (3.98 × 10^−3) + |
| | 8 | 9.96 × 10^−1 (1.11 × 10^−3) | 9.97 × 10^−1 (9.71 × 10^−4) ≈ | 9.81 × 10^−1 (5.41 × 10^−3) + | 9.98 × 10^−1 (3.77 × 10^−4) − | 9.25 × 10^−1 (9.32 × 10^−3) + | 9.92 × 10^−1 (2.93 × 10^−3) + | 9.95 × 10^−1 (1.92 × 10^−3) + | 9.90 × 10^−1 (5.65 × 10^−3) + | 9.92 × 10^−1 (1.83 × 10^−3) + |
| | 10 | 9.97 × 10^−1 (1.09 × 10^−3) | 9.98 × 10^−1 (7.40 × 10^−4) − | 9.85 × 10^−1 (6.00 × 10^−3) + | 9.99 × 10^−1 (2.11 × 10^−4) − | 9.37 × 10^−1 (3.93 × 10^−3) + | 9.97 × 10^−1 (1.83 × 10^−3) − | 9.97 × 10^−1 (1.37 × 10^−3) ≈ | 9.92 × 10^−1 (2.11 × 10^−3) + | 9.94 × 10^−1 (1.16 × 10^−3) + |
| WFG3 | 2 | 5.82 × 10^−1 (2.75 × 10^−4) | 5.77 × 10^−1 (1.19 × 10^−3) + | 5.79 × 10^−1 (7.36 × 10^−4) + | 5.78 × 10^−1 (8.48 × 10^−4) + | 5.63 × 10^−1 (7.68 × 10^−3) + | 5.73 × 10^−1 (1.78 × 10^−3) + | 5.78 × 10^−1 (1.10 × 10^−3) + | 5.79 × 10^−1 (1.03 × 10^−3) + | 5.80 × 10^−1 (4.45 × 10^−4) + |
| | 4 | 2.56 × 10^−1 (9.22 × 10^−3) | 2.82 × 10^−1 (9.46 × 10^−3) − | 2.67 × 10^−1 (9.85 × 10^−3) − | 2.58 × 10^−1 (1.34 × 10^−2) ≈ | 1.05 × 10^−1 (4.51 × 10^−2) + | 1.43 × 10^−1 (3.70 × 10^−2) + | 2.37 × 10^−1 (1.05 × 10^−2) + | 2.43 × 10^−1 (1.70 × 10^−2) + | 2.75 × 10^−1 (1.03 × 10^−2) − |
| | 6 | 5.37 × 10^−2 (1.83 × 10^−2) | 8.83 × 10^−2 (2.71 × 10^−2) − | 1.02 × 10^−1 (1.88 × 10^−2) − | 5.02 × 10^−2 (1.90 × 10^−2) ≈ | 0 (0) + | 6.63 × 10^−2 (1.33 × 10^−2) − | 9.86 × 10^−3 (8.14 × 10^−3) + | 2.57 × 10^−2 (1.26 × 10^−2) + | 8.83 × 10^−2 (2.12 × 10^−2) − |
| | 8 | 1.99 × 10^−2 (1.76 × 10^−2) | 5.11 × 10^−2 (1.77 × 10^−2) − | 6.65 × 10^−3 (9.64 × 10^−3) + | 6.87 × 10^−4 (1.61 × 10^−3) + | 0 (0) + | 6.18 × 10^−2 (1.52 × 10^−2) − | 6.60 × 10^−4 (1.64 × 10^−3) + | 8.27 × 10^−3 (1.16 × 10^−2) + | 4.73 × 10^−3 (8.71 × 10^−3) + |
| | 10 | 2.06 × 10^−3 (5.58 × 10^−3) | 1.43 × 10^−3 (4.83 × 10^−3) ≈ | 0 (0) + | 0 (0) + | 0 (0) + | 8.80 × 10^−2 (1.87 × 10^−3) − | 0 (0) + | 1.26 × 10^−4 (6.92 × 10^−4) + | 0 (0) + |
| WFG4 | 2 | 3.47 × 10^−1 (4.10 × 10^−5) | 3.45 × 10^−1 (3.95 × 10^−4) + | 3.45 × 10^−1 (4.32 × 10^−4) + | 3.45 × 10^−1 (3.98 × 10^−4) + | 3.30 × 10^−1 (2.24 × 10^−3) + | 3.14 × 10^−1 (2.85 × 10^−3) + | 3.45 × 10^−1 (5.10 × 10^−4) + | 3.45 × 10^−1 (5.75 × 10^−4) + | 3.46 × 10^−1 (2.58 × 10^−4) + |
| | 4 | 7.12 × 10^−1 (6.59 × 10^−4) | 6.19 × 10^−1 (7.37 × 10^−3) + | 6.84 × 10^−1 (3.35 × 10^−3) + | 6.36 × 10^−1 (6.36 × 10^−3) + | 6.63 × 10^−1 (5.78 × 10^−3) + | 4.79 × 10^−1 (1.92 × 10^−2) + | 6.87 × 10^−1 (2.05 × 10^−3) + | 6.89 × 10^−1 (2.42 × 10^−3) + | 7.02 × 10^−1 (2.17 × 10^−3) + |
| | 6 | 8.48 × 10^−1 (1.21 × 10^−3) | 6.60 × 10^−1 (1.61 × 10^−2) + | 8.20 × 10^−1 (3.78 × 10^−3) + | 7.54 × 10^−1 (7.40 × 10^−3) + | 5.19 × 10^−1 (3.68 × 10^−2) + | 5.71 × 10^−1 (2.99 × 10^−2) + | 7.98 × 10^−1 (4.93 × 10^−3) + | 8.04 × 10^−1 (4.18 × 10^−3) + | 8.40 × 10^−1 (4.02 × 10^−3) + |
| | 8 | 9.07 × 10^−1 (2.21 × 10^−2) | 6.68 × 10^−1 (2.12 × 10^−2) + | 9.05 × 10^−1 (3.76 × 10^−3) ≈ | 8.63 × 10^−1 (8.02 × 10^−3) + | 3.70 × 10^−1 (3.47 × 10^−2) + | 6.58 × 10^−1 (3.48 × 10^−2) + | 8.59 × 10^−1 (6.53 × 10^−3) + | 8.64 × 10^−1 (4.93 × 10^−3) + | 9.11 × 10^−1 (4.40 × 10^−3) ≈ |
| | 10 | 9.60 × 10^−1 (2.80 × 10^−3) | 6.68 × 10^−1 (2.43 × 10^−2) + | 9.41 × 10^−1 (3.66 × 10^−3) + | 9.23 × 10^−1 (6.08 × 10^−3) + | 3.97 × 10^−1 (4.99 × 10^−2) + | 6.67 × 10^−1 (4.80 × 10^−2) + | 9.13 × 10^−1 (6.28 × 10^−3) + | 9.20 × 10^−1 (5.79 × 10^−3) + | 9.30 × 10^−1 (4.38 × 10^−3) + |
| WFG5 | 2 | 3.13 × 10^−1 (2.95 × 10^−5) | 3.13 × 10^−1 (5.11 × 10^−4) + | 3.12 × 10^−1 (1.47 × 10^−3) + | 3.13 × 10^−1 (1.01 × 10^−3) + | 3.05 × 10^−1 (1.09 × 10^−3) + | 3.07 × 10^−1 (6.08 × 10^−4) + | 3.12 × 10^−1 (1.77 × 10^−3) + | 3.12 × 10^−1 (1.27 × 10^−3) + | 3.12 × 10^−1 (1.62 × 10^−3) + |
| | 4 | 6.69 × 10^−1 (4.72 × 10^−4) | 5.95 × 10^−1 (7.96 × 10^−3) + | 6.54 × 10^−1 (2.54 × 10^−3) + | 6.11 × 10^−1 (4.81 × 10^−3) + | 6.36 × 10^−1 (4.83 × 10^−3) + | 4.64 × 10^−1 (9.20 × 10^−3) + | 6.62 × 10^−1 (1.31 × 10^−3) + | 6.63 × 10^−1 (1.26 × 10^−3) + | 6.64 × 10^−1 (1.79 × 10^−3) + |
| | 6 | 8.05 × 10^−1 (4.21 × 10^−4) | 6.25 × 10^−1 (1.51 × 10^−2) + | 7.88 × 10^−1 (4.19 × 10^−3) + | 7.32 × 10^−1 (6.85 × 10^−3) + | 5.66 × 10^−1 (1.97 × 10^−2) + | 5.35 × 10^−1 (3.35 × 10^−2) + | 7.81 × 10^−1 (3.08 × 10^−3) + | 7.85 × 10^−1 (2.04 × 10^−3) + | 8.05 × 10^−1 (2.40 × 10^−3) ≈ |
| | 8 | 8.63 × 10^−1 (4.66 × 10^−4) | 5.87 × 10^−1 (2.06 × 10^−2) + | 8.60 × 10^−1 (2.50 × 10^−3) + | 8.21 × 10^−1 (7.41 × 10^−3) + | 4.92 × 10^−1 (2.17 × 10^−2) + | 5.70 × 10^−1 (2.33 × 10^−2) + | 8.32 × 10^−1 (3.30 × 10^−3) + | 8.33 × 10^−1 (2.82 × 10^−3) + | 8.68 × 10^−1 (2.56 × 10^−3) − |
| | 10 | 9.04 × 10^−1 (2.35 × 10^−4) | 5.98 × 10^−1 (2.20 × 10^−2) + | 8.91 × 10^−1 (2.32 × 10^−3) + | 8.71 × 10^−1 (4.63 × 10^−3) + | 4.63 × 10^−1 (2.54 × 10^−2) + | 5.34 × 10^−1 (4.71 × 10^−2) + | 8.82 × 10^−1 (2.00 × 10^−3) + | 8.84 × 10^−1 (1.60 × 10^−3) + | 8.91 × 10^−1 (2.23 × 10^−3) + |
| WFG6 | 2 | 3.07 × 10^−1 (5.28 × 10^−3) | 3.04 × 10^−1 (8.24 × 10^−3) + | 3.06 × 10^−1 (7.35 × 10^−3) ≈ | 3.09 × 10^−1 (7.63 × 10^−3) ≈ | 2.95 × 10^−1 (1.22 × 10^−2) + | 2.80 × 10^−1 (3.61 × 10^−2) + | 3.06 × 10^−1 (9.24 × 10^−3) ≈ | 3.06 × 10^−1 (8.13 × 10^−3) ≈ | 3.09 × 10^−1 (8.40 × 10^−3) ≈ |
| | 4 | 6.61 × 10^−1 (8.38 × 10^−3) | 5.66 × 10^−1 (1.34 × 10^−2) + | 6.39 × 10^−1 (8.67 × 10^−3) + | 5.79 × 10^−1 (1.28 × 10^−2) + | 6.14 × 10^−1 (1.72 × 10^−2) + | 4.46 × 10^−1 (2.05 × 10^−2) + | 6.35 × 10^−1 (8.92 × 10^−3) + | 6.41 × 10^−1 (8.44 × 10^−3) + | 6.57 × 10^−1 (9.53 × 10^−3) ≈ |
| | 6 | 7.98 × 10^−1 (9.34 × 10^−3) | 6.13 × 10^−1 (2.72 × 10^−2) + | 7.82 × 10^−1 (1.02 × 10^−2) + | 6.75 × 10^−1 (1.30 × 10^−2) + | 3.89 × 10^−1 (3.62 × 10^−2) + | 5.49 × 10^−1 (1.89 × 10^−2) + | 7.55 × 10^−1 (1.03 × 10^−2) + | 7.63 × 10^−1 (1.03 × 10^−2) + | 8.01 × 10^−1 (8.94 × 10^−3) ≈ |
| | 8 | 8.39 × 10^−1 (1.86 × 10^−2) | 6.39 × 10^−1 (3.48 × 10^−2) + | 8.45 × 10^−1 (1.78 × 10^−2) ≈ | 7.82 × 10^−1 (1.83 × 10^−2) + | 2.65 × 10^−1 (2.53 × 10^−2) + | 4.74 × 10^−1 (7.38 × 10^−2) + | 8.00 × 10^−1 (2.43 × 10^−2) + | 8.01 × 10^−1 (1.49 × 10^−2) + | 8.49 × 10^−1 (1.72 × 10^−2) − |
| | 10 | 8.75 × 10^−1 (2.12 × 10^−2) | 6.45 × 10^−1 (3.52 × 10^−2) + | 8.77 × 10^−1 (1.80 × 10^−2) ≈ | 8.48 × 10^−1 (2.63 × 10^−2) + | 2.22 × 10^−1 (5.35 × 10^−2) + | 4.67 × 10^−1 (8.26 × 10^−2) + | 8.44 × 10^−1 (1.46 × 10^−2) + | 8.50 × 10^−1 (1.21 × 10^−2) + | 8.80 × 10^−1 (1.62 × 10^−2) ≈ |
| WFG7 | 2 | 3.47 × 10^−1 (1.98 × 10^−5) | 3.45 × 10^−1 (3.99 × 10^−4) + | 3.46 × 10^−1 (2.21 × 10^−4) + | 3.46 × 10^−1 (2.64 × 10^−4) + | 3.29 × 10^−1 (4.96 × 10^−3) + | 3.43 × 10^−1 (5.82 × 10^−4) + | 3.46 × 10^−1 (2.34 × 10^−4) + | 3.46 × 10^−1 (2.45 × 10^−4) + | 3.47 × 10^−1 (1.98 × 10^−4) + |
| | 4 | 7.12 × 10^−1 (6.03 × 10^−4) | 6.25 × 10^−1 (7.05 × 10^−3) + | 6.99 × 10^−1 (1.87 × 10^−3) + | 6.52 × 10^−1 (5.47 × 10^−3) + | 6.47 × 10^−1 (1.74 × 10^−2) + | 4.94 × 10^−1 (1.74 × 10^−2) + | 6.94 × 10^−1 (2.29 × 10^−3) + | 6.99 × 10^−1 (1.44 × 10^−3) + | 7.10 × 10^−1 (1.93 × 10^−3) + |
| | 6 | 8.55 × 10^−1 (9.75 × 10^−4) | 6.18 × 10^−1 (2.67 × 10^−2) + | 8.44 × 10^−1 (3.37 × 10^−3) + | 7.73 × 10^−1 (6.24 × 10^−3) + | 4.90 × 10^−1 (3.20 × 10^−2) + | 5.05 × 10^−1 (3.54 × 10^−2) + | 8.05 × 10^−1 (8.03 × 10^−3) + | 8.23 × 10^−1 (4.82 × 10^−3) + | 8.61 × 10^−1 (1.81 × 10^−3) − |
| | 8 | 9.17 × 10^−1 (1.20 × 10^−3) | 6.52 × 10^−1 (3.30 × 10^−2) + | 9.20 × 10^−1 (2.83 × 10^−3) − | 8.87 × 10^−1 (5.92 × 10^−3) + | 3.63 × 10^−1 (2.24 × 10^−2) + | 5.47 × 10^−1 (3.30 × 10^−2) + | 8.65 × 10^−1 (8.44 × 10^−3) + | 8.80 × 10^−1 (5.09 × 10^−3) + | 9.29 × 10^−1 (1.91 × 10^−3) − |
| | 10 | 9.62 × 10^−1 (1.16 × 10^−2) | 6.65 × 10^−1 (2.67 × 10^−2) + | 9.58 × 10^−1 (2.22 × 10^−3) + | 9.44 × 10^−1 (3.54 × 10^−3) + | 3.20 × 10^−1 (4.77 × 10^−2) + | 5.33 × 10^−1 (5.95 × 10^−2) + | 9.33 × 10^−1 (8.63 × 10^−3) + | 9.39 × 10^−1 (3.60 × 10^−3) + | 9.56 × 10^−1 (2.56 × 10^−3) + |
| WFG8 | 2 | 3.02 × 10^−1 (4.46 × 10^−4) | 2.98 × 10^−1 (1.18 × 10^−3) + | 2.97 × 10^−1 (1.45 × 10^−3) + | 2.99 × 10^−1 (1.38 × 10^−3) + | 2.83 × 10^−1 (6.67 × 10^−3) + | 2.94 × 10^−1 (2.34 × 10^−3) + | 2.98 × 10^−1 (1.40 × 10^−3) + | 2.96 × 10^−1 (3.10 × 10^−3) + | 3.00 × 10^−1 (1.21 × 10^−3) + |
| | 4 | 6.35 × 10^−1 (9.54 × 10^−4) | 5.29 × 10^−1 (6.87 × 10^−3) + | 6.08 × 10^−1 (3.07 × 10^−3) + | 5.49 × 10^−1 (5.59 × 10^−3) + | 5.90 × 10^−1 (5.84 × 10^−3) + | 3.59 × 10^−1 (1.51 × 10^−2) + | 6.10 × 10^−1 (4.16 × 10^−3) + | 6.11 × 10^−1 (3.20 × 10^−3) + | 6.28 × 10^−1 (2.21 × 10^−3) + |
| | 6 | 7.68 × 10^−1 (1.54 × 10^−3) | 5.70 × 10^−1 (9.22 × 10^−3) + | 7.29 × 10^−1 (4.50 × 10^−3) + | 6.24 × 10^−1 (9.39 × 10^−3) + | 2.19 × 10^−1 (7.96 × 10^−2) + | 3.49 × 10^−1 (3.97 × 10^−2) + | 7.14 × 10^−1 (1.12 × 10^−2) + | 7.12 × 10^−1 (6.46 × 10^−3) + | 7.60 × 10^−1 (4.14 × 10^−3) + |
| | 8 | 7.84 × 10^−1 (2.39 × 10^−2) | 6.09 × 10^−1 (1.36 × 10^−2) + | 8.12 × 10^−1 (1.62 × 10^−2) − | 6.56 × 10^−1 (1.09 × 10^−2) + | 6.10 × 10^−2 (2.63 × 10^−2) + | 3.97 × 10^−1 (5.91 × 10^−2) + | 7.25 × 10^−1 (1.28 × 10^−2) + | 7.06 × 10^−1 (1.73 × 10^−2) + | 8.11 × 10^−1 (1.52 × 10^−2) − |
| | 10 | 8.62 × 10^−1 (1.41 × 10^−2) | 6.36 × 10^−1 (1.82 × 10^−2) + | 8.91 × 10^−1 (2.22 × 10^−2) − | 7.35 × 10^−1 (1.88 × 10^−2) + | 5.49 × 10^−2 (4.26 × 10^−2) + | 3.88 × 10^−1 (5.97 × 10^−2) + | 8.33 × 10^−1 (1.18 × 10^−2) + | 8.11 × 10^−1 (1.25 × 10^−2) + | 9.02 × 10^−1 (2.54 × 10^−2) − |
| WFG9 | 2 | 3.40 × 10^−1 (1.67 × 10^−2) | 3.29 × 10^−1 (2.69 × 10^−2) + | 3.26 × 10^−1 (3.10 × 10^−2) + | 3.33 × 10^−1 (2.22 × 10^−2) ≈ | 3.02 × 10^−1 (2.37 × 10^−2) + | 3.21 × 10^−1 (2.47 × 10^−2) + | 3.27 × 10^−1 (2.31 × 10^−2) + | 3.31 × 10^−1 (2.19 × 10^−2) ≈ | 3.34 × 10^−1 (2.32 × 10^−2) ≈ |
| | 4 | 6.77 × 10^−1 (3.05 × 10^−3) | 5.78 × 10^−1 (1.26 × 10^−2) + | 6.68 × 10^−1 (3.76 × 10^−3) + | 6.27 × 10^−1 (6.21 × 10^−3) + | 5.75 × 10^−1 (3.97 × 10^−2) + | 4.81 × 10^−1 (3.70 × 10^−2) + | 6.37 × 10^−1 (1.94 × 10^−2) + | 6.53 × 10^−1 (7.16 × 10^−3) + | 6.84 × 10^−1 (3.58 × 10^−3) − |
| | 6 | 7.90 × 10^−1 (1.70 × 10^−2) | 5.13 × 10^−1 (2.09 × 10^−2) + | 7.90 × 10^−1 (2.36 × 10^−2) ≈ | 7.26 × 10^−1 (9.88 × 10^−3) + | 4.89 × 10^−1 (6.91 × 10^−2) + | 5.53 × 10^−1 (4.41 × 10^−2) + | 7.14 × 10^−1 (2.75 × 10^−2) + | 7.49 × 10^−1 (1.37 × 10^−2) + | 8.12 × 10^−1 (4.04 × 10^−3) − |
| | 8 | 8.40 × 10^−1 (3.89 × 10^−2) | 5.79 × 10^−1 (2.57 × 10^−2) + | 8.67 × 10^−1 (3.91 × 10^−3) − | 8.07 × 10^−1 (8.73 × 10^−3) + | 3.21 × 10^−1 (1.32 × 10^−1) + | 6.19 × 10^−1 (3.92 × 10^−2) + | 7.49 × 10^−1 (5.26 × 10^−2) + | 7.98 × 10^−1 (3.06 × 10^−2) + | 8.72 × 10^−1 (4.57 × 10^−3) − |
| | 10 | 8.74 × 10^−1 (5.65 × 10^−2) | 5.72 × 10^−1 (3.08 × 10^−2) + | 8.99 × 10^−1 (5.22 × 10^−3) − | 8.51 × 10^−1 (6.37 × 10^−3) + | 3.06 × 10^−1 (1.18 × 10^−1) + | 5.35 × 10^−1 (6.45 × 10^−2) + | 8.17 × 10^−1 (5.67 × 10^−2) + | 8.51 × 10^−1 (1.07 × 10^−2) + | 8.88 × 10^−1 (4.59 × 10^−3) ≈ |
| W/T/L | | | 67/4/9 | 53/13/14 | 58/14/8 | 62/8/10 | 66/1/13 | 52/22/6 | 55/14/11 | 38/18/24 |
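The W/T/L row summarizes the significance marks in each competitor's column over the 80 problem instances. Reading '+' as a win for NSGA-III*, '≈' as a tie and '−' as a loss, the short sketch below recounts the ISDE+ column (five marks per problem, M = 2 to 10, copied from the table above) and reproduces its 38/18/24 entry.

```python
from collections import Counter


def wins_ties_losses(marks: str) -> tuple:
    """Tally per-instance marks: '+' win, '≈' tie, '−' (U+2212) loss."""
    c = Counter(marks)
    return c["+"], c["≈"], c["−"]


# ISDE+ column, five marks per problem (M = 2, 4, 6, 8, 10)
isde_marks = ("++++≈" "++−−−" "≈+−−−" "+−≈−≈" "+++++" "≈+−≈≈" "+−−≈≈"  # DTLZ1-7
              "++−−+" "≈++++" "+−−++" "+++≈+" "++≈−+" "≈≈≈−≈" "++−−+"  # WFG1-7
              "+++−−" "≈−−−≈")                                          # WFG8-9
print(wins_ties_losses(isde_marks))  # (38, 18, 24)
```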

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Dutta, S.; M, S.S.R.; Mallipeddi, R.; Das, K.N.; Lee, D.-G. A Mating Selection Based on Modified Strengthened Dominance Relation for NSGA-III. Mathematics 2021, 9, 2837. https://doi.org/10.3390/math9222837


Chicago/Turabian style: Dutta, Saykat, Sri Srinivasa Raju M, Rammohan Mallipeddi, Kedar Nath Das, and Dong-Gyu Lee. 2021. "A Mating Selection Based on Modified Strengthened Dominance Relation for NSGA-III" Mathematics 9, no. 22: 2837. https://doi.org/10.3390/math9222837
