Abstract

In this paper, we complement a recent study by H.A. Mombeni, B. Masouri and M.R. Akhoond by introducing five new asymmetric kernel c.d.f. estimators on the half-line $[0,\infty)$, namely the Gamma, inverse Gamma, LogNormal, inverse Gaussian and reciprocal inverse Gaussian kernel c.d.f. estimators. For these five new estimators, we prove the asymptotic normality and derive asymptotic expressions for the bias, variance, mean squared error and mean integrated squared error. A numerical study then compares the performance of the five new c.d.f. estimators against traditional methods and the Birnbaum–Saunders and Weibull kernel c.d.f. estimators from Mombeni, Masouri and Akhoond. Using the same experimental design, we show that the LogNormal and Birnbaum–Saunders kernel c.d.f. estimators perform the best overall, while the other asymmetric kernel estimators are sometimes better than, and always at least competitive with, the boundary kernel method from C. Tenreiro.

Keywords:

asymmetric kernels; asymptotic statistics; nonparametric statistics; Gamma kernel; inverse Gamma kernel; LogNormal kernel; inverse Gaussian kernel; reciprocal inverse Gaussian kernel; Birnbaum–Saunders kernel; Weibull kernel

MSC:

Primary: 62G05; Secondary: 60F05, 62G20

In the context of density estimation, asymmetric kernel estimators were introduced by Aitchison and Lauder [1] on the simplex and studied theoretically for the first time by Chen [2] on $[0,1]$ (using a Beta kernel), and by Chen [3] on $[0,\infty)$ (using a Gamma kernel). These estimators are designed so that the bulk of the kernel function varies with each point x in the support of the target density. More specifically, the parameters of the kernel function can vary in a way that makes the mode, the median or the mean equal to x. This variable smoothing allows asymmetric kernel estimators to have a smaller bias than traditional kernel estimators (see, e.g., Rosenblatt [4], Parzen [5]) near the boundary of the support. Since the variable smoothing is integrated directly in the parametrization of the kernel function, asymmetric kernel estimators are also usually simpler to implement than boundary kernel methods (see, e.g., Gasser and Müller [6], Rice [7], Gasser et al. [8], Müller [9], Zhang and Karunamuni [10,11]). For these two reasons, asymmetric kernel estimators are, by now, well-known solutions to the boundary bias problem from which traditional kernel estimators suffer. In the past twenty years, various asymmetric kernels have been considered in the literature on density estimation:

- Beta kernel, when the target density is supported on $[0,1]$, see, e.g., Chen [2], Bouezmarni and Rolin [12], Renault and Scaillet [13], Fernandes and Monteiro [14], Hirukawa [15], Bouezmarni and Rombouts [16], Zhang and Karunamuni [17], Bertin and Klutchnikoff [18,19], Igarashi [20];

- Gamma, inverse Gamma, LogNormal, inverse Gaussian, reciprocal inverse Gaussian, Birnbaum–Saunders and Weibull kernels, when the target density is supported on $[0,\infty)$, see, e.g., Chen [3], Jin and Kawczak [21], Scaillet [22], Bouezmarni and Scaillet [23], Fernandes and Monteiro [14], Bouezmarni and Rombouts [16], Bouezmarni and Rombouts [24], Bouezmarni and Rombouts [25], Igarashi and Kakizawa [26,27], Charpentier and Flachaire [28], Igarashi [29], Zougab and Adjabi [30], Kakizawa and Igarashi [31], Kakizawa [32], Zougab et al. [33], Zhang [34], Kakizawa [35];

- Dirichlet kernel, when the target density is supported on the d-dimensional unit simplex, see Aitchison and Lauder [1] and the first theoretical study by Ouimet and Tolosana-Delgado [36];

- Continuous associated kernels, the aim of which is to unify the theory of asymmetric kernels with the one for traditional kernels in both the univariate and multivariate settings, see, e.g., Kokonendji and Libengué Dobélé-Kpoka [37], Kokonendji and Somé [38,39].

The interested reader is referred to Hirukawa [40] and Section 2 of Ouimet and Tolosana-Delgado [36] for a review of some of these papers and an extensive list of papers dealing with asymmetric kernels in other settings.

In contrast, there are almost no papers dealing with the estimation of cumulative distribution functions (c.d.f.s) in the literature on asymmetric kernels. In fact, to the best of our knowledge, Mombeni et al. [41] seems to be the first (and only) paper in this direction if we exclude the closely related theory of Bernstein estimators. (In the setting of Bernstein estimators, c.d.f. estimation on compact sets was tackled, for example, by Babu et al. [42], Leblanc [43], Leblanc [44], Leblanc [45], Dutta [46], Jmaei et al. [47], Erdoğan et al. [48] and Wang et al. [49] in the univariate setting, and by Babu and Chaubey [50], Belalia [51], Dib et al. [52] and Ouimet [53,54] in the multivariate setting. In [55], the authors introduced Bernstein estimators with Poisson weights (also called Szasz estimators) for the estimation of c.d.f.s that are supported on $[0,\infty)$, see also Ouimet [56].)

In the present paper, we complement the study reported in [41] by introducing five new asymmetric kernel c.d.f. estimators, namely the Gamma, inverse Gamma, LogNormal, inverse Gaussian and reciprocal inverse Gaussian kernel c.d.f. estimators. Our goal is to prove several asymptotic properties for these five new estimators (bias, variance, mean squared error, mean integrated squared error and asymptotic normality) and compare their numerical performance against traditional methods and against the Birnbaum–Saunders and Weibull kernel c.d.f. estimators from [41]. As we will see in the discussion of the results (Section 9), the LogNormal and Birnbaum–Saunders kernel c.d.f. estimators perform the best overall, while the other asymmetric kernel estimators are sometimes better but always at least competitive against the boundary kernel method from [57].

1. The Models

Let $X_1, X_2, \dots, X_n$ be a sequence of i.i.d. observations from an unknown cumulative distribution function F supported on the half-line $[0,\infty)$. We consider the following seven asymmetric kernel estimators (the first five are new):

where $b > 0$ is a smoothing (or bandwidth) parameter, and

denote, respectively, the survival function of the

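- Gamma distribution (with the shape/scale parametrization);

- inverse Gamma distribution (with the shape/scale parametrization);

- LogNormal distribution;

- inverse Gaussian distribution;

- reciprocal inverse Gaussian distribution;

- Birnbaum–Saunders distribution;

- Weibull distribution.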

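The function $\Gamma(\alpha, x) = \int_x^{\infty} t^{\alpha - 1} e^{-t} \, \mathrm{d}t$ denotes the upper incomplete gamma function (where $\Gamma(\alpha) = \Gamma(\alpha, 0)$), and $\Phi$ denotes the c.d.f. of the standard normal distribution. The parametrizations are chosen so that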

- The mode of the kernel function in (1) is x;

In this paper, we will compare the numerical performance of the above seven asymmetric kernel c.d.f. estimators against the following three traditional estimators (K here is the c.d.f. of a kernel function):

which denote, respectively, the ordinary kernel (OK) c.d.f. estimator (from Tiago de Oliveira [58], Nadaraja [59] or Watson and Leadbetter [60]), the boundary kernel (BK) c.d.f. estimator (from Example 2.3 in [57]) and the empirical c.d.f. (EDF) estimator.
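To make the construction concrete, here is a minimal Python sketch of one asymmetric kernel c.d.f. estimator next to the empirical c.d.f. It assumes that the LogNormal kernel estimator averages the LogNormal survival function over the observations, with log-location $\log x$ and log-scale $\sqrt{b}$. This parametrization, the function names and the toy sample are assumptions made for illustration only; they are not taken from the authors' R code.

```python
import math
from typing import Sequence


def std_normal_cdf(z: float) -> float:
    """C.d.f. of the standard normal distribution, via the error function."""
    return 0.5 * (1.0 + math.erf(z / math.sqrt(2.0)))


def lognormal_survival(t: float, mu: float, sigma: float) -> float:
    """Survival function of a LogNormal(mu, sigma) distribution at t > 0."""
    return 1.0 - std_normal_cdf((math.log(t) - mu) / sigma)


def ln_kernel_cdf(x: float, sample: Sequence[float], b: float) -> float:
    """LogNormal kernel c.d.f. estimate at x > 0.

    Assumed parametrization (hypothetical): mu = log(x), sigma = sqrt(b).
    """
    mu, sigma = math.log(x), math.sqrt(b)
    return sum(lognormal_survival(xi, mu, sigma) for xi in sample) / len(sample)


def empirical_cdf(x: float, sample: Sequence[float]) -> float:
    """Empirical c.d.f.: proportion of observations less than or equal to x."""
    return sum(xi <= x for xi in sample) / len(sample)


toy_sample = [0.5, 1.0, 2.0]  # positive toy data, for illustration only
for point in (0.5, 1.0, 1.5, 2.0):
    print(point, ln_kernel_cdf(point, toy_sample, b=0.1), empirical_cdf(point, toy_sample))
```

Under this assumed parametrization, a very small bandwidth makes the kernel estimate track the empirical c.d.f. away from the observations, while larger values of b smooth out its steps.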

2. Outline, Assumptions and Notation

2.1. Outline

In Sections 3–7, the asymptotic normality, and the asymptotic expressions for the bias, variance, mean squared error (MSE) and mean integrated squared error (MISE), are stated for the Gam, IGam, LN, IGau and RIG kernel c.d.f. estimators, respectively. The proofs can be found in Appendices A–E, respectively. Aside from the asymptotic normality (which can easily be deduced), these results were obtained for the Birnbaum–Saunders and Weibull kernel c.d.f. estimators in [41]. In Section 8, we compare the performance of all seven asymmetric kernel estimators above with the three traditional estimators (OK, BK and EDF) defined in (8)–(10). A discussion of the results and our conclusion follow in Section 9 and Section 10. Technical calculations for the proofs of the asymptotic results are gathered in Appendix F.

2.2. Assumptions

Throughout the paper, we make the following two basic assumptions:

- The target c.d.f. F has two continuous and bounded derivatives;

- The smoothing (or bandwidth) parameter $b = b(n) > 0$ satisfies $b \to 0$ as $n \to \infty$.

2.3. Notation

Throughout the paper, the notation $u = \mathcal{O}(v)$ means that $\limsup |u / v| < C < \infty$ as $n \to \infty$. The positive constant C can depend on the target c.d.f. F, but no other variable unless explicitly written as a subscript. For example, if C depends on a given point $x \in (0,\infty)$, we would write $u = \mathcal{O}_x(v)$. Similarly, the notation $u = o(v)$ means that $\lim |u / v| = 0$ as $n \to \infty$. Subscripts indicate which parameters the convergence rate can depend on. The symbol $\mathcal{D}$ over an arrow ‘⟶’ will denote the convergence in law (or distribution).

3. Asymptotic Properties of the c.d.f. Estimator with Gam Kernel

In this section, we find the asymptotic properties of the Gamma (Gam) kernel estimator defined in (1).

Lemma 1

(Bias and variance). For any given ,

Corollary 1

(Mean squared error). For any given ,

In particular, if , the asymptotically optimal choice of b, with respect to , is

with

Proposition 1

(Mean integrated squared error). Assuming that the target density satisfies

then we have

In particular, if , the asymptotically optimal choice of b, with respect to , is

with

Proposition 2

(Asymptotic normality). For any such that , we have the following convergence in distribution:

where . In particular, Lemma 1 implies

for any constant .
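As a sketch of how an asymptotically optimal bandwidth of this kind is typically obtained, suppose (as an assumption of this illustration) that the MSE expansion has a leading variance term $n^{-1} F(x)\{1 - F(x)\}$, a negative second-order variance term of order $n^{-1} b^{1/2}$ and a squared bias of order $b^2$. The symbols $B(x)$ and $V(x)$ below are hypothetical placeholders for the bias and variance constants and do not reproduce the specific constants of the Gam kernel.

```latex
% Hypothetical placeholders: B(x) is a leading bias constant and V(x) > 0 a
% second-order variance constant; neither is the paper's explicit expression.
\mathrm{MSE}(b) \approx \frac{F(x)\{1 - F(x)\}}{n} - \frac{b^{1/2}\, V(x)}{n} + b^2 B^2(x),
\qquad
\frac{\partial}{\partial b}\, \mathrm{MSE}(b)
  = -\frac{V(x)}{2 n\, b^{1/2}} + 2 b\, B^2(x) = 0
\;\Longrightarrow\;
b_{\mathrm{opt}} = n^{-2/3} \left[\frac{V(x)}{4 B^2(x)}\right]^{2/3},
\qquad
\mathrm{MSE}(b_{\mathrm{opt}}) \approx \frac{F(x)\{1 - F(x)\}}{n}
  - \frac{3}{4}\, n^{-4/3} \left[\frac{V^4(x)}{4 B^2(x)}\right]^{1/3}.
```

Under this assumed form, the smoothing only improves on the leading term $n^{-1} F(x)\{1 - F(x)\}$ at the second order, which is the usual situation for kernel c.d.f. estimators.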

4. Asymptotic Properties of the c.d.f. Estimator with IGam Kernel

In this section, we find the asymptotic properties of the inverse Gamma (IGam) kernel estimator defined in (2).

Lemma 2

(Bias and variance). For any given ,

Corollary 2

(Mean squared error). For any given ,

In particular, if , the asymptotically optimal choice of b, with respect to , is

with

Proposition 3

(Mean integrated squared error). Assuming that the target density satisfies

then we have

In particular, if , the asymptotically optimal choice of b, with respect to , is

with

Proposition 4

(Asymptotic normality). For any such that , we have the following convergence in distribution:

where . In particular, Lemma 2 implies

for any constant .

5. Asymptotic Properties of the c.d.f. Estimator with LN Kernel

In this section, we find the asymptotic properties of the LogNormal (LN) kernel estimator defined in (3).

Lemma 3

(Bias and variance). For any given ,

Corollary 3

(Mean squared error). For any given ,

In particular, if , the asymptotically optimal choice of b, with respect to , is

with

Proposition 5

(Mean integrated squared error). Assuming that the target density satisfies

then we have

In particular, if , the asymptotically optimal choice of b, with respect to , is

with

Proposition 6

(Asymptotic normality). For any such that , we have the following convergence in distribution:

where . In particular, Lemma 3 implies

for any constant .

6. Asymptotic Properties of the c.d.f. Estimator with IGau Kernel

In this section, we find the asymptotic properties of the inverse Gaussian (IGau) kernel estimator defined in (4).

Lemma 4

(Bias and variance). For any given ,

where .

Corollary 4

(Mean squared error). For any given ,

where . The quantity needs to be approximated numerically. In particular, if , the asymptotically optimal choice of b, with respect to , is

with

Proposition 7

(Mean integrated squared error). Assuming that the target density satisfies

where , then we have

The quantity needs to be approximated numerically. In particular, if , the asymptotically optimal choice of b, with respect to , is

with

Proposition 8

(Asymptotic normality). For any such that , we have the following convergence in distribution:

where . In particular, Lemma 4 implies

for any constant .

7. Asymptotic Properties of the c.d.f. Estimator with RIG Kernel

In this section, we find the asymptotic properties of the reciprocal inverse Gaussian (RIG) kernel estimator defined in (5).

Lemma 5

(Bias and variance). For any given ,

where .

Corollary 5

(Mean squared error). For any given ,

where . The quantity needs to be approximated numerically. In particular, if , the asymptotically optimal choice of b, with respect to , is

with

Proposition 9

(Mean integrated squared error). Assuming that the target density satisfies

where , then we have

The quantity needs to be approximated numerically. In particular, if , the asymptotically optimal choice of b, with respect to , is

with

Proposition 10

(Asymptotic normality). For any such that , we have the following convergence in distribution:

where . In particular, Lemma 5 implies

for any constant .

8. Numerical Study

As in [41], we generated samples of size $n = 256$ and $n = 1000$ from eight target distributions:

- Burr(1, 3, 1), with the following parametrization for the density function:

- Gamma(0.6, 2), with the following parametrization for the density function:

- Gamma(4, 2), with the following parametrization for the density function:

- GeneralizedPareto(0.4, 1, 0), with the following parametrization for the density function:

- HalfNormal(1), with the following parametrization for the density function:

- LogNormal(0, 0.75), with the following parametrization for the density function:

- Weibull(1.5, 1.5), with the following parametrization for the density function:

- Weibull(3, 2), with the following parametrization for the density function:

For each of the eight target distributions ($i = 1, \dots, 8$), each of the ten estimators ($j = 1, \dots, 10$), each sample size ($n = 256, 1000$), and each sample (indexed by $k$), we calculated the integrated squared errors (ISEs)

where

- denotes the estimator from (1) applied to the k-th sample;

- denotes the estimator from (2) applied to the k-th sample;

- denotes the estimator from (3) applied to the k-th sample;

- denotes the estimator from (4) applied to the k-th sample;

- denotes the estimator from (5) applied to the k-th sample;

- denotes the estimator from (6) applied to the k-th sample;

- denotes the estimator from (7) applied to the k-th sample;

- , where

- denotes the c.d.f. of the Epanechnikov kernel;

- is selected by minimizing the Leave-None-Out criterion from page 197 in [61];

- is the boundary modified kernel estimator from Example 2.3 in [57], where

- denotes the c.d.f. of the Epanechnikov kernel;

- is selected by minimizing the Cross-Validation criterion from page 180 in [57];

- is the empirical c.d.f. applied to the k-th sample.
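For readers less familiar with the traditional competitor, the following Python sketch implements an ordinary kernel c.d.f. estimator with the c.d.f. of the Epanechnikov kernel. The bandwidth `h` is fixed by hand here, which is a simplification for illustration: the selection criteria mentioned above are not implemented, and the function names and toy data are hypothetical.

```python
from typing import Sequence


def epanechnikov_cdf(u: float) -> float:
    """C.d.f. of the Epanechnikov kernel 0.75 * (1 - u^2) on [-1, 1]."""
    if u <= -1.0:
        return 0.0
    if u >= 1.0:
        return 1.0
    return 0.25 * (2.0 + 3.0 * u - u ** 3)


def ok_cdf(x: float, sample: Sequence[float], h: float) -> float:
    """Ordinary kernel c.d.f. estimate at x with bandwidth h > 0."""
    return sum(epanechnikov_cdf((x - xi) / h) for xi in sample) / len(sample)


toy_sample = [0.1, 0.2, 0.9]  # nonnegative toy data, for illustration only
h = 0.5                       # hypothetical fixed bandwidth

# The estimate at the boundary x = 0 is strictly positive although every
# observation is positive: the symmetric kernel spills mass below zero.
print(ok_cdf(0.0, toy_sample, h))
```

The positive value at the boundary illustrates the boundary bias problem that motivates both the boundary kernel correction and the asymmetric kernels.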

Everywhere in our R code, we approximated the integrals on $(0,\infty)$ using the integral function from the R package pracma (the base function integrate had serious precision issues). Table 1 below shows the mean and standard deviation of the ISEs, i.e.,

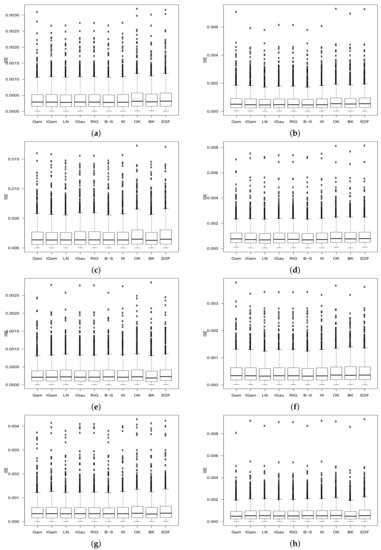

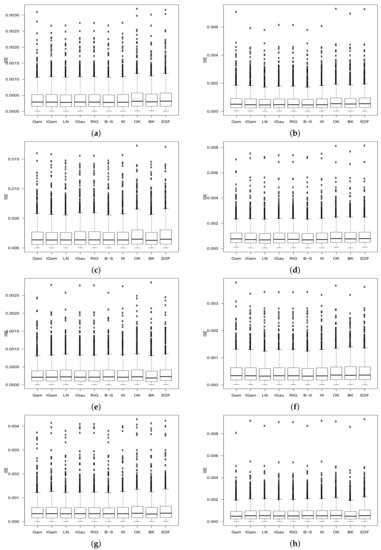

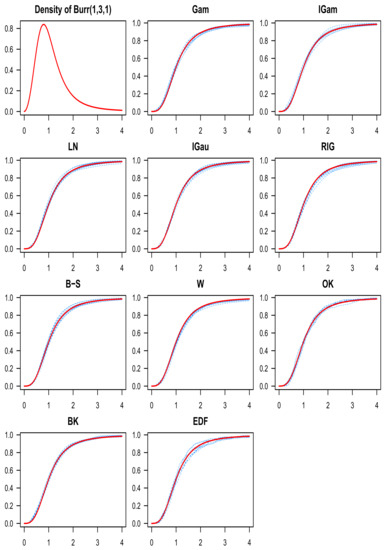

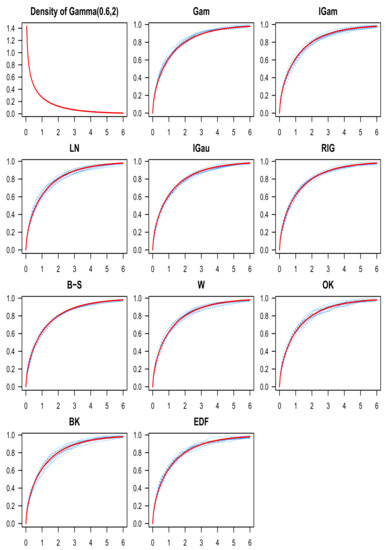

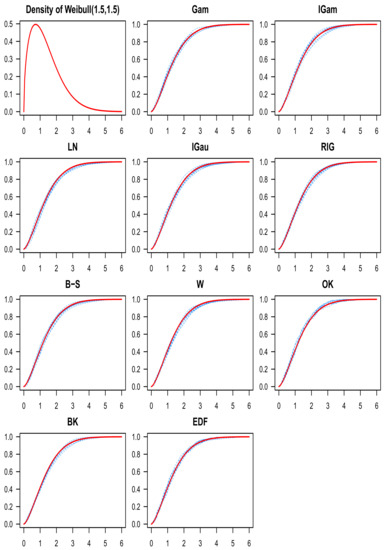

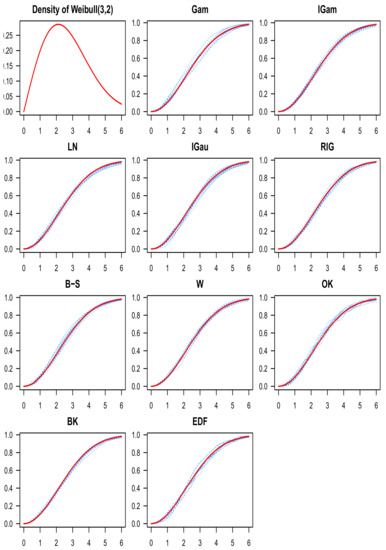

for the eight target distributions ($i = 1, \dots, 8$), the ten estimators ($j = 1, \dots, 10$) and the two sample sizes ($n = 256, 1000$). All the values presented in the table have been multiplied by a common scaling factor. In Table 2, we computed, for each target distribution and each sample size, the difference between the means and the lowest mean for the corresponding target distribution and sample size (i.e., the means minus the mean of the best estimator on the corresponding line). The totals of those differences are also calculated for each sample size on the two “total” lines. Figure 1 gives a better idea of the distribution of the ISEs by displaying boxplots of the ISEs for every target distribution and every estimator, when the sample size is $n = 1000$. Finally, Figures 2–9 (one figure for each of the eight target distributions) show a collection of ten c.d.f. estimates from each of the ten estimators when the sample size is $n = 256$.
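The Monte Carlo summary described above can be sketched in a few lines of Python. The example below is only an illustration under stated assumptions: it uses the empirical c.d.f. as the estimator, a Weibull target with shape and scale both equal to 1.5, a plain trapezoidal rule on a truncated interval instead of pracma::integral, and 20 replications (a hypothetical number chosen for speed). All function names are made up for this sketch.

```python
import bisect
import math
import random
from statistics import mean, stdev


def weibull_cdf(x, shape=1.5, scale=1.5):
    """C.d.f. of a Weibull distribution (shape and scale both 1.5 here)."""
    return 1.0 - math.exp(-((x / scale) ** shape)) if x > 0 else 0.0


def make_empirical_cdf(sample):
    """Return the empirical c.d.f. of `sample` as a function of x."""
    ordered = sorted(sample)
    return lambda x: bisect.bisect_right(ordered, x) / len(ordered)


def integrated_squared_error(estimate, target, upper=12.0, steps=1200):
    """Trapezoidal approximation of the integral over [0, upper] of
    (estimate(x) - target(x))^2; the truncation at `upper` is an assumption."""
    h = upper / steps
    values = [(estimate(i * h) - target(i * h)) ** 2 for i in range(steps + 1)]
    return h * (sum(values) - 0.5 * (values[0] + values[-1]))


rng = random.Random(0)  # fixed seed so that the run is reproducible
ises = []
for _ in range(20):  # hypothetical number of replications
    # random.weibullvariate takes (scale, shape); both equal 1.5 here.
    sample = [rng.weibullvariate(1.5, 1.5) for _ in range(256)]
    ises.append(integrated_squared_error(make_empirical_cdf(sample), weibull_cdf))

print(f"mean ISE = {mean(ises):.5f}, std = {stdev(ises):.5f}")
```

Replacing `make_empirical_cdf(sample)` with any other estimator of the c.d.f. gives the corresponding column of the summary, up to the scaling applied in the table.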

Here are the results, which we discuss briefly in Section 9:

Figure 1.

Boxplots of the ISEs for the eight target distributions and the ten estimators, when the sample size is n = 1000. (a) Burr(1, 3, 1). (b) Gamma(0.6, 2). (c) Gamma(4, 2). (d) GeneralizedPareto(0.4, 1, 0). (e) HalfNormal(1). (f) LogNormal(0, 0.75). (g) Weibull(1.5, 1.5). (h) Weibull(3, 2).

Table 1.

The mean and standard deviation of the ISEs for the eight target distributions ($i = 1, \dots, 8$), the ten estimators ($j = 1, \dots, 10$) and the two sample sizes ($n = 256, 1000$). All the values presented in the table have been multiplied by a common scaling factor. The ordinary kernel estimator is denoted by OK, the boundary kernel estimator is denoted by BK, and the empirical c.d.f. is denoted by EDF. For each line in the table, the lowest means are highlighted in cyan.

| n | i | Gam Mean | Gam Std. | IGam Mean | IGam Std. | LN Mean | LN Std. | IGau Mean | IGau Std. | RIG Mean | RIG Std. | B-S Mean | B-S Std. | W Mean | W Std. | OK Mean | OK Std. | BK Mean | BK Std. | EDF Mean | EDF Std. |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 256 | 1 | 1.39 | 1.27 | 1.37 | 1.34 | 1.31 | 1.26 | 1.37 | 1.32 | 1.37 | 1.32 | 1.31 | 1.26 | 1.37 | 1.32 | 1.54 | 1.43 | 1.47 | 1.34 | 1.54 | 1.44 |
| 256 | 2 | 2.59 | 2.36 | 2.50 | 2.53 | 2.36 | 2.42 | 2.49 | 2.46 | 2.49 | 2.47 | 2.36 | 2.42 | 2.50 | 2.51 | 2.76 | 2.44 | 2.67 | 2.57 | 2.76 | 2.45 |
| 256 | 3 | 6.70 | 6.28 | 6.77 | 6.58 | 6.62 | 6.28 | 6.69 | 6.45 | 6.69 | 6.45 | 6.62 | 6.28 | 6.74 | 6.54 | 7.44 | 7.01 | 6.70 | 6.39 | 7.44 | 7.00 |
| 256 | 4 | 3.74 | 3.14 | 3.60 | 3.27 | 3.36 | 3.15 | 3.61 | 3.20 | 3.61 | 3.21 | 3.36 | 3.14 | 3.60 | 3.26 | 3.96 | 3.24 | 3.80 | 3.27 | 3.97 | 3.24 |
| 256 | 5 | 1.14 | 1.10 | 1.18 | 1.13 | 1.18 | 1.07 | 1.17 | 1.13 | 1.17 | 1.13 | 1.18 | 1.07 | 1.17 | 1.12 | 1.26 | 1.19 | 1.10 | 1.14 | 1.26 | 1.19 |
| 256 | 6 | 1.93 | 1.83 | 1.91 | 1.89 | 1.81 | 1.80 | 1.91 | 1.87 | 1.91 | 1.87 | 1.81 | 1.80 | 1.91 | 1.88 | 2.13 | 1.94 | 2.05 | 1.93 | 2.13 | 1.95 |
| 256 | 7 | 1.75 | 1.82 | 1.77 | 1.99 | 1.68 | 1.83 | 1.76 | 1.96 | 1.76 | 1.96 | 1.68 | 1.83 | 1.76 | 1.95 | 1.95 | 1.93 | 1.73 | 2.04 | 1.95 | 1.92 |
| 256 | 8 | 2.69 | 2.71 | 2.75 | 2.78 | 2.81 | 2.66 | 2.67 | 2.71 | 2.67 | 2.71 | 2.81 | 2.66 | 2.75 | 2.75 | 3.02 | 2.88 | 2.56 | 2.59 | 3.03 | 2.88 |
| 1000 | 1 | 0.40 | 0.36 | 0.39 | 0.36 | 0.38 | 0.35 | 0.39 | 0.36 | 0.39 | 0.36 | 0.38 | 0.35 | 0.39 | 0.36 | 0.43 | 0.39 | 0.41 | 0.36 | 0.43 | 0.39 |
| 1000 | 2 | 0.72 | 0.70 | 0.70 | 0.69 | 0.67 | 0.67 | 0.70 | 0.69 | 0.70 | 0.69 | 0.67 | 0.67 | 0.70 | 0.69 | 0.75 | 0.71 | 0.73 | 0.72 | 0.75 | 0.71 |
| 1000 | 3 | 2.01 | 2.09 | 2.05 | 2.22 | 2.02 | 2.16 | 2.04 | 2.15 | 2.04 | 2.15 | 2.02 | 2.16 | 2.05 | 2.20 | 2.23 | 2.29 | 1.99 | 2.09 | 2.23 | 2.30 |
| 1000 | 4 | 0.99 | 0.79 | 0.97 | 0.82 | 0.93 | 0.80 | 0.97 | 0.81 | 0.97 | 0.81 | 0.93 | 0.80 | 0.97 | 0.82 | 1.03 | 0.82 | 1.00 | 0.82 | 1.03 | 0.83 |
| 1000 | 5 | 0.31 | 0.31 | 0.31 | 0.31 | 0.31 | 0.30 | 0.31 | 0.31 | 0.31 | 0.31 | 0.31 | 0.30 | 0.31 | 0.31 | 0.33 | 0.32 | 0.30 | 0.32 | 0.33 | 0.32 |
| 1000 | 6 | 0.47 | 0.43 | 0.47 | 0.43 | 0.46 | 0.42 | 0.47 | 0.43 | 0.47 | 0.43 | 0.46 | 0.42 | 0.47 | 0.43 | 0.50 | 0.45 | 0.49 | 0.43 | 0.50 | 0.45 |
| 1000 | 7 | 0.46 | 0.46 | 0.46 | 0.48 | 0.44 | 0.45 | 0.46 | 0.48 | 0.46 | 0.48 | 0.44 | 0.45 | 0.46 | 0.48 | 0.49 | 0.50 | 0.46 | 0.48 | 0.49 | 0.50 |
| 1000 | 8 | 0.72 | 0.74 | 0.74 | 0.75 | 0.75 | 0.74 | 0.73 | 0.75 | 0.73 | 0.75 | 0.75 | 0.74 | 0.74 | 0.75 | 0.78 | 0.80 | 0.70 | 0.72 | 0.78 | 0.81 |

Table 2.

For each of the eight target distributions ($i = 1, \dots, 8$) and each of the two sample sizes ($n = 256, 1000$), a cell represents the mean of the ISEs minus the lowest mean for that line (i.e., minus the mean of the best estimator for that specific target distribution and sample size). For each estimator ($j = 1, \dots, 10$) and each sample size, the total of those differences to the best means is calculated on the line called “total”. For each sample size, the lowest totals are highlighted in cyan.

Each cell is the difference with the lowest mean on the corresponding line.

| n | i | Gam | IGam | LN | IGau | RIG | B-S | W | OK | BK | EDF |
|---|---|---|---|---|---|---|---|---|---|---|---|
| 256 | 1 | 0.08 | 0.06 | 0.00 | 0.06 | 0.06 | 0.00 | 0.06 | 0.23 | 0.16 | 0.23 |
| 256 | 2 | 0.23 | 0.14 | 0.00 | 0.14 | 0.13 | 0.00 | 0.14 | 0.40 | 0.32 | 0.40 |
| 256 | 3 | 0.08 | 0.15 | 0.01 | 0.08 | 0.07 | 0.00 | 0.12 | 0.82 | 0.09 | 0.82 |
| 256 | 4 | 0.38 | 0.24 | 0.00 | 0.25 | 0.24 | 0.00 | 0.23 | 0.60 | 0.43 | 0.60 |
| 256 | 5 | 0.05 | 0.08 | 0.09 | 0.07 | 0.07 | 0.08 | 0.08 | 0.16 | 0.00 | 0.17 |
| 256 | 6 | 0.12 | 0.10 | 0.00 | 0.10 | 0.10 | 0.00 | 0.10 | 0.32 | 0.24 | 0.32 |
| 256 | 7 | 0.07 | 0.10 | 0.00 | 0.09 | 0.09 | 0.00 | 0.08 | 0.27 | 0.05 | 0.27 |
| 256 | 8 | 0.13 | 0.19 | 0.25 | 0.11 | 0.11 | 0.25 | 0.19 | 0.46 | 0.00 | 0.46 |
| 256 | total | 1.14 | 1.07 | 0.35 | 0.89 | 0.88 | 0.34 | 0.99 | 3.26 | 1.29 | 3.28 |
| 1000 | 1 | 0.02 | 0.01 | 0.00 | 0.01 | 0.01 | 0.00 | 0.01 | 0.05 | 0.03 | 0.05 |
| 1000 | 2 | 0.04 | 0.02 | 0.00 | 0.02 | 0.02 | 0.00 | 0.02 | 0.07 | 0.06 | 0.08 |
| 1000 | 3 | 0.02 | 0.06 | 0.03 | 0.05 | 0.05 | 0.03 | 0.06 | 0.24 | 0.00 | 0.24 |
| 1000 | 4 | 0.06 | 0.04 | 0.00 | 0.04 | 0.04 | 0.00 | 0.04 | 0.10 | 0.07 | 0.10 |
| 1000 | 5 | 0.01 | 0.02 | 0.02 | 0.01 | 0.01 | 0.02 | 0.02 | 0.03 | 0.00 | 0.03 |
| 1000 | 6 | 0.02 | 0.02 | 0.00 | 0.02 | 0.02 | 0.00 | 0.02 | 0.04 | 0.04 | 0.04 |
| 1000 | 7 | 0.01 | 0.02 | 0.00 | 0.02 | 0.02 | 0.00 | 0.02 | 0.05 | 0.02 | 0.05 |
| 1000 | 8 | 0.02 | 0.04 | 0.05 | 0.03 | 0.03 | 0.05 | 0.04 | 0.08 | 0.00 | 0.08 |
| 1000 | total | 0.20 | 0.23 | 0.10 | 0.20 | 0.20 | 0.10 | 0.24 | 0.66 | 0.22 | 0.68 |
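The construction of a line of Table 2 from the corresponding line of Table 1 can be checked with a short Python sketch. The input below is the row of rounded means for the first target distribution at n = 256; the variable and function names are hypothetical. Because the published differences were presumably computed from unrounded means, recomputing other lines from the rounded values of Table 1 may differ from Table 2 by 0.01 in some cells.

```python
ESTIMATORS = ["Gam", "IGam", "LN", "IGau", "RIG", "B-S", "W", "OK", "BK", "EDF"]

# Rounded means from Table 1 for target distribution i = 1 and n = 256.
means_i1_n256 = [1.39, 1.37, 1.31, 1.37, 1.37, 1.31, 1.37, 1.54, 1.47, 1.54]


def diff_with_lowest(means):
    """Subtract the lowest mean of the line from every mean (2 decimals)."""
    lowest = min(means)
    return [round(m - lowest, 2) for m in means]


diffs = diff_with_lowest(means_i1_n256)
for name, diff in zip(ESTIMATORS, diffs):
    print(f"{name:>4}: {diff:.2f}")
```

For this line the recomputed differences agree with the first line of Table 2, with LN and B-S at 0.00.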

Figure 2.

The Burr(1, 3, 1) density function appears on the top-left, and the target c.d.f. is depicted in red everywhere else. Each plot has ten estimates in blue for the Burr(1, 3, 1) c.d.f. using one of the ten estimators (the name of the corresponding kernel is indicated above each graph) and n = 256.

Figure 3.

The Gamma(0.6, 2) density function appears on the top-left, and the target c.d.f. is depicted in red everywhere else. Each plot has ten estimates in blue for the Gamma(0.6, 2) c.d.f. using one of the ten estimators (the name of the corresponding kernel is indicated above each graph) and n = 256.

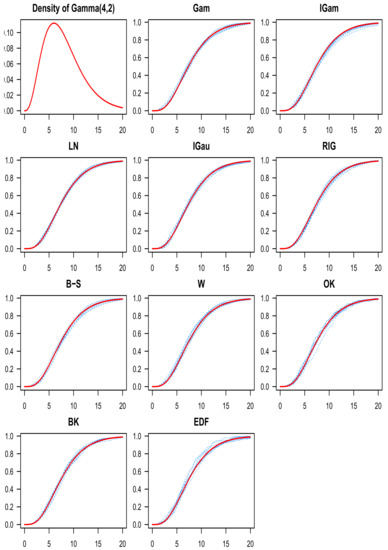

Figure 4.

The Gamma(4, 2) density function appears on the top-left, and the target c.d.f. is depicted in red everywhere else. Each plot has ten estimates in blue for the Gamma(4, 2) c.d.f. using one of the ten estimators (the name of the corresponding kernel is indicated above each graph) and n = 256.

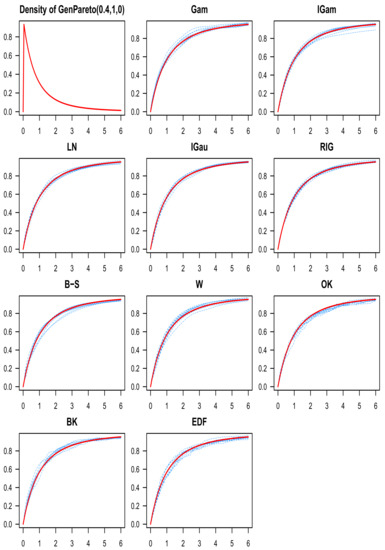

Figure 5.

The GeneralizedPareto(0.4, 1, 0) density function appears on the top-left, and the target c.d.f. is depicted in red everywhere else. Each plot has ten estimates in blue for the GeneralizedPareto(0.4, 1, 0) c.d.f. using one of the ten estimators (the name of the corresponding kernel is indicated above each graph) and n = 256.

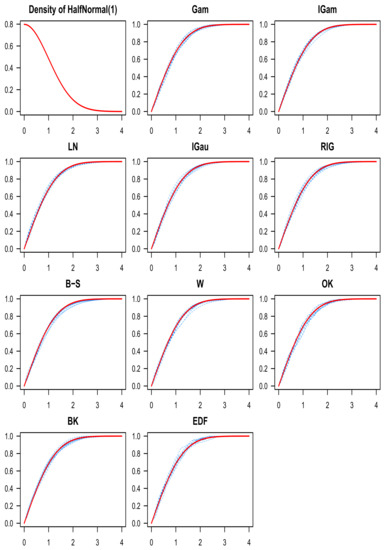

Figure 6.

The HalfNormal(1) density function appears on the top-left, and the target c.d.f. is depicted in red everywhere else. Each plot has ten estimates in blue for the HalfNormal(1) c.d.f. using one of the ten estimators (the name of the corresponding kernel is indicated above each graph) and n = 256.

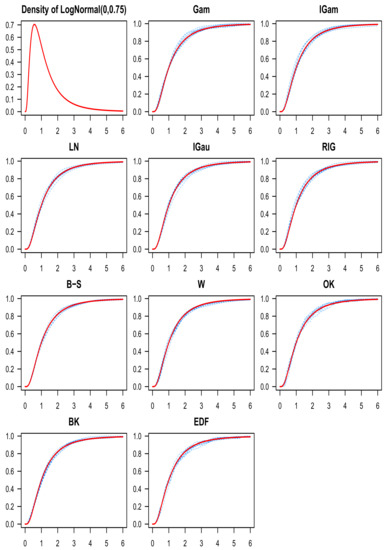

Figure 7.

The LogNormal(0, 0.75) density function appears on the top-left, and the target c.d.f. is depicted in red everywhere else. Each plot has ten estimates in blue for the LogNormal(0, 0.75) c.d.f. using one of the ten estimators (the name of the corresponding kernel is indicated above each graph) and n = 256.

Figure 8.

The Weibull(1.5, 1.5) density function appears on the top-left, and the target c.d.f. is depicted in red everywhere else. Each plot has ten estimates in blue for the Weibull(1.5, 1.5) c.d.f. using one of the ten estimators (the name of the corresponding kernel is indicated above each graph) and n = 256.

Figure 9.

The Weibull(3, 2) density function appears on the top-left, and the target c.d.f. is depicted in red everywhere else. Each plot has ten estimates in blue for the Weibull(3, 2) c.d.f. using one of the ten estimators (the name of the corresponding kernel is indicated above each graph) and n = 256.

9. Discussion of the Simulation Results

In Table 1, the mean and standard deviation of the ISEs are displayed for the eight target distributions ($i = 1, \dots, 8$), the ten estimators ($j = 1, \dots, 10$) and the two sample sizes ($n = 256, 1000$). All the values presented in the table have been multiplied by a common scaling factor. For each line in the table (i.e., for each target distribution and each sample size), the lowest means are highlighted in cyan. We see that the LogNormal (LN) and Birnbaum–Saunders (B–S) kernel c.d.f. estimators performed the best (had the lowest means) for the majority of the target distributions considered (for $i \in \{1, 2, 3, 4, 6, 7\}$ when $n = 256$, and for $i \in \{1, 2, 4, 6, 7\}$ when $n = 1000$). They also always did so as a pair, with the same mean up to the second decimal. For the remaining cases, the boundary kernel c.d.f. estimator (BK) from Tenreiro [57] had the lowest means. As expected, the ordinary kernel c.d.f. estimator and the empirical c.d.f. performed the worst. The standard deviations are fairly stable across all estimators for any given target distribution and sample size (this can also be seen in Figure 1), so our analysis focuses on the means. In [41], the authors reported that the empirical c.d.f. performed better than the BK estimator, but this has to be a programming error (especially since the bandwidth was optimized with a plug-in method). Overall, our means and standard deviations in Table 1 seem to be lower than the ones reported in [41], at least in part because we used a more precise option (the pracma::integral function in R) to approximate the integrals involved in the bandwidth selection procedures and the computation of the ISEs. In all cases, the asymmetric kernel estimators were at least competitive with the BK estimator in Table 1.

To give an idea of the shape of the eight target distributions and the corresponding estimates for each of the ten estimators, we plotted the eight target c.d.f.s and ten estimates for each estimator (one figure for each of the eight target distributions, ten graphs per figure for the ten estimators, and ten estimates per graph) in Figures 2–9 when the sample size is $n = 256$.

In Table 2, for each of the eight target distributions ($i = 1, \dots, 8$) and each of the two sample sizes ($n = 256, 1000$), a cell represents the mean of the ISEs minus the lowest mean for that line (i.e., minus the mean of the best estimator for that specific target distribution and sample size). For each estimator ($j = 1, \dots, 10$) and each sample size, the total of those differences to the best means is calculated on the line called “total”. For each sample size, the lowest totals are highlighted in cyan. Table 2 gives a clear picture of the asymmetric kernel c.d.f. estimators’ performance. Indeed, it shows that for each sample size ($n = 256, 1000$), the total of the differences to the best means is substantially lower for the LogNormal (LN) and Birnbaum–Saunders (B–S) kernel c.d.f. estimators than for all the other alternatives. For instance, the boundary kernel (BK) c.d.f. estimator would have been the go-to method in the past, but our results show that the total (over the eight target distributions) of the mean differences to the best means is more than three times lower for the LN and B–S kernel c.d.f. estimators than for the BK c.d.f. estimator when $n = 256$ (0.35 and 0.34 versus 1.29), and similarly, it is more than two times lower when $n = 1000$ (0.10 versus 0.22). Even if we put aside the best asymmetric kernel c.d.f. estimators, the totals of the mean differences to the best means for all the other asymmetric kernel c.d.f. estimators are also lower than for the BK c.d.f. estimator when $n = 256$, and they are in the same range (or better in the case of LN and B–S) when $n = 1000$. This means that all the asymmetric kernel estimators are overall better alternatives (or at least always remain competitive) compared to the BK estimator, although the advantage seems to dissipate (except for LN and B–S) when n increases.

10. Conclusions

In this paper, we considered five new asymmetric kernel c.d.f. estimators, namely the Gamma (Gam), inverse Gamma (IGam), LogNormal (LN), inverse Gaussian (IGau) and reciprocal inverse Gaussian (RIG) kernel c.d.f. estimators. We proved the asymptotic normality of these estimators and we also found asymptotic expressions for their bias, variance, mean squared error and mean integrated squared error. The expressions for the optimal bandwidth under the mean integrated squared error were used in each case to implement a bandwidth selection procedure in our simulation study. With the same experimental design as Mombeni et al. [41] (but with an improved approximation of the integrals involved in the bandwidth selection procedures and the computation of the ISEs), our results show that the LogNormal and Birnbaum–Saunders kernel c.d.f. estimators perform the best overall. The results also show that all seven asymmetric kernel c.d.f. estimators are better in some cases than, and always at least competitive with, the boundary kernel alternative presented by Tenreiro [57]. In that sense, all seven asymmetric kernel c.d.f. estimators are safe to use in place of more traditional methods. We recommend using the LogNormal and Birnbaum–Saunders kernel c.d.f. estimators in the future.

Author Contributions

Conceptualisation, methodology, investigation, formal analysis, software, coding and simulations, validation, visualisation, writing—original draft preparation, writing—review and editing, review of the literature, theoretical results and proofs, F.O.; software, coding and simulations, validation, P.L.d.M. All authors have read and agreed to the published version of the manuscript.

Funding

F. Ouimet is supported by postdoctoral fellowships from the NSERC (PDF) and the FRQNT (B3X supplement and B3XR).

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The R code for the simulations in Section 8 is available online.

Acknowledgments

We thank Benedikt Funke for reminding us of the representation , which helped tightening up the MSE and MISE results in Section 6 and Section 7. This research includes computations using the computational cluster Katana supported by Research Technology Services at UNSW Sydney. We thank the referees for their insightful remarks which led to improvements in the presentation of this paper.

Conflicts of Interest

The authors declare no conflict of interest.

Appendix A. Proof of the Results for the Gam Kernel

Proof of Lemma 1.

If T denotes a random variable with the density

then integration by parts yields

Now, we want to compute the expression for the variance. Let S be a random variable with density and note that has that particular distribution if are independent. Then, integration by parts and Corollary A1 yield, for any given ,

so that

This ends the proof. □

Proof of Proposition 2.

Note that , where

are i.i.d. and centered random variables. It suffices to show the following Lindeberg condition for double arrays (see, e.g., Section 1.9.3 in [62]): for every ,

where and . This follows from the fact that for all , and as by Lemma 1. □

Appendix B. Proof of the Results for the IGam Kernel

Proof of Lemma 2.

If T denotes a random variable with the density

then integration by parts yields (assuming )

Now, we want to compute the expression for the variance. Let S be a random variable with density and note that has that particular distribution if are independent. Then, integration by parts and Corollary A2 yield, for any given ,

so that

This ends the proof. □

Proof of Proposition 3.

Note that , where

are i.i.d. and centered random variables. It suffices to show the following Lindeberg condition for double arrays (see, e.g., Section 1.9.3 in [62]): for every ,

where and . This follows from the fact that for all , and as by Lemma 2. □

Appendix C. Proof of the Results for the LN Kernel

Proof of Lemma 3.

If T denotes a random variable with the density

then integration by parts yields

Now, we want to compute the expression for the variance. Let S be a random variable with density and note that has that particular distribution if are independent. Then, integration by parts and Corollary A3 yield, for any given ,

so that

This ends the proof. □

Proof of Proposition 5.

Note that , where

are i.i.d. and centered random variables. It suffices to show the following Lindeberg condition for double arrays (see, e.g., Section 1.9.3 in [62]): for every ,

where and . This follows from the fact that for all , and as by Lemma 3. □

Appendix D. Proof of the Results for the IGau Kernel

Proof of Lemma 4.

If T denotes a random variable with the density

then integration by parts yields

Now, we want to compute the expression for the variance. Let S be a random variable with density and note that , which can also be written as , has that particular distribution if are independent. Then, integration by parts, together with the fact that , yields, for any given ,

so that

This ends the proof. □

Proof of Proposition 7.

Note that , where

are i.i.d. and centered random variables. It suffices to show the following Lindeberg condition for double arrays (see, e.g., Section 1.9.3 in [62]): for every ,

where and . This follows from the fact that for all , and as by Lemma 4. □

Appendix E. Proof of the Results for the RIG Kernel

Proof of Lemma 5.

If T denotes a random variable with the density

then integration by parts yields

Now, we want to compute the expression for the variance. Let S be a random variable with density and note that , which can also be written as , has that particular distribution if are independent. Then, integration by parts, together with the fact that , yields, for any given ,

so that

This ends the proof. □

Proof of Proposition 9.

Note that , where

are i.i.d. and centered random variables. It suffices to show the following Lindeberg condition for double arrays (see, e.g., Section 1.9.3 in [62]): for every ,

where and . This follows from the fact that for all , and as by Lemma 5. □

Appendix F. Technical Lemmas

The lemma below computes the first two moments for the minimum of two i.i.d. random variables with a Gamma distribution. The proof is a slight generalization of the answer provided by Felix Marin in the following MathStackExchange post (https://math.stackexchange.com/questions/3910094/how-to-compute-this-double-integral-involving-the-gamma-function) (accessed on 15 September 2021).

Lemma A1.

Let , then

where denotes the gamma function. In particular, for all ,

Proof.

Assume throughout the proof that . By the simple change of variables , we have

By the integral representation of the Heaviside function

the above is

where denotes the Dirac delta function. The second term in the last brace is and the principal value is

where we crucially used the fact that to obtain the last equality. Putting all the work back in (A36), we obtain

The remaining integral can be evaluated using Ramanujan’s master theorem. Indeed, note that

Therefore,

By putting this result in (A38), we obtain

This ends the proof. □
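
As a rough numerical sanity check of the first moment in Lemma A1, the following Python sketch compares a Monte Carlo average with a closed form. The closed form used here, theta * (alpha - Gamma(alpha + 1/2) / (sqrt(pi) * Gamma(alpha))) for two i.i.d. Gamma variables with shape alpha and scale theta, is the standard expression obtained from min(X, Y) = (X + Y - |X - Y|) / 2; we assume, without claiming, that it agrees with the statement of the lemma. The function names are hypothetical, and only the first moment is checked.

```python
import math
import random


def expected_min_gamma(alpha: float, theta: float) -> float:
    """Closed form for E[min(X1, X2)], X1, X2 i.i.d. Gamma(shape alpha, scale theta).

    ASSUMED expression (standard result, not copied from the lemma):
    theta * (alpha - Gamma(alpha + 1/2) / (sqrt(pi) * Gamma(alpha))).
    """
    # Use log-gamma to avoid overflow of the gamma function for large alpha.
    ratio = math.exp(math.lgamma(alpha + 0.5) - math.lgamma(alpha))
    return theta * (alpha - ratio / math.sqrt(math.pi))


def monte_carlo_min_gamma(alpha: float, theta: float, n_draws: int, seed: int = 0) -> float:
    """Monte Carlo estimate of E[min(X1, X2)] for i.i.d. Gamma(alpha, theta) draws."""
    rng = random.Random(seed)
    total = 0.0
    for _ in range(n_draws):
        # random.gammavariate takes (shape, scale), so its mean is alpha * theta.
        x1 = rng.gammavariate(alpha, theta)
        x2 = rng.gammavariate(alpha, theta)
        total += min(x1, x2)
    return total / n_draws


if __name__ == "__main__":
    alpha, theta = 2.5, 1.5
    print("closed form :", expected_min_gamma(alpha, theta))
    print("Monte Carlo :", monte_carlo_min_gamma(alpha, theta, 100_000, seed=1))
```

For alpha = 1 the variables are exponential, the minimum of two of them is exponential with half the mean, and the closed form reduces to theta / 2, which gives a quick exact check of the expression.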

Corollary A1.

Let for some , then

The lemma below computes the first two moments for the minimum of two i.i.d. random variables with an inverse Gamma distribution.

Lemma A2.

Let and assume , then

where Φ denotes the c.d.f. of the standard normal distribution. In particular, for all ,

Proof.

Assume throughout the proof that . By the simple change of variables and the reparametrization , we have

We already evaluated this double integral in the proof of Lemma A1 (with instead of ). The above is

This ends the proof. □

Corollary A2.

Let for some and , then

The lemma below computes the first two moments for the minimum of two i.i.d. random variables with a LogNormal distribution.

Lemma A3.

Let , then

where Φ denotes the c.d.f. of the standard normal distribution. In particular, for all ,

Proof.

With the change of variables

we have

This ends the proof. □
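
The first moment in Lemma A3 can be checked numerically in the same spirit. The Python sketch below compares a Monte Carlo average with the closed form 2 * exp(mu + sigma^2 / 2) * Phi(-sigma / sqrt(2)) for the minimum of two i.i.d. LogNormal(mu, sigma) variables. This expression follows from a direct Gaussian computation and is assumed here to match the statement of the lemma; the function names are hypothetical, and only the first moment is checked.

```python
import math
import random


def std_normal_cdf(z: float) -> float:
    """C.d.f. of the standard normal distribution, via the error function."""
    return 0.5 * (1.0 + math.erf(z / math.sqrt(2.0)))


def expected_min_lognormal(mu: float, sigma: float) -> float:
    """Closed form for E[min(X1, X2)], X1, X2 i.i.d. LogNormal(mu, sigma).

    ASSUMED expression (standard result, not copied from the lemma):
    2 * exp(mu + sigma^2 / 2) * Phi(-sigma / sqrt(2)).
    """
    return 2.0 * math.exp(mu + 0.5 * sigma**2) * std_normal_cdf(-sigma / math.sqrt(2.0))


def monte_carlo_min_lognormal(mu: float, sigma: float, n_draws: int, seed: int = 0) -> float:
    """Monte Carlo estimate of E[min(X1, X2)] for i.i.d. LogNormal(mu, sigma) draws."""
    rng = random.Random(seed)
    total = 0.0
    for _ in range(n_draws):
        total += min(rng.lognormvariate(mu, sigma), rng.lognormvariate(mu, sigma))
    return total / n_draws


if __name__ == "__main__":
    mu, sigma = 0.3, 0.8
    print("closed form :", expected_min_lognormal(mu, sigma))
    print("Monte Carlo :", monte_carlo_min_lognormal(mu, sigma, 100_000, seed=1))
```

As sigma tends to 0 both variables concentrate at exp(mu), and the closed form tends to 2 * exp(mu) * Phi(0) = exp(mu), as expected.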

Corollary A3.

Let for some , then

References

- Aitchison, J.; Lauder, I.J. Kernel density estimation for compositional data. J. R. Stat. Soc. Ser. C 1985, 34, 129–137.

- Chen, S.X. Beta kernel estimators for density functions. Comput. Stat. Data Anal. 1999, 31, 131–145.

- Chen, S.X. Probability density function estimation using gamma kernels. Ann. Inst. Stat. Math. 2000, 52, 471–480.

- Rosenblatt, M. Remarks on some nonparametric estimates of a density function. Ann. Math. Stat. 1956, 27, 832–837.

- Parzen, E. On estimation of a probability density function and mode. Ann. Math. Stat. 1962, 33, 1065–1076.

- Gasser, T.; Müller, H.G. Kernel estimation of regression functions. In Smoothing Techniques for Curve Estimation; Springer: Berlin/Heidelberg, Germany, 1979; pp. 23–68.

- Rice, J. Boundary modification for kernel regression. Comm. Stat. A Theory Methods 1984, 13, 893–900.

- Gasser, T.; Müller, H.G.; Mammitzsch, V. Kernels for nonparametric curve estimation. J. R. Stat. Soc. Ser. B 1985, 47, 238–252.

- Müller, H.G. Smooth optimum kernel estimators near endpoints. Biometrika 1991, 78, 521–530.

- Zhang, S.; Karunamuni, R.J. On kernel density estimation near endpoints. J. Stat. Plann. Inference 1998, 70, 301–316.

- Zhang, S.; Karunamuni, R.J. On nonparametric density estimation at the boundary. J. Nonparametr. Stat. 2000, 12, 197–221.

- Bouezmarni, T.; Rolin, J.M. Consistency of the beta kernel density function estimator. Canad. J. Stat. 2003, 31, 89–98.

- Renault, O.; Scaillet, O. On the way to recovery: A nonparametric bias free estimation of recovery rate densities. J. Bank. Financ. 2004, 28, 2915–2931.

- Fernandes, M.; Monteiro, P.K. Central limit theorem for asymmetric kernel functionals. Ann. Inst. Stat. Math. 2005, 57, 425–442.

- Hirukawa, M. Nonparametric multiplicative bias correction for kernel-type density estimation on the unit interval. Comput. Stat. Data Anal. 2010, 54, 473–495.

- Bouezmarni, T.; Rombouts, J.V.K. Nonparametric density estimation for multivariate bounded data. J. Stat. Plann. Inference 2010, 140, 139–152.

- Zhang, S.; Karunamuni, R.J. Boundary performance of the beta kernel estimators. J. Nonparametr. Stat. 2010, 22, 81–104.

- Bertin, K.; Klutchnikoff, N. Minimax properties of beta kernel estimators. J. Stat. Plann. Inference 2011, 141, 2287–2297.

- Bertin, K.; Klutchnikoff, N. Adaptive estimation of a density function using beta kernels. ESAIM Probab. Stat. 2014, 18, 400–417.

- Igarashi, G. Bias reductions for beta kernel estimation. J. Nonparametr. Stat. 2016, 28, 1–30.

- Jin, X.; Kawczak, J. Birnbaum-Saunders and lognormal kernel estimators for modelling durations in high frequency financial data. Ann. Econ. Financ. 2003, 4, 103–124. Available online: http://aeconf.com/Articles/May2003/aef040106.pdf (accessed on 15 September 2021).

- Scaillet, O. Density estimation using inverse and reciprocal inverse Gaussian kernels. J. Nonparametr. Stat. 2004, 16, 217–226.

- Bouezmarni, T.; Scaillet, O. Consistency of asymmetric kernel density estimators and smoothed histograms with application to income data. Econom. Theor. 2005, 21, 390–412.

- Bouezmarni, T.; Rombouts, J.V.K. Density and hazard rate estimation for censored and α-mixing data using gamma kernels. J. Nonparametr. Stat. 2008, 20, 627–643.

- Bouezmarni, T.; Rombouts, J.V.K. Nonparametric density estimation for positive time series. Comput. Stat. Data Anal. 2010, 54, 245–261.

- Igarashi, G.; Kakizawa, Y. Re-formulation of inverse Gaussian, reciprocal inverse Gaussian, and Birnbaum-Saunders kernel estimators. Stat. Probab. Lett. 2014, 84, 235–246.

- Igarashi, G.; Kakizawa, Y. Generalised gamma kernel density estimation for nonnegative data and its bias reduction. J. Nonparametr. Stat. 2018, 30, 598–639.

- Charpentier, A.; Flachaire, E. Log-transform kernel density estimation of income distribution. L’actualité Économique Rev. D’analyse Économique 2015, 91, 141–159.

- Igarashi, G. Weighted log-normal kernel density estimation. Comm. Stat. Theory Methods 2016, 45, 6670–6687.

- Zougab, N.; Adjabi, S. Multiplicative bias correction for generalized Birnbaum-Saunders kernel density estimators and application to nonnegative heavy tailed data. J. Korean Stat. Soc. 2016, 45, 51–63.

- Kakizawa, Y.; Igarashi, G. Inverse gamma kernel density estimation for nonnegative data. J. Korean Stat. Soc. 2017, 46, 194–207.

- Kakizawa, Y. Nonparametric density estimation for nonnegative data, using symmetrical-based inverse and reciprocal inverse Gaussian kernels through dual transformation. J. Stat. Plann. Inference 2018, 193, 117–135.

- Zougab, N.; Harfouche, L.; Ziane, Y.; Adjabi, S. Multivariate generalized Birnbaum-Saunders kernel density estimators. Comm. Stat. Theory Methods 2018, 47, 4534–4555.

- Zhang, S. A note on the performance of the gamma kernel estimators at the boundary. Stat. Probab. Lett. 2010, 80, 548–557.

- Kakizawa, Y. Multivariate non-central Birnbaum-Saunders kernel density estimator for nonnegative data. J. Stat. Plann. Inference 2020, 209, 187–207.

- Ouimet, F.; Tolosana-Delgado, R. Asymptotic properties of Dirichlet kernel density estimators. J. Multivar. Anal. 2022, 187, 104832.

- Kokonendji, C.C.; Libengué Dobélé-Kpoka, F.G.B. Asymptotic results for continuous associated kernel estimators of density functions. Afr. Diaspora J. Math. 2018, 21, 87–97.

- Kokonendji, C.C.; Somé, S.M. On multivariate associated kernels to estimate general density functions. J. Korean Stat. Soc. 2018, 47, 112–126.

- Kokonendji, C.C.; Somé, S.M. Bayesian bandwidths in semiparametric modelling for nonnegative orthant data with diagnostics. Stats 2021, 4, 162–183.

- Hirukawa, M. Asymmetric Kernel Smoothing; SpringerBriefs in Statistics; Springer: Singapore, 2018; p. xii+110.

- Mombeni, H.A.; Masouri, B.; Akhoond, M.R. Asymmetric Kernels for Boundary Modification in Distribution Function Estimation. Revstat 2019, 1–27. Available online: https://www.ine.pt/revstat/pdf/Asymmetrickernelsforboundarymodificationindistributionfunctionestimation.pdf (accessed on 15 September 2021).

- Babu, G.J.; Canty, A.J.; Chaubey, Y.P. Application of Bernstein polynomials for smooth estimation of a distribution and density function. J. Stat. Plann. Inference 2002, 105, 377–392.

- Leblanc, A. Chung-Smirnov property for Bernstein estimators of distribution functions. J. Nonparametr. Stat. 2009, 21, 133–142.

- Leblanc, A. On estimating distribution functions using Bernstein polynomials. Ann. Inst. Stat. Math. 2012, 64, 919–943.

- Leblanc, A. On the boundary properties of Bernstein polynomial estimators of density and distribution functions. J. Stat. Plann. Inference 2012, 142, 2762–2778.

- Dutta, S. Distribution function estimation via Bernstein polynomial of random degree. Metrika 2016, 79, 239–263.

- Jmaei, A.; Slaoui, Y.; Dellagi, W. Recursive distribution estimator defined by stochastic approximation method using Bernstein polynomials. J. Nonparametr. Stat. 2017, 29, 792–805.

- Erdoğan, M.S.; Dişibüyük, C.; Ege Oruç, O. An alternative distribution function estimation method using rational Bernstein polynomials. J. Comput. Appl. Math. 2019, 353, 232–242.

- Wang, X.; Song, L.; Sun, L.; Gao, H. Nonparametric estimation of the ROC curve based on the Bernstein polynomial. J. Stat. Plann. Inference 2019, 203, 39–56.

- Babu, G.J.; Chaubey, Y.P. Smooth estimation of a distribution and density function on a hypercube using Bernstein polynomials for dependent random vectors. Stat. Probab. Lett. 2006, 76, 959–969.

- Belalia, M. On the asymptotic properties of the Bernstein estimator of the multivariate distribution function. Stat. Probab. Lett. 2016, 110, 249–256.

- Dib, K.; Bouezmarni, T.; Belalia, M.; Kitouni, A. Nonparametric bivariate distribution estimation using Bernstein polynomials under right censoring. Comm. Stat. Theory Methods 2020, 1–11.

- Ouimet, F. Asymptotic properties of Bernstein estimators on the simplex. J. Multivar. Anal. 2021, 185, 104784.

- Ouimet, F. On the boundary properties of Bernstein estimators on the simplex. arXiv 2021, arXiv:2006.11756.

- Hanebeck, A.; Klar, B. Smooth distribution function estimation for lifetime distributions using Szasz-Mirakyan operators. Ann. Inst. Stat. Math. 2021, 1–19.

- Ouimet, F. On the Le Cam distance between Poisson and Gaussian experiments and the asymptotic properties of Szasz estimators. J. Math. Anal. Appl. 2021, 499, 125033.

- Tenreiro, C. Boundary kernels for distribution function estimation. REVSTAT Stat. J. 2013, 11, 169–190.

- Tiago de Oliveira, J. Estatística de densidades: Resultados assintóticos. Rev. Fac. Ciências Lisb. 1963, 9, 111–206.

- Nadaraja, E.A. Some new estimates for distribution functions. Teor. Verojatnost. i Primenen. 1964, 9, 550–554.

- Watson, G.S.; Leadbetter, M.R. Hazard analysis. II. Sankhyā Ser. A 1964, 26, 101–116.

- Altman, N.; Léger, C. Bandwidth selection for kernel distribution function estimation. J. Stat. Plann. Inference 1995, 46, 195–214.

- Serfling, R.J. Approximation Theorems of Mathematical Statistics; Wiley Series in Probability and Mathematical Statistics; John Wiley & Sons, Inc.: New York, NY, USA, 1980; p. xvi+371.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).