Abstract

In this paper, we study the single machine vector scheduling problem (SMVS) with general penalties, in which each job is characterized by a d-dimensional vector and can either be accepted and processed on the machine or be rejected. The objective is to minimize the sum of the maximum load over all dimensions of the total vector of all accepted jobs and the rejection penalty of the rejected jobs, which is determined by a set function. Our contributions are as follows. First, we prove that the lower bound for SMVS with general penalties is $\alpha(n)$, where $\alpha(n)$ is any positive polynomial function of n. Then, we consider a special case in which both the diminishing-return ratio of the set function and the minimum load over all dimensions of any job are larger than zero, and we design an approximation algorithm based on the projected subgradient method. Second, we consider another special case in which the penalty set function is submodular. We propose a noncombinatorial $\frac{e}{e-1}$-approximation algorithm and a combinatorial $\min\{r,d\}$-approximation algorithm, where r is the maximum ratio of the maximum load to the minimum load on the d-dimensional vector.

1. Introduction

Parallel machine scheduling has a long history dating back to the pioneering work of Graham [1]. Given n jobs $J=\{J_1,J_2,\ldots,J_n\}$ and m identical parallel machines, where each job $J_j$ has the same processing time $p_j$ on every machine, each job must be processed on exactly one machine, and the objective is to minimize the makespan, i.e., the maximum total processing time over all machines. The problem is strongly NP-hard, and Graham [1] designed the classical list scheduling (LS) algorithm, which achieves a worst-case guarantee of 2. Hochbaum and Shmoys [2] designed a polynomial time approximation scheme (PTAS), which was improved to an efficient polynomial time approximation scheme (EPTAS) by Alon et al. [3]. For this problem, the best known algorithm is the EPTAS designed by Jansen et al. [4].

However, in many practical make-to-order production systems, due to limited production capacity or tight delivery requirements, a manufacturer may accept only some of the jobs and reject the others, scheduling the accepted jobs on the machines. With this motivation, Bartal et al. [5] proposed parallel machine scheduling with rejection, where a job can be rejected by paying a rejection penalty. The objective of parallel machine scheduling with rejection is to minimize the makespan plus the total rejection penalty. Bartal et al. [5] proposed a 2-approximation algorithm with running time $O(n\log n)$ and a PTAS. Then, Ou et al. [6] proposed a $(3/2+\epsilon)$-approximation algorithm with running time $O(n\log n+n/\epsilon)$, where $\epsilon>0$ is a small given constant. This result was further improved by Ou and Zhong [7], who designed an approximation algorithm with a better worst-case ratio. For the single machine case, Shabtay [8] considered four different problem variations. Zhang et al. [9] considered single machine scheduling with release dates and rejection; they proved that this problem is NP-hard and presented a fully polynomial time approximation scheme (FPTAS). Recently, He et al. [10] and Ou et al. [11] independently designed improved approximation algorithms with faster running times. More related results can be found in [12,13,14,15,16,17,18].

The vector scheduling problem, proposed by Chekuri and Khanna [19], is a generalization of parallel machine scheduling in which each job $J_j$ is associated with a d-dimensional vector $a_j=(a_{j1},\ldots,a_{jd})$. The problem aims to schedule n d-dimensional jobs on m machines, and the objective is to minimize the maximum load over all dimensions and all machines. When d is arbitrary, Chekuri and Khanna [19] presented a lower bound showing that no algorithm achieves a constant approximation factor for the vector scheduling problem, and they presented an $O(\ln^2 d)$-approximation algorithm. Later, Meyerson et al. [20] proposed an $O(\log d)$-approximation algorithm for the online setting, which was further improved to an $O(\log d/\log\log d)$-approximation by Im et al. [21]. When d is a constant, Chekuri and Khanna [19] presented a PTAS, which was improved to an EPTAS by Bansal et al. [22]. More related results can be found in [19,21,23].

Li and Cui [24] considered single machine vector scheduling (SMVS) with rejection, which aims to minimize the maximum load over all dimensions plus the sum of the penalties of the rejected jobs. They proved that this problem is NP-hard and designed a combinatorial d-approximation algorithm and an approximation algorithm based on randomized rounding. Then, Dai and Li [25] studied the vector scheduling problem with rejection on two machines and designed a combinatorial 3-approximation algorithm and an approximation algorithm based on randomized rounding.

In recent years, nonlinear combinatorial optimization has attracted increasing attention. One important area is set function optimization, whose research is application-driven, for example in machine learning and data mining [26,27,28,29,30]. A set function is submodular if it has decreasing marginal returns. Submodular functions are used in various fields, such as operations research, economics, engineering, computer science, and management science [31,32,33]. In particular, rejection penalties can be regarded as a loss of the manufacturer’s prestige; in economics, it is common that the additional penalty incurred by rejecting one more job becomes smaller as the number of rejected jobs increases. This means that the penalty function is submodular. Combinatorial optimization problems with submodular penalties have been widely studied. Recently, Zhang et al. [34] proposed a 3-approximation algorithm for precedence-constrained scheduling with submodular rejection on parallel machines. Liu et al. [35] proposed a combinatorial approximation algorithm for the submodular load balancing problem with submodular penalties. Liu and Li [36] proposed a combinatorial approximation algorithm for parallel machine scheduling with submodular penalties. Liu and Li [14] proposed a 2-approximation algorithm for the single machine scheduling problem with release dates and submodular rejection penalties.

However, in the real world, complicated interpersonal relationships may make the rejection penalty function non-submodular. Thus, in this paper, we consider single machine vector scheduling (SMVS) with general penalties, a generalization of SMVS with rejection in which the rejection penalty is determined by a normalized and nondecreasing set function. As shown in Table 1, for SMVS with general penalties, we first present a lower bound showing that there is no $\alpha(n)$-approximation algorithm, where $\alpha(n)$ is any positive polynomial function of n. Then, we consider a special case in which $a_{ji}>0$ for every dimension i of every job $J_j$ and the diminishing-return ratio $\gamma$ of the penalty set function satisfies $\gamma>0$, and we design a combinatorial approximation algorithm whose output has an objective value bounded in terms of the optimal value OPT of this problem, the diminishing-return ratio $\gamma$, the maximum load $L(J)$ of the ground set J, and a given parameter $\epsilon>0$ (see Theorem 4). Then, we consider another special case in which the penalty set function is submodular, and we propose a noncombinatorial $\frac{e}{e-1}$-approximation algorithm and a combinatorial $\min\{r,d\}$-approximation algorithm. If the rejection set function is submodular and $d=1$, then SMVS with general penalties is exactly the single machine scheduling problem with submodular rejection penalties. If the rejection set function is linear, then SMVS with general penalties is exactly SMVS with rejection. If the penalty of any nonempty job set is ∞, then SMVS with general penalties is exactly single machine vector scheduling. If the rejection set function is linear and $d=1$, then SMVS with general penalties is exactly single machine scheduling with rejection.

Table 1.

The results in this paper.

The main difficulty in SMVS with general penalties is how to exploit the characteristics of the rejection penalty set function and of the load, which is determined by the relevant d-dimensional vectors, to minimize the objective value of a feasible solution. Since both the rejection penalty set function and the load are nonlinear, a solution generated by the standard techniques for either the single machine scheduling problem with submodular penalties or single machine vector scheduling with rejection may accept or reject many more jobs than needed. In this paper, based on the characteristics of the set function and the load, we balance the penalty against the load to design the approximation algorithms.

The remainder of this paper is structured as follows: In Section 2, we provide basic definitions and a formal problem statement. In Section 3, we first prove the hardness result for SMVS with general penalties. Next, we consider a special case of SMVS with general penalties and propose an approximation algorithm. In Section 4, we address the submodular case and propose a noncombinatorial approximation algorithm and a combinatorial approximation algorithm. We provide a brief conclusion in Section 5.

2. Preliminaries

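Let $J=\{J_1,J_2,\ldots,J_n\}$ be a given set of n jobs, and let $P:2^J\rightarrow\mathbb{R}$ be a real-valued set function defined on all subsets of J. A set function P is called nondecreasing if

$$P(S)\le P(T),\quad\forall\, S\subseteq T\subseteq J.$$

A set function P is called normalized if

$$P(\emptyset)=0.$$

In particular, if

$$P(S)+P(T)\ge P(S\cup T)+P(S\cap T),\quad\forall\, S,T\subseteq J,$$

then P is submodular; if

$$P(S)+P(T)=P(S\cup T)+P(S\cap T),\quad\forall\, S,T\subseteq J,$$

then P is modular.

Let

$$P(J_j\mid S)=P(S\cup\{J_j\})-P(S)$$

be the marginal gain of $J_j$ with respect to P and S. If P is nondecreasing, then $P(J_j\mid S)\ge 0$ for any $S\subseteq J$ and $J_j\in J$. For any $S\subseteq T\subseteq J$ and $J_j\in J\setminus T$, if P is submodular, then $P(J_j\mid S)\ge P(J_j\mid T)$; if P is modular, then $P(J_j\mid S)=P(J_j\mid T)$.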
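To make these definitions concrete, the following Python sketch checks them by brute force over all subsets of a small ground set. It is exponential in n and meant only for experimenting with toy penalty functions; the helper names (`subsets`, `marginal`, `is_submodular`, and so on) are hypothetical and not part of the paper.

```python
from itertools import combinations
from typing import Callable, FrozenSet, Hashable, List, Sequence

SetFn = Callable[[FrozenSet[Hashable]], float]


def subsets(ground: Sequence[Hashable]) -> List[FrozenSet[Hashable]]:
    """All 2^n subsets of the ground set."""
    return [frozenset(c) for k in range(len(ground) + 1)
            for c in combinations(ground, k)]


def marginal(f: SetFn, j: Hashable, s: FrozenSet[Hashable]) -> float:
    """Marginal gain f(j | S) = f(S ∪ {j}) - f(S)."""
    return f(s | {j}) - f(s)


def is_normalized(f: SetFn, tol: float = 1e-9) -> bool:
    return abs(f(frozenset())) <= tol


def is_nondecreasing(f: SetFn, ground: Sequence[Hashable], tol: float = 1e-9) -> bool:
    # Equivalent to f(j | S) >= 0 for every S and every j outside S.
    return all(marginal(f, j, s) >= -tol
               for s in subsets(ground) for j in ground if j not in s)


def _lattice_gaps(f: SetFn, ground: Sequence[Hashable]) -> List[float]:
    # f(S) + f(T) - f(S ∪ T) - f(S ∩ T) for every pair (S, T).
    subs = subsets(ground)
    return [f(s) + f(t) - f(s | t) - f(s & t) for s in subs for t in subs]


def is_submodular(f: SetFn, ground: Sequence[Hashable], tol: float = 1e-9) -> bool:
    return all(g >= -tol for g in _lattice_gaps(f, ground))


def is_modular(f: SetFn, ground: Sequence[Hashable], tol: float = 1e-9) -> bool:
    return all(abs(g) <= tol for g in _lattice_gaps(f, ground))
```

For example, $P(S)=\min\{|S|,2\}$ is normalized, nondecreasing and submodular but not modular, whereas $P(S)=|S|^2$ is not submodular.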

Single machine vector scheduling (SMVS) with general penalties is defined as follows. We are given a single machine and a set of n jobs $J=\{J_1,J_2,\ldots,J_n\}$. Each job $J_j$ is associated with a d-dimensional vector $a_j=(a_{j1},a_{j2},\ldots,a_{jd})$, where $a_{ji}\ge 0$ is the amount of resource i needed by job $J_j$. The penalty set function $P:2^J\rightarrow\mathbb{R}$ is normalized and nondecreasing. A pair $(A,R)$ is called a feasible schedule if $A\cup R=J$ and $A\cap R=\emptyset$, where A is the set of jobs that are accepted and processed on the machine and R is the set of rejected jobs. The objective value of a feasible schedule $(A,R)$ is defined as $L(A)+P(R)$, where

$$L(A)=\max_{i=1,\ldots,d}\sum_{J_j\in A}a_{ji}\qquad(1)$$

is the maximum load of set A. The objective of SMVS with general penalties is to find a feasible schedule with minimum objective value.

Instead of taking an explicit description of the function P, we assume access to a value oracle; in other words, for any subset $S\subseteq J$, $P(S)$ can be computed in polynomial time, where “polynomial” is with respect to n.
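As an illustration of the objective $L(A)+P(R)$ with P accessed only as a value oracle (a Python callable), the following sketch evaluates a schedule and finds an exact optimum by enumerating all $2^n$ rejected sets. It is only a reference solver for tiny instances; the function names are hypothetical.

```python
from itertools import combinations
from typing import Callable, FrozenSet, Optional, Sequence, Tuple

Penalty = Callable[[FrozenSet[int]], float]   # value oracle for P


def load(vectors: Sequence[Sequence[float]], jobs: FrozenSet[int]) -> float:
    """L(A) from (1): the largest per-dimension total over the jobs in A (0 if A is empty)."""
    d = len(vectors[0])
    return max(sum(vectors[j][i] for j in jobs) for i in range(d))


def objective(vectors: Sequence[Sequence[float]], penalty: Penalty,
              rejected: FrozenSet[int]) -> float:
    """L(A) + P(R) for the feasible schedule (A, R) with A = J - R."""
    accepted = frozenset(range(len(vectors))) - rejected
    return load(vectors, accepted) + penalty(rejected)


def brute_force(vectors: Sequence[Sequence[float]],
                penalty: Penalty) -> Tuple[float, FrozenSet[int]]:
    """Exact optimum by trying all 2^n rejected sets; only for tiny instances."""
    n = len(vectors)
    best: Optional[Tuple[float, FrozenSet[int]]] = None
    for k in range(n + 1):
        for combo in combinations(range(n), k):
            rejected = frozenset(combo)
            value = objective(vectors, penalty, rejected)
            if best is None or value < best[0]:
                best = (value, rejected)
    assert best is not None
    return best
```

With vectors $(3,1),(1,3),(2,2)$ and a penalty of 1 per rejected job, rejecting every job (value 3) beats accepting all of them (value 6).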

3. SMVS with General Penalties

In this section, we first prove that set function minimization is a special case of SMVS with general penalties and derive a lower bound showing that there is no $\alpha(n)$-approximation algorithm, where $\alpha(n)$ is any positive polynomial function of n. Next, we address a special case in which $a_{ji}>0$ for any $J_j\in J$ and any $i\in\{1,\ldots,d\}$ and the diminishing-return ratio $\gamma$ of the set function P satisfies $\gamma>0$, and we design an approximation algorithm for it.

3.1. Hardness Result

Before proving that set function minimization is a special case of SMVS with general penalties, we introduce some characteristics of set functions.

Lemma 1

([37]). For any set function f defined on all subsets of V, f can be expressed as the difference between two normalized nondecreasing submodular functions g and h; i.e., $f(S)=g(S)-h(S)$ for any $S\subseteq V$.

Based on Lemma 1, we have the following:

Lemma 2.

For any set function f defined on all subsets of V, f can be expressed as the difference between a normalized nondecreasing set function $f_1$ and a normalized nondecreasing modular function $f_2$; i.e., $f(S)=f_1(S)-f_2(S)$ for any $S\subseteq V$.

Proof.

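By Lemma 1, we can construct two normalized nondecreasing submodular functions g and h defined on all subsets of V satisfying $f(S)=g(S)-h(S)$ for any $S\subseteq V$.

Then, we define the set function $f_2$ as follows:

$$f_2(S)=\sum_{v\in S}h(\{v\}),\quad\forall\, S\subseteq V.$$

Thus, for any $S\subseteq V$ and $v\in V\setminus S$, we have

$$f_2(\emptyset)=0\quad\text{and}\quad f_2(v\mid S)=h(\{v\})\ge h(\emptyset)=0.$$

This implies that $f_2$ is a normalized nondecreasing modular function. Moreover, let $f_1$ be the set function satisfying $f_1(S)=f(S)+f_2(S)$ for any $S\subseteq V$. Thus, we have

$$f_1(\emptyset)=g(\emptyset)-h(\emptyset)+f_2(\emptyset)=0,$$

and, for any set $T\subseteq V$ and any $v\in V\setminus T$,

$$f_1(v\mid T)=g(v\mid T)-h(v\mid T)+h(\{v\})\ge g(v\mid T)\ge 0,$$

where the first inequality follows from $h(v\mid T)\le h(v\mid\emptyset)=h(\{v\})$ by the submodularity of h, and the second follows from g being nondecreasing; i.e., $f_1$ is a normalized nondecreasing set function. Therefore, the lemma holds. □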
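The construction in this proof can be checked numerically. The sketch below takes g and h (as produced by Lemma 1) as inputs, builds the modular function $f_2(S)=\sum_{v\in S}h(\{v\})$ and $f_1=f+f_2$, and tests normalization and monotonicity by brute force. The toy choice $g(S)=\min\{|S|,2\}$, $h(S)=2\min\{|S|,1\}$ in the usage note is an illustrative assumption, and the function names are hypothetical.

```python
from itertools import combinations
from typing import Callable, FrozenSet, List, Tuple

SetFn = Callable[[FrozenSet[int]], float]


def all_subsets(n: int) -> List[FrozenSet[int]]:
    return [frozenset(c) for k in range(n + 1) for c in combinations(range(n), k)]


def lemma2_split(g: SetFn, h: SetFn, n: int) -> Tuple[SetFn, SetFn]:
    """Given f = g - h as in Lemma 1, return (f1, f2) with f = f1 - f2:
    f2(S) = sum of h({v}) over v in S (modular), and f1 = f + f2."""
    singleton = [h(frozenset({v})) for v in range(n)]

    def f2(s: FrozenSet[int]) -> float:
        return sum(singleton[v] for v in s)

    def f1(s: FrozenSet[int]) -> float:
        return g(s) - h(s) + f2(s)

    return f1, f2


def is_normalized_nondecreasing(f: SetFn, n: int, tol: float = 1e-9) -> bool:
    if abs(f(frozenset())) > tol:
        return False
    return all(f(s | {v}) - f(s) >= -tol
               for s in all_subsets(n) for v in range(n) if v not in s)
```

With the toy g and h above on three elements, $f=g-h$ is not monotone ($f(\{0\})=-1$), while the returned $f_1$ takes the values 0, 1, 4, 6 on sets of size 0, 1, 2, 3 and is therefore normalized and nondecreasing.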

For completeness, we provide the formal statement for set function minimization and its hardness result.

Definition 1

(Set Function Minimization). Given an instance $(V,f)$, where V is the ground set and f is a set function defined on all subsets of V, set function minimization aims to find a set $S^*\subseteq V$ with $f(S^*)\le f(S)$ for any $S\subseteq V$.

Theorem 1

([37]). Let n be the size of an instance of set function minimization, and let $\alpha(n)$ be any positive polynomial function of n. Unless $\mathrm{P}=\mathrm{NP}$, there cannot exist any $\alpha(n)$-approximation algorithm for set function minimization.

Then, we obtain the following theorem by proving that the set function minimization can be reduced to a special case of SMVS with general penalties:

Theorem 2.

Unless $\mathrm{P}=\mathrm{NP}$, there cannot exist any $\alpha(n)$-approximation algorithm for SMVS with general penalties, where n is the number of jobs and $\alpha(n)$ is any positive polynomial function of n.

Proof.

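Given any instance $(V,f)$ of set function minimization, where $V=\{v_1,v_2,\ldots,v_n\}$, by Lemma 2, f can be expressed as the difference between a normalized nondecreasing set function $f_1$ and a normalized nondecreasing modular function $f_2$ satisfying $f(S)=f_1(S)-f_2(S)$ for any $S\subseteq V$.

We construct a corresponding instance $\mathcal{I}$ of SMVS with general penalties with 1-dimensional vectors, where the job set is $J=\{J_1,J_2,\ldots,J_n\}$. For any subset $S\subseteq V$, let

$$J_S=\{J_j\in J\mid v_j\in S\}.\qquad(2)$$

Furthermore, the penalty set function P satisfies

$$P(J_S)=f_1(S),\quad\forall\, S\subseteq V,$$

and each job $J_j$ is associated with a 1-dimensional vector

$$a_j=f_2(\{v_j\}).$$

For convenience, let

$$f_2(V)=\sum_{v_j\in V}f_2(\{v_j\})$$

be the value of the ground set V on the modular function $f_2$.

Given a feasible solution S of instance $(V,f)$, by equality (2), we can construct a schedule $(A,R)$ of instance $\mathcal{I}$, where $R=J_S$ and $A=J\setminus J_S$. Since $d=1$ and $f_2$ is normalized and modular, the objective value Z of $(A,R)$ is

$$Z=\sum_{J_j\in A}a_j+P(R)=f_2(V)-f_2(S)+f_1(S)=f(S)+f_2(V).$$

Given a schedule $(A,R)$ for instance $\mathcal{I}$, by equality (2), we can construct a feasible solution $S=\{v_j\in V\mid J_j\in R\}$ of instance $(V,f)$, so that $R=J_S$. Thus, we have

$$f(S)=f_1(S)-f_2(S)=Z-f_2(V),$$

where Z is the objective value of $(A,R)$ and $f_2(V)$ is a constant independent of S.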

These statements imply that the above two problems are equivalent. By Theorem 1, the theorem holds. □
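The correspondence used in this proof can be checked on a toy instance. In the sketch below, job $J_j$ gets the 1-dimensional vector $a_j=f_2(\{v_j\})$ (the list `w`), the penalty of the rejected set $J_S$ is $f_1(S)$, and rejecting $J_S$ should give objective value $Z=f(S)+f_2(V)$ with $f=f_1-f_2$. Indices j stand for both $v_j$ and $J_j$; the function names and the toy $f_1(S)=|S|^2$ are illustrative assumptions.

```python
from itertools import combinations
from typing import Callable, FrozenSet, List, Sequence

SetFn = Callable[[FrozenSet[int]], float]


def all_subsets(n: int) -> List[FrozenSet[int]]:
    return [frozenset(c) for k in range(n + 1) for c in combinations(range(n), k)]


def smvs_objective(f1: SetFn, w: Sequence[float], rejected: FrozenSet[int]) -> float:
    """Objective of (A, R) in the d = 1 instance: job j has a_j = w[j] = f2({v_j})
    and the penalty of R is f1(R); with one dimension, L(A) is just a sum."""
    accepted_load = sum(w[j] for j in range(len(w)) if j not in rejected)
    return accepted_load + f1(rejected)


def reduction_holds(f1: SetFn, w: Sequence[float]) -> bool:
    """Check Z = f(S) + f2(V) for every S, where f = f1 - f2 and f2(S) = sum of w over S."""
    f2_of_v = sum(w)
    for s in all_subsets(len(w)):
        f_s = f1(s) - sum(w[j] for j in s)
        if abs(smvs_objective(f1, w, s) - (f_s + f2_of_v)) > 1e-9:
            return False
    return True
```

Because the two objectives differ by the constant $f_2(V)$, the same set S minimizes both; for $f_1(S)=|S|^2$ and weights $(1,2,3)$, that set is $\{v_3\}$ (index 2).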

3.2. Approximation Algorithm for a Special Case

In the following part of this subsection, we assume that $a_{ji}>0$ for any $J_j\in J$ and $i\in\{1,\ldots,d\}$ and that the diminishing-return ratio $\gamma$ of the set function P satisfies $\gamma>0$. Using the projected subgradient method (PSM), we can find an approximate schedule of SMVS with general penalties whose objective value is bounded in terms of the optimal value OPT of SMVS with general penalties, the diminishing-return ratio $\gamma$, a given parameter $\epsilon>0$, and

$$r=\max_{J_j\in J}\frac{\max_{i=1,\ldots,d}a_{ji}}{\min_{i=1,\ldots,d}a_{ji}},\qquad(3)$$

which is the maximum ratio of the maximum load to the minimum load on the d-dimensional vectors.

The PSM, proposed by El Halabi and Jegelka [26], is an approximation algorithm for minimizing the difference between two set functions F and G. The key idea of the PSM is to approximate the difference between these two functions by a submodular function, using the Lovász extension to provide (approximate) subgradients. In particular, when F is an α-weakly DR-submodular function and G is a β-weakly DR-supermodular function, the PSM achieves the optimal approximation guarantee [26] stated in the following theorem, where weak DR-submodularity and weak DR-supermodularity are defined in Definition 2.

Theorem 3

([26]). Given $\epsilon>0$ and two normalized nondecreasing set functions F and G, where F is an α-weakly DR-submodular function and G is a β-weakly DR-supermodular function, for minimizing the difference $F(S)-G(S)$ the PSM finds a set S satisfying

$$F(S)-G(S)\le\frac{1}{\alpha}F(S^*)-\beta\,G(S^*)+\epsilon,$$

where $S^*$ is an optimal solution of this problem.

Before introducing the definitions of weak DR-submodularity and weak DR-supermodularity, we recall the notion of diminishing returns (DR, for short). Diminishing returns, studied by Lehmann et al. [38], characterize submodular functions; i.e., for any submodular function P,

$$P(J_j\mid S)\ge P(J_j\mid T),\quad\forall\, S\subseteq T\subseteq J,\ J_j\in J\setminus T.$$

Diminishing returns are generalized to arbitrary set functions by the diminishing-return ratio [28,39]. If $\gamma$ is the diminishing-return ratio of a set function P, then for any $S\subseteq T\subseteq J$ and $J_j\in J\setminus T$, we have

$$P(J_j\mid S)\ge\gamma\,P(J_j\mid T).\qquad(4)$$

In addition, such a set function P is called γ-weakly DR-submodular in [26].

Definition 2

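(Weakly DR-submodular and weakly DR-supermodular). A set function F is an α-weakly DR-submodular function, with $\alpha>0$, if inequality (4) holds with F and α in place of P and γ for any $S\subseteq T$ and $J_j\notin T$. Similarly, a set function G is a β-weakly DR-supermodular function, with $\beta>0$, if for all $S\subseteq T$ and $J_j\notin T$,

$$G(J_j\mid T)\ge\beta\,G(J_j\mid S).\qquad(5)$$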
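For small ground sets, the largest constants satisfying these two inequalities can be computed by brute force. The sketch below returns the largest α ≤ 1 with $F(j\mid S)\ge\alpha F(j\mid T)$ and the largest β ≤ 1 with $G(j\mid T)\ge\beta G(j\mid S)$ over all $S\subseteq T$ and $j\notin T$; it assumes the input functions are nondecreasing, and the function names are hypothetical.

```python
from itertools import combinations
from typing import Callable, FrozenSet, Iterator, List, Sequence, Tuple

SetFn = Callable[[FrozenSet[int]], float]


def _subsets(items: Sequence[int]) -> List[FrozenSet[int]]:
    return [frozenset(c) for k in range(len(items) + 1) for c in combinations(items, k)]


def _chains(n: int) -> Iterator[Tuple[FrozenSet[int], FrozenSet[int], int]]:
    """All (S, T, j) with S ⊆ T ⊆ {0, ..., n-1} and j not in T."""
    for t in _subsets(range(n)):
        for s in _subsets(sorted(t)):
            for j in range(n):
                if j not in t:
                    yield s, t, j


def dr_submodular_ratio(f: SetFn, n: int) -> float:
    """Largest alpha <= 1 with f(j|S) >= alpha * f(j|T) (f assumed nondecreasing)."""
    alpha = 1.0
    for s, t, j in _chains(n):
        later = f(t | {j}) - f(t)
        earlier = f(s | {j}) - f(s)
        if later > 0:
            alpha = min(alpha, earlier / later)
    return alpha


def dr_supermodular_ratio(g: SetFn, n: int) -> float:
    """Largest beta <= 1 with g(j|T) >= beta * g(j|S) (g assumed nondecreasing)."""
    beta = 1.0
    for s, t, j in _chains(n):
        earlier = g(s | {j}) - g(s)
        later = g(t | {j}) - g(t)
        if earlier > 0:
            beta = min(beta, later / earlier)
    return beta
```

A submodular function such as $\min\{|S|,2\}$ has ratio 1, while the supermodular function $|S|^2$ on three elements has diminishing-return ratio $1/5$, attained by $S=\emptyset$ and $|T|=2$.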

To find an approximate schedule of SMVS with general penalties, we construct an auxiliary set function Q as follows: For any $S\subseteq J$, let

$$Q(S)=L(J)-L(J\setminus S),$$

where

$$L(J)=\max_{i=1,\ldots,d}\sum_{J_j\in J}a_{ji}$$

is the maximum load of the ground set J and $L(J\setminus S)$ is defined as in (1). Thus, we have the following:

Lemma 3.

The set function Q is a normalized nondecreasing $\frac{1}{r}$-weakly DR-supermodular function.

Proof.

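Let

$$i_S=\arg\max_{i=1,\ldots,d}\sum_{J_k\in J\setminus S}a_{ki}$$

be the resource with the maximum load for set $J\setminus S$.

By the definition of Q, for any $S\subseteq J$ and any $J_j\in J\setminus S$, we have

$$Q(J_j\mid S)=L(J\setminus S)-L(J\setminus(S\cup\{J_j\}))\le\sum_{J_k\in J\setminus S}a_{ki_S}-\sum_{J_k\in J\setminus(S\cup\{J_j\})}a_{ki_S}=a_{ji_S}\le\max_{i=1,\ldots,d}a_{ji}$$

and

$$Q(J_j\mid S)\ge\sum_{J_k\in J\setminus S}a_{ki_{S\cup\{J_j\}}}-\sum_{J_k\in J\setminus(S\cup\{J_j\})}a_{ki_{S\cup\{J_j\}}}=a_{ji_{S\cup\{J_j\}}}\ge\min_{i=1,\ldots,d}a_{ji}.$$

Therefore, for any $S\subseteq T\subseteq J$ and any $J_j\in J\setminus T$, we have

$$Q(J_j\mid T)\ge\min_{i=1,\ldots,d}a_{ji}\quad\text{and}\quad Q(J_j\mid S)\le\max_{i=1,\ldots,d}a_{ji}.$$

Since $a_{ji}>0$ for any $J_j\in J$ and $i\in\{1,\ldots,d\}$, we have

$$Q(J_j\mid T)\ge\min_{i=1,\ldots,d}a_{ji}=\frac{\min_{i}a_{ji}}{\max_{i}a_{ji}}\max_{i=1,\ldots,d}a_{ji}\ge\frac{1}{r}\max_{i=1,\ldots,d}a_{ji}\ge\frac{1}{r}Q(J_j\mid S),$$

where the second inequality follows from (3). This implies that Q is a $\frac{1}{r}$-weakly DR-supermodular function. By $Q(\emptyset)=L(J)-L(J)=0$ and $Q(J_j\mid S)\ge\min_{i}a_{ji}>0$, Q is normalized and nondecreasing. Thus, Q is a normalized nondecreasing $\frac{1}{r}$-weakly DR-supermodular function. □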
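Lemma 3 can be sanity-checked on small instances. The sketch below assumes the auxiliary function has the form $Q(S)=L(J)-L(J\setminus S)$, computes r from (3), and measures by brute force the largest β with $Q(j\mid T)\ge\beta Q(j\mid S)$; by the lemma, this value should never fall below $1/r$. The function names are hypothetical.

```python
from itertools import combinations
from typing import Callable, FrozenSet, Sequence

Vectors = Sequence[Sequence[float]]


def load(vectors: Vectors, jobs: FrozenSet[int]) -> float:
    """L(S) from (1); equals 0 for the empty set."""
    return max(sum(vectors[j][i] for j in jobs) for i in range(len(vectors[0])))


def make_q(vectors: Vectors) -> Callable[[FrozenSet[int]], float]:
    """Auxiliary function Q(S) = L(J) - L(J minus S)."""
    everything = frozenset(range(len(vectors)))
    total = load(vectors, everything)
    return lambda s: total - load(vectors, everything - s)


def ratio_r(vectors: Vectors) -> float:
    """r from (3): max over jobs of (largest entry / smallest entry); needs a_ji > 0."""
    return max(max(v) / min(v) for v in vectors)


def weak_supermodularity(q: Callable[[FrozenSet[int]], float], n: int) -> float:
    """Largest beta <= 1 with q(j|T) >= beta * q(j|S) for all S ⊆ T and j not in T."""
    subs = [frozenset(c) for k in range(n + 1) for c in combinations(range(n), k)]
    beta = 1.0
    for t in subs:
        for s in subs:
            if not s <= t:
                continue
            for j in range(n):
                if j in t:
                    continue
                earlier = q(s | {j}) - q(s)
                if earlier > 0:
                    beta = min(beta, (q(t | {j}) - q(t)) / earlier)
    return beta
```

For the vectors $(1,2),(3,1),(2,2)$ we get $r=3$, $Q(\emptyset)=0$, $Q(J)=L(J)=6$, and the measured β is at least $1/3$, as Lemma 3 predicts.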

For SMVS with general penalties, we design an approximation algorithm based on the PSM; the detailed algorithm is described below.

Let Z be the objective value of the schedule $(A,R)$ generated by Algorithm 1, and let OPT be the optimal value of SMVS with general penalties.

Algorithm 1: AASC

Theorem 4.

Proof.

Let $(A^*,R^*)$ be the optimal solution of SMVS with general penalties, and its objective value is

$$\mathrm{OPT}=L(A^*)+P(R^*).$$

This implies that

$$P(R^*)-Q(R^*)=P(R^*)-L(J)+L(J\setminus R^*)=\mathrm{OPT}-L(J).$$

Let $S^*$ be the optimal solution of minimizing the difference between the functions P and Q. Thus, we have

$$P(S^*)-Q(S^*)\le P(R^*)-Q(R^*)=\mathrm{OPT}-L(J).$$

By the assumption $\gamma>0$, P is a normalized nondecreasing γ-weakly DR-submodular function. By Lemma 3, Q is a normalized nondecreasing $\frac{1}{r}$-weakly DR-supermodular function. These and Theorem 3 imply that

where $\epsilon>0$ is a given parameter.
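The following worked chain shows one way the facts collected in this proof combine. It rests on two assumptions not recoverable from the text above: that Algorithm 1 outputs as R the set returned by the PSM for minimizing $P(S)-Q(S)$ (with $A=J\setminus R$), and that Theorem 3 has the form $F(S)-G(S)\le\frac{1}{\alpha}F(S^*)-\beta G(S^*)+\epsilon$. It also uses $0\le Q(S^*)\le L(J)$ and $\gamma\le 1\le r$ (taking $S=T$ in (4) forces $\gamma\le 1$ whenever P has a positive marginal). It is an illustrative derivation, not necessarily the exact form in which Theorem 4 is stated.

```latex
\begin{aligned}
Z &= L(A)+P(R) = L(J)+P(R)-Q(R)\\
  &\le L(J)+\frac{1}{\gamma}P(S^*)-\frac{1}{r}Q(S^*)+\epsilon\\
  &= L(J)+\frac{1}{\gamma}\bigl(P(S^*)-Q(S^*)\bigr)+\Bigl(\frac{1}{\gamma}-\frac{1}{r}\Bigr)Q(S^*)+\epsilon\\
  &\le L(J)+\frac{1}{\gamma}\bigl(\mathrm{OPT}-L(J)\bigr)+\Bigl(\frac{1}{\gamma}-\frac{1}{r}\Bigr)L(J)+\epsilon\\
  &= \frac{1}{\gamma}\,\mathrm{OPT}+\Bigl(1-\frac{1}{r}\Bigr)L(J)+\epsilon .
\end{aligned}
```

The first line uses $L(J\setminus R)=L(J)-Q(R)$, the second applies Theorem 3 with $\alpha=\gamma$ and $\beta=1/r$, and the fourth uses $P(S^*)-Q(S^*)\le\mathrm{OPT}-L(J)$ together with $Q(S^*)\le L(J)$.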

4. SMVS with Submodular Penalties

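In this section, we consider SMVS with submodular penalties, in which the general penalty set function is restricted to be submodular. We introduce two types of binary variables:

$$x_j=\begin{cases}1,&\text{if job } J_j \text{ is accepted and processed on the machine},\\0,&\text{otherwise,}\end{cases}$$

and

$$z_S=\begin{cases}1,&\text{if } S \text{ is the set of rejected jobs},\\0,&\text{otherwise.}\end{cases}$$

Let A be the set of accepted and processed jobs; the load of resource i of A is

$$\sum_{J_j\in A}a_{ji}=\sum_{J_j\in J}a_{ji}x_j.$$

Thus, we can formulate SMVS with submodular penalties as a natural integer program and relax the integrality constraints to obtain the following linear program:

$$\begin{aligned}\min\quad & t+\sum_{S\subseteq J}P(S)z_S\\ \text{s.t.}\quad & \sum_{J_j\in J}a_{ji}x_j\le t, && i=1,\ldots,d,\\ & x_j+\sum_{S:\,J_j\in S}z_S\ge 1, && \forall\, J_j\in J,\\ & x_j,\ z_S\ge 0, && \forall\, J_j\in J,\ S\subseteq J.\end{aligned}\qquad(9)$$

The second constraint in (9) states that every job must either be processed on the machine or be rejected.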

Using the Lovász extension of P, linear program (9) is equivalent to a convex program that can be solved in polynomial time by the ellipsoid method; let $(x^*,y^*)$ be the optimal solution of the convex program, where $x^*=(x^*_1,\ldots,x^*_n)$ and $y^*=(y^*_1,\ldots,y^*_n)$ are the fractional acceptance and rejection vectors. Inspired by the general rounding framework of Li et al. [40], given a constant, a threshold θ is drawn uniformly at random from an interval determined by this constant. Then, we can construct a feasible pair $(A_\theta,R_\theta)$, where $R_\theta$ is the rejected set and $A_\theta=J\setminus R_\theta$ is the accepted set.
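The Lovász extension used here, and one plausible reading of the threshold step, can be sketched in Python. The Lovász extension follows the standard sorted-coordinates formula. The rounding rule (reject $J_j$ exactly when $y^*_j\ge\theta$) and the constant c with θ drawn uniformly from $[0,c]$ are assumptions standing in for details not recoverable from the text; `lovasz_extension`, `threshold_round` and `random_threshold_round` are hypothetical names.

```python
import random
from typing import Callable, FrozenSet, Sequence, Tuple

SetFn = Callable[[FrozenSet[int]], float]


def lovasz_extension(p: SetFn, y: Sequence[float]) -> float:
    """Lovász extension of a normalized set function at y in [0, 1]^n:
    sort y in decreasing order and sum (y_(k) - y_(k+1)) * P(top-k jobs),
    which equals the integral over theta in [0, 1] of P({j : y_j >= theta})."""
    order = sorted(range(len(y)), key=lambda j: -y[j])
    value, prefix = 0.0, set()
    for k, j in enumerate(order):
        prefix.add(j)
        nxt = y[order[k + 1]] if k + 1 < len(order) else 0.0
        value += (y[j] - nxt) * p(frozenset(prefix))
    return value


def threshold_round(y: Sequence[float],
                    theta: float) -> Tuple[FrozenSet[int], FrozenSet[int]]:
    """Assumed rule: reject J_j iff y_j >= theta; accept the others. Returns (A, R)."""
    rejected = frozenset(j for j, v in enumerate(y) if v >= theta)
    return frozenset(range(len(y))) - rejected, rejected


def random_threshold_round(y: Sequence[float], c: float,
                           rng: random.Random) -> Tuple[FrozenSet[int], FrozenSet[int]]:
    """Draw theta uniformly from [0, c] (c is the hypothetical rounding constant)."""
    return threshold_round(y, rng.uniform(0.0, c))
```

For a modular P the extension reduces to the weighted sum of the coordinates, e.g., $\hat P(y)=y_1+y_2+y_3$ for $P(S)=|S|$; every rounded pair is a partition of J, so it is always a feasible schedule.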

Similar to the proof of Lemma 2.2 in Li et al. [40], we have the following:

Lemma 4.

The expected rejection penalty of the pair is no more than

The following claim is immediate.

Lemma 5.

The expected cost of the pair is no more than

where is the optimal value for SMVS with submodular penalties.

Proof.

For any job , if , is accepted and processed by the machine; otherwise, is rejected. Let be the load of resource i in the pair . Therefore, for every resource , we have

where the inequality follows from by and is the load of resource i in the solution .

This statement and Lemma 4 imply that the expected cost of the pair is

where the last inequality follows from the fact that is the optimal solution of the convex program and . □

Now, we present a noncombinatorial $\frac{e}{e-1}$-approximation algorithm for SMVS with submodular penalties. Without loss of generality, assume that $y^*_1\le y^*_2\le\cdots\le y^*_n$. For any $j\in\{1,\ldots,n\}$, we construct a feasible schedule $(A_j,R_j)$, where $R_j=\{J_j,J_{j+1},\ldots,J_n\}$ is the rejected subset and $A_j=J\setminus R_j$ is the accepted set. We select from these schedules the one with the smallest objective value. By Lemma 5, we have the following:

Theorem 5.

There is a noncombinatorial $\frac{e}{e-1}$-approximation algorithm for SMVS with submodular penalties.
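The selection step that precedes Theorem 5 can be sketched as follows, assuming that the jobs are re-indexed by nondecreasing $y^*_j$ and that each candidate schedule rejects a suffix of this order. Including $R=\emptyset$ as an extra candidate is an addition of this sketch; it can only lower the returned objective value. The names are hypothetical.

```python
from typing import Callable, FrozenSet, Sequence, Tuple

Penalty = Callable[[FrozenSet[int]], float]


def load(vectors: Sequence[Sequence[float]], jobs: FrozenSet[int]) -> float:
    return max(sum(vectors[j][i] for j in jobs) for i in range(len(vectors[0])))


def best_suffix_schedule(vectors: Sequence[Sequence[float]], penalty: Penalty,
                         y: Sequence[float]) -> Tuple[float, FrozenSet[int], FrozenSet[int]]:
    """Order jobs so that y*_1 <= ... <= y*_n, try every rejected suffix
    R_j = {J_j, ..., J_n} (plus R = empty set), and keep the cheapest (value, A, R)."""
    n = len(vectors)
    order = sorted(range(n), key=lambda j: y[j])   # ascending y*
    everything = frozenset(range(n))
    best = None
    for start in range(n + 1):                     # start == n gives R = empty set
        rejected = frozenset(order[start:])
        accepted = everything - rejected
        value = load(vectors, accepted) + penalty(rejected)
        if best is None or value < best[0]:
            best = (value, accepted, rejected)
    return best
```

Only $n+1$ candidates are evaluated, because every threshold θ produces one of these nested rejected sets.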

Since this algorithm needs to solve a convex program, it is noncombinatorial; it is hard to implement and has a high time complexity. Therefore, we also present a combinatorial approximation algorithm for this problem.

As above, let

$$r=\max_{J_j\in J}\frac{\max_{i=1,\ldots,d}a_{ji}}{\min_{i=1,\ldots,d}a_{ji}}$$

be the maximum ratio of the maximum load to the minimum load on the d-dimensional vector. In particular, we set if .

Construct an auxiliary processing time $p'_j$ for each job $J_j$, where

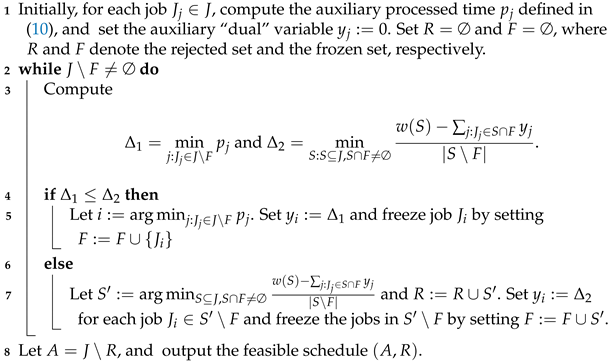

We introduce an auxiliary “dual” variable $b_j$ for each job $J_j$ to determine which jobs are accepted or rejected. Let F be the frozen set. Initially, $F=R=\emptyset$ and $b_j=0$ for each job $J_j\in J$. The variables $b_j$ of all jobs not in F increase simultaneously until either some set or some job becomes tight, where a set $S\subseteq J$ is tight if $\sum_{J_j\in S}b_j=P(S)$ and a job $J_j$ is tight if $b_j=p'_j$. If a set becomes tight, it is added to the rejected set R and the frozen set F. Otherwise, the tight job is added to the frozen set F. The variables of the jobs in the frozen set F are frozen, and the process is iterated until all jobs are frozen. Let $A=J\setminus R$ be the accepted set and output the pair $(A,R)$. The detailed algorithm is described below.
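The dual-ascent process described above can be sketched in Python for very small instances. Two assumptions apply: the auxiliary processing times $p'_j$ are passed in as input (their definition is given in the figure above), and an exhaustive search over all subsets replaces the Fleischer and Iwata ratio minimization [41] used in Lemma 6, so this sketch runs in exponential time. When a set and a job become tight at the same moment, the sketch processes the set first; this tie-breaking rule is also an assumption. `dual_ascent` is a hypothetical name.

```python
from itertools import combinations
from typing import Callable, FrozenSet, List, Sequence, Set, Tuple

SetFn = Callable[[FrozenSet[int]], float]


def dual_ascent(p_aux: Sequence[float],
                penalty: SetFn) -> Tuple[FrozenSet[int], FrozenSet[int], List[float]]:
    """Raise b_j of unfrozen jobs uniformly until a job (b_j = p'_j) or a set
    (sum of b_j over S = P(S)) becomes tight. Returns (A, R, b)."""
    n = len(p_aux)
    b = [0.0] * n
    frozen: Set[int] = set()
    rejected: Set[int] = set()
    candidates = [frozenset(c) for k in range(1, n + 1)
                  for c in combinations(range(n), k)]
    while len(frozen) < n:
        active = [j for j in range(n) if j not in frozen]
        # Earliest job to become tight.
        job = min(active, key=lambda j: p_aux[j] - b[j])
        delta_job = p_aux[job] - b[job]
        # Earliest set to become tight: min over S containing unfrozen jobs of
        # (P(S) - b(S)) / |S minus F|, found here by exhaustive search.
        best_set, delta_set = frozenset(), float("inf")
        for s in candidates:
            k = sum(1 for j in s if j not in frozen)
            if k == 0:
                continue
            t = (penalty(s) - sum(b[j] for j in s)) / k
            if t < delta_set:
                best_set, delta_set = s, t
        delta = min(delta_job, delta_set)
        for j in active:
            b[j] += delta
        if delta_set <= delta_job:      # tie-break assumption: prefer the set
            rejected |= best_set
            frozen |= best_set
        else:
            frozen.add(job)
    return frozenset(range(n)) - rejected, frozenset(rejected), b
```

For two jobs with $p'=(1,5)$ and the modular penalty $P(S)=3\,[J_1\in S]+2\,[J_2\in S]$, job $J_1$ becomes tight at time 1 and the set $\{J_2\}$ becomes tight at time 2, so $J_1$ is accepted, $J_2$ is rejected, and $b=(1,2)$. Each iteration freezes at least one job, matching the bound on the number of iterations in Lemma 6.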

Lemma 6.

Algorithm 2 can be implemented in polynomial time.

Proof.

Considering line 3 of Algorithm 2, for any , we have . This implies that the value of can be found in linear time.

Then, for any , we analyze the computing time of . Let and for any subset ; it is easy to verify that and are modular functions. Since is a submodular function, is also a submodular function. Therefore, for any , the value of

can be computed in polynomial time using the combinatorial algorithm proposed by Fleischer and Iwata [41] for the ratio of a submodular to a modular function minimization problem.

Therefore, each iteration of the while loop can be computed in polynomial time. In each iteration, if a job becomes tight, exactly one job is added to F; otherwise, at least one job is added to F. Hence, the while loop is executed at most n times, and Algorithm 2 can be implemented in polynomial time. □

Algorithm 2: CSMSP

For any job , the variable increases from 0 until job is added to the frozen set. If job is added to the frozen set by in line 5, the variable is fixed to . Thus, we have

otherwise, the variable is fixed by some set in line 7, while the variable of all jobs in T is fixed. Thus, we have

In particular, for any job and any set , we have ; otherwise, job is added to the rejected set R in line 7. This implies that job is added to the frozen set in line 5 and

Lemma 7.

The rejected job set R produced by Algorithm 2 satisfies

Proof.

Let be the objective value of generated by Algorithm 2, and let be the optimal value for SMVS with submodular penalties.

Theorem 6.

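$Z\le\min\{r,d\}\cdot\mathrm{OPT}$, where r is the maximum ratio of the maximum load to the minimum load on the d-dimensional vectors defined above.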

Proof.

Let be the optimal solution of SMVS with submodular penalties, and its objective value is

By Lemma 7, we have

where the inequality follows from inequality (11). By equality (13), we have

Thus, we have

Case 1., we have The objective value of is

where the first inequality follows from the definition of ; the second inequality follows from , and the penalty is nonnegative; the third inequality follows from inequality (14); the fourth inequality follows from inequality (12) and the last inequality follows from .

Case 2., we have The objective value of is

where the second inequality follows from inequality (14), the third inequality follows from inequality (12) and the last inequality follows from .

Therefore, the theorem holds. □

5. Conclusions

In this paper, we investigate the problem of SMVS with general penalties and SMVS with submodular penalties.

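For SMVS with general penalties, we first present a lower bound showing that there is no $\alpha(n)$-approximation algorithm. Then, using the PSM, we design an approximation algorithm for the case in which $\gamma>0$ and $a_{ji}>0$ for any $J_j\in J$ and $i\in\{1,\ldots,d\}$. This algorithm finds a schedule whose objective value Z satisfies the guarantee of Theorem 4 with respect to the optimal value OPT, where γ is the diminishing-return ratio of the set function P, r is the maximum ratio of the maximum load to the minimum load on the d-dimensional vectors, and $\epsilon>0$ is a given parameter.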

For SMVS with submodular penalties, we first design a noncombinatorial $\frac{e}{e-1}$-approximation algorithm and then a combinatorial $\min\{r,d\}$-approximation algorithm, where r is the maximum ratio of the maximum load to the minimum load on the d-dimensional vectors.

For SMVS with linear penalties, an EPTAS exists. For SMVS with submodular penalties, it remains open whether an EPTAS or a better algorithm can be designed. Furthermore, finding a better lower bound is an interesting direction. In the real world, our assumptions for SMVS with general penalties may not hold. Thus, it is important to design approximation algorithms under more realistic assumptions.

Moreover, when there are multiple machines rather than a single machine, algorithms under this setting could be further developed.

Author Contributions

Conceptualization, X.L. and W.L.; methodology, W.L.; software, X.L. and Y.Z.; validation, X.L., Y.Z. and W.L.; formal analysis, X.L.; investigation, W.L.; resources, W.L.; data curation, X.L. and Y.Z.; writing—original draft preparation, X.L.; writing—review and editing, W.L.; visualization, X.L.; supervision, W.L.; project administration, W.L.; funding acquisition, W.L. All authors have read and agreed to the published version of the manuscript.

Funding

The work is supported in part by the National Natural Science Foundation of China (No. 61662088), Program for Excellent Young Talents of Yunnan University, Training Program of National Science Fund for Distinguished Young Scholars, IRTSTYN, and Key Joint Project of the Science and Technology Department of Yunnan Province and Yunnan University (No. 2018FY001(-014)).

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Not applicable.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Graham, R.L. Bounds on multiprocessing timing anomalies. SIAM J. Appl. Math. 1969, 17, 416–429.

- Hochbaum, D.S.; Shmoys, D.B. Using dual approximation algorithms for scheduling problems: Theoretical and practical results. J. ACM 1987, 34, 144–162.

- Alon, N.; Azar, Y.; Woeginger, G.J.; Yadid, T. Approximation schemes for scheduling on parallel machines. J. Sched. 1998, 1, 56–66.

- Jansen, K.; Klein, K.; Verschae, J. Closing the gap for makespan scheduling via sparsification techniques. Math. Oper. Res. 2020, 45, 1371–1392.

- Bartal, Y.; Leonardi, S.; Marchetti-Spaccamela, A.; Sgall, J.; Stougie, L. Multiprocessor scheduling with rejection. SIAM J. Discret. Math. 2000, 13, 64–78.

- Ou, J.; Zhong, X.; Wang, G. An improved heuristic for parallel machine scheduling with rejection. Eur. J. Oper. Res. 2015, 241, 653–661.

- Ou, J.; Zhong, X. Bicriteria order acceptance and scheduling with consideration of fill rate. Eur. J. Oper. Res. 2017, 262, 904–907.

- Shabtay, D. The single machine serial batch scheduling problem with rejection to minimize total completion time and total rejection cost. Eur. J. Oper. Res. 2014, 233, 64–74.

- Zhang, L.; Lu, L.; Yuan, J. Single machine scheduling with release dates and rejection. Eur. J. Oper. Res. 2009, 198, 975–978.

- He, C.; Leung, J.Y.T.; Lee, K.; Pinedo, M.L. Improved algorithms for single machine scheduling with release dates and rejections. 4OR 2016, 14, 41–55.

- Ou, J.; Zhong, X.; Li, C.L. Faster algorithms for single machine scheduling with release dates and rejection. Inf. Process. Lett. 2016, 116, 503–507.

- Guan, L.; Li, W.; Xiao, M. Online algorithms for the mixed ring loading problem with two nodes. Optim. Lett. 2021, 15, 1229–1239.

- Li, W.; Li, J.; Zhang, X.; Chen, Z. Penalty cost constrained identical parallel machine scheduling problem. Theor. Comput. Sci. 2015, 607, 181–192.

- Liu, X.; Li, W. Approximation algorithm for the single machine scheduling problem with release dates and submodular rejection penalty. Mathematics 2020, 8, 133.

- Mor, B.; Shapira, D. Scheduling with regular performance measures and optional job rejection on a single machine. J. Oper. Res. Soc. 2019, 71, 1–11.

- Zhang, L.; Lu, L.; Yuan, J. Single-machine scheduling under the job rejection constraint. Theor. Comput. Sci. 2010, 411, 1877–1882.

- Zhang, L.; Lu, L. Parallel-machine scheduling with release dates and rejection. 4OR 2016, 14, 165–172.

- Zhong, X.; Ou, J. Improved approximation algorithms for parallel machine scheduling with release dates and job rejection. 4OR 2017, 15, 387–406.

- Chekuri, C.; Khanna, S. On multidimensional packing problems. SIAM J. Comput. 2004, 33, 837–851.

- Meyerson, A.; Roytman, A.; Tagiku, B. Online multidimensional load balancing. In Proceedings of the 16th International Workshop on Approximation, Randomization, and Combinatorial Optimization. Algorithms and Techniques, and the 17th International Workshop on Randomization and Approximation Techniques in Computer Science, Berkeley, CA, USA, 21–23 August 2013; Raghavendra, P., Raskhodnikova, S., Jansen, K., Rolim, J.D.P., Eds.; Springer: Berlin/Heidelberg, Germany, 2013; pp. 287–302.

- Im, S.; Kell, N.; Kulkarni, J.; Panigrahi, D. Tight bounds for online vector scheduling. SIAM J. Comput. 2019, 48, 93–121.

- Bansal, N.; Oosterwijk, T.; Vredeveld, T.; Zwaan, R. Approximating vector scheduling: Almost matching upper and lower bounds. Algorithmica 2016, 76, 1077–1096.

- Azar, Y.; Cohen, I.R.; Kamara, S.; Shepherd, F.B. Tight bounds for online vector bin packing. In Proceedings of the 45th Annual ACM Symposium on Theory of Computing, Palo Alto, CA, USA, 2–4 June 2013; ACM: New York, NY, USA, 2013; pp. 961–970.

- Li, W.; Cui, Q. Vector scheduling with rejection on a single machine. 4OR 2018, 16, 95–104.

- Dai, B.; Li, W. Vector scheduling with rejection on two machines. Int. J. Comput. Math. 2020, 97, 2507–2515.

- El Halabi, M.; Jegelka, S. Optimal approximation for unconstrained non-submodular minimization. In Proceedings of the 37th International Conference on Machine Learning, Online, 13–18 July 2020; Daumé, H., III, Singh, A., Eds.; Proceedings of Machine Learning Research, Volume 119; PMLR, 2020; pp. 3961–3972.

- Maehara, T.; Murota, K. A framework of discrete DC programming by discrete convex analysis. Math. Program. 2015, 152, 435–466.

- Wang, Y.; Xu, D.; Wang, Y.; Zhang, D. Non-submodular maximization on massive data streams. J. Glob. Optim. 2019, 76, 729–743.

- Wu, W.L.; Zhang, Z.; Du, D.Z. Set function optimization. J. Oper. Res. Soc. China 2019, 7, 183–193.

- Wu, C.; Wang, Y.; Lu, Z.; Pardalos, P.M.; Xu, D.; Zhang, Z.; Du, D.Z. Solving the degree-concentrated fault-tolerant spanning subgraph problem by DC programming. Math. Program. 2018, 169, 255–275.

- Wei, K.; Iyer, R.K.; Wang, S.; Bai, W.; Bilmes, J.A. Mixed robust/average submodular partitioning: Fast algorithms, guarantees, and applications. In Proceedings of the 29th Advances in Neural Information Processing Systems, Barcelona, Spain, 5–10 December 2016; Cortes, C., Lawrence, N.D., Lee, D.D., Sugiyama, M., Garnett, R., Eds.; Curran Associates, Inc.: Red Hook, NY, USA, 2015; pp. 2233–2241.

- Jia, X.D.; Hou, B.; Liu, W. An approximation algorithm for the generalized prize-collecting Steiner forest problem with submodular penalties. J. Oper. Res. Soc. China 2021.

- Liu, X.; Li, W. Combinatorial approximation algorithms for the submodular multicut problem in trees with submodular penalties. J. Comb. Optim. 2020.

- Zhang, X.; Xu, D.; Du, D.; Wu, C. Approximation algorithms for precedence-constrained identical machine scheduling with rejection. J. Comb. Optim. 2018, 35, 318–330.

- Liu, X.; Xing, P.; Li, W. Approximation algorithms for the submodular load balancing with submodular penalties. Mathematics 2020, 8, 1785.

- Liu, X.; Li, W. Approximation algorithms for the multiprocessor scheduling with submodular penalties. Optim. Lett. 2021, 15, 2165–2180.

- Iyer, R.; Bilmes, J. Algorithms for approximate minimization of the difference between submodular functions, with applications. In Proceedings of the 28th Conference on Uncertainty in Artificial Intelligence (UAI’12), Catalina Island, CA, USA, 15–17 August 2012; AUAI Press: Arlington, VA, USA, 2012; pp. 407–417.

- Lehmann, B.; Lehmann, D.; Nisan, N. Combinatorial auctions with decreasing marginal utilities. Games Econ. Behav. 2006, 55, 270–296.

- Kuhnle, A.; Smith, J.D.; Crawford, V.G.; Thai, M.T. Fast maximization of non-submodular, monotonic functions on the integer lattice. In Proceedings of the 35th International Conference on Machine Learning, Stockholm, Sweden, 10–15 July 2018; PMLR: Stockholm, Sweden, 2018; Volume 6, pp. 4350–4368.

- Li, Y.; Du, D.; Xiu, N.; Xu, D. Improved approximation algorithms for the facility location problems with linear/submodular penalties. Algorithmica 2015, 73, 460–482.

- Fleischer, L.; Iwata, S. A push-relabel framework for submodular function minimization and applications to parametric optimization. Discret. Appl. Math. 2003, 131, 311–322.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).