Abstract

Convergence of a stochastic process is an intrinsic property that is highly relevant to its successful practical use, for example in function optimization. Lyapunov functions are widely used as tools to prove convergence of optimization procedures. However, identifying a Lyapunov function for a specific stochastic process is a difficult and creative task. This work aims to provide a geometric explanation of convergence results and to state and identify conditions for the convergence of any stochastic process, not exclusively optimization methods. In essence, we relate the expected directions set of a stochastic process to the half-space of a conservative vector field, concepts defined in the text. Under some reasonable conditions, convergence can be guaranteed when the expected direction sufficiently resembles some vector field. We translate two existing and useful convergence results into the convergence of processes that resemble particular conservative vector fields. This geometric point of view could make it easier to identify Lyapunov functions for new stochastic processes whose convergence we would like to prove.

1. Introduction

In most practical research branches, the solution to a given problem is often cast as a function optimization problem, where the effectiveness of a solution is measured by a function to be optimized. Machine learning challenges are prime examples of this situation. Therefore, optimization algorithms become crucial to solving such problems. Iterative optimization methods start at an initial point and move through parameter space trying to minimize the objective function. Their performance may vary dramatically depending on the initial point. This dependence is somewhat diminished if the algorithm is guaranteed to converge in the long term to a minimum. Furthermore, in stochastic optimization algorithms, the quality achieved varies randomly and there is sometimes a chance that the algorithm fails to converge. As an example, the stochastic natural gradient descent (SNGD) of Amari [1] and its variants often show instability depending on the starting point and the tuning of the learning rate. Some experiments have even been shown to diverge with SNGD [2]. Clearly, such an issue weighs considerably against its practical use.

Convergent algorithms are more stable with respect to both learning rate parameters and initial point estimations. For instance, in [3], the optimization method named convergent stochastic natural gradient descent (CSNGD) is proposed. CSNGD is designed to mimic SNGD, but it is proven to be convergent. Sánchez-López and Cerquides show that, unlike SNGD, CSNGD is stable in the experiments they ran.

As a consequence, we are interested in better understanding the conditions that make an algorithm convergent. Convergence proofs abound in the literature. In this work we concentrate on two apparently disconnected and well-known convergence results. In his seminal work [4], Bottou proved the convergence of stochastic gradient descent (SGD). Later, in [5], Sunehag provided an extended result for variable metric modified SGD. The connection between the proofs of both results is not evident. It is not clear what they have in common and, therefore, further generalizations do not seem to be within reach.

To understand the convergence results, it is helpful to take a look at their proofs. (Both theorems are included in Appendix A. However, we relax the conditions of Theorem A2 in [5]; the relaxed conditions turn Theorem A2 into Theorem A3, found in the same appendix.) Bottou’s proof relies on the construction of a Lyapunov function [6]. On the other hand, Sunehag’s proof uses the Robbins–Siegmund theorem [7] instead. It can be seen that the latter proves that the function to optimize already serves as a Lyapunov function, similarly to later chapters in [4]. Therefore, both proofs share some similarity, but it is not evident how to establish a connection. Establishing the connection and pointing out its relevance is the main contribution of this paper and results in a generalization from which both results can be easily proved as corollaries.

Stochastic optimization algorithms rely on observations extracted from some possibly unknown probability space. Algorithms subject to random phenomena are stochastic processes [8,9,10,11]. The generalized convergence result for stochastic processes in this article is built on two main concepts. The first is resemblance between a stochastic process and a vector field. The second is the property of a stochastic process being locally bounded by a function. These two ingredients are enough to state and prove our convergence theorem: a stochastic process Z converges if it is locally bounded by a convex, real-valued, twice differentiable function ϕ with bounded Hessian and Z resembles $\nabla\phi$.

Two corollaries are extracted from this result, which we prove to be equivalent to Bottou’s and Sunehag’s convergence theorems. Moreover, we observe that the convergence proof in [12] of the algorithm called discrete DSNGD can be addressed by our main theorem, whereas the original convergence theorem of Sunehag is not general enough.

The concept of resemblance involves the expected directions set of a stochastic process, which we define in Section 2, and the half-space of a vector field, a concept introduced in Section 3. Then, in Section 4, we state and prove our general result, which highlights the commonalities between the theorems of Bottou and Sunehag and proves the convergence of a wider variety of algorithms.

2. Main Result. Director Process and the Expected Direction Set

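Let $(\Omega, \mathcal{F}, P)$ be a probability space and $(S, \Sigma)$ be a measurable space. A discrete stochastic process on $(\Omega, \mathcal{F}, P)$ indexed by $\mathbb{N}$ is a sequence of random variables $Z = \{Z_t\}_{t \in \mathbb{N}}$ such that $Z_t: \Omega \to S$. In this work, $S = \mathbb{R}^n$ and $\Sigma$ is the corresponding Borel σ-algebra. As random variables are used to describe general random phenomena, stochastic processes indexed by $\mathbb{N}$ are usually used to model random sequences.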

2.1. Locally Bounded Stochastic Processes and Objective of the Work

The difference between two random variables of a stochastic process is a random variable known as an increment. We say that the random variable $Z_{t+s} - Z_t$ with $s > 0$ is an s-increment at time t. For example, the 1-increments of a stochastic process Z are

$$Z_{t+1} - Z_t, \qquad t \in \mathbb{N}. \qquad (1)$$

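We focus our attention on a decomposition of $Z_{t+1} - Z_t$ into $-\gamma_t X_t$, such that $\gamma$ is a positive real valued function and $X$ is a stochastic process on $(\Omega, \mathcal{F}, P)$.

Definition 1.

Let Z and X be stochastic processes and $\gamma: \mathbb{N} \to \mathbb{R}^{+}$ a function, with $\gamma_t = \gamma(t)$. Then $(\gamma, X)$ is a decomposition of 1-increments of Z if

$$Z_{t+1} = Z_t - \gamma_t X_t. \qquad (2)$$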

Name X the director process of Z and γ the learning rate, and note it by .

This way of expressing a process allows us to define $Z_{t+1}$ with respect to $Z_t$, which gives us control of the difference between both values by means of $\gamma_t$, as Figure 1 shows. This is very useful if we intend to analyse the convergence of a stochastic process.

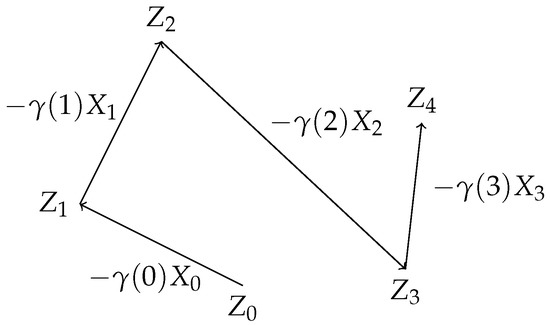

Figure 1.

Path of stochastic process Z with director process X and learning rate γ.

As represented in Figure 1, we can think of $Z_t$ as the value of the process at time t, while $-\gamma_t X_t$ is the vector going from $Z_t$ to $Z_{t+1}$. For the article, it is important to remember this, since we constantly refer to $Z_t$ as points in $\mathbb{R}^n$ while $X_t$ are treated as direction vectors in $\mathbb{R}^n$. This distinction is only practical for our purposes.
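As a minimal sketch of the decomposition of 1-increments, the following Python snippet builds a path of Z from a learning rate and a director process. It assumes the sign convention $Z_{t+1} = Z_t - \gamma_t X_t$ (the convention of SGD); the names `simulate_path`, `learning_rate` and `director` are hypothetical and chosen only for illustration, and the deterministic director used at the end is not taken from the text.

```python
from typing import Callable, List, Sequence

Vector = List[float]


def simulate_path(
    z0: Sequence[float],
    learning_rate: Callable[[int], float],
    director: Callable[[int, Vector], Vector],
    steps: int,
) -> List[Vector]:
    """Return [Z_0, ..., Z_steps] with Z_{t+1} = Z_t - gamma_t * X_t (assumed sign)."""
    path = [list(z0)]
    for t in range(steps):
        z_t = path[-1]
        x_t = director(t, z_t)      # direction vector X_t
        gamma_t = learning_rate(t)  # positive step length gamma_t
        path.append([z - gamma_t * x for z, x in zip(z_t, x_t)])
    return path


# Illustrative deterministic director X_t = Z_t with gamma_t = 1 / (t + 2).
path = simulate_path([1.0, -2.0], lambda t: 1.0 / (t + 2), lambda t, z: list(z), 50)

# 1-increments Z_{t+1} - Z_t recovered from the path.
increments = [
    [b - a for a, b in zip(path[t], path[t + 1])] for t in range(len(path) - 1)
]
```

In this toy case each step multiplies $Z_t$ by $(t+1)/(t+2)$, so the path shrinks towards the origin while every increment equals $-\gamma_t X_t$.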

The trajectories of stochastic approximation algorithms, such as stochastic gradient descent (SGD), are indeed samples of stochastic processes. Furthermore, they are usually expressed by means of their decomposition of 1-increments, as can be seen in the following examples.

Example 1.

SGD [4] is the cornerstone of machine learning to solve the function optimization problem. The objective of SGD is to minimize an objective function for some unknown probability distribution and random variable defined on . This function l is known as loss function, and it is usually differentiable with respect to η, allowing the definition of SGD as

where are estimates of . We can see , and, therefore, SGD, as a stochastic process. Indeed, let

be the product probability space (This space is guaranteed to exist according to Kolmogorov extension theorem (see for example Theorem 2.4.4 and following examples in [13])) over infinite sequences. Hence we can define the stochastic process X on such that where for every it is . This implies that is a decomposition of 1-increments of SGD.

In addition, we observe that depends only on last observation and t, which is known as a non-stationary Markov chain.
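The following sketch illustrates how an SGD run can be read as a process driven by a director process. It is only an illustration under stated assumptions: the loss $l(x, \eta) = \tfrac{1}{2}(\eta - x)^2$, the Gaussian observations and the names `grad_loss` and `sgd` are hypothetical choices, not taken from [4].

```python
import random


def grad_loss(x: float, eta: float) -> float:
    """Gradient in eta of the illustrative loss l(x, eta) = 0.5 * (eta - x) ** 2."""
    return eta - x


def sgd(eta0: float, observations, learning_rate) -> float:
    """Run eta_{t+1} = eta_t - gamma_t * X_t with X_t the gradient at observation x_t."""
    eta = eta0
    for t, x_t in enumerate(observations):
        x_dir = grad_loss(x_t, eta)  # director process value X_t
        eta = eta - learning_rate(t) * x_dir
    return eta


rng = random.Random(0)  # fixed seed so the run is reproducible
true_mean = 3.0
samples = [rng.gauss(true_mean, 1.0) for _ in range(5000)]
estimate = sgd(eta0=10.0, observations=samples, learning_rate=lambda t: 1.0 / (t + 1))
```

With this particular loss and $\gamma_t = 1/(t+1)$, the iterate after each step is exactly the running mean of the observations seen so far, which makes the dependence of $X_t$ on the last observation and on t easy to inspect.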

Example 2.

This example is worked in [5]. Again, we focus on the function optimization problem, using the same notation as in previous example. In this case, the estimation update of the minimum is defined as

where is a matrix in known after information available at time t and , where Y is a function mapping each to a random variable on the same probability space .

Similarly as in previous example, Y can be thought as a random variable in the product probability space (Equation (4)) that depends on previous , such that for every it is . If we define , then is a decomposition of 1-increments of Z with .

Here, Z is not a (non-stationary) Markov chain, since may depend on for all .

The naming of γ as learning rate is commonly used in the machine learning research branch [4,14,15,16]. The director process X determines the direction at time t of the update Equation (1) with $Z_t$ as reference point, while $\gamma_t$ specifies a certain distance to travel along that direction $X_t$. Moreover, we impose some constraints on both factors. The condition imposed on γ is commonly found in the literature [3,4,5]. A learning rate γ satisfies the standard constraint if

$$\sum_{t} \gamma_t = \infty, \qquad \sum_{t} \gamma_t^2 < \infty. \qquad (6)$$

Before we show the condition for the director process X, we fix some notation used throughout the article. Consider the natural filtration $\{\mathcal{F}_t\}$ generated by the stochastic process Z, that is, $\mathcal{F}_t = \sigma(Z_s : s \le t)$ for all t. Then $\{\mathcal{F}_t\}$ is a filtration and by definition Z is adapted to $\{\mathcal{F}_t\}$.

Intuitively, every $\mathcal{F}_t$ of a filtration is a σ-algebra that classifies the elements of Ω. For example, if Ω is the set of colours, $\mathcal{F}_t$ can gather warm and cold colours into separate and complementary sets. The fact that a random variable Y is $\mathcal{F}_t$-measurable implies that Y sends all warm colours to the same value and all cold colours also to the same value. In this sense, Y does not provide any additional information about the elements of Ω beyond the classification of $\mathcal{F}_t$. The sequence $\{\mathcal{F}_t\}$ is increasing, in the sense that $\mathcal{F}_t \subseteq \mathcal{F}_{t+1}$ for all t. Therefore, a filtration characterizes the space Ω with sequentially higher levels of information or classification. Denote by $E_t[\,\cdot\,] = E[\,\cdot \mid \mathcal{F}_t]$ the conditional expectation given $\mathcal{F}_t$ [10]. Recall that if Y is a random variable in $(\Omega, \mathcal{F}, P)$ then $E_t[Y]$ is in turn an $\mathcal{F}_t$-measurable random variable.

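Hence, if $\phi: \mathbb{R}^n \to \mathbb{R}$, then X is locally and linearly bounded by the function ϕ if there exist constants $A, B \ge 0$ such that

$$E\bigl[\lVert X_t \rVert^2 \mid \mathcal{F}_t\bigr] \le A + B\,\phi(Z_t) \quad \text{for all } t. \qquad (7)$$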

These two constraints are finally combined to present the kind of stochastic processes we are interested in.

Definition 2.

Let Z be a stochastic process and $\phi: \mathbb{R}^n \to \mathbb{R}$ be a function. We say that Z is locally bounded by ϕ if there is a decomposition of 1-increments $(\gamma, X)$ with γ satisfying the standard constraint and X locally and linearly bounded by ϕ.

Furthermore, if a.s. we say is the initial point of Z.
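To make the learning rate requirement concrete, the sketch below inspects partial sums for the schedule $\gamma_t = 1/(t+1)$. It assumes that the standard constraint is the usual pair $\sum_t \gamma_t = \infty$ and $\sum_t \gamma_t^2 < \infty$; the helper name `partial_sums` is hypothetical. A finite computation can only illustrate the two behaviours, it does not prove them.

```python
import math


def partial_sums(learning_rate, n_terms):
    """Return (sum of gamma_t, sum of gamma_t ** 2) over t = 0, ..., n_terms - 1."""
    total, total_sq = 0.0, 0.0
    for t in range(n_terms):
        g = learning_rate(t)
        total += g
        total_sq += g * g
    return total, total_sq


def harmonic(t):
    """Illustrative schedule gamma_t = 1 / (t + 1)."""
    return 1.0 / (t + 1)


s_small, sq_small = partial_sums(harmonic, 1_000)
s_large, sq_large = partial_sums(harmonic, 100_000)

# The plain sum keeps growing (roughly like log n) ...
growth = s_large - s_small
# ... while the sum of squares stays below its limit pi ** 2 / 6.
limit_sq = math.pi ** 2 / 6
```

A constant schedule would fail the second condition, and a schedule such as $\gamma_t = 1/(t+1)^2$ would fail the first one.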

For instance, Examples 1 and 2 observed in this section define Z as a locally bounded process. We see it below.

Example 3.

Recall Example 1. In the same reference [4], the optimization algorithm is asked to hold additional conditions in order to prove its convergence. We added the convergence theorem in Appendix A. Some of the conditions are

where is the optimal point of L. Standard constraint to γ is clearly asked. Moreover is a starting point. It remains to be seen if X is locally and linearly bounded by some function . Indeed, if we define , then the property is easily checked. Hence Z is locally bounded by ϕ with initial point .

Example 4.

Recall Example 2. The convergence theorem in [5], which is included in Appendix A, requires the conditions below:

where L is a function to optimize. For this example, Z is then locally bounded by with initial point . Just as an observation, property of being determined after information available at time t, is the same as seeing as a -measurable random variable over the product probability space.

We are interested in studying the almost sure convergence of Z to a point $\eta^*$. A stochastic process Z almost surely (a.s.) converges to a point $\eta^*$ if

$$P\Bigl(\lim_{t \to \infty} Z_t = \eta^*\Bigr) = 1. \qquad (10)$$

Examples 3 and 4 show us that we can understand the results in [4,5] as the almost sure convergence of some locally bounded processes. In this paper, we are interested in characterizing the almost sure convergence of locally bounded processes. The objective of this work is to create a theory that allows us to prove the a.s. convergence of locally bounded processes, that covers Examples 3 and 4, and whose applicability generalizes to a wider set of processes, such as the one described below:

Example 5.

Assume the function defined in , and the optimization method Z defined by its director process where and are positive definite and symmetric matrices. For simplicity, this example shows a stochastic process with no random phenomena associated. We ask whether the process Z converges and, if so, whether it converges to the point of that optimizes function f. From Theorems A1 and A2 found in the literature (included in Appendix A) it is not possible to prove a.s. convergence of Z, since the conditions Bottou resemblance and C.3, respectively, are not satisfied. That is because is possibly negative a.s.

Further on, Z is assumed to be locally bounded by where is its corresponding decomposition of 1-increments, unless otherwise indicated.

2.2. Main Result

The objective of the article is to prove the theorem below; the proof is given in Section 4.1.

Theorem 1.

Let Z be a stochastic process on probability space $(\Omega, \mathcal{F}, P)$. Then Z almost surely converges to a point $\eta^*$ if there is a twice differentiable convex function ϕ with unique minimum $\eta^*$ defined in $\mathbb{R}^n$ with bounded Hessian norm, such that

- Z is locally bounded by ϕ;

- Z resembles $\nabla\phi$.

There is one concept in the theorem that still needs a definition, namely, when a stochastic process resembles a vector field. The next sections serve that purpose, with our main definition, which fills the gap, appearing in Section 3.2. As we will see in Section 4.4, the simple Example 5 is resolved by our main theorem.

2.3. Expected Direction Set

We now define one key object of our work named the expected direction set. It focuses on gathering all directions that the update may take at time t conditioned to . Before the definition we provide some concepts and notation.

Random variable determines all expected directions of Z at time t that the stochastic process may follow assuming . For example, if is an observation, then is a vector pointing to the expected update direction departing from point given . Denote the expected direction of Z at and time t as

The expected direction from point of Equation (11) depends on . That is, the path followed until reaching matters. For instance, if are different observations, such that , then possibly . We collect all expected directions at and time t in the vector set below;

The tools to define the expected direction set at after time are given, so we proceed to its formal definition.

Definition 3.

Let . Define the expected directions set of Z at after time as

With a few words, is a vector set containing all expected directions (provided by the director process X) conditioned to for every outcome such that where . In Definition 3, depends on T. That is because to assess the convergence of an algorithm it is not important to consider all expected directions throughout all the process. For example, if an algorithm converges we can modify randomly all directions of the director process for just a particular time , and the resulting algorithm still converges. Roughly speaking, only the tail of a process matters to determine the convergence property. This concept is better addressed with Definition 4 in next section.

Example 6.

Recall Example 1. Assume that Z is then SGD. Then is a singleton. Indeed, is the same vector for all and all ω with and hence with any with . Finally

This is the case of any non-stationary Markov chain.
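The singleton behaviour can be illustrated numerically. The sketch below estimates by Monte Carlo the expected direction of SGD at a fixed point η, under the same illustrative assumptions as before (loss $l(x, \eta) = \tfrac{1}{2}(\eta - x)^2$ and Gaussian observations with mean `mu`, both hypothetical choices). In that setting the expected direction at η is $\eta - \mu$, whatever path led to η.

```python
import random


def expected_direction(eta, sampler, grad, n_samples=20000):
    """Monte Carlo estimate of E[X_t | Z_t = eta] for a director X_t = grad(x_t, eta)."""
    return sum(grad(sampler(), eta) for _ in range(n_samples)) / n_samples


rng = random.Random(1)  # fixed seed so the estimates are reproducible
mu = 3.0


def sampler():
    """Draw one observation x_t (illustrative Gaussian model)."""
    return rng.gauss(mu, 1.0)


def grad(x, eta):
    """Gradient in eta of the illustrative loss 0.5 * (eta - x) ** 2."""
    return eta - x


eta = 5.0
# Repeated estimates at the same point: all approximate the single vector eta - mu.
estimates = [expected_direction(eta, sampler, grad) for _ in range(3)]
```

Because the director depends only on the current point and a fresh observation, repeated estimates agree up to sampling noise, which is the numerical counterpart of the set being a singleton.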

2.4. Essential Expected Direction Set

The convergence of an algorithm is closely related to the directions followed after time T as T tends to infinity. Equivalently, the set of directions that appear repeatedly throughout the whole optimization process matters, while directions that appear only for a finite number of iterations do not affect the convergence guarantee. This direction set is named the essential expected directions set in this article.

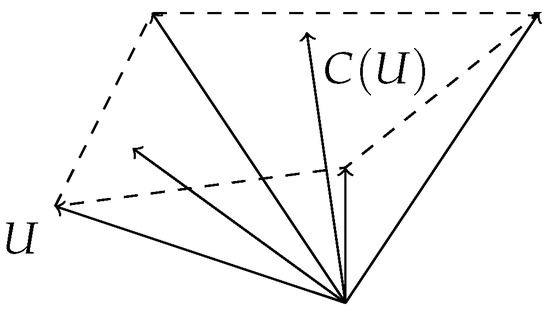

To define properly the essential expected directions set, we will use the convex vector subspace of a given vector set. Given a vector set U in , let be the smallest convex vector subspace containing U. See Figure 2 as an illustrative example. Observe that is always closed, but it may be unbounded.

Figure 2.

Set of vectors U and its convex vector subspace in .

Definition 4.

Let . Define the essential expected directions set of Z at η as

Example 7.

Assume Z is any non-stationary Markov chain, such as SGD in Example 1. Then for any T. Indeed, we have seen in Example 6 that for any and where . Hence

for any with .

Definition of delimits the smallest subspace where all directions at tend to. Clearly, is also convex and closed (possibly empty). Deeper properties of this set lead to identify divergence symptoms. For example, if it is empty or unbounded, we face instability of the process at . To see this, observe below result. The proof can be found in the Appendix B.

Corollary 1.

Let . Then is a non-empty bounded set if, and only if, there exists , such that is bounded.

This result relates to instability properties of Z. If is empty or unbounded, then the algorithm is unstable at , since expected directions with arbitrarily large norms exist after enough iterations. Clearly, if this situation is found for all points near the optimum, the algorithm cannot converge to the solution. It is desirable instead that is compact (bounded) for some T for every , or equivalently, that is compact (bounded) and not empty.

In fact, since we are interested in the case where Z is locally bounded by (recall Definition 2), we can assume that is a non empty compact set, by virtue of below results.

Proposition 1.

Let stochastic process Z be locally bounded by ϕ. Then is a non-empty compact set.

Proof.

We know that X is locally and linearly bounded. Hence, applying Jensen’s inequality

Let and , such that for some . Therefore, every has bounded norm by , implying that is a non-empty compact set. □

Below corollary is a consequence of Proposition 1 and Corollary 1.

Corollary 2.

Let stochastic process Z be locally bounded by ϕ. Then is a non-empty compact set for all .

3. Vector Field Half-Spaces and Stochastic Processes. Resemblance

This section defines the main concept of this work: the property of resemblance between a stochastic process and a vector field. The definition highlights some commonalities between Theorems A1 and A3. Both of them prove the convergence of stochastic processes that resemble particular vector fields. A geometric interpretation and explanation of the conditions of the convergence theorems is established in Section 4.

Some preliminary definitions are needed and stated before introducing the main concepts of the article, such as ϵ-acute vector pair sets and the half-space of a vector field. The section starts with some basic concepts about vectors.

Definition 5.

Let be two vectors. The pair is acute if u and v form an acute angle, that is, if . Furthermore, if then is ϵ-acute.
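As a small illustration of these notions, the sketch below tests whether a pair of vectors is acute and whether it is ϵ-acute. The acute test uses the sign of the inner product. For the ϵ-acute test, the code assumes that ϵ-acute means that the cosine of the angle between u and v exceeds ϵ; this reading is an assumption made for illustration, and the function names are hypothetical.

```python
import math
from typing import Sequence


def dot(u: Sequence[float], v: Sequence[float]) -> float:
    return sum(a * b for a, b in zip(u, v))


def cosine(u: Sequence[float], v: Sequence[float]) -> float:
    """Cosine of the angle between two non-zero vectors."""
    norm_u = math.sqrt(dot(u, u))
    norm_v = math.sqrt(dot(v, v))
    if norm_u == 0.0 or norm_v == 0.0:
        raise ValueError("the angle is undefined for a zero vector")
    return dot(u, v) / (norm_u * norm_v)


def is_acute(u: Sequence[float], v: Sequence[float]) -> bool:
    """True if u and v form an acute angle (positive inner product)."""
    return dot(u, v) > 0.0


def is_eps_acute(u: Sequence[float], v: Sequence[float], eps: float) -> bool:
    """Assumed reading of eps-acute: the cosine of the angle exceeds eps."""
    return cosine(u, v) > eps


u, v = [1.0, 0.0], [1.0, 1.0]  # angle of 45 degrees, cosine about 0.707
checks = (is_acute(u, v), is_eps_acute(u, v, 0.5), is_eps_acute(u, v, 0.8))
```

Under this reading, an ϵ-acute pair stays uniformly away from orthogonality, which is what a whole set of pairs needs in order to be ϵ-acute for a common ϵ.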

Proposition 2.

Let be two vectors. Then the pair is ϵ-acute if, and only if, there exists a symmetric positive-definite matrix B, such that and .

A vector pair set V is a set of vector pairs where I is an index set.

Definition 6.

Let V be a vector pair set. V is ϵ-acute if every vector pair is ϵ-acute.

Next result is a direct consequence.

Proposition 3.

Let V be a vector pair set, indexed by I. Then, V is ϵ-acute for some if, and only if;

Proposition 4.

Let V be a vector pair set, indexed by I. Then, V is ϵ-acute for some if, and only if, there exist a set of symmetric positive-definite matrices such that

Proof.

We first prove that if there exists a set of matrices satisfying Equation (19), then V is ϵ-acute for some ϵ > 0. Observe that, from Equation (19):

Then, Proposition 3 implies that V is ϵ-acute, which finishes this part of the proof.

Now assume that V is ϵ-acute and prove that there exists a set of matrices satisfying Equation (19). Since V is ϵ-acute, in particular, the pair is ϵ-acute for every . Apply Proposition 2: for every there exists a symmetric positive-definite matrix , such that and . This finishes the proof. □

3.1. The Half-Space of a Vector Field

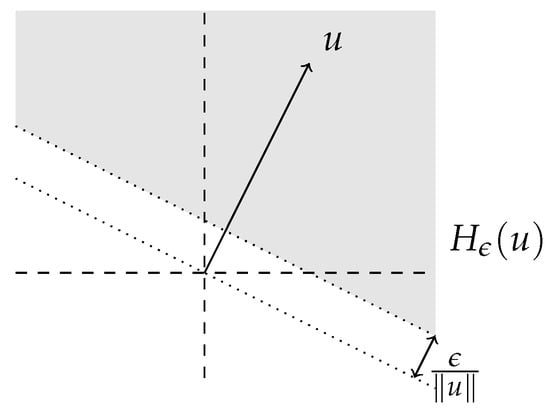

The half-space determined by a vector u is the set of vectors that form an acute angle with u. This region clearly occupies half of the total space. Additionally, the ϵ-half-space of u with ϵ > 0 is the set of vectors v such that the vector pair $(u, v)$ is ϵ-acute. This object is needed to later define the half-space of a vector field. We define these concepts below and illustrate the ϵ-half-space of a vector u in Figure 3.

Figure 3.

Shaded area representing .

Definition 7.

Let u be a vector of . The half-space of u is the set

Similarly, the ϵ-half-space of u with is the set

A vector field over is a function assigning to every a vector of , that is . For example, if is a twice differentiable function, we can consider the vector field consisting of the gradient vectors at each point . Precisely, denote the gradient vector field (GVF) as , where .

We are ready to define the half-space of a vector field.

Definition 8.

Let be a vector field over . The half-space of is a function mapping every η to . Similarly, the ϵ-half-space of with is a function mapping every η to .

3.2. Resemblance between a Stochastic Process and a Vector Field

The convergence of any locally bounded process can be proved comparing the expected directions set of the algorithm with some vector fields. When the expected directions resemble the vector field we compare it to, then we can ensure the almost sure convergence to a point of the stochastic process, after some reasonable conditions. By resemblance, we mean that the expected directions set after some time T is a subset of the -half-space of , among other things explained later. Therefore, resemblance asks for every that every vector with and every with form an acute angle with the vector field at .

However, if the vector field sends a specific point to , then no direction can be set by the to form an acute vector pair. Therefore, resemblance property is evaluated outside the neighborhood of these annulled points. That is why we must consider now the set of annulled points of a vector field and the neighborhoods around the points of this set.

Formally, let be a vector field defined in . The set is the set of points of annulled by , that is, . Moreover, consider the closed ball centered on of radius as where is the closed ball of radius centered on .

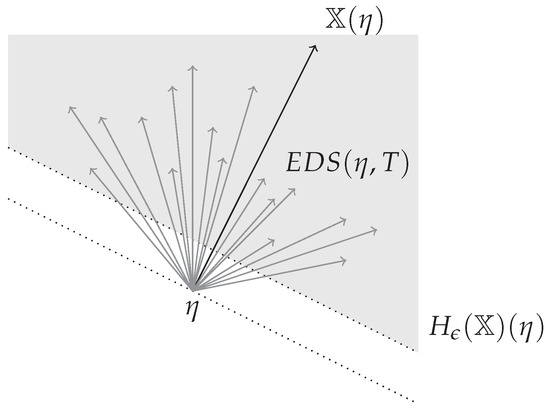

We also use the notation for the complement set of subset . We say that Z ϵ-resembles to at from T on if . Observe an illustrative example in Figure 4.

Figure 4.

A stochastic process Z that ϵ-resembles to at from T on, since the vector set of all expected directions of Z at after time T belongs to .

This intuition is naturally extended to ϵ-resemblance at sets, when the property is satisfied for every in the set. With this in mind we can define the key concept of this article.

Definition 9.

Let be a stochastic process and be a vector field over . We say that Z resembles to from on, if;

We say that Z resembles to if there is such that it resembles to from T on.
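The idea behind resemblance can be probed numerically on a toy case. The sketch below samples points outside a small ball around the annulled point of a gradient vector field and records the worst cosine between the expected direction and the field. Everything concrete here is an assumption made for illustration: the function $\phi(\eta) = \tfrac{1}{2}\lVert\eta\rVert^2$, the deterministic director $X(\eta) = B\eta$ with a symmetric positive-definite B, and the reading of ϵ-acute as a lower bound on the cosine. Sampling can suggest resemblance, it cannot prove it.

```python
import math
import random

# Hypothetical symmetric positive-definite matrix defining the director X(eta) = B eta.
B = [[2.0, 0.5], [0.5, 1.0]]


def cosine(u, v):
    """Cosine of the angle between two non-zero 2D vectors."""
    dot_uv = u[0] * v[0] + u[1] * v[1]
    return dot_uv / (math.hypot(u[0], u[1]) * math.hypot(v[0], v[1]))


def grad_phi(eta):
    """Gradient of phi(eta) = 0.5 * ||eta||^2; it is annulled only at the origin."""
    return list(eta)


def expected_direction(eta):
    """Expected direction of the toy (deterministic) process at eta."""
    return [B[0][0] * eta[0] + B[0][1] * eta[1], B[1][0] * eta[0] + B[1][1] * eta[1]]


def min_cosine_outside_ball(delta, n_points, rng):
    """Worst cosine between direction and field over sampled points with ||eta|| > delta."""
    worst, checked = 1.0, 0
    while checked < n_points:
        eta = [rng.uniform(-5.0, 5.0), rng.uniform(-5.0, 5.0)]
        if math.hypot(eta[0], eta[1]) <= delta:
            continue  # skip the neighbourhood of the annulled point
        worst = min(worst, cosine(expected_direction(eta), grad_phi(eta)))
        checked += 1
    return worst


worst_cosine = min_cosine_outside_ball(delta=0.1, n_points=10000, rng=random.Random(2))
```

For this B the cosine is bounded below by $2\sqrt{\lambda_{\min}\lambda_{\max}}/(\lambda_{\min}+\lambda_{\max}) \approx 0.88$ (Kantorovich inequality), so every sampled expected direction lies well inside the half-space of the field.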

Everything is set up to accomplish the goal of this paper. We recall the main theorem of this article in the next section and present its proof.

4. Proof of Main Result. Reinterpretation of Convergence Theorems

The objective of the article is within reach now. That is, proving main Theorem 1. Moreover, this section addresses afterwards the task of proving that Theorems A1 and A3 are particular examples of our main Theorem 1.

4.1. Resemblance to Conservative Vector Fields and Convergence

Recall main Theorem 1 and observe that it asks the stochastic process Z to be locally bounded by some function ϕ and Z to resemble $\nabla\phi$. Therefore, $\nabla\phi$ is a particular type of vector field called a conservative vector field, that is, a vector field that arises as the gradient of a function. That is why we understand our main theorem as a convergence result for locally bounded processes that resemble a conservative vector field.

In the theorem statement, it says that has bounded Hessian norm. Similarly to Theorem A3, it means that:

We are ready to prove the main result of the paper.

Proof of main Theorem 1.

Observe that is bounded from below. Indeed, is a minimum and is convex with where . Therefore, there exists a constant such that for all . Define . Clearly, , and, therefore, Z resembles to . Moreover, Z is locally bounded by and clearly satisfies the Hessian norm bound.

From here, the proof follows the steps of the proof of Theorem A2. By the Taylor inequality and the Hessian norm bound:

where . Apply expectation conditioned to information until time t and then use that Z is locally bounded by ;

Use now that Z resembles $\nabla\phi$. Then, there exists T such that for every , the term is negative. All other conditions of the Robbins–Siegmund theorem (in [7], included in Appendix A) also hold for the algorithm after time T, thanks to the learning rate constraints. Apply it and deduce that random variables converge almost surely to a random variable (and so does ) and that;

Prove now that stochastic process converges almost surely to value . Proceed by contradiction. Assume that for

this implies, by continuity and convexity of function , that there exists

By resemblance and definition of the limit, there exists T and such that for every . This leads to a contradiction, since using learning rate standard constraint we have

for every , which has measure different to 0 by Equation (28). This clearly contradicts Equation (26).

Hence, converges almost surely to and converges almost surely to as wanted. □
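The first part of the proof can be summarized by the following sketch of the inequality chain. It is a reconstruction under stated assumptions, not the authors' exact display: the update is taken as $Z_{t+1} = Z_t - \gamma_t X_t$, the Hessian norm of ϕ is bounded by a constant $K$, ϕ has been shifted to be non-negative, and the local bound is taken as $E[\lVert X_t\rVert^2 \mid \mathcal{F}_t] \le A + B\,\phi(Z_t)$. The constants $K$, $A$ and $B$ are symbols introduced here for illustration.

```latex
\begin{align*}
\phi(Z_{t+1})
  &\le \phi(Z_t) - \gamma_t\, \nabla\phi(Z_t)^{\top} X_t
     + \tfrac{K}{2}\, \gamma_t^{2}\, \lVert X_t \rVert^{2}, \\
E\bigl[\phi(Z_{t+1}) \mid \mathcal{F}_t\bigr]
  &\le \phi(Z_t)\Bigl(1 + \tfrac{K B}{2}\, \gamma_t^{2}\Bigr)
     + \tfrac{K A}{2}\, \gamma_t^{2}
     - \gamma_t\, \nabla\phi(Z_t)^{\top} E\bigl[X_t \mid \mathcal{F}_t\bigr].
\end{align*}
```

The second line has the shape required by the Robbins–Siegmund theorem: the factors multiplying $\gamma_t^2$ are summable by the standard constraint, and resemblance makes the last term non-positive from some time T on.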

4.2. Reinterpretation of Bottou’s Convergence Theorem

The goal now is to deduce Theorem A1 as a direct consequence of main Theorem 1. Consider a particular case of main Theorem 1 where , that reads as follows.

Corollary 3.

Let and Z be a stochastic process on probability space . Then Z almost surely converges to if

- Z is locally bounded by ϕ;

- Z resembles .

Additional conditions to , such as Hessian bound or twice differentiability, are not specified in the corollary since with the particular definition of all those conditions are already satisfied.

To see that Corollary 3 proves Theorem A1 statement, we need to prove that Theorem A1 is assuming that Z is locally bounded by and that Z resembles . Example 3 already proves that Bottou is assuming that Z is locally bounded by . Therefore, it remains to check that Z resembles to . To that end, see below proposition proved in Appendix C.

Proposition 5.

Let be a stochastic process and be a vector field over . Then Z resembles to if, and only if,

Observe condition Bottou resemblance of Theorem A1 and Proposition 5. Deduce from it, that the algorithm Z of the theorem resembles to vector field .

Corollary 4.

Let be a stochastic process and . Then Z resembles to with if, and only if, Bottou resemblance holds.

4.3. Reinterpretation of Sunehag’s Convergence Theorem

Theorem A3 is deduced from main Theorem 1. Similarly to previous section, we provide a version of our main theorem for the case where is a function that we aim to minimize.

Corollary 5.

Let be a twice differentiable cost function with a unique minimum and bounded Hessian norm, and let Z be a stochastic process on probability space . Then Z converges to the minimum of l almost surely if

- Z is locally bounded by l;

- Z resembles .

The stochastic process described in Theorem A3 has some more properties, such as . However, if we prove that Z of that theorem is locally bounded by l and that Z resembles $\nabla l$, then it is clear that Corollary 5 implies Theorem A3. Recall Example 4 and notice that we already proved that Z is locally bounded by l. The remaining property follows from the proposition below, which we prove in Appendix D.

Proposition 6.

Let be a stochastic process and be a vector field over . Then Z resembles to if, and only if, there exists T, such that for every there are random vectors to and symmetric and positive-definite -measurable random matrices such that

It only remains to combine Proposition 6 with conditions C.1 and Sunehag resemblance to reach our objective with the following corollary.

Corollary 6.

Let l be a differentiable function and be a stochastic process. Then Z resembles to if, and only if, there exists T such that for every there are random vectors to and symmetric and positive-definite -measurable random matrices such that and conditions C.1 and Sunehag resemblance hold.

Corollaries 4 and 6 nicely show the value of Theorem 1 for proving convergence. To reinforce this, we notice that the convergence of algorithm DSNGD in [12] is easily proved by means of Corollary 5, by combining both Theorem A3 and Corollary 6. This shows that Theorem 1 allows us to prove the convergence of a wider set of stochastic processes and function optimization methods.

4.4. Convergence of Process in Example 5

Our theorem answers the question posed by Example 5. To see it, just define

Twice differentiable and convex function has bounded Hessian norm, since its Hessian is the constant matrix . Moreover, Z is clearly locally bounded by . Indeed, recall Equation (7) and observe;

where such that is the greatest eigenvalue of and is the least eigenvalue of .

Finally, check that Z resembles $\nabla\phi$. Observe that is a singleton for every T. Then for all and all it is

where . Hence Z resembles to and by virtue of our main Theorem 1 process Z converges a.s. to 0, and, therefore, minimizes function f as wanted.
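A numerical instance in the spirit of Example 5 is sketched below. All concrete choices are assumptions made for illustration and are not taken from the text: the matrices `A` and `B`, the objective $f(\eta) = \eta^{\top} A \eta$, the director $X_t = A B Z_t$, the candidate function $\phi(\eta) = \eta^{\top} B \eta$, the update $Z_{t+1} = Z_t - \gamma_t X_t$ and the schedule $\gamma_t = 5/(t+60)$. The point of the sketch is that the inner products with $Z_t$ and with $\nabla f(Z_t)$ can be negative while the inner product with $\nabla\phi(Z_t)$ stays positive.

```python
import math

# Hypothetical symmetric positive-definite matrices.
A = [[1.0, 0.0], [0.0, 10.0]]
B = [[1.0, 0.9], [0.9, 1.0]]


def matvec(m, v):
    return [m[0][0] * v[0] + m[0][1] * v[1], m[1][0] * v[0] + m[1][1] * v[1]]


def dot(u, v):
    return u[0] * v[0] + u[1] * v[1]


def director(z):
    """Assumed deterministic director X_t = A B Z_t."""
    return matvec(A, matvec(B, z))


def grad_f(z):
    """Gradient of the assumed objective f(z) = z^T A z."""
    return [2.0 * c for c in matvec(A, z)]


def grad_phi(z):
    """Gradient of the assumed candidate phi(z) = z^T B z."""
    return [2.0 * c for c in matvec(B, z)]


z = [1.0, -0.2]
distance_term = dot(z, director(z))         # (z - 0)^T X, negative at this point
objective_term = dot(grad_f(z), director(z))  # grad f(z)^T X, negative at this point
phi_term = dot(grad_phi(z), director(z))    # grad phi(z)^T X, positive

for t in range(20000):
    gamma_t = 5.0 / (t + 60)  # satisfies the standard constraint
    x_t = director(z)
    z = [z[0] - gamma_t * x_t[0], z[1] - gamma_t * x_t[1]]

final_norm = math.hypot(z[0], z[1])
```

In this instance $\nabla\phi(\eta)^{\top} X = 2 (B\eta)^{\top} A (B\eta)$ is positive for every $\eta \neq 0$, and the simulated path approaches the origin, which is the minimizer of the assumed f.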

5. Conclusions

We have presented a result that allows us to prove the convergence of stochastic processes. We have proven that two useful convergence results in the literature are a consequence of our theorem. This is achieved through a new theory that compares the expected directions of the algorithm to conservative vector fields. If the expected directions at a point η sufficiently resemble the vector $\nabla\phi(\eta)$, with $\nabla\phi$ a conservative vector field, then the process is stable at that point. If this happens for every η, and in addition the process is locally bounded by ϕ, then the process is globally stable and converges.

Some promising paths remain unexplored after this work. For example, finding the function ϕ is the key to proving convergence, and it is required to be a convex, twice differentiable function. It is interesting to study how ϕ can be obtained, for instance as a sum of other convex twice differentiable functions $\phi_i$.

Another promising research line is a deeper analysis of and objects, which may guarantee the existence of a function without the need of finding it. If sufficient conditions are established for a stochastic process to ensure resemblance to some unknown conservative vector field, then searching can be dodged. Even proving the non-existence of such function after a wider study of and is useful, forbidding the use of our theorem.

It is also interesting to study the converse implication, specifically, to investigate the conditions that lead to divergent instances under the theory developed in this article. In this sense, the Lyapunov characterization of convergent processes becomes a helpful and key theory, since great similarities arise between these two techniques.

Furthermore, in many occasions the function to optimize can be established beforehand (convex and twice differentiable). Therefore, the opposite process can be considered, that is, generating a set of stochastic processes that resemble to , assuring in consequence the convergence of such candidates.

Another relevant convergence result can be found in [17]. It ensures convergence in probability of a stochastic process, rather than the almost sure convergence studied in this article. It would be worth examining what this result has in common with our theorem, and whether the conditions our theorem imposes can be relaxed while still ensuring convergence in probability of a process.
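For reference, the two modes of convergence contrasted here can be written as follows. This is only a reminder of the standard definitions; the symbols $Z_t$ for the process and $z^*$ for its limit point are notation assumed for this illustration.

```latex
\[
Z_t \xrightarrow{\text{a.s.}} z^*
\iff
P\Big( \lim_{t\to\infty} Z_t = z^* \Big) = 1,
\qquad
Z_t \xrightarrow{P} z^*
\iff
\forall \varepsilon > 0:\ \lim_{t\to\infty} P\big( \lVert Z_t - z^* \rVert > \varepsilon \big) = 0 .
\]
```

Almost sure convergence implies convergence in probability, but the converse does not hold in general, so it is plausible that the second mode can be guaranteed under weaker conditions.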

We are currently working on two weaker resemblance properties, which we call weak resemblance and essential resemblance. The aim is to deduce the almost sure convergence of a process by studying only its essential expected direction set (EEDS).

Author Contributions

Conceptualization, B.S.-L. and J.C.; writing—original draft preparation, B.S.-L. and J.C.; review and editing, B.S.-L. and J.C.; supervision, J.C.; funding acquisition, J.C. All authors contributed equally to this work. All authors have read and agreed to the published version of the manuscript.

Funding

This work is partially supported by the projects Crowd4SDG and Humane-AI-net, which have received funding from the European Union’s Horizon 2020 research and innovation programme under grant agreements No. 872944 and No. 952026, respectively. This work is also partially supported by the project CI-SUSTAIN funded by the Spanish Ministry of Science and Innovation (PID2019-104156GB-I00).

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Not applicable.

Conflicts of Interest

The authors declare no conflict of interest. The funders had no role in the design of the study, in the writing of the manuscript, or in the decision to publish the results.

Appendix A. Convergence Theorems

Below we state Bottou’s convergence theorem from [4] and the convergence theorem of Sunehag et al. from [5]. We also provide a generalization of these theorems, whose proof poses no additional difficulty beyond the original proofs. Moreover, we adapt the notation to our text and replace algorithm-specific concepts with the corresponding terms from the more general theory of stochastic processes. We name each condition of these results so that we can refer to it in the article.

Theorem A1

(Bottou’s in [4]). Let be a function with a unique minimum and be a stochastic process. Then Z converges to almost surely if the following conditions hold;

Theorem A2

(Theorem 3.2 in [5]). Let be a twice differentiable cost function with a unique minimum and let be a stochastic process where is symmetric and only depends on information available at time t and. Then Z converges to the almost surely if the following conditions hold;

where are the eigenvalues of matrix B.
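To make the setting of Theorem A2 more concrete, the following Python sketch simulates a variable-metric stochastic update of the form Z_{t+1} = Z_t - gamma_t B_t Y_t, where B_t is a symmetric matrix with bounded eigenvalues and Y_t is a noisy gradient. The quadratic objective, the Gaussian noise, the step sizes gamma_t = 1/t, the eigenvalue range and the function name `variable_metric_sgd` are all assumptions chosen for illustration; they are not taken from [5] or from this article.

```python
import numpy as np


def variable_metric_sgd(steps=5000, dim=3, seed=0):
    """Simulate Z_{t+1} = Z_t - gamma_t * B_t * Y_t on f(z) = 0.5 * ||z||^2."""
    rng = np.random.default_rng(seed)
    z = 5.0 * rng.normal(size=dim)  # arbitrary starting point
    for t in range(1, steps + 1):
        gamma = 1.0 / t  # sum of gamma_t diverges, sum of gamma_t^2 is finite
        # B_t: random symmetric positive-definite matrix, eigenvalues in [0.5, 2.0].
        # It is drawn before the gradient noise, so it uses only past information.
        q, _ = np.linalg.qr(rng.normal(size=(dim, dim)))
        b = q @ np.diag(rng.uniform(0.5, 2.0, size=dim)) @ q.T
        # Y_t: unbiased noisy gradient; the exact gradient of f at z is z itself.
        y = z + rng.normal(size=dim)
        z = z - gamma * (b @ y)
    return z


if __name__ == "__main__":
    z_final = variable_metric_sgd()
    print("distance to the minimum:", np.linalg.norm(z_final))
```

In this toy setting the iterates approach the unique minimum at the origin, which is the behaviour that results of this kind are meant to guarantee. A single simulated run is of course only an illustration, not evidence of almost sure convergence.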

We now provide a generalization of the theorem of Sunehag et al. in [5]. Specifically, we remove condition C.5 and modify (and relax) conditions C.3 and C.4 of the original statement. The proof follows directly from that of the original theorem, so the modifications present no complications.

Theorem A3

(Generalization of Theorem A2). Let be a twice differentiable cost function with a unique minimum and let a stochastic process where is -measurable. Then Z converges to the almost surely if the following conditions hold;

The Robbins–Siegmund theorem is the key result for proving almost sure convergence in the previous theorems, as well as in our generalization.

Theorem A4

(Robbins-Siegmund). Let be a probability space and a sequence of sub-σ-fields of . Let and , be non-negative -measurable random variables, such that

Then on the set , converges almost surely to a random variable, and almost surely.
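For reference, the inequality and the conclusion of the Robbins–Siegmund theorem are commonly written as below. The symbols $U_t$, $\beta_t$, $\xi_t$, $\zeta_t$ and $\mathcal{F}_t$ are notation assumed for this sketch and may differ from the notation used in the article.

```latex
\[
\mathbb{E}\left[ U_{t+1} \mid \mathcal{F}_t \right]
\le (1 + \beta_t)\, U_t + \xi_t - \zeta_t ,
\qquad t = 1, 2, \dots
\]
\[
\text{On } \Big\{ \sum_{t} \beta_t < \infty,\ \sum_{t} \xi_t < \infty \Big\}:
\qquad
U_t \to U_\infty < \infty \ \text{a.s.}
\quad\text{and}\quad
\sum_{t} \zeta_t < \infty \ \text{a.s.}
\]
```

In convergence proofs for stochastic optimization, $U_t$ typically plays the role of a Lyapunov-type quantity evaluated along the process, and the summability of $\zeta_t$ is what forces the process toward the minimum.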

Appendix B. Proof of Corollary 1

To prove the corollary, it is enough to prove the generic proposition below.

Proposition A1.

Let be non empty, closed and connected sets where for and let . Then V is a non empty bounded set if, and only if, is bounded for some .

Proof.

Prove first that if is bounded for some , then is a non-empty bounded set. Clearly, and, therefore, V is bounded, possibly empty. Observe that for all is compact and closed. Then V is not empty, by the Cantor’s intersection theorem.

Conversely, prove now that if V is a non empty bounded set, then there exists T such that is bounded. Assume V is non-empty bounded set, then there exists , such that where is the ball centered at 0 with radius r. Define

where is the closed ball of radius and center 0 and . The sequence is of compact and closed subsets, where and is empty. Therefore, by Cantor’s intersection theorem, there exists T such that is empty. Then . Since and is connected, then and hence it is bounded as wanted to prove. □
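The role of each hypothesis in Proposition A1 can be seen from three simple examples on the real line. They assume, as the proof indicates, that the sets are nested and that V is their intersection; the names $V_t$ and the specific sets are chosen here for illustration only.

```latex
\begin{align*}
&\text{(a) } V_t = [t, \infty): &&\text{closed, connected, no } V_t \text{ bounded}, &&\bigcap_{t \ge 1} V_t = \emptyset, \\
&\text{(b) } V_t = \{0\} \cup [t, \infty): &&\text{closed, not connected, no } V_t \text{ bounded}, &&\bigcap_{t \ge 1} V_t = \{0\}, \\
&\text{(c) } V_t = (0, 1/t]: &&\text{connected, not closed, every } V_t \text{ bounded}, &&\bigcap_{t \ge 1} V_t = \emptyset .
\end{align*}
```

Example (a) is consistent with the proposition: no set is bounded and the intersection is empty. Example (b) shows that connectedness cannot be dropped, since the intersection is non-empty and bounded although no set is bounded. Example (c) shows that closedness cannot be dropped, since every set is bounded although the intersection is empty.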

Appendix C. Bottou’s Resemblance

Proposition 5 is a direct consequence of Proposition 3 and of Proposition A2, which we state and prove below.

Proposition A2.

Let be a stochastic process and be a vector field over . For and , define the vector pair set

Then Z resembles to if, and only if,

Proof.

By definition, is -acute if, and only if, every vector pair in is -acute. By definition, such vector pairs with are -acute if, and only if,

Previous equation holds if, and only if, , as wanted to prove. □

Appendix D. Sunehag’s Resemblance

Proposition 6, which we prove below, restates Theorem A3 in terms of resemblance.

Proof.

After Proposition A2 and 4 deduce that Z belongs to the half-space of if, and only if, there exists such that for every and every there exist symmetric positive-definite -measurable random matrices , such that

This matches with Equation (33). Matrix is correctly and uniquely defined for all and all , such that . Define the identity matrix if and also define

Observe that and that Equation (32) is then met too finishing the proof. □

References

1. Amari, S.I. Natural Gradient Works Efficiently in Learning. Neural Comput. 1998, 10, 251–276.

2. Thomas, P.S. GeNGA: A generalization of natural gradient ascent with positive and negative convergence results. In Proceedings of the 31st International Conference on Machine Learning, ICML 2014, Beijing, China, 21–26 June 2014; Volume 5, pp. 3533–3541.

3. Sánchez-López, B.; Cerquides, J. Convergent Stochastic Almost Natural Gradient Descent. In Proceedings of the Artificial Intelligence Research and Development-Proceedings of the 22nd International Conference of the Catalan Association for Artificial Intelligence, Mallorca, Spain, 23–25 October 2019; Volume 319, pp. 54–63.

4. Bottou, L. Online Algorithms and Stochastic Approximations. In Online Learning and Neural Networks; Saad, D., Ed.; Cambridge University Press: Cambridge, UK, 1998; Revised, October 2012.

5. Sunehag, P.; Trumpf, J.; Vishwanathan, S.V.N.; Schraudolph, N. Variable Metric Stochastic Approximation Theory. In Proceedings of the Artificial Intelligence and Statistics, Clearwater, FL, USA, 16–19 April 2009; pp. 560–566.

6. Lyapunov, A.M. The general problem of the stability of motion. Int. J. Control 1992, 55, 531–534.

7. Robbins, H.; Siegmund, D. A convergence theorem for non negative almost supermartingales and some applications. In Optimizing Methods in Statistics; Rustagi, J.S., Ed.; Academic Press: Cambridge, MA, USA, 1971; pp. 233–257.

8. Karlin, S.; Taylor, H.M. Elements of stochastic processes. In A First Course in Stochastic Processes, 2nd ed.; Karlin, S., Taylor, H.M., Eds.; Academic Press: Boston, MA, USA, 1975; Chapter 1; pp. 1–44.

9. Ross, S.M.; Kelly, J.J.; Sullivan, R.J.; Perry, W.J.; Mercer, D.; Davis, R.M.; Washburn, T.D.; Sager, E.V.; Boyce, J.B.; Bristow, V.L. Stochastic Processes; Wiley: New York, NY, USA, 1996; Volume 2.

10. Bass, R.F. Stochastic Processes; Cambridge University Press: Cambridge, UK, 2011; Volume 33.

11. Grimmett, G.; Stirzaker, D. Probability and Random Processes; OUP Oxford: Oxford, UK, 2020.

12. Sánchez-López, B.; Cerquides, J. Dual Stochastic Natural Gradient Descent and convergence of interior half-space gradient approximations. arXiv 2021, arXiv:2001.06744.

13. Tao, T. An Introduction to Measure Theory; Graduate Studies in Mathematics, American Mathematical Society: Providence, RI, USA, 2011.

14. Duchi, J.; Hazan, E.; Singer, Y. Adaptive Subgradient Methods for Online Learning and Stochastic Optimization. J. Mach. Learn. Res. 2011, 12, 2121–2159.

15. Zeiler, M.D. ADADELTA: An Adaptive Learning Rate Method. arXiv 2012, arXiv:1212.5701.

16. Kingma, D.P.; Ba, L.J. Adam: A Method for Stochastic Optimization. In Proceedings of the 3rd International Conference on Learning Representations (ICLR), San Diego, CA, USA, 7–9 May 2015.

17. Robbins, H.; Monro, S. A Stochastic Approximation Method. Ann. Math. Stat. 1951, 22, 400–407.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).