An Improved Machine Learning-Based Employees Attrition Prediction Framework with Emphasis on Feature Selection

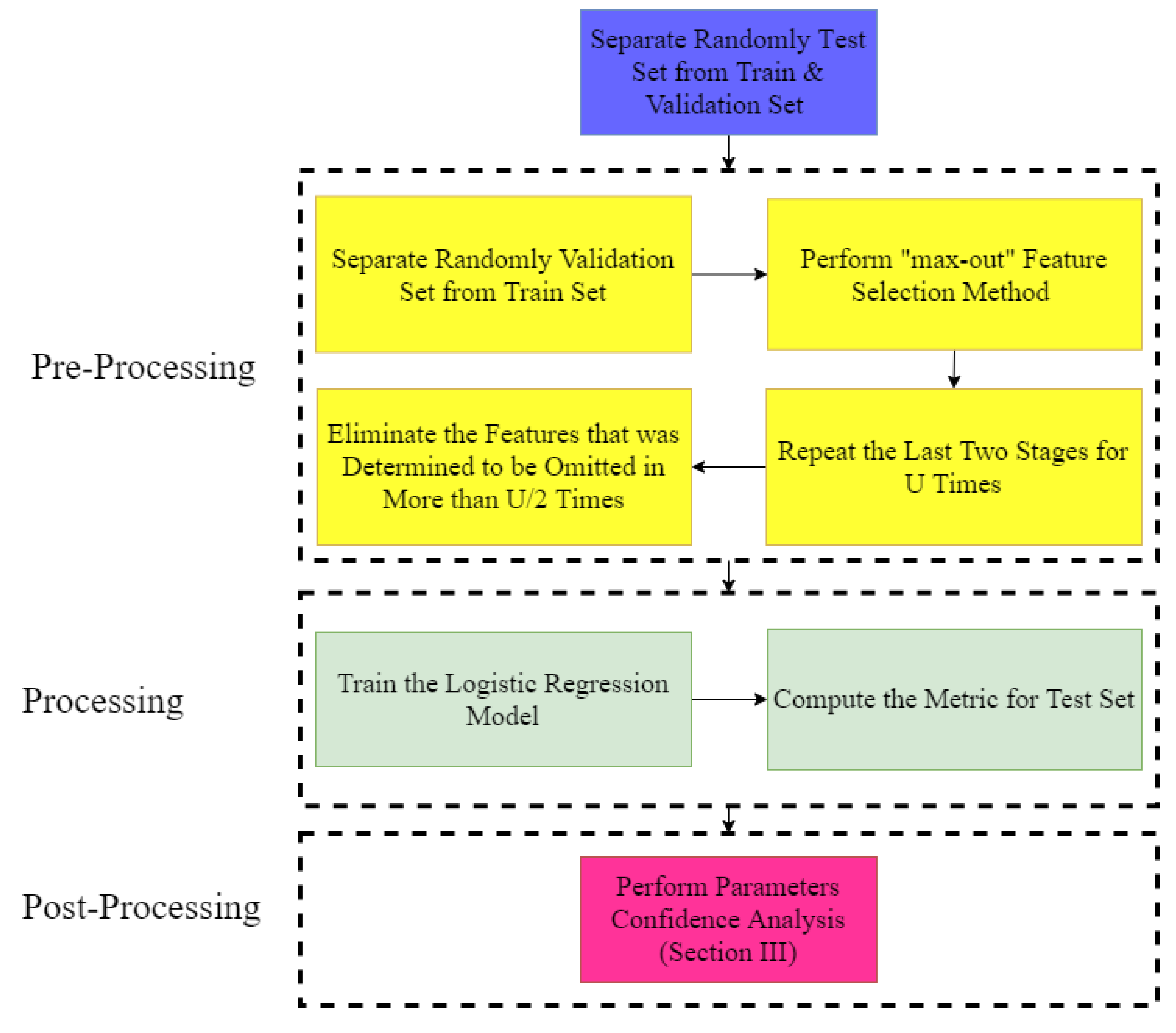

Abstract

1. Introduction

- Proper feature selection method

- Informative evaluation of the classifier’s performance

- Confidence levels for the value of the coefficient of each feature in the logistic regression model

2. Feature Selection

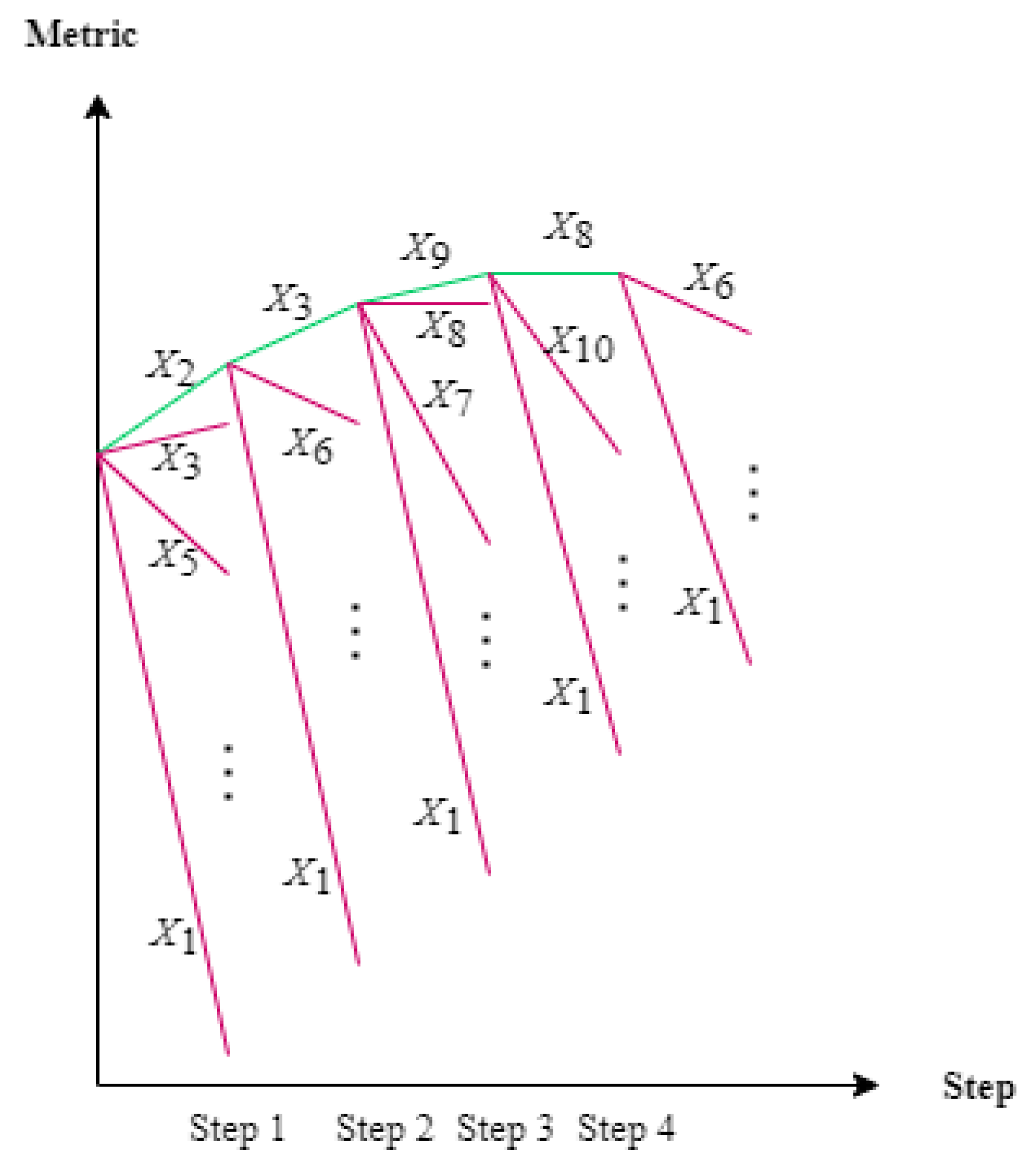

2.1. Max-Out Feature Selection Algorithm

Algorithm 1: m-max-out

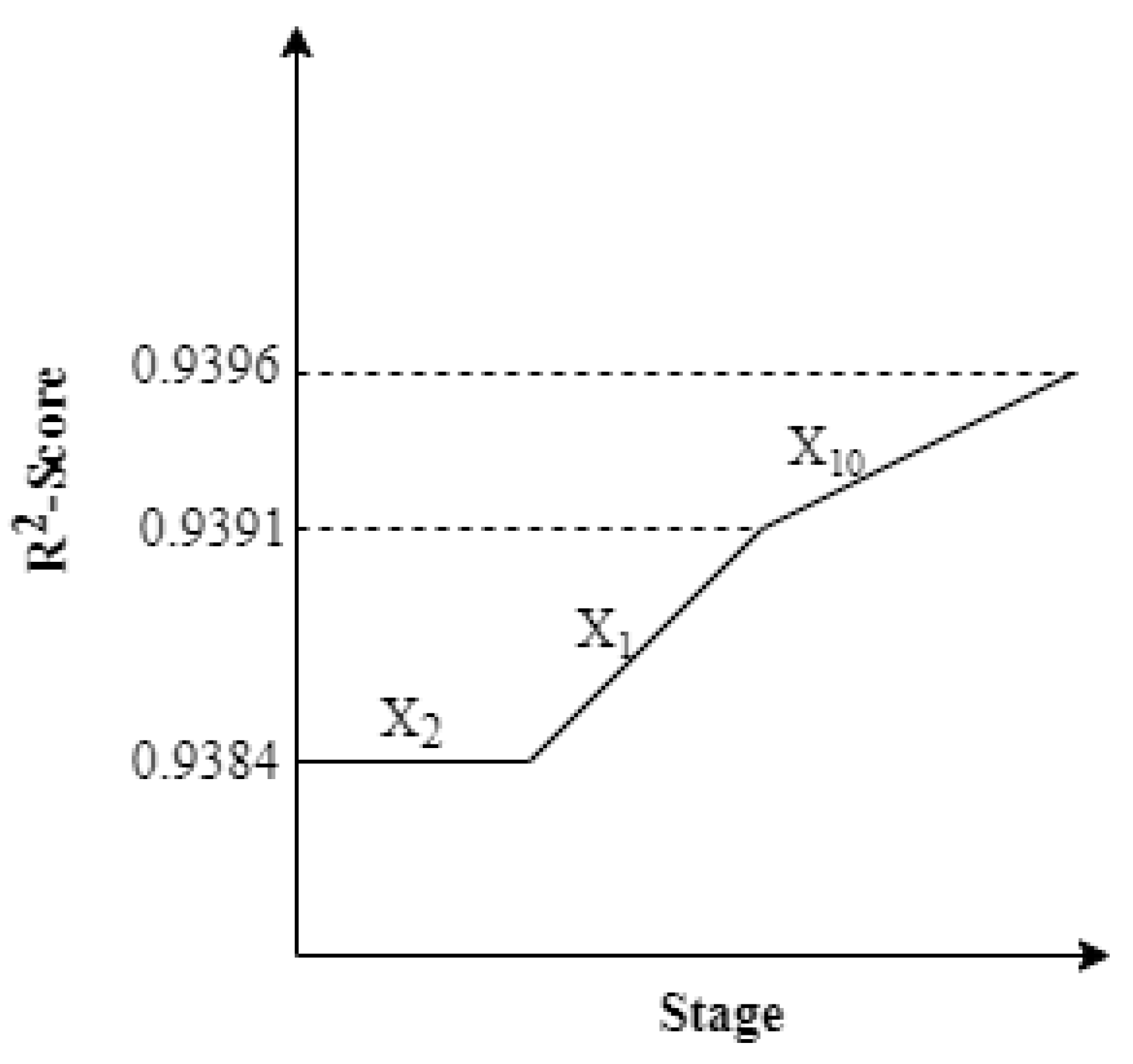

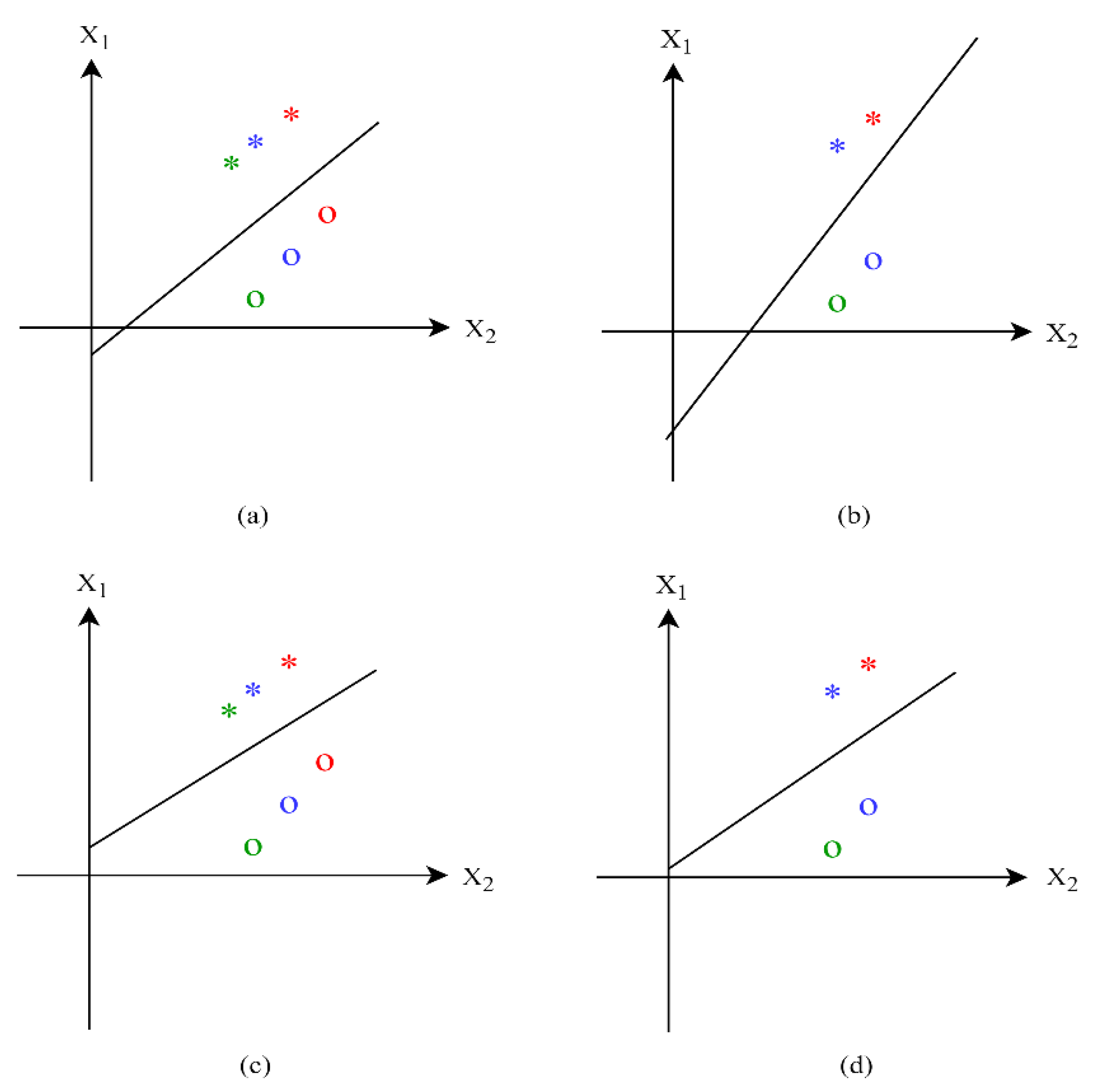
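The steps of Algorithm 1 survive only as images. As a rough illustration, the sketch below implements a greedy backward elimination in the spirit of max-out. At each step it tries removing every group of `m` remaining features and keeps the removal with the highest score. It stops when no removal improves the score. The stopping rule, the `score` callback, the function name `max_out`, and the toy scoring function are assumptions made for illustration. They are not the authors' exact procedure.

```python
from itertools import combinations
from typing import Callable, FrozenSet, Iterable, Tuple


def max_out(
    features: Iterable[str],
    score: Callable[[FrozenSet[str]], float],
    m: int = 1,
) -> Tuple[FrozenSet[str], float]:
    """Greedy backward elimination that drops m features per step (hypothetical sketch).

    `score` maps a feature subset to a performance value where higher is better
    (for example, a cross-validated F1 score); it is supplied by the caller.
    """
    current = frozenset(features)
    best_score = score(current)
    # Only try removals while more than m features remain, so at least one is kept.
    while len(current) > m:
        step_score, step_subset = float("-inf"), current
        # Try every way of dropping m of the remaining features.
        for drop in combinations(sorted(current), m):
            subset = current - frozenset(drop)
            s = score(subset)
            if s > step_score:
                step_score, step_subset = s, subset
        # Stop when the best removal does not improve on the current subset.
        if step_score <= best_score:
            break
        current, best_score = step_subset, step_score
    return current, best_score


# Hypothetical toy score: reward the useful features "a" and "b",
# and slightly penalize every other feature that is kept.
USEFUL = frozenset({"a", "b"})


def toy_score(subset: FrozenSet[str]) -> float:
    return len(subset & USEFUL) - 0.1 * len(subset - USEFUL)


selected, value = max_out(["a", "b", "c", "d"], toy_score, m=1)
print(sorted(selected), value)  # ['a', 'b'] 2.0
```

With `m=1` this reduces to ordinary greedy backward elimination. Larger `m` evaluates every `m`-feature combination per step, so the number of calls to `score` grows quickly with the feature count.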

2.2. Illustrative 1-Max-out Example

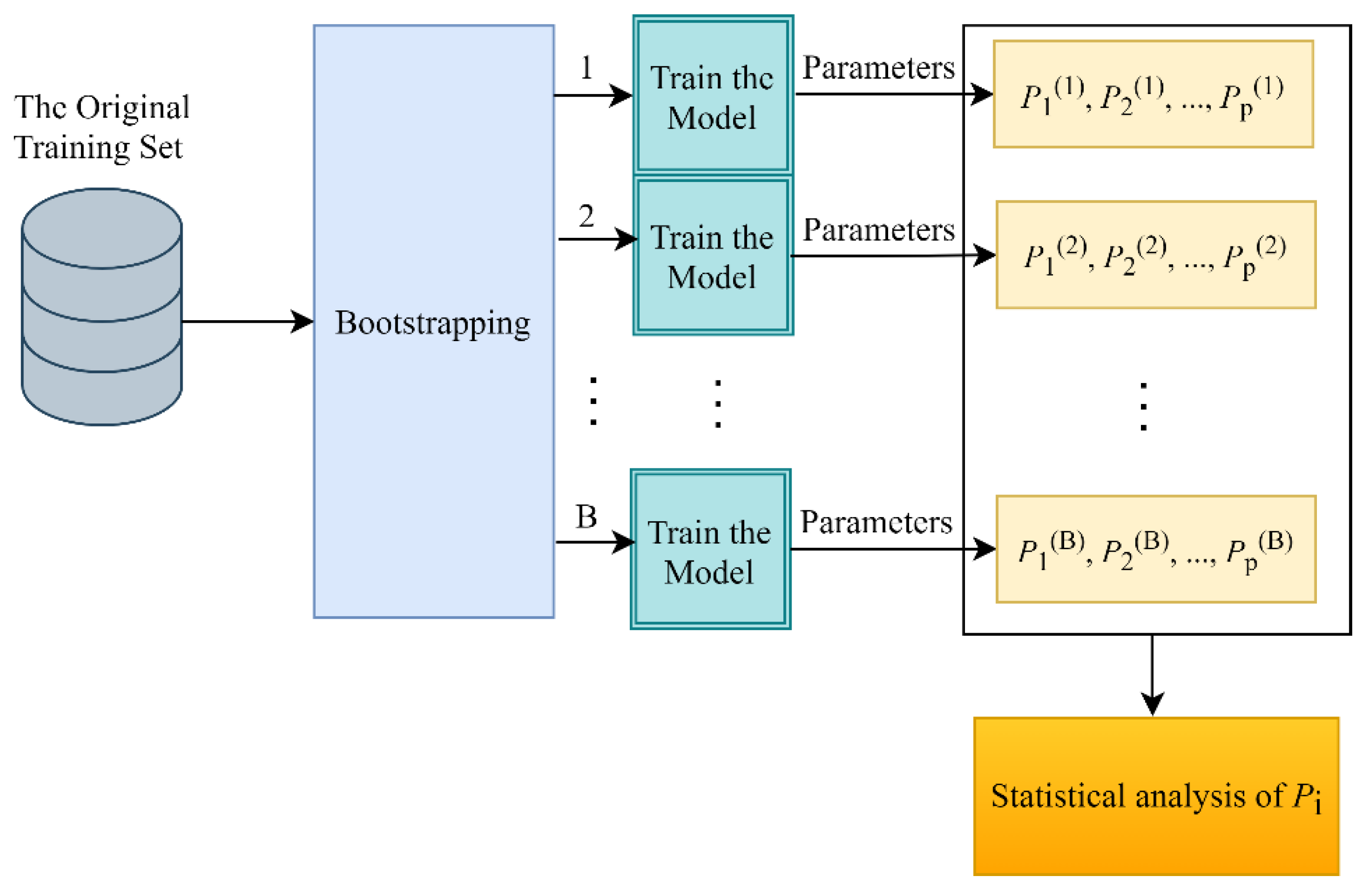

3. Parameter Confidence Analysis

4. Logistic Regression

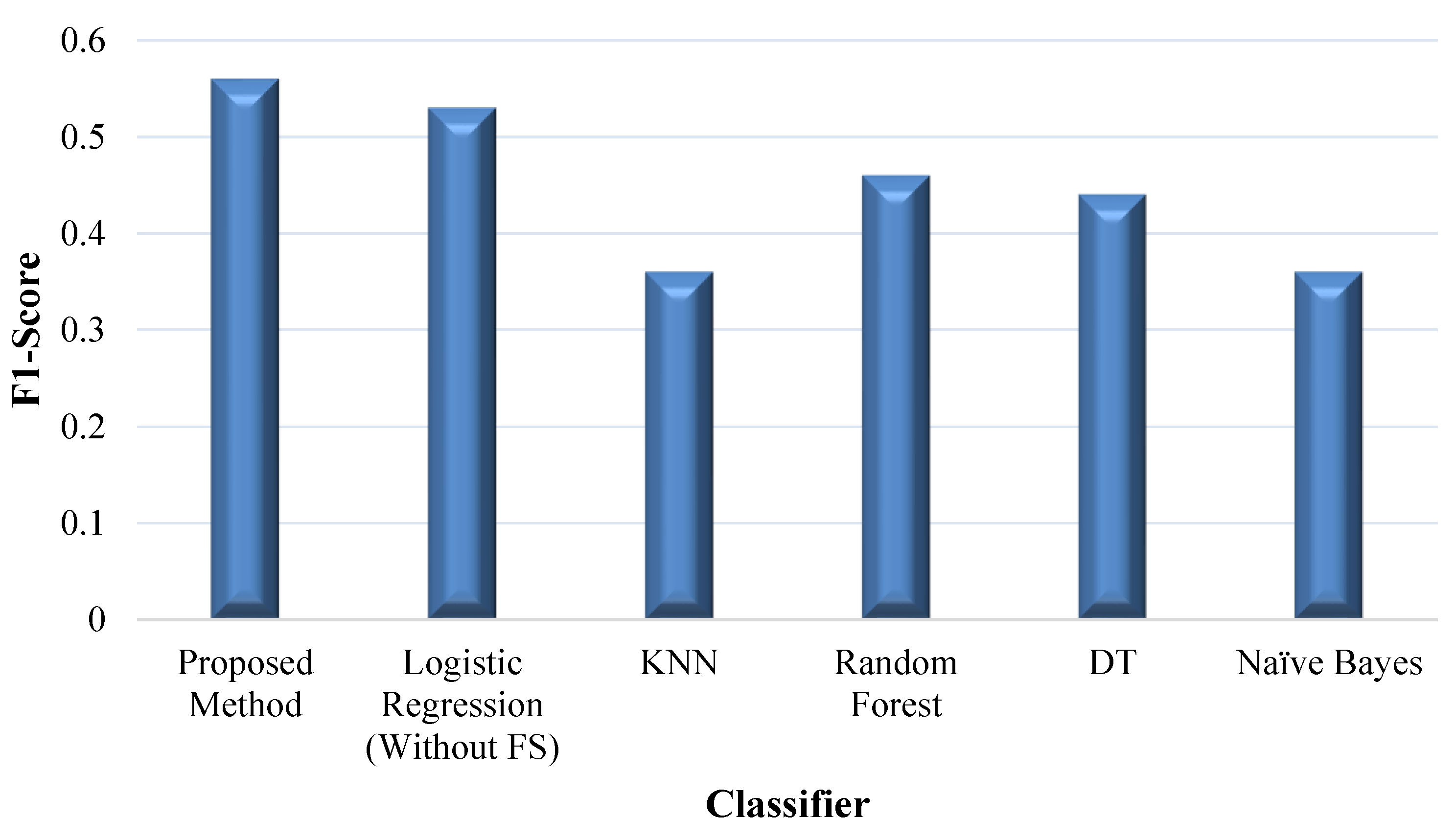

Performance Evaluation
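Only the heading of this subsection survives. For reference, a classifier is commonly evaluated on the attrition class using precision, recall, and F1. Their standard definitions are given in the scikit-learn user guide listed in the references. The sketch below computes these metrics from two label lists. It assumes label 1 means attrition. Which metrics the authors actually report cannot be recovered from this text.

```python
from typing import Sequence, Tuple


def precision_recall_f1(
    y_true: Sequence[int], y_pred: Sequence[int], positive: int = 1
) -> Tuple[float, float, float]:
    """Precision, recall and F1 for the positive class (assumed: 1 = attrition)."""
    if len(y_true) != len(y_pred):
        raise ValueError("y_true and y_pred must have the same length")
    pairs = list(zip(y_true, y_pred))
    tp = sum(t == positive and p == positive for t, p in pairs)
    fp = sum(t != positive and p == positive for t, p in pairs)
    fn = sum(t == positive and p != positive for t, p in pairs)
    # Treat 0/0 as 0.0, a common convention for empty denominators.
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return precision, recall, f1


# A model that never predicts attrition reaches 80% accuracy on these
# imbalanced labels but scores zero on every positive-class metric.
y_true = [0, 0, 0, 0, 0, 0, 0, 0, 1, 1]
always_stay = [0] * len(y_true)
print(precision_recall_f1(y_true, always_stay))  # (0.0, 0.0, 0.0)
```

Attrition is usually the minority class, so these positive-class metrics are more informative than raw accuracy.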

5. Case Study

5.1. Feature Selection

5.2. Final Model

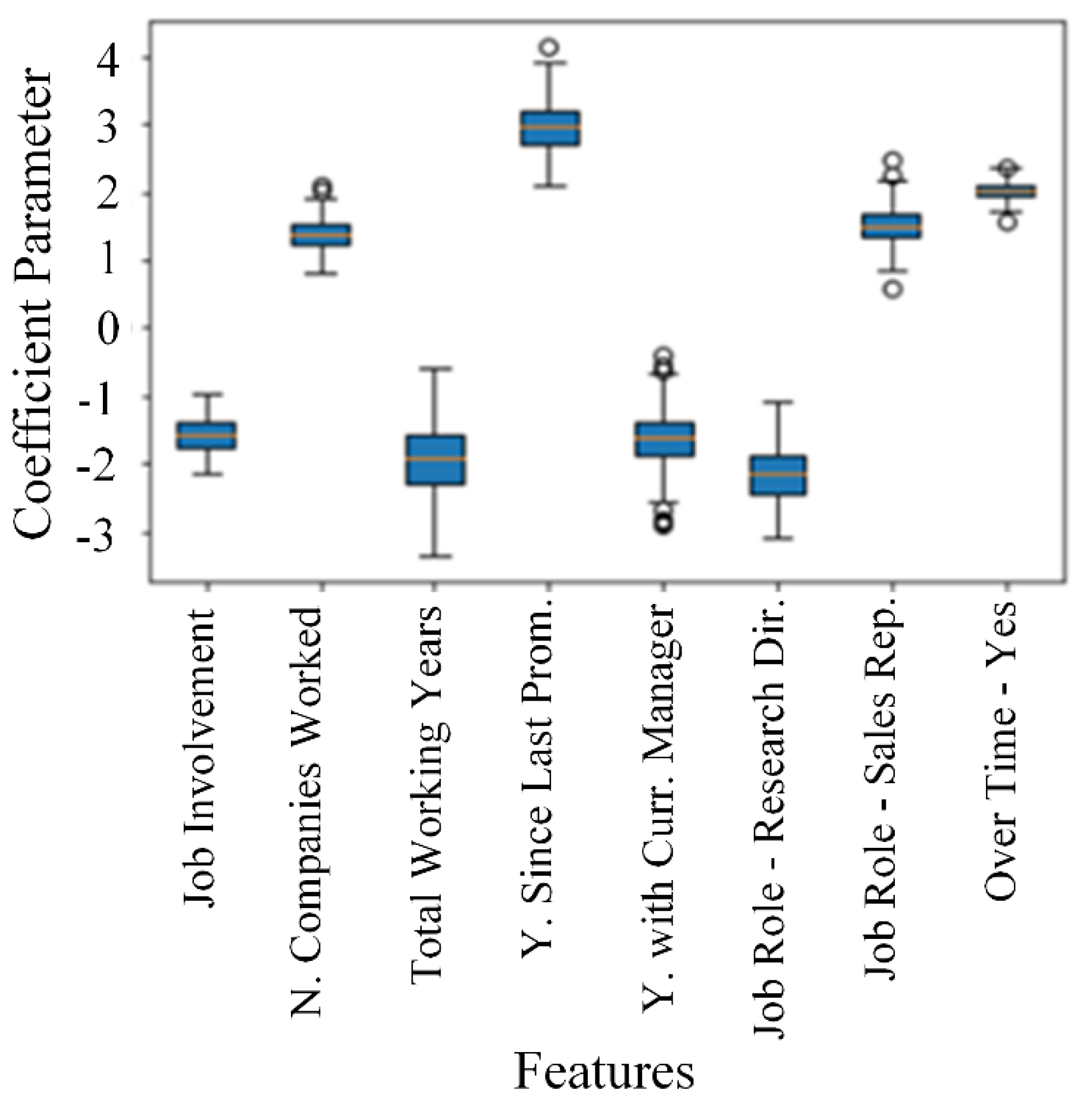

5.3. Parameter Confidence Analysis

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

1. Mohri, M.; Rostamizadeh, A.; Talwalkar, A. Foundations of Machine Learning; MIT Press: Cambridge, MA, USA, 2018.

2. Mohbey, K.K. Employee’s Attrition Prediction Using Machine Learning Approaches. In Machine Learning and Deep Learning in Real-Time Applications; IGI Global: Hershey, PA, USA, 2020; pp. 121–128.

3. Ponnuru, S.; Merugumala, G.; Padigala, S.; Vanga, R.; Kantapalli, B. Employee Attrition Prediction using Logistic Regression. Int. J. Res. Appl. Sci. Eng. Technol. 2020, 8, 2871–2875.

4. Frye, A.; Boomhower, C.; Smith, M.; Vitovsky, L.; Fabricant, S. Employee Attrition: What Makes an Employee Quit? SMU Data Sci. Rev. 2018, 1, 9.

5. Yang, S.; Ravikumar, P.; Shi, T. IBM Employee Attrition Analysis. arXiv 2020, arXiv:2012.01286.

6. Alduayj, S.S.; Rajpoot, K. Predicting employee attrition using machine learning. In Proceedings of the 2018 International Conference on Innovations in Information Technology (IIT), Al Ain, United Arab Emirates, 18–19 November 2018; IEEE: New York, NY, USA, 2018; pp. 93–98.

7. Bhuva, K.; Srivastava, K. Comparative Study of the Machine Learning Techniques for Predicting the Employee Attrition. IJRAR-Int. J. Res. Anal. Rev. 2018, 5, 568–577.

8. Dutta, S.; Bandyopadhyay, S.K. Employee attrition prediction using neural network cross validation method. Available online: https://www.researchgate.net/profile/Shawni-Dutta/publication/341878934_Employee_attrition_prediction_using_neural_network_cross_validation_method/links/5ed7becf299bf1c67d352327/Employee-attrition-prediction-using-neural-network-cross-validation-method.pdf (accessed on 17 April 2021).

9. Qutub, A.; Al-Mehmadi, A.; Al-Hssan, M.; Aljohani, R.; Alghamdi, H.S. Prediction of Employee Attrition Using Machine Learning and Ensemble Methods. Int. J. Mach. Learn. Comput. 2021, 11.

10. Fallucchi, F.; Coladangelo, M.; Giuliano, R.; De Luca, E.W. Predicting Employee Attrition Using Machine Learning Techniques. Computers 2020, 9, 86.

11. Frierson, J.; Si, D. Who’s next: Evaluating attrition with machine learning algorithms and survival analysis. In Proceedings of the International Conference on Big Data, New York, NY, USA, 25–30 December 2018; Springer: Cham, Switzerland, 2018; pp. 251–259.

12. Pavansubhash. IBM HR Analytics Employee Attrition & Performance, Version 1. 2017. Retrieved on 30 April 2020. Available online: https://www.kaggle.com/pavansubhasht/ibm-hr-analytics-attrition-dataset (accessed on 31 March 2017).

13. Zebari, R.; Abdulazeez, A.; Zeebaree, D.; Zebari, D.; Saeed, J. A Comprehensive Review of Dimensionality Reduction Techniques for Feature Selection and Feature Extraction. J. Appl. Sci. Technol. Trends 2020, 1, 56–70.

14. Venkatesh, B.; Anuradha, J. A review of feature selection and its methods. Cybern. Inf. Technol. 2019, 19, 3–26.

15. Jin, X.; Xu, A.; Bie, R.; Guo, P. Machine Learning Techniques and Chi-Square Feature Selection for Cancer Classification Using SAGE Gene Expression Profiles. In Proceedings of the 2006 International Conference on Data Mining for Biomedical Applications, Singapore, 9 April 2006; Springer: Berlin/Heidelberg, Germany, 2006; pp. 106–115.

16. Kwak, N.; Choi, C.-H. Input Feature Selection by Mutual Information Based on Parzen Window. IEEE Trans. Pattern Anal. Mach. Intell. 2002, 24, 1667–1671.

17. Moslehi, F.; Haeri, A. A novel feature selection approach based on clustering algorithm. J. Stat. Comput. Simul. 2021, 91, 581–604.

18. Yan, K.; Zhang, D. Feature selection and analysis on correlated gas sensor data with recursive feature elimination. Sens. Actuators B: Chem. 2015, 212, 353–363.

19. Demertzis, K.; Tsiknas, K.; Takezis, D.; Skianis, C.; Iliadis, L. Darknet Traffic Big-Data Analysis and Network Management for Real-Time Automating of the Malicious Intent Detection Process by a Weight Agnostic Neural Networks Framework. Electronics 2021, 10, 781.

20. Duval, B.; Hao, J.K.; Hernandez Hernandez, J.C. A memetic algorithm for gene selection and molecular classification of cancer. In Proceedings of the 11th Annual Conference on Genetic and Evolutionary Computation, New York, NY, USA, 10–14 July 2009; pp. 201–208.

21. Rostami, M.; Berahmand, K.; Forouzandeh, S. A novel community detection based genetic algorithm for feature selection. J. Big Data 2021, 8, 1–27.

22. Kostrzewa, D.; Brzeski, R. The data dimensionality reduction in the classification process through greedy backward feature elimination. In Proceedings of the International Conference on Man–Machine Interactions, Cracow, Poland, 3–6 October 2017; Springer: Cham, Switzerland, 2017; pp. 397–407.

23. Fish Market. Available online: https://www.kaggle.com/aungpyaeap/fish-market (accessed on 13 June 2019).

24. Friedman, J.; Hastie, T.; Tibshirani, R. The Elements of Statistical Learning; Springer Series in Statistics; Springer: New York, NY, USA, 2001.

25. Scikit-Learn User Manual. Available online: https://scikit-learn.org/stable/modules/model_evaluation.html#precision-recall-f-measure-metrics (accessed on 12 April 2021).

26. Daly, A.; Dekker, T.; Hess, S. Dummy coding vs effects coding for categorical variables: Clarifications and extensions. J. Choice Model. 2016, 21, 36–41.

| Category | Examples | Pros | Cons |
|---|---|---|---|
| Filter | [15,16,17] | | |
| Wrapper | [18,19] | | |
| Embedded | [20] | | |
| Hybrid | [21] | | |

| Education Field | EF_HR | EF_LS | EF_Ma | EF_Me | EF_Oth |
|---|---|---|---|---|---|
| Human Resource | 1 | 0 | 0 | 0 | 0 |
| Life Science | 0 | 1 | 0 | 0 | 0 |
| Marketing | 0 | 0 | 1 | 0 | 0 |
| Medical | 0 | 0 | 0 | 1 | 0 |
| Other | 0 | 0 | 0 | 0 | 1 |
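The table above is a one-hot (dummy) encoding of the categorical Education Field feature. Each category becomes its own 0/1 column, and exactly one column is 1 per employee. The sketch below produces these indicator rows from raw category labels. The function name `one_hot` and the category-to-suffix mapping are illustrative choices; the suffixes are copied from the table's column headers.

```python
from typing import Dict, List, Sequence


def one_hot(values: Sequence[str], codes: Dict[str, str], prefix: str) -> List[Dict[str, int]]:
    """Encode each categorical value as 0/1 indicator columns named prefix_code."""
    columns = {category: f"{prefix}_{code}" for category, code in codes.items()}
    rows = []
    for value in values:
        if value not in columns:
            raise ValueError(f"unknown category: {value!r}")
        # Exactly one indicator is 1: the column of the observed category.
        rows.append({col: int(category == value) for category, col in columns.items()})
    return rows


# Category-to-suffix mapping taken from the table's column headers.
EDUCATION_FIELD_CODES = {
    "Human Resource": "HR",
    "Life Science": "LS",
    "Marketing": "Ma",
    "Medical": "Me",
    "Other": "Oth",
}

for row in one_hot(["Marketing", "Other"], EDUCATION_FIELD_CODES, prefix="EF"):
    print(row)
# {'EF_HR': 0, 'EF_LS': 0, 'EF_Ma': 1, 'EF_Me': 0, 'EF_Oth': 0}
# {'EF_HR': 0, 'EF_LS': 0, 'EF_Ma': 0, 'EF_Me': 0, 'EF_Oth': 1}
```

When the model also has an intercept, a full set of indicators always sums to 1 and is therefore collinear with the constant. This is one reason dummy coding often drops a reference category.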

| Feature | Coef. | Feature | Coef. | Feature | Coef. |
|---|---|---|---|---|---|
| Age | −0.776 | Environment Satisfaction | −1.174 | Education Field_Life Sciences | −0.181 |
| Daily Rate | −0.738 | Business Travel_Travel_Frequently | 0.810 | Education Field_Technical Degree | 0.341 |
| Distance From Home | 1.004 | Percent Salary Hike | −0.642 | Training Times Last Year | −0.835 |
| Job Involvement | −1.536 | Number Companies Worked | 1.375 | Job Role_Laboratory Technician | 1.009 |
| Relationship Satisfaction | −0.701 | Job Satisfaction | −1.116 | Job Role_Sales Executive | 0.762 |
| Stock Option Level | −0.255 | Total Working Years | −1.887 | Marital Status_Divorced | −0.728 |
| Work Life Balance | −0.852 | Years with Current Manager | −1.615 | Job Role_Manager | −0.670 |
| Years in Current Role | −1.287 | Years Since Last Promotion | 2.925 | Job Role_Sales Representative | 1.483 |
| Job Role_Health care Representative | −0.333 | Gender_Male | 0.606 | OverTime_Yes | 1.996 |
| Job Role_Human Resources | 0.463 | Job Role_Research Scientist | −0.096 | Job Role_Research Director | −2.178 |
| Marital Status_Single | 0.755 | Constant | 2.176 | | |
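In a logistic regression model, the predicted attrition probability is the logistic (sigmoid) function of the constant plus the coefficient-weighted sum of the features. Each coefficient in the table above therefore shifts the log-odds of attrition additively, and multiplies the odds by exp(coefficient) per one-unit increase in that feature. The sketch below implements both formulas. It assumes that inputs are encoded and scaled exactly as they were during training, which this section does not state, so the odds ratios are only meaningful on that (unknown) input scale. The names `attrition_probability` and `odds_ratio` are hypothetical.

```python
import math
from typing import Dict


def attrition_probability(coefs: Dict[str, float], intercept: float, x: Dict[str, float]) -> float:
    """P(attrition) = 1 / (1 + exp(-(intercept + sum_j coef_j * x_j)))."""
    z = intercept + sum(coef * x.get(name, 0.0) for name, coef in coefs.items())
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    ez = math.exp(z)  # this branch avoids overflow of exp(-z) for large negative z
    return ez / (1.0 + ez)


def odds_ratio(coef: float) -> float:
    """Factor by which the odds of attrition change per one-unit increase in a feature."""
    return math.exp(coef)


# Three coefficients copied from the table; feature scaling is assumed to match training.
SELECTED = {"OverTime_Yes": 1.996, "Years Since Last Promotion": 2.925, "Job Involvement": -1.536}
for name, coef in SELECTED.items():
    print(f"{name}: odds ratio {odds_ratio(coef):.2f}")
```

Read this way, the positive OverTime_Yes coefficient (1.996) corresponds to roughly 7.4 times higher odds of attrition per unit increase, with all other features held fixed. The negative Job Involvement coefficient lowers the odds.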

| Feature | Ave. | Std. | CV |
|---|---|---|---|
| Age | −0.786 | 0.320 | 0.407 |
| Daily Rate | −0.753 | 0.221 | 0.293 |
| Distance From Home | 1.007 | 0.214 | 0.212 |
| Environment Satisfaction | −1.181 | 0.162 | 0.137 |
| Business Travel_Travel_Frequently | 0.824 | 0.147 | 0.178 |
| Job Involvement | −1.572 | 0.235 | 0.149 |
| Job Satisfaction | −1.131 | 0.166 | 0.146 |
| Number Companies Worked | 1.390 | 0.219 | 0.157 |
| Percent Salary Hike | −0.650 | 0.228 | 0.350 |
| Education Field_Life Sciences | −0.182 | 0.122 | 0.670 |
| Relationship Satisfaction | −0.717 | 0.172 | 0.239 |
| Stock Option Level | −0.262 | 0.264 | 1.007 |
| Total Working Years | −1.962 | 0.503 | 0.256 |
| Training Times Last Year | −0.850 | 0.255 | 0.3 |
| Education Field_Technical Degree | 0.358 | 0.213 | 0.595 |
| Work Life Balance | −0.869 | 0.220 | 0.253 |
| Years in Current Role | −1.308 | 0.403 | 0.308 |
| Years Since Last Promotion | 2.952 | 0.361 | 0.122 |
| Years with Current Manager | −1.630 | 0.409 | 0.250 |
| Gender_Male | 0.358 | 0.213 | 0.595 |
| Job Role_Health care Representative | −0.329 | 0.340 | 1.03 |
| Job Role_Research Director | −2.147 | 0.371 | 0.172 |
| Job Role_Human Resources | 0.450 | 0.351 | 0.78 |
| Job Role_Laboratory Technician | 1.021 | 0.208 | 0.203 |
| Job Role_Manager | −0.622 | 0.280 | 0.450 |
| Marital Status_Divorced | −0.755 | 0.170 | 0.225 |
| Job Role_Research Scientist | −0.101 | 0.192 | 1.9 |
| Job Role_Sales Executive | 0.776 | 0.200 | 0.257 |
| Job Role_Sales Representative | 1.500 | 0.264 | 0.176 |
| OverTime_Yes | 2.029 | 0.124 | 0.061 |
| Marital Status_Single | 0.764 | 0.170 | 0.222 |
| Constant | 2.215 | 0.403 | 0.182 |
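In the table above, CV equals Std. divided by the absolute value of Ave.; for Age, 0.320 / 0.786 ≈ 0.407. A CV of about 1 or more means one standard deviation reaches zero. This suggests that even the sign of the coefficient is uncertain, as for Stock Option Level, Job Role_Health care Representative, and Job Role_Research Scientist. The sketch below computes the three statistics from repeated estimates of one coefficient. It assumes the estimates come from refitting the model several times, for example on resampled training sets. It also assumes the sample standard deviation (n − 1 denominator) is used. Neither detail is stated in this section, and the input values in the example are made up.

```python
import statistics
from typing import Dict, Sequence


def coefficient_stability(estimates: Sequence[float]) -> Dict[str, float]:
    """Average, standard deviation and coefficient of variation of repeated estimates."""
    mean = statistics.fmean(estimates)
    std = statistics.stdev(estimates)  # sample standard deviation (assumption)
    cv = std / abs(mean) if mean != 0 else float("inf")
    return {"ave": mean, "std": std, "cv": cv}


print(coefficient_stability([1.0, 2.0, 3.0]))  # {'ave': 2.0, 'std': 1.0, 'cv': 0.5}

# Check the relation CV = Std / |Ave| against three rows of the table.
for name, ave, std, cv in [
    ("Age", -0.786, 0.320, 0.407),
    ("Daily Rate", -0.753, 0.221, 0.293),
    ("OverTime_Yes", 2.029, 0.124, 0.061),
]:
    print(name, round(std / abs(ave), 3), cv)
```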

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Najafi-Zangeneh, S.; Shams-Gharneh, N.; Arjomandi-Nezhad, A.; Hashemkhani Zolfani, S. An Improved Machine Learning-Based Employees Attrition Prediction Framework with Emphasis on Feature Selection. Mathematics 2021, 9, 1226. https://doi.org/10.3390/math9111226


Chicago/Turabian Style: Najafi-Zangeneh, Saeed, Naser Shams-Gharneh, Ali Arjomandi-Nezhad, and Sarfaraz Hashemkhani Zolfani. 2021. "An Improved Machine Learning-Based Employees Attrition Prediction Framework with Emphasis on Feature Selection" Mathematics 9, no. 11: 1226. https://doi.org/10.3390/math9111226
