An Adaptive Cuckoo Search-Based Optimization Model for Addressing Cyber-Physical Security Problems

Abstract

1. Introduction

- (a) Improving the classical cuckoo search algorithm (CSA) with a convergence improvement strategy (CIS) to produce a new variant, named ICSA, that can accurately tackle nonlinear equation systems (NESs).

- (b)

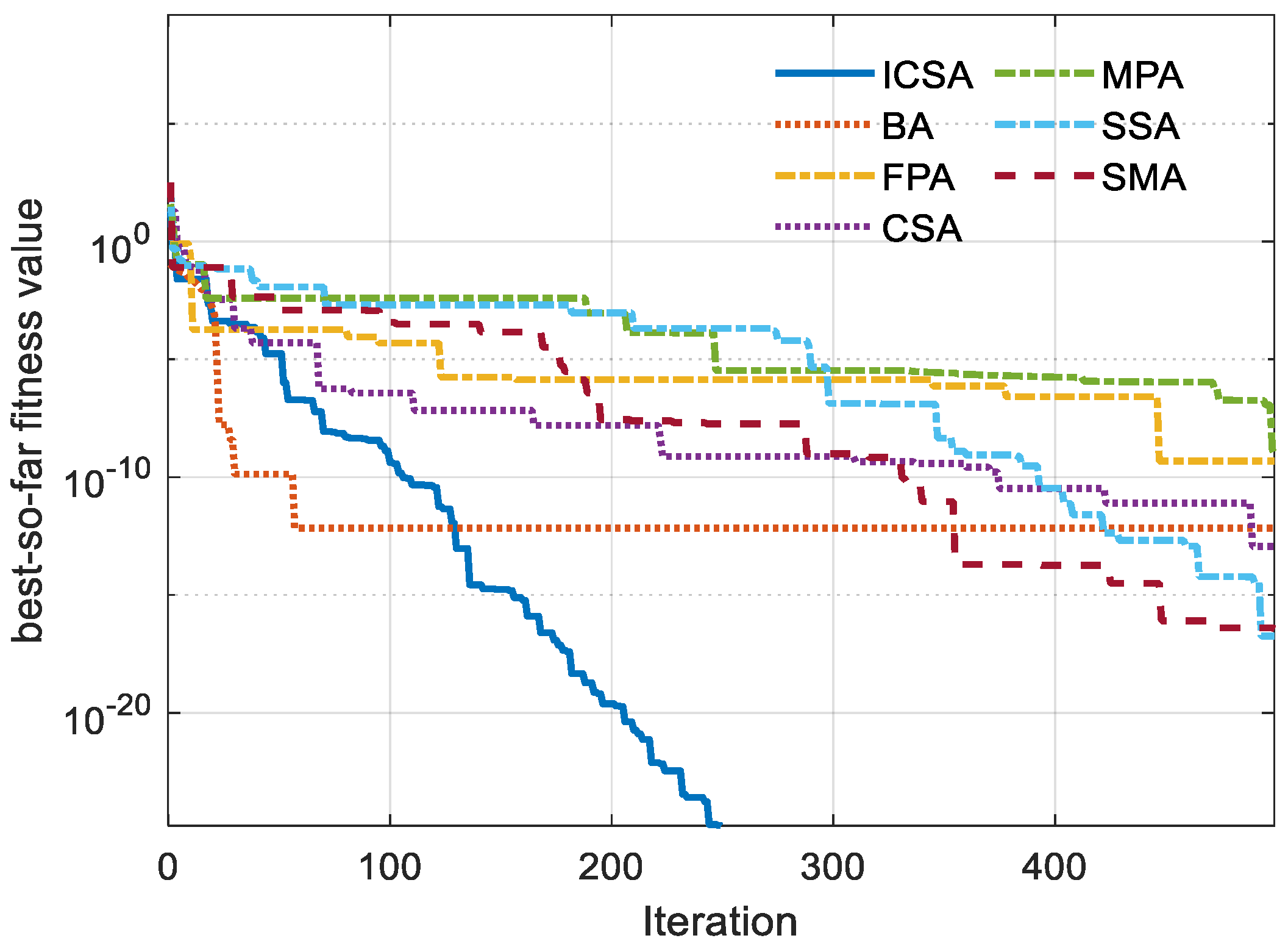

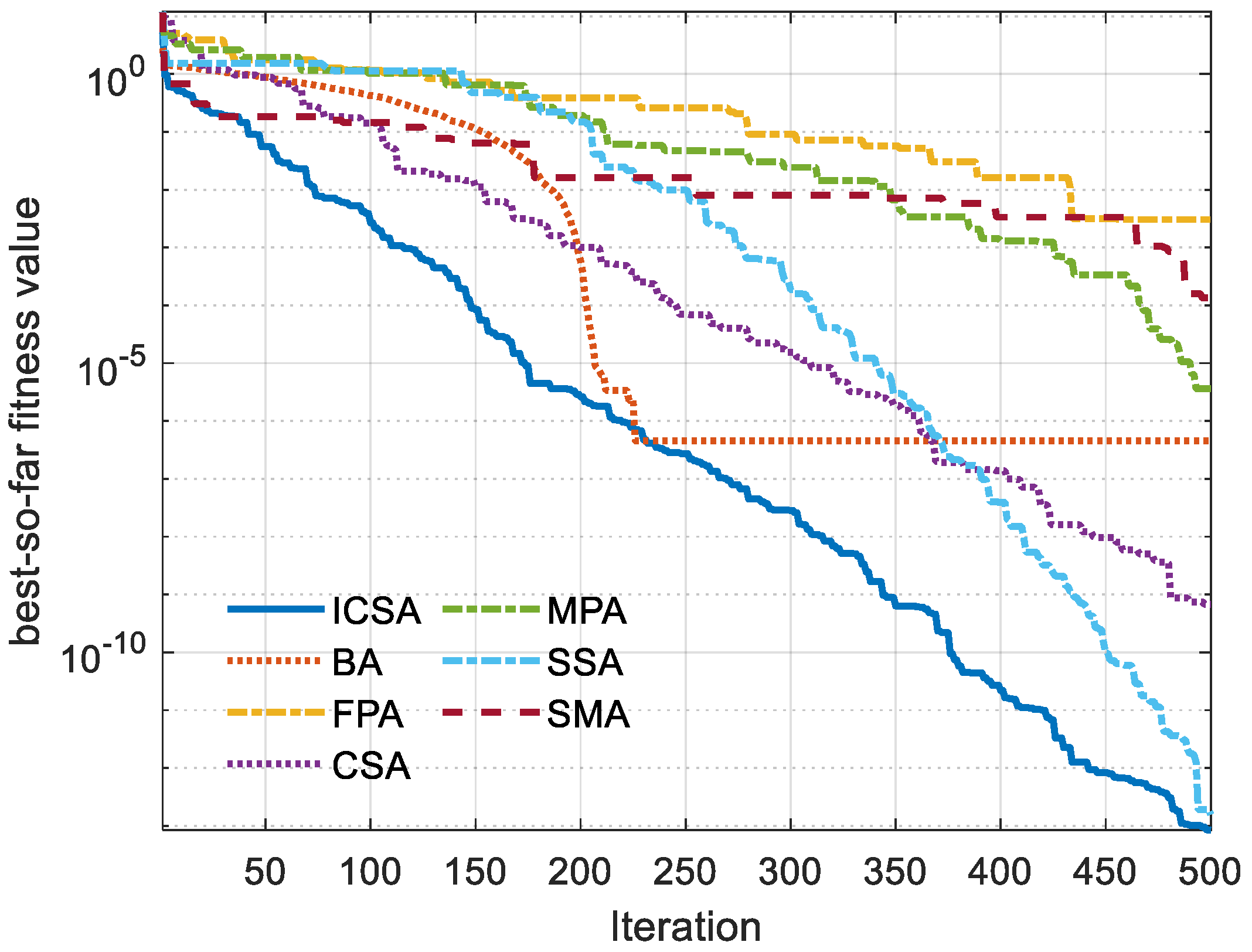

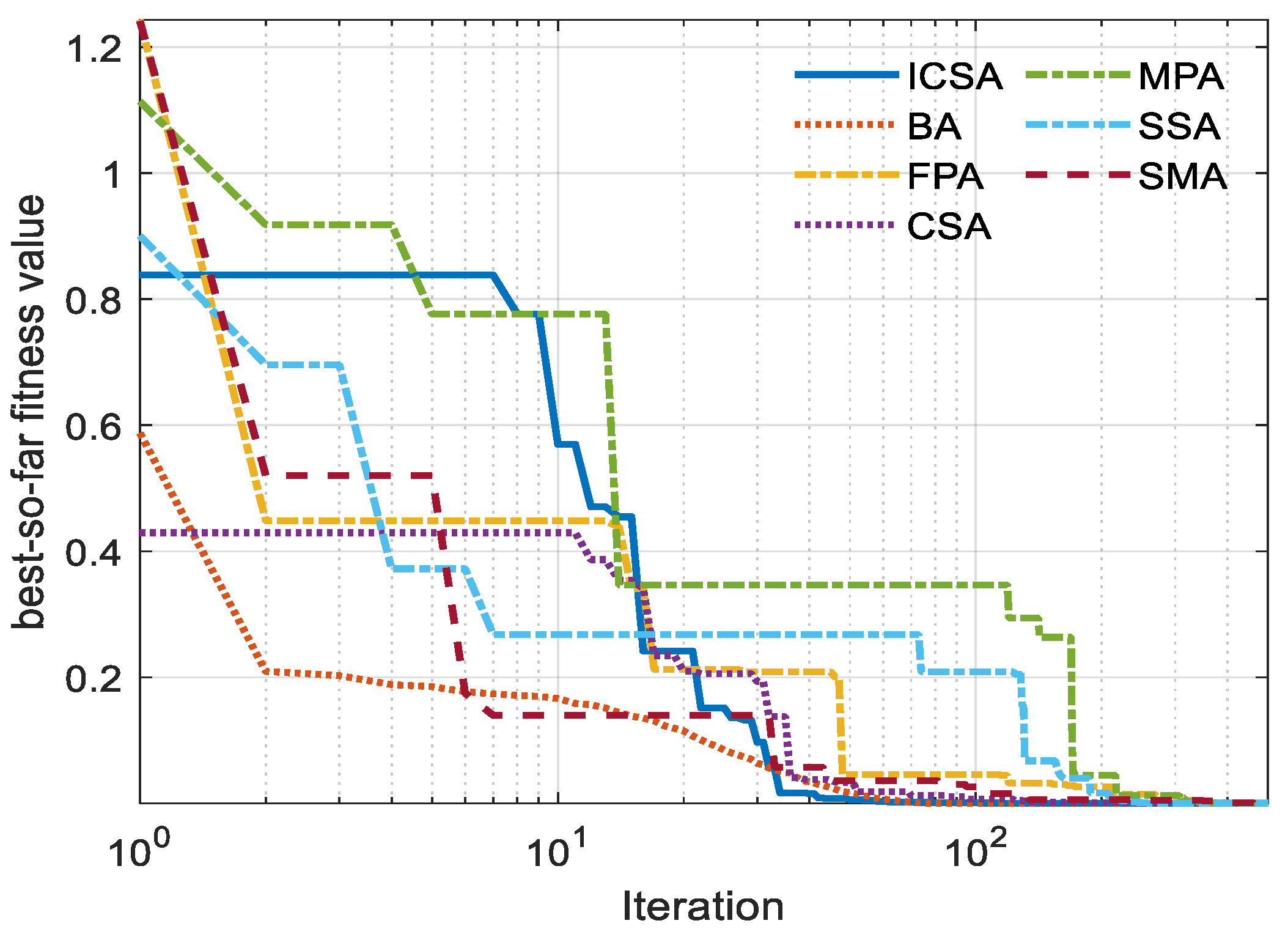

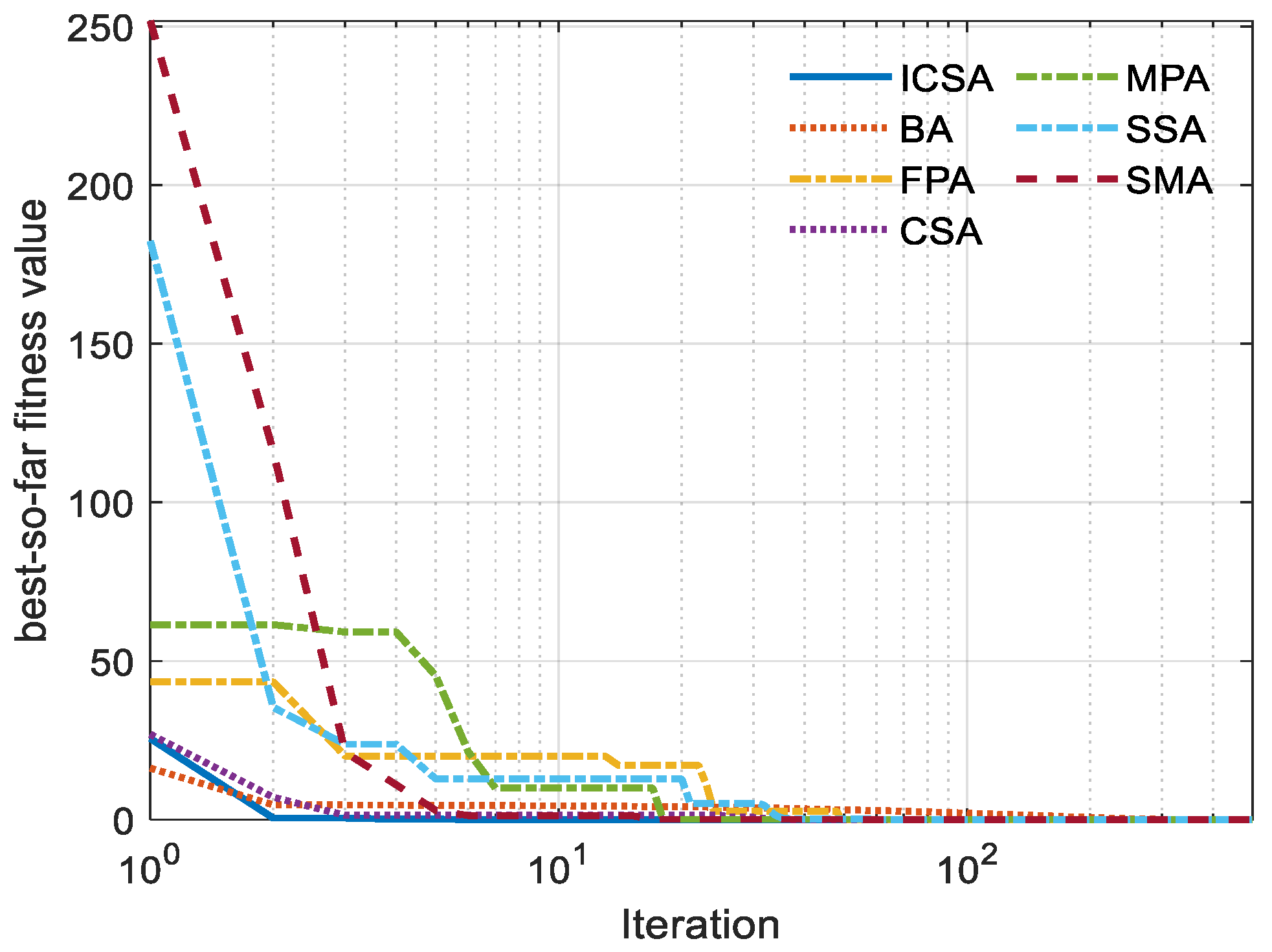

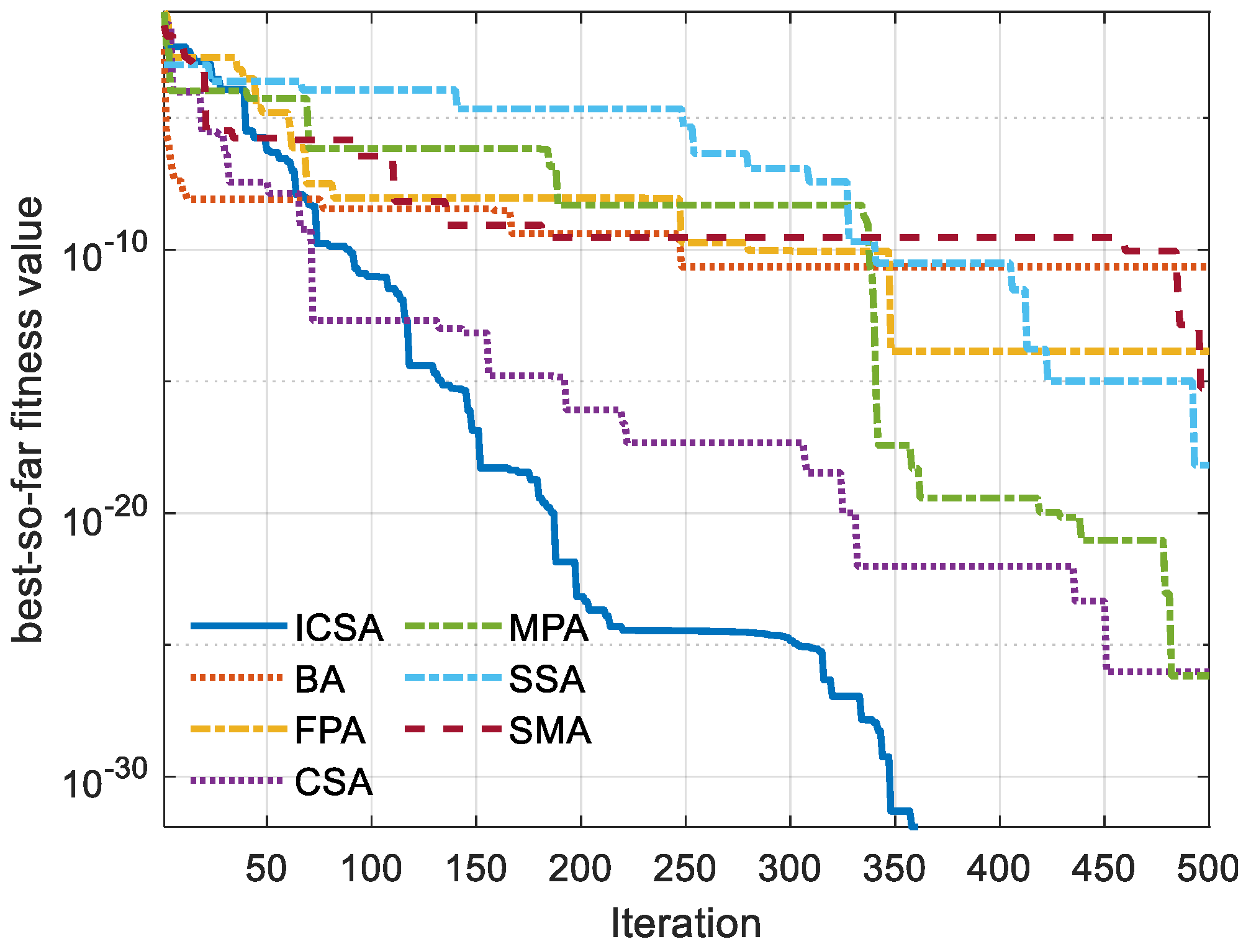

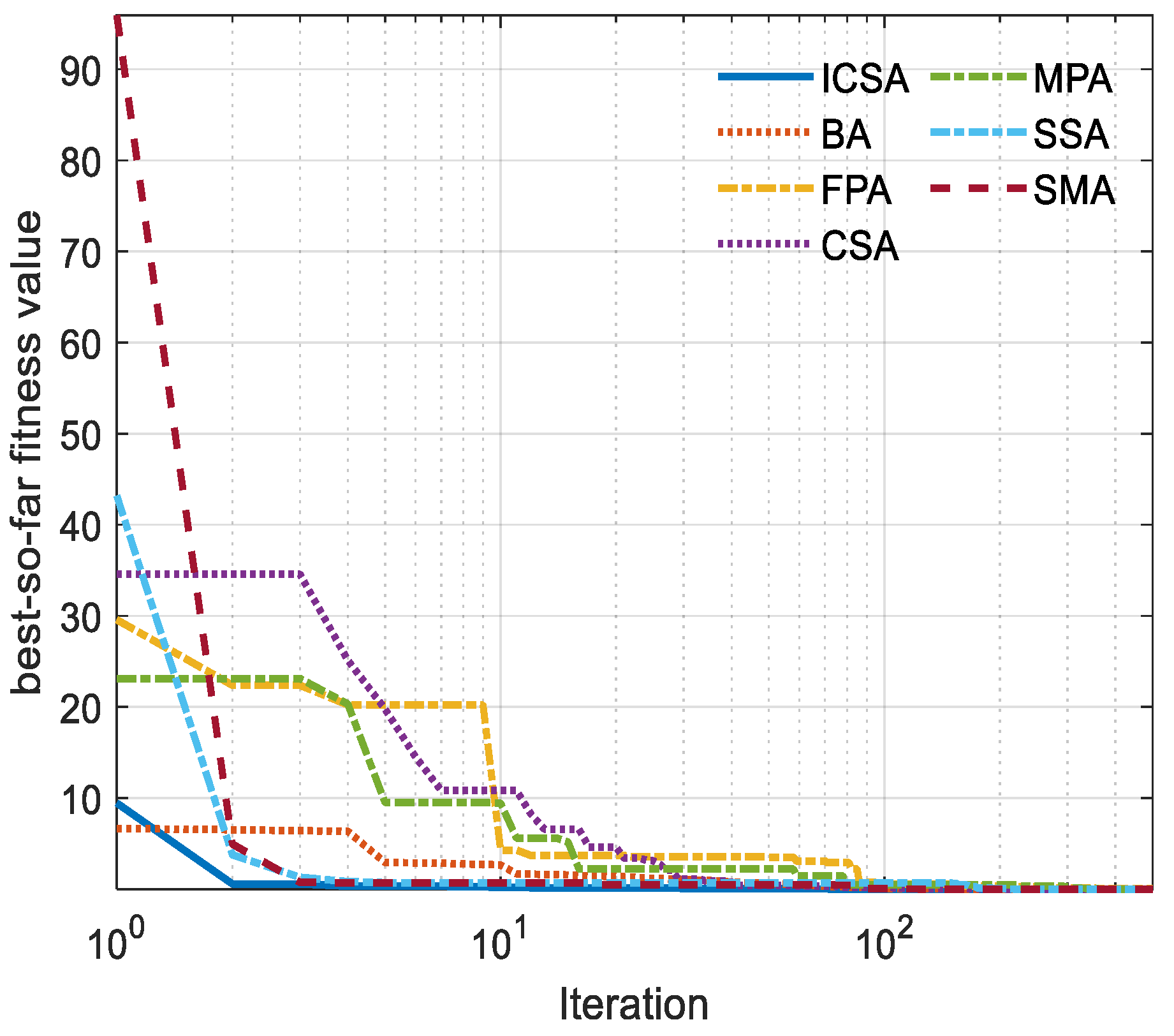

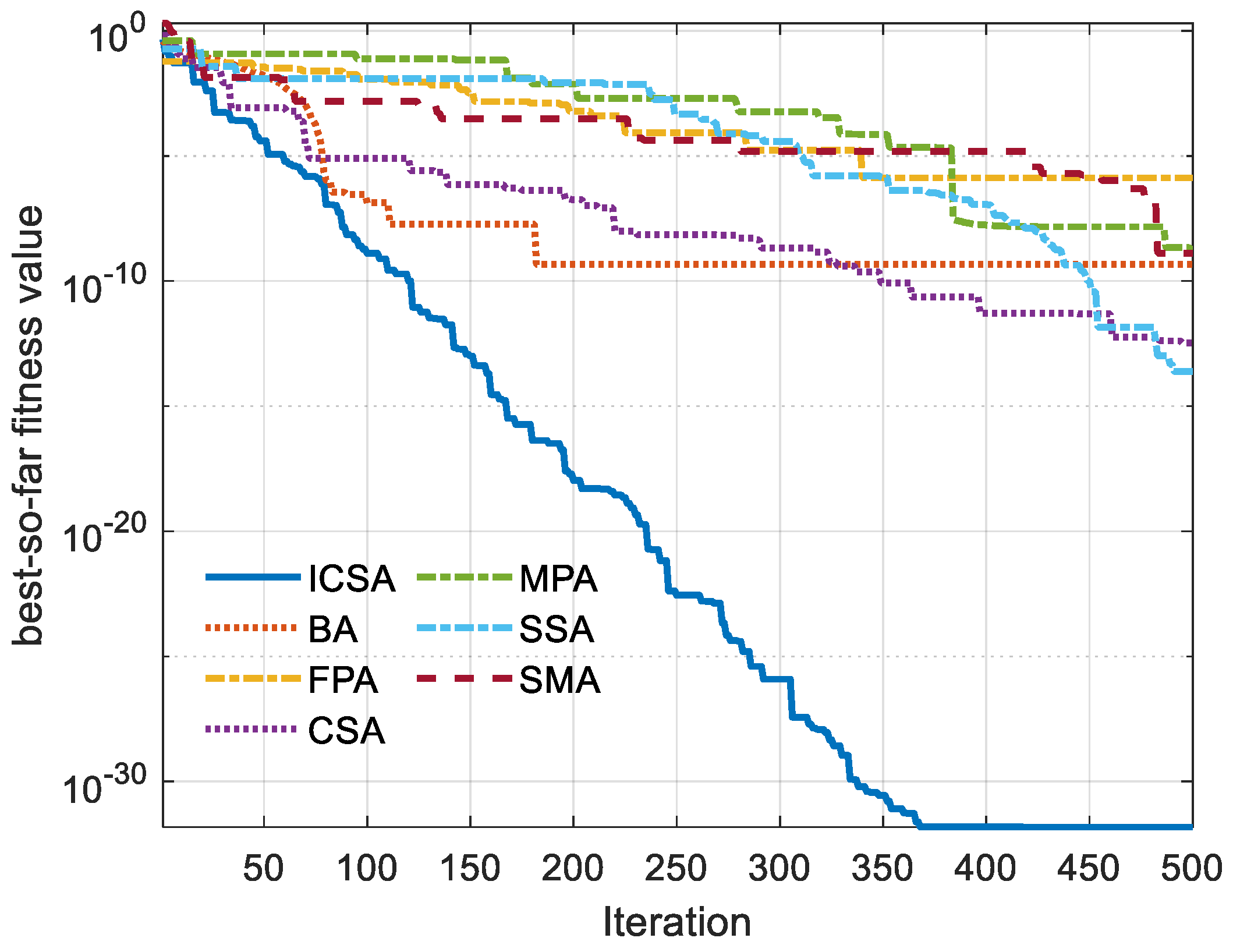

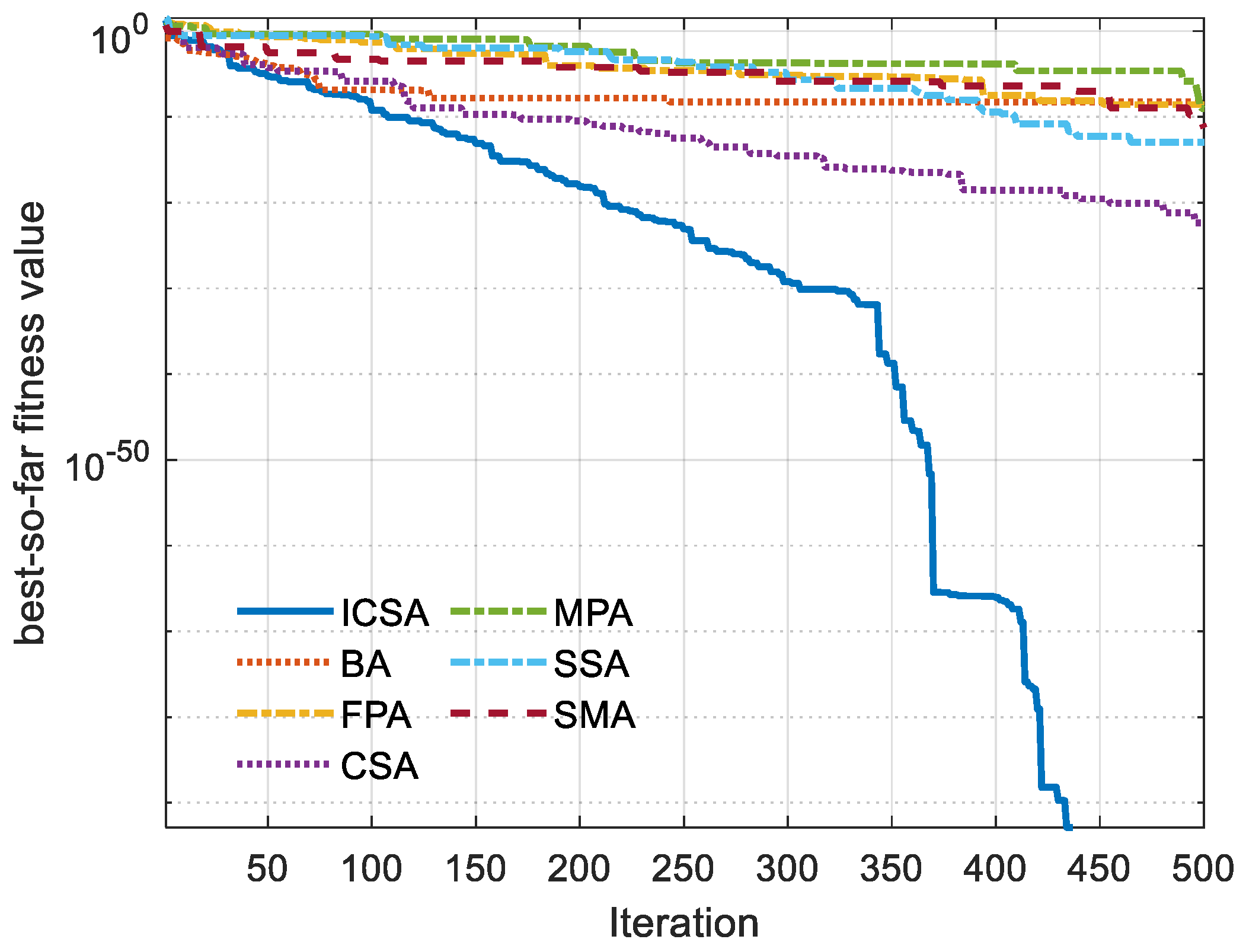

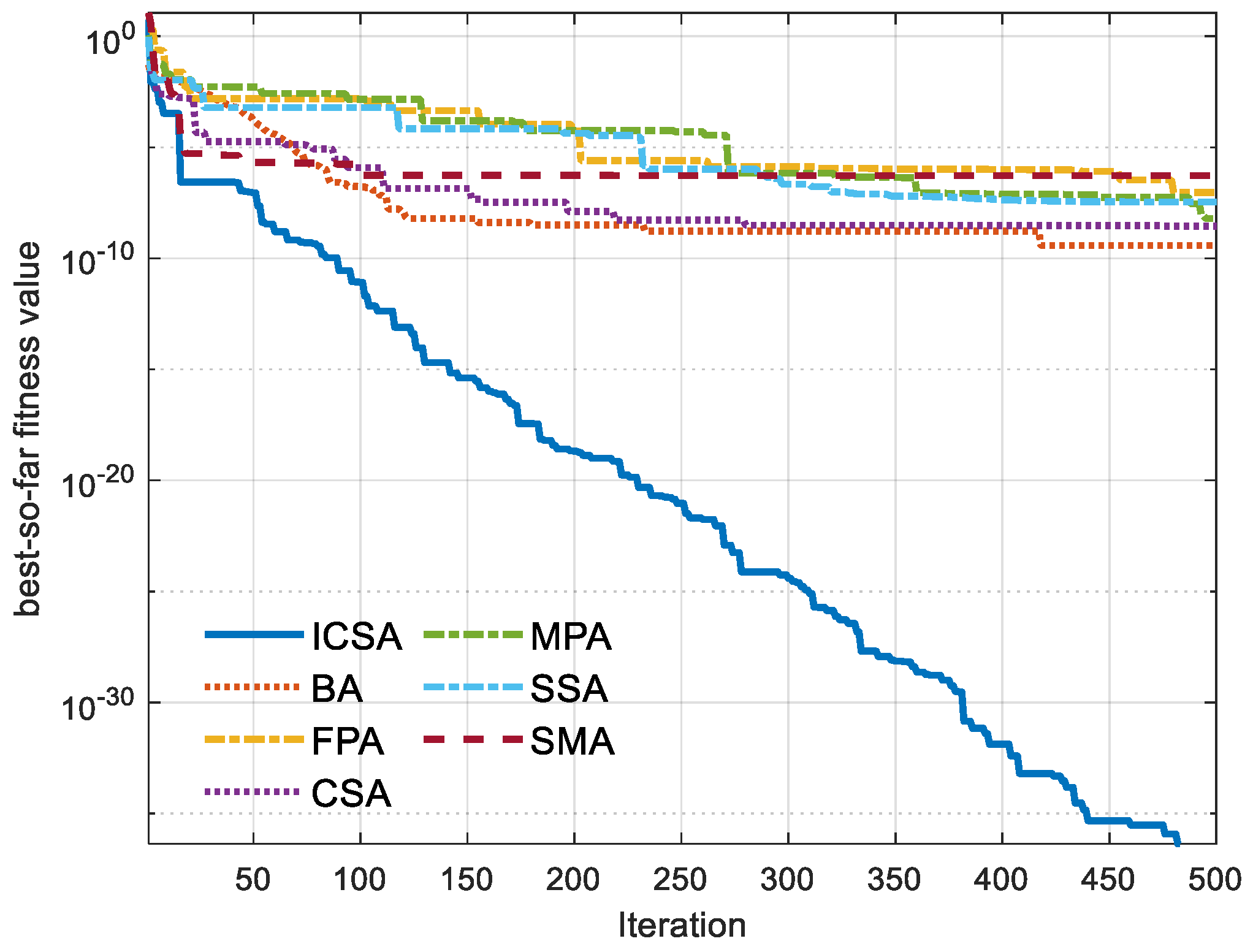

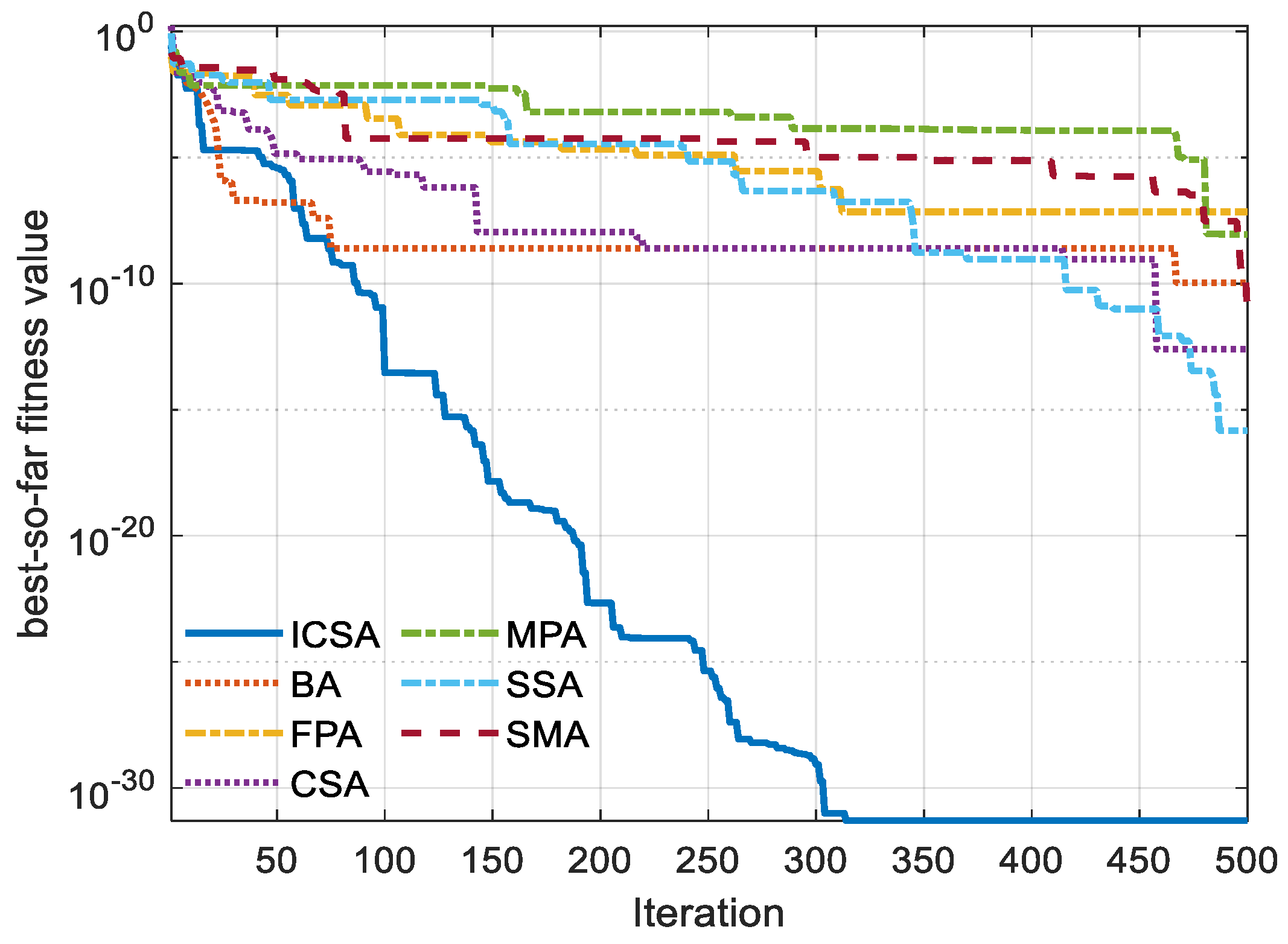

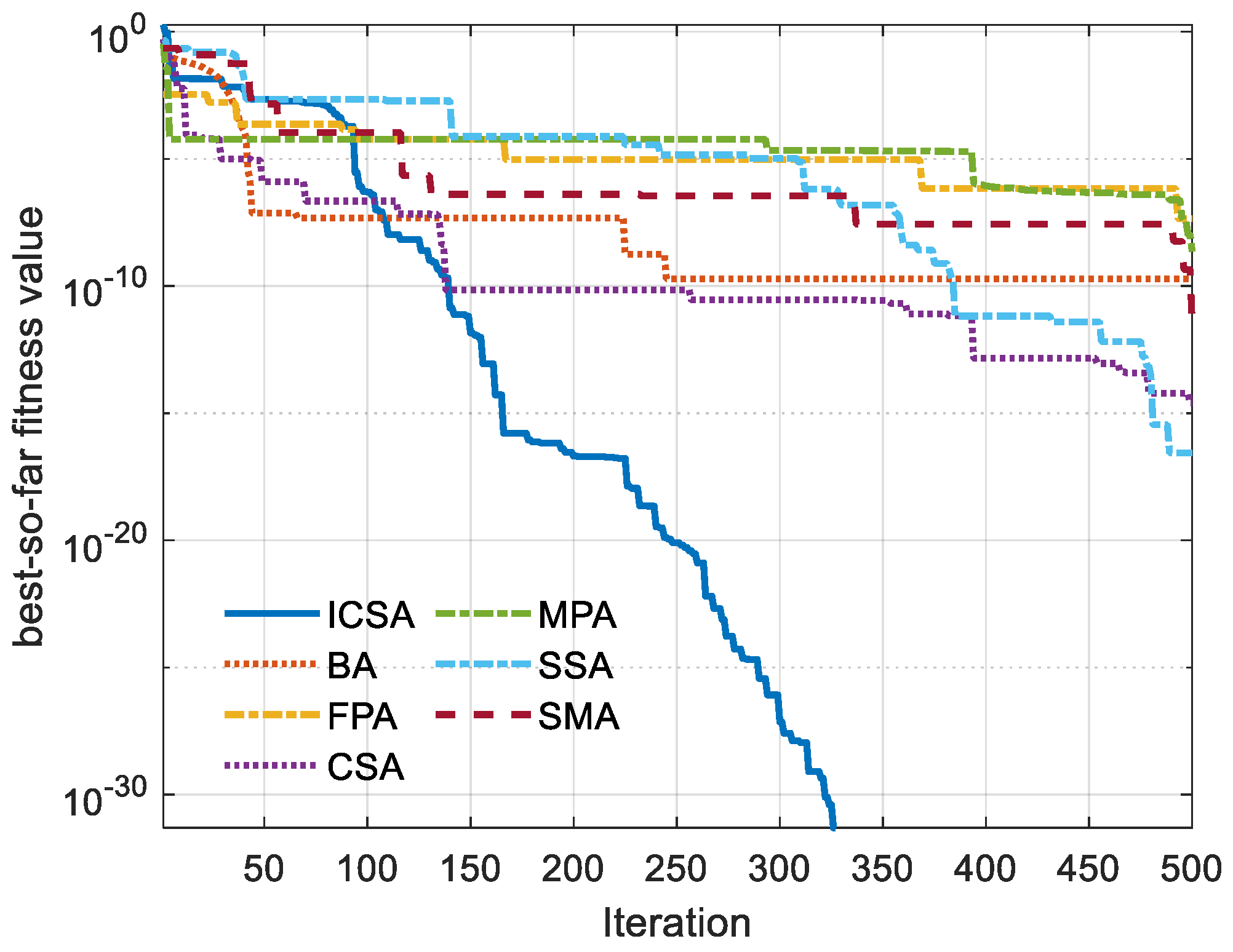

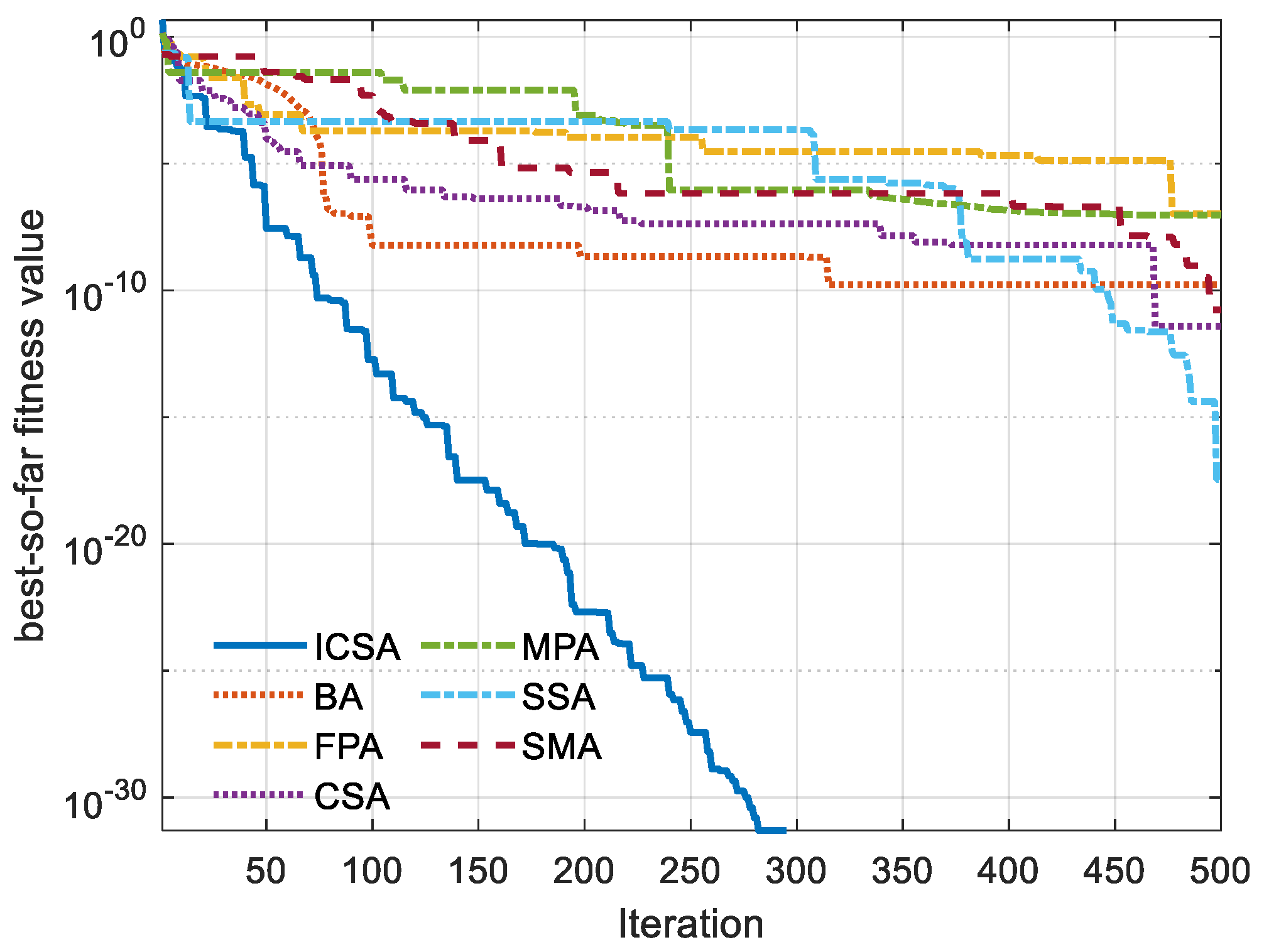

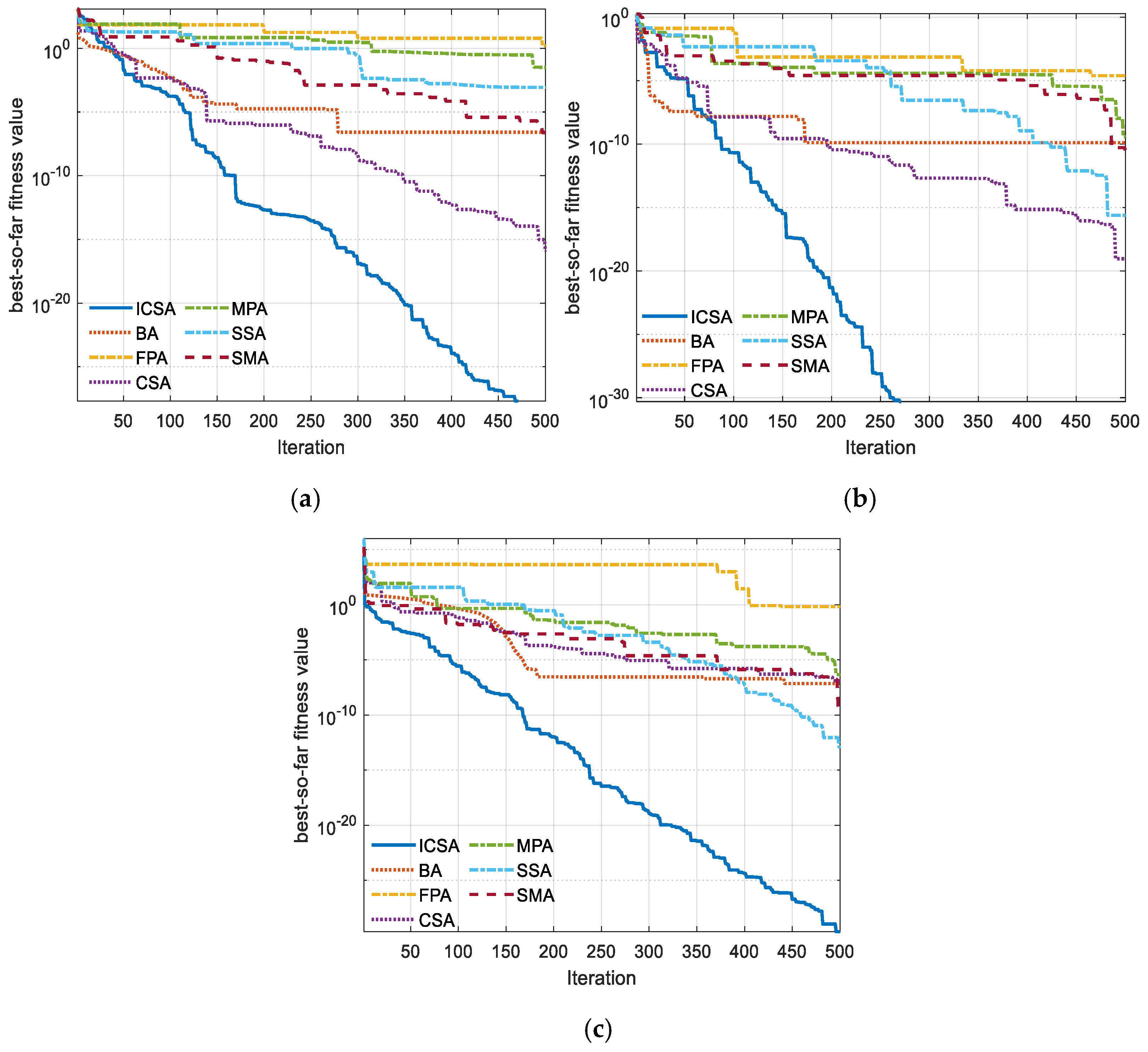

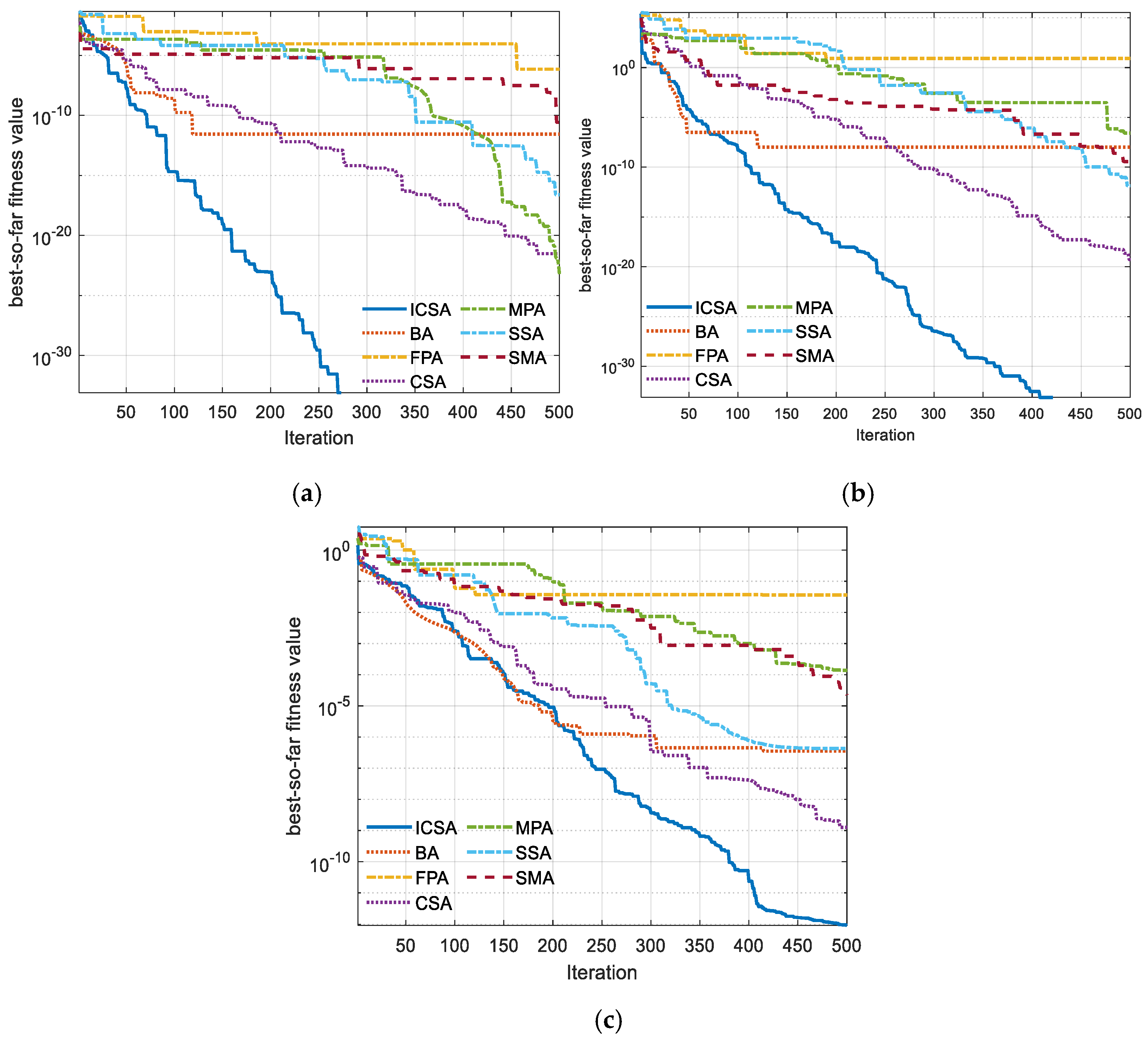

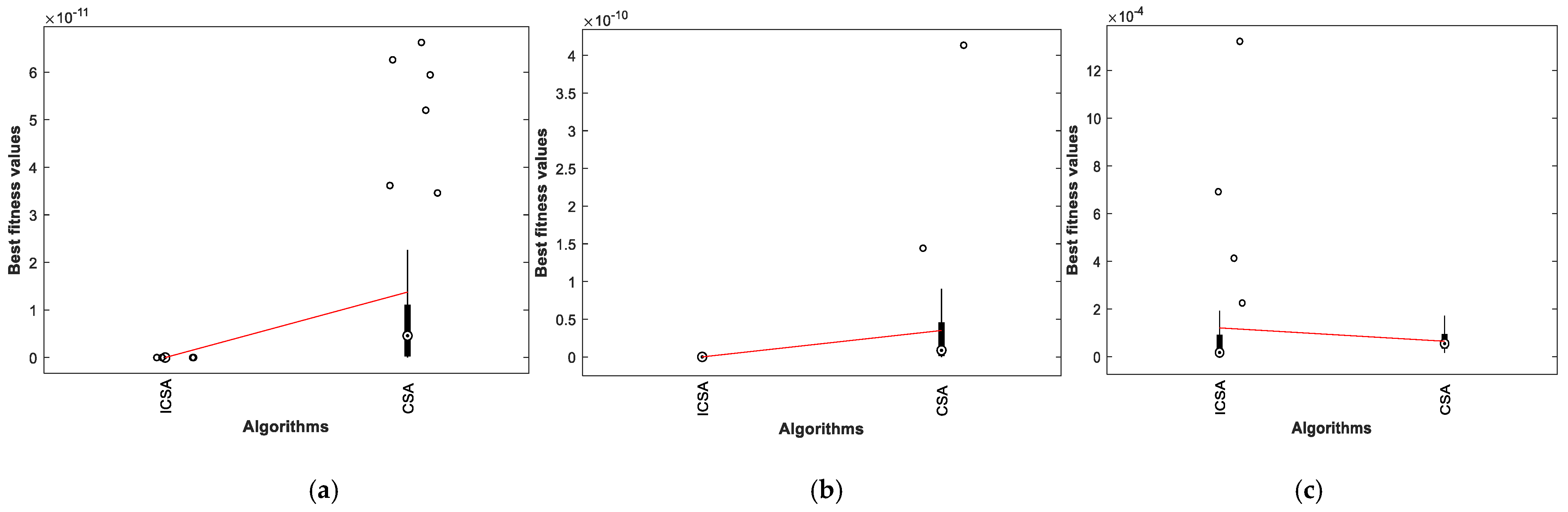

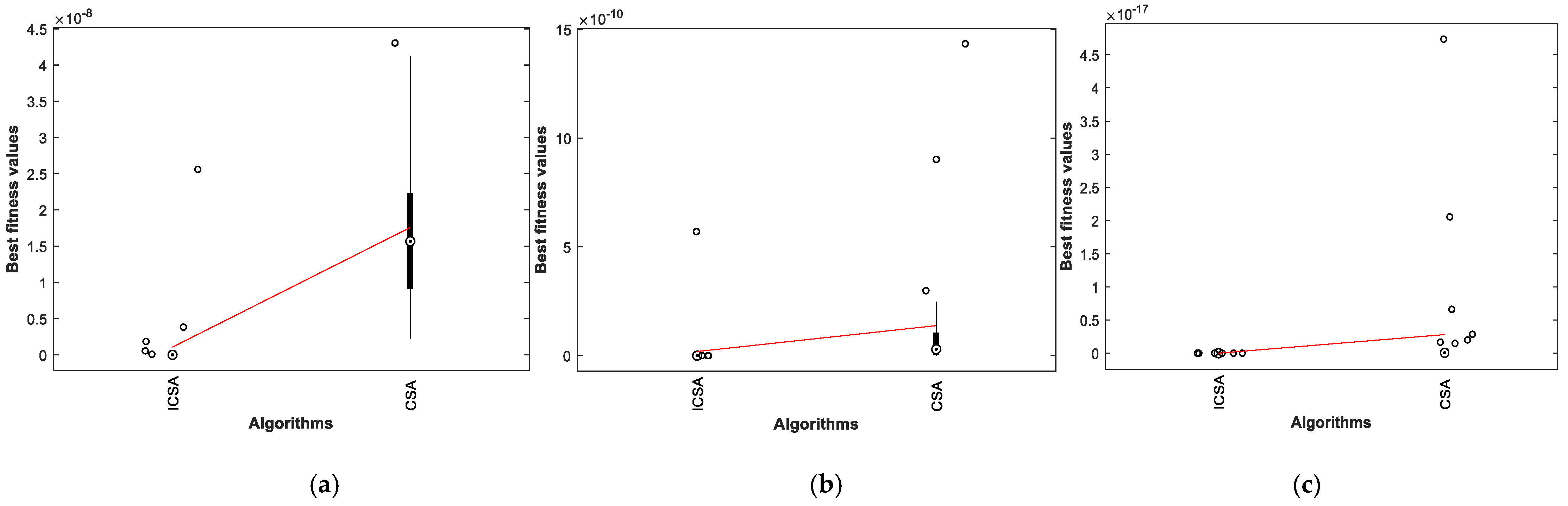

- The experiments conducted on 34 well-known NES cases to assess the performance of this variant, in addition to comparing its performance with 6 well-established optimization algorithms, show the efficacy of this variant in terms of the convergence speed and final accuracy for most test cases.

2. Literature Review

2.1. Problem Description

2.2. Swarm and Evolutionary Algorithms

3. Standard Algorithm: Cuckoo Search Algorithm

- (1) Each cuckoo lays one egg at a time and puts it in a randomly chosen nest;

- (2) The best nests, those holding high-quality eggs, are carried over to the next generation;

- (3) The number of available host nests is fixed, and the host bird can discover a foreign egg with a probability between 0 and 1.
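The three rules above can be sketched in a few lines of Python. This is a minimal illustration of the standard cuckoo search, not a reproduction of the paper's Algorithm 1 or of ICSA. Several pieces are assumptions made for the example: the toy two-equation system in `nes_residual`, the sum-of-squared-residuals fitness, the Mantegna method for drawing Lévy steps, the `0.01` step scale, the greedy acceptance of replacement eggs, and all parameter defaults (`n_nests`, `pa`, `beta`, `iters`).

```python
import math
import random


def nes_residual(x):
    """Fitness of a toy system (hypothetical, not one of F1-F34):
    x0^2 + x1^2 - 1 = 0 and x0 - x1 = 0, scored as the sum of squared residuals."""
    f1 = x[0] ** 2 + x[1] ** 2 - 1.0
    f2 = x[0] - x[1]
    return f1 * f1 + f2 * f2


def levy_step(beta, rng):
    """Draw one heavy-tailed step length with Mantegna's method (assumed here)."""
    num = math.gamma(1 + beta) * math.sin(math.pi * beta / 2)
    den = math.gamma((1 + beta) / 2) * beta * 2 ** ((beta - 1) / 2)
    sigma = (num / den) ** (1 / beta)
    u = rng.gauss(0.0, sigma)
    v = rng.gauss(0.0, 1.0)
    return u / max(abs(v), 1e-300) ** (1 / beta)


def clip(x, lo, hi):
    """Keep every coordinate inside the search bounds."""
    return [min(max(xi, lo), hi) for xi in x]


def cuckoo_search(objective, dim, lo, hi, n_nests=25, pa=0.25,
                  beta=1.5, iters=500, seed=0):
    rng = random.Random(seed)
    nests = [[rng.uniform(lo, hi) for _ in range(dim)] for _ in range(n_nests)]
    fit = [objective(n) for n in nests]
    i_best = min(range(n_nests), key=fit.__getitem__)
    best, best_fit = nests[i_best][:], fit[i_best]

    for _ in range(iters):
        # Rule 1: each cuckoo lays one egg (a Lévy-flight candidate) and
        # places it in a randomly chosen nest, where it stays only if better.
        for i in range(n_nests):
            cand = clip([xi + 0.01 * levy_step(beta, rng) * (xi - bi) * rng.gauss(0.0, 1.0)
                         for xi, bi in zip(nests[i], best)], lo, hi)
            f = objective(cand)
            j = rng.randrange(n_nests)
            if f < fit[j]:
                nests[j], fit[j] = cand, f

        # Rule 3: the host discovers the egg with probability pa; a replacement
        # is built from two random nests and kept only if better (greedy variant).
        for i in range(n_nests):
            if rng.random() < pa:
                a, b = rng.sample(range(n_nests), 2)
                r = rng.random()
                cand = clip([xi + r * (xa - xb)
                             for xi, xa, xb in zip(nests[i], nests[a], nests[b])], lo, hi)
                f = objective(cand)
                if f < fit[i]:
                    nests[i], fit[i] = cand, f

        # Rule 2: the best nest found so far is carried to the next generation.
        i_best = min(range(n_nests), key=fit.__getitem__)
        if fit[i_best] < best_fit:
            best, best_fit = nests[i_best][:], fit[i_best]

    return best, best_fit


if __name__ == "__main__":
    solution, residual = cuckoo_search(nes_residual, dim=2, lo=-2.0, hi=2.0)
    print(solution, residual)
```

Because every replacement is accepted only when it lowers the fitness, the best residual never increases from one generation to the next; a stricter reading of rule (3) would replace a discovered nest unconditionally.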

Algorithm 1. The steps of CSA

4. Proposed Algorithm

4.1. Initialization

4.2. Convergence Improvement Strategy (CIS)

4.3. Improved Cuckoo Search Algorithm (ICSA)

Algorithm 2. The steps of ICSA

5. Outcomes and Discussion

6. Conclusions and Future Work

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- Moustafa, N.; Slay, J. The evaluation of Network Anomaly Detection Systems: Statistical analysis of the UNSW-NB15 data set and the comparison with the KDD99 data set. Inf. Secur. J. A Glob. Perspect. 2016, 25, 18–31.

- Moustafa, N.; Slay, J.; Creech, G. Novel Geometric Area Analysis Technique for Anomaly Detection Using Trapezoidal Area Estimation on Large-Scale Networks. IEEE Trans. Big Data 2019, 5, 481–494.

- Facchinei, F.; Kanzow, C. Generalized Nash Equilibrium Problems. Ann. Oper. Res. 2009, 175, 177–211.

- Liao, Z.; Gong, W.; Wang, L. Memetic niching-based evolutionary algorithms for solving nonlinear equation system. Expert Syst. Appl. 2020, 149, 113261.

- Darvishi, M.; Barati, A. A third-order Newton-type method to solve systems of nonlinear equations. Appl. Math. Comput. 2007, 187, 630–635.

- Knoll, D.; Keyes, D. Jacobian-free Newton–Krylov methods: A survey of approaches and applications. J. Comput. Phys. 2004, 193, 357–397.

- Abdel-Basset, M.; Chang, V.; Mohamed, R. A novel equilibrium optimization algorithm for multi-thresholding image segmentation problems. Neural Comput. Appl. 2020, 1–34.

- Abdel-Basset, M.; Chang, V.; Mohamed, R. HSMA_WOA: A hybrid novel Slime mould algorithm with whale optimization algorithm for tackling the image segmentation problem of chest X-ray images. Appl. Soft Comput. 2020, 95, 106642.

- Abdel-Basset, M.; El-Shahat, D.; Chakrabortty, R.K.; Ryan, M. Parameter estimation of photovoltaic models using an improved marine predators algorithm. Energy Convers. Manag. 2021, 227, 113491.

- Abdel-Basset, M.; Mohamed, R.; Abouhawwash, M. Balanced multi-objective optimization algorithm using improvement based reference points approach. Swarm Evol. Comput. 2021, 60, 100791.

- Allaoui, M.; Ahiod, B.; El Yafrani, M. A hybrid crow search algorithm for solving the DNA fragment assembly problem. Expert Syst. Appl. 2018, 102, 44–56.

- Abdel-Basset, M.; Mohamed, R.; Abouhawwash, M.; Chakrabortty, R.; Ryan, M. A Simple and Effective Approach for Tackling the Permutation Flow Shop Scheduling Problem. Mathematics 2021, 9, 270.

- Abdel-Basset, M.; Mohamed, R.; Chakrabortty, R.K.; Sallam, K.; Ryan, M.J. An efficient teaching-learning-based optimization algorithm for parameters identification of photovoltaic models: Analysis and validations. Energy Convers. Manag. 2021, 227, 113614.

- Abdel-Basset, M.; Mohamed, R.; Elhoseny, M.; Bashir, A.K.; Jolfaei, A.; Kumar, N. Energy-aware marine predators algorithm for task scheduling in IoT-based fog computing applications. IEEE Trans. Ind. Inform. 2020, 17, 5068–5076.

- Abdel-Basset, M.; Mohamed, R.; Elhoseny, M.; Chakrabortty, R.K.; Ryan, M. A Hybrid COVID-19 Detection Model Using an Improved Marine Predators Algorithm and a Ranking-Based Diversity Reduction Strategy. IEEE Access 2020, 8, 79521–79540.

- Abdel-Basset, M.; Mohamed, R.; Elhoseny, M.; Chakrabortty, R.K.; Ryan, M.J. An efficient heap-based optimization algorithm for parameters identification of proton exchange membrane fuel cells model: Analysis and case studies. Int. J. Hydrogen Energy 2021, 46, 11908–11925.

- Abdel-Basset, M.; Mohamed, R.; Chakrabortty, R.K.; Ryan, M.J.; Mirjalili, S. An efficient binary slime mould algorithm integrated with a novel attacking-feeding strategy for feature selection. Comput. Ind. Eng. 2021, 153, 107078.

- Abdel-Basset, M.; Mohamed, R.; Abouhawwash, M.; Chakrabortty, R.K.; Ryan, M.J. EA-MSCA: An effective energy-aware multi-objective modified sine-cosine algorithm for real-time task scheduling in multiprocessor systems: Methods and analysis. Expert Syst. Appl. 2021, 173, 114699.

- Abdel-Basset, M.; Mohamed, R.; Mirjalili, S.; Chakrabortty, R.K.; Ryan, M.J. Solar photovoltaic parameter estimation using an improved equilibrium optimizer. Sol. Energy 2020, 209, 694–708.

- Civicioglu, P.; Besdok, E. Bernstain-search differential evolution algorithm for numerical function optimization. Expert Syst. Appl. 2019, 138, 112831.

- Keshk, M.; Sitnikova, E.; Moustafa, N.; Hu, J.; Khalil, I. An Integrated Framework for Privacy-Preserving Based Anomaly Detection for Cyber-Physical Systems. IEEE Trans. Sustain. Comput. 2019, 6, 66–79.

- Li, S.; Chen, H.; Wang, M.; Heidari, A.A.; Mirjalili, S. Slime mould algorithm: A new method for stochastic optimization. Future Gener. Comput. Syst. 2020, 111, 300–323.

- Faramarzi, A.; Heidarinejad, M.; Mirjalili, S.; Gandomi, A.H. Marine Predators Algorithm: A nature-inspired metaheuristic. Expert Syst. Appl. 2020, 152, 113377.

- Yang, X.-S.; He, X. Bat algorithm: Literature review and applications. Int. J. Bio Inspired Comput. 2013, 5, 141–149.

- Mirjalili, S.; Gandomi, A.H.; Mirjalili, S.Z.; Saremi, S.; Faris, H.; Mirjalili, S.M. Salp Swarm Algorithm: A bio-inspired optimizer for engineering design problems. Adv. Eng. Softw. 2017, 114, 163–191.

- Yang, X.-S.; Deb, S. Cuckoo search via Lévy flights. In Proceedings of the 2009 World Congress on Nature & Biologically Inspired Computing (NaBIC), Coimbatore, India, 9–11 December 2009.

- Yang, X.-S. Flower pollination algorithm for global optimization. In Proceedings of the International Conference on Unconventional Computing and Natural Computation, Orléans, France, 3–7 September 2012.

- Wu, J.; Cui, Z.; Liu, J. Using hybrid social emotional optimization algorithm with metropolis rule to solve nonlinear equations. In Proceedings of the IEEE 10th International Conference on Cognitive Informatics and Cognitive Computing (ICCI-CC’11), Banff, AB, Canada, 18–20 August 2011.

- Wu, Z.; Kang, L. A fast and elitist parallel evolutionary algorithm for solving systems of non-linear equations. In Proceedings of the 2003 Congress on Evolutionary Computation, 2003. CEC’03, Canberra, ACT, Australia, 8–12 December 2003.

- Luo, Y.-Z.; Tang, G.-J.; Zhou, L.-N. Hybrid approach for solving systems of nonlinear equations using chaos optimization and quasi-Newton method. Appl. Soft Comput. 2008, 8, 1068–1073.

- Wu, J.; Gong, W.; Wang, L. A clustering-based differential evolution with different crowding factors for nonlinear equations system. Appl. Soft Comput. 2021, 98, 106733.

- Rizk-Allah, R.M. A quantum-based sine cosine algorithm for solving general systems of nonlinear equations. Artif. Intell. Rev. 2021, 1–52.

- Mangla, C.; Ahmad, M.; Uddin, M. Optimization of complex nonlinear systems using genetic algorithm. Int. J. Inf. Technol. 2020, 1–13.

- Hassan, O.F.; Jamal, A.; Abdel-Khalek, S. Genetic algorithm and numerical methods for solving linear and nonlinear system of equations: A comparative study. J. Intell. Fuzzy Syst. 2020, 38, 2867–2872.

- Jaiswal, S.; Kumar, C.S.; Seepana, M.M.; Babu, G.U.B. Design of Fractional Order PID Controller Using Genetic Algorithm Optimization Technique for Nonlinear System. Chem. Prod. Process. Model. 2020, 15.

- El-Shorbagy, M.A.; El-Refaey, A.M. Hybridization of Grasshopper Optimization Algorithm with Genetic Algorithm for Solving System of Non-Linear Equations. IEEE Access 2020, 8, 220944–220961.

- Wetweerapong, J.; Puphasuk, P. An improved differential evolution algorithm with a restart technique to solve systems of nonlinear equations. Int. J. Optim. Control. Theor. Appl. (IJOCTA) 2020, 10, 118–136.

- Wierstra, D.; Schaul, T.; Peters, J.; Schmidhuber, J. Natural evolution strategies. In Proceedings of the 2008 IEEE Congress on Evolutionary Computation (IEEE World Congress on Computational Intelligence), Hong Kong, China, 1–6 June 2008.

- Santucci, V.; Milani, A. Particle swarm optimization in the EDAs framework. In Soft Computing in Industrial Applications; Springer: Berlin/Heidelberg, Germany, 2011; pp. 87–96.

- Larrañaga, P.; Lozano, J.A. Estimation of Distribution Algorithms: A New Tool for Evolutionary Computation; Springer Science & Business Media: Berlin/Heidelberg, Germany, 2001; Volume 2.

- Hansen, N. The CMA evolution strategy: A comparing review. Towards New Evol. Comput. 2006, 192, 75–102.

- Sarvari, S.; Sani, N.F.M.; Hanapi, Z.M.; Abdullah, M.T. An efficient anomaly intrusion detection method with feature selection and evolutionary neural network. IEEE Access 2020, 8, 70651–70663.

- Song, W.; Wang, Y.; Li, H.-X.; Cai, Z. Locating multiple optimal solutions of nonlinear equation systems based on multiobjective optimization. IEEE Trans. Evol. Comput. 2015, 19, 414–431.

- Grosan, C.; Abraham, A. A New Approach for Solving Nonlinear Equations Systems. IEEE Trans. Syst. Man Cybern. Part A Syst. Hum. 2008, 38, 698–714.

- Pourjafari, E.; Mojallali, H. Solving nonlinear equations systems with a new approach based on invasive weed optimization algorithm and clustering. Swarm Evol. Comput. 2012, 4, 33–43.

- Sacco, W.; Henderson, N. Finding all solutions of nonlinear systems using a hybrid metaheuristic with Fuzzy Clustering Means. Appl. Soft Comput. 2011, 11, 5424–5432.

- Hirsch, M.J.; Pardalos, P.M.; Resende, M.G. Solving systems of nonlinear equations with continuous GRASP. Nonlinear Anal. Real World Appl. 2009, 10, 2000–2006.

- Sharma, J.R.; Arora, H. On efficient weighted-Newton methods for solving systems of nonlinear equations. Appl. Math. Comput. 2013, 222, 497–506.

- Ingber, L.; Petraglia, A.; Petraglia, M.R. Adaptive simulated annealing. In Stochastic Global Optimization and Its Applications with Fuzzy Adaptive Simulated Annealing; Springer: Berlin/Heidelberg, Germany, 2012; pp. 33–62.

- Morgan, A.; Shapiro, V. Box-bisection for solving second-degree systems and the problem of clustering. ACM Trans. Math. Softw. 1987, 13, 152–167.

- Grau-Sánchez, M.; Grau, À.; Noguera, M. Frozen divided difference scheme for solving systems of nonlinear equations. J. Comput. Appl. Math. 2011, 235, 1739–1743.

- Hueso, J.L.; Martínez, E.; Torregrosa, J.R. Modified Newton’s method for systems of nonlinear equations with singular Jacobian. J. Comput. Appl. Math. 2009, 224, 77–83.

- Waziri, M.; Leong, W.J.; Hassan, M.A.; Monsi, M. An efficient solver for systems of nonlinear equations with singular Jacobian via diagonal updating. Appl. Math. Sci. 2010, 4, 3403–3412.

- Turgut, O.E.; Turgut, M.S.; Coban, M.T. Chaotic quantum behaved particle swarm optimization algorithm for solving nonlinear system of equations. Comput. Math. Appl. 2014, 68, 508–530.

- Kasuya, E. Mann-Whitney U-test when variances are unequal. Anim. Behav. 2001, 61, 1247–1249.

| Function | D | Reference |
|---|---|---|
| F1 | 2 | [43] |
| F2 | 2 | [43] |
| F3 | 10 | [44] |
| F4 | 4 | [45] |
| F5 | 2 | [46] |
| F6 | 2 | [47] |
| F7 | 8 | [4] |
| F8 | 3 | [48] |
| F9 | 2 | [45] |
| F10 | 2 | [49] |
| F11 | 2 | [49] |
| F12 | 20 | [43] |
| F13 | 5 | [50] |
| F14 | 5 | [51] |
| F15 | 3 | [4] |
| F16 | 3 | [52] |
| F17 | 3 | [52] |
| F18 | 3 | [53] |
| F19 | 2 | [4] |
| F20 | 2 | [4] |
| F21 | 2 | [4] |
| F22 | 3 | [4] |
| F23 | 2 | [4] |
| F24 | 2 | [4] |
| F25 | 2 | [4] |
| F26 | 2 | [4] |
| F27 | 2 | [4] |
| F28 | 2 | [4] |
| F29 | 3 | [54] |
| F30 | 2 | [54] |
| F31 | 6 | [54] |
| F32 | 2 | [54] |
| F33 | 2 | [52] |
| F34 | 5 | [54] |

| F | Metric | ICSA | BA | FPA | CSA | MPA | SSA | SMA | F | ICSA | BA | FPA | CSA | MPA | SSA | SMA |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| F1 | Best | 0 | 3 × 10^−10 | 2 × 10^−9 | 7 × 10^−14 | 2 × 10^−22 | 1 × 10^−17 | 0 | F15 | 1 × 10^−32 | 5 × 10^−8 | 5 × 10^−5 | 2 × 10^−6 | 7 × 10^−19 | 1 × 10^−14 | 3 × 10^−6 |
| - | Avg | 0 | 3 × 10^−9 | 7 × 10^−8 | 3 × 10^−11 | 6 × 10^−7 | 2 × 10^−15 | 2 × 10^−305 | - | 1 × 10^−2 | 9 × 10^2 | 7 × 10^−3 | 4 × 10^−5 | 1 × 10^−4 | 2 × 10^−12 | 3 × 10^−4 |
| - | Worst | 0 | 1 × 10^−8 | 3 × 10^−7 | 2 × 10^−10 | 6 × 10^−6 | 1 × 10^−14 | 5 × 10^−304 | - | 6 × 10^−2 | 2 × 10^4 | 7 × 10^−2 | 3 × 10^−4 | 3 × 10^−3 | 2 × 10^−11 | 7 × 10^−3 |
| - | SD | 0 | 3 × 10^−9 | 9 × 10^−8 | 5 × 10^−11 | 1 × 10^−6 | 3 × 10^−15 | 0 | - | 2 × 10^−2 | 4 × 10^3 | 1 × 10^−2 | 6 × 10^−5 | 5 × 10^−4 | 4 × 10^−12 | 1 × 10^−3 |
| F2 | Best | 0 | 1 × 10^−10 | 5 × 10^−9 | 6 × 10^−14 | 1 × 10^−8 | 1 × 10^−19 | 2 × 10^−18 | F16 | 0 | 1 × 10^−43 | 6 × 10^−44 | 1 × 10^−93 | 3 × 10^−40 | 7 × 10^−57 | 2 × 10^−41 |
| - | Avg | 5 × 10^−32 | 6 × 10^−2 | 5 × 10^−7 | 3 × 10^−11 | 8 × 10^−6 | 8 × 10^−14 | 2 × 10^−9 | - | 9 × 10^−14 | 2 × 10^−3 | 2 × 10^−32 | 5 × 10^−69 | 7 × 10^−22 | 9 × 10^−49 | 3 × 10^−32 |
| - | Worst | 3 × 10^−31 | 7 × 10^−1 | 2 × 10^−6 | 2 × 10^−10 | 8 × 10^−5 | 5 × 10^−13 | 3 × 10^−8 | - | 2 × 10^−12 | 5 × 10^−2 | 6 × 10^−31 | 2 × 10^−67 | 1 × 10^−20 | 7 × 10^−48 | 6 × 10^−31 |
| - | SD | 1 × 10^−31 | 2 × 10^−1 | 5 × 10^−7 | 4 × 10^−11 | 2 × 10^−5 | 1 × 10^−13 | 7 × 10^−9 | - | 4 × 10^−13 | 9 × 10^−3 | 1 × 10^−31 | 3 × 10^−68 | 3 × 10^−21 | 2 × 10^−48 | 1 × 10^−31 |
| F3 | Best | 6 × 10^−11 | 8 × 10^−7 | 4 × 10^−3 | 9 × 10^−10 | 4 × 10^−6 | 1 × 10^−13 | 2 × 10^−4 | F17 | 0 | 9 × 10^−9 | 2 × 10^−11 | 6 × 10^−24 | 5 × 10^−9 | 1 × 10^−13 | 5 × 10^−12 |
| - | Avg | 5 × 10^−8 | 2 × 10^−6 | 1 × 10^−2 | 5 × 10^−9 | 6 × 10^−5 | 4 × 10^−13 | 2 × 10^−3 | - | 2 × 10^−31 | 2 × 10^−4 | 9 × 10^−7 | 7 × 10^−18 | 5 × 10^−6 | 3 × 10^−5 | 6 × 10^−9 |
| - | Worst | 6 × 10^−7 | 2 × 10^−5 | 3 × 10^−2 | 1 × 10^−8 | 2 × 10^−4 | 7 × 10^−13 | 7 × 10^−3 | - | 3 × 10^−30 | 3 × 10^−3 | 8 × 10^−6 | 2 × 10^−16 | 4 × 10^−5 | 4 × 10^−4 | 1 × 10^−7 |
| - | SD | 1 × 10^−7 | 3 × 10^−6 | 6 × 10^−3 | 3 × 10^−9 | 6 × 10^−5 | 1 × 10^−13 | 2 × 10^−3 | - | 8 × 10^−31 | 5 × 10^−4 | 2 × 10^−6 | 3 × 10^−17 | 1 × 10^−5 | 7 × 10^−5 | 3 × 10^−8 |
| F4 | Best | 3 × 10^−21 | 9 × 10^−4 | 2 × 10^−4 | 6 × 10^−9 | 1 × 10^−3 | 3 × 10^−6 | 4.00 | F18 | 0 | 5 × 10^−10 | 6 × 10^−8 | 9 × 10^−12 | 7 × 10^−8 | 4 × 10^−12 | 1 × 10^−6 |
| - | Avg | 6 × 10^−2 | 4.00 | 2 × 10^−2 | 5 × 10^−4 | 3 × 10^−2 | 6 × 10^−2 | 4.00 | - | 2 × 10^−7 | 2 × 10^−4 | 9 × 10^−7 | 8 × 10^−8 | 4 × 10^−5 | 3 × 10^−5 | 4 × 10^−6 |
| - | Worst | 4 × 10^−1 | 1 × 10 | 9 × 10^−2 | 6 × 10^−3 | 3 × 10^−1 | 2 × 10^−1 | 4.00 | - | 1 × 10^−6 | 4 × 10^−3 | 3 × 10^−6 | 4 × 10^−7 | 2 × 10^−4 | 2 × 10^−4 | 9 × 10^−5 |
| - | SD | 1 × 10^−1 | 5.00 | 2 × 10^−2 | 1 × 10^−3 | 5 × 10^−2 | 8 × 10^−2 | 7 × 10^−2 | - | 4 × 10^−7 | 8 × 10^−4 | 7 × 10^−7 | 1 × 10^−7 | 6 × 10^−5 | 5 × 10^−5 | 2 × 10^−5 |
| F5 | Best | 0 | 2 × 10^−8 | 4 × 10^−6 | 1 × 10^−11 | 2 × 10^−6 | 2 × 10^−12 | 2 × 10^−10 | F19 | 0 | 1 × 10^−6 | 8 × 10^−10 | 6 × 10^−22 | 5 × 10^−11 | 4 × 10^−13 | 4 × 10^−12 |
| - | Avg | 4 × 10^−29 | 6 × 10^−7 | 2 × 10^−4 | 5 × 10^−9 | 4 × 10^−3 | 1 × 10^−10 | 5 × 10^−8 | - | 1 × 10^−32 | 1 × 10^−1 | 4 × 10^−7 | 5 × 10^−19 | 2 × 10^−6 | 2 × 10^−5 | 1 × 10^−8 |
| - | Worst | 8 × 10^−28 | 3 × 10^−6 | 6 × 10^−4 | 5 × 10^−8 | 8 × 10^−2 | 9 × 10^−10 | 4 × 10^−7 | - | 4 × 10^−31 | 3.00 | 7 × 10^−6 | 1 × 10^−17 | 3 × 10^−5 | 2 × 10^−4 | 4 × 10^−7 |
| - | SD | 2 × 10^−28 | 8 × 10^−7 | 2 × 10^−4 | 1 × 10^−8 | 1 × 10^−2 | 2 × 10^−10 | 1 × 10^−7 | - | 8 × 10^−32 | 6 × 10^−1 | 1 × 10^−6 | 2 × 10^−18 | 6 × 10^−6 | 4 × 10^−5 | 7 × 10^−8 |
| F6 | Best | 0 | 0 | 0 | 0 | 0 | 0 | 0 | F20 | 0 | 3 × 10^−12 | 7 × 10^−11 | 2 × 10^−16 | 1 × 10^−10 | 5 × 10^−18 | 4 × 10^−13 |
| - | Avg | 0 | 3 × 10^−31 | 2 × 10^−32 | 1 × 10^−32 | 0 | 0 | 0 | - | 0 | 6 × 10^−10 | 1 × 10^−8 | 3 × 10^−13 | 3 × 10^−7 | 5 × 10^−16 | 5 × 10^−10 |
| - | Worst | 0 | 1 × 10^−30 | 3 × 10^−31 | 3 × 10^−31 | 0 | 0 | 0 | - | 0 | 6 × 10^−9 | 1 × 10^−7 | 3 × 10^−12 | 2 × 10^−6 | 2 × 10^−15 | 5 × 10^−9 |
| - | SD | 0 | 4 × 10^−31 | 8 × 10^−32 | 5 × 10^−32 | 0 | 0 | 0 | - | 0 | 1 × 10^−9 | 2 × 10^−8 | 6 × 10^−13 | 5 × 10^−7 | 5 × 10^−16 | 1 × 10^−9 |
| F7 | Best | 2 × 10^−15 | 7 × 10^−7 | 4 × 10^−3 | 4 × 10^−5 | 1 × 10^−6 | 8 × 10^−14 | 9 × 10^−13 | F21 | 0 | 9 × 10^−11 | 2 × 10^−8 | 3 × 10^−15 | 8 × 10^−10 | 3 × 10^−16 | 2 × 10^−11 |
| - | Avg | 4 × 10^−5 | 1 × 10^−2 | 2 × 10^−2 | 2 × 10^−4 | 6 × 10^−4 | 7 × 10^−3 | 4 × 10^−5 | - | 3 × 10^−32 | 5 × 10^−9 | 2 × 10^−6 | 1 × 10^−11 | 4 × 10^−6 | 7 × 10^−15 | 8 × 10^−9 |
| - | Worst | 5 × 10^−4 | 2 × 10^−1 | 4 × 10^−2 | 7 × 10^−4 | 1 × 10^−2 | 2 × 10^−1 | 5 × 10^−4 | - | 2 × 10^−31 | 3 × 10^−8 | 1 × 10^−5 | 1 × 10^−10 | 5 × 10^−5 | 3 × 10^−14 | 1 × 10^−7 |
| - | SD | 1 × 10^−4 | 5 × 10^−2 | 9 × 10^−3 | 1 × 10^−4 | 2 × 10^−3 | 4 × 10^−2 | 1 × 10^−4 | - | 6 × 10^−32 | 5 × 10^−9 | 3 × 10^−6 | 2 × 10^−11 | 9 × 10^−6 | 7 × 10^−15 | 2 × 10^−8 |
| F8 | Best | 0 | 2 × 10^−9 | 2 × 10^−7 | 2 × 10^−11 | 3 × 10^−7 | 5 × 10^−15 | 3 × 10^−9 | F22 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |
| - | Avg | 7 × 10^−33 | 5.00 | 1 × 10^−5 | 9 × 10^−10 | 1 × 10^−4 | 8 × 10^−13 | 9 × 10^−8 | - | 0 | 5 × 10^−9 | 0 | 0 | 0 | 0 | 0 |
| - | Worst | 6 × 10^−32 | 5 × 10 | 8 × 10^−5 | 6 × 10^−9 | 1 × 10^−3 | 4 × 10^−12 | 1 × 10^−6 | - | 0 | 4 × 10^−8 | 0 | 0 | 0 | 0 | 0 |
| - | SD | 1 × 10^−32 | 1 × 10 | 1 × 10^−5 | 1 × 10^−9 | 2 × 10^−4 | 1 × 10^−12 | 2 × 10^−7 | - | 0 | 9 × 10^−9 | 0 | 0 | 0 | 0 | 0 |
| F9 | Best | 0 | 2 × 10^−13 | 3 × 10^−15 | 2 × 10^−31 | 2 × 10^−27 | 6 × 10^−19 | 3 × 10^−16 | F23 | 0 | 2 × 10^−10 | 2 × 10^−7 | 1 × 10^−12 | 9 × 10^−8 | 3 × 10^−14 | 4 × 10^−11 |
| - | Avg | 0 | 3 × 10^−10 | 7 × 10^−11 | 1 × 10^−18 | 7 × 10^−7 | 6 × 10^−16 | 2 × 10^−11 | - | 1 × 10^−31 | 2 × 10^−8 | 4 × 10^−6 | 2 × 10^−9 | 2 × 10^−4 | 2 × 10^−12 | 4 × 10^−8 |
| - | Worst | 0 | 8 × 10^−10 | 1 × 10^−9 | 2 × 10^−17 | 2 × 10^−5 | 3 × 10^−15 | 4 × 10^−10 | - | 8 × 10^−31 | 1 × 10^−7 | 2 × 10^−5 | 2 × 10^−8 | 2 × 10^−3 | 1 × 10^−11 | 4 × 10^−7 |
| - | SD | 0 | 2 × 10^−10 | 3 × 10^−10 | 4 × 10^−18 | 4 × 10^−6 | 8 × 10^−16 | 8 × 10^−11 | - | 3 × 10^−31 | 2 × 10^−8 | 5 × 10^−6 | 4 × 10^−9 | 4 × 10^−4 | 3 × 10^−12 | 1 × 10^−7 |
| F10 | Best | 0 | 1 × 10^−10 | 5 × 10^−8 | 5 × 10^−15 | 2 × 10^−7 | 1 × 10^−14 | 3 × 10^−11 | F24 | 0 | 5 × 10^−12 | 1 × 10^−8 | 1 × 10^−13 | 3 × 10^−8 | 1 × 10^−16 | 1 × 10^−10 |
| - | Avg | 4 × 10^−30 | 5.00 | 3 × 10^−6 | 9 × 10^−12 | 3 × 10^−4 | 2 × 10^−12 | 3 × 10^−8 | - | 5 × 10^−31 | 8 × 10^−2 | 7 × 10^−6 | 2 × 10^−10 | 2 × 10^−5 | 1 × 10^−13 | 6 × 10^−2 |
| - | Worst | 1 × 10^−28 | 7 × 10 | 8 × 10^−6 | 5 × 10^−11 | 2 × 10^−3 | 7 × 10^−12 | 4 × 10^−7 | - | 3 × 10^−30 | 2.00 | 5 × 10^−5 | 1 × 10^−9 | 2 × 10^−4 | 5 × 10^−13 | 9 × 10^−1 |
| - | SD | 2 × 10^−29 | 2 × 10 | 2 × 10^−6 | 1 × 10^−11 | 6 × 10^−4 | 2 × 10^−12 | 7 × 10^−8 | - | 1 × 10^−30 | 5 × 10^−1 | 1 × 10^−5 | 4 × 10^−10 | 5 × 10^−5 | 1 × 10^−13 | 2 × 10^−1 |
| F11 | Best | 3 × 10^−32 | 2 × 10^−10 | 4 × 10^−7 | 2 × 10^−13 | 5 × 10^−22 | 5 × 10^−17 | 2 × 10^−10 | F25 | 0 | 1 × 10^−9 | 4 × 10^−7 | 3 × 10^−11 | 6 × 10^−21 | 1 × 10^−13 | 3 × 10^−10 |
| - | Avg | 1 × 10^−31 | 4 × 10^−9 | 9 × 10^−6 | 3 × 10^−11 | 5 × 10^−5 | 3 × 10^−14 | 3 × 10^−8 | - | 7 × 10^−3 | 4 × 10^−2 | 2 × 10^−5 | 8 × 10^−9 | 7 × 10^−3 | 7 × 10^−3 | 1 × 10^−2 |
| - | Worst | 2 × 10^−31 | 2 × 10^−8 | 3 × 10^−5 | 5 × 10^−10 | 3 × 10^−4 | 2 × 10^−13 | 1 × 10^−7 | - | 1 × 10^−1 | 1 × 10^−1 | 1 × 10^−4 | 4 × 10^−8 | 1 × 10^−1 | 1 × 10^−1 | 1 × 10^−1 |
| - | SD | 1 × 10^−31 | 3 × 10^−9 | 1 × 10^−5 | 9 × 10^−11 | 9 × 10^−5 | 4 × 10^−14 | 3 × 10^−8 | - | 3 × 10^−2 | 5 × 10^−2 | 3 × 10^−5 | 1 × 10^−8 | 3 × 10^−2 | 3 × 10^−2 | 3 × 10^−2 |
| F12 | Best | 8 × 10^−6 | 6 × 10^−6 | 2 × 10^−2 | 1 × 10^−5 | 4 × 10^−5 | 3 × 10^−5 | 1 × 10^−12 | F26 | 0 | 2 × 10^−11 | 4 × 10^−7 | 2 × 10^−11 | 4 × 10^−8 | 4 × 10^−15 | 4 × 10^−11 |
| - | Avg | 2 × 10^−3 | 7 × 10^−4 | 1 × 10^−1 | 5 × 10^−5 | 2 × 10^−2 | 7 × 10^−5 | 4 × 10^−9 | - | 4 × 10^−31 | 2 × 10^−9 | 1 × 10^−5 | 1 × 10^−8 | 2 × 10^−3 | 2 × 10^−3 | 7 × 10^−4 |
| - | Worst | 3 × 10^−2 | 1 × 10^−2 | 2 × 10^−1 | 9 × 10^−5 | 3 × 10^−1 | 2 × 10^−4 | 3 × 10^−8 | - | 3 × 10^−30 | 8 × 10^−9 | 1 × 10^−4 | 8 × 10^−8 | 7 × 10^−3 | 7 × 10^−3 | 7 × 10^−3 |
| - | SD | 6 × 10^−3 | 2 × 10^−3 | 5 × 10^−2 | 3 × 10^−5 | 6 × 10^−2 | 3 × 10^−5 | 7 × 10^−9 | - | 9 × 10^−31 | 2 × 10^−9 | 2 × 10^−5 | 2 × 10^−8 | 3 × 10^−3 | 3 × 10^−3 | 2 × 10^−3 |
| F13 | Best | 3 × 10^−14 | 5 × 10^−8 | 4 × 10^−6 | 2 × 10^−9 | 8 × 10^−6 | 2 × 10^−8 | 3 × 10^−7 | F27 | 0 | 3 × 10^−11 | 2 × 10^−8 | 5 × 10^−18 | 1 × 10^−10 | 5 × 10^−16 | 2 × 10^−12 |
| - | Avg | 9 × 10^−13 | 1 × 10^−1 | 2 × 10^−5 | 2 × 10^−8 | 1 × 10^−3 | 5 × 10^−5 | 8 × 10^−5 | - | 2 × 10^−31 | 1 × 10^−1 | 1 × 10^−6 | 2 × 10^−11 | 8 × 10^−6 | 2 × 10^−14 | 3 × 10^−8 |
| - | Worst | 4 × 10^−12 | 3.00 | 6 × 10^−5 | 1 × 10^−7 | 9 × 10^−3 | 3 × 10^−4 | 4 × 10^−4 | - | 2 × 10^−30 | 1.00 | 9 × 10^−6 | 4 × 10^−10 | 9 × 10^−5 | 1 × 10^−13 | 1 × 10^−7 |
| - | SD | 1 × 10^−12 | 6 × 10^−1 | 1 × 10^−5 | 2 × 10^−8 | 2 × 10^−3 | 8 × 10^−5 | 1 × 10^−4 | - | 5 × 10^−31 | 4 × 10^−1 | 2 × 10^−6 | 8 × 10^−11 | 2 × 10^−5 | 3 × 10^−14 | 4 × 10^−8 |
| F14 | Best | 2 × 10^−32 | 5 × 10^−9 | 3 × 10^−6 | 5 × 10^−14 | 1 × 10^−8 | 3 × 10^−14 | 2 × 10^−9 | F28 | 0 | 2 × 10^−9 | 3 × 10^−8 | 3 × 10^−15 | 4 × 10^−8 | 1 × 10^−16 | 3 × 10^−11 |
| - | Avg | 2 × 10^−32 | 3 × 10^−8 | 2 × 10^−5 | 2 × 10^−10 | 2 × 10^−4 | 1 × 10^−4 | 8 × 10^−7 | - | 2 × 10^−2 | 3 × 10^−2 | 4 × 10^−6 | 2 × 10^−10 | 1 × 10^−5 | 7 × 10^−3 | 1 × 10^−2 |
| - | Worst | 5 × 10^−32 | 8 × 10^−8 | 5 × 10^−5 | 4 × 10^−9 | 2 × 10^−3 | 3 × 10^−3 | 1 × 10^−5 | - | 5 × 10^−2 | 5 × 10^−2 | 4 × 10^−5 | 4 × 10^−9 | 1 × 10^−4 | 5 × 10^−2 | 5 × 10^−2 |
| - | SD | 8 × 10^−33 | 2 × 10^−8 | 1 × 10^−5 | 7 × 10^−10 | 4 × 10^−4 | 5 × 10^−4 | 3 × 10^−6 | - | 3 × 10^−2 | 3 × 10^−2 | 7 × 10^−6 | 8 × 10^−10 | 2 × 10^−5 | 2 × 10^−2 | 2 × 10^−2 |
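The Best, Avg, Worst, and SD rows summarize the final fitness values of repeated independent runs of each algorithm on each function. A minimal sketch of that summary follows; the helper name `summarize_runs`, the sample values, and the use of the sample (n − 1) standard deviation are assumptions, since the number of runs and the SD convention are not stated here.

```python
import statistics


def summarize_runs(final_fitness):
    """Summarize the final fitness of independent runs (lower is better)."""
    return {
        "Best": min(final_fitness),
        "Avg": statistics.mean(final_fitness),
        "Worst": max(final_fitness),
        # Sample standard deviation (n - 1 denominator); an assumption.
        "SD": statistics.stdev(final_fitness),
    }


if __name__ == "__main__":
    # Made-up residuals from five hypothetical runs, for illustration only.
    runs = [3e-10, 1e-9, 4e-9, 2e-9, 8e-10]
    for name, value in summarize_runs(runs).items():
        print(f"{name}: {value:.1e}")
```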

| F | BA P-value | BA h | FPA P-value | FPA h | CSA P-value | CSA h | MPA P-value | MPA h | SSA P-value | SSA h | SMA P-value | SMA h | F | BA P-value | BA h | FPA P-value | FPA h | CSA P-value | CSA h | MPA P-value | MPA h | SSA P-value | SSA h | SMA P-value | SMA h |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| F1 | 1 × 10^−12 | 1 | 1 × 10^−12 | 1 | 1 × 10^−12 | 1 | 1 × 10^−12 | 1 | 1 × 10^−12 | 1 | 4 × 10^−2 | 1 | F15 | 3 × 10^−1 | 0 | 5 × 10^−1 | 0 | 1 × 10^−5 | 1 | 1 × 10^−5 | 1 | 1 × 10^−6 | 1 | 4 × 10^−5 | 1 |
| F2 | 2 × 10^−11 | 1 | 2 × 10^−11 | 1 | 2 × 10^−11 | 1 | 2 × 10^−11 | 1 | 2 × 10^−11 | 1 | 2 × 10^−11 | 1 | F16 | 9 × 10^−10 | 1 | 3 × 10^−9 | 1 | 3 × 10^−9 | 1 | 3 × 10^−9 | 1 | 3 × 10^−9 | 1 | 3 × 10^−9 | 1 |
| F3 | 3 × 10^−11 | 1 | 3 × 10^−11 | 1 | 1 × 10^−1 | 0 | 3 × 10^−11 | 1 | 3 × 10^−11 | 1 | 3 × 10^−11 | 1 | F17 | 9 × 10^−12 | 1 | 9 × 10^−12 | 1 | 9 × 10^−12 | 1 | 9 × 10^−12 | 1 | 9 × 10^−12 | 1 | 9 × 10^−12 | 1 |
| F4 | 1 × 10^−6 | 1 | 6 × 10^−2 | 0 | 4 × 10^−1 | 0 | 1 × 10^−2 | 1 | 1 × 10^−2 | 1 | 3 × 10^−11 | 1 | F18 | 7 × 10^−8 | 1 | 2 × 10^−6 | 1 | 2 × 10^−2 | 1 | 5 × 10^−9 | 1 | 4 × 10^−9 | 1 | 4 × 10^−11 | 1 |
| F5 | 4 × 10^−12 | 1 | 4 × 10^−12 | 1 | 4 × 10^−12 | 1 | 4 × 10^−12 | 1 | 4 × 10^−12 | 1 | 4 × 10^−12 | 1 | F19 | 1 × 10^−11 | 1 | 1 × 10^−11 | 1 | 1 × 10^−11 | 1 | 1 × 10^−11 | 1 | 1 × 10^−11 | 1 | 1 × 10^−11 | 1 |
| F6 | 3 × 10^−7 | 1 | 2 × 10^−1 | 0 | 3 × 10^−1 | 0 | NaN | 0 | NaN | 0 | NaN | 0 | F20 | 1 × 10^−12 | 1 | 1 × 10^−12 | 1 | 1 × 10^−12 | 1 | 1 × 10^−12 | 1 | 1 × 10^−12 | 1 | 1 × 10^−12 | 1 |
| F7 | 6 × 10^−10 | 1 | 3 × 10^−11 | 1 | 4 × 10^−10 | 1 | 4 × 10^−10 | 1 | 2 × 10^−3 | 1 | 2 × 10^−10 | 1 | F21 | 2 × 10^−11 | 1 | 2 × 10^−11 | 1 | 2 × 10^−11 | 1 | 2 × 10^−11 | 1 | 2 × 10^−11 | 1 | 2 × 10^−11 | 1 |
| F8 | 2 × 10^−11 | 1 | 2 × 10^−11 | 1 | 2 × 10^−11 | 1 | 2 × 10^−11 | 1 | 2 × 10^−11 | 1 | 2 × 10^−11 | 1 | F22 | 1 × 10^−4 | 1 | NaN | 0 | NaN | 0 | NaN | 0 | NaN | 0 | NaN | 0 |
| F9 | 1 × 10^−12 | 1 | 1 × 10^−12 | 1 | 1 × 10^−12 | 1 | 1 × 10^−12 | 1 | 1 × 10^−12 | 1 | 1 × 10^−12 | 1 | F23 | 2 × 10^−11 | 1 | 2 × 10^−11 | 1 | 2 × 10^−11 | 1 | 2 × 10^−11 | 1 | 2 × 10^−11 | 1 | 2 × 10^−11 | 1 |
| F10 | 1 × 10^−11 | 1 | 1 × 10^−11 | 1 | 1 × 10^−11 | 1 | 1 × 10^−11 | 1 | 1 × 10^−11 | 1 | 1 × 10^−11 | 1 | F24 | 9 × 10^−12 | 1 | 9 × 10^−12 | 1 | 9 × 10^−12 | 1 | 9 × 10^−12 | 1 | 9 × 10^−12 | 1 | 9 × 10^−12 | 1 |
| F11 | 1 × 10^−11 | 1 | 1 × 10^−11 | 1 | 1 × 10^−11 | 1 | 1 × 10^−11 | 1 | 1 × 10^−11 | 1 | 1 × 10^−11 | 1 | F25 | 8 × 10^−10 | 1 | 6 × 10^−9 | 1 | 6 × 10^−9 | 1 | 4 × 10^−9 | 1 | 4 × 10^−9 | 1 | 3 × 10^−9 | 1 |
| F12 | 6 × 10^−3 | 1 | 4 × 10^−11 | 1 | 8 × 10^−6 | 1 | 9 × 10^−2 | 0 | 2 × 10^−4 | 1 | 3 × 10^−11 | 1 | F26 | 8 × 10^−12 | 1 | 8 × 10^−12 | 1 | 8 × 10^−12 | 1 | 8 × 10^−12 | 1 | 8 × 10^−12 | 1 | 8 × 10^−12 | 1 |
| F13 | 1 × 10^−8 | 1 | 7 × 10^−8 | 1 | 1 × 10^−7 | 1 | 2 × 10^−8 | 1 | 8 × 10^−8 | 1 | 5 × 10^−8 | 1 | F27 | 1 × 10^−11 | 1 | 1 × 10^−11 | 1 | 1 × 10^−11 | 1 | 1 × 10^−11 | 1 | 1 × 10^−11 | 1 | 1 × 10^−11 | 1 |
| F14 | 9 × 10^−12 | 1 | 9 × 10^−12 | 1 | 9 × 10^−12 | 1 | 9 × 10^−12 | 1 | 9 × 10^−12 | 1 | 9 × 10^−12 | 1 | F28 | 4 × 10^−6 | 1 | 4 × 10^−1 | 0 | 4 × 10^−1 | 0 | 4 × 10^−1 | 0 | 9 × 10^−2 | 0 | 1 × 10^−2 | 1 |
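Each P-value and h pair compares the per-run results of ICSA with those of one competitor on one function. The sketch below shows one way such a pair could be computed, assuming a two-sided Mann-Whitney U (rank-sum) test with a normal approximation and a 5% significance level; the choice of test follows the Mann-Whitney entry in the reference list, and the threshold is inferred from the h values shown, so both are assumptions, as is the function name `rank_sum_test`.

```python
import math


def rank_sum_test(a, b, alpha=0.05):
    """Two-sided Mann-Whitney U test (normal approximation, tie-corrected).

    Returns (p_value, h), where h is 1 if p_value < alpha and 0 otherwise.
    """
    n1, n2 = len(a), len(b)
    n = n1 + n2
    # Pool both samples, remembering which sample each value came from.
    pooled = sorted((v, g) for g, sample in enumerate((a, b)) for v in sample)

    rank_sum_a = 0.0
    tie_term = 0.0
    i = 0
    while i < n:
        j = i
        while j < n and pooled[j][0] == pooled[i][0]:
            j += 1
        avg_rank = (i + 1 + j) / 2.0          # mean of ranks i+1 .. j
        t = j - i                             # size of this tie group
        tie_term += t ** 3 - t
        rank_sum_a += avg_rank * sum(1 for k in range(i, j) if pooled[k][1] == 0)
        i = j

    u1 = rank_sum_a - n1 * (n1 + 1) / 2.0
    mean_u = n1 * n2 / 2.0
    var_u = n1 * n2 / 12.0 * ((n + 1) - tie_term / (n * (n - 1)))
    if var_u <= 0:
        # Every pooled value is identical, so the statistic is undefined.
        return float("nan"), 0

    z = max(abs(u1 - mean_u) - 0.5, 0.0) / math.sqrt(var_u)  # continuity correction
    p_value = math.erfc(z / math.sqrt(2.0))                  # two-sided tail
    return p_value, int(p_value < alpha)


if __name__ == "__main__":
    # Made-up per-run residuals for two hypothetical algorithms.
    p, h = rank_sum_test([1e-30, 2e-30, 0.0, 3e-31, 5e-31],
                         [1e-9, 3e-9, 2e-8, 7e-9, 4e-10])
    print(p, h)
```

In this sketch the test is undefined when both algorithms return identical values in every run, and it then reports NaN with h = 0. That may be how the NaN entries arise, for example on F6 and F22, where most algorithms report zeros throughout.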

| F | Metric | ICSA | BA | FPA | CSA | MPA | SSA | SMA | F | Metric | ICSA | BA | FPA | CSA | MPA | SSA | SMA |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| F29 | Best | 0 | 2 × 10^−7 | 1 × 10^−8 | 3 × 10^−17 | 5 × 10^−2 | 2 × 10^−2 | 2 × 10^−6 | F32 | Best | 0 | 3 × 10^−12 | 2 × 10^−11 | 1 × 10^−22 | 4 × 10^−9 | 8 × 10^−17 | |
| - | Avg | 2 × 10^−27 | 2 × 10^−5 | 4 × 10^−7 | 1 × 10^−14 | 2.00 | 5 × 10^−1 | 2 × 10^−4 | - | Avg | 5 × 10^−34 | 4 × 10^−4 | 3 × 10^−9 | 1 × 10^−16 | 2 × 10^−6 | 5 × 10^−14 | |
| - | Worst | 5 × 10^−26 | 5 × 10^−5 | 3 × 10^−6 | 1 × 10^−13 | 6.00 | 3.00 | 2 × 10^−3 | - | Worst | 3 × 10^−33 | 1 × 10^−2 | 1 × 10^−8 | 7 × 10^−16 | 1 × 10^−5 | 2 × 10^−13 | |
| - | SD | 1 × 10^−26 | 1 × 10^−5 | 7 × 10^−7 | 2 × 10^−14 | 1.00 | 5 × 10^−1 | 5 × 10^−4 | - | SD | 6 × 10^−34 | 2 × 10^−3 | 4 × 10^−9 | 2 × 10^−16 | 3 × 10^−6 | 8 × 10^−14 | |
| F30 | Best | 0 | 2 × 10^−11 | 2 × 10^−9 | 7 × 10^−19 | 4 × 10^−11 | 1 × 10^−16 | 9 × 10^−13 | F33 | Best | 1 × 10^−17 | 2 × 10^2 | 4 × 10 | 6.00 | 2 × 10^2 | 9 × 10^2 | |
| - | Avg | 1 × 10^−31 | 5 × 10^−9 | 4 × 10^−7 | 2 × 10^−16 | 4 × 10^−7 | 1 × 10^−14 | 3 × 10^−9 | - | Avg | 1 × 10^4 | 9 × 10^5 | 3 × 10^3 | 2 × 10^3 | 4 × 10^4 | 3 × 10^4 | |
| - | Worst | 5 × 10^−31 | 2 × 10^−8 | 2 × 10^−6 | 2 × 10^−15 | 4 × 10^−6 | 6 × 10^−14 | 4 × 10^−8 | - | Worst | 2 × 10^4 | 2 × 10^7 | 1 × 10^4 | 1 × 10^4 | 9 × 10^4 | 8 × 10^4 | |
| - | SD | 1 × 10^−31 | 5 × 10^−9 | 6 × 10^−7 | 4 × 10^−16 | 8 × 10^−7 | 1 × 10^−14 | 7 × 10^−9 | - | SD | 5 × 10^3 | 4 × 10^6 | 3 × 10^3 | 3 × 10^3 | 3 × 10^4 | 2 × 10^4 | |
| F31 | Best | 2 × 10^−30 | 5 × 10^−8 | 1 × 10^−3 | 3 × 10^−7 | 8 × 10^−8 | 2 × 10^−13 | 3 × 10^−11 | F34 | Best | 8 × 10^−16 | 3 × 10^−7 | 1 × 10^−9 | 1 × 10^−4 | 4 × 10^−5 | 2 × 10^−6 | |
| - | Avg | 9 × 10^−22 | 2 × 10^3 | 3 × 10^−2 | 3 × 10^−6 | 2 × 10^−2 | 2 × 10^−2 | 1 × 10^−6 | - | Avg | 8 × 10^−3 | 4 × 10^−2 | 2 × 10^−7 | 5 × 10^−4 | 2 × 10^−2 | 1 × 10^−2 | |
| - | Worst | 3 × 10^−20 | 7 × 10^4 | 1 × 10^−1 | 8 × 10^−6 | 2 × 10^−1 | 3 × 10^−1 | 1 × 10^−5 | - | Worst | 8 × 10^−2 | 4 × 10^−1 | 4 × 10^−6 | 1 × 10^−3 | 8 × 10^−2 | 8 × 10^−2 | |
| - | SD | 5 × 10^−21 | 1 × 10^4 | 2 × 10^−2 | 2 × 10^−6 | 4 × 10^−2 | 6 × 10^−2 | 3 × 10^−6 | - | SD | 3 × 10^−2 | 8 × 10^−2 | 6 × 10^−7 | 3 × 10^−4 | 3 × 10^−2 | 3 × 10^−2 | |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Abdel-Basset, M.; Mohamed, R.; Mohammad, N.; Sallam, K.; Moustafa, N. An Adaptive Cuckoo Search-Based Optimization Model for Addressing Cyber-Physical Security Problems. Mathematics 2021, 9, 1140. https://doi.org/10.3390/math9101140
