1. Introduction

In a sociological (or physical) investigation, it is common to distinguish three primary societal (or system) levels: the micro-level, the meso-level, and the macro-level. In sociology, micro-level analysis deals with a person in a social context or with a small group of people in a particular social setting. Consequences across a large population that result in significant internal transitions are a matter of the macro-level, commonly associated with the so-called global level. In turn, a meso-level study addresses groups whose size lies between the micro and macro levels, and such groups are often introduced explicitly in order to disclose relations between the micro and macro levels. Following this general taxonomy, we can analogously consider the Information (Digital) Age (a well-known name for the modern period) as a macro-level industrial revolution, expressed by a rapid transition from traditional industry to the massive application of information technology. Thus, humanity reconsiders old-fashioned machinery, aiming for new manufacturing solutions to new, regularly arising problems. Modern technologies lead to difficult additional tasks of “global balancing” in the interactions between humans and nature. Most people hardly notice how significantly their lifestyle has changed on the micro-level compared with the prior industrial standard of living. The majority barely give serious consideration to modern discoveries in the natural sciences, such as those in high-energy physics or cosmology. For instance, even though quantum theory has proven itself essential in nanotechnology [1,2], as have particle accelerators in tumor treatment [3], for many people these fundamental achievements remain “abstract nonsense”. Consequently, the scientific community, when facing a severe problem, often cannot deliver a rigorous theoretical evaluation of new, previously unknown phenomena. This happens because of the known crisis of the modern scientific view of the “picture of the world”. To handle this global difficulty, it seems reasonable to pay attention primarily to an appropriate theory that is based on a suitable mathematical model. Note that a real-world system often cannot be described completely by a partial model, which raises the need for a model with the lowest possible bias. While discussing the concept of a model, it is essential to mention the notion of information. According to [4], data are inextricably presented as a reflection of a mutable object and can be described through other primary notions, such as “matter”, “structure”, or “system”. Information can be stored and transferred with a material carrier, which gives it a form that can be further perceived. It should also be noted that data, in this sense, are taken from a system in order to be exploited further. Generally speaking, information is a complex of ideas and representations of patterns that a person discovers in the real world. Thus, one may visualize a typical personal information process, as follows:

Thus, a model is nothing but a way of processing information into knowledge. It is important to note that human "perception" is an uninterrupted procedure of data accumulation, with an "integration" that is needed in order to attain the required awareness.

Now, the question is whether we can decompose the information process further. In fact, psychology and cybernetics make attempts to do so; however, the further it goes, the harder it is to track the relation between a complex cognitive system and its fundamental components.

1.1. A New Approach to Compression

When comparing Data and Control Science with Physics, we can uncover their common features and fundamental differences at the outset. The central distinction between them is that Control Science studies systems with different complexity levels, commonly perceiving complexity as a scale, whereas Physics deals, to some extent, with various systems in space and time, expressed in scales such as “length”. For example, a galaxy is immense in its spatial extent, while the brain is immense in the complexity of its inner workings. As co-existing measures, these gradations are indeed tools that unite Physics with Data and Control Science in comprehending the world around us. Following the conventional view of the history of the Universe, we can suggest that the initial chaos of elementary particles strives to arrange (clusterize) into the molecular connectivity studied by chemistry. This structure later led to the origin (clusterization) of life as a highly organized form of matter, studied by biology. The described clustering process is a subject of Control Science, which can seemingly connect the natural sciences while studying each scale of complexity of the organization of matter from its own standpoint.

It appears that clustering is closely related to the notion of “compression”. To understand compression, it is helpful to note that a human is usually not capable of accumulating every detail of personal sensory perception (registered by each cone and rod), but only the essential details. Such details could be considered a clusterization of low-level data from the retina into high-level features. Moreover, the general tendency is to forget even these details, mainly because of the brain’s limited capabilities. Accordingly, the data are distorted or lost, which leads to a deterioration in the quality of information recovery. In general, as discussed in [5], our mind quite often compresses data while using induction or deduction, in order to simplify information regarding the world.

This peculiarity of human perception is broadly exploited in computer systems. The famous Kotelnikov–Nyquist–Shannon sampling theorem [6,7] dictates the general rules of signal digitization, which allow for compressing a continuous signal flow into a discrete set of numbers without a loss of information. However, this approach has reached its limits over the years: the number of samples grows exponentially as the signal dimensionality grows linearly, so that, where a 1-D signal requires $N$ samples to be preserved, a corresponding 2-D signal would require $N^2$ samples, not to mention the signals of higher dimensionality that are routinely transmitted today. Thus, compression algorithms are incredibly relevant nowadays, since they allow for speeding up information exchange at a fixed bandwidth: e.g., MP3 [8,9] gets rid of unnecessary high sound frequencies in music, while JPEG [10,11] reduces the picture file size at the cost of coarsening the high-frequency components that are responsible for small details. A similar kind of compression is believed to occur in the flow of visual information (if we follow the most popular hypothesis) in the brain’s ventral and dorsal streams. However, such processes are performed “on the fly” in the brain, whereas a computer can only manipulate data that have been given in advance for compression.
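The idea of trading fine detail for a shorter representation can be illustrated with a toy frequency-domain example. The sketch below is not the MP3 or JPEG algorithm; it only assumes a hypothetical 1-D signal made of one slow and one fast sinusoid, and keeps the lowest Fourier coefficients.

```python
import numpy as np

n = 256                                   # number of samples (hypothetical)
t = np.arange(n) / n                      # uniform sampling of one period
low = np.sin(2 * np.pi * 3 * t)           # coarse structure of the signal
high = 0.5 * np.sin(2 * np.pi * 40 * t)   # fine detail
signal = low + high

spectrum = np.fft.rfft(signal)            # n // 2 + 1 complex coefficients
keep = 10                                 # retain only the 10 lowest frequencies
compressed = spectrum[:keep]              # what would be stored or transmitted

# Reconstruction: pad the discarded coefficients with zeros and invert.
padded = np.zeros_like(spectrum)
padded[:keep] = compressed
restored = np.fft.irfft(padded, n=n)

# Only the fine detail is lost; the coarse structure survives.
error_vs_low = np.max(np.abs(restored - low))
error_vs_signal = np.max(np.abs(restored - signal))
```

Here 10 coefficients replace 129, and the reconstruction differs from the original only by the discarded high-frequency component.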

Mathematically, real-time compression can be understood as selecting the essential parts of the surrounding big data that contain the comprehensive information and then storing linear combinations of these parts (possibly with random weights). To implement this idea, the method of compressive sensing was proposed and discussed in [12,13,14]. In this paradigm, it is possible to compress a signal gradually, as it is read or perceived by a sensing system. Assume that a signal $f \in \mathbb{R}^N$ is $s$-sparse in some domain $\Psi$, namely $f = \Psi x$, where $x$ has, at most, $s$ ($s \ll N$) non-zero elements (thus being called an $s$-sparse vector). In our interpretation, it means that, in some basis, the comprehensive information is stored in only $s$ units of data out of $N$. Compressive sensing can then be described as:

$$y = \Phi f = \Phi \Psi x = A x, \qquad (1)$$

where $\Phi$ is an $m \times N$ sampling matrix, $A$ is the so-called measurement matrix, and $y \in \mathbb{R}^m$ is called the measurement vector or vector of compressed observations. Because $m < N$, the estimation of $x$ from a given $y$ admits infinitely many solutions. However, if $x$ is $s$-sparse, it becomes possible to reconstruct it without much loss of useful data with only $m$ variables being required. According to [12,13,14], among the solutions of (1), we prefer those that maximize the number of zero elements in $x$. While one would be interested in minimizing the $\ell_0$ norm, as it provides the sparsest solution, this problem is NP-hard, and a linear relaxation via the $\ell_1$ norm provides a good compromise between sparsity and computational complexity [12,15]. It is possible to compute an $\ell_1$-optimized solution in polynomial time, using, for example, the interior-point method [16,17]. Optimization with the $\ell_1$ metric for the task of pattern recognition was first discussed in [18]. An optimal sparse $\ell_1$-stabilizing controller for a non-minimum phase system (a solution for the task of $\ell_1$-optimization in an infinite-dimensional space) was proposed in [19].

While the design of

is, in general, complicated, some randomized choices of

are possible according to the restricted isometry property (RIP) and minimization of incoherence [

4]. It was shown that only

measurements are sufficient for reconstructing

x. In a special case when the elements

of

A are independent and identically distributed according to the normal distribution

then

measurements are sufficient. In practice, it is known that

measurements are often enough for the initial sparse data to be effectively reconstructed.
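The compress-and-reconstruct cycle described above can be sketched numerically. The example below is a minimal illustration under stated assumptions: the sizes `N`, `s`, and `m` are hypothetical, the entries of `A` are drawn with variance `1/m` (a common normalization, assumed here), and the sparse vector is recovered with orthogonal matching pursuit, a simple greedy substitute for the $\ell_1$ minimization with an interior-point solver that the text refers to.

```python
import numpy as np

def omp(A, y, max_atoms, tol=1e-10):
    """Orthogonal matching pursuit: greedily select columns of A that explain y."""
    support = []
    coef = np.zeros(0)
    residual = y.copy()
    while len(support) < max_atoms and np.linalg.norm(residual) > tol * np.linalg.norm(y):
        # Column most correlated with what is still unexplained.
        k = int(np.argmax(np.abs(A.T @ residual)))
        if k in support:
            break
        support.append(k)
        # Least-squares refit on all selected columns.
        coef, *_ = np.linalg.lstsq(A[:, support], y, rcond=None)
        residual = y - A[:, support] @ coef
    x_hat = np.zeros(A.shape[1])
    x_hat[support] = coef
    return x_hat

rng = np.random.default_rng(0)
N, s, m = 200, 5, 100                      # hypothetical sizes with s << m < N

# s-sparse vector whose non-zero entries have magnitude between 1 and 2.
x = np.zeros(N)
idx = rng.choice(N, size=s, replace=False)
x[idx] = rng.choice([-1.0, 1.0], size=s) * rng.uniform(1.0, 2.0, size=s)

A = rng.normal(0.0, 1.0 / np.sqrt(m), size=(m, N))   # i.i.d. Gaussian entries
y = A @ x                                             # m compressed observations

x_hat = omp(A, y, max_atoms=4 * s)
recovery_error = np.max(np.abs(x_hat - x))
```

The vector `y` has half as many entries as `x`, yet the sparse `x` is recovered up to floating-point error; a dense `x` would not be recoverable from the same measurements.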

1.2. Multiagent Systems

The complexity levels in the configuration of matter, as studied in Data and Control Science, lead to the emergence of the notion of a "complex system". It is important to note that complexity is ascribed not so much to the system comprehended as a static object as to the dynamic processes occurring within it. These processes are the primary subject of our research. A lack of computing power and the limitations of cognition methods cause many severe difficulties in such research. In this regard, an approach intended to study a particular complex process resorts to various generalizations that simplify the considered task. For some time, systems consisting of numerous practically indistinguishable segments with obscure, disordered interactions have only been described statistically. However, it appears to be impossible to describe the system’s overall behavior using just the macroscopic statistical characteristics of its constituent elements: this approach can explain only a minimal class of the perceived effects. While the properties of many complex systems may differ significantly from relatively simple states of equilibrium or chaos, statistical approaches deal with averaged or integrated subsystems. This circumstance can lead to the omission of important details if, for example, the system forms a pattern or a structure of clusters.

The above-described manifestation of properties of the whole system that are not inherent to its separate elements is called emergence [20,21,22]. Indeed, any characteristic of a structure that is not comprehended can be considered emergent. For example, if a person cannot understand why a car moves, then moving is understood as an emergent behavior of the system, i.e., the car. However, this process is undoubtedly well understood from an engineering standpoint, in terms of the smooth operation of the engine, transmission, and control system. Thus, emergence may turn out to be a common subjective misunderstanding, and it is quite another matter if the system’s behavior is a mystery beyond the capacity of any scientist. Say, consciousness is an emergent behavior of the brain from a naturalist’s point of view. To date, there are no apparent means of explaining the concept of consciousness itself based only on the elements composing the brain. Moreover, there is not even a way to check whether such a system is cognizable at all with the help of an arbitrarily powerful existing intelligence, or whether it is fundamentally, objectively unknowable. In the latter case, the emergence is ostensive [21].

Assuming that the surrounding systems are still subjectively emergent, we can critically appraise the statistical methods that have been applied to study complex systems. As an alternative to the accepted statistical methodology, the current article suggests an agent-based approach designed to overcome the limitations of this practice. In the agent-oriented framework, a complex system (called a multiagent system) is regarded as an arrangement of basic independent elements (termed intelligent agents or just agents), which communicate with each other [23,24,25]. The current study intends to suggest a unified general theory connecting the macroscopic scale of the whole system and the microscopic scale of the individual components. It aims to understand the reasons for the "complexity" occurring in multiagent networks. An expected result of such an approach is the ability to construct and manage multiagent systems (MAS) without an in-depth investigation of the agents’ profound nature.

The well-known belief–desire–intention (BDI) model of intelligent agents suggests that each agent has a "convinced" achievable goal, including the excitation of particular states, stabilization, or mutual synchronization. The latter subject, which is of particular interest [26,27], is frequently associated with the agents’ self-organization in a network. The idea behind this phenomenon lies in the behavior of agents within a MAS: agents can regulate their internal states by exchanging messages, with the aim of forming a system structure on a macroscopic scale. It is worth noting that a system can start from an arbitrary initial state, and not all agents are required to interact with others. These structures may include persistent global states (for example, task distribution in load balancing for a computer network [28]) or synchronized oscillations (Kuramoto networks [29]).

Because multiagent networks are usually described by dynamic systems, the synchronization state signifies a convergence of dynamic trajectories to a single synchronized one. This phenomenon is well studied in the case of linear systems [23,30,31]. The more general case of nonlinear control rules was first considered in [32], where the Kuramoto model of coupled oscillators is analyzed as an example of a system with nonlinear dynamics.

Cluster synchronization is a particular type of such behavior, which is essential in control tasks. Real large-scale systems, like groups of robots operating in a continuously changing setting, are frequently too complicated to be regulated by traditional procedures that involve, for example, approximation by classical ODE models. However, some groups in a population sometimes synchronize in clusters: agents that belong to the same group are synchronized, whereas agents that belong to different groups are not. Because these clusters can be treated as separate variables, it is possible to significantly reduce the number of control system inputs.

Thus, we can now introduce three levels of complex multiagent systems: microscopic (the level of individual agents), mesoscopic (the level of clusters), and macroscopic (the level of the system as a whole). The relation between these levels can be written as

$$N \gg M \gg 1, \qquad (2)$$

where $N$ is the number of agents and $M$ is the number of clusters, which is supposed to be of the same order as the sparsity of the system.

In this paper, we reformalize the framework of multiagent networks proposed in [23] and refined in [32], aiming to apply compressive sensing for efficient observation of dynamic trajectories. For this purpose, we propose an algorithm in which the trajectories are first quantized and then compressed. Afterward, we visualize the results and compare the quantized representation of the original state space with the reconstructed one. In some cases, the visual representation of the state space may lack contrast, especially when the resolution is quite high (the quantization step is small). To resolve this issue, contrast enhancement techniques may be considered [33,34].

1.3. The Kuramoto Model

Y. Kuramoto [29] proposed a straightforward, yet versatile, nonlinear model of the oscillatory dynamics of coupled oscillators. Given a network of $N$ agents (here and further, $\mathcal{N}$ denotes the set of $N$ agents), each with one degree of freedom, frequently called the phase of an oscillator, the following system of differential equations describes its dynamics:

$$\dot{\theta}_i = \omega_i + \sum_{j=1}^{N} K_{ij} \sin(\theta_j - \theta_i), \qquad (3)$$

where $\theta_i$ is the phase of an agent-oscillator $i$, $K_{ij}$ is a weighted adjacency matrix of the network, and $\omega_i$ is the own (inherent) frequency of an oscillator. Consistent with [35,36,37], agents move towards a state of frequency ($\dot{\theta}_i = \dot{\theta}_j$) or phase ($\theta_i = \theta_j$, $\forall i, j \in \mathcal{N}$) synchronization under particular conditions on $K_{ij}$ and $\omega_i$.
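The dynamics of coupled phase oscillators can be explored with a few lines of code. The sketch below assumes the standard Kuramoto form, in which the rate of change of each phase is its own frequency plus a weighted sum of sines of phase differences, and integrates it with an explicit Euler scheme. The all-to-all coupling, the identical frequencies, the step size, and the order parameter used as a synchronization diagnostic are illustrative assumptions, not settings taken from the text.

```python
import numpy as np

def kuramoto_step(theta, omega, K, dt):
    """One Euler step of d(theta_i)/dt = omega_i + sum_j K_ij * sin(theta_j - theta_i)."""
    # diff[i, j] = theta_j - theta_i
    diff = theta[None, :] - theta[:, None]
    return theta + dt * (omega + np.sum(K * np.sin(diff), axis=1))

def order_parameter(theta):
    """Modulus of the mean phasor; a value of 1 means full phase synchronization."""
    return float(np.abs(np.mean(np.exp(1j * theta))))

rng = np.random.default_rng(1)
N = 16
theta = rng.uniform(0.0, 2.0 * np.pi, N)   # random initial phases
omega = np.ones(N)                          # identical own frequencies (assumption)
K = np.full((N, N), 0.5)                    # all-to-all coupling (assumption)
np.fill_diagonal(K, 0.0)

r_start = order_parameter(theta)
for _ in range(5000):                       # integrate up to t = 50
    theta = kuramoto_step(theta, omega, K, dt=0.01)
r_end = order_parameter(theta)
```

With identical frequencies and positive all-to-all coupling, the order parameter approaches 1, which corresponds to phase synchronization. Replacing `K` with a block-structured matrix is one way to experiment with clusters.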

Many generalizations of this model, like time-varying coupling constants (adjacency matrix

) and frequencies

, have been proposed [38]. The Kuramoto model with phase delays is considered in [39,40]. Furthermore, there exist unconventional multiplex [41], quantum [42], and cluster synchronization [43] variants of this model.

The mentioned model is an effective instrument for explaining cortical activity in the human brain [41], exposing three types of brain activity that correspond to three states of the Kuramoto network: unsynchronized, highly synchronized, and cluster-synchronized. The methodology behind the model is also applied in robotics. For instance, the task of pattern formation on a circle using rules inspired by the model is presented in [44]. The paper [45] proposes the idea of an artificial brain based on Kuramoto oscillators. In [32], cluster synchronization appearing in the discussed framework is examined in the context of multiagent systems. Within the framework that we reformalize in the current paper, the restrictions under which the clusterization remains invariant were formulated. The simplicity and usefulness of this model caught our attention, so we use it below to demonstrate how compressive sensing can be applied to multiagent clustering.

2. Cluster Flows

The framework of cluster flows was first proposed in [23] and then supplemented by additional concepts and definitions in [32]. In the current work, we introduce this framework in the same form as it is formulated in our previous article [32]. Thus, the definitions that we present in this paper strictly replicate those proposed in the mentioned previous works.

With the intention of investigating the Kuramoto model, we first discuss general multiagent systems. Throughout, we consider non-isolated systems that consist of

N agents, whose evolution is determined by their current state, their overall configuration, and the environment’s external influence. The following system of differential equations characterizes the dynamics of the agents’ interaction:

where

t is unidirectional time,

is a so-called state vector of an agent

;

is a microscopic control input describing local interactions between agents;

is another function, which is responsible for macro- or mesoscopic control simultaneously affecting large groups of agents; and,

is an uncertain vector, which adds stochastic disturbances to the model. Despite that

and

can be included in the state vector of a system, it is essential to separate them from the state vectors for two reasons: in the context of information models, it is necessary to distinguish both the local process of communication (

) and external effects (

) that are usually caused by a macroscopic agent with an actuator that can influence the whole system; moreover, this separation clarifies the model itself. The state vector

may also have a continuous index, so that it becomes a field

, where

a is a continuous set of numbers. This could allow for reducing the summation to integration in some cases.

As is widely accepted, the topology of an agents’ network is represented by means of a directed interaction graph: , where is an agent vertex set and is the set of directed arcs. Introduce as a time-dependent neighborhood of an agent i that consists of a set of in-neighbors of this agent. We further denote the in-degree of a vertex-agent i () by , where are the elements of an adjacency matrix A of (the sum of the weights of the corresponding arcs). Likewise, the in-degree of a vertex-agent i excluding j () is . A graph is said to be strongly connected if there is a directed path from any vertex-agent to every other one.

Our next purpose is to introduce two agent outputs, one intended for communication and another for evaluating the synchronization between agents. For this purpose, we associate with each agent i its output function :

Definition 1. A function is called an output of an agent i if , where l does not depend on i.

In what follows, we distinguish two outputs: the former is applied to the communication process, while the latter is responsible for measuring inter-agent synchronization.

First, let

be a communication output of an agent

j from the neighborhood of

i. We assume that the dynamics of an agent

i at time

t is affected by the outputs

of the agents

j from its neighborhood. Further, we refer to this relation between agents as "coupling". In practice, the output data flow

can be transmitted from

j to

i or shown by the agent

j and then identified by

i. The exact transmission rules are usually outlined in

(see (

4)):

where

depends on the outputs

. The equation (

5) provides control rules for

i based on all outputs

j recognized by

i. In this regard, as in network science and control theory, we call it a coupling protocol. Thus, we present the definition of a multiagent network, as in [32]:

Definition 2. The triple consisting of (1) a family of agents (see (4)); (2) interaction graph ; and, (3) coupling protocol defined as in equation (5) is called a multiagent network. Henceforth, we denote a multiagent network by the letter and then associate it with its set of agents. Let be an output of an agent i, which we introduce for the measurement of synchronization.

Definition 3. Let stand for the deviation between outputs and at time t, where is a corresponding norm. Then:

- 1.

agents i and j are (output) synchronized, or reach (output) consensus at time t if ; similarly, agents i and j are asymptotically (output) synchronized if ; and,

- 2.

agents i and j are (output) ε-synchronized, or reach (output) ε-consensus at time t if ; similarly, agents i and j are asymptotically (output) ε-synchronized if .
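Definition 3 can be checked numerically for a snapshot of agent outputs. The sketch below is a minimal illustration in which the deviation is taken as the Euclidean norm of the difference between two outputs and ε-synchronization is read as "deviation not larger than ε"; both choices, as well as the function names and sample values, are assumptions made for the example.

```python
import numpy as np

def deviation_matrix(outputs):
    """Pairwise deviations d[i, j] = ||z_i - z_j|| for outputs of shape (N, l)."""
    diff = outputs[:, None, :] - outputs[None, :, :]
    return np.linalg.norm(diff, axis=-1)

def eps_synchronized(outputs, eps):
    """Boolean matrix whose (i, j) entry tells whether agents i and j are eps-synchronized."""
    return deviation_matrix(outputs) <= eps

# Three agents with scalar outputs (hypothetical values).
z = np.array([[0.00], [0.05], [1.00]])
sync = eps_synchronized(z, eps=0.1)
```

In this snapshot, agents 0 and 1 are ε-synchronized with ε = 0.1, while agent 2 is synchronized with neither of them. Exact synchronization corresponds to ε = 0.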

Summarizing the above, cluster synchronization of microscopic agents may lead to mesoscopic-scale patterns that are recognizable by a macroscopic sensor. However, there is no need to restrict those patterns to be static, since the most interesting cases appear when the patterns evolve and change in time. Such alterations may be caused either by external impacts, or as a result of critical changes inside the system.

Definition 4. A family of subsets of is said to be a time-dependent partition over (in this work, time-dependent partition is also called just “partition” for the sake of simplicity) at time t if the following conditions hold:

- 1.

;

- 2.

; and,

- 3.

.

The following definition interprets cluster synchronization in terms of a set partition with conditions for the outputs .

Definition 5. A multiagent network with a partition over is (output) -synchronized, or reaches (output) -consensus, at time t for some if

- 1.

and

- 2.

, .

A -synchronization is henceforth referred to as cluster synchronization. We also say that the is a clustering over .
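A candidate clustering can be validated against a snapshot of outputs in a similar way. The sketch below rests on two assumptions that should be compared with the formal definitions: a partition is taken to be a family of non-empty, pairwise disjoint subsets that covers all agents, and cluster synchronization is read as "deviations of at most `eps` within a cluster and greater than `delta` between clusters". The function names, the thresholds, and the Euclidean norm are illustrative.

```python
from itertools import combinations
import numpy as np

def is_partition(clusters, n_agents):
    """True if clusters are non-empty, pairwise disjoint, and cover agents 0..n_agents-1."""
    members = [i for c in clusters for i in c]
    return (all(len(c) > 0 for c in clusters)
            and len(members) == len(set(members))
            and set(members) == set(range(n_agents)))

def is_cluster_synchronized(outputs, clusters, eps, delta):
    """Assumed reading: deviation <= eps inside clusters and > delta between clusters."""
    if not is_partition(clusters, len(outputs)):
        return False

    def dev(i, j):
        return np.linalg.norm(outputs[i] - outputs[j])

    within = all(dev(i, j) <= eps
                 for c in clusters for i, j in combinations(sorted(c), 2))
    between = all(dev(i, j) > delta
                  for a, b in combinations(clusters, 2) for i in a for j in b)
    return within and between

# Four agents with scalar outputs forming two tight groups (hypothetical values).
z = np.array([[0.00], [0.02], [1.00], [1.03]])
good = is_cluster_synchronized(z, [{0, 1}, {2, 3}], eps=0.05, delta=0.5)
bad = is_cluster_synchronized(z, [{0, 2}, {1, 3}], eps=0.05, delta=0.5)
overlapping = is_cluster_synchronized(z, [{0, 1}, {1, 2, 3}], eps=0.05, delta=0.5)
```

Only the first grouping passes: the second mixes agents from distant groups, and the third is not a partition because agent 1 appears twice.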

Each cluster in a system with clusters also has a set of cluster integrals: such an approach is usually used for dimensionality reduction in physical models.

4. Simulations

The simulations are set up using Python, as the simplicity of this programming language allows us to focus on algorithms rather than syntax. We have implemented the code in Jupyter Notebook, where the visual representation of results interactively and sequentially accompanies the corresponding blocks of code. The simulation environment can be found in the GitHub repository [46] and is free to use.

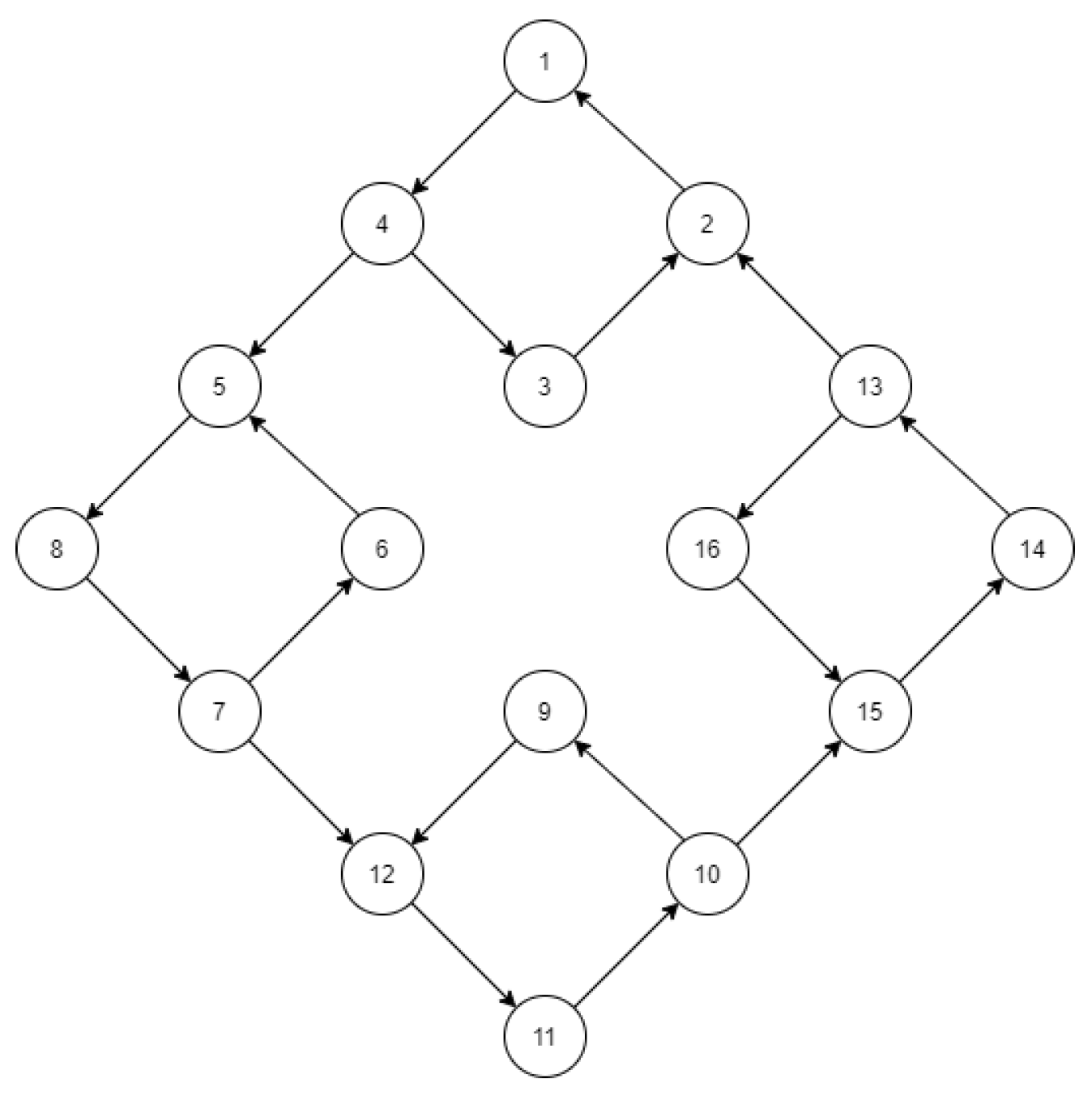

Consider model (

7) and its solutions on

. Let the number of agents

N be equal to 16, and let the topology of connections between them be as in Figure 1. Although we choose N to be not very large, the quantized state space turns out to be of significant dimensionality, as will be shown below. Such a state space may certainly include a much larger number of agents. However, after cluster synchronization, they would occupy a relatively small zone (thus making the state space sparse), whether there are 16 or 1000 agents. That said, in the current work, we focus on a simple example just to demonstrate the main features of our approach.

Additionally, let the global connection strength

and inherent frequencies

be, as follows:

,

, so that agents from one “square”-shaped component of the graph

(see Figure 1) satisfy the condition of Theorem 1 given in (8). On the other hand, agents from different "squares" do not obey those conditions.

Assuming that the initial phases

are uniformly distributed on a circle

, in the absence of any mesoscopic control (

) and given that the outputs

coincide with

, the described configuration leads to cluster synchronization with a partition

consisting of four subsets of agents. In other words, we obtain

-synchronized clusters for some values

and

, such that

, which are analyzed in [

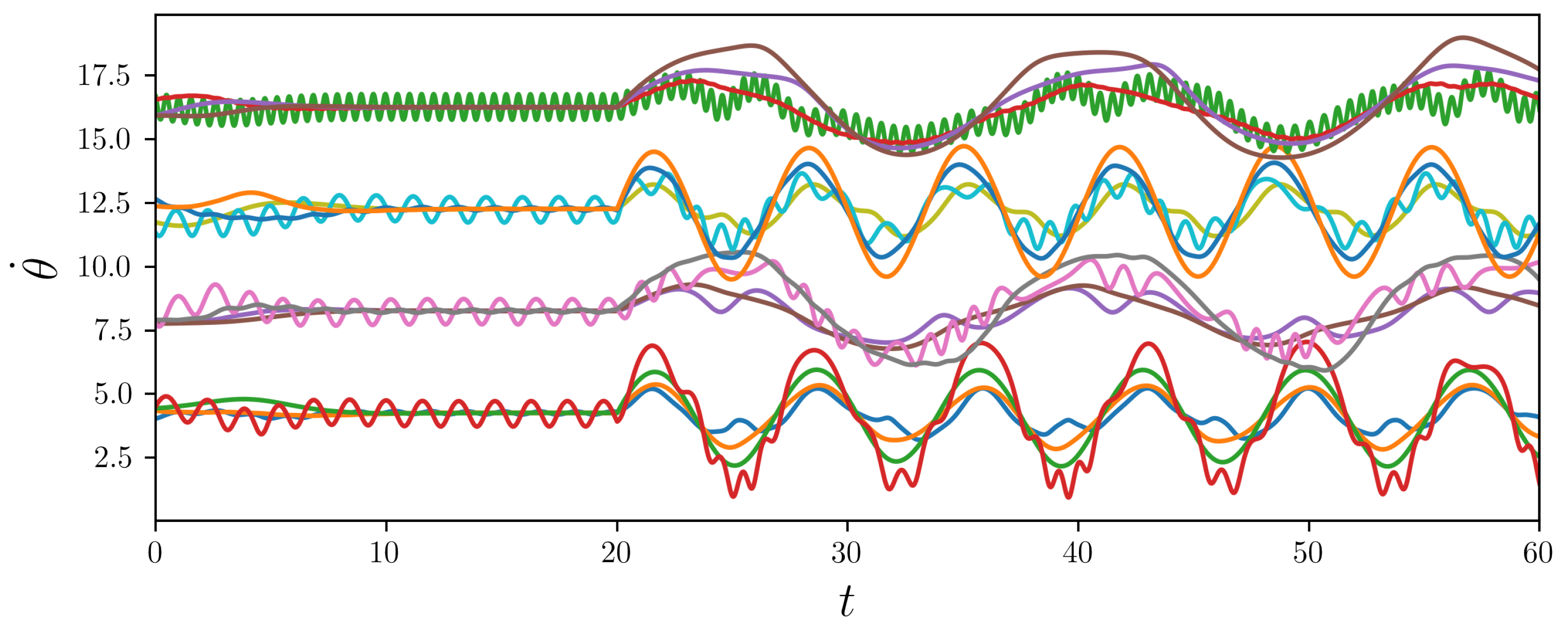

32]. However, in this work, we focus on non-trivial “flowing” cluster patterns, which emerge after adding a sinusoidal control function

at

, i.e., when

-synchronization visually estabilish:

where

are uniformly distributed on an interval

. We introduce the set of values

:

. According to the Theorem 1 (see (

8) and (

11)), the assumed values of agents’ sensitivity may break cluster invariance. The simulated results that are illustrated in

Figure 2 prove this statement to be correct.

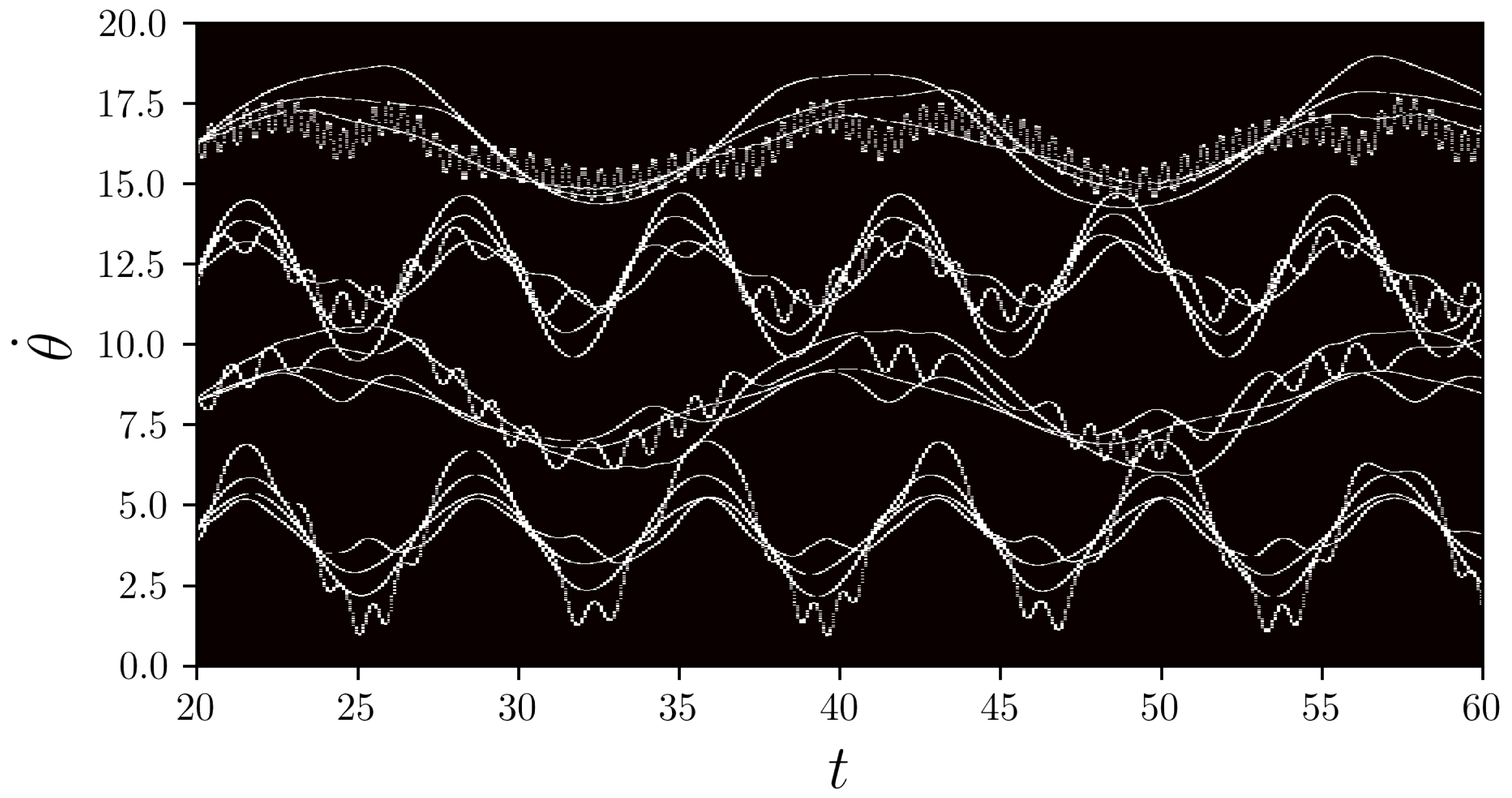

We denote the parameters for quantization of the state space:

, , ; and,

, , .

The corresponding

matrix

is constructed according to the algorithm described above and it is shown in

Figure 3.

It turned out that of ’s elements are zero, so that its columns have sparse representation in the standard basis. We choose to compress the columns of , since they represent the states of the considered systems observed during the time interval .
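The sparsity of a quantized state space can be illustrated with a small example. The sketch below is not the quantization algorithm of this paper; it assumes a hypothetical scheme in which the circle is split into `n_bins` equal cells and each column of the resulting matrix counts the agents found in every cell at one time step.

```python
import numpy as np

def quantize_trajectories(theta, n_bins):
    """Occupancy matrix of shape (n_bins, T) built from phases theta of shape (T, N)."""
    n_steps = theta.shape[0]
    # Map every phase on [0, 2*pi) to a cell index in 0..n_bins-1.
    wrapped = np.mod(theta, 2.0 * np.pi)
    idx = np.minimum((wrapped / (2.0 * np.pi) * n_bins).astype(int), n_bins - 1)
    Q = np.zeros((n_bins, n_steps))
    for t in range(n_steps):
        # Count how many agents fall into each cell at time step t.
        Q[:, t] = np.bincount(idx[t], minlength=n_bins)
    return Q

# Two time steps, three agents (hypothetical phases in radians).
theta = np.array([[0.1, 0.2, 3.2],
                  [1.6, 1.7, 6.2]])
Q = quantize_trajectories(theta, n_bins=4)
zero_fraction = float(np.mean(Q == 0))
```

Agents that move together share a cell, so the number of non-zero entries per column is bounded by the number of agents and drops further once clusters form, regardless of how fine the grid is.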

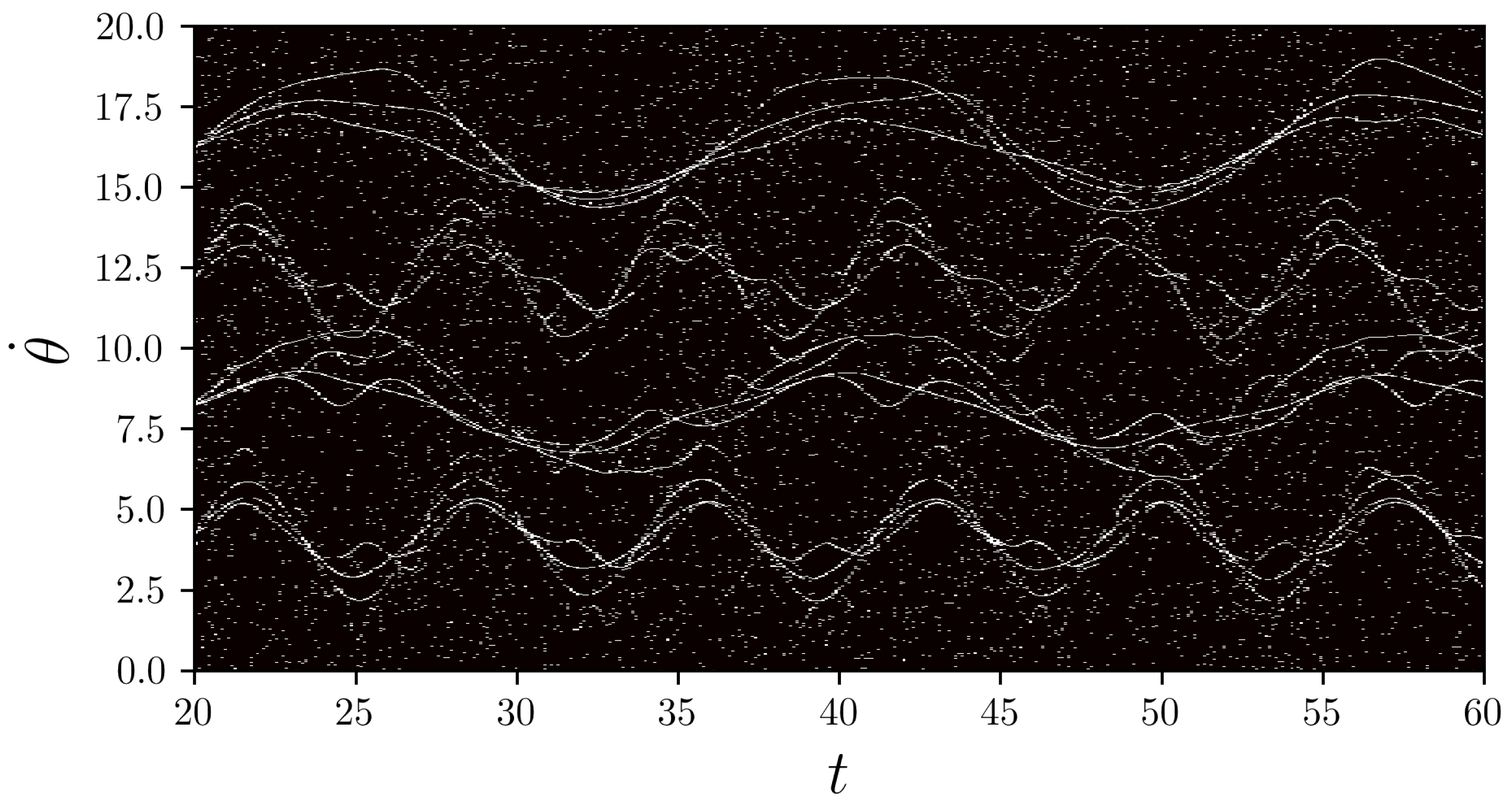

The next step is to generate

, whose elements are drawn from the normal distribution

(according to [

4]), where

m is the number of measurements, which we choose to be

. We choose

s to be the average number of non-zero elements among the columns of

. As will be shown in the image with reconstructed trajectories, the multiplier 2 is sufficient, although in [4] it was equal to 4. This empirical decision can be justified by the fact that the dynamic trajectories have a very sparse representation, unlike ordinary images. Thus, we achieve a decent compression of each column, reducing its length from 1000 to 139. Such data reduction brings us closer from the level of individual agents to the mesoscopic scale of clusters. A decompressed matrix

is obtained as a collection of restored columns as

$\ell_1$-optimized vectors, numerically calculated with the interior-point method; it is shown in Figure 4. Although the decompressed matrix appears to be noisy, the overall features of the trajectories are still perceptible, which indicates that the compression is close to lossless.