NetVote: A Strict-Coercion Resistance Re-Voting Based Internet Voting Scheme with Linear Filtering †

Abstract

1. Introduction

2. Related Work and Contributions

2.1. Related Work

2.2. Our Contribution

3. Parties and Building Blocks

3.1. Parties and Threat Model

- Ballot privacy guarantees that an adversary cannot learn more about the vote of a specific voter than what the final result reveals. In our model, ballot secrecy holds assuming that a subset of the trustees is honest.

- Practical everlasting privacy ensures that ballot secrecy is maintained with no time limit. That is, even a computationally unbounded adversary cannot break ballot secrecy. Assuming the certificate authority follows the protocol honestly, our construction additionally satisfies practical everlasting privacy.

- Verifiability allows any third party to verify that the last ballot of each voter is tallied, that the adversary cannot include more votes than the number of voters it controls, and that honest ballots cannot be replaced. The protocol provides verifiability assuming that CA is honest. This assumption is required because CA grants voters their voting certificates; the existence of a trusted entity that creates and assigns authentication credentials to eligible voters is intrinsic to the remote voting scenario.

- Strict coercion resistance allows voters to escape coercion without the coercer noticing. That is, if a voter escapes coercion, the adversary is unable to detect it. NetVote satisfies strict coercion resistance assuming that the tallying server is honest. Note that this assumption is not required for verifiability; it is needed because, to reduce the filtering complexity from quadratic to linear, we let TS learn which votes are valid.

3.2. Building Blocks

4. Description of Our Protocol

4.1. Overview

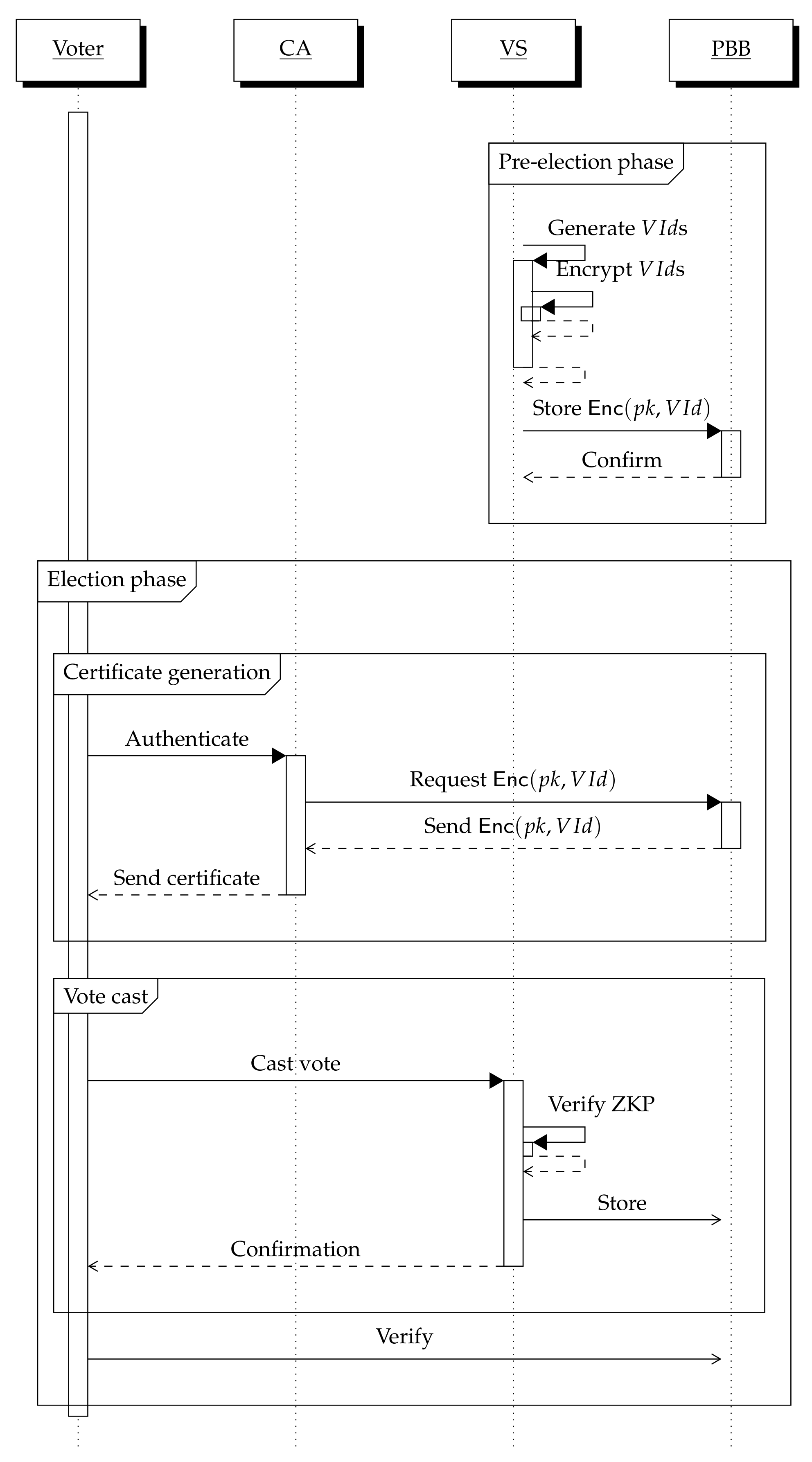

4.2. Pre-Election Phase

- PBB publishes the list of candidates and initialises a counter.

- CA, VS and TS each generate a key pair by running the key-generation algorithm, and publish their public keys in PBB.

- The trustees jointly run a distributed key-generation protocol that produces the voting key, with each trustee holding a share of the corresponding private key. They then publish the voting key in PBB.

- CA takes as input the total number of voters and generates one random voting identifier per voter, all pairwise distinct. It keeps the relation between identifiers and voters private. Next, it encrypts all identifiers and signs each encrypted identifier. Finally, it commits to these values by publishing each encrypted identifier together with its signature in PBB.
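The identifier generation and encryption steps can be illustrated with a toy sketch. It assumes exponential ElGamal over a deliberately tiny safe-prime group (p = 1019, q = 509, g = 4), which is insecure and chosen only to keep the arithmetic readable. It further assumes that the identifiers are encrypted under a key whose holder can later decrypt them (TS, which decrypts identifiers in the tallying phase), and it omits CA's signatures. All helper names (`keygen`, `encrypt`, `decrypt_to_group`, `generate_identifiers`) are hypothetical.

```python
from __future__ import annotations

import secrets
from dataclasses import dataclass

# Sketch only: toy safe-prime group, p = 2q + 1, and G = 4 generates the
# subgroup of prime order q. Insecure sizes; helper names are hypothetical.
P, Q, G = 1019, 509, 4


@dataclass(frozen=True)
class Ciphertext:
    c1: int  # g^r
    c2: int  # g^m * pk^r


def keygen() -> tuple[int, int]:
    """Return (secret key, public key) for exponential ElGamal."""
    sk = secrets.randbelow(Q - 1) + 1
    return sk, pow(G, sk, P)


def encrypt(pk: int, m: int, r: int | None = None) -> Ciphertext:
    """Enc(m; r) = (g^r, g^m * pk^r); r=0 gives an encryption anyone can recompute."""
    if r is None:
        r = secrets.randbelow(Q)
    return Ciphertext(pow(G, r, P), pow(G, m, P) * pow(pk, r, P) % P)


def decrypt_to_group(sk: int, ct: Ciphertext) -> int:
    """Recover g^m, which is enough to test two identifiers for equality."""
    return ct.c2 * pow(ct.c1, -sk, P) % P


def generate_identifiers(voters: list[str]) -> dict[str, int]:
    """Assign each voter a random identifier in [1, q-1], all pairwise distinct."""
    if len(voters) > Q - 1:
        raise ValueError("toy group too small for this electoral roll")
    used: set[int] = set()
    ids: dict[str, int] = {}
    for voter in voters:
        ident = secrets.randbelow(Q - 1) + 1
        while ident in used:  # resample on collision to keep identifiers distinct
            ident = secrets.randbelow(Q - 1) + 1
        used.add(ident)
        ids[voter] = ident
    return ids


if __name__ == "__main__":
    sk_ts, pk_ts = keygen()                 # assumed: the identifier-decryption key pair
    roll = ["alice", "bob", "carol"]
    ids = generate_identifiers(roll)        # mapping kept private by CA
    published = [encrypt(pk_ts, ids[v]) for v in roll]  # CA signatures omitted
    print(published)
```

Because distinct identifiers below q map to distinct group elements g^id, decrypting to the group element is enough for the later grouping step, and no discrete logarithm is needed.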

4.3. Election Phase

- The voter authenticates to CA and requests the generation of an anonymous certificate.

- CA selects the voter's encrypted identifier and re-randomises the ciphertext by leveraging the homomorphic property of the encryption scheme, obtaining a fresh randomisation of the encrypted identifier together with a proof of correct randomisation.

- CA generates an anonymous certificate containing the re-randomised encrypted identifier as an attribute, and sends it to the voter together with the original encrypted identifier and the proof of re-encryption.

- The voter checks the proof of re-encryption to verify that the certificate attribute encrypts the same identifier as the original encrypted identifier. If this is not the first time they cast a vote, they may also verify that they received the same encrypted identifier in each vote-casting session.

- The voter encrypts the chosen candidate, generates a proof that the encrypted vote is correctly formed, and signs the result with the private key associated with their ephemeral certificate. Finally, they send the signed ballot to VS.

- VS verifies that the certificate was issued by CA, that the signature is valid under the key in the certificate, and that the proof ensures the encrypted vote corresponds to a valid candidate. If every check succeeds, it forwards the vote to PBB and sends an acknowledgement to the voter.

- PBB increments the counter and publishes the vote on the board.
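The re-randomisation performed by CA relies on the homomorphic property: multiplying an ElGamal ciphertext component-wise by an encryption of zero yields a fresh ciphertext of the same plaintext. The sketch below, again over an insecure toy group, pairs this with a Chaum-Pedersen proof made non-interactive with Fiat-Shamir (SHA-256), showing that the two ciphertexts differ only by such a factor. The text does not state which proof system NetVote uses for the proof of correct randomisation, so this choice, the parameters and all function names are assumptions.

```python
from __future__ import annotations

import hashlib
import secrets
from dataclasses import dataclass

# Sketch only: insecure toy group (p = 2q + 1, G of prime order q); names hypothetical.
P, Q, G = 1019, 509, 4


@dataclass(frozen=True)
class Ciphertext:
    c1: int
    c2: int


def encrypt(pk: int, m: int, r: int | None = None) -> Ciphertext:
    """Exponential ElGamal: Enc(m; r) = (g^r, g^m * pk^r) mod p."""
    r = secrets.randbelow(Q) if r is None else r
    return Ciphertext(pow(G, r, P), pow(G, m, P) * pow(pk, r, P) % P)


def rerandomise(pk: int, ct: Ciphertext) -> tuple[Ciphertext, int]:
    """Multiply by Enc(0; s): same plaintext, fresh-looking ciphertext."""
    s = secrets.randbelow(Q - 1) + 1
    return Ciphertext(ct.c1 * pow(G, s, P) % P, ct.c2 * pow(pk, s, P) % P), s


def _challenge(*values: int) -> int:
    """Fiat-Shamir challenge: hash of the public transcript, reduced mod q."""
    data = ",".join(str(v) for v in values).encode()
    return int.from_bytes(hashlib.sha256(data).digest(), "big") % Q


def prove_rerandomisation(pk: int, old: Ciphertext, new: Ciphertext,
                          s: int) -> tuple[int, int, int]:
    """Chaum-Pedersen proof that new / old = (g^s, pk^s) for a secret s."""
    w = secrets.randbelow(Q)
    a, b = pow(G, w, P), pow(pk, w, P)
    c = _challenge(P, G, pk, old.c1, old.c2, new.c1, new.c2, a, b)
    return a, b, (w + c * s) % Q


def verify_rerandomisation(pk: int, old: Ciphertext, new: Ciphertext,
                           proof: tuple[int, int, int]) -> bool:
    """Check g^z == a * u^c and pk^z == b * v^c, where (u, v) = new / old."""
    a, b, z = proof
    c = _challenge(P, G, pk, old.c1, old.c2, new.c1, new.c2, a, b)
    u = new.c1 * pow(old.c1, -1, P) % P  # should equal g^s
    v = new.c2 * pow(old.c2, -1, P) % P  # should equal pk^s
    return (pow(G, z, P) == a * pow(u, c, P) % P
            and pow(pk, z, P) == b * pow(v, c, P) % P)
```

In this picture, CA calls `rerandomise` and `prove_rerandomisation`, and the voter, who holds the original encrypted identifier, runs `verify_rerandomisation` on the certificate attribute.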

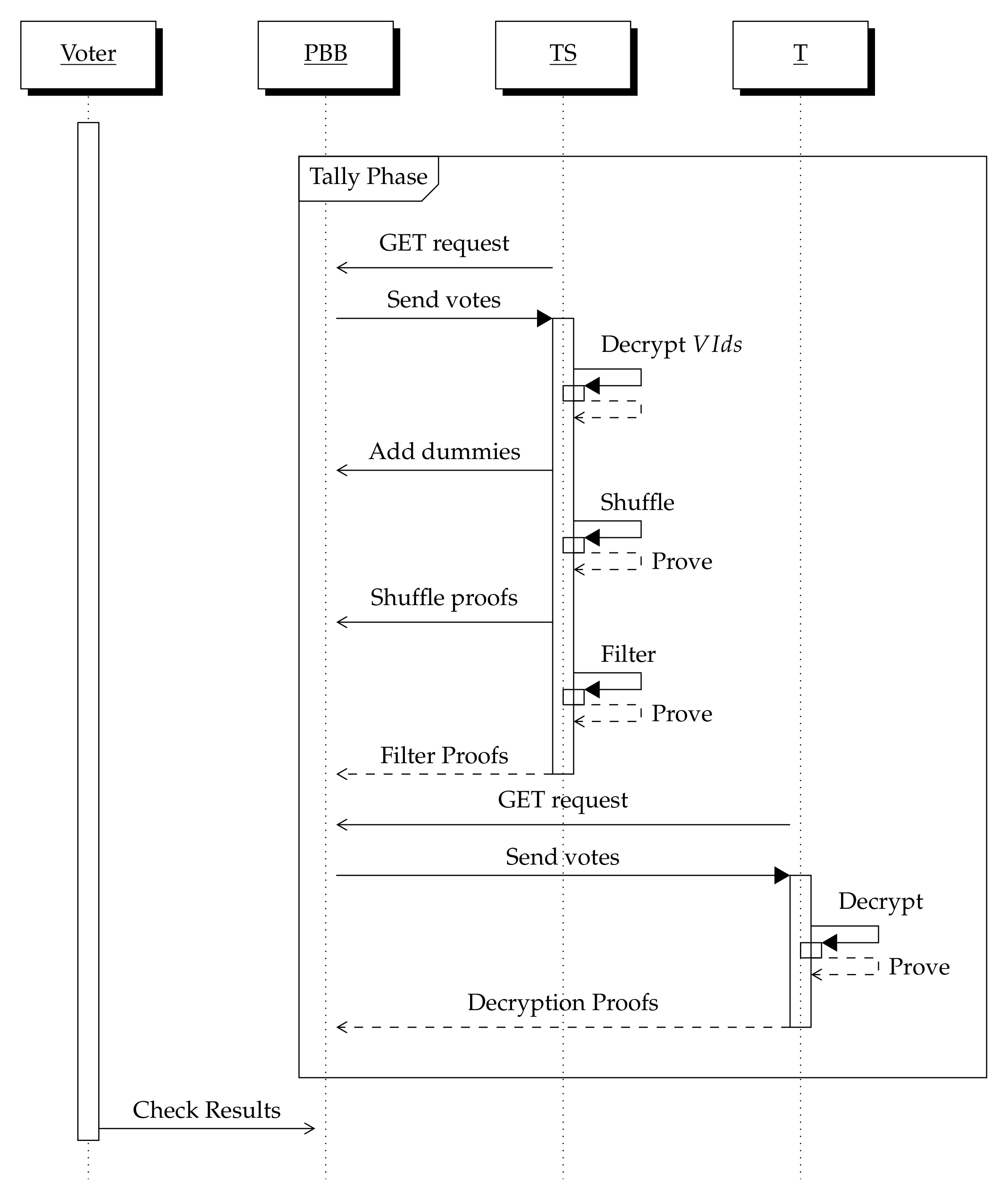

4.4. Tallying Phase

- TS begins by stripping each ballot down to the information needed for the filtering phase, namely the counter, the encrypted identifier and the encrypted vote, and adds these stripped ballots to PBB.

- Next, TS decrypts the voter identifiers and adds a random number of dummy votes for each voter; Section 5 describes how TS chooses this number. Dummy votes must always have a lower counter than the voter's real votes, so TS assigns every dummy a zero counter. Moreover, every dummy encrypts the zero vector with zero randomness and carries a fresh randomisation of the voter's encrypted identifier. This allows anyone to verify the correctness of this process, while keeping private which voter each dummy vote is assigned to.

- Next, TS encrypts every counter using zero randomness, so that anyone can recompute and check these encryptions, and attaches each encrypted counter to its PBB entry.

- TS then performs a verifiable shuffle of all the entries on the bulletin board, i.e., of the stripped ballots together with their encrypted counters. This yields a shuffled list of ballots and a proof of correct shuffle, both of which TS appends to PBB.

- Next, TS groups the encrypted votes cast by the same voter. To do so, it decrypts each identifier and publishes a proof of correct decryption.

- Finally, TS filters the votes by keeping, for each voter, the encrypted vote with the highest counter. To do so, it locally decrypts every counter and selects the entry with the highest one. It then publishes the filtered votes on the bulletin board, together with proofs that each selected counter is greater than all the other counters in its group, where groups are formed by the votes cast with the same identifier.
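The filtering logic can be mimicked at the plaintext level to show why it is linear: once identifiers are decrypted after the shuffle, a single pass with a hash map keeps the highest counter per identifier, and a group of size m needs m − 1 "greater than" proofs. The sketch assumes the entries are already decrypted (TS's local view), models each dummy as counter 0 with an empty vote, and omits all encryption, shuffling proofs and decryption proofs; the names are hypothetical.

```python
from __future__ import annotations

import random
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class Entry:
    counter: int      # PBB counter: 0 for every dummy, >= 1 for real ballots
    voter_id: int     # identifier as decrypted after the shuffle
    vote: str | None  # None stands for the zero vector carried by dummies


def add_dummies(entries: list[Entry], dummies: dict[int, int]) -> list[Entry]:
    """Append dummies[voter_id] zero-counter, zero-vote entries per identifier."""
    out = list(entries)
    for voter_id, k in dummies.items():
        out.extend(Entry(0, voter_id, None) for _ in range(k))
    return out


def filter_last_votes(entries: list[Entry]) -> tuple[dict[int, Entry], int]:
    """One pass over the shuffled list: keep the highest counter per identifier.

    Also returns how many "greater than" proofs would be published:
    one per non-selected entry, i.e. group size - 1 for each group.
    """
    best: dict[int, Entry] = {}
    sizes: dict[int, int] = defaultdict(int)
    for e in entries:
        sizes[e.voter_id] += 1
        current = best.get(e.voter_id)
        if current is None or e.counter > current.counter:
            best[e.voter_id] = e
    return best, sum(n - 1 for n in sizes.values())


def tally(best: dict[int, Entry]) -> dict[str, int]:
    """Count the selected votes; a selected dummy (zero vector) adds nothing."""
    result: dict[str, int] = defaultdict(int)
    for e in best.values():
        if e.vote is not None:
            result[e.vote] += 1
    return dict(result)


if __name__ == "__main__":
    real = [Entry(1, 7, "A"), Entry(2, 9, "B"), Entry(3, 7, "B")]  # voter 7 re-votes
    entries = add_dummies(real, {7: 2, 9: 1})
    random.shuffle(entries)            # stands in for the verifiable shuffle
    best, n_proofs = filter_last_votes(entries)
    print(tally(best), n_proofs)       # {'B': 2} 4
```

Since dummies carry counter 0 and real ballots start at 1, a dummy can never displace a real vote, regardless of the shuffled order.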

5. Including Dummy Votes

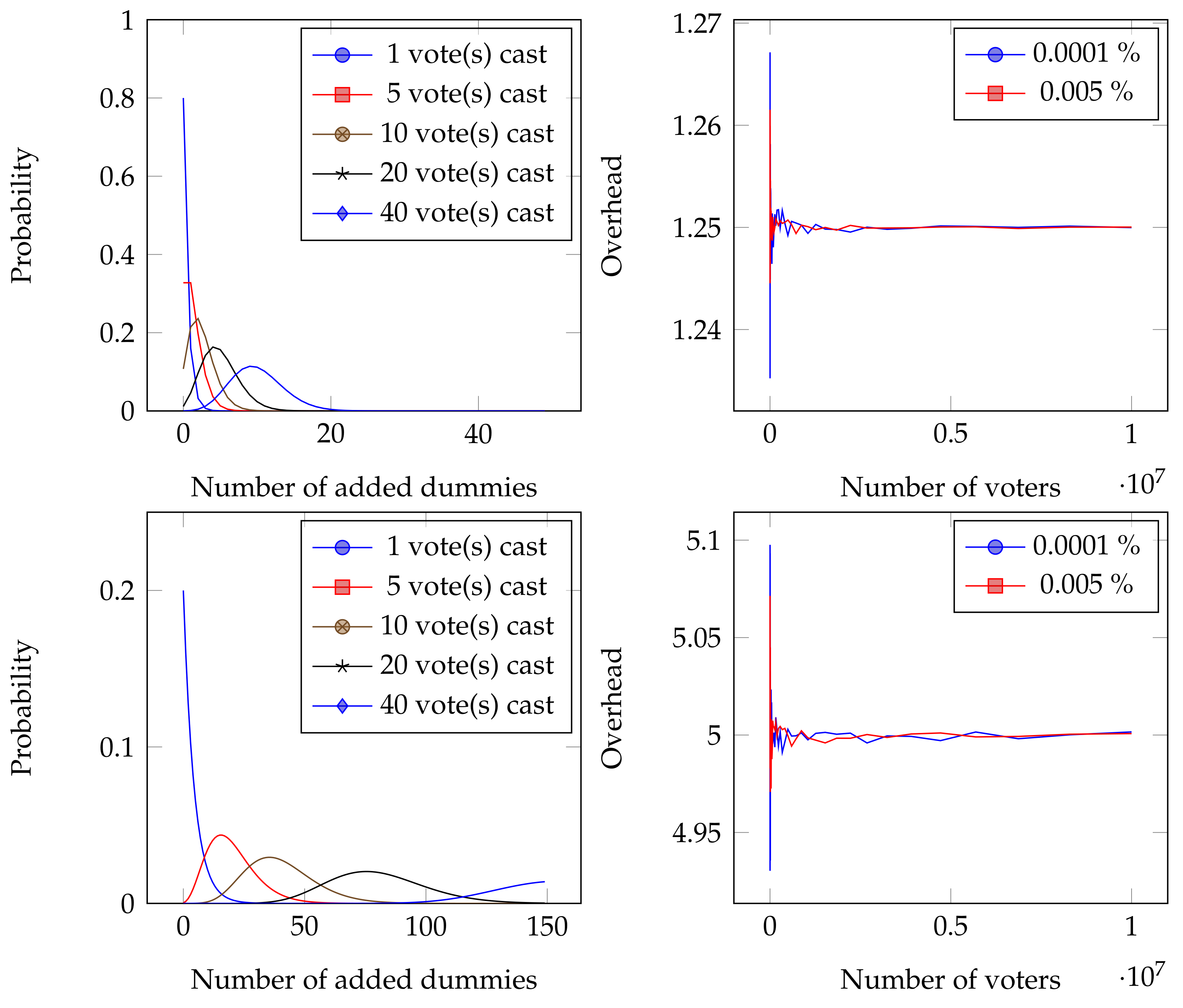

- A fixed number of dummies for all voters clearly would not serve the purpose here: subtracting that fixed number from the size of a group would reveal exactly how many votes the voter cast. Suppose instead that a random number of dummies is added for each voter. If this number is drawn from an unbounded set, the overhead becomes prohibitive, as there is no upper bound. However, if it is drawn from a bounded range with upper bound u, then with non-zero probability the maximum u is drawn, which would blow the whistle if the voter decided to re-vote to escape coercion: the group would then contain more entries than one vote plus u dummies, so not all of its extra entries could have been dummies.

- That said, while we want to hide groups of votes with unusual sizes (e.g., a group of 1009 votes, as when a coercer demands that a specific, unusual number of votes be cast), we do not need to add overhead to small groups, which are not exposed to this 1009 attack. To this end, our solution adds dummy votes to each voter depending on the number of votes they have cast, following the negative binomial distribution: the number of votes cast by the voter in question is the distribution's parameter, and the sampled value is the number of dummy votes added for that voter.
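Sampling the number of dummies can be sketched as follows. The exact formula is not reproduced in this text, so the sketch assumes the common parameterisation in which the number of dummies d for a voter who cast r votes counts the failures before the r-th success in independent Bernoulli trials with success probability p, i.e. P(d = k) = C(k + r − 1, k)(1 − p)^k p^r. The value of p is a hypothetical tuning parameter not given here. Under this assumption the expected overhead r(1 − p)/p grows with the number of votes cast, and the support is unbounded, so no group size is ruled out.

```python
import math
import random

# Assumed parameterisation, not taken from the paper: NB(r, p) counts failures
# before the r-th success; p is a hypothetical tuning parameter.
_SECURE_RNG = random.SystemRandom()  # OS entropy; pass a seeded Random only for tests


def nb_pmf(k: int, r: int, p: float) -> float:
    """P(d = k) for d ~ NB(r, p): k failures before the r-th success."""
    return math.comb(k + r - 1, k) * (1 - p) ** k * p ** r


def sample_dummies(r: int, p: float, rng: random.Random = _SECURE_RNG) -> int:
    """Number of dummies for a voter who cast r votes."""
    if r < 1 or not 0 < p <= 1:
        raise ValueError("need r >= 1 and 0 < p <= 1")
    failures = successes = 0
    while successes < r:  # run Bernoulli(p) trials until r successes
        if rng.random() < p:
            successes += 1
        else:
            failures += 1
    return failures


if __name__ == "__main__":
    p = 0.5  # hypothetical tuning parameter
    for votes_cast in (1, 2, 10):
        mean = votes_cast * (1 - p) / p
        print(votes_cast, sample_dummies(votes_cast, p), f"expected mean {mean:.1f}")
```

A voter who cast a single vote gets few dummies on average, while a large group (such as one produced by the 1009 attack) is padded in proportion to its size.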

6. Security Analysis

- Setup. In the pre-election phase, the scheme runs the Setup protocol to prepare the voting scheme for voting. This protocol takes as input the electoral roll, i.e., the list of all eligible voters, and the list of candidates.

- Registration. Before casting a vote, each voter runs the registration protocol to obtain a token that allows them to cast a vote.

- Vote casting. After registering, voters use their token and selected candidate C to cast their vote using the voting protocol. The voter produces a ballot containing their choice and interacts with the voting scheme. If the ballot is accepted by the voting scheme, it is added to the public bulletin board.

- Vote verification. After casting a vote, voters can verify that their ballot was recorded as cast, i.e., that it was successfully stored on the bulletin board.

- Validity check. Once the scheme receives a vote, it verifies that the vote is valid.

- Tally. After the voting phase, the voting scheme runs the tally protocol. This protocol takes as input the set of ballots on the bulletin board, filters the votes, and outputs an election result, z, together with a proof of correctness of the result.

- Tally verification. Finally, any third party can run the tally-verification protocol. It takes the set of ballots, the result z and the proof of correct tally, and checks their correctness.
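The syntax above can be summarised as an abstract interface. The method names are hypothetical labels for the protocols in the list (the text names only Setup explicitly, and Tally indirectly through SimTally), and the token, ballot and proof types are placeholders; this is a reading aid under those assumptions, not the scheme's API.

```python
from __future__ import annotations

from abc import ABC, abstractmethod
from typing import Any, Sequence

# Placeholder types: concrete token, ballot and proof formats are scheme-specific.
Token = Any
Ballot = Any
Proof = Any


class ReVotingScheme(ABC):
    """Hypothetical interface mirroring the syntax of the voting scheme."""

    @abstractmethod
    def setup(self, electoral_roll: Sequence[str], candidates: Sequence[str]) -> None:
        """Pre-election: prepare keys, identifiers and the bulletin board."""

    @abstractmethod
    def register(self, voter: str) -> Token:
        """Give an eligible voter a token that allows them to cast a vote."""

    @abstractmethod
    def vote(self, token: Token, candidate: str) -> Ballot:
        """Produce a ballot for the candidate; accepted ballots go to the board."""

    @abstractmethod
    def verify_recorded(self, ballot: Ballot) -> bool:
        """Voter-side check that the ballot was stored on the bulletin board."""

    @abstractmethod
    def valid(self, ballot: Ballot) -> bool:
        """Validity check run by the scheme when a ballot is received."""

    @abstractmethod
    def tally(self) -> tuple[dict[str, int], Proof]:
        """Filter the ballots, then output the result z and a proof of correctness."""

    @abstractmethod
    def verify_tally(self, ballots: Sequence[Ballot], result: dict[str, int],
                     proof: Proof) -> bool:
        """Anyone can check the result against the ballots and the proof."""
```

A concrete scheme would subclass `ReVotingScheme` and implement all seven methods; Python refuses to instantiate a subclass that leaves any of them abstract.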

6.1. Ballot Privacy

- A board oracle, which allows the adversary to see the information posted so far on the bulletin board. It can call this oracle at any point of the game.

- A left-or-right vote oracle, where the adversary selects two possible candidates for a voter. The challenger produces voting tokens and generates one ballot for each candidate. It then places the first ballot on the bulletin board of world 0 and the second on that of world 1. It can call this oracle at any point of the game.

- A cast oracle, through which the adversary can cast a vote for any voter. The same ballot is placed on both bulletin boards. It can call this oracle at any point of the game.

- A tally oracle, which allows the adversary to request the result of the election. To avoid leaking information through the tally result, the result is always computed on the bulletin board of world 0, so in experiment 1 the result and proofs are simulated. The adversary can call this oracle once and, after receiving the answer, must output a guess of the bit b (representing the world in which the game is taking place).

| Algorithm 1. In the ballot privacy experiment, the adversary has access to the oracles listed above. The adversary controls the tallying server (TS), the voting server (VS) and the certificate authority (CA). It can call the tally oracle only once. |

| 1: : |

| 2: |

| 3: |

| 4: Output |

| 5: : |

| 6: Let and |

| 7: If return ⊥ |

| 8: Else and |

| 9: : |

| 10: If return ⊥ |

| 11: Else and |

| 12: : |

| 13: return |

| 14: |

| 15: |

| 16: |

| 17: return |
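The bookkeeping of this left-or-right experiment can be sketched as follows. The sketch assumes idealised encryption (every ballot is an opaque random handle, so the board reveals nothing), tallies the last ballot per voter on the world-0 board whatever the bit b is, and does not model proofs or their simulation. Class and method names are hypothetical.

```python
from __future__ import annotations

import secrets
from dataclasses import dataclass, field


@dataclass
class BallotPrivacyGame:
    """Skeleton of the left-or-right experiment with idealised ballots."""

    b: int  # the hidden bit (0 or 1)
    boards: tuple[list[str], list[str]] = field(default_factory=lambda: ([], []))
    _contents: dict[str, tuple[str, str]] = field(default_factory=dict)
    _tallied: bool = False

    def _ballot(self, voter: str, candidate: str) -> str:
        # Idealised encryption: the adversary only ever sees a random handle.
        handle = secrets.token_hex(16)
        self._contents[handle] = (voter, candidate)
        return handle

    def board(self) -> list[str]:
        """Board oracle: a copy of the bulletin board of world b."""
        return list(self.boards[self.b])

    def vote_lr(self, voter: str, c0: str, c1: str) -> None:
        """Left-or-right oracle: a ballot for c0 on board 0, one for c1 on board 1."""
        self.boards[0].append(self._ballot(voter, c0))
        self.boards[1].append(self._ballot(voter, c1))

    def cast(self, voter: str, candidate: str) -> None:
        """Cast oracle: the same ballot is placed on both boards."""
        handle = self._ballot(voter, candidate)
        self.boards[0].append(handle)
        self.boards[1].append(handle)

    def tally(self) -> dict[str, int]:
        """Tally oracle (callable once): always counts board 0, last ballot per voter.

        In world 1 the real scheme would also simulate the tally proofs;
        proofs are not modelled in this sketch.
        """
        if self._tallied:
            raise RuntimeError("the tally oracle may be called only once")
        self._tallied = True
        last: dict[str, str] = {}
        for handle in self.boards[0]:
            voter, candidate = self._contents[handle]
            last[voter] = candidate  # later ballots overwrite earlier ones
        result: dict[str, int] = {}
        for candidate in last.values():
            result[candidate] = result.get(candidate, 0) + 1
        return result
```

In this idealised model the adversary's view has the same distribution for b = 0 and b = 1; the sequence of games in this section is what connects the real ciphertexts and proofs to that picture.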

- Game:

- Let this game be the experiment defined in Algorithm 1, where the adversary has access to the bulletin board.

- Game:

- This game is defined exactly as the previous one, with the exception that the tally proof is simulated. That is, the result is still computed from the votes on the bulletin board, but the proof of tally is simulated. The proofs to be simulated are the shuffle proof in Step 4, the proofs of correct decryption in Step 5, and the proofs of the greater-or-equal relation in Step 6 of Procedure 5. Since all of these are zero-knowledge proofs, each comes with a simulator that generates simulated proofs indistinguishable from real ones, as the zero-knowledge property requires. We rely on the random oracle model to define our SimTally algorithm from these simulators.

- Game:

- Consecutive games differ in a single ballot: whenever the corresponding ballots on the two bulletin boards differ, one is exchanged for the other.

- Game:

- We define as . Note that this view is equivalent to the view of . Hence, all that remains is to prove that each transition in this sequence of games is indistinguishable.

6.2. Practical Everlasting Privacy

- An election oracle, where the adversary chooses two voters and two candidates and asks the challenger to run the election. The challenger runs the election by first running the Setup protocol, generating keys for all parties and distinct random identifiers for each of the voters. It then runs the registration protocol for each voter, generating their voting credentials, casts votes for both voters in both worlds, and finally runs the tally protocol.

| Algorithm 2. In the practical everlasting privacy game, the adversary has access to the election oracle. In this scenario, the setup needs to happen inside the oracle, so that the voter identifiers are ‘reset’. |

| 1: : |

| 2: |

| 3: Output |

| 4: : |

| 5: |

| 6: Let and |

| 7: Let and . |

| 8: and |

| 9: |

| 10: |

| 11: return |

- Game:

- This game is a run of the experiment defined in Algorithm 2, where the adversary has access to the bulletin board.

- Game:

- This game is defined exactly as with the sole exception that now, we change the register phase, and instead run: and

6.3. Verifiability

- A registration oracle, to obtain a token for a voter.

- A vote oracle, to make a voter cast a vote for candidate C.

| Algorithm 3. Verifiability game presented by Lueks et al. [29]. In the verifiability experiment, the adversary has access to the oracles listed above. |

| 1: : |

| 2: |

| 3: Set and |

| 4: |

| 5: If return 0 |

| 6: Let correspond to checked ballots. |

| 7: Let |

| 8: Let |

| 9: Let |

| 10: Let |

| 11: If where |

| 12: , distinct |

| 13: s.t. |

| 14: s.t. |

| 15: Then return 0, otherwise return 1 |

| 16: : |

| 17: |

| 18: Add to |

| 19: Return |

| 20: : |

| 21: Let |

| 22: Add to |

| 23: return |

6.4. Strict Coercion Resistance

- , to make voter cast a vote for candidate , and voter cast a dummy vote for a dummy candidate, 0, in , and to make voter cast a vote for candidate , and voter cast a dummy vote for a dummy candidate, 0, in . We use, to denote the dummy registration of voter . Moreover, we denote by the dummy vote cast for candidate C using token . The adversary is allowed to make this call multiple times.

- A registration oracle, which allows the adversary to register and obtain a token for a voter.

- A vote oracle, which the adversary can call with a token to cast a vote for candidate C on both bulletin boards.

- A board oracle, which allows the adversary to see the bulletin board.

- A tally oracle, which allows the adversary to compute the tally of the election. It can call this oracle only once.

| Algorithm 4. In the strict coercion resistance experiment, the adversary has access to the oracles listed above. It can call the tally oracle only once. |

| 1: : |

| 2: |

| 3: |

| 4: Output |

| 5: : |

| 6: Let and |

| 7: and |

| 8: Let and |

| 9: and |

| 10: If or |

| 11: return ⊥ |

| 12: Else and |

| 13: |

| 14: : |

| 15: Let |

| 16: return |

| 17: : |

| 18: If or |

| 19: return ⊥ |

| 20: Else and |

| 21: |

| 22: : |

| 23:; return |

| 24: |

| 25: Let |

| 26: |

| 27: return |

- Game:

- This game is defined as the experiment of Algorithm 4. Note that, unlike in the proof of ballot privacy, we do not start with a fixed value of b.

- Game:

- Later, we will replace all votes by random votes. To this end, in this game we compute the result from the decrypted ballots. Consider the stripped ballots of Step 3 of Procedure 5. The game computes the tally of these ballots by first decrypting the encrypted votes and the encrypted identifiers with their respective keys, and then keeping the last vote cast per identifier. Since the result is always computed in this way and the game does not publish these decrypted values, this game is indistinguishable from the previous one.

- Game:

- Same as the previous game, but now all zero-knowledge proofs, regardless of b, are replaced by simulations. That is, the proof of shuffle of all votes (including the dummies), the proof of correct decryption of each identifier, the greater-than proofs used to filter out all but the last vote, and, finally, the proof of decryption of the final result.

- Game:

- This game is defined as the previous one, except that all ciphertexts in the certificates are exchanged for random ciphertexts. Since the result is now computed directly from the decrypted stripped ballots, it is still correctly calculated from the votes initially on the bulletin board, and hence this change does not affect the filtering stage. A hybrid argument reduces the indistinguishability of the two games to the CPA security of ElGamal encryption. Note that this reduction is possible because we no longer need to decrypt these ciphertexts in the tallying.

- Game:

- We follow by replacing all votes cast by by zero votes. Again, following the lines of the ballot privacy proof, the indistinguishability of this step is reduced to the NM-CPA security of ElGamal.

- Game:

- To ensure that the filter does not leak information to the coercer, we also replace all ciphertexts generated thereafter. We replace the encryptions of the counters by random ciphertexts, and do the same with all ciphertexts that exit the shuffle, namely the shuffled encrypted counters, encrypted votes and encrypted identifiers. This exchange is possible because we no longer need to decrypt these ciphertexts, as the result is computed directly from the decrypted stripped ballots. Moreover, the indistinguishability of this step follows from the simulation of the zero-knowledge proofs and the NM-CPA security of the encryption scheme.

7. Discussion on the Assumptions of Fake Credentials vs. Re-Voting

- User needs inalienable means of authentication.

- User needs to lie convincingly while being coerced.

- User needs to store cryptographic material securely and privately.

- Coercer needs to be absent during the registration and at some point during the election.

- User needs inalienable means of authentication.

- Coercer needs to be absent at the end of the election.

8. Conclusions and Future Work

Author Contributions

Funding

Conflicts of Interest

Abbreviations

| Abbreviation | Meaning |
|---|---|
| DDH | Decisional Diffie–Hellman |
| CPA | Chosen Plaintext Attack |
| NM-CPA | Non-Malleability under Chosen Plaintext Attack |
| V | Voters |
| T | Trustees |
| CA | Certificate Authority |
| VS | Voting Server |
| TS | Tallying Server |
| PBB | Public Bulletin Board |

References

- The Guardian. Dutch Will Count All Election Ballots by Hand to Thwart Hacking. May 2018. Available online: https://www.theguardian.com/world/2017/feb/02/dutch-will-count-all-election-ballots-by-hand-to-thwart-cyber-hacking (accessed on 3 May 2020).

- Reuters. France Drops Electronic Voting for Citizens Abroad Over Cybersecurity Fears. 2017. Available online: http://www.reuters.com/article/us-france-election-cyber-idUSKBN16D233 (accessed on 3 May 2020).

- Hapsara, M.; Imran, A.; Turner, T. E-Voting in Developing Countries. Lect. Notes Comput. Sci. 2017, 10141, 36–55.

- Electoral Commission. The Administration of the June 2017 UK General Election. May 2018. Available online: https://www.electoralcommission.org.uk/__data/assets/pdf_file/0003/238044/The-administration-of-the-June-2017-UK-general-election.pdf (accessed on 3 May 2020).

- Chase, J. German Election Could Be Won by Early Voting. May 2018. Available online: http://www.dw.com/en/german-election-could-be-won-by-early-voting/a-40296550 (accessed on 3 May 2020).

- Ministerio del Interior. Voto Desde Fuera de España. 2013. Available online: http://www.infoelectoral.mir.es/voto-desde-fuera-de-espana (accessed on 3 May 2020).

- Ministère de l’Europe et des Affaires étrangères. Vote par Correspondance. May 2018. Available online: https://www.diplomatie.gouv.fr/fr/services-aux-francais/voter-a-l-etranger/modalites-de-vote/vote-par-correspondance/ (accessed on 3 May 2020).

- United Kingdom Government. Completing and Returning Your Postal Vote. May 2018. Available online: https://www.gov.uk/voting-in-the-uk/postal-voting (accessed on 3 May 2020).

- Barker, E.B. SP 800-57. Recommendation for Key Management, Part 1: General. Available online: http://dx.doi.org/10.6028/NIST.SP.800-57pt1r4 (accessed on 3 May 2020).

- Ministerio del Interior. La Subsecretaria Soledad López Explica en el Senado las Características del DNI Electrónico. 2006. Available online: https://goo.gl/r6MVYJ (accessed on 3 May 2020).

- Gimeno, M.; Villamía-Uriarte, B.; Suárez-Saa, V. eEspaña—Informe Anual Sobre el Desarrollo de la Sociedad de la Información en España. 2014. Available online: https://www.proyectosfundacionorange.es/docs/eE2014/Informe_eE2014.pdf (accessed on 3 May 2020).

- Cripps, H.; Standing, C.; Prijatelj, V. Smart health case cards: Are they applicable in the australian context? In Proceedings of the 25th Bled eConference eDependability: Reliable and Trustworthy eStructures, eProcesses, eOperations and eServices for the Future, Bled, Slovenia, 17–20 June 2012.

- Hernandez-Ardieta, J.L.; Gonzalez-Tablas, A.I.; De Fuentes, J.M.; Ramos, B. A Taxonomy and Survey of Attacks on Digital Signatures. Comput. Secur. 2013, 34, 67–112.

- Bernstein, D.J.; Chang, Y.A.; Cheng, C.M.; Chou, L.P.; Heninger, N.; Lange, T.; van Someren, N. Factoring RSA Keys from Certified Smart Cards: Coppersmith in the Wild. Advances in Cryptology—ASIACRYPT’2013. In Proceedings of the 19th International Conference on the Theory and Application of Cryptology and Information Security, Bengaluru, India, 1–5 December 2013; Sako, K., Sarkar, P., Eds.; pp. 341–360.

- Nemec, M.; Sys, M.; Svenda, P.; Klinec, D.; Matyas, V. The Return of Coppersmith’s Attack: Practical Factorization of Widely Used RSA Moduli. In Proceedings of the 24th ACM Conference on Computer and Communications Security (CCS’2017), Dallas, TX, USA, 30 October–3 November 2017; ACM: New York, NY, USA, 2017; pp. 1631–1648.

- Juels, A.; Catalano, D.; Jakobsson, M. Coercion-resistant Electronic Elections. In Proceedings of the 2005 ACM Workshop on Privacy in the Electronic Society; WPES ’05, Alexandria, VA, USA, 7 November 2005; ACM: New York, NY, USA, 2005; pp. 61–70.

- Araújo, R.; Barki, A.; Brunet, S.; Traoré, J. Remote electronic voting can be efficient, verifiable and coercion-resistant. In Financial Cryptography and Data Security—2016 International Workshops, BITCOIN, VOTING, and WAHC; Lecture Notes in Computer Science; Springer: Berlin/Heidelberg, Germany, 2016; Volume 9604, pp. 224–232.

- Bursuc, S.; Grewal, G.S.; Ryan, M.D. Trivitas: Voters Directly Verifying Votes. In E-Voting and Identity: Proceedings of the Third International Conference, VoteID 2011, Tallinn, Estonia, 28–30 September 2011; Revised Selected Papers; Kiayias, A., Lipmaa, H., Eds.; Springer: Berlin/Heidelberg, Germany, 2012; pp. 190–207.

- Clark, J.; Hengartner, U. Selections: Internet voting with over-the-shoulder coercion-resistance. In Financial Cryptography and Data Security; Springer: Berlin/Heidelberg, Germany, 2012; pp. 47–61.

- Myers, A.C.; Clarkson, M.; Chong, S. Civitas: Toward a secure voting system. In IEEE Symposium on Security and Privacy; IEEE: Piscataway, NJ, USA, 2008; pp. 354–368.

- Grontas, P.; Pagourtzis, A.; Zacharakis, A.; Zhang, B. Towards everlasting privacy and efficient coercion resistance in remote electronic voting. In Financial Cryptography and Data Security; Zohar, A., Eyal, I., Teague, V., Clark, J., Bracciali, A., Pintore, F., Sala, M., Eds.; Springer: Berlin/Heidelberg, Germany, 2019; pp. 210–231.

- Rønne, P.B.; Atashpendar, A.; Gjøsteen, K.; Ryan, P.Y.A. Short paper: Coercion-resistant voting in linear time via fully homomorphic encryption. In Financial Cryptography and Data Security; Bracciali, A., Clark, J., Pintore, F., Rønne, P.B., Sala, M., Eds.; Springer International Publishing: Cham, Switzerland, 2020; pp. 289–298.

- Spycher, O.; Haenni, R.; Dubuis, E. Coercion-resistant hybrid voting systems. In Proceedings of Electronic Voting 2010, EVOTE 2010, 4th International Conference, Bregenz, Austria, 21–24 July 2010; pp. 269–282.

- Gjøsteen, K. Analysis of an Internet Voting Protocol; Technical Report. Available online: https://eprint.iacr.org/2010/380.pdf (accessed on 3 May 2020).

- Dimitriou, T. Efficient, Coercion-free and Universally Verifiable Blockchain-based Voting. Comput. Netw. 2020, 174, 107234.

- Achenbach, D.; Kempka, C.; Löwe, B.; Müller-Quade, J. Improved Coercion-Resistant Electronic Elections through Deniable Re-Voting. USENIX J. Elect. Technol. Syst. JETS 2015, 3, 26–45.

- Locher, P.; Haenni, R.; Koenig, R.E. Coercion-resistant internet voting with everlasting privacy. In Financial Cryptography and Data Security; Springer: Berlin/Heidelberg, Germany, 2016; pp. 161–175.

- Locher, P.; Haenni, R. Verifiable Internet Elections with Everlasting Privacy and Minimal Trust. In E-Voting and Identity; Springer International Publishing: Berlin/Heidelberg, Germany, 2015; pp. 74–91.

- Lueks, W.; Querejeta-Azurmendi, I.; Troncoso, C. VoteAgain: A scalable coercion-resistant voting system. In Proceedings of the 29th USENIX Security Symposium (USENIX Security 20), Boston, MA, USA, 12–14 August 2020; pp. 1553–1570.

- Moran, T.; Naor, M. Receipt-Free Universally-Verifiable Voting with Everlasting Privacy. In Proceedings of the 26th Annual International Conference on Advances in Cryptology; CRYPTO’06, Santa Barbara, CA, USA, 20–24 August 2006; Springer: Berlin/Heidelberg, Germany, 2006; pp. 373–392.

- Querejeta-Azurmendi, I.; Hernández Encinas, L.; Arroyo Guardeño, D.; Hernandez-Ardieta, J.L. An internet voting proposal towards improving usability and coercion resistance. In Advances in Intelligent Systems and Computing, Proceedings of the International Joint Conference: 12th International Conference on Computational Intelligence in Security for Information Systems (CISIS 2019) and 10th International Conference on EUropean Transnational Education (ICEUTE 2019), Seville, Spain, 13–15 May 2019; Martínez-Álvarez, F., Lora, A.T., Muñoz, J.A.S., Quintián, H., Corchado, E., Eds.; Springer: Cham, Switzerland, 2019; Volume 951, pp. 155–164.

- Pedersen, T.P. A Threshold Cryptosystem without a Trusted Party. In Advances in Cryptology—EUROCRYPT’91; Springer: Berlin/Heidelberg, Germany, 1991; Volume 547, pp. 522–526.

- Groth, J. A Verifiable Secret Shuffle of Homomorphic Encryptions. J. Cryptol. 2010, 23, 546–579.

- Bayer, S.; Groth, J. Efficient Zero-Knowledge Argument for Correctness of a Shuffle. Available online: http://www0.cs.ucl.ac.uk/staff/J.Groth/MinimalShuffle.pdf (accessed on 3 May 2020).

- Goldwasser, S.; Micali, S.; Rackoff, C. The Knowledge Complexity of Interactive Proof-Systems. In STOC ’85: Proceedings of the Seventeenth Annual ACM Symposium on Theory of Computing; Sedgewick, R., Ed.; Association for Computing Machinery: New York, NY, USA, 1985; pp. 291–304.

- Fiat, A.; Shamir, A. How to Prove Yourself: Practical Solutions to Identification and Signature Problems. In Proceedings of the Conference on Advances in Cryptology—CRYPTO ’86; Springer: Berlin/Heidelberg, Germany, 1987; pp. 186–194.

- Camenisch, J.; Stadler, M. Efficient Group Signature Schemes for Large Groups (Extended Abstract). In Proceedings of the Conference 17th Annual International Cryptology Conference, Santa Barbara, CA, USA, 17–21 August 1997; Available online: http://citeseerx.ist.psu.edu/viewdoc/download?doi=10.1.1.33.1954&rep=rep1&type=pdf (accessed on 3 May 2020).

- Bünz, B.; Bootle, J.; Boneh, D.; Poelstra, A.; Wuille, P.; Maxwell, G. Bulletproofs: Short Proofs for Confidential Transactions and More. In Proceedings of the 2018 IEEE Symposium on Security and Privacy, SP 2018, San Francisco, CA, USA, 21–23 May 2018; IEEE Computer Society: Piscataway, NJ, USA, 2018; pp. 315–334.

- Camenisch, J.; Lysyanskaya, A. A Signature Scheme with Efficient Protocols. In Security in Communication Networks: Proceedings of the Third International Conference, SCN 2002 Amalfi, Italy, 11–13 September 2002; Revised Papers; Cimato, S., Persiano, G., Galdi, C., Eds.; Springer: Berlin/Heidelberg, Germany, 2003; pp. 268–289.

- Brands, S.A. Rethinking Public Key Infrastructures and Digital Certificates: Building in Privacy; MIT Press: Cambridge, MA, USA, 2000.

- Park, S.; Park, H.; Won, Y.; Lee, J.; Kent, S. Traceable Anonymous Certificate. Available online: https://tools.ietf.org/pdf/rfc5636 (accessed on 3 May 2020).

- Heather, J.; Lundin, D. The append-only web bulletin board. In Formal Aspects in Security and Trust; Springer: Berlin/Heidelberg, Germany, 2009; pp. 242–256.

- McCorry, P.; Shahandashti, S.F.; Hao, F. A smart contract for boardroom voting with maximum voter privacy. In Financial Cryptography and Data Security; LNCS; Springer: Berlin/Heidelberg, Germany, 2017; pp. 357–375. Available online: https://dblp.org/rec/bib/conf/fc/McCorrySH17 (accessed on 3 May 2020).

- Smith, W. New cryptographic election protocol with best-known theoretical properties. In Proceedings of the ECRYPT-Frontiers in Electronic Elections (FEE), Milan, Italy, 15–16 September 2005.

- Bernhard, D.; Cortier, V.; Galindo, D.; Pereira, O.; Warinschi, B. SoK: A Comprehensive Analysis of Game-Based Ballot Privacy Definitions. In Proceedings of the 2015 IEEE Symposium on Security and Privacy, SP 2015, San Jose, CA, USA, 17–21 May 2015; IEEE Computer Society: Piscataway, NJ, USA, 2015; pp. 499–516.

- Adida, B. Helios: Web-based Open-audit Voting. In Proceedings of the 17th Conference on Security Symposium, SS’08, Boston, MA, USA, 22–27 June 2008; USENIX Association: Berkeley, CA, USA, 2008; pp. 335–348.

- Bernhard, D.; Pereira, O.; Warinschi, B. How Not to Prove Yourself: Pitfalls of the Fiat-Shamir Heuristic and Applications to Helios. Available online: https://eprint.iacr.org/2016/771.pdf (accessed on 3 May 2020).

- Arapinis, M.; Cortier, V.; Kremer, S.; Ryan, M. Practical everlasting privacy. In Principles of Security and Trust—Proceedings of the Second International Conference, POST 2013, Held as Part of the European Joint Conferences on Theory and Practice of Software, ETAPS 2013, Rome, Italy, 16–24 March 2013; Lecture Notes in Computer Science; Basin, D.A., Mitchell, J.C., Eds.; Springer: Berlin/Heidelberg, Germany, 2013; Volume 7796, pp. 21–40.

- Grontas, P.; Pagourtzis, A.; Zacharakis, A. Security Models for Everlasting Privacy. Available online: https://eprint.iacr.org/2019/1193.pdf (accessed on 3 May 2020).

- Cortier, V.; Galindo, D.; Küsters, R.; Müller, J.; Truderung, T. SoK: Verifiability Notions for E-Voting Protocols. In Proceedings of the IEEE Symposium on Security and Privacy, SP 2016, San Jose, CA, USA, 22–26 May 2016; IEEE Computer Society: Piscataway, NJ, USA, 2016; pp. 779–798.

- CryptoZ. Chainanalysis—3.8 Million Bitcoin Is Lost Forever. Steemit. 2018. Available online: https://steemit.com/cryptocurrency/@crypto-z/chainanalysis-3-8-million-bitcoin-is-lost-forever (accessed on 3 May 2020).

- Neto, A.S.; Leite, M.; Araújo, R.; Mota, M.P.; Neto, N.C.S.; Traoré, J. Usability Considerations For Coercion-Resistant Election Systems. In Proceedings of the 17th Brazilian Symposium on Human Factors in Computing Systems (IHC 2018), Belém, Brazil, 22–26 October 2018.

- Marea Granate. Calendario Electoral Voto Exterior 2019. Available online: https://mareagranate.org/2019/02/calendario-electoral-voto-exterior-2019/ (accessed on 3 May 2020).

| Scheme | Deniable filtering | Verifiable filtering | Filtering complexity | Crypto state | Coercion assumption | Strict coercion resistance | IC |
|---|---|---|---|---|---|---|---|
| JCJ [16,18,20] | No | Yes |  | Yes | k-out-of-t + AC | No | No |
| Rønne et al. [22] | No | Yes | O(n) | Yes | k-out-of-t + AC | No | Yes |
| Blackbox [24] | TTP | No | O(n) | Yes | TTP | No | No |
| Achenbach et al. [26] | k-out-of-t | Yes |  | Yes | k-out-of-t + AC | No | No |
| Locher et al. [27] | k-out-of-t | Yes |  | Yes | k-out-of-t + AC | No | No |
| Lueks et al. [29] | TTP | Yes |  | No | TTP | No | No |
| NetVote | TTP | Yes | O(n) | No | TTP | Yes | No |

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Querejeta-Azurmendi, I.; Arroyo Guardeño, D.; Hernández-Ardieta, J.L.; Hernández Encinas, L. NetVote: A Strict-Coercion Resistance Re-Voting Based Internet Voting Scheme with Linear Filtering. Mathematics 2020, 8, 1618. https://doi.org/10.3390/math8091618