Spatial-Perceptual Embedding with Robust Just Noticeable Difference Model for Color Image Watermarking

Abstract

1. Introduction

2. Related Work

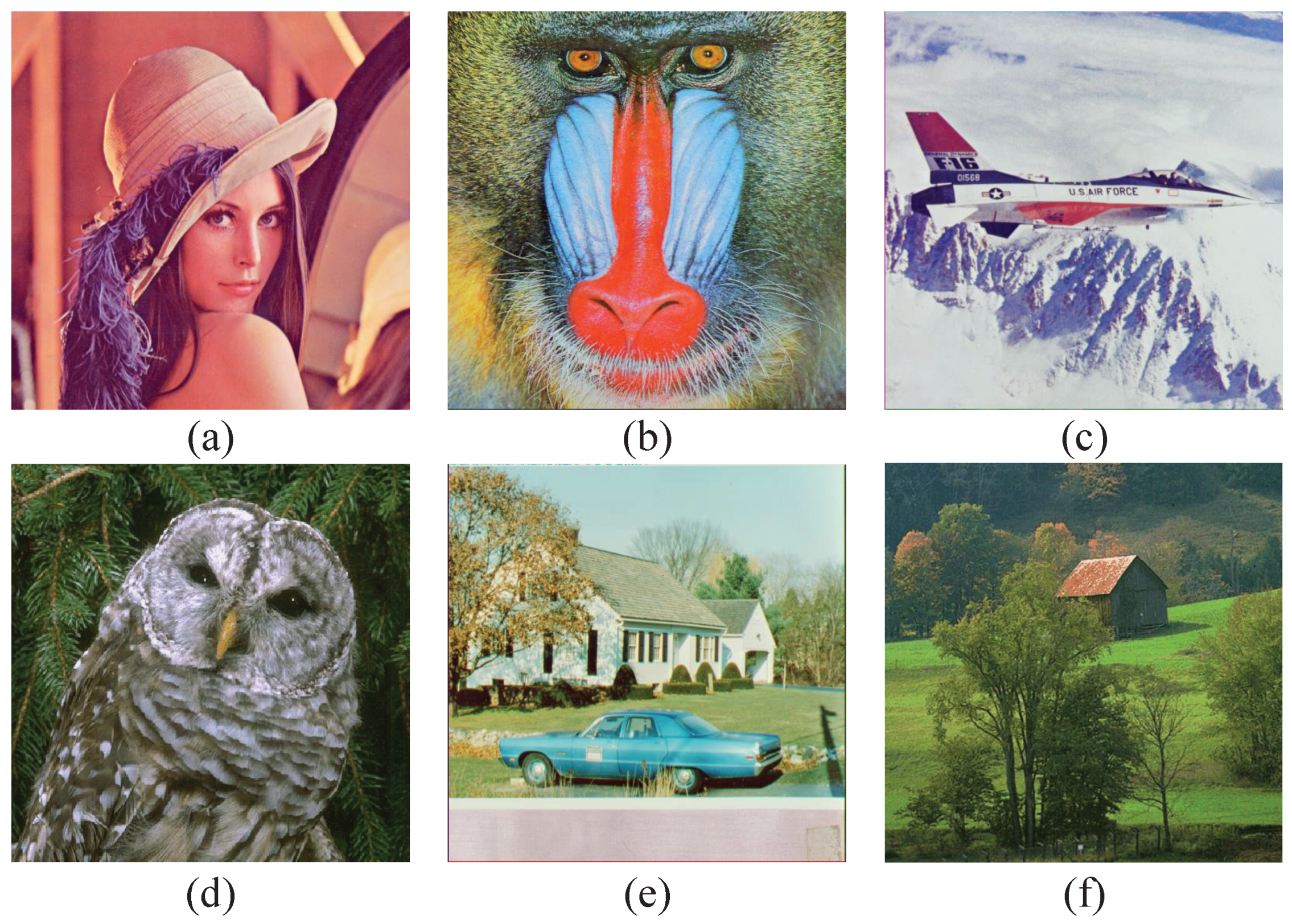

2.1. JND Modeling

2.1.1. The Baseline CSF Threshold
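DCT-domain JND models typically begin with a contrast-sensitivity-function (CSF) base threshold for each coefficient. That threshold is derived from the spatial frequency and orientation of the coefficient's basis function. The sketch below follows the widely used formulation of Wei and Ngan [37], which builds on Ahumada and Peterson [34]. It is an assumed reference point only, and the baseline used in this paper may differ in form or parameters. The viewing ratio of 3 and the image height of 512 pixels are hypothetical defaults.

```python
import math

# Constants of the Wei & Ngan base-threshold model [37]; assumed here,
# not confirmed as the values used in this paper.
S = 0.25             # spatial summation effect
A, B, C = 1.33, 0.11, 0.18
R = 0.6              # oblique-effect parameter


def base_threshold(i, j, n=8, viewing_ratio=3.0, image_height=512):
    """CSF-based base threshold T_basic(i, j) for DCT coefficient (i, j) of an n x n block.

    viewing_ratio (viewing distance / image height) and image_height are
    hypothetical viewing conditions chosen for illustration.
    """
    # Visual angle, in degrees, subtended by one pixel.
    theta = math.degrees(2 * math.atan(1 / (2 * viewing_ratio * image_height)))

    def omega(a, b):
        # Spatial frequency (cycles/degree) of DCT basis function (a, b).
        return math.sqrt((a / theta) ** 2 + (b / theta) ** 2) / (2 * n)

    w = omega(i, j)
    # DCT normalization factors.
    phi_i = math.sqrt((1 if i == 0 else 2) / n)
    phi_j = math.sqrt((1 if j == 0 else 2) / n)
    # Directional angle of the basis function; 0 for the DC term.
    angle = 0.0 if w == 0 else math.asin(min(1.0, 2 * omega(i, 0) * omega(0, j) / w ** 2))
    csf = math.exp(C * w) / (A + B * w)
    oblique = R + (1 - R) * math.cos(angle) ** 2
    return S / (phi_i * phi_j) * csf / oblique
```

In that model, the final threshold is the product T_basic · F_lum · F_contrast. The luminance-adaptation and contrast-masking factors of the following subsections therefore scale this value.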

2.1.2. Luminance Adaptation
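For luminance adaptation, the same reference model [37] scales the base threshold by a piecewise-linear factor of the block's mean intensity. This raises the threshold in dark and bright regions, where distortions are harder to see. The sketch below assumes 8-bit intensities and the Wei and Ngan breakpoints at 60 and 170. This paper may use a different luminance model.

```python
def luminance_adaptation(mean_intensity):
    """Luminance adaptation factor F_lum for a block with the given mean 8-bit intensity."""
    if mean_intensity <= 60:
        # Dark regions: the threshold rises as the block gets darker.
        return (60 - mean_intensity) / 150 + 1
    if mean_intensity >= 170:
        # Bright regions: the threshold rises more slowly with brightness.
        return (mean_intensity - 170) / 425 + 1
    # Mid-gray range: no adaptation.
    return 1.0


def block_mean(block):
    """Mean intensity of a block given as a list of rows."""
    values = [v for row in block for v in row]
    return sum(values) / len(values)
```

The factor equals 1 at both breakpoints, so the threshold varies continuously with block brightness.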

2.1.3. Contrast Masking

2.1.4. Robust JND Model

3. Proposed Watermarking Scheme

3.1. Watermark Embedding Scheme

| Algorithm 1 Watermark Embedding |
|---|
| Input: The host image, I; watermark message, m |
| Output: Watermarked image |

3.2. Watermark Extracting Scheme

| Algorithm 2 Watermark Extracting |
|---|
| Input: The received watermarked image |
| Output: Watermark message |
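The scheme adapts a quantization step (Section 3.3). The quantization-based methods compared in Section 4.3.1 ([41], [42], [32]) build on spread transform dither modulation (STDM). As a generic illustration only, the sketch below embeds and extracts one bit with plain STDM. The host vector x (for example, a group of DCT coefficients) is projected onto a spreading vector u. The projection is then quantized onto one of two lattices offset by half the step Δ (`step`), and x is moved along u accordingly. The fixed step, the dither offsets 0 and Δ/2, and the helper names are assumptions. This is not the paper's embedding rule, whose adaptive step the sketch does not model.

```python
import math


def _unit(u):
    # Normalize the spreading vector so the projection is a plain dot product.
    norm = math.sqrt(sum(v * v for v in u))
    return [v / norm for v in u]


def _quantize(p, bit, step):
    # Dithered scalar quantizer: bit 0 uses offset 0, bit 1 uses offset step / 2.
    offset = bit * step / 2
    return step * round((p - offset) / step) + offset


def stdm_embed(x, bit, u, step):
    """Embed one bit into host vector x by quantizing its projection onto u."""
    u = _unit(u)
    p = sum(a * b for a, b in zip(x, u))
    shift = _quantize(p, bit, step) - p
    # Move x only along u, so components orthogonal to u are unchanged.
    return [a + shift * b for a, b in zip(x, u)]


def stdm_extract(y, u, step):
    """Recover the bit whose lattice lies closest to the projection of y onto u."""
    u = _unit(u)
    p = sum(a * b for a, b in zip(y, u))
    return min((0, 1), key=lambda bit: abs(p - _quantize(p, bit, step)))
```

Decoding stays correct as long as an attack shifts the projection by less than Δ/4. A larger step therefore buys robustness at the cost of visibility. Perceptual variants such as [41] address this trade-off by deriving the step from a perceptual model rather than fixing it.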

3.3. Adaptive Quantization Step

4. Experiments and Results Analysis

4.1. Comparison with Different JND Models within Watermarking Framework

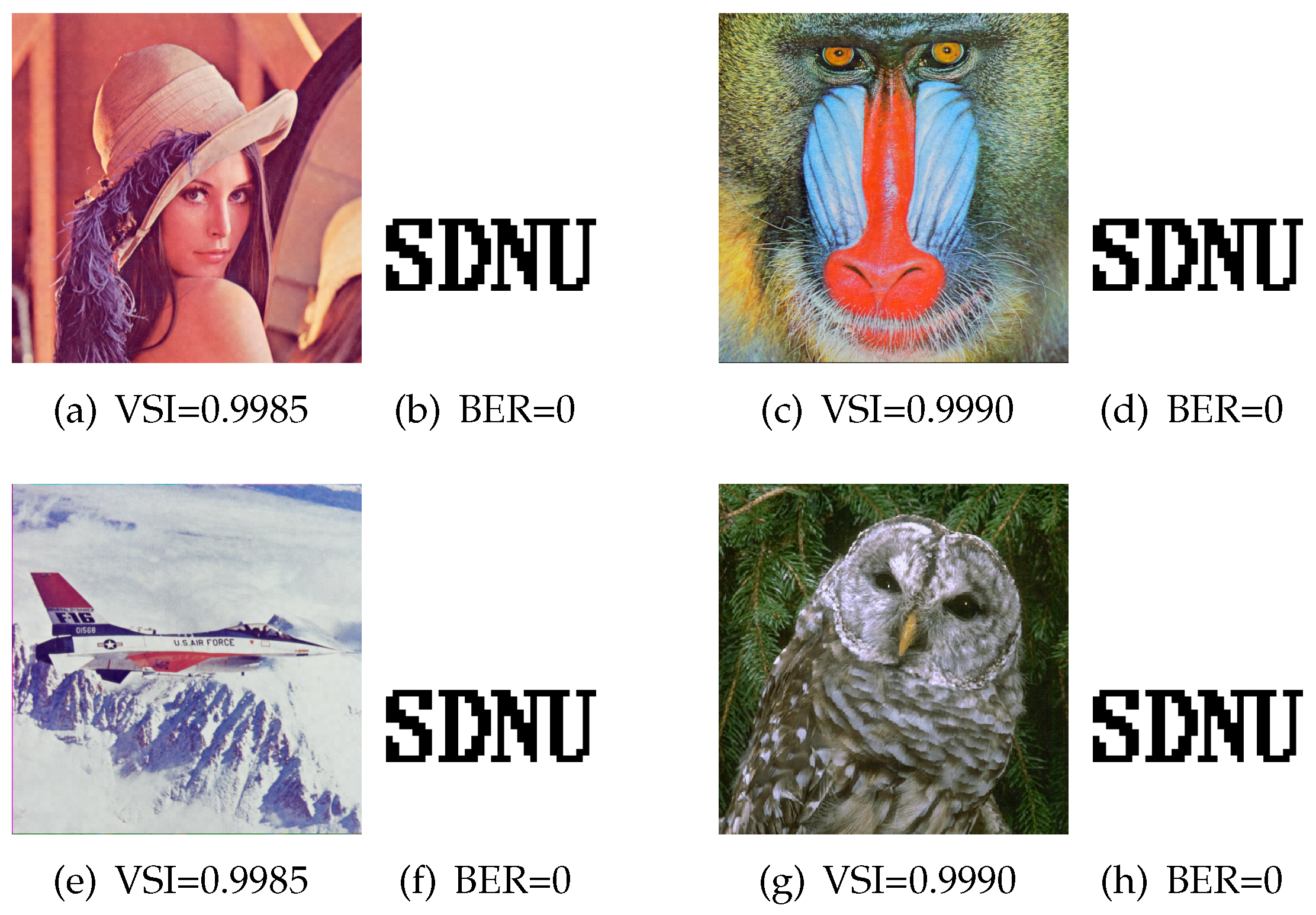

4.2. Imperceptibility Test for Watermarking Scheme
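Imperceptibility tests in watermarking commonly report peak signal-to-noise ratio (PSNR) between the host and the watermarked image. Structure-aware indices such as VSI [39] are also used. The sketch below is an illustrative PSNR computation, not necessarily the measure used in this section. It assumes 8-bit images flattened into lists, with R, G and B values pooled into a single mean squared error.

```python
import math


def psnr(original, distorted, peak=255):
    """PSNR in dB between two equally sized images given as flat lists of 8-bit channel values."""
    if len(original) != len(distorted) or not original:
        raise ValueError("images must be non-empty and of equal size")
    mse = sum((a - b) ** 2 for a, b in zip(original, distorted)) / len(original)
    if mse == 0:
        return math.inf  # identical images
    return 10 * math.log10(peak ** 2 / mse)
```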

4.3. Robustness Test for Watermarking Scheme
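Robustness of quantization watermarking is usually reported as the bit error rate (BER) between the embedded message m and the message extracted after an attack. The robustness tables below do not name their metric, so reading their entries as BERs is an assumption. Under that assumption, each entry corresponds to this computation:

```python
def bit_error_rate(sent, received):
    """Fraction of message bits that differ between the embedded and extracted messages."""
    if len(sent) != len(received) or not sent:
        raise ValueError("messages must be non-empty and of equal length")
    errors = sum(1 for s, r in zip(sent, received) if s != r)
    return errors / len(sent)
```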

4.3.1. Comparison with Individual Quantization-Based Watermarking Methods

4.3.2. Comparison with Spatial-Uniform Embedding-Based Watermarking Methods

5. Conclusions

Author Contributions

Funding

Conflicts of Interest

References

1. Cox, I.; Miller, M.; Bloom, J.; Fridrich, J.; Kalker, T. Digital Watermarking and Steganography; Morgan Kaufmann: Burlington, MA, USA, 2007.

2. Wu, X.; Yang, C.N. Invertible secret image sharing with steganography and authentication for AMBTC compressed images. Signal Process. Image Commun. 2019, 78, 437–447.

3. Liu, Y.X.; Yang, C.N.; Sun, Q.D.; Wu, S.Y.; Lin, S.S.; Chou, Y.S. Enhanced embedding capacity for the SMSD-based data-hiding method. Signal Process. Image Commun. 2019, 78, 216–222.

4. Kim, C.; Yang, C.N. Watermark with DSA signature using predictive coding. Multimed. Tools Appl. 2015, 74, 5189–5203.

5. Liu, H.; Xu, B.; Lu, D.; Zhang, G. A path planning approach for crowd evacuation in buildings based on improved artificial bee colony algorithm. Appl. Soft Comput. 2018, 68, 360–376.

6. De Vleeschouwer, C.; Delaigle, J.F.; Macq, B. Invisibility and application functionalities in perceptual watermarking an overview. Proc. IEEE 2002, 90, 64–77.

7. Zong, J.; Meng, L.; Zhang, H.; Wan, W. JND-based Multiple Description Image Coding. KSII Trans. Internet Inf. Syst. 2017, 11, 3935–3949.

8. Fridrich, J. Steganography in Digital Media: Principles, Algorithms, and Applications; Cambridge University Press: Cambridge, UK, 2009.

9. Wang, J.; Wan, W. A novel attention-guided JND Model for improving robust image watermarking. Multimed. Tools Appl. 2020, 79, 24057–24073.

10. Roy, S.; Pal, A.K. A blind DCT based color watermarking algorithm for embedding multiple watermarks. AEU-Int. J. Electron. Commun. 2017, 72, 149–161.

11. Das, C.; Panigrahi, S.; Sharma, V.K.; Mahapatra, K. A novel blind robust image watermarking in DCT domain using inter-block coefficient correlation. AEU-Int. J. Electron. Commun. 2014, 68, 244–253.

12. Ansari, R.; Devanalamath, M.M.; Manikantan, K.; Ramachandran, S. Robust digital image watermarking algorithm in DWT-DFT-SVD domain for color images. In Proceedings of the 2012 IEEE International Conference on Communication, Information & Computing Technology (ICCICT), Mumbai, India, 19–20 October 2012; pp. 1–6.

13. Cedillo-Hernandez, M.; Garcia-Ugalde, F.; Nakano-Miyatake, M.; Perez-Meana, H. Robust watermarking method in DFT domain for effective management of medical imaging. Signal Image Video Process. 2015, 9, 1163–1178.

14. Pradhan, C.; Rath, S.; Bisoi, A.K. Non blind digital watermarking technique using DWT and cross chaos. Procedia Technol. 2012, 6, 897–904.

15. Araghi, T.K.; Abd Manaf, A.; Araghi, S.K. A secure blind discrete wavelet transform based watermarking scheme using two-level singular value decomposition. Expert Syst. Appl. 2018, 112, 208–228.

16. Lin, S.D.; Shie, S.C.; Guo, J.Y. Improving the robustness of DCT-based image watermarking against JPEG compression. Comput. Stand. Interfaces 2010, 32, 54–60.

17. Huang, J.; Shi, Y.Q.; Shi, Y. Embedding image watermarks in DC components. IEEE Trans. Circuits Syst. Video Technol. 2000, 10, 974–979.

18. Su, Q.; Chen, B. Robust color image watermarking technique in the spatial domain. Soft Comput. 2018, 22, 91–106.

19. Su, Q.; Yuan, Z.; Liu, D. An approximate schur decomposition-based spatial domain color image watermarking method. IEEE Access 2018, 7, 4358–4370.

20. Su, Q.; Liu, D.; Yuan, Z.; Wang, G.; Zhang, X.; Chen, B.; Yao, T. New rapid and robust color image watermarking technique in spatial domain. IEEE Access 2019, 7, 30398–30409.

21. Bae, S.H.; Kim, M. A new DCT-based JND model of monochrome images for contrast masking effects with texture complexity and frequency. In Proceedings of the IEEE International Conference on Image Processing, Melbourne, Australia, 15–18 September 2013; Volume 1, pp. 431–434.

22. Wu, J.; Li, L.; Dong, W.; Shi, G.; Lin, W.; Kuo, C.C.J. Enhanced just noticeable difference model for images with pattern complexity. IEEE Trans. Image Process. 2017, 26, 2682–2693.

23. Liu, H.; Liu, B.; Zhang, H.; Li, L.; Qin, X.; Zhang, G. Crowd evacuation simulation approach based on navigation knowledge and two-layer control mechanism. Inf. Sci. 2018, 436, 247–267.

24. Wan, W.; Wu, J.; Xie, X.; Shi, G. A novel just noticeable difference model via orientation regularity in DCT domain. IEEE Access 2017, 5, 22953–22964.

25. Wan, W.; Wang, J.; Li, J.; Meng, L.; Sun, J.; Zhang, H.; Liu, J. Pattern complexity-based JND estimation for quantization watermarking. Pattern Recognit. Lett. 2020, 130, 157–164.

26. Wang, C.X.; Xu, M.; Wan, W.; Wang, J.; Meng, L.; Li, J.; Sun, J. Robust Image Watermarking via Perceptual Structural Regularity-based JND Model. TIIS 2019, 13, 1080–1099.

27. Wan, W.; Wang, J.; Xu, M.; Li, J.; Sun, J.; Zhang, H. Robust image watermarking based on two-layer visual saliency-induced JND profile. IEEE Access 2019, 7, 39826–39841.

28. Tsui, T.K.; Zhang, X.P.; Androutsos, D. Color image watermarking using multidimensional Fourier transforms. IEEE Trans. Inf. Forensics Secur. 2008, 3, 16–28.

29. Hu, H.T.; Chang, J.R. Dual image watermarking by exploiting the properties of selected DCT coefficients with JND modeling. Multimed. Tools Appl. 2018, 77, 26965–26990.

30. Wan, W.; Wang, J.; Li, J.; Sun, J.; Zhang, H.; Liu, J. Hybrid JND model-guided watermarking method for screen content images. Multimed. Tools Appl. 2020, 79, 4907–4930.

31. Wang, J.; Wan, W.B.; Li, X.X.; De Sun, J.; Zhang, H.X. Color image watermarking based on orientation diversity and color complexity. Expert Syst. Appl. 2020, 140, 112868.

32. Ma, L.; Yu, D.; Wei, G.; Tian, J.; Lu, H. Adaptive spread-transform dither modulation using a new perceptual model for color image watermarking. IEICE Trans. Inf. Syst. 2010, 93, 843–857.

33. Wu, J.; Lin, W.; Shi, G.; Wang, X.; Li, F. Pattern masking estimation in image with structural uncertainty. IEEE Trans. Image Process. 2013, 22, 4892–4904.

34. Ahumada, A.J., Jr.; Peterson, H.A. Luminance-model-based DCT quantization for color image compression. In Proceedings of the Human Vision, Visual Processing, and Digital Display III, San Jose, CA, USA, 27 August 1992; Volume 1666, pp. 365–374.

35. Mannos, J.; Sakrison, D. The effects of a visual fidelity criterion of the encoding of images. IEEE Trans. Inf. Theory 1974, 20, 525–536.

36. Peterson, H.A.; Ahumada, A.J., Jr.; Watson, A.B. Improved detection model for DCT coefficient quantization. In Proceedings of the Human Vision, Visual Processing, and Digital Display IV, San Jose, CA, USA, 9 September 1993; Volume 1913, pp. 191–201.

37. Wei, Z.; Ngan, K.N. Spatio-temporal just noticeable distortion profile for grey scale image/video in DCT domain. IEEE Trans. Circuits Syst. Video Technol. 2009, 19, 337–346.

38. CVG-UGR. The CVG-UGR Image Database. 2012. Available online: http://decsai.ugr.es/cvg/dbimagenes/c512.php (accessed on 11 March 2018).

39. Zhang, L.; Shen, Y.; Li, H. VSI: A visual saliency-induced index for perceptual image quality assessment. IEEE Trans. Image Process. 2014, 23, 4270–4281.

40. Zhang, X.; Lin, W.; Xue, P. Improved estimation for just-noticeable visual distortion. Signal Process. 2005, 85, 795–808.

41. Li, Q.; Cox, I.J. Improved spread transform dither modulation using a perceptual model: Robustness to amplitude scaling and JPEG compression. In Proceedings of the 2007 IEEE International Conference on Acoustics, Speech and Signal Processing-ICASSP’07, Honolulu, HI, USA, 15–20 April 2007; Volume 2, pp. 185–188.

42. Li, X.; Liu, J.; Sun, J.; Yang, X.; Liu, W. Step-projection-based spread transform dither modulation. IET Inf. Secur. 2011, 5, 170–180.

| Attack | Lena [21] | Lena [40] | Lena Proposed | Baboon [21] | Baboon [40] | Baboon Proposed | Avion [21] | Avion [40] | Avion Proposed |
|---|---|---|---|---|---|---|---|---|---|
| GN(0.0010) | 0.1235 | 0.1812 | 0.0056 | 0.1367 | 0.1921 | 0.0300 | 0.1250 | 0.1175 | 0.0229 |
| GN(0.0015) | 0.2048 | 0.2625 | 0.0183 | 0.2219 | 0.2788 | 0.0767 | 0.2036 | 0.2654 | 0.0527 |
| SPN(0.0010) | 0.0549 | 0.0566 | 0.0056 | 0.0557 | 0.0549 | 0.0166 | 0.0527 | 0.0510 | 0.0159 |
| SPN(0.0015) | 0.0696 | 0.0803 | 0.0063 | 0.0710 | 0.0764 | 0.0247 | 0.0676 | 0.0696 | 0.0232 |
| JPEG(40) | 0.1787 | 0.2588 | 0.0056 | 0.1641 | 0.2549 | 0.0120 | 0.1589 | 0.2500 | 0.0085 |
| JPEG(50) | 0.0767 | 0.1255 | 0.0029 | 0.0837 | 0.1138 | 0.0090 | 0.0708 | 0.1230 | 0.0037 |
| RO(60) | 0.0154 | 0.0171 | 0.0015 | 0.0313 | 0.0288 | 0.0073 | 0.0242 | 0.0237 | 0.0039 |
| GF(3×3) | 0.0576 | 0.0591 | 0.0120 | 0.0735 | 0.0828 | 0.0300 | 0.0615 | 0.0623 | 0.0320 |

| Attack | Parameters | Ref. [26] | Ref. [41] | Ref. [42] | Ref. [32] | Proposed |
|---|---|---|---|---|---|---|
| Gaussian noise | (0.0005) | 0.0105 | 0.1045 | 0.0181 | 0.0088 | 0.0075 |
| Gaussian noise | (0.0010) | 0.0349 | 0.1561 | 0.0592 | 0.1444 | 0.0237 |
| Gaussian noise | (0.0015) | 0.0693 | 0.1856 | 0.1038 | 0.1890 | 0.0562 |
| Salt & Pepper noise | (0.0005) | 0.0068 | 0.0567 | 0.0074 | 0.0201 | 0.0073 |
| Salt & Pepper noise | (0.0010) | 0.0162 | 0.0673 | 0.0179 | 0.0382 | 0.0137 |
| Salt & Pepper noise | (0.0015) | 0.0235 | 0.0762 | 0.0262 | 0.0409 | 0.0228 |
| JPEG | (40) | 0.0318 | 0.1699 | 0.0544 | 0.1677 | 0.0063 |
| JPEG | (50) | 0.0089 | 0.1149 | 0.0203 | 0.1182 | 0.0052 |
| JPEG | (60) | 0.0031 | 0.0869 | 0.0088 | 0.0833 | 0.0034 |
| Gaussian filter | () | 0.0215 | 0.0898 | 0.0576 | 0.0323 | 0.0200 |
| Median filter | () | 0.1628 | 0.1400 | 0.0817 | 0.1068 | 0.0916 |
| Rotation | (15°) | 0.0078 | 0.0461 | 0.0075 | 0.1091 | 0.0058 |
| Rotation | (30°) | 0.0052 | 0.0412 | 0.0044 | 0.0204 | 0.0047 |
| Rotation | (60°) | 0.0056 | 0.0426 | 0.0048 | 0.0202 | 0.0041 |
| JPEG + Gaussian noise | (50 + 0.0005) | 0.0391 | 0.1794 | 0.0663 | 0.1628 | 0.0361 |
| JPEG + Gaussian noise | (50 + 0.0010) | 0.0770 | 0.2275 | 0.1123 | 0.1990 | 0.0724 |
| JPEG + Gaussian noise | (50 + 0.0015) | 0.1152 | 0.2717 | 0.1539 | 0.2259 | 0.1073 |

| Method | GN (0.0025) | SPN (0.0025) | JPEG (30) | Scaling (0.25) | MF (3,3) | GF (3,3) | VA (0.5) | RO (25°) | J + GN (50 + 0.002) |
|---|---|---|---|---|---|---|---|---|---|
| Ref. [18] | 0.0439 | 0.0439 | 0.0059 | 0.0264 | 0.0078 | 0.0000 | 0.3905 | 0.0684 | 0.0479 |
| Ref. [19] | 0.0166 | 0.1387 | 0.0020 | 0.2940 | 0.0264 | 0.0737 | 0.3936 | 0.1680 | 0.0225 |
| Proposed | 0.0128 | 0.0313 | 0.0068 | 0.0254 | 0.0071 | 0.0000 | 0.0000 | 0.1033 | 0.0074 |

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Zhou, K.; Zhang, Y.; Li, J.; Zhan, Y.; Wan, W. Spatial-Perceptual Embedding with Robust Just Noticeable Difference Model for Color Image Watermarking. Mathematics 2020, 8, 1506. https://doi.org/10.3390/math8091506