Abstract

A relaxed inertial Tseng-type method for solving the inclusion problem involving a maximally monotone mapping and a monotone mapping is proposed in this article. The study modifies Tseng's forward-backward-forward splitting method by using both a relaxation parameter and an inertial extrapolation step. The proposed method follows from an explicit time discretization of a dynamical system. Weak convergence of the iterates generated by the method is established for monotone operators. Moreover, the iterative scheme uses a variable step size given by a simple updating rule, which does not depend on the Lipschitz constant of the underlying operator. Furthermore, the proposed algorithm is modified to derive a scheme for solving the split feasibility problem. The proposed schemes are applied to the image deblurring problem to illustrate their applicability in comparison with existing state-of-the-art methods.

Keywords:

variational inclusion problem; Lipschitz-type conditions; forward-backward method; zero point; image restoration; maximal monotone operator

MSC:

47H05; 47J20; 47J25; 65K15

1. Introduction

This paper considers the problem of finding a point $u^* \in \mathcal{H}$ such that:

$$0 \in (A + B)u^*, \tag{1}$$

where $A:\mathcal{H}\to\mathcal{H}$ and $B:\mathcal{H}\to 2^{\mathcal{H}}$ are respectively single-valued and multi-valued operators on a real Hilbert space $\mathcal{H}$. The variational inclusion (VI) problem (1) is a fundamental problem in optimization theory, which is applied in many areas of study such as image processing, machine learning, transportation problems, equilibrium, economics, and engineering [,,,,,,,,,,,,,,,,].

There are several approaches to the VI problem, the most popular being the forward-backward splitting method introduced in [,]. Many algorithms have since been proposed and studied for solving (1) [,,,,,,,,,,,].

Relaxation techniques are important tools in the study of the monotone inclusion problem (1), as they give iterative schemes more versatility [,]. Inertial effects, in turn, were introduced to accelerate the convergence of numerical methods. This technique traces back to the pioneering work of Polyak [], who introduced the heavy ball method to speed up the convergence of the gradient algorithm and to allow the identification of various critical points. The inertial idea was later used and developed by Nesterov [] for smooth convex minimization problems and by Alvarez and Attouch (see [,]) for monotone inclusions and non-smooth convex minimization problems. A considerable amount of literature has contributed to inertial algorithms over the last decade [,,,,].
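The effect of an inertial term can be seen on a small example. The following is a minimal sketch, not taken from the paper: it compares plain gradient descent with the classical heavy ball iteration $x_{k+1} = x_k - \alpha\nabla f(x_k) + \beta(x_k - x_{k-1})$ on an ill-conditioned quadratic. The test function, the step sizes, and all function names are illustrative assumptions; the heavy ball parameters are the textbook choices for a quadratic with curvature bounds `mu` and `L`.

```python
def grad(x):
    """Gradient of the illustrative quadratic f(x) = 0.5 * (x[0]**2 + 100 * x[1]**2)."""
    return [x[0], 100.0 * x[1]]

def gradient_descent(x0, step, iters):
    """Plain gradient descent with a constant step size."""
    x = list(x0)
    for _ in range(iters):
        g = grad(x)
        x = [xi - step * gi for xi, gi in zip(x, g)]
    return x

def heavy_ball(x0, step, beta, iters):
    """Heavy ball iteration: gradient step plus the inertial term beta * (x_k - x_{k-1})."""
    x_prev, x = list(x0), list(x0)
    for _ in range(iters):
        g = grad(x)
        x_next = [xi - step * gi + beta * (xi - pi)
                  for xi, gi, pi in zip(x, g, x_prev)]
        x_prev, x = x, x_next
    return x

def norm(x):
    return sum(xi * xi for xi in x) ** 0.5

# Curvature bounds of the quadratic (assumed known here for illustration only).
mu, L = 1.0, 100.0
step_hb = 4.0 / (L ** 0.5 + mu ** 0.5) ** 2
beta_hb = ((L ** 0.5 - mu ** 0.5) / (L ** 0.5 + mu ** 0.5)) ** 2

# Distance to the minimizer (the origin) after 100 iterations of each method.
err_gd = norm(gradient_descent([1.0, 1.0], 1.0 / L, 100))
err_hb = norm(heavy_ball([1.0, 1.0], step_hb, beta_hb, 100))
```

On this example the inertial iterate ends up several orders of magnitude closer to the minimizer than gradient descent after the same number of gradient evaluations.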

Due to the advantages of inertial effects and relaxation techniques, Attouch and Cabot extensively studied inertial algorithms for monotone inclusion and convex optimization problems. To be precise, they focused on the relaxed inertial proximal method (RIPA) in [,] and the relaxed inertial forward-backward method (RIFB) in []. In [], a relaxed inertial Douglas–Rachford algorithm for monotone inclusions was proposed. Similarly, in [], Iutzeler and Hendrickx studied the influence of inertial effects and relaxation techniques on the numerical performance of algorithms. The similarity between relaxation and inertial parameters for relative-error inexact under-relaxed algorithms was addressed in [,].

The last equality follows from an explicit discretization of (2) in time with a step size Taking we obtain:

Setting in (4), we get:

It can be observed that in the case Equation (5) reduces to Tseng’s forward-backward-forward method []. The convergence of the scheme in [] requires that the step size be smaller than $1/L$, where $L$ is the Lipschitz constant of $A$, or that it be computed using a line search procedure with a finite stopping criterion. It is known that line search procedures involve extra function evaluations, thereby reducing the computational performance of a given scheme. In this article, we propose a simple variable step size rule, which does not involve any line search.
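For orientation, the fixed-step forward-backward-forward iteration can be sketched in a few lines. This is a minimal illustration under stated assumptions, not the authors' code: it uses the standard form $y_n = J_\lambda^B(x_n - \lambda A x_n)$, $x_{n+1} = y_n - \lambda(Ay_n - Ax_n)$, a rotation operator as a hypothetical monotone and 1-Lipschitz $A$, and $B = 0$, whose resolvent is the identity. The step `lam = 0.5` is chosen below $1/L = 1$.

```python
import math

def A(x):
    """Illustrative skew (rotation) operator: monotone and 1-Lipschitz, not cocoercive."""
    return [x[1], -x[0]]

def resolvent(x, lam):
    """Resolvent of B = 0, which is the identity (illustrative choice)."""
    return list(x)

def tseng_fbf(x0, lam, iters):
    """Forward-backward-forward iteration with a constant step size lam < 1/L."""
    x = list(x0)
    for _ in range(iters):
        Ax = A(x)
        # Forward-backward step.
        y = resolvent([xi - lam * ai for xi, ai in zip(x, Ax)], lam)
        # Second forward (correction) step.
        Ay = A(y)
        x = [yi - lam * (ayi - axi) for yi, ayi, axi in zip(y, Ay, Ax)]
    return x

def norm(x):
    return math.sqrt(sum(xi * xi for xi in x))

# The unique zero of A + B in this example is the origin.
x_final = tseng_fbf([1.0, 1.0], lam=0.5, iters=500)
```

The rotation operator is a useful test case because a plain forward step $x - \lambda Ax$ increases the norm for every $\lambda > 0$, whereas the correction step makes the iteration contract toward the zero.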

The main iterative scheme in this study is given by:

where is the relaxation parameter and is the extrapolation parameter. It is well known that the extrapolation step speeds up the convergence of a scheme. The step size is defined to be self-adaptively updated according to a new simple step size rule.

Furthermore, (6) without the additional last step is exactly the scheme proposed in [], which converges weakly to the solution of (1) with a restrictive assumption on Moreover, (6) can be considered as a relaxed version of the scheme proposed by Tseng [].

Recently, Gibali et al. [] proposed a modified Tseng algorithm by incorporating the Mann method with a variable step size for solving (1). The question now is: Can we have a fast iterative scheme involving a more general class of operators with a variable step size? We provide a positive answer to this question in this study.

Inspired and motivated by [,,], we propose a relaxed inertial scheme with variable step sizes by incorporating the inertial extrapolation step and the relaxation parameter into the forward-backward scheme. The aim of this modification is to obtain a self-adaptive scheme with fast convergence properties involving a more general class of operators. Furthermore, we present a modified version of the proposed scheme for solving the split feasibility problem. Moreover, to illustrate the performance and applicability of the proposed methods in comparison with existing algorithms in the literature, we apply the proposed algorithms to the problem of image recovery.

The outline of this work is as follows: In the next section, we give some definitions and lemmas that we use in our convergence analysis. We present the convergence analysis of our proposed scheme in Section 3. Lastly, in Section 4, we illustrate the inertial effect and the computational performance of our algorithms through experiments in which the proposed algorithms are used to solve the problem of image recovery.

2. Preliminaries

This section recalls some known facts and necessary tools that we need for the convergence analysis of our method. Throughout this article, $\mathcal{H}$ is a real Hilbert space with the inner product and norm denoted respectively as $\langle\cdot,\cdot\rangle$ and $\|\cdot\|$, and $C$ is a nonempty closed and convex subset of $\mathcal{H}$. The notation $u_n \rightharpoonup u$ ($u_n \to u$) is used to indicate that the sequence $\{u_n\}$ converges weakly (strongly) to $u$. The following is known to hold in a Hilbert space:

and for every []. The following definitions can be found for example in [,].

Definition 1.

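Let $A:\mathcal{H}\to\mathcal{H}$ be a mapping defined on a real Hilbert space $\mathcal{H}$. For all $u, v \in \mathcal{H}$, $A$ is said to be:

- (1) Monotone if:

$$\langle Au - Av, u - v\rangle \ge 0.$$

- (2) Firmly nonexpansive if:

$$\|Au - Av\|^2 \le \langle Au - Av, u - v\rangle,$$

or equivalently,

$$\|Au - Av\|^2 \le \|u - v\|^2 - \|(I - A)u - (I - A)v\|^2.$$

- (3) $L$-Lipschitz continuous on $\mathcal{H}$ if there exists a constant $L > 0$ such that:

$$\|Au - Av\| \le L\|u - v\|.$$

If $L = 1$, then $A$ is called nonexpansive.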

Definition 2

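([]). A multi-valued mapping $B:\mathcal{H}\to 2^{\mathcal{H}}$ is said to be monotone if, for every $u, v \in \mathcal{H}$, $x \in Bu$, and $y \in Bv$, $\langle x - y, u - v\rangle \ge 0$. Furthermore, $B$ is said to be maximal monotone if it is monotone and if, for every $(u, x) \in \mathcal{H}\times\mathcal{H}$, $\langle x - y, u - v\rangle \ge 0$ for every $(v, y) \in \operatorname{Graph}(B)$ implies $x \in Bu$.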

Definition 3.

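Let $B:\mathcal{H}\to 2^{\mathcal{H}}$ be a multi-valued maximal monotone mapping. Then, the resolvent mapping $J_\lambda^B:\mathcal{H}\to\mathcal{H}$ associated with $B$ is defined by:

$$J_\lambda^B(u) := (I + \lambda B)^{-1}(u),$$

for some $\lambda > 0$, where $I$ stands for the identity operator on $\mathcal{H}$.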

It is well known that if $B:\mathcal{H}\to 2^{\mathcal{H}}$ is a set-valued maximal monotone mapping and $\lambda > 0$, then $\operatorname{Dom}(J_\lambda^B) = \mathcal{H}$, and $J_\lambda^B$ is a single-valued and firmly nonexpansive mapping (see [] for more properties of maximal monotone mappings).

Lemma 1

([]). Let $A:\mathcal{H}\to\mathcal{H}$ be a Lipschitz continuous and monotone mapping and $B:\mathcal{H}\to 2^{\mathcal{H}}$ be a maximal monotone mapping. Then, the mapping $A + B$ is a maximal monotone mapping.

Lemma 2

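([]). Suppose $\{\varphi_n\}$, $\{\delta_n\}$, and $\{\theta_n\}$ are sequences in $[0, +\infty)$ such that, for all $n \ge 1$,

$$\varphi_{n+1} \le \varphi_n + \theta_n(\varphi_n - \varphi_{n-1}) + \delta_n, \qquad \sum_{n=1}^{\infty} \delta_n < +\infty,$$

and there exists $\theta \in \mathbb{R}$ with $0 \le \theta_n \le \theta < 1$ for all $n \in \mathbb{N}$. Then, the following are satisfied:

- (i) $\sum_{n=1}^{\infty} [\varphi_n - \varphi_{n-1}]_+ < +\infty$, where $[t]_+ := \max\{t, 0\}$;

- (ii) there exists $\varphi^* \in [0, +\infty)$ with $\lim_{n\to\infty} \varphi_n = \varphi^*$.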

Lemma 3

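([]). Let $E \subseteq \mathcal{H}$ be a nonempty set and $\{u_n\}$ a sequence in $\mathcal{H}$ such that the following are satisfied:

- (a) for every $u \in E$, $\lim_{n\to\infty} \|u_n - u\|$ exists;

- (b) every sequentially weak cluster point of $\{u_n\}$ is in $E$.

Then, $\{u_n\}$ converges weakly to a point in $E$.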

Lemma 4.

Let be a non-negative real number sequence, be a sequence of real numbers in with , and be a sequence of real numbers satisfying:

If for every subsequence of satisfying then

3. Relaxed Inertial Tseng-Type Algorithm for the Variational Inclusion Problem

In this section, we give a detailed description of our proposed algorithm, and we present the weak convergence analysis of the iterates generated by the algorithm to the solution of the inclusion problem (1) involving the sum of a maximally monotone and monotone operator. We suppose the following assumptions for the analysis of our method.

Assumption 1.

Lemma 5.

The generated sequence by (11) is monotonically decreasing and bounded from below by .

Proof.

It can be observed that the sequence is monotonically decreasing. Since A is a Lipschitz function with Lipschitz’s constant L, for we have:

It is obvious for that the inequality (8) is satisfied. Hence, it follows that . □

Remark 1.

By Lemma 5, the update (11) is well defined and:

Next, the following lemma and its proof are crucial for the convergence analysis of the sequence generated by Algorithm 1.

Algorithm 1. Relaxed inertial Tseng-type algorithm for the VI problem.

Initialization: Choose and

Iterative steps: Given the current iterates and .

Step 1. Set as:

Step 2. Compute:

If , stop. is the solution of (1). Else, go to Step 3.

Step 3. Compute:

where the stepsize sequence is updated as follows:

Set , and go back to Step 1.

Lemma 6.

Let A be an operator satisfying the assumption (). Then, for all we have:

where

Proof.

From the fact that the resolvent is firmly nonexpansive and we have:

Hence, we get:

which is the same as:

However,

On the other hand, from the definition of we have:

Using Equation (7), we have:

Lemma 7.

Let be a sequence generated by Algorithm 1 and Assumption be satisfied. If there exists a subsequence weakly convergent to with then

Proof.

Suppose Graph that is , and since we get:

This implies that:

By the maximal monotonicity of we have:

Hence,

From the fact that A is Lipschitz continuous and it follows that since exists, we get:

The above inequality together with the maximal monotonicity of implies that that is hence the proof. □

Theorem 1.

Let A be an operator satisfying the assumptions () and:

with Then, for all the sequence generated by Algorithm 1, converges weakly to

Proof.

From the definition of and (9), we have:

Furthermore,

Hence,

Putting Equation (24) and Lemma 6 together, we have:

Set:

Observe that, as we get:

It follows from (20) that there exists a such that:

This, together with Equation (22), implies that:

On the other hand, from the Definition (10), we have:

Furthermore, from Definition (10) and the Cauchy–Schwarz inequality, we get:

Set:

Thus,

Notice that,

Therefore,

with it follows from (20) that Therefore, the sequence is nonincreasing. Further, from the definition of we have

In addition, we have:

The last inequality implies that:

Letting in the above expression implies that,

Moreover, from:

we can obtain:

By Relation (30) together with Lemma 2, we obtain:

By Equation (28), we also obtain:

Moreover,

It follows from Equation (25) that:

4. Application to the Split Feasibility Problem

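In this section, we derive a scheme for solving the split feasibility problem from Algorithm 1. The split feasibility problem (SFP) is the problem of finding a point $u \in C$ such that $Tu \in Q$, where $C$ and $Q$ are nonempty closed and convex subsets of $\mathcal{H}_1$ and $\mathcal{H}_2$, respectively, and $T:\mathcal{H}_1\to\mathcal{H}_2$ is a bounded linear operator. Censor and Elfving [] introduced the SFP in finite-dimensional Hilbert spaces and used a multi-distance method to obtain an iterative method for solving it. A number of problems that arise in phase retrieval and in medical image reconstruction can be formulated as split variational feasibility problems [,]. The SFP can also be applied in various disciplines such as image restoration, dynamic emission tomographic image reconstruction, and radiation therapy treatment planning [,,].

Suppose $f:\mathcal{H}\to(-\infty, +\infty]$ is a proper, lower semi-continuous and convex function. Then, for all $u \in \mathcal{H}$, the subdifferential of $f$ is defined as follows:

$$\partial f(u) := \{ z \in \mathcal{H} : f(v) \ge f(u) + \langle z, v - u\rangle \ \text{for all } v \in \mathcal{H} \}.$$

For a nonempty closed and convex subset $C$ of $\mathcal{H}$, the indicator function $\delta_C$ of $C$ is given by:

$$\delta_C(u) := \begin{cases} 0, & u \in C, \\ +\infty, & u \notin C. \end{cases}$$

Furthermore, the normal cone $N_C(u)$ of $C$ at $u \in C$ is given as:

$$N_C(u) := \{ z \in \mathcal{H} : \langle z, v - u\rangle \le 0 \ \text{for all } v \in C \}.$$

It is known that the indicator function $\delta_C$ is a proper, lower semi-continuous and convex function on $\mathcal{H}$. Thus, the subdifferential of $\delta_C$ is a maximal monotone operator and:

$$\partial \delta_C(u) = N_C(u).$$

Therefore, for all $\lambda > 0$, we can define the resolvent of $\partial \delta_C$ as $J_\lambda^{\partial\delta_C} = (I + \lambda\,\partial\delta_C)^{-1}$. Hence, we can see that for $u \in \mathcal{H}$:

$$J_\lambda^{\partial\delta_C}(u) = P_C(u),$$

where $P_C$ denotes the metric projection onto $C$.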
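The identification of the resolvent of the normal cone with the metric projection can be checked numerically on a simple set. The sketch below is illustrative only: it takes $C$ to be a box (an assumption made for this example) and verifies the defining inclusion $x - p \in \lambda N_C(p)$ for $p = P_C(x)$. Because $N_C(p)$ is a cone, the check does not depend on $\lambda > 0$. The helper names are hypothetical.

```python
def project_box(x, lo, hi):
    """Metric projection onto the box C = [lo, hi]^n."""
    return [min(max(xi, lo), hi) for xi in x]

def inner(a, b):
    return sum(ai * bi for ai, bi in zip(a, b))

def in_normal_cone(z, p, lo, hi, tol=1e-12):
    """Check z in N_C(p) for the box C: the linear map y -> <z, y - p> attains its
    maximum over C at a vertex, so testing that single vertex is enough."""
    y = [hi if zi > 0 else lo for zi in z]
    return inner(z, [yi - pi for yi, pi in zip(y, p)]) <= tol

x = [1.7, -0.3, 0.4]
p = project_box(x, 0.0, 1.0)            # candidate value of the resolvent at x
z = [xi - pi for xi, pi in zip(x, p)]   # must lie in the normal cone at p

# A feasible point that is not the projection fails the same test.
p_bad = [0.5, 0.0, 0.4]
z_bad = [xi - pi for xi, pi in zip(x, p_bad)]
```

In practice this means that the backward step of Algorithm 1 for $B = \partial\delta_C$ costs one projection onto $C$, which is cheap for boxes, balls, and half-spaces.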

Now, based on the above derivation, Algorithm 1 can be reduced to the following scheme.

Let C and Q be nonempty closed convex subsets of Hilbert spaces and , respectively, be a bounded linear operator with adjoint , and be the solution set of the problem (SFP). Let be arbitrary, , and Let be a sequence generated by the following scheme:

where the step size is updated using Equation (11). If then the sequence converges weakly to an element of
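A small instance helps to see how such a scheme behaves. The following sketch is an illustration under assumptions, not the authors' Scheme (43): it assumes the usual reduction of the SFP to the inclusion (1) with $A = T^*(I - P_Q)T$ and $B = \partial\delta_C$, so that the resolvent is $P_C$, and it reuses an assumed relaxed inertial Tseng-type update with a self-adaptive step size. The sets $C = [0,1]^2$ and $Q = [1.5, +\infty)$, the operator $Tu = u_1 + u_2$, and all parameter names are hypothetical choices.

```python
import math

def project_C(u):
    """Projection onto the illustrative set C = [0, 1]^2."""
    return [min(max(ui, 0.0), 1.0) for ui in u]

def project_Q(y):
    """Projection onto the illustrative set Q = [1.5, +inf)."""
    return max(y, 1.5)

def T(u):
    """Illustrative bounded linear operator T u = u[0] + u[1]."""
    return u[0] + u[1]

def A(u):
    """A u = T^*(I - P_Q) T u, where the adjoint is T^* r = (r, r)."""
    r = T(u) - project_Q(T(u))
    return [r, r]

def norm(x):
    return math.sqrt(sum(xi * xi for xi in x))

def solve_sfp(u0, theta=0.2, rho=0.8, mu=0.5, lam0=0.4, iters=300, tol=1e-12):
    u_prev, u, lam = list(u0), list(u0), lam0
    for _ in range(iters):
        # Inertial extrapolation.
        w = [ui + theta * (ui - pi) for ui, pi in zip(u, u_prev)]
        # Forward step on A, backward step is the projection onto C.
        Aw = A(w)
        v = project_C([wi - lam * ai for wi, ai in zip(w, Aw)])
        diff = norm([wi - vi for wi, vi in zip(w, v)])
        if diff <= tol:
            return v
        # Tseng correction and relaxation.
        Av = A(v)
        t = [vi + lam * (awi - avi) for vi, awi, avi in zip(v, Aw, Av)]
        u_prev, u = u, [(1 - rho) * wi + rho * ti for wi, ti in zip(w, t)]
        # Self-adaptive, nonincreasing step size.
        dA = norm([awi - avi for awi, avi in zip(Aw, Av)])
        if dA > 0:
            lam = min(mu * diff / dA, lam)
    return u

u_star = solve_sfp([0.0, 0.0])
```

The solution set of this toy problem is not a single point, so the test of success is feasibility: the returned point should lie in $C$ and its image under $T$ should lie in $Q$, up to a small tolerance.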

Application to the Image Restoration Problem

The VI problem, as mentioned in Section 1, can be applied to solve many problems. In this subsection, we use Algorithm 1 and Scheme (43) (Algorithm 2) to solve the problem of image deblurring. To illustrate the effectiveness of the proposed scheme, we give a comparative analysis of Algorithm 1 and the algorithms proposed in [,]. Furthermore, we compare Scheme (43) with Byrne’s algorithm proposed in [] for solving the split feasibility problem.

Recall that the image deblurring problem in image processing can be expressed as:

where represents the original image, M is the blurring matrix, c is the observed image, and is the Gaussian noise. It is known that solving (44) is equivalent to solving the convex unconstrained optimization problem:

with as the regularization parameter. To solve (45), we suppose and where and then we have that is -cocoercive. Therefore, for any is nonexpansive []. The subgradient is maximal monotone []. It is well known that:

where for more details, see [].
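When the regularizer is the $\ell_1$ norm, which is assumed in this sketch, the resolvent of its subdifferential is the componentwise soft-thresholding map. The code below is a minimal illustration of that map and of one forward-backward step built from it; the function names, the denoising example with $M = I$, and the parameters `step` and `reg` are hypothetical and are not taken from the paper.

```python
def soft_threshold(x, tau):
    """Componentwise soft-thresholding: the proximal map of tau * ||.||_1."""
    return [max(xi - tau, 0.0) - max(-xi - tau, 0.0) for xi in x]

def forward_backward_step(u, grad_f, step, reg):
    """One step u+ = prox_{step * reg * ||.||_1}(u - step * grad_f(u))."""
    g = grad_f(u)
    return soft_threshold([ui - step * gi for ui, gi in zip(u, g)], step * reg)

# Entries with magnitude below the threshold are set to zero, others shrink by tau.
shrunk = soft_threshold([3.0, -0.5, -2.0], 1.0)

# Denoising special case M = I: f(u) = 0.5 * ||u - c||^2 has gradient u - c.
c = [3.0, -0.5, -2.0]
u_next = forward_backward_step([0.0, 0.0, 0.0],
                               lambda u: [ui - ci for ui, ci in zip(u, c)],
                               step=1.0, reg=1.0)
```

With $M = I$ and a unit step, a single forward-backward step from the origin already returns the thresholded observation, which is why the two results above coincide.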

For the split feasibility problem (SFP), we reformulate Problem (45) as a convex constrained optimization problem:

where is a given constant, and to solve (46), we take . We consider and

To measure the quality of the recovered image, we adopted the improvement in signal-to-noise ratio (ISNR) [] and the structural similarity index measure (SSIM) []. We considered motion blur from MATLAB as the blurring function, using fspecial('motion',9,40). For the comparison, we considered the standard test images of Butterfly , Lena , and Pepper (see Figure 1). For the control parameters, we took , and for Algorithm 1 and Algorithm 2 (Scheme (43)), and and for the algorithms in [], [], and []. For all the algorithms, we took as the stopping criterion. All codes were written in MATLAB R2018b and run on a personal computer.
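As a reference for how the first quality measure is usually computed, the following sketch implements the common definition $\mathrm{ISNR} = 10\log_{10}\big(\|x - c\|^2 / \|x - \hat{x}\|^2\big)$, where $x$ is the original image, $c$ the degraded observation, and $\hat{x}$ the restored image. This definition is an assumption here, since the paper's own formula is not reproduced above; images are represented as flat lists of pixel values for simplicity.

```python
import math

def isnr(original, degraded, restored):
    """Improvement in signal-to-noise ratio (in dB) of `restored` over `degraded`."""
    num = sum((x - y) ** 2 for x, y in zip(original, degraded))
    den = sum((x - r) ** 2 for x, r in zip(original, restored))
    return 10.0 * math.log10(num / den)

# Toy example: the restoration error is ten times smaller than the degradation error.
value = isnr([1.0, 2.0, 3.0, 4.0],
             [2.0, 3.0, 4.0, 5.0],
             [1.1, 2.1, 3.1, 4.1])
```

A positive ISNR means the restored image is closer to the original than the degraded one, and a larger value indicates a better restoration.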

Figure 1.

Original test images. (a) Butterfly, (b) Lena, and (c) Pepper.

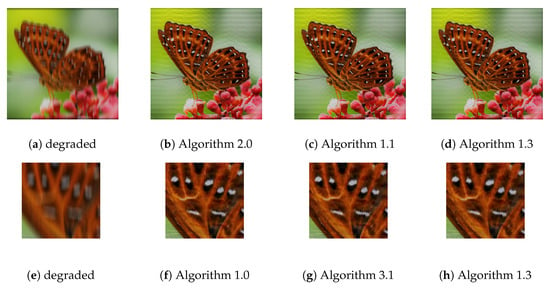

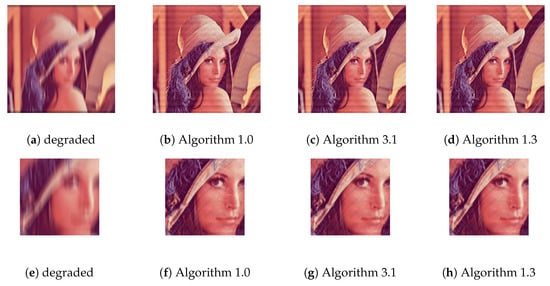

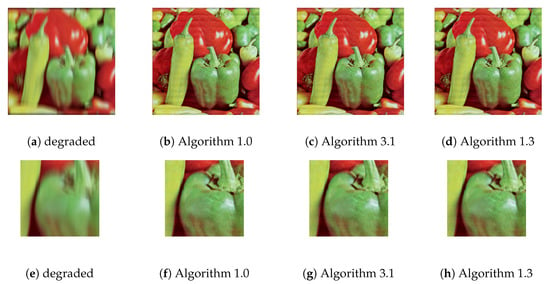

It can be seen from Figures 2–6 and Table 1 that the images recovered by the proposed Algorithm 1 had higher ISNR and SSIM values, which means that the quality of the images recovered by Algorithm 1 was better than that of the images recovered by the compared algorithms.

Figure 2.

Degraded and restored (a–d) Butterfly images and (e–h) enlarged Butterfly images by the various algorithms.

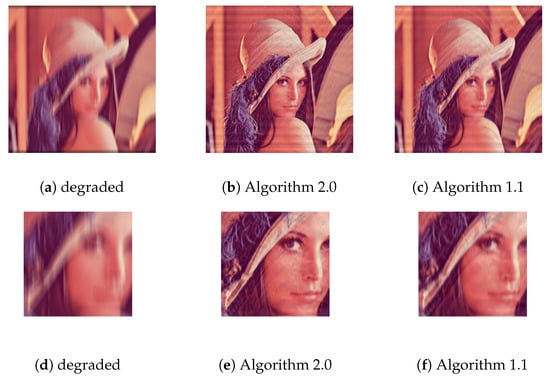

Figure 3.

Degraded and restored (a–d) Lena images and (e–h) enlarged Lena images by the various algorithms.

Figure 4.

Degraded and restored (a–d) Pepper images and (e–h) enlarged Pepper images by the various algorithms.

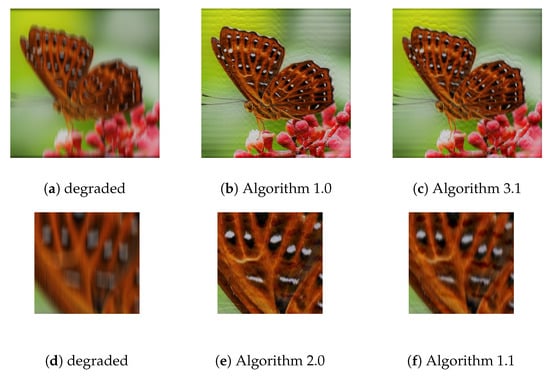

Figure 5.

Degraded and restored (a–c) Butterfly images and (d–f) enlarged Butterfly images by the various algorithms.

Figure 6.

Degraded and restored (a–c) Lena images and (d–f) enlarged Lena images by the various algorithms.

Table 1.

The ISNR and SSIM values of the compared algorithms.

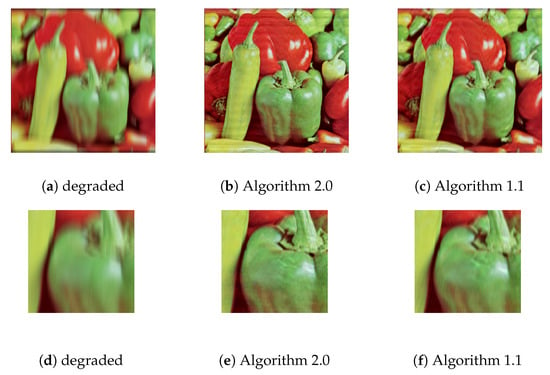

It can be observed from Figures 5–7 that the quality of the images restored by the modified algorithm was better than that of the images restored by the compared algorithm, and this is confirmed by the higher ISNR and SSIM values of Algorithm 2 in Table 2.

Figure 7.

Degraded and restored (a–c) Pepper images and (d–f) enlarged Pepper images by the various algorithms.

Table 2.

The ISNR and SSIM values of the compared algorithms.

5. Conclusions

A relaxed inertial self-adaptive Tseng-type method for solving the variational inclusion problem was proposed in this work. The scheme was derived from an explicit time discretization of a dynamical system. The main advantage of this scheme is that it combines an extrapolation step with a relaxation parameter, and the iterates generated by the proposed scheme converge weakly to a zero of the sum of a maximally monotone operator and a monotone operator. Furthermore, the proposed method does not require prior knowledge of the Lipschitz constant of the cost operator, and the inertial extrapolation step accelerates the convergence of the iterates. A modified scheme derived from the proposed method was given for solving the split feasibility problem. The application of the proposed methods to image recovery and the comparison with some existing state-of-the-art methods illustrated that the proposed methods are robust and efficient.

Author Contributions

Conceptualization, J.A. and A.H.I.; methodology, J.A.; software, A.P.; validation, J.A., P.K. and A.H.I.; formal analysis, J.A.; investigation, P.K.; resources, P.K.; data curation, A.P.; writing—original draft preparation, J.A.; writing—review and editing, J.A. and A.H.I.; visualization, A.P.; supervision, P.K.; project administration, P.K.; funding acquisition, P.K. All authors have read and agreed to the published version of the manuscript.

Funding

The authors acknowledge the financial support provided by the Center of Excellence in Theoretical and Computational Science (TaCS-CoE), Faculty of Science, KMUTT. The first and the third authors were supported by “the Petchra Pra Jom Klao Ph.D. Research Scholarship” from King Mongkut’s University of Technology Thonburi (Grant Nos. 38/2018 and 16/2018, respectively).

Conflicts of Interest

The authors declare no conflict of interest.

References

- Combettes, P.L.; Wajs, V.R. Signal recovery by proximal forward-backward splitting. Multiscale Model. Simul. 2005, 4, 1168–1200.

- Byrne, C. A unified treatment of some iterative algorithms in signal processing and image reconstruction. Inverse Probl. 2003, 20, 103.

- Byrne, C. Iterative oblique projection onto convex sets and the split feasibility problem. Inverse Probl. 2002, 18, 441.

- Hanjing, A.; Suantai, S. A Fast Image Restoration Algorithm Based on a Fixed Point and Optimization Method. Mathematics 2020, 8, 378.

- Thong, D.V.; Cholamjiak, P. Strong convergence of a forward–backward splitting method with a new step size for solving monotone inclusions. Comput. Appl. Math. 2019, 38, 94.

- Marcotte, P. Application of Khobotov’s algorithm to variational inequalities and network equilibrium problems. INFOR Inf. Syst. Oper. Res. 1991, 29, 258–270.

- Gibali, A.; Thong, D.V. Tseng type methods for solving inclusion problems and its applications. Calcolo 2018, 55, 49.

- Khobotov, E.N. Modification of the extra-gradient method for solving variational inequalities and certain optimization problems. USSR Comput. Math. Math. Phys. 1987, 27, 120–127.

- Kinderlehrer, D.; Stampacchia, G. An Introduction to Variational Inequalities and Their Applications; Academic Press: New York, NY, USA, 1980; Volume 31.

- Trémolières, R.; Lions, J.L.; Glowinski, R. Numerical Analysis of Variational Inequalities; North Holland: Amsterdam, The Netherlands, 2011.

- Baiocchi, C. Variational and quasivariational inequalities. In Applications to Free-boundary Problems; Springer: Basel, Switzerland, 1984.

- Konnov, I. Combined Relaxation Methods for Variational Inequalities; Springer Science & Business Media: Berlin/Heidelberg, Germany, 2001; Volume 495.

- Jaiboon, C.; Kumam, P. An extragradient approximation method for system of equilibrium problems and variational inequality problems. Thai J. Math. 2012, 7, 77–104.

- Kumam, W.; Piri, H.; Kumam, P. Solutions of system of equilibrium and variational inequality problems on fixed points of infinite family of nonexpansive mappings. Appl. Math. Comput. 2014, 248, 441–455.

- Chamnarnpan, T.; Phiangsungnoen, S.; Kumam, P. A new hybrid extragradient algorithm for solving the equilibrium and variational inequality problems. Afrika Matematika 2015, 26, 87–98.

- Deepho, J.; Kumam, W.; Kumam, P. A new hybrid projection algorithm for solving the split generalized equilibrium problems and the system of variational inequality problems. J. Math. Model. Algorithms Oper. Res. 2014, 13, 405–423.

- ur Rehman, H.; Kumam, P.; Cho, Y.J.; Yordsorn, P. Weak convergence of explicit extragradient algorithms for solving equilibirum problems. J. Inequal. Appl. 2019, 2019, 1–25.

- Lions, P.L.; Mercier, B. Splitting algorithms for the sum of two nonlinear operators. SIAM J. Numer. Anal. 1979, 16, 964–979.

- Passty, G.B. Ergodic convergence to a zero of the sum of monotone operators in Hilbert space. J. Math. Anal. Appl. 1979, 72, 383–390.

- Tseng, P. A modified forward-backward splitting method for maximal monotone mappings. SIAM J. Control Optim. 2000, 38, 431–446.

- Rockafellar, R.T. Monotone operators and the proximal point algorithm. SIAM J. Control Optim. 1976, 14, 877–898.

- Kitkuan, D.; Kumam, P.; Martínez-Moreno, J. Generalized Halpern-type forward–backward splitting methods for convex minimization problems with application to image restoration problems. Optimization 2019, 1–25.

- Kitkuan, D.; Kumam, P.; Martínez-Moreno, J.; Sitthithakerngkiet, K. Inertial viscosity forward–backward splitting algorithm for monotone inclusions and its application to image restoration problems. Int. J. Comput. Math. 2020, 97, 482–497.

- Huang, Y.; Dong, Y. New properties of forward–backward splitting and a practical proximal-descent algorithm. Appl. Math. Comput. 2014, 237, 60–68.

- Goldstein, A.A. Convex programming in Hilbert space. Bull. Am. Math. Soc. 1964, 70, 709–710.

- Padcharoen, A.; Kitkuan, D.; Kumam, W.; Kumam, P. Tseng methods with inertial for solving inclusion problems and application to image deblurring and image recovery problems. Comput. Math. Method 2020, e1088.

- Attouch, H.; Peypouquet, J.; Redont, P. Backward–forward algorithms for structured monotone inclusions in Hilbert spaces. J. Math. Anal. Appl. 2018, 457, 1095–1117.

- Dadashi, V.; Postolache, M. Forward–backward splitting algorithm for fixed point problems and zeros of the sum of monotone operators. Arab. J. Math. 2020, 9, 89–99.

- Suparatulatorn, R.; Khemphet, A. Tseng type methods for inclusion and fixed point problems with applications. Mathematics 2019, 7, 1175.

- Bauschke, H.H.; Combettes, P.L. Convex Analysis and Monotone Operator Theory in Hilbert Spaces; Springer: New York, NY, USA, 2011; Volume 408.

- Eckstein, J.; Bertsekas, D.P. On the Douglas–Rachford splitting method and the proximal point algorithm for maximal monotone operators. Math. Program. 1992, 55, 293–318.

- Polyak, B.T. Some methods of speeding up the convergence of iteration methods. USSR Comput. Math. Math. Phys. 1964, 4, 1–17.

- Nesterov, Y. A method for unconstrained convex minimization problem with the rate of convergence O(1/k^2). Doklady Ussr 1983, 269, 543–547.

- Alvarez, F. On the minimizing property of a second order dissipative system in Hilbert spaces. SIAM J. Control Optim. 2000, 38, 1102–1119.

- Alvarez, F.; Attouch, H. An inertial proximal method for maximal monotone operators via discretization of a nonlinear oscillator with damping. Set-Valued Anal. 2001, 9, 3–11.

- Dong, Q.; Cho, Y.; Zhong, L.; Rassias, T.M. Inertial projection and contraction algorithms for variational inequalities. J. Glob. Optim. 2018, 70, 687–704.

- Abubakar, J.; Sombut, K.; Ibrahim, A.H. An Accelerated Subgradient Extragradient Algorithm for Strongly Pseudomonotone Variational Inequality Problems. Thai J. Math. 2019, 18, 166–187.

- Van Hieu, D. An inertial-like proximal algorithm for equilibrium problems. Math. Methods Oper. Res. 2018, 1–17.

- Thong, D.V.; Van Hieu, D. Inertial extragradient algorithms for strongly pseudomonotone variational inequalities. J. Comput. Appl. Math. 2018, 341, 80–98.

- Abubakar, J.; Kumam, P.; Rehman, H.; Ibrahim, A.H. Inertial Iterative Schemes with Variable Step Sizes for Variational Inequality Problem Involving Pseudomonotone Operator. Mathematics 2020, 8, 609.

- Attouch, H.; Cabot, A. Convergence of a relaxed inertial proximal algorithm for maximally monotone operators. Math. Program. 2019.

- Attouch, H.; Cabot, A. Convergence rate of a relaxed inertial proximal algorithm for convex minimization. Optimization 2019.

- Attouch, H.; Cabot, A. Convergence of a Relaxed Inertial Forward–Backward Algorithm for Structured Monotone Inclusions. Appl. Math. Optim. 2019, 80, 547–598.

- Boţ, R.I.; Csetnek, E.R.; Hendrich, C. Inertial Douglas–Rachford splitting for monotone inclusion problems. Appl. Math. Comput. 2015, 256, 472–487.

- Iutzeler, F.; Hendrickx, J.M. A generic online acceleration scheme for optimization algorithms via relaxation and inertia. Optim. Methods Softw. 2019, 34, 383–405.

- Alves, M.M.; Marcavillaca, R.T. On inexact relative-error hybrid proximal extragradient, forward-backward and Tseng’s modified forward-backward methods with inertial effects. Set-Valued Variat. Anal. 2019, 1–25.

- Alves, M.M.; Eckstein, J.; Geremia, M.; Melo, J.G. Relative-error inertial-relaxed inexact versions of Douglas-Rachford and ADMM splitting algorithms. Comput. Optim. Appl. 2020, 75, 389–422.

- Xia, Y.; Wang, J. A general methodology for designing globally convergent optimization neural networks. IEEE Trans. Neural Netw. 1998, 9, 1331–1343.

- Lorenz, D.A.; Pock, T. An inertial forward-backward algorithm for monotone inclusions. J. Math. Imaging Vis. 2015, 51, 311–325.

- Takahashi, W. Nonlinear Functional Analysis-Fixed Point Theory and its Applications; Springer: New York, NY, USA, 2000.

- Brezis, H. Opérateurs Maximaux Monotones et Semi-Groupes de Contractions dans les Espaces de Hilbert; Elsevier: North Holland, The Netherlands, 1973.

- Ofoedu, E. Strong convergence theorem for uniformly L-Lipschitzian asymptotically pseudocontractive mapping in real Banach space. J. Math. Anal. Appl. 2006, 321, 722–728.

- Opial, Z. Weak convergence of the sequence of successive approximations for nonexpansive mappings. Bull. Am. Math. Soc. 1967, 73, 591–597.

- Censor, Y.; Elfving, T. A multiprojection algorithm using Bregman projections in a product space. Numer. Algorithms 1994, 8, 221–239.

- Censor, Y.; Elfving, T.; Kopf, N.; Bortfeld, T. The multiple-sets split feasibility problem and its applications for inverse problems. Inverse Probl. 2005, 21, 2071.

- Censor, Y.; Bortfeld, T.; Martin, B.; Trofimov, A. A unified approach for inversion problems in intensity-modulated radiation therapy. Phys. Med. Biol. 2006, 51, 2353.

- Moudafi, A.; Oliny, M. Convergence of a splitting inertial proximal method for monotone operators. J. Comput. Appl. Math. 2003, 155, 447–454.

- Iiduka, H.; Takahashi, W. Strong convergence theorems for nonexpansive nonself-mappings and inverse-strongly-monotone mappings. J. Convex Anal. 2004, 11, 69–79.

- Wang, Z.; Bovik, A.C.; Sheikh, H.R.; Simoncelli, E.P. Image quality assessment: From error visibility to structural similarity. IEEE Trans. Image Process. 2004, 13, 600–612.

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).