Treating Nonresponse in Probability-Based Online Panels through Calibration: Empirical Evidence from a Survey of Political Decision-Making Procedures

Abstract

1. Introduction

2. Proposed Calibration Strategies in Panel Surveys

2.1. Calibration in One Step

2.2. Calibration in Two Steps

- A two-step calibration method can be defined as follows.
  - Step 1: Adjusting the representativeness of the panel with respect to the population. Calibrating on auxiliary variables whose population totals are known yields calibration weights. These weights stay as close as possible to the panel weights while satisfying the population calibration restrictions. Each unit in the panel then has a weight that summarizes the auxiliary information obtained from the population.
  - Step 2: Adjusting for the non-response of the sample drawn from the panel. The panel auxiliary information is incorporated through a calibration estimator. Its starting weights are the design weights of the units in the panel, and its calibrated weights satisfy the calibration restrictions on the panel auxiliary variables. The final weights are obtained by multiplying the weights from both steps, and the proposed two-step calibration estimator uses these final weights.

- Following the same idea as in [22], a second procedure can be considered.
  - Step 1: Compute intermediate weights by calibrating the survey response set to the panel. These weights minimize the distance to the starting weights subject to the panel calibration restrictions. The calibrated weights obtained in this step are the intermediate weights.
  - Step 2: Use these intermediate weights as starting weights for calibration to the population, now subject to the population calibration restrictions. This yields the corresponding calibration estimator.

- Another alternative calibrates the survey response set to the sample.
  - Step 1: Calibrate the response set to the sample, subject to the sample-level calibration restrictions. The resulting calibrated weights are the intermediate weights.
  - Step 2: Using these intermediate weights, calibrate the sample to the population, subject to the population calibration restrictions. This yields the corresponding calibration estimator.
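The three two-step procedures above can be sketched in Python. This is a minimal illustration under stated assumptions, not the authors' implementation. It uses linear (chi-square distance) calibration in the sense of Deville and Särndal, solved in closed form. The panel is synthetic, with one population-level auxiliary variable `x` and one panel-level variable `z`. Panel base weights are assumed equal to N/M, the survey sample is assumed to be a simple random sample from the panel, and procedures 2 and 3 are assumed to start from weights N/m. In procedure 3, the second step is assumed to be applied to the response set carrying the intermediate weights. The helper `linear_calibration` and all variable names are hypothetical.

```python
import numpy as np


def linear_calibration(d, X, totals):
    """Linear (chi-square distance) calibration: w = d * (1 + X @ lam) with X.T @ w == totals."""
    T = (X * d[:, None]).T @ X                 # sum over units of d_k * x_k x_k'
    lam = np.linalg.solve(T, totals - X.T @ d)
    return d * (1.0 + X @ lam)


rng = np.random.default_rng(2020)
N, M, m = 100_000, 2_000, 600                  # hypothetical population, panel and survey-sample sizes

# Hypothetical panel: x has known population totals, z is observed for every panel member.
x_panel = rng.binomial(1, 0.55, size=M)
z_panel = rng.normal(size=M)
X = np.column_stack([np.ones(M), x_panel])     # population-level auxiliary vector (1, x)
Z = np.column_stack([np.ones(M), z_panel])     # panel-level auxiliary vector (1, z)
pop_totals = np.array([N, 0.45 * N])           # assumed population totals of (1, x)
d_panel = np.full(M, N / M)                    # assumed panel base weights

# Survey: simple random sample of m panel members, about half of whom respond.
s = rng.choice(M, size=m, replace=False)
r = s[rng.random(m) < 0.5]
y = 1.0 + 0.5 * z_panel[r] + rng.normal(size=r.size)  # study variable, observed for respondents only
d_r = np.full(r.size, N / m)                   # assumed starting weights for procedures 2 and 3

# Procedure 1: panel -> population; response set -> panel (from design weights M/m); multiply.
w_panel = linear_calibration(d_panel, X, pop_totals)
w_r = linear_calibration(np.full(r.size, M / m), Z[r], Z.sum(axis=0))
w1 = w_panel[r] * w_r

# Procedure 2: response set -> weighted panel totals of z, then intermediate weights -> population.
w_inter2 = linear_calibration(d_r, Z[r], Z.T @ d_panel)
w2 = linear_calibration(w_inter2, X[r], pop_totals)

# Procedure 3: response set -> weighted sample totals of z, then intermediate weights -> population.
w_inter3 = linear_calibration(d_r, Z[r], Z[s].T @ np.full(m, N / m))
w3 = linear_calibration(w_inter3, X[r], pop_totals)

estimates = {name: float(np.sum(w * y)) for name, w in (("procedure 1", w1), ("procedure 2", w2), ("procedure 3", w3))}
for name, total in estimates.items():
    print(f"{name}: estimated total of y = {total:,.0f}")
```

In procedures 2 and 3 the population step comes last, so their final weights reproduce the population totals of `x` exactly on the respondents. In procedure 1 the product weights need not do so.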

3. Simulation Study

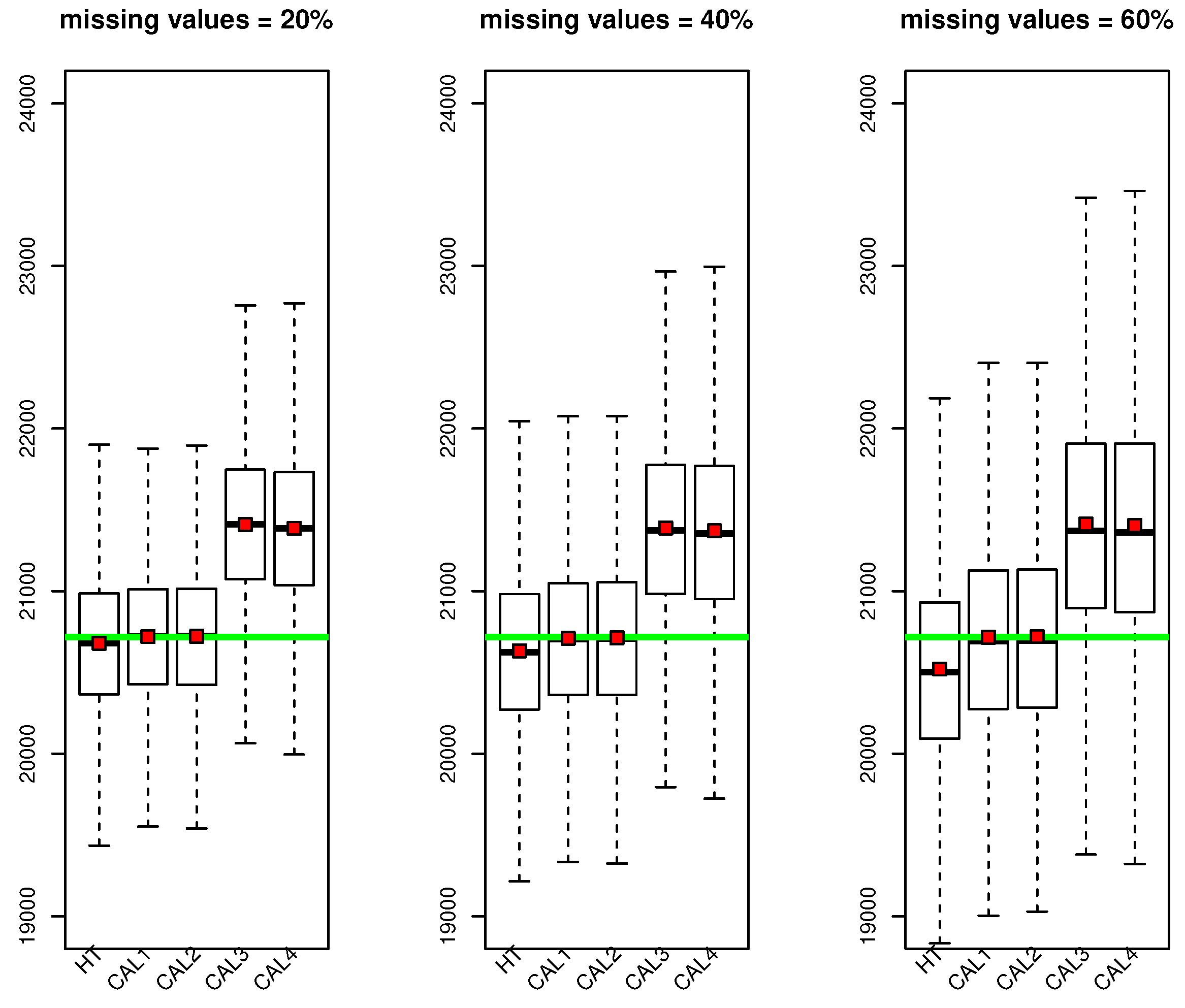

- The Horvitz–Thompson estimator is biased, and its non-response bias increases with the non-response rate. Estimators cal1 and cal2 perform best: they correct the bias very well even at the largest non-response rates and have the smallest variability. Under this MAR non-response scenario, the calibration estimators cal3 and cal4 fail to correct the non-response bias and are even more biased than the HT estimator. Note that the bias of the Horvitz–Thompson estimator is negative, whereas cal3 and cal4 have a positive bias.

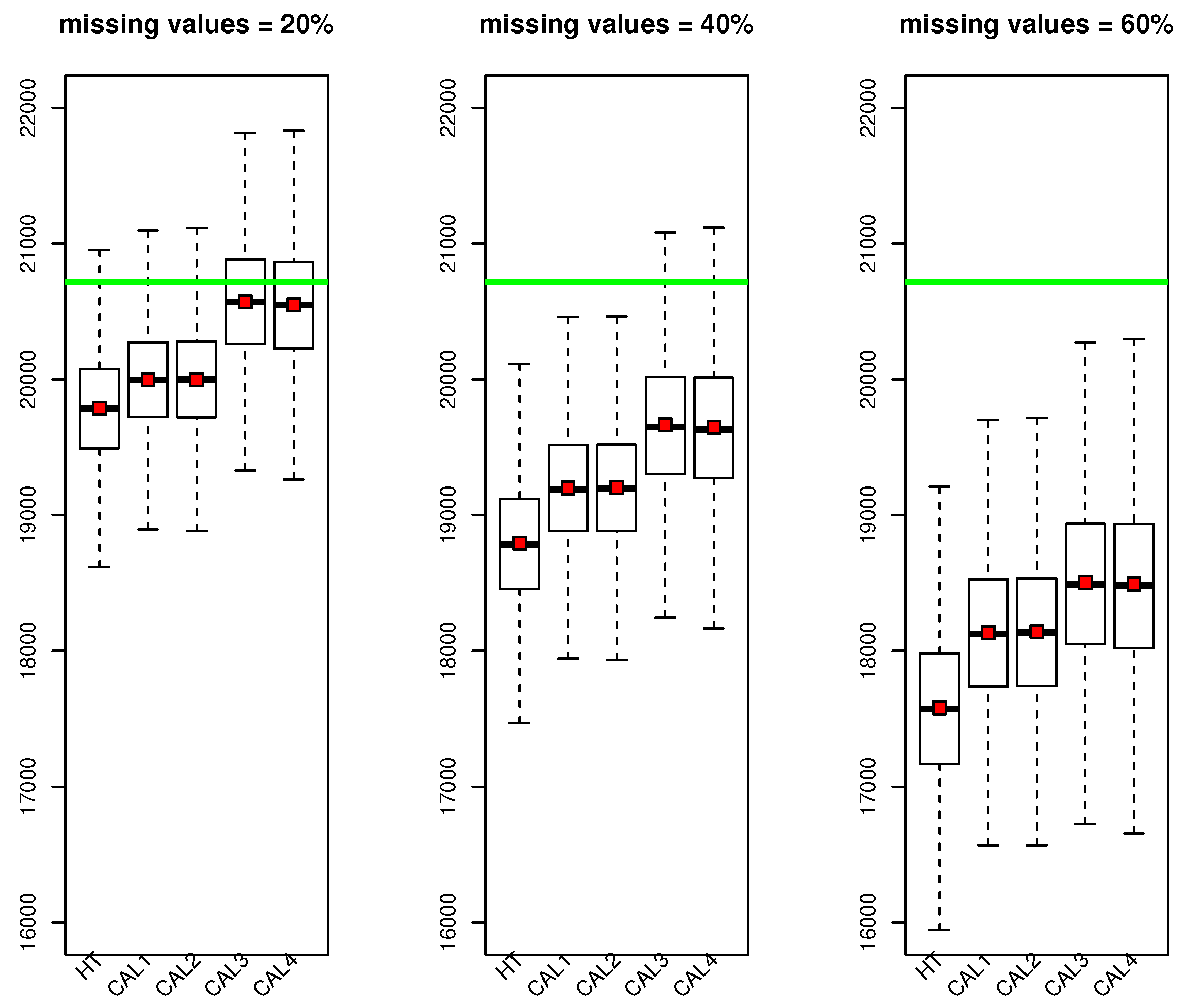

- The behavior of the estimators in the second scenario is very different. None of the estimators can correct the non-response bias, especially when the non-response rate is large (note that the green line falls off the graph at a 60% non-response rate). The cal3 and cal4 estimators are best placed to reduce the bias, although it remains very large at high non-response rates. This result was expected, since MNAR non-response is very difficult to correct.

- Estimators cal1 and cal2 behave similarly to each other, as do cal3 and cal4. Overall, cal1 and cal2 also show less variability than cal3 and cal4.
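The contrast between the MAR and MNAR scenarios can be reproduced in a deliberately simplified simulation. The sketch below is not the simulation design used in this study. It assumes a single-phase simple random sample with no panel and one auxiliary variable `x` with known population totals. The study variable is y = 2 + x + e, and response follows a logistic propensity that depends on `x` (MAR) or on the error term `e` (MNAR). It compares the unadjusted design-weighted respondent mean with a linear calibration estimator on (1, x). The function names and all parameter values are hypothetical.

```python
import numpy as np


def linear_calibration(d, X, totals):
    """Linear (chi-square distance) calibration: w = d * (1 + X @ lam) with X.T @ w == totals."""
    T = (X * d[:, None]).T @ X
    lam = np.linalg.solve(T, totals - X.T @ d)
    return d * (1.0 + X @ lam)


def simulate(mechanism, reps=200, N=10_000, n=1_000, seed=1):
    """Monte Carlo bias of the unadjusted and calibrated estimators of the mean of y."""
    if mechanism not in ("MAR", "MNAR"):
        raise ValueError("mechanism must be 'MAR' or 'MNAR'")
    rng = np.random.default_rng(seed)
    x = rng.normal(size=N)                     # auxiliary variable with known population totals
    e = rng.normal(size=N)                     # part of y not explained by x
    y = 2.0 + x + e
    X_pop = np.column_stack([np.ones(N), x])
    totals = X_pop.sum(axis=0)                 # (N, sum of x)
    driver = x if mechanism == "MAR" else e    # MAR: response depends on x; MNAR: on e
    p = 1.0 / (1.0 + np.exp(-(0.5 + driver)))  # logistic response propensity
    naive, calibrated = [], []
    for _ in range(reps):
        s = rng.choice(N, size=n, replace=False)  # simple random sample without replacement
        r = s[rng.random(n) < p[s]]               # respondents
        d = np.full(r.size, N / n)                # design weights
        naive.append(np.sum(d * y[r]) / np.sum(d))
        w = linear_calibration(d, X_pop[r], totals)
        calibrated.append(np.sum(w * y[r]) / N)
    return np.mean(naive) - y.mean(), np.mean(calibrated) - y.mean()


for mechanism in ("MAR", "MNAR"):
    bias_naive, bias_cal = simulate(mechanism)
    print(f"{mechanism}: bias unadjusted = {bias_naive:+.3f}, bias calibrated = {bias_cal:+.3f}")
```

Under MAR, calibrating on `x` removes essentially all of the bias, because response depends only on the calibration variable. Under MNAR, the bias driven by `e` cannot be removed by calibrating on `x`.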

4. Application to the PACIS Survey on Andalusian Citizen Preferences for Political Decision-Making Processes

4.1. The Dataset

4.2. Nonresponse in PACIS Surveys

4.3. Main and Auxiliary Variables in the PACIS

4.4. Results

- Survey results after applying calibration weights are different from unweighted results.

- The estimates and their errors differ only slightly between the one-step and two-step calibration estimators.

- The one-step calibration estimator cal1 comes close in RMSE to cal2. The two-step calibration estimators cal3 and cal4 also give similar results to each other.

- No single estimator always has the lowest RMSE.
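The last point can be checked against the RMSE values reported for the question "How Interested Would You Say You Are in Politics?". The short Python snippet below uses values transcribed from that table. It selects the estimator with the smallest RMSE for each response category. The dictionary layout is purely illustrative.

```python
# RMSE (%) reported for "How interested would you say you are in politics?"
rmse = {
    "Extremely":  {"ht": 1.53, "cal1": 1.28, "cal2": 1.39, "cal3": 1.28, "cal4": 1.27},
    "Very":       {"ht": 2.54, "cal1": 2.16, "cal2": 2.18, "cal3": 2.05, "cal4": 2.03},
    "Moderately": {"ht": 1.95, "cal1": 1.85, "cal2": 1.84, "cal3": 1.77, "cal4": 1.78},
    "Slightly":   {"ht": 1.96, "cal1": 1.91, "cal2": 1.76, "cal3": 1.59, "cal4": 1.61},
    "Not at all": {"ht": 1.86, "cal1": 1.55, "cal2": 1.50, "cal3": 1.36, "cal4": 1.39},
}

# Estimator with the smallest RMSE in each response category.
best = {category: min(row, key=row.get) for category, row in rmse.items()}
for category, estimator in best.items():
    print(f"{category:<11} lowest RMSE: {estimator}")
```

For this question, the minimum alternates between cal3 and cal4, and ht has the largest RMSE in every category.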

5. Discussion

6. Conclusions

Author Contributions

Funding

Conflicts of Interest

References

- ESOMAR; Global Market Research: Amsterdam, The Netherlands, 2016.

- Peytchev, A.; Carley-Baxter, L.R.; Black, M.C. Multiple Sources of Nonobservation Error in Telephone Surveys: Coverage and Nonresponse. Sociol. Methods Res. 2011, 40, 138–168.

- Eckman, S.; Kreuter, F. Undercoverage Rates and Undercoverage Bias in Traditional Housing Unit Listing. Sociol. Methods Res. 2013, 42, 264–293.

- Gummer, T. Assessing Trends and Decomposing Change in Nonresponse Bias: The Case of Bias in Cohort Distributions. Sociol. Methods Res. 2017.

- De Leeuw, E. Counting and Measuring Online: The Quality of Internet Surveys. Bull. Sociol. Methodol. Methodol. Sociol. 2012, 114, 68–78.

- Dixon, J.; Tucker, C. Survey Nonresponse. In Handbook of Survey Research; Marsden, P., Wright, J., Eds.; Emerald Group Publishing: Bingley, UK, 2010; pp. 593–630.

- Tucker, C.; Lepkowski, J. Telephone Survey Methods: Adapting to Change. In Advances in Telephone Survey Methodology; Lepkowski, J., Tucker, C., Brick, J., de Leeuw, E., Japec, L., Lavrakas, P., Sangster, R., Eds.; Wiley-Interscience: Hoboken, NJ, USA, 2008; pp. 3–26.

- Vehovar, V.; Berzelak, N.; Lozar Manfreda, K. Mobile Phones in an Environment of Competing Survey Modes: Applying Metric for Evaluation of Costs and Errors. Soc. Sci. Comput. Rev. 2010, 28, 303–318.

- Lu, Y.; Chen, Z.; Law, R. Mapping the progress of social media research in hospitality and tourism management from 2004 to 2014. J. Travel Tour. Mark. 2018, 35, 102–118.

- Manca, M.; Boratto, L.; Roman, V.M.; i Gallissà, O.M.; Kaltenbrunner, A. Using social media to characterize urban mobility patterns: State-of-the-art survey and case-study. Online Soc. Netw. Media 2017, 1, 56–69.

- Sinnenberg, L.; Buttenheim, A.M.; Padrez, K.; Mancheno, C.; Ungar, L.; Merchant, R.M. Twitter as a tool for health research: A systematic review. Am. J. Public Health 2017, 107, e1–e8.

- Li, R.; Crowe, J.; Leifer, D.; Zou, L.; Schoof, J. Beyond big data: Social media challenges and opportunities for understanding social perception of energy. Energy Res. Soc. Sci. 2019, 56, 101217.

- Japec, L.; Kreuter, F.; Berg, M.; Biemer, P.; Decker, P.; Lampe, C.; Usher, A. Big data in survey research: AAPOR task force report. Public Opin. Q. 2015, 79, 839–880.

- Callegaro, M.; Manfreda, K.L.; Vehovar, V. Web Survey Methodology; SAGE: Thousand Oaks, CA, USA, 2015.

- Fricker, R.D.; Schonlau, M. Advantages and Disadvantages of Internet Research Surveys: Evidence from the Literature. Field Methods 2002, 14, 347–367.

- Tsetsi, E.; Rains, S.A. Smartphone Internet access and use: Extending the digital divide and usage gap. Mob. Media Commun. 2017, 5, 239–255.

- Lee, M.H. Statistical Methods for Reducing Bias in Web Surveys. Ph.D. Thesis, Simon Fraser University, Burnaby, BC, Canada, 2011. Available online: http://summit.sfu.ca/item/11783 (accessed on 15 November 2019).

- DiSogra, C.; Callegaro, M. Metrics and Design Tool for Building and Evaluating Probability-Based Online Panels. Soc. Sci. Comput. Rev. 2016, 34, 26–40.

- Callegaro, M.; Baker, R.; Bethlehem, J.; Göritz, A.S.; Krosnick, J.A.; Lavrakas, P.J. Online panel research: History, concepts, applications and a look at the future, Chapter 1. In Online Panel Research: A Data Quality Perspective; Callegaro, M., Baker, R., Bethlehem, J., Göritz, A.S., Krosnick, J.A., Lavrakas, P.J., Eds.; John Wiley & Sons, Ltd: Chichester, UK, 2014; pp. 1–22.

- Blom, A.G.; Bosnjak, M.; Cornilleau, A.; Cousteaux, A.-S.; Das, M.; Douhou, S.; Krieger, U. A Comparison of Four Probability-Based Online and Mixed-Mode Panels in Europe. Soc. Sci. Comput. Rev. 2016, 34, 8–25.

- Cheng, A.; Zamarro, G.; Orriens, B. Personality as a predictor of unit non-response in an internet panel. Sociol. Methods Res. 2018.

- Andersson, P.; Särndal, C.E. Calibration for non-response treatment: In one or two steps? Stat. J. IAOS 2014, 32, 375–381.

- Särndal, C.E.; Lundström, S. Estimation in Surveys with Nonresponse; John Wiley and Sons: New York, NY, USA, 2005.

- Särndal, C.E.; Lundström, S. Assessing auxiliary vectors for control of non-response bias in the calibration estimator. J. Off. Stat. 2008, 4, 251–260.

- Särndal, C.E.; Lundström, S. Design for estimation: Identifying auxiliary vectors to reduce non-response bias. Surv. Methodol. 2010, 36, 131–144.

- Kott, P.S. Using Calibration Weighting to Adjust for Nonresponse and Coverage Errors. Surv. Methodol. 2006, 32, 133–142.

- Kott, P.S.; Liao, D. Providing double protection for unit non-response with a nonlinear calibration-weighting routine. Surv. Res. Methods 2012, 6, 105–111.

- Kott, P.S.; Liao, D. One step or two? Calibration weighting from a complete list frame with non-response. Surv. Methodol. 2015, 41, 165–181.

- Bethlehem, J.; Callegaro, M. Introduction to Part IV. In Online Panel Research: A Data Quality Perspective; Callegaro, M., Baker, R., Bethlehem, J., Göritz, A.S., Krosnick, J.A., Lavrakas, P.J., Eds.; John Wiley & Sons, Ltd: Chichester, UK, 2014; pp. 263–272.

- Särndal, C.E. The calibration approach in survey theory and practice. Surv. Methodol. 2007, 33, 99–119.

- Arcos, A.; Rueda, M.; Trujillo, M.; Molina, D. Review of estimation methods for landline and cell phone surveys. Sociol. Methods Res. 2015, 44, 458–485.

- Arcos, A.; Contreras, J.M.; Rueda, M. A novel calibration estimator in social surveys. Sociol. Methods Res. 2014, 43, 465–489.

- Deville, J.C.; Särndal, C.E. Calibration estimators in survey sampling. J. Am. Stat. Assoc. 1992, 87, 376–382.

- Devaud, D.; Tillé, Y. Deville and Särndal’s calibration: Revisiting a 25-years-old successful optimization problem. TEST 2019.

- Estevao, V.M.; Särndal, C.E. A functional form approach to calibration. J. Off. Stat. 2000, 16, 379–399.

- Särndal, C.E. Methods for estimating the precision of survey estimates when imputation has been used. Surv. Methodol. 1992, 18, 241–252.

- Estevao, V.M.; Särndal, C.E. Survey Estimates by Calibration on Complex Auxiliary Information. Internat. Statist. Rev. 2006, 74, 127–147.

- Alfons, A.; Templ, M.; Filzmoser, P. An Object-Oriented Framework for Statistical Simulation: The R Package simFrame. J. Stat. Softw. 2010, 37, 1–36.

- Templ, M.; Meindl, B.; Kowarik, A.; Dupriez, O. Simulation of Synthetic Complex Data: The R Package simPop. J. Stat. Softw. 2017, 79, 1–38.

- Alarcón, P.; Font, J.; Fernández, J. Tell me what you trust and what you think about political actors and I will tell you what democracy you prefer. In XIII AECPA Conference. “La fortaleza de Europa: Vallas y puentes”; Universidad de Santiago de Compostela: Santiago de Compostela, Galicia, 2017.

- Verma, V.; Betti, G.; Ghellini, G. Cross-sectional and longitudinal weighting in a rotational household panel: Applications to EU-SILC. Stat. Transit. 2007, 8, 5–50.

- ESS Round 8: European Social Survey Round 8 Data; Data file edition 2.1; Data Archive and distributor of ESS data for ESS ERIC; Norwegian Centre for Research Data for ESS ERIC: Bergen, Norway, 2016.

- Font, J.; Wojcieszak, M.; Navarro, C.J. Participation, Representation and Expertise: Citizen Preferences for Political Decision-Making Processes. Political Stud. 2015, 63, 153–172.

- Rizzo, L.; Kalton, G.; Brick, J.M. A comparison of some weighting adjustment methods for panel non-response. Surv. Methodol. 1996, 22, 43–53.

- Mercer, A.; Lau, A.; Kennedy, C. For Weighting Online Opt-In Samples, What Matters Most? Pew Res. Center. 2018, 2018, 28.

| Final disposition | n |
|---|---|
| Interview | 2803 |
| Valid registration after postal contact (online or hotline phone) | 186 |
| Valid registration after F2F contact (visits to households) | 2617 |
| Eligible, non-interview | 5886 |
| Refusals | 5474 |
| Contacted, visit postponed | 378 |
| Language barriers | 34 |
| Unknown eligibility | 6123 |
| No answer | 5690 |
| Unable to reach / Unsafe area | 308 |
| Unable to locate address | 125 |
| Not eligible | 2345 |
| Non-residence | 866 |
| Vacant housing unit | 1479 |
| Total of units in the sample | 17,157 |
| Response Rate RR1 | 18.9% |
| Response Rate RR3 | 21.6% |
| Refusal Rate RF1 | 39.7% |
| Cooperation Rate COOP2 | 32.3% |
| Contact Rate CON2 | 64.3% |

| Final disposition | n |
|---|---|
| Interview | 1081 |
| Conducted online | 623 |
| Conducted by CATI | 458 |
| Eligible, non-interview | 911 |
| Refusals | 812 |
| Break-off / implicit refusal (internet surveys) | 64 |
| Logged on to survey, did not complete any item | 33 |
| Telephone answering device (confirming HH) | 2 |
| Unknown eligibility | 254 |
| No answer | 254 |
| Not eligible | 152 |
| Fax/Data line | 2 |
| Phone number doesn’t match with panelist | 150 |
| Total of units in the sample | 2398 |
| Response Rate RR1 | 48.1% |
| Response Rate RR3 | 48.5% |
| Refusal Rate RF1 | 40.5% |
| Cooperation Rate COOP2 | 54.3% |
| Contact Rate CON2 | 89.3% |
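The RR1 and COOP2 values in the two disposition tables above follow the AAPOR standard definitions and can be recomputed from the counts. The Python sketch below assumes no partial interviews. It treats every "eligible, non-interview" case as a contacted refusal or other non-interview (R + O), which is the grouping that reproduces the reported figures. The function and dictionary names are illustrative.

```python
def rr1(interviews, eligible_noninterviews, unknown_eligibility, partials=0):
    """AAPOR Response Rate 1: I / ((I + P) + (R + NC + O) + (UH + UO))."""
    return interviews / (interviews + partials + eligible_noninterviews + unknown_eligibility)


def coop2(interviews, contacted_noninterviews, partials=0):
    """AAPOR Cooperation Rate 2: (I + P) / ((I + P) + R + O)."""
    return (interviews + partials) / (interviews + partials + contacted_noninterviews)


# Counts transcribed from the two disposition tables (no partial interviews reported).
tables = {
    "first table": {"I": 2803, "eligible_nonint": 5886, "unknown": 6123},
    "second table": {"I": 1081, "eligible_nonint": 911, "unknown": 254},
}
for name, c in tables.items():
    rate1 = rr1(c["I"], c["eligible_nonint"], c["unknown"])
    coop = coop2(c["I"], c["eligible_nonint"])  # assumes all eligible non-interviews were contacted (R + O)
    print(f"{name}: RR1 = {rate1:.1%}, COOP2 = {coop:.1%}")
```

Both rates match the published values: 18.9% and 32.3% in the first table, and 48.1% and 54.3% in the second.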

| Sample distribution | Panel members | Diff. with population (pp) | 2nd wave respondents | Diff. with population (pp) | Population |
|---|---|---|---|---|---|
| Women | 57.1% | +6.0 | 49.2% | −1.9 | 51.1% |
| Over 60 years old | 16.9% | −9.0 | 19.1% | −6.8 | 25.9% |
| Residence | | | | | |
| Málaga | 14.7% | −4.7 | 15.6% | −3.8 | 19.4% |
| Granada | 14.5% | +3.6 | 12.3% | +1.4 | 10.9% |
| Education level | | | | | |
| Up to primary | 19.9% | −8.1 | 13.3% | −14.7 | 28.0% |
| Secondary | 58.0% | +8.4 | 59.5% | +9.9 | 49.6% |
| Tertiary | 22.2% | −0.2 | 27.2% | +4.8 | 22.4% |
| Labor status | | | | | |
| Employed | 37.4% | −1.6 | 38.2% | −0.8 | 39.0% |
| Unemployed | 30.6% | +10.3 | 33.7% | +13.4 | 20.3% |
| Economically inactive | 32.0% | −8.7 | 28.1% | −12.6 | 40.7% |
| Foreign nationality | 3.8% | −2.9 | 2.6% | −4.1 | 7.9% |

| | Primary | Secondary | Higher | Primary | Secondary | Higher | |
|---|---|---|---|---|---|---|---|
| Men | | | | | | | |
| 16–29 | 71,968 | 537,814 | 59,433 | 35 | 217 | 50 | |
| 30–44 | 91,673 | 702,591 | 194,410 | 40 | 310 | 143 | |
| 45–59 | 197,901 | 586,726 | 126,083 | 58 | 239 | 77 | |
| >60 | 471,729 | 231,988 | 97,686 | 119 | 94 | 52 | |
| Women | | | | | | | |
| 16–29 | 44,609 | 487,020 | 112,122 | 44 | 211 | 88 | |
| 30–44 | 78,176 | 619,471 | 272,774 | 45 | 466 | 210 | |
| 45–59 | 194,971 | 572,750 | 149,620 | 121 | 322 | 94 | |
| >60 | 681,508 | 224,651 | 72,158 | 204 | 82 | 28 | |
| Labor status | | | | | | | |
| Working | Retired | Unemployed | Student | Housework | | | |
| 37.41 | 16.12 | 30.58 | 7.46 | 8.42 | | | |
| Province | | | | | | | |
| Almería | Cádiz | Córdoba | Granada | Huelva | Jaén | Málaga | Sevilla |
| 7.67 | 14.72 | 9.67 | 14.51 | 8.33 | 8.33 | 14.66 | 22.10 |
| PC | Net | Land phone | Cellular phone | | | | |
| % of owners | | | | | | | |
| 78.05 | 79.1 | 57.96 | 37.12 | 78.59 | | | |

How Interested Would You Say You Are in Politics?

| Response | Est. ht | Est. cal1 | Est. cal2 | Est. cal3 | Est. cal4 | RMSE(%) ht | RMSE(%) cal1 | RMSE(%) cal2 | RMSE(%) cal3 | RMSE(%) cal4 |
|---|---|---|---|---|---|---|---|---|---|---|
| Extremely | 17.02 | 14.63 | 15.05 | 14.88 | 14.71 | 1.53 | 1.28 | 1.39 | 1.28 | 1.27 |
| Very | 33.30 | 30.04 | 30.03 | 31.39 | 31.05 | 2.54 | 2.16 | 2.18 | 2.05 | 2.03 |
| Moderately | 27.34 | 27.02 | 26.85 | 27.04 | 27.07 | 1.95 | 1.85 | 1.84 | 1.77 | 1.78 |
| Slightly | 14.79 | 17.50 | 17.20 | 16.55 | 16.84 | 1.96 | 1.91 | 1.76 | 1.59 | 1.61 |
| Not at all | 7.55 | 10.82 | 10.86 | 10.13 | 10.33 | 1.86 | 1.55 | 1.50 | 1.36 | 1.39 |

How Would You Like Political Decisions to Be Made in ...?

| Estimator | Spain Est. | Spain RMSE | Andalusia Est. | Andalusia RMSE | Municipality Est. | Municipality RMSE |
|---|---|---|---|---|---|---|
| ht | 4.59 | 0.19 | 4.35 | 0.19 | 4.28 | 0.19 |
| cal1 | 4.53 | 0.17 | 4.41 | 0.17 | 4.27 | 0.17 |
| cal2 | 4.53 | 0.17 | 4.45 | 0.17 | 4.30 | 0.17 |
| cal3 | 4.54 | 0.15 | 4.45 | 0.15 | 4.32 | 0.16 |
| cal4 | 4.55 | 0.15 | 4.46 | 0.15 | 4.32 | 0.16 |

We Would Like You to Rate the Following Ways of Making Political Decisions in Andalusia.

| Estimator | Elections | Consult to Experts | Assemblies | Allow Experts | Referendums | Political Leaders |
|---|---|---|---|---|---|---|
| ht (Est.) | 7.34 | 7.10 | 6.40 | 6.44 | 5.88 | 4.30 |
| ht (RMSE) | 0.30 | 0.29 | 0.25 | 0.29 | 0.23 | 0.19 |
| cal1 (Est.) | 7.33 | 7.03 | 6.70 | 6.51 | 5.90 | 4.28 |
| cal1 (RMSE) | 0.24 | 0.22 | 0.24 | 0.22 | 0.22 | 0.18 |
| cal2 (Est.) | 7.27 | 7.05 | 6.70 | 6.57 | 5.87 | 4.29 |
| cal2 (RMSE) | 0.23 | 0.22 | 0.23 | 0.23 | 0.21 | 0.18 |
| cal3 (Est.) | 7.27 | 7.07 | 6.63 | 6.50 | 5.84 | 4.25 |
| cal3 (RMSE) | 0.21 | 0.20 | 0.20 | 0.19 | 0.19 | 0.16 |
| cal4 (Est.) | 7.27 | 7.07 | 6.64 | 6.51 | 5.84 | 4.26 |
| cal4 (RMSE) | 0.21 | 0.20 | 0.20 | 0.20 | 0.19 | 0.16 |

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Arcos, A.; Rueda, M.d.M.; Pasadas-del-Amo, S. Treating Nonresponse in Probability-Based Online Panels through Calibration: Empirical Evidence from a Survey of Political Decision-Making Procedures. Mathematics 2020, 8, 423. https://doi.org/10.3390/math8030423
