Abstract

The spectral conjugate gradient method (SCGM) is an important generalization of the conjugate gradient method (CGM), and it is also one of the effective numerical methods for large-scale unconstrained optimization. Designing the spectral parameter and the conjugate parameter is the core task in an SCGM, and the aim of this paper is to propose a new and effective choice for these two parameters. First, motivated by the strong Wolfe line search requirement, we design a new spectral parameter. Second, we propose a hybrid conjugate parameter. Generated in this way, the two parameters ensure that the search directions always possess the descent property without depending on any line search rule. As a result, a new SCGM with the standard Wolfe line search is proposed. Under usual assumptions, the global convergence of the proposed SCGM is proved. Finally, the proposed SCGM is compared with existing SCGMs and CGMs in extensive numerical experiments on 108 test instances, with dimensions from 2 to 1,000,000, taken from the CUTE library and other classic test collections. The detailed results and their corresponding performance profiles are reported, which show that the proposed SCGM is effective and promising.

1. Introduction

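The conjugate gradient method (CGM) is one of the prevailing classes of methods for solving large-scale optimization problems, since it has simple iterations, fast convergence and low memory requirements. In this paper, we consider the following unconstrained optimization problem:

$$\min_{x \in \mathbb{R}^n} f(x), \tag{1}$$

where $f:\mathbb{R}^n \to \mathbb{R}$ is a continuously differentiable function and its gradient is denoted by $g(x) = \nabla f(x)$. The iterates of the classical CGM can be formulated as

$$x_{k+1} = x_k + \alpha_k d_k \tag{2}$$

and

$$d_k = \begin{cases} -g_k, & k = 1, \\ -g_k + \beta_k d_{k-1}, & k \ge 2, \end{cases} \tag{3}$$

where $g_k = g(x_k)$, $d_k$ is the search direction, $\beta_k$ is the so-called conjugate parameter, and $\alpha_k$ is the steplength, which is obtained by a suitable exact or inexact line search. However, because of the high cost of the exact line search, $\alpha_k$ is usually generated by an inexact line search, such as the Wolfe line search

$$f(x_k + \alpha_k d_k) \le f(x_k) + \delta \alpha_k g_k^{T} d_k, \qquad g(x_k + \alpha_k d_k)^{T} d_k \ge \sigma g_k^{T} d_k, \tag{4}$$

or the strong Wolfe line search

$$f(x_k + \alpha_k d_k) \le f(x_k) + \delta \alpha_k g_k^{T} d_k, \qquad |g(x_k + \alpha_k d_k)^{T} d_k| \le \sigma |g_k^{T} d_k|. \tag{5}$$

The parameters $\delta$ and $\sigma$ above are generally required to satisfy $0 < \delta < \sigma < 1$. As is well known, different choices of $\beta_k$ generate different CGMs. The most well-known CGMs are the Hestenes-Stiefel (HS, 1952) method [1], the Fletcher-Reeves (FR, 1964) method [2], the Polak-Ribière-Polyak (PRP, 1969) method [3,4] and the Dai-Yuan (DY, 1999) method [5], where the corresponding formulas for $\beta_k$ are

$$\beta_k^{HS} = \frac{g_k^{T} y_{k-1}}{d_{k-1}^{T} y_{k-1}}, \quad \beta_k^{FR} = \frac{\|g_k\|^2}{\|g_{k-1}\|^2}, \quad \beta_k^{PRP} = \frac{g_k^{T} y_{k-1}}{\|g_{k-1}\|^2}, \quad \beta_k^{DY} = \frac{\|g_k\|^2}{d_{k-1}^{T} y_{k-1}},$$

respectively, where $y_{k-1} = g_k - g_{k-1}$ and $\|\cdot\|$ is the standard Euclidean norm. These four methods are often referred to as the classical CGMs. Under the corresponding assumptions, the authors analysed the convergence properties and tested the numerical performance of the four CGMs in References [2,3,4,5,6], respectively.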
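As an illustration of how the four classical conjugate parameters differ in practice, the following minimal sketch evaluates the HS, FR, PRP and DY formulas in their standard textbook forms, assuming the usual gradient difference $y_{k-1} = g_k - g_{k-1}$. The function names `dot` and `classical_betas` and the sample vectors are hypothetical and chosen only for this example.

```python
def dot(u, v):
    """Euclidean inner product of two equal-length sequences."""
    return sum(a * b for a, b in zip(u, v))


def classical_betas(g_new, g_old, d_old):
    """Return the HS, FR, PRP and DY conjugate parameters.

    g_new: gradient at the new iterate (g_k)
    g_old: gradient at the previous iterate (g_{k-1})
    d_old: previous search direction (d_{k-1})
    """
    y = [a - b for a, b in zip(g_new, g_old)]  # y_{k-1} = g_k - g_{k-1}
    gy = dot(g_new, y)        # g_k^T y_{k-1}
    dy = dot(d_old, y)        # d_{k-1}^T y_{k-1}
    gn2 = dot(g_new, g_new)   # ||g_k||^2
    go2 = dot(g_old, g_old)   # ||g_{k-1}||^2
    return {
        "HS": gy / dy,
        "FR": gn2 / go2,
        "PRP": gy / go2,
        "DY": gn2 / dy,
    }


# Made-up data: previous direction is steepest descent, d_{k-1} = -g_{k-1}.
betas = classical_betas(g_new=[0.2, 0.5], g_old=[1.0, 0.0], d_old=[-1.0, 0.0])
for name, value in betas.items():
    print(name, value)
```

HS and PRP share the numerator $g_k^{T} y_{k-1}$, while FR and DY share $\|g_k\|^2$, so the four values generally differ as soon as the line search is inexact.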

It is well known that the FR and DY methods possess nice convergence properties, but their numerical performance is not so good. Conversely, the PRP and HS methods perform excellently in practical computation, but their convergence properties are hard to establish. To overcome these shortcomings of the classical CGMs, many researchers have devoted great attention to improving them. As a result, many improved CGMs with excellent theoretical properties and numerical performance have been proposed, for example, in References [7,8,9,10,11,12,13,14,15,16,17,18,19,20]. Among them, the spectral conjugate gradient method (SCGM) proposed by Birgin and Martínez [12] can be seen as an important development of the CGM. The main difference between the SCGM and the CGM lies in the computation of the search direction. The search direction of an SCGM is usually generated as follows:

$$d_k = \begin{cases} -g_k, & k = 1, \\ -\theta_k g_k + \beta_k d_{k-1}, & k \ge 2, \end{cases} \tag{6}$$

where $\theta_k$ is a spectral parameter. Obviously, for an SCGM, the selection of the spectral parameter $\theta_k$ and the conjugate parameter $\beta_k$ is the core work.
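To make the role of the spectral parameter concrete, the sketch below builds a direction of the usual spectral form, a scaled negative gradient plus a multiple of the previous direction, and checks the descent condition (negative inner product with the gradient). The names `spectral_direction` and `is_descent`, the symbols `theta` and `beta`, and the numbers are assumptions made for this illustration; they are not the parameter rules proposed in this paper.

```python
def dot(u, v):
    """Euclidean inner product of two equal-length sequences."""
    return sum(a * b for a, b in zip(u, v))


def spectral_direction(g, d_prev, theta, beta):
    """Spectral CG direction: d = -theta * g + beta * d_prev."""
    return [-theta * gi + beta * di for gi, di in zip(g, d_prev)]


def is_descent(g, d):
    """A direction d is a descent direction at a point with gradient g if g^T d < 0."""
    return dot(g, d) < 0


g = [0.2, 0.5]          # current gradient (made-up)
d_prev = [-1.0, 0.0]    # previous direction (made-up)
beta = 0.29             # some conjugate parameter (here the FR value for this data)

for theta in (1.0, 2.0):
    d = spectral_direction(g, d_prev, theta, beta)
    # g^T d = -theta * ||g||^2 + beta * g^T d_prev
    print(theta, d, dot(g, d), is_descent(g, d))
```

With `theta = 1.0` the direction reduces to the classical CG direction. Since $g^{T} d = -\theta \|g\|^2 + \beta\, g^{T} d_{\text{prev}}$, a larger `theta` makes the inner product more negative for the same `beta`.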

In Reference [12], after giving the concrete conjugate parameter , Birgin and Martinez required the spectral search direction yielded by (6) satisfying (a special sufficient descent property), and then obtained the corresponding spectral parameter:

Under a suitable line search, the SCGM defined by (2), (6) and (7) outperforms the PRP CGM, the FR CGM and the Perry method [21].

Generating the conjugate parameter by a modified DY formula, Andrei [13] considered two approaches to generate the spectral parameter, one based on the Newton direction and the other on the quasi-Newton equation together with the conjugacy condition, namely,

and

Both resulting SCGMs satisfy the sufficient descent condition without depending on any line search, and are globally convergent with the Wolfe line search (4). Also, the numerical results show that the SCGM associated with is more encouraging.

Recently, by requiring the spectral direction defined by (6) satisfying the special sufficient descent condition for general , Liu et al. [15] proposed a class of choice for as follows:

Under the conventional assumptions and request as well as the Wolfe line search (4), the SCGM developed by is global convergent, and implemented with good computation performance.

On the other hand, Jiang and Jian [19] improved both the FR method and the DY method by utilizing the strong Wolfe line search (5), and obtained good numerical results. As a result, two schemes for the conjugate parameter were proposed, namely,

Interestingly, it is found, from formulas (3) and (6), that the SCGM can lead to more decrease than the classical CGM for a same and any . Therefore, in this work, motivated by the ideas of the modified FR method and DY method [19], and making full use of the second condition of the strong Wolfe line search (5), we first introduce a new approach for yielding the spectral parameter as follows:

Obviously, if the previous is a descent direction.

Secondly, based on the scheme of the conjugate parameter in Reference [20] with the form

and fully absorbing the hybrid idea of Reference [11], we propose a new conjugate parameter in the following manner

So far, the basic concept of our SCGM has been formed. As a result, a new SCGM is proposed, and its theoretical features and numerical performance are analysed and reported.

2. Algorithm and the Descent Property

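Based on formulas (2), (6), (8) and (9), we establish the new SCGM as follows (Algorithm 1).

| Algorithm 1: JYJLL-SCGM |

| Step 0. Given any initial point $x_1 \in \mathbb{R}^n$, parameters $\delta$ and $\sigma$ satisfying $0 < \delta < \sigma < 1$, and an accuracy tolerance $\varepsilon > 0$. Let $d_1 = -g_1$, set $k := 1$. |

| Step 1. If $\|g_k\| \le \varepsilon$, terminate. Otherwise, go to Step 2. |

| Step 2. Determine a steplength $\alpha_k$ by an inexact line search. |

| Step 3. Generate the next iterate by $x_{k+1} = x_k + \alpha_k d_k$, compute the gradient $g_{k+1}$ and the spectral parameter $\theta_{k+1}$ by (8), as well as the conjugate parameter $\beta_{k+1}$ by (9). |

| Step 4. Let $d_{k+1} = -\theta_{k+1} g_{k+1} + \beta_{k+1} d_k$. Set $k := k + 1$, repeat Step 1. |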
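The control flow of Steps 0 to 4 can be sketched as a short loop. This is only a skeleton under stated assumptions: the spectral and conjugate parameters are passed in as callables (`theta_rule`, `beta_rule`), and the placeholders used in the example (`theta_one`, which always returns 1, and `beta_dy`, the classical DY formula) are not the formulas (8) and (9) of this paper. The bisection-type Wolfe search `wolfe_step`, its default parameters, the restart safeguard and the quadratic test function are likewise illustrative choices.

```python
import math


def dot(u, v):
    return sum(a * b for a, b in zip(u, v))


def wolfe_step(f, grad, x, d, delta=1e-4, sigma=0.9, max_iter=60):
    """Bisection-type search for a steplength satisfying the Wolfe conditions."""
    lo, hi, alpha = 0.0, math.inf, 1.0
    fx, slope = f(x), dot(grad(x), d)
    for _ in range(max_iter):
        x_new = [xi + alpha * di for xi, di in zip(x, d)]
        if f(x_new) > fx + delta * alpha * slope:       # sufficient decrease fails
            hi = alpha
            alpha = 0.5 * (lo + hi)
        elif dot(grad(x_new), d) < sigma * slope:       # curvature condition fails
            lo = alpha
            alpha = 2.0 * lo if math.isinf(hi) else 0.5 * (lo + hi)
        else:
            break
    return alpha


def scgm(f, grad, x, theta_rule, beta_rule, eps=1e-6, max_iter=1000):
    """Generic spectral CG loop; returns the final point and iteration count."""
    g = grad(x)
    d = [-gi for gi in g]                               # Step 0: first direction
    for k in range(max_iter):
        if math.sqrt(dot(g, g)) <= eps:                 # Step 1: stopping test
            return x, k
        alpha = wolfe_step(f, grad, x, d)               # Step 2: line search
        x = [xi + alpha * di for xi, di in zip(x, d)]   # Step 3: new iterate
        g_new = grad(x)
        theta = theta_rule(g_new, g, d)
        beta = beta_rule(g_new, g, d)
        d = [-theta * gi + beta * di for gi, di in zip(g_new, d)]  # Step 4
        if dot(g_new, d) >= 0:     # safeguard for the placeholder rules only
            d = [-gi for gi in g_new]
        g = g_new
    return x, max_iter


def theta_one(g_new, g_old, d_old):
    """Placeholder spectral parameter (reduces the loop to a plain CGM)."""
    return 1.0


def beta_dy(g_new, g_old, d_old):
    """Placeholder conjugate parameter: classical Dai-Yuan formula."""
    denom = dot(d_old, [a - b for a, b in zip(g_new, g_old)])
    return dot(g_new, g_new) / denom if denom > 0 else 0.0


f = lambda x: 0.5 * (x[0] ** 2 + 10.0 * x[1] ** 2)
grad = lambda x: [x[0], 10.0 * x[1]]
x_final, iters = scgm(f, grad, [3.0, -1.0], theta_one, beta_dy)
print(x_final, iters)
```

Passing the parameter rules as callables keeps the loop independent of any particular choice, so other spectral or conjugate parameters can be tried without changing the iteration itself.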

The following lemma indicates that the JYJLL-SCGM always satisfies the descent condition without depending on any line search, and that the conjugate parameter has properties similar to those of the DY formula.

Lemma 1.

Suppose that the search direction is generated by JYJLL-SCGM. Then, we have for , that is, the search direction satisfies the descent condition. Furthermore, we obtain

Proof.

We first prove the former claim by induction. For , it is easy to see that . Assume that holds for . Now, we prove that holds for Let be the angle between and . The proof is divided into two cases as follows.

Thus, holds for .

3. Convergence Analysis

To analyze and ensure the global convergence of the JYJLL-SCGM, we choose the Wolfe line search (4) to yield the steplength $\alpha_k$. Further, the following basic assumption on the objective function is needed.

Assumption 1.

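(H1) For any initial point $x_1$, the level set $\Lambda = \{x \in \mathbb{R}^n : f(x) \le f(x_1)\}$ is bounded; (H2) $f$ is continuously differentiable in a neighborhood $U$ of $\Lambda$, and its gradient $g$ is Lipschitz continuous, namely, there exists a constant $L > 0$ such that

$$\|g(x) - g(y)\| \le L \|x - y\|, \quad \forall x, y \in U.$$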

In the following lemma, we review the well-known Zoutendijk condition [6], which plays an important role in the convergence analysis of CGMs. Also, the Zoutendijk condition is suitable for the convergence analysis of the JYJLL-SCGM.

Lemma 2.

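Suppose that Assumption 1 holds. Consider a general iterative method $x_{k+1} = x_k + \alpha_k d_k$, where $d_k$ is a descent direction such that $g_k^{T} d_k < 0$, and the steplength $\alpha_k$ satisfies the Wolfe line search condition (4). Then

$$\sum_{k=1}^{\infty} \frac{(g_k^{T} d_k)^2}{\|d_k\|^2} < +\infty.$$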

Based on Lemmas 1 and 2, we can establish the global convergence of the JYJLL-SCGM.

Theorem 1.

Suppose that Assumption 1 holds, and let the sequence $\{x_k\}$ be generated by the JYJLL-SCGM with the Wolfe line search (4). Then

$$\liminf_{k \to \infty} \|g_k\| = 0.$$

Proof.

By contradiction, suppose that the conclusion is not true. Then there exists a positive constant such that . Again, from (8), we obtain . Combining this equation and Lemma 1, we have

Next, dividing both sides of the above inequality by , we obtain

In terms of , together with the above relations and , we have

that is, . Hence , which contradicts Lemma 2. Therefore, the proof is complete. □

4. Numerical Results

In this section, we test the numerical performance of our method (denoted by JYJLL for short) on 108 test problems, and compare it with four methods: HZ [7], KD [8], AN1 [13] and LFZ [15]. The HZ and KD methods are CGMs with excellent performance, and the AN1 and LFZ methods are efficient SCGMs. The first 53 (from to ) test problems are taken from the CUTE library of N. I. M. Gould et al. [22], and the last 55 are from References [23,24]; their dimensions range from 2 to 1,000,000. All codes were written in MATLAB 2016a and run on a DELL PC with 4 GB of memory and the Windows 10 operating system. All steplengths are generated by the Wolfe line search with and .
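For readers who want to check whether a candidate steplength is acceptable, the following sketch tests the sufficient decrease condition together with the standard and the strong curvature conditions. The function name `wolfe_conditions`, the one-dimensional test function and the values `delta = 0.01` and `sigma = 0.1` are assumptions for illustration only; they are not the settings of the experiments reported here.

```python
def dot(u, v):
    return sum(a * b for a, b in zip(u, v))


def wolfe_conditions(f, grad, x, d, alpha, delta, sigma):
    """Return (weak_ok, strong_ok) for steplength alpha along direction d.

    weak_ok:   sufficient decrease and g(x + alpha d)^T d >= sigma * g(x)^T d
    strong_ok: sufficient decrease and |g(x + alpha d)^T d| <= sigma * |g(x)^T d|
    Requires 0 < delta < sigma < 1 and a descent direction d.
    """
    slope0 = dot(grad(x), d)
    x_new = [xi + alpha * di for xi, di in zip(x, d)]
    slope1 = dot(grad(x_new), d)
    decrease = f(x_new) <= f(x) + delta * alpha * slope0
    weak_ok = decrease and slope1 >= sigma * slope0
    strong_ok = decrease and abs(slope1) <= sigma * abs(slope0)
    return weak_ok, strong_ok


# Toy problem: f(x) = x^2 at x = 1 with the steepest descent direction d = -2.
f = lambda x: x[0] ** 2
grad = lambda x: [2.0 * x[0]]
for alpha in (0.01, 0.5, 0.9):
    print(alpha, wolfe_conditions(f, grad, [1.0], [-2.0], alpha, 0.01, 0.1))
```

In this toy problem the exact minimizer along the direction is `alpha = 0.5`, which passes both tests. The step `0.9` overshoots, so it passes the standard test but fails the strong one, while the very short step `0.01` fails the curvature condition in both versions.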

In the experiments, notations Itr, NF, NG, and Tcpu and denote the number of iteration, function evaluation, gradient evaluation, computing time of CPU and gradient values, respectively. We stop the iteration, if one of the following two cases is satisfied: (i) ; (ii) . When case (ii) appears, the method is deemed to be invalid and is denoted by “F”.

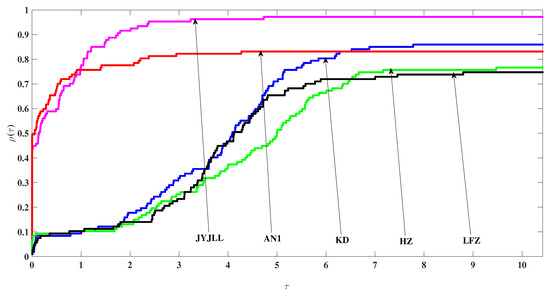

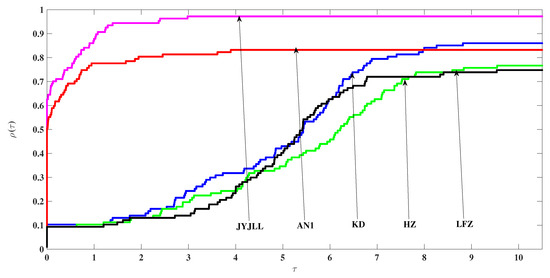

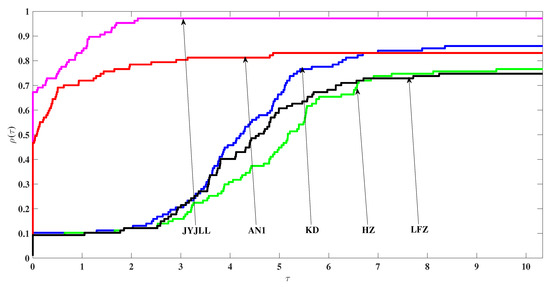

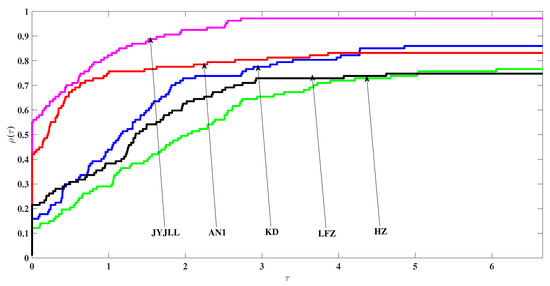

To show the numerical performance of the tested methods, we report the values of Itr, NF, NG, Tcpu and the gradient values generated by the five tested methods for each test instance; see Table 1 and Table 2. Moreover, to compare the numerical results in Table 1 and Table 2 visually, we use the performance profiles introduced by Dolan and Moré [25] to describe the performance of the five tested methods according to Itr, NF, NG and Tcpu, respectively; see Figure 1, Figure 2, Figure 3 and Figure 4.
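As a reminder of how a performance profile in the sense of Dolan and Moré is computed, the sketch below takes a table of costs (for example Itr or Tcpu) per solver and problem, forms the ratio of each cost to the best cost on that problem, and reports the fraction of problems solved within a factor `tau` of the best. The function name `performance_profile`, the solver labels and all numbers are made up for illustration and are not taken from Table 1 or Table 2; costs are assumed to be positive, and a failure ("F") is encoded as `math.inf`.

```python
import math


def performance_profile(costs, taus):
    """Compute performance profile values.

    costs: dict mapping solver name -> list of positive costs, one per problem
           (use math.inf where the solver failed)
    taus:  list of ratio thresholds (each >= 1)
    Returns a dict mapping solver name -> list of fractions, one per tau.
    """
    solvers = list(costs)
    n_problems = len(costs[solvers[0]])
    # Best (smallest) cost achieved by any solver on each problem.
    best = [min(costs[s][p] for s in solvers) for p in range(n_problems)]
    profile = {}
    for s in solvers:
        ratios = [costs[s][p] / best[p] for p in range(n_problems)]
        profile[s] = [sum(r <= tau for r in ratios) / n_problems for tau in taus]
    return profile


# Made-up iteration counts for two hypothetical solvers on three problems.
costs = {
    "A": [10.0, 20.0, math.inf],   # A fails on the third problem
    "B": [20.0, 10.0, 30.0],
}
profile = performance_profile(costs, taus=[1.0, 2.0, 4.0])
for name, values in profile.items():
    print(name, values)
```

The value at `tau = 1` is the fraction of problems on which a solver is the best, and the limit for large `tau` is the fraction of problems it solves at all, which is why a higher curve indicates a better method.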

Table 1.

Numerical test reports for the five tested methods.

Table 2.

Numerical test reports for the five tested methods (continued).

Figure 1.

Performance profiles on Tcpu.

Figure 2.

Performance profiles on NF.

Figure 3.

Performance profiles on NG.

Figure 4.

Performance profiles on Itr.

5. Discussion of Results

First, by the nature of performance profiles, the higher the curve in the figures, the better the associated method. Second, summarizing the convergence analysis and the numerical reports in Table 1 and Table 2 and Figure 1, Figure 2, Figure 3 and Figure 4, the proposed JYJLL-SCGM shows the following three advantages.

(i) It has good global convergence under mild assumptions.

(ii) It is practically effective, at least for the 108 tested instances.

(iii) It is the most effective among the five tested methods. In addition, the numerical performance of the AN1 method [13] and the JYJLL-SCGM is relatively stable.

6. Conclusions

The contributions of this work are twofold. The first is to design a new computing scheme for the spectral parameter which ensures the . The second is to propose a new computing method for the conjugate parameter. With these two techniques, the search directions always possess the descent property, independent of the line search technique. As a result, the presented JYJLL-SCGM is globally convergent when the Wolfe line search is used to yield the steplength. Extensive numerical experiments in comparison with related methods show that our SCGM is promising.

As further work, we think the following two problems are interesting and worth studying. The first is to design new approaches for the spectral parameter to guarantee , such as combining the Newton direction with some new quasi-Newton equations or new conjugacy conditions. The second is to find new computation techniques for the conjugate parameter with the help of existing approaches, for example, the hybrid parameter, the three-term conjugate parameter, etc.

Author Contributions

Conceptualization, J.J. and X.J.; methodology, J.J. and X.J.; formal analysis, L.Y. and P.L.; numerical experiments, L.Y. and P.L.; writing—original draft preparation, M.L. All authors have read and agreed to the published version of the manuscript.

Funding

This research was supported by Natural Science Foundation of China under Grant No. 11771383, Natural Science Foundation of Guangxi Province under Grant No. 2016GXNSFAA380028, Research Foundation of Guangxi University for Nationalities under Grant No. 2018KJQD02, and Middle-aged and Young Teachers’ Basic Ability Promotion Project of Guangxi Province under Grant No. 2017KY0537.

Conflicts of Interest

The authors declare no conflict of interest.

References

1. Hestenes, M.R.; Stiefel, E. Methods of conjugate gradients for solving linear systems. J. Res. Natl. Bur. Stand. 1952, 49, 409–436.

2. Fletcher, R.; Reeves, C. Function minimization by conjugate gradients. Comput. J. 1964, 7, 149–154.

3. Polak, E.; Ribière, G. Note sur la convergence de méthodes de directions conjuguées. Rev. Fr. Inform. Rech. Oper. 3e. Ann. 1969, 16, 35–43.

4. Polyak, B.T. The conjugate gradient method in extreme problems. USSR Comput. Math. Math. Phys. 1969, 9, 94–112.

5. Dai, Y.H.; Yuan, Y.X. A nonlinear conjugate gradient method with a strong global convergence property. SIAM J. Optim. 1999, 10, 177–182.

6. Zoutendijk, G. Nonlinear programming computational methods. In Integer and Nonlinear Programming; Abadie, J., Ed.; North-Holland: Amsterdam, The Netherlands, 1970; pp. 37–86.

7. Hager, W.W.; Zhang, H. A new conjugate gradient method with guaranteed descent and an efficient line search. SIAM J. Optim. 2005, 16, 170–192.

8. Kou, C.X.; Dai, Y.H. A modified self-scaling memoryless Broyden-Fletcher-Goldfarb-Shanno method for unconstrained optimization. J. Optim. Theory Appl. 2015, 165, 209–224.

9. Dai, Y.H.; Yuan, Y.X. An efficient hybrid conjugate gradient method for unconstrained optimization. Ann. Oper. Res. 2001, 103, 33–47.

10. Andrei, N. Hybrid conjugate gradient algorithm for unconstrained optimization. J. Optim. Theory Appl. 2009, 141, 249–264.

11. Jian, J.B.; Han, L.; Jiang, X.Z. A hybrid conjugate gradient method with descent property for unconstrained optimization. J. Comput. Appl. Math. 2015, 39, 1281–1290.

12. Birgin, E.G.; Martínez, J.M. A spectral conjugate gradient method for unconstrained optimization. Appl. Math. Optim. 2001, 43, 117–128.

13. Andrei, N. New acceleration conjugate gradient algorithms as a modification of Dai-Yuan’s computational scheme for unconstrained optimization. J. Comput. Appl. Math. 2010, 234, 3397–3410.

14. Lin, S.H.; Huang, H. A new spectral conjugate gradient method. Chin. J. Eng. Math. (Chin. Ser.) 2014, 31, 837–846.

15. Liu, J.K.; Feng, Y.M.; Zou, L.M. A spectral conjugate gradient method for solving large-scale unconstrained optimization. Comput. Math. Appl. 2019, 77, 731–739.

16. Zhang, L.; Zhou, W.; Li, D.H. A descent modified Polak-Ribiere-Polyak conjugate gradient method and its global convergence. IMA J. Numer. Anal. 2006, 26, 629–640.

17. Sun, M.; Liu, J. Three modified Polak-Ribiere-Polyak conjugate gradient methods with sufficient descent property. IMA J. Numer. Anal. 2015, 2015, 125–129.

18. Li, X.L.; Shi, J.J.; Dong, X.L.; Yu, J.L. A new conjugate gradient method based on Quasi-Newton equation for unconstrained optimization. J. Comput. Appl. Math. 2019, 350, 372–379.

19. Jiang, X.Z.; Jian, J.B. Improved Fletcher-Reeves and Dai-Yuan conjugate gradient methods with the strong Wolfe line search. J. Comput. Appl. Math. 2019, 348, 525–534.

20. Jian, J.B.; Yin, J.H.; Jiang, X.Z. An efficient conjugate gradient method with sufficient descent property. Math. Num. Sin. (Chin. Ser.) 2015, 11, 415–424.

21. Perry, A. A modified conjugate gradient algorithm. Oper. Res. 1978, 26, 1073–1078.

22. Gould, N.I.M.; Orban, D.; Toint, P.L. CUTEr and SifDec: A constrained and unconstrained testing environment, revisited. ACM Trans. Math. Softw. 2003, 29, 373–394.

23. Moré, J.; Garbow, B.S.; Hillstrom, K.E. Testing unconstrained optimization software. ACM Trans. Math. Softw. 1981, 7, 17–41.

24. Andrei, N. An unconstrained optimization test functions collection. Adv. Model. Optim. 2008, 10, 147–161.

25. Dolan, E.D.; Moré, J. Benchmarking optimization software with performance profiles. Math. Program. 2002, 91, 201–213.

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).