1. Introduction

This paper considers several topics related to the stability of both homogeneous and non-homogeneous continuous-time Markov chains with respect to perturbations of their intensities (infinitesimal characteristics). It is assumed that the evolution of the system under consideration is described by a Markov chain with a known state space, and that only the infinitesimal matrix is given inexactly. Different classes of admissible perturbations can be considered. The “perturbed” infinitesimal matrix can be arbitrary, with only its deviation from the original matrix assumed to be small in norm; alternatively, the structure of the infinitesimal matrix can be assumed to be known, so that only its elements are “perturbed” within the same structure. Both cases are described in detail below. Some papers assume that the perturbations have a special form, for example, that they can be expanded in a power series in a small parameter. This assumption seems too restrictive and unrealistic.

The study of stability of characteristics of stochastic models has been actively developing since the 1970s [1,2,3]. At that time, Zolotarev proposed to treat limit theorems of probability theory as special stability theorems. Zolotarev created the theoretical foundation of the key method used within this approach, namely, the theory of probability metrics [4]. This approach assumes that statements establishing convergence must be accompanied by statements establishing the convergence rate. Zolotarev called the conditions of convergence that simultaneously serve as convergence rate estimates “natural.” This approach was developed in the works of Zolotarev, Kalashnikov, Kruglov, Senatov, Korolev, Khokhlov, and their colleagues in the framework of the international seminar on stability problems for stochastic models. This seminar was founded by Zolotarev in the early 1970s and still holds its regular (as a rule, annual) international sessions (see the series of proceedings of the seminar published as Springer Lecture Notes starting from [5] or as issues of the Journal of Mathematical Sciences). In particular, this approach proved to be very productive for the study of random sums in queueing theory, renewal theory, and the theory of branching processes [6].

Since the 1980s, the problems related to the estimation of stability of Markov chains with respect to perturbations of their characteristics have been thoroughly studied by Kartashov for homogeneous discrete-time chains with general state space and, in parallel, by Zeifman for inhomogeneous continuous-time chains within the seminar mentioned above (see [7,8,9]). In particular, a general approach for inhomogeneous continuous-time chains was developed in [9]. That paper was published in the proceedings of the seminar “Stability Problems for Stochastic Models” and dealt with both uniform and strong cases.

Later, birth-death processes were considered in [10], and general properties and estimates for inhomogeneous finite chains were considered in [11]. The paper [12] was specially devoted to estimates for general birth-death processes, with the queueing system

considered as an example. It should be mentioned that these papers went unnoticed by Western authors. For example, in [13], it was stated that there were no papers on the stability of the (simplest stationary!!) system

. We first used the term “perturbation bounds” instead of “stability” in the paper [14], at the referee’s suggestion. The same situation holds for Kartashov’s papers cited above. The methods proposed in those papers seem to be used by most authors of subsequent studies on perturbation estimates for discrete-time chains. Possibly, the poor acquaintance with the early papers of Kartashov and Zeifman can be explained by the differences in terminology mentioned above: in the original (and foundational) papers, the term “stability” was used (in the proceedings of the seminar with the matching name “Stability Problems for Stochastic Models”).

The present paper deals only with continuous-time chains, so the subsequent remarks mainly regard such a case.

Note that, to obtain explicit and exact perturbation bounds for a chain, one needs estimates of the rate of convergence of the chain to its limit characteristics in the form of explicit inequalities. Moreover, the sharper the convergence rate estimates are, the more accurate the perturbation bounds are. These bounds can be obtained more easily for finite homogeneous Markov chains. Therefore, most publications concern this situation only (see, e.g., [15,16,17,18,19,20]). Two main approaches can be highlighted.

The first of them can be used for the case of weak ergodicity of a chain in the uniform operator topology. The first bounds in this direction were obtained in [9]. The principal progress related to the replacement of the constant

S with

in the bound was achieved in [17] and continued in Mitrophanov’s papers [18,19,20] for the case of homogeneous chains and then in [14,21] and in the subsequent papers of these authors for inhomogeneous chains. The current state of affairs in this field and new applied problems related to the link between convergence rate and perturbation bounds in the “uniform” case were described in [22]. In some recent papers, uniform perturbation bounds of homogeneous Markov chains were studied by the techniques of stochastic differential equations (see, for instance, [23] and the references therein).

The second approach is used in the case where the uniform ergodicity is not assured, which is typical for the processes most interesting from a practical viewpoint. For example, birth-death processes used for modeling queueing systems, and real processes in biology, chemistry, and physics, as a rule, are not uniformly ergodic.

Following the ideas of Kartashov (see a detailed description in [24]), most authors use probabilistic methods to study ergodicity and perturbation bounds of stationary chains (with a finite, countable, or general state space) in various norms [13,25,26]. For a wide class of (mainly) stationary discrete-time chains, a close approach was considered in [27] and more recent papers [28,29,30,31,32,33,34,35,36,37,38].

In the works of the authors of the present paper, perturbation bounds for non-stationary finite or infinite continuous-time chains were studied by other methods.

The first papers dealing with non-stationary queueing models appeared in the 1970s (see [39,40], and the more recent paper [41]). Moreover, as far back as in [42], it was noted that it is possible in principle to use the logarithmic matrix norm for the study of the convergence rate of continuous-time Markov chains. The corresponding general approach employing the theory of differential equations in Banach spaces was developed in a series of papers by the authors of the present paper (see a detailed description in [43,44]). In [9] (see also [10,11]), a method for the study of perturbation bounds for the vector of state probabilities of a continuous-time Markov chain with respect to the perturbations of infinitesimal characteristics of the chain in the total variation norm (

-norm) was proposed. The paper [

12] contained a detailed study of the stability of essentially non-stationary birth-death processes with respect to conditionally small perturbations. Convergence rate estimates in terms of weighted norms, and hence the corresponding bounds for new classes of Markov chains, were considered in [45,46,47,48].

In the present paper, both approaches are considered along with the classes of inhomogeneous Markov chains, for which at least one of these approaches yields reasonable perturbation bounds for basic probability characteristics.

The paper is organized as follows. In Section 2, basic notions and preliminary results are introduced. In Section 3, general theorems on perturbation bounds are considered. Section 4 contains convergence rate estimates and perturbation bounds for basic classes of the chains under consideration. Finally, in Section 5, some special queueing models are studied.

2. Basic Notions and Preliminaries

Let

,

, be, in general, an inhomogeneous continuous-time Markov chain with a finite or countable state space

,

. The transition probabilities for

will be denoted

,

. Let

be the state probabilities of the chain and

be the corresponding vector of state probabilities. In what follows, it is assumed that

where all

are

uniformly in

i, that is,

.

As usual, we assume that, if a chain is inhomogeneous, then all the infinitesimal characteristics (intensity functions) are integrable in t on any interval , .

Let for and .

Further, to provide the possibility to obtain more evident estimates, we will assume that

for almost all

.

The state probabilities then satisfy the forward Kolmogorov system

where

, and

is the infinitesimal matrix of the process.
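As a rough numerical illustration of the forward Kolmogorov system, the sketch below integrates dp/dt = A(t)p(t) for a small finite BDP with the classical fourth-order Runge-Kutta scheme and compares two solutions started from different initial conditions. The state space size, the 1-periodic birth rate, the constant death rate, and all function names are assumptions chosen for illustration; they are not taken from the paper.

```python
import math


def bdp_transposed_generator(birth, death):
    """Transposed intensity matrix A of a finite birth-death process.

    birth[k] is the rate of the jump k -> k + 1, death[k] of k + 1 -> k.
    Entry a[j][i] is the intensity of the jump i -> j, so columns sum to zero.
    """
    size = len(birth) + 1
    a = [[0.0] * size for _ in range(size)]
    for k, rate in enumerate(birth):
        a[k + 1][k] += rate
        a[k][k] -= rate
    for k, rate in enumerate(death):
        a[k][k + 1] += rate
        a[k + 1][k + 1] -= rate
    return a


def matvec(a, x):
    return [sum(a_ij * x_j for a_ij, x_j in zip(row, x)) for row in a]


def axpy(x, y, c):
    """Return x + c * y componentwise."""
    return [xi + c * yi for xi, yi in zip(x, y)]


def solve_forward(generator_at, p0, t_end, steps):
    """Integrate dp/dt = A(t) p from 0 to t_end with classical RK4."""
    h = t_end / steps
    p, t = list(p0), 0.0
    for _ in range(steps):
        k1 = matvec(generator_at(t), p)
        k2 = matvec(generator_at(t + h / 2), axpy(p, k1, h / 2))
        k3 = matvec(generator_at(t + h / 2), axpy(p, k2, h / 2))
        k4 = matvec(generator_at(t + h), axpy(p, k3, h))
        p = [pi + h / 6 * (a + 2 * b + 2 * c + d)
             for pi, a, b, c, d in zip(p, k1, k2, k3, k4)]
        t += h
    return p


def generator_at(t):
    # Hypothetical rates: 1-periodic birth rate, constant death rate.
    lam = 1.0 + 0.5 * math.sin(2 * math.pi * t)
    return bdp_transposed_generator([lam] * 3, [2.0] * 3)


p_from_empty = solve_forward(generator_at, [1.0, 0.0, 0.0, 0.0], 10.0, 4000)
p_from_full = solve_forward(generator_at, [0.0, 0.0, 0.0, 1.0], 10.0, 4000)
l1_gap = sum(abs(x - y) for x, y in zip(p_from_empty, p_from_full))
print(p_from_empty, l1_gap)
```

Because every column of A(t) sums to zero, each Runge-Kutta stage preserves the total probability up to rounding, which gives a simple sanity check on the construction of the matrix.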

Let

be the usual

-norm, i.e.

, and

for

. Denote

. Therefore,

for almost all

, and we can apply the results of [

49] to Equation (

3) in the space

. Namely, in [

49] it was shown that the Cauchy problem for Equation (

3) has a unique solution for an arbitrary initial condition. Moreover, if

, then

, for any

and any initial condition

.

Let .

Put .

Therefore, from Equation (

3), we obtain the equation

where

,

where all expressions depend on

t.

By , we will denote the “perturbed” Markov chain with the same state space, state probabilities , transposed infinitesimal matrix , and so on, and the “perturbations” themselves, that is, the differences between the corresponding “perturbed” and original characteristics will be denoted by , .

Let . Recall that a Markov chain is weakly ergodic if as for any initial condition, and it has the limiting mean if as for any k.

Now we briefly describe the main classes of the chains under consideration. The details concerning the first four classes can be found in [47,50].

Case 1. Let for all if , and , . This is an inhomogeneous birth-death process (BDP) with the intensities (of birth) and (of death) correspondingly.

Case 2. Now let for , for , and . This chain describes, for instance, the number of customers in a queueing system in which the customers arrive in groups, but are served one by one (in this case, is the arrival intensity of a group of k customers, and is the service intensity of the ith customer). The simplest models of this type were considered in [51] (see also [47,50]).

Case 3. Let for , , , and . This situation occurs in modeling queueing systems with the arrivals of single customers and group service.

Case 4. Let , for . This process appears in the description of a system with group arrival and group service; for earlier studies, see [46,52,53].

Case 5. Consider a Markov chain with “catastrophes” used for modeling of some queueing systems (see, e.g., [14,54,55,56,57,58]). Here the intensities have a general form, whereas a single (although substantial) restriction consists in that the zero state is attainable from any other state, and the corresponding intensities for are called the intensities of catastrophes.

Now consider the following example illustrating some specific features of the problem under consideration.

Example 1 ([14]). Consider a homogeneous BDP (Class 1) with the intensities , for all t and k and denote by A the corresponding transposed intensity matrix. Then, as is known (see, e.g., [59]), the BDP is strongly ergodic and stable in the corresponding norm. On the other hand, take a perturbed process with the transposed infinitesimal matrix , where , for , and for the other . The perturbed Markov chain (describing the “M|M|c queue with mass arrivals when empty” (see [54,58,60])) is then not ergodic, since, from the condition , it follows that the coordinates of the stationary distribution (if it exists) must satisfy the condition , which is impossible.

As has already been noted, the (upper) bounds of perturbations are closely connected with the (correspondingly, upper) estimates for the convergence rate (see also the next two sections). On the other hand, it is also possible to construct important lower estimates of the rate of convergence, showing that the influence of the initial conditions cannot fade too rapidly (see [61]). It turns out, however, that it is impossible in principle to construct lower bounds for perturbations. Indeed, if we consider the same BDP and, as a perturbed BDP, choose a BDP with the intensities

,

, then the stationary distribution for the perturbed process will be the same as that for the original BDP for any positive

.
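The last observation of Example 1 can be checked numerically. The sketch below assumes, purely for illustration, that the perturbed birth and death intensities are a common positive multiple of the original ones, and it truncates the state space to a finite one so that the stationary distribution can be computed from detailed balance. The rate values, the truncation level, and the function name `bdp_stationary` are hypothetical.

```python
def bdp_stationary(birth, death):
    """Stationary distribution of a finite birth-death process.

    Uses detailed balance: pi[k + 1] * death[k] = pi[k] * birth[k].
    """
    weights = [1.0]
    for lam, mu in zip(birth, death):
        weights.append(weights[-1] * lam / mu)
    total = sum(weights)
    return [w / total for w in weights]


# Hypothetical constant rates on a state space truncated at 20.
birth = [1.0] * 20
death = [4.0] * 20
base = bdp_stationary(birth, death)

gaps = []
for scale in (0.01, 0.5, 3.0):
    scaled = bdp_stationary([scale * b for b in birth],
                            [scale * d for d in death])
    # l1 distance between the original and the rescaled stationary vectors.
    gaps.append(sum(abs(x - y) for x, y in zip(base, scaled)))
print(gaps)
```

Only the ratios of birth to death rates enter the detailed-balance formula, so any common rescaling of the intensities, however far from 1, leaves the stationary vector unchanged. This is why no nontrivial lower bound on the perturbation of the stationary distribution can hold in terms of the size of the perturbation of the intensities alone.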

3. General Theorems Concerning Perturbation Bounds

First consider uniform bounds, which constitute the first approach to perturbation estimation. This approach is applied to uniformly ergodic Markov chains and to the study of stability of the state probability vector. The most important class of such processes is that of Markov chains on a finite state space, both homogeneous and inhomogeneous.

Theorem 1. Let the Markov chain be exponentially weakly ergodic; that is, for any initial conditions , and any , , there holds the inequality

Then, for perturbations small enough ( for almost all ), the perturbed chain is also exponentially weakly ergodic, and the following perturbation bound takes place:

For the proof, we use the approach proposed in [17] and modified in [21] for the inhomogeneous case (see also [14]). Let

Hence,

whence, as

, we obtain Equation (

7).

Corollary 1. If under the conditions of Theorem 1 the Markov chain has a finite state space, then both Markov chains and have limit expectations and

Now consider the second approach. Namely, we turn to weighted bounds. Such estimates can be applied to a wide class of Markov chains which are exponentially ergodic in some weighted norms. Moreover, as a rule, these estimates also allow one to study stability characteristics of the mathematical expectation for countable Markov chains, both homogeneous and inhomogeneous. Here we use the approach proposed in [9] (see also the detailed description in [43,44]).

Let . Therefore, . In addition, let .

Below we will assume that the following conditions hold:

for almost all

.

Recall that

is a

-exponentially weakly ergodic Markov chain if

for some

,

and any

:

, any initial conditions

,

.

If one can choose , then the chain is -exponentially strongly ergodic.

Let

for almost all

.

Theorem 2. If a Markov chain is -exponentially weakly ergodic, then is also -exponentially weakly ergodic and the following perturbation estimate in the -norm holds:

If , then both chains and have limiting means and

Proof. The detailed consideration can be found in [44]. Here we only outline the scheme of reasoning. Let

and

be the Cauchy operators for Equation (

4) and for the corresponding “perturbed” equation, respectively. Therefore,

for all

. Therefore, rewriting Equation (

4) as

after some algebra, we obtain the following inequality in the

-norm:

On the other hand,

, for any

. Hence, under any initial condition

, we obtain the following inequalities for the

-norm:

Thus, the first assertion of the theorem is proved.

The second assertion follows from the inequality

(see, e.g., [

62]) and the estimate expressed by Equation (

22), where

□.

Remark 1. A number of consequences of this statement can be formulated. For example, the respective perturbation bounds can be formulated for strongly ergodic (for instance, homogeneous) Markov chains (see [44]).

Remark 2. As shown in [44], the bounds presented in Theorem 2 and its corollaries are sufficiently sharp. Namely, in [44], we considered the queue-length process for the simplest ordinary queue and proved that the bounds established in Theorem 2 have the proper order.

4. Convergence Rate Estimates and Perturbation Bounds for Main Classes

For Markov chains of Classes 1–4, an important role is played by the matrix . To begin with, write out this matrix for each of these classes.

For Class 1, this matrix has the form

in the case of a countable state space (

);

in the case of a finite state space (

).

For Class 2, this matrix has the form

in the case of a countable state space (

);

in the case of a finite state space (

).

For Class 3, this matrix has the form

in the case of a countable state space (

);

in the case of a finite state space (

).

Finally, for Class 4, this matrix has the form

in the case of a countable state space (

);

in the case of a finite state space (

).

In the proofs of the following theorems, we use the notion of the logarithmic norm of a linear operator function and the related estimates of the norm of the Cauchy operator of a linear differential equation. The corresponding results are described in detail in our preceding works (see [47,62,63]). Here we restrict ourselves only to the necessary minimum.

Recall that the logarithmic norm of an operator function

is defined as the number

Let

be the Cauchy operator of the differential equation

Therefore, the estimate

holds. Moreover, if for each

maps

into itself, then the logarithmic norm can be calculated by the formula

Also note that, if in Classes 2–4 the intensities

and

do not increase in

k for each

t, then in all the cases the matrix

is essentially nonnegative (that is, its non-diagonal elements are nonnegative); therefore, in Equations (

33) and (

34), the signs of the absolute value can be omitted.
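To make the role of the logarithmic norm more tangible, the following sketch evaluates it for a small constant matrix and checks the exponential bound on the norm of the Cauchy operator, which for a constant matrix B is simply exp((t - s)B). It uses the standard column-sum formula for the logarithmic norm induced by the l1 norm, namely the maximum over columns j of b_jj plus the sum of |b_ij| over i ≠ j; that this coincides with the formula referred to in the text is an assumption here. The matrix entries and all function names are hypothetical.

```python
import math


def log_norm_l1(b):
    """Logarithmic norm induced by the l1 (maximum column sum) norm."""
    n = len(b)
    return max(b[j][j] + sum(abs(b[i][j]) for i in range(n) if i != j)
               for j in range(n))


def norm_l1(b):
    """Operator norm induced by the l1 vector norm."""
    n = len(b)
    return max(sum(abs(b[i][j]) for i in range(n)) for j in range(n))


def matmul(a, b):
    n = len(a)
    return [[sum(a[i][k] * b[k][j] for k in range(n)) for j in range(n)]
            for i in range(n)]


def expm(b, t, squarings=10, terms=20):
    """exp(t * b) via a Taylor series combined with scaling and squaring."""
    n = len(b)
    scale = t / 2 ** squarings
    small = [[scale * x for x in row] for row in b]
    result = [[float(i == j) for j in range(n)] for i in range(n)]
    term = [row[:] for row in result]
    for k in range(1, terms + 1):
        term = [[x / k for x in row] for row in matmul(term, small)]
        result = [[r + s for r, s in zip(rr, tr)]
                  for rr, tr in zip(result, term)]
    for _ in range(squarings):
        result = matmul(result, result)
    return result


# Hypothetical essentially nonnegative matrix (off-diagonal entries >= 0).
b = [[-3.0, 1.0, 0.0],
     [1.0, -4.0, 2.0],
     [0.5, 1.0, -3.0]]
gamma = log_norm_l1(b)
checks = [(t, norm_l1(expm(b, t)), math.exp(gamma * t))
          for t in (0.5, 1.0, 2.0)]
for t, cauchy_norm, bound in checks:
    print(t, cauchy_norm, bound)
```

For an essentially nonnegative matrix such as this one, the absolute values in `log_norm_l1` act only on nonnegative entries, so the logarithmic norm reduces to the largest plain column sum.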

The following statement ([47], Theorem 1) is given here for convenience.

Theorem 3. Let, for some sequence of positive numbers, the conditions , and hold. Then the Markov chain is weakly ergodic and for any initial condition , , and for all the following estimate holds:

Now let, instead of Equation (35), for all

, a stronger condition

hold.

Theorem 4. Let, under the conditions of Theorem 3, Inequality (37) hold. Then the Markov chain is -exponentially weakly ergodic, and for all and , , Inequality (15) holds with and .

Remark 3. In the case of a homogeneous Markov chain, or if all intensities are periodic with one and the same period, conditions expressed by Equations (35) and (37) are equivalent.

Theorem 5. Let the conditions of Theorem 4 hold. Then the Markov chain is -exponentially weakly ergodic. Under perturbations small enough (see Equation (16)), the perturbed chain is also -exponentially weakly ergodic, and the perturbation bound expressed by Equation (17) in the -norm holds. If, moreover, , then both chains and have limit expectations and the estimate expressed by Equation (18) holds for the perturbation of the mathematical expectation.

To obtain perturbation bounds in the natural norm, it suffices to use Inequality (24) mentioned above.

Corollary 2. Under the conditions of Theorem 5, the following perturbation bound in the natural - (total variation) norm holds:

Note that it is convenient to use the results formulated above for the construction of perturbation bounds for Markov chains of the first four classes (see, e.g., [12,44,46,47]).

For chains of the fifth class, as a rule, it is convenient to use the approach based on uniform bounds, as shown below. These models were considered, e.g., in [14,64,65].

Theorem 6. Let the intensities of catastrophes be essential, that is,

Then the chain is weakly ergodic in the uniform operator topology and for any initial conditions , and any , the following convergence rate estimate holds:

To prove this theorem, we use the same technique as in [14]. Rewrite the forward Kolmogorov system expressed by Equation (3) in the form

Here

,

, and

The solution to this equation can be written as

where

is the Cauchy operator of the differential equation

Note that the matrix

is essentially nonnegative for all

. Its logarithmic norm is equal to

Theorem 7. Let, instead of Equation (40), the stronger condition hold. Then the chain is exponentially weakly ergodic in the uniform operator topology, and if the perturbations are small enough, that is, for almost all , then the perturbed chain is also exponentially weakly ergodic, and the perturbation bound expressed by Equation (7) holds with and .

5. Examples

First note that many examples of perturbation bounds for queueing systems have been considered in [11,12,14,44,46,66,67].

Here, to compare both approaches, we will mostly deal with the queueing system

with losses and 1-periodic intensities. In the preceding papers on this model, other problems were considered. For example, in [

68], the asymptotics of the rate of convergence to the stationary mode as

, was studied, whereas the paper [

69] dealt with the asymptotics of the convergence parameter under various limit relations between the intensities and the dimensionality of the model. In [

66,

67], perturbation bounds were considered under additional assumptions.

Let be the number of servers in the system. Assume that the customer arrival intensity and the service intensity of a server are 1-periodic nonnegative functions integrable on the interval . Then the number of customers in the system (queue length) is a finite Markov chain of Class 1, that is, a BDP with the intensities , for .
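For orientation, the quantities that the perturbation bounds control can be computed directly in the simplest special case of this model. The sketch below assumes constant (rather than 1-periodic) intensities and the standard loss-system rates: arrivals at rate lam in every non-full state and service at rate k * mu when k servers are busy. It then measures how the stationary vector and its mean react to small changes of both rates. The numerical values and function names are hypothetical, and the code does not evaluate the bounds of Theorem 5 or Theorem 7; it only computes the perturbations themselves.

```python
def loss_queue_stationary(lam, mu, servers):
    """Stationary distribution of the loss queue with constant rates.

    Detailed balance gives pi[k] proportional to (lam / mu) ** k / k!.
    """
    weights = [1.0]
    for k in range(1, servers + 1):
        weights.append(weights[-1] * lam / (k * mu))
    total = sum(weights)
    return [w / total for w in weights]


def mean(dist):
    return sum(k * p for k, p in enumerate(dist))


def perturbation_effect(lam, mu, servers, eps):
    """l1 distance and mean difference when both rates are shifted by eps."""
    base = loss_queue_stationary(lam, mu, servers)
    perturbed = loss_queue_stationary(lam + eps, mu - eps, servers)
    l1 = sum(abs(a - b) for a, b in zip(base, perturbed))
    return l1, abs(mean(base) - mean(perturbed))


# Hypothetical parameters: arrival rate 3, service rate 1, five servers.
effects = [(eps, perturbation_effect(3.0, 1.0, 5, eps))
           for eps in (1e-1, 1e-2, 1e-3)]
for eps, (l1, mean_diff) in effects:
    print(eps, l1, mean_diff)
```

In this finite stationary setting both quantities shrink roughly in proportion to eps, which is the qualitative behavior that the perturbation bounds for the vector of state probabilities and for the limit expectations are meant to capture.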

It should be especially noted that the process

is weakly ergodic (obviously exponentially and uniformly ergodic, since the intensities are periodic and the state space is finite) if and only if

(see, e.g., [

70]).

For definiteness, assume that

Apply the approach described in Theorems 3 and 4.

Let all

. Therefore,

and in Equation (

34) we have

for all

i; hence,

.

Therefore, Theorem 3 yields the estimate

To find the constants in the estimates, let

and consider

Find the bound for the second summand in Equation (

52). Assuming

, we obtain

Therefore, for the queueing system

, the conditions of Theorem 5 and Corollary 2

These statements imply the following perturbation bounds:

for the vector of state probabilities, and

for limit expectations.

Moreover, for these bounds to be consistent, additional information is required concerning the form of the perturbed intensity matrix. The simplest bounds can be obtained, if it is assumed that the perturbed Markov chain is also a BDP with the same state space and the birth and death intensities

and

, respectively. Then, if the perturbations of the birth and death intensities themselves do not exceed

for almost all

, then

and

, so that the bounds expressed by Equations (56) and (57) have the form

for the vectors of state probabilities, and

for the limit expectations.

On the other hand, Theorem 7 can be applied as well. To construct the bounds for the corresponding parameters, Equation (

24) and the fact that

are exploited. Thus, Theorem 7 is valid for the queueing system

with the following values of the parameters:

According to this theorem, we obtain the estimate

Moreover, the Markov chains

and

have limit expectations and

It is worth noting that, for the estimates expressed by Equations (61) and (62) to hold, only the condition of the smallness of perturbations is required, and no additional information concerning the structure of the intensity matrix is required.

Thus, in the example with the finite state space under consideration, uniform bounds turn out to be more exact.

Now consider a more special example. Let , , .

On the other hand, as has already been noted, for the Markov chains of Classes 1–4 with countable state space, no uniform bounds could be constructed.

Consider the construction of bounds on the example of a rather simple model, which, however, does not belong to the most well-studied Class 1 (that is, which is not a BDP).

Let a queueing system be given in which the customers can appear separately or in pairs with the corresponding intensities

and

, but are served one by one on one of two servers with constant intensities

, where

is a 1-periodic function integrable on the interval

. Therefore, the number of customers in this system belongs to Class 2, and the corresponding matrix

has the form

where

,

, if

. This matrix is essentially nonnegative, so that, in the expression for the logarithmic norm, the absolute value signs can be omitted. Let

,

, and

. For this purpose, consider the expressions from Equation (

34). We have

Therefore, for

, we obtain

and the condition

will a fortiori hold if

with a corresponding choice of

.

The further reasoning is almost the same as in the preceding example: instead of Equation (

54), we obtain

where now

Hence, the conditions of Theorem 5 and Corollary 2 for the number of customers in the system under consideration hold for

To construct meaningful perturbation bounds, additional information concerning the form of the perturbed intensity matrix is required. Indeed, Example 1 in Section 2 shows that, if the arrival of an arbitrary number of customers (“mass arrival” in the terminology of [58]) to an empty queue is allowed, then an arbitrarily small (in the uniform norm) perturbation of the intensity matrix can “spoil” all the characteristics of the process. For example, satisfactory bounds can be constructed if we know that the intensity matrix of the perturbed system has the same form; that is, the customers can appear either separately or in pairs and are served one by one. Then, if the perturbations of the intensities themselves do not exceed

for almost all

, then

and

, so that, instead of Equations (56) and (57), we obtain

for the vectors of state probabilities and

for the limit expectations.

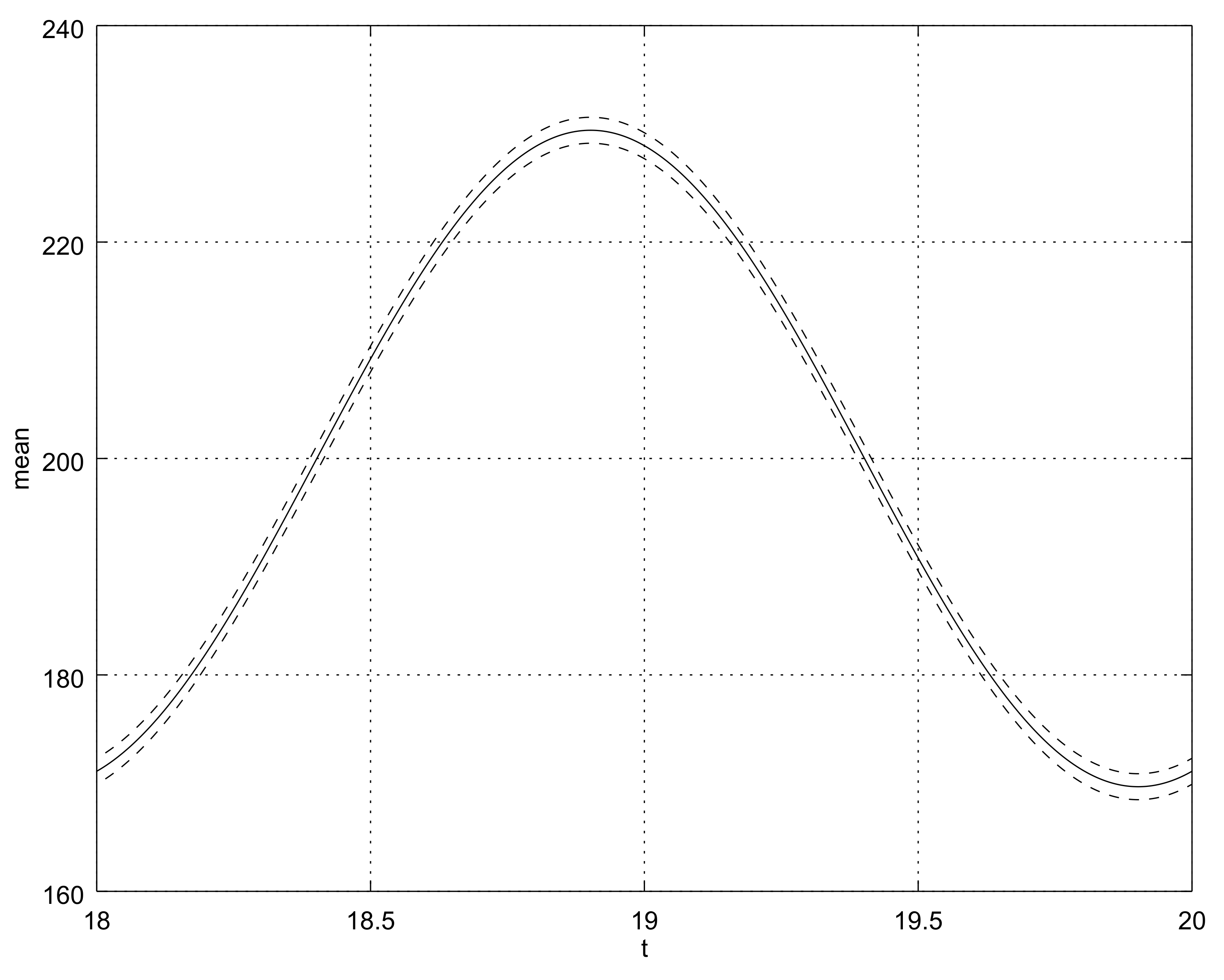

For example, let

,

, and

. Therefore, we have

Furthermore, we follow the method described in [

71,

72] in detail. Namely, we choose the dimensionality of the truncated process (300 in our case), the interval on which the desired accuracy is achieved (

) in the example under consideration) and the limit interval itself (here it is

).